CHAPTER 2

State of the Art/Science

The first phase of this study involved determining the state of the art/science broadly. Rather than focusing solely on project management and what “should be,” this phase focused on a wide range of disciplinary areas (such as engineering, political science, environmental management, and medicine) to explore what “might be” in regard to the practice of eliciting expert judgment.

2.1 Theory-Practice Gap

The idea that a gap or divide exists between theory and practice has been widely discussed (e.g., Brendillet, Tywoniak, & Dwivedula, 2015; Kraaijenbrink, 2010; Sandberg & Tsoukas, 2011; Van de Ven & Johnson, 2006). Additionally, several studies suggested that such a theory-practice gap exists within project management as well (e.g., Koskela & Howell, 2002; Söderlund, 2004; Svejvig & Andersen, 2015).

This study clearly shows that a gap does indeed remain between the theory and practice of expert judgment within project management. The first phase of this research project, reported in this chapter, identifies a body of the most relevant theory. The second phase of the research project, reported in the next chapter, identifies the current practice of expert judgment in project management. Comparing the two reveals the theory-practice gap that exists.

Although many expert judgment elicitation techniques are well established, much has been learned over the past several decades about how they can be improved (e.g., Armstrong, 2011). Additionally, even though the study of expert judgment within the context of project management has been steadily increasing (Jørgensen & Shepperd, 2007), that study does not span the breadth of the discipline.

Much advancement has been made in the field of expert judgment with regard to project time estimation (Trendowicz, Munch, & Jeffery, 2011). The most common form of time estimation is the program evaluation and review technique (PERT), a three-point estimation technique developed in 1959 by Malcolm et al. The original PERT can be found in virtually any textbook on project management. More recently, many advances have been made in the PERT, including new and improved expressions of PERT mean and variance (e.g., Golenko-Ginzburg, 1989; Hahn, 2008; Herrerías, García, & Cruz, 2003; Herrerías-Velasco et al., 2011), alternative distributional forms (e.g., Garcia, Garcia-Perez, & Sanchez-Granero, 2012; Herrerías-Velasco et al., 2011; Premachandra, 2001), and new ways to estimate key parameters (e.g., Sasieni, 1986; van Dorp, 2012). Yet these advances have not been incorporated into either the academic resources (e.g., texts) or the professional practitioner resources (e.g., software, guides). As a result, even in one of the most widely studied areas of project management expert judgment, a gap remains between the theory and the practice.
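As a point of reference for the advances cited above, the classical PERT approximation can be sketched as follows. This is a minimal illustration that assumes the standard textbook expressions (mean = (a + 4m + b) / 6 and standard deviation = (b - a) / 6); the names `PertEstimate` and `pert_estimate` and the sample durations are hypothetical and are not taken from the studies cited.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PertEstimate:
    mean: float
    variance: float


def pert_estimate(optimistic: float, most_likely: float, pessimistic: float) -> PertEstimate:
    """Classical PERT approximation from a three-point expert estimate."""
    if not optimistic <= most_likely <= pessimistic:
        raise ValueError("expected optimistic <= most_likely <= pessimistic")
    # Weighted mean: the most likely value counts four times as much as each extreme.
    mean = (optimistic + 4 * most_likely + pessimistic) / 6
    # The range is treated as spanning six standard deviations.
    variance = ((pessimistic - optimistic) / 6) ** 2
    return PertEstimate(mean, variance)


# Hypothetical activity: 4 days at best, 6 days most likely, 14 days at worst.
example = pert_estimate(4, 6, 14)
print(example.mean, example.variance)
```

The refinements cited in the text replace these fixed weights or the underlying distributional assumption; the sketch shows only the original baseline that those refinements modify.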

Another area of advancement and innovation in expert judgment has been observed in software project management—specifically in the area of cost estimation. Because estimates often propagate throughout an entire project plan (Sudhakar, 2013), and since flawed estimation has been identified as one of the top failure factors in software project management (Dwivedi et al., 2013), cost estimation has been identified as critical to project success. For example, in a study of 250 complex software projects (Jones, 2004), less than 10% of the projects were successful (i.e., less than six months over schedule, less than 15% over budget). In a continuing effort to improve cost estimation, a variety of new and improved methods has been identified (e.g., Kim & Reinschmidt, 2011; Li, Xie, & Goh, 2009; Liu & Napier, 2010). Just as with time estimation, these new approaches have not been widely adopted (Trendowicz, Munch, & Jeffery, 2011). Again, even though the theory has advanced, there is a gap in that the practice lags behind theoretical improvements.

Additionally, regardless of which expert judgment elicitation methods are used in project management, estimation is known to be flawed (Budzier & Flyvbjerg, 2013; Flyvbjerg, 2006; Flyvbjerg, Holm, & Buhl, 2005). As noted in the seminal work of Kahneman, Slovic, and Tversky (1982) and expanded through an abundance of recent research (as summarized in Lawrence, Goodwin, O’Connor, & Önkal, 2006), expert judgments are subject to well-known cognitive biases. One of the most common forms of cognitive bias in experts is overconfidence (Lichtenstein, Fischhoff, & Phillips, 1981; Lin & Bier, 2008). Many studies have sought to improve how expert judgment is elicited, reducing overconfidence and in turn increasing accuracy, by changing the mode of elicitation (e.g., Soll & Klayman, 2004; Soll & Larrick, 2009; Speirs-Bridge et al., 2010; Teigen & Jørgensen, 2005; Welsh, Lee, & Begg, 2008, 2009; Winman, Hansson, & Juslin, 2004), by including feedback (e.g., Bolger & Önkal-Atay, 2004; Haran, Moore, & Morewedge, 2010; Herzog & Hertwig, 2009; Rauhut & Lorenz, 2010; Vul & Pashler, 2008), and through other means. Here, too, the theoretical enhancements to mitigate the adverse impacts of overconfidence have not been widely adopted into practice.

2.2 Method

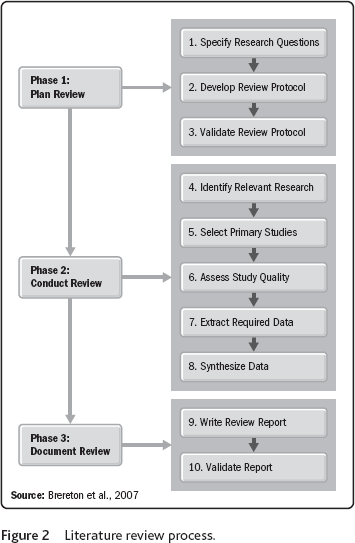

A 10-step review process (Brereton et al., 2007) was used to conduct this literature review. Figure 2 provides an overview of the 10 steps involved in this particular process. These steps could be aggregated into three main stages (i.e., plan review, conduct review, and document review).

2.3 Data and Sample

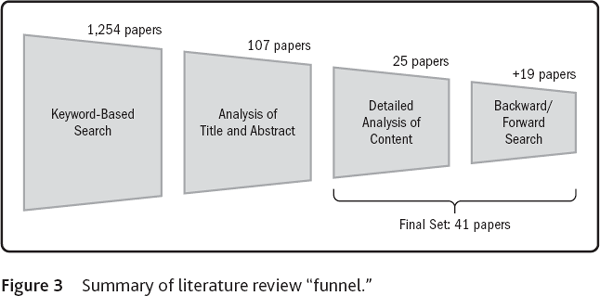

Because the most recent compendium of expert judgment elicitation (i.e., O’Hagan et al., 2006) published the results of a comprehensive literature review completed in 2005, this literature review set out to emulate the process of that work by examining the most recent decade (which would have brought the results of that work up to the present). However, it was quickly determined that such an expansive review of the most recent decade of literature (using similar search terms, parameters, and databases) was not possible. The BEEP (Bayesian Elicitation of Experts’ Probabilities) project commissioned by the UK National Health Service (as reported by O’Hagan et al., 2006), which reviewed more than two decades’ worth of literature, was conducted by a large team of researchers over a multiyear period. Because our study was conducted by a small team of researchers over a few months, such a comprehensive decade study period proved to be beyond the scope of the study. Instead, this study focused on the most recent year (i.e., mid-2013 to mid-2014) of the decade since the BEEP project was completed. This compromise was deemed adequate because additional sources of information would be uncovered in the forward and backward searches of the relevant literature from that one-year period. Figure 3 summarizes the results of the literature review conducted in this study.

Similar to the BEEP project (as reported by O’Hagan et al., 2006), this study searched the ISI Science, Social Sciences, and Humanities Citation Indices under the terms expert judgment, expert opinion, and elicitation for the most recent one-year period. More than 1,200 articles were identified as relevant and investigated further. A careful reading of the abstracts of those references led to the selection of more than 100 sources, whose full text was retrieved and read. The resulting detailed content analysis of these articles yielded 25 papers that were relevant to the topic of expert judgment elicitation (for project management). In an attempt to address the entire decade-long period since the BEEP project, a forward and backward search was conducted and an additional 19 articles were identified as relevant, bringing the final set of articles to 41 articles. In comparison, the BEEP project identified 13,000 references from keyword searches. The most relevant 2,000 were narrowed down to 400 based upon a review of the abstracts. The remaining 400 were read in detail. As is typical of this type of work, though the literature review was intended to be comprehensive in scope, there will inevitably be omissions. Further, the discussion and emphases contained in this report about those references (and the attempt to translate the ideas from many varied disciplines to the world of project management) will reflect the perspective of the author.

2.4 Analysis and Results

To give this literature review structure, the first step was to identify a framework by which the results could be organized. To accomplish this, several general (as well as some specific) expert judgment elicitation processes and protocols were examined. The protocols varied widely in their number of steps. For example, the U.S. Environmental Protection Agency (2011) has as few as three steps; Catenacci, Bosetti, Fiorese, and Verdolini (2015) offer a three-phase protocol; Meyer and Booker (2001) suggest seven steps; Ayyub (2001) provides eight steps; Aliakbargolkar and Crawley (2014) offer a 10-step model for space exploration; and the EU Atomic Energy Community protocol (Cooke & Goossens, 2000) has as many as 15 steps. Table 2 examines a few of the most prominent processes in chronological order. It starts with the protocol designed in the seminal work of the U.S. Nuclear Regulatory Commission (NUREG) (leftmost column). It then proceeds to a protocol developed for the EU Atomic Energy Community by the researchers from the Technical University in Delft, the Netherlands (second column). Their protocol built on the work of the NUREG-1150 and is also based on their experience completing hundreds of studies and compiling thousands of judgments (Cooke & Goossens, 2008). Next, the generic protocols are presented from a trio of books on expert judgment (opinion) (columns three through five). These references have been widely used by practitioners conducting expert judgment elicitation. It should be observed that there are many similarities and few differences among the five elicitation protocols presented. Some offer peer review, some have much more detailed planning, and some have more interactive elicitation methods, but all follow a similar pattern that can be organized into the five Project Management Process Groups of the PMBOK® Guide: initiating, planning, executing, monitoring and controlling, and closing.

In Table 2, note that the numbering provided corresponds to that of the original source. In some cases, the numbered process steps are shown out of the original source's sequence to demonstrate their relationship to the generic seven steps used in this study (i.e., those provided in the leftmost column). In an effort to integrate the various protocols of elicitation from Table 2, the following generic seven-step protocol is proposed (as presented in the row headers on the left side of the table):

- Frame the Problem

- Plan the Elicitation

- Select the Experts

- Train the Experts

- Elicit Judgments

- Analyze/Aggregate Judgments

- Document/Communicate Results

Note that this summary protocol is nicely aligned with the phases of project management from the PMBOK® Guide. The summary protocol includes an initiation phase (step 1), a planning phase (step 2), an execution phase (steps 3–5), a monitor/control phase (step 6), and a closeout phase (step 7). Such an orientation would be well understood by project management practitioners.
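The alignment just described can be written down as a small lookup structure. The sketch below is illustrative only: the step names and the step-to-phase mapping come from the text above, while the names `ProcessGroup`, `PROTOCOL`, and `steps_in` are hypothetical.

```python
from enum import Enum


class ProcessGroup(Enum):
    INITIATING = "initiating"
    PLANNING = "planning"
    EXECUTING = "executing"
    MONITORING_AND_CONTROLLING = "monitoring and controlling"
    CLOSING = "closing"


# (step number, step name, aligned process group), following the text:
# step 1 initiation, step 2 planning, steps 3-5 execution,
# step 6 monitor/control, step 7 closeout.
PROTOCOL = [
    (1, "Frame the Problem", ProcessGroup.INITIATING),
    (2, "Plan the Elicitation", ProcessGroup.PLANNING),
    (3, "Select the Experts", ProcessGroup.EXECUTING),
    (4, "Train the Experts", ProcessGroup.EXECUTING),
    (5, "Elicit Judgments", ProcessGroup.EXECUTING),
    (6, "Analyze/Aggregate Judgments", ProcessGroup.MONITORING_AND_CONTROLLING),
    (7, "Document/Communicate Results", ProcessGroup.CLOSING),
]


def steps_in(group: ProcessGroup) -> list:
    """Return the protocol step names aligned with one process group, in order."""
    return [name for _, name, g in PROTOCOL if g is group]


print(steps_in(ProcessGroup.EXECUTING))
```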

Two prominent compilations of effective practices in expert elicitation have been overlaid upon the suggested seven-step protocol to demonstrate its coherence. Table 3 shows 12 principles of expert judgment recommended by Jørgensen (2004) and 10 recommendations suggested by Kynn (2008) that are based upon separate, comprehensive reviews of the literature. These provide a preview of some of the findings about ways to improve expert elicitation (and would serve as a good starting point to learn more about how to improve expert judgment).

Table 3 Compilation of ways to improve expert judgment.

| | 12 Principles (Jørgensen, 2004) | 10 Recommendations (Kynn, 2008) |

| Frame the Problem | | |

| Plan the Elicitation | | |

| Select the Experts | | |

| Train the Experts | | |

| Elicit the Judgments | | |

| Aggregate/Analyze the Judgments | | |

| Document/Communicate the Results | | |

With the exception of the first and last steps, the suggestions contained in Table 3 span the generic seven-step protocol of expert judgment elicitation proposed. Regarding a protocol of expert judgment elicitation, all seven steps should be retained for completeness until they can be properly tested (which was beyond the scope of this study). In the meantime, for simplicity and consistency, the five middle steps provided in Table 3 will become the organizing frame of the literature review to identify the state of the art/science as it pertains to expert judgment elicitation.

2.4.1 Planning the Elicitation

Once the problem has been framed (and the desired data and information to be elicited have been identified), planning commences and an appropriate method must be chosen to elicit the requisite expert judgment.

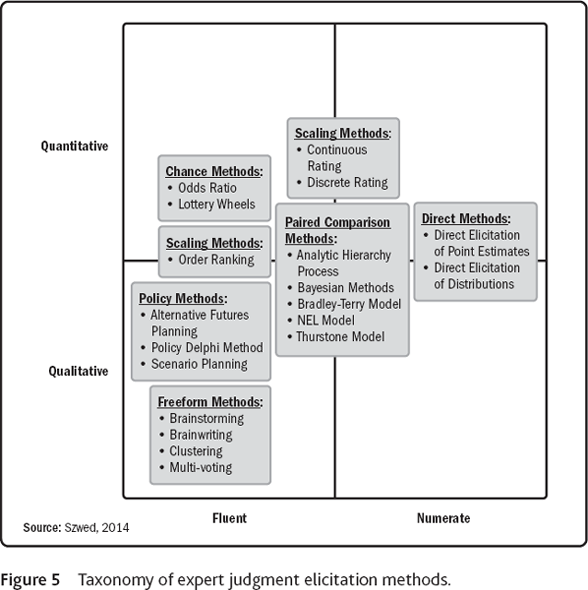

In a broad sense, the form of the data and information may be considered either qualitative or quantitative using Stevens’s (1946) scales of measurement—nominal and ordinal data being primarily qualitative; interval and ratio data being predominantly quantitative data (also referred to as “weak” and “strong” data scales, respectively, by Wachowicz and Blaszczyk [2013]). It has been suggested that some experts have a preference for one form of elicitation over the other (i.e., quantitative versus qualitative) (Larichev & Brown, 2000). This dichotomy has also been tested to demonstrate how experts’ numeracy or fluency will affect their ability to provide judgments about quantitative or qualitative information, respectively (Fasolo & Bana e Costa, 2014).

Additionally, recent neuroscience has further established this dichotomy of judgment types (i.e., qualitative and quantitative) by examining how the expert’s brain functions when rendering these two types of judgments. In recent decades, there has been a growth in our understanding of the human brain and its functioning (across a wide variety of contexts) as a result of advances in brain imaging using functional magnetic resonance imaging (fMRI). The task-positive network (TPN) regions of the brain have been shown to be activated in a broad range of attention-focused tasks (e.g., Buckner, Andrews-Hanna, & Schacter, 2008; Fox, Corbetta, Snyder, & Vincent, 2006). Because of this, the TPN would likely be the area of the brain activated in evaluative expert judgment situations. On the other hand, the default mode network (DMN) regions of the brain have been shown to be activated in idea generation (e.g., Beaty et al., 2014; Kleibeuker, Koolschijn, Jolles, de Dreu, & Crone, 2013), envisioning the future (e.g., Uddin, Kelly, Biswal, Castellanos, & Milham, 2009), and creativity or insightful problem solving (e.g., Subramaniam, Kounios, Parrish, & Jung-Beeman, 2009; Takeuchi et al., 2011). Therefore, in generative expert judgment situations, the DMN would likely be activated. Interestingly, activities in the TPN tend to inhibit activities in the DMN, and vice versa (e.g., Boyatzis, Rochford, & Jack, 2014; Jack, Dawson, & Norr, 2013). Such evidence from neuroscience would seem to emphasize the importance of matching methods for eliciting expert judgment to the desired form of information. Identifying whether qualitative or quantitative information is needed will thus determine whether generative or evaluative methods are needed.

Therefore, in the context of project management, two basic forms of expert judgment elicitation methods may be suggested: generative and evaluative. On one hand, generative elicitation methods would yield generated items, scenarios, lists, and so forth. For example, in the Collect Requirements process (identified as 5.2 in the PMBOK® Guide), a generative elicitation process would be used to generate a list of requirements using stakeholder input. On the other hand, the evaluative elicitation methods would be used to evaluate (or quantify) a specific phenomenon of interest. In the Estimate Activity Durations process (identified as 6.5 in the PMBOK® Guide), any one of many evaluative expert judgment elicitation processes could be employed to create the requisite time estimates.

In order to be beneficial to project management practitioners, all PMBOK® Guide project management processes (which specifically list expert judgment as a tool or technique—see Table 1) will be categorized as either generative or evaluative in Table 4. This set of processes was determined to be either generative or evaluative—the two suggested basic forms of expert judgment elicitation for project management. If the primary output(s) of a process is/are numerical in nature (e.g., cost, time, probability estimates), the process is deemed to be evaluative. If the primary output(s) of a process is/are verbal in nature (e.g., lists, plans, registers), then the process is deemed to be generative in nature.

Table 4 Categorization of PMBOK® Guide processes.

| Generative PMBOK® processes | Process outputs |

| 4.1 Develop project charter | Project charter |

| 4.2 Develop project management plan | Project management plan |

| 4.3 Monitor and control project work | Deliverables, work performance data, change requests, updates |

| 4.4 Perform integrated change control | Change requests, work performance reports, updates |

| 4.5 Close project or phase | Approved change requests, change log, updates |

| 5.1 Plan scope management | Scope management plan, requirements management plan |

| 5.4 Create work breakdown structure | Scope baseline, updates |

| 6.1 Plan schedule management | Schedule management plan |

| 6.2 Define activities | Activity list, activity attributes, milestone list |

| 6.4 Estimate activity resources | Activity resource requirements, resource breakdown structure, updates |

| 7.1 Plan cost management | Cost management plan |

| 9.1 Plan human resource management | Human resource management plan |

| 10.3 Control communications | Work performance information, change requests, updates |

| 11.1 Plan risk management | Risk management plan |

| 11.2 Identify risks | Risk register |

| 12.1 Plan procurement management | Procurement management plan, SOW, documents, change requests |

| 12.2 Conduct procurements | Selected sellers, agreements, resource calendars, change requests, updates |

| 13.4 Control stakeholder engagement | Work performance information, change requests, updates |

| Evaluative PMBOK® processes | Process outputs |

| 6.5 Estimate activity durations | Activity duration estimates, updates |

| 7.2 Estimate costs | Activity cost estimates, basis of estimates, updates |

| 7.3 Determine budget | Cost baseline, project funding requirements, updates |

| 11.3 Perform qualitative risk analysis | Project management plan updates (e.g., P-I matrix) |

| 11.4 Perform quantitative risk analysis | Project management plan updates (e.g., probabilistic information) |

| 11.5 Plan risk responses | Project management plan updates (e.g., risk register) |
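The categorization rule behind Table 4 can be expressed as a short sketch. It assumes that an analyst has already judged whether a process's primary output is numerical; that judgment is recorded in a hypothetical `numerical_output` flag, and the `Process` class and `elicitation_form` function are likewise illustrative names rather than part of the study.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Process:
    number: str
    name: str
    numerical_output: bool  # analyst's judgment about the primary output(s)


def elicitation_form(process: Process) -> str:
    """Numerical primary outputs imply evaluative elicitation; verbal ones imply generative."""
    return "evaluative" if process.numerical_output else "generative"


# Three processes taken from Table 4, flagged according to their listed outputs.
examples = [
    Process("6.5", "Estimate activity durations", numerical_output=True),
    Process("7.2", "Estimate costs", numerical_output=True),
    Process("11.2", "Identify risks", numerical_output=False),
]

for p in examples:
    print(p.number, p.name, "->", elicitation_form(p))
```

The rule itself is a single test; the analytical effort lies in deciding whether a given output is primarily numerical or verbal.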

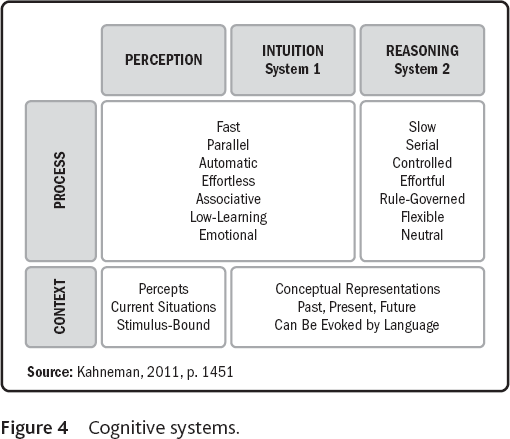

To further reinforce the science behind expert judgment, let’s now look at a framework for classifying how judgments are made (Kahneman, 2011). In Figure 4, there are two “systems” that describe different ways in which the mind thinks. In describing these cognitive systems, Kahneman (2011) attributes agency to the “systems” as a way to better describe the differences in how they work, even though they are not systems per se. Stanovich and West (2000) use the more neutral term type in their description. System 1 relies on association and produces impressions about the attributes of objects from perception and thought. It is automatic and quick-acting, and its impressions are neither voluntary nor necessarily explicit. Judgments, by contrast, are always explicit and intentional, whether or not they are expressed. System 2, on the other hand, is what we normally think of when we consider judgment; it is deliberate and conscious.

Reconsidering the two forms of expert judgment, it would seem natural that system 1 would do a good job of generative elicitation and system 2 would be best used in evaluative elicitation. Because certain elicitation methods are better suited for yielding qualitative information and others are more suited for providing quantitative information, once it has been determined which of the basic forms of expert judgment (i.e., generative or evaluative) is needed, an appropriate elicitation method must be selected. Based upon a review of the literature, expert judgment elicitation methods have been labeled either generative or evaluative. Table 5 provides a list of some of the generative expert elicitation methods that may be chosen.

Table 5 Generative expert judgment elicitation methods.

| Appreciative Inquiry |

| Brainstorming |

| Brainwriting |

| Clustering |

| Codiscovery (Barnum, 2010) |

| Delphi Technique/Method (Dalkey & Helmer, 1963) |

| Dual Verbal Elicitation (McDonald, Zhao, & Edwards, 2013) |

| Metaphors (e.g., Jacobs, Oliver, & Heracleous, 2013; Cornelissen, 2005) |

| Nominal Group Technique (Delbecq & Van de Ven, 1975) |

| Photo Narrative (Parke et al., 2013) |

| Scenario Planning |

| Think-Aloud Protocols |

Similarly, Table 6 provides a list of some of the evaluative expert elicitation methods that may be chosen.

Because there is a wide variety of methods by which to elicit expert judgments (e.g., those listed in Tables 5 and 6), the choice of elicitation method will depend upon both the type of information needed and the type of expertise available. After determining the form of the expert judgment needed for a particular project management process, it will be necessary to determine the type of expertise that is necessary (and determine if it is available).

2.4.2 Selecting the Experts

There are many considerations to take into account when selecting experts. First, it is important to identify the requisite expertise that will be required to accomplish the process or task at hand. There are several compelling definitions of expertise. For example, Collins and Evans (2007) offer a taxonomy of expertise, as shown in Table 7.

Table 6 Evaluative expert judgment elicitation methods.

| Point Distribution Methods |

| Distribution Estimation Methods |

| Paired Comparison Methods |

| Chance Methods |

| Scaling Methods (e.g., Torgersen, 1958) |

| Other |

Woods and Ford (1993) describe four fundamental ways in which expertise (as opposed to amateur or lay judgment) is demonstrated:

- Expert knowledge is grounded in specific cases.

- Experts represent problems in terms of formal principles.

- Experts solve problems using known strategies.

- Experts rely less on declarative knowledge and more on procedural knowledge.

Table 7 Taxonomy of expertise.

| Type | Characteristics |

| Contributory expertise | Fully developed and internalized skills and knowledge, including an ability to contribute new knowledge and/or teach |

| Interactional expertise | Knowledge gained from learning the language of specialist groups, without necessarily obtaining practical competence |

| Primary source knowledge | Knowledge from primary literature includes basic technical competence |

| Popular understanding | Knowledge from media, with little detail, less complexity |

| Specific instruction | Formulaic, rule-based knowledge, typically simple, context-specific and local |

Source: Collins & Evans, 2007

Shanteau (1992) argues that evidence of relevant experience and training includes the following:

- Certifications such as academic degrees or professional training

- Professional reputation of the expert (as a potentially reliable guide)

- Impartiality

- Multiplicity of viewpoints (i.e., consideration of multiple forms of data and perspectives)

Hora and von Winterfeldt (1997) suggest the following criteria for scrutinizing experts (particularly in a highly public and controversial context):

- Tangible evidence of expertise

- Reputation

- Availability and willingness to participate

- Understanding of the general problem area

- Impartiality

- Lack of an economic or personal stake in potential findings

Experts selected for the elicitation should possess high professional standing and widely recognized competence (Burgman, McBride, et al., 2011). The group of experts should represent a diversity of technical perspectives on the issue of concern. Although experience does not necessarily yield expertise, there is some evidence to suggest that professionals with more expertise are subject to less bias and make better judgments (Adelman & Bresnick, 1992; Adelman, Tollcott, & Bresnick, 1993; Anderson & Sunder, 1995; Bolger & Wright, 1994; Johnson, 1995). Expertise is also context-dependent (Burgman, Carr, et al., 2011) and should be “unequally distributed” (including traditional and nontraditional experts) rather than merely determined by formal qualifications or professional membership (Evans, 2008). In response to the potential benefits of using expertise across the community (Carr, 2004; Hong & Page, 2004), Collins and Evans (2007) recommend the following prescriptions for identifying experts:

- Identify core expertise requirements and the pool of potential experts, including lay expertise.

- Create objective selection criteria and clear rules for engaging experts and stratify the pool of experts and select participants transparently based on the strata.

- Evaluate the social and scientific context of the problem.

- Identify potential conflicts of interest and motivational biases and control bias by “balancing” the composition of expert groups with respect to the issue at hand (especially if the pool of experts is small).

- Test expertise relevant to the issues.

- Provide opportunities for stakeholders to cross-examine all expert opinions.

- Train experts and provide routine, systematic, relevant feedback on their performance.

Furthermore, Cooke and Goossens (2000) noted that experts should be willing to be identified publicly (but their exact judgments may be withheld except for competent peer review), provide their rationale supporting their judgments, and disclose any potential conflicts of interest. Cooke and Goossens recommended the following procedure:

- Publish expert names and affiliations in the study.

- Retain all information for competent peer review (post-evaluation), but not for unrestricted distribution.

- Allow de-identified judgments to be available for unrestricted distribution.

- Document and supply rationales for all judgments.

- Provide each expert with feedback on his or her own performance.

- Request expert permission for any published use beyond the above.

Though this procedure was devised specifically for studies that would guide public policy (where validation and transparency are important), similar measures could be employed for project management.

Once the pool of experts with the requisite expertise has been established, then an appropriate number of experts must be selected. The number of experts selected depends upon the nature of the decision context and the nature of the problem, including the degree of uncertainty expected. As a general rule of thumb, six to eight experts (and no fewer than four) (Clemen & Winkler, 1999; Hora, 2004) should be obtained, and at least some of the experts should come from outside of the organization conducting the elicitation. A pool of candidate experts (who possess the requisite expertise and have demonstrated interest and commitment to participate) may be reviewed by a committee, and a sufficient number of the best experts should be selected from that pool.
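The rule of thumb above lends itself to a simple screening check. The following sketch is only one possible reading of that guidance; the `Expert` class, the `review_panel` function, and the wording of the returned concerns are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Expert:
    name: str
    external: bool  # True if from outside the organization conducting the elicitation


def review_panel(experts: List[Expert]) -> List[str]:
    """Return rule-of-thumb concerns about panel size and composition (empty if none)."""
    concerns = []
    if len(experts) < 4:
        concerns.append("fewer than four experts")
    elif len(experts) < 6:
        concerns.append("below the suggested six to eight experts")
    if not any(e.external for e in experts):
        concerns.append("no experts from outside the organization")
    return concerns


# Hypothetical panel: five internal experts only.
panel = [Expert(f"expert_{i}", external=False) for i in range(5)]
print(review_panel(panel))
```

A check of this kind does not replace the committee review described above; it only flags panels that fall short of the suggested size or lack outside participation.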

Experimental research has shown that expert performance is also impacted by the format of the elicitation process (Aloysius, Davis, Wilson, Taylor, & Kottemann, 2006; Bottomley, Doyle, & Green, 2000; Fong et al., 2015). Therefore, from the planning stage, the form of the information elicited must be considered (i.e., whether it is generative or evaluative). Once the form of the expert judgment has been identified as either generative or evaluative, the requisite expertise must be identified and an adequate pool of experts must be selected to achieve that expertise. It was initially suggested that numerate experts would perform better on quantitative (or evaluative) elicitation tasks, while literate experts would perform better on qualitative (or generative) elicitation tasks (Larichev & Brown, 2000). More recently, this was demonstrated using technically equivalent numerical and nonnumerical elicitation methods (Fasolo & Bana e Costa, 2014). Using this framework, Figure 5 provides a mapping of the various families of expert judgment elicitation methods to the two types of expertise.

Numerate experts have facility with and possess the ability to discuss and describe quantities, probabilities, and numbers. Fluent (or literate) experts possess the ability to discuss and describe qualities using words and narrative. Some experts may be both numerate and literate. Additionally, in some contexts, experts may be more comfortable providing relative estimates. In such cases, paired comparison methods and scaling methods using order ranking would be appropriate. When experts are capable of providing absolute estimates, direct methods and scaling methods using discrete or continuous ratings would be appropriate. Decisions (or generative processes in the project management world) have been referred to as nodes for creativity (Kara, 2015), and fluency has been indicated as a means to invoke both system 1 and system 2 (Smerek, 2014). Fluency is often measured by examining vocabulary and testing the number of words an expert can spontaneously generate that begin with a specific letter or letters in a limited amount of time (e.g., Guilford, 1967; Guilford & Guilford, 1980; Spreen & Strauss, 1998). In cases where generative methods are deemed most appropriate, fluent (or literate) experts should be chosen. Likewise, in cases where evaluative methods are deemed most appropriate, numerate experts should be sought.
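The chain of matches described in this chapter (measurement scale, form of information, form of elicitation, type of expert) can be summarized in a few lookup tables. The sketch below is illustrative; the dictionary and function names are hypothetical, and the mappings simply restate the text.

```python
# Stevens's (1946) scales grouped as in the text: nominal and ordinal are
# primarily qualitative; interval and ratio are predominantly quantitative.
SCALE_TO_INFORMATION = {
    "nominal": "qualitative",
    "ordinal": "qualitative",
    "interval": "quantitative",
    "ratio": "quantitative",
}

# Qualitative information calls for generative methods, quantitative for evaluative.
INFORMATION_TO_METHOD = {"qualitative": "generative", "quantitative": "evaluative"}

# Generative methods are matched to fluent (literate) experts, evaluative to numerate.
METHOD_TO_EXPERT = {"generative": "fluent", "evaluative": "numerate"}


def match_elicitation(scale: str) -> dict:
    """Map a measurement scale to the suggested information form, method, and expert type."""
    information = SCALE_TO_INFORMATION[scale.lower()]
    method = INFORMATION_TO_METHOD[information]
    return {
        "information": information,
        "method": method,
        "expert": METHOD_TO_EXPERT[method],
    }


print(match_elicitation("ratio"))
print(match_elicitation("nominal"))
```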

Because a significant portion of the expert judgment elicitation processes is evaluative in nature, it would be beneficial if there could be some means to assess experts’ capabilities within system 2 (as shown in Figure 4). If the elicitation process called for evaluation that required system 2 cognitive skills and an expert unconsciously relied on system 1 to develop judgments (which we know are prone to a great many cognitive biases and heuristics), we would want some means to evaluate which system the expert invoked.

One of the main functions of system 2 is to monitor and control the thoughts and actions “suggested” by system 1, allowing some to be expressed directly in behavior and suppressing or modifying others. Because awareness about how judgment is processed is not readily apparent, Frederick (2005) developed the Cognitive Reflection Test to determine whether someone was actively employing system 2. The following questions come from this test:

- A bat and ball cost US$1.10. The bat costs one dollar more than the ball. How much does the ball cost?

  - 10 cents

  - 5 cents

- It takes 5 machines 5 minutes to make 5 widgets. How long would it take 100 machines to make 100 widgets?

  - 100 minutes

  - 5 minutes

- In a lake, there is a patch of lily pads. Every day, the patch doubles in size. If it takes 48 days for the patch to cover the entire lake, how long would it take the patch to cover half of the lake?

  - 24 days

  - 47 days

In each of these questions, the correct answer is the second one; however, our intuitive system 1 response would have us favoring the first answer. Because we are overconfident in our intuitions, we often fail to check our work. Thousands of college students have been given this test. More than 80% (50% at more selective schools) gave the intuitive—incorrect—answers. Even though the answers are easily calculated, students who answered incorrectly simply did not check their work and relied on their intuition. Thus, it would be beneficial to use such a simple diagnostic test, in addition to subjective expertise, to determine which experts demonstrate the most control over their intuitions (and thus may be less susceptible to bias) (see, e.g., Tumonis, Šavelskis, & Žalytė, 2013). Campitelli and Labolitta (2010) found that cognitive reflection is related to the concept of actively open-minded thinking and that it interacts positively with knowledge and domain-specific heuristics and plays an important role in the adaptation of the expert to the decision environment. Cognitive reflection was also found to be a better predictor of performance on heuristics-and-biases tasks than cognitive ability, thinking dispositions, and executive functioning (Toplak, West, & Stanovich, 2011). Thus, it is expected that cognitive reflection will be a useful screening technique for experts. Additionally, by being better able to retrieve and use applicable numerical ideas, highly numerate experts have been shown to be less susceptible to biases (such as framing effects) and also more effective at providing numerical estimates (Lipkus, Samsa, & Rimer, 2001).
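The arithmetic behind the three Cognitive Reflection Test answers can be checked directly. The sketch below is illustrative; the function names are hypothetical, and exact fractions are used only to avoid floating-point rounding.

```python
from fractions import Fraction


def ball_price(total: Fraction, difference: Fraction) -> Fraction:
    """Solve ball + (ball + difference) = total for the ball's price."""
    return (total - difference) / 2


def minutes_needed(machines: int, widgets: int) -> Fraction:
    """Time for `machines` to make `widgets`, given 5 machines make 5 widgets in 5 minutes."""
    rate_per_machine = Fraction(5, 5 * 5)  # widgets per machine per minute
    return widgets / (machines * rate_per_machine)


def days_to_cover_half(days_to_cover_all: int) -> int:
    """Step back one day at a time, halving the patch, until it covers half the lake."""
    coverage = Fraction(1)
    day = days_to_cover_all
    while coverage > Fraction(1, 2):
        coverage /= 2
        day -= 1
    return day


print(ball_price(Fraction("1.10"), Fraction("1.00")))  # price in dollars
print(minutes_needed(machines=100, widgets=100))
print(days_to_cover_half(48))
```

In each case the computed value matches the second answer option (5 cents, 5 minutes, 47 days) rather than the intuitive first one.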

All of these considerations—requisite expertise, number of experts, form of expert judgment, and expert performance—will shape the selection of the pool of experts.

2.4.3 Training Experts

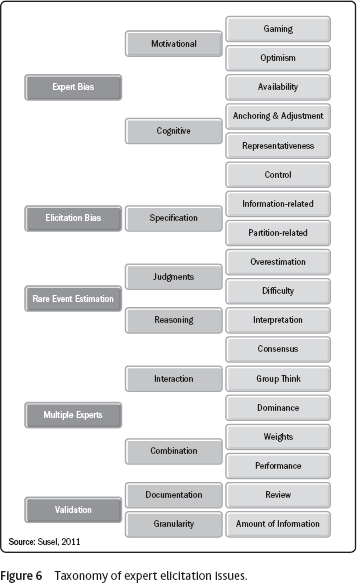

Once experts have been selected, they should be trained in an effort to ensure and improve the quality of their judgments. Expert judgment is influenced by many known issues and challenges (see Figure 6).

As a result of the known issues, there are several reasons for conducting pre-elicitation training of experts:

- To familiarize the experts with the problem under consideration and ensure that they share a similar baseline of information (e.g., basic domain knowledge or probabilistic and uncertainty training)

- To introduce the experts to the elicitation protocol, procedure, and process

- To introduce the experts to uncertainty and probability encoding (or reinforce their understanding of it) and give them practice in formally articulating their judgments and rationale

- To provide awareness of the potential for cognitive biases that may influence their judgments

Fischhoff (1982) proposed a framework for elicitation enhancement strategies. These were simplified by O’Hagan et al. (2006) into the following:

- Fix the task.

- Fix the expert.

- Match the expert to the task.

We will examine each of these in reverse order. Matching the expert to the task at hand was covered in detail in the previous section. In short, qualitative/quantitative information should be elicited using generative/evaluative methods to gather judgment from fluent/numerate experts. Next, we will examine some of the efforts that work to “fix” or train the expert. Following that, there will be a considerable look at “fixing” the task—typically by creating methods for minimizing expert bias.

Before the elicitation session begins, it is important to explain to the experts why their judgments are required. Clemen and Reilly (2001) note that it is important to establish rapport with the experts and to engender enthusiasm for the project. Walker, Evans, and MacIntosh (2001) suggest that training of experts should involve:

- information about the judgments (e.g., probability distributions);

- information about the most common cognitive biases and heuristics, including advice on how to overcome them; and

- practice elicitations (particularly examples where the true value is known).

In other words, if it is possible, you would like the experts to share a common understanding of exactly what information is being elicited. Although experts will approach the elicitation with a variety of differing perspectives based upon their diversity of training and experience, it is paramount that they all address the same problem as posed by the elicitation. This can be accomplished through pre-elicitation training. Also, it is important to allow the experts to gain experience with the elicitation protocol (i.e., the questionnaire, survey, interview, etc.) in advance of the actual elicitation. This way, when it comes time to provide their judgments, the experts will have the best chance of supplying them consistently.

Pre-elicitation training may also include tuning expert numeracy—for example, many experts are not familiar with describing their degrees of belief and uncertainty in terms of quantiles (e.g., 5%, 50%, 95%). Allowing all experts to participate in a group training session allows each the benefit of hearing the others’ questions (and your responses) and ensures that all have a common understanding of what will be asked of them.

In terms of expert judgment elicitation, a number of common mechanisms have been used for debiasing experts, typically in attempts to reduce overconfidence (Jørgensen, Halkjelsvik, & Kitchenham, 2012; Simola, Mengolini, Bolado-Lavin, & Gandossi, 2005):

- Expert training

- Feedback

- Incentive schemes, such as scoring rules

Though these efforts have been met with mixed results in the past (Alpert & Raiffa, 1982; Arkes, Christianson, Lai, & Blumer, 1987; Hogarth, 1975; Koriat, Lichtenstein, & Fischhoff, 1980; Lichtenstein et al., 1981), more recent efforts (which will be described next) have demonstrated positive results in debiasing experts.

Considerable attention has been devoted to the challenge brought about by cognitive biases and heuristics. For in-depth coverage of this specific set of issues, please refer to any of the many comprehensive books on the subject (e.g., Gilovich, Griffin, & Kahneman, 2002; Kahneman et al., 1982; Kahneman & Tversky, 2000; Tversky & Kahneman, 1974). Some of the most common biases and heuristics are described in Table 8.

Table 8 Cognitive biases and heuristics.

| Bias or Heuristic | Description | Solution |
| --- | --- | --- |
| Anchoring | Anchoring-and-adjustment involves starting from an initial value that is adjusted to yield the final answer. The initial value, or starting point, may be suggested by the formulation of the problem, or it may be the result of a partial computation. In either case, “adjustments are typically insufficient” (Tversky & Kahneman, 1974, p. 1128). | The most common demonstration of anchoring would be when a three-point estimate is asked of the expert: the most likely value or 50%, the practical minimum or 5%, and the practical maximum or 95%. Rather than asking for the most likely first, ask for the range values, the 5% and 95% (such that there will be a 90% chance the true value falls into the range), and then the most likely, the 50%. This will help avoid insufficient adjustment from the most likely estimate. |
| Representativeness | Representativeness refers to making an uncertainty judgment on the basis of “the degree to which it (i) is similar in essential properties to its parent population and (ii) reflects the salient features of the process by which it is generated” (Kahneman & Tversky, 1972, p. 431). Supporting evidence has come from reports that people ignore base rates, neglect sample size, overlook regression toward the mean, and misestimate conjunctive probabilities (Kahneman & Tversky, 2000; Tversky & Kahneman, 1974). | The representativeness bias can become an issue when extrapolating data or judgments from known populations of different sizes. This is particularly prevalent when making comparisons of likelihood. As a result, it is important to remind experts to continually think about base rates, sample size, and regression to the mean. |
| Availability | Availability is used to estimate “frequency or probability by the ease with which instances or associations come to mind” (Tversky & Kahneman, 1974, p. 208). In contrast to representativeness, which involves assessments of similarity or connotative distance, availability reflects assessments of associative distance (Tversky & Kahneman, 1974). Availability has been reported to be influenced by imaginability, familiarity, and vividness, and has been supported by evidence of stereotypic and scenario thinking (Tversky & Kahneman, 1974). | Availability results from an ease of recall. One way to mitigate effects of the availability heuristic would be to require and allow experts to review and consider the full spectrum of reports and studies immediately prior to the elicitation. This will enable them to have all of the information available for their judgments rather than just those that are more recent in memory. |
| Framing | Drawing different conclusions from the same information based on how that information is presented (Tversky & Kahneman, 1981). For example, suppose a scenario is presented such that an outbreak of an unusual disease is expected to kill 600 people. Two alternative programs are suggested. When the difference between the programs was framed showing program A saved 200 people and program B had P(600 saved) = 1/3 and P(0 saved) = 2/3, 72% of respondents opted for program A. However, when the difference between the programs was framed showing program C where 400 people died and program D had P(0 die) = 1/3 and P(600 die) = 2/3, 78% of respondents opted for program D. Even though programs A and C are identical (as are programs B and D), the results were different based on framing. | One way to mitigate the effects of framing is to carefully use neutral wording. Another possible way is to provide equivalent wordings to demonstrate potential framing issues. |
| Overconfidence | Overconfidence results when an expert’s subjective confidence in their own judgments exceeds (or is reliably greater than) their objective accuracy. This overconfidence can be observed in subjective statements of confidence or when the range between the 5% and 95% estimates of a three-point estimate is too narrow, and thus the standard deviation or variance of the resulting distribution is too small. Overconfidence may be observed when it is possible to evaluate expert performance using known seed variables. | Overconfidence may be held partially in check by demonstrating propensity for overconfidence during training. For example, experts will be asked to provide three-point estimates (5%, 50%, and 95%) for five known encyclopedic quantities (e.g., length of the Mississippi River, population of Washington, DC). Typically, less than 5% of experts will answer all five questions such that the true value falls within their range of confidence (between 5% and 95%). The majority of experts will correctly capture within their range the true value of two or fewer quantities. This training causes experts to more accurately express their uncertainty and better calibrate their confidence. |

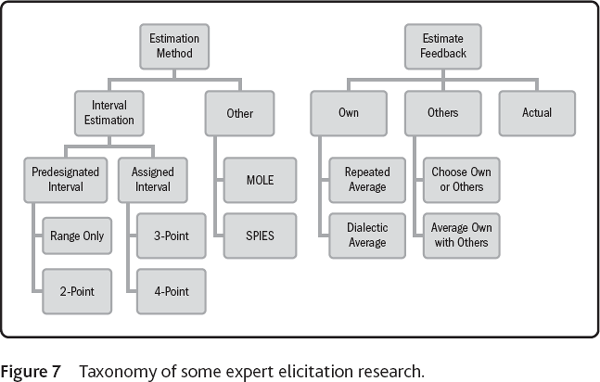

Looking only at a single bias, in 2011, a metasearch of more than 100 electronic bibliographic databases identified 2,092 articles with “overconfidence” in the title for an eight-year period. Of those, 26 (some with duplicates) clustered on the keyword “interval estimates.” A survey of the most recent research on overconfidence and interval estimation will now be summarized using the taxonomy provided in Figure 7. Articles generally fell within two categories: ones that dealt with improving elicitation via various methods and others that used feedback to improve elicitation. The majority of the “method” articles were focused on interval estimation, but there were two additional methods (i.e., SPIES and MOLE). The interval estimation methods were then broken down according to whether the interval was specified in advance (thus, predesignated) or the experts assigned their own intervals. Finally, the methods were further broken down into how many estimates or “points” were required for each judgment. The “feedback” articles were primarily sorted by the source of the feedback (i.e., self, when experts were provided feedback on their own estimates; others, when experts were provided feedback on other experts’ estimates; and actual, when feedback provided the eventual actual value of the estimate).

Interval estimation is a widely used means of eliciting expert judgments. Typically, experts are asked to provide values for a predesignated interval. For example, an expert would be asked to provide the 5th and 95th percentile estimates (such that the true value would theoretically occur within that interval 90% of the time, in the long run). Soll and Klayman (2004) compared three formats: a range only (the so-called one-point estimate), a two-point estimate (where experts are asked separately for the upper and lower percentile values), and a three-point estimate (which includes an estimate of the most likely value in addition to the estimates assigned to the upper and lower bounds of the predesignated interval). They demonstrated that the three-point estimate produced an average overconfidence of 14%, in comparison to average overconfidence of 23% and 41% for two-point and one-point estimates, respectively.

An alternative to this approach is to ask the experts to estimate the practical minimum and the practical maximum, and then ask them to assign an interval based upon their confidence (e.g., 50%, 82%, and 90%). Winman, Hansson, and Juslin (2004) have shown that allowing experts to assign the interval (what they called interval evaluation) yielded less overconfidence than having experts produce a predetermined interval. Average overconfidence dropped from 32% for the estimates of predesignated intervals to 14% for expert-assigned intervals.

Teigen and Jørgensen (2005) conducted similar experiments and demonstrated similar improvements in overconfidence reduction. Estimates of the predesignated 90% interval had an average overconfidence of 67%, estimates of the predesignated 50% interval had an average overconfidence of 27%, and estimates for the expert-assigned intervals had an average overconfidence of 15%. Speirs-Bridge et al. (2010) extended this investigation to include a four-point estimate. The four-point estimate asked the experts to identify upper and lower bounds, in addition to the most likely value, and then asked the experts to assign an interval based upon their confidence. The study employed authentic experts estimating real information (as opposed to students estimating artificial values) and yielded improvements in overconfidence; the four-point method had an average overconfidence of 12%, compared with 28% for the three-point method. Thus, one conclusion to be drawn is that when experts are asked to provide additional information about their estimates (from one to two to three and finally to four points), overconfidence is reduced.
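The overconfidence percentages reported in these studies can be made concrete with a small calculation. The following is a minimal sketch, assuming that overconfidence is measured as the stated confidence of an interval minus the observed hit rate (the share of intervals that contain the true value), which is a common convention in this literature but is not spelled out in the studies summarized here. The function name and the example data are hypothetical and for illustration only.

```python
from typing import Sequence, Tuple


def interval_overconfidence(
    intervals: Sequence[Tuple[float, float]],
    true_values: Sequence[float],
    stated_confidence: float,
) -> float:
    """Return stated confidence minus the observed hit rate.

    Assumed convention: a positive result means overconfidence
    (intervals too narrow); a negative result means underconfidence.
    """
    if not intervals or len(intervals) != len(true_values):
        raise ValueError("need at least one interval and one true value per interval")
    # Count the intervals that actually contain the true value.
    hits = sum(
        low <= truth <= high
        for (low, high), truth in zip(intervals, true_values)
    )
    hit_rate = hits / len(intervals)
    return stated_confidence - hit_rate


# Hypothetical 90% intervals (5th to 95th percentile) for five task
# durations in days, with the durations that were later observed.
example_intervals = [(10, 20), (5, 8), (30, 45), (2, 4), (12, 15)]
example_actuals = [18, 9, 40, 3, 20]

overconfidence = interval_overconfidence(example_intervals, example_actuals, 0.90)
# 3 of the 5 intervals contain the observed value, so the hit rate is 60%.
print(f"Overconfidence: {overconfidence:.0%}")  # prints "Overconfidence: 30%"
```

Under this convention, an expert whose 90% intervals capture the true value only 60% of the time is 30 percentage points overconfident; widening the intervals until roughly nine in ten contain the true value would bring the measure toward zero.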

The more-or-less-elicitation (MOLE) method has demonstrated improved accuracy and precision for elicited ranges (Welsh et al., 2008, 2009), but requires repeated relative judgments that are not often possible in the context of extensive expert elicitations. Similarly, subjective probability interval estimates (SPIES) require experts to assign probabilities across the full range of possible values; this method yielded 3% overconfidence, as compared to 16% overconfidence using a three-point estimate (Haran et al., 2010). This may not be feasible either; first, the range of possible values may not be known, and second, requiring multiple elicitations for each quantity of interest to obtain a full distribution will likely exceed the cognitive capacity of experts and result in fatigue during extensive elicitations. Therefore, although the MOLE and SPIES methods show promise, the focus here will return to interval estimation.

Alternatively, aside from the method chosen, how the problem is decomposed is also important. There is emerging evidence that “unpacking the future” by decomposing the distal future into more proximal futures improves calibration and reduces overconfidence (Jain, Mukherjee, Bearden, & Gaba, 2013).

Feedback regarding actual performance on elicitations will reduce overconfidence. When experts were provided feedback on how well their estimated intervals compared to the true values, overconfidence was reduced from 16% to 2% after the first session and to -4% after multiple sessions (Bolger & Önkal-Atay, 2004).

Furthermore, the average of quantitative estimates of a group of individuals is consistently more accurate than the typical single estimate because both random and systematic errors tend to cancel (Vul & Pashler, 2008), a phenomenon that has become known as the wisdom of the crowd. A similar effect can be created when one individual makes repeated estimates. Averaging a first estimate with a second, dialectic (i.e., antithetical) estimate simulates an averaging of errors (Herzog & Hertwig, 2009); whereas averaging with another expert increased accuracy by 7%, dialectic averaging increased accuracy by 4% (beyond that from mere reliability gains). Although it has been shown that diverse groups make better decisions than individuals (or homogeneous expert groups) (Hong & Page, 2004), the internal averaging effect was confirmed in another study of the “wisdom of crowds in one mind,” which also calculated the optimal number of times to elicit from each individual expert (Rauhut & Lorenz, 2010). When given the choice of whether or not to average with other experts, those who chose their own judgments over others’ frequently exhibited 24% overconfidence, those who occasionally chose their own over others’ exhibited 17% overconfidence, and those who regularly combined their judgments with others’ exhibited 13% overconfidence (Soll & Larrick, 2009). Therefore, feedback and averaging generally help reduce overconfidence.
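The arithmetic behind the averaging effect can be illustrated with a short sketch. It compares the error of the averaged estimate with the average of the individual errors; when individual estimates fall on both sides of the true value, their errors partly offset one another in the average. The function name and the numbers are hypothetical and are not drawn from any of the cited studies.

```python
from statistics import mean
from typing import Sequence, Tuple


def crowd_vs_individual_error(
    estimates: Sequence[float], truth: float
) -> Tuple[float, float]:
    """Return (error of the averaged estimate, average individual error).

    Both are absolute errors. The first can never exceed the second,
    because errors of opposite sign offset one another when averaged.
    """
    if not estimates:
        raise ValueError("need at least one estimate")
    error_of_average = abs(mean(estimates) - truth)
    average_individual_error = mean([abs(e - truth) for e in estimates])
    return error_of_average, average_individual_error


# Hypothetical estimates from five experts of a quantity whose true
# value turns out to be 100 (e.g., a cost in thousands of dollars).
expert_estimates = [80, 95, 110, 130, 90]
crowd_error, typical_error = crowd_vs_individual_error(expert_estimates, 100)

# The average estimate is 101, so the crowd misses by 1,
# while the experts individually miss by 15 on average.
print(crowd_error, typical_error)
```

The same calculation applies to the "crowd within" case: replacing the five experts with a first estimate and a second, dialectic estimate from one person gives the averaged error for that individual. If all estimates err in the same direction, averaging yields no gain over the typical individual, which is one reason diversity among experts matters.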

Even with this quick review of a small slice of the expert judgment literature, it should be readily apparent that there is a considerable body of experiments, studies, literature, and theory on expert judgment that should ultimately be applied to the practice of project management in a more systematic manner.

Additionally, because bias may have the greatest impact on judgment, attention can be focused on debiasing. Ways to debias judgment include “modifying” either the person or the environment (Soll, Milkman, & Payne, 2014), including teaching cognitive strategies, providing nudges to induce reflective thinking (Jain et al., 2013), and so on. Training could also be as simple as providing the experts with exposure to the types of questions that will prime their thinking.

Appropriate expert training may include a mix of orientation, practice, debiasing, feedback, and providing appropriate incentives.

2.4.4 Eliciting Judgments

As seen previously, there are many elicitation methods for gathering expert judgments. Also, it has been observed that the method must be matched to the purpose or problem.

Due to the subjective nature of elicitation it is important to provide a transparent account of how values are elicited and what information was available to experts to aid in their estimation of various quantities. (Roelofs & Roelofs, 2013, p. 1651)

For example, if the elicitation task involves creating a list of ideas, scenarios, risks, and so forth, then a generative method would be the best choice. As noted in the state-of-the-practice survey, brainstorming was the most frequently used expert judgment tool/technique. It is easy to implement, familiar, and widely used. Osborn (1957) noted that people can generate twice as many ideas when working in groups compared to working alone by adhering to the following simple rules: More ideas are better, wilder ideas are better, improve and combine ideas to create more, and refrain from criticism. These rules remove the inhibitions of criticism. However, researchers have demonstrated that nominal groups (where participants work independently before combining their ideas) outperform brainstorming groups by a factor of two under similar conditions (e.g., Taylor, Berry, & Block, 1958). Some of the theories of the productivity loss from brainstorming include production blocking (from monochannel communication) (Lamm & Trommsdorff, 1973), evaluation apprehension (or fear of criticism from others) (Collaros & Anderson, 1969), and free riding (or social loafing). The effects of these barriers to productivity may be moderated through allowing independent work prior to group brainstorming sessions, clearly describing instructions and rules against evaluation, limiting group size, and ensuring incentives and assessment evaluation (Diehl & Stroebe, 1987). Other more recent techniques to improve brainstorming include cognitive priming (Dennis, Minas, & Bhagwatwar, 2013), avoiding categorization or clustering a priori (Deuja, Kohn, Paulus, & Korde, 2014), and pacing/awareness to avoid cognitive fixation (Kohn & Smith, 2011).

Given its prevalence and persistence (Gobble, 2014) and the fact that it may well serve other organizational purposes (Furnham, 2000), brainstorming will continue to be an option, but it should be used with due caution and in accordance with the intent of the original creators and the researchers who have improved the productivity of brainstorming.

There are many variants of the traditional (now almost six decades old) technique of brainstorming. Increasingly, brainstorming involves virtual groups (e.g., Alahuhta, Nordbäck, Sivunen, & Surakka, 2014; Dzindolet, Paulus, & Glazer, 2012) and crowd sourcing (Poetz & Schreier, 2012), as technology improves opportunities for involvement from a diversity of experts and users. Brainwriting (or brain sketching) is another method of generative elicitation, where group members begin by silently sketching their ideas and annotations on large sheets of paper that are then shared among group members for another round of brainwriting (VanGundy, 1988). Six-five-three brainwriting is where six participants each write three ideas on a sheet of paper that is circulated and the process is repeated five times (e.g., Otto & Wood, 2001; Shah, 1998; Shah, Kulkarni, & Vargas-Hernández, 2000; Shah, Smith, & Vargas-Hernández, 2003). Collaborative sketching (or C-Sketch) (Shah, Vargas-Hernández, Summers, & Kulkarni, 2001) is a variant of 6-5-3 brainwriting, where participants draw diagrams instead of using words, and the misinterpretation of ambiguous drawings may lead to new ideas. One study found rotational brainwriting techniques to outperform nominal group techniques in terms of both quantity and quality (Linsey & Becker, 2011).

Another common method, the nominal group technique (Delbecq & Van de Ven, 1975), has proven to be as effective as or more effective than brainstorming. This technique involves having group members work in silence (i.e., they are a group in name only) before ideas are shared and expanded. As can be imagined, there are hundreds of idea generation techniques (e.g., Adams, 1986; Higgins, 1994). There is no single best method (e.g., nominal groups outperform brainstorming, brainwriting outperforms nominal groups, different methods outperform others in differing contexts, etc.), and the selection of methods will be dictated by the nature of the problem and the skill and experience of the person(s) conducting the elicitation.

When it comes to evaluative expert elicitation, there are even more methods to choose from (see Figure 5 and Table 6). Additionally, several prominent methods are described in this chapter. It would be far too cumbersome to attempt to explain all of the various methods here. Instead, attention will be turned toward the elicitation process itself (rather than the method of elicitation) to identify some of the key elements of an elicitation according to Meyer and Booker (2001):

- Will the elicitation be individual or interactive (i.e., the situation or setting)?

- What form of communication will be used (e.g., face-to-face, virtual, etc.)?

- Which technique will be selected (see Tables 5 and 6 for examples of the various generative and evaluative methods)?

- What will be the form of the response mode (e.g., estimate, rating, ranking, open, etc.)?

- Will experts be provided with feedback?

There are many factors to consider when designing an expert judgment elicitation (e.g., how best to survey experts [Baker, Bosetti, Jenni, & Ricci, 2014]) and, in order to achieve the best possible expert judgment, much planning and attention must be paid to the execution.

2.4.5 Analyzing and Aggregating Judgments

Once the judgments have been elicited, they will need to be evaluated and, if deemed necessary, combined or aggregated. The nature of how the judgments are analyzed and aggregated will be dependent upon the form of the information or data sought. There are two basic forms of aggregation methods: behavioral and mathematical. In general, generative (qualitative) judgments are most often combined using behavioral aggregation methods, and evaluative (quantitative) judgments are combined using mathematical aggregation methods.

There are several comprehensive reviews of aggregation methods in the literature (e.g., Clemen, 1989; Clemen & Winkler, 1999; French, 1985, 2011; Genest & Zidek, 1986), including several that have annotated bibliographies. Rather than replicate those contributions here, only the high points will be summarized.

Behavioral methods require the experts to interact in order to generate some agreement. These methods generate consensus aggregation as a by-product of the expert judgment elicitation process, rather than as a consequence of some manipulation after the elicitation (as is the case with mathematical aggregation). The following are some behavioral expert judgment aggregation methods:

- Group Assignment: This method has the experts work together to develop a group assignment of the probability distribution or quantity of interest.

- Consensus Direct Estimation: Here, too, the group of experts identifies a quantity of interest and then comes to a consensus through interaction.

- Delphi Method (Dalkey & Helmer, 1963): In this well-known iterative, asynchronous process, experts first provide their estimates independently. The anonymous results are then shared, and the experts are allowed to comment and update their estimates. Rounds continue until there is sufficient consensus. Its advantages include anonymity, the opportunity to gain new information or defend outlier positions, and self-rating. There are also many disadvantages, as enumerated by Sackman (1975): the process is time-consuming, does not adhere to psychometric rules, results in unequal treatment of experts, offers no means for dealing with lack of consensus, requires no explanation as to why experts prematurely exit surveys, and may converge simply as a result of boredom. Gustafson, Shukla, Delbecq, and Walster (1973) found that the technique produced worse results than the nominal group technique and simple averaging.

- Nominal Group Technique (Delbecq & Van de Ven, 1975): Experts make judgments first independently and then come together to form consensus. This technique allows synergy among experts, but there is potential for bias (as in any of the behavioral methods).

- Analytic Hierarchy Process (Saaty, 1980): Experts individually rank alternatives using relative scales and the rankings are then combined. The advantages of this process are that it allows hierarchical design, is easy, is structured such that comparisons are reciprocal, and provides means for diagnosing experts through consistency measures. The disadvantages have been identified by Dyer (1990) and include the potential for rank reversal and independence in weights between hierarchies.

- Kaplan Method (Kaplan, 1990): This method requires the facilitation of experts in discussing and developing a consensus body of evidence. Using that consensus body of evidence, a distribution is proposed and then argued among the experts based upon the shared evidence until consensus is obtained.

With all interactive groups, there is potential for problems, such as groupthink (Janis, 1982; Janis & Mann, 1977), polarization (Plous, 1993), and expert dominance. Despite these potential problems (which can be addressed through the elicitation protocol), group performance is typically better than that of the average group member, but not as good as that of the best group member, according to one study of 50 years of research on decision making (Hill, 1982).

Mathematical methods use analytical processes or mathematical models to combine individual expert judgments into a combined judgment. The following are some mathematical expert judgment aggregation methods (which are typically conducted after judgments have been elicited):

- Weighted Arithmetic Mean (also known as Linear Opinion Pool [Stone, 1961]): This appealing approach averages expert judgments (e.g., probabilities) using equal or performance-based weights. With equal weights, it reduces to a simple average of the judgments. Some advantages of this method include ease of calculation, ease of understanding, maintaining unanimity, the fact that weights can represent expert quality, and satisfying marginalization. However, the determination of weights may be subjective (Genest & McConway, 1990).

- Weighted Geometric Mean (also known as Logarithmic Opinion Pool): This method uses a multiplicative average and a normalizing constant. It is a weighted average. Some advantages include that it can be easily updated with new information and weights can represent expert quality. Again, determination of weights is subjective.

- Mendell-Sheridan Model (1989): This Bayesian approach creates joint expert quantile estimates. In contrast to frequentist approaches to determining probabilities based upon the frequency of occurrence of an event, Bayesian approaches allow a degree of belief to be incorporated. Some of the advantages include that it has default egalitarian priors (i.e., equally weighted prior beliefs), experts are not restricted to a class of distribution, it supports updating, it accounts for correlation, and it has been experimentally tested. It is, however, computationally complex, sensitive to units, and dependent upon seed variables to “warm up.”

- Morris Model (1977): This Bayesian method provides a composite probability assignment for the quantity of interest incorporating the decision makers’ prior understanding of the situation (offered in the form of a distribution). Some advantages include the fact that conflicting expert assessments can be accommodated, the model is invariant to scale and shift, precision and accuracy are dealt with separately through decomposition, and calibration is inherent to the model. Disadvantages include its restriction to normality assumptions of expert priors and the fact that it does not address the issue of expert dependence.

- Additive (and Multiplicative) Error Models (Mosleh & Apostolakis, 1982): Using vectors for quantile estimates, a combined distribution is developed that provides expert performance and correlation data. It is relatively simple and the errors are normally distributed. However, there is a heavy burden on the decision maker to supply the prior, bias, and accuracy for each expert. Also, it does not generalize to all classes of distributions.

- Paired Comparison Model (Pulkkinen, 1993): This Bayesian model creates a composite posterior mean and variance based upon expert-paired comparison information. Advantages include the fact that the comparisons are intuitively accessible, the likelihood is derived from comparison responses, and it is relatively flexible. However, it has weak dependence among experts and cannot be solved in closed form (requiring simulation solution).

- Information Theoretic Model (Kullback, 1959): This method identifies an aggregate probability distribution with the least cross-entropy. Some advantages are that it is a normative model, it retains distributional family (often a simple combination of parameters), and it provides alternative objective criteria to fit application. However, experts may require weighting.
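The first two methods in the list above can be sketched for the simple case in which each expert assigns probabilities to the same set of discrete outcomes. This is a minimal illustration, not a reference implementation: the function names, the outcome categories, and the expert probabilities are all hypothetical, and the weights are assumed to be non-negative and to sum to one.

```python
import math
from typing import List, Sequence


def _check(distributions: Sequence[Sequence[float]], weights: Sequence[float]) -> None:
    """Validate that inputs are aligned and that the weights sum to one."""
    if not distributions or len(distributions) != len(weights):
        raise ValueError("need one weight per expert distribution")
    if len({len(d) for d in distributions}) != 1:
        raise ValueError("all experts must assess the same outcomes")
    if not math.isclose(sum(weights), 1.0):
        raise ValueError("weights must sum to 1")


def linear_pool(distributions: Sequence[Sequence[float]],
                weights: Sequence[float]) -> List[float]:
    """Weighted arithmetic mean of the experts' probabilities, per outcome."""
    _check(distributions, weights)
    n_outcomes = len(distributions[0])
    return [
        sum(w * d[i] for w, d in zip(weights, distributions))
        for i in range(n_outcomes)
    ]


def logarithmic_pool(distributions: Sequence[Sequence[float]],
                     weights: Sequence[float]) -> List[float]:
    """Weighted geometric mean per outcome, rescaled by a normalizing constant."""
    _check(distributions, weights)
    n_outcomes = len(distributions[0])
    unnormalized = [
        math.prod(d[i] ** w for w, d in zip(weights, distributions))
        for i in range(n_outcomes)
    ]
    normalizing_constant = sum(unnormalized)
    return [u / normalizing_constant for u in unnormalized]


# Hypothetical judgments from two experts on whether a milestone will be
# early, on time, or late, combined with equal weights.
expert_a = [0.2, 0.5, 0.3]
expert_b = [0.4, 0.4, 0.2]
equal_weights = [0.5, 0.5]

print(linear_pool([expert_a, expert_b], equal_weights))       # about [0.30, 0.45, 0.25]
print(logarithmic_pool([expert_a, expert_b], equal_weights))  # probabilities sum to 1
```

With equal weights the linear pool is the simple average of the two experts. Performance-based weights can be substituted for `equal_weights` without changing either function.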

Many of the mathematical aggregation methods use performance-based weights (or scoring rules) to combine expert judgments, such that the judgments of more accurate experts (i.e., those with superior performance) are given higher weighting in the aggregation (and vice versa). However, evaluating expert performance is extremely difficult, and the quality of the “experiential insight” (Crawford-Brown, 2001) of each particular expert must be evaluated as objectively as possible. The difficulty is that the accuracy of an expert’s judgment about an unknown quantity of interest is not typically known at the time of the aggregation because the project has not yet taken place. One way to evaluate expert accuracy is to use “seed” variables, whose actual values are known to the analysts administering the elicitation (e.g., in cases where historic data may be available or in cases where additional reports were unavailable to the experts). The seed variables are introduced into the elicitation protocol, and experts (who do not know the true values of the seed variables) estimate those values along with those of the quantities of interest. Expert performance is then evaluated by examining the experts’ accuracy on the set of seed variables. Measuring expert performance is important because, in addition to being a means of combining expert judgments, it can also enhance the credibility of a study or plan. (See Cooke [1999] for a procedure that “calibrates” experts using seed variables.) Although a strong case can be made for such an aggregation method, there is some evidence that Cooke’s (1999) classical method performs no better than equal weighting and may suffer from sample bias (Clemen, 2008).

There are also other metrics of expert performance using various forms of measuring accuracy, bias, and calibration, in addition to conventional scoring rules (e.g., Cooke, 2015; DeGroot & Fienberg, 1982; Murphy, 1972a, 1972b; Matheson & Winkler, 1976; Yates, 1994a, 1994b).
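
To make the idea of seed-variable weighting concrete, the following is a minimal sketch in Python. It is not Cooke's classical method, which scores experts on the calibration and informativeness of their quantile assessments. Instead, it assumes a much simpler illustrative scheme in which each expert's weight is inversely proportional to his or her mean absolute relative error on the seed variables. The function names, the weighting scheme, and the small constant that guards against division by zero are all assumptions made for illustration; none come from the literature reviewed here.

```python
from statistics import fmean

# Illustrative assumption: weight = 1 / (mean absolute relative error on seeds).
# This is a simplified stand-in, not Cooke's classical model.
EPSILON = 1e-9  # hypothetical guard so a perfect seed score does not divide by zero


def seed_weights(seed_truth, seed_estimates):
    """Return normalized weights (summing to 1), one per expert.

    seed_truth: known values of the seed variables (assumed nonzero).
    seed_estimates: dict mapping expert name -> estimates, in the same order.
    """
    raw = {}
    for expert, estimates in seed_estimates.items():
        errors = [abs(est - true) / abs(true)
                  for est, true in zip(estimates, seed_truth)]
        raw[expert] = 1.0 / (fmean(errors) + EPSILON)
    total = sum(raw.values())
    return {expert: score / total for expert, score in raw.items()}


def weighted_estimate(weights, target_estimates):
    """Combine estimates of the quantity of interest using the given weights."""
    return sum(weights[expert] * value
               for expert, value in target_estimates.items())


def equal_weight_estimate(target_estimates):
    """Simple average of the experts' estimates (equal weighting)."""
    return fmean(target_estimates.values())
```

As a usage example, suppose two seed variables have known values of 10 and 20 days. Expert A estimates 10 and 22, and expert B estimates 15 and 30. Calling `seed_weights([10.0, 20.0], {"A": [10.0, 22.0], "B": [15.0, 30.0]})` gives A the larger weight. If A and B then estimate 30 and 40 days for the quantity of interest, `weighted_estimate` returns a value closer to A's estimate, whereas `equal_weight_estimate` returns the simple average of 35. Comparing the two results against the eventual outcome is one informal way to see whether performance weighting adds value over equal weighting in a given setting.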

In some instances, the judgments should not be combined at all, such as when opinions differ considerably and it is important to retain that distribution of judgment (Keith, 1996). In summary, although an enormous body of research and literature is devoted to the aggregation of expert judgments, two conclusions stand out: behavioral methods are best suited for generative judgments, and mathematical methods are best suited for evaluative judgments. Further, although there are many different and sophisticated methods for mathematical aggregation, simple averaging often outperforms other methods (Clemen & Winkler, 1999).

2.5 Findings and Implications

This review of the literature has uncovered several significant findings regarding how the state of the art/science can inform the practice of expert judgment in project management:

- There are a great many elicitation protocols. The generic seven-step protocol is a compilation of some of the most prominent protocols and adheres to the five phases of project management.

- Expert judgment in project management conforms to two basic forms: generative and evaluative.

- Generative information may best be obtained from fluent (or literate) experts. Evaluative information may best be obtained from numerate experts.

- Expert selection is important and expertise should be matched to the information needed.

- Some expert judgment elicitation methods are better suited for generative tasks; others are better suited for evaluative tasks.

- Expert judgment can be improved through pre-elicitation training, debiasing, and feedback.

- Expert judgments can be aggregated using mathematical (e.g., arithmetic average) or behavioral (e.g., consensus) means. Often, the best way to combine evaluative judgment (e.g., interval estimates) is by simple averaging.

Given the breadth and depth of the state of the art/science (i.e., in disciplines other than project management), there is considerable opportunity to improve the practice in project management and advance how expert judgment is used as a tool/technique.