In this chapter we will learn the following topics:

- Best practices in SOA testing and test automation

- Why do we need best practices?

- How would the best practices help?

Best practices are essential to the success of any test automation project. They help us avoid hurdles and reach the desired result: a working framework that needs minimal maintenance and offers maximum script reuse.

Best practices are guidelines that, when followed, leave us with fewer challenges and greater efficiency.

Like any other kind of test automation, SOA testing has its own best practices, which experts have learned over their careers while implementing SOA test automation frameworks.

Let's go through the best practices for SOA test automation:

- Choose the right tool

- Get involved early in the development and design phase

- Get the right resources

- Plan candidates for virtualization or service mocking

- Process

- Define candidates for automation

- Dedicated and locked test environment

- Encapsulated framework

- Dynamic assertions

- Three levels in SOA testing

- Perform non-functional tests

- Correct onshore, nearshore and offshore ratio

- Utilizing unit test with test automation

- Build good manual test cases

- Is the project mature enough for test automation?

So let's discuss all of these, one-by-one and in detail.

Choosing the right tool is really important and the following factors should be considered while choosing an SOA test automation tool:

- Budget: Budget is an important factor. We should choose a tool that fits our budget, since we might need to buy a license for each tester if the client doesn't already have licenses.

- Protocol support: We need to identify the protocols the tool supports, as it might not support the protocol used in the current project. For example, TCP/IP is not supported by SoapUI but is supported by IBM RIT (Rational Integration Tester).

- Message format support: We need to identify the message formats the tool supports, as it might not support the format used in the current project. For example, SWIFT or ISO format messages are not supported by SoapUI, but they are supported by IBM RIT.

- Training needs: We need to assess the training required for the tool we choose, along with the training cost, which adds to the project budget.

- Tool support: The tool support should be available and reachable.

- User reviews: To confirm the choice, it is recommended that we check user reviews of the tools on sites like LinkedIn.

- Virtualization/mock service support: We need to verify whether the tool supports virtualization or mock services, and which protocols it supports for them.

- Support for non-functional testing: This is essential, so tools should be checked for performance and security testing support.

- Support for UI interactions: End-to-end testing may require interacting with the UI, so we should consider whether the tool can invoke a UI when needed. LISA, for example, is capable of invoking the UI.

Both manual SOA testing and SOA test automation require early involvement of the test team. SOA testers need more than technical skill: they must also know the business, as this helps them create better test automation suites.

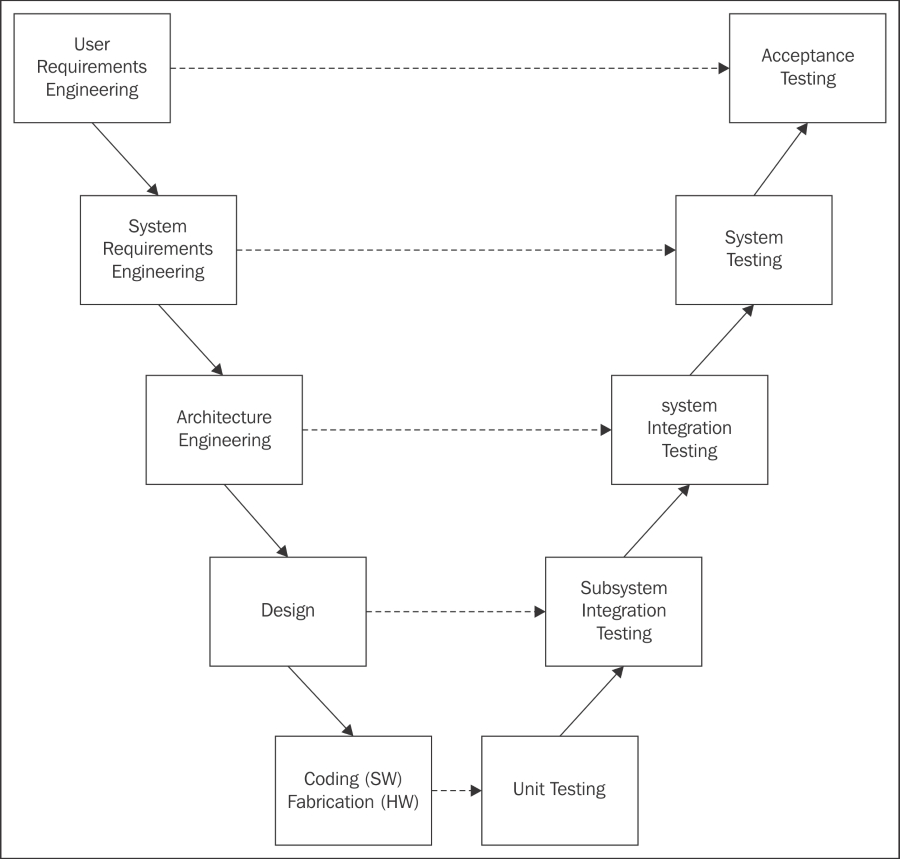

To best suit the needs of the early involvement of the test team we should use the following V Model:

V Model

It is recommended that the V model be implemented in any SOA testing and test automation project for the following reasons:

- It supports a top-down as well as a bottom-up approach to testing and test automation

- The test levels define each type of testing in detail, with specifications

- This model facilitates testing at every stage and hence throughout the lifecycle

The preceding points show that the V model gives testers a clearer view of the business because they are involved early. This pays off in better test cases and better test automation.

Most applications depend on third-party systems whose services need to be tested. During unit testing and integration testing the real system is often not available to us, so we cannot test the integration between systems and therefore cannot execute those tests.

So what do we do?

We virtualize, or mock, the services of the systems that are not available to us.

We should consider the following factors while evaluating a tool for virtualization:

- Protocols supported

- Message formats supported

- Virtualization server cost

The preceding factors, once evaluated, will help you choose a tool from a test virtualization perspective.
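As a rough illustration of what mocking an unavailable system involves, the sketch below starts a throwaway HTTP endpoint that returns a canned XML response and then calls it the way a test would. It uses the JDK's built-in `com.sun.net.httpserver.HttpServer` rather than any particular virtualization tool; the `MockEmployeeService` class, the `/employee` path and the response body are assumptions made up for this example.

```groovy
import com.sun.net.httpserver.HttpExchange
import com.sun.net.httpserver.HttpHandler
import com.sun.net.httpserver.HttpServer
import groovy.transform.CompileStatic

@CompileStatic
class MockEmployeeService {
    // Hypothetical canned response standing in for the unavailable third-party system.
    static final String CANNED_XML = '<employee><id>101</id><name>Test User</name></employee>'
    private HttpServer server

    // Start the mock on a free port chosen by the OS and return its URL.
    String start() {
        server = HttpServer.create(new InetSocketAddress('localhost', 0), 0)
        server.createContext('/employee', new HttpHandler() {
            @Override
            void handle(HttpExchange exchange) {
                byte[] body = CANNED_XML.getBytes('UTF-8')
                exchange.getResponseHeaders().add('Content-Type', 'text/xml')
                exchange.sendResponseHeaders(200, (long) body.length)
                exchange.getResponseBody().write(body)
                exchange.close()
            }
        })
        server.start()
        return 'http://localhost:' + server.getAddress().getPort() + '/employee'
    }

    // Shut the mock down immediately.
    void stop() {
        server?.stop(0)
    }
}

def mock = new MockEmployeeService()
def reply
try {
    // The test talks to the mock exactly as it would to the real service.
    reply = new URL(mock.start()).text
} finally {
    mock.stop()
}
println reply
```

A dedicated virtualization tool adds protocol and message format support on top of this basic idea, which is why those factors matter when evaluating one.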

It is very important to consider resources, as we should have sufficient expertise in the tool we plan to select for a project. We should evaluate this point based on the following constraints:

- Number of expert resources

- Training required

- Learning curve

- Ease of use

- Any previous experience in the organization

The preceding factors would help everyone evaluating the tool for their project.

The testing process in SOA testing should be well defined. Following are some of the factors to validate:

- Define the test-coverage metrics

- End-to-end traceability metrics

- Execution process and tracking

- No ad-hoc testing

- Define the tests

- Define test entry and exit criteria at each stage

It is not possible to automate all the test cases, so it is important to identify the correct candidates for test automation.

The advantage of test automation is that tests are repeatable; a test that is executed infrequently can be left to manual testing.

A correct test case candidate for test automation should satisfy the following criteria:

- Repetitive tests and regression tests

- Tests which are complex and may cause human error

- Tests that require multiple data sets

- Critical functionality tests which are used in business-as-usual (BAU) flows

- Tests that take time to execute

- Tests that must be run on multiple environments with different configurations

It is very important to have a dedicated, locked test environment for SOA testing and test automation, separate from the developers' environment. A dedicated test environment provides stability in test results and makes issues traceable.

Adding access controls to the test environment ensures that nobody on the development or test team tampers with the build, as access remains with key people only.

This approach will really help in the following ways:

The design of any test automation framework (TAF) is its most important feature. If the design is good, the framework will be scalable, reliable and efficient, with very little maintenance effort.

Considering the way the testing arena has changed over the past decade it is recommended to use encapsulation for your test automation framework.

Encapsulation is the mechanism of wrapping the data (variables) and code acting on the data (methods) together as a single unit.

So we should remember the above statement while we implement encapsulation in our TAF.
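To make the definition concrete, here is a minimal sketch of an encapsulated helper a TAF might contain. The class name `EmployeeServiceClient`, the base URL and the header are hypothetical and chosen only for illustration; the point is that the endpoint data and the methods acting on it are kept together in one unit.

```groovy
// Hypothetical wrapper for one service under test: the endpoint data and the
// methods that act on it live together in a single unit.
class EmployeeServiceClient {
    private final String baseUrl                       // internal state
    private final Map<String, String> headers = ['Content-Type': 'text/xml']

    EmployeeServiceClient(String baseUrl) {
        this.baseUrl = baseUrl
    }

    // Tests call this method instead of assembling URLs themselves.
    String employeeUrl(String empId) {
        return baseUrl + '/employee/' + empId
    }

    // Hand out a read-only view so callers do not alter shared state.
    Map<String, String> requestHeaders() {
        return headers.asImmutable()
    }
}

def client = new EmployeeServiceClient('http://qa.example.test')
println client.employeeUrl('101')
```

With this shape, a change to the endpoint layout is made in one class rather than in every test script, which is where the reduced maintenance effort comes from.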

Let's now have a look at the assertions used in the TAF, which are what enable it to detect defects.

It is very important for any framework to have dynamic assertions rather than having static assertions. In dynamic assertions we validate the data at runtime, based on the input, and fetch the expected results from the desired data source, whereas in static assertion, expected results are static and independent of the data sent in the request.

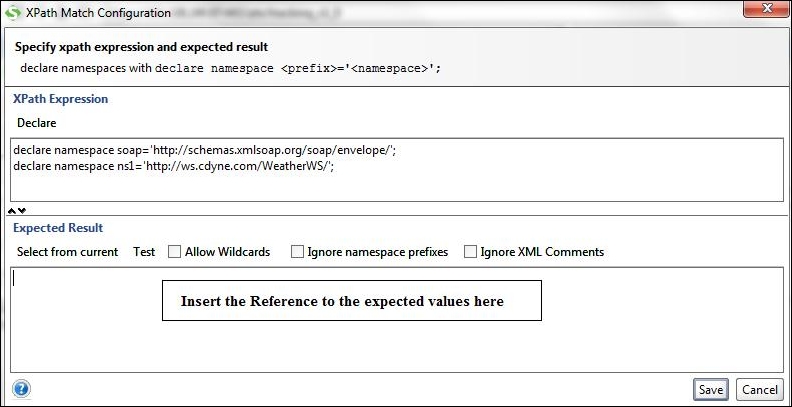
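The difference can be sketched in a few lines. In the example below, the `expectedNames` map stands in for the expected-results data source and `callService` stands in for the service under test; both are hypothetical stubs, as are the element names in the XML. The static check compares every response with one hard-coded value, while the dynamic check looks up the expected value for the input that was sent.

```groovy
import groovy.transform.CompileStatic
import javax.xml.parsers.DocumentBuilderFactory
import javax.xml.xpath.XPathFactory
import org.w3c.dom.Document

@CompileStatic
class ResponseReader {
    // Evaluate an XPath expression against a response and return the text it selects.
    static String xpathValue(String xml, String expression) {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes('UTF-8')))
        return XPathFactory.newInstance().newXPath().evaluate(expression, doc)
    }
}

// Hypothetical stand-in for the expected-results data source (a database in practice).
def expectedNames = ['101': 'Asha', '102': 'Ravi']

// Hypothetical stand-in for the service: builds the response for a given Emp ID.
def callService = { String empId ->
    '<employee><id>' + empId + '</id><name>' + expectedNames[empId] + '</name></employee>'
}

def staticExpected = 'Asha'   // hard-coded, correct for Emp ID 101 only

def results = ['101', '102'].collect { empId ->
    def actual = ResponseReader.xpathValue(callService(empId), '/employee/name')
    [empId        : empId,
     staticPasses : actual == staticExpected,        // ignores the input
     dynamicPasses: actual == expectedNames[empId]]  // looked up per input
}
results.each { println it }
```

For Emp ID 102 the static check fails although the response is correct, which is exactly the false failure that dynamic assertions avoid.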

Let's take a look at a dynamic assertions implementation.

Let's suppose we have a test request and here is the structure of the automated test case:

- Groovy script: test data import

- Groovy script: set environment

- Groovy script: expected results retrieval and storage

- Test request with XPath assertions

- Result logging for reporting purposes

Now here in the above example let's look at a few things:

We import the input test data for the request based on which results would be fetched.

For example, suppose the request is a get-employee-data request that takes Emp ID as its input, supplied by the test data import step. Whenever the Emp ID changes, the expected results change too, so a static assertion fails even when the response is correct.

So to solve this issue we have dynamic assertions.

Let's see how this can be achieved.

We will now break the task of creating dynamic assertions into three steps.

Step 1: Import Excel data using the following script:

```groovy
import jxl.Cell
import jxl.Sheet
import jxl.Workbook

// Assumption: the original script reads the value column from an undefined
// variable (s3); column index 1 is used here purely for illustration.
int valueColumn = 1

// A forward slash avoids the invalid "\i" escape in the original path.
Workbook workbook = Workbook.getWorkbook(new File("D:/import input data.xls"))
try {
    Sheet sheet = workbook.getSheet(1)          // sheet indexes are zero-based
    for (row in 2..11) {
        // jxl uses getCell(column, row): the property name sits in column 0
        // and its value in valueColumn.
        Cell nameCell  = sheet.getCell(0, row)
        Cell valueCell = sheet.getCell(valueColumn, row)
        // Store each name/value pair as a test case property.
        testRunner.testCase.setPropertyValue(nameCell.getContents(), valueCell.getContents())
    }
} finally {
    workbook.close()
}
```

Step 2: Once the input data has been imported and saved, you can use it to fetch the expected results from the database with the following script:

```groovy
import groovy.sql.Sql

// Retry settings and the imported input value are read from SoapUI properties.
int delayMs  = Integer.parseInt(testRunner.testCase.testSuite.getPropertyValue("delayStep"))
int maxTries = Integer.parseInt(testRunner.testCase.testSuite.getPropertyValue("delayRetries"))
def empId    = testRunner.testCase.getPropertyValue("EMPID")

def sql = Sql.newInstance("jdbc:oracle:thin:@10.253.10X.20X:1521:XXQA4",
        "XCWDEV4SL", "XCWDEV4SL", "oracle.jdbc.driver.OracleDriver")
def row = null
try {
    // Poll until the record appears or the retries run out.
    for (int attempt = 1; attempt <= maxTries && row == null; attempt++) {
        log.info "Delaying ${delayMs * 0.001} seconds."
        Thread.sleep(delayMs)
        // Bind EMPID as a parameter instead of concatenating it into the SQL.
        row = sql.firstRow("select * from com_header where EMPID = ? order by cwordercreationdate desc", [empId])
    }
} finally {
    sql.close()
}
assert row != null : "No com_header row found for EMPID ${empId}"

// Assumption: EMPNAME and AGE are placeholder column names; the original query
// does not list its columns, so substitute the real ones.
testRunner.testCase.setPropertyValue("EMPName", row.EMPNAME.toString())
testRunner.testCase.setPropertyValue("emp_age", row.AGE.toString())
```

Step 3: The stored properties can now be referenced in XPath assertions, which makes the assertions dynamic.

In the Expected Results section, you can reference a property created at the test case level. For example, if the property is created at the test case level and is named ExpectedV1, the expression to put in the expected results is ${#TestCase#ExpectedV1}. You can also put a static value in the Expected Result section.

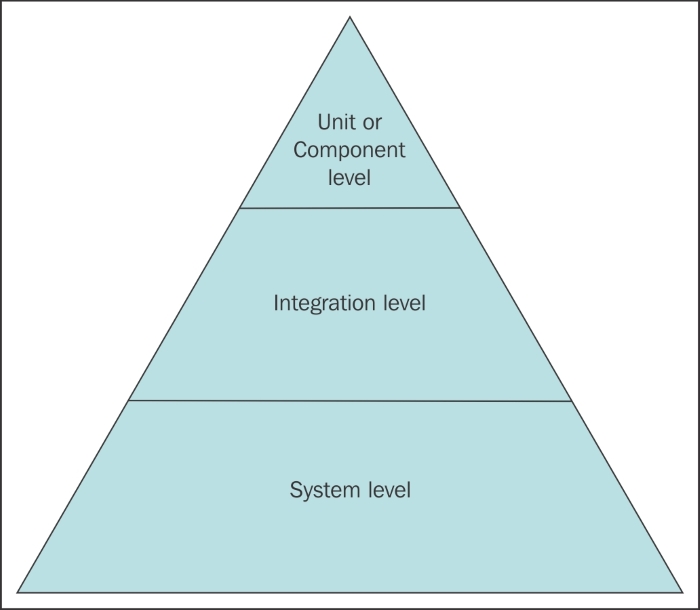

We now have a better idea of why dynamic assertions are valuable and why they belong on the best-practices list.

To achieve the desired result in SOA testing and test automation, we should organize SOA testing into three levels, or phases:

- Unit or component level: testing the components independently

- Integration level: testing the integration between components

- End-to-end testing/system level: testing the implementation as a whole

When we define SOA testing in three phases we gain the following benefits:

It can also be said that following the three-level approach will produce a quality application with better, reusable SOA components.

Like any other component in a composite application, APIs and web services can be tested not just for functionality but also from a non-functional point of view.

- Security testing: verify the API from a security point of view. Test the API for the following:

  - SQL injection

  - XPath injection

  - Malformed XML

  - Boundary scan

  - Malicious attachment

  - Cross-site scripting (XSS)

- Performance, load and stress testing: verify the behavior of a single API or multiple sets of APIs under various loads. Test the performance of the application using the following performance testing techniques:

  - Load

  - Soak

  - Endurance

  - Benchmark

- Interoperability and connectivity: can the API be consumed in the agreed manner, and does it connect to other components as expected?
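As a small illustration of the first security item, the sketch below shows the general shape of an SQL injection check: send requests whose input carries injection strings, then look for signs that database details leaked into the response. The request template, the injection strings, the leak signatures and the sample responses are all assumptions chosen for this example; a real scan uses far larger lists and calls the actual service.

```groovy
// A few illustrative SQL injection strings; real scans use far larger lists.
def injectionStrings = ["' OR '1'='1", "1; DROP TABLE employees --"]

// Hypothetical request template: only the Emp ID value varies.
def requests = injectionStrings.collect { payload ->
    '<getEmployee><empId>' + payload + '</empId></getEmployee>'
}

// Signatures suggesting the service exposed database details in its response.
def leakSignatures = ['ORA-', 'SQLException', 'syntax error']
def leaksDatabaseDetails = { String response ->
    leakSignatures.any { response.contains(it) }
}

// Hypothetical responses standing in for what the service returned.
def safeResponse  = '<fault>Invalid employee id</fault>'
def leakyResponse = '<fault>ORA-00933: SQL command not properly ended</fault>'

requests.each { println it }
println "safe response leaks: ${leaksDatabaseDetails(safeResponse)}"
println "leaky response leaks: ${leaksDatabaseDetails(leakyResponse)}"
```

The same pattern of mutating the input and inspecting the response applies to the XPath injection and malformed XML checks, with different payloads and signatures.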

APIs are reusable components, and we need to be able to trust them wherever they are used, so it is better to check them for security and performance early than to regret it later. Certain APIs and services exchange highly confidential data which, if exposed, can cause severe loss to a business.

Let's have a look at the right way of defining project working models:

A correct onshore and offshore model is really important in an SOA testing engagement because of the following reasons:

- SOA testers need to know the business

- SOA testers need to understand the technology, protocols and so on

- SOA testers are the ones who need access to third-party systems

- SOA testers face environment challenges

- At the time of virtualization we need access to the real system

- SOA testers start early in the SDLC (short for Software Development Life Cycle) traditionally following a V model approach

To sum up, a completely offshore model would not work for an SOA testing engagement; a mixed ratio of 70 offshore to 30 onshore, however, would be acceptable.

Alternatively, we can look towards an onshore or a nearshore model.

Considering the complexity of the work, experience suggests always having some of the team onshore.

Let's consider automating our entire set of component tests and placing it as a gate for build quality acceptance.

Wouldn't this reduce manual intervention and make sure that only stable, good-quality builds reach the testers?

This approach not only helps the testers but also cuts cost and effort on the testing front, and it helps establish a strong and stable environment that promotes quality deliverables from the development end.

Here's how to do it:

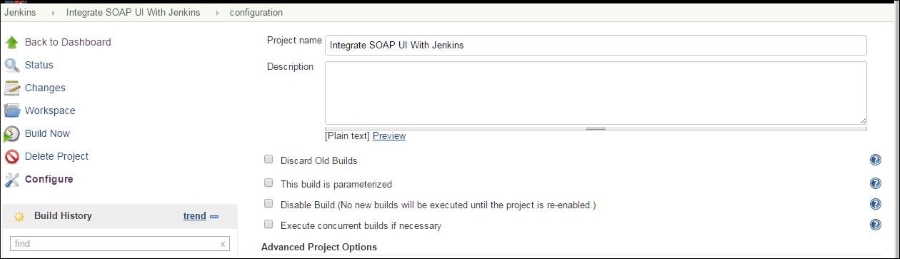

We have already seen in previous chapters how to integrate Jenkins with SoapUI, so let's recap the steps:

- Create a unit test in SoapUI

- Create a batch file or an Ant script to run the tests.

- Integrate with Jenkins:

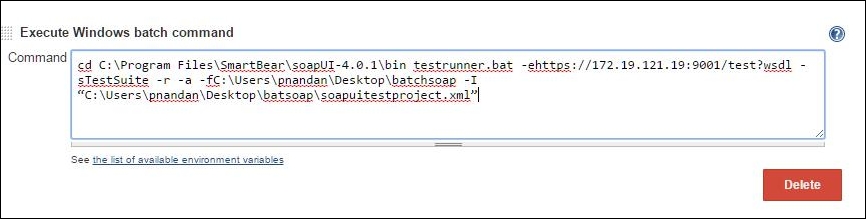

The following screenshot will freshen things up on how to achieve this:

A sample Ant file to run the cases:

```xml
<!-- The opening <project>, <target name="soapui"> and <exec> tags are not shown in this excerpt. -->
      <arg line="-j -f 'C:/Users/pnandan/Desktop/Analysis/NewFolder' 'C:/Users/pnandan/Desktop/Test/Pranai/Production.xml'"/>
    </exec>
  </target>
  <target name="testreport" depends="soapui">
    <junitreport todir="C:/Users/pnandan/Desktop/Analysis/NewFolder">
      <fileset dir="C:/Users/pnandan/Desktop/Analysis/NewFolder">
        <include name="TEST-TestSuite_1.xml"/>
      </fileset>
      <report todir="C:/Users/pnandan/Desktop/Analysis/NewFolder/HTML" styledir="C:/Testing/apache-ant-1.9.6/etc" format="frames"/>
    </junitreport>
  </target>
</project>
```
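To act as a quality gate, the build also needs a pass or fail decision based on the generated report. The sketch below shows one way that decision could be made, assuming the report follows the common JUnit XML layout with `failures` and `errors` attributes on the root `<testsuite>` element. The `JUnitReport` class and the sample report content are hypothetical; in a real job the XML would be read from the report file.

```groovy
import groovy.transform.CompileStatic
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

@CompileStatic
class JUnitReport {
    // Add up the failures and errors recorded on the root <testsuite> element.
    static int failedCount(String reportXml) {
        Element root = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(reportXml.getBytes('UTF-8')))
                .getDocumentElement()
        int total = 0
        for (String attr : ['failures', 'errors']) {
            String value = root.getAttribute(attr)   // empty string when absent
            if (value) {
                total += Integer.parseInt(value)
            }
        }
        return total
    }
}

// Hypothetical report content used only to exercise the check.
def sampleReport = '''<testsuite name="TestSuite 1" tests="3" failures="1" errors="0">
  <testcase name="GetEmployee"/>
  <testcase name="UpdateEmployee"><failure message="XPath Match failed"/></testcase>
  <testcase name="DeleteEmployee"/>
</testsuite>'''

def failed = JUnitReport.failedCount(sampleReport)
println failed == 0 ? 'Build accepted' : "Build rejected: ${failed} failing test(s)"
```

A build step that exits with a non-zero status when `failed` is greater than zero would stop an unstable build before it reaches the testers.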

Building good manual test cases results in better automated test cases, since the manual cases already cover all the validations and scenarios.

This not only helps the automation testers but also ensures that the automation suite adheres to the business requirements and covers most of them.

It also provides end-to-end traceability between requirements, manual test cases and automated tests.
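Traceability of this kind can be checked mechanically once the links are recorded. The following sketch uses made-up requirement, test case and automated test identifiers to show how gaps might be reported; the data structures are assumptions for illustration and not the format of any particular test management tool.

```groovy
// Hypothetical traceability data: requirement -> manual test cases.
def manualCasesByRequirement = [
    'REQ-1': ['TC-1', 'TC-2'],
    'REQ-2': ['TC-3'],
    'REQ-3': []
]
// Hypothetical mapping: manual test case -> automated test.
def automatedByManualCase = ['TC-1': 'GetEmployee_OK', 'TC-3': 'UpdateEmployee_OK']

// Requirements that no manual test case covers.
def uncovered = manualCasesByRequirement
        .findAll { requirement, cases -> cases.isEmpty() }
        .keySet().toList()

// Manual test cases that have no automated counterpart yet.
def notAutomated = manualCasesByRequirement.values()
        .collectMany { it }
        .findAll { !automatedByManualCase.containsKey(it) }

println "Requirements without manual test cases: ${uncovered}"
println "Manual test cases without automation: ${notAutomated}"
```

Running such a check regularly keeps the automation suite aligned with the requirements as both evolve.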

With this last topic we come to the end of this chapter; a summary follows.