The other feature provided by Traefik is load balancing. Consider the system from the previous part, composed of two services and serving static files. If such a system were deployed as is, the whole application would stop working as soon as one service became unavailable. Such a system is clearly not a reactive system as defined in Chapter 1, An Introduction to Reactive Programming: it is neither resilient nor elastic.

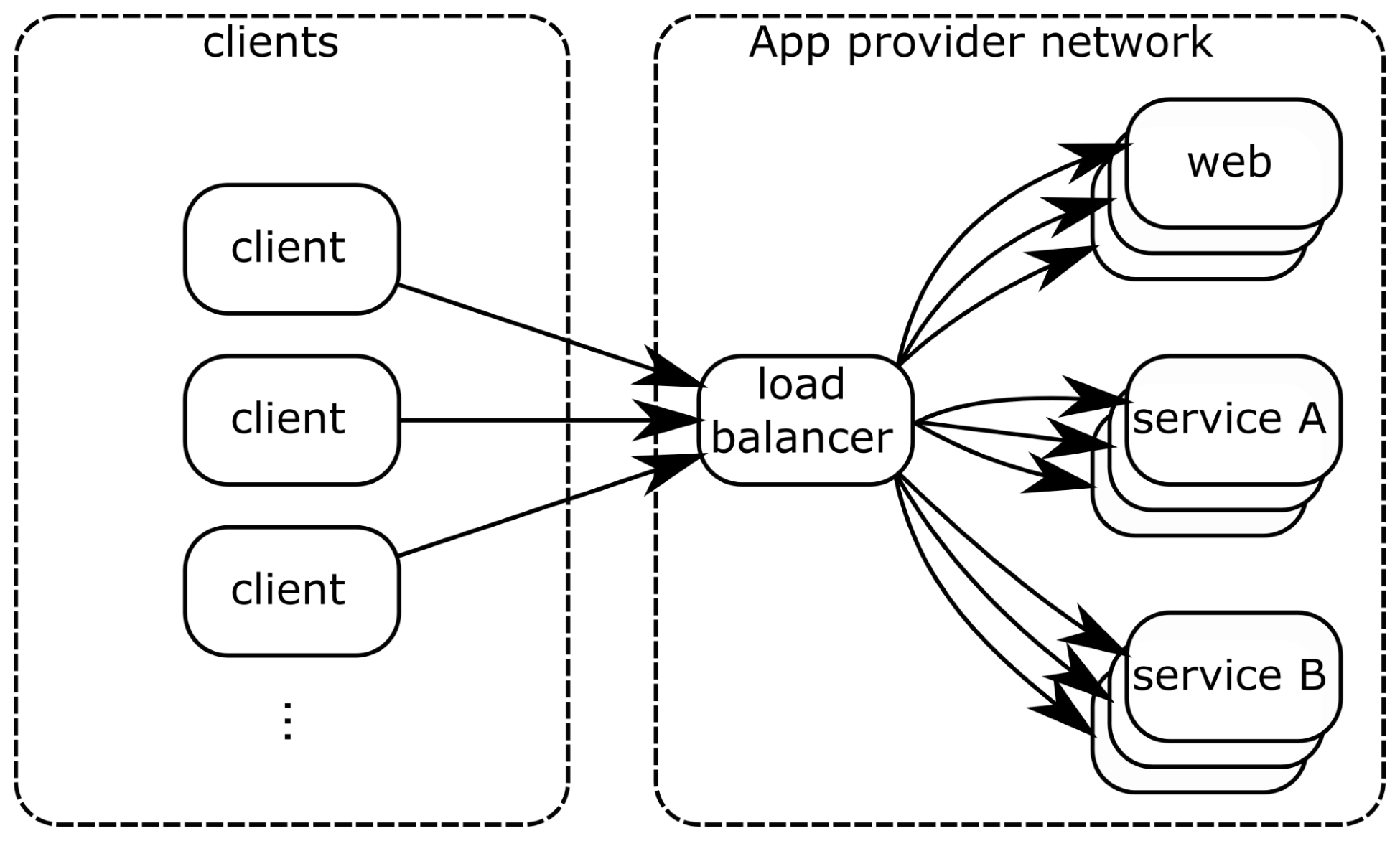

However, if the services are really implemented independently of each other, and in a stateless way, then it becomes possible to execute several instances of each service, and use any of the instances to serve incoming requests. This is shown in the following figure:

In this new architecture, each service is instantiated multiple times. Each incoming request for a service must therefore be routed to one of that service's instances. This is the role of the load balancer: distributing requests evenly across the instances of each service.
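
To make "distributing requests evenly" concrete, here is a minimal sketch of round-robin selection, one common balancing strategy (it is not necessarily the exact algorithm Traefik uses). The `RoundRobinBalancer` class and the instance addresses are hypothetical, chosen only for illustration.

```python
from itertools import cycle
from typing import Iterator, List


class RoundRobinBalancer:
    """Hands out service instances in turn, so each gets an equal share."""

    def __init__(self, instances: List[str]) -> None:
        if not instances:
            raise ValueError("at least one instance is required")
        self._instances = list(instances)
        # cycle() repeats the instance list endlessly in a fixed order.
        self._order: Iterator[str] = cycle(self._instances)

    def pick(self) -> str:
        """Return the instance that should serve the next request."""
        return next(self._order)


balancer = RoundRobinBalancer(["10.0.0.1:8080", "10.0.0.2:8080", "10.0.0.3:8080"])
picks = [balancer.pick() for _ in range(6)]
print(picks)
# ['10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.3:8080', '10.0.0.1:8080', '10.0.0.2:8080', '10.0.0.3:8080']
```

Round robin assumes that all instances are equivalent; other strategies (weighted selection, least connections, and so on) exist for cases where they are not.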

This new architecture is a step towards a reactive system. It is now more resilient: if one instance of a service fails, the other instances can still handle the requests. It is also a first step towards elasticity, since the number of running instances of each service can be adjusted.
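
Both properties can be sketched in a few lines. In the following hypothetical `FailoverBalancer`, failed instances are skipped (resilience) and the pool can be resized (elasticity). The instance state is set by hand through assumed `mark_down`/`mark_up` methods; a real load balancer would typically detect failures itself, for instance with periodic health checks.

```python
from typing import List, Set


class FailoverBalancer:
    """Round robin over the instances that are not marked as down."""

    def __init__(self, instances: List[str]) -> None:
        self.instances: List[str] = list(instances)
        self.down: Set[str] = set()
        self._index = 0

    def mark_down(self, instance: str) -> None:
        self.down.add(instance)

    def mark_up(self, instance: str) -> None:
        self.down.discard(instance)

    def scale_to(self, instances: List[str]) -> None:
        # Elasticity: replace the pool when instances are started or stopped,
        # forgetting the state of instances that no longer exist.
        self.instances = list(instances)
        self.down &= set(instances)

    def pick(self) -> str:
        # Resilience: try each instance at most once, skipping failed ones.
        for _ in range(len(self.instances)):
            instance = self.instances[self._index % len(self.instances)]
            self._index += 1
            if instance not in self.down:
                return instance
        raise RuntimeError("no healthy instance available")


lb = FailoverBalancer(["a:8080", "b:8080", "c:8080"])
lb.mark_down("b:8080")
picks = [lb.pick() for _ in range(4)]
print(picks)  # ['a:8080', 'c:8080', 'a:8080', 'c:8080']
```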

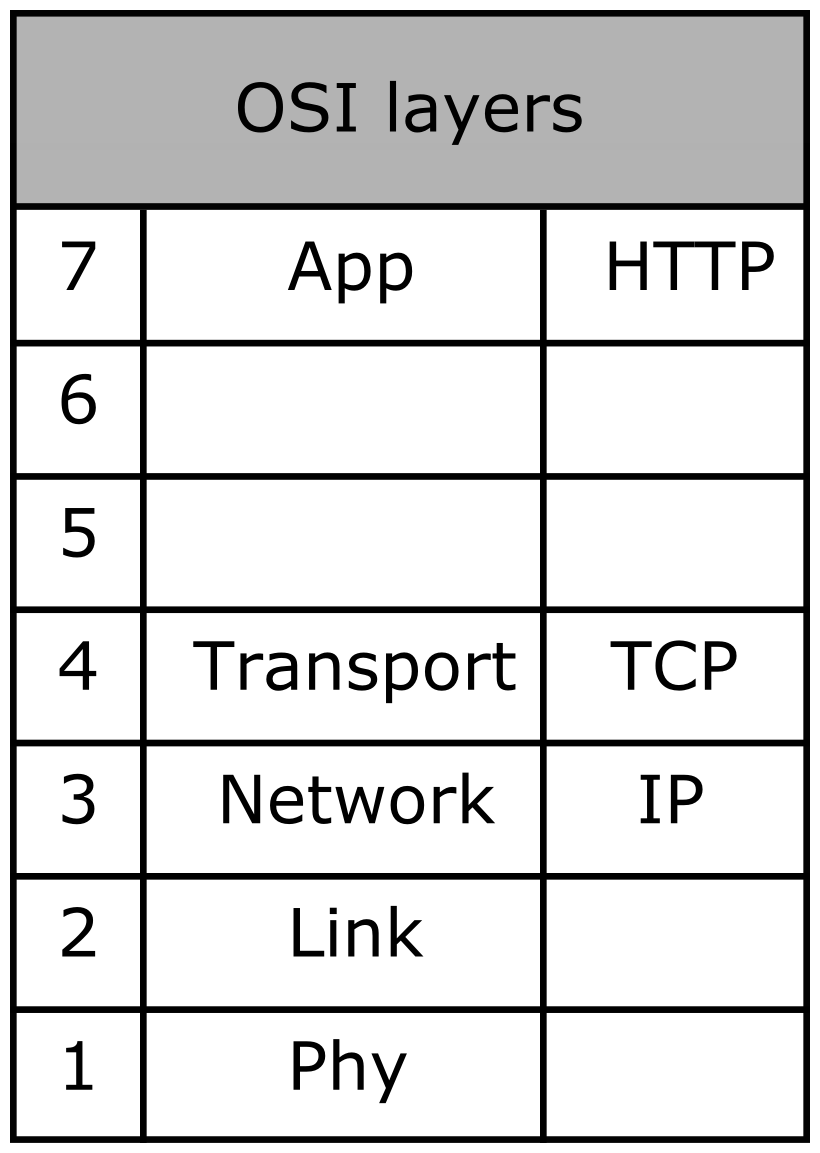

Reverse proxies work exclusively on the HTTP protocol. However, load balancers can work on different protocols. The following figure lists the OSI model layers and the associated internet protocols:

Load balancing is possible at two different layers of the OSI model: layer 4 (transport) and layer 7 (application). Some load balancers, whether software or hardware, support both layers. This is the case with the commercial F5 products, the Linux kernel with its Linux Virtual Server (LVS) service, or HAProxy. Other load balancers, such as Traefik in its 1.x versions, support only layer 7 load balancing because they are fully dedicated to services running on HTTP.
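
The difference between the two layers is what the load balancer can see when it makes its decision. The sketch below contrasts them under stated assumptions: the layer 4 function only knows the connection's addressing and, as one possible strategy, hashes the client address so that a whole TCP connection sticks to one backend; the layer 7 function parses the HTTP request line and routes on the first path segment, which is a hypothetical routing rule chosen for illustration. All function names and addresses are made up.

```python
import hashlib
from typing import Dict, List, Tuple


def pick_layer4(client: Tuple[str, int], backends: List[str]) -> str:
    """Layer 4: only the (IP, port) pair is known, never the payload."""
    key = f"{client[0]}:{client[1]}".encode()
    # A stable hash keeps every packet of one connection on the same backend.
    digest = int.from_bytes(hashlib.sha256(key).digest()[:8], "big")
    return backends[digest % len(backends)]


def route_layer7(raw_request: bytes, services: Dict[str, List[str]]) -> List[str]:
    """Layer 7: the HTTP request is parsed, so its content drives routing.

    Returns the instance pool of the service named by the first path
    segment (e.g. "/users/42" -> "users"); a balancer such as round robin
    would then pick one instance from that pool.
    """
    request_line = raw_request.split(b"\r\n", 1)[0].decode("ascii")
    _method, target, _version = request_line.split(" ", 2)
    path = target.split("?", 1)[0]
    service = path.lstrip("/").split("/", 1)[0]
    return services[service]


services = {"users": ["users-1:8080", "users-2:8080"], "orders": ["orders-1:8080"]}
request = b"GET /orders/7 HTTP/1.1\r\nHost: shop.example\r\n\r\n"
print(route_layer7(request, services))  # ['orders-1:8080']
```

A layer 4 balancer can therefore carry any TCP-based protocol, whereas a layer 7 balancer can make finer decisions (per path, per host, per header) but must understand the application protocol.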

HTTP load balancers also provide another feature: TLS termination. TLS, the successor of SSL, is the protocol used to secure HTTP (HTTP over TLS is known as HTTPS). TLS relies on certificates that allow the client to check that it is talking to the correct server (and, optionally, allow the server to check that the client is allowed to connect). These certificates are issued by providers called certificate authorities (CAs). Certificates issued by a widely trusted CA are recognized as trusted by HTTP client stacks, and therefore by web browsers.
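
On the client side, this verification is what a standard TLS stack does by default. As an illustration, Python's `ssl.create_default_context()` loads the platform's trusted CA certificates and enables both certificate and hostname checks. The helper `fetch_peer_certificate` is a hypothetical name used only for this sketch, and it needs network access to run.

```python
import socket
import ssl

# create_default_context() loads the platform's trusted CA certificates and
# turns on certificate verification and hostname checking.
context = ssl.create_default_context()
# For mutual TLS, the client would also present its own certificate with
# context.load_cert_chain(certfile, keyfile).


def fetch_peer_certificate(host: str, port: int = 443) -> dict:
    """Open a TLS connection and return the certificate the server presented.

    The handshake fails with ssl.SSLCertVerificationError if the certificate
    is not signed by a trusted CA or does not match `host`.
    """
    with socket.create_connection((host, port), timeout=5) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.getpeercert()


print(context.verify_mode == ssl.CERT_REQUIRED, context.check_hostname)  # True True
```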

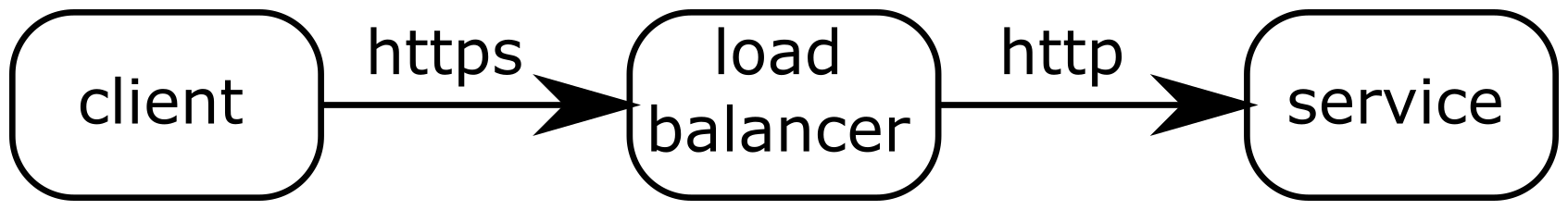

Each HTTPS server needs a certificate to be present on the system. In an architecture where many services are present, managing these certificates can be a complex task. Some tools exist to deal with this, but an easy solution to this problem is to stop the TLS connection on the load balancer. Since the aim of TLS is to secure the connection up to the server of the application, this can end at the load balancer stage. After that, the load balancer forwards the requests using the HTTP protocol, without protection. However, since the load balancer and the services run on the network of the application provider, this can be considered a trusted environment. TLS termination on the load balancer is shown in the following figure:
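
The mechanism can be sketched with Python's `asyncio`: the listening socket is wrapped in a server-side TLS context (so bytes arrive already decrypted), while the connection to the backend is opened without TLS. This is a minimal single-backend sketch, not how Traefik is implemented; the function names are hypothetical, and balancing across several backends is left out to keep it short.

```python
import asyncio
import ssl
from typing import Optional


def make_server_context(certfile: str, keyfile: str) -> ssl.SSLContext:
    """Server-side TLS context holding the load balancer's certificate."""
    context = ssl.create_default_context(ssl.Purpose.CLIENT_AUTH)
    context.load_cert_chain(certfile, keyfile)
    return context


async def pipe(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
    """Copy bytes from reader to writer until EOF, then close the writer."""
    try:
        while True:
            data = await reader.read(65536)
            if not data:
                break
            writer.write(data)
            await writer.drain()
    except OSError:
        pass  # One side went away: stop copying.
    finally:
        writer.close()


async def handle_client(
    client_reader: asyncio.StreamReader,
    client_writer: asyncio.StreamWriter,
    backend_host: str,
    backend_port: int,
) -> None:
    # When the server runs with a TLS context, client_reader yields decrypted
    # bytes: this is where the TLS connection terminates.
    try:
        # No ssl= argument: the hop to the service is plain HTTP.
        backend_reader, backend_writer = await asyncio.open_connection(
            backend_host, backend_port
        )
    except OSError:
        client_writer.close()
        return
    await asyncio.gather(
        pipe(client_reader, backend_writer),
        pipe(backend_reader, client_writer),
    )


async def start_terminating_proxy(
    listen_host: str,
    listen_port: int,
    backend_host: str,
    backend_port: int,
    tls_context: Optional[ssl.SSLContext],
) -> asyncio.AbstractServer:
    async def on_connect(reader: asyncio.StreamReader, writer: asyncio.StreamWriter) -> None:
        await handle_client(reader, writer, backend_host, backend_port)

    return await asyncio.start_server(on_connect, listen_host, listen_port, ssl=tls_context)
```

In a deployment, one would pass something like `make_server_context("lb-cert.pem", "lb-key.pem")` (hypothetical file names) and call `serve_forever()` on the returned server. Passing `None` instead runs the same forwarding logic without TLS, which is convenient for local testing. Only the load balancer holds a certificate; the services behind it never see TLS.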