PCA is one of the most popular dimensionality reduction algorithms, and the Dlib library provides a couple of implementations of it. One of them is the dlib::vector_normalizer_pca type, whose objects can be used to perform PCA on user data. This implementation also normalizes the data, which is convenient because PCA should generally be performed on normalized data. The type is parameterized with the input data sample type. After instantiating an object of this type, we use the train() method to fit the model to our data. The train() method takes a std::vector of samples and an eps value as parameters. The eps value, which must be in the range (0, 1], controls how lossy the transformation is: larger values preserve more dimensions after the PCA transformation, and smaller values preserve fewer. This can be seen in the following code, which passes the ratio of the target dimension to the input dimension as eps:

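    // Matrix is assumed to be a dlib column-vector matrix type defined elsewhere.
    void PCAReduction(const std::vector<Matrix> &data, double target_dim) {
      dlib::vector_normalizer_pca<Matrix> pca;
      // eps is passed as the ratio of target to input dimensions.
      pca.train(data, target_dim / data[0].nr());

      // Transform every sample with the trained model.
      std::vector<Matrix> new_data;
      new_data.reserve(data.size());
      for (size_t i = 0; i < data.size(); ++i) {
        new_data.emplace_back(pca(data[i]));
      }

      // Read the first two components of each transformed sample,
      // for example as coordinates for a 2D plot.
      for (size_t r = 0; r < new_data.size(); ++r) {
        Matrix vec = new_data[r];
        double x = vec(0, 0);
        double y = vec(1, 0);
      }
    }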
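Because eps is a threshold rather than an exact dimension count, the ratio target_dim / data[0].nr() is best read as a heuristic. The following standard-library sketch illustrates the idea under the assumption that eps is treated as the fraction of the total variance (the sum of the covariance eigenvalues) to retain. ComponentsForEps is a hypothetical helper, not a Dlib function, and Dlib's actual selection rule may differ in detail.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical helper (not part of Dlib). Given covariance eigenvalues sorted
// in descending order, return how many leading components are needed for
// their sum to reach the fraction `eps` of the total variance.
std::size_t ComponentsForEps(const std::vector<double>& eigenvalues,
                             double eps) {
  double total = 0.0;
  for (double v : eigenvalues) {
    total += v;
  }
  const double threshold = total * eps;

  std::size_t count = 0;
  double retained = 0.0;
  // Keep adding components until the retained variance reaches the threshold.
  while (count < eigenvalues.size() && retained < threshold) {
    retained += eigenvalues[count];
    ++count;
  }
  return count;
}
```

With eigenvalues {5, 3, 1, 1} and eps = 0.5 (a target of 2 out of 4 dimensions), the first component alone already covers half of the variance, so a rule like this would keep only one dimension. For that reason it may be worth checking new_data[0].nr() before reading vec(1, 0).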

After the algorithm has been trained, we use the object to transform individual samples. Take a look at the first loop in the PCAReduction function and notice how the pca(data[i]) call performs this transformation.
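Conceptually, transforming a sample with a normalizing PCA model means centering and scaling each feature and then projecting the result onto the retained components. The sketch below shows this with standard-library containers only. PcaModel and Transform are hypothetical names introduced for illustration, and the sketch assumes the model stores per-feature means, reciprocal standard deviations, and a projection matrix with one row per output dimension. It is not Dlib's implementation.

```cpp
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the quantities a trained PCA model stores.
struct PcaModel {
  std::vector<double> mean;     // per-feature mean
  std::vector<double> inv_std;  // per-feature 1 / standard deviation
  std::vector<std::vector<double>> components;  // one row per output dimension
};

// Normalize one sample, then project it onto the principal components.
std::vector<double> Transform(const PcaModel& model,
                              const std::vector<double>& x) {
  // Step 1: center and scale each feature.
  std::vector<double> z(x.size());
  for (std::size_t j = 0; j < x.size(); ++j) {
    z[j] = (x[j] - model.mean[j]) * model.inv_std[j];
  }
  // Step 2: multiply the projection matrix by the normalized sample.
  std::vector<double> out(model.components.size(), 0.0);
  for (std::size_t i = 0; i < model.components.size(); ++i) {
    for (std::size_t j = 0; j < z.size(); ++j) {
      out[i] += model.components[i][j] * z[j];
    }
  }
  return out;
}
```

The length of the returned vector equals the number of rows in components, which is how the reduced dimensionality shows up in the output.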

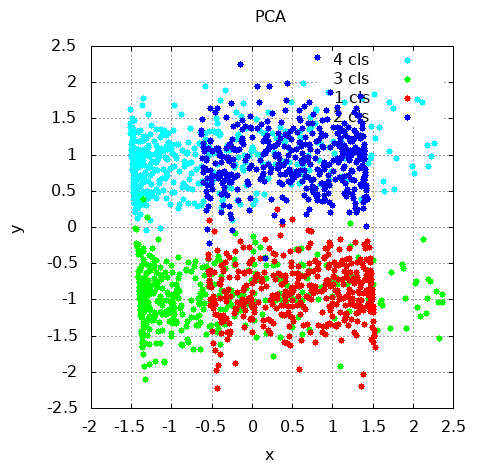

The following graph shows the result of the PCA transformation: