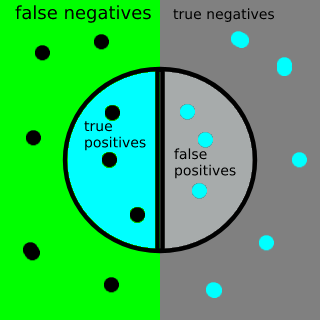

To estimate algorithm quality for each classification class, we shall introduce two metrics: precision and recall. The following diagram shows all the objects that are used in classification and how they have been marked according to the algorithm's results:

The circle in the center contains selected elements – the elements our algorithm predicted as positive ones.

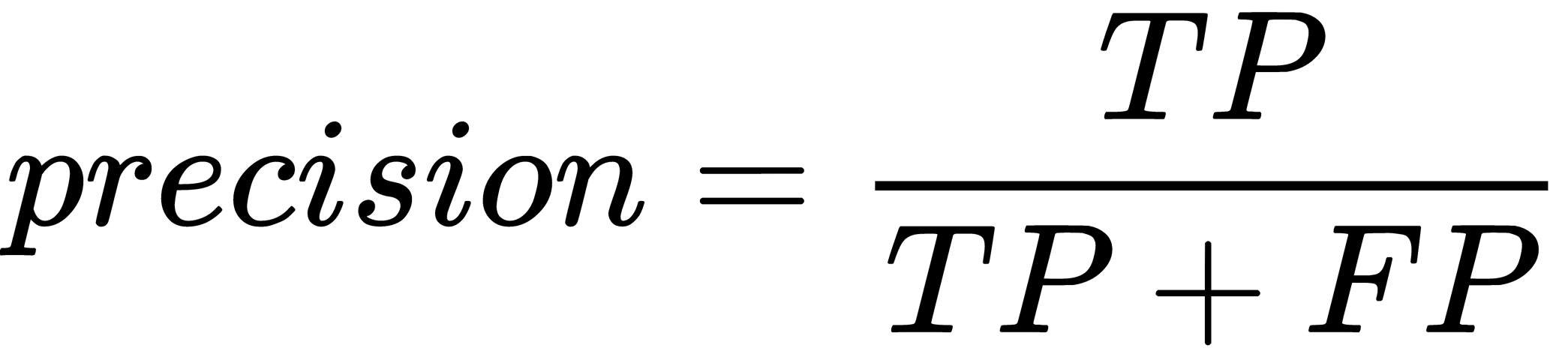

Precision is the fraction of selected items that are correctly classified, that is, the share of predicted positives that are truly positive. Another name for precision is positive predictive value:

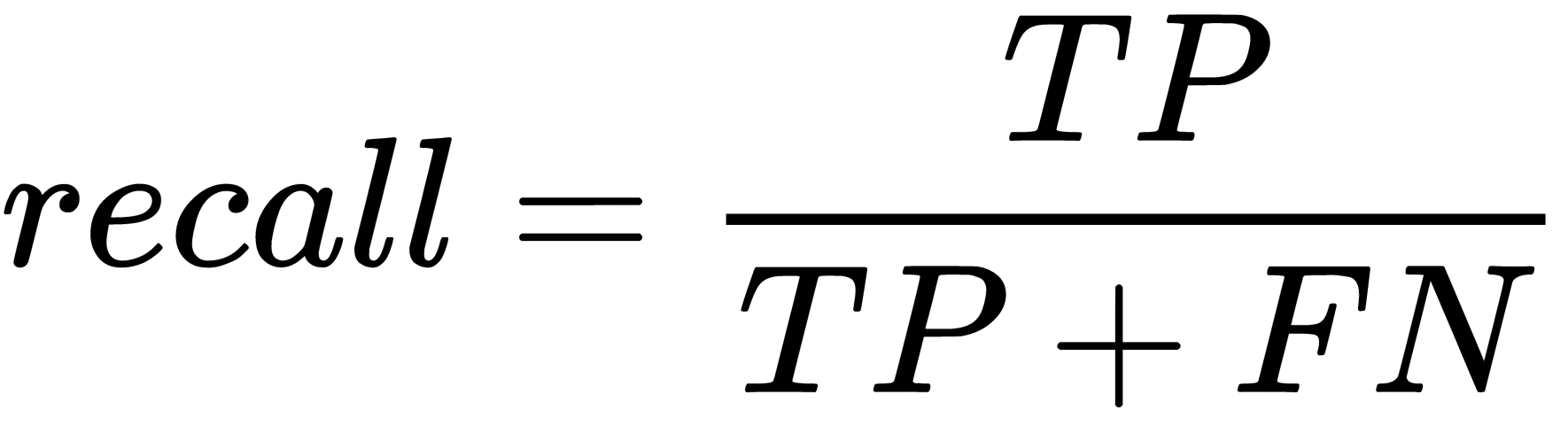

Recall is the fraction of all ground truth positive items that are correctly classified, that is, TP / (TP + FN), where TP is the number of true positives and FN is the number of false negatives. Another name for recall is sensitivity.
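As a quick worked example, assume a hypothetical classifier that selects 10 items, of which 8 are truly positive (TP = 8, FP = 2), while 4 positive items are missed (FN = 4). These counts are invented purely for illustration:

$$
\text{precision} = \frac{TP}{TP + FP} = \frac{8}{8 + 2} = 0.8,
\qquad
\text{recall} = \frac{TP}{TP + FN} = \frac{8}{8 + 4} \approx 0.667
$$

In this example, most of what the classifier selects is relevant, but it finds only two thirds of the relevant items.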

Let's assume that we are interested in the detection of positive items; let's call them relevant ones. We then use the recall value as a measure of an algorithm's ability to find all the relevant items, and the precision value as a measure of how many of the items it selects are actually relevant. Because both measures are computed for the class of interest rather than over all objects, they stay informative when one class heavily outnumbers the other, and we can use them for imbalanced dataset classification.

There are two classes in the Shogun library called CRecallMeasure and CPrecisionMeasure that we can use to calculate these measures. The Shark-ML and Dlib libraries do not contain functions to calculate these measures.
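When a library does not provide these measures, they can be computed directly from the predicted and ground truth labels. The following is a minimal sketch in plain C++ and is not taken from any of the libraries mentioned. The names `BinaryCounts`, `CountOutcomes`, `Precision`, and `Recall` are hypothetical, labels are assumed to be encoded as 1 (positive) and 0 (negative), and returning 0 for an empty denominator is just one possible convention:

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Outcome counts needed for precision and recall of the positive class.
struct BinaryCounts {
  std::size_t tp = 0;  // predicted positive, actually positive
  std::size_t fp = 0;  // predicted positive, actually negative
  std::size_t fn = 0;  // predicted negative, actually positive
};

// Assumption: labels are encoded as 1 (positive) and 0 (negative).
inline BinaryCounts CountOutcomes(const std::vector<int>& truth,
                                  const std::vector<int>& predicted) {
  if (truth.size() != predicted.size()) {
    throw std::invalid_argument("label vectors must have equal length");
  }
  BinaryCounts counts;
  for (std::size_t i = 0; i < truth.size(); ++i) {
    const bool is_positive = (truth[i] == 1);
    const bool selected = (predicted[i] == 1);
    if (selected && is_positive) {
      ++counts.tp;
    } else if (selected && !is_positive) {
      ++counts.fp;
    } else if (!selected && is_positive) {
      ++counts.fn;
    }
  }
  return counts;
}

// Precision = TP / (TP + FP); returns 0 when no item was selected.
inline double Precision(const BinaryCounts& counts) {
  const std::size_t selected = counts.tp + counts.fp;
  return selected == 0 ? 0.0 : static_cast<double>(counts.tp) / selected;
}

// Recall = TP / (TP + FN); returns 0 when there are no positive items.
inline double Recall(const BinaryCounts& counts) {
  const std::size_t positives = counts.tp + counts.fn;
  return positives == 0 ? 0.0 : static_cast<double>(counts.tp) / positives;
}
```

To use it, pass the ground truth and predicted label vectors to `CountOutcomes` and feed the result to `Precision` and `Recall`. For a multi-class problem, the same functions can be applied once per class by treating that class as positive and all others as negative.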