Usually, a classification algorithm does not return a concrete class identifier but rather the probability that an object belongs to some class. So, we use a threshold to decide whether an object belongs to a class or not. The most obvious threshold is 0.5, but it can perform poorly on imbalanced data, that is, when one class has many samples and the other class has significantly fewer.
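To make the threshold idea concrete, here is a minimal C++ sketch that turns predicted probabilities into labels and measures the result. The helper names `applyThreshold`, `accuracy`, and `recall` are hypothetical and do not come from any library. Label `1` is assumed to be the positive (minority) class.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Converts predicted probabilities of the positive class into 0/1 labels:
// an object is assigned to class 1 when its probability is >= threshold.
std::vector<int> applyThreshold(const std::vector<double>& probs, double threshold) {
    std::vector<int> labels;
    labels.reserve(probs.size());
    for (double p : probs) {
        labels.push_back(p >= threshold ? 1 : 0);
    }
    return labels;
}

// Fraction of objects whose predicted label matches the true label.
double accuracy(const std::vector<int>& truth, const std::vector<int>& pred) {
    assert(truth.size() == pred.size() && !truth.empty());
    std::size_t correct = 0;
    for (std::size_t i = 0; i < truth.size(); ++i) {
        if (truth[i] == pred[i]) ++correct;
    }
    return static_cast<double>(correct) / truth.size();
}

// Recall: share of truly positive objects that were predicted as positive.
double recall(const std::vector<int>& truth, const std::vector<int>& pred) {
    assert(truth.size() == pred.size());
    std::size_t tp = 0, positives = 0;
    for (std::size_t i = 0; i < truth.size(); ++i) {
        if (truth[i] == 1) {
            ++positives;
            if (pred[i] == 1) ++tp;
        }
    }
    return positives == 0 ? 0.0 : static_cast<double>(tp) / positives;
}
```

For example, with eight negative objects scored between 0.05 and 0.3 and two positive objects scored 0.4 and 0.45 (made-up numbers), a threshold of 0.5 gives an accuracy of 0.8 but a recall of 0, because every object is predicted as negative; lowering the threshold to 0.35 separates the two classes perfectly.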

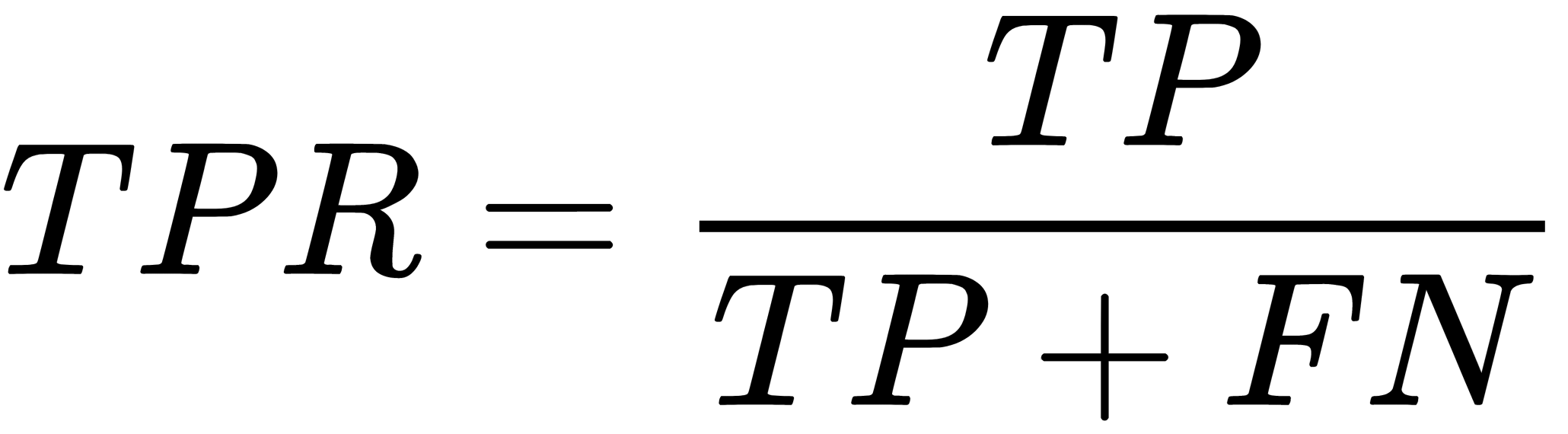

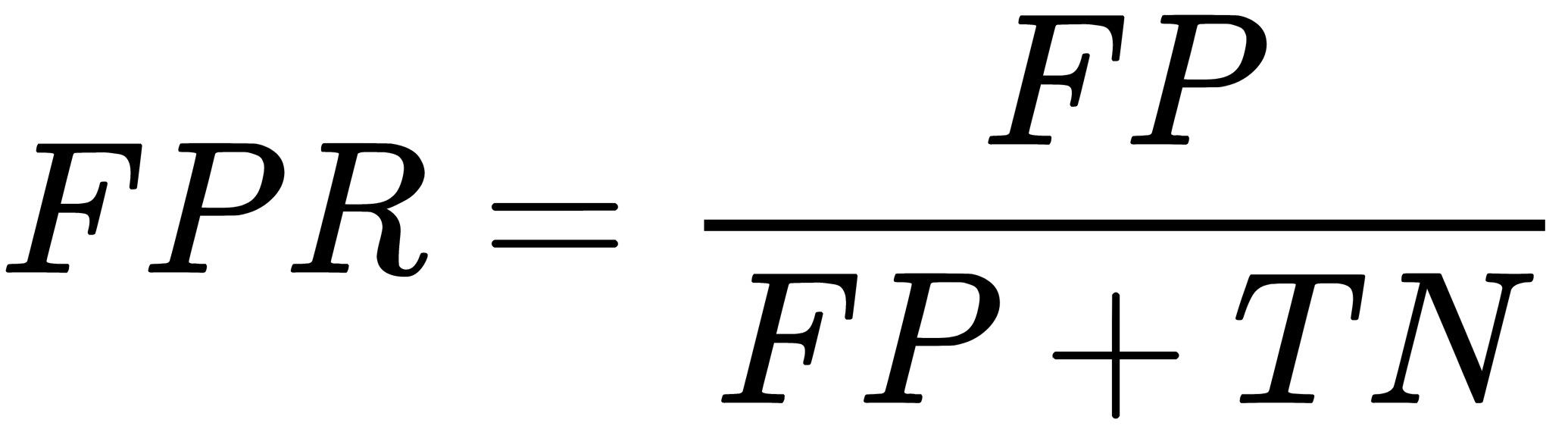

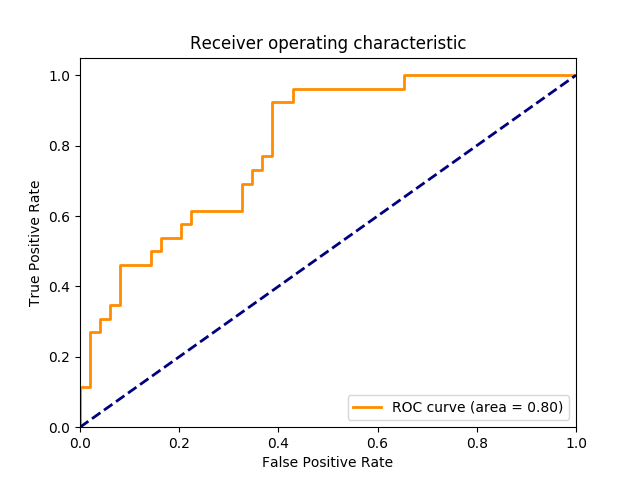

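One of the methods we can use to evaluate a model without choosing a specific threshold is the Area Under the Receiver Operating Characteristic curve (AUC-ROC). This curve goes from (0,0) to (1,1) in the coordinates of the False Positive Rate (FPR, x-axis) and the True Positive Rate (TPR, y-axis), which are defined as follows:

$$TPR = \frac{TP}{TP + FN}$$

$$FPR = \frac{FP}{FP + TN}$$

Here, TP, FP, TN, and FN are the numbers of true positives, false positives, true negatives, and false negatives, respectively.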
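A minimal C++ sketch of computing TPR and FPR for one fixed threshold follows. The `Confusion` struct and the `confusionAt`, `tpr`, and `fpr` functions are hypothetical names used for illustration. The code assumes label `1` marks the positive class and that an object is predicted positive when its score is at least the threshold.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Confusion-matrix counts of a binary classifier at one threshold.
struct Confusion {
    std::size_t tp = 0, fp = 0, tn = 0, fn = 0;
};

// Counts TP, FP, TN, and FN: label 1 is positive, everything else is negative;
// an object is predicted positive when its score is >= threshold.
Confusion confusionAt(const std::vector<double>& scores,
                      const std::vector<int>& labels, double threshold) {
    assert(scores.size() == labels.size());
    Confusion c;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        bool predictedPositive = scores[i] >= threshold;
        if (labels[i] == 1) {
            if (predictedPositive) ++c.tp; else ++c.fn;
        } else {
            if (predictedPositive) ++c.fp; else ++c.tn;
        }
    }
    return c;
}

// TPR = TP / (TP + FN), which is the same quantity as recall.
double tpr(const Confusion& c) {
    std::size_t positives = c.tp + c.fn;
    return positives == 0 ? 0.0 : static_cast<double>(c.tp) / positives;
}

// FPR = FP / (FP + TN): share of negatives wrongly predicted as positive.
double fpr(const Confusion& c) {
    std::size_t negatives = c.fp + c.tn;
    return negatives == 0 ? 0.0 : static_cast<double>(c.fp) / negatives;
}
```

With the scores `{0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1}`, the labels `{1, 1, 0, 1, 0, 1, 0, 0}`, and a threshold of 0.5, we get TP = 3, FP = 1, TN = 3, and FN = 1, so TPR = 0.75 and FPR = 0.25. Calling `confusionAt` for every candidate threshold yields the points of the ROC curve.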

The TPR is equal to the recall, while the FPR is the fraction of negative-class objects that were incorrectly classified as positive. In the ideal case, when there are no classification errors, we have FPR = 0 and TPR = 1, and the area under the ROC curve is equal to 1. In the case of random predictions, the area under the ROC curve is about 0.5: at every threshold, a random classifier labels roughly the same fraction of positive and negative objects as positive (TPR ≈ FPR), so the curve follows the diagonal from (0,0) to (1,1).
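A useful equivalent view of AUC-ROC is the probability that a randomly chosen positive object receives a higher score than a randomly chosen negative one, with ties counted as one half (the Mann-Whitney formulation). The sketch below, with the hypothetical function name `pairwiseAuc`, computes it directly by comparing all positive/negative pairs. It is O(P·N), so it is meant for illustration rather than for large datasets, and it assumes label `1` is the positive class.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// AUC-ROC as the probability that a random positive object is scored higher
// than a random negative object; equal scores contribute 1/2 of a "win".
double pairwiseAuc(const std::vector<double>& scores, const std::vector<int>& labels) {
    assert(scores.size() == labels.size());
    double wins = 0.0;
    std::size_t pairs = 0;
    for (std::size_t i = 0; i < scores.size(); ++i) {
        if (labels[i] != 1) continue;      // i runs over positive objects
        for (std::size_t j = 0; j < scores.size(); ++j) {
            if (labels[j] == 1) continue;  // j runs over negative objects
            ++pairs;
            if (scores[i] > scores[j]) {
                wins += 1.0;
            } else if (scores[i] == scores[j]) {
                wins += 0.5;
            }
        }
    }
    assert(pairs > 0 && "both classes must be present");
    return wins / static_cast<double>(pairs);
}
```

This makes the two reference values easy to check: if every positive object is scored above every negative one, the result is 1; if the model gives all objects the same score (no information at all), every pair is a tie and the result is exactly 0.5.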

Each point on the curve corresponds to some threshold value. The steepness of the curve is an essential characteristic: we want a high TPR while keeping the FPR low, so a good curve rises quickly toward the point (0,1). Because TPR and FPR are each computed within a single class, AUC-ROC does not depend on the ratio between the classes, so we can also use it successfully with imbalanced datasets. The Shark-ML library has a class called NegativeAUC that can be used to calculate AUC-ROC, and the Shogun library has a class called CROCEvaluation for the same purpose.
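The following from-scratch C++ sketch illustrates how each threshold produces one point of the curve and how the area is then computed. It is not the implementation of Shark-ML's NegativeAUC or Shogun's CROCEvaluation, and the names `RocPoint`, `rocCurve`, and `trapezoidAuc` are hypothetical. It sorts objects by descending score, lowers the threshold through every distinct score (treating label `1` as positive), and integrates the resulting curve with the trapezoidal rule.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <numeric>
#include <vector>

// One point of the ROC curve in (FPR, TPR) coordinates.
struct RocPoint {
    double fpr;
    double tpr;
};

// Builds the ROC curve: every distinct score acts as a threshold
// ("predict positive if score >= t"), giving one point per threshold.
// The point (0, 0) corresponds to a threshold above all scores.
std::vector<RocPoint> rocCurve(const std::vector<double>& scores,
                               const std::vector<int>& labels) {
    assert(scores.size() == labels.size());
    std::vector<std::size_t> order(scores.size());
    std::iota(order.begin(), order.end(), 0);
    // Visit objects from the highest score to the lowest.
    std::sort(order.begin(), order.end(),
              [&](std::size_t a, std::size_t b) { return scores[a] > scores[b]; });

    const auto positives =
        static_cast<std::size_t>(std::count(labels.begin(), labels.end(), 1));
    const std::size_t negatives = labels.size() - positives;
    assert(positives > 0 && negatives > 0);

    std::vector<RocPoint> curve{{0.0, 0.0}};
    std::size_t tp = 0, fp = 0;
    for (std::size_t k = 0; k < order.size(); ++k) {
        if (labels[order[k]] == 1) ++tp; else ++fp;
        // Emit a point only after the last object with this score, so tied
        // scores move the curve diagonally instead of creating extra steps.
        bool lastOfTie = k + 1 == order.size() ||
                         scores[order[k + 1]] != scores[order[k]];
        if (lastOfTie) {
            curve.push_back({static_cast<double>(fp) / negatives,
                             static_cast<double>(tp) / positives});
        }
    }
    return curve;  // the last point is always (1, 1)
}

// Area under a piecewise-linear curve, computed with the trapezoidal rule.
double trapezoidAuc(const std::vector<RocPoint>& curve) {
    double area = 0.0;
    for (std::size_t i = 1; i < curve.size(); ++i) {
        double width = curve[i].fpr - curve[i - 1].fpr;
        area += width * (curve[i].tpr + curve[i - 1].tpr) / 2.0;
    }
    return area;
}
```

For the scores `{0.9, 0.8, 0.7, 0.6, 0.4, 0.3, 0.2, 0.1}` with labels `{1, 1, 0, 1, 0, 1, 0, 0}`, the curve has nine points and the area is 0.8125, which equals the fraction of positive/negative pairs that the scores rank correctly (13 of 16). Sorting once makes the sweep O(n log n), which is why this approach is usually preferred over evaluating every threshold separately.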