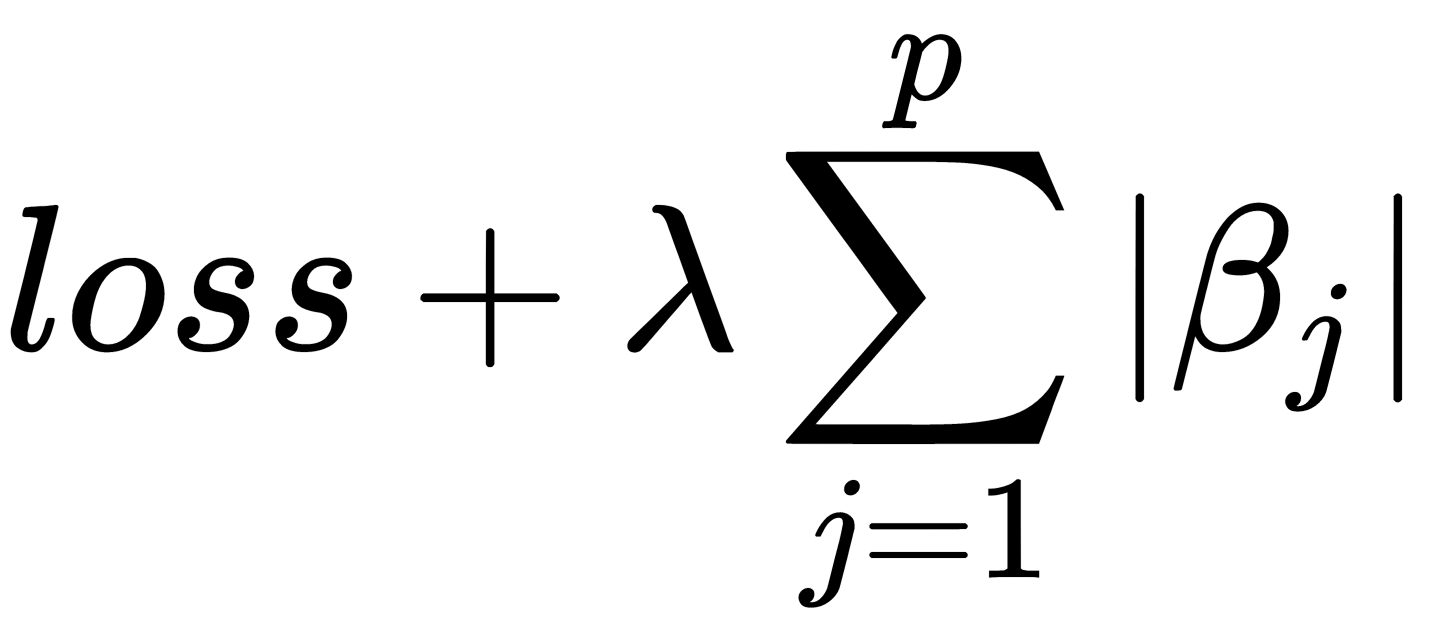

L1 regularization is an additional term to the loss function:
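As a sketch, assuming $L_0$ denotes the unregularized loss and $w_i$ the model parameters (notation chosen here for illustration, not taken from the text), the regularized loss is commonly written as:

$$
L = L_0 + \lambda \sum_{i} \lvert w_i \rvert
$$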

This additional term adds the sum of the absolute values of the model parameters as a penalty. Here, λ is the regularization coefficient. Higher values of this coefficient lead to stronger regularization and can cause underfitting. This type of regularization is sometimes called Least Absolute Shrinkage and Selection Operator (Lasso) regularization. The general idea behind L1 regularization is to penalize less important features: because the penalty grows linearly with each parameter's magnitude, it tends to drive the weights of such features to exactly zero. We can therefore think of it as a feature selection process.
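To make the penalty and its feature-selection effect concrete, here is a minimal sketch in plain C++ that uses only the standard library. The function names `l1_penalty`, `soft_threshold`, and `proximal_step` are hypothetical helpers introduced for illustration and are not part of Shark-ML or Shogun. The update shown is a proximal gradient step with soft-thresholding, one common way to optimize an L1-regularized loss, and not necessarily what either library does internally.

```cpp
#include <cmath>
#include <cstddef>
#include <vector>

// L1 penalty: lambda * sum_i |w_i| (hypothetical helper, for illustration).
double l1_penalty(const std::vector<double>& w, double lambda) {
  double sum = 0.0;
  for (double wi : w) {
    sum += std::abs(wi);
  }
  return lambda * sum;
}

// Soft-thresholding: shrinks w toward zero by t and returns exactly
// zero when |w| <= t. This is the proximal operator of t * |w|.
double soft_threshold(double w, double t) {
  if (w > t) return w - t;
  if (w < -t) return w + t;
  return 0.0;
}

// One proximal gradient step: a plain gradient step on the unregularized
// loss (grad is assumed to be its gradient at w), followed by
// soft-thresholding with threshold lr * lambda.
void proximal_step(std::vector<double>& w, const std::vector<double>& grad,
                   double lr, double lambda) {
  for (std::size_t i = 0; i < w.size(); ++i) {
    w[i] = soft_threshold(w[i] - lr * grad[i], lr * lambda);
  }
}
```

Weights whose magnitude after the gradient step is below `lr * lambda` are set to exactly zero, which is the mechanism behind the feature selection behavior described above. Larger weights are only shrunk by that amount.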

The Shark-ML library provides a class called `shark::OneNormRegularizer`, whose instances can be added to trainers to perform L1 regularization. In the Shogun library, regularization is usually incorporated into the model (algorithm) classes and cannot be changed.