Chapter 2: Software Requirements – Collecting, Documenting, Managing

Collecting requirements is arguably one of the most frustrating activities in software production, for several reasons. Difficulties often arise because it is never completely clear who owns the requirements, because architects cannot produce a good design without certain requirements, and because developers, of course, can't do a proper job without the designs.

However, it is fairly common for a development team to start working before requirements collection is complete, simply because there is no time. Indeed, what often happens, especially with large and complex projects, is that milestones are set before the project scope is completely defined. In this industry, since software is an intangible product (unlike a building or a bridge), budget approval is usually a more fluid process. Therefore, it's not unusual to have a project approved before all the details (including requirements, feasibility, and architectural design) are fully defined. Needless to say, this is an inherently bad practice.

In this chapter, we will look at different techniques for requirements gathering and analysis in order to increase the quality of our software deliverables.

You will learn about the following:

- The different types of requirements: functional and non-functional

- What characteristics a requirement must have

- How to formalize requirements in standard formats

- How to collect requirements by using agile and interactive techniques

Once you have completed this chapter, you will be able to organize productive requirements gathering sessions and document them clearly. Being able to collect and properly document requirements can be a real game changer for your career in software development in several ways:

- The quality of the software you produce will be better, as you will focus on what's really needed and be able to prioritize well.

- You will have a better understanding of the language of business and the needs of your customers, and you will therefore implement features that better fit their needs.

- You will be able to run informal and interactive requirements gathering sessions. (As an example, see the Event Storming section.)

- You will get a primer on international standards for software requirements specifications, which may be a hard constraint in some environments (for example, when working in regulated industries such as government or healthcare).

Since requirements collection and management is a practice mostly unrelated to a specific programming language, this chapter doesn't directly reference Java technology.

Now, let's start exploring the discipline of software requirements engineering.

Introducing requirements engineering

From a purely metaphorical perspective, if an algorithm is similar to a food recipe, a software requirement is the order we place at a restaurant. But the similarity probably ends here. When we order food, we pick a specific dish from a discrete list of options, possibly with a small amount of fine-tuning.

Also, continuing with our example, a software requirement has a longer and more complex life cycle (think about the testing and evolution of the requirement itself), while a food order is very well timeboxed: the customer places the order and receives the food. In the worst case, the customer will dislike the food received (like a user acceptance test going wrong), but it's unusual to evolve or change the order. Otherwise, everything is okay when the customer is happy and the cook has done a great job (at least for that particular customer). A software requirement, by contrast, will likely go on to see bug fixes, enhancements, and so forth.

Requirements for software projects are complex and can be difficult to identify and communicate. Software requirements engineering is an unusual job: it requires a concerted effort by the customer, the architect, the product manager, and sometimes various other professionals. But what does a technical requirement actually look like?

Feature, Advantage, and Benefit

As we will see in a few sections, requirements collection involves many different professionals working together to shape what the finished product will look like. These professionals usually fall into two groups: business-aware and technology-aware. You should, of course, expect those two groups to have different visions and speak different languages.

A good way to build common ground and facilitate understanding between these two groups is to use the Feature, Advantage, and Benefit logical flow.

This popular framework, sometimes referred to as FAB, is a marketing and sales methodology used to build messaging around a product. While it may not seem immediately relevant in the requirements gathering phase, it is worth looking at.

In the FAB framework, the following apply:

- A Feature is an inherent product characteristic, strictly related to what the product can do.

- The Advantage can be defined as what you achieve when using a particular Feature. It is common to have more than one Advantage linked to the same technical feature.

- The Benefit is the ultimate reason why you would want to use the Feature. You can think of it as one further step of abstraction beyond the Advantage, and it is common to have more than one Benefit linked to the same Feature.

Let's see an example of FAB, related to the mobile payment example that we are carrying over from the previous chapter:

- The Feature is the ability to authorize payments with biometric authentication (such as your fingerprint or Face ID). That's just the technical aspect, directly related to the way the application is implemented.

- The related Advantage is that you don't need to enter a PIN or password (and, overall, the interaction with your device is simpler, possibly just one touch). That's what the feature enables in terms of using the application.

- The linked Benefit is that your payments will be faster and easier. Another benefit can be that your payments will also be safer (no one can steal your PIN or password). That's basically the reason why you may want to use this particular feature.

As you can imagine, a non-technical person (for example, a salesperson or the final customer) will probably think of each requirement in terms of benefits or advantages, and that's the right way to do it. However, reasoning along the FAB flow can help everyone share a uniform point of view, and can help reposition wishes as features and, eventually, requirements. Let's look at a simple example regarding user experience.

Sticking with our mobile payments sample application, business people will likely think about requirements in terms of the advantages that using this solution will bring.

One simple example of a requirement could be to have a list of payments easily accessible in the app. A feature linked to that example would allow the customers to see their transaction list immediately after logging into the system.

In order to complete our flow, we should also think about the benefits, which in this case could be described as the ability to keep your expenses under control. However, this could also work the other way around. When reasoning with more tech-savvy stakeholders, it's easier to focus on product features.

You may come up with a feature such as the following: a user not currently provisioned in the system should be presented with a demo version of the application.

The advantage here is having an easy way to try the application's functionalities. The benefit of this for customers is that they can try the application before signing up for an account. The benefit for the business is that they have free advertising to potentially draw in more customers.

You might now ask: when doing requirements gathering, am I essentially searching for features? There is no simple answer here.

My personal experience says that a feature may be directly considered a requirement, or, more often, be composed of more than one requirement. However, your mileage may vary depending on the type of product and the kind of requirements expressed.

One final thing to note about the FAB reasoning is that it will help with clustering requirements (by affinity to similar requirements or benefits), and with prioritizing them (depending on which benefit is the most important).
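To make the clustering and prioritization idea more concrete, here is a minimal sketch that models FAB chains as plain data, assuming that each benefit can be given a rank by the business stakeholders. The Feature class, the helper functions, and the ranking values are hypothetical and introduced only for illustration; the feature, advantage, and benefit texts paraphrase the examples above.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Feature:
    """A product feature with the Advantages and Benefits it enables (FAB)."""
    name: str
    advantages: list = field(default_factory=list)
    benefits: list = field(default_factory=list)


def cluster_by_benefit(features):
    """Group feature names under each Benefit they contribute to."""
    clusters = defaultdict(list)
    for feature in features:
        for benefit in feature.benefits:
            clusters[benefit].append(feature.name)
    return dict(clusters)


def prioritize(features, benefit_rank):
    """Sort features by their most important Benefit (rank 0 = most important).

    Benefits missing from benefit_rank are treated as least important.
    """
    lowest = len(benefit_rank)
    return sorted(
        features,
        key=lambda f: min((benefit_rank.get(b, lowest) for b in f.benefits), default=lowest),
    )


# Examples paraphrased from the text.
features = [
    Feature("Biometric payment authorization",
            advantages=["No PIN or password to enter"],
            benefits=["Faster and easier payments", "Safer payments"]),
    Feature("Transaction list shown right after login",
            advantages=["Payments list easily accessible"],
            benefits=["Expenses kept under control"]),
    Feature("Demo version for non-provisioned users",
            advantages=["Easy way to try the application"],
            benefits=["Try before signing up", "Free advertising"]),
]

# Hypothetical ranking agreed with business stakeholders.
benefit_rank = {"Safer payments": 0, "Expenses kept under control": 1}

for feature in prioritize(features, benefit_rank):
    print(feature.name)
```

Because a feature is ranked by its single most important benefit, a feature that serves many minor benefits does not automatically outrank one that serves a key benefit; other weighting schemes are equally possible.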

Now we have a simple process to link the technical qualities of our product to business impacts. However, we haven't yet defined exactly what a requirement is and what its intrinsic characteristics are. Let's explore what a requirement looks like.

Features and technical requirements

As we saw in the previous section, requirements are usually closely related to the features of the system. Depending on who is making the request, requirements can be specified with varying amounts of technical detail. A requirement may be as low-level as the definition of an API or other software interfaces, including arguments and quantitative input validation/outcome. Here is an example of what a detailed, technically specified requirement may look like:

When entering the account number (string, six characters), the system must return the profile information: result code as int (0 if the operation is successful), name as string, and surname as string [...]. If the account number is in an invalid format, the system must return a result code identifying the reason for the failure, as per a mapping table to be defined.
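A requirement at this level of detail is already close to an interface contract. The following sketch shows one possible way to express it in code. The lookup_profile name, the in-memory profile store, the sample profile, and the non-zero result codes (including the "not found" case) are all hypothetical, since the requirement itself leaves the error mapping table to be defined.

```python
from dataclasses import dataclass
from typing import Optional

# Result code 0 means success, as stated in the requirement.
RESULT_OK = 0
# Hypothetical failure codes: the real mapping table is still to be defined.
RESULT_INVALID_FORMAT = 1
RESULT_NOT_FOUND = 2

ACCOUNT_NUMBER_LENGTH = 6


@dataclass(frozen=True)
class ProfileResponse:
    """Result code plus profile fields (only filled in on success)."""
    result_code: int
    name: Optional[str] = None
    surname: Optional[str] = None


# Hypothetical in-memory store standing in for the real profile system.
PROFILES = {"A12345": ("Jane", "Doe")}


def lookup_profile(account_number: str) -> ProfileResponse:
    """Return profile information for a six-character account number."""
    if not isinstance(account_number, str) or len(account_number) != ACCOUNT_NUMBER_LENGTH:
        return ProfileResponse(RESULT_INVALID_FORMAT)
    profile = PROFILES.get(account_number)
    if profile is None:
        return ProfileResponse(RESULT_NOT_FOUND)
    name, surname = profile
    return ProfileResponse(RESULT_OK, name, surname)
```

Writing the contract down this way quickly exposes open questions (for example, whether "six characters" allows letters), which is exactly the kind of ambiguity that should be resolved before development starts.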

Often requirements are less technical, identifying more behavioral aspects of the system. In this case, drawing on the model we discussed in the previous section (Feature, Advantage, and Benefit), we are talking about something such as a feature or the related advantage.

An example here, for the same functionality as before, may look like this:

The user must be able to access their profile by entering their account number.

It's easy to understand that a non-technical requirement must be detailed in a quantitative and objective way before being handed over to development teams. But what makes a requirement quantitative and objective?

Types and characteristics of requirements

There are a number of characteristics that make a requirement effective, meaning easy to understand and responsive to the customer's expectations without ambiguity.

From my personal point of view, in order to be effective, a requirement must be the following:

- Consistent: The requirement must not conflict with other requirements or existing functionalities unless this is intentional. If it is intentional (for example, we are removing old functionalities or fixing wrong behaviors), the new requirement must explicitly override the older ones, and it deserves extra attention, since corner cases and conflicts are likely to happen.

- Implementable: This means, first of all, that the requirement should be feasible. If our system requires a direct brain interface to be implemented, this of course will not work (at least today). Implementable further means that the requirement must be achievable in the right amount of time and at the right cost. If it needs 100 years to be implemented, it's in theory feasible but probably impractical.

Moreover, these points need to be considered within the context of the current project since, although something may be easy to implement in one environment, it may not be feasible in another. For example, if we were a start-up, we could probably launch a brand-new service on our app with little impact on the existing user base. If we were a big enterprise, however, with a large customer base and consolidated access patterns, the same change may need to be evaluated more thoroughly.

- Explicit: There should be no room for interpretation in a software requirement. Ambiguity is likely when the requirement is defined in natural language, because a lot of unstated information is wrongly taken for granted. For this reason, it is advisable to use tables, flowcharts, interface mockups, or whatever schema can help clarify the natural language and avoid ambiguity. Also, straightforward wording, using defined quantities, imperative verbs, and no metaphors, is strongly advised.

- Testable: In current development philosophies, heavily focused on experimentation and trial and error (we will see more on this in the upcoming chapters), a requirement must be translatable into a software test case, ideally one that can be fully automated. While the customer may not be expected to have any knowledge of software testing techniques, it must be possible to put testing scenarios on paper, including things such as tables of the expected outputs over a significant range of inputs.

The QA department may, at a later stage, complement this specification with a wider range of cases, in order to test things such as input validation, expected failures (for example, in the case of inputs too large or malformed), and error handling. Security departments may dig into this too, by testing malicious inputs (for example, SQL injections).
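A testable requirement can be written down as a table of inputs and expected outputs, which maps almost directly onto an automated test. The sketch below assumes a hypothetical rule that an account number is exactly six ASCII letters or digits (the "letters or digits" part is an assumption for illustration). The first rows mirror what a customer might put on paper; the later rows are the kind of boundary, malformed, and malicious inputs that QA and security teams typically add.

```python
import re

# Hypothetical validation rule: exactly six ASCII letters or digits.
ACCOUNT_NUMBER_PATTERN = re.compile(r"[A-Za-z0-9]{6}")


def is_valid_account_number(value: str) -> bool:
    """Return True if value matches the (assumed) account number format."""
    return ACCOUNT_NUMBER_PATTERN.fullmatch(value) is not None


# Table of expected outputs over a range of inputs: (input, expected result).
CASES = [
    # Rows a customer could write down on paper
    ("A12345", True),
    ("123456", True),
    ("12345", False),          # too short
    # Rows QA might add: boundaries and malformed input
    ("1234567", False),        # too long
    ("", False),               # empty
    ("12 456", False),         # embedded space
    ("9" * 10_000, False),     # very large input
    # Row a security team might add: an SQL injection attempt
    ("' OR 1=1 --", False),
]


def test_account_number_table():
    """Table-driven test: every row must produce its expected result."""
    for value, expected in CASES:
        assert is_valid_account_number(value) == expected, f"unexpected result for {value[:20]!r}"


if __name__ == "__main__":
    test_account_number_table()
    print(f"{len(CASES)} cases passed")
```

The test function follows the pytest naming convention, so it can also be collected by a test runner; adding a new scenario only means adding a row to the table.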

The testability point, and security testing in particular, leads us to think about the technical consequences of a requirement. As we saw earlier in this chapter, requirements are commonly expressed as business features of the system (with a level of technical detail that can vary).

However, there are implicit requirements, which are not part of a specific business use case but are essential for the system to work properly.

To dig deeper into this concept, we must categorize the requirements into three fundamental types:

- Functional requirements: Describing the business features of the system, in terms of expected behavior and use cases to be covered. These are the usual business requirements impacting the use cases provided by the system to be implemented.

- Non-functional requirements: Usually not linked to any specific use case, these requirements are necessary for the system to work properly. Non-functional requirements are not usually expressed by the same users defining functional requirements. Those are usually about implicit aspects of the application, necessary to make things work. Examples of non-functional requirements include performance, security, and portability.

- Constraints: These are implicit requirements that are usually considered mandatory. They include external factors and things that need to be taken for granted, such as obeying laws and regulations and complying with standards (both internal and external to the company).

One example here could be the well-known General Data Protection Regulation (GDPR), the EU law on data protection and privacy, which you have to comply with if you operate in Europe. You may also have to comply with industry standards, depending on the particular market in which you are operating (that's pretty common when working with banks and payments), or even with standards enforced by the company you are working with. A common example of the latter is software compatibility (such as when the software has to be compatible with a certain version of an operating system or a particular browser).

Now that we've seen the different types of requirements and their characteristics, let's have a look at the life cycle of software requirements.

The life cycle of a requirement

The specification of a requirement is usually not immediate. It starts with an idea of how the system should work to satisfy a use case, but it needs reworking and detailing in order to be documented. It must be checked against (or merged with) non-functional requirements, and, of course, may change as the project goes on. In other words, the life cycle of requirements can be summarized as follows. Each phase has an output, which is the input for the following one (the path can be non-linear, as we will see):

- Gathering: Collection of use cases and desired system features, in an unstructured and raw format. This is done in various ways, including interviews, collective sketches, and brainstorming meetings involving both the customer and the internal team. Event Storming (which we will see soon) is a common structured way to conduct brainstorming meetings, but less structured techniques are commonly used here too, such as using sticky notes to post ideas coming from both customers and internal teams. In this phase, the collection of data usually flows freely without too much elaboration, and people focus more on the creative process and less on the details and impact of the new features. The output of this phase is an unstructured list of requirements, which may be collected in an electronic form (a spreadsheet or text document), or even just a photograph of a wall with sticky notes.

- Vetting: As a natural follow-up, in this phase the requirements output by the previous phase are roughly analyzed and categorized. Contradictory and infeasible items must be addressed. It's not unusual to go back and forth between this phase and the previous one. The output here is still an unstructured list, similar to the one we got from the previous step, but we have started to polish it by removing duplicates, identifying the requirements that need more details, and so on.

- Analysis: In this phase, it's time to conduct a deeper analysis of the output from the previous phase. This includes identifying the impact of implementing every new feature, analyzing the completeness of each requirement (desired behavior on a significant list of inputs, corner cases, and validation), and prioritizing the requirements. While not necessary, it is not unusual at this point to have a rough idea of the implementation costs of each requirement. The output from this step is a far more stable and polished list, basically a subset of the input we got. But we are still talking about unstructured data (items without an ID or missing some details, for example), which is what we are going to address in the next phase.

- Specification: Given that we've completed the study of each requirement, it's now time to document it properly, capturing all the aspects explored so far. We may already have drafts and other data collected during the previous phases (for example, schemas on paper, whiteboard pictures, and so on) that just need to be transcribed and polished. The documentation written in this phase has to be accessible and updatable throughout the project. This is essential for tracking purposes. As an output of this phase, you will have each requirement checked and registered in a proper way, in a document or by using a tool. There are more details on this in the Collecting requirements – formats and tools section of this chapter.

- Validation: Since the output of the previous phase is the formal documentation of each requirement, it is a best practice to double-check with the customer whether the final rework covers their needs. It is not unusual, after seeing the requirements on paper, to step back to the gathering phase in order to refocus on some use cases or explore new scenarios uncovered during the previous phases. The output of this phase has the same format as the output of the previous phase, but you can expect some changes in the content (such as different priorities or added/removed details). Even if some rework is expected, this data can be considered a good starting point for the development phase.

So, the requirement life cycle can be seen as a simple workflow. Some steps directly lead to the next, while sometimes you can loop around phases and step backward. Graphically, it may look like the following diagram:

Figure 2.1 – Software requirements life cycle

As you can see in the previous diagram, software requirements specification is often more than a simple phase of the software life cycle. Indeed, since requirements shape the software itself, they may follow a workflow on their own, evolving and going through iterations.
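Because the life cycle is a workflow with loops, it can be modeled as a small state machine. The sketch below encodes only the transitions described in the text: forward moves from one phase to the next, going back and forth between gathering and vetting, and stepping back from validation to gathering. Treating development as the exit point after validation, and allowing no other loops, are assumptions for illustration; the arrows in Figure 2.1 may differ. The Phase, TRANSITIONS, advance, and replay names are hypothetical.

```python
from enum import Enum


class Phase(Enum):
    GATHERING = "gathering"
    VETTING = "vetting"
    ANALYSIS = "analysis"
    SPECIFICATION = "specification"
    VALIDATION = "validation"
    DEVELOPMENT = "development"  # assumed exit point: validated requirements are handed over


# Allowed transitions as described in the text (assumption: no other loops).
TRANSITIONS = {
    Phase.GATHERING: {Phase.VETTING},
    Phase.VETTING: {Phase.ANALYSIS, Phase.GATHERING},
    Phase.ANALYSIS: {Phase.SPECIFICATION},
    Phase.SPECIFICATION: {Phase.VALIDATION},
    Phase.VALIDATION: {Phase.DEVELOPMENT, Phase.GATHERING},
    Phase.DEVELOPMENT: set(),
}


class InvalidTransition(Exception):
    """Raised when a phase change is not part of the workflow."""


def advance(current: Phase, target: Phase) -> Phase:
    """Move requirements from one phase to another, enforcing the workflow."""
    if target not in TRANSITIONS[current]:
        raise InvalidTransition(f"{current.value} -> {target.value} is not allowed")
    return target


def replay(path):
    """Check a whole sequence of phase changes, starting from gathering."""
    current = Phase.GATHERING
    for target in path:
        current = advance(current, target)
    return current
```

For example, replaying the path vetting, gathering, vetting, analysis, specification, validation, development succeeds, while jumping straight from gathering to specification raises InvalidTransition.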

Starting with the first step of this flow, let's have a look at requirements gathering.

Discovering and collecting requirements

The first step in the requirements life cycle is gathering, of which elicitation is an implicit part. Before starting to vet, analyze, and ultimately document the requirements, you need to start the conversation and get ideas flowing.

To achieve this, you need to have the right people in the room. It may seem trivial, but it is often unclear who the source of requirements should be (for example, "the business" may mean a vague set of people including sales, executive management, project sponsors, and so on). Even if you manage to get those people on board, who else is relevant for requirements collection?

There is no golden rule here, as it heavily depends on the project environment and team composition:

- You will certainly need some senior technical people, usually lead architects. They will help by giving initial high-level guidance on technical feasibility and ballpark effort estimations.

- Other useful participants are enterprise architects (or business architects), who can evaluate the impact of the solution on the rest of the enterprise processes and technical architectures. These profiles are, of course, more useful in big and complex enterprises and less so in other contexts (such as start-ups). Moreover, experienced people with this kind of background can suggest well-known solutions to problems, drawing on similar applications already in use (or even reusing functionalities where possible).

- Quality engineers can be a good addition to the team. While they may be less experienced in technical solutions and existing applications, they can think about the suggested requirements in terms of test cases, narrowing them down and making them more specific, measurable, and testable.

- Security specialists can be very helpful. Thinking about security concerns early in the software life cycle can help to avoid surprises later on. While not exhaustive, a quick assessment of the security impacts of proposed requirements can be very useful, increasing the software quality and reducing the need to rework.

Now that we have all the required people in a room, let's look at a couple of exercises to break the ice and keep ideas flowing to nail down our requirements.

The first practice we will look at is the lean canvas. This exercise is widely used in the start-up movement, and it focuses on bringing the team together to identify what's important in your idea, and how it will stand out from the competition.

The lean canvas

The lean canvas is a kind of holistic approach to requirements, focusing on the product's key aspects, and the overall business context and sustainability.

Originating as a tool for start-ups, this methodology was developed by Ash Maurya (book author, entrepreneur, and CEO at LEANSTACK) as an evolution/simplification of the Business Model Canvas, which is a similar approach created by Alexander Osterwalder and more oriented to the business model behind the product. This method is based on a one-page template to gather solution requirements out of a business idea.

The template is made of nine segments, highlighting nine crucial aspects that the final product must have:

Figure 2.2 – The lean canvas scaffold

Note that the numbering of each segment reflects the order in which the sections should be filled out. Here is what each segment means:

1. Problem: What issues will our customers solve by using our software product?

2. Customer Segments: Who is the ideal person to use our software product (that is, the person who has the problems that our product will solve)?

3. Unique Value Proposition: Why is our software product different from other potential alternatives solving similar problems?

4. Solution: How will our software product solve the problems in section 1?

5. Channels: How will we reach our target customer? (This is strictly related to how we will market our software solution.)

6. Revenue Streams: How will we make money out of our software solution?

7. Cost Structure: How much will it cost to build, advertise, and maintain our software solution?

8. Key Metrics: What are the key numbers that need to be used to monitor the health of the project?

9. Unfair Advantage: What's something that this project has that no one else can copy/buy?

The idea is to fill each of these areas with one or more propositions about the product's characteristics. This is usually done as a team effort in an informal setting. The canvas is drawn on a whiteboard, and each participant (usually product owners, founders, and tech leads) contributes ideas by sticking Post-it notes in the relevant segments. A collective post-processing phase usually follows: grouping similar ideas, ditching the less relevant ones, and prioritizing what's left in each segment.

As you can see, the focus here is shifted toward the feasibility of the overall project, instead of the detailed list of features and the specification. For this reason, this methodology is often used as a support for doing elevator pitches to potential investors. After this first phase, if the project looks promising and sustainable from the business model point of view, other techniques may be used to create more detailed requirement specifications, including the ones already discussed, and more that we will see in the next sections.

While the lean canvas is more oriented to the business model and how this maps into software features, in the next section we will explore Event Storming, which is a discovery practice usually more focused on the technical modeling of the solution.

Event Storming

Event Storming is an agile and interactive way to discover and design business processes and domains. It was described by Alberto Brandolini (IT consultant and founder of the Italian Domain Driven Design community) in a now-famous blog post, and has since been widely used and refined.

The nice thing about this practice is that it is very friendly and nicely supports brainstorming and cross-team collaboration.

To run an Event Storming session, you have to gather the right people from across various departments. It usually takes at least business and IT, but you can give this kind of workshop different flavors by inviting different profiles (for example, security, UX, testers) to focus on different points of view.

When you have the right mix of people in the room, you can use a tool to help them interact with each other. When using physical rooms (the workshop can also be run remotely), the best tool is a wall plus sticky notes.

The aim of the exercise is to design a business process from the user's point of view. So how do you do that?

- You start by describing domain events related to the user experience (for example, a recipient is selected). Each domain event is written on a sticky note, traditionally orange, and posted on the wall in temporal order.

- You then focus on what has caused the domain event. If the cause is a user interaction (for example, the user picks a recipient from a list), it's known as a command and tracked as a blue sticky note, posted close to the related event.

- You may then draft the user behind the command (for example, a customer of the bank). This means drafting a persona description of the user carrying out the command, tracking it on a yellow sticky note posted close to the command.

- If domain events are generated from other domain events (for example, the selected recipient is added to the recently used contacts), they are simply posted close to each other.

- If there are interactions with external systems (for example, the recipient is sent to a CRM system for identification), they are tracked as pink sticky notes and posted near the related domain event.
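When a session is run remotely, or when the wall is transcribed afterward, the stickies can be captured as plain data. The following sketch is a hypothetical model, not part of the Event Storming technique itself: it records each sticky with the type and color described above and an assumed "near" link to the sticky it is posted next to, then rebuilds the timeline of domain events. The example content comes from the bullets above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class StickyType(Enum):
    # Colors follow the conventions described in the text.
    DOMAIN_EVENT = "orange"
    COMMAND = "blue"
    USER = "yellow"
    EXTERNAL_SYSTEM = "pink"


@dataclass
class Sticky:
    kind: StickyType
    text: str
    position: int = 0            # left-to-right position on the wall (time order)
    near: Optional[str] = None   # text of the sticky this one is posted next to


def related_to(stickies, text):
    """Stickies posted directly next to the sticky with the given text."""
    return [s for s in stickies if s.near == text]


def timeline(stickies):
    """Domain events in time order, each with the stickies posted next to it.

    Users are posted next to commands, so they are collected one hop further.
    """
    events = sorted(
        (s for s in stickies if s.kind is StickyType.DOMAIN_EVENT),
        key=lambda s: s.position,
    )
    view = []
    for event in events:
        notes = []
        for sticky in related_to(stickies, event.text):
            notes.append((sticky.kind.name, sticky.text))
            if sticky.kind is StickyType.COMMAND:
                notes.extend((u.kind.name, u.text) for u in related_to(stickies, sticky.text))
        view.append((event.text, notes))
    return view


WALL = [
    Sticky(StickyType.DOMAIN_EVENT, "Recipient selected", position=1),
    Sticky(StickyType.COMMAND, "Pick recipient from list", near="Recipient selected"),
    Sticky(StickyType.USER, "Bank customer", near="Pick recipient from list"),
    Sticky(StickyType.DOMAIN_EVENT, "Recipient added to recent contacts",
           position=2, near="Recipient selected"),
    Sticky(StickyType.EXTERNAL_SYSTEM, "CRM identifies recipient", near="Recipient selected"),
]

for event, notes in timeline(WALL):
    print(event, notes)
```

A follow-up event appears both in its own timeline slot and next to the event that generated it, mirroring how it is posted on the physical wall.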

Let's have a look at a simple example of Event Storming. The following is just a piece of a bigger use case; this subset concisely represents a user accessing their transaction list. The use case itself is not relevant here; it's just an example to show the main components of this technique:

Figure 2.3 – The Event Storming components

In the diagram, you can see a small but complete subset of an Event Storming session, including stickies representing the different components (User, Command, and Domain Events) and the grouping representing the aggregates.

What do you achieve from this kind of representation?

- A shared understanding of the overall process.

- A clustering of events and commands, identifying the so-called aggregates. This concept is very important for the modeling of the solution, and we will come back to this in Chapter 4, Best Practices for Design and Development, when talking about Domain-Driven Design.

- The visual identification of bottlenecks and unclear links between states of the system.

It's important to note that this methodology is usually seen as a scaffold. You may want to customize it to fit your needs, tracking different entities, sketching simple user interfaces to define commands, and so on. Moreover, these kinds of sessions are usually iterative. Once you've reached a complete view, you can restart the session with a different audience to further enrich or polish this view, to focus on subdomains and so on.

In the following section, we will explore some alternative discovery practices.

More discovery practices

Requirements gathering and documentation is somewhat of a composite practice. You may find that after brainstorming sessions (for example, a lean canvas, Event Storming, or other comparable practices), other requirement engineering techniques may be needed to complete the vision and explore some scenarios that surfaced during the other sessions. Let's quickly explore these other tools so you can add them to your toolbox.

Questionnaires

Questions and answers are a very simple and concise way of capturing fixed points about a software project. If you are capable of compiling a comprehensive set of questions, you can present your questionnaire to the different stakeholders to collect answers and compare the different points of view.

The hard part is building such a list of questions. You may have some ideas from previous projects, but given that questions and answers are quite a closed-path exercise, it isn't particularly helpful if you are at the very beginning of the project. Indeed, it is not the best method to use if you are starting from a blank page, as it's not targeted at nurturing creative solutions and ideas. For this reason, I would suggest proceeding with this approach mostly to detail ideas and use cases that surfaced in other ways (for example, after running brainstorming sessions).

Mockups and proofs of concept

An excellent way to clarify ideas is to directly test what the product will look like by playing with a subset of functionalities (even if fake or just stubbed). If you can start to build cheap prototypes, or even just mockups (fake interfaces with no real functionalities behind the scenes), you may be able to get non-technical stakeholders and final users on board sooner, as you give them the opportunity to interact with the product instead of having to imagine it.

This is particularly useful in UX design, and for showcasing different solutions. Moreover, in modern development, this technique can be evolved toward a shorter feedback loop (release early, release often), having the stakeholders test alpha releases of the product instead of mockups so they can gain an understanding of what the final product will look like and change the direction as soon as possible.

A/B testing

A further use for this concept is to have the final users test by themselves and drive the product evolution. This technique, known as A/B testing, is used in production by high-performing organizations and requires some technological support to be implemented. The principle is quite simple: you pick two (or more) alternative features, put them into production, and measure how they perform. In an evolutionary design, the best performing will survive, while the others will be discarded.

As you can imagine, the devil is in the details here. Implementing multiple alternatives and then discarding some of them may be expensive, so the differences between them are often minor (for example, the color or position of elements in the UI). Also, performance must be measurable objectively: in e-commerce, for example, you might measure the impact on purchases, and in advertising, the conversion rates of banners and campaigns.
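In production, A/B testing usually relies on dedicated experimentation platforms. The following is only a minimal Python sketch of the two core ideas, assuming a hypothetical experiment name and in-memory data: each user is deterministically assigned to one variant (by hashing the user ID, so they always see the same one), and the variants are then compared on an objective metric, here a conversion rate.

```python
import hashlib
from collections import defaultdict


def assign_variant(user_id: str, experiment: str, variants: list[str]) -> str:
    """Deterministically map a user to a variant, so they always see the same one."""
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    return variants[int(digest, 16) % len(variants)]


def conversion_rates(observations: list[tuple[str, bool]]) -> dict[str, float]:
    """observations holds one (variant, converted) pair per exposed user."""
    exposed: dict[str, int] = defaultdict(int)
    converted: dict[str, int] = defaultdict(int)
    for variant, did_convert in observations:
        exposed[variant] += 1
        if did_convert:
            converted[variant] += 1
    return {variant: converted[variant] / exposed[variant] for variant in exposed}


def best_variant(rates: dict[str, float]) -> str:
    """Pick the variant with the highest conversion rate (no significance test here)."""
    return max(rates, key=rates.get)


if __name__ == "__main__":
    print(assign_variant("user-42", "checkout-button-color", ["A", "B"]))
    rates = conversion_rates([("A", True), ("A", False), ("B", True), ("B", True)])
    print(rates, best_variant(rates))  # {'A': 0.5, 'B': 1.0} B
```

In a real experiment, you would also check that the observed difference is statistically significant before discarding an alternative.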

Business intelligence

Another tool to complete and flesh out the requirements list is business intelligence. This might mean sending surveys to potential customers, exploring competitors' functionalities, and doing general market research. You should not expect to get a precise list of features and use cases from this technique alone, but it may be useful for completing your view of the project or coming up with some new ideas.

You may want to check whether your idea for the finished system resonates with final customers, how your system compares with competitors, or whether there are areas in which you could do better or be different. This tool may be used to validate your idea or gather the last pieces to complete the picture. In terms of the requirements life cycle shown in Figure 2.1, this is something you may want to do during the validation phase.

Now, we have collected a wide set of requirements and points of view. Following the requirements life cycle that we saw at the beginning of this chapter, it is now time for requirements analysis.

Analyzing requirements

The discovery practices that we've seen so far mostly cover the gathering and vetting of requirements. We've essentially elicited from the stakeholders the details of the desired software functionalities and possibly started organizing them by clustering, removing duplicates, and resolving macroscopic conflicts.

In the analysis phase, we are going to further explore the implications of the requirements and complete our vision of what the finished product should look like. Keep in mind that product development is a fluid process, especially if you are using modern project management techniques (more on that in Chapter 5, Exploring the Most Common Development Models). For this reason, you should expect that not every requirement defined will be implemented, and certainly not everything will be implemented in the same release; you could say we are shooting at a moving target. Moreover, new requirements will very likely emerge later on.

For this reason, requirements analysis will probably be repeated at each iteration. Let's start with the first aspect you should consider when analyzing the requirements.

Checking for coherence and feasibility

In the first section, we clearly stated that a requirement must be consistent and implementable. That is what we should look for in the analysis phase.

There is no specific approach for this. It's a qualitative activity: you go through the requirements one by one and cross-check them to ensure they don't conflict with each other. With large and complex requirement sets, this activity should be seen as a first pass, since conflicts that are not explicit may only surface later, during design and implementation. Similar considerations apply to feasibility. In this phase, it's important to catch the big issues and identify the requirements that seem unfeasible; however, more issues can arise in later phases.

If incoherent or unfeasible requirements are spotted, it's crucial to review them with the relevant stakeholders (usually business) in order to reconsider the related features and make changes. Sometimes, small changes to a requirement can make it feasible. A classic scenario involves picking a subset of the data or making similar compromises. In our mobile payments example, it may not be feasible to instantly show the whole list of transactions, updated in real time; however, a good compromise could be to show just a subset of them (for example, the last year) or to accept a small visualization delay (for example, a few seconds) when new transactions occur.
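To make the "subset of the data" compromise concrete, here is a minimal Python sketch, assuming a hypothetical in-memory `Transaction` type: instead of the full, always up-to-date history, the feature returns only the transactions inside a bounded window (by default, the last 365 days), newest first.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass(frozen=True)
class Transaction:
    tx_id: str
    amount_cents: int
    timestamp: datetime


def recent_transactions(
    transactions: list[Transaction],
    now: datetime,
    window: timedelta = timedelta(days=365),
) -> list[Transaction]:
    """Return only the transactions inside the window, newest first."""
    cutoff = now - window
    recent = [t for t in transactions if t.timestamp >= cutoff]
    return sorted(recent, key=lambda t: t.timestamp, reverse=True)


if __name__ == "__main__":
    now = datetime(2024, 6, 1)
    history = [
        Transaction("t1", 1_500, datetime(2022, 1, 10)),  # older than a year: hidden
        Transaction("t2", 2_000, datetime(2024, 3, 5)),
        Transaction("t3", 750, datetime(2024, 5, 30)),
    ]
    print([t.tx_id for t in recent_transactions(history, now)])  # ['t3', 't2']
```

In a real backend, the same bound would typically be pushed down into the data store query, which is what keeps the requirement feasible as the history grows.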

Checking for explicitness and testability

Continuing with requirements characteristics, it is now time to check the explicitness and testability of each requirement. This check can be a little more systematic and quantitative than the previous one. Essentially, you should run through the requirements one by one and check whether each is expressed in a well-defined way, making it easy to determine whether the implementation has been completed correctly. In other words, the requirement must be testable, ideally in an objective and automatable way.
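As an illustration of what "testable in an objective and automatable way" can mean, here is a minimal Python sketch. The requirement ID, its wording, and the `transfer` function are hypothetical: a vague statement such as "payments should be safe" is replaced by one whose outcome can be checked by plain assertions, and each test is named after the requirement it verifies.

```python
# Hypothetical requirement REQ-042: "A peer-to-peer payment is rejected when the
# amount exceeds the payer's available balance, and no balance is changed."


class InsufficientFunds(Exception):
    pass


def transfer(balances: dict[str, int], payer: str, payee: str, amount: int) -> None:
    """Toy implementation of the behavior described by REQ-042."""
    if amount > balances[payer]:
        raise InsufficientFunds(f"{payer} cannot pay {amount}")
    balances[payer] -= amount
    balances[payee] = balances.get(payee, 0) + amount


def test_req_042_rejects_overdraft() -> None:
    balances = {"alice": 500, "bob": 0}
    try:
        transfer(balances, "alice", "bob", 501)
    except InsufficientFunds:
        pass
    else:
        raise AssertionError("payment should have been rejected")
    assert balances == {"alice": 500, "bob": 0}  # nothing changed


def test_req_042_accepts_exact_balance() -> None:
    balances = {"alice": 500, "bob": 0}
    transfer(balances, "alice", "bob", 500)
    assert balances == {"alice": 0, "bob": 500}


if __name__ == "__main__":
    test_req_042_rejects_overdraft()
    test_req_042_accepts_exact_balance()
    print("REQ-042: all checks passed")
```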

Testing for explicitness brings with it the concept of completeness. Once a requirement (and the related feature) is accepted, all the different paths must be covered in order to give the product predictable behavior in most foreseeable situations. While this may seem hard and complex, in most situations it's enough to play with the possible input ranges and conditional branches to make sure all the possible paths are covered. Default cases are another important aspect to consider: for conditions the software doesn't know how to react to, it's a good idea to define reasonable, standard answers to fall back on.
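The following Python sketch shows what playing with input ranges, branches, and default cases can look like for a payment amount. The currencies, thresholds, and decisions are hypothetical business rules chosen only for illustration: every range has an explicit outcome, unknown input falls back to a default answer, and the case table exercises each range and each boundary.

```python
from enum import Enum


class Decision(Enum):
    REJECT = "reject"
    APPROVE = "approve"
    EXTRA_CHECK = "extra_check"


# Hypothetical business rules, amounts in cents, for illustration only.
SUPPORTED_CURRENCIES = {"EUR", "USD"}
EXTRA_CHECK_THRESHOLD = 100_000  # above 1,000.00: ask for extra confirmation
MAX_AMOUNT = 500_000             # above 5,000.00: always reject


def decide(amount_cents: int, currency: str) -> Decision:
    if currency not in SUPPORTED_CURRENCIES:
        return Decision.REJECT       # default answer for input we don't handle
    if amount_cents <= 0 or amount_cents > MAX_AMOUNT:
        return Decision.REJECT       # outside the valid range
    if amount_cents > EXTRA_CHECK_THRESHOLD:
        return Decision.EXTRA_CHECK  # upper band of the valid range
    return Decision.APPROVE          # normal band


# One case per range and per boundary, so that every branch is exercised.
CASES = [
    (0, "EUR", Decision.REJECT),
    (1, "EUR", Decision.APPROVE),
    (100_000, "EUR", Decision.APPROVE),
    (100_001, "EUR", Decision.EXTRA_CHECK),
    (500_000, "USD", Decision.EXTRA_CHECK),
    (500_001, "USD", Decision.REJECT),
    (1_000, "GBP", Decision.REJECT),
]

if __name__ == "__main__":
    for amount, currency, expected in CASES:
        assert decide(amount, currency) is expected, (amount, currency)
    print("all paths covered")
```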

Checking non-functional requirements and constraints

As the last step, it's important to run through the requirements list looking for non-functional requirements and constraints. The topic here is broad and subjective. It's likely neither possible nor useful to make all the non-functional requirements and constraints explicit and put them on our list: most of them are shared with existing projects, regulated by external parties, or simply not known.

However, there are areas that have an important impact on the project implementation, and for this reason, must be considered in the analysis phase.

One usual suspect here is security. All the considerations about user sessions, what to do with unauthenticated users, how to manage user logins, and so on have implications for the feasibility and complexity of the solution, in addition to affecting the user experience. Similar reasoning applies to performance. As seen in the Checking for coherence and feasibility section, small changes in the amount of data and the expected performance of the system can make all the difference. It's not unusual for non-technical staff to neglect these aspects or expect unreasonable targets. Agreeing (and negotiating) on the expected result is a good way to prevent issues later in the project.

Other non-functional requirements and constraints may be particularly relevant in specific use cases. Note that this kind of reasoning may also carry over into the project planning phase, in which budget or timeframe constraints may drive the roadmap and release plan.

Now, we've gone through the analysis phase in the software requirements life cycle. As expected, we will now approach the specification phase. We will start with a very formal and structured approach and then look at a less structured alternative.

Specifying requirements according to the IEEE standard

The Institute of Electrical and Electronics Engineers (IEEE) has driven various efforts in the field of software requirements standardization. As is usual for industry standards of this kind, the documents are thorough and extensive, covering many aspects in a very verbose way.

Using these standards may be necessary for specific projects in particular environments (for example, the public sector, aviation, or medicine). The best-known IEEE deliverable in this area is the 830-1998 standard, which has been superseded by the ISO/IEC/IEEE 29148 family of documents.

In this section, we are going to cover both standards, looking at what the documents describe in terms of content, templates, and best practices to define requirements adhering to the standard.

The 830-1998 standard

The IEEE 830-1998 standard focuses on the Software Requirements Specification (SRS) document, providing templates and suggestions on the content to be covered.

Some concepts are quite similar to the ones discussed in the previous sections. The standard lists the characteristics that a good requirements specification should have. Each requirement specification should be the following:

- Correct

- Unambiguous

- Complete

- Consistent

- Ranked for importance and/or stability

- Verifiable

- Modifiable

- Traceable

As you can see, this list is similar to the characteristics of requirements we discussed earlier. One interesting new concept is the ranking of requirements. In particular, the document suggests ranking requirements by importance, assigning each a degree of necessity such as essential, conditional, or optional, and/or by stability, which refers to the number of changes expected to the requirement due to the evolution of the surrounding organization.

Another interesting concept discussed in this standard is prototyping. I would say this was positively futuristic, considering that the standard was defined in 1998. Well before it became normal to create stubs and mocks cheaply, as it is today, the standard suggested using prototypes to experiment with the possible outcome of the system and as a support for requirements gathering and definition.

The last important point I want to highlight about IEEE 830-1998 is the template. The standard provides a suggested outline for software requirements specifications, along with a couple of samples. The outline includes the following:

- Introduction: Covering the overview of the system, and other concepts to set the field, such as the scope of the document, purpose of the project, list of acronyms, and so on.

- Overall description: Describing the background and the constructs supporting the requirements. Here, you may define the constraints (including technical constraints), the interfaces to external systems, the intended users of the system (for example, the skill level), and the product functions (intended to give an overview of the product scope, without the details that map to specific requirements).

- Specific requirements: This refers to the requirements themselves. Here, everything is expected to be specified with a high amount of detail, focusing on inputs (including validation), expected outputs, internal calculations, and algorithms. The standard offers a lot of suggestions for topics that need to be covered, including database design, object design (as in object-oriented programming), security, and so on.

- Supporting information: Containing accessory information such as a table of contents, index, and appendixes.

As you can see, this SRS document may appear a little verbose, but it's a comprehensive and detailed way to express software requirements. As we will see in the next section, IEEE and other organizations have superseded this standard, broadening the scope and including more topics to be covered.

The 29148 standard

As mentioned, the 830-1998 standard was superseded by a broader document. The 29148 family of standards represents a superset of 830-1998, and it is rich and detailed. It describes the SRS document following the same outline but adds a new section called verification. This section specifies a testing strategy for the software, suggesting that you should define a verification for each element specified in the other sections of the SRS.
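The idea of defining a verification for each specified element lends itself to a simple automated traceability check. The following Python sketch, using hypothetical requirement and test IDs, lists the requirements that no verification covers and the verifications that reference requirements that don't exist.

```python
def traceability_gaps(
    requirement_ids: set[str], verifications: dict[str, set[str]]
) -> tuple[list[str], list[str]]:
    """Return (requirements with no verification, references to unknown requirements).

    verifications maps a verification ID (test case, inspection, demo, ...)
    to the set of requirement IDs it verifies.
    """
    covered: set[str] = set().union(*verifications.values())
    missing = sorted(requirement_ids - covered)
    dangling = sorted(covered - requirement_ids)
    return missing, dangling


if __name__ == "__main__":
    requirements = {"REQ-1", "REQ-2", "REQ-3"}
    verifications = {
        "TC-01": {"REQ-1"},
        "TC-02": {"REQ-1", "REQ-4"},  # REQ-4 is not in the specification
    }
    print(traceability_gaps(requirements, verifications))
    # (['REQ-2', 'REQ-3'], ['REQ-4'])
```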

Other than the SRS document, the 29148 standard suggests four more deliverables. Let's have a quick look at them:

- The Stakeholder Requirements Specification: This places the software project into the business perspective, analyzing the business environment around it and the impact it will have by focusing on the point of view of the business stakeholders.

- The System Requirements Specification: This focuses on the technical details of the interactions between the software being implemented and the other systems composing the overall architecture. It specifies the domain of the application and its inputs/outputs.

- System Operational Concept: This describes the system's functionality from the user's point of view. It covers the operation of the system, policies and constraints (including supported hardware, software, and performance), user classes (meaning the different kinds of users and how they interact with the system), and operational modes.

- Concepts of Operations: This is not a mandatory document. When provided, it addresses the system as a whole and how it fits the overall business strategy of the customer. It includes things such as the investment plan, business continuity, and compliance.

As we have seen, the standard documents are a very polished and complete way to rationalize requirements and document them comprehensively. However, it may sometimes be impractical to document requirements in such a detailed and formalized way. Nevertheless, it's important to use these contents as a reference and consider providing the same information, even if you don't use the very same template or level of detail.

In the next section, we will have a look at alternative simplified formats for requirements collection and the tools for managing them.

Collecting requirements – formats and tools

To manage and document requirements, you can use a tool of your choice. Many teams, for example, use electronic documents to detail requirements and track their progression, that is, which stage of the requirements life cycle each one is in. However, as requirements grow in complexity and the team grows in size, you may want to start using more tailored tools.

Let's start by having a look at the required data, then we will focus on associated tooling.

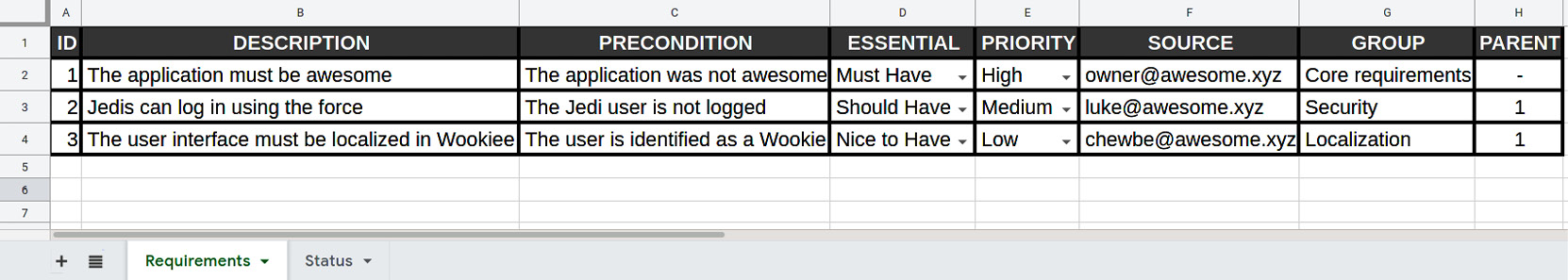

Software requirements data to collect

Regardless of the tool of your choice, there is a subset of information you may want to collect:

- ID: A unique identifier will be needed since the requirement will be cross-referenced in many different contexts, such as test cases, documentation, and code comments. It can follow a naming convention or simply be an incremental number.

- Description: A verbal explanation of the use case to be implemented.

- Precondition: (If relevant) the situation that the use case originates from.

- Essential: How essential the requirement is, usually classified as must have, should have, or nice to have. This may be useful in order to filter requirements to be included in a release.

- Priority: A way to order/cluster requirements. Also, a useful way to filter requirements to be included in a release.

- Source: The author of the requirement. It may be a department, but it is better to also have a named owner to contact in case clarifications are needed.

- Group: A way to cluster requirements by functional area. It can also be a useful way to collect a set of requirements to implement in a release.

- Parent: This is optional, in case you want to implement a hierarchy with a complex/high-level requirement made of a set of sub-requirements.

These are the basic attributes to collect for each software requirement; you can enrich them with any further columns that are relevant in your context.
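If you want to reason about these attributes in code (for example, in a script that prepares a release scope), they map naturally onto a record type. The following Python sketch is a hypothetical model, not the schema of any particular tool; it assumes that priority 1 is the highest, and it filters the requirements to include in a release by essentiality and group, as described above.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Essential(Enum):
    MUST_HAVE = 1
    SHOULD_HAVE = 2
    NICE_TO_HAVE = 3


@dataclass
class Requirement:
    id: str
    description: str
    essential: Essential
    priority: int            # assumption: 1 is the highest priority
    source: str              # department and, ideally, a named owner
    group: str               # functional area
    precondition: Optional[str] = None
    parent: Optional[str] = None  # ID of a higher-level requirement, if any


def release_candidates(
    requirements: list[Requirement],
    group: Optional[str] = None,
    least_essential: Essential = Essential.SHOULD_HAVE,
) -> list[Requirement]:
    """Keep requirements at least as essential as least_essential, optionally
    restricted to one group, ordered by essentiality, then priority, then ID."""
    selected = [
        r
        for r in requirements
        if r.essential.value <= least_essential.value
        and (group is None or r.group == group)
    ]
    return sorted(selected, key=lambda r: (r.essential.value, r.priority, r.id))


if __name__ == "__main__":
    backlog = [
        Requirement("REQ-1", "Pay with fingerprint", Essential.MUST_HAVE, 2,
                    "Payments (J. Doe)", "Payments"),
        Requirement("REQ-2", "Pay with PIN code", Essential.MUST_HAVE, 1,
                    "Payments (J. Doe)", "Payments",
                    precondition="Device has no biometric hardware"),
        Requirement("REQ-3", "Show a spending chart", Essential.NICE_TO_HAVE, 1,
                    "Marketing", "Reporting"),
        Requirement("REQ-4", "Add a note to a payment", Essential.SHOULD_HAVE, 1,
                    "Payments (J. Doe)", "Payments"),
    ]
    print([r.id for r in release_candidates(backlog)])  # ['REQ-2', 'REQ-1', 'REQ-4']
```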

You may then want to track the implementation of each requirement. The attributes to do so usually include the following:

- Status: A synthetic description of the implementation status, including states such as UNASSIGNED, ASSIGNED, DEVELOPMENT, TESTING, and COMPLETE.

- Owner: The team member to whom this requirement is assigned. It may be a developer, a quality engineer, or someone else, depending on the status.

- Target release: The software release that is targeted to include this requirement.

- Blocker: Whether this requirement is mandatory for this release or not.

- Depends on: Whether this requirement depends on other requirements to be completed (and what they are) before it can be worked on.
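The tracking attributes above are enough to answer two recurring questions: which requirements can be picked up now, and what is still blocking a release. Here is a minimal Python sketch of both queries over hypothetical data, assuming a requirement is ready to start when it is not yet in progress and all of its dependencies are COMPLETE.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Status(Enum):
    UNASSIGNED = "UNASSIGNED"
    ASSIGNED = "ASSIGNED"
    DEVELOPMENT = "DEVELOPMENT"
    TESTING = "TESTING"
    COMPLETE = "COMPLETE"


@dataclass
class Tracking:
    req_id: str
    status: Status
    target_release: str
    blocker: bool
    owner: Optional[str] = None
    depends_on: list[str] = field(default_factory=list)


def ready_to_start(items: list[Tracking]) -> list[str]:
    """Not yet in progress, and every dependency is COMPLETE."""
    status = {t.req_id: t.status for t in items}
    return sorted(
        t.req_id
        for t in items
        if t.status in (Status.UNASSIGNED, Status.ASSIGNED)
        and all(status.get(dep) == Status.COMPLETE for dep in t.depends_on)
    )


def open_blockers(items: list[Tracking], release: str) -> list[str]:
    """Mandatory requirements for the release that are not complete yet."""
    return sorted(
        t.req_id
        for t in items
        if t.target_release == release and t.blocker and t.status != Status.COMPLETE
    )


if __name__ == "__main__":
    board = [
        Tracking("REQ-1", Status.COMPLETE, "1.0", blocker=True, owner="dev-a"),
        Tracking("REQ-2", Status.UNASSIGNED, "1.0", blocker=True, depends_on=["REQ-1"]),
        Tracking("REQ-3", Status.ASSIGNED, "1.1", blocker=False, depends_on=["REQ-2"]),
        Tracking("REQ-4", Status.DEVELOPMENT, "1.0", blocker=True, owner="dev-b"),
    ]
    print(ready_to_start(board))        # ['REQ-2']
    print(open_blockers(board, "1.0"))  # ['REQ-2', 'REQ-4']
```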

Again, this is a common subset of information for tracking requirement status, and it may change depending on the tooling and project management techniques used in your particular context. Let's now have a look at tools to collect and manage this information.

Collecting software requirements in spreadsheets

Looking at the list of attributes described in the previous section, you can imagine that these requirements can be easily collected in spreadsheets. It's a tabular format, with one requirement per row, and columns corresponding to the information we've discussed. Also, you could have the status tracking in the same row or associated by ID in a different sheet. Moreover, you can filter the sheet by attribute (for example, priority, group, status), sort it, and limit/validate the inputs where relevant (for example, restricting values from a specified list). Accessory values may also be added (for example, last modified date).
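Because the sheet is tabular, the same filtering and validation can also be scripted against an export. The following Python sketch uses only the standard `csv` module on a small, hypothetical CSV export: it rejects values outside the allowed list for the Essential column (mirroring a restricted drop-down) and then filters and sorts the rows.

```python
import csv
import io

# A tiny hypothetical export of the requirements sheet (one requirement per row).
SHEET = """ID,Description,Essential,Priority,Group,Status
REQ-1,User pays with fingerprint,must have,1,Authentication,COMPLETE
REQ-2,User pays with PIN code,must have,2,Authentication,DEVELOPMENT
REQ-3,User sends money to a contact,must have,1,Payments,UNASSIGNED
REQ-4,User adds a note to a payment,nice to have,3,Payments,UNASSIGNED
"""

ALLOWED_ESSENTIAL = {"must have", "should have", "nice to have"}


def load(text: str) -> list[dict]:
    """Parse the export and validate restricted columns."""
    rows = list(csv.DictReader(io.StringIO(text)))
    for row in rows:
        if row["Essential"] not in ALLOWED_ESSENTIAL:
            raise ValueError(f"{row['ID']}: invalid Essential value {row['Essential']!r}")
        row["Priority"] = int(row["Priority"])
    return rows


def filter_rows(rows: list[dict], **criteria: str) -> list[dict]:
    """Keep the rows whose columns match all the given values."""
    return [r for r in rows if all(r[k] == v for k, v in criteria.items())]


rows = load(SHEET)
todo = sorted(filter_rows(rows, Group="Payments", Status="UNASSIGNED"),
              key=lambda r: r["Priority"])
print([r["ID"] for r in todo])  # ['REQ-3', 'REQ-4']
```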

This is what a requirements spreadsheet might look like:

Figure 2.4 – A requirements spreadsheet

As mentioned, we can then have a sheet for tracking the progression of each requirement. It may look like the example that follows:

Figure 2.5 – Status tracking sheet

In the next sections, we will have a look at tools that can be used to support requirements gathering and documentation.

Specialized tools for software requirements management

As mentioned in the previous section, with bigger teams and long-term projects, specialized tools for requirements management can be easier to use than a shared document/spreadsheet.

The main benefits of such tools over shared documents, from a centralized repository to change auditing and integration with source code management, are discussed in the Spreadsheets versus tools section later in this chapter.

The software for requirements management is usually part of a bigger suite of utilities for project management. Here are some common products:

- Jira is a widespread project management toolkit. It originated as an issue tracking tool for defects in software products, and it's commonly used for tracking features too. It can also be extended with plugins that enrich its capabilities for collecting, organizing, and reporting on features.

- Redmine is an open source tool and includes many different project management capabilities. The most interesting thing about it is its customizability, enabling you to track features, associate custom fields, reference source code management tools (for example, Git), and define Gantt charts/calendars.

- IBM Rational DOORS is commercial requirements management software, very complete and aimed at medium-to-large enterprises. It is part of IBM's Rational suite, originally developed by Rational Software (now part of IBM), a company also famous for contributing to the creation of the UML notation, which we will discuss in the next chapter.

Selecting a requirements management tool is a complex process, involving cost analysis, feature comparison, and more, which is well beyond the scope of this book.

Spreadsheets versus tools

Whether to use specialized tools or spreadsheets (or documents) for managing lists of requirements is a common debate. A common path is to start with a simpler approach (such as spreadsheets) and move to a tool once the project becomes too big or too complex to manage this way. Moreover, managers and non-technical users are often more willing to use spreadsheets because they are more comfortable with that technology, whereas technical teams often find it more effective to work with specialized tools. As usual, there is no one-size-fits-all answer, but honestly, the benefits of using a dedicated tool are many.

The most immediate benefit is having a centralized repository. Requirements management tools are made to be used in real time, acting as a central, single source of truth. This avoids the back and forth (and lack of synchronization) that happens when using documents (although you could object that, nowadays, many office suites offer real-time sharing and collaborative editing).

Other interesting features included with a specialized tool are auditing (tracking changes), notifications, reporting, and advanced validation/guided input.

Also, the integration with the source code management (for example, associating features with commits and branches) is pretty common and appreciated by the development teams. Management can also benefit from planning and insight features, such as charts, aggregated views, and integration with other project management tools.

So, at the end of the day, I strongly advise adopting a full-fledged requirements management tool instead of a simple spreadsheet if that is possible.

In the next section, we will explore requirements validation, as a final step in the software requirements life cycle.

Validating requirements

As we've seen, the final phase of the requirements life cycle is validating the requirements. In this phase, all the documentation produced is expected to be reviewed and formally agreed upon by all the stakeholders.

While sometimes neglected and considered optional, this phase is in fact very important. By having a formal agreement, you will ensure that all the iterations on the requirements list, including double-checking and extending partial requirements, still reflect the original intentions of the project.

The business makes sure that all the advantages and benefits will be achieved, while the technical staff will check that the features are correctly mapped in a set of implementable requirements so that the development team will clearly understand what's expected.

This sign-off phase could be considered the point at which the project truly kicks off. At this point, we have a clearer idea of what is going to be implemented. This is not the final word, however; when designing the platform and drafting the project plans, you can expect the product to be reshaped. Perhaps only a subset of the features will be implemented at first, while other functionalities will be deferred to later releases.

In this section, we took a journey through the requirements life cycle. As already said, most of these phases can be considered iterative, and more than one loop will be needed before completing the process. Let's have a quick recap of the requirements life cycle and the practices we have seen so far:

- Gathering and vetting: As we have seen, these two phases are strictly related and involve a cross-team effort to creatively express ideas and define how the final product should look. Here, we have seen techniques for brainstorming such as the lean canvas, Event Storming, and more.

- Analysis: This phase includes checking requirements for coherence and feasibility, explicitness and testability, and non-functional requirements and constraints.

- Specification: This covers the IEEE standards as well as less formal formats and tools, such as spreadsheets and requirements management software.

- Validation: This is the formal sign-off and acceptance of a set of requirements. As said, it's not unusual to see a further rework of such a set by going back to the previous phases, in an iterative way.

In the next section, we will continue with our mobile payments example and see how the practices discussed in this chapter apply to it.

Case studies and examples

Continuing with the case study about our mobile payments solution, we are going to look at how its requirements are gathered and documented. For the sake of simplicity, we will focus only on a small, specific scenario: a peer-to-peer payment between two users of the platform.

The mobile payment application example

As we are doing in every chapter, let's have a look at some examples of the concepts discussed in this chapter applied to the mobile payment application that we are using as a case study.

Requirements life cycle

In the real world, the requirements life cycle will likely take weeks (or months) and produce many requirements, reworked many times, so it is impractical to build a complete example of it for our mobile payment scenario. However, I think it is interesting to look at how one particular requirement might evolve over the phases we have seen:

- In the gathering phase, it is likely we will end up with a lot of ideas around ease of use and security for each payment transaction. Most of the participants will start to think from an end user perspective, focusing on the user experience, and so it's likely we will have sketches and mockups of the application. Some more ideas will revolve around how to authorize the payment itself along with its options (how about a secret swipe sequence, a PIN code, a face ID, a One-Time Password (OTP), or a fingerprint?).

- In the vetting phase (likely during, or shortly after, the previous phase), we will cluster and clean up what we have collected. Impractical ideas will be dropped (such as the OTP, which may be cumbersome to implement), while others will be grouped (face ID and fingerprint) under biometric authorization. Other concepts will be explored and detailed further: What does it mean to be fast and easy to use? How many steps should it take to complete a payment? Is entering a PIN code easy enough (in cases where we cannot use biometric authorization)?

- In the analysis phase, it's time to analyze each requirement collected so far; in our case, let's take payment authorization. It is likely that the user will be presented with a screen asking for biometric authentication. But what happens if the device doesn't have supported hardware? Should the customer be offered other options, such as a PIN code? What should happen if the transaction is not authorized? Of course, this kind of reasoning may go further and link more than one requirement: What if the network is unavailable? What should happen after the transaction completes successfully? Maybe the information we have at that moment (where the customer is, what they have bought, the balance of their account) allows for some interesting use cases, such as contextual advertising, offering discounts, and so on.

- Now that we have clarified our requirements (and discovered new ones), it's time for specification. Once we pick a format (the IEEE standard, or something simpler, such as a specialized tool or a spreadsheet), we start entering our requirements one by one. Now is the time to go for the maximum level of detail: think about bad paths (what happens when things go wrong?), corner cases, alternative solutions, and so on.

- The last phase is the validation of what we have collected into our tool of choice. It is likely that only a subset of the team has done the analysis and specification, so it's good to share the result of those phases with everyone (especially with non-technical staff and the project sponsors) to understand whether there is anything missing: maybe the assumptions we have made are not what they were expecting. It's not uncommon that having a look at the full list will trigger discussions about prioritization or brand-new ideas (such as the one about contextual advertising that we mentioned in the analysis phase).
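The questions raised in the analysis phase translate quite directly into branches of the authorization flow. The following Python sketch is hypothetical and deliberately simplified: it assumes biometrics are preferred when the hardware is present and enrolled, that a PIN code is the fallback, and (an assumption the stakeholders would have to confirm) that a payment is never authorized offline, so the user is asked to retry later.

```python
from enum import Enum, auto


class Method(Enum):
    BIOMETRIC = auto()
    PIN = auto()


class Outcome(Enum):
    AUTHORIZED = auto()
    DENIED = auto()
    RETRY_LATER = auto()


def choose_method(has_biometric_hardware: bool, biometric_enrolled: bool) -> Method:
    """Prefer biometrics; fall back to a PIN code when the device can't use them."""
    if has_biometric_hardware and biometric_enrolled:
        return Method.BIOMETRIC
    return Method.PIN


def authorize_payment(
    has_biometric_hardware: bool,
    biometric_enrolled: bool,
    credential_ok: bool,
    network_available: bool,
) -> tuple[Method, Outcome]:
    method = choose_method(has_biometric_hardware, biometric_enrolled)
    if not network_available:
        # Assumption: payments are never authorized offline; the user retries later.
        return method, Outcome.RETRY_LATER
    if not credential_ok:
        return method, Outcome.DENIED
    return method, Outcome.AUTHORIZED


if __name__ == "__main__":
    print(authorize_payment(True, True, True, True))     # biometric, authorized
    print(authorize_payment(False, False, False, True))  # PIN fallback, denied
    print(authorize_payment(True, True, True, False))    # no network: retry later
```

Each branch is a candidate row in the requirements list, which is also a quick way to spot the paths nobody has discussed yet.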

In the next sections, we will see some more examples of the specific phases and techniques.

Lean canvas for the mobile payment application

The lean canvas can be imagined as an elevator pitch for getting sponsorship for this application (such as funds or approval for development). In this regard, the lean canvas is a kind of conversation starter when it comes to requirements. It can help identify and detail the main, most important features, but you will probably need other techniques (such as the ones described so far) to identify and track all the requirements with a reasonable level of detail.

With that said, here is how I imagine a lean canvas could look in this particular case. Of course, I am aware that other mobile and contactless solutions exist, so consider this just an example. For readability, I'm going to represent it as a bullet list, transcribed as it would be after collecting all those aspects as sticky notes on a whiteboard:

- Problem: The payment procedure is cumbersome and requires cash or card. Payment with card requires a PIN code or a signature. The existing alternatives are credit or debit cards.

- Customer segment: Everybody with a not-too-old mobile phone. The early adopters could be people that don't own a credit card or don't have one to hand (maybe runners, who don't bring a wallet but only a mobile phone, or office workers during their lunch/coffee break).

- Unique value proposition: Pay with one touch, safely.

- Solution: A sleek, fast, and easy-to-use mobile application, allowing users to authorize payment transactions with biometric authentication.

- Unfair advantage: Biometric authentication, which credit/debit cards don't offer. (Of course, as I said, I am aware that contactless payments are available with credit cards, and other NFC options are bundled with mobile phones. So, in the real world, our application doesn't really have an advantage over other existing options.)

- Revenue streams: Transaction fees and profiling data on customer spending habits.

- Cost structure: App development, hosting, advertising. (In the real world, you may want to have a ballpark figure for it and even have a hypothesis of how many customers/transactions you will need to break even. This will put you in a better position for pitching the project to investors and sponsors.)

- Key metrics: Number of active users, transactions per day, average amount per transaction.

- Channels: Search engine optimization, affiliation programs, cashback programs.

In the next section, we'll look at Event Storming for peer-to-peer payments.

Event Storming for peer-to-peer payments

As we saw in the Event Storming section, in an Event Storming session it's important to have a variety of representatives from different departments in order to have meaningful discussions. In this case, let's suppose we have business analysts, chief architects, site reliability engineers, and UX designers. This is what our wall may look like after our brainstorming session:

Figure 2.6 – Event Storming for peer-to-peer payment

As you can see from the preceding diagram, even in this simplified example we begin to develop a clear picture of the people involved in this use case and the external systems.

Two external systems are identified: Identity Management (IDM), which deals with customer profiles, and Backend, which deals with balances and transactions.

As for commands and domain events, you may want to iterate on them to understand whether more interactions are needed, testing unhappy paths and defining aggregates (probably the hardest and most interesting step toward translating this model into software objects).
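To give an idea of what that translation might look like, here is a minimal Python sketch. All names are hypothetical and the IDM interaction is left out: a command (`SendPayment`) is handled by an aggregate (`Ledger`) that guards balances and decides which domain events occur, including an unhappy path (`PaymentRejected`). Its state changes only as a consequence of an event.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SendPayment:
    """Command: what the payer asks the system to do."""
    payment_id: str
    payer: str
    payee: str
    amount_cents: int


@dataclass(frozen=True)
class PaymentSent:
    """Domain event: something that happened (happy path)."""
    payment_id: str
    payer: str
    payee: str
    amount_cents: int


@dataclass(frozen=True)
class PaymentRejected:
    """Domain event for an unhappy path."""
    payment_id: str
    reason: str


class Ledger:
    """Hypothetical aggregate that owns balances and decides which events occur."""

    def __init__(self, balances: dict[str, int]) -> None:
        self.balances = dict(balances)

    def handle(self, cmd: SendPayment) -> list[object]:
        if cmd.amount_cents <= 0:
            return [PaymentRejected(cmd.payment_id, "invalid amount")]
        if self.balances.get(cmd.payer, 0) < cmd.amount_cents:
            return [PaymentRejected(cmd.payment_id, "insufficient funds")]
        event = PaymentSent(cmd.payment_id, cmd.payer, cmd.payee, cmd.amount_cents)
        self._apply(event)
        return [event]

    def _apply(self, event: PaymentSent) -> None:
        # Balances change only as a consequence of an event.
        self.balances[event.payer] -= event.amount_cents
        self.balances[event.payee] = self.balances.get(event.payee, 0) + event.amount_cents


if __name__ == "__main__":
    ledger = Ledger({"alice": 1_000})
    print(ledger.handle(SendPayment("p1", "alice", "bob", 300)))
    print(ledger.handle(SendPayment("p2", "bob", "alice", 500)))
    print(ledger.balances)  # {'alice': 700, 'bob': 300}
```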

In the next section, we will see what a related spreadsheet of requirements might look like.

Requirements spreadsheet

Now, let's imagine we successfully completed the Event Storming workshop (or even better, a couple of iterations of it). The collected inputs may be directly worked on and translated into software, especially if developers actively participated in the activity. However, for the sake of tracking, double-checking, and completing the requirements list, it's common to translate those views into a document with a different format. While you can complete a standard IEEE requirement document, especially if you can do some further reworking and have access to all the stakeholders, a leaner format is often more suitable.

Now, starting from the features we have identified before, let's start to draft a spreadsheet for collecting and classifying the related requirements:

Figure 2.7 – Requirements list of a peer-to-peer payment

As you can see, the list is not complete; however, it's already clear that a concept expressed nicely and concisely on a couple of sticky notes can yield many rows of requirements and related preconditions.
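If you prefer to keep such a list under version control and generate the spreadsheet from it, a minimal sketch could look like the following. The column names (req_id, feature, description, kind, preconditions, notes) and the sample rows are hypothetical illustrations, not the content of Figure 2.7. The output is plain CSV, which any spreadsheet tool can open.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields


@dataclass
class Requirement:
    req_id: str
    feature: str
    description: str
    kind: str  # "functional" or "non-functional"
    preconditions: str = ""
    notes: str = ""


def to_csv(requirements: list[Requirement]) -> str:
    """Serialize requirement rows into CSV text, one row per requirement."""
    buffer = io.StringIO()
    columns = [f.name for f in fields(Requirement)]
    writer = csv.DictWriter(buffer, fieldnames=columns)
    writer.writeheader()
    for requirement in requirements:
        writer.writerow(asdict(requirement))
    return buffer.getvalue()


# Hypothetical rows derived from a single "send money" sticky note.
rows = [
    Requirement("REQ-01", "Send money",
                "The user can select a recipient from their contacts",
                "functional", "User is logged in"),
    Requirement("REQ-02", "Send money",
                "The user can enter an amount and confirm the payment",
                "functional", "REQ-01 satisfied; balance is sufficient"),
    Requirement("REQ-03", "Send money",
                "The payment confirmation is shown promptly",
                "non-functional", "REQ-02 satisfied",
                "Target response time to be agreed with SREs"),
]

print(to_csv(rows))
```

Keeping the source as structured data makes it easy to cross-reference rows, as the preconditions column does here.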

It is also often debated whether lists like these should include all potential paths (for example, failed logins, error conditions, and other corner cases). The answer is usually a matter of common sense: a path is specified if special actions follow from it (for example, retries, offering help, and so on). If it simply ends with an error message, it can be specified elsewhere (for example, in the test list and in user acceptance documents).
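The rule of thumb above can be expressed as a simple filter. This is a minimal sketch assuming each path is annotated with the follow-up actions it triggers; the Path type, route_paths function, and example paths are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Path:
    name: str
    # Follow-up actions beyond showing an error message (e.g. "retry").
    special_actions: list[str] = field(default_factory=list)


def route_paths(paths: list[Path]) -> tuple[list[Path], list[Path]]:
    """Split paths into requirement-list rows and test/UAT-only cases."""
    requirement_rows, test_cases = [], []
    for path in paths:
        if path.special_actions:
            requirement_rows.append(path)  # special handling: specify it
        else:
            test_cases.append(path)  # error message only: document elsewhere
    return requirement_rows, test_cases


paths = [
    Path("Failed login, third attempt", ["lock account", "offer password reset"]),
    Path("Recipient not found"),
    Path("Backend timeout", ["retry"]),
]
in_list, elsewhere = route_paths(paths)
print([p.name for p in in_list])
print([p.name for p in elsewhere])
```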

Another relevant discussion concerns supporting information. From time to time, you may have important information that is better conveyed in other formats. The most common example is the user interface, which is usually specified with graphical mockups. It is up to you whether to attach the mockups elsewhere and refer to them in a field (for example, notes) or to embed everything (a list of requirements plus graphic mockups) directly in the same document. This choice is not critical, however; it depends heavily on your specific context and on what your team is comfortable with.

Summary

In this chapter, we have provided a complete overview of software requirements. Knowing the characteristics of a well-defined software requirement, how to collect it, and how to document it is a solid foundation on which to build a software architecture. Regardless of the technology and methodologies used in your projects, these ideas will help you get your project up to speed and build a collaborative, trusting relationship with your business counterparts.

On the business side, these tools and practices provide a structured way to give input to the technical team and to track the progress and coverage of the implemented features.

In the next chapter, we will look at software architecture modeling and what methodologies can be used for representing an architectural design.

Further reading

- Ash Maurya, The Lean Canvas (https://leanstack.com/leancanvas)

- Alberto Brandolini, Introducing Event Storming (http://ziobrando.blogspot.com/2013/11/introducing-event-storming.html)

- Atlassian, Jira Software (https://www.atlassian.com/software/jira)

- Jean-Philippe Lang, Redmine (https://www.redmine.org/)

- IBM, Rational DOORS (https://www.ibm.com/it-it/products/requirements-management)