Chapter 8: Measuring Test Coverage of the Web Application

How do you know that you've tested your web application enough? There are many metrics and measures for code quality, including defect density, user stories covered, and other "black-box" measurements. However, there is also a long-established, complementary "white-box" metric: code coverage. In this chapter, you will learn how to complement the quality assessment of your web application with code coverage across the test automation frameworks featured in this book (Selenium, Cypress, Playwright, and Puppeteer).

The chapter is designed to cover the following:

- Understand the differences between code coverage and test coverage and when to use them.

- Learn about the recommended tool(s) for JavaScript code coverage measurements.

- Understand how to complement code coverage measurements with test coverage capabilities (for example, production data, platform coverage, and analytics).

The goal of this chapter is to help frontend developers and SDETs build code coverage into their software development life cycles, and to recommend the right tools to use with the leading test automation frameworks.

Introduction to code coverage and test coverage

There are various metrics and methods for assessing code quality. However, when it comes to quality and coverage metrics, code coverage and test coverage are often confused. In this section, we will define and clarify each term, focusing on the definition and value of code coverage for frontend web application developers.

Test coverage

Test coverage refers to the extent of testing against requirements that you achieve through all types of testing (functional, non-functional, API, security, accessibility, and more). Test coverage also includes the platform coverage metric, which covers the required permutations of browser/OS and mobile/OS platforms. In terms of tooling, application life cycle management (ALM) solutions and other test management tools can measure and provide high-level test coverage metrics.

Typically, the QA manager would build a test plan that specifies all the testing efforts that are planned for the software version under test. As part of the plan, the QA manager will also provide test coverage goals and criteria.

Running all the testing types required by the test plan should ensure not only the high quality of the release but also a decent test coverage percentage. Based on the pass/fail ratio, decision-makers can determine whether the product is ready for release or whether it carries quality risks.

In many practices, test coverage encapsulates the following pillars:

- Product features coverage – how well we are covering all business flows, website screens, and navigation flows within the application, and how they work from a quality perspective.

- Product requirements coverage – this pillar refers to all the product user stories and capabilities that were supposed to be part of the product. The fact that we've tested all available features, as identified previously, does not mean that all the features that were supposed to be included in the version are implemented.

- Boundary values coverage – this aspect ensures that the application works correctly with all types of user input, whether valid or invalid. This can include testing all types of input fields and forms, entering different characters, mixing languages, and more.

- Compatibility coverage – as mentioned previously, this category refers to the range of platforms that are covered within all types of testing. For web applications, we typically refer to the most relevant configurations of web and mobile platforms against supported OS versions.

- Risk coverage – in specific market verticals, this pillar carries more weight than the others. In any case, measuring and addressing product risks within the test plan and the test coverage analysis is critical to ensuring safe, high-quality software. An example of risk coverage is ensuring that the web application's dependencies (for example, third-party services or databases) are properly monitored and that there is a fallback plan for them.
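To make the requirements and compatibility pillars more concrete, the following sketch computes each of them as a simple ratio, alongside the pass rate. The requirement IDs, the browser/OS matrix, and the shape of the test-run records are hypothetical placeholders, not the export format of any particular ALM or test management tool.

```javascript
// Hypothetical inputs: requirements from the test plan, the required
// browser/OS matrix, and the executed test runs.
const requirements = ['REQ-1', 'REQ-2', 'REQ-3', 'REQ-4', 'REQ-5'];
const requiredPlatforms = [
  { browser: 'Chrome', os: 'Windows 11' },
  { browser: 'Firefox', os: 'Windows 11' },
  { browser: 'Safari', os: 'macOS' },
  { browser: 'Chrome', os: 'Android' },
];
const testRuns = [
  { requirement: 'REQ-1', browser: 'Chrome', os: 'Windows 11', passed: true },
  { requirement: 'REQ-2', browser: 'Chrome', os: 'Windows 11', passed: false },
  { requirement: 'REQ-2', browser: 'Safari', os: 'macOS', passed: true },
  { requirement: 'REQ-3', browser: 'Firefox', os: 'Windows 11', passed: true },
];

// Percentage of required items that have at least one matching test run.
function coveragePct(required, isCovered) {
  const covered = required.filter((item) => isCovered(item)).length;
  return (covered * 100) / required.length;
}

const requirementsCoverage = coveragePct(requirements, (req) =>
  testRuns.some((run) => run.requirement === req));

const platformCoverage = coveragePct(requiredPlatforms, (p) =>
  testRuns.some((run) => run.browser === p.browser && run.os === p.os));

// Pass rate is a separate signal: it says nothing about what was NOT tested.
const passRate = (testRuns.filter((run) => run.passed).length * 100) / testRuns.length;

console.log({ requirementsCoverage, platformCoverage, passRate });
// → { requirementsCoverage: 60, platformCoverage: 75, passRate: 75 }
```

Keeping the pass rate separate from the two coverage ratios is deliberate: a high pass rate over a narrow platform matrix or a partial set of requirements can still hide untested areas.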

The bottom line is, test coverage looks at the software from a higher level and from a product requirement testing perspective.

Code coverage

Unlike test coverage, code coverage is highly technical: it goes down to the code level to measure how many lines of code are exercised, or "touched", by different types of tests. In many practices, unit tests are the highest-priority testing type to pair with code coverage, since measuring code coverage requires strong development skills, instrumentation of the code, and an understanding of the coverage output. That said, this does not mean that code coverage cannot be measured while running functional and other types of tests, as we will see later in this chapter.

More formally, code coverage indicates the percentage of the code that is exercised by a given set of test cases. A code coverage report typically breaks this down into the following categories:

- Branch coverage – this metric measures whether every possible branch of each decision point (for example, both the true and false outcomes of an if statement) is executed. For example, if your web application's code contains a condition based on user input, branch coverage shows whether your tests exercise every possible outcome of that condition.

- Function coverage – this metric measures whether all available functions within the web application code are executed.

- Statement coverage – this metric, which is reported by most common code coverage tools, measures whether every executable statement in the code is executed at least once.

- Loop coverage – similar to statement coverage, loop coverage measures whether the loops within the source code of the application under test are executed at least once.

To measure code coverage, frontend web application developers need to instrument the code under test, that is, inject counters that record which statements, branches, and functions are executed while the tests run.
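To illustrate what instrumentation does, and why statement and branch coverage can disagree, here is a hand-instrumented sketch. The `cov` object loosely mirrors the `s` (statements), `b` (branches), and `f` (functions) counter maps that Istanbul injects, but it is heavily simplified; real instrumenters generate these counters automatically, together with a map of each counted location in the source. The `shippingLabel` function is a hypothetical example.

```javascript
// Simplified coverage counters, loosely modeled on Istanbul's s/b/f maps.
const cov = {
  s: [0, 0, 0, 0], // one counter per executable statement
  b: [[0, 0]],     // one [if-taken, else-taken] pair per `if`
  f: [0],          // one counter per function
};

// Hypothetical application code, instrumented by hand.
function shippingLabel(country) {
  cov.f[0]++;
  cov.s[0]++;
  let label = 'International';
  cov.s[1]++;
  if (country === 'UK') {
    cov.b[0][0]++;
    cov.s[2]++;
    label = 'Domestic';
  } else {
    cov.b[0][1]++; // implicit else path: no statement here, but still a branch
  }
  cov.s[3]++;
  return label;
}

// Percentage of counters that were hit at least once.
function pct(counters) {
  const hit = counters.filter((n) => n > 0).length;
  return (hit * 100) / counters.length;
}

// A single test that only exercises the "UK" path.
shippingLabel('UK');

const statementPct = pct(cov.s);     // every statement ran
const branchPct = pct(cov.b.flat()); // only one of the two paths ran
const functionPct = pct(cov.f);
console.log({ statementPct, branchPct, functionPct });
// → { statementPct: 100, branchPct: 50, functionPct: 100 }
```

Adding a second test such as `shippingLabel('FR')` raises branch coverage to 100% without changing statement coverage, which is why branch coverage is usually the stricter of the two metrics.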

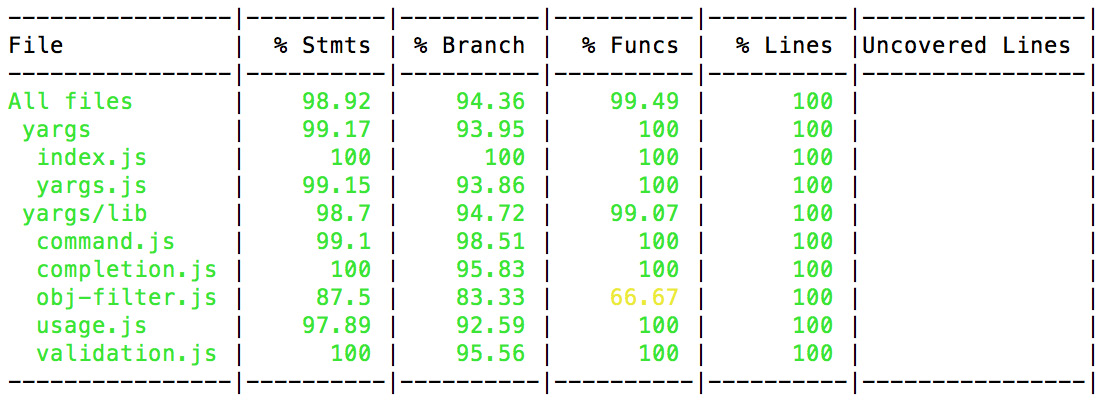

Figure 8.1 – Sample code coverage output from the Istanbul JavaScript code coverage tool (source: https://istanbul.js.org/)

Now that we have clarified the differences between code and test coverage, let's see how one of the leading coverage tools works with the major test automation frameworks.

JavaScript code coverage tools for web application developers

As described in the previous section, to measure code coverage, frontend web application developers need tools that measure how much of the code is exercised during test execution. Such tools instrument the website source code by adding counters that report back the percentage of lines, statements, branches, and functions covered by the tests, which lets developers assess the overall coverage and quality of their product.

For JavaScript, the most widely used tool is Istanbul, which instruments code through its Babel plugin (babel-plugin-istanbul). Most leading test automation frameworks offer an Istanbul plugin or integration, which makes it the most recommended tool for measuring code coverage.

The Cypress Istanbul plugin can be obtained here: https://www.npmjs.com/package/cypress-istanbul. If you're using Jest, it comes with built-in coverage support (https://jestjs.io/), so you can measure coverage by simply adding the --coverage flag to your test command. There are also guides for using Istanbul with Selenium (https://stackoverflow.com/questions/67913176/how-to-implement-istanbul-coverage-with-selenium-and-mocha), as well as similar guides for using Istanbul with Playwright (https://github.com/mxschmitt/playwright-test-coverage; https://medium.com/@novyludek/code-coverage-of-e2e-tests-with-playwright-6f8b4c0b56e1). Lastly, to do the same for your JavaScript web application with Google Puppeteer and Istanbul, here are two useful guides: https://github.com/istanbuljs/puppeteer-to-istanbul and https://github.com/puppeteer/puppeteer/blob/main/docs/api.md#class-coverage.

Since, in principle, measuring code coverage across all the preceding test automation frameworks relies on Istanbul (in most cases together with its Babel plugin), we will pick Cypress as a reference framework and explain how to set up, instrument, and measure the code coverage of a sample web application.

Measuring JavaScript code coverage using Istanbul and Cypress

Assuming you already have a working environment for Cypress locally installed, we will now focus on setting up Istanbul and Babel.

The first step is to install the code coverage libraries and dependencies in your Cypress project folder. Here, we install two main plugins, the Istanbul Babel plugin and the code-coverage plugin from Cypress:

npm install -D babel-plugin-istanbul

npm install -D @cypress/code-coverage

Installing these plugins not only enables you to measure the code coverage of your application but also lets you instrument the source code with the nyc command-line tool (https://github.com/istanbuljs/nyc). Instrumentation transforms the JavaScript source code into code that counts the functions, statements, branches, and lines executed during testing.

Next, add the following import statement to the cypress/support/index.js file:

import '@cypress/code-coverage/support';

After that, register the code-coverage plugin with Cypress by adding the following block to cypress/plugins/index.js:

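module.exports = (on, config) => {
  // Register the tasks that collect, merge, and report coverage data
  require('@cypress/code-coverage/task')(on, config);
  // Return the config object so that any changes to it are picked up
  return config;
};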

The next step is to instrument your application's source code. Installing the plugins alone does not instrument your code, so it cannot be measured yet. To instrument it, you can run the following command against the application's src folder (the main source folder of your application under test); it writes an instrumented copy of the code to a folder named instrumented, which you then serve in place of the original sources:

npx nyc instrument --compact=false src instrumented

Alternatively, to instrument your code on the fly during the build, add the Istanbul plugin (babel-plugin-istanbul) to your .babelrc file.

Lastly, in the cypress.json file of your Cypress project, set the coverage environment variable to true by adding the following lines (setting it to false disables coverage collection):

{
  "env": {
    "coverage": true
  }
}

Now, when I open the Cypress GUI and execute any of my tests in the Cypress project, they run with coverage collection enabled by default.

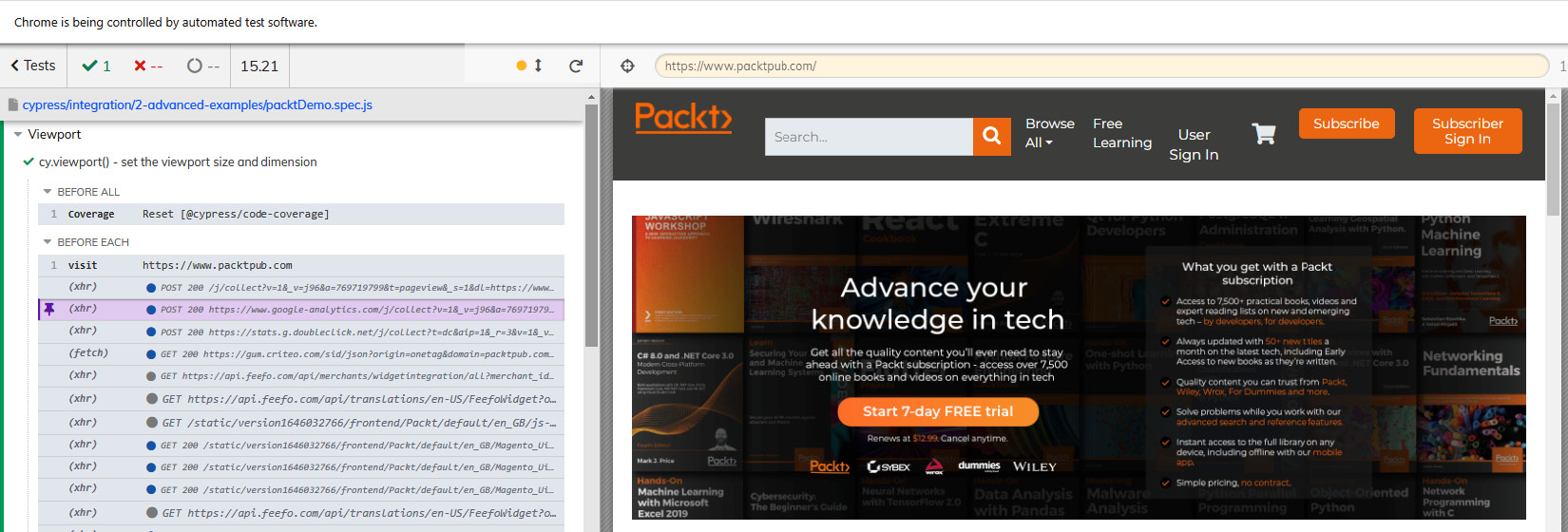

Figure 8.2 – Example for executing a Cypress test with the Istanbul/Babel plugin enabled for code coverage

With the code properly instrumented using Istanbul and Babel, you will get the following code coverage output, both in the GUI and in the coverage/lcov-report folder of your Cypress project. As a code coverage practitioner, you need access to the web application's source code and a clear understanding of the application architecture to interpret and act upon the results of this tool.

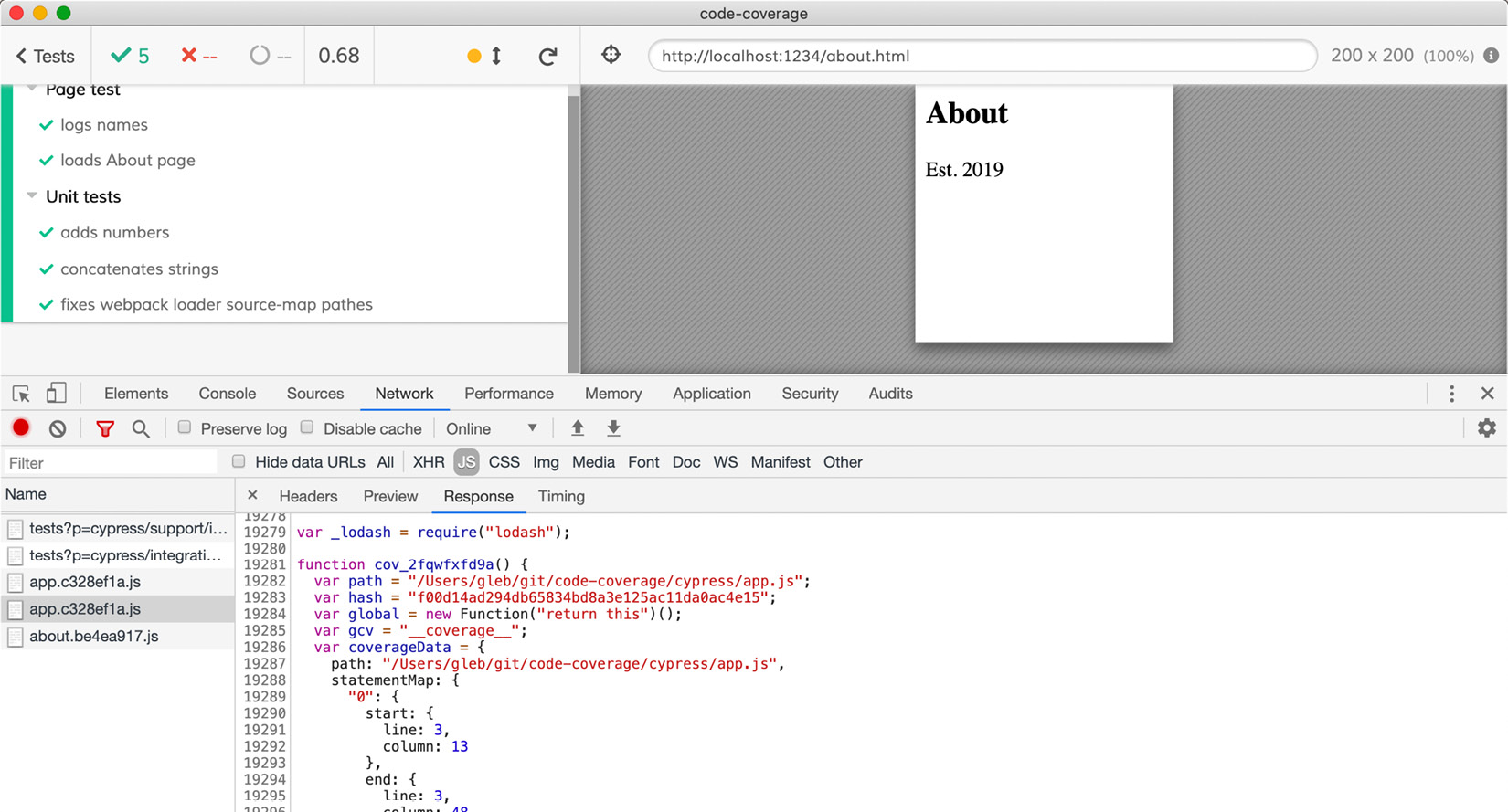

Figure 8.3 – Example of code coverage output when running Cypress with the Istanbul/Babel plugin (source: https://github.com/cypress-io/code-coverage)

To summarize the code coverage section with Istanbul and Cypress, follow the simple steps given previously: install the plugins, set up the configuration files, and instrument your website's JavaScript source code before running your end-to-end or unit tests.

The setup steps might vary between frameworks; however, the result is similar: the generated reports provide the percentage coverage of the core pillars of your app (statements, branches, functions, and lines).

The percentage for each pillar should not be just a number for developers and QA managers, but rather actionable guidance for any additional testing that might be required. For areas with less than 65-70% coverage, it is recommended to add more test cases (unit, functional, API, and so on) to reduce the risk of quality issues escaping to production. In general, an average source code coverage below 70% is not a good sign (https://www.bullseye.com/minimum.html#:~:text=Summary,higher%20than%20for%20system%20testing). Hence, it is highly recommended to measure code coverage continuously and work toward 80% and above.
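To turn this guidance into a repeatable check, you can scan the per-file summary that Istanbul's json-summary reporter writes to coverage/coverage-summary.json, assuming that reporter is enabled in your nyc or Jest configuration. That file holds a `total` entry plus one entry per file path, each with `lines`, `statements`, `functions`, and `branches` objects that include a `pct` field. The function name `findLowCoverageFiles`, the 70% default, and the sample data (trimmed to the `pct` fields) are hypothetical.

```javascript
// Flag files where any coverage metric falls below `threshold` percent.
// `summary` has the shape of Istanbul's coverage-summary.json.
function findLowCoverageFiles(summary, threshold = 70) {
  const metrics = ['lines', 'statements', 'functions', 'branches'];
  return Object.entries(summary)
    .filter(([file]) => file !== 'total') // skip the aggregate entry
    .map(([file, data]) => ({
      file,
      low: metrics.filter((m) => data[m].pct < threshold),
    }))
    .filter((entry) => entry.low.length > 0);
}

// Hypothetical sample data (in practice: JSON.parse the summary file)
const summary = {
  total: {
    lines: { pct: 81.2 }, statements: { pct: 80.5 },
    functions: { pct: 77.0 }, branches: { pct: 68.4 },
  },
  'src/components/Header.js': {
    lines: { pct: 95 }, statements: { pct: 95 },
    functions: { pct: 100 }, branches: { pct: 87.5 },
  },
  'src/pages/CheckoutPage.js': {
    lines: { pct: 62.5 }, statements: { pct: 61.9 },
    functions: { pct: 75 }, branches: { pct: 40 },
  },
};

console.log(findLowCoverageFiles(summary));
```

With the sample data, only src/pages/CheckoutPage.js is flagged (for lines, statements, and branches), and the `total` entry is skipped so that a single weak file is reported by name rather than hidden inside the average.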

Marie Drake's excellent article on generating code coverage reports for Cypress (https://www.mariedrake.com/post/generating-code-coverage-report-for-cypress) explains how to set up Cypress with Istanbul and the Babel plugin; at the end of it, you can see a sample code coverage report captured by testing the Zoopla UK website.

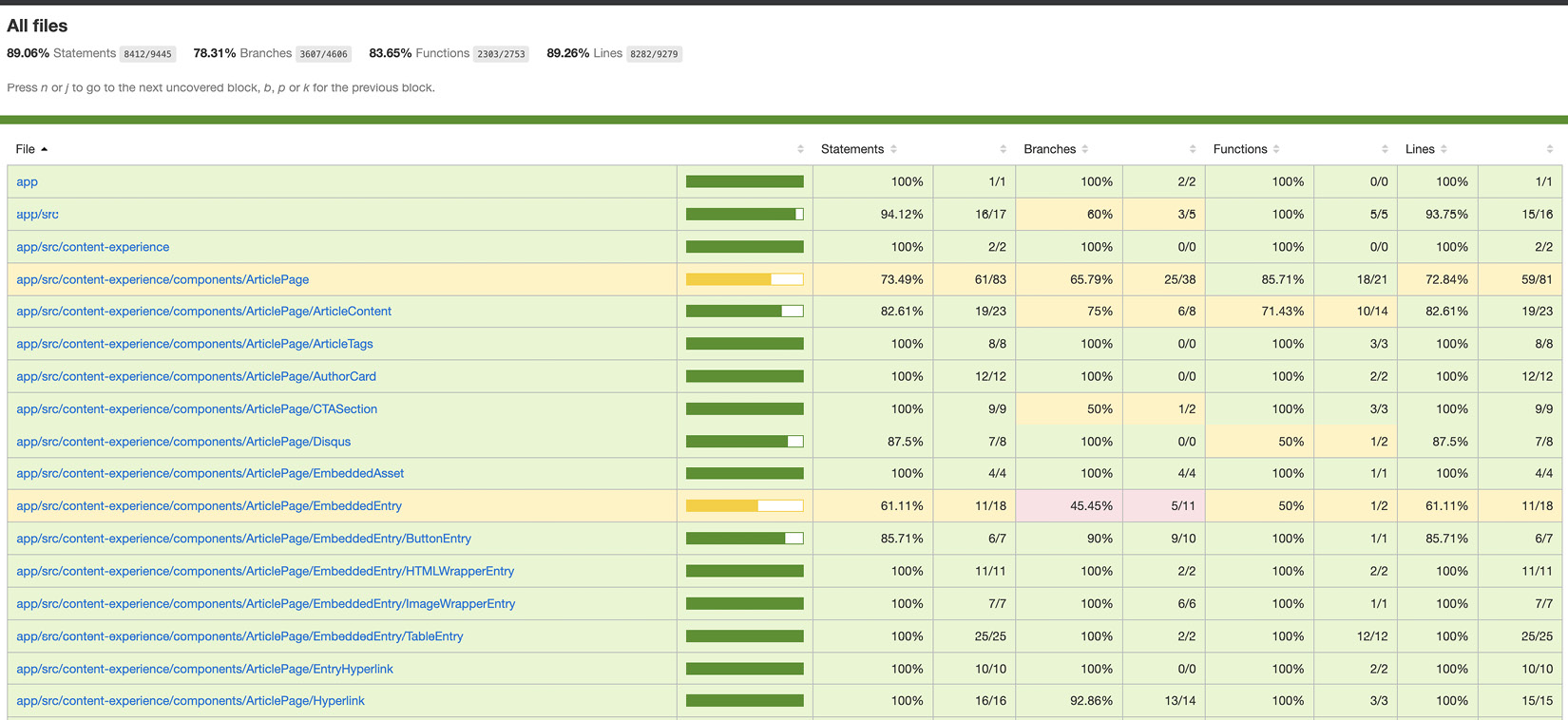

Figure 8.4 – Code coverage report for the Zoopla website using Cypress and the Istanbul plugin (source: https://www.mariedrake.com/post/generating-code-coverage-report-for-cypress)

The overall lines of code (LOC) coverage, as well as branch, function, and statement coverage, is quite decent across most of the source code, except for the EmbeddedEntry and ArticlePage files, which are marked in yellow to highlight a potential code coverage risk. As a frontend developer or SDET, I would focus on adding tests for these two files and their functional areas to raise their coverage toward 80% and above.

In this section, we covered the use of Istanbul and Babel and their role in code coverage measurements within the leading test automation frameworks. We specifically featured Istanbul within the Cypress test framework and provided a guide on how to enable this capability for any JavaScript test spec. We also walked through a real-life report example to explain how to read such a report and which coverage percentages to focus on. Next, we'll see how to complement code coverage with test coverage.

Complementing code coverage with test coverage

As we explained in the previous section, measuring code coverage is critical to assessing how much of the code is executed and covered by testing. However, code-level analysis alone is not sufficient; it must be combined with test coverage analysis. Various factors play a critical role in ensuring the high quality of your web application. Code coverage shows how much of the application's code is exercised at runtime across the tested scenarios. The outcomes of the code coverage report guide managers on which areas to invest in further to reduce risks and enhance overall application quality.

Test coverage, as a superset of the quality plan, looks at other aspects of application quality, such as user experience, security, accessibility, compatibility, and boundary testing. Together, code and test coverage provide a strong set of metrics and analysis for the entire application. It is important to understand that both code coverage and test coverage are dynamic measurements, since the platform landscape keeps changing and the application source code changes from one iteration to the next. It is imperative to continuously test the app and report the metrics back to management so that the quality bar remains high.

Summary

In this chapter, we explored the two often-confused terms of code coverage and test coverage. We defined them and clarified the differences between them.

We then focused on code coverage for JavaScript web applications through the leading open source tool called Istanbul with its supporting Babel plugin.

Lastly, we walked through a reference guide for integrating Istanbul and its Babel plugin into Cypress to measure code coverage through end-to-end functional testing.

That concludes this chapter!

In the following chapter, which opens the last part of this book (Part 3), we will start diving deeper into the most advanced features of the leading test automation frameworks. The following chapter will start with the advanced features of Selenium.