2

Test Automation Strategy

Effective test automation requires creativity, technical acumen, and persistence. Success in test automation comes from persevering through a wide variety of challenges posed by complex application areas, changing requirements, and unstable environments. Every successful test automation project starts with a sound strategy. Every software project is unique, and a test automation strategy serves as a beacon for meeting the specific requirements of a test automation project. It provides a foundation for questioning, assessing, and continuously improving the overall software quality.

Test automation strategy is not only about planning and executing the automated tests but also about ensuring that, by and large, the test automation effort is placed in the right areas to deliver business value quickly and efficiently.

We will be looking at the following topics in this chapter to solidify our understanding of test automation strategy and frameworks:

- Knowing your test automation strategy

- Devising a good test automation strategy

- Understanding the test pyramid

- Familiarizing ourselves with common test automation design patterns

Technical requirements

In the later part of this chapter, we will be looking at some Python code to understand a simple implementation of design patterns. You can refer to the following GitHub URL for the code in the chapter: https://github.com/PacktPublishing/B19046_Test-Automation-Engineering-Handbook/blob/main/src/test/design_patterns_factory.py.

This Python code is provided mainly to illustrate the design patterns, and readers do not have to execute it. If you are interested in running it, here is the information you need to get it working on your machine. First, you will need an integrated development environment (IDE) to work through the code. Visual Studio Code (VS Code) is an excellent editor with wide support for a variety of programming languages.

The following URL provides a good overview for using Python with VS Code:

https://code.visualstudio.com/docs/languages/python

To execute this code, you will need Python 3.5+ and the Java Runtime Environment (JRE) 1.8+ installed on your machine. pip is the package installer for Python, and I recommend installing it by following the instructions at https://pip.pypa.io/en/stable/installation/. Once you have pip installed, you can use the pip install -U selenium command to install the Selenium Python bindings.

Next, install the driver for each browser you plan to use. You can download it from the following links:

- Chrome: https://chromedriver.chromium.org/downloads

- Firefox: https://github.com/mozilla/geckodriver/releases

- Edge: https://developer.microsoft.com/en-us/microsoft-edge/tools/webdriver/

Make sure the driver executables are in your PATH environment variable.
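If you want to confirm that the drivers are reachable before running any Selenium code, Python's standard library is enough. The following is a minimal sketch that assumes the default executable names (chromedriver, geckodriver, and msedgedriver); adjust them if your installation differs. On Windows, shutil.which also resolves the .exe suffix through the PATHEXT variable.

```python
import shutil

# Assumed default executable names for the drivers linked above.
DRIVER_EXECUTABLES = {
    "Chrome": "chromedriver",
    "Firefox": "geckodriver",
    "Edge": "msedgedriver",
}


def find_drivers(executables=DRIVER_EXECUTABLES):
    """Map each browser to its driver's full path, or None if the
    driver cannot be found on the PATH environment variable."""
    return {browser: shutil.which(name) for browser, name in executables.items()}


if __name__ == "__main__":
    for browser, path in find_drivers().items():
        print(f"{browser:<8} {path or 'NOT FOUND on PATH'}")
```

Any browser reported as NOT FOUND needs its driver directory added to PATH before Selenium can launch it.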

Knowing your test automation strategy

Even though every software project has its own traits, there are certain important aspects that every test automation strategy should touch upon. Let us look at some chief considerations of test automation strategy in this section.

Test automation objectives

One of the first tasks that an automation strategy must define is its purpose. All the project stakeholders should be involved in a discussion about which pain points test automation is expected to address. This step is also crucial because it helps management direct resources to the appropriate areas. Some of the most common objectives for test automation are noted here:

- Strengthening product quality: This is a chief trigger for test automation projects. Test automation engineers can plan and create tests that can catch regression bugs and can help with defect prevention.

- Improving test coverage: This is a key motivation for several test automation undertakings. Test engineers usually work with the software engineers and the product team to cover all possible scenarios. The scenarios can then be categorized based on their criticality.

- Reducing manual testing: Needless to say, reducing manual testing is a primary objective for most, if not all, test automation initiatives. Automated tests are predictable and perform the programmed tasks consistently every time, without the fatigue and oversights that creep into repetitive manual execution. This leaves the whole team more time to focus on other important tasks, and it boosts the team's overall productivity.

- Minimizing maintenance and increasing portability of tests: This can be an incredibly good reason for kicking off a test automation initiative. Legacy software projects can be rife with tightly coupled tests that are not compatible with newer technologies, and making them portable and easy to read usually requires considerable effort from the testing team.

- Enhancing the stability of a software product: This is a principal motivation for kick-starting a test automation project that addresses non-functional shortcomings. It involves identifying and documenting various real-life stress- and load-testing scenarios for the application under test. Subsequently, with the help of the right tools, the application can be subjected to performance testing.

- Reducing quality costs: Lower quality costs free up management's resources and funds to further the capabilities of the organization. Reducing redundancy in quality-related efforts helps trim testing costs and time, and a test automation strategy can be a great driver for this endeavor.

A push for test automation can be due to one or a combination of these reasons. Well-thought-out objectives for test automation eventually increase the overall efficiency of the engineering team, thereby aligning their efforts toward achieving greater business value.

Next, let us look at how important it is to garner management support to achieve the objectives of test automation.

Gathering management support

One of the critical factors that influence the outcome of a test automation effort is constant communication with management about progress and issues. Isolated efforts from teams building test automation might work in the short term, but to scale up the infrastructure needed to serve the entire organization, we need adequate support from management. Calling attention to the business goals that test automation can help achieve is one way to engage management. It is crucial to convey to management how test automation bridges the gap between frequent release cycles and a bug-free product, and a clear test automation strategy helps with exactly that. Management will have clarity on what to expect in the initial phase, when the framework is being built, and on when they can start seeing a return on investment (ROI). Another factor to highlight is that test automation, once in place, increases the efficiency of multiple teams.

After laying out the objectives and getting adequate support for the test automation effort, let us next deal with defining the scope as well as addressing some limitations of test automation.

Defining the scope of test automation

The scope of test automation refers to the extent to which the software application will be validated using automated tests. There are a number of considerations that go into determining the scope. Some important ones are listed here:

- Number of business-critical flows

- The complexity of test cases

- Types of testing to be performed on the application

- The skill set of test automation engineers

- Reusability of components within the application

- Time constraints for product delivery

- Test environment availability/maturity

It is unrealistic to plan to automate every part of a software application. Every product is unique, and there is no absolute path to determine the scope of test automation. Planning upfront and constant collaboration between test engineers, software engineers, and product owners help lay out the initial scope. Starting small and building iteratively assists in this approach. It is also crucial to establish upfront which types of testing will be done and by whom.

Let us now investigate the crucial role that the test environment plays in a test automation strategy.

Test automation environment

Where and how automated tests are executed forms an integral part of a test automation strategy. The strategy should define deliberate steps for setting up the test environment correctly and for monitoring it while it is in active use. There should also be an effective communication strategy for providing timely updates on test environments. Now, let us look at what really constitutes a test environment.

What constitutes a test environment?

The infrastructure team usually makes a replica of the code base and deploys it on different virtual machines (VMs) with the associated dependencies. They provide access to these VMs for the required users. Users can then log on to these and perform a series of operations based on their roles and permissions. The combination of the hardware, software, and state of these code bases makes it a separate test environment.

The most common types of test environments are presented here:

- Development

- Testing

- Staging

- Production

We will not be going into the details of each type here, but it is important to keep in mind that the testing environment is where most of the automated tests are run. The staging environment is usually used for end-to-end (E2E) testing and user acceptance testing (UAT), which can be both manual and automated. All organizations have a development environment, but depending on their size and capacity, some may combine the testing and staging environments into one. Now that we know what constitutes a test environment, let us look at how to provision one.
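Because the same automated tests often run against more than one of these environments, it helps to keep environment-specific settings out of the test scripts themselves. Here is a minimal sketch, assuming a hypothetical TEST_ENV environment variable and placeholder URLs under example.com; the read_only field is likewise an illustrative assumption that marks where only non-destructive checks should run.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class EnvironmentConfig:
    name: str
    base_url: str
    read_only: bool  # True where only high-level, non-destructive checks may run


# Placeholder URLs for illustration; real values come from the infrastructure team.
ENVIRONMENTS = {
    "development": EnvironmentConfig("development", "https://dev.example.com", False),
    "testing": EnvironmentConfig("testing", "https://test.example.com", False),
    "staging": EnvironmentConfig("staging", "https://staging.example.com", False),
    "production": EnvironmentConfig("production", "https://www.example.com", True),
}


def load_environment(env_var="TEST_ENV", default="testing"):
    """Pick the target environment from an environment variable, falling
    back to the testing environment, where most automated tests run."""
    name = os.environ.get(env_var, default).strip().lower()
    try:
        return ENVIRONMENTS[name]
    except KeyError:
        raise ValueError(f"Unknown test environment: {name!r}") from None
```

Setting TEST_ENV=staging in a CI job would then point the same scripts at staging without any code changes, and a misspelled environment name fails fast instead of silently hitting the wrong system.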

Provisioning a test environment

A key factor that determines the stability of automated tests is the test environment. Serious considerations must be made in the setup and maintenance of test environments. This is where the test engineers must collaborate effectively with the infrastructure teams and identify the limitations of their tests in the context of a test environment. This analysis, if done early in the project, goes a long way in building resilient automated tests. After all, no one likes to spend a huge amount of time debugging test failures that are not functional in nature.

Test environment provisioning and configuration should be automated at all costs. Manual setup increases the cycle time and adds unnecessary overhead for testers and infrastructure teams. A wide variety of tools such as Terraform and Chef are available on the market for this purpose. Although responsibility for the provisioning of various environments lies with the infrastructure team, it does not hurt for the test engineers to gain a basic understanding of these tools. An ideal environment provisioning approach would address setting up the infrastructure (where the application will run), configurations (behavior of the application in the underlying infrastructure), and dependencies (external modules needed for the functioning of the application) in an automated manner.
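The ordering described above (infrastructure first, then configuration, then dependencies) can be expressed as a small orchestration wrapper. The following is a minimal sketch, not a replacement for Terraform or Chef: each step is a hypothetical (name, setup, teardown) tuple whose callables would, in practice, invoke those tools. The wrapper tears down completed steps in reverse order, even when a later setup step fails.

```python
from contextlib import ExitStack, contextmanager


@contextmanager
def provisioned_environment(steps):
    """Apply (name, setup, teardown) steps in order, yield to the tests,
    then undo the completed steps in reverse order. If a setup step
    fails, the steps that already succeeded are still torn down."""
    with ExitStack() as stack:
        for _name, setup, teardown in steps:
            setup()
            stack.callback(teardown)
        yield


def placeholder(action):
    # Hypothetical stand-in for a call to a real provisioning tool.
    return lambda: print(action)


STEPS = [
    ("infrastructure", placeholder("create VMs"), placeholder("destroy VMs")),
    ("configuration", placeholder("apply app configuration"), placeholder("revert configuration")),
    ("dependencies", placeholder("start databases and stubs"), placeholder("stop databases and stubs")),
]

if __name__ == "__main__":
    with provisioned_environment(STEPS):
        print("run automated tests")
```

Using ExitStack keeps the cleanup logic in one place, so a half-provisioned environment is not left behind to cause confusing failures in the next run.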

Testing in a production environment

Apart from a few high-level checks, any additional testing in production is usually frowned upon. New code changes are usually soaked in a pre-production environment and released to production once testing (manual and automated) is complete. In recent times, however, feature flags are routinely used to turn new code changes on or off without breaking existing functionality in production. By using feature flags effectively, testing in production can be limited to a small subset of features, so even if new code changes break some features, the impact is minimal. A test automation strategy helps maintain sanity by providing adequate test coverage for critical features before they are deployed to production. Irrespective of the state of the feature flags, primary business flows should remain functional, and keeping them that way is a prime responsibility of test automation.
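To illustrate that last point, here is a minimal sketch of a test that verifies a primary business flow with a feature flag both off and on. The in-memory FEATURE_FLAGS dictionary, the new_checkout_ui flag, and the checkout() function are all hypothetical; real projects typically read flags from a feature-flag service or configuration system.

```python
import unittest
from unittest import mock

# Hypothetical in-memory flag store used only for illustration.
FEATURE_FLAGS = {"new_checkout_ui": False}


def is_enabled(flag):
    return FEATURE_FLAGS.get(flag, False)


def checkout(cart_total):
    """Primary business flow; the flag changes only the presentation layer."""
    ui = "new" if is_enabled("new_checkout_ui") else "legacy"
    return {"status": "paid", "total": cart_total, "ui": ui}


class CheckoutTest(unittest.TestCase):
    def test_checkout_works_regardless_of_flag(self):
        for state in (False, True):
            # patch.dict restores the original flag values after the block.
            with mock.patch.dict(FEATURE_FLAGS, {"new_checkout_ui": state}):
                with self.subTest(new_checkout_ui=state):
                    result = checkout(42.0)
                    self.assertEqual(result["status"], "paid")
                    self.assertEqual(result["total"], 42.0)
```

Running this with python -m unittest exercises both flag states, so a regression in the checkout flow is caught whichever way the flag is set in production.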

Note

Automated tests are only as good as the environment they run against. An unstable test environment adversely impacts the accuracy, efficiency, and effectiveness of the test scripts.

Let us next see how a test automation strategy enables quality in an Agile landscape.

Implementing an Agile test automation strategy

Since the majority of organizations have adopted the Agile paradigm and it is now mainstream, a test automation strategy must address the Agile aspects of software development. Fundamental Agile principles must be ingrained in the team's activities, and they should be applied to the test automation strategy wherever possible. This might be a huge cultural change for a lot of testing teams. Here is a list of Agile principles to consider in a test automation strategy:

- Gain customer satisfaction through steady delivery of useful software

- Embrace changing requirements to provide a competitive advantage to end users

- Deliver working software frequently

- Constant collaboration between business and engineering teams

- Motivate individuals and trust them to get the job done

- Prefer face-to-face conversation over documentation

- Working software as a primary measure of progress

- Promote a continuous and sustainable pace of development indefinitely

- Continuous attention to technical excellence and superior design

- Keep things simple

- Each team is self-organized in its design, architecture, and requirements

- Continuous retrospection and improvements

A primary goal in the Agile journey is to enhance software quality with every iteration. A test automation strategy should treat automated builds and deployments as a prerequisite, because continuous improvement is enabled only when build automation is coupled with automated tests. This is how teams can maximize their velocity in the Agile landscape while maintaining high quality.

Another key aspect to zero in on is the ability to get feedback early and often. It helps tremendously to instill a test-first mentality in every member of the team. When engineers think through a feature, and which tests it should pass, before writing any code, the output is far more likely to be of high quality. This also keeps the team from accruing technical debt in terms of test automation.
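As a small illustration of the test-first mindset, an engineer might write the tests for a hypothetical apply_discount() function before the function exists, and then write just enough code to make them pass. The function name and its rules are assumptions made for this sketch.

```python
import unittest


# Step 1: tests written first, describing the expected behavior.
class ApplyDiscountTest(unittest.TestCase):
    def test_ten_percent_discount(self):
        self.assertEqual(apply_discount(200.0, 10), 180.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(99.99, 0), 99.99)

    def test_rejects_discount_above_100_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(50.0, 150)


# Step 2: the simplest implementation that makes the tests above pass.
def apply_discount(price, percent):
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (100 - percent) / 100, 2)
```

The tests run before the implementation is written would fail, which is the point: they define "done" for the feature, and they remain in the suite as regression tests afterward.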

Attentive, incremental investment in test automation should be the foundation of the strategy, and it goes a long way in tackling the frequent code changes in Agile development. Sprint retrospectives can be used to pause and reflect on areas where Agile principles are not being effectively applied to the test automation strategy.

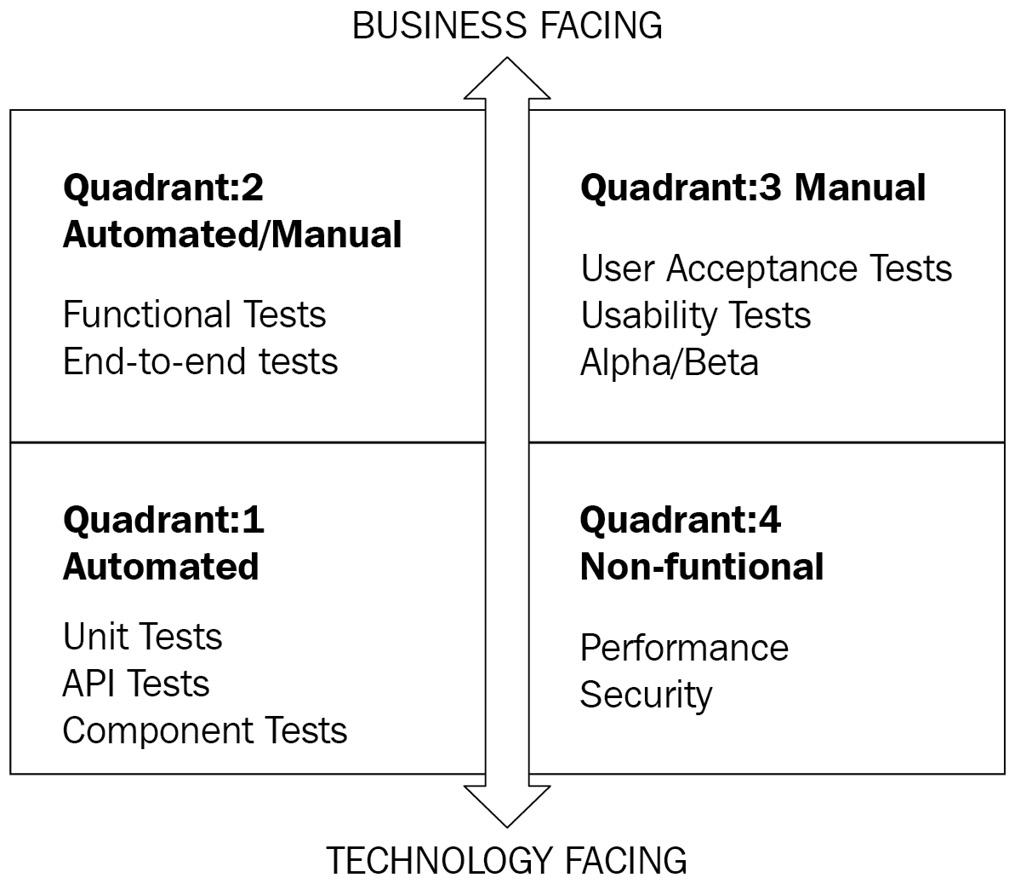

Agile testing quadrants provide a logical way to organize different kinds of tests based on whether they are business-facing or technology-facing. Let us quickly look at which types of tests are written in each of the quadrants, as follows:

- Quadrant 1: Highly technical tests written by the programmers, usually in the same programming language as the software application.

- Quadrant 2: Tests that verify business functions and use cases. These tests can be automated or manual and are used to confirm product behavior with the specifications.

- Quadrant 3: Manual tests that verify the user experience (UX) and functionality of the product through the eyes of a real user.

- Quadrant 4: Tool-intensive tests that verify non-functional aspects of the application such as performance and security.

Test automation strategy should also address the diverse needs for tools and processes in each of the Agile testing quadrants, outlined in the following diagram:

Figure 2.1 – Agile testing quadrants

Test automation strategy in an Agile project should promote team collaboration, thus acting as a strengthening force to achieve superior product quality. Next, let us look at some reporting guidelines to be incorporated into the test automation strategy.

Reporting the test results

There are usually thousands of tests that run as part of a pipeline in an enterprise setup, and this is a small number compared to some advanced, tech-savvy organizations that run millions of individual tests daily. Even a small percentage of failures translates to a massive debugging and maintenance effort, so a test automation strategy should incorporate effective grouping of test failures. A test can fail for several reasons, including, but not limited to, new bugs, flakiness of the test script, and test environment issues. Analyzing failures and producing a quick fix wherever necessary is central to maintaining a working product.

A test automation strategy should enable the labeling of test failures and open appropriate channels to communicate them. Integration with enterprise messaging software helps in reporting failures quickly and effectively. This is also an area ripe for machine learning (ML) usage in the quality engineering space.
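Before reaching for ML, a simple rule-based labeler can already group failures by likely cause. The following is a minimal sketch, assuming hypothetical regular-expression rules that match failure messages; the labels mirror the failure reasons listed earlier, and a real framework would tune the rules against its own failure history.

```python
import re

# Hypothetical rules: (label, pattern) pairs, checked in order.
FAILURE_RULES = [
    ("environment", re.compile(r"connection (refused|reset)|503 service unavailable", re.I)),
    ("flaky", re.compile(r"timeout|stale element|element not interactable", re.I)),
    ("product_bug", re.compile(r"assertionerror|expected .* but (got|was)", re.I)),
]


def label_failure(message):
    """Return the first matching label, or 'needs_triage' if no rule matches."""
    for label, pattern in FAILURE_RULES:
        if pattern.search(message):
            return label
    return "needs_triage"


def summarize_failures(failures):
    """Group (test_name, message) pairs by label, for example, before
    posting a summary to a team chat channel."""
    groups = {}
    for test_name, message in failures:
        groups.setdefault(label_failure(message), []).append(test_name)
    return groups
```

The ordering of the rules matters: environment problems are checked first so that, for instance, a connection error is not misreported as a product bug.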

There should also be a single portal where the test results can be filtered and consumed for providing visibility to interested stakeholders.

Having the right reporting in place for test automation provides a major boost to the productivity of the engineering team. Irrespective of the test automation framework and infrastructure, standardizing test results reporting is a must-have element in any test automation strategy.

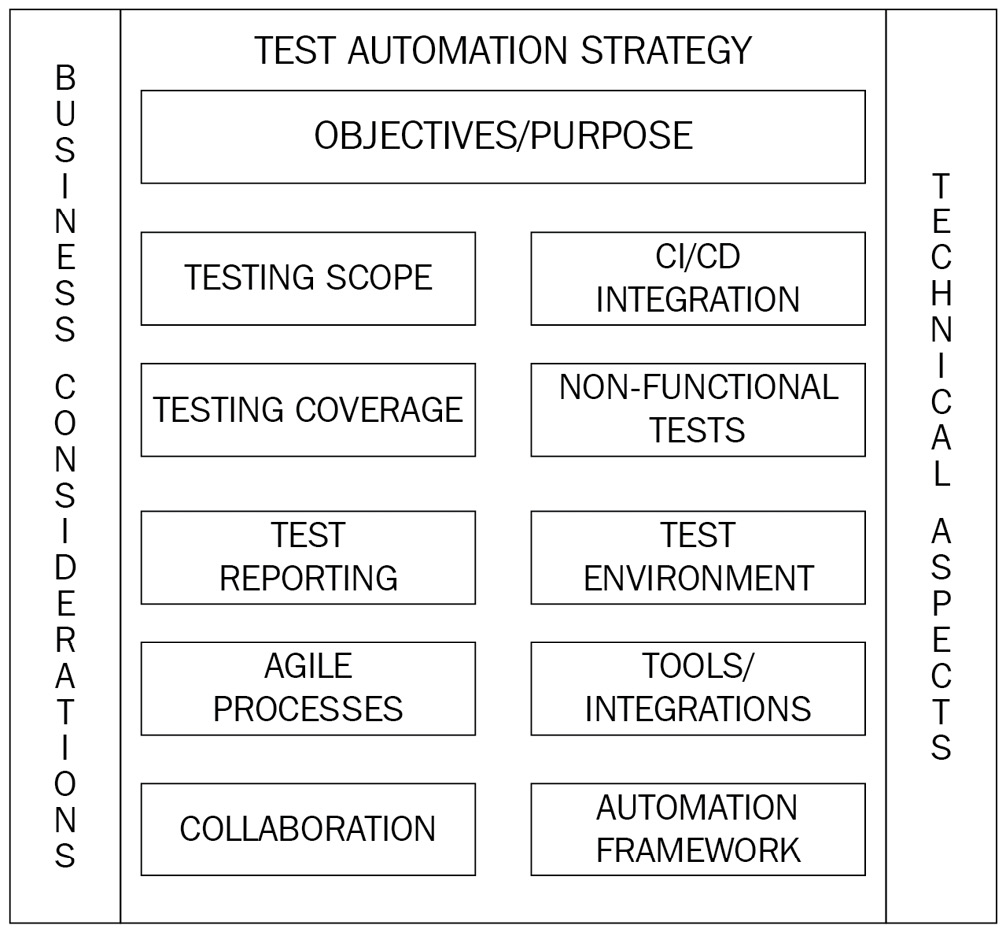

Have a look at the following diagram:

Figure 2.2 – Test automation strategy breakdown

Figure 2.2 provides a view of all the major constituents of a test automation strategy. A good test automation strategy would be a combination of these items based on the individual software application.

Next, let us look at some chief items to consider when devising a good automation strategy.

Devising a good test automation strategy

After defining the objectives for your test automation effort, it is vital to put all the pieces together and hit the ground running as soon as possible. The longer you wait to kick-start your test automation efforts, the more technical debt you accrue. In this section, let us dive deep into some practical tips for implementing and maintaining the key parts of the test strategy.

Selecting the right tools and training

A search for a valuable tool always begins with a deep understanding of the project requirements. The type of application and the distinct kinds of platforms to be tested are key starting points. It might help to do a cost-benefit analysis of developing an in-house tool from scratch versus buying a commercially licensed tool. Developing a tool from scratch takes a significant amount of time and requires combining multiple open source tools with in-house code modules. This hybrid approach can be more cost-effective than a commercially licensed tool, but it requires dedicated support from internal teams to keep up with changes to the external libraries.

For projects that do not have a lot of time upfront, buying a license from a vendor might be the only option on the table. Most test automation tools on the market now have some flavor of cloud offering that can be used to get your feet wet with a subset of users. Once the pilot is successful, the tool can be rolled out to a broader set of users. Even after buying a vendor tool, some additions and extensions might be needed to meet specific project needs. Look out for additional integrations the tool will have to support for tracking stories and defects.

Irrespective of the type of tool used, the key factor for success is the training that goes along with it. It is important to educate the test engineers and other users of the tool about its effectiveness and limitations, so that every user is equipped with the knowledge and skill set needed to use the tool efficiently. Always try to use the programming language that is most widely used among engineers and testers in the organization. Languages such as Python, Ruby, and JavaScript can be good starting points for novice testers with minimal programming experience. They have tremendous community support and span a wide variety of domains, including the Internet of Things (IoT) and ML. It is also important to consider how well the programming language integrates with the numerous tools in the testing ecosystem. Most of the time, a quality engineer is focused on quickly solving the problem at hand, and selecting the right programming language goes a long way toward that goal. In some cases, non-technical users may wish to use the test automation tool to run acceptance tests, so ease of use is critical to keep in mind.

A test automation tool is only a small part of the test automation strategy. High-priced tools do not guarantee success if other standards of the test automation framework are missing or below par. Next, let us consider the subject of a test automation framework and pay attention to the standards surrounding it.

Standards of the test automation framework

A test automation framework is a collection of tools and/or software components that enable the creation, execution, and reporting of tests. It should also come with guidelines and standards so that every engineer in the organization uses and builds on it the same way. It gives test engineers a systematic way to efficiently design test scripts, automate their execution, and report the test results. A well-built framework is also crucial to improving the speed and accuracy of test execution, thereby ensuring a high ROI on the underlying test automation strategy.

Here are some important test automation framework types to be aware of:

- Modular test automation framework: In this framework, the application logic and the utilities that help test the logic are broken down into modules of code. These modules can be built on incrementally to accommodate further code changes. An example would be an E2E test framework where each major application module has a corresponding test module. Changes to the validation logic happen only within the revised modules, limiting rework and increasing test efficiency.

- Library-based test automation framework: In a library-based framework, the application logic is written in separate methods, and these methods are grouped into libraries. It is an extremely reusable and portable test automation framework since libraries can be added as dependencies externally. Most test automation frameworks are library-based, and this relieves the engineers from writing redundant code.

- Keyword-driven test automation framework: The application logic being tested is extracted into a collection of keywords and stored in the framework. These keywords form the foundation of test scripts and are used to create and invoke tests for every execution. An example of this would be the Unified Functional Testing (UFT) tool, formerly known as QuickTest Professional (QTP). It has built-in support for extracting keywords and organizing libraries for each of them.

- Data-driven test automation framework: As the name suggests, data drives the tests in this framework. Reusable components of code are executed over a collection of test data, covering major parts of the application. A simple example of a data-driven framework would be reading a collection of data from a database or a comma-separated values (CSV) file and verifying the application logic on every iteration of the data. There is usually a need to integrate with a plugin or driver software to read the data from the source.

- Behavior-driven development (BDD) test framework: This is a business-focused Agile test framework where the tests are written in a business-friendly language such as Gherkin. Cucumber is one of the most popular BDD frameworks on the market.
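To make the data-driven type concrete, here is a minimal sketch in which one test body runs once per row of CSV data. The login() function and VALID_USERS dictionary are hypothetical stand-ins for the application under test, and the CSV is inlined so the example is self-contained; with a real file, you would pass open(path, newline="") to load_cases() instead.

```python
import csv
import io
import unittest

# Inlined test data; in practice this would live in a CSV file or database.
LOGIN_CASES_CSV = """username,password,expected
alice,correct-horse,success
alice,wrong-password,failure
,any-password,failure
"""

# Hypothetical system under test.
VALID_USERS = {"alice": "correct-horse"}


def login(username, password):
    return "success" if VALID_USERS.get(username) == password else "failure"


def load_cases(source):
    """Read test cases as dictionaries keyed by the CSV header row."""
    return list(csv.DictReader(source))


class LoginDataDrivenTest(unittest.TestCase):
    def test_login_cases(self):
        # The same validation logic runs for every row of data.
        for row in load_cases(io.StringIO(LOGIN_CASES_CSV)):
            with self.subTest(**row):
                self.assertEqual(login(row["username"], row["password"]), row["expected"])
```

Adding a new scenario then means adding a row of data rather than writing a new test, and subTest reports each failing row individually.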

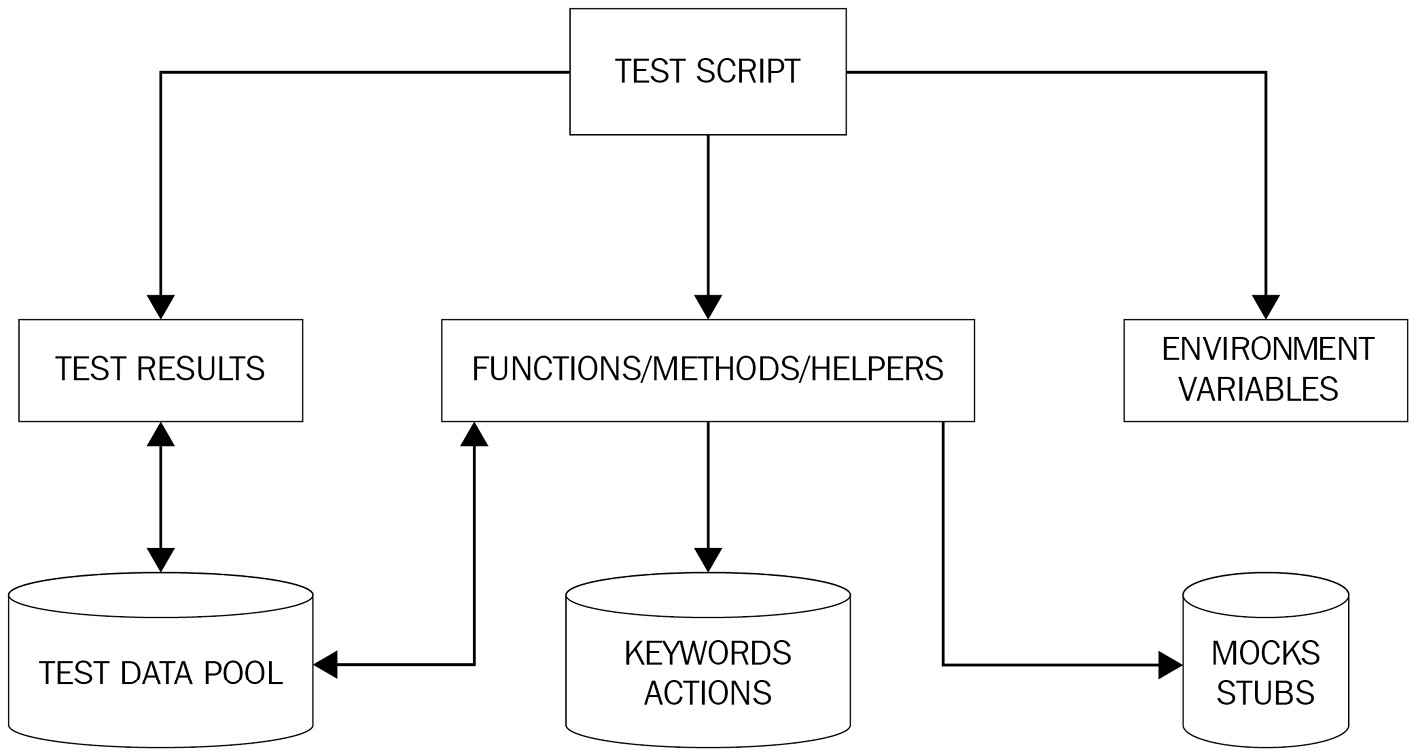

Have a look at the following diagram:

Figure 2.3 – Essential components of a test automation framework

Often, a real-world test automation framework is a combination of two or more of the types listed above. Whatever the combination, the essential components shown in Figure 2.3 apply: the test script acts as the primary driver, invoking helper methods to perform a variety of tasks. The test script also leverages the environment variables and is responsible for managing the test results.

Now that we know what a test automation framework is, let us next look at some key components of every test automation framework.

Managing test libraries

The principal component of an automation framework is its collection of libraries. An automation framework should empower the test engineers to extract the core application logic and store this as test libraries. For example, in a banking application, a loan creation method can be used for all types of testing (unit, integration, E2E). The test automation framework should create this method or inherit it from the source code and make it available for consumption.
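To make the banking example concrete, here is a minimal sketch of a shared test-library helper. The Loan type, the create_loan() function, and the simple-interest calculation are hypothetical; the point is that unit, integration, and E2E tests all call the same helper instead of each re-implementing loan setup.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass(frozen=True)
class Loan:
    principal: Decimal
    annual_rate: Decimal  # for example, Decimal("0.05") for 5%
    term_years: int

    def total_interest(self):
        # Simple (non-compounding) interest, used only for illustration.
        return self.principal * self.annual_rate * self.term_years


def create_loan(principal="10000", annual_rate="0.05", term_years=3):
    """Shared library helper: builds a valid loan with sensible defaults,
    so each test overrides only the fields it cares about."""
    loan = Loan(Decimal(principal), Decimal(annual_rate), term_years)
    if loan.principal <= 0 or loan.term_years <= 0:
        raise ValueError("principal and term must be positive")
    return loan


# A unit test and the data setup of an E2E test could both reuse the helper.
def test_interest_for_default_loan():
    assert create_loan().total_interest() == Decimal("1500.00")
```

Using Decimal rather than float keeps monetary arithmetic exact, which avoids spurious assertion failures caused by rounding.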

The test automation framework should also support the usage of third-party libraries for performing various tasks within the tests. Any third-party library used as a dependency within a framework should be carefully vetted for security and performance concerns. These libraries are often used as utilities that help test scripts perform certain recurring tasks.

Together, custom and third-party libraries form the building blocks of a strong test automation framework. Whatever form they take, they hold the essential application logic and the utilities that help validate it.

Next, let us look at how important it is to have a good platform for running tests.

Laying the platform for tests

A test automation framework primarily aims to maintain consistency across test scripts. When a team in the organization creates test scripts, they should be available for reuse by any team in the future. The framework should promote this kind of reuse, which in turn enables consistency in the way test scripts are created. It should also provide foundational utilities for common actions performed on the software application, such as downloading a Portable Document Format (PDF) file, sending an email, and so on.

The test automation framework should also assist engineers in writing tests for components that are otherwise hard to validate. For example, there could be wrapper code written as part of the test automation framework that could be used to call a legacy system as part of an integration test. Another use case would be to support the mocking of external vendor calls within the framework as part of E2E tests.
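Here is a minimal sketch of mocking an external vendor call with Python's built-in unittest.mock. The CreditBureauClient class and approve_loan() function are hypothetical; create_autospec ensures the mock matches the real client's method signatures, so a test fails if the code calls the vendor API incorrectly.

```python
import unittest
from unittest import mock


class CreditBureauClient:
    """Hypothetical client for an external vendor API."""

    def get_score(self, customer_id):
        raise RuntimeError("Real network calls are not allowed in this example")


def approve_loan(customer_id, client):
    """Application logic under test: approve when the vendor score is high enough."""
    return client.get_score(customer_id) >= 700


class ApproveLoanTest(unittest.TestCase):
    def make_client(self, score):
        # Replace the vendor call with a canned response.
        client = mock.create_autospec(CreditBureauClient, instance=True)
        client.get_score.return_value = score
        return client

    def test_approves_high_score(self):
        client = self.make_client(750)
        self.assertTrue(approve_loan("cust-1", client))
        client.get_score.assert_called_once_with("cust-1")

    def test_rejects_low_score(self):
        self.assertFalse(approve_loan("cust-2", self.make_client(600)))
```

Because the client is passed in rather than created inside approve_loan(), the same code path runs against the real vendor in production and against the mock in tests.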

Test automation should also support bug detection and analysis with minimal human intervention. It is vital to put concise logging mechanisms in place within the framework for swift debugging of failures. Integrations with tools such as JIRA and Bamboo can be explored to report bugs and issues uniformly and automatically.

It is the responsibility of the test engineers and the software development engineers in test (SDETs) to work with the infrastructure team to ensure tests are created and run in the most efficient way within the limitations of a test environment. Test automation will be cost-prohibitive if none of the sub-systems is stable or testable. Test engineers always need to be on the lookout for environment-related errors to establish an uninterrupted automated testing process.

Building a solid test automation framework is only the beginning of the quality journey. There is constant effort involved in maintaining and keeping the framework updated. Let us look at some key factors in maintaining the framework.

Maintaining the framework

One of the chief goals of a test automation framework is to ensure continuous synchronization between the different tools and libraries used. It helps to enable automatic updates of various third-party libraries used in the framework as part of the continuous integration (CI) pipeline to circumvent manual intervention. Any test tool utilized within the framework should constantly be evaluated for enhancements and security vulnerabilities. Documenting the best practices around handling the framework helps newly onboarded engineers to hit the ground running in less time. Having dedicated SDETs acting as a liaison with tool vendors and users adds stability to the overall test automation process.

It goes without saying that test scripts must be kept up to date with code changes. SDETs need to stay on top of code changes that render the automation framework inoperable, while test engineers should continuously look out for code changes that break the test scripts. Test engineers should efficiently balance their time between adding new tests and updating existing ones to keep the CI pipeline functional. Test automation framework maintenance takes time and sometimes involves significant code changes, so it is important to plan and allocate time upfront for the maintenance and enhancement of the framework. Investing more time in designing and optimizing the framework is crucial, as it saves maintenance time down the road.

Next, let us review key factors in handling test data as part of the test automation framework.

Sanitizing test data

Acquiring test data and cleaning it up for sustainable use in testing is a key task of an automation framework. A lot of cross-team collaboration must happen to build a repository of high-quality test data. Test engineers and SDETs often work with product teams and data analysts to map test scenarios to datasets that might already be available. Additional queries will have to be run against production databases to cover any gaps identified in this process. Test data acquired this way is static, and it should be masked to eliminate any personally identifiable information (PII) before it is used within the test automation framework.
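The following is a minimal sketch of masking PII in records copied from production. The column names are hypothetical, and the sketch uses a salted SHA-256 hash so the same input always maps to the same masked value, which keeps relationships between tables intact. Note that deterministic hashing is pseudonymization rather than full anonymization; your organization's data-privacy rules decide which technique is acceptable.

```python
import hashlib

# Hypothetical columns that contain PII.
PII_FIELDS = {"name", "email", "phone"}


def mask_value(value, salt):
    """Replace a value with a deterministic, non-reversible token."""
    digest = hashlib.sha256((salt + str(value)).encode("utf-8")).hexdigest()
    return digest[:12]


def mask_record(record, salt="test-env-salt"):
    # In practice, the salt should be kept secret and out of source control.
    masked = {}
    for key, value in record.items():
        if key == "email":
            # Keep a valid email shape so UI and API validation still pass.
            masked[key] = f"user_{mask_value(value, salt)}@example.com"
        elif key in PII_FIELDS:
            masked[key] = mask_value(value, salt)
        else:
            masked[key] = value
    return masked
```

Non-PII fields such as identifiers and balances pass through unchanged, so the masked data still exercises the same business logic as the original.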

A plethora of data-generating libraries is also available for integration with the framework. These libraries can handle anything from populating simple spreadsheets to generating unstructured data based on business logic. Often, the complexity of software applications calls for custom code to extract, generate, and store test data. Every automation framework is unique and requires a combination of static and dynamic data generation techniques. It is good practice to isolate these test data generation libraries for portability and reusability across the organization.

Always try to focus on the quality of test data over quantity. The framework should also include utilities for cleaning up the test data after every test execution to preserve the state of the application. There should be common methods set up to handle test data creation and deletion as part of every test automation framework.
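Here is a minimal sketch of such common creation and deletion methods, using the addCleanup() hook from Python's unittest. FakeCustomerApi is a hypothetical in-memory stand-in for the application's data API; in a real framework, create_customer() and delete_customer() would call the application.

```python
import itertools
import unittest


class FakeCustomerApi:
    """Hypothetical stand-in for the application's data API."""

    def __init__(self):
        self.customers = {}
        self._ids = itertools.count(1)

    def create_customer(self, name):
        customer_id = next(self._ids)
        self.customers[customer_id] = {"id": customer_id, "name": name}
        return customer_id

    def delete_customer(self, customer_id):
        self.customers.pop(customer_id, None)


API = FakeCustomerApi()


class TestDataMixin:
    """Common helpers: every record a test creates is deleted afterward."""

    def create_customer(self, name="Test Customer"):
        customer_id = API.create_customer(name)
        # Cleanups run after the test even if it fails, in reverse order of registration.
        self.addCleanup(API.delete_customer, customer_id)
        return customer_id


class CustomerTest(TestDataMixin, unittest.TestCase):
    def test_customer_is_created(self):
        customer_id = self.create_customer("Ada")
        self.assertEqual(API.customers[customer_id]["name"], "Ada")
```

Registering the cleanup at the moment the data is created means no test can forget to remove what it created, which preserves the application state for the next test.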

Test data management is a separate domain by itself, and we are just scratching the surface. Let us quickly look at some additional factors in handling and maintaining sanity around test data, as follows:

- Maintaining data integrity throughout its usage in test scripts is essential to the reliability of tests. The automation framework should offer options to clean and reuse test data when and where necessary.

- Test data reserved for automation should be guarded against use outside of automation at all costs. Maintaining clear communication about which data is being used for test automation keeps the automated tests cleaner.

- Performing data backups of baseline data used for automated testing and using them in the future will help immensely in recreating data across test environments.

- Using data stubs/mocks when dealing with external vendor data or for internal application programming interfaces (APIs) that may not have high availability (HA). Typical responses can be saved and replayed by the stubs/mocks wherever necessary in the framework.

- Employing data generators to introduce randomness and to avoid using real-time data within the tests. There are numerous generators available for commonly used data such as addresses and PII.

- Considering all the integration points within the application when implementing utilities that create underlying test data.

- Including user interface (UI) limitations when designing test data for E2E tests. This is especially useful when using API calls to set up data for UI tests as there might be incompatibilities between how data is handled by different layers of the application stack.
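The stub/mock idea from the list above can be sketched as a small record-and-replay helper: when the real vendor API is unavailable or too slow, the test reads a previously saved response instead. The `ReplayStub` class, the one-JSON-file-per-endpoint layout, and the `credit_score` endpoint name are assumptions for illustration; tools such as WireMock or Python's `unittest.mock` are common real-world choices.

```python
import json
import tempfile
from pathlib import Path


class ReplayStub:
    """Serves saved responses keyed by endpoint instead of calling a vendor API."""

    def __init__(self, recordings_dir):
        self.recordings_dir = Path(recordings_dir)

    def record(self, endpoint, response):
        # Save a typical response once, e.g. captured from a real call
        path = self.recordings_dir / f"{endpoint}.json"
        path.write_text(json.dumps(response))

    def get(self, endpoint):
        # Replay the saved response; fail loudly if nothing was recorded
        path = self.recordings_dir / f"{endpoint}.json"
        if not path.exists():
            raise KeyError(f"No recorded response for '{endpoint}'")
        return json.loads(path.read_text())


with tempfile.TemporaryDirectory() as tmp:
    stub = ReplayStub(tmp)
    stub.record("credit_score", {"customer_id": 7, "score": 710})
    print(stub.get("credit_score"))
```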

The aspects of the test automation framework that we have reviewed so far are mostly agnostic to the hosting environment (that is, on-premises or cloud), but there are certain distinct benefits to embracing the cloud in the overall test automation strategy. Let us look at them in the next section.

Testing in the cloud

Tapping into the advantages of a cloud-based environment is an indispensable aspect of any test automation strategy in the current software engineering landscape. A well-executed cloud-based testing strategy delivers the dual advantage of faster time-to-market (TTM) and a much lower cost of ownership. The biggest advantage of a cloud-based environment is the ability to access resources anytime and anywhere. Geographically dispersed teams can scale test environments up and down for their testing needs at will.

Imagine a software engineering team working on developing an application that must be supported across all devices and browsers. Setting up and maintaining test environments that support all these configurations can be both tedious and expensive. Cloud-based tools can help overcome this drawback by providing preconfigured environments and enabling parallel testing across these platforms. These environments can also be scaled up based on usage, which eliminates the need to purchase and maintain the necessary hardware. Cloud-based tools also provide the on-demand capacity needed for non-functional tests such as load and performance tests.
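To illustrate the parallel execution that cloud grids enable, the sketch below fans one suite out across a browser/platform matrix with `concurrent.futures`. The `run_suite` function is a hypothetical placeholder: in practice it would open a remote session on the cloud provider's grid (for example, through Selenium's `webdriver.Remote`) using that provider's endpoint and capabilities, none of which are shown here. The browser and platform lists are also assumptions.

```python
from concurrent.futures import ThreadPoolExecutor
from itertools import product

BROWSERS = ["chrome", "firefox", "edge"]
PLATFORMS = ["Windows 11", "macOS 14"]


def run_suite(browser, platform):
    """Hypothetical placeholder for running the suite on one remote configuration."""
    # A real implementation would create a remote WebDriver session here,
    # run the tests, and report the outcome
    return {"browser": browser, "platform": platform, "passed": True}


def run_matrix(max_workers=6):
    configs = list(product(BROWSERS, PLATFORMS))
    # Each configuration runs concurrently; the cloud provider supplies the machines
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        return list(pool.map(lambda cfg: run_suite(*cfg), configs))


for result in run_matrix():
    print(result)
```

Because the configurations are independent, total wall-clock time approaches that of the slowest configuration rather than the sum of all of them, provided the grid has enough capacity.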

Cloud-based environments are foundational pillars of DevOps (development and operations). With quality built into DevOps processes, test engineers can spin up test environments and integrate them with several tools as part of their automation framework. With enhanced scalability and flexibility, a cloud-based test automation strategy addresses major testing challenges and reduces time-to-market.

With a good grasp of the various aspects of the test automation framework, let us next dive into understanding the test pyramid.

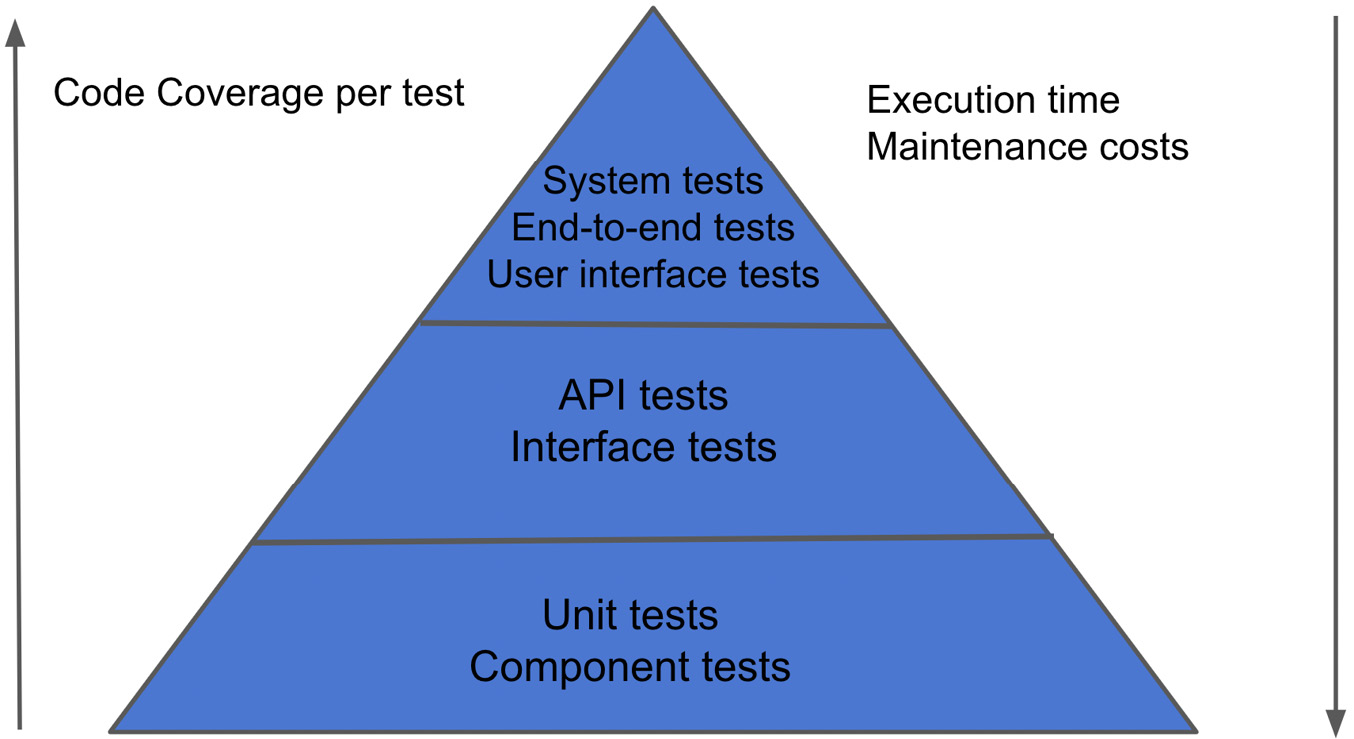

Understanding the test pyramid

The test pyramid is a diagrammatic representation of the distribution of different kinds of tests. It acts as a guideline when planning for the test coverage of a software product. It also helps to keep in mind the scope of each test so that it remains within the designed level, thereby increasing the portability and stability of the test. The following diagram summarizes the test automation pyramid:

Figure 2.4 – Test automation pyramid

Let us look at each layer of the pyramid in a little more detail.

Unit/component tests

These are tests defined at the lowest level of the system. They are written by the engineer developing the feature, and the involvement of test engineers in these tests is minimal (and sometimes zero). They form a healthy collection of tests, providing excellent coverage for all the code components. Frameworks used to put these tests in place include the xUnit family (such as JUnit and NUnit), RSpec, and more. They are completely automated and should ideally run on every commit to the code repository. External and database calls are stubbed in this layer, which contributes to the speed of these tests. Since they are the quickest and least expensive to write, they offer the biggest ROI of all types of tests. They also enable swift feedback due to their high frequency of execution.
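The following is a minimal sketch of a unit test in Python's built-in `unittest` framework (a member of the xUnit family), with the database call stubbed out using `unittest.mock.Mock`. The `LoanService` class, its repository, and the 30% business rule are hypothetical; the point is that the test never touches a real database, which is what keeps this layer fast.

```python
import unittest
from unittest.mock import Mock


class LoanService:
    """Hypothetical unit under test; its repository normally queries a database."""

    def __init__(self, repository):
        self.repository = repository

    def max_loan_amount(self, customer_id):
        income = self.repository.get_annual_income(customer_id)
        # Hypothetical business rule: customers may borrow up to 30% of annual income
        return round(income * 0.3)


class LoanServiceTest(unittest.TestCase):
    def test_max_loan_is_thirty_percent_of_income(self):
        repository = Mock()  # stub replaces the database call
        repository.get_annual_income.return_value = 50_000
        service = LoanService(repository)

        self.assertEqual(service.max_loan_amount(customer_id=1), 15_000)
        repository.get_annual_income.assert_called_once_with(1)
```

Saved as a module, this test can be run with `python -m unittest`, and it is cheap enough to run on every commit.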

Certain non-functional tests can also be included as component tests in this layer, with additional setup and partnership with the infrastructure team. The test automation strategy should also include techniques to quarantine flaky unit tests. This is usually the bulkiest layer of the pyramid, and any application logic that can be tested at this level should be covered by unit/component tests.

The main drawback of unit tests is that they fall short in identifying bugs and errors resulting from the communication of multiple code modules.

Next, let us look at how integration/API tests help with validating communication that extends beyond a module of code.

Integration/API tests

The second layer in the pyramid is where the majority of the application logic is validated. Tests in this layer can be tied directly back to a feature or a combination of features. Ideally, these tests should be executed before merging the code into the master branch. If that is not possible, they should be run as soon as the code change is deployed to a testing environment. Since these tests make frequent database calls and other external calls (some of them mocked), their execution time is generally higher than that of unit tests.

This layer is also apt for verifying API contracts and how APIs communicate with each other. After analyzing production data and setting up inputs for these APIs, the output can be compared to the expected behavior. Care must be taken to exclude any UI-based tests from this layer. While there is a compromise on the speed of execution, these tests tend to offer higher coverage as they span more lines of code in a single test.
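Verifying an API contract can be as simple as checking that a response carries the agreed fields with the agreed types. The sketch below assumes the response body has already been parsed from JSON into a dictionary; the `LOAN_QUOTE_CONTRACT` fields and the `contract_violations` helper are hypothetical. Teams often use JSON Schema or consumer-driven contract tools such as Pact for the same job.

```python
# Hypothetical contract for a loan-quote endpoint: field name -> expected type
LOAN_QUOTE_CONTRACT = {
    "quote_id": str,
    "loan_type": str,
    "amount": int,
    "interest_rate": float,
}


def contract_violations(payload, contract):
    """Return a list of human-readable contract violations (empty means compliant)."""
    problems = []
    for field, expected_type in contract.items():
        if field not in payload:
            problems.append(f"missing field '{field}'")
        elif not isinstance(payload[field], expected_type):
            actual = type(payload[field]).__name__
            problems.append(
                f"'{field}' should be {expected_type.__name__}, got {actual}")
    return problems


response_body = {"quote_id": "Q-1", "loan_type": "personal", "amount": "5000"}
for problem in contract_violations(response_body, LOAN_QUOTE_CONTRACT):
    print(problem)
```

Reporting every violation at once, rather than stopping at the first, gives the API owner the full picture from a single failed run.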

Integration/API tests sometimes can be time-consuming and resource-intensive when the link between every API and module must be verified. This is where system tests come in handy. Let us examine and understand the top layer of the pyramid next, comprising system tests.

E2E/System/UI tests

These are tests that exercise the software application through its UI or a series of API calls to validate a business flow. These require a test environment with all the dependencies, associated databases, and mocked vendor calls. These tests are not run as frequently as unit and API tests due to their extensive runtimes. The main goal of these tests is to give product and engineering teams the confidence to deploy the code to production. Since most of the bugs should already be found by the lower-level tests, the frequency of execution of these tests can be adjusted to synchronize with the production release cycles.

These tests also act as acceptance tests for business teams or even the client in some projects. An example would be Selenium tests, which simulate how a real user performs actions on the application. They verify all aspects of the application as a real user would. Controls must be in place to avoid using real user data from production.

Next, let us examine how to structure the different types of tests for optimal results.

Structuring the test cycles

There are certain standards to keep in mind when structuring these test cycles, as outlined here:

- Automate as many test cases as you need, but no more, and automate them at the lowest level that you can.

- Always try to limit the scope of each test case to one test condition or one business rule. Although this may be difficult in some cases, it goes a long way in keeping your test suite concise and makes debugging much easier.

- It is essential to have clarity on the purpose of each test and avoid dependencies between tests because they quickly increase complexity and maintenance expenses.

- Plan test execution intervals based on test types to maximize bug detection and minimize execution times of the CI pipeline.

- Identify and implement mechanisms to catch and quarantine flaky tests as part of every test execution.
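A common mechanism for catching flaky tests is to rerun failures: a test that fails and then passes on an unchanged build is flagged as flaky and quarantined rather than failing the pipeline. The sketch below assumes each test is a zero-argument callable that raises `AssertionError` on failure; the `classify` and `run_with_quarantine` helpers are hypothetical, and plugins such as pytest-rerunfailures provide similar rerun behavior.

```python
def classify(test, reruns=2):
    """Run a test, rerunning on failure; return 'passed', 'flaky', or 'failed'."""
    for attempt in range(reruns + 1):
        try:
            test()
        except AssertionError:
            continue  # failed this attempt; try again
        # Passed: it is flaky if an earlier attempt had failed
        return "passed" if attempt == 0 else "flaky"
    return "failed"


def run_with_quarantine(tests, quarantine):
    """Run tests by name; flaky ones are added to the quarantine set."""
    results = {}
    for name, test in tests.items():
        if name in quarantine:
            results[name] = "quarantined"  # skipped until someone fixes it
            continue
        results[name] = classify(test)
        if results[name] == "flaky":
            quarantine.add(name)
    return results


attempts = {"count": 0}


def sometimes_fails():
    # Simulated flakiness: fails on the first call only
    attempts["count"] += 1
    assert attempts["count"] > 1


def stable_check():
    assert 1 + 1 == 2


quarantine = set()
print(run_with_quarantine({"stable": stable_check, "login": sometimes_fails}, quarantine))
print(quarantine)
```

Quarantined tests keep the pipeline green, but the quarantine set should be reviewed regularly so that it does not silently grow into a backlog of untested behavior.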

We saw in detail how the test pyramid assists in strategizing test automation. Let us next dive into some common design patterns used in test automation.

Familiarizing ourselves with common design patterns

Design patterns can be viewed as solution templates for addressing commonly occurring issues in software design. Once the underlying software design problem is analyzed and understood, the appropriate design pattern can be applied to it. Design patterns also help create a shared design language for the software development community. Test automation projects tend to be started in an isolated manner and later scrapped due to scalability issues. In the next section, let us try to understand how design patterns help test automation achieve its goals.

Using design patterns in test automation

Test automation is as involved as any other coding undertaking and has its own set of design challenges. Fortunately, several established approaches can be applied to solve common design challenges in test automation. Test engineers and SDETs should always be on the lookout for opportunities to optimize test scripts by employing the best design practices. Some common advantages of using design patterns in test automation include the following:

- Helps with structuring code consistently

- Improves code collaboration

- Promotes reusability of code

- Saves time and effort in addressing common test automation design challenges

- Reduces code maintenance costs

Some common design patterns employed in test automation are noted here:

- Page Object Model (POM)

- The factories pattern

- Business layer pattern

Let us now look at each of them in detail.

POM

Object repositories, in general, help keep the objects used in a test script in a central location rather than having them spread across the tests. POM is one of the most used design patterns in test automation; it aids in minimizing duplicate code and makes code maintenance easier. A page object is a class defined to hold elements and methods related to a page on the UI, and this object can be instantiated within the test script. The test can then use these elements and methods to interact with the elements on the page.

Let us imagine a simple web page that serves as an application for various kinds of loans (such as personal loans, quick money loans, and so on). There may be multiple business flows associated with this single web page, and these can be set up as different test cases with distinct outcomes. The test script would be accessing the same UI elements for these flows except when selecting the type of loan to apply for. POM would be a useful design pattern here as the UI elements can be declared within the page object class and utilized in each of the different tests running the business flows. Whenever there is an addition or change to the elements on the UI, the page object class is the only place to be updated.

The following code snippets illustrate the creation of a simple page object class and how the test_search_title test uses common elements on the UI from the Home_Page_Object page object class to perform its actions. The first snippet defines a factory for creating the browser driver:

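```python
import selenium.webdriver as webdriver
from selenium.webdriver.common.by import By  # used by the page object class


class WebDriverManagerFactory:
    """Factory that hides how each browser's WebDriver is created."""

    @staticmethod
    def getWebdriverForBrowser(browserName):
        # Return a new driver instance for the requested browser
        if browserName == 'firefox':
            return webdriver.Firefox()
        elif browserName == 'chrome':
            return webdriver.Chrome()
        elif browserName == 'edge':
            return webdriver.Edge()
        else:
            # Fail fast instead of returning a sentinel string
            raise ValueError(f"No matching browser found for '{browserName}'")
```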

The WebDriverManagerFactory class in the preceding snippet contains a method to select the driver corresponding to the browser being used. The page object class is defined next:

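```python
class Home_Page_Object:
    """Page object holding locators and actions for the Packt home page."""

    def __init__(self, driver):
        self.driver = driver

    def load_home_page(self):
        self.driver.get("https://www.packtpub.com/")
        return self

    def load_page(self, url):
        self.driver.get(url)
        return self

    def search_for_title(self, search_text):
        # Type the search text, then submit it with the search button
        self.driver.find_element(By.ID, 'search').send_keys(search_text)
        search_button = self.driver.find_element(
            By.XPATH, '//button[@class="action search"]')
        search_button.click()
        return self

    def tear_down(self):
        # quit() ends the WebDriver session; close() only closes the current window
        self.driver.quit()
```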

The POM paradigm usually has a base class that contains methods for identifying various elements on the page and the actions to be performed on them. The Home_Page_Object class in the preceding snippet has methods to set up the driver, load the home page, search for titles, and tear down the driver. The following test script ties the factory and the page object together:

```python
class Test_Search_Choose_Title:

    def test_search_title(self):
        # The factory hides browser-specific driver setup from the test
        driver = WebDriverManagerFactory.getWebdriverForBrowser("chrome")
        pageObject = Home_Page_Object(driver)
        try:
            pageObject.load_home_page()
            pageObject.search_for_title('quality')
        finally:
            # Always release the browser, even if a step fails
            pageObject.tear_down()


def main():
    test_executor = Test_Search_Choose_Title()
    test_executor.test_search_title()


if __name__ == "__main__":
    main()
```

The test_search_title method sets up the Chrome driver and the page object to search for the quality string.

We will look at setting up and using POM in further detail in Chapter 5, Test Automation for Web.

Now, let us investigate how the factory design pattern is helpful in test automation.

The factories pattern

The factory pattern is one of the most used design patterns in test automation and aids in creating and managing test data. The creation and maintenance of test data within a test automation framework could easily get messy, and this approach provides a clean way to create the required objects in the test script, thereby decoupling the specifics of object creation from the tests that use those objects. Separating the data logic from the test also helps test engineers keep the code clean, maintainable, and easier to read. This is often achieved in test automation by using pre-built libraries and instantiating objects from classes exposed by the libraries. Test engineers can use the resulting object in their scripts without the need to modify any of the underlying implementations.

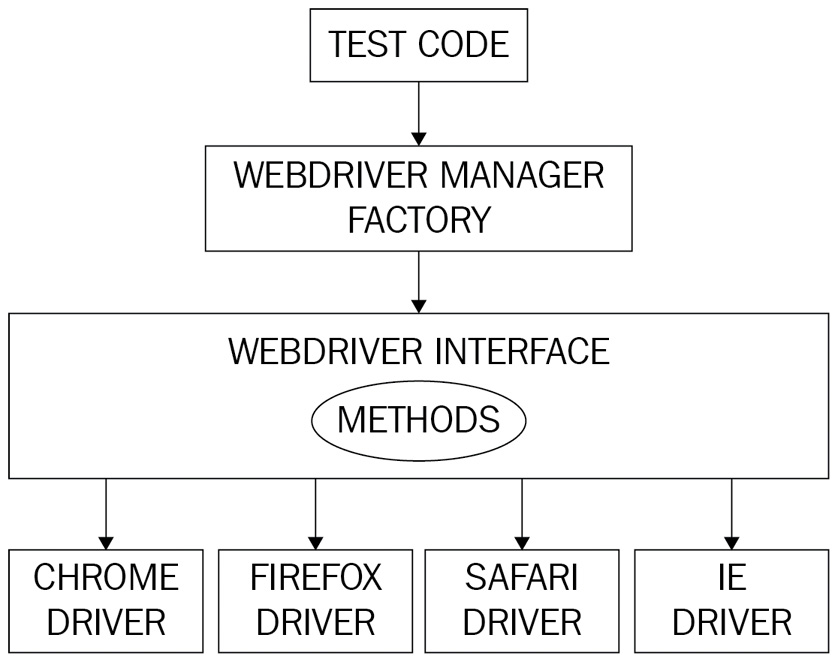

A classic example of a factory design pattern in test automation would be how Selenium WebDriver gets initialized and passed around in a test. Selenium WebDriver is a framework that enables the execution of cross-browser tests.

The following diagram breaks down how a piece of test script can exercise Selenium WebDriver to make cross-browser calls:

Figure 2.5 – Selenium WebDriver architecture

Figure 2.5 shows how the test code utilizes a factory method to initialize and use the web drivers.

Please refer to the code snippet in the previous section for a simple implementation of the factory pattern. Here, the WebDriverManagerFactory class returns an instance of the web driver for the requested browser. The Test_Search_Choose_Title class can use the factory method to open a Chrome browser and perform additional validations. Any changes to how drivers are created are hidden from the test script. If Selenium WebDriver supports additional browsers in the future, only the factory method needs to be updated to return the corresponding driver.
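The same pattern applies directly to test data, as described at the start of this section. A minimal sketch follows, assuming a simple `LoanApplication` record for the loan web page discussed earlier: the factory supplies valid defaults, and each test overrides only the fields it cares about, so changes to the record's shape are absorbed in one place. The class and factory names are hypothetical; libraries such as factory_boy implement this idea more completely.

```python
import itertools
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class LoanApplication:
    application_id: int
    applicant_email: str
    loan_type: str
    amount: int


class LoanApplicationFactory:
    """Builds valid LoanApplication objects; tests override only what they need."""

    _ids = itertools.count(1)  # unique IDs across all objects built in a run

    @classmethod
    def build(cls, **overrides):
        app_id = next(cls._ids)
        defaults = LoanApplication(
            application_id=app_id,
            applicant_email=f"applicant{app_id}@test.example",
            loan_type="personal",
            amount=10_000,
        )
        # dataclasses.replace returns a copy with the given fields changed
        return replace(defaults, **overrides)


personal = LoanApplicationFactory.build()
quick = LoanApplicationFactory.build(loan_type="quick_money", amount=500)
print(personal)
print(quick)
```

If a new mandatory field is later added to `LoanApplication`, only the factory's defaults need updating; existing tests keep working unchanged.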

Business layer pattern

This is an architectural design pattern where the test code is designed to handle each layer of the application stack separately. The libraries or modules that serve the test script are intentionally broken down into UI, business logic/API, and data handling. This kind of design is immensely helpful when writing E2E tests where there is a constant need to interface with the full stack. For example, the steps involved may be to start by seeding the database with the prerequisite data, make a series of API calls to execute business flows, and finally validate the UI for correctness. It is critical here to keep the layers separate, as this prevents a code maintenance nightmare. Since each of the layers is abstracted, this design pattern promotes reusability. All the business logic is exercised in the API layer, and the UI layer is kept light to enhance the stability of the framework.
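The layering described above can be sketched as three small helper classes, one per layer, composed by a single E2E test. Everything here is hypothetical and in-memory so that the structure is visible without a real application: in a real framework, `DataLayer` would seed a database, `ApiLayer` would issue HTTP requests, and `UiLayer` would wrap page objects such as Home_Page_Object.

```python
class DataLayer:
    """Seeds and reads prerequisite data (stands in for database access)."""

    def __init__(self):
        self.accounts = {}

    def seed_account(self, account_id, balance):
        self.accounts[account_id] = {"balance": balance, "loans": []}


class ApiLayer:
    """Executes business flows (stands in for API calls)."""

    def __init__(self, data):
        self.data = data

    def apply_for_loan(self, account_id, amount):
        account = self.data.accounts[account_id]
        account["loans"].append(amount)
        return {"status": "approved", "amount": amount}


class UiLayer:
    """Kept deliberately thin: only reads what the user would see."""

    def __init__(self, data):
        self.data = data

    def dashboard_loan_count(self, account_id):
        # Stands in for reading the loan count from the rendered dashboard
        return len(self.data.accounts[account_id]["loans"])


def test_loan_appears_on_dashboard():
    data = DataLayer()
    api = ApiLayer(data)
    ui = UiLayer(data)

    data.seed_account("acct-1", balance=2_000)      # 1. seed prerequisite data
    result = api.apply_for_loan("acct-1", 5_000)    # 2. run the business flow via API
    assert result["status"] == "approved"
    assert ui.dashboard_loan_count("acct-1") == 1   # 3. validate the UI


test_loan_appears_on_dashboard()
print("E2E flow passed")
```

Because each layer sits behind its own class, a change to the database schema or to an API endpoint is fixed in one helper rather than in every E2E test that touches it.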

Design patterns play a key role in improving the overall test automation process and they should be applied after thoroughly understanding the underlying problem. We need to be wary of the fact that if applied incorrectly, these design patterns could lead to unnecessary complications.

Summary

In this chapter, we dealt primarily with how to come up with a sound test automation strategy. First, we saw some chief objectives that need to be defined to get started with the test automation effort, then we reviewed key aspects in devising a good test automation strategy. We also looked at how the test pyramid helps in formulating a test strategy by breaking down the types of tests. Finally, we surveyed how design patterns can be useful in test automation and learned about some common design patterns.

Now that we have gained solid ground on test automation strategy, in the next chapter, let us look at some useful command-line tools and analyze in detail certain commonly used test automation frameworks and the considerations around them.

Questions

Take a look at the following questions to test your knowledge of the concepts learned in this chapter:

- Why is a test automation strategy important for a software project?

- What are the main objectives for test automation?

- How does the test pyramid help test automation strategy?

- How do cloud-based tools help in accelerating test automation efforts?

- What are the advantages of using design patterns in test automation?