Chapter 1: Getting Started with Azure Sentinel

Welcome to the first chapter in this book about Azure Sentinel. To understand why this solution was developed, and how best to use it in your organization, we need to explore the cloud security landscape and understand each of the components that may feed data into or extract insights out of this system. We also need to gain a baseline understanding of what a strong Security Operations Center (SOC) architecture looks like, and how Azure Sentinel is going to help to build the foundations for a cost-effective and highly automated cloud security platform.

In this chapter, we will cover the following topics:

- The current cloud security landscape

- Cloud security reference framework

- SOC platform components

- Mapping the SOC architecture

- Security solution integrations

- Cloud platform integrations

- Private infrastructure integrations

- Service pricing for Azure Sentinel

- Scenario mapping

The current cloud security landscape

To understand your security architecture requirements, you must first ensure you have a solid understanding of the IT environment that you are trying to protect. Before deploying any new security solutions, there is a need to map out the solutions that are currently deployed and how they protect each area of the IT environment. The following list provides the major components of any modern IT environment:

- Identity for authentication and authorization of access to systems.

- Networks to gain access to internal resources and the internet.

- Storage and compute in the data center for internal applications and sensitive information.

- End user devices and the applications they use to interact with the data.

- And in some environments, you can include Industrial Control Systems (ICS) and the Internet of Things (IoT).

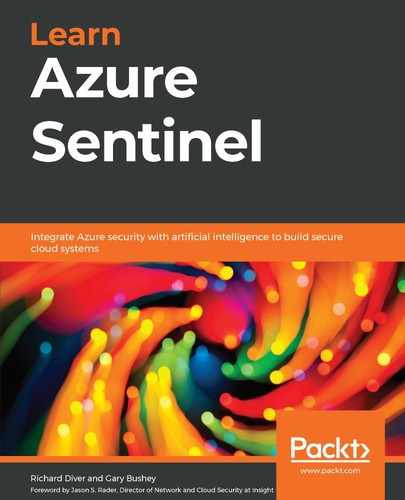

When we start to look at the threats and vulnerabilities for these components, we quickly find ourselves deep in the alphabet soup of problems and solutions:

Figure 1.1 – The alphabet soup of cyber security

This is by no means an exhaustive list of the potential acronyms available. Understanding these acronyms is the first hurdle; matching them to the appropriate solutions and ensuring they are well deployed is another challenge altogether (a table of these acronyms can be found in the appendix of this book).

Cloud security reference framework

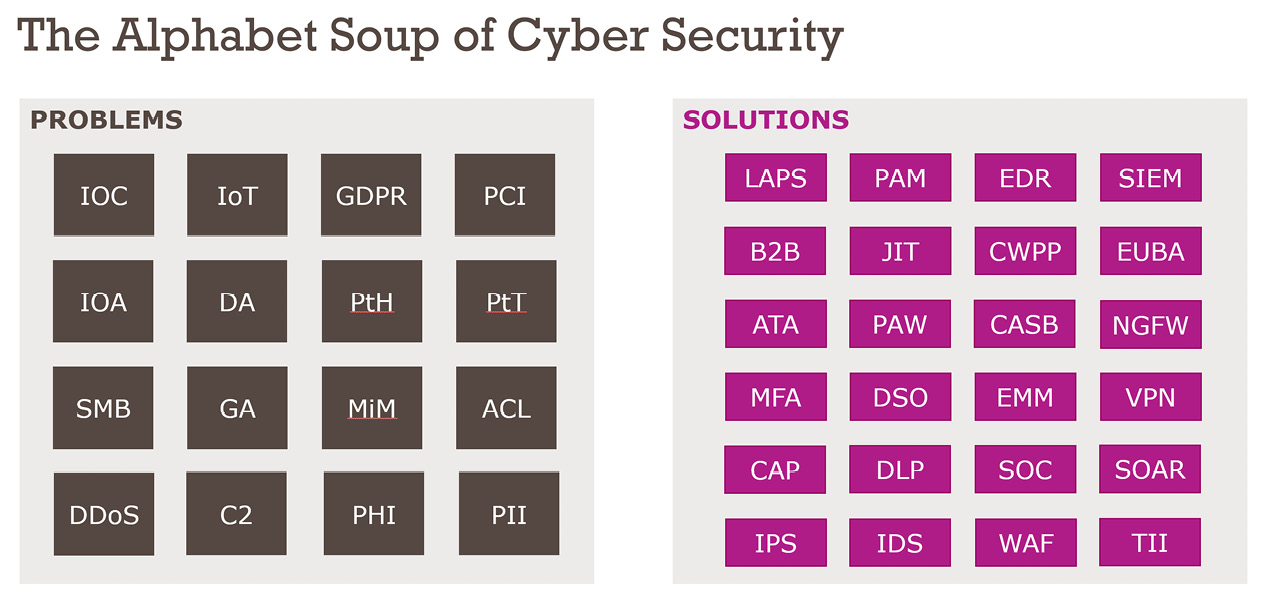

To assist with the discovery and mapping of current security solutions, we developed the cloud security reference framework. The following diagram is a section of this framework that provides the technical mapping components, and you can use this to carry out a mapping of your own environment:

Figure 1.2 – Technical mapping components – cloud security reference framework

Each of these 12 components is described in the following list, with some examples of the type of solutions to consider for integration with Azure Sentinel and the rest of your security architecture:

- Security Operations Center: At a high level, it includes the following technologies and procedures: log management and Security Information and Event Management (SIEM), Security Orchestration, Automation, and Response (SOAR), vulnerability management, threat intelligence, incident response, and intrusion prevention/detection. This component is further explored in the Mapping the SOC Architecture section later in this chapter.

- Productivity Services: This component covers any solution currently in use to protect the business productivity services that your end users rely on for their day-to-day work. This may include email protections, SharePoint Online, OneDrive for Business, Box, Dropbox, Google Apps, and Salesforce. Many more will come in the future, and most of these should be managed through the Cloud Access Security Broker (CASB) solution.

- Identity and Access Management: Identities are one of the most important entities to track. Once an attacker gains access to your environment, their main priority is to find the most sensitive accounts and use them to exploit the systems further. In fact, identity is usually one of the first footholds into your IT environment, usually through a successful phishing attack.

- Client Endpoint Management: This component covers a wide range of endpoints, from desktops and laptops to mobile devices and kiosk systems, all of which should be protected by specialized solutions such as Endpoint Detection and Response (EDR), Mobile Device Management (MDM), and Mobile Application Management (MAM) solutions to ensure protection from advanced and persistent threats against the operating systems and applications. This component also includes secure printing, managing peripherals, and any other device that an end user may interact with, such as future virtual and augmented reality devices.

- Cloud Access Security Broker: This component has been around for several years and is finally becoming a mainstay of the modern cloud security infrastructure due to the increased adoption of cloud services. The CASB is run as a cloud solution that can ingest log data from SaaS applications and firewalls and will apply its own threat detection and prevention solutions. Information coming from the CASB will be consumed by the SIEM solution to add to the overall picture of what is happening across your diverse IT environment.

- Perimeter Network: One of the most advanced components, when it comes to cyber security, must be the perimeter network. This used to be the first line of defense, and for some companies it still is the only line of defense. That is changing now, and we need to be aware of the multitude of options available, from external-facing advanced firewalls, web proxy servers, and application gateways to virtual private networking solutions and secure DNS. This component will also include protection services such as DDoS, Web Application Firewall, and Intrusion Prevention/Detection services.

- IoT and Industrial Control Systems: Industrial Control Systems (ICS) are usually operated and maintained in isolation from the corporate environment, a separation known as the Information Technology/Operational Technology divide (IT/OT divide). These systems are highly bespoke, may have existed for decades, and are not easily updated or replaced.

The IoT is different yet similar; in these systems, there are lots of small headless devices that collect data and control critical business functions without working on the same network. Some of these devices can be smart to enable automation; others are single use (vibration and temperature sensors). The volume and velocity of data that can be collected from these systems can be very high. If useful information can be gained from the data, then consider filtering the information before ingesting into Azure Sentinel for analysis and short- or long-term retention.

- Private Cloud Infrastructure: This may be hosted in local server rooms, a specially designed data center, or hosted with a third-party provider. The technologies involved in this component will include storage, networks, internal firewalls, and physical and virtual servers. The data center has been the mainstay of many companies for the last 2-3 decades, but most are now transforming into a hybrid solution, combining the best of cloud (public) and on-premises (private) solutions. The key consideration here is how much of the log data you can collect and transfer to the cloud for Azure Monitor ingestion. We will cover the data connectors more in Chapter 3, Data Collection and Management.

Active Directory is a key solution that should also be included in this component. It will be extended to public cloud infrastructure (component 09) and addressed in the Privileged Access Management section (component 10). The best defense for Active Directory is to deploy the Azure Advanced Threat Protection (Azure ATP) solution, which Microsoft has developed to specifically protect Active Directory domain controllers.

- Public Cloud Infrastructure: These solutions are now a mainstay of most modern IT environments, beginning either as an expansion of existing on-premises virtualized server workloads, a disaster recovery solution, or an isolated environment created and maintained by the developers. A mature public cloud deployment will have many layers of governance and security embedded into the full life cycle of creation and operations. This component may include Infrastructure as a Service (IaaS), Platform as a Service (PaaS), and Software as a Service (SaaS) services; each public cloud service provider offers their own security protections that can be integrated with Azure Sentinel.

- Privileged Access Management: This is a critical component, not to be overlooked, especially for gaining access to the SOC platform and associated tools. The Privileged Access Management (PAM) capability ensures all system-level access is highly governed, removing permissions when not required, and making a record of every request for elevated access. Advanced solutions will ensure password rotation for service accounts, management of shared system accounts (including SaaS services such as Twitter and Facebook), and the rotation of passwords for the local administrator accounts on all computers and servers. For the SOC platform, consider implementing password vaults and session recording for evidence gathering.

- Cloud Workload Protection Platform: This component may also be known as a Cloud Security Posture Management (CSPM) solution, depending on the view of the solution developed. This is a relatively new area for cloud security and is still maturing.

Whatever they are labelled as, these solutions are addressing the same problem: how do you know that your workloads are configured correctly across a hybrid environment? This component will include any DevOps tools implemented to orchestrate the deployment and ongoing configuration management of solutions deployed to private and public cloud platforms. You can also include solutions that will scan for, and potentially enforce, configuration compliance with multiple regulatory and industry standard frameworks.

- Information Security: This component is critical to securing data at rest and in transit, regardless of the storage: endpoint, portable, or cloud storage. This component covers secure collaboration, digital rights management, securing email (in conjunction with component 02, Productivity Services), and scanning for regulated data and other sensitive information.

The Cloud Security Reference Framework is meant to be a guide as to what services are needed to secure your cloud implementation. In the next section, we will look at the SOC in more detail.

SOC platform components

As described earlier, the SOC platform includes a range of technologies to assist with the routine and reactive procedures carried out by various teams. Each of these solutions should help the SOC analysts to perform their duties at the most efficient level to ensure a high degree of protection, detection, and remediation.

The core components of the SOC include log management and Security Information and Event Management (SIEM), Security Orchestration, Automation, and Response (SOAR), Vulnerability Management, Threat Intelligence, and Incident Response. All of these components are addressed by the deployment of Azure Sentinel. Additional solutions will be required, and integrated, for other SOC platform capabilities such as Intrusion Prevention/Detection, integrity monitoring, and disaster recovery.

Deploying a SOC using Azure Sentinel comprises the following components:

- Azure Monitor for data collection and analysis. This was originally created to ensure a cloud-scale log management solution for both cloud-based and physical data-center-based workloads. Once the data is collected, a range of solutions can then be applied to analyze the data for health, performance, and security considerations. Some solutions were created by Microsoft, and others created by partners.

- Azure Sentinel was developed to address the need for a cloud-native solution as an alternative, or as a complementary solution, to the existing SIEM solutions that have become a mainstay of security and compliance over the last decade. The popularity of cloud services provides some key advantages, including reduced cost of storage, rapid scale compute, automated service maintenance, and continuous improvement as Microsoft creates new capabilities based on customer and partner feedback.

One of the immediate benefits of deploying Azure Sentinel is the rapid enablement without the need for costly investment in the supporting infrastructure, such as servers, storage, and complex licensing. The Azure Sentinel service is charged based on data consumption, per gigabyte per month. This allows the initial deployment to start small and grow as needed until full-scale deployment and maturity can be achieved.

Ongoing maintenance is also simplified as there are no servers to maintain or licenses to renew. You will want to ensure regular optimization of the solution by reviewing the data ingestion and retention for relevance and suitability. This will keep costs reasonable and improve the quality of data used for threat hunting.

- Logic Apps provides integrations with a vast array of enterprise solutions, ensuring workflows are connected across the multiple cloud platforms and in existing on-premises solutions. While this is initially an optional component, it will become a core part of the integration and automation (SOAR) capabilities of the platform.

Logic Apps is a standards-based solution that provides a robust set of capabilities; however, there are third-party SOAR solutions available if you don’t want to engineer your own automation solutions.

Mapping the SOC architecture

To implement a cohesive technical solution for your SOC platform, you need to ensure the following components are reviewed and thoroughly implemented. This is best done on a routine basis and backed up by regularly testing the strength of each capability using penetration testing experts who will provide feedback and guidance to help improve any weaknesses.

Log management and data sources

The first component of a SOC platform is the gathering and storing of log data from a diverse range of systems and services across your IT environment. This is where you need to have careful planning to ensure you are collecting and retaining the most appropriate data. Some key considerations we can borrow from other big data guidance are listed here:

- Variety: You need to ensure you have data feeds from multiple sources to gain visibility across the spectrum of hardware and software solutions across your organization.

- Volume: Too large a volume and you could face some hefty fees for the analysis and ongoing storage; too small and you could miss some important events that may lead to a successful breach.

- Velocity: Collecting real-time data is critical to reducing response times, but it is also important that the data is being processed and analyzed in real time too.

- Value/Veracity: The quality of data is important to understand meaning; too much noise will hamper investigations.

- Validity: The accuracy and source of truth must be verified to ensure that the right decisions can be made.

- Volatility: How long is the data useful for? Not all data needs to be retained long term; once analyzed, some data can be dropped quickly.

- Vulnerability: Some data is more sensitive than others, and when collected and correlated together in one place, can become an extremely valuable data source to a would-be attacker.

- Visualization: Human interpretation of data requires some level of visualization. Understanding how you will show this information to the relevant audience is a key requirement for reporting.

Azure Sentinel provides a range of data connectors to ensure all types of data can be ingested and analyzed. Securing Azure Monitor will be covered in Chapter 2, Azure Monitor – Log Analytics, and connector details will be available in Chapter 3, Data Collection and Management.

Operations platforms

Traditionally a SIEM was used to look at all log data and reason over it, looking for any potential threats across a diverse range of technologies. Today there are multiple platforms available that carry out similar functionality to the SIEM, except they are designed with specific focus on a particular area of expertise. Each platform may carry out its own log collection and analysis, provide specific threat intelligence and vulnerability scanning, and make use of machine learning algorithms to detect changes in user and system behavior patterns.

The following solutions each have a range of capabilities built in to collect and analyze logs, carry out immediate remediations, and report their findings to the SIEM solution for further investigation:

- Identity and Access Management (IAM): The IAM solution may be made up of multiple solutions, combined to ensure the full life cycle management of identities from creation to destruction. The IAM system should include governance actions such as approvals, attestation, and automated cleanup of group and permission membership. IAM also covers the capability of implementing multi-factor authentication: a method of challenging the sign-in process to provide more than a simple combination of user ID and password. All actions carried out by administrators, as well as user-driven activities, should be recorded and reported to the SIEM for context.

Modern IAM solutions will also include built-in user behavior analytics to detect changes in baseline patterns, suspicious activities, and the potential of insider-threat risks. These systems are also integrated with a CASB solution to provide session-based authentication controls, which is the ability to apply further restrictions if the intent changes, or if access to higher-sensitivity actions is required. Finally, every organization should implement privileged access management solutions to control the access to sensitive systems and services.

- Endpoint Detection and Response (EDR): Going beyond anti-virus and anti-malware, a modern endpoint protection solution will include the ability to detect and respond to advanced threats as they occur. Detection will be based not only on signature-based known threats, but also on patterns of behavior and integrated threat intelligence. Detection expands from a single machine to complete visibility across all endpoints in the organization, both on the network and roaming across the internet.

Response capabilities will include the ability to isolate the machine from the network, to prevent further spread of malicious activities, while retaining evidence for forensic analysis and providing remote access to the investigators. The response may also trigger other actions across integrated systems, such as mailbox actions to remove threats that executed via email or removing access to specific files on the network to prevent further execution of the malicious code.

- Cloud Access Security Broker (CASB): A CASB is now a critical component in any cloud-based security architecture. With the ability to ingest logs from network firewalls and proxy servers, as well as connecting to multiple cloud services, the CASB has become the first point of collation for many user activities across the network, both on-premises and when directly connected to the internet. This also prevents the need to ingest these logs directly into the SIEM (saving on costs), unless there is a need to directly query these logs instead of taking the information parsed by the CASB.

A CASB will come with many connectors for deep integration into cloud services, as well as connection to the IAM system to help to govern access to other cloud services (via SSO) acting as a reverse-proxy and enforcing session-based controls. The CASB will also provide many detection rule templates to deploy immediately, as well as providing the ability to define custom rules for an almost infinite set of use cases unique to your organization. The response capabilities of the CASB are dependent on your specific integrations with the relevant cloud services; these can include the ability to restrict or revoke access to cloud services, prevent the upload or download of documents, or hide specific documents from the view of others.

- Cloud Workload Protection Platform (CWPP): The CWPP may also be known as a Cloud Security Posture Management (CSPM) solution. Either of these will provide a unique capability of scanning and continually monitoring systems to ensure they meet compliance and governance requirements. This solution provides a centralized method for vulnerability scanning and carrying out continuous audits across multiple cloud services (such as Amazon Web Services (AWS) and Azure) while also centralizing the policies and remediation actions.

Today there are several dedicated platforms for CWPP and CSPM, each with their own specialist solutions to the problem, but we predict this will become a capability that merges with the CASB platforms to provide a single solution for this purpose.

When these solutions are deployed, it is one less capability that we need the SIEM to provide; instead, it can take a feed from the service to understand the potential risk and provide an integration point for remediation actions.

- Next Generation Firewall (NGFW): Firewalls have been the backbone of network security since the 1980s and remain a core component for segmentation and isolation of internal networks, as well as acting as the front door for many internet-facing services. With NGFW, not only do you get all of the benefits of previous firewall technologies, but now you can carry out deep packet inspection for the application layer security and integrated intrusion detection/prevention systems. The deployment of NGFW solutions will also assist with the detection and remediation of malware and advanced threats on the network, preventing the spread to more hosts and network-based systems.

As you can see from these examples, the need to deploy a SIEM to do all of the work of centrally collecting and analyzing logs is in the past. With each of these advanced solutions deployed to manage their specific area of expertise, the SIEM focus changes to look for common patterns across the solutions as well as monitoring those systems that are not covered by these individual solutions. With Azure Sentinel as the SIEM, it will also act as the SOAR: enabling a coordinated response to threats across each of these individual solutions, preventing the need to reengineer them all each time there is a change in requirements for alerting, reporting, and response.

Threat intelligence and threat hunting

Threat intelligence adds additional context to the log data collected. Knowing what to look for in the logs and how serious the events may be requires a combination of skills and the ongoing intelligence feed from a range of experts who are deep in the field of cybercrime research. Much of this work is being augmented by Artificial Intelligence (AI) platforms; however, a human touch is always required to add that gut-feeling element that many detectives and police officers will tell you they get from working their own investigations in law enforcement.

SOC mapping summary

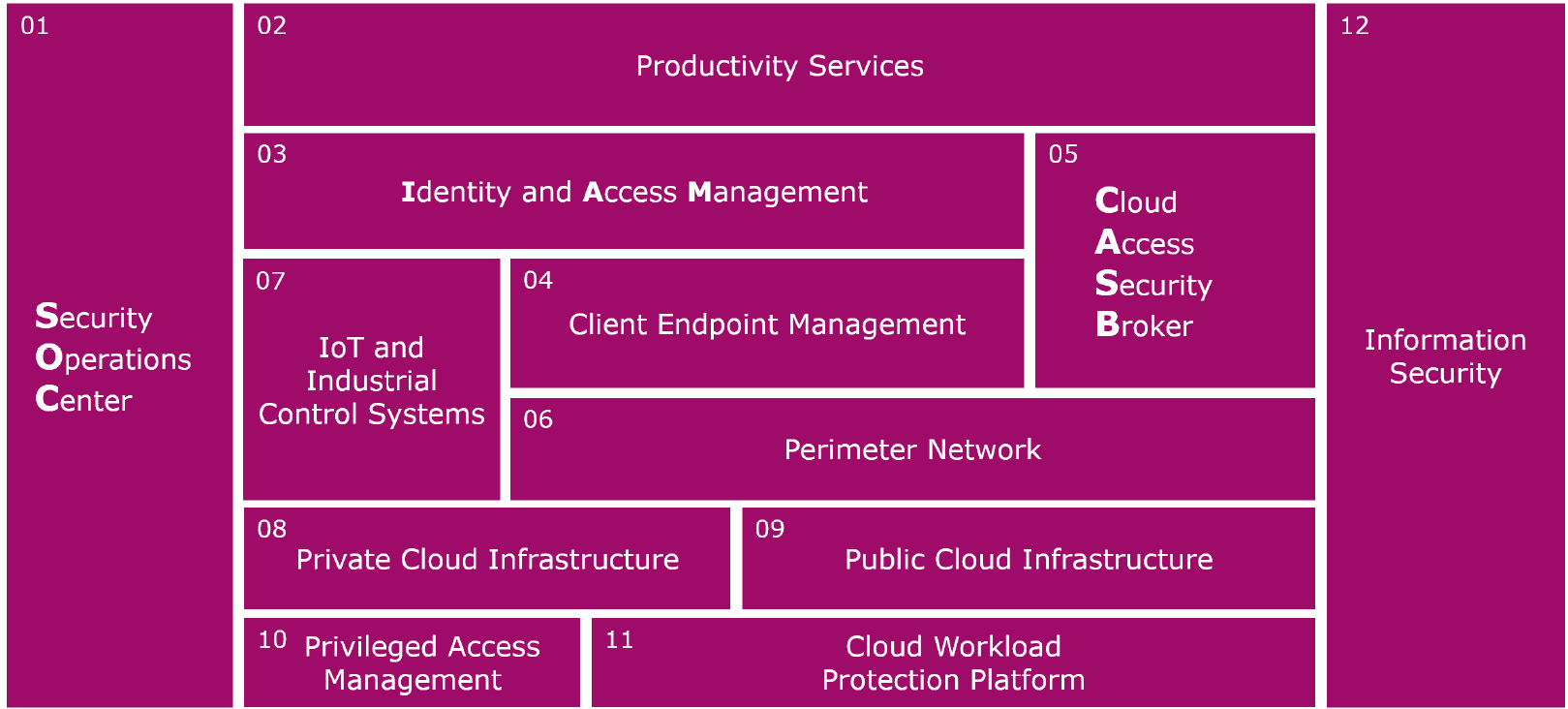

The following diagram provides a summary of the multiple components that come together to help to make up the SOC architecture, with some additional thoughts when implementing each one:

Figure 1.3 – SOC mapping summary

The solution works best when there is a rich source of log data streaming into the log management solution, tied in with data feeds coming from threat intel and vulnerability scans and databases. This information is used for discovery and threat hunting and may indicate any issues with configuration drift. The core solutions of the SOC operations include the SIEM, CASB, and EDR, amongst others, each with their own User and Entity Behavior Analytics (UEBA) and SOAR capabilities. Integrating these solutions is a critical step in minimizing the noise and working toward improving the speed of response. The outcome should be the ability to report accurately on the current risk profile and compliance status, and to communicate clearly in situations that require immediate response and accurate data.

Security solution integrations

Azure Sentinel is designed to work with multiple security solutions, not just those that are developed by Microsoft.

At the most basic level, log collection and analysis are possible from any system that can transmit its logs via the Syslog collectors. More detailed logs are available from those that connect via the CEF standard and servers that share Windows Event logs. The preferred method, however, is to have direct integration via APIs to enable two-way communication and help to manage the integrated solutions. More details of these options are covered in Chapter 3, Data Collection and Management.

Common Event Format (CEF)

CEF is an industry standard format applied to Syslog messages, used by most security vendors to ensure commonality between platforms. Azure Sentinel provides integrations to easily run analytics and queries across CEF data. For a full list of Azure Sentinel CEF source configurations, review the article at: https://techcommunity.microsoft.com/t5/Azure-Sentinel/Azure-Sentinel-Syslog-CEF-and-other-3rd-party-connectors-grand/ba-p/803891.
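To make the format concrete, the following is a minimal Python sketch of how a CEF message breaks down into its seven pipe-delimited header fields and its key=value extension. It is an illustration only, not how Azure Sentinel parses CEF internally; the function name `parse_cef`, the field names in `CEF_HEADER_FIELDS`, and the sample message are all assumptions made for this example, and escaping rules are handled only in a simplified way.

```python
import re

# Names chosen for this sketch; CEF defines seven header fields in this order.
CEF_HEADER_FIELDS = [
    "version", "device_vendor", "device_product", "device_version",
    "signature_id", "name", "severity",
]


def parse_cef(message: str) -> dict:
    """Split one CEF message into header fields plus an 'extensions' dict."""
    start = message.find("CEF:")
    if start == -1:
        raise ValueError("not a CEF message")
    body = message[start + len("CEF:"):]

    # Split on pipes that are not escaped with a backslash. At most seven
    # splits, so any pipe inside the extension stays in the last part.
    parts = re.split(r"(?<!\\)\|", body, maxsplit=7)
    if len(parts) < 7:
        raise ValueError("incomplete CEF header")

    parsed = {
        key: value.replace("\\|", "|")
        for key, value in zip(CEF_HEADER_FIELDS, parts)
    }

    # The extension is a run of key=value pairs; a value may contain spaces,
    # so each value runs until the next " key=" or the end of the line.
    extension = parts[7] if len(parts) > 7 else ""
    pairs = re.findall(r"(\w+)=(.*?)(?=\s+\w+=|$)", extension)
    parsed["extensions"] = {key: value.strip() for key, value in pairs}
    return parsed


if __name__ == "__main__":
    # Made-up sample line: a Syslog prefix followed by a CEF payload.
    sample = (
        "<134>Jan 1 00:00:00 fw01 CEF:0|ExampleVendor|ExampleFW|1.0|100|"
        "Blocked connection|5|src=10.0.0.1 dst=192.0.2.10 msg=policy deny"
    )
    print(parse_cef(sample))
```

Because every vendor emits the same header layout, a single parser of this shape can normalize events from many products, which is the commonality the standard is meant to provide.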

Microsoft is continually developing the integration options. At the time of writing, the list of integrated third-party solution providers includes the following:

- AWS

- Barracuda

- Checkpoint

- Cisco

- Citrix Systems Inc.

- CyberArk

- ExtraHop Networks

- F5 Networks

- Fortinet

- One Identity LLC.

- Palo Alto Networks

- Symantec

- TrendMicro

- Zscaler

As you can see from this list, many of the top security vendors are already available directly in the portal. Azure Sentinel provides the ability to connect to a range of security data sources with built-in connectors, ingest the log data, and display it using pre-defined dashboards.

Cloud platform integrations

One of the key reasons you might be planning to deploy Azure Sentinel is to manage the security for your cloud platform deployments. Instead of sending logs from the cloud provider to an on-premises SIEM solution, you will likely want to keep that data off your local network, to save on bandwidth usage and storage costs.

Let’s take a look at how some of these platforms can be integrated with Azure Sentinel.

Integrating with AWS

AWS provides API access to most features across the platform, which enables a rich integration with Azure Sentinel. The following list provides some of the common resources that should be integrated with Azure Sentinel if enabled in the AWS account(s):

- AWS CloudTrail logs provide insights into AWS user activities, including failed sign-in attempts, IP addresses, regions, user agents, and identity types, as well as potential malicious user activities with assumed roles.

- AWS CloudTrail logs also provide network-related resource activities, including the creation, update, and deletion of security groups, network access control lists (ACLs) and routes, gateways, elastic load balancers, Virtual Private Cloud (VPC), subnets, and network interfaces.

Some resources deployed within the AWS Account(s) can be configured to send logs directly to Azure Sentinel (such as Windows Event Logs). You may also deploy a log collector (Syslog, CEF, or LogStash) within the AWS Account(s) to centralize the log collection, the same as you would for a private data center.
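As an illustration of the sign-in insights described above, here is a small Python sketch that scans CloudTrail records for failed console sign-ins and pulls out the source IP address, region, user agent, and identity type. It is a hedged example rather than part of any Azure Sentinel connector: the function name `failed_console_logins` and the sample records are invented for this sketch, and it assumes the records have already been loaded from the `Records` array of a CloudTrail log file.

```python
def failed_console_logins(records):
    """Yield a short summary for each failed AWS console sign-in event."""
    for record in records:
        if record.get("eventName") != "ConsoleLogin":
            continue
        # responseElements can be null for other event types, hence "or {}".
        outcome = (record.get("responseElements") or {}).get("ConsoleLogin")
        if outcome != "Failure":
            continue
        yield {
            "time": record.get("eventTime"),
            "source_ip": record.get("sourceIPAddress"),
            "region": record.get("awsRegion"),
            "user_agent": record.get("userAgent"),
            "identity_type": (record.get("userIdentity") or {}).get("type"),
        }


if __name__ == "__main__":
    # Made-up records shaped like CloudTrail events, for demonstration only.
    sample_records = [
        {
            "eventTime": "2020-01-01T10:00:00Z",
            "eventName": "ConsoleLogin",
            "awsRegion": "us-east-1",
            "sourceIPAddress": "203.0.113.7",
            "userAgent": "Mozilla/5.0",
            "userIdentity": {"type": "IAMUser"},
            "responseElements": {"ConsoleLogin": "Failure"},
        },
        {
            "eventTime": "2020-01-01T10:05:00Z",
            "eventName": "CreateSecurityGroup",
            "awsRegion": "us-east-1",
            "sourceIPAddress": "198.51.100.20",
            "userIdentity": {"type": "AssumedRole"},
            "responseElements": None,
        },
    ]
    for hit in failed_console_logins(sample_records):
        print(hit)
```

In Azure Sentinel itself, this kind of filter would normally be written as a query or analytics rule over the ingested data; the sketch only shows which fields carry the information.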

Integrating with Google Cloud Platform (GCP)

GCP also provides API access to most features; however, there isn’t currently an out-of-the-box solution to integrate with Azure Sentinel. If you are managing a GCP instance and want to use Azure Sentinel to secure it, you should consider the following options:

- REST API—this feature is still in development; when released, it will allow you to create your own investigation queries.

- Deploy a CASB solution that can interact with GCP logs, control session access, and forward relevant information to Azure Sentinel.

- Deploy a log collector such as Syslog, CEF, or LogStash. Ensure all deployed resources can forward their logs via the log collector to Azure Sentinel.

Integrating with Microsoft Azure

The Microsoft Azure platform provides direct integration with many Microsoft security solutions, and more are being added every month:

- Azure AD, for collecting audit and sign-in logs to gather insights about app usage, conditional access policies, legacy authentication, self-service password reset usage, and management of users, groups, roles, and apps.

- Azure AD Identity Protection, which provides user and sign-in risk events and vulnerabilities, with the ability to remediate risk immediately.

- Azure ATP, for the protection of Active Directory domains and forests.

- Azure Information Protection, to classify and optionally protect sensitive information.

- Azure Security Center, which is a CWPP for Azure and hybrid deployments.

- DNS Analytics, to improve investigations for clients that try to resolve malicious domain names, talkative DNS clients, and other DNS health-related events.

- Microsoft Cloud App Security, to gain visibility into connected cloud apps and analysis of firewall logs.

- Microsoft Defender ATP, a security platform designed to prevent, detect, investigate, and respond to advanced threats on Windows, Mac, and Linux computers.

- Microsoft Web App Firewall (WAF), to protect applications from common web vulnerabilities.

- Microsoft Office 365, providing insights into ongoing user activities such as file downloads, access requests, changes to group events, and mailbox activity.

- Microsoft Threat Intelligence Platforms, for integration with the Microsoft Graph Security API data sources: This connector is used to send threat indicators from Microsoft and third-party threat intelligence platforms.

- Windows Firewall, if enabled on your servers and desktop computers (recommended).

Microsoft makes many of these log sources available to Azure Sentinel for no additional log storage charges, which could provide a significant cost saving when considering other SIEM tool options.

Other cloud platforms will provide similar capabilities, so review the options as part of your ongoing due diligence across your infrastructure and security landscape.

Whichever cloud platforms you choose to deploy, we encourage you to consider deploying a suitable CWPP solution to provide additional protections against misconfiguration and compliance violations. The CWPP can then forward events to Azure Sentinel for central reporting, alerting, and remediation.

Private infrastructure integrations

The primary method of integration with your private infrastructure (such as an on-premises data center) is the deployment of Syslog servers as data collectors. While endpoints can be configured to send their data to Azure Sentinel directly, you will likely want to centralize the management of this data flow. The key consideration for this deployment is the management of log data volume; if you are generating a large volume of data for security analytics, you will need to transmit that data over your internet connections (or private connections such as ExpressRoute).

The data collectors can be configured to reduce the load by filtering the data, but a balance must be found between the volume and velocity of data collected in order to have sufficient available bandwidth to send the data to Azure Sentinel. Investment in increased bandwidth should be considered to ensure adequate capacity based on your specific needs.
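The trade-off between filtering and volume can be sketched in a few lines of Python. The example below drops Syslog messages that are less severe than a chosen threshold and reports how many bytes would be sent before and after filtering. It is only an illustration of the logic: in practice, filtering is configured in the collector itself, and the function names `keep_message` and `filter_and_measure`, the default threshold, and the sample lines are assumptions made for this sketch.

```python
import re

# A Syslog message starts with a priority value: <facility * 8 + severity>.
PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def keep_message(line: str, max_severity: int = 4) -> bool:
    """Return True if the message is at least as severe as max_severity.

    Syslog severities run from 0 (emergency) to 7 (debug), so a lower
    number means a more severe message. The default of 4 keeps warnings
    and above; it is an arbitrary choice for this sketch.
    """
    match = PRI_PATTERN.match(line)
    if not match:
        # No priority header: keep the line rather than risk losing evidence.
        return True
    severity = int(match.group(1)) % 8
    return severity <= max_severity


def filter_and_measure(lines, max_severity: int = 4):
    """Filter lines and return (kept_lines, bytes_before, bytes_after)."""
    kept = [line for line in lines if keep_message(line, max_severity)]
    bytes_before = sum(len(line.encode("utf-8")) for line in lines)
    bytes_after = sum(len(line.encode("utf-8")) for line in kept)
    return kept, bytes_before, bytes_after


if __name__ == "__main__":
    # Made-up sample messages: one informational, one error.
    sample = [
        "<134>Jan 1 00:00:00 host app: user viewed dashboard",
        "<131>Jan 1 00:00:01 host app: authentication backend unreachable",
    ]
    kept, before, after = filter_and_measure(sample)
    print(f"kept {len(kept)} of {len(sample)} messages, {after} of {before} bytes")
```

Measuring the byte counts on a representative sample of your own logs gives a rough basis for the bandwidth planning discussed above, while the threshold controls how much potentially useful detail is discarded.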

A second method of integration involves investigation and automation to carry out actions required to understand and remediate any issues found. Automation may include the deployment of Azure Automation to run scripts, or through third-party solution integration, depending on the resources being managed.

Keep in mind that should your private infrastructure lose connectivity to the internet, your systems will not be able to communicate with Azure Sentinel during the outage. Investments in redundancy and fault tolerance should be considered.

Service pricing for Azure Sentinel

There are several components to consider when pricing Azure Sentinel:

- A charge for ingesting data into Log Analytics

- A charge for running the data through Azure Sentinel

- Charges for running Logic Apps for Automation (optional)

- Charges for running your own machine learning models (optional)

- The cost of running any VMs for data collectors (optional)

The cost for Azure Monitor and Azure Sentinel is calculated by how much data is consumed, which depends directly on the connectors you enable: the type of information you connect to and the volume of data each node generates. This may vary each day throughout the month as activity changes across your infrastructure and cloud services. Some customers see their data volume rise and fall with fluctuations in their own sales activity.

The initial pricing option is to use Pay As You Go (PAYG). With this option, you pay a fixed price per gigabyte (GB) used, charged on a per-day basis. Microsoft also offers discounted pricing tiers for larger volumes of data.

It is worth noting that Microsoft has made available some connectors that do not incur a data ingestion cost. The data from these connectors could account for 10-20% of your total data ingestion, which reduces your overall costs. Currently the following data connectors are not charged for ingestion:

- Azure Activity (Activity Logs for Azure Operations)

- Azure Active Directory Identity Protection (for tenants with AAD P2 licenses)

- Azure Information Protection

- Azure Advanced Threat Protection (alerts)

- Azure Security Center (alerts)

- Microsoft Cloud App Security (alerts only)

- Microsoft Defender Advanced Threat Protection (monitoring agent alerts)

- Office 365 (Exchange and SharePoint logs)

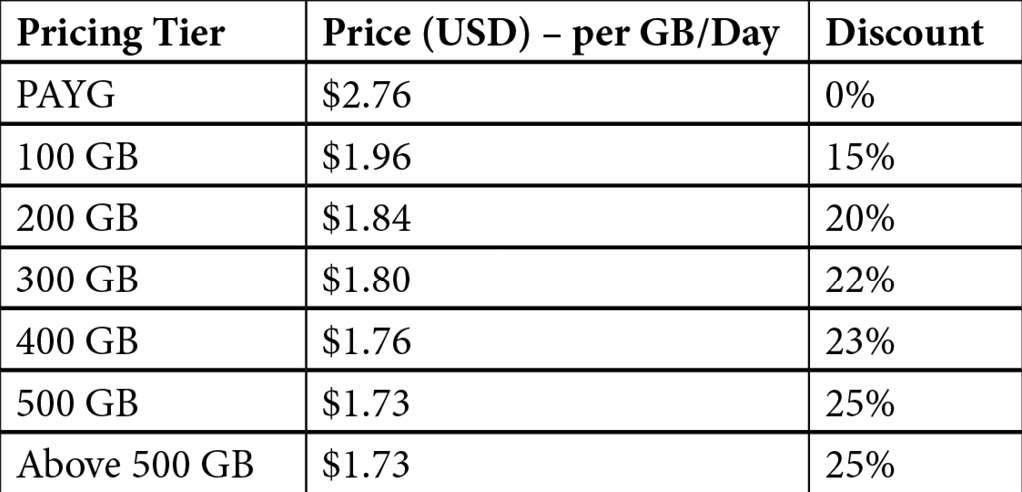

The following table is an example of the published pricing for Azure Log Analytics:

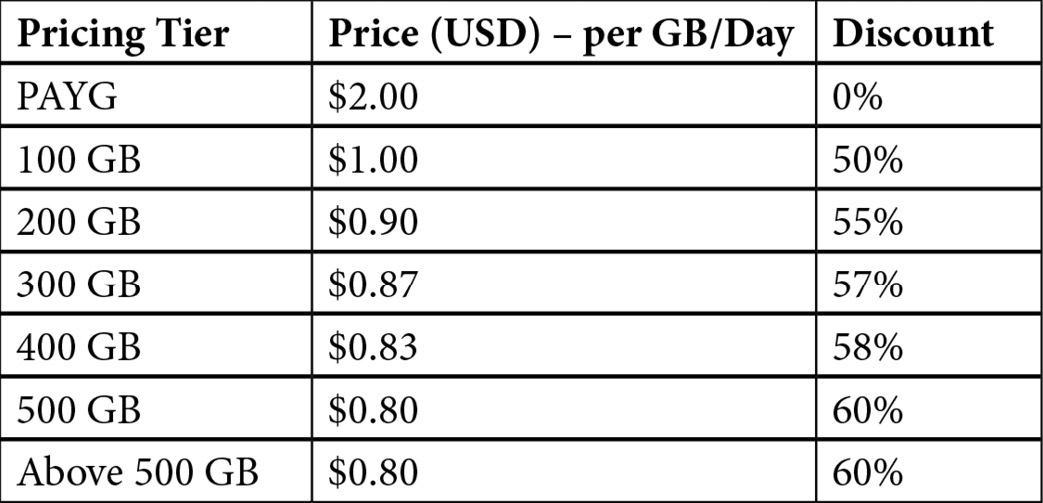

The following table is an example of the published pricing for Azure Sentinel:

Important note

In both examples, everything over 500 GB remains at the same price per GB as the 500 GB tier. Pricing also varies depending on the region you choose for the Azure Monitor workspace; these examples are shown based on East US, December 2019. You may receive discounts from Microsoft, depending on individual agreements.

Each pricing tier charges a fixed daily price (for example, the 100 GB tier costs $296 per day), and every GB over that tier is charged at the PAYG price. When you work through the calculations, it makes financial sense to move up to the next tier once you are roughly halfway to it. For example, if you are ingesting an average of 130 GB per day, you pay for the first 100 GB at $2.96 per GB, then pay the PAYG price of $4.76 per GB for the additional 30 GB (total per day = $438.80). If your daily usage increases to 155 GB, you would save money by moving to the 200 GB tier (total per day = $548) and paying for the unused capacity, rather than paying for the 100 GB tier (fixed) plus 55 GB at PAYG (total per day = $557.80).
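The tier arithmetic can be checked with a short sketch. It uses only the example figures quoted in this section (East US, December 2019); the function names and the `TIERS` table are illustrative assumptions, not part of any Azure API, and current prices will differ.

```python
PAYG_PER_GB = 4.76                   # example PAYG price per GB
TIERS = {100: 296.00, 200: 548.00}   # tier size in GB/day -> fixed daily price


def daily_cost(gb_per_day, tier_gb=None):
    """Daily cost on PAYG (tier_gb=None) or on a tier with PAYG overage."""
    if tier_gb is None:
        return round(gb_per_day * PAYG_PER_GB, 2)
    overage_gb = max(0.0, gb_per_day - tier_gb)
    return round(TIERS[tier_gb] + overage_gb * PAYG_PER_GB, 2)


def cheapest_plan(gb_per_day):
    """Return (tier_gb or None for PAYG, daily cost) for the cheapest option."""
    options = {None: daily_cost(gb_per_day)}
    options.update({tier: daily_cost(gb_per_day, tier) for tier in TIERS})
    return min(options.items(), key=lambda item: item[1])


print(daily_cost(130, 100))   # 438.8
print(daily_cost(155, 100))   # 557.8
print(daily_cost(155, 200))   # 548.0
print(cheapest_plan(155))     # (200, 548.0)
```

With these example prices, the break-even point between the 100 GB and 200 GB tiers is about 153 GB per day, because (548 - 296) / 4.76 is roughly 53 GB of overage, which is consistent with the halfway rule of thumb.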

When you look at the amount of data you are using, you may see a trend toward more data being consumed each month as you expand the solution to cover more of your security landscape. As you approach the next tier, you should consider changing the pricing model; you have the option to change once every month.

The next area of cost management to consider is retention and long-term storage of the Azure Sentinel data. By default, the preceding pricing includes 90 days of retention. For some companies, this is enough to ensure visibility over the last 3 months of activity across their environment; for others, there will be a need to retain this data for longer, perhaps up to 7 years (depending on regulatory requirements). There are two ways of maintaining the data long term, and both should be considered and chosen based on price and technical requirements:

- Azure Monitor: Currently, this is available to store the data for up to 2 years.

Pro: The data is available online and in Azure Monitor, enabling direct query using KQL searches, and the data can be filtered to only retain essential information.

Con: This is likely the most expensive option per GB.

- Other storage options: Cloud-based or physical-based storage solutions can be used to store the data indefinitely.

Pro: Cheaper options are available from a variety of partners.

Con: Additional charges will be made if data is sent outside of Azure, and the data cannot be queried by Azure Monitor or Azure Sentinel. Using this data requires another solution to be implemented for querying the data when required.

The final consideration for cost analysis includes the following:

- Running any physical or virtual machines as Syslog servers for data collection

- Charges for running your own machine learning models, which can be achieved using Azure ML Studio and Azure Databricks

- The cost of running Logic Apps for automation and integration

Each of these components is highly variable across deployments, so you will need to carry out this research as part of your design. Also, research the latest region availability and whether Azure Sentinel is supported in the various government clouds, such as China.

Scenario mapping

For the final section of this chapter, we are going to look at an important part of SOC development: scenario mapping. This process is carried out on a regular basis to ensure that the tools and procedures are tuned for effective analysis, that the right data is flowing, and that responses are well defined so that appropriate actions are taken upon detection of potential and actual threats. To make this an effective exercise, we recommend involving a range of different people with diverse skill sets and viewpoints, both technical and non-technical. You can also involve external consultants with specific skills and experience in threat hunting, defense, and attack techniques.

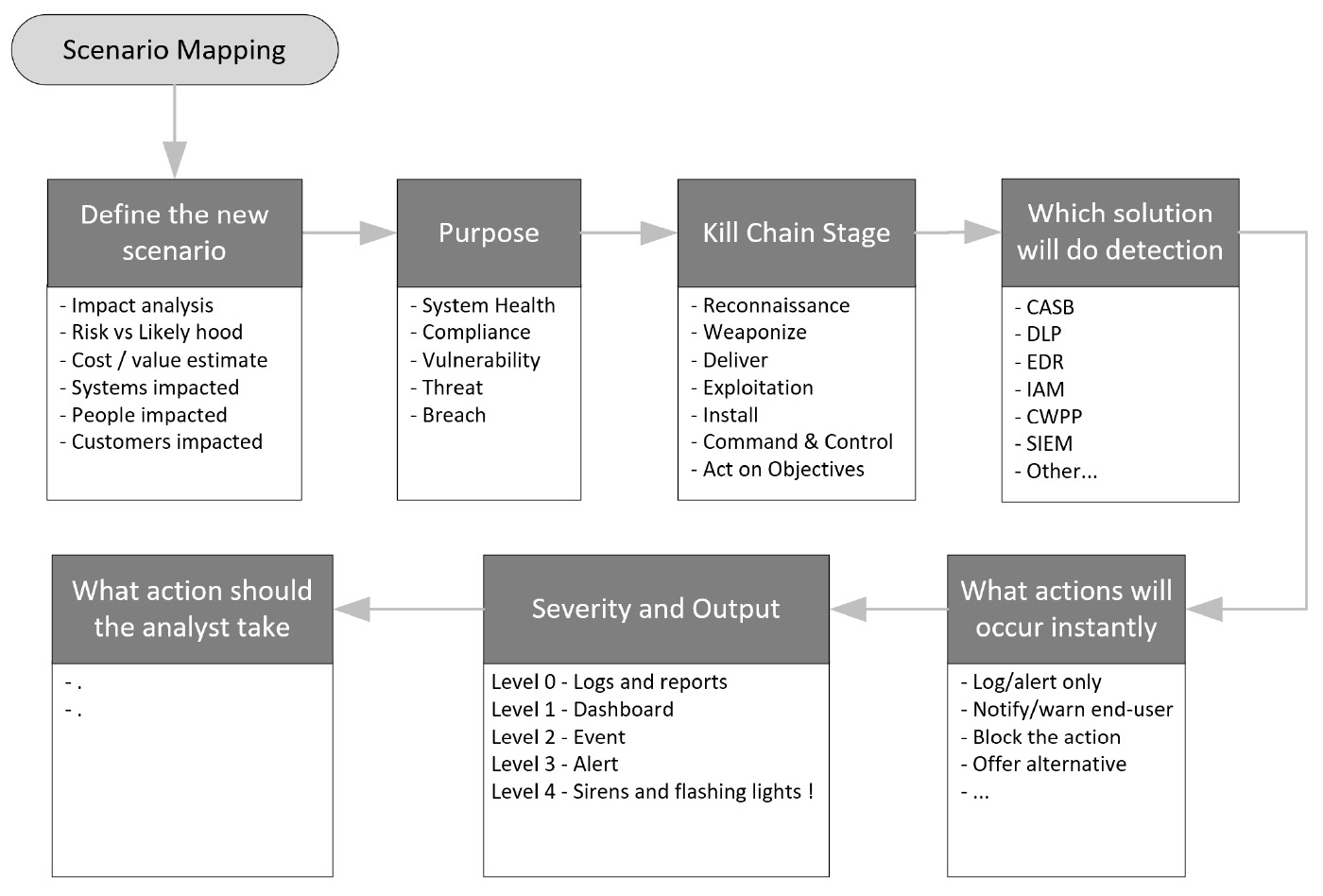

The following process is provided as a starting point; we encourage you to define your own approach to scenario mapping and improve it each time the exercise is carried out.

Step 1 – Define the new scenarios

In this first step, we articulate one scenario at a time; you may want to use a spreadsheet or other documentation method to ensure information is gathered, reviewed, and updated as required:

- Impact analysis: This will be the summary of the complete analysis, based on the following components. You may want to provide a scoring system to ensure the implementation of security controls is handled in priority order, based on the severity of the potential impact.

- Risk versus likelihood: While some scenarios will have a high risk of catastrophe if they were to occur, we must also balance that risk with the potential that it will occur. Risk calculations help to justify budget and controls required to mitigate the risk, but keep in mind you are unlikely to achieve complete mitigation, and there is always a need to prioritize the resources you have to implement the controls.

- Cost and value estimate: Estimate the value of the resource to your organization and cost to protect it. This may be a monetary value or percentage of the IT security budget, or it could be some other definable metric such as time and effort. If the cost outweighs the value, you may need to find a more affordable way to protect the resource.

- Systems impacted: Create a list of the systems that are most likely to be targeted to get to the resources and information in one or many scenarios (primary systems) and a list of the other systems that could be used or impacted when attacking the primary systems (these are secondary systems). By understanding the potential attack vectors, we can make a map of the targets and ensure they are being monitored and protected.

- People impacted: For each scenario, list the relevant business groups, stakeholders, and support personnel that would be involved or impacted by a successful attack. Ensure all business groups have the opportunity to contribute to this process and articulate their specific scenarios. Work with the stakeholders and support personnel to ensure clear documentation for escalation and resolution.

- Customers impacted: For some scenarios, we must also consider the impact on customers of the loss or compromise of their data, or of an outage to the services provided to them. Make notes about the severity of the customer impact, and any mitigations that should be considered.
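If you prefer something more structured than a spreadsheet, the components above can be captured as a simple record. This is a minimal sketch: the field names, the 1 to 5 scales, and the impact-times-likelihood score are assumptions chosen for illustration, and the sample scenarios and figures are invented. You should substitute whatever scoring system your organization agrees on.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Scenario:
    """One row of a scenario-mapping worksheet (illustrative fields only)."""
    name: str
    impact: int                    # assumed scale: 1 (minor) to 5 (catastrophic)
    likelihood: int                # assumed scale: 1 (rare) to 5 (expected)
    asset_value: float = 0.0       # estimated value of the resource
    protection_cost: float = 0.0   # estimated cost to protect it
    primary_systems: List[str] = field(default_factory=list)
    secondary_systems: List[str] = field(default_factory=list)
    people_impacted: List[str] = field(default_factory=list)
    customer_impact: str = ""

    @property
    def priority(self) -> int:
        # Assumed scoring rule: impact weighted by likelihood.
        return self.impact * self.likelihood

    @property
    def cost_exceeds_value(self) -> bool:
        return self.protection_cost > self.asset_value


# Invented sample data, for demonstration only.
scenarios = [
    Scenario("Data center power loss", impact=5, likelihood=1,
             asset_value=200_000, protection_cost=250_000),
    Scenario("Credential phishing", impact=4, likelihood=5,
             asset_value=500_000, protection_cost=40_000,
             primary_systems=["Identity provider"],
             secondary_systems=["Email gateway"]),
]

# Highest priority first, flagging scenarios that need a cheaper control.
ranked = sorted(scenarios, key=lambda s: s.priority, reverse=True)
for s in ranked:
    flag = " (cost exceeds value)" if s.cost_exceeds_value else ""
    print(f"{s.priority:>2}  {s.name}{flag}")
```

Sorting by the score gives the priority order for implementing controls, while the cost flag highlights scenarios where a more affordable protection should be sought.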

Step 2 – Explain the purpose

For each scenario, we recommend providing a high-level category to help to group similar scenarios together. Some categories that may be used include the following:

- System Health: This is a scenario focused on ensuring the operational health of a system or service required to keep the business running.

- Compliance: This is a scenario driven by compliance requirements specific to your business, industry, or geographical region.

- Vulnerability: This is a known system or process vulnerability that needs mitigation.

- Threat: This is any scenario that articulates a potential threat, but may not have a specific vulnerability associated.

- Breach: These are scenarios that explore the impact of a successful breach.

Step 3 – The kill-chain stage

The Kill Chain is a well-known construct that originated in the military and was later developed into a framework by Lockheed Martin (for more details, see https://en.wikipedia.org/wiki/Kill_chain). Other frameworks are available, or you can develop your own.

Use the following list as headers to articulate the potential ways resources can become compromised in each scenario and at each stage of the kill chain:

- Reconnaissance

- Weaponization

- Delivery

- Exploitation

- Installation

- Command and control

- Actions on objectives

Step 4 – Which solution will do detection?

Review the information from earlier in this chapter to map which component of your security solutions architecture will be able to detect the threats for each scenario:

- SIEM

- CASB

- DLP

- IAM

- EDR

- CWPP
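One way to record the outcome of Steps 3 and 4 is a per-scenario coverage map from kill-chain stage to the solutions expected to detect activity at that stage. The sketch below is illustrative only: the `coverage_gaps` helper and the sample phishing mapping are assumptions, not a statement of what each product category actually detects in your environment.

```python
from typing import Dict, List, Set

KILL_CHAIN = [
    "Reconnaissance", "Weaponization", "Delivery", "Exploitation",
    "Installation", "Command and control", "Actions on objectives",
]
SOLUTIONS = {"SIEM", "CASB", "DLP", "IAM", "EDR", "CWPP"}


def coverage_gaps(detections: Dict[str, Set[str]]) -> List[str]:
    """Return the kill-chain stages that no listed solution detects."""
    for stage, tools in detections.items():
        if stage not in KILL_CHAIN:
            raise ValueError(f"unknown kill-chain stage: {stage}")
        unknown = tools - SOLUTIONS
        if unknown:
            raise ValueError(f"unknown solution(s): {sorted(unknown)}")
    # A stage with no entry, or an empty set, counts as a gap.
    return [stage for stage in KILL_CHAIN if not detections.get(stage)]


# Hypothetical mapping for a single phishing scenario.
phishing = {
    "Delivery": {"SIEM", "CASB"},
    "Exploitation": {"EDR"},
    "Installation": {"EDR"},
    "Command and control": {"SIEM"},
    "Actions on objectives": {"DLP", "SIEM"},
}
print(coverage_gaps(phishing))  # ['Reconnaissance', 'Weaponization']
```

Stages returned as gaps are candidates for discussion in the exercise: either accept that detection is not practical at that stage, or identify a data source or solution that could cover it.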

Step 5 – What actions will occur instantly?

As we aim to maximize the automation of detection and response, consider what actions should be carried out immediately, then focus on enabling the automation of these actions.

Actions may include the following:

- Logging and alerting.

- Notify/warn the end user.

- Block the action.

- Offer alternative options/actions.

- Trigger workflow.

Step 6 – Severity and output

At this step, you should be able to assign a number to associate with the severity level, based on the impact analysis in the previous steps. For each severity level, define the appropriate output required:

- Level 0 – Logs and reports

- Level 1 – Dashboard notifications

- Level 2 – Generate event in ticketing system

- Level 3 – Alerts sent to groups/individuals

- Level 4 – Automatic escalation to the senior management team (sirens and flashing lights are optional!)
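The severity levels above can be expressed as a small lookup so that automation and documentation share one definition. This is a sketch under the assumption that each level maps to exactly one output; the names `Severity` and `output_for` are hypothetical, and your organization may prefer cumulative outputs or a different number of levels.

```python
from enum import IntEnum


class Severity(IntEnum):
    LEVEL_0 = 0
    LEVEL_1 = 1
    LEVEL_2 = 2
    LEVEL_3 = 3
    LEVEL_4 = 4


OUTPUT = {
    Severity.LEVEL_0: "Logs and reports",
    Severity.LEVEL_1: "Dashboard notifications",
    Severity.LEVEL_2: "Generate event in ticketing system",
    Severity.LEVEL_3: "Alerts sent to groups/individuals",
    Severity.LEVEL_4: "Automatic escalation to the senior management team",
}


def output_for(level: int) -> str:
    """Look up the required output; an undefined level raises ValueError."""
    return OUTPUT[Severity(level)]


print(output_for(2))  # Generate event in ticketing system
```

Rejecting undefined levels, rather than silently defaulting, helps catch scenarios that were scored outside the agreed scale.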

Step 7 – What action should the analyst take?

Whereas Step 5 – What actions will occur instantly? covered automated actions, this step defines what the security analysts should do. For each scenario, define what actions should be taken to ensure an appropriate response, remediation, and recovery.

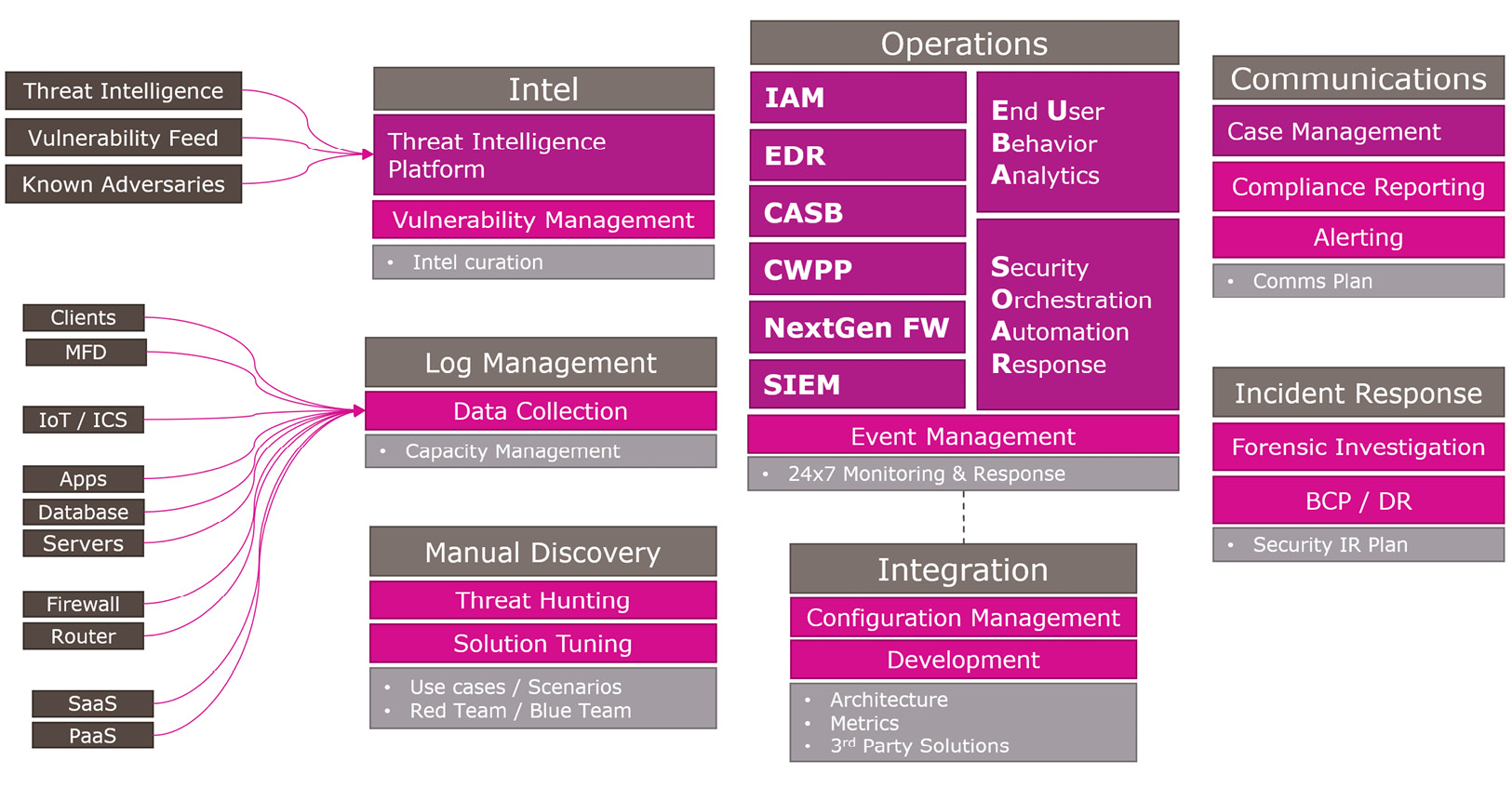

The following diagram is a simple reference chart that can be used during the scenario-mapping exercise:

Figure 1.4 – Scenario-mapping process

By following this seven-step process, your team can better prepare for any eventuality. By following a repeatable process, and improving that process each time, your team can share knowledge with each other, and carry out testing to ensure protections and detections are efficient and effective as well as to identify new gaps in solutions that must be prioritized.

You should commit to taking time away from the computer and start to develop this type of table-top exercise on a regular basis. Some organizations only do this once per year, while others do it on a weekly basis or as needed, based on the demands they see in their own systems and company culture.

Summary

In this chapter, we introduced Azure Sentinel and how it fits into the cloud security landscape. We explored some of the widely used acronyms for both problems and solutions, then provided a useful method of mapping these technical controls to the wide array of options available from many security platform providers today. We also looked at the future state of SOC architecture to ensure you can gain visibility and control across your entire infrastructure: physical, virtual, and cloud-hosted.

Finally, we looked at the potential cost of running Azure Sentinel as a core component of your security architecture and how to carry out the scenario-mapping exercise to ensure you are constantly reviewing the detections, the usefulness of the data, and your ability to detect and respond to current threats.

In the next chapter, we will take the first steps toward deploying Azure Sentinel by configuring an Azure Monitor workspace. Azure Monitor is the bedrock of Azure Sentinel for storing and searching log data. By understanding this data collection and analysis engine, you will gain a deeper understanding of the potential benefits of deploying Azure Sentinel in your environment.

Questions

- What is the purpose of the cyber security reference framework?

- What are the three main components when deploying a SOC based on Azure Sentinel?

- What are some of the main operations platforms that integrate with a SIEM?

- Can you name five of the third-party (non-Microsoft) solutions that can be connected to Azure Sentinel?

- How many steps are in the scenario-mapping exercise?

Further reading

You can refer to the following URLs for more information on topics covered in this chapter:

- Lessons learned from the Microsoft SOC – Part 1: Organization, at https://www.microsoft.com/security/blog/2019/02/21/lessons-learned-from-the-microsoft-soc-part-1-organization/

- Lessons learned from the Microsoft SOC – Part 2a: Organizing People, at https://www.microsoft.com/security/blog/2019/04/23/lessons-learned-microsoft-soc-part-2-organizing-people/

- Lessons learned from the Microsoft SOC – Part 2b: Career paths and readiness, at https://www.microsoft.com/security/blog/2019/06/06/lessons-learned-from-the-microsoft-soc-part-2b-career-paths-and-readiness/

- The Microsoft Security Blog, at https://www.microsoft.com/security/blog/