CHAPTER 5: SERVICE TRANSITION METRICS

Service transition provides guidance on effectively managing the transition of designed services into a delivered operational state. Transition management ensures a disciplined, repeatable approach for implementing new and changed services to minimize quality/technical, schedule and cost risk exposure. It helps ensure new and changed services are delivered with the complete functionality prescribed by the service strategy requirements and built into the service design package.

Service transition processes and metrics are used throughout the service lifecycle as part of sustaining service delivery to meet business needs. The metrics in this chapter are linked to the Critical Success Factors (CSFs) and KPIs established for service transition processes. The metrics sections for this chapter are:

- Transition planning and support metrics

- Change management metrics

- Service asset and configuration management metrics

- Release and deployment management metrics

- Service validation and testing metrics

- Change evaluation metrics

- Knowledge management metrics.

Transition planning and support metrics

The metrics in this section support the transition planning and support process. These metrics are in line with the KPIs identified for this process. The metrics presented in this section are:

- Percentage of releases that meet customer requirements

- Percentage of service transitions from RFCs

- Percentage of new or changed services implemented.

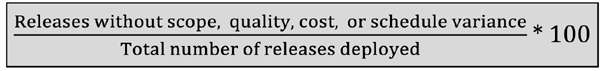

Metric name

Percentage of releases that meet customer requirements

Metric category

Transition planning and support

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, service level manager, service desk, configuration manager

Description

This is a measure of the number of implemented releases that meet the customer’s agreed requirements in terms of scope (functionality), quality, cost and release schedule – as a percentage of all releases.

Measurement description

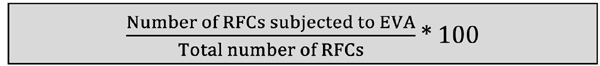

Formula:

Metric name

Percentage of service transitions from RFCs

Metric category

Transition planning and support

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, service level manager, configuration manager

Description

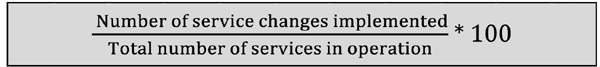

This is a measure for assessing the volume of service changes implemented as a result of requests for change (RFCs). It is expressed in terms of the ratio (percentage) of service changes implemented to the total number of services in operation.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Quarterly |
| Acceptable quality level: | N/A |
| Range: | N/A |

Set a baseline percentage of service transitions based on RFCs, then measure and perform trend analysis over time. The expectation for this metric is a sustained or upward trend from the baseline.

Note

This metric relates only to those new or changed services that have been created as a result of RFC submissions. RFCs may also be generated and approved as part of the release and deployment management (RDM) process for transitioning new or changed services into operations.

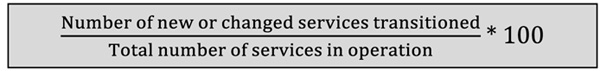

Metric name

Percentage of new or changed services implemented

Metric category

Transition planning and support

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, capacity manager, configuration manager

Description

This is a measure for assessing the volume of service transition efforts, as a percentage of the combined total of all operational services. The metric provides an indicator of workloads and service transition process activity levels.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Quarterly |
| Acceptable quality level: | N/A |
| Range: | N/A |

Set a baseline percentage of new or changed services implemented in the period, then measure and perform trend analysis over time. The expectation for this metric is a sustained or upward trend from the baseline.
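The baseline-and-trend check described for this metric can be sketched in a few lines of Python. This is a minimal illustration rather than a prescribed implementation: the function names, the monthly figures and the use of a least-squares slope as the trend indicator are all assumptions made for the example.

```python
def percentage(part, total):
    """Return part as a percentage of total."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * part / total


def trend_slope(values):
    """Least-squares slope of equally spaced values (change per period)."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two measurements")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((i - mean_x) * (v - mean_y) for i, v in enumerate(values))
    den = sum((i - mean_x) ** 2 for i in range(n))
    return num / den


# Hypothetical monthly counts:
# (new or changed services implemented, services in operation)
monthly_counts = [(4, 80), (5, 80), (6, 80)]
monthly_pct = [percentage(done, total) for done, total in monthly_counts]

baseline = monthly_pct[0]          # first measurement taken as the baseline
slope = trend_slope(monthly_pct)   # a slope >= 0 is read as sustained or upward
on_track = slope >= 0 and monthly_pct[-1] >= baseline
```

A negative slope, or a latest value below the baseline, would be the cue to look at what is holding back transition activity.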

Change management metrics

Change management metrics are of significant value as indicators of process maturity and potential improvement opportunities. The metric calculations provided in this section directly correlate to the KPIs identified for this process. The metrics presented in this section are:

- Percentage of emergency changes

- Percentage of standard changes

- Percentage of successful changes

- Percentage of changes that cause incidents

- Percentage of rejected changes

- Change backlog

- Percentage of backed out changes (remediation plan invoked)

- Unauthorized changes

- Percentage of failed changes

- Percentage of changes completed with no errors/issues.

Metric name

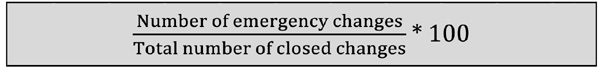

Percentage of emergency changes

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, other ITSM functions

Description

This metric represents the percentage of emergency changes in the environment (production, UAT, pilot, etc.). It should trend downward; a high or rising value can indicate issues in the infrastructure or software, or abuse of the process. Emergency changes can have a direct correlation to incidents and, therefore, must be defined properly to minimize confusion between the two.

After the initial implementation of change management, there is a tendency to see a high percentage of emergency changes in the environment. This can be caused by unfamiliarity with the process and change types or the potential of abuse as changes are pushed through without regard for the normal process and timeline (getting around the process).

Measurement description

This measurement should be gathered within a defined timeframe (e.g. monthly or quarterly). It is recommended that during the early stages of the change management process this metric be reviewed on a weekly basis to prevent abuse of change categorization.

Formula:

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 5% |
| Range: | > 5% unacceptable (improvement opportunity); = 5% acceptable (maintain/improve); < 5% exceeds (process maturity level 4-5 potential) |
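As a rough illustration of how a measured value maps onto the range above, the following Python sketch computes the percentage and rates it against the AQL. It assumes the percentage is emergency changes divided by all changes in the period; the function names and the counts are hypothetical.

```python
import math


def classify_against_aql(value, aql, lower_is_better=True):
    """Place a measured percentage in the unacceptable/acceptable/exceeds range."""
    if math.isclose(value, aql):
        return "acceptable"
    better = value < aql if lower_is_better else value > aql
    return "exceeds" if better else "unacceptable"


def emergency_change_pct(emergency_changes, total_changes):
    """Emergency changes as a percentage of all changes in the period (assumed formula)."""
    if total_changes <= 0:
        raise ValueError("total_changes must be positive")
    return 100.0 * emergency_changes / total_changes


# Hypothetical weekly counts
pct = emergency_change_pct(emergency_changes=6, total_changes=100)
rating = classify_against_aql(pct, aql=5.0)  # lower is better for this metric
```

The `lower_is_better` flag lets the same helper serve upward trending metrics, where a value above the AQL is the one that exceeds.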

Metric name

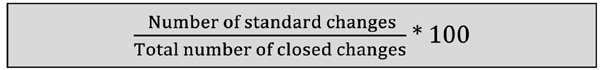

Percentage of standard changes

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, other ITSM functions

Description

This metric represents the percentage of standard (pre-approved) changes in the environment (production, UAT, pilot, etc.). This is an upward trending metric that, if used properly, makes simple changes more efficient within the process. We recommend setting a rather aggressive goal for this metric and looking to move a majority of changes to the standard change type, as many changes are low-risk operational changes.

This type of change should grow as the change management process matures. As change management rolls out, standard changes are usually not prevalent in the environment. Low risk, highly successful changes will begin to emerge which should then be taken through a procedure to qualify as a documented standard change.

Measurement description

Formula:

This measurement should be gathered within a defined timeframe (e.g. monthly or quarterly). It is recommended that during the early stages of the change management process this metric be reviewed on a monthly basis.

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | Set an aggressive level such as 60%, realizing this is a medium-term goal |
| Range: | < 60% unacceptable (improvement opportunity); = 60% acceptable (maintain/improve); > 60% exceeds (review to consider resetting goal) |

Metric name

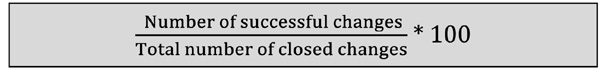

Percentage of successful changes

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, other ITSM functions, IT management

Description

This metric represents the percentage of successful changes in the environment (production, UAT, pilot, etc.). While all organizations would prefer to have this metric at 100%, the reality is that things happen (e.g. hardware failure, software incompatibility, human error) which prevent success for all changes. That reality highlights the fact that due diligence must be taken for all changes in order to establish high levels of success.

It is important to note that change management process maturity evolves over time through lessons learned. It is expected that this metric will show an upward trend in successful changes as an indicator of the acceptance and maturity of change management in the environment.

Measurement description

Formula:

This measurement should be gathered within a defined timeframe (e.g. monthly or quarterly). When combined with a downward trending incident number, this will demonstrate improved levels of service for the entire organization.

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 98% |
| Range: | < 98% unacceptable (improvement opportunity); = 98% acceptable (maintain/improve) |

Metric name

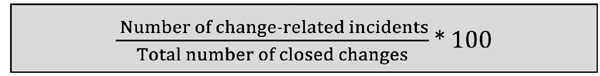

Percentage of changes that cause incidents

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, operations manager

Description

This metric represents the percentage of changes in the environment (production, UAT, pilot, etc.) which have directly caused an incident to be created. This must be a downward trending metric exhibiting improvement in all aspects of change and the execution of the change management process.

The relationship between changes and incidents can be created and tracked through many IT service management tools found in the marketplace. This relationship should be established early in the development and deployment of the tool.

Measurement description

Formula:

This metric should be gathered in pre-defined timeframes (e.g. weekly or monthly).

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 3% |
| Range: | > 3% unacceptable (improvement opportunity); = 3% acceptable (maintain/improve); < 3% exceeds (process maturity level 4-5 potential) |
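Where the ITSM tool records the relationship between incidents and changes, the calculation reduces to counting the changes that have at least one linked incident. The Python sketch below assumes a hypothetical `caused_by_change` link field and made-up record identifiers; real tools will expose this relationship under their own names.

```python
def pct_changes_causing_incidents(change_ids, incidents):
    """Percentage of changes in the period linked to at least one incident."""
    changes = set(change_ids)
    if not changes:
        raise ValueError("no changes in the period")
    # Count each change once, however many incidents it caused.
    culprits = {
        inc["caused_by_change"]
        for inc in incidents
        if inc.get("caused_by_change") in changes
    }
    return 100.0 * len(culprits) / len(changes)


# Hypothetical records for one reporting week
changes_this_week = ["CHG-101", "CHG-102", "CHG-103", "CHG-104"]
incidents = [
    {"id": "INC-1", "caused_by_change": "CHG-101"},
    {"id": "INC-2", "caused_by_change": "CHG-101"},
    {"id": "INC-3", "caused_by_change": None},       # no change involved
    {"id": "INC-4", "caused_by_change": "CHG-090"},  # change from an earlier period
]
pct = pct_changes_causing_incidents(changes_this_week, incidents)
```

Counting distinct changes rather than incidents keeps a single troublesome change from inflating the percentage.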

Metric name

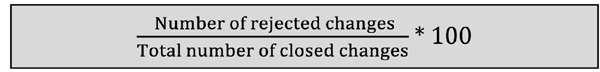

Percentage of rejected changes

Metric category

Change management

Suggested metric owner

Change manager, Change Advisory Board (CAB)

Typical stakeholders

Change submitter

Description

This metric represents the percentage of changes rejected during process execution. As changes are reviewed within the change management process, either the change manager or the CAB will determine if the change should be rejected. Examining the reasons behind rejections can reveal the level of awareness and understanding of the change management process.

There are multiple reasons for rejecting a change, most commonly due to missing information within the change record or scheduling conflicts.

Measurement description

Formula:

This measurement should be gathered in pre-defined timeframes (e.g. weekly or monthly).

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 2% |
| Range: | > 2% unacceptable (improvement or training opportunity); = 2% acceptable (maintain/improve); < 2% exceeds (process maturity level 4-5 potential) |

Metric name

Change backlog

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, operations manager, service level management, business customers

Description

This metric represents the number of changes in the change backlog. A backlog can be created by a number of issues or reasons, including:

- Pending – changes requiring additional information, resources or authorization

- Postponed – changes put on hold due to business or scheduling conflicts

- Rejected – rejected via assessment and review

- Waiting for approval – changes in the process and waiting for final approval.

The change backlog can be managed within an ITSM tool and should be reviewed during the CAB meeting. The backlog should be reported by time (e.g. days or weeks in the backlog).

Measurement description

Formula: N/A

It is simply reported as the number of changes backlogged and the length of time in the backlog queue.

This measurement should be gathered within a defined timeframe (e.g. monthly or quarterly). A high number of backlogged changes can be a sign of issues within the change management process or with management decision making.

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | Maximum backlog queue determined by IT management |
| Range: | N/A |
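A backlog report of the kind described above, giving the number of backlogged changes and the time each has spent in the queue, might be produced along these lines. The record layout, status strings and dates are hypothetical; the four backlog statuses mirror the reasons listed in the description.

```python
from datetime import date

BACKLOG_STATUSES = {"pending", "postponed", "rejected", "waiting for approval"}


def backlog_report(changes, as_of):
    """Return (change id, status, days in backlog) for backlogged changes, oldest first."""
    rows = [
        (c["id"], c["status"], (as_of - c["entered_backlog"]).days)
        for c in changes
        if c["status"] in BACKLOG_STATUSES
    ]
    return sorted(rows, key=lambda row: row[2], reverse=True)


# Hypothetical change records
changes = [
    {"id": "CHG-201", "status": "pending", "entered_backlog": date(2024, 3, 1)},
    {"id": "CHG-202", "status": "implemented", "entered_backlog": date(2024, 3, 5)},
    {"id": "CHG-203", "status": "postponed", "entered_backlog": date(2024, 2, 12)},
]
report = backlog_report(changes, as_of=date(2024, 3, 15))
backlog_size = len(report)  # compare with the maximum queue set by IT management
```

Sorting oldest first puts the changes most in need of a decision at the top of the list for the CAB review.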

Metric name

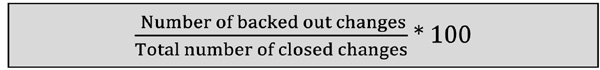

Percentage of backed out changes (remediation plan invoked)

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Change submitter, service desk, IT management, service level management, customers

Description

This metric represents the percentage of changes that were implemented or partially implemented but needed to be backed out due to issues with the change. This metric can demonstrate value of remediation plans showing timely decision making and execution within the given change window. No change should be approved without a pre-defined remediation plan included.

To properly create the time for a change window, change management will consider the time required to implement the change and the time required to implement a back out. This ensures the decision to back out can be made within the change window thus minimizing the impact and/or outage to the service and business.

Measurement description

Formula:

This measurement should be gathered in pre-defined timeframes (e.g. weekly or monthly).

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 5% |
| Range: | > 5% unacceptable (improvement opportunity); = 5% acceptable (maintain/improve); < 5% exceeds (process maturity level 4-5 potential) |

Metric name

Unauthorized changes

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

Service desk, IT management, service level management, customers

Description

This metric is the number of unauthorized changes detected in the environment. Any unauthorized change represents a serious breach of process protocol and must not be tolerated. Detecting unauthorized changes can be difficult, depending on the tools or resources available to research these activities. Means of detection include:

- Tools – tools that can monitor and report changes within the environment

- CMDB audits – compare differences in the CMDB to change records

- Server log audits – research activities recorded in log files and compare against change records.

A large number of issues can result from unauthorized changes which can create severe delays in incident and problem management. This increases the downtime of services and jeopardizes the overall reliability and quality of the service.

Measurement description

Formula: N/A

This number should be gathered within a defined timeframe (e.g. weekly or monthly).

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 0 |
| Range: | N/A |
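The audit-based means of detection described above amount to comparing what actually changed against what the change records authorized. A minimal Python sketch of that comparison follows; the data layout, CI names and change identifiers are hypothetical, and a real audit would also need to match on dates and change windows.

```python
def find_unauthorized(detected_changes, change_records):
    """Return detected CI modifications that no change record accounts for."""
    authorized = {(rec["ci"], rec["attribute"]) for rec in change_records}
    return sorted(set(detected_changes) - authorized)


# Hypothetical (CI, changed attribute) pairs from a CMDB audit or monitoring tool
detected = [
    ("srv-web-01", "os_version"),
    ("srv-db-02", "memory_gb"),
    ("fw-edge-01", "ruleset"),
]
# Hypothetical authorized change records
records = [
    {"change": "CHG-301", "ci": "srv-web-01", "attribute": "os_version"},
    {"change": "CHG-302", "ci": "fw-edge-01", "attribute": "ruleset"},
]
unauthorized = find_unauthorized(detected, records)
unauthorized_count = len(unauthorized)  # the reported metric; the AQL is 0
```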

Metric name

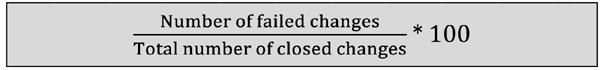

Percentage of failed changes

Metric category

Change management

Suggested metric owner

Typical stakeholders

Change submitter, service desk, IT management

Description

This metric represents the percentage of failed changes in the environment (production, UAT, pilot, etc.). It should trend downward; a high or rising value can indicate issues in change planning, change activities or the infrastructure, or conflicts between changes. Failed changes must be clearly defined within the change management process, as they can include complete failures, back outs, partial failures or change conflicts/collisions.

Measurement description

Formula:

This measurement should be gathered within a defined timeframe (e.g. monthly or quarterly).

| Frequency | |
| --- | --- |
| Measured: | Weekly |
| Reported: | Monthly |
| Acceptable quality level: | 5% |
| Range: | > 5% unacceptable (improvement opportunity); = 5% acceptable (maintain/improve); < 5% exceeds (process maturity level 4-5 potential) |

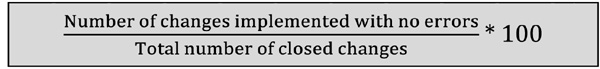

Metric name

Percentage of changes completed with no errors/issues

Metric category

Change management

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, change manager, configuration manager, service level manager, service desk

Description

This metric represents the percentage of changes that have been implemented successfully the first time as planned, without executing a back out or incurring any errors from verification testing.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 95% |
| Range: | < 95% unacceptable (service asset management possibly out of control); = 95% acceptable (maintain/improve) |

Note

This AQL allows incurring errors/issues with up to five per cent of the implemented changes due to conditions in the implementation environment and other risk factors.

Service asset and configuration management metrics

Configuration management metrics are of significant value as indicators of process maturity and potential improvement opportunities. The metrics in this section reflect the CSFs and KPIs identified for Service Asset and Configuration Management (SACM). It is important to note that these are not intended as the definitive set of possible SACM process metrics. As for all processes, the metrics applied must support decisions based on alignment to the business. The metrics presented in this section are:

- Percentage of audited configuration items (CIs)

- Percentage of CIs mapped to IT services in the CMDB

- Average age of IT hardware assets

- Percentage of CIs with maintenance contracts

- Percentage of recorded changes to the CMDB

- Percentage of accurately registered CIs in CMDB.

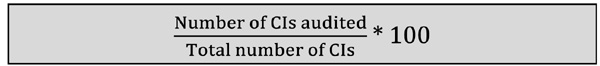

Metric name

Percentage of audited CIs

Metric category

Service asset and configuration management

Suggested metric owner

Configuration manager

Typical stakeholders

Configuration manager, service desk, IT operations manager

Description

This measurement monitors the number of deployed CIs with an audit date in a given time period relative to the total number of all deployed CIs. Regular audits of the CMDB are important as a quality assurance activity to ensure the CI information is accurate and up to date.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Quarterly |
| Reported: | Quarterly |
| Acceptable quality level: | 95% |
| Range: | < 95% unacceptable (improvement opportunity); = 95% acceptable (maintain/improve); > 95% exceeds (process maturity level 4 or 5 potential) |
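Going by the description above, the measurement counts deployed CIs whose audit date falls within the period and divides by all deployed CIs. The Python sketch below illustrates this under assumed field names (`deployed`, `last_audit`) and a made-up CMDB extract.

```python
from datetime import date


def pct_audited(cis, period_start, period_end):
    """Deployed CIs audited within the period, as a percentage of all deployed CIs."""
    deployed = [ci for ci in cis if ci["deployed"]]
    if not deployed:
        raise ValueError("no deployed CIs")
    audited = [
        ci for ci in deployed
        if ci["last_audit"] is not None
        and period_start <= ci["last_audit"] <= period_end
    ]
    return 100.0 * len(audited) / len(deployed)


# Hypothetical CMDB extract for a first-quarter report
cis = [
    {"id": "CI-1", "deployed": True, "last_audit": date(2024, 2, 10)},
    {"id": "CI-2", "deployed": True, "last_audit": date(2023, 11, 3)},
    {"id": "CI-3", "deployed": True, "last_audit": None},
    {"id": "CI-4", "deployed": True, "last_audit": date(2024, 3, 28)},
    {"id": "CI-5", "deployed": False, "last_audit": date(2024, 1, 15)},
]
pct = pct_audited(cis, date(2024, 1, 1), date(2024, 3, 31))
```

CIs that are not deployed are left out of both the numerator and the denominator, in line with the description.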

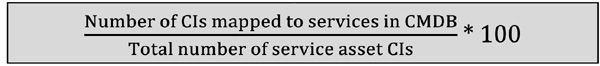

Metric name

Percentage of CIs mapped to IT services in the CMDB

Metric category

Service asset and configuration management

Suggested metric owner

Configuration manager

Typical stakeholders

Configuration manager, IT operations manager, other ITSM functions

Description

This measurement shows the magnitude of CIs mapped to IT services in the CMDB relative to all CIs comprising the delivered IT services.

This metric is useful as an indicator of the magnitude of CI investments that are related to supporting organizational operations and other functions not directly related to delivering services.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Quarterly |
| Reported: | Quarterly |
| Acceptable quality level: | 95% |
| Range: | < 95% unacceptable (improvement opportunity); = 95% acceptable (maintain/improve); > 95% exceeds (process maturity level 4 or 5 potential) |

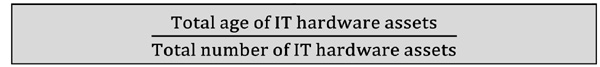

Metric name

Average age of IT hardware assets

Metric category

Service asset and configuration management

Suggested metric owner

Asset manager

Typical stakeholders

Configuration manager, IT operations manager, other ITSM functions

Description

The average age of hardware assets documented and managed as CIs provides a high-level indicator of IT hardware obsolescence with the related issues of maintenance costs to sustain availability and reliability targets. The results of this measurement are a valuable input for IT asset investment decision making as well as for assessing service risks.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Quarterly |
| Reported: | Quarterly |
| Acceptable quality level: | 5 years |
| Range: | > 5 years unacceptable (potential risks to operational integrity); = 5 years or less acceptable (sustainable IT investments) |
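The average age can be derived from the acquisition dates held against hardware CIs. The following Python sketch shows one way to do so; the function name, the dates and the choice of 365.25 days per year are assumptions for illustration.

```python
from datetime import date

DAYS_PER_YEAR = 365.25  # averages out leap years


def average_age_years(acquisition_dates, as_of):
    """Mean age in years of assets acquired on the given dates."""
    if not acquisition_dates:
        raise ValueError("no hardware assets")
    ages = [(as_of - acquired).days / DAYS_PER_YEAR for acquired in acquisition_dates]
    return sum(ages) / len(ages)


# Hypothetical acquisition dates for two hardware CIs
acquired = [date(2019, 1, 1), date(2021, 1, 1)]
avg_age = average_age_years(acquired, as_of=date(2023, 1, 1))
within_aql = avg_age <= 5.0  # 5-year AQL stated for this metric
```

An average can hide a handful of very old assets, so it may be worth reporting the oldest items alongside it.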

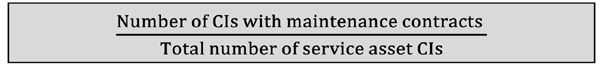

Metric name

Percentage of CIs with maintenance contracts

Metric category

Service asset and configuration management

Suggested metric owner

Configuration manager

Typical stakeholders

Configuration manager, service desk, IT operations manager, service level manager, customers

Description

This measurement monitors the number of deployed CIs that are within their warranty service period or are related to a valid maintenance contract, relative to the total number of deployed CIs.

This is an indicator of CI maintenance support sources and the associated considerations for establishing SLAs. This metric also provides an indicator of internal maintenance staffing workloads as well as a profile of reliability risk transference.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 99% |
| Range: | < 99% unacceptable (service asset management possibly out of control); = 99% acceptable (maintain/improve); > 99% exceeds (indicates service assets are well managed) |

Note

This AQL allows for up to one per cent of all deployed service assets to be without an underpinning maintenance support agreement. As an example, maintenance support may not be required for CIs associated with a service that is being retired.

Metric name

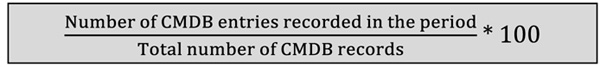

Percentage of recorded changes to the CMDB

Metric category

Service asset and configuration management

Suggested metric owner

Configuration manager

Typical stakeholders

IT operations manager, change manager, configuration manager, service level manager, service desk

Description

This metric indicates the magnitude of changes to the CMDB records in a specific reporting period. The measurement provides high-level insight to the magnitude of change activities, and supports trend analyses associated with service delivery workloads and patterns of business activity.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | N/A |
| Range: | N/A |

Set a baseline for the percentage of recorded CMDB entries, then measure and perform trend analysis over time. The expectation for this metric is a sustained or upward trend from the baseline as the organization continues to grow and service offerings evolve.

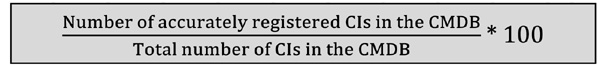

Metric name

Percentage of accurately registered CIs in CMDB

Metric category

Service asset and configuration management

Suggested metric owner

Configuration manager

Typical stakeholders

IT operations manager, change manager, configuration manager, service level manager, service desk

Description

This metric shows the percentage of CIs that have been registered accurately in the CMDB. Depending on the level of confidence in the accuracy of CMDB entries, a KPI can be determined by checking a sample set of CIs.

Although many CIs are recorded using discovery and configuration tools, there are still several other CI types, such as documentation and services that require manual recording and can be prone to errors.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 95% |
| Range: | < 95% unacceptable (service asset management possibly out of control); = 95% acceptable (maintain/improve); > 95% exceeds (indicates service assets are well managed) |

Note

This AQL allows up to 5% inaccurate service asset CI record entries to account for CMDB update lag time (i.e. time between change approval and CMDB entry) and data entry errors.
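The sample-based check mentioned in the description could look something like the Python sketch below. The `is_accurate` callback stands in for whatever verification is actually performed (for example, comparing a CMDB record with the physical item); it, the CI identifiers and the sample size are all hypothetical.

```python
import random


def sampled_accuracy_pct(ci_ids, is_accurate, sample_size, seed=None):
    """Estimate CMDB accuracy by verifying a random sample of CI records."""
    if not 0 < sample_size <= len(ci_ids):
        raise ValueError("sample_size must be between 1 and the number of CIs")
    sample = random.Random(seed).sample(list(ci_ids), sample_size)
    accurate = sum(1 for ci_id in sample if is_accurate(ci_id))
    return 100.0 * accurate / sample_size


# Hypothetical CMDB of 20 CIs, one of which is known to be recorded incorrectly
known_bad = {"CI-07"}
ci_ids = [f"CI-{n:02d}" for n in range(1, 21)]
estimate = sampled_accuracy_pct(
    ci_ids, lambda ci_id: ci_id not in known_bad, sample_size=10, seed=1
)
```

A small sample gives only a coarse estimate, so the sample size should grow as confidence in the CMDB falls.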

Release and deployment management metrics

Metrics applied to release and deployment management (RDM) provide the necessary insight into process performance. The metrics in this section are linked to KPIs identified for activities undertaken in this process. The metrics presented in this section are:

- Percentage of releases deployed without prior testing

- Percentage of releases without approved fallback plans

- Number of RFCs raised during Early Life Support (ELS) for a new or changed service

- Percentage of releases deployed with known errors

- Number of scheduled new or changed service releases pending.

Metric name

Percentage of releases deployed without prior testing

Metric category

Release and deployment management

Suggested metric owner

Deployment manager

Typical stakeholders

IT operations manager, information security manager, service desk, configuration manager

Description

This is a measure for assessing the effectiveness of release/release package planning and implementation management. This metric also provides an indicator of deployment risk exposure (i.e. lower percentage = lower risk).

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | All releases should be tested |
| Range: | < 99% of the time unacceptable (improvement opportunity); = 99% of the time acceptable (maintain/improve); > 99% of the time exceeds (process maturity level 4 or 5 potential) |

Note

Meeting the AQL target 99% of the time allows for emergency changes as one per cent of the deployed releases.

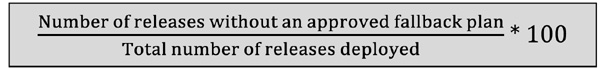

Metric name

Percentage of releases without approved fallback plans

Metric category

Release and deployment management

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, information security manager, service desk, configuration manager

Description

This is a measure for assessing the effectiveness of release/release package planning and implementation management. This metric also provides an indicator of service continuity risk exposure (i.e. lower percentage = lower risk).

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 0% |
| Range: | > 0% of the time unacceptable (improvement opportunity); = 0% of the time acceptable (maintain/improve) |

Note

Meeting the AQL target of 0% of releases deployed without an approved fallback plan minimizes the release and deployment management cost, schedule and quality risk exposure.

Metric name

Number of RFCs raised during Early Life Support (ELS) for a new or changed service

Metric category

Release and Deployment Management (RDM)

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, information security manager, service desk, configuration manager

Description

This is a measure for assessing the effectiveness of release evaluation and validation testing. It can also be an indicator of deployed release/release package maturity. This metric is a simple tally of the number of RFCs for a new or changed service release within the defined post-deployment early life support period (e.g. two months) after implementation acceptance.

Measurement description

Formula: N/A

Change management tools offer an abundance of fields to collect change source information. It is recommended that the CMDB records include a field (e.g. a flag or comments) to reflect changes made during ELS. This measurement can be reported during the post-deployment review.

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 0 |
| Range: | N/A |

Note

This measurement can provide inputs for trend analysis to evaluate the overall effectiveness of RDM efforts, including the quality of release/release package design and validation testing. It can also be useful as an indicator of potential RDM CSI opportunities.
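The tally itself is straightforward once each RFC carries the service it relates to and the date it was raised. The Python sketch below counts RFCs raised within the ELS window after implementation acceptance; the record fields, dates and the 60-day window (approximating the two-month example in the description) are assumptions.

```python
from datetime import date, timedelta


def rfcs_during_els(rfcs, service, accepted_on, els_days=60):
    """Count RFCs raised against a service within its early life support window."""
    els_end = accepted_on + timedelta(days=els_days)
    return sum(
        1 for rfc in rfcs
        if rfc["service"] == service and accepted_on <= rfc["raised_on"] <= els_end
    )


# Hypothetical RFC log
rfcs = [
    {"id": "RFC-1", "service": "payroll", "raised_on": date(2024, 1, 20)},
    {"id": "RFC-2", "service": "payroll", "raised_on": date(2024, 4, 2)},   # after ELS
    {"id": "RFC-3", "service": "email", "raised_on": date(2024, 1, 25)},    # other service
]
count = rfcs_during_els(rfcs, "payroll", accepted_on=date(2024, 1, 10))
```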

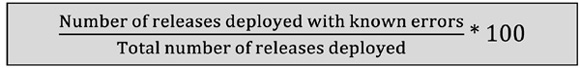

Metric name

Percentage of releases deployed with known errors

Metric category

Release and deployment management

Suggested metric owner

Deployment manager

Typical stakeholders

IT operations manager, problem manager, service level manager, service desk, configuration manager

Description

This is a metric for monitoring the magnitude of unresolved problems/issues associated with the release of new or changed services. The measurement can also provide an indicator of release/release package quality, post-deployment problem management workloads and change management priorities. There are a number of issues that can cause these known errors, for instance:

- Unrealistic business expectations and requirements

- Design errors

- Vendor products

- Improper/inadequate testing

- Human error.

Measurement description

Formula:

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 0% |
| Range: | < 95% unacceptable (improvement opportunity); = 95% acceptable (maintain/improve); > 95% exceeds (process maturity level 4 or 5 potential) |

Note

The 95% AQL target allows for known errors associated with such actions as emergency change releases and/or source controlled CIs as five per cent of the releases.

Metric name

Number of scheduled new or changed service releases pending

Metric category

Release and deployment management

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, information security manager, service desk, configuration manager

Description

This is a measure of the queue of approved releases/release packages. It can also provide an indicator of release and deployment management workloads and change management priorities in the ensuing period(s).

Measurement description

Formula: N/A

Simply the number of approved releases/release packages pending deployment in the change schedule.

| Frequency | |
| --- | --- |
| Measured: | Monthly |
| Reported: | Monthly |
| Acceptable quality level: | 0 |
| Range: | N/A |

Service validation and testing metrics

Quality Assurance (QA) is the basis for Service Validation and Testing (SVT) efforts. The SVT process activities provide the mechanisms for assuring that released services (new or changed) meet performance objectives to deliver the intended utility (fitness for purpose) and warranty (fitness for use). The metrics presented in this section are:

- Percentage of risks with quantified risk assessment

- Percentage of risk register entries with a ‘high’ risk score

- Expected Monetary Value (EMV) of identified service risks

- Number of variances from service acceptance criteria

- Service test schedule variance

- Percentage of reusable test procedures

- Percentage of errors detected from pre-deployment testing

- Percentage of errors detected from testing in early life support.

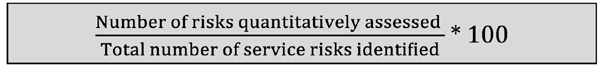

Metric name

Percentage of risks with quantified risk assessment

Metric category

Service validation and testing

Suggested metric owner

Risk manager

Typical stakeholders

IT operations manager, service level manager, service desk, configuration manager

Description

This is a measure of the service risks that have been assessed with the quantified probability of risk event occurrence and the probable impact severity identified in the risk register. Service risks are assessed to determine criteria for validation and testing as part of minimizing service risk exposure. The metric is applied for evaluating performance of the risk management function.

Measurement description

Formula:
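A minimal sketch of the calculation, inferred from the metric name and description; the symbols are illustrative and not taken from the text:

$$
\text{Risks with quantified assessment (\%)} = \frac{N_{\text{quantified}}}{N_{\text{register}}} \times 100
$$

Here $N_{\text{quantified}}$ is the number of risk register entries that carry both a quantified probability of occurrence and an impact severity, and $N_{\text{register}}$ is the total number of service risks in the register. If the organization instead tracks the share of entries still lacking a quantified assessment, the numerator becomes $N_{\text{register}} - N_{\text{quantified}}$.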

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0%

Range:

- > 0%: unacceptable (service risks possibly out of control)

- = 0% of requirements: acceptable (risk management function being properly performed)

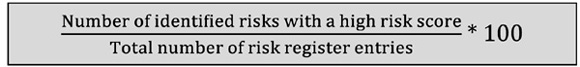

Metric name

Percentage of risk register entries with a ‘high’ risk score

Metric category

Service validation and testing

Suggested metric owner

Typical stakeholders

IT operations manager, service level manager, service desk, configuration manager

Description

This is an overall measure of risk management effectiveness to minimize risk exposure as part of service design, transition and operation. It provides an overall service risk profile and can be applied for validating the service strategy as well as for identifying service improvement opportunities.

Measurement description

Formula:
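One plausible form of the calculation, assuming the register assigns each entry a risk score band and that 'high' is one of those bands (the symbols are illustrative):

$$
\text{High-risk entries (\%)} = \frac{N_{\text{high}}}{N_{\text{register}}} \times 100
$$

where $N_{\text{high}}$ is the number of risk register entries scored 'high' and $N_{\text{register}}$ is the total number of entries in the risk register.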

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 10%

Range:

- > 10%: unacceptable (service risks possibly out of control)

- = 10%: acceptable (maintain/improve)

- < 10%: exceeds (indicates well-managed service risks)

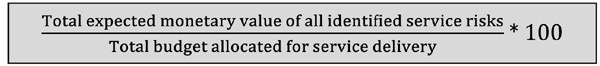

Metric name

EMV of identified service risks

Metric category

Service validation and testing

Suggested metric owner

Risk manager

Typical stakeholders

IT operations manager, service level manager, configuration manager

Description

This is a measure of the amount of Management Reserve (MR) that should be allocated for ‘unknowns’ in the delivery of a service. MR is the budget amount reserved for responding to risk events (i.e. unknowns). MR is derived from the sum of estimated financial cost impacts from risk events identified in the risk register. The MR is the total estimated financial cost impact (EMV) that could be incurred if all identified service risk events actually occur.

This metric is useful for determining the value proposition for SVT activities. It is expressed as a percentage of the total service budget. The valuation diminishes as the service lifecycle progresses through early life support (ELS) and as service operations risk exposure declines over time.

Measurement description

Formula:
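The sketch below illustrates one way to compute the figure. It assumes the conventional definition of EMV as the sum of probability-weighted cost impacts; if the risk register records only cost impacts, setting every probability to 1.0 reproduces the 'all events occur' total described above. The `Risk` class, the function names and the sample figures are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Risk:
    name: str
    probability: float  # likelihood of occurrence, 0.0 to 1.0 (assumed field)
    cost_impact: float  # estimated financial impact if the event occurs


def emv_total(risks):
    """Sum of probability-weighted cost impacts across the risk register."""
    return sum(r.probability * r.cost_impact for r in risks)


def emv_percent_of_budget(risks, service_budget):
    """EMV expressed as a percentage of the total service budget."""
    if service_budget <= 0:
        raise ValueError("service_budget must be positive")
    return 100.0 * emv_total(risks) / service_budget


# Illustrative register entries, not real data
register = [
    Risk("Data migration failure", 0.10, 200_000),
    Risk("Supplier delay", 0.25, 80_000),
]
emv = emv_total(register)
pct = emv_percent_of_budget(register, 1_000_000)
```

With these sample entries the EMV is about 40,000, or roughly 4% of a 1,000,000 budget, which would fall within the 5% acceptable quality level.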

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: 5%

Range:

- > 5%: unacceptable (service risks possibly out of control)

- = 5%: acceptable (maintain/improve)

- < 5%: exceeds (indicates well-planned and implemented services)

Metric name

Number of variances from service acceptance criteria

Metric category

Service validation and testing

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, service level manager, service desk, configuration manager

Description

This is a measure of the tested performance differences (variances) of new or changed services as compared to the approved service acceptance criteria. Variances may be identified by such evidence as test errors, configuration audit results and unresolved known errors. Understanding these variances provides the opportunity to accept or reject each variance before continuing to the next activity; subsequent activities depend on that decision.

Measurement description

Formula: N/A

It is simply reported as the number of variances from the customer-agreed acceptance criteria.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0

Range: N/A

Note

Variances from the acceptance criteria include, but are not limited to, unfulfilled technical/functional performance, cost, schedule, and/or quality parameters. The metric should include any variance(s) for which a formal deviation or waiver has been approved.

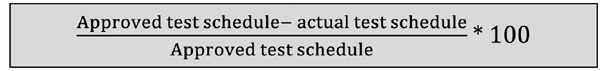

Metric name

Service test schedule variance

Metric category

Service validation and testing

Suggested metric owner

Typical stakeholders

IT operations manager, information security manager, service level manager, service desk, configuration manager

Description

This metric provides an understanding of the relationship between the approved (planned) and incurred (actual) schedule for service testing. Calculated in either labor hours or calendar days, planned versus actual test schedule performance is measured for any test activities during the development, release and deployment, and early life support of new and changed services.

Measurement description

Formula:
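A form consistent with the sign convention in the note for this metric, assuming the variance is the simple difference between planned and actual test duration (the symbols are illustrative):

$$
\text{Test schedule variance} = T_{\text{planned}} - T_{\text{actual}}
$$

where $T_{\text{planned}}$ is the approved test schedule and $T_{\text{actual}}$ is the incurred test schedule, both expressed in the same unit (labor hours or calendar days). For example, a test activity planned for 10 days that takes 12 days gives a variance of $-2$ days.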

Note

A positive value is favorable (ahead of schedule).

A negative value is unfavorable (behind schedule).

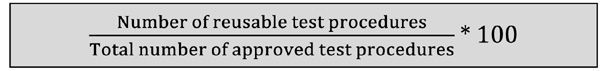

Metric name

Percentage of reusable test procedures

Metric category

Service validation and testing

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, information security manager, service level manager, configuration manager

Description

This measurement identifies the quantity of reusable test procedures and associated test scripts (or use cases) as a ratio compared to all approved test procedures in a testing database or repository. Reusable test procedures can be identified in the repository (i.e. flagged) as having been used for the testing of more than one new or changed service release. These procedures should be treated as CIs; the testing repository should therefore be part of the CMS.

This metric provides indications of testing program effectiveness, service warranty and the alignment of services to the business.

Measurement description

Formula:
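A sketch of the ratio described above, with illustrative symbols:

$$
\text{Reusable test procedures (\%)} = \frac{N_{\text{reusable}}}{N_{\text{approved}}} \times 100
$$

where $N_{\text{reusable}}$ is the number of test procedures flagged in the repository as used for more than one new or changed service release, and $N_{\text{approved}}$ is the number of all approved test procedures in the repository.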

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: N/A

Range: N/A

Set a baseline percentage of reusable test procedures in the CMDB; measure and perform trend analysis over time. The expectation for this metric would be a sustained or upward trend from the baseline.

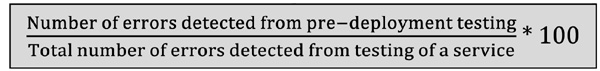

Metric name

Percentage of errors detected from pre-deployment testing

Metric category

Service validation and testing

Suggested metric owner

Release manager

Typical stakeholders

IT operations manager, service desk, configuration manager

Description

This measurement identifies the errors detected from testing prior to deployment, as compared to total errors detected from testing the service in all stages. It is useful as an indicator of pre-deployment testing effectiveness and provides insight into potential SVT improvement opportunities. Comprehensive testing and detection of most/all service errors prior to deployment is a significant part of minimizing service deployment costs and schedules.

Measurement description

Formula:
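The comparison described above can be sketched as a share of errors by detection stage. The stage labels, the function name and the sample counts are hypothetical; the sketch assumes each detected error is recorded with the stage in which testing found it.

```python
from collections import Counter


def stage_share(error_stages, stage):
    """Percentage of detected errors whose detection stage equals `stage`."""
    if not error_stages:
        raise ValueError("no errors recorded")
    counts = Counter(error_stages)
    return 100.0 * counts[stage] / len(error_stages)


# Illustrative data: 19 errors found before deployment, 1 during early life support
errors = ["pre_deployment"] * 19 + ["early_life_support"]
pre_deployment_pct = stage_share(errors, "pre_deployment")
```

With this sample, 19 of 20 errors were found before deployment, so the result is 95%, which matches the acceptable quality level for this metric.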

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 95%

Range:

- < 95%: unacceptable (improvement opportunity)

- = 95%: acceptable (maintain/improve)

- > 95%: exceeds (process maturity level 4 or 5 potential)

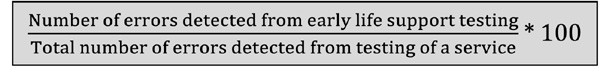

Metric name

Percentage of errors detected from testing in early life support

Metric category

Service validation and testing

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, customer, service desk, configuration manager

Description

This measurement identifies the errors detected from testing during ELS, as compared to total errors detected from testing the service in all stages. It is useful as an indicator of initial service quality and provides insight into potential SVT improvement opportunities. Service errors incurred during ELS can increase service deployment costs and acceptance schedules.

Errors detected during ELS provide customers/users with indicators of potential service performance issues and can negatively influence customer satisfaction. Accordingly, the SVT process emphasizes pre-deployment detection and resolution of errors.

Measurement description

Formula:
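A sketch of the ratio described above, with illustrative symbols:

$$
\text{Errors detected in ELS (\%)} = \frac{E_{\text{ELS}}}{E_{\text{all stages}}} \times 100
$$

where $E_{\text{ELS}}$ is the number of errors detected from testing during early life support and $E_{\text{all stages}}$ is the total number of errors detected from testing the service in all stages.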

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 5%

Range:

- > 5%: unacceptable (improvement opportunity)

- = 5%: acceptable (maintain/improve)

- < 5%: exceeds (process maturity level 4 or 5 potential)

Change evaluation metrics

The purpose of Change Evaluation (EVA) is to provide a consistent and standardized means for predictive assessment of service change impacts on the business. This is a decision support process invoked by the Change Advisory Board (CAB) to determine the actual performance of a service change as compared to its predicted performance. The metrics provided in this section are:

- Percentage of RFCs evaluated

- Number of scheduled change evaluations pending

- Number of evaluated changes with variances from service acceptance criteria

- Percentage of RFCs rejected after EVA

- Customer satisfaction

- Quality defects identified from EVA.

Metric name

Percentage of RFCs evaluated

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, service level manager, configuration manager

Description

This is a measure of the number of requested changes for which a detailed EVA was performed — as a percentage of all RFCs. The metric is valuable as an indicator of the overall complexity of RFCs (evaluations are typically invoked for more complex changes).

It can also be useful as an input to assessing service risk exposure from RFCs. In general, the probability of unforeseen negative impacts from implementing changes falls as the percentage of RFCs evaluated rises. Accordingly, this metric also provides a high-level input for determining the EVA process value proposition.

Measurement description

Formula:
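A sketch of the ratio described above, with illustrative symbols:

$$
\text{RFCs evaluated (\%)} = \frac{N_{\text{evaluated}}}{N_{\text{RFC}}} \times 100
$$

where $N_{\text{evaluated}}$ is the number of RFCs for which a detailed EVA was performed in the period and $N_{\text{RFC}}$ is the number of all RFCs in the same period.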

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A (baseline and trend)

Range: N/A

Set EVA percentage baseline; measure and perform trend analysis over time. The expectation for this metric would be an upward trend from the baseline.

Metric name

Number of scheduled EVAs pending

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, service desk, configuration manager

Description

This is a measure of the RFCs queue for new and changed services pending EVA. It provides an indicator of the change management workload and priorities in the ensuing period(s).

Measurement description

Formula: N/A

Simply report the number of RFCs pending EVA in the forward schedule of changes.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0

Range: N/A

Metric name

Number of evaluated changes with variances from service acceptance criteria

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, service level manager, service desk, configuration manager

Description

This is a measurement of the performance differences (variances) of evaluated changed services for which EVAs were conducted as compared to the approved service acceptance criteria. Variances may be identified by such evidence as test errors, configuration audit results and unresolved known errors.

This metric is closely linked to the ‘Number of variances from service acceptance criteria’ metric in Service validation and testing. The distinctive attribute of this metric is that it considers changed services that were subjected to EVA.

Measurement description

Formula: N/A

It is simply reported as the number of changes implemented after EVA for which there were variances from the customer-agreed acceptance criteria.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0

Range: N/A

Note

Variances from the acceptance criteria include, but are not limited to, unfulfilled technical/functional performance, cost, schedule, and/or quality parameters. The metric should include any variance(s) for which a formal deviation or waiver has been approved.

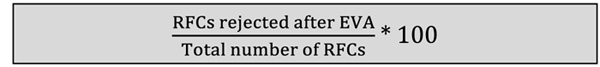

Metric name

Percentage of RFCs rejected after EVA

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, configuration manager

Description

This is a measure for assessing the volume of RFC rejection based on EVA, as a percentage of the combined total of all RFCs for new and changed services. An important task of EVA is to provide recommendations to the change manager concerning the disposition of the change.

The metric provides analytical insight into the value of the EVA process in its contribution to change management decision making.

Measurement description

Formula:
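A sketch of the ratio described above, with illustrative symbols:

$$
\text{RFCs rejected after EVA (\%)} = \frac{N_{\text{rejected after EVA}}}{N_{\text{RFC}}} \times 100
$$

where $N_{\text{rejected after EVA}}$ is the number of RFCs rejected on the basis of an EVA recommendation and $N_{\text{RFC}}$ is the combined total of all RFCs for new and changed services in the same period.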

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: N/A

Range: N/A

Set baseline percentage of disapproved RFCs; measure and perform trend analysis over time. Expectation for this metric would be a downward trend from the baseline.

Metric name

Customer satisfaction

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, service level manager

Description

This is a measure of customer satisfaction with service changes, based on responses to customer satisfaction surveys, on a five-point scale. Measuring customer satisfaction with delivered services is an ongoing activity that gives service providers vital feedback in the form of a performance score.

Measurement description

Formula: N/A, see range

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 3

Range:

- 1 = Not satisfied

- 2 = Slightly dissatisfied

- 3 = Generally satisfied/not dissatisfied

- 4 = Very satisfied

- 5 = Exceeds expectations

Metric name

Quality defects identified from EVA

Metric category

Change evaluation

Suggested metric owner

Change manager

Typical stakeholders

IT operations manager, information security manager, configuration manager

Description

This is a measure of the number of quality defects identified through the EVA process. Following service validation and testing, EVA will review and report the test results to change management. This report should include recommendations for improving the change and any other inputs to expedite decisions within change management.

This metric provides a Quality Control (QC) figure of merit for the EVA process.

Measurement description

Formula: N/A

It is simply the number of qualitative defects identified in evaluated change releases/release packages based on established workmanship standards and customer-agreed service acceptance criteria.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0

Range: N/A

Set baseline number of defects per work product; measure and perform trend analysis over time. Expectation for this metric would be a downward trend from the baseline.

Knowledge management metrics

In simplest terms, knowledge management is the translation of tacit knowledge to explicit knowledge. The Knowledge Management (KNM) process is applied to provide a shared knowledge base of service-related data and information available to stakeholders. An SKMS provides the central, controlled access repository of service information. The metrics in this section are:

- Percentage of formally assessed ITSM processes

- Percentage of contracts with remaining terms of 12 months or less

- Percentage growth of the SKMS

- Number of searches of the SKMS

- Percentage growth of SKMS utilization

- Number of SKMS audits (within a defined period).

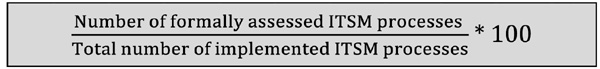

Metric name

Percentage of formally assessed ITSM processes

Metric category

Knowledge management

Suggested metric owner

Service manager

Typical stakeholders

IT operations manager, service level manager, customers

Description

A formal ITSM process assessment provides a benchmark of the assessed process maturity level, by each process attribute, as well as identifying opportunities for process and service improvements.

This assessment can be performed by an internal team, which may introduce a bias toward the process or be limited by the knowledge of the assessment team. Assessments can also be performed by an external team offering industry experience and knowledge for a well-rounded assessment; however, this will increase costs.

Measurement description

Formula:
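A sketch of the calculation implied by the metric name, with illustrative symbols:

$$
\text{Formally assessed ITSM processes (\%)} = \frac{N_{\text{assessed}}}{N_{\text{processes}}} \times 100
$$

where $N_{\text{assessed}}$ is the number of ITSM processes that have undergone a formal assessment in the reporting period and $N_{\text{processes}}$ is the total number of ITSM processes in scope.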

Frequency

Measured: Quarterly

Reported: Annually

Acceptable quality level: N/A

Range: N/A

Set the baseline percentage of formally assessed ITSM processes; measure and perform trend analysis over time. The expectation for this metric would be a sustained or upward trend from the baseline.

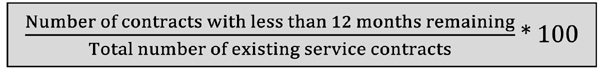

Metric name

Percentage of service contracts with remaining terms of 12 months or less

Metric category

Knowledge management/supplier management

Suggested metric owner

Contract manager

Typical stakeholders

IT operations manager, service level manager, customer relationship manager, business relationship manager

Description

Monitoring and reporting on service contracts that have 12 months or less remaining on their terms enables timely review, renegotiation or termination of those contracts. This metric provides insight into the rate of service contract turnover and helps in business forecasting.

The 12-month time horizon can be adjusted to match patterns of business activity. A key element of this metric is to provide the service provider adequate time to prepare for service contract renewals, consider service retirements, revise associated supplier contracts and/or operational level agreements (OLAs), and assess the change schedule.

Measurement description

Formula:
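The sketch below shows one way to compute the percentage from contract end dates, with the time horizon left adjustable as the description suggests. It assumes that only contracts still active on the measurement date are counted in the denominator; the function names and sample dates are hypothetical.

```python
import calendar
from datetime import date


def add_months(d, months):
    """Return the date `months` months after `d`, clamping to month end."""
    total = d.year * 12 + (d.month - 1) + months
    year, month0 = divmod(total, 12)
    month = month0 + 1
    day = min(d.day, calendar.monthrange(year, month)[1])
    return date(year, month, day)


def pct_contracts_expiring(end_dates, as_of, horizon_months=12):
    """Percentage of active contracts ending within the horizon."""
    active = [e for e in end_dates if e >= as_of]
    if not active:
        raise ValueError("no active contracts")
    cutoff = add_months(as_of, horizon_months)
    expiring = sum(1 for e in active if e <= cutoff)
    return 100.0 * expiring / len(active)


# Illustrative contract end dates, not real data
contract_ends = [
    date(2024, 6, 30),
    date(2025, 1, 31),
    date(2025, 2, 1),
    date(2026, 12, 31),
]
expiring_pct = pct_contracts_expiring(contract_ends, as_of=date(2024, 1, 31))
```

In this sample, two of the four active contracts end on or before the 12-month cutoff, giving 50%. Passing a different `horizon_months` value adapts the measure to other patterns of business activity.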

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set baseline for the percentage of contracts with remaining terms of 12 months or less; measure and perform trend analysis over time. Expectation for this metric would be a sustained trend from the baseline depending on the level of contract turnover and sustainment.

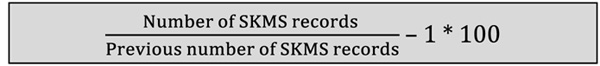

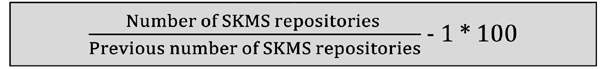

Metric name

Percentage growth of the SKMS

Metric category

Knowledge management

Suggested metric owner

Knowledge manager

Typical stakeholders

Process managers, management, service desk, suppliers

Description

As additional information is collected and stored within controlled repositories, these repositories can be part of the SKMS or can be added to the SKMS based on the value provided to the organization. In either case they add to the collective knowledge of the organization and should be managed by KNM.

SKMS growth can be measured in more than one way. The first is based on record counts, while the second measures the number of repositories within the SKMS.

Measurement description

Formula: The first is the record count metric.

The second is measuring repository growth.

This metric should be monitored and collected regularly to observe the SKMS growth from both the record and repository perspective. Both metrics combined provide a clear indication of overall SKMS growth.
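Both growth measures can be sketched with the same percentage-change calculation, applied once to record counts and once to repository counts. This assumes growth is measured relative to the previous period's count; the function name and sample figures are hypothetical.

```python
def pct_growth(previous, current):
    """Percentage change from the previous period's count to the current one."""
    if previous <= 0:
        raise ValueError("previous count must be positive")
    return 100.0 * (current - previous) / previous


# Illustrative period-over-period counts, not real data
record_growth = pct_growth(previous=12_000, current=13_200)
repository_growth = pct_growth(previous=8, current=10)
```

With these sample counts, records grew by 10% and repositories by 25%. Reporting the two figures side by side shows whether growth comes from existing repositories filling up or from new repositories being brought into the SKMS.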

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: Baseline the initial metric and trend over time.

Range: N/A

Metric name

Number of searches of the SKMS

Metric category

Knowledge management

Suggested metric owner

Knowledge manager

Typical stakeholders

Process managers, management, service desk, suppliers

Description

The SKMS exists to provide organizational knowledge to all stakeholders. Therefore, searches of the SKMS must be monitored to confirm not only its use by the organization but also the value it provides in support of customers and services. The SKMS should be the first place everyone goes to for information.

This metric, which is expected to trend upward, monitors the number of searches within the SKMS. As individuals learn to use the SKMS and come to see it as a trusted source of information and knowledge, their utilization will increase.

Measurement description

Formula: N/A

This metric can be collected from the tools used to manage the SKMS repositories. The level of monitoring may increase as more individuals require the information found within the SKMS. As demand grows, monitoring, collection and reporting may increase as well.

Frequency

Measured: Weekly/monthly

Reported: Monthly/quarterly

Acceptable quality level: N/A

Range: N/A

Metric name

Percentage growth of SKMS utilization

Metric category

Knowledge management

Suggested metric owner

Knowledge manager

Typical stakeholders

Process managers, management, service desk, suppliers

Description

As the SKMS matures and increases its value throughout the organization, the utilization should increase dramatically. SKMS utilization can include:

- Searches

- Adding new files (documentation)

- Updating information

- File reads.

This metric monitors the overall utilization within a defined period of time. The SKMS will also be populated by several tools; their activity may be excluded from this metric because they add and update many records daily.

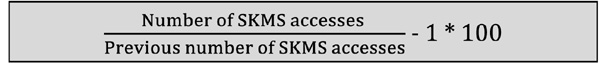

Measurement description

Formula:

As seen above, several activities can be included in the utilization. Therefore, prior to collecting this metric be sure to define the type of access that will be counted as utilization. The KNM tools and monitors will then help collect these metric data.
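The sketch below illustrates the two steps described here: first fix which access types count as utilization, then compare the counted total against the previous period. The action labels, the `tool_sync` label for automated tool activity, and the sample figures are hypothetical.

```python
# Access types counted as utilization (an assumed definition; agree this
# list before collecting the metric)
COUNTED_ACTIONS = frozenset({"search", "add", "update", "read"})


def utilization(events, counted=COUNTED_ACTIONS):
    """Number of logged actions whose type is counted as utilization."""
    return sum(1 for action in events if action in counted)


def utilization_growth_pct(previous_events, current_events, counted=COUNTED_ACTIONS):
    """Percentage growth in counted utilization between two periods."""
    prev = utilization(previous_events, counted)
    if prev == 0:
        raise ValueError("previous period has no counted utilization")
    cur = utilization(current_events, counted)
    return 100.0 * (cur - prev) / prev


# Illustrative logs; automated tool activity is present but not counted
last_week = ["search"] * 40 + ["read"] * 10 + ["tool_sync"] * 500
this_week = ["search"] * 45 + ["read"] * 12 + ["add"] * 3 + ["tool_sync"] * 600
growth = utilization_growth_pct(last_week, this_week)
```

In this sample, counted utilization rises from 50 to 60 actions, a growth of 20%, while the automated entries have no effect on the result.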

Frequency

Measured: Weekly

Reported: Monthly

Acceptable quality level: Baseline the initial metric and monitor trending patterns.

Range: Growth ranges can be set after a pattern of utilization is identified.

Metric name

Number of SKMS audits (within a defined period)

Metric category

Knowledge management

Suggested metric owner

Knowledge manager

Typical stakeholders

Process managers, management, service desk, suppliers

Description

The success of the SKMS relies on the organization’s trust in the information and knowledge provided within the repositories. Therefore, regular audits of the SKMS must occur to ensure the highest level of quality of the information. The audit will include:

- Policy, process and procedures documentation

- File and records audit

- SKMS maintenance (change records)

- Utilization and performance reports

- Incidents and problems reported.

Audits should be performed by KNM for an overall service provider review. The organization should plan both internal and external audits from a business standpoint to maintain an unbiased perspective of the SKMS.

Measurement description

Formula: N/A

This metric should count all formal audits performed on the SKMS and the Knowledge Management process. It should distinguish between the types of audits performed and by whom.

Frequency

Measured: Quarterly

Reported: Annually

Acceptable quality level: N/A

Range: N/A