CHAPTER 4: SERVICE DESIGN METRICS

Metrics for service design processes provide the capability to evaluate designs of new or changed services for transition into an operational state. These metrics are used to objectively assess the effectiveness of new or changed service designs to deliver the intended service capabilities. The metrics sections for this chapter are:

- Design co-ordination metrics

- Service catalog metrics

- Service level management metrics

- Availability management metrics

- Capacity management metrics

- IT service continuity management metrics

- Information security management metrics

- Supplier management metrics.

Design co-ordination metrics

Service design activities must be properly co-ordinated to ensure the service design goals and objectives are met. The Design co-ordination process encompasses all activities for the centralized planning, co-ordination and control of service design activities and resources across design projects and the service design phase of the service lifecycle.

The extent of design co-ordination effort varies with the complexity of the new or changed service designs. The formality of the process structure prescribed by the organization (i.e. governance) also affects the level of effort needed to perform service design co-ordination activities. The metrics presented in this section are:

- Number of Service Design Packages (SDPs) rejected

- Number of designs rejected

- Percentage of designs associated with projects

- Number of architectural exceptions

- Percentage of designs without approved requirements

- Percentage of designs with reusable assets

- Percentage of staff with skills to manage designs

Metric name

Number of Service Design Packages (SDPs) rejected

Metric category

Design co-ordination

Suggested metric owner

Engineering or service management

Typical stakeholders

Technical staff, engineering, service transition, applications

Description

An SDP is a collection of documents created throughout the service design phase of the service management lifecycle. It contains all the documentation necessary for the transition (build, test, deploy) of the service or process into the production environment including:

- Requirements (customer, technical, operational)

- Design diagrams

- Financial documentation (business case, budgets)

- Testing documentation

- Service acceptance criteria

- Plans (transition, operational).

Because the SDP is the basis for transition, the transition team must have the authority to reject an SDP and must provide justification for any rejection.

This metric will assist in tracking the rejected SDPs to provide valuable information for improvements within the service design phase. Over time this metric can help improve future designs and improve the services and processes that are used in the production environment.

Measurement description

Formula: N/A

This metric, a simple tally (count) used for comparison over time, should be monitored and collected regularly to ensure progress is made in designing services and processes. This will also demonstrate a more proactive approach to managing the environment.
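The baseline-and-trend approach described above can be sketched in Python. This assumes monthly tallies of rejected SDPs are available in calendar order, starting at the first month of a quarter; the function names and sample counts are hypothetical.

```python
def quarterly_totals(monthly_counts):
    """Sum monthly tallies into quarterly totals (three months per quarter).

    Assumes the list starts at the first month of a quarter; a trailing
    partial quarter is ignored.
    """
    full = len(monthly_counts) - len(monthly_counts) % 3
    return [sum(monthly_counts[i:i + 3]) for i in range(0, full, 3)]


def trend_vs_baseline(monthly_counts):
    """Compare each quarter with the first quarter, used as the baseline.

    Returns (quarter_number, total, change_from_baseline) tuples; a negative
    change means fewer SDPs were rejected than in the baseline quarter.
    """
    quarters = quarterly_totals(monthly_counts)
    if not quarters:
        return []
    baseline = quarters[0]
    return [(q + 1, total, total - baseline) for q, total in enumerate(quarters)]


# Hypothetical monthly counts of rejected SDPs over two quarters
rejected = [4, 3, 5, 3, 2, 2]
print(trend_vs_baseline(rejected))  # [(1, 12, 0), (2, 7, -5)]
```

The same helper applies unchanged to the number of designs rejected, which uses the same baseline and reduction goal.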

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a quarterly baseline and reduce the number of SDPs rejected over time |
| Range | Dependent on the established baseline |

Metric name

Number of designs rejected

Metric category

Design co-ordination

Suggested metric owner

Engineering management

Typical stakeholders

Technical staff, engineering, service transition, applications, architecture

Description

Designs are created in the service design phase of the service management lifecycle. They are based on requirements and organizational standards so that the services and processes that reach production are supportable. A design can be rejected for documented reasons such as:

- Missing customer requirements

- Missing operational requirements

- Not following architectural standards

- Improper or missing documentation.

This metric provides insight into the service design phase. As designs are created, they are reviewed to ensure compliance with criteria such as:

- Policies

- Architectures

- Business needs

- Regulatory requirements.

This metric can assist in improving the level of collaboration and communication with the various design teams.

Measurement description

Formula: N/A

This metric should be monitored and tracked (by both project management and service management) regularly to ensure all aspects and criteria of the design fulfill the requirements of the customer and the business. This will also demonstrate a more proactive approach to managing the environment.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a quarterly baseline and reduce the number of designs rejected over time |
| Range | Dependent on the established baseline |

Metric name

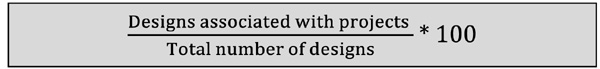

Percentage of designs associated with projects

Metric category

Design co-ordination

Suggested metric owner

Engineering or project management

Typical stakeholders

Project management office, engineering, change management

Description

This metric demonstrates the link between designs and project management. Using project management throughout the lifecycle greatly increases the likelihood of success, as project managers provide management skills that are typically not inherent within many IT organizations.

As the number of designs associated with projects increases, staffing and resource planning for the service or process improves, benefiting both the timeline and the budget.

Measurement description

Formula:

Using this metric in combination with the rejected SDPs and rejected designs metrics may demonstrate the benefits of using project management. It can also be used with change management metrics to ensure change compliance and process integration.
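The formula is not reproduced in this text; a straightforward reading is designs associated with a project divided by total designs, × 100. The Python sketch below assumes each design record carries an optional project identifier; the `Design` record, field names and sample data are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Design:
    name: str
    project_id: Optional[str]  # None when the design is not tied to a project


def pct_designs_with_projects(designs):
    """Percentage of designs that are associated with a project."""
    if not designs:
        return 0.0
    linked = sum(1 for d in designs if d.project_id is not None)
    return 100.0 * linked / len(designs)


designs = [
    Design("Email migration", "PRJ-101"),
    Design("VPN refresh", None),
    Design("HR portal", "PRJ-107"),
    Design("Backup policy", None),
]
print(pct_designs_with_projects(designs))  # 50.0
```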

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a quarterly baseline |
| Range | Create the acceptable quality range from the baseline |

Metric name

Number of architectural exceptions

Metric category

Design co-ordination

Suggested metric owner

Engineering or architectural management

Typical stakeholders

Engineering, architecture, management

Description

Architectural standards create consistency throughout the entire organization. These standards improve not only the designs but also:

- Testing

- Deployment

- Maintenance

- Service delivery

- Financial management.

Therefore, exceptions to any architectural standard should be monitored and managed to the lowest possible number.

This metric increases awareness of the exceptions, and it should be reported directly to management to ensure that each exception is fully approved and authorized.

Measurement description

Formula: N/A

Combined with metrics from change, incident and problem management, this metric provides a clear indication of potential issues with exceptions, including:

- Lack of staff knowledge

- Increased costs

- Changes to maintenance routines

- Testing

- Operational processes and procedures.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | < 1% |
| Range | > 1% unacceptable; < 1% acceptable; 0% exceeds |
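The AQL for this metric is stated as a percentage even though the metric itself is a count, so a denominator has to be assumed. The Python sketch below assumes exceptions are expressed as a percentage of designs reviewed in the period and classifies the result against the range given above. Both function names are hypothetical, and treating exactly 1% as unacceptable is an assumption, since the range does not place it.

```python
def exception_rate(exceptions, designs_reviewed):
    """Exceptions as a percentage of designs reviewed (assumed denominator)."""
    if designs_reviewed <= 0:
        raise ValueError("designs_reviewed must be positive")
    return 100.0 * exceptions / designs_reviewed


def classify_exception_rate(percent):
    """Map a percentage onto the range: 0% exceeds, below 1% acceptable,
    otherwise unacceptable (exactly 1% is treated as unacceptable here)."""
    if percent < 0:
        raise ValueError("percentage cannot be negative")
    if percent == 0:
        return "exceeds"
    if percent < 1:
        return "acceptable"
    return "unacceptable"


# Hypothetical month: 1 exception across 250 reviewed designs
print(classify_exception_rate(exception_rate(1, 250)))  # acceptable
print(classify_exception_rate(exception_rate(0, 250)))  # exceeds
```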

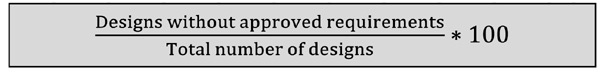

Metric name

Percentage of designs without approved requirements

Metric category

Design co-ordination

Suggested metric owner

Engineering management

Typical stakeholders

Customers, engineering, management

Description

This metric can be used by management and the design team to ensure the appropriate requirements have been collected and approved prior to moving forward with the design. Requirements drive the design of services and processes, and must be agreed upon by the relevant parties to understand what is to be delivered.

There are several different requirement types for any design. Because of dependencies, these requirements are collected at multiple stages within the design phase. Requirement types can include:

- Business

- Customer

- User

- Regulatory

- Operational

- Technical.

All requirements necessary for a good design should be included within the project plan to ensure they are properly approved.

Measurement description

Formula:

Collection of data for this metric may be a manual effort depending on tool availability. This metric can be tracked within a project management tool or a repository for designs.
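As a sketch of how this could be computed from an export of a design repository, the Python below assumes each design maps its requirement types to an approval flag. A design counts as lacking approved requirements if nothing is recorded or if any recorded requirement is unapproved; that rule, the function name and the sample data are assumptions.

```python
def pct_without_approved_requirements(designs):
    """designs maps design name -> {requirement type: approved (bool)}.

    A design counts as lacking approved requirements if it has no
    requirements recorded or if any recorded requirement is unapproved.
    """
    if not designs:
        return 0.0
    lacking = sum(
        1 for reqs in designs.values()
        if not reqs or not all(reqs.values())
    )
    return 100.0 * lacking / len(designs)


designs = {
    "Email migration": {"business": True, "customer": True, "technical": True},
    "VPN refresh": {"business": True, "operational": False},
    "HR portal": {},
    "Backup policy": {"regulatory": True, "operational": True},
}
print(pct_without_approved_requirements(designs))  # 50.0
```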

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | < 1% |
| Range | > 1% unacceptable; < 1% acceptable; 0% exceeds (this should ultimately be the AQL) |

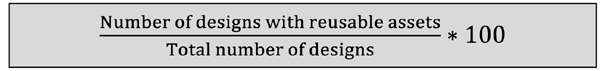

Metric name

Percentage of designs with reusable assets

Metric category

Design co-ordination

Suggested metric owner

Engineering management or asset management

Typical stakeholders

Technical staff, engineering, management

Description

This metric provides an understanding of the level of efficiency for asset utilization. Reusable assets enhance the design effort by offering a known commodity to the design which will:

- Decrease design time

- Reduce costs

- Reduce test time

- Increase consistency.

Reusable assets may include:

- Hardware and software modules

- Documents

- Data elements

- Designs.

This metric gives management an indication of the maturity of the design phase. As the number of reusable assets increases, other metrics (e.g. incident management, problem management) should show a gradual improvement.

Measurement description

Formula:

This metric should be continually monitored and reported to stakeholders. Integration with other metrics will help demonstrate the cause and effect of reusable assets within other processes.
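A minimal Python sketch, assuming each design lists the component identifiers it uses and that reusable assets are registered in a library of identifiers. A design counts if it uses at least one registered asset; the identifiers and function name are hypothetical.

```python
def pct_designs_with_reusable_assets(design_components, asset_library):
    """design_components maps design -> set of component IDs it uses;
    asset_library is the set of IDs registered as reusable assets."""
    if not design_components:
        return 0.0
    reusing = sum(1 for comps in design_components.values() if comps & asset_library)
    return 100.0 * reusing / len(design_components)


library = {"TPL-LB-01", "DOC-RUNBOOK-STD", "DATA-CUST-ID"}
designs = {
    "HR portal": {"TPL-LB-01", "APP-HR-NEW"},
    "VPN refresh": {"VPN-HW-NEW"},
    "Email migration": {"DOC-RUNBOOK-STD", "DATA-CUST-ID"},
}
print(round(pct_designs_with_reusable_assets(designs, library), 1))  # 66.7
```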

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a quarterly baseline and increase designs with reusable assets over time |
| Range | Dependent on the established baseline |

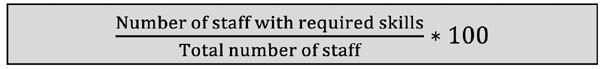

Metric name

Percentage of staff with skills to manage designs

Metric category

Design co-ordination

Suggested metric owner

Engineering management

Typical stakeholders

Technical staff, engineering, management

Description

The data for this metric can be gathered from a human resources department, training records or a skills inventory/matrix. The metric provides an understanding of the skills available within the organization to build and manage aspects of a design.

This metric is vital when considering technologies or planning for training (e.g. budget, time, resources). It can be used to enhance decision making prior to the launch of the design and could impact the attributes of the design components.

Measurement description

Formula:

A skills matrix may be the best tool to understand and measure the skills required to deploy a service or process into production.
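A skills matrix lends itself to a simple check against the AQL of primary and backup skills. The Python sketch below assumes the matrix maps each staff member to a set of skills, treats "staff with skills" as anyone holding at least one required skill, and flags skills held by fewer than two people. The skill names, staff names and function names are hypothetical.

```python
def skills_lacking_backup(skills_matrix, required_skills):
    """Return required skills held by fewer than two people, i.e. skills
    without both a primary and a backup holder."""
    required = set(required_skills)
    holders = {skill: 0 for skill in required}
    for skills in skills_matrix.values():
        for skill in skills & required:
            holders[skill] += 1
    return sorted(skill for skill, n in holders.items() if n < 2)


def pct_staff_with_skills(skills_matrix, required_skills):
    """Percentage of staff holding at least one required skill (assumed rule)."""
    if not skills_matrix:
        return 0.0
    required = set(required_skills)
    skilled = sum(1 for skills in skills_matrix.values() if skills & required)
    return 100.0 * skilled / len(skills_matrix)


matrix = {
    "Ana": {"network", "firewall"},
    "Ben": {"network"},
    "Chen": {"database"},
    "Dee": {"project admin"},
}
required = {"network", "firewall", "database"}
print(skills_lacking_backup(matrix, required))  # ['database', 'firewall']
print(pct_staff_with_skills(matrix, required))  # 75.0
```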

| Frequency | |
| --- | --- |
| Measured | Quarterly |
| Reported | Quarterly |
| Acceptable quality level | Primary and backup skills required |
| Range | N/A |

Service catalog management metrics

A service catalog presents the details of all services offered to those with approved access. It contains specific details (e.g. dependencies, interfaces, constraints, etc.) in accordance with the established service catalog management policies. The process objectives are to manage the service catalog with current and accurate information, ensure it is available to those with approved access, and ensure it supports the ongoing needs of other processes.

The scope of service catalog management encompasses maintaining accurate information for all services in the operational stage and services being prepared to go to an operational state. Timely catalog updates to reflect newly released services/service packages should be part of the overall service change. Applied service catalog management metrics should be based on the established process goals and specific Key Performance Indicators (KPIs). The service catalog management metrics presented in this section are:

- Percentage of services in the service catalog

- Percentage of customer facing services

- Percentage of service catalog variance

- Number of changes to the service catalog

- Percentage of service requests made via the service catalog

- Percentage increase in service catalog utilization

- Percentage of support services linked to CIs.

Metric name

Percentage of services in the service catalog

Metric category

Service catalog management

Suggested metric owner

Service catalog manager

Typical stakeholders

Customers, IT staff, management

Description

This metric provides an understanding of the number of services that are documented in the service catalog. The metric can be used periodically throughout the life of the service catalog to ensure all services have a catalog entry.

However, this metric provides the greatest value during the transition or migration to the service catalog, when it can track the progress of moving services into the catalog.

This metric will become increasingly important as customers begin to use the service catalog more often.

Measurement description

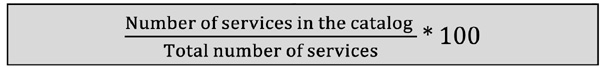

Formula:

This metric should be monitored and collected regularly to ensure all services are entered into the service catalog.
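A minimal Python sketch, assuming the full list of services (for example, exported from a service portfolio) can be compared with the names that have catalog entries. Besides the percentage, it returns the services still missing, which is useful for tracking migration progress. The function name and service names are hypothetical.

```python
def catalog_coverage(all_services, catalog_entries):
    """Return (percentage of services with a catalog entry, missing services).

    Catalog entries that do not match a known service are ignored.
    """
    services = set(all_services)
    if not services:
        return 0.0, []
    missing = sorted(services - set(catalog_entries))
    pct = 100.0 * (len(services) - len(missing)) / len(services)
    return pct, missing


pct, missing = catalog_coverage(
    ["Email", "VPN", "HR portal", "Backup"],
    ["Email", "VPN", "Backup"],
)
print(pct, missing)  # 75.0 ['HR portal']
```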

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 98% |
| Range | < 98% unacceptable; = 98% acceptable; 100% exceeds |

Metric name

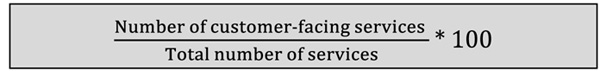

Percentage of customer-facing services

Metric category

Service catalog management

Suggested metric owner

Service catalog manager

Typical stakeholders

Customers, IT staff, management

Description

While similar to the previous metric, this metric also measures services found in the service catalog. The difference, however, is that this metric focuses on those services that can be used by the customer.

This metric correlates directly with the user base's use of the catalog. If the service catalog is actionable (i.e. it allows users to make service requests from the catalog), users will come to rely on it to make the majority of their requests to IT.

Therefore, IT must strive to have all customer-facing services populated within the service catalog. This will also give users a sense of empowerment, as they can manage and monitor their own requests.

Measurement description

Formula:

This metric should be monitored and tracked regularly to ensure that service catalog utilization increases for fulfilling the service requests made by customers.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Establish the baseline and trend over time |
| Range | N/A |

Metric name

Percentage of service catalog variance

Metric category

Service catalog management

Suggested metric owner

Service catalog manager

Typical stakeholders

Customers, IT staff, management

Description

This metric will assist in monitoring the accuracy of the service catalog entries. Regular reviews or audits of the catalog must be conducted to ensure the information in the catalog entry matches the functionality and attributes of the actual service. The review can include catalog features such as:

- Service attributes

- Service description

- Pricing

- Grammar and spelling

- Service availability.

The accuracy of the service catalog is paramount to the success of the services offered and the catalog. Customers must feel confident in the information provided within the service catalog in order to make and/or continue making the appropriate service request.

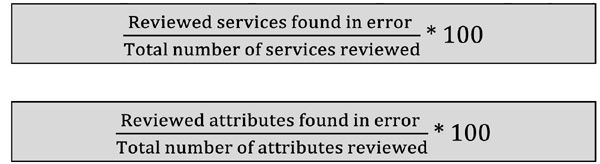

Measurement description

Formula: This formula provides a high-level view of services in error.

The second formula provides a granular view into the services. Using these formulas together gives a better perspective of the catalog accuracy.
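The two formulas themselves are not reproduced in this text. A plausible reading is that the high-level formula divides services with at least one inaccurate entry by total services audited, and the granular formula divides inaccurate attributes by total attributes reviewed. The Python sketch below assumes exactly that; the audit structure, function name and sample data are hypothetical.

```python
def catalog_variance(audit):
    """audit maps service -> {attribute: True if the catalog entry matched
    the actual service, False if it was in error}.

    Returns (service-level % in error, attribute-level % in error).
    """
    if not audit:
        return 0.0, 0.0
    services_in_error = sum(1 for attrs in audit.values() if not all(attrs.values()))
    total_attrs = sum(len(attrs) for attrs in audit.values())
    attrs_in_error = sum(
        1 for attrs in audit.values() for ok in attrs.values() if not ok
    )
    service_pct = 100.0 * services_in_error / len(audit)
    attr_pct = 100.0 * attrs_in_error / total_attrs if total_attrs else 0.0
    return service_pct, attr_pct


audit = {
    "Email": {"description": True, "pricing": True, "availability": True, "spelling": True},
    "VPN": {"description": True, "pricing": False, "availability": True, "spelling": True},
}
print(catalog_variance(audit))  # (50.0, 12.5)
```

Reporting both values together shows whether errors are concentrated in a few entries or spread thinly across the catalog.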

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a quarterly baseline |
| Range | 100% accuracy |

Metric name

Number of changes to the service catalog

Metric category

Service catalog management

Suggested metric owner

Service catalog manager or change manager

Typical stakeholders

Customers, IT staff, management

Description

Changes to the service catalog are inevitable, but change also increases the potential for errors in the catalog. This metric therefore provides insight into changes that impact the service catalog and service offerings.

Monitoring and tracking change can assist in deciding when to review or audit the service catalog. If a high volume of changes occurs within a period of time, a catalog review might be warranted to ensure catalog accuracy.

This metric can also help to align service changes to catalog changes to again ensure catalog accuracy.

Measurement description

Formula: N/A

Regular tracking of this metric will provide a greater understanding of service change and the impact to the service catalog. The tracking can also trigger a review based on a pre-defined number of changes within a defined time period. Combining this metric with the previous metric will provide an increased level of assurance for the service catalog.
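The review trigger described above can be sketched as a rolling-window count. The 30-day window and threshold of 10 changes in the Python below are hypothetical defaults; each organization sets its own pre-defined number of changes and time period. The function name and dates are also hypothetical.

```python
from datetime import date, timedelta


def review_due(change_dates, window_days=30, threshold=10, as_of=None):
    """Return True when the number of catalog changes in the `window_days`
    days ending on `as_of` (inclusive) reaches `threshold`."""
    as_of = as_of or date.today()
    start = as_of - timedelta(days=window_days)
    recent = [d for d in change_dates if start < d <= as_of]
    return len(recent) >= threshold


# Hypothetical log: one catalog change every three days through May 2024
changes = [date(2024, 5, 1) + timedelta(days=i) for i in range(0, 30, 3)]
print(review_due(changes, as_of=date(2024, 5, 30)))  # True (10 changes)
```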

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | N/A |
| Range | N/A |

Metric name

Percentage of service requests made via the service catalog

Metric category

Service catalog management

Suggested metric owner

Service catalog manager or service desk manager

Typical stakeholders

Customers, service owners, management

Description

An actionable service catalog provides increased benefits for all stakeholders. These benefits may include:

- Improved information on service offerings

- Better turnaround time for requests

- Decreased IT staff workload

- Customer empowerment

- Increased customer satisfaction.

This upward-trending metric demonstrates not only the utilization of the service catalog but also the potential increase in customer satisfaction. As customers become familiar with the catalog and how to use it, their satisfaction level may increase because of the catalog's enhanced functionality and the availability of service requests through it.

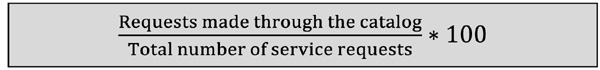

Measurement description

Formula:

Collection of data for this metric may be a manual effort depending on tool availability. This metric can be tracked within the tool used to log and fulfill service requests.
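A minimal Python sketch, assuming each service request record carries the channel through which it was submitted. The channel labels ("catalog", "phone", "email") and the function name are hypothetical.

```python
from collections import Counter


def pct_via_catalog(request_channels):
    """request_channels: iterable of the channel recorded on each service
    request. Returns the percentage submitted through the catalog."""
    counts = Counter(request_channels)
    total = sum(counts.values())
    return 100.0 * counts["catalog"] / total if total else 0.0


channels = ["catalog", "phone", "catalog", "email", "catalog", "catalog"]
print(round(pct_via_catalog(channels), 1))  # 66.7
```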

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | < 5% |
| Range | > 5% unacceptable; < 5% acceptable; 0% exceeds (this should ultimately be the AQL) |

Metric name

Percentage increase in service catalog utilization

Metric category

Service catalog management

Suggested metric owner

Service catalog manager

Typical stakeholders

Customers, IT staff, management

Description

This metric provides an understanding of the acceptance of the service catalog as a single point of entry for viewing the available services and requesting them. As services are added to the service catalog, customers should increase their use of the catalog to increase their productivity. Catalog offerings can include:

- Requesting services

- Referencing service information

- Asking questions

- Requesting changes

- FAQs.

A good service catalog empowers customers and frees the IT staff to perform other value added activities. To increase utilization, understand the customer’s needs and provide for those through an intuitive, easy to use catalog.

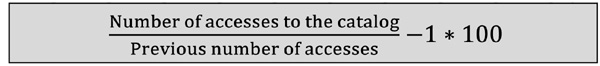

Measurement description

Formula:

This metric should be measured over a standard period of time (e.g. weekly or monthly) so that results can be compared accurately. Each reporting period provides a new baseline that can be used for trending.
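Percentage increase is the change from one period to the next divided by the earlier period, × 100. The Python sketch below applies it to consecutive monthly figures; using catalog visits as the utilization measure, the sample numbers and the function name are assumptions.

```python
def pct_increase(previous, current):
    """Period-over-period percentage change in catalog utilization."""
    if previous == 0:
        raise ValueError("previous period must be non-zero")
    return 100.0 * (current - previous) / previous


monthly_usage = [1200, 1380, 1449]  # hypothetical monthly catalog visits
changes = [round(pct_increase(a, b), 1) for a, b in zip(monthly_usage, monthly_usage[1:])]
print(changes)  # [15.0, 5.0]
```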

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | Create a baseline and compare regularly |
| Range | Dependent on the established baseline |

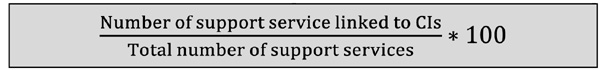

Metric name

Percentage of support services linked to CIs

Metric category

Service catalog management

Suggested metric owner

Service catalog manager or configuration manager

Typical stakeholders

IT staff, configuration management, change management, release management

Description

Support services may not have direct value to the business but they do provide value in support of business services and processes. The metric will provide an understanding of the Configuration Items (CIs) linked to the support service. Having this understanding during the design phase allows greater levels of insight into the service when making design changes or integrating with other services.

Having increased knowledge of the CIs linked to a service during design ensures the correct resources and skills are available to enhance the overall design and service. This metric can be used to enhance decision making when considering the architecture and attributes of the service.

Measurement description

Formula:

Integration with the Configuration Management System (CMS) is required for the accuracy of this metric. This will provide a holistic view of the support service and the infrastructure used to provide the service.
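A minimal Python sketch, assuming an export from the CMS gives each support service's referenced CI identifiers plus the set of CIs that actually exist. Only references to existing CIs are credited, so stale links do not inflate the result. The export structure, identifiers and function name are hypothetical.

```python
def pct_support_services_linked(support_services, relationships, existing_cis):
    """relationships maps service -> list of referenced CI IDs;
    existing_cis is the set of CI IDs present in the CMS."""
    if not support_services:
        return 0.0
    linked = sum(
        1 for svc in support_services
        if any(ci in existing_cis for ci in relationships.get(svc, []))
    )
    return 100.0 * linked / len(support_services)


services = ["DNS", "Backup", "Monitoring", "Directory"]
relationships = {"DNS": ["CI-001", "CI-002"], "Backup": ["CI-999"], "Monitoring": ["CI-010"]}
cis = {"CI-001", "CI-002", "CI-010"}
print(pct_support_services_linked(services, relationships, cis))  # 50.0
```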

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | N/A |
| Range | N/A |

Service level management metrics

Service level management is a vital service design process. It has the responsibility for identifying and documenting Service Level Requirements (SLRs) and establishing formal Service Level Agreements (SLAs) for every IT service offered.

Service level targets must accurately reflect customer expectations and user needs. Likewise, the service provider must be able to deliver services that meet or exceed the targets in the established SLAs. The service level management process ensures that implemented (i.e. operational) and planned IT services are delivered in accordance with achievable performance targets. Service Level Management (SLM) efforts entail defining and documenting service level targets; monitoring and objectively measuring delivered service levels against SLAs; reporting to customers and business management on actual service level performance; and participating in service improvement actions. The metrics presented in this section are:

- Percentage of SLA targets threatened

- Percentage of SLA targets breached

- Percentage of services with SLAs

- Number of service review meetings conducted

- Percentage of OLAs reviewed annually

- Number of CSI initiatives instigated by SLM

- Percentage of service reports delivered on time.

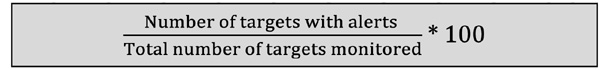

Metric name

Percentage of SLA targets threatened

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, IT staff, management

Description

Service level targets are found in the agreed SLAs established between the customer and the service provider. Delivered service levels are measured against these targets, and monitoring thresholds are set to ensure proper delivery of the service. This metric is based on the target thresholds set within a monitoring tool.

This is a proactive metric that shows the number of alerts received within a defined period of time. Benefits of this metric include:

- Relating the number of alerts to the number of targets monitored

- Discovering potential problems before they occur

- The prevention of service outages

- The proactive efforts of the provider

- The quality of service monitoring.

Measurement description

Formula:

This metric should be monitored and collected regularly to ensure all monitored targets are within their normal capacity, availability, and performance ranges.
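The formula is not reproduced in this text. The Python sketch below assumes each monitored target has a warning threshold set in the monitoring tool below its SLA breach level, and counts a target as threatened when its latest measurement has crossed the warning level but not the breach level (breaches are left to the next metric). It also assumes higher values are worse, as with response times. The class, field names and sample values are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class MonitoredTarget:
    name: str
    value: float    # latest measurement; higher is worse (e.g. response time)
    warning: float  # alert threshold configured in the monitoring tool
    breach: float   # the SLA target itself

    def status(self):
        if self.value >= self.breach:
            return "breached"
        if self.value >= self.warning:
            return "threatened"
        return "ok"


def pct_threatened(targets):
    """Percentage of monitored targets currently in the threatened band."""
    if not targets:
        return 0.0
    threatened = sum(1 for t in targets if t.status() == "threatened")
    return 100.0 * threatened / len(targets)


targets = [
    MonitoredTarget("Portal response (s)", 2.6, warning=2.5, breach=3.0),
    MonitoredTarget("Email queue (min)", 1.0, warning=4.0, breach=5.0),
    MonitoredTarget("Batch run (h)", 3.2, warning=3.5, breach=4.0),
    MonitoredTarget("API latency (ms)", 450, warning=400, breach=500),
]
print(pct_threatened(targets))  # 50.0
```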

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Establish a baseline and trend over time |
| Range | Set the quality range from the baseline |

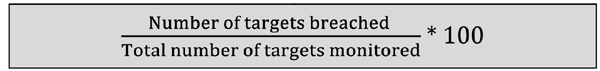

Metric name

Percentage of SLA targets breached

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, IT staff, management

Description

While similar to the previous metric, this metric focuses on SLA targets that have been breached or failed. This metric should reflect a downward trend, and it reflects directly on the overall service quality delivered. Breached targets can:

- Increase potential downtime

- Impact business performance

- Create a reactive environment

- Damage the reputation of the organization

- Harm the relationship with the customer.

IT must understand the importance of the SLA targets and closely monitor these targets to ensure service delivery. Target breaches can be caused by:

Measurement description

Formula:

This metric can be represented as a percentage. However, some organizations may find more value in using an actual count. In either case, this metric must be reported to customers and management.
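Since the metric may be reported either as a count or as a percentage, a sketch can return both. The Python below assumes the period's results are available as a met/breached flag per SLA target; the structure, function name and sample data are hypothetical.

```python
def breach_summary(results):
    """results maps SLA target name -> True if met in the period,
    False if breached. Returns (breach count, breach percentage)."""
    breached = sum(1 for met in results.values() if not met)
    pct = 100.0 * breached / len(results) if results else 0.0
    return breached, pct


# Hypothetical period: 200 targets, one breached
period = {f"target-{i}": True for i in range(199)}
period["target-199"] = False
print(breach_summary(period))  # (1, 0.5)
```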

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | 1% |
| Range | > 1% unacceptable; = 1% acceptable; < 1% exceeds |

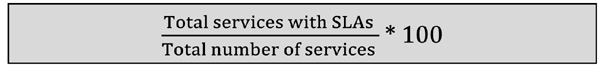

Metric name

Percentage of services with SLAs

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, IT staff, management

Description

This metric will assist in monitoring the progress of service level management as more services are documented with agreed upon SLAs. A well-documented SLA will help improve:

- Service delivery

- Customer relationships

- Process performance

- Business services

- Organizational performance.

SLAs are based on business requirements and expectations. These will help IT better understand the business and how the business operates, thus transforming IT from a technology provider to a service provider. Everyone wins.

Measurement description

Formula:

The metric is a catalyst facilitating the use of SLM metrics as well as other process metrics. Monitor this metric closely and regularly report SLM progress to all stakeholders.

| Frequency | |
| --- | --- |
| Measured | Monthly/quarterly |
| Reported | Quarterly/annually |
| Acceptable quality level | 100% |
| Range | Set achievement goals over time |

Metric name

Number of service review meetings conducted

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, IT staff, management

Description

Service review meetings must be documented in the SLA. This binds the customer and the service provider into an ongoing partnership to manage the service.

Based on the level of importance of the service, review meetings can be conducted monthly, quarterly or annually. Meeting documents such as agendas, attendance sheets, and meeting notes provide evidence of the meetings and of this metric.

This metric demonstrates the ongoing pursuit of service excellence as SLM continuously works with the business and IT to deliver services that fulfill the business’s requirements.

Measurement description

Formula: N/A

Regular tracking of this metric can show how IT is attempting to better serve the business. Meeting schedules will provide input for the metric while meeting artifacts provide evidence.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | N/A |
| Range | N/A |

Metric name

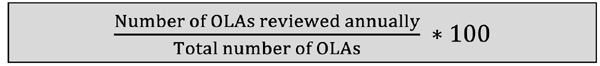

Percentage of OLAs reviewed annually

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, service owners, management

Description

As OLAs help define the levels of support required to deliver a service, it is vital that they are regularly reviewed to ensure they are up to date and relevant to meet the needs of the service. All parties associated with the OLA must participate in the OLA review to make certain all aspects of support are properly maintained and can continue to meet or exceed the targets established within the OLA.

This metric should reflect an upward trend and will demonstrate SLM’s continued commitment to the delivery and ongoing support of the service. As support groups become familiar with the SLM process and the OLAs, their understanding of the service and all of its aspects will increase. This newfound understanding and knowledge will help build relationships within the IT organization and with the customer.

Measurement description

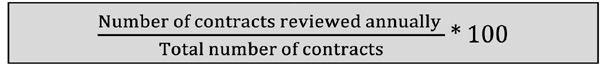

Formula:

Collection of data for this metric may be a manual effort depending on how OLAs are created, tracked and stored.
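A minimal Python sketch, assuming each OLA's last review date is recorded (or None if it was never reviewed) and that "reviewed annually" means a review within the 365 days before the reporting date. The OLA identifiers, dates and function name are hypothetical.

```python
from datetime import date, timedelta


def pct_olas_reviewed(last_reviews, as_of, max_age_days=365):
    """last_reviews maps OLA ID -> date of last review (None if never).
    An OLA counts as reviewed if its last review is within max_age_days
    before as_of."""
    if not last_reviews:
        return 0.0
    cutoff = as_of - timedelta(days=max_age_days)
    current = sum(
        1 for d in last_reviews.values()
        if d is not None and cutoff <= d <= as_of
    )
    return 100.0 * current / len(last_reviews)


reviews = {
    "OLA-NET": date(2024, 2, 10),
    "OLA-DB": date(2023, 1, 15),
    "OLA-DESK": None,
    "OLA-STOR": date(2023, 9, 1),
}
print(pct_olas_reviewed(reviews, as_of=date(2024, 6, 30)))  # 50.0
```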

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 98% |
| Range | < 98% unacceptable; = 98% acceptable; > 99% exceeds |

Metric name

Number of CSI initiatives instigated by SLM

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, IT staff, management

Description

This metric is a simple tally (count) that provides an understanding of the service improvement initiatives started by SLM. The proactive nature of SLM should be a natural feed to service improvements as the service level manager:

- Monitors service performance

- Compares performance against SLA targets

- Meets with customers regularly

- Monitors OLAs

- Works with other ITSM processes and IT groups.

The efforts of SLM can discover opportunities for improvement and start the improvement process as early as possible. In many cases, this can happen much sooner than it would through normal lines of communication.

Measurement description

Formula: N/A

This metric will demonstrate the proactive nature of SLM and strengthen relationships with all SLM stakeholders, including:

- Customers

- Service owners

- Management

- CSI manager

- Other process managers.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | N/A |
| Range | N/A |

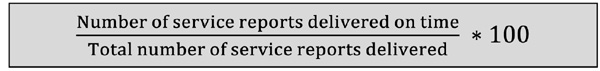

Metric name

Percentage of service reports delivered on time

Metric category

Service level management

Suggested metric owner

Service level manager

Typical stakeholders

Customers, service owners, management

Description

As organizations move toward a more service-oriented environment, service reporting is becoming a critical component for managing services. Management depends on reports to review service delivery and improve decision making. Therefore, on-time delivery of these reports must be monitored to ensure stakeholders are up to date with regard to service performance and delivery.

This metric can help ensure service reports are created and delivered as required to the appropriate stakeholders. Reporting requirements should be contained within the SLA and measured using this metric.

Measurement description

Formula:

Regular monitoring of this metric will help the organization maintain consistency in report delivery and help increase customer satisfaction as on-time delivery meets or exceeds customer expectations.
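A minimal Python sketch, assuming each report's due date comes from the reporting requirements in the SLA and that a report counts as on time when delivered on or before that date. Undelivered reports count as late. The data and function name are hypothetical.

```python
from datetime import date


def pct_on_time(reports):
    """reports: list of (due_date, delivered_date or None if undelivered)."""
    if not reports:
        return 0.0
    on_time = sum(
        1 for due, delivered in reports
        if delivered is not None and delivered <= due
    )
    return 100.0 * on_time / len(reports)


reports = [
    (date(2024, 4, 5), date(2024, 4, 4)),
    (date(2024, 5, 5), date(2024, 5, 5)),
    (date(2024, 6, 5), date(2024, 6, 7)),
    (date(2024, 7, 5), None),
]
print(pct_on_time(reports))  # 50.0
```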

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 98% |
| Range | < 98% unacceptable; = 98% acceptable; > 99% exceeds |

Availability management metrics

The availability management process is central to the IT service value proposition. The value of a service, which combines utility (fitness for purpose) and warranty (fitness for use),⁵ cannot be realized without sustained delivery of services that meet the prescribed availability levels. Service availability is initially considered as part of setting objectives in service strategy generation, validated for realism/achievability and incorporated as part of service design, verified through testing in the operating environment as part of service transition, and continuously monitored in service operation.

Availability management process activities are intended to ensure that the reliability of delivered IT services meets the availability targets defined in SLAs; the process is concerned with both current and planned service availability needs. The availability management process metrics presented in this section are:

- Service availability percentage

- Percentage of services with availability plans

- Percentage of incidents caused by unavailability

- Percentage of availability threshold alerts

- Percentage of services that have been tested for availability

- Mean Time Between Failures (MTBF)

- Mean Time Between Service Incidents (MTBSI)

- Mean Time To Restore Service (MTRS)

- Mean Time To Repair (MTTR)

- Percentage of services classified as vital business functions.

Metric name

Service availability percentage

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service manager, customer relationship manager, customers

Description

This is a measurement of the availability of a discrete service asset, expressed as the percentage of total time during which the service functionality is operationally available to the user. The metric applies MTBSI, MTTR and MTRS values from incident management records.

- MTBSI is calculated by subtracting the recorded date/time of a previous incident from that of the subsequently recorded incident for the specific service asset, and averaging the results.

- MTRS represents the average time between implementing action(s) to correct/repair the root cause of a failure and the time when the service functionality is restored to the user. It includes service restoration testing and user acceptance times.

- MTTR is the sum of detection time, response time and repair time.
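One way to combine these values, assuming the decomposition given later in this chapter for MTBSI (MTBSI = MTTR + MTRS + MTBF), is to treat each incident cycle as downtime (MTTR + MTRS) followed by uptime, so that availability is the uptime share of MTBSI. The sketch below follows that assumption; the function name is hypothetical and all inputs must use the same time unit.

```python
def availability_pct(mtbsi: float, mttr: float, mtrs: float) -> float:
    """Availability as the uptime share of the mean incident cycle.

    Assumes MTBSI = MTTR + MTRS + MTBF, so downtime per cycle is MTTR + MTRS.
    All arguments must use the same time unit (e.g. hours).
    """
    downtime = mttr + mtrs
    if mtbsi <= 0 or downtime > mtbsi:
        raise ValueError("MTBSI must be positive and at least MTTR + MTRS")
    return 100.0 * (mtbsi - downtime) / mtbsi


# Incidents every 1,000 hours on average, 0.6 h to repair and 0.4 h to restore.
print(round(availability_pct(1000.0, 0.6, 0.4), 3))  # 99.9
```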

Measurement description

Formula:

Note

An AQL should be established for the specific service asset (system, subsystem, application, etc.). In a 24/7 operating environment, an AQL of 99.9% allows unavailability (downtime) of 8.76 hours/year, 43.8 minutes/month or 10.11 minutes/week.
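The downtime figures in the note follow from multiplying the unavailable fraction (100% minus the AQL) by the hours in the period. The sketch below assumes an 8,760-hour (365-day) year and, to match the note, treats a month as one-twelfth and a week as one fifty-second of that year; a calendar week of 168 hours would give 10.08 minutes instead.

```python
HOURS_PER_YEAR = 365 * 24  # 8,760 h; assumes a non-leap year


def allowed_downtime_minutes(aql_pct: float, period_hours: float) -> float:
    """Minutes of unavailability permitted by an availability AQL over a 24/7 period."""
    unavailable_fraction = (100.0 - aql_pct) / 100.0
    return unavailable_fraction * period_hours * 60.0


aql = 99.9
per_year_h = allowed_downtime_minutes(aql, HOURS_PER_YEAR) / 60.0
per_month_min = allowed_downtime_minutes(aql, HOURS_PER_YEAR / 12)
per_week_min = allowed_downtime_minutes(aql, HOURS_PER_YEAR / 52)
print(f"{per_year_h:.2f} h/year, {per_month_min:.1f} min/month, {per_week_min:.2f} min/week")
# 8.76 h/year, 43.8 min/month, 10.11 min/week
```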

Metric name

Percentage of services with availability plans

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service manager, customer relationship manager, customers

Description

This metric demonstrates the successful implementation of the availability management process as part of service delivery best practices. It shows the percentage of services with availability plans that document the methods and techniques for achieving the availability targets defined in SLAs.

The measurement is useful as an indicator of the validity of service design practices.

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 99%

Range: < 99% unacceptable (indicates poor/incomplete service designs); = 99% acceptable (maintain/improve); > 99% exceeds (indicates well-designed services)

Note

This AQL allows for one per cent of delivered services to be without an availability plan to account for pipeline activities such as service redesign, service pilots and retiring services.

Metric name

Percentage of incidents caused by unavailability

Metric category

Availability management

Suggested metric owner

Typical stakeholders

IT operations manager, service manager, service desk, customer relationship manager

Description

This measurement provides an indication of the magnitude of issues related to the lack of service availability (unavailability). Depending on the root cause(s) of availability-related incidents, the metric is applied as an indicator of:

- The need for updating the capacity plan

- Unreliable service assets; and/or

- The need for redundant system/subsystem configuration(s) to improve service availability.

The metric can be applied as an input to a Business Impact Analysis (BIA) for service asset unavailability/unreliability.

Measurement description

Formula:

Note

This AQL reflects 99.95% availability of delivered services, which translates to 21.9 minutes per month of unavailability in a 24/7 operating environment. The commonly recognized threshold for a High Availability (HA) system/subsystem is 99.999% (‘five nines’), which allows 26.28 seconds per month of unavailability in a 24/7 operating environment. Achieving HA requires investment in redundant service assets to provide the target level of fault tolerance.
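Both figures in the note can be checked with the same arithmetic, assuming a 730-hour month (one-twelfth of an 8,760-hour year):

```latex
\begin{aligned}
(1 - 0.9995) \times 730\,\text{h} \times 60\,\tfrac{\text{min}}{\text{h}} &= 21.9\,\text{min per month} \\
(1 - 0.99999) \times 730\,\text{h} \times 3600\,\tfrac{\text{s}}{\text{h}} &= 26.28\,\text{s per month}
\end{aligned}
```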

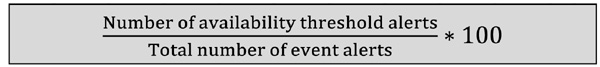

Metric name

Percentage of availability threshold alerts

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service manager, service desk, customer relationship manager

Description

This measurement provides an indication of the magnitude of issues related to monitored service availability (unavailability) thresholds. Depending on the root cause(s) of availability-related alerts, the metric is applied as an indicator of:

- The need for updating the capacity plan

- Evolving patterns of business activity

- Degraded reliability of service assets; and/or

- The need for redundant system/subsystem configuration(s) to sustain service availability

The metric can be applied as an input to a BIA for service asset unavailability/unreliability.

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set a baseline for event alert thresholds; measure and perform trend analysis over time. The expected trend for this metric is level or upward from the baseline, depending on service availability, patterns of business activity and service asset lifecycle maturity.
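Trend analysis against a baseline can be as simple as fitting a least-squares line through the monthly values. The sketch below assumes the metric is the number of availability threshold alerts divided by all event alerts in a month; the function names and the four months of counts are hypothetical, chosen only to illustrate the calculation.

```python
def monthly_percentages(threshold_alerts: list[int], total_alerts: list[int]) -> list[float]:
    """Share (%) of each month's event alerts that were availability threshold alerts."""
    if len(threshold_alerts) != len(total_alerts):
        raise ValueError("both series must cover the same months")
    return [100.0 * a / t for a, t in zip(threshold_alerts, total_alerts)]


def trend_slope(values: list[float]) -> float:
    """Least-squares slope of a monthly series, in percentage points per month."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two months of data")
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


# Illustrative data: baseline taken from the first month.
series = monthly_percentages([10, 12, 14, 16], [200, 200, 200, 200])
baseline = series[0]
print(series, trend_slope(series), series[-1] - baseline)  # [5.0, 6.0, 7.0, 8.0] 1.0 3.0
```

A positive slope indicates an upward trend from the baseline; a slope near zero indicates a level one.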

Metric name

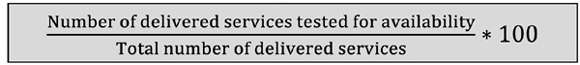

Percentage of services that have been tested for availability

Metric category

Availability management

Suggested metric owner

Typical stakeholders

IT operations manager, service manager, change manager, customer relationship manager, customers

Description

This metric demonstrates the successful implementation of availability testing as part of service release best practices. It shows the percentage of deployed services for which the target availability has been verified through service validation and testing to underpin the availability targets defined in SLAs.

The measurement is useful as an indicator of release and deployment management process implementation consistency. The results of this metric should be applied in assessing service delivery risk exposure.

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 99%

Range: < 99% unacceptable (indicates poor/incomplete service designs); = 99% acceptable (maintain/improve); > 99% exceeds (indicates properly deployed services)

Note

This AQL allows for one per cent of services to be implemented without availability testing. Services that have not undergone availability testing should be recorded as service management risk exposures and identified as Continual Service Improvement (CSI) opportunities.

Metric name

Mean Time Between Failures (MTBF)

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, customers

Description

This measurement is a way to describe the availability of a discrete service or service asset. MTBF is expressed as the arithmetic average of the times between service outages/failures (incidents), as reflected in incident management records.

MTBF is a commonly used measurement for predicting service reliability and is of significant value for:

- Designing service asset configurations to meet the target end-to-end service availability

- Evaluating risks associated with meeting SLAs

- Developing service asset preventive maintenance schedules

- Developing inputs for determining service support staffing levels.
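A minimal sketch of the calculation from incident records, assuming each record holds the time the service failed and the time it was restored, and reading MTBF as the uptime from one restoration to the next failure (consistent with the MTBSI decomposition given for the next metric). The tuple layout is hypothetical.

```python
from datetime import datetime, timedelta


def mtbf(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Mean uptime from each restoration of service to the next failure.

    Each tuple is (failed_at, restored_at) for one incident on the same service.
    """
    ordered = sorted(incidents)
    if len(ordered) < 2:
        raise ValueError("need at least two incidents")
    uptimes = [nxt[0] - prev[1] for prev, nxt in zip(ordered, ordered[1:])]
    return sum(uptimes, timedelta()) / len(uptimes)


incidents = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 2, 0)),
    (datetime(2024, 1, 11, 2, 0), datetime(2024, 1, 11, 3, 0)),
    (datetime(2024, 1, 21, 3, 0), datetime(2024, 1, 21, 4, 0)),
]
print(mtbf(incidents) / timedelta(hours=1))  # 240.0
```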

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set a baseline availability target in terms of service outage time (in minutes and/or seconds) per month and per year; measure service incident times and perform MTBF trend analyses over time. The expected trend for this metric is level or upward from the baseline, depending on the target service availability, preventive maintenance actions, proactive event management and service asset lifecycle maturity.

Metric name

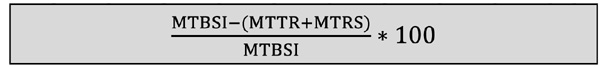

Mean Time Between Service Incidents (MTBSI)

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, customers

Description

This measurement is the arithmetic average time from when a service fails until it next fails. The metric is used for measuring and reporting service reliability and is equivalent to the sum of the MTTR, MTRS and MTBF values for a service, as determined from incident management records.

MTBSI is particularly valuable for:

- Measuring and reporting service reliability

- Empirical input to the Service Knowledge Management System (SKMS)

- Identifying CSI opportunities

- Service management decision support.
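The equivalence stated above can be checked on incident data. The sketch assumes each record is a hypothetical (failed_at, restored_at) pair and that each interval between failures splits into the downtime of the incident that opens it (MTTR + MTRS in this chapter's terms) and the uptime that follows (MTBF). The identity holds exactly when the downtime is averaged over those same opening incidents, i.e. every incident except the last.

```python
from datetime import datetime, timedelta


def mean_delta(deltas: list[timedelta]) -> timedelta:
    """Arithmetic mean of a non-empty list of durations."""
    return sum(deltas, timedelta()) / len(deltas)


def mtbsi_breakdown(incidents: list[tuple[datetime, datetime]]) -> tuple[timedelta, timedelta, timedelta]:
    """Return (MTBSI, mean downtime, MTBF) for (failed_at, restored_at) pairs."""
    ordered = sorted(incidents)
    if len(ordered) < 2:
        raise ValueError("need at least two incidents")
    pairs = list(zip(ordered, ordered[1:]))
    mtbsi = mean_delta([nxt[0] - prev[0] for prev, nxt in pairs])   # failure to next failure
    downtime = mean_delta([prev[1] - prev[0] for prev, _ in pairs])  # failure to restoration
    mtbf = mean_delta([nxt[0] - prev[1] for prev, nxt in pairs])     # restoration to next failure
    return mtbsi, downtime, mtbf


incidents = [
    (datetime(2024, 1, 1, 0, 0), datetime(2024, 1, 1, 2, 0)),
    (datetime(2024, 1, 11, 2, 0), datetime(2024, 1, 11, 3, 0)),
    (datetime(2024, 1, 21, 3, 0), datetime(2024, 1, 21, 4, 0)),
]
mtbsi, downtime, mtbf = mtbsi_breakdown(incidents)
print(mtbsi, downtime, mtbf)  # 10 days, 1:30:00 1:30:00 10 days, 0:00:00
assert mtbsi == downtime + mtbf
```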

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set a baseline availability target in terms of outage time (in minutes and/or seconds) per month and per year; measure failure incident times and perform MTBSI trend analyses over time. The expected trend for this metric is level or upward from the baseline, depending on the target service availability, preventive maintenance actions, proactive event management and service asset lifecycle maturity.

Metric name

Mean Time to Restore Service (MTRS)

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, customers

Description

This metric is the arithmetic average time between implementing action(s) to correct/repair a service failure to the time when the service functionality is restored to the user. The measurement includes service restoration testing and user acceptance times.

The MTRS measurement is valuable for:

- Inputs for service design and ongoing service level management

- Service restoration and user acceptance process assessments

- Evaluating staff skill levels and training needs

- Inputs to the SKMS.

Measurement description

Formula:

Average of the time to repair the service plus the time to restore the service (fully functional).
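In symbols, assuming n incidents in the reporting period, with t_repair,i the time to repair incident i and t_restore,i the time until the service is fully functional again (notation introduced here for illustration):

```latex
\text{MTRS} = \frac{1}{n} \sum_{i=1}^{n} \left( t_{\text{repair},i} + t_{\text{restore},i} \right)
```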

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set a baseline availability target in terms of outage time (in minutes and/or seconds) per month and per year; capture detailed incident activity times and perform MTRS trend analyses over time. The expected trend for this metric is level or downward from the baseline, depending on service asset lifecycle maturity, maturing technical skills and growth of the SKMS.

Metric name

Mean Time To Repair (MTTR)

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, customers

Description

The MTTR measurement is the total time for incident detection, response and corrective action. The metric is captured from incident management records and is of significant value for:

- Managing failure recovery times to ensure delivered service levels

- Input for calculating service asset total cost of ownership (TCO)

- Evaluating maintenance staff skill levels and training needs

- Assessing the SKMS

- Service asset lifecycle management decision making (e.g. refresh cycles).

Measurement description

Formula:

Average time from the start of an incident to its resolution.
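A minimal sketch, assuming each incident record carries four timestamps (failure start, detection, start of corrective work, resolution); the `Incident` class is hypothetical. It computes MTTR as the mean start-to-resolution time and checks that, per incident, this equals the detection, response and repair times described above.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    """Hypothetical incident record with the timestamps MTTR needs."""
    started: datetime    # service failure begins
    detected: datetime   # failure detected
    responded: datetime  # corrective work begins
    resolved: datetime   # corrective action complete

    def phases(self) -> tuple[timedelta, timedelta, timedelta]:
        """Detection, response and repair times for this incident."""
        return (self.detected - self.started,
                self.responded - self.detected,
                self.resolved - self.responded)


def mttr(incidents: list[Incident]) -> timedelta:
    """Mean time from the start of an incident to its resolution."""
    if not incidents:
        raise ValueError("no incidents in the period")
    total = sum((i.resolved - i.started for i in incidents), timedelta())
    return total / len(incidents)


t0 = datetime(2024, 3, 1, 9, 0)
sample = [
    Incident(t0, t0 + timedelta(minutes=10), t0 + timedelta(minutes=25), t0 + timedelta(hours=2)),
    Incident(t0, t0 + timedelta(minutes=5), t0 + timedelta(minutes=20), t0 + timedelta(hours=1)),
]
print(mttr(sample))  # 1:30:00
# Per incident, detection + response + repair adds up to the start-to-resolution time.
assert all(sum(i.phases(), timedelta()) == i.resolved - i.started for i in sample)
```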

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set a baseline availability target in terms of outage time (in minutes and/or seconds) per month and per year; measure incident corrective action completion times and perform MTTR trend analyses over time. The expected trend for this metric is level or downward from the baseline, depending on staff technical skills, maturity of the SKMS and service asset lifecycle maturity.

Metric name

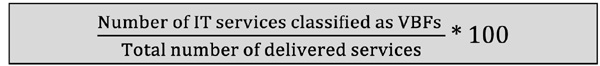

Percentage of services classified as vital business functions

Metric category

Availability management

Suggested metric owner

Service level manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, customers

Description

A vital business function (VBF) is that element of a business process that is critical to the business’s success. The more vital a business function is, the higher the target availability that should be inherent to the supporting IT service design. For example, an IT service may support multiple financial management business functions with payroll and accounts receivable as VBFs. This metric is of significant value for:

- Input for ensuring delivered IT services are aligned to needs of the business

- Determining the required service design to meet target availability

- Prioritizing service asset budgets to ensure VBFs are properly supported.

Measurement description

Formula:

Frequency

Measured: Quarterly

Reported: Semi-annually

Acceptable quality level: N/A

Range: N/A

Capacity management metrics

The capacity management process focuses on ensuring there is adequate capacity of technical infrastructure and supporting human resources to sustain service delivery within the performance envelope necessary to satisfy SLA targets. Capacity planning considers current and forecasted service demand within such constraints as financial budgets and physical space limits.

Capacity management process metrics provide a profile of service capacity adequacy, indication of service demand trends and performance triggers for service capacity changes. The basic capacity management metrics provided in this section are:

- Percentage of services with capacity plans

- Percentage of incidents caused by capacity issues

- Percentage of alerts caused by capacity issues

- Percentage of RFCs created due to capacity forecasts

- Percentage of services with demand trend reports

- Number of services without capacity thresholds.

Metric name

Percentage of services with capacity plans

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, supplier manager, change manager

Description

A viable capacity plan enables sustained delivery of services that meet the quality levels prescribed by SLAs. It also provides sufficient planning lead time to satisfy projected demand based on trends in patterns of business activity, and considers future service level requirements (SLRs) for new offerings in the service pipeline.

This metric provides insight into the adequacy of capacity planning and potential risks to meeting SLA obligations. It is also useful for identifying CSI opportunities.

Measurement description

Formula:

Frequency

Measured: Quarterly

Reported: Quarterly

Acceptable quality level: 99%

Range: < 99% unacceptable (indicates incomplete service design); = 99% acceptable (maintain/improve); > 99% exceeds (indicates well-designed services)

Note

This AQL allows for one per cent of delivered services to be without a capacity plan to account for pipeline activities such as service redesign, service pilots and services pending retirement.

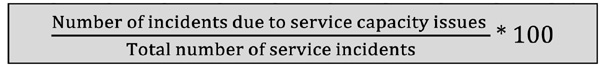

Metric name

Percentage of incidents caused by capacity issues

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, change manager

Description

This is a measure of service disruptions and/or degradations for which inadequate resource capacity is the root cause. It is important to note that service capacity issues are typically considered in terms of the technical infrastructure components (e.g. transmission bandwidth or storage), but can also involve human resources (i.e. staffing levels for service delivery workloads).

This is a critical metric for demand management. Capacity-driven incidents provide empirical data for continuously assessing the Patterns of Business Activity (PBA) and evaluating service capacity plans. This metric should be captured for each deployed service to support informed decision making.
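Assuming the measurement takes the usual ratio form, counting one service's incidents over the measurement period and classifying them by recorded root cause, it can be written as:

```latex
\text{Capacity-related incidents (\%)} = \frac{\text{incidents whose root cause is inadequate capacity}}{\text{all incidents recorded for the service}} \times 100
```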

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set the baseline in accordance with service-specific capacity plan thresholds; measure and perform trend analysis over time. The expected trend for this metric is level or upward from the baseline, depending on the growth of service utilization and customer PBAs.

Metric name

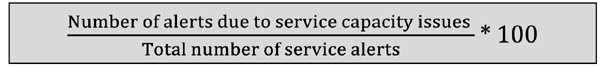

Percentage of alerts caused by capacity issues

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, change manager

Description

This is a proactive measurement of service performance based on service capacity issues. It is important to note that service capacity alerts are primarily considered in terms of technical performance thresholds (i.e. event management) although metrics such as labor efficiency variance can provide alerts for service human resource capacity issues (i.e. staffing levels for service delivery workloads).

This is a valuable metric for proactive demand management. Capacity-driven alerts result from continuously monitoring the PBA and comparing service performance to the capacity plan. Capacity alert thresholds should be set for each deployed service to support informed decision making.

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set the service-specific event management threshold baseline in accordance with the capacity plan; measure and perform trend analysis over time. The expected trend for this metric is level or downward from the baseline, depending on capacity management actions to meet evolving service demand.

Metric name

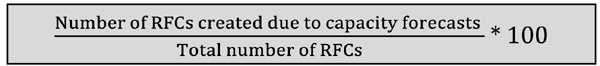

Percentage of RFCs created due to capacity forecasts

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, service desk, problem manager, change manager

Description

This metric provides an indicator of service capacity plan validity. It reflects the need to continuously monitor demand against PBAs. The volume of RFCs generated because of capacity utilization trends is an indicator of the effectiveness of the capacity management process and can be used as a trigger for initiating capacity plan updates.
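Assuming the denominator is all RFCs raised in the same period, the metric can be written as:

```latex
\text{Capacity-forecast RFCs (\%)} = \frac{\text{RFCs raised because of capacity utilization forecasts}}{\text{all RFCs raised in the period}} \times 100
```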

Measurement description

Formula:

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set the baseline in accordance with utilization projections in the capacity plan; measure and perform trend analysis over time. The expected trend for this metric is level or downward from the baseline, depending on the evolving accuracy of demand forecasts and the timeliness of capacity plan updates in recognition of patterns of business activity (PBAs).

Metric name

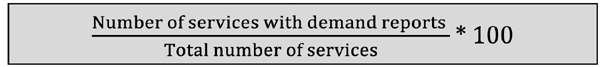

Percentage of services with demand trend reports

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, supplier manager, change manager

Description

This metric is closely linked to the ‘Percentage of services with capacity plans’ and ‘Percentage of RFCs created due to capacity forecasts’ metrics. It is a high-level profile of capacity management performance efficacy in terms of continuous monitoring, tracking, analyzing and reporting service demand. The measurement is valuable for:

- Evaluating performance of the capacity management process

- Assessing the validity and reliability of capacity utilization forecasts

- Identifying capacity management CSI opportunities.

Measurement description

Formula:

Note

This AQL allows for one per cent of delivered services to be without demand trend reports to account for pipeline activities such as service redesign, service pilots and services pending retirement.

Metric name

Number of services without capacity thresholds

Metric category

Capacity management

Suggested metric owner

Capacity manager or service manager

Typical stakeholders

IT operations manager, service level manager, supplier manager, change manager

Description

This metric is closely linked to the ‘Percentage of alerts caused by capacity issues’ metric. It provides insight into how consistently the capacity management process has been implemented across services. The measurement is valuable for:

- Evaluating performance of the capacity management process

- Assessing the level of service risk exposure due to capacity utilization

- Identifying service design CSI opportunities.

Measurement description

This is a simple quantitative tally of the delivered services that do not have capacity utilization (i.e. demand management) thresholds established as part of operations performance monitoring.
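A minimal sketch of the tally, assuming the delivered services can be listed (for example from the service catalog) and that the thresholds configured in the monitoring tool can be exported as a mapping from service name to threshold settings; both structures and all names are hypothetical. A service missing from the mapping, or mapped to an empty set of thresholds, is counted.

```python
def services_without_thresholds(delivered: set[str], thresholds: dict[str, dict[str, float]]) -> list[str]:
    """Delivered services with no capacity utilization threshold configured."""
    # thresholds.get() returns None for unknown services; an empty dict is also falsy.
    return sorted(s for s in delivered if not thresholds.get(s))


delivered = {"email", "payroll", "crm"}
thresholds = {"email": {"storage_used_pct": 80.0}, "crm": {}}
missing = services_without_thresholds(delivered, thresholds)
print(len(missing), missing)  # 2 ['crm', 'payroll']
```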

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Set the baseline in accordance with service design criteria for the capacity management process; measure and perform trend analysis over time. The expected trend for this metric is level or downward from the baseline, depending on the maturity of capacity management process implementation as part of service design.

IT service continuity management metrics

IT Service Continuity Management (ITSCM) is central to Business Continuity Management (BCM) as an inherent part of risk reduction involving disaster recovery/continuity of operations (DR/COOP) planning. The ITSCM process ensures service designs incorporate the redundancy and recovery options that provide the necessary resilience to meet operational availability established in SLAs.

IT service continuity planning draws on business information from a BIA, including the level of business risk tolerance for unavailability of the service. A critical element of the ITSCM process is regular verification testing of service continuity plans. The ITSCM metrics presented in this section are:

- Percentage of services with a BIA

- Percentage of services tested for continuity

- Percentage of ITSCM plans aligned to BCM plans

- Number of successful continuity tests

- Percentage of services with continuity plans

- Number of services with annual continuity reviews.

Metric name

Percentage of services with a BIA

Metric category

IT service continuity management

Suggested metric owner

Continuity manager

Typical stakeholders

Customers, management

Description

A BIA provides valuable information to ITSCM and should be performed, at a minimum, for all major services. The analysis identifies all areas of a business service or unit that are critical to the success of the organization. Additionally, it details what a potential loss of service and/or data would mean to the business and how the business would be affected.

This metric provides an understanding of which services have an up-to-date BIA, which allows ITSCM to create better contingency plans.

Measurement description

Formula:

This metric can be collected from within the ITSCM process or through the BCM process.

Frequency

Measured: Quarterly

Reported: Annually

Acceptable quality level: N/A

Range: N/A

Metric name

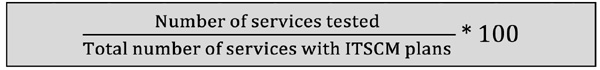

Percentage of services tested for continuity

Metric category

IT service continuity management

Suggested metric owner

Continuity manager

Typical stakeholders

Customers, management

Description

This quantitative metric allows the organization to understand the volume of services tested during an actual continuity test. In some circumstances, only a limited number of services can be tested because of the time or resources available. The metric therefore helps in deciding how many tests to perform each year.

Continuity testing requires detailed planning and commitment from several areas of the organization, and must have awareness and support from the highest levels of the organization. Depending on the industry, this metric may be required by government regulations or legislation.

Measurement description

Formula: The first formula compares the services tested with only those services that have ITSCM plans.

The second formula provides an understanding of tested services as compared to the total number of services offered by the organization.

These metrics combine to demonstrate the extent of testing performed during the continuity test.
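Assuming both formulas are simple ratios of service counts, the sketch below computes them side by side. The service identifiers are hypothetical, and restricting the first numerator to tested services that actually have ITSCM plans is an assumption.

```python
def continuity_test_coverage(tested: set[str], with_plans: set[str], all_services: set[str]) -> tuple[float, float]:
    """Return (% of services with ITSCM plans that were tested, % of all services tested)."""
    if not with_plans or not all_services:
        raise ValueError("service sets must not be empty")
    pct_of_planned = 100.0 * len(tested & with_plans) / len(with_plans)
    pct_of_all = 100.0 * len(tested & all_services) / len(all_services)
    return pct_of_planned, pct_of_all


all_services = {f"svc-{i}" for i in range(10)}
with_plans = {f"svc-{i}" for i in range(5)}
tested = {f"svc-{i}" for i in range(4)}
print(continuity_test_coverage(tested, with_plans, all_services))  # (80.0, 40.0)
```

Read together, the example shows most planned services were exercised (80%), while the test covered less than half of the overall portfolio (40%).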

Frequency

Measured: Annually

Reported: Annually

Acceptable quality level: All services with ITSCM plans should be tested at least annually.

Range: Ranges can vary widely, depending on the number and types of tests performed each year.

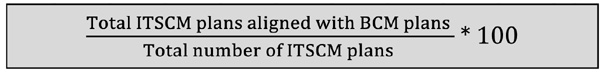

Metric name

Percentage of ITSCM plans aligned to BCM plans

Metric category

IT service continuity management

Suggested metric owner

Continuity manager

Typical stakeholders

Customers, management

Description

As IT becomes more business- and service-focused, alignment with the changing business environment is crucial to overall success. For ITSCM, alignment with BCM ensures that continuity plans recover both the IT infrastructure and the business services according to business requirements.

This metric helps demonstrate IT’s commitment to the business through the alignment of these plans. An important input to continuity plans is the BIA, described earlier, which provides the information needed to closely link IT and business services.

Measurement description

Formula:

The metric depends on the organization having a BCM function. Many organizations rely on IT to provide this functionality through ITSCM.

Frequency

Measured: Annually

Reported: Annually

Acceptable quality level: 100%

Range: If BCM plans are available, IT must align to them.

Metric name

Number of successful continuity tests

Metric category

IT service continuity management

Suggested metric owner

Typical stakeholders

Customers, management

Description

A previous metric focused on the number of services tested during a continuity test. This metric helps to understand the success of the test. Continuity tests are performed to demonstrate the organization’s ability to recover services in a timely manner during a disaster or crisis. Therefore, successful testing is critical to provide a high level of confidence to customers and stakeholders that, in the event of a disaster, the organization can recover business services.

This metric may be mandatory, based on government regulations or industry standards. A successful test should ultimately be determined by the customer or testing body. This success should be fully documented and certified by management.

Measurement description

Formula: N/A

This metric is a simple tally (count) of the successful tests and can be augmented by a percentage. Evidence of testing should be properly maintained and available for review or audit.
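A minimal sketch of the tally and its optional percentage. It assumes each test is recorded with two flags: whether the customer or testing body judged it successful, and whether management has documented and certified that result. Counting a test only when both are true is an assumption, as is the `ContinuityTest` record.

```python
from dataclasses import dataclass


@dataclass
class ContinuityTest:
    """Hypothetical record of one continuity test."""
    name: str
    passed: bool     # success as judged by the customer or testing body
    certified: bool  # success documented and certified by management


def successful_tests(tests: list[ContinuityTest]) -> tuple[int, float]:
    """Tally of successful, certified tests and that tally as a percentage of all tests."""
    count = sum(1 for t in tests if t.passed and t.certified)
    pct = 100.0 * count / len(tests) if tests else 0.0
    return count, pct


tests = [
    ContinuityTest("payroll failover", True, True),
    ContinuityTest("email restore", True, True),
    ContinuityTest("crm data-centre switch", True, False),
    ContinuityTest("erp recovery", False, False),
]
print(successful_tests(tests))  # (2, 50.0)
```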

Frequency

Measured: Annually or after testing

Reported: Annually or after testing

Acceptable quality level: N/A

Range: N/A

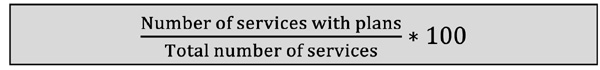

Metric name

Percentage of services with continuity plans

Metric category

IT service continuity management

Suggested metric owner

Continuity manager

Typical stakeholders

Customers, management

Description

Evidence of continuity plans for all services requiring continuity must be both available for review and up to date based on business requirements. This metric provides management with the information to understand the level of continuity planning and potential readiness.

Based on the type of service provided, or the industry, continuity plans may be required by regulatory bodies. Therefore, this metric provides the information necessary to demonstrate the organization’s effort to protect the business from a potential disaster.

Measurement description

Formula:

Eventually, all services requiring continuity must have plans that detail:

- Continuity requirements

- Recovery plans

- Resources required

- Timeline (prioritization)

- Stakeholders

- Logistics and facilities.

Frequency

Measured: Annually

Reported: Annually

Acceptable quality level: 100%

Range: All selected services must have continuity plans.

Metric name

Number of services with annual continuity reviews

Metric category

IT service continuity management

Suggested metric owner

Continuity manager

Typical stakeholders

Customers, management

Description

Continuity reviews should align to continuity testing to ensure that continuity plans meet the requirements of the business. All services should be reviewed to determine:

- If continuity is required

- If continuity plans are still relevant

- If continuity plans are up to date

- If resources are available to support the plans.

This metric provides the assurance that IT is maintaining continuity plans and is protecting the business from potential disaster. Attendees for review meetings include:

Measurement description

Formula: N/A

This metric is a simple tally (count) of the implemented services with annual service continuity reviews and can be augmented by a percentage. Evidence of review meetings should be captured and stored as part of the overall continuity documentation. This evidence might include:

- Meeting agenda

- Meeting notes

- Attendee list

- Updates to plans.

Frequency

Measured: Annually

Reported: Annually

Acceptable quality level: N/A

Range: N/A

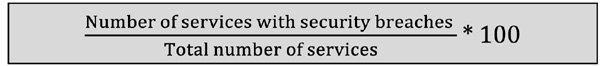

Information security management metrics

The Information Security Management (ISM) process is part of the IT governance framework that establishes the directives, policies, practices and responsibilities for protecting information assets from unauthorized access. The purpose of the ISM process is to ensure that:

- Information is accessed by and/or disclosed to only those with the need and authority to know (confidentiality)

- Information assets are complete, accurate and protected from unauthorized modification (integrity)

- Information is available and usable when needed, and the IT systems in which it resides can resist penetration attacks and have the fault tolerance to recover from or prevent failures (availability)

- The information exchange infrastructure can be trusted (authenticity and non-repudiation).

The following ISM process metrics are provided in this section:

- Detection of unauthorized devices

- Detection of unauthorized software

- Secure software/hardware configurations

- Percentage of systems with automatic vulnerability scanning program installed

- Malware defenses implemented

- Application software security measures

- Number of information security audits performed

- Number of security breaches

- Percentage of services with security breaches

- Downtime caused by Denial of Service (DoS) attacks

- Number of DoS threats prevented

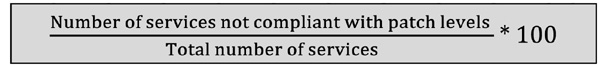

- Percentage of services not compliant with patch levels

- Number of network penetration tests conducted

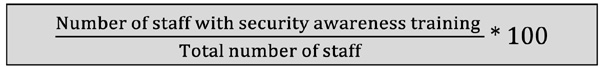

- Percentage of staff with security awareness training

- Percentage of incidents due to security issues

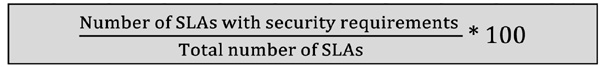

- Percentage of SLAs with security requirements.

Metric name

Detection of unauthorized devices

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, asset owners

Description

Organizations must first identify information owners and asset owners and establish an inventory of authorized devices. With the asset inventory assembled, tools can pull information from network assets, such as switches and routers, about the machines connected to the network. Every machine, whether physical or virtual, directly connected to the network or attached via VPN, currently running or shut down, should be included in the organization's asset inventory.

Measurement description

Formula: N/A

The system must be capable of identifying, within 24 hours, any new unauthorized device connected to the network. The asset inventory database and alerting system must be able to identify the location, department, and other details of where authorized and unauthorized devices are plugged into the network.
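The core comparison behind this metric is a set difference between what the network reports and what the asset inventory authorizes, followed by a check that each unauthorized device triggered an alert inside the 24-hour window. The sketch below assumes devices are keyed by MAC address and that switch discovery records a first-seen time and port; these data structures are hypothetical, not the format of any particular discovery tool.

```python
from datetime import datetime, timedelta

# Detection deadline stated in the measurement description.
ALERT_DEADLINE = timedelta(hours=24)


def unauthorized_devices(inventory, discovered):
    """Return {mac: sighting} for devices seen on the network but absent from the inventory."""
    return {mac: seen for mac, seen in discovered.items() if mac not in inventory}


def alert_failures(unauthorized, alerts, deadline=ALERT_DEADLINE):
    """Return sorted MACs whose alert is missing or arrived more than `deadline` after first sighting."""
    failures = []
    for mac, seen in unauthorized.items():
        alert_time = alerts.get(mac)
        if alert_time is None or alert_time - seen["first_seen"] > deadline:
            failures.append(mac)
    return sorted(failures)


# Hypothetical inventory and discovery data keyed by MAC address.
inventory = {"00:1a:2b:3c:4d:5e": {"owner": "Finance", "department": "Accounts"}}
discovered = {
    "00:1a:2b:3c:4d:5e": {"first_seen": datetime(2025, 1, 6, 8, 0), "port": "sw1/12"},
    "66:77:88:99:aa:bb": {"first_seen": datetime(2025, 1, 6, 9, 0), "port": "sw2/3"},
    "de:ad:be:ef:00:01": {"first_seen": datetime(2025, 1, 6, 10, 0), "port": "dmz1/1"},
}
alerts = {"66:77:88:99:aa:bb": datetime(2025, 1, 6, 9, 5)}

rogue = unauthorized_devices(inventory, discovered)
for mac, seen in sorted(rogue.items()):
    print(mac, "on port", seen["port"])  # location detail for each unauthorized device
print("alert failures:", alert_failures(rogue, alerts))  # alert failures: ['de:ad:be:ef:00:01']
```

Keeping the switch port in each sighting is one way to satisfy the requirement that the system report where an unauthorized device is plugged in.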

Control test

The evaluation team should connect hardened test systems to at least 10 locations on the network, including a selection of subnets associated with demilitarized zones (DMZs), workstations, and servers. The evaluation team must then verify that the systems generate an alert or e-mail notice regarding the newly connected systems within 24 hours of the test machines being connected to the network.

Frequency

Measured: Every 12 hours

Reported: As detection occurs

Acceptable quality level: Unauthorized systems are automatically detected and isolated from the network within one hour of the initial alert, and a follow-up alert or e-mail notification is sent when isolation is achieved.

Range: N/A

Note

While the 24-hour and one-hour timeframes represent the current metric to help organizations improve their state of security, in the future organizations should strive for even more rapid alerting and isolation, with notification about an unauthorized asset connected to the network sent within two minutes and isolation within five minutes.
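Because the current and future timeframes differ only in their limits, one check can evaluate a control-test result against either set. The sketch below combines the 24-hour alert window from the control test with the one-hour isolation window from the acceptable quality level. It assumes, as the acceptable quality level does for the one-hour window, that isolation is measured from the initial alert; the note does not say whether the five-minute target uses the same reference point, so treat that as an assumption. Function names are hypothetical.

```python
from datetime import datetime, timedelta

# Current thresholds (control test and acceptable quality level) and the future target (note).
CURRENT = {"alert": timedelta(hours=24), "isolate": timedelta(hours=1)}
TARGET = {"alert": timedelta(minutes=2), "isolate": timedelta(minutes=5)}


def meets_thresholds(connected, alerted, isolated, limits):
    """True if the alert came within limits['alert'] of connection and isolation
    within limits['isolate'] of the alert (assumed reference point for isolation)."""
    if alerted is None or isolated is None:
        return False
    return (alerted - connected <= limits["alert"]
            and isolated - alerted <= limits["isolate"])


def control_test_passed(results, limits, min_locations=10):
    """results: one (connected, alerted, isolated) tuple per test location."""
    return len(results) >= min_locations and all(
        meets_thresholds(c, a, i, limits) for c, a, i in results
    )


connected = datetime(2025, 1, 6, 10, 0, 0)
alerted = datetime(2025, 1, 6, 10, 1, 30)   # 90 seconds after connection
isolated = datetime(2025, 1, 6, 10, 20, 0)  # 18.5 minutes after the alert
print(meets_thresholds(connected, alerted, isolated, CURRENT))  # True
print(meets_thresholds(connected, alerted, isolated, TARGET))   # False
print(control_test_passed([(connected, alerted, isolated)] * 10, CURRENT))  # True
```

The same result passes today's metric but would fail the faster target, which is the gap the note asks organizations to close.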

Metric name

Detection of unauthorized software

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, software asset owners

Description

The system must be capable of identifying unauthorized software by detecting an attempt to either install or execute it, notifying enterprise administrative personnel within 24 hours through an alert or e-mail. Systems must block installation, prevent execution, or quarantine unauthorized software within one additional hour.

Measurement description

Formula:

Score = 100% if no unauthorized software is found. Subtract one per cent for each piece of unauthorized software that is found. Going forward, if the unauthorized software is not removed, the score is reduced by a further two per cent each consecutive month.
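The scoring rule can be sketched as a small function. The text leaves two points open, so this sketch makes them explicit assumptions: the two-per-cent monthly reduction is applied per piece of software that remains unremoved, and the score is not allowed to fall below zero. The function name and input shape are hypothetical.

```python
def unauthorized_software_score(findings):
    """Score one monthly measurement.

    findings: one integer per piece of unauthorized software found, giving the number of
    consecutive months it has remained unremoved since it was first found (0 = found this
    month). Assumes the two-per-cent penalty applies per unremoved piece and that the
    score cannot fall below zero.
    """
    score = 100
    for months_unremoved in findings:
        score -= 1                      # one per cent for each piece found
        score -= 2 * months_unremoved   # two per cent for each consecutive month not removed
    return max(score, 0)


print(unauthorized_software_score([]))         # 100
print(unauthorized_software_score([0, 0, 3]))  # 91
```

In the second call, three pieces are found (minus 3) and one of them has gone unremoved for three months (minus 6), giving 91%.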

Control test

The evaluation team must move a benign software test program that is not included in the authorized software list to 10 systems on the network. The evaluation team must then verify that the systems generate an alert or e-mail regarding the new software within 24 hours.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: The system must automatically block or quarantine the unauthorized software within one additional hour. The evaluation team must verify that the system provides details of the location of each machine with this new test software, including information about the asset owner.

Range: N/A

Metric name

Secure software/hardware configurations

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, software asset owners

Description

Organizations can meet this metric by developing a series of standard images and secure storage servers for hosting these images. Commercial and/or free configuration management tools can then be employed to compare the operating system and application settings of managed machines against the standard image configurations used by the organization and flag any deviations. File integrity monitoring software should be deployed on servers as part of the base configuration. Centralized solutions such as Tripwire® are preferred over stand-alone solutions.

Measurement description

Formula:

A score of 50% is awarded for using an automated tool (e.g. Tripwire®) with a central monitoring/reporting component. The remaining 50% is based on the percentage of servers on which the solution is deployed.
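The two halves of the score combine additively, which the following sketch shows. The function name and parameters are hypothetical; it simply awards 50 points when a centrally monitored tool is in use and scales the remaining 50 points by the share of servers running the solution.

```python
def configuration_score(central_tool_in_use, servers_with_fim, total_servers):
    """Return the 0-100 score: 50 for a centrally monitored tool, plus 50 x deployment coverage."""
    if total_servers <= 0 or not 0 <= servers_with_fim <= total_servers:
        raise ValueError("servers_with_fim must be between 0 and total_servers (> 0)")
    tool_points = 50.0 if central_tool_in_use else 0.0
    coverage_points = 50.0 * servers_with_fim / total_servers  # share of servers covered
    return tool_points + coverage_points


print(configuration_score(True, 180, 200))   # 95.0
print(configuration_score(False, 180, 200))  # 45.0
```

Only full deployment of a centrally monitored tool reaches the 100% acceptable quality level.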

Control test

The evaluation team must move a benign test system that does not contain the official hardened image, but that does contain additional services, ports and configuration file changes, onto the network. This must be performed on 10 different, randomly selected network segments, using either real or virtual systems. The evaluation team must then verify that the systems generate an alert or e-mail notice regarding the changes to the software within 24 hours.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 100% (derived per the formula description above)

The system must automatically block or quarantine the unauthorized software within one additional hour. The evaluation team must verify that the system provides details of the location of each machine with this new test software, including information about the asset owner.

Range: N/A

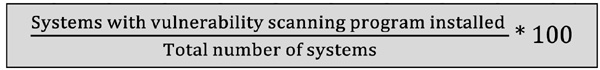

Metric name

Percentage of systems with automatic vulnerability scanning program installed

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, software asset owners

Description

This metric focuses on continuous security vulnerability assessment and remediation. As vulnerabilities related to unpatched systems are discovered by scanning tools, security personnel should determine and document the amount of time that elapses between the public release of a patch for the system and the vulnerability scan that detected it. Tools (e.g. Security Center®, Secunia®, QualysGuard®, etc.) should be deployed and configured to run automatically.
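Documenting the elapsed time is a date subtraction per finding. The sketch below assumes each scan finding has already been exported as a tuple of host, patch identifier, public release date and scan date; the identifiers and dates are hypothetical and do not reflect any scanning product's report format.

```python
from datetime import date

# Hypothetical scan findings: (host, patch identifier, public patch release date, scan date).
findings = [
    ("web01", "patch-A", date(2025, 1, 14), date(2025, 1, 20)),
    ("db01", "patch-B", date(2024, 12, 10), date(2025, 1, 20)),
]


def patch_latency_days(findings):
    """Return {(host, patch): days between public patch release and the scan that flagged it}."""
    return {(host, patch): (scanned - released).days
            for host, patch, released, scanned in findings}


latency = patch_latency_days(findings)
print(latency)                                # {('web01', 'patch-A'): 6, ('db01', 'patch-B'): 41}
print(sum(latency.values()) / len(latency))   # 23.5
```

Tracking the average (or the worst case) over time shows whether remediation is keeping pace with patch releases.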

Measurement description

Formula:

Control test

The evaluation team must verify that scanning tools have successfully completed their weekly or daily scans for the previous 30 cycles of scanning, by reviewing archived alerts and reports. If a scan could not be completed in that timeframe, the evaluation team must verify that an alert or e-mail was generated indicating that the scan did not finish.
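The review of archived results reduces to one rule per cycle: a scan passes if it completed, or if it did not complete but an alert or e-mail was generated. The sketch below assumes the archive can be exported as a chronological list of records with two boolean fields; the field and function names are hypothetical.

```python
def verify_scan_history(cycles, required=30):
    """Check the most recent `required` scan cycles.

    cycles: chronological list of dicts with boolean keys 'completed' and 'alert_sent'.
    A cycle passes if it completed, or if it did not complete but an alert/e-mail was sent.
    Returns (passed, failing_positions), positions counted within the reviewed window.
    """
    if len(cycles) < required:
        raise ValueError(f"only {len(cycles)} archived cycles; {required} required")
    window = cycles[-required:]
    failing = [i for i, c in enumerate(window)
               if not c["completed"] and not c["alert_sent"]]
    return not failing, failing


history = [{"completed": True, "alert_sent": False} for _ in range(30)]
history[12] = {"completed": False, "alert_sent": True}   # unfinished, but alerted: acceptable
history[20] = {"completed": False, "alert_sent": False}  # unfinished and silent: a failure
print(verify_scan_history(history))  # (False, [20])
```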

Frequency

Measured: Daily or weekly

Reported: Monthly

Acceptable quality level: 100% of machines on the network running vulnerability scans, with patch scores of 95% or higher.

Range: +/- 5% acceptable (daily/weekly patch scores)
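The acceptable quality level combines two conditions: every machine must be running scans, and every scanned machine must have a patch score of at least 95%. The sketch below checks both, assuming a mapping from host to its latest patch score (with `None` for hosts that have no automatic scanning installed). The coverage figure it returns is one reading of the metric's name; the +/- 5% range tolerance is not modeled because the text does not say how it applies.

```python
def scanning_aql(machines, min_patch_score=95.0):
    """
    machines: {host: latest patch score (0-100), or None if no automatic scanning is installed}.
    Returns (coverage_pct, aql_met).
    """
    if not machines:
        return 0.0, False
    scores = [s for s in machines.values() if s is not None]
    coverage = 100.0 * len(scores) / len(machines)  # percentage of systems with scanning
    aql_met = coverage == 100.0 and all(s >= min_patch_score for s in scores)
    return coverage, aql_met


print(scanning_aql({"web01": 98.0, "web02": 96.5, "db01": None, "app01": 91.0}))  # (75.0, False)
print(scanning_aql({"web01": 98.0, "web02": 96.5}))  # (100.0, True)
```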

Metric name

Malware defenses implemented

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, software asset owners

Description

Relying on policy and user action to keep anti-malware tools up to date has been widely discredited, as many users have proven incapable of consistently handling this task. To ensure anti-virus signatures are kept up to date, effective organizations use automation. They use the built-in administrative features of enterprise end-point security suites to verify that anti-virus, anti-spyware, and host-based intrusion detection system (IDS) features are active on every managed system.

Measurement description

Formula: Determine the per cent of systems that are running anti-virus programs and the per cent of systems that are properly configured, then average the two. The system must identify any malicious software that is installed, attempted to be installed, executed, or attempted to be executed on a computer system within one hour.
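The averaging step can be sketched as below. It assumes each managed system has already been assessed for two flags, whether anti-virus is running and whether it is properly configured (for example, current signatures and active host-based IDS features, per the description); how those flags are collected is outside this hypothetical function.

```python
def malware_defense_score(systems):
    """
    systems: list of (av_running, properly_configured) booleans, one pair per managed system.
    Returns (pct_running, pct_configured, score), where score averages the two percentages.
    """
    if not systems:
        return 0.0, 0.0, 0.0
    n = len(systems)
    pct_running = 100.0 * sum(1 for running, _ in systems if running) / n
    pct_configured = 100.0 * sum(1 for _, configured in systems if configured) / n
    return pct_running, pct_configured, (pct_running + pct_configured) / 2


fleet = [(True, True), (True, True), (True, False), (False, False)]
print(malware_defense_score(fleet))  # (75.0, 50.0, 62.5)
```

Reporting the two component percentages alongside the average makes it clear whether a low score comes from missing software or from misconfiguration.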

Control test

The evaluation team must move a benign software test program that appears to be malware but is not included in the official authorized software list, such as a European Institute for Computer Anti-virus Research (EICAR) standard anti-virus test file or benign hacker tools, to 10 systems on the network via a network share.

Metric name

Application software security measures

Metric category

Information security management

Suggested metric owner

Information security manager

Typical stakeholders

IT operations manager, information owners, software asset owners

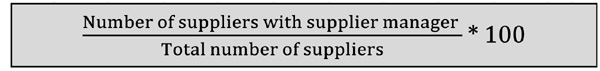

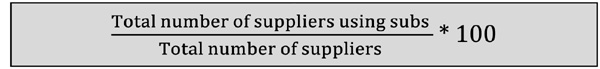

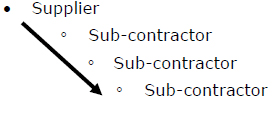

Description