CHAPTER 6: SERVICE OPERATION METRICS

Service operation is where the majority of metrics are collected, as production services are provided to customers. Service operation achieves effectiveness and efficiency in the delivery and support of services, ensuring value for both the customer and the service provider. It maintains operational stability while allowing for changes in design, scale, scope and service levels. This stage of the lifecycle is the culmination of the efforts made in strategy, design and transition: service operation delivers the results of the previous lifecycle phases to customers through the successful delivery of services, creating high levels of customer satisfaction.

Operational metrics are used primarily in operations to maintain the infrastructure and within CSI to find opportunities for improvement. The metrics in this chapter are tied to the processes found in service operation. The metrics sections for this chapter are:

- Event management metrics

- Incident management metrics

- Request fulfillment metrics

- Problem management metrics

- Access management metrics

- Service desk metrics.

Event management metrics

Event management is important to nearly all other metrics. Monitoring the environment is a major aspect of event management, and the pre-defined monitors collect the majority of the measurements. These metrics are in line with several KPIs that support this process. The metrics presented in this section are:

- Percentage of events requiring human intervention

- Percentage of events that lead to incidents

- Percentage of events that lead to changes

- Percentage of events categorized as exceptions

- Percentage of events categorized as warnings

- Percentage of repeated events

- Percentage of events resolved with self-healing tools

- Percentage of events that are false positives

- Number of incidents resolved without business impact.

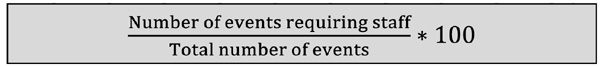

Metric name

Percentage of events requiring human intervention

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

While event management relies upon tools for monitoring and managing events, there is still a need for staff to be involved to resolve and close certain events.

This downward trending metric provides an understanding of staff involvement with event management. It can also help determine if the organization is truly exploiting the full benefits of the tools used within event management. Increasing the number of events managed with the available tools will improve consistency and timeliness of event resolution and will allow staff to focus on more complex tasks.

Measurement description

Formula:

This metric should be monitored regularly to ensure progress is made in the utilization of tools to resolve events. This will also demonstrate a more proactive approach to managing the environment.
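The formula for this metric appears only as an image in the original and is not reproduced here. Assuming it is the number of events requiring human intervention divided by all events in the period, multiplied by 100, the calculation and the check against the 25% AQL (lower is better) can be sketched in Python as follows. The function names and counts are hypothetical.

```python
def percentage(part: int, total: int) -> float:
    """Return part as a percentage of total (0.0 when total is zero)."""
    if total == 0:
        return 0.0
    return part / total * 100


def rate_lower_is_better(value: float, aql: float) -> str:
    """Classify a downward-trending metric against its acceptable quality level."""
    if value > aql:
        return "unacceptable (CSI opportunity)"
    if value == aql:
        return "acceptable (maintain/improve)"
    return "exceeds (reset AQL to lower level)"


# Hypothetical monthly counts exported from the monitoring and ITSM tools.
events_total = 4_000
events_needing_staff = 900

pct = percentage(events_needing_staff, events_total)
print(f"{pct:.1f}% -> {rate_lower_is_better(pct, aql=25.0)}")
```

The exact-equality band (= 25%) is taken literally from the range; with real percentages you may prefer to treat values within a small tolerance of the AQL as acceptable.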

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | 25% |
| Range | > 25% unacceptable (CSI opportunity); = 25% acceptable (maintain/improve); < 25% exceeds (reset AQL to lower level) |

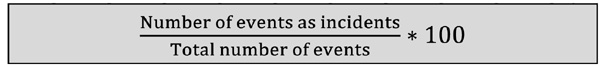

Metric name

Percentage of events that lead to incidents

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer, SLM

Description

As more of the environment is monitored, exceptions (events that have significance for services) can be captured, categorized and escalated to the appropriate process quickly. Of particular interest are those exceptions that require incident management processing.

This upward trending metric helps increase the speed at which incidents are resolved. While it would be optimal to reduce the number of incidents, capturing them quickly within event management will reduce downtime and increase customer satisfaction.

Measurement description

Formula:

Utilizing this metric in combination with incident management metrics will provide a more complete picture of the life of an incident and how the organization is maturing in managing events and incidents.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | N/A |
| Range | N/A |

Metric name

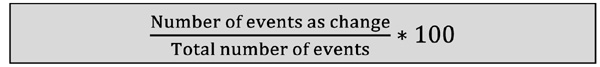

Percentage of events that lead to changes

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer, change management, SLM

Description

There can be multiple reasons for an exception event to be handled by change management. These include:

- Threshold warnings

- Monitoring patch levels

- Configuration scans

- Software license monitoring.

Any of these situations may require an RFC in order to properly manage the necessary change to either establish or re-establish the norm or monitoring threshold. This demonstrates how event management creates a more proactive environment for managing services and increasing value to the customers.

Measurement description

Formula:

Utilizing this metric in combination with change management metrics will provide a more complete picture of the change and how the organization is maturing with event and change management.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | N/A |
| Range | N/A |

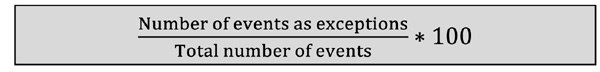

Metric name

Percentage of events categorized as exceptions

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

Exception events are events that require additional handling and are passed to other processes for further action. These processes are usually:

- Incident management

- Problem management

- Change management.

This metric provides a high-level look at these types of events and, in particular, shows the volume of exception events compared to all events. This can be an important metric for an organization trying to become more proactive, as it reveals measurable actions such as:

- Improved use of automation

- Expeditious handling of incidents

- Creating more standard/pre-approved changes

- Identification of potential problems.

Measurement description

Formula:

The growth and maturation of event management will not only help identify these types of events but will ultimately help prevent or eliminate them through process integration.
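Counting event categories is a simple frequency calculation. A minimal sketch, assuming each exported event record has a `category` field with values such as `informational`, `warning` and `exception` (the field name and values are assumptions, not a specific tool's schema); the same result also serves the warnings metric that follows:

```python
from collections import Counter


def category_shares(events: list[dict]) -> dict[str, float]:
    """Return each event category's share of all events, as a percentage."""
    counts = Counter(event["category"] for event in events)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {category: count / total * 100 for category, count in counts.items()}


# Hypothetical event records exported from the monitoring tool for one month.
events = (
    [{"category": "informational"}] * 70
    + [{"category": "warning"}] * 25
    + [{"category": "exception"}] * 5
)

shares = category_shares(events)
print(f"exceptions: {shares['exception']:.1f}%, warnings: {shares['warning']:.1f}%")
```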

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | N/A |
| Range | N/A |

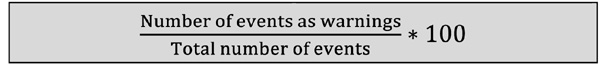

Metric name

Percentage of events categorized as warnings

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

As the previous metrics show, categorization of events is extremely important in determining how an event will be handled. For many organizations, the most common type of managed event is the warning event. These include:

This upward trending metric allows the organization to become more proactive in service provision. A warning event allows action to be taken to prevent an outage before the event becomes an exception event.

Measurement description

Formula:

The metric is usually based on thresholds set within capacity and availability management.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | N/A |
| Range | N/A |

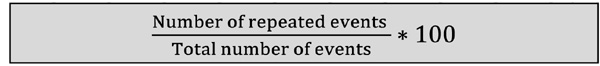

Metric name

Percentage of repeated events

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

This metric provides an understanding of the number of repeated events, primarily those categorized as warnings or exceptions, that occur within the environment. Recurring events can be the most problematic for service delivery and can damage the reputation of the organization. Once detected, these events can be forwarded to problem management for further action and elimination.

As with many other event management metrics, this metric enhances the organization’s ability to be more proactive in managing and providing services.

Measurement description

Formula:

This measurement can be categorized in many ways to better understand the occurrences. Categorizations include:

- By occurrence type

- By service

- By overall events

- By CI

- By configuration category or CI type.
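One way to count repeated events is sketched below. It assumes a repeat means the same event type on the same CI earlier in the reporting period, which is a simplifying assumption; your monitoring tool may correlate events differently. The per-CI counter supports the "by CI" categorization listed above, and the sample data is hypothetical.

```python
from collections import Counter


def repeated_event_stats(events: list[tuple[str, str]]) -> tuple[float, Counter]:
    """Return the percentage of repeated events and the repeat count per CI.

    Each event is a (ci, event_type) pair; an event counts as a repeat when the
    same pair has already occurred earlier in the period.
    """
    seen: set[tuple[str, str]] = set()
    repeats_by_ci: Counter = Counter()
    for ci, event_type in events:
        key = (ci, event_type)
        if key in seen:
            repeats_by_ci[ci] += 1
        else:
            seen.add(key)
    if not events:
        return 0.0, repeats_by_ci
    total_repeats = sum(repeats_by_ci.values())
    return total_repeats / len(events) * 100, repeats_by_ci


events = [
    ("db-01", "disk_space_warning"),
    ("db-01", "disk_space_warning"),
    ("web-02", "cpu_exception"),
    ("db-01", "disk_space_warning"),
    ("web-02", "service_restart"),
]
pct, by_ci = repeated_event_stats(events)
print(f"{pct:.0f}% repeated; by CI: {dict(by_ci)}")
```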

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Range | N/A |

Metric name

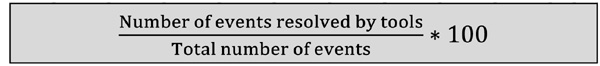

Percentage of events resolved with self-healing tools

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

This metric provides an indication of the overall exploitation of tools and automation when handling events. Using tools to provide corrective actions when events occur brings repeatability and consistency. Self-healing tools use scripts to analyze and correct certain types of events (usually with simple corrective actions). Not all events can be handled by these types of tools, so careful consideration must be given when selecting which events will be handled in this manner.

We suggest creating policies to provide direction in the use of self-healing tools and to enhance decision making within the event management process. This should be an upward trending metric as organizations become more familiar with events and the self-healing tools used to correct them.

Measurement description

Formula:

The metric may be provided within an ITSM tool or within the self-healing tools. In many cases, measurements from both tools will provide a comprehensive view of this metric.
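Because the ITSM tool and the self-healing tool may each hold part of the picture, a combined view needs to avoid double counting. A minimal sketch, assuming both tools can export the IDs of events they closed automatically (the IDs below are hypothetical), merges the two sources with a set union and checks the result against the 10% AQL, where higher is better:

```python
def self_healing_percentage(
    itsm_auto_resolved: set[str],
    tool_auto_resolved: set[str],
    all_event_ids: set[str],
) -> float:
    """Percentage of events closed by self-healing scripts.

    IDs reported by either source are merged, so an event recorded in both
    the ITSM tool and the self-healing tool is counted only once.
    """
    if not all_event_ids:
        return 0.0
    healed = (itsm_auto_resolved | tool_auto_resolved) & all_event_ids
    return len(healed) / len(all_event_ids) * 100


def rate_higher_is_better(value: float, aql: float) -> str:
    """Classify an upward-trending metric against its acceptable quality level."""
    if value < aql:
        return "unacceptable (improvement opportunity)"
    if value == aql:
        return "acceptable (maintain/improve)"
    return "exceeds (continue exploring more uses)"


all_events = {f"EVT{n}" for n in range(1, 101)}  # 100 events this quarter
from_itsm = {"EVT1", "EVT2", "EVT3", "EVT4"}
from_tool = {"EVT3", "EVT4", "EVT5", "EVT6", "EVT7", "EVT8"}

pct = self_healing_percentage(from_itsm, from_tool, all_events)
print(f"{pct:.0f}% self-healed -> {rate_higher_is_better(pct, aql=10.0)}")
```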

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 10% (increase this percentage over time) |
| Range | < 10% unacceptable (improvement opportunity); = 10% acceptable (maintain/improve); > 10% exceeds (continue exploring more uses) |

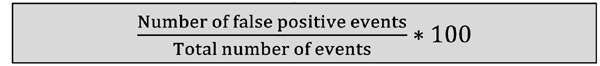

Metric name

Percentage of events that are false positives

Metric category

Event management

Suggested metric owner

Operations center management

Typical stakeholders

Technical staff, service desk, customer

Description

False positive events are a nuisance to staff and waste valuable time as they are investigated and managed. This metric reveals improvement opportunities within the service or processes. It can help identify areas throughout the service management lifecycle where certain items may have been missed or overlooked.

Once a false positive is discovered, the monitoring tool can be corrected or that area of monitoring can be eliminated if deemed unnecessary.

Measurement description

Formula:

You may experience difficulties finding tools to identify and report this type of metric. These types of events may need manual re-categorization while working within the event management process. If this metric is utilized, ensure the process has a procedure or work instructions to address these events.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 1% |
| Range | > 1% unacceptable (CSI opportunity); = 1% acceptable (maintain/improve); < 1% exceeds (best practice potential) |

Metric name

Number of incidents resolved without business impact

Metric category

Event management and incident management

Suggested metric owner

Operations center management/incident manager

Typical stakeholders

Technical staff, service desk, customer, SLM

Description

While it seems focused on incident management, this metric can be a direct indication of the benefit event management provides. Proactively monitoring the environment to discover issues in real time allows incident management to resolve an incident before it impacts the business.

The relationship between incident and event management can have a dramatic impact on the way incidents are managed. As both processes continue to mature, a proactive approach will become the norm within the organization, which will improve service provision and increase customer satisfaction.

Measurement description

Formula: N/A

An integrated service management tool or suite of tools can provide this metric. It may also require integration with monitoring tools.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | N/A |
| Range | N/A |

Incident management metrics

Incident management is considered the major process of service operation. This process is all about keeping services running and customers satisfied. Incident management is a reactive process; however, if it is integrated with event management, these reactive activities can take on a proactive nature (what we call ‘proactively reactive’), that is, reacting to and resolving an incident before it impacts the organization. These metrics are in line with several KPIs that support this process. The metrics presented in this section are:

- Average incident resolution time

- Average escalation response time

- Percentage of re-opened incidents

- Incident backlog

- Percentage of incorrectly assigned incidents

- First call resolution

- Percentage of re-categorized incidents.

Metric name

Average incident resolution time

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, required IT functions, service owner, SLM

Description

This metric represents the average time taken to resolve an incident to the customer’s satisfaction. Average resolution time should be based on the assigned priority. An example is shown below:

- Priority 1 – 2 hrs

- Priority 2 – 4 hrs

- Priority 3 – 8 hrs

- Priority 4 – 24 hrs.

Resolution times must be negotiated and documented within the incident management process. Any service requiring different resolution times should have them documented within the SLA.

Measurement description

Formula:

This measurement should be gathered in pre-defined timeframes (e.g. daily or weekly). The metric can be calculated for all incidents or broken down by incident priority for a more detailed view. In addition, ITSM tools can provide the number of incidents that failed to meet the prioritized resolution time. This can be used to identify potential issues within the incident management process.
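Averaging by priority and counting misses against the example targets above (Priority 1 – 2 hrs through Priority 4 – 24 hrs) can be sketched as follows. It assumes resolution time is measured in hours from logging to resolution and that every incident carries a priority from 1 to 4; both are assumptions for illustration, and the weekly data is hypothetical.

```python
from collections import defaultdict
from statistics import mean

# Example targets from the text, in hours per priority.
TARGET_HOURS = {1: 2, 2: 4, 3: 8, 4: 24}


def resolution_summary(incidents: list[tuple[int, float]]) -> dict[int, tuple[float, int]]:
    """Return {priority: (average hours to resolve, number of target breaches)}."""
    by_priority: dict[int, list[float]] = defaultdict(list)
    for priority, hours in incidents:
        by_priority[priority].append(hours)
    return {
        priority: (mean(hours), sum(h > TARGET_HOURS[priority] for h in hours))
        for priority, hours in sorted(by_priority.items())
    }


# Hypothetical (priority, hours from logging to resolution) pairs for one week.
week = [(1, 1.5), (1, 3.0), (2, 3.5), (3, 6.0), (3, 9.0), (4, 20.0)]
for priority, (avg, breaches) in resolution_summary(week).items():
    print(f"P{priority}: average {avg:.2f} h, {breaches} over target")
```

Reporting breaches alongside the average matters because a single long-running incident can hide behind an acceptable mean.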

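| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Meet each priority level, for example: Priority 1 – 2 hrs; Priority 2 – 4 hrs; Priority 3 – 8 hrs; Priority 4 – 24 hrs |
| Range | |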

Metric name

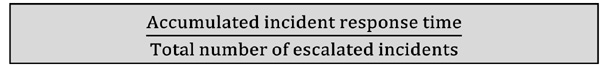

Average escalation response time

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, required IT functions, service owner, SLM

Description

This metric represents the average time taken for the initial response by a tier two or tier three team to an escalated incident. Average response time should be based on the assigned priority. For example:

- Priority 1 – 15 minutes

- Priority 2 – 30 minutes

- Priority 3 – 1 hr

- Priority 4 – 2 hrs.

Response times must be negotiated and documented within the incident management process. Any service requiring different response times should be documented within the SLA.

Measurement description

Formula:

This measurement should be gathered in pre-defined timeframes (e.g. weekly or monthly). The metric can be calculated for all escalated incidents or broken down by priority for a more detailed view of escalations. In addition, ITSM tools can provide the number of incidents that failed to meet the prioritized response time. This can be used to identify potential issues within the escalation procedure.

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Meet each priority level, for example: Priority 1 – 15 minutes; Priority 2 – 30 minutes; Priority 3 – 1 hr; Priority 4 – 2 hrs |
| Range | |

Metric name

Percentage of re-opened incidents

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, required IT functions, service owner, SLM

Description

This metric represents the percentage of tickets re-opened due to occurrences such as:

- Inadequate resolution

- Dissatisfied customers

- Improper incident documentation

- Incident recurrence within a set period of time (24 hours).

This should be a low to downward trending metric. Re-opened tickets show a possible lack of due diligence within the incident management process as key activities could have been missed or improperly performed. A persistent percentage might demonstrate an opportunity for either process improvement(s) or additional training for incident management staff.

Measurement description

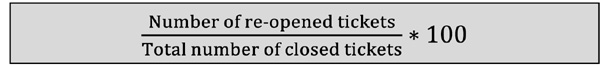

The number of re-opened tickets will be collected over a period of time within the ITSM tool and compared against the total number of closed tickets during that same timeframe.

Formula:

This measurement should be gathered in pre-defined timeframes (e.g. weekly or monthly). Some ITSM tools will not allow a closed incident to be re-opened, making this metric unobtainable. Therefore, other metrics can be used or created to better understand the occurrences described above.
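Where the ITSM tool cannot re-open closed incidents, recurrence can be approximated from newly logged incidents instead. The sketch below assumes a closed incident counts as re-opened when a new incident is logged against the same CI within the 24-hour window from the list above; matching on CI is an assumption, and you may prefer to match on caller or category. The data is hypothetical.

```python
from datetime import datetime, timedelta

REOPEN_WINDOW = timedelta(hours=24)  # the "set period of time" from the text


def reopen_percentage(closed: list[tuple[str, datetime]],
                      new: list[tuple[str, datetime]]) -> float:
    """Estimate the percentage of closed incidents that effectively re-opened.

    closed: (ci, closed_at) for incidents closed in the period.
    new:    (ci, opened_at) for incidents logged in the period.
    """
    if not closed:
        return 0.0
    reopened = 0
    for ci, closed_at in closed:
        # A new incident on the same CI soon after closure suggests the fix did not hold.
        if any(new_ci == ci and closed_at < opened_at <= closed_at + REOPEN_WINDOW
               for new_ci, opened_at in new):
            reopened += 1
    return reopened / len(closed) * 100


t = datetime(2024, 3, 1, 9, 0)
closed = [("mail-01", t), ("vpn-02", t), ("crm-03", t), ("hr-04", t)]
new = [
    ("mail-01", t + timedelta(hours=5)),   # within 24 h: counts as re-opened
    ("vpn-02", t + timedelta(hours=30)),   # outside the window
]
print(f"{reopen_percentage(closed, new):.0f}% re-opened")  # 25% re-opened
```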

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | 2% |
| Range | > 2% unacceptable (CSI opportunity); = 2% acceptable (maintain/improve); < 2% exceeds (best practice potential) |

Metric name

Incident backlog

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, service owner, SLM

Description

A backlog of any type can create issues for service provision. An incident backlog is compounded by the fact that service issues exist and customers may be directly affected, which might impact the business. This metric can be used to demonstrate one or more issues, including:

- Service desk capacity

- High average incident resolution time

- Incident storms

- ITSM tool issues

- Changes to services or processes.

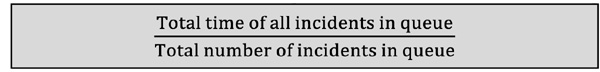

Measurement description

Typically, this measurement is represented by the incident queue length found within the ITSM tool. Another measurement that becomes equally important is the queue time. These measurements can be viewed in real time or as an average within a defined period of time.

Formula:

These measurements should be gathered in pre-defined timeframes (e.g. hourly or daily) and should be reported in increments such as hourly, by shift or within business critical timeframes.
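A single backlog snapshot, taken for example every hour, reduces to the queue length and the average time the open incidents have been waiting; snapshots can then be averaged by shift or business-critical timeframe. A minimal sketch, assuming you can list the logging time of every incident still in the queue (the times below are hypothetical):

```python
from datetime import datetime, timedelta


def backlog_snapshot(open_since: list[datetime], now: datetime) -> tuple[int, timedelta]:
    """Return (queue length, average time in queue) for incidents still open at `now`."""
    if not open_since:
        return 0, timedelta(0)
    waits = [now - opened for opened in open_since]
    return len(waits), sum(waits, timedelta(0)) / len(waits)


now = datetime(2024, 3, 4, 12, 0)
queue = [now - timedelta(hours=h) for h in (1, 2, 6)]
length, avg_wait = backlog_snapshot(queue, now)
print(length, avg_wait)  # 3 3:00:00
```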

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Defined by business requirements and reported as averages within defined timeframes. These timeframes can be specified within an SLA. |
| Range | Defined in the SLAs |

Metric name

Percentage of incorrectly assigned incidents

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, required IT functions, service owner, SLM

Description

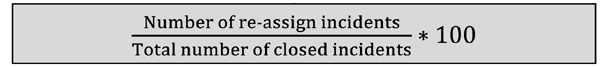

Assigning an incident correctly is critical to the timely escalation and resolution of the issue. Incorrectly assigned incidents can bounce from one group to another while the caller(s) remain without a resolution, wasting time and resources.

This should be a low to downward trending metric. One cause of this issue can be the method of incident categorization, either within the process or within the ITSM tool. Using incident models and templates can help to reduce this issue.

Measurement description

Incorrectly assigned incidents can be found in the number of re-assignments logged within the ITSM tool.

Formula:

This measurement should be gathered within a defined timeframe (e.g., weekly or monthly). The results from this metric can provide insights into:

- Increased use of templates within the tool

- Better training for service desk agents

- Process improvements.
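Counting reassignments from the assignment history can be sketched as below. It assumes the ITSM tool can export, per incident, the ordered list of resolver groups the incident was assigned to after initial logging, so an incident that touched more than one distinct group was reassigned at least once. The incident IDs and group names are hypothetical.

```python
def reassignment_percentage(history: dict[str, list[str]]) -> float:
    """Percentage of incidents assigned to more than one distinct resolver group.

    history maps an incident ID to the ordered resolver groups it was
    assigned to, e.g. {"INC1": ["Network", "Security"]}.
    """
    if not history:
        return 0.0
    reassigned = sum(1 for groups in history.values() if len(set(groups)) > 1)
    return reassigned / len(history) * 100


history = {f"INC{n}": ["Desktop support"] for n in range(1, 10)}
history["INC10"] = ["Database", "Storage"]  # bounced once before resolution
print(f"{reassignment_percentage(history):.0f}% of incidents reassigned")
```

Using distinct groups means an incident re-queued to the same team is not counted as misassigned; if your process treats that as an error, count list length instead.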

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | 10% |
| Range | > 10% unacceptable (CSI opportunity); = 10% acceptable (maintain/improve); < 10% exceeds (best practice potential) |

Metric name

First call resolution

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, service owner, SLM

Description

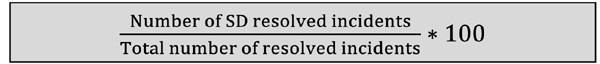

The knowledge and expertise of the service desk are critical when executing incident management. This metric demonstrates the maturity of the service desk as more incidents should be resolved by the service desk without escalation.

Of course, not all incidents can be resolved by the service desk, due to the complexity of the incident, security levels, authorization levels or experience. However, as knowledge is gained, documented and shared, higher levels of first call resolution will become the norm for incident management, increasing the value of the service desk.

Measurement description

ITSM tools provide a ‘resolved by’ field or tab (some have both) to document the individual or team that resolved the incident. Searching and reporting on this field will show the resolution source and demonstrate how the service desk is maturing.

Formula:

This measurement should be gathered incrementally in pre-defined timeframes (e.g., weekly or monthly). Further investigation into resolved incidents will show the sources of knowledge used to resolve them (e.g., the known error database, or KEDB).
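Reporting on the ‘resolved by’ field reduces to a count. A minimal sketch, assuming the field can be exported as one value per resolved incident and that the service desk appears under the hypothetical team name `Service desk`; the weekly values are illustrative and would feed the baseline and trend named as the AQL:

```python
from collections import Counter


def first_call_resolution(resolved_by: list[str], service_desk: str = "Service desk") -> float:
    """Percentage of resolved incidents whose 'resolved by' value is the service desk."""
    if not resolved_by:
        return 0.0
    return Counter(resolved_by)[service_desk] / len(resolved_by) * 100


week = ["Service desk"] * 6 + ["Network"] * 2 + ["Database"] * 2
print(f"First call resolution: {first_call_resolution(week):.0f}%")  # First call resolution: 60%
```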

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Develop a baseline and trend going forward |
| Range | N/A |

Metric name

Percentage of re-categorized incidents

Metric category

Incident management

Suggested metric owner

Incident manager

Typical stakeholders

Caller, service desk, service owner

Description

Proper categorization has a dramatic impact on the handling of all incidents. This can be seen during escalation and when using or updating the CMS/CMDB.

This should be a low to downward trending metric. Re-categorizing an incident can occur during the lifecycle of the incident or when reviewing the incident after it has been resolved. No matter when this occurs, it is vital that the incident is properly categorized, as it will become part of your knowledge base.

Measurement description

Many ITSM tools provide the ability to monitor and track changes to the incident categorization. Searching and reporting on these fields will provide valuable information on incident categorization and process efficiency, and will demonstrate how the service desk is maturing.

Formula:

This measurement should be gathered over a period of time (e.g., weekly or monthly). Further investigation into incident categorization will improve the handling of incidents and the information contained in the CMS/CMDB.

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | 10% |
| Range | > 10% unacceptable (CSI opportunity); = 10% acceptable (maintain/improve); < 10% exceeds (best practice potential) |

Request fulfillment metrics

Service requests require consistent handling and a centralized entry point for customers. Request fulfillment processes these service requests and, when integrated with the service catalog, provides a standardized and consistent entry point from which customers can access and request services. These metrics are in line with several KPIs that support this process. The metrics presented in this section are:

- Percentage of overdue service requests

- Service request queue rate

- Percentage of escalated service requests

- Percentage of correctly assigned service requests

- Percentage of pre-approved service requests

- Percentage of automated service requests.

Metric name

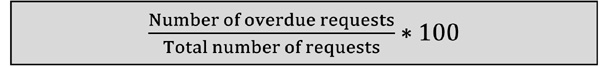

Percentage of overdue service requests

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk, SLM

Description

There is an expectation for delivery when providing requests to customers. Typically, service requests are made through the service catalog or directly to the service desk. In either case, it is appropriate to provide a delivery date or time for the request. The delivery date and time can be specified in the service catalog or an SLA.

Requests that do not meet their specified delivery schedule are considered overdue and must be reported. This metric gives customers and management a high-level view of how service requests are managed.

Measurement description

Formula:

Requests, no matter how big or small, are important to the individual customers. If, for any reason, a request cannot be provided in a timely manner, communicate this to the customer prior to the delivery date/time. While the customer will not be pleased with the delay, they will appreciate the proactive communication.
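A minimal sketch of the overdue calculation, assuming each request record has a due date taken from the service catalog or SLA and a delivery date that stays empty while the request is open. Counting open requests that are already past due as overdue is an interpretation of "do not meet their specified delivery schedule", not something the text specifies; the dates are hypothetical.

```python
from datetime import datetime
from typing import Optional


def overdue_percentage(
    requests: list[tuple[datetime, Optional[datetime]]], now: datetime
) -> float:
    """Percentage of service requests that missed their delivery date.

    Each request is (due_at, delivered_at); delivered_at is None while open.
    """
    if not requests:
        return 0.0
    overdue = 0
    for due_at, delivered_at in requests:
        # Open requests are judged against the current time.
        finished = delivered_at if delivered_at is not None else now
        if finished > due_at:
            overdue += 1
    return overdue / len(requests) * 100


now = datetime(2024, 3, 31, 17, 0)
requests = [
    (datetime(2024, 3, 10), datetime(2024, 3, 9)),   # delivered early
    (datetime(2024, 3, 10), datetime(2024, 3, 12)),  # delivered late
    (datetime(2024, 3, 20), None),                   # open and past due
    (datetime(2024, 4, 5), None),                    # open, not yet due
]
print(f"{overdue_percentage(requests, now):.0f}% overdue")  # 50% overdue
```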

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | 10% |
| Range | > 10% unacceptable (CSI opportunity); = 10% acceptable (maintain/improve); < 10% exceeds (best practice potential) |

Metric name

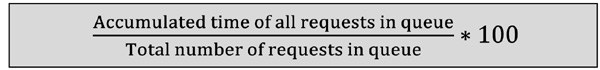

Service request queue rate

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk

Description

The request queue can back up quickly based on the number of requests, time of the year or any number of other situations which cause an increased need from customers and users.

A build-up of the request queue can be a result of several events including:

- New service demand

- Increase in customer/user base

- Major service change

- Request storms

- Security issues or changes.

Measurement description

This measurement is represented by the request queue length found within the ITSM tool. The request queue time is equally important for understanding the magnitude of the requests in the queue. These metrics can be viewed in real time or as averages within a defined period of time.

Formula:

These measurements should be gathered within a defined timeframe (e.g., hourly or daily) and should be reported in increments such as hourly or by shift. They can also be categorized by priority, giving greater meaning to the queue time.

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | Baseline and trend |
| Range | N/A |

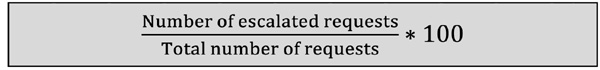

Metric name

Percentage of escalated service requests

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk

Description

This metric represents the percentage of service requests that are escalated to higher tier levels for resolution. Depending on the type of organization, this can be a downward trending metric as more requests are automated within the service catalog or resolved by the service desk.

The metric can help to demonstrate the maturity of the request fulfillment process, and it complements measurements of the time required to resolve and close service requests.

Measurement description

Formula:

This measurement should be gathered within a defined timeframe (e.g., weekly). We recommend reviewing this metric on a monthly to quarterly basis.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Quarterly |
| Acceptable quality level | 25% |
| Range | > 25% unacceptable (CSI opportunity); = 25% acceptable (maintain/improve); < 25% exceeds (consider AQL reset) |

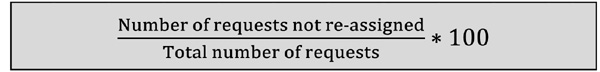

Metric name

Percentage of correctly assigned service requests

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk, tier level teams, SLM

Description

Service requests that require greater authority or functional knowledge and experience are escalated to higher tier teams and therefore must be assigned properly. This ensures prompt handling of the request and reduces closure time.

While many requests will follow a scripted path (i.e. a programmed workflow), there are circumstances when human intervention is required, script errors occur or ad hoc requests are made. All of these can cause assignment errors that delay fulfillment of the request.

Measurement description

Formula:

This can be a difficult metric to capture depending on the ITSM tool and its attributes. Some tools can collect changed team assignments and report them as standard features of the tool. Others might not have this capability and might require methods such as log searches to determine if request assignments have been changed. Scripts can be created to help automate these types of searches and regularly scheduled based on reporting targets or requirements.
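A sketch of the kind of log-search script described above. It assumes a hypothetical log format of `<date> <time> <request ID> assigned_to=<group>`; the pattern, request IDs and group names are illustrative and would need adapting to whatever your ITSM tool actually writes. A request counts as correctly assigned when every logged assignment names the same group.

```python
import re
from collections import defaultdict

# Hypothetical log line format; adapt the pattern to your tool's output.
ASSIGN_RE = re.compile(r"^\S+ \S+ (?P<req>REQ\d+) assigned_to=(?P<group>.+)$")


def correctly_assigned_percentage(log_lines: list[str]) -> float:
    """Percentage of requests whose assignment group never changed."""
    groups: dict[str, list[str]] = defaultdict(list)
    for line in log_lines:
        match = ASSIGN_RE.match(line.strip())
        if match:
            groups[match["req"]].append(match["group"])
    if not groups:
        return 0.0
    correct = sum(1 for assigned in groups.values() if len(set(assigned)) == 1)
    return correct / len(groups) * 100


log = [
    "2024-03-04 10:15:02 REQ1042 assigned_to=Network",
    "2024-03-04 10:17:40 REQ1043 assigned_to=Desktop support",
    "2024-03-04 11:02:13 REQ1043 assigned_to=Identity and access",
    "2024-03-04 11:30:55 REQ1044 assigned_to=Desktop support",
]
print(f"{correctly_assigned_percentage(log):.1f}% correctly assigned")
```

Scheduled weekly, the result can be compared directly with the 98% AQL for this metric.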

| Frequency | |
| --- | --- |
| Measured | Weekly |
| Reported | Monthly |
| Acceptable quality level | 98% |
| Range | < 98% unacceptable (tool or process improvements); = 98% acceptable (maintain/improve); > 98% exceeds |

Metric name

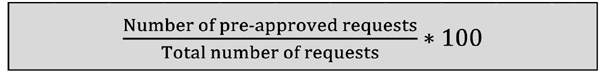

Percentage of pre-approved service requests

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk, management

Description

This metric represents the percentage of service requests that have prior approval for processing and demonstrates the efficiencies gained as requests are delivered to customers. Many requests (security, acquisition, general management) require authorization before they can be fulfilled to the customer’s satisfaction. Depending on the type of request, such as those above, some may not be eligible for pre-approval. Frequently submitted requests with a known low risk can be pre-approved and thus expedited with an automated workflow model built into the ITSM tool.

Measurement description

Formula:

Once a request is designated as pre-approved, consider creating a template or script within the ITSM tool’s automation to fulfill the request, providing higher levels of consistency and performance. This will also allow greater accuracy in measuring the volume of requests and reporting findings.

| Frequency | |
| --- | --- |
| Measured | Monthly |
| Reported | Monthly |
| Acceptable quality level | 25% (look for opportunities to increase) |
| Range | < 25% unacceptable (process improvement opportunity); = 25% acceptable (maintain/improve); > 25% exceeds (reset objectives to higher level) |

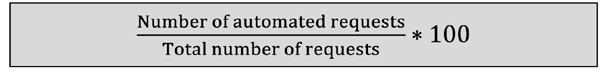

Metric name

Percentage of automated service requests

Metric category

Request fulfillment

Suggested metric owner

Service desk manager

Typical stakeholders

End users, customers, service desk

Description

Many ITSM tools provide methods to automate the handling of service requests to increase the speed and efficiency of fulfillment. Other requests may require manual intervention due to the nature of the request (security, high cost purchases, authorization level).

Careful consideration is needed before automating a service request. We recommend creating a methodology to review the request and walk through all aspects of fulfilling it. Once fully understood and approved, the request can be automated within a request model or template. This should be an upward trending metric as the process and the understanding of requests mature.

Measurement description

Formula:

This measurement can be directly related to an automated tool-based service catalog. Many service catalog tools have automated workflow capabilities which create consistent execution of the activities required to fulfill the request. Request categorization and request types can assist in determining which requests would be best served in an automated manner.
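Measuring the automated share and shortlisting request types for review can be sketched as below, assuming each request record carries its request type and a flag showing whether an automated workflow fulfilled it (the data is hypothetical). The ranking only suggests candidates; as the text stresses, each one still needs a full walkthrough and approval before it is automated.

```python
from collections import Counter


def automated_percentage(requests: list[tuple[str, bool]]) -> float:
    """Percentage of requests fulfilled by an automated workflow."""
    if not requests:
        return 0.0
    return sum(automated for _, automated in requests) / len(requests) * 100


def automation_candidates(requests: list[tuple[str, bool]], top: int = 3) -> list[tuple[str, int]]:
    """Rank manually fulfilled request types by volume, highest first."""
    manual = Counter(rtype for rtype, automated in requests if not automated)
    return manual.most_common(top)


month = (
    [("Password reset", True)] * 40
    + [("New laptop", False)] * 12
    + [("Software install", False)] * 30
    + [("Mailbox access", False)] * 18
)
print(f"{automated_percentage(month):.0f}% automated")
print(automation_candidates(month))
```

High-volume manual types are ranked first because automating them moves the metric most; types that need security or purchase authorization would normally be filtered out before review.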

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 25% to start, increasing to an aggressive goal such as 75%

Range:

- < 25%: unacceptable (improvement opportunity)

- = 25%: acceptable (maintain/improve)

- > 25%: exceeds (grow/improve)

Problem management metrics

Problem management metrics should help mature the process activities so that problem management becomes proactive, resolving potential issues before they occur. Problem management records known errors and provides a valuable knowledge base to incident management in the form of the Known Error Database (KEDB). These metrics are in line with several KPIs that support this process. The metrics presented in this section are:

- Problem management backlog

- Number of open problems

- Number of problems pending supplier action

- Number of problems closed in the last 30 days

- Number of known errors added to the KEDB

- Number of major problems opened in the last 30 days

- KEDB accuracy.

Metric name

Problem management backlog

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, required IT functions, suppliers

Description

This metric represents the number of problems in a problem queue that are creating a backlog. This backlog can arise for a number of reasons, including:

The problem backlog can be managed within an ITSM tool and should be reviewed regularly by the problem manager and/or team. The backlog should be reported by age (e.g. months in the queue).

Measurement description

Formula: N/A

This measurement should be gathered within a defined timeframe (e.g. monthly, quarterly). It is reported as the number of problems in the queue and the length of time they have been there (30, 60, 90 days). This backlog must be managed because it can have a direct impact on incident and change management as well as on many business processes.
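A minimal sketch of reporting the backlog by age, assuming the tool can export the date each still-open problem was logged. The 30/60/90-day buckets come from the text; counting exactly 30 days as "0-30" is an assumption.

```python
from datetime import date


def backlog_by_age(opened_dates, as_of):
    """Count open problems in 0-30, 31-60, 61-90 and >90 day buckets.

    opened_dates: dates on which each still-open problem was logged (hypothetical input).
    """
    buckets = {"0-30": 0, "31-60": 0, "61-90": 0, ">90": 0}
    for opened in opened_dates:
        age = (as_of - opened).days
        if age <= 30:
            buckets["0-30"] += 1
        elif age <= 60:
            buckets["31-60"] += 1
        elif age <= 90:
            buckets["61-90"] += 1
        else:
            buckets[">90"] += 1
    return buckets


report_date = date(2024, 6, 30)
open_problems = [date(2024, 6, 20), date(2024, 5, 15), date(2024, 4, 10), date(2024, 1, 5)]
print(backlog_by_age(open_problems, report_date))
```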

Frequency

Measured: Monthly

Reported: Monthly, quarterly

Acceptable quality level: Minimum number determined by the organization

Range: N/A

Metric name

Number of open problems

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, required IT functions, suppliers, management

Description

This metric includes problems found in several states including:

- Open (working)

- Pending (on hold)

- In queue (backlogged).

Open problems provide opportunities for the organization. Many problems can be raised proactively, allowing the organization to prevent issues from occurring or recurring. All problems must be prioritized to ensure proper handling and to establish their level of importance to the organization.

A well-managed number of problems is a sign of a healthy organization: it demonstrates both reactive problem management (working in conjunction with incident management) and proactive problem management (linking to availability management) that seeks out opportunities.

Measurement description

Formula: N/A

This measurement is readily available in most ITSM tools. If it is not, a manual search for problems in an active state (as listed above) can provide it.
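Where the tool has no built-in count, the manual search described above can be approximated with a short script. The state labels and the `(problem_id, state)` export format are assumptions; map them to whatever your ITSM tool uses.

```python
from collections import Counter

# Active states named in the description; the exact labels in a given ITSM tool are assumptions
ACTIVE_STATES = {"open", "pending", "in queue"}


def count_open_problems(problems):
    """problems: iterable of (problem_id, state) tuples from a hypothetical tool export.

    Returns the total number of active problems and a count per active state.
    """
    by_state = Counter(state for _, state in problems if state in ACTIVE_STATES)
    return sum(by_state.values()), dict(by_state)


export = [("PRB1", "open"), ("PRB2", "pending"), ("PRB3", "closed"),
          ("PRB4", "in queue"), ("PRB5", "open"), ("PRB6", "resolved")]
total, breakdown = count_open_problems(export)
print(total, breakdown)
```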

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: Minimum number determined by the organization

Range: N/A

Metric name

Number of problems pending supplier action

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, required IT functions, suppliers, supplier management, SLM

Description

Many problems stem from supplier issues, including problems already known to the supplier. When this occurs, the resolution of the problem is out of the hands of problem management and becomes dependent on the supplier.

Resolution for the problem could be found in:

- An existing patch (quick fix)

- A custom-built patch

- Within the next release of patches

- Within the next release of the component (e.g. OS, application, drivers).

In these cases, be aware of any service level targets for problem management: the resolution is no longer under your control, which could result in a breached target.

Measurement description

Formula: N/A

There is no formula for this metric, but the number should still be kept as low as possible. It should be gathered within a defined timeframe (e.g. monthly). Categorizing this type of problem within the ITSM tool provides a simple way to collect and extract this metric.

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Metric name

Number of problems closed in the last 30 days

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, SLM

Description

This metric is important for determining the progress and maturity of problem management. Once the problem resolution is implemented and accepted in production, the problem must be formally closed as described in the process documentation. When the problem is closed, the problem ticket becomes part of the knowledge base for future reference.

The time period and its starting point must be defined (e.g. weekly, monthly, quarterly) to create consistency for this metric.

This metric can also be used to measure problem closures over longer periods, such as 60-, 90- and 120-day increments. The timeframe(s) should be based on organizational needs.

Measurement description

Formula: N/A

This metric should trend upward relative to the number of problems opened. An upward trend demonstrates improvements in problem management and problem-solving techniques. This metric should be gathered within a defined timeframe such as those described in the description.
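To track the closure trend against openings, a sketch like the following counts problems opened and closed in a trailing window and reports their ratio. The `(opened, closed)` date-pair format is hypothetical, and the `window_days` parameter lets the same function cover the 60-, 90- and 120-day variants mentioned in the description.

```python
from datetime import date, timedelta


def closure_ratio(problems, as_of, window_days=30):
    """Problems opened and closed in the trailing window, and the closed/opened ratio.

    problems: list of (opened, closed_or_None) date pairs (hypothetical format).
    The window is the `window_days` days ending on `as_of`, inclusive of `as_of`.
    """
    start = as_of - timedelta(days=window_days)
    opened = sum(1 for o, _ in problems if start < o <= as_of)
    closed = sum(1 for _, c in problems if c is not None and start < c <= as_of)
    ratio = closed / opened if opened else None  # undefined when nothing was opened
    return opened, closed, ratio


records = [
    (date(2024, 5, 1), date(2024, 6, 10)),
    (date(2024, 6, 5), date(2024, 6, 25)),
    (date(2024, 6, 12), None),
    (date(2024, 6, 20), None),
    (date(2024, 3, 1), date(2024, 6, 15)),
]
print(closure_ratio(records, date(2024, 6, 30)))
```

A ratio that rises over successive periods corresponds to the upward trend described above; `None` marks a window with no openings, where a ratio is undefined.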

Frequency

Measured: Dependent upon the time period defined

Reported: Dependent upon the time period defined

Acceptable quality level: N/A

Range: N/A

Metric name

Number of known errors added to the KEDB

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, knowledge management, suppliers, technical teams

Description

This metric tracks activity in the Known Error Database (KEDB). While problem management will continue to create known errors from existing problems, proactive problem management researches potential problems and creates known errors before an incident occurs.

The KEDB is a valuable tool for incident management and should be kept up to date and protected at all times. This is one of the first repositories searched when trying to resolve an incident.

Measurement description

Formula: N/A

This metric should trend upward, particularly when proactive problem management techniques are being used. Known errors related to a problem should be assigned a status that reflects the current status of the problem. This keeps the KEDB relevant to current issues within the organization.

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: N/A

Range: N/A

Metric name

Number of major problems opened in the last 30 days

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, technical team, suppliers, management, SLM

Description

Major problems are those whose impact on the organization is severe or even catastrophic and which may require additional authority or resources to minimize that impact. Ideally, major problems are kept to a minimum.

While this metric is concerned with the number of major problems opened within a defined period, it is equally important to measure major problems closed and reviewed. This helps ensure that all major problems are properly managed.

Measurement description

Formula: N/A

The optimum number is zero, as no organization wants to experience problems at this level. However, since ‘stuff happens’, we must proactively manage our environments to avoid and/or minimize these types of problems. This measurement should be gathered within a defined timeframe (e.g. monthly).

Frequency

Measured: Monthly

Reported: Monthly, quarterly, annually (as required)

Acceptable quality level: N/A

Range: N/A

Metric name

KEDB accuracy

Metric category

Problem management

Suggested metric owner

Problem manager

Typical stakeholders

Service desk, knowledge management, suppliers, technical teams

Description

As mentioned in a previous metric, the KEDB is a valuable tool for incident management and is used continuously as incidents are captured and diagnosed. Incident resolution therefore depends on the accuracy of its information, because incidents occur in real time and carry priority levels that set resolution targets. Poor KEDB information can delay resolution and cause SLA targets to be breached.

The information within the KEDB must be regularly monitored for accuracy through:

- Examination of incidents

- Routine audits of the KEDB

- Technical reviews of the root cause and workaround.

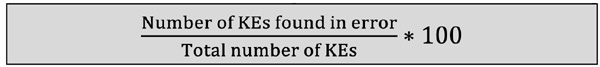

Measurement description

Formula:

In addition, ITSM tools can provide incident and problem information on the creation and use of the KEDB.
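The formula image for KEDB accuracy is not reproduced here. Assuming accuracy is measured as the share of reviewed known-error records that pass an audit or technical review, a minimal sketch follows. The audit input format is hypothetical.

```python
def kedb_accuracy(audit_results):
    """audit_results: mapping of known-error ID -> True if the entry passed review.

    Hypothetical input, e.g. from a routine KEDB audit or technical review.
    Returns the percentage of reviewed entries found accurate, or None if none were reviewed.
    """
    if not audit_results:
        return None
    accurate = sum(1 for ok in audit_results.values() if ok)
    return 100.0 * accurate / len(audit_results)


audit = {"KE101": True, "KE102": True, "KE103": False, "KE104": True}
score = kedb_accuracy(audit)
# Entries that failed review and need correcting
needs_correction = sorted(k for k, ok in audit.items() if not ok)
status = "acceptable" if score == 100.0 else "unacceptable (audit and correct)"
print(f"{score:.1f}% {status}; correct: {needs_correction}")
```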

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: 100% accuracy

Range:

- < 100%: unacceptable (audit and correct)

- = 100%: acceptable

Access management metrics

This process is closely related to information security management. Security management creates security policies covering all aspects of security, and access management carries out activities that align with and support those policies. These metrics are in line with several KPIs that support this process. The metrics presented in this section are:

- Percentage of invalid user IDs

- Percentage of requests that are password resets

- Percentage of incidents related to access issues

- Percentage of users with incorrect access.

Metric name

Percentage of invalid user IDs

Metric category

Access management

Suggested metric owner

Security manager (i.e. CISO)

Typical stakeholders

Security, service desk, management, audit

Description

The unfortunate truth is that many organizations do not properly manage user IDs. As people move on, whether to other roles within the organization or out of it, their user IDs are sometimes not removed and remain on the network.

This metric provides an understanding of the number of IDs that have no ownership (no staff member assigned) and are considered invalid. This can result from situations such as:

- Transfers and promotions

- Termination (usually voluntary)

- Change in employee status (family leave)

- Multiple user IDs for individuals

- Managing vendors or contractors.

In many cases invalid user IDs are not found and reported until a security audit is performed (either internal or external).

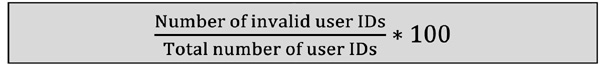

Measurement description

Formula:

This metric should be monitored regularly to ensure the overall protection of the organization and the assets used to provide service.
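A minimal sketch of finding invalid IDs by reconciling a directory export against a list of active staff, assuming the metric is invalid IDs divided by all IDs. All three inputs are hypothetical; in practice they might come from a directory service and an HR system.

```python
def invalid_id_percentage(directory_ids, id_owner, active_staff):
    """Percentage of user IDs with no active owner, plus the invalid IDs themselves.

    directory_ids: all user IDs found on the network (hypothetical directory export)
    id_owner: mapping of user ID -> staff identifier (missing = no owner recorded)
    active_staff: set of staff identifiers currently employed or contracted
    """
    invalid = sorted(
        uid for uid in directory_ids
        if id_owner.get(uid) not in active_staff  # no owner, or owner has left
    )
    pct = 100.0 * len(invalid) / len(directory_ids) if directory_ids else 0.0
    return pct, invalid


ids = ["jsmith", "adoe", "temp_vendor1", "bkim"]
owners = {"jsmith": "E100", "adoe": "E101", "bkim": "E102"}
active = {"E100", "E102"}
pct, invalid = invalid_id_percentage(ids, owners, active)
print(f"{pct:.1f}% invalid: {invalid}")
```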

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: 0% (more of a goal)

Range: N/A

Metric name

Percentage of requests that are password resets

Metric category

Access management

Suggested metric owner

Security manager (i.e. CISO)

Typical stakeholders

Security, service desk, management, request fulfillment, customers

Description

The intricacies and complexity of password standards can lead to a high volume of password resets. Both the password standards and the management of passwords should be based on a security policy.

This metric shows the volume of password resets and can provide insight into potential problems with password standards. It can also demonstrate a need to automate password resets (e.g. self-help tools).

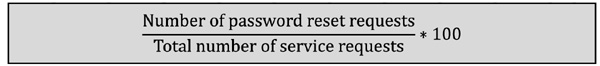

Measurement description

Formula:

This metric will depend on how the organization performs password resets. If resets are performed via a service request the formula above can be used. However, if a self-help tool is used, measurements and metrics from the tool can be used to understand password resets.
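If resets are handled as service requests, the share can be computed from request categories. This sketch assumes the metric is password-reset requests divided by all service requests and that resets carry a hypothetical "password reset" category label; it normalizes case and whitespace so inconsistent labeling does not hide resets.

```python
def password_reset_share(request_categories, reset_label="password reset"):
    """Percentage of service requests whose category is a password reset.

    request_categories: list of category strings from the ITSM tool (hypothetical).
    """
    if not request_categories:
        return 0.0
    resets = sum(1 for c in request_categories if c.strip().lower() == reset_label)
    return 100.0 * resets / len(request_categories)


month = ["Password Reset", "software install", "password reset",
         "hardware", "Password reset", "access request", "hardware", "software install"]
print(f"{password_reset_share(month):.1f}% of requests were password resets")
```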

Frequency

Measured: Monthly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Metric name

Percentage of incidents related to access issues

Metric category

Access management

Suggested metric owner

Security manager (i.e. CISO)

Typical stakeholders

Security, service desk, incident management

Description

Security-related incidents are initially handled by the service desk; however, there may be separate security procedures to authorize resolution of access-related incidents by other groups. Sensitivity to access issues may be greater in organizations with classified systems and services. Access incidents may include:

- Insufficient access rights granted

- Improper level of access granted

- Exposure to sensitive information (e.g. intellectual property)

- Security breach

- Unprotected assets.

Careful consideration and additional training are necessary when developing procedures to handle access-related incidents. Information security management must be consulted when developing or changing these procedures.

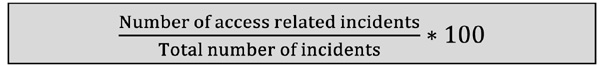

Measurement description

Formula:

Ensure proper identification and categorization of these incidents prior to incident closure. Reporting frequency will depend upon the type of organization and level of security for systems and services.
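The following sketch computes the share of incidents that are access-related and rates it against the range in this section (0% exceeds, below 1% acceptable, 1% or more unacceptable). The category labels are hypothetical and assume incidents were categorized correctly before closure, as advised above.

```python
# Hypothetical category labels mirroring the list of access incidents above
ACCESS_CATEGORIES = {
    "insufficient access", "improper access level", "sensitive data exposure",
    "security breach", "unprotected asset",
}


def access_incident_rating(incident_categories):
    """Return (% of incidents that are access-related, rating against the < 1% AQL)."""
    total = len(incident_categories)
    access = sum(1 for c in incident_categories if c in ACCESS_CATEGORIES)
    pct = 100.0 * access / total if total else 0.0
    if pct == 0.0:
        rating = "exceeds"
    elif pct < 1.0:
        rating = "acceptable"
    else:
        rating = "unacceptable"
    return pct, rating


week = ["hardware"] * 240 + ["software"] * 158 + ["security breach", "insufficient access"]
print(access_incident_rating(week))
```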

Frequency

Measured: Daily or weekly

Reported: Weekly or monthly

Acceptable quality level: < 1%

Range:

- ≥ 1%: unacceptable

- < 1%: acceptable

- 0%: exceeds

Metric name

Percentage of users with incorrect access

Metric category

Access management

Suggested metric owner

Security manager (i.e. CISO)

Typical stakeholders

Security, service desk, management, event management, customers

Description

This metric can be used to understand the number of users with improper rights. This can include:

- Insufficient access

- Inappropriate access rights

- Incorrect group access.

Proper access requires the appropriate level of authorization from management. Although access is granted via management approval, in many cases incorrect access is not found until one of these events occurs:

- A user requests a change or correction to their access

- An access related incident occurs

- An external or internal security audit

- A security clean-up gets underway.

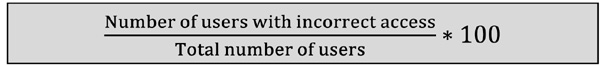

Measurement description

Formula:

Collection of the number of users with incorrect access may come from multiple sources such as event management, incident management and request fulfillment.
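Because users with incorrect access may be reported by several sources, a user flagged twice should be counted once. This sketch assumes the metric is distinct flagged users divided by total users; the source names and inputs are hypothetical.

```python
def incorrect_access_percentage(flagged_by_source, total_users):
    """Combine users flagged by several sources and return (count, % of all users).

    flagged_by_source: mapping of source name -> set of user IDs found with incorrect
    access (source names and inputs are hypothetical). A user flagged by more than
    one source is counted once.
    """
    flagged = set().union(*flagged_by_source.values()) if flagged_by_source else set()
    pct = 100.0 * len(flagged) / total_users if total_users else 0.0
    return len(flagged), pct


sources = {
    "event management": {"u17", "u42"},
    "incident management": {"u42", "u88"},
    "request fulfillment": {"u90"},
}
count, pct = incorrect_access_percentage(sources, total_users=800)
print(f"{count} users ({pct:.2f}%) -> {'acceptable' if pct < 1 else 'unacceptable'}")
```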

Frequency

Measured: Weekly

Reported: Monthly

Acceptable quality level: < 1%

Range:

- ≥ 1%: unacceptable

- < 1%: acceptable

Service desk metrics

The service desk is a function within service operation. Many of the operational processes are carried out by the service desk, so it should be considered a vital function for all organizations. These metrics are in line with several KPIs that support this function. The metrics presented in this section are:

- Average call duration

- Average number of calls per period

- Number of abandoned calls

- Average customer survey results

- Average call hold time.

Metric name

Average call duration

Metric category

Service desk

Suggested metric owner

Operations center management

Typical stakeholders

Service desk, customers, incident management, SLM

Description

This metric directly measures the customer’s experience with the service desk. The customer’s experience begins when the call is initiated, not when it is answered. Therefore, call duration should include:

Consistent management of this metric will:

- Help increase customer satisfaction

- Assist in reducing costs

- Improve management of resources

- Reduce call duration.

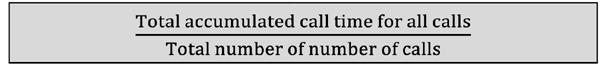

Measurement description

Formula:

Utilizing this metric in combination with incident management or request fulfillment metrics will provide a more complete picture of the customer’s experience with the service desk.
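Since the text measures the experience from call initiation, a sketch might compute the average duration from initiation to call end, alongside the average talk time from answer to end for comparison. The `(initiated, answered, ended)` tuple format is a hypothetical ACD export, and defining duration this way is an assumption, since the book’s own list of what duration should include is not reproduced here.

```python
from datetime import datetime
from statistics import mean


def average_call_times(calls):
    """Return (average minutes from initiation to end, average minutes from answer to end).

    calls: list of (initiated, answered, ended) datetimes from a hypothetical ACD export.
    """
    if not calls:
        return 0.0, 0.0
    # Whole experience: the customer's clock starts when the call is placed
    total = mean((ended - initiated).total_seconds() / 60 for initiated, _, ended in calls)
    # Talk time only: from the moment an agent answers
    talk = mean((ended - answered).total_seconds() / 60 for _, answered, ended in calls)
    return total, talk


calls = [
    (datetime(2024, 6, 3, 9, 0), datetime(2024, 6, 3, 9, 2), datetime(2024, 6, 3, 9, 10)),
    (datetime(2024, 6, 3, 9, 5), datetime(2024, 6, 3, 9, 6), datetime(2024, 6, 3, 9, 11)),
]
print(average_call_times(calls))
```

The gap between the two averages is the time customers spend waiting before an agent answers.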

Frequency

Measured: Weekly

Reported: Monthly

Acceptable quality level: Established by management

Range: Based on established AQL

Metric name

Average number of calls per period

Metric category

Service desk

Suggested metric owner

Service desk manager

Typical stakeholders

Service desk, customers, management

Description

The first requirement for this metric is a clearly defined period. The metric then shows the volume of calls within that period.

This metric is useful for planning the total staffing of the service desk as well as the staffing requirements within each defined period. Industry statistics are available to help plan resources based on call volumes; however, these statistics provide only guidance for staffing levels. Industry formulas are also available to improve staffing decisions.

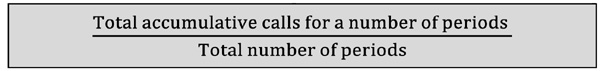

Measurement description

Formula:

For this metric, define the number of periods to use for the collection of information and measurements (e.g. five periods, Monday – Friday for a business week). Collecting all calls during those five periods will then provide the information required to calculate this metric.
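A minimal sketch of the five-period example, assuming each period is one business day and the average is total calls across the periods divided by the number of periods. The call dates are illustrative, not real data.

```python
from collections import Counter
from datetime import date


def average_calls_per_period(call_dates, periods):
    """Average number of calls per period, where each period is one business day.

    call_dates: the date of every call received (hypothetical ACD export)
    periods: the days chosen as collection periods, e.g. Monday to Friday
    """
    period_set = set(periods)
    counts = Counter(d for d in call_dates if d in period_set)
    per_period = {p: counts.get(p, 0) for p in periods}  # include days with no calls
    return sum(per_period.values()) / len(periods), per_period


week = [date(2024, 6, d) for d in range(3, 8)]  # Monday 3 June to Friday 7 June 2024
calls = ([date(2024, 6, 3)] * 120 + [date(2024, 6, 4)] * 95 + [date(2024, 6, 5)] * 100
         + [date(2024, 6, 6)] * 90 + [date(2024, 6, 7)] * 85)
avg, breakdown = average_calls_per_period(calls, week)
print(avg)
```

The per-period breakdown is returned alongside the average because staffing within each period, not just in total, is what the text is concerned with.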

Frequency

Measured: Weekly

Reported: Monthly

Acceptable quality level: N/A

Range: N/A

Metric name

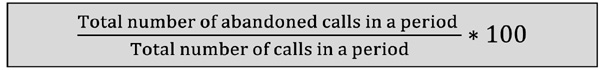

Number of abandoned calls

Metric category

Service desk

Suggested metric owner

Service desk manager

Typical stakeholders

Service desk, customers

Description

Abandoned calls create a direct customer perception of poor service. This metric represents the number of abandoned calls within a defined period of time (e.g. daily, weekly). Abandoned calls are calls in which the customer disconnects before receiving service. The usual cause of abandoned calls is the wait time in the queue.

This metric should be viewed regularly by the service desk and managed to the lowest possible number.

Measurement description

Formula:

This measurement can be collected from the Automated Call Distribution (ACD) function of the telephony system. The metric should be presented as a number rather than a percentage, because high call volumes can make a percentage seem relatively low. If a percentage is required, please reference the formula below.

Combined with other metrics, such as average number of calls per period, this metric helps the service desk manager better manage staff and resource levels to meet customer needs.
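A sketch that reports the count as the primary figure and the percentage only as a supporting figure, assuming the percentage is abandoned calls divided by calls offered. The outcome labels are a hypothetical ACD export format.

```python
def abandoned_calls(call_outcomes):
    """Count abandoned calls and express them as a share of calls offered.

    call_outcomes: one string per call from a hypothetical ACD export, where
    "abandoned" means the caller hung up before being served.
    """
    offered = len(call_outcomes)
    abandoned = sum(1 for outcome in call_outcomes if outcome == "abandoned")
    pct = 100.0 * abandoned / offered if offered else 0.0
    return abandoned, pct


week = ["answered"] * 1940 + ["abandoned"] * 60
count, pct = abandoned_calls(week)
print(f"{count} abandoned calls ({pct:.1f}% of {len(week)} offered)")
```

In this illustrative week, 60 abandoned calls amount to only 3% of volume, which shows why the text prefers presenting the raw number.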

Frequency

Measured: Weekly

Reported: Monthly

Acceptable quality level: Determine current baseline

Range: Strive to continually lower the baseline

Metric name

Average customer survey results

Metric category

Service desk

Suggested metric owner

Service desk manager

Typical stakeholders

Service desk, management, customers

Description

This metric provides an understanding of the customer’s experience with the service desk and their level of satisfaction. Appropriate satisfaction levels and their definitions must be discussed and agreed upon by management before any survey is released.

As the organization’s surveys mature, use metrics from other processes to deepen the understanding of survey results. This will help the organization understand why the results are at their current levels.

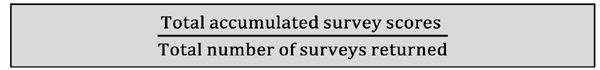

Measurement description

Formula:

Survey scores must be translated into management information. The scores should be categorized into meaningful information such as:

- Outstanding

- Highly satisfied

- Satisfied

- Dissatisfied

- Very dissatisfied.

At times, numbers alone lack the necessary meaning or impact, so we recommend using descriptive wording.
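Translating scores into wording can be as simple as averaging and mapping the result onto bands. The 1-5 scale and the band thresholds here are purely hypothetical; the text requires that management agree the actual levels and definitions before any survey is released.

```python
from statistics import mean

# Hypothetical bands for a 1-5 survey scale; agree the real thresholds with management
BANDS = [
    (4.5, "Outstanding"),
    (3.5, "Highly satisfied"),
    (2.5, "Satisfied"),
    (1.5, "Dissatisfied"),
    (0.0, "Very dissatisfied"),
]


def survey_category(scores):
    """Average the survey scores and translate the result into a wording band.

    Raises statistics.StatisticsError if `scores` is empty.
    """
    avg = mean(scores)
    for threshold, label in BANDS:
        if avg >= threshold:
            return avg, label
    return avg, BANDS[-1][1]


print(survey_category([5, 4, 4, 3, 5, 4]))
```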

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: Highly satisfied

Range: Based on categorization

Metric name

Average call hold time

Metric category

Service desk

Suggested metric owner

Service desk management

Typical stakeholders

Service desk, customer

Description

This metric can be gathered from the ACD function of a telephony system. This is an extremely important metric as it represents the customer’s experience when calling the service desk.

This metric can help identify potential resource issues on the service desk. When discussing hold times, draw on your own experience as a customer to fully understand how calls should be managed. Managing the length of hold times can have a dramatic impact on customer satisfaction ratings. Do not underestimate the importance of managing this metric.

Measurement description

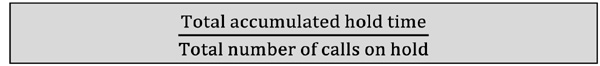

Formula:

In many cases, this metric will be a standard within the ACD function. If not, the measurements can usually be easily extracted and calculated via an external tool such as a spreadsheet.
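When the ACD function does not report average hold time directly, an exported file can be processed outside the telephony system. This sketch uses Python’s standard `csv` module in place of a spreadsheet; the column name `hold_seconds` and the sample data are assumptions.

```python
import csv
import io
from statistics import mean

# Hypothetical ACD export; real column names vary by telephony system
ACD_EXPORT = """call_id,hold_seconds
1001,45
1002,120
1003,15
1004,60
"""


def average_hold_time(csv_text, column="hold_seconds"):
    """Average hold time in seconds from a CSV export (0.0 if there are no calls)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    holds = [float(row[column]) for row in rows]
    return mean(holds) if holds else 0.0


print(f"Average hold time: {average_hold_time(ACD_EXPORT):.0f} s")
```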

Frequency

Measured: Monthly

Reported: Quarterly

Acceptable quality level: Defined by the organization

Range: Set and maintain as low a hold time as possible