8

From Architecture to Application

Analysts, application architects, and software engineers work in cooperation with businesspeople to design and build application systems that leverage event processing. They apply architectural concepts, such as those described in the previous chapters, to the requirements and conditions of particular business problems. The first part of this chapter explores how event data and notifications fit into business applications. The second part describes five very different styles of event-processing application.

Role of Notifications in Business Applications

Event-processing systems are an integral part of a company’s IT fabric, not a separate or isolated activity. They tie into other business applications or are part of those application systems. The primary interface between event-processing components and other application components is through sending and receiving notifications.

Business event processing uses two kinds of notifications:

Transactional notifications —Report an event and cause something else to change

Observational notifications —Report an event but don’t directly change anything

Transactional notifications are in the main flows of continuous-processing, event-driven architecture (EDA) applications. Observational notifications are outside the main application flows (they’re “out of band”), but they affect business decisions, so they have an indirect impact on application systems and on things in the physical world.

When we defined “event” in Chapter 7, we noted that some people think of an event as a thing that causes a change to occur. They’re partially right—many events do cause a change. Others think of an event as a report of a change, happening, or condition. They’re completely right—all events report a change, happening, or condition. If a domino in a row of dominos topples over and causes a second domino to fall over, it’s analogous to a transactional notification. If a domino falls and doesn’t cause another domino to fall, that’s analogous to an observational notification. Many observational notifications are discarded by the recipient (the event consumer) without being used because the recipient isn’t interested in that data.

The analogy holds reasonably well if you look at how complex events work. They are usually synthesized from multiple observational notifications. Observational notifications can have a big indirect effect on the business when combined into a complex event, but one observational notification can’t directly cause a change. Similarly, if one domino leans into a larger domino, the larger domino won’t fall. But if enough small dominos (observational notifications) lean on the big one (complex-event notification), it will fall.

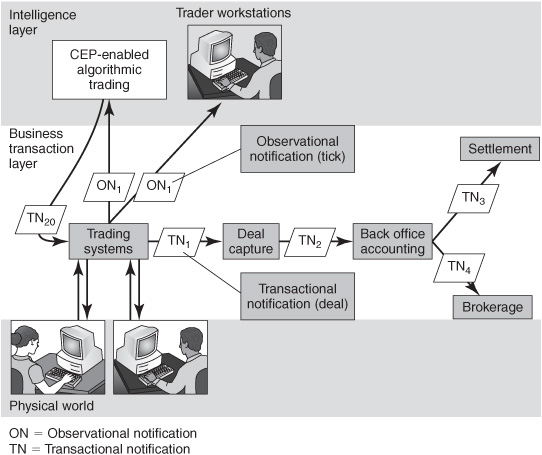

Observational notifications and transactional notifications don’t look different; they’re just data. They differ only in how they are being used. Many business processes involve a mix of transactional and observational notifications. Consider the example of an equity trade. When an investor buys some stock, the deal is immediately captured and reflected in a handful of transactional notifications (TN1 and TN2 in Figure 8-1) at the stock exchange and in the middle-office systems of the financial services companies that carried out the buy and sell sides of the transaction. In the hours and days after the trade, a series of additional transactional notifications (TN3 and TN4) or other kinds of records will be generated in back-office and settlement systems, and in related accounting applications in the brokerage firms of the buyer and seller. These are the operational applications in the business transaction layer of a company’s IT function (see the middle band in Figure 8-1). Transactional notifications have financial and legal implications, so they must not be lost. They contain many data items about the transactions and can be hundreds or thousands of bytes long. Some of the notifications indicate the identity of the buyer, his or her address, the amount of commission to be paid to the broker, and many other things.

Figure 8-1: Notifications in capital markets trading applications.

The same transaction is also immediately reported in a “tick” (ON1). A tick is a short trade-report message—an observational notification that contains the stock symbol, an identifier for the stock exchange, the trade price, the number of shares traded, an optional time stamp, and other optional fields. The whole message may be less than 100 bytes long. A stock exchange publishes ticks, quotes, and other notifications through its real-time market data network to tens of thousands of event consumers. If a copy of a tick is lost due to a network glitch or a problem at a recipient’s site, generally no attempt will be made to recover it, because another trade in the same stock will come along soon reflecting a more-recent price. A tick doesn’t directly cause an event to occur in the event consumers. However, a large set of trade reports combined together as a complex event can cause an algorithmic trading system at a consumer site to kick off a buy or sell transaction (transactional notification TN20). The algorithmic trading system is in the intelligence layer of a company’s IT function (see top band in Figure 8-1).
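To make the tick example concrete, here is a minimal Python sketch of how many observational notifications can combine into one transactional notification. It assumes a hypothetical `Tick` record carrying a few of the fields listed above and a hypothetical rule: if the volume-weighted average price of the last few ticks has risen by at least a threshold over the oldest tick in the window, emit a buy order. The window size, threshold, and 100-share order size are illustrative assumptions, not a real trading strategy. Lost ticks are simply never seen; the rule works with whatever arrives.

```python
from collections import deque
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Tick:
    """Observational notification: a short trade report (illustrative fields)."""
    symbol: str
    exchange: str
    price: float
    shares: int

@dataclass(frozen=True)
class BuyOrder:
    """Transactional notification emitted by the hypothetical trading rule."""
    symbol: str
    shares: int

class MomentumDetector:
    """Hypothetical CEP rule over a count-based window of ticks."""

    def __init__(self, symbol: str, window: int = 5, threshold: float = 0.01):
        self.symbol = symbol
        self.threshold = threshold
        self.ticks: deque = deque(maxlen=window)  # oldest ticks fall out automatically

    def on_tick(self, tick: Tick) -> Optional[BuyOrder]:
        if tick.symbol != self.symbol:
            return None  # not of interest: the observation is simply discarded
        self.ticks.append(tick)
        if len(self.ticks) < self.ticks.maxlen:
            return None  # a single tick never causes a change on its own
        total_shares = sum(t.shares for t in self.ticks)
        vwap = sum(t.price * t.shares for t in self.ticks) / total_shares
        if vwap >= self.ticks[0].price * (1 + self.threshold):
            self.ticks.clear()  # do not fire again on the same evidence
            return BuyOrder(self.symbol, shares=100)  # hypothetical order size
        return None
```

Only the complex event (the rising average across the whole window) produces a `BuyOrder`; each individual `Tick` returns `None`.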

We’ll look at the role of transactional and observational notifications in business applications more closely in the next two sections.

Transactional Notifications

Transactional notifications are the notifications in one-event-at-a-time EDA systems. In our recurring order-fulfillment example, the orders, approved orders, rejected orders, and filled orders are transactional notifications. Transactional notifications vary in size, ranging from a few dozen bytes to 100,000 bytes (100KB) or more. The single most common data format is XML, but binary and other formats are still sometimes used.

Note: Transactional notifications are typically generated by business applications within the company or in its suppliers, customers, or outsourcers.

Each transactional notification is important. If one is lost, a customer won’t get the goods they ordered, a bill may not be sent, the books may not balance, an insurance claim won’t be paid, or some other problem will ensue. The need for data integrity affects the choice of channel used to convey transactional notifications. This kind of notification is often transmitted over a reliable messaging system that stores a copy on disk before sending it, so that it can’t be lost due to a problem in the network or in another server. If a reliable channel is not used, software developers should build equivalent safeguards into the application components. The application programs and database management systems (DBMSs) that handle the notifications must also be implemented with careful attention to data integrity. Software developers follow a set of rules to protect data integrity when writing programs that handle transactional data. The details go beyond the scope of this book, but if you’re interested in knowing more, look up the ACID properties (Atomicity, Consistency, Isolation, and Durability) in Wikipedia.
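The “store a copy on disk before sending” safeguard can be approximated in application code when no reliable channel is available. The following is a minimal sketch of a durable outbox, assuming a hypothetical `send` callable and a local append-only log file; it is not the API of any messaging product. Each notification is written and flushed to disk before a send is attempted, and it leaves the pending set only after the send succeeds, so a network failure leads to a retry rather than a lost order.

```python
import json
import os
from typing import Callable, Dict

class DurableOutbox:
    """Write-ahead outbox for transactional notifications (illustrative sketch)."""

    def __init__(self, path: str, send: Callable[[dict], None]):
        self.path = path
        self.send = send  # hypothetical transport; expected to raise on failure

    def _append(self, record: dict) -> None:
        with open(self.path, "a", encoding="utf-8") as f:
            f.write(json.dumps(record) + "\n")
            f.flush()
            os.fsync(f.fileno())  # the record is on disk before we continue

    def publish(self, msg_id: str, body: dict) -> None:
        self._append({"type": "msg", "id": msg_id, "body": body})  # log first
        self._try_send(msg_id, body)                                # then send

    def _try_send(self, msg_id: str, body: dict) -> bool:
        try:
            self.send({"id": msg_id, **body})
        except Exception:
            return False  # remains pending; recover() will retry it
        self._append({"type": "ack", "id": msg_id})
        return True

    def pending(self) -> Dict[str, dict]:
        """Notifications that were logged but never acknowledged."""
        result: Dict[str, dict] = {}
        if not os.path.exists(self.path):
            return result
        with open(self.path, encoding="utf-8") as f:
            for line in f:
                rec = json.loads(line)
                if rec["type"] == "msg":
                    result[rec["id"]] = rec["body"]
                else:
                    result.pop(rec["id"], None)
        return result

    def recover(self) -> int:
        """Resend every pending notification; return how many succeeded."""
        return sum(self._try_send(i, b) for i, b in self.pending().items())
```

The trade-off of this design is that a crash between a successful send and the acknowledgment record can cause a duplicate delivery, so consumers of such notifications are commonly written to tolerate repeats.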

Ways Applications Communicate Business Events

We’re calling these messages “transactional notifications” because we wear event-colored glasses and believe that this perspective brings some advantages in application design. However, analysts and software developers are already familiar with data in this form as plain old “messages.” They know event data as orders, bills, purchase orders, equity trades, insurance claims, expense reports, deposit and withdrawal notices, payments, or some other term. They also know that messages aren’t the only way to convey the information about a business event from one step to the next in a business process. If it’s not conveyed in transactional notifications using the five principles of EDA (see Chapter 6), it’s conveyed in some other manner, such as:

In parameters or documents attached to a call or method invocation in a request-driven relationship

By being temporarily stored in a file or database to be picked up by the next activity in a time-driven relationship

The task is the same—transferring data about a business event from one agent to the next. In a request- or time-driven approach, the records would be called transactions or business objects rather than messages or transactional notifications.

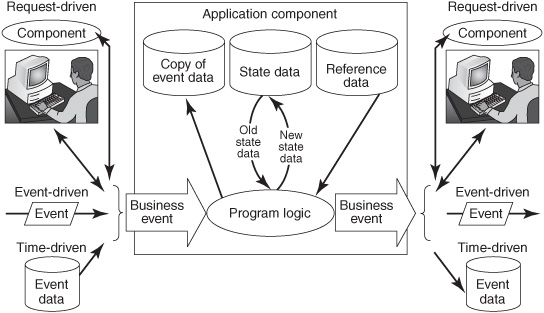

Event Data, State Data, and Reference Data

Regardless of how it is conveyed, event data has a big impact on the application component that receives it. The data reports an event and also causes one or more events to occur in the recipient. The recipient interprets the event data, applies application-processing logic, likely sends messages to other agents, and generally updates some data in files or databases. The data in the application’s files or databases before the event data arrives is state data or reference data (see Figure 8-2).

Developers sometimes find it useful to categorize all application data as event data, state data, or reference data:

Event data —Data about a business event held in an event object or represented in some other manner, such as scattered among multiple documents, business forms, files, databases, web forms, or parameter lists.

State data —Data in an application system that changes as a direct consequence of a routine activity related to the operational purpose of the application. Examples of state data include bank account balances, the number of items in a warehouse inventory, and airline seat reservations. A fundamental goal of a bank’s demand deposit accounting system is to maintain a record of how much money a customer has in his or her account. A deposit or withdrawal event will change the state of the account (that is, its balance). Similarly, the purpose of an airline reservation system is to keep track of who has made reservations and which passenger is assigned to which seat. In a sense, any application program’s purpose in life is to take incoming event data and use it to modify its state data.

Figure 8-2: Business events cause change in application data.

Reference data —Data that describes the stable, more or less permanent attributes of a thing that are not being changed by the current event. Examples of reference data include a person’s name and address, the address and size of a warehouse, a product description, the number of seats on an airplane, and an employee’s demographic information. Reference data is actually a kind of state data. Event data can cause it to change, although it changes less often than state data does. Application programs refer to reference data (hence the name) without changing it. However, what is reference data to one application is state data to another application. The application that updates the product description, airplane seat count, or person’s name considers those entities to be its state data. To the other applications that read but don’t ever modify those data, it is reference data.

Note: The terms “state data” and “reference data” also have other meanings in computer science and information management, so consider the context when using the terms.

Reference data is usually changed by discarding the old data and replacing it with new data. By contrast, state data is more likely to be recomputed incrementally—the new data is the old data plus or minus the event data. In a sense, all state data and reference data are just the accumulation of event data over time.
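The contrast between incremental updates to state data and wholesale replacement of reference data fits in a few lines. This is a minimal sketch using in-memory dictionaries in place of a DBMS; the event types, account numbers, and product fields are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Iterable

@dataclass(frozen=True)
class Deposit:
    """Event data: a deposit (positive) or withdrawal (negative), in cents."""
    account: str
    amount_cents: int

@dataclass(frozen=True)
class ProductDescriptionChanged:
    """Event data: a new description for a product."""
    sku: str
    description: str

balances: Dict[str, int] = {"acct-1": 10_000}               # state data
products: Dict[str, str] = {"sku-9": "Blue widget, 10 cm"}  # reference data to most readers

def apply(event: object) -> None:
    if isinstance(event, Deposit):
        # State data is recomputed incrementally: new = old plus or minus the event data.
        balances[event.account] = balances.get(event.account, 0) + event.amount_cents
    elif isinstance(event, ProductDescriptionChanged):
        # Reference data is changed by discarding the old value and replacing it.
        products[event.sku] = event.description

def replay(events: Iterable[object], account: str, opening_cents: int = 0) -> int:
    """Rebuild a balance as the accumulation of event data over time."""
    return opening_cents + sum(
        e.amount_cents for e in events
        if isinstance(e, Deposit) and e.account == account
    )
```

`replay` illustrates the closing point of the paragraph: given the full history of events, the current state can be recomputed rather than stored.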

Observational Notifications

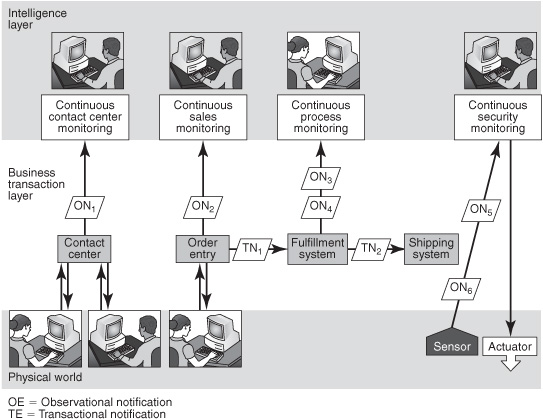

Observational notifications report events but don’t directly cause a change in a business application system, database, or anything else. They’re used in the intelligence layer (see the upper layer of Figure 8-3) as the base input events for CEP software, or in some cases, individually, to inform a person about an event.

Reports of the physical world from RFID readers, GPS devices, bar code readers, temperature and pressure sensors, and similar devices are observational notifications (see the lower part of Figure 8-3). Sensor notifications are typically highly structured. They often contain only a few data items and may be smaller than 100 to 200 bytes. They typically use binary data formats rather than XML to minimize the overhead of generating, sending, and storing them.

Observational notifications are also generated in the business application layer, computer and network monitoring systems, business process management systems, and many other kinds of software. Data from the Web can be observational notifications distributed through Atom and Really Simple Syndication (RSS) feeds.

Some observational notifications are semistructured or unstructured data, such as news feeds, e-mail messages, financial or economic reports, other documents, images, music, and video. Observational notifications that contain text data are usually formatted using XML, which provides some inherent structure. Major news services such as Dow Jones and Reuters provide news data streams in an “elementized” form in which certain words and phrases are tagged so that they can be found more easily. CEP software can’t interpret unstructured and semistructured notifications directly, so they must first be preprocessed by adaptors. Adaptors parse the contents, extract the salient information, and generate more-structured observational events that CEP software can manipulate.
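An adaptor of this kind can be sketched with Python’s standard `xml.etree.ElementTree` module. The element and attribute names below (`story`, `headline`, `company`, `ticker`) are a hypothetical tagging scheme, not the actual format of Dow Jones, Reuters, or any other news service; the sketch only shows tagged text being reduced to small structured events that a CEP rule could correlate on.

```python
import xml.etree.ElementTree as ET
from dataclasses import dataclass
from typing import List, Tuple

@dataclass(frozen=True)
class NewsEvent:
    """Structured observational event produced by the adaptor."""
    story_id: str
    tickers: Tuple[str, ...]
    headline: str

def adapt_news(xml_text: str) -> List[NewsEvent]:
    """Parse elementized news items and keep only the salient fields."""
    root = ET.fromstring(xml_text)
    events: List[NewsEvent] = []
    for story in root.iter("story"):
        # Tagged company elements anywhere in the story supply the correlation keys.
        tickers = tuple(c.get("ticker") for c in story.iter("company") if c.get("ticker"))
        if not tickers:
            continue  # nothing a downstream CEP rule could join on
        h = story.find("headline")
        # itertext() includes text inside nested tags; split/join normalizes spacing.
        headline = " ".join("".join(h.itertext()).split()) if h is not None else ""
        events.append(NewsEvent(story.get("id", ""), tickers, headline))
    return events
```

The unstructured body text is deliberately dropped: the adaptor keeps only what later event processing needs.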

Figure 8-3: Observational notifications enable continuous intelligence.

Note: Observational notifications may be structured, semistructured, or unstructured data types.

Sources of Observational Notifications

Acquiring observational notifications, the “first mile” in a CEP system, can be relatively easy or quite difficult depending on the circumstances. There are six ways to get observational notifications, listed here in order from the most to the least invasive of the source:

1. From the physical world

2. From business applications that are designed to natively emit them

3. From business applications that emit transactional notifications that can be leveraged for observational purposes

4. From DBMS adaptors

5. From adaptors on request-driven interfaces

6. From external sources including the World Wide Web

The Physical World

Devices on the edge between the physical world and the IT world are usually easy to implement as event sources because they are intended for and dedicated to this purpose. This includes RFID readers, bar code readers, GPS devices, accelerometers, temperature and pressure sensors, and other types of sensors.

Natively Generated in Business Applications

Some application systems emit observational notifications because their developers foresaw the need for them when they originally designed the application systems. For example, trading systems in stock exchanges and other markets are designed from their inception to emit both transactional and observational notifications. However, most traditional business applications didn’t emit observational notifications natively because no one saw a business requirement for them when the system was designed; they emitted transactional notifications only if they were using EDA. By contrast, most newer packaged applications, including enterprise resource planning, sales force automation, and supply chain management systems, can be configured to generate outbound notifications for at least some business events. These may be used for transactional or observational purposes.

It’s possible to modify an older application to add logic that will produce a notification, although this can be expensive and time consuming. Programs have to be analyzed, modified, compiled, tested, and moved back into production. It might be worth the investment, but in many cases, there are faster and cheaper alternatives, such as those discussed next.

The advantage of notifications that are deliberately generated by an application is that the developer can specify the precise nature of the notifications. However, this approach only works if the person or group that owns the application is willing to tailor the application for this purpose, so it isn’t feasible in many circumstances.

Leveraging Transactional Notifications as Observational Notifications

A copy of a notification that is being used for transactional purposes can be sent to the intelligence layer for observational (CEP) purposes. For example, the record of a credit card purchase is inherently a transactional notification. A point-of-sale (POS) device or a business application generates the credit card transaction data when a consumer buys something. It is sent through a credit card network to a bank, where it ultimately will be used to bill the consumer. However, a second copy of the notification can also be delivered to a marketing system or to a CEP-based fraud-detection system to see if a suspicious pattern of charges is emerging on this account number. In some cases this is done before the transaction is complete; in others it happens after the fact, depending on the policy set by the company. Similarly, call-data records (CDRs) for phone calls are primarily intended for billing, but they can also be used as observational notifications for location-awareness, fraud-detection, and even marketing purposes. The proliferation of electronic transaction data is a rich and growing source of observational notifications.
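A fraud-detection consumer of these copies might start with a simple velocity rule. The sketch below assumes a hypothetical rule (more than `max_charges` charges on one card number within `window_s` seconds is suspicious) and assumes charges arrive in timestamp order; real fraud systems use far richer patterns, and the field names are illustrative. Because it works on a copy, the rule only raises an alert; it never blocks or alters the original transaction.

```python
from collections import defaultdict, deque
from dataclasses import dataclass
from typing import Deque, Dict, Optional

@dataclass(frozen=True)
class CardCharge:
    """Observational copy of a card transaction (illustrative fields)."""
    card: str
    amount_cents: int
    ts: float  # seconds; charges are assumed to arrive in time order

class VelocityRule:
    """Hypothetical rule: too many charges on one card in a sliding time window."""

    def __init__(self, max_charges: int = 3, window_s: float = 600.0):
        self.max_charges = max_charges
        self.window_s = window_s
        self.recent: Dict[str, Deque[float]] = defaultdict(deque)

    def observe(self, charge: CardCharge) -> Optional[str]:
        times = self.recent[charge.card]
        times.append(charge.ts)
        # Drop timestamps that have slid out of the window.
        while times and times[0] <= charge.ts - self.window_s:
            times.popleft()
        if len(times) > self.max_charges:
            return f"suspicious: {len(times)} charges on {charge.card} in {self.window_s:.0f}s"
        return None
```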

If the available transactional notifications are large or not clearly structured, developers may use an adaptor to extract a few key data items from them to generate smaller, well-structured observational notifications. This is noninvasive with respect to the original system: the producer and consumer applications for the transactional notification don’t have to be modified. The adaptor taps into the transactional notification when it is sent or delivered, or at some intermediate point in the network (for example, by getting a copy when the message goes through message-oriented middleware or a router).
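Such a tap can be sketched as a wrapper around an existing delivery step: the original notification is forwarded unchanged, and a small observational notification is derived from a copy. The `deliver` and `publish_observation` callables and the order field names are hypothetical stand-ins for a messaging hop and a CEP input channel.

```python
import copy
from typing import Callable, Iterable

def make_tap(deliver: Callable[[dict], None],
             publish_observation: Callable[[dict], None],
             fields: Iterable[str] = ("order_id", "customer_id", "total"),
             ) -> Callable[[dict], None]:
    """Wrap an existing delivery step so it also emits a small observational copy."""
    keep = tuple(fields)

    def tapped(notification: dict) -> None:
        # Copy first so nothing downstream can alter what the observer sees.
        snapshot = copy.deepcopy(notification)
        # Transactional path: forwarded unchanged; producer and consumer are unaware.
        deliver(notification)
        # Observational path: a few key data items only.
        observation = {k: snapshot[k] for k in keep if k in snapshot}
        try:
            publish_observation(observation)
        except Exception:
            pass  # a lost observation must never disturb the transaction
    return tapped
```

The asymmetry in error handling mirrors the difference between the two kinds of notification: a failure on the transactional path propagates, while a failure on the observational path is tolerated.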

This approach works well if the source application uses EDA because EDA applications have transactional notifications that can be readily tapped. However, it doesn’t work for applications or parts of applications that are request- or time-driven, because they have no transactional notifications.

DBMS Adaptors

Most business events cause an application system to add, modify, or delete some state data in an application database. That change is a database event, an event that is inherently detectable and available for capture without changing the application program. Database adaptors can use DBMS triggers or a DBMS log mechanism to take a copy of data that is being written to or deleted from a database. This can be implemented by custom coding or through a commercial, event-oriented, DBMS adaptor product.

This is a practical, noninvasive way of generating an observational notification for CEP without disrupting the business application. It works even if the source application is request- or time-driven. However, it assumes that the business unit that owns the database is willing to accept the introduction of a DBMS adaptor. If the application is owned by the same IT organization that wants the observational notifications, this will work fine. If it’s owned by another organization, it’s less likely to be palatable.

Adaptors on Request-Driven Interfaces

As a last resort, when none of the previous approaches is practical, a request-based adaptor can extract events from an application without affecting its application code or DBMS. A request-based adaptor is a software agent that runs outside an application system and calls it on a request/reply basis to detect and capture evidence of business events that occur within the application.

Acquiring notifications on a request-driven basis is difficult, because the source doesn’t give any signal when an event has occurred. The event is hidden within the source application. The adaptor must discover the event by periodically requesting data and examining the response to see if anything is new. This is noninvasive—no change in the source application is required. The burden of detecting the change and transforming the returned data rests in the adaptor. Service-oriented architecture (SOA) applications that expose request-driven interfaces are a good target for this approach as long as the business unit that controls the SOA application allows access.

The quintessential modern request-driven adaptor is a web scraper, a time-driven agent that polls a website at regular intervals to see if something has changed within the target system. For example, a company might deploy a web scraper to monitor a competitor’s website for changes in products or prices. When the agent discovers a change, it will send an alert to a person, application, or CEP engine. The antecedents to web scrapers were “screen scrapers” that spoof traditional applications by pretending to be people at dumb terminals, such as 3270s and VT100s. Screen scrapers only work if the adaptor is authorized to act as a client of the application, so it’s usually only practical within a company. By contrast, web scrapers can operate on any application with a public website.
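The essential logic of a request-driven adaptor (poll, compare with the previous response, and emit an event only when something differs) is the same whether the target is a web page, an SOA service, or a terminal screen. The sketch below assumes a hypothetical `fetch` callable that returns a competitor’s current price list as a dictionary; a real web scraper would also need HTTP access and HTML parsing, which are omitted here. The `sleep` parameter is injectable so the polling loop can be exercised without waiting.

```python
import time
from typing import Callable, Dict, Iterator, List, Optional, Tuple

Change = Tuple[str, Optional[float], Optional[float]]  # (product, old price, new price)

def diff_prices(old: Dict[str, float], new: Dict[str, float]) -> List[Change]:
    """Compare two snapshots and return one change record per difference."""
    changes: List[Change] = []
    for product in sorted(old.keys() | new.keys()):
        before, after = old.get(product), new.get(product)
        if before != after:  # added, removed, or repriced
            changes.append((product, before, after))
    return changes

def poll(fetch: Callable[[], Dict[str, float]],
         interval_s: float,
         polls: int,
         sleep: Callable[[float], None] = time.sleep) -> Iterator[Change]:
    """Time-driven adaptor: request a snapshot every interval and yield changes."""
    previous: Optional[Dict[str, float]] = None
    for i in range(polls):
        current = fetch()
        if previous is not None:  # the first response only sets the baseline
            yield from diff_prices(previous, current)
        previous = current
        if i < polls - 1:
            sleep(interval_s)
```

Each yielded change could then be sent as an alert to a person, an application, or a CEP engine.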

Events from the World Wide Web and Other External Sources

The Web is a rich source of event information. Some is available through event-driven mechanisms such as Atom and RSS. More of it requires adaptors because it is natively request-driven. Most of the data is free, although some of the more valuable sources are only available as fee-based, software-as-a-service (SaaS) resources. News feeds, economic data, trade association data, and weather information are already widely exploited in business, although direct links to deliver such data to CEP systems are just coming into use. Most of this data is funneled to human decision makers, just as much internal information about events is handled by people. The possibilities for collecting new kinds of observational notifications relevant to business decisions are increasing rapidly. Some companies have installed agents that listen to Twitter, a micro-blogging website, to detect the frequency of use of certain keywords. Webcams provide video trails that can be mined to detect events.

Comparative Value of Event Sources

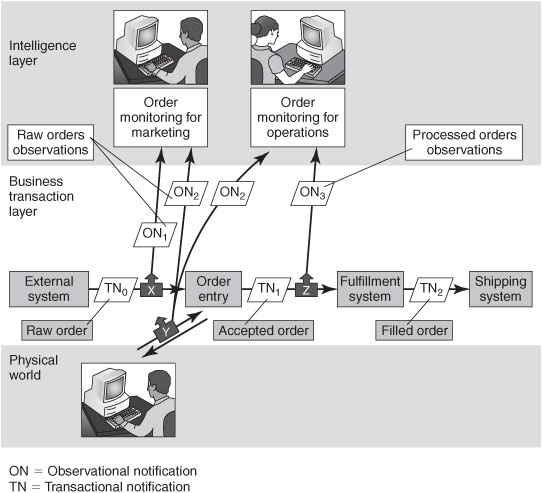

User requirements determine which sources of event data are most relevant for any particular application. Consider our familiar order-fulfillment process, repeated in Figure 8-4 with minor changes. If you’re building a monitor for the marketing department, you might want to tap into the process at the point where the order is captured from a customer. An adaptor at point X can obtain a copy of order transactional notification TN0 as it comes in over a messaging link from a business partner. A full copy of TN0 could be used as an observational notification, or the adaptor could generate a new, subset version (ON1) of the notification to use for monitoring purposes.

Figure 8-4: Capture events at the point of greatest information value.

If the order is captured in a web interaction rather than in a message, a different kind of adaptor can capture all of the communication between a customer and the web server at point Y to generate a set of observational notifications (ON2). Commercial adaptors that listen to web traffic are available, and some have been integrated with commercial CEP software. This configuration collects information about orders, but also much more: it can track which pages the customers viewed and which other products and options they considered. It can also get information about prospects who chose not to place an order. Some web monitors get their data from a web server–based application rather than intercepting the web page traffic on the way into the web server, but the general approach is otherwise the same.

If you’re building a business activity monitoring (BAM) dashboard for an operations manager in the order-fulfillment department, you might use the same observational notifications (ON1 or ON2) used by the marketing monitoring system because that would give an advance warning of the orders that will eventually hit the fulfillment system. Or you might put an adaptor at point Z to capture only the accepted orders TN1 because you don’t want to burden the operations dashboard with information about all orders, some of which will never hit the fulfillment system because they won’t make it through the order entry system. Again, you could use a full copy of the transactional notification TN1 or generate a smaller version of the notification that contains only a few key data items to use as an observational notification ON3. Accepted order notification TN1 differs from the raw order notification TN0 because the order entry application has validated the order and enriched it with information such as the customer’s credit score, volume discount, and other information. They’re both transactional notifications about the same order, but they’re at different stages in the process instance’s life. The kind of information available at each stage is different. User requirements will determine which notification is more appropriate.

As a rule of thumb, more information is available early in a process, especially as event data enters the company. This applies not only to our order-entry example, but also to many other applications, such as supply chain management. Raw documents, such as advance shipping notices (ASNs), that arrive via e-mail from external partners contain details and implications that are lost after an application takes the information from an ASN and puts it into an application database. In many kinds of business applications the transformation from raw input data to the data structure used internally within an application system is said to be lossy because some of the information value is lost. Companies are increasingly able to save and use raw event data (incoming notifications and web traffic logs) because of the decreasing costs of computers and storage. Nevertheless, observational notifications captured later in the process (downstream) can sometimes be preferable for some purposes if they have been enriched or combined with other events during the process.

Using Notifications

A notification is a notification. You can’t tell an observational notification from a transactional notification by looking at its data items or how it is communicated, because all notifications look alike. As we have shown, many observational notifications are an exact copy of a transactional notification. The difference between transactional and observational notifications is only in how they are used.

Transactional notifications directly cause changes in the business transaction layer or in the physical world. Observational notifications are used, usually in sets, in CEP software in the intelligence layer to detect threat or opportunity situations. These indirectly cause events in the business application layer or the physical world. All CEP and BAM systems are closed-loop systems in the sense that they eventually result in something tangible happening to help the business in the business transaction layer or the physical world. A company wouldn’t pay for a CEP system if it only produced information for entertainment. In some CEP systems, a person is in the loop to help make a decision or to conduct the response. In others, the decision and response are fully automated, so no person is in the loop. But there is always a loop back to the transactional or physical realms.

The output of CEP systems is used in three ways:

Decision support —Simple forms of decision support are provided through browser-based dashboards, e-mail alerts, SMS messages, other notification channels, or office productivity tools. Spreadsheets are one of the most common vehicles for monitoring business situations because they allow the end user to apply ad hoc analytics to data provided through CEP.

CEP software can also be tied into workflow systems for more-elaborate follow-up processes. Workflow systems manage the steps in a human response through a task list (or work list) mechanism (see Chapter 10 for more explanation of the links between CEP and workflow software). In other cases, the CEP software is integrated with a sophisticated notification management system (sometimes called “push” systems). These systems can deliver alerts to individuals, named groups, or roles (for example, all people with a certain job title or job description). They can also manage the response process—for example, by escalating the alert and delivering it to another person if the original target does not acknowledge receipt of the alert in a certain time interval. Such systems may also be used to implement multichannel, communication-enabled business processes (CEBPs). CEBP solutions may use a mix of web, SMS, phone, and other mechanisms to notify the appropriate people; automatically arrange joint conference calls to discuss the response; and integrate human and automated SOA services.

Driving business applications —CEP software can trigger an automated response in a business application by invoking a web service, using some other kind of call or method invocation, or sending a transactional notification. Alternatively, the call or the notification can go to a business process management (BPM) engine that will, in turn, activate business applications.

Automating the physical world —Finally, CEP software can drive an automated response in the physical world by controlling some kind of actuator to turn a machine on or off, increase the heat, open a valve, close a door, or perform any other action. The type of response is limited only by the capabilities of the development team.
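
The escalation behavior of the notification management systems described above can be sketched in a few lines. This is a minimal illustration, not any product's API: the `Alert` and `EscalatingNotifier` names, the escalation chain, and the 60-second acknowledgment timeout are hypothetical, and a real system would deliver through e-mail, SMS, or phone channels rather than appending to a log.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Alert:
    alert_id: str
    message: str
    recipients: List[str]   # escalation chain; recipients[0] is the original target
    ack_timeout_s: float    # time each recipient has to acknowledge
    sent_at: float = 0.0
    level: int = 0          # index of the recipient currently holding the alert


class EscalatingNotifier:
    """Hypothetical notifier that escalates unacknowledged alerts along a chain."""

    def __init__(self) -> None:
        self.open_alerts: Dict[str, Alert] = {}
        self.log: List[Tuple[float, str, str]] = []  # (time, alert_id, recipient)

    def send(self, alert: Alert, now: float) -> None:
        alert.sent_at = now
        self.open_alerts[alert.alert_id] = alert
        self._deliver(alert, now)

    def acknowledge(self, alert_id: str) -> None:
        # An acknowledged alert leaves the open set and is never escalated again.
        self.open_alerts.pop(alert_id, None)

    def tick(self, now: float) -> None:
        """Run periodically; escalates every open alert whose timer has expired."""
        for alert in list(self.open_alerts.values()):
            expired = now - alert.sent_at >= alert.ack_timeout_s
            if expired and alert.level + 1 < len(alert.recipients):
                alert.level += 1
                alert.sent_at = now
                self._deliver(alert, now)
            # At the end of the chain the alert simply stays open; a real system
            # might notify a supervisory role here.

    def _deliver(self, alert: Alert, now: float) -> None:
        # Stand-in for an e-mail, SMS, or phone delivery channel.
        self.log.append((now, alert.alert_id, alert.recipients[alert.level]))


notifier = EscalatingNotifier()
notifier.send(Alert("A1", "SLA breach in queue 7", ["agent", "supervisor", "manager"], 60), now=0)
notifier.tick(now=30)    # within the timeout: no escalation
notifier.tick(now=60)    # timeout expired: escalate to the supervisor
notifier.acknowledge("A1")
notifier.tick(now=200)   # acknowledged, so no further escalation
print([recipient for _, _, recipient in notifier.log])  # ['agent', 'supervisor']
```

Driving the timer from a periodic `tick` keeps the sketch deterministic; an event-driven timer service would serve the same purpose.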

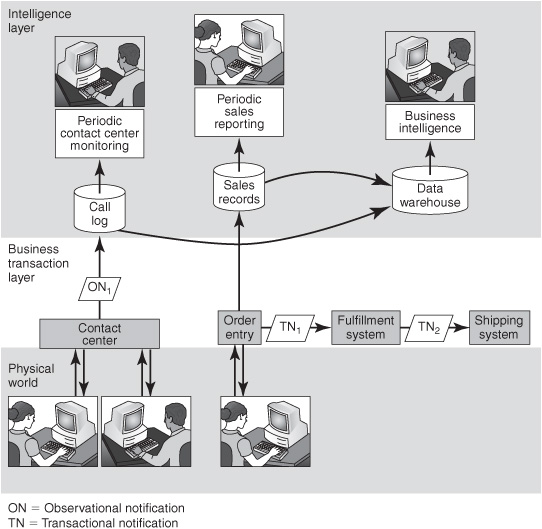

Continuous Intelligence Compared to Periodic Intelligence

The intelligence layer has always been a part of a company’s business operations rather than something that recently emerged through the introduction of CEP (see the top part of Figure 8-5). Virtually all application systems produce management reports at the intelligence layer to summarize what’s happened at the transaction layer. Managers and analysts get regular reports on sales, accounting, inventory, financial controls, manufacturing, transaction volumes, service levels, and other metrics. Conventional reports give a fairly narrow view of business operations because they reflect activity within only one application system or a set of related applications. However, a broader and deeper view is available through performance management and other business intelligence (BI) programs. BI programs pull together data from many applications and multiple business units. BI data is typically stored in data warehouses and data marts and made available to analysts and managers for a wide range of decision-making purposes (Chapter 10 explains the various kinds of BI).

Figure 8-5: Periodic intelligence—management reports and ad hoc analytics.

CEP-based systems play approximately the same role as conventional management and analytics applications, with one big difference. CEP-based systems provide continuous intelligence because they’re event-driven. Conventional management and analytics systems are periodic: they’re either time-driven reports or request-driven queries that run upon user command. For conventional, periodic management systems, the data from the transactional systems is put into databases, where it rests until a batch report is generated or a query comes in. By contrast, observational notifications for CEP systems are processed as they arrive and sometimes are never stored in a database.
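
The difference between continuous and periodic processing shows up even in a single KPI. In the hypothetical contact-center sketch below, the event-driven version updates average wait time as each notification arrives and never stores the events, while the periodic version computes the same number only when a report or query runs over stored data. The class and function names are illustrative assumptions.

```python
from statistics import mean
from typing import List


class ContinuousAverage:
    """Event-driven: the KPI is updated as each notification arrives."""

    def __init__(self) -> None:
        self.count = 0
        self.total = 0.0

    def on_event(self, wait_seconds: float) -> float:
        # Only running totals are kept; the event itself is not stored.
        self.count += 1
        self.total += wait_seconds
        return self.total / self.count  # current KPI value, available immediately


def periodic_report(stored_waits: List[float]) -> float:
    """Time- or request-driven: data rests in a store until the report runs."""
    return mean(stored_waits)


waits = [30.0, 90.0, 60.0]
live = ContinuousAverage()
snapshots = [live.on_event(w) for w in waits]
print(snapshots)               # [30.0, 60.0, 60.0]
print(periodic_report(waits))  # 60.0, but only when someone asks
```

Both versions agree on the final value; what differs is when the answer is available.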

Aside from the timing, periodic management systems can provide all of the key performance indicators (KPIs) and other metrics available through continuous intelligence systems. In practice, some of the information that periodic management systems provide is broader and deeper than that available through continuous intelligence systems because periodic management systems are more mature and have the luxury of more time to compute things. Analysts can experiment with ad hoc inquiries and perform “what if” analyses in request-driven systems.

Note: Companies will increase their use of continuous intelligence systems dramatically during 2010 through 2020, but will continue to rely on periodic management and analytical systems for conventional intelligence needs.

Periodic intelligence is reasonably mature but will continue to evolve to take advantage of technology advances. Continuous intelligence is still in the early stages of adoption. Most companies practice it in a few areas, but its fast growth is just beginning. In many cases it will complement conventional forms of intelligence, but in some cases it will replace them. Throughout this book we’ve used the example of continuous intelligence in a customer contact center. Most contact centers still track customer calls and SLAs periodically, through time-based reports and ad hoc queries, but continuous monitoring is partially supplanting periodic monitoring in leading-edge contact centers.

Application Architecture Styles

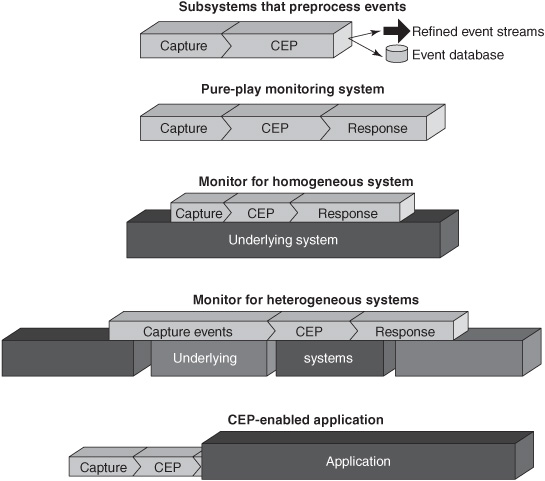

CEP is used in business applications in multiple ways. Each way can be categorized according to how the application obtains and uses notifications, and whether and how the CEP aspects are tied into other parts of the system. The applications differ in the amount of effort that is needed to capture the events, process them, and respond to the situations that are detected (see Figure 8-6).

Figure 8-6: Five styles of CEP applications.

Five styles of CEP usage are:

Subsystems that pre-process event streams —CEP platforms that listen to high-volume event streams and filter and condition the event data for use by subsequent periodic analytical applications or near-real-time continuous monitors.

Pure-play monitoring systems —Gather observational events from sensors and have little or no integration with other applications. The monitor is the application.

Monitors for homogeneous systems —CEP-based facilities that help users manage an application system, network, IT subsystem, factory, or some other system.

Monitors for heterogeneous systems —CEP-based applications that monitor two or more autonomous systems.

CEP-enabled applications —Primarily transactional applications that are triggered by complex events or use complex events as input.

Subsystems that Pre-process Event Streams

CEP software is often used to implement subsystems that capture and refine high-volume event data as it arrives from web applications, industry-focused data feed providers, and other sources. The purpose of these subsystems is to reduce the volume and improve the quality of the event data through functions such as:

Filtering out irrelevant data

Eliminating duplicate event objects

Discarding data that appears to be erroneous because it is out of the range of possible values

Putting the events in some meaningful order if they arrive out of order

Making and recording calculations from multiple incoming events, such as computing volume-weighted average prices for a stock or counting the number of events of a certain kind that arrive during a specified time window

Enriching the events by looking up data from a table in memory or a database
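
The pre-processing functions listed above can be chained into a single pipeline. The sketch below is an assumption-laden illustration rather than any CEP product's API: the `Trade` event type, the symbol-to-sector lookup table, the price sanity bounds, and the small reorder buffer are all hypothetical. The reorder step only repairs events that arrive at most `slack` positions late; many stream-processing engines use time-based watermarks for this instead.

```python
import heapq
from dataclasses import dataclass
from typing import Dict, Iterable, Iterator, List, Tuple


@dataclass(frozen=True)
class Trade:
    event_id: str
    symbol: str
    price: float
    volume: int
    ts: float  # event time, seconds


# Hypothetical reference data standing in for an in-memory table or database.
SECTOR: Dict[str, str] = {"ACME": "Industrials", "GLOBX": "Technology"}


def enrich(t: Trade) -> dict:
    """Add reference data looked up by symbol."""
    return {"id": t.event_id, "symbol": t.symbol, "price": t.price,
            "volume": t.volume, "ts": t.ts, "sector": SECTOR[t.symbol]}


def preprocess(raw: Iterable[Trade], slack: int = 2) -> Iterator[dict]:
    seen = set()
    held: List[Tuple[float, str, Trade]] = []  # min-heap ordered by event time
    for t in raw:
        if t.symbol not in SECTOR:              # filter out irrelevant data
            continue
        if t.event_id in seen:                  # eliminate duplicate event objects
            continue
        seen.add(t.event_id)
        if not (0 < t.price < 1_000_000 and t.volume > 0):  # out-of-range values
            continue
        heapq.heappush(held, (t.ts, t.event_id, t))
        if len(held) > slack:                   # release the oldest held event
            yield enrich(heapq.heappop(held)[2])
    while held:                                 # end of stream: flush in time order
        yield enrich(heapq.heappop(held)[2])


def vwap_and_count(events: Iterable[dict], start: float,
                   end: float) -> Dict[str, Tuple[float, int]]:
    """Per-symbol volume-weighted average price and event count in [start, end)."""
    acc: Dict[str, Tuple[float, int, int]] = {}
    for e in events:
        if start <= e["ts"] < end:
            pv, vol, n = acc.get(e["symbol"], (0.0, 0, 0))
            acc[e["symbol"]] = (pv + e["price"] * e["volume"], vol + e["volume"], n + 1)
    return {s: (pv / vol, n) for s, (pv, vol, n) in acc.items()}


raw = [
    Trade("e1", "ACME", 10.0, 100, 1.0),
    Trade("e3", "ACME", 12.0, 300, 3.0),
    Trade("e2", "ACME", 11.0, 100, 2.0),   # arrives out of order
    Trade("e2", "ACME", 11.0, 100, 2.0),   # duplicate
    Trade("e4", "XYZ", 5.0, 50, 2.5),      # symbol not of interest
    Trade("e5", "ACME", -1.0, 10, 4.0),    # impossible price
]
clean = list(preprocess(raw))
print([e["id"] for e in clean])            # ['e1', 'e2', 'e3']
print(vwap_and_count(clean, 0.0, 10.0))    # {'ACME': (11.4, 3)}
```

Only three clean, ordered, enriched events survive out of six raw ones, which is the volume and quality improvement the subsystem exists to provide.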

These are subsystems rather than complete business systems because they support other applications rather than implementing an entire business application in their own right. Their output is an improved event stream that will be fed into another CEP application or stored in an event database that will be used by subsequent business intelligence or other analytical systems on a time-driven or request-driven basis. Cleaning up the event data as it arrives, rather than putting the raw, unrefined events directly into an event log, allows subsequent applications that use the data to concentrate on analytic functions instead of data quality issues. Reducing the amount of useless event data that is stored in an event database is a major benefit, particularly when dealing with very-high-volume event streams.

The need to perform several stages of continuous CEP in sequence—cascading the output event streams from one stage to use as input for the next stage—appears in many kinds of CEP applications. Examples include:

The pattern detection application in the section “Event-Processing Rules and Patterns” of Chapter 7 is an example of cascading event processing. Incoming stock price events were the input to a CEP pre-processing stage that generated the StockPriceRise event stream for use in a subsequent CEP stage.

Supply chain management applications that leverage RFID data are a type of multistage CEP because the RFID reader is a simple, purpose-built type of CEP pre-processor that eliminates duplicate tag readings before forwarding the events upstream to the supply chain application.

A large bank uses a multistage CEP application to implement sophisticated trading strategies. The first stage is executed by a commercial CEP engine that listens to market data from stock exchanges and computes complex events that represent the current liquidity of particular financial instruments. These liquidity indicators are emitted as an event stream that goes into a second stage of CEP that runs in an older, custom-written, CEP-enabled trading platform. The trading platform also listens directly to market data events from stock exchanges to track current prices. The trading platform implements an automated trading strategy that reflects a combination of information from the liquidity indicator event stream and the raw market data.
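
Cascading can be expressed as one stage's output stream feeding the next stage's input. In this sketch the first stage derives `StockPriceRise` events and a second stage looks for momentum in them. The rule definitions are assumptions for illustration (a rise means a higher price than the previous one for the same symbol; momentum means three rises within ten seconds) and are not the exact rules from Chapter 7.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, Iterable, Iterator, Tuple

PriceEvent = Tuple[str, float, float]  # (symbol, price, ts)


def stage1_price_rises(prices: Iterable[PriceEvent]) -> Iterator[dict]:
    """First CEP stage: derive StockPriceRise events from raw price events."""
    last: Dict[str, float] = {}
    for symbol, price, ts in prices:
        prev = last.get(symbol)
        if prev is not None and price > prev:
            yield {"type": "StockPriceRise", "symbol": symbol,
                   "from": prev, "to": price, "ts": ts}
        last[symbol] = price


def stage2_momentum(rises: Iterable[dict], n: int = 3,
                    window: float = 10.0) -> Iterator[dict]:
    """Second CEP stage: consumes only StockPriceRise events."""
    recent: Dict[str, Deque[float]] = defaultdict(deque)
    for r in rises:
        times = recent[r["symbol"]]
        times.append(r["ts"])
        while r["ts"] - times[0] > window:    # drop rises outside the window
            times.popleft()
        if len(times) >= n:
            yield {"type": "Momentum", "symbol": r["symbol"], "ts": r["ts"]}
            times.clear()                      # don't re-alert on the same rises


prices = [("ACME", 10.0, 0.0), ("ACME", 11.0, 1.0), ("ACME", 12.0, 2.0),
          ("ACME", 11.5, 3.0), ("ACME", 13.0, 4.0)]
# Generators chain lazily: each stage's output stream is the next stage's input.
alerts = list(stage2_momentum(stage1_price_rises(prices)))
print(alerts)  # [{'type': 'Momentum', 'symbol': 'ACME', 'ts': 4.0}]
```

Because the second stage sees only derived events, it could run in a different engine or process, as in the bank example, as long as it receives the same stream.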

Multistage CEP solutions will become more common as business analysts become more knowledgeable about CEP application design. CEP subsystems that pre-process event streams may be combined with the other CEP application styles described below to implement compound event-processing systems.

Pure-Play Monitoring Systems

Pure-play monitoring systems collect observational events from sensors, look for occurrences of patterns of interest, and send alerts and notifications to people or other systems (see the rightmost part of Figure 8-3). Examples include:

Tsunami warning systems receive base notifications regarding the height of ocean waves from buoys stationed in multiple locations. They emit alerts that predict the time, place, and size of a tsunami that will hit the shore.

Physical intrusion detection systems collect accelerometer sensor data on the magnitude and timing of the movement of fence sections. A CEP engine analyzes the patterns of movement to understand the nature of a disturbance. The challenge is to distinguish between a true positive, such as a person climbing over a fence, and false positives, such as the fence moving in the wind or being brushed by a small animal.

Monitoring systems track the location of small children at amusement parks using RFID-tagged wristbands. An alert is emitted when a child is more than 50 meters from a parent or guardian, or more than 10 meters away if near an entrance, exit, or restricted location.

Airport monitoring systems listen to notifications from check-in kiosks located in airport lobbies. If a kiosk has been unplugged or turned off by cleaning or maintenance personnel during the night, the monitoring system will notice the absence of activity in the early morning hours. It will send an alert to dispatch a person to start the kiosk up again.
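
Two of the detection rules above are simple enough to sketch directly. The distance thresholds come from the amusement-park example; the coordinates (in meters), the kiosk identifiers, and the one-hour silence threshold are hypothetical. The kiosk check is an absence (non-event) pattern: it fires because expected notifications did not arrive, so it has to be evaluated on a timer rather than on event arrival.

```python
import math
from typing import Dict, List, Tuple


def child_alert(child_xy: Tuple[float, float], guardian_xy: Tuple[float, float],
                near_sensitive_area: bool) -> bool:
    """True if the child is too far from the guardian (positions in meters)."""
    limit = 10.0 if near_sensitive_area else 50.0
    return math.dist(child_xy, guardian_xy) > limit


def silent_kiosks(last_seen: Dict[str, float], now: float,
                  max_silence: float) -> List[str]:
    """Kiosks with no notification for longer than max_silence (same time units)."""
    return sorted(k for k, t in last_seen.items() if now - t > max_silence)


print(child_alert((0, 0), (30, 30), near_sensitive_area=False))  # False: about 42 m
print(child_alert((0, 0), (30, 30), near_sensitive_area=True))   # True: over 10 m
# Check run at 08:00; times are hours since midnight.
print(silent_kiosks({"K1": 5.5, "K2": 7.9}, now=8.0, max_silence=1.0))  # ['K1']
```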

The primary challenge when developing a pure-play monitoring system lies in pattern discovery: finding the event patterns that indicate the threat or opportunity situation. Analysts spend considerable time studying samples of event streams and defining the rules that will be applied at run time to filter and correlate the base events.

These are stand-alone systems with little or no integration with other systems. The input data are observational notifications from sensors that have been deployed just for the purpose of this application. Notifications are typically small—under a few hundred bytes and with relatively few data items. The response phase is generally straightforward, usually consisting only of alerting people through dashboards or some other notification channel.

Monitors for Homogeneous Systems

The most common kind of CEP application is a monitor that applies to one application system, physical plant, BPM facility, or other IT subsystem (see the middle level of Figure 8-6). Examples include:

Network and computer system monitors report the health, throughput, performance, service levels, and other metrics of networks, computer systems, or parts of computer systems. They are an essential part of IT operations management tools and were among the earliest applications to use CEP concepts (they have used CEP since the 1990s, although it wasn’t called CEP until the 2000s). Users are primarily IT network and system operations staff. Business service management (BSM) tools are a relatively new type of operations management tool. They track the health, throughput, and other metrics of SOA services.

Operations monitors track the performance of factory production lines, power plants, and other process plants against targets and thresholds and help determine the causes of production problems and downtime. These products have event monitors that detect operating anomalies, mode changes such as whether equipment is running, and other situations that may need attention.

Application-specific BAM dashboards and other alerting mechanisms are part of many Enterprise Resource Planning (ERP), accounting, and other packaged business applications. This is a relatively recent phenomenon—few applications offered these as standard features before about 2005 to 2008. BAM differs from IT operations management monitors because the user is a businessperson, not someone in the IT department. Most BAM capabilities are fairly limited in scope—they typically monitor only a small fraction of events and KPIs within one application or a suite of applications from one vendor.

Most commercial BPM software products have a BAM dashboard facility for business process monitoring. The monitoring is generally limited to events that are under the control of the run-time BPM software (the orchestration and workflow engines). Chapter 10 describes process monitoring facilities in more detail.

The monitoring facility for a homogeneous system is generally developed by the same team that is building the underlying system, or by someone who is working closely with that team. The monitor is a relatively minor aspect of the project because most of the investment goes into developing and deploying the overall network, computer system, process plant, application, or BPM product. It’s relatively easy to design and implement a monitor for a homogeneous system because the underlying system can be designed to emit notifications from its inception. Developers know what events will occur and can format the notifications to provide exactly the kind of event data needed to support the monitor. The response phase usually consists of notifying people through dashboards or other alerting channels, although automated responses are implemented in some of these systems, particularly operations management systems. When responses are automated, the monitor directly affects the running of the system that it is monitoring.
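
Because the developers of a homogeneous system control the notification format, the monitor can be very direct. The sketch below assumes a hypothetical production-line notification (`LineStatus`) carrying a running flag and a throughput figure, and checks for the two kinds of situations mentioned for operations monitors: mode changes and throughput below target. Field names and targets are illustrative.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class LineStatus:
    """Notification emitted by the (hypothetical) production-line system itself."""
    line_id: str
    running: bool
    units_per_hour: float


class ProductionMonitor:
    def __init__(self, targets: Dict[str, float]) -> None:
        self.targets = targets                   # throughput targets per line
        self.last_running: Dict[str, bool] = {}  # last known mode per line

    def on_status(self, s: LineStatus) -> List[str]:
        alerts: List[str] = []
        prev = self.last_running.get(s.line_id)
        if prev is not None and prev != s.running:      # mode change
            mode = "running" if s.running else "stopped"
            alerts.append(f"{s.line_id}: mode change to {mode}")
        self.last_running[s.line_id] = s.running
        target = self.targets.get(s.line_id)
        if s.running and target is not None and s.units_per_hour < target:
            alerts.append(f"{s.line_id}: throughput {s.units_per_hour:g} "
                          f"below target {target:g}")
        return alerts


monitor = ProductionMonitor({"L1": 100.0})
print(monitor.on_status(LineStatus("L1", True, 120.0)))  # []
print(monitor.on_status(LineStatus("L1", True, 80.0)))   # ['L1: throughput 80 below target 100']
print(monitor.on_status(LineStatus("L1", False, 0.0)))   # ['L1: mode change to stopped']
```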

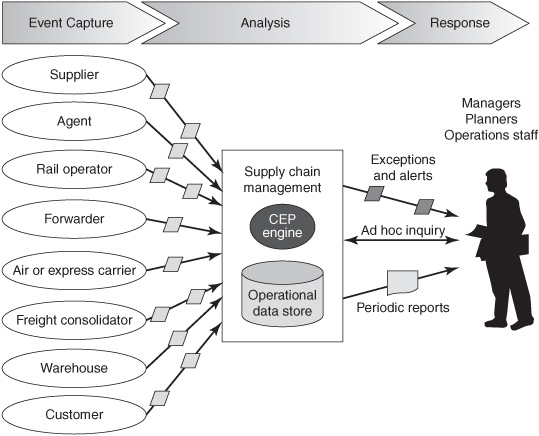

Monitors for Heterogeneous Systems

CEP applications that monitor complex activities or end-to-end processes with multiple, heterogeneous event sources are relatively difficult to implement. Supply chain management (SCM) is a good example (see Figure 8-7).

A supply chain is a complex business activity with many autonomous participants such as suppliers, air carriers, express carriers, rail operators, freight consolidators, agents, warehouses, and, of course, customers. Each participant has its own application systems and makes its own decisions about what to do and when to do it. The process cannot be predicted in advance because of unforeseeable events, such as factories running ahead or behind schedule, raw materials arriving late, labor issues, mechanical failures in trucks or planes, or weather changes. Shipments can be combined or split and sent separately. No process model or BPM engine applies to the whole supply chain from end to end.

Figure 8-7: Supply chain management.

Base events in an SCM system are a mix of observational events, such as advance shipping notices (ASNs), packing lists, and bills of lading, and transactional events, such as orders, purchase orders, invoices, and bills. Some of these are transmitted through e-mail, and others arrive through electronic data interchange (EDI) networks, web services, HTTP, messaging systems, or on paper. SCM systems have embedded CEP logic that consolidates notifications to derive complex events that make the goods pipeline more visible. SCM answers questions such as, “Where are the goods?” and “When will they arrive?”

Numerous other kinds of track-and-trace systems are emerging. They are similar to SCM systems because they involve multiple autonomous companies and their respective application systems, but they are specialized for particular types of problems. For example:

The food industry is moving to track food items “from farm to fork” to safeguard the food and to be able to identify the source of bad food shortly after it has been discovered.

Pharmaceutical supply chains are installing monitoring systems that track drugs from the factory, through the distribution network, and to the point of sale at a pharmacy. The goal is to prevent theft and the introduction of counterfeit drugs into the system. Regulations are driving the adoption of such systems.

Capturing the notifications is typically the most difficult aspect of monitoring heterogeneous systems. Part of the challenge is technical—installing the adaptors that convert notifications from many different formats and protocols into a form that the CEP software can use. The larger challenge is organizational—getting cooperation from the companies, business units, and people that own and manage the applications that are the source of the notifications.
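
The adaptor and consolidation steps can be sketched as follows. Both input formats are hypothetical stand-ins: a pipe-delimited carrier record (real EDI standards such as ANSI X12 are far richer) and a JSON payload from a warehouse web service. Each adaptor maps its format to one common event type, and a tracker consolidates the events to answer “Where are the goods?” and “When will they arrive?”

```python
import json
from dataclasses import dataclass, replace
from typing import Dict, Optional


@dataclass(frozen=True)
class ShipmentEvent:
    """Common internal event format that every adaptor produces (hypothetical)."""
    shipment_id: str
    location: str
    eta: Optional[str]
    ts: float
    source: str


def from_carrier_record(line: str) -> ShipmentEvent:
    # Hypothetical pipe-delimited carrier record: SHIP|<id>|<location>|<eta>|<ts>
    tag, sid, location, eta, ts = line.strip().split("|")
    if tag != "SHIP":
        raise ValueError(f"unexpected record type {tag!r}")
    return ShipmentEvent(sid, location, eta or None, float(ts), "carrier")


def from_warehouse_json(payload: str) -> ShipmentEvent:
    # Hypothetical JSON payload from a warehouse web service
    d = json.loads(payload)
    return ShipmentEvent(d["shipmentId"], d["location"], d.get("eta"),
                         float(d["timestamp"]), "warehouse")


class ShipmentTracker:
    """Consolidates events from all sources into one current view per shipment."""

    def __init__(self) -> None:
        self.latest: Dict[str, ShipmentEvent] = {}

    def on_event(self, e: ShipmentEvent) -> None:
        cur = self.latest.get(e.shipment_id)
        if cur is not None and e.ts < cur.ts:
            return                               # stale report that arrived late
        if cur is not None and e.eta is None:
            e = replace(e, eta=cur.eta)          # keep the last known ETA
        self.latest[e.shipment_id] = e

    def where(self, sid: str) -> str:
        return self.latest[sid].location

    def when(self, sid: str) -> Optional[str]:
        return self.latest[sid].eta


tracker = ShipmentTracker()
tracker.on_event(from_carrier_record("SHIP|S42|Rotterdam port|2024-05-03|100"))
tracker.on_event(from_warehouse_json(
    '{"shipmentId": "S42", "location": "Venlo DC", "timestamp": 250}'))
tracker.on_event(from_carrier_record("SHIP|S42|Rotterdam port||90"))  # late, ignored
print(tracker.where("S42"), tracker.when("S42"))  # Venlo DC 2024-05-03
```

The technical part (one adaptor per format) grows linearly with the number of sources; the organizational challenge of getting each source to send notifications at all is not something code can solve.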

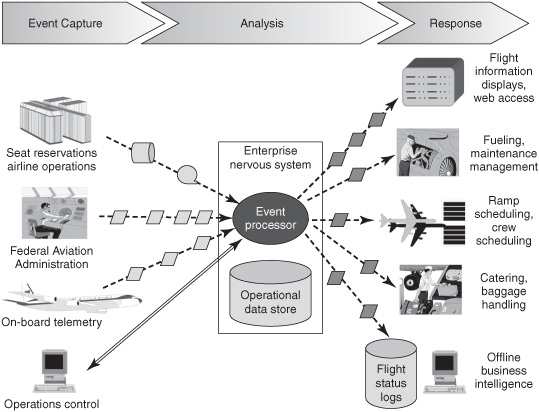

CEP-Enabled Applications

Some business applications are triggered by complex events or use complex events to alter their behavior. The work to acquire notifications and implement the CEP software is a relatively small, although vital, part of the project (see the bottom of Figure 8-6). These applications are the opposite of pure-play monitoring systems, where most of the effort goes into detecting the situations and the response is a minor aspect of the project.

Some CEP-enabled applications are all-new, but many are developed as extensions or modifications to existing business applications. For example, the sophisticated “enterprise nervous systems” used in modern airline operations are event-enabled systems of systems that encompass many CEP-enabled applications. The essential business operations of major air carriers have been largely automated for 40 years. Transaction workloads that incorporate the central seat reservation systems can exceed 30,000 transactions per second. Other airline applications manage flight schedules, food catering services, flight crew schedules, fueling, aircraft maintenance, gate assignments, and arrival and departure time reporting (see the right side of Figure 8-8). These are separate applications, developed largely independently at different times and owned by different business units.

The ability of an airline to respond to change has always depended on the speed and effectiveness of the information transfer between the sources of data and the many business units and applications that depend on it. Airlines continuously track hundreds of flights, each at a different stage in its life cycle. Information about the location of each plane and status of each flight becomes outdated in seconds or minutes.

The observational notifications that drive an airline nervous system originate in hundreds of locations (see the left side of Figure 8-8). Event sources include telemetry devices on the planes, reports from the Federal Aviation Administration, application systems on the ground, and gate agents and other people. Notifications are sent through a variety of communication technologies to a virtual clearinghouse that maintains flight status information in operational databases. The clearinghouse publishes notifications and accepts inquiries from application systems and people to keep them informed of changing conditions for every flight worldwide.
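
A clearinghouse that both publishes notifications and answers inquiries combines a publish/subscribe channel with a current-state store. The sketch below is a hypothetical, in-memory illustration (the class name, the `"*"` wildcard subscription, and the flight number are assumptions). It republishes only fields that actually changed, so duplicate reports from different sources do not generate redundant notifications.

```python
from collections import defaultdict
from typing import Callable, Dict, List

Callback = Callable[[str, dict], None]


class FlightClearinghouse:
    """Hypothetical in-memory clearinghouse: current flight status plus pub/sub."""

    def __init__(self) -> None:
        self.status: Dict[str, dict] = {}
        self.subscribers: Dict[str, List[Callback]] = defaultdict(list)

    def subscribe(self, flight: str, callback: Callback) -> None:
        """Subscribe to one flight, or to every flight with "*"."""
        self.subscribers[flight].append(callback)

    def report(self, flight: str, **changes) -> None:
        """Accept a notification from any source and publish what actually changed."""
        current = self.status.setdefault(flight, {})
        updated = {k: v for k, v in changes.items() if current.get(k) != v}
        if not updated:
            return                        # duplicate report: nothing to publish
        current.update(updated)
        for callback in self.subscribers[flight] + self.subscribers["*"]:
            callback(flight, dict(updated))

    def query(self, flight: str) -> dict:
        """Answer an ad hoc inquiry with a copy of the current status."""
        return dict(self.status.get(flight, {}))


hub = FlightClearinghouse()
received = []
hub.subscribe("*", lambda flight, changes: received.append((flight, changes)))
hub.report("XY123", status="departed", gate="B7")
hub.report("XY123", status="departed")    # repeated by a second source
hub.report("XY123", eta="14:05")
print(received)
# [('XY123', {'status': 'departed', 'gate': 'B7'}), ('XY123', {'eta': '14:05'})]
print(hub.query("XY123"))  # {'status': 'departed', 'gate': 'B7', 'eta': '14:05'}
```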

Figure 8-8: Events and complex events inform business applications.

An airline enterprise nervous system is all about situation awareness. Each business unit dynamically alters its plans when informed of schedule changes, weather, equipment failure, or other events. Planes and gates are reassigned as conditions change. If flights have become bunched together, there may not be enough available gates to serve all planes immediately. By having current information and predictions about likely future conditions, gate scheduling systems can make better decisions. Airlines pay penalties for flights that arrive after a night-time curfew, so flights that need an early departure are given priority over those that can afford to wait. The enterprise nervous system improves plane turnaround time because fuel, mechanics, food, crews, and baggage handlers are ready at the right moment. Fewer planes, gates, and employees can support the same number of flights. Passengers also have better access to information about the status of flights, and earlier warning of changes.
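
One way to give priority to flights that need an early departure is earliest-deadline-first ordering, an illustrative choice here rather than a method any airline is known to use. Each waiting flight carries a hypothetical deadline, the latest departure time (in minutes after midnight) that still lets it arrive before the destination's curfew, and free gates go to the flights with the earliest deadlines.

```python
import heapq
from typing import List, Tuple


def assign_gates(waiting: List[Tuple[str, int]],
                 free_gates: List[str]) -> List[Tuple[str, str]]:
    """Earliest-deadline-first gate assignment.

    waiting: (flight, latest_departure) pairs, times in minutes after midnight.
    Flights beyond the number of free gates keep waiting.
    """
    queue = [(deadline, flight) for flight, deadline in waiting]
    heapq.heapify(queue)
    assignments = []
    for gate in free_gates:
        if not queue:
            break
        _, flight = heapq.heappop(queue)
        assignments.append((flight, gate))
    return assignments


waiting = [("XY1", 23 * 60), ("XY2", 21 * 60 + 30), ("XY3", 22 * 60)]
print(assign_gates(waiting, ["A1", "A2"]))  # [('XY2', 'A1'), ('XY3', 'A2')]
```

XY1 can afford to wait, so it is the one left without a gate. Because the deadlines come from current predictions, rerunning the assignment whenever the nervous system reports a schedule change keeps the priorities up to date.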

Airline networks are among the largest and most-complicated event-enabled systems. They combine the challenges of application integration with the challenges of high event volumes in the context of a highly competitive, cost-conscious industry. The underlying applications use a diverse set of operating systems, application servers, transaction-processing monitors, DBMSs, and network protocols. Many of the applications have been in place for years and are constantly being updated. Some applications were modified to accept notifications directly from the virtual clearinghouse. In other places, alerts are sent to people who intervene manually but use the underlying application systems to carry out the business functions. Most aspects of the airline nervous system don’t use CEP software yet. They use simple events or minor sections of CEP logic coded directly into business applications. However, commercial CEP software is used for some parts of the system, and its use is growing as architects identify additional areas where it is helpful.

Summary

Transactional notifications cause changes in transactional business applications. Observational notifications report events and provide information that leads to generating complex events. Complex events enable faster and better decisions, causing indirect changes in transactional applications and the physical world. Observational notifications can be generated in a variety of ways from sensors or from transactional applications. They’re used in relatively autonomous monitoring systems or integrated with other kinds of business applications. Continuous intelligence applications are relatively new, but will have a major impact on the way companies operate during the next 10 years.