2

A Fuzzy Approach to Face Mask Detection

Vatsal Mishra, Tavish Awasthi, Subham Kashyap, Minerva Brahma, Monideepa Roy* and Sujoy Datta

School of Computer Engineering, KIIT Deemed to be University, Bhubaneswar, India

Abstract

With the severe outbreak of the COVID-19 pandemic, the CDC and WHO laid down several guidelines for the effective containment of its spread. These measures included the proper wearing of adequate masks, social distancing, sanitation, frequent washing of hands, and various degrees of lockdown and isolation. Here, we have designed a fuzzy-based framework that automatically detects whether a user is wearing a mask correctly (e.g., covering both mouth and nose) when in public places, using public services, or even while talking to someone, and issues a warning if not. This framework can be implemented at public places to monitor the public and warn users who are not wearing their masks correctly. The architecture used for object detection is the Single Shot Detector (SSD) because of its good accuracy and high speed. Transfer learning in neural networks has also been used to determine the presence or absence of a face mask in an image or a video stream. Experimental results show that our model performs well on the test data, with 100% precision and 99% recall.

Keywords: COVID-19, pandemic, fuzzy decision making, mask wearing

2.1 Introduction

Following the outbreak of severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) infection in Wuhan, Hubei, China, in December 2019, the World Health Organization (WHO) declared the disease a pandemic because of its global spread. After the worldwide outbreak of the COVID-19 pandemic, the number of new cases globally had already exceeded 70 million and the number of deaths was around a quarter of a million, according to information provided by the WHO (at the time of writing this chapter). Therefore, there was an urgent need to devise a means of restricting the spread of the disease as far as possible. Guidelines were issued to curtail the spread of COVID-19, which included wearing a mask covering the nose and mouth, maintaining a physical distance of six feet from others, avoiding crowds, and washing hands frequently.

This compelled civil administrations to enforce the wearing of face masks in public as COVID-19 cases rose all over the world. Before the onset of the COVID-19 pandemic, ordinary people usually wore masks for protection against air pollution, and the main users of medical or surgical masks were doctors and other practitioners in the healthcare domain. However, as scientists have now proven that wearing face masks effectively impedes COVID-19 transmission, almost all countries have issued health advisories making the wearing of masks compulsory for everyone travelling outside their homes.

Over five million cases of COVID-19 infection were registered in less than 6 months across 188 countries. According to scientists, the virus was found to be primarily airborne, which means that it spreads through close contact and in crowded places. In this chapter, we present a framework for a face mask detection model based on computer vision and deep learning. The proposed model detects whether a person is wearing a mask and whether the mask has been worn correctly according to guidelines. This framework can also be integrated with surveillance cameras to detect faces and raise appropriate alerts, which can help reduce the spread of the virus.

The model integrates deep learning and classical machine learning techniques with OpenCV, TensorFlow, and Keras. Deep transfer learning has been used for feature extraction.

Our main contributions are as follows:

- Initially, the main target was to build a large dataset of masked faces, including properly masked faces, and then to add a dataset of nonmasked faces. A few predefined classes were included for the target cases.

- To make the model more robust, it was adjusted to application scenarios. As shown in the results, the deployed model was able to achieve 99% accuracy.

- OpenCV-Python has been used for the real-time detection of a face mask on a person without requiring many resources. The model is also capable of detecting faces in different orientations and can detect occluded faces with a good deal of accuracy.

2.2 Existing Work

Object detection is one of the most fundamental and challenging tasks in computer vision and is widely applied in face detection, digit detection, handwriting detection, pedestrian detection, etc. Within object detection, face detection has been in high demand. Due to the outbreak of the global COVID-19 pandemic, face detection has received a lot of attention and research. At present, various machine learning algorithms have been developed for enhanced object detection and face detection [7, 9].

CNN-Based Object Detectors

Although deep learning was first proposed in 2006, it did not receive much attention until 2012. With the advent of large labeled datasets and the increase in computational power, deep learning has been found to be very effective in extracting intrinsic structure and high-level features. A lot of progress has been made in solving different types of problems in AI, especially in areas where the data are multidimensional and the features are difficult to hand-engineer. Some examples are speech recognition, natural language processing, and computer vision. Deep learning is also very useful in areas such as business analysis, medical diagnostics, art creation, and image translation.

Of these areas, computer vision is one field that has had remarkably successful applications of deep learning. This is mainly because vision is the most important human sense for navigation and recognition and provides the most information about the surroundings. With the rapid development of image sensors, computer vision [4] is now applied to a wide spectrum of applications such as autonomous driving, visual surveillance, and facial recognition.

The fundamental step for many computer vision applications is object detection. Extracting the spatial and classification information of an object is usually done first by convolutional neural networks (CNNs), after which more models are added for specific applications. Most research in computer vision therefore depends on the performance of object detection. A convolutional neural network is a kind of artificial neural network used for image recognition and processing that is specifically designed to process pixel data. It is a fundamental example of deep learning, which offers a more advanced evolution of artificial intelligence toward systems that simulate different types of biological brain activity.
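To make the idea of a network that processes pixel data concrete, the following is a minimal sketch of the sliding-window operation at the core of a convolutional layer. It is written in plain Python for illustration only; the function name `conv2d_valid`, the toy image, and the hand-picked kernel are assumptions and are not taken from the model described in this chapter, where such kernels would be learned during training.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide `kernel` over `image` (no padding, stride 1) and sum the products.

    This is the cross-correlation that deep learning libraries call convolution.
    """
    img_h, img_w = len(image), len(image[0])
    k_h, k_w = len(kernel), len(kernel[0])
    out_h, out_w = img_h - k_h + 1, img_w - k_w + 1
    output = [[0.0] * out_w for _ in range(out_h)]
    for row in range(out_h):
        for col in range(out_w):
            total = 0.0
            for i in range(k_h):
                for j in range(k_w):
                    total += image[row + i][col + j] * kernel[i][j]
            output[row][col] = total
    return output


# Toy 4x4 grayscale image: dark left half, bright right half.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical hand-picked kernel that responds where brightness
# increases from left to right (a vertical edge).
edge_kernel = [
    [-1, 1],
    [-1, 1],
]
feature_map = conv2d_valid(image, edge_kernel)
```

The resulting feature map is nonzero only in the column where the dark and bright halves meet, which is the sense in which a convolutional filter extracts a local spatial feature from pixel data.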

Face-Mask Detection

In view of the COVID-19 outbreak, it has become compulsory for everyone to wear masks in public places or wherever people gather. Since not everyone follows this mandate properly, it has become necessary to monitor whether masks are present and whether they are being used properly, to ensure people's safety. People suspected of being infected with COVID-19 need to wear masks to prevent the infection from spreading, and healthy people also need to wear masks to protect themselves from infection. When face masks are fitted properly, they can effectively reduce the transmission of infection by obstructing the particles expelled by a cough or sneeze. Unfortunately, the effectiveness of face masks is reduced mostly by improper wearing. Therefore, it is very important to develop an automatic mask-wearing detection approach that can detect the presence of a mask and whether it has been worn properly. In Wang et al. [5], the authors developed a new anchor-level attention algorithm for occluded face recognition that can boost the different regions of the face and improve accuracy by combining an anchor point allocation strategy with data expansion. They have also assembled different types of datasets for this kind of study.

A drawback of that approach was that, in real-time performance, the different areas were compressed, so the resulting accuracy on the given dataset was just 89.6%. In Loey et al. [3], the authors adopted a hybrid of a transfer learning model and machine learning methods for better classification. The final accuracy reached 99.64% on the Real-World Masked Face Dataset (RMFD). In Cabani et al. and Chiang et al. [1, 2], the authors generated masked face images based on facial feature landmarks and created a huge dataset of 137,016 masked images, which provides more data for training. Along with this, they created mobile applications that help people wear masks correctly.

In Qin and Li [6], the authors have proposed a new face mask wearing condition identification method, which combines image super-resolution and classification networks (SRCNet), based on unconstrained 2D facial images.

Our proposed method uses a customized deep learning model to identify if a person is wearing a mask correctly or not.

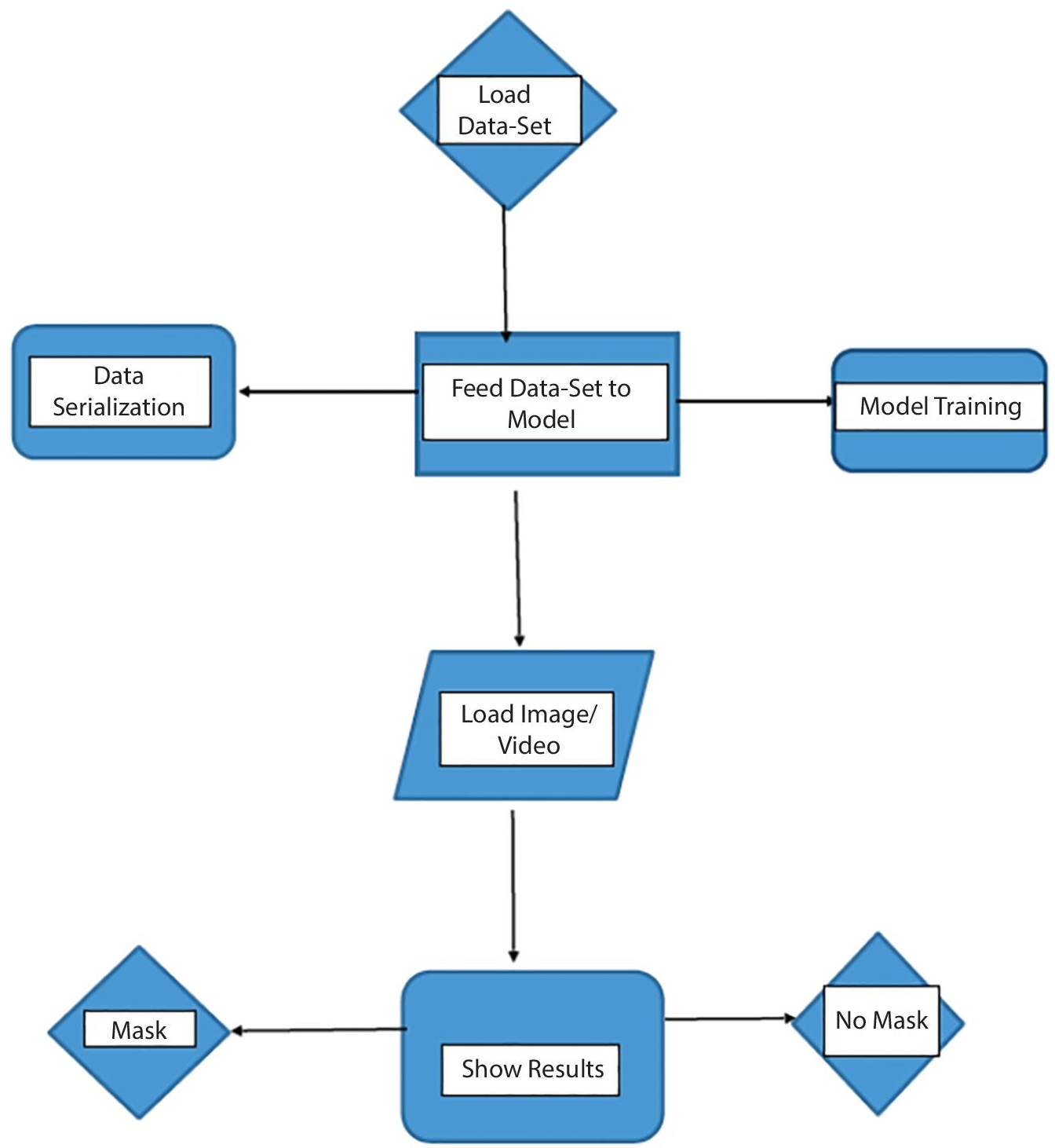

Figure 2.1 The proposed framework.

2.3 The Proposed Framework

It has already been proven that the proper wearing of masks and face coverings can, to a large extent, prevent the wearer from contracting or transmitting the COVID-19 virus. However, it has been observed that many people do not wear their masks properly or do not wear masks at all. The following framework (Figure 2.1) was designed to train a custom deep learning model to detect whether or not a person is wearing a mask.

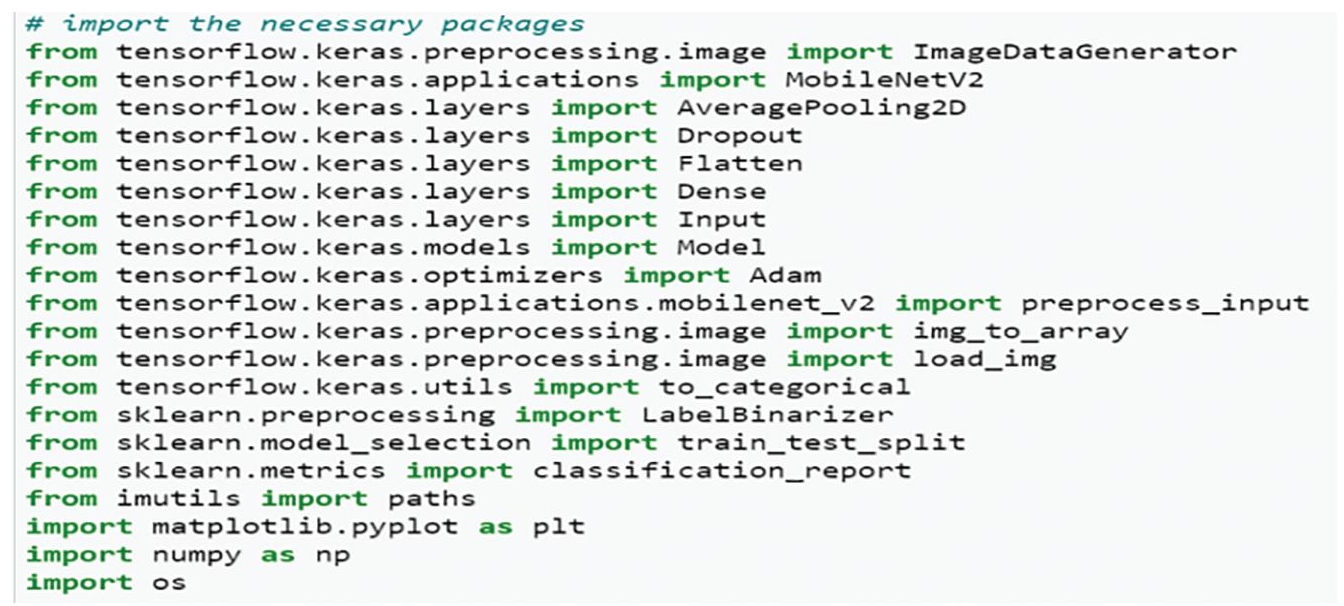

2.4 Set-Up and Libraries Used

This section introduces the various libraries and their functions, along with the setup details. Some of the libraries used were TensorFlow, Keras, and Matplotlib (Figure 2.2).

Libraries and Their Functions

TensorFlow

TensorFlow, created by the Google Brain team, is an open source library for numerical computation and large-scale machine learning. It bundles together machine learning and deep learning (aka neural networking) models and algorithms and makes them usable through a common metaphor. TensorFlow provides multiple application programming interfaces (APIs), which can be classified as low-level and high-level APIs.

Figure 2.2 Some of the libraries used.

Keras

Keras is the high-level API of TensorFlow 2. It is a highly productive interface for solving machine learning problems, with a focus on modern deep learning. It contains essential abstractions and building blocks useful for developing and shipping machine learning solutions with high iteration velocity. It is also relatively easy to learn and work with because it has a Python frontend with a high level of abstraction while offering the option of multiple back-ends for computation. While this makes it a bit slower than other deep learning frameworks, it is very suitable for new learners.

Imutils

This package includes a series of convenience functions for OpenCV that perform basic tasks such as translation, rotation, resizing, and skeletonization.

NumPy

NumPy is a Python library used for working with arrays. It also contains the functions needed for linear algebra, Fourier transforms, and matrices.

OpenCV-Python

OpenCV-Python is the Python API of OpenCV. It combines the best qualities of the OpenCV C++ API and the Python language, so OpenCV-Python is an appropriate tool for fast prototyping of computer vision problems.

Matplotlib

Matplotlib [8] is a visualization library in Python for 2D plots of arrays. It is a multi-platform data visualization library built on NumPy arrays. The main advantage of visualization is that it presents huge amounts of data in easily comprehensible visuals. Matplotlib supports several plot types, such as line, bar, scatter, and histogram.

SciPy

The SciPy package contains various toolboxes dedicated to common issues in scientific computing. There are different submodules for different applications, such as interpolation, integration, optimization, image processing, statistics, and special functions.

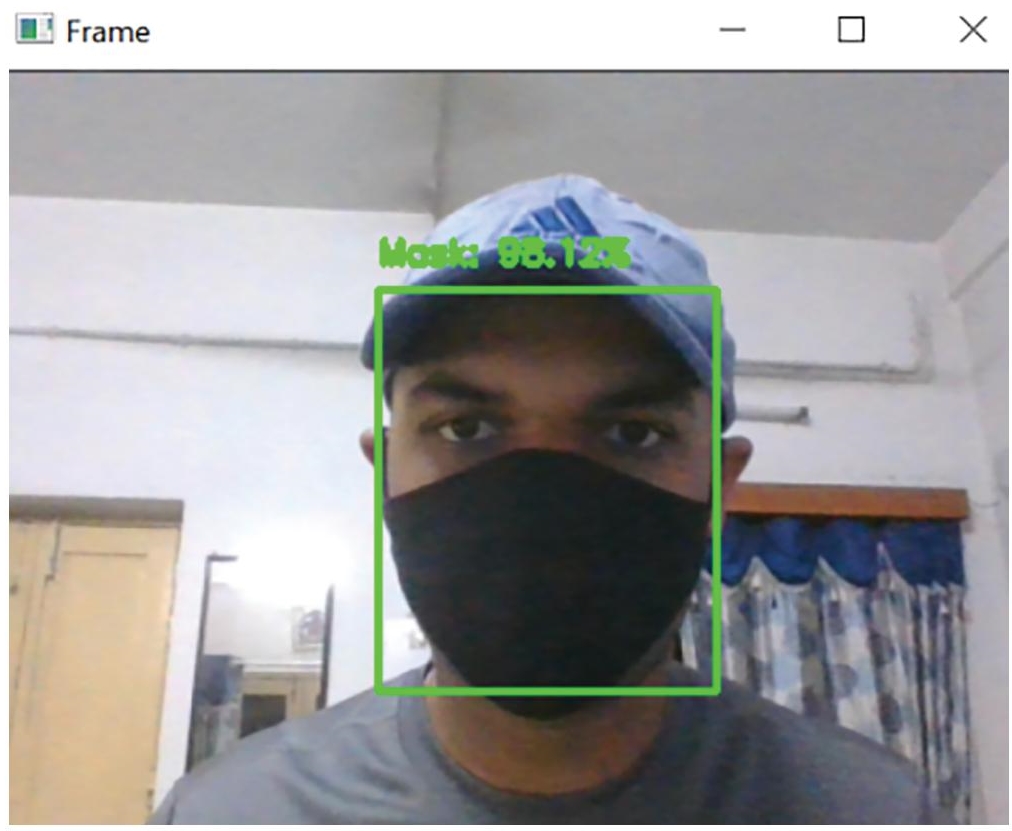

2.5 Implementation

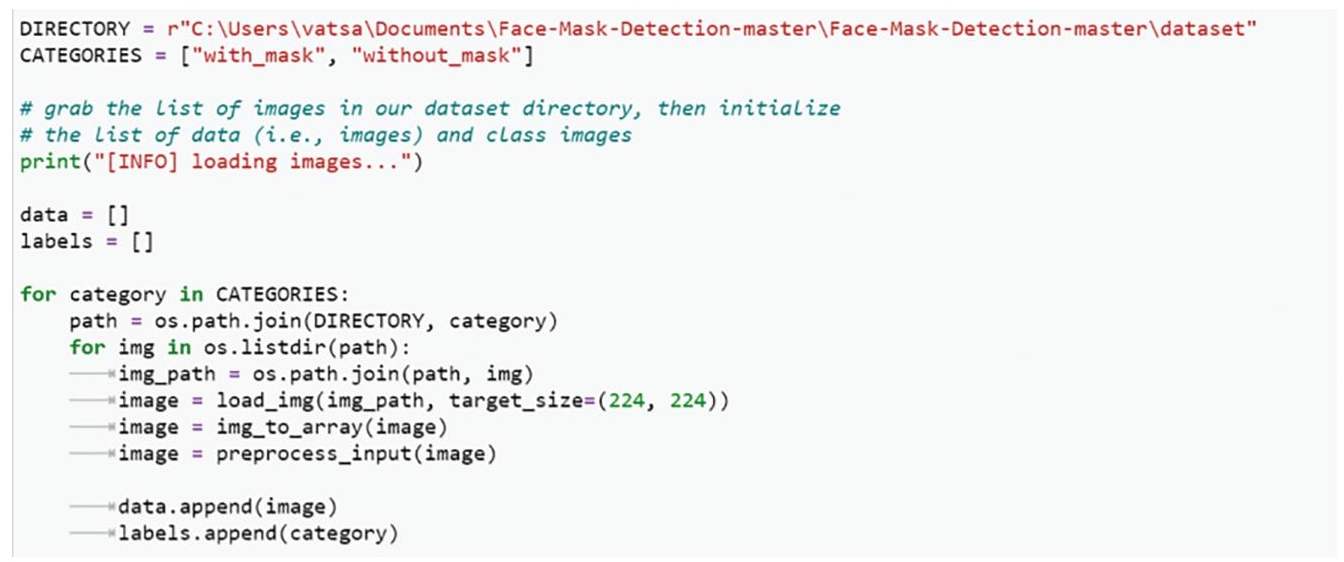

In this section, we present the implementation of a detector that can differentiate between faces with and without masks. To create this model, we need data in the form of images, so we use a dataset containing 3833 images of faces with and without masks (Figure 2.3). To train the model on this dataset, we first import all the necessary libraries mentioned in the previous section. We then move to the training phase by reading all the images, storing the images and their associated paths in lists, and labeling them accordingly.

Figure 2.3 The dataset and directory.

The images are contained in two separate folders, with_mask and without_mask, so it is easy to get the label by extracting the folder name from the path. After this, we move on to data augmentation, which helps to increase the variety of data available for training. The next step is to compile the model and train it on the data. After the model is trained, we plot a graph (Training Loss Graph) to monitor the learning curve.
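The labeling step described above can be sketched as follows. This is a minimal illustration using only the Python standard library, not the chapter's actual training script: the folder names with_mask and without_mask come from the text, while the helper names `label_from_path` and `one_hot`, the `dataset/` root, and the example file names are hypothetical.

```python
from pathlib import PurePosixPath
from typing import List, Tuple

# Folder names as given in the text; their order fixes the one-hot layout.
CLASS_NAMES: Tuple[str, ...] = ("with_mask", "without_mask")


def label_from_path(image_path: str) -> str:
    """Return the name of the folder that directly contains the image."""
    return PurePosixPath(image_path).parent.name


def one_hot(label: str, class_names: Tuple[str, ...] = CLASS_NAMES) -> List[int]:
    """Encode a class name as a one-hot vector, e.g. 'with_mask' -> [1, 0]."""
    index = class_names.index(label)  # raises ValueError for unknown labels
    return [1 if i == index else 0 for i in range(len(class_names))]


# Hypothetical file names used only for illustration.
example_paths = [
    "dataset/with_mask/0001.jpg",
    "dataset/without_mask/0002.jpg",
]
labels = [label_from_path(path) for path in example_paths]
encoded = [one_hot(label) for label in labels]
```

Deriving the label from the parent folder means no separate annotation file is needed, at the cost of relying on every image being stored in the correct folder.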

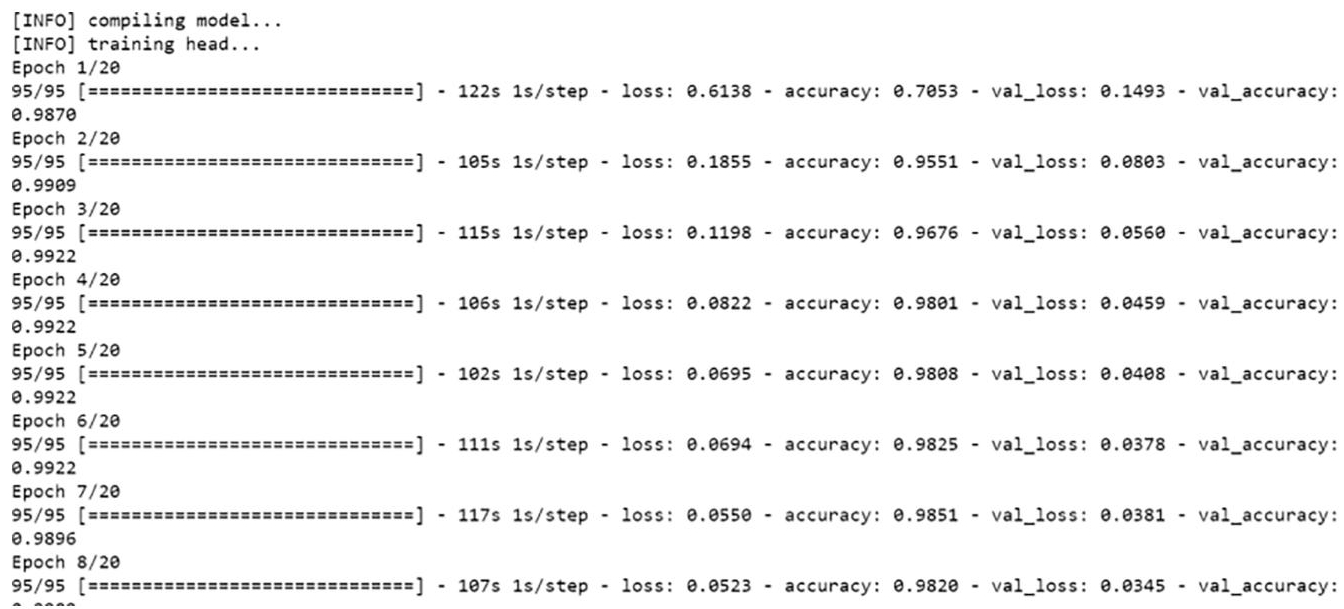

Figure 2.4 Training the model.

Figure 2.5 Training the model.

The dataset we are working on consists of 3833 images: 1915 images of people wearing masks and 1918 images of people without masks.

Steps for the training model:

This represents the training phase of the model, in which we train on the 3833 images of masked and unmasked faces in batches of 20 (Figures 2.4, 2.5).
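As a rough illustration of what training in batches of 20 implies, the sketch below splits 3833 items into consecutive batches and counts them. It assumes, for simplicity, that all 3833 images are used for training; the chapter does not state whether part of the dataset is held out for testing, in which case the number of batches per epoch would be smaller. The helper `iter_batches` is hypothetical and the integers stand in for real images.

```python
import math
from typing import Iterator, List, Sequence, TypeVar

T = TypeVar("T")


def iter_batches(items: Sequence[T], batch_size: int) -> Iterator[List[T]]:
    """Yield consecutive slices of `items`; the last batch may be smaller."""
    if batch_size <= 0:
        raise ValueError("batch_size must be positive")
    for start in range(0, len(items), batch_size):
        yield list(items[start:start + batch_size])


NUM_IMAGES = 3833  # dataset size stated in the text
BATCH_SIZE = 20    # batch size stated in the text

image_ids = list(range(NUM_IMAGES))  # stand-ins for the real images
batches = list(iter_batches(image_ids, BATCH_SIZE))
steps_per_epoch = math.ceil(NUM_IMAGES / BATCH_SIZE)
```

Under this assumption, one pass over the data takes 192 weight updates, the last of which sees only the 13 remaining images.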

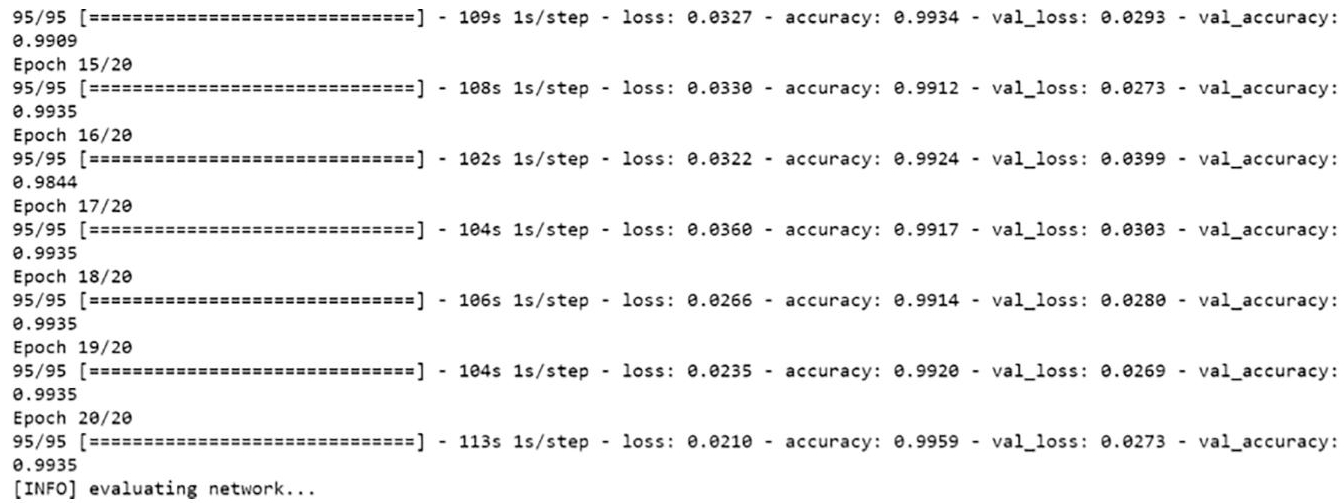

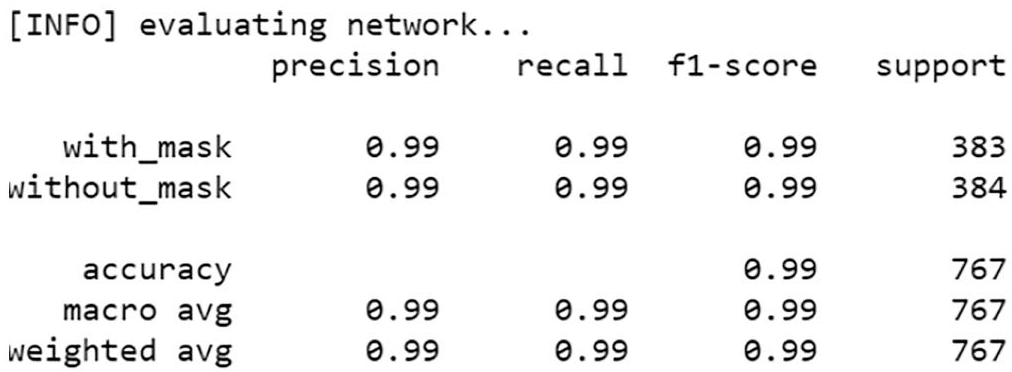

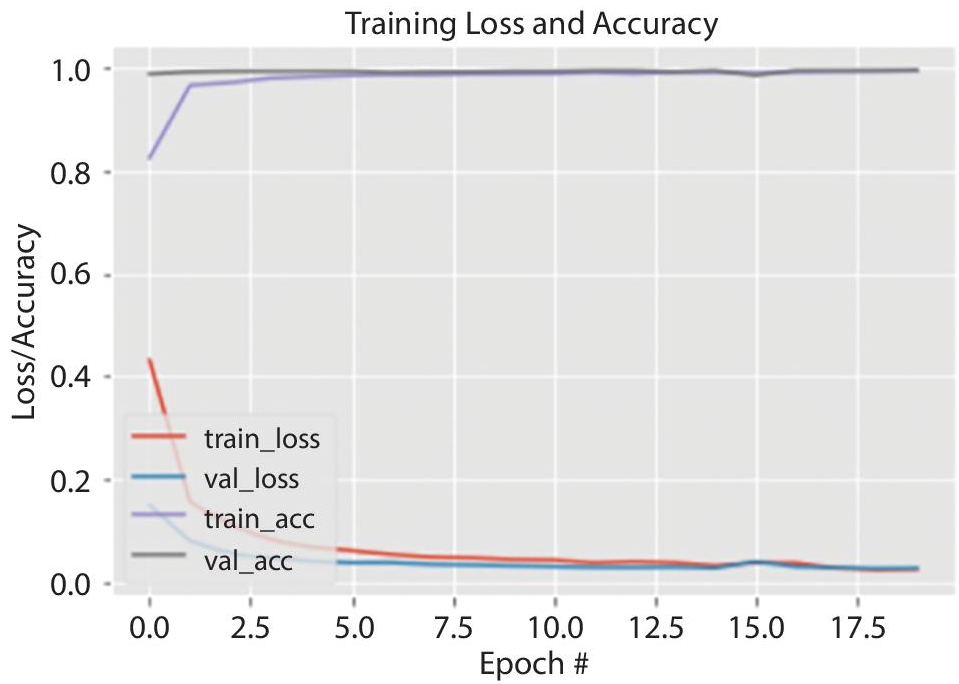

2.6 Results and Analysis

Once the training job is complete, the next step is to extract the newly trained inference graph, which will later be used to perform object detection, that is, to detect whether the subject is wearing the mask properly or not. This is done using the TensorFlow object detection program. Figure 2.6 shows the stage of evaluation of the networks and the precision scores. Figure 2.7 shows the training loss and accuracy graph. Figures 2.8 through 2.13 show the results when the model was tested on various types of faces with and without masks.
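For readers unfamiliar with the precision and recall scores referred to here, the following sketch shows how they are computed for one class from true and predicted labels. The function `precision_recall` and the five example labels are invented for illustration and are not the chapter's test data.

```python
from typing import Sequence, Tuple


def precision_recall(
    y_true: Sequence[str], y_pred: Sequence[str], positive: str
) -> Tuple[float, float]:
    """Compute precision and recall for the class named `positive`."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    return precision, recall


# Made-up labels: one masked face is wrongly predicted as unmasked.
y_true = ["with_mask", "with_mask", "with_mask", "without_mask", "without_mask"]
y_pred = ["with_mask", "with_mask", "without_mask", "without_mask", "without_mask"]
precision, recall = precision_recall(y_true, y_pred, positive="with_mask")
```

In this toy case every face predicted as masked really is masked, so precision is 1.0, but one of the three masked faces is missed, so recall is 2/3. High precision with slightly lower recall is the same pattern as the 100% and 99% figures quoted in the abstract.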

Figure 2.6 Evaluation of the networks and the precision scores.

Figure 2.7 Training loss and accuracy graph on the COVID-19 dataset.

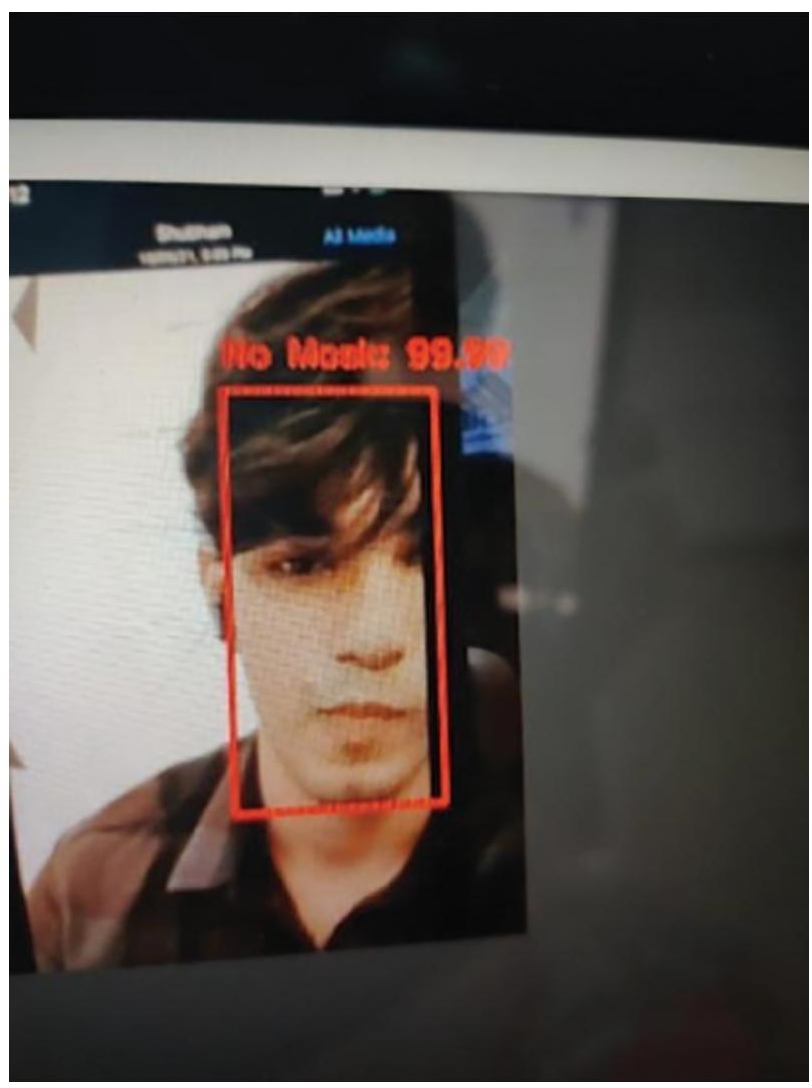

Figure 2.8 In real time, with cap, face detected without a mask with 100% accuracy.

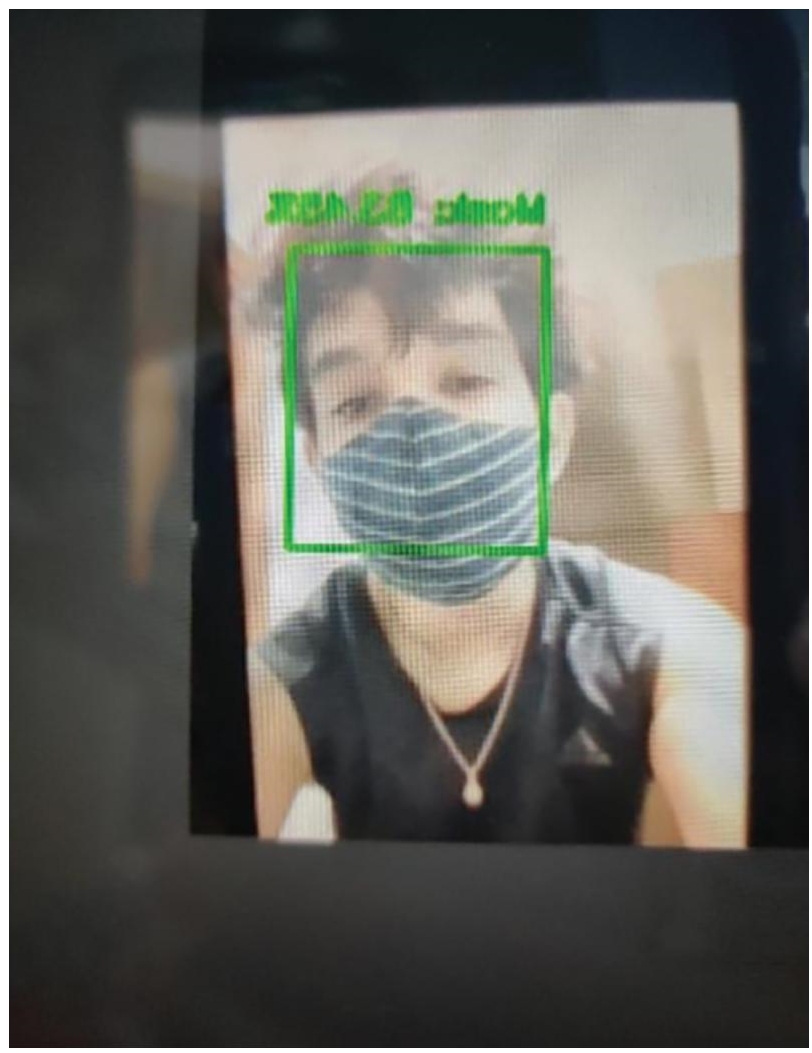

Figure 2.9 In real time, with cap, face detected with the mask with 99% accuracy.

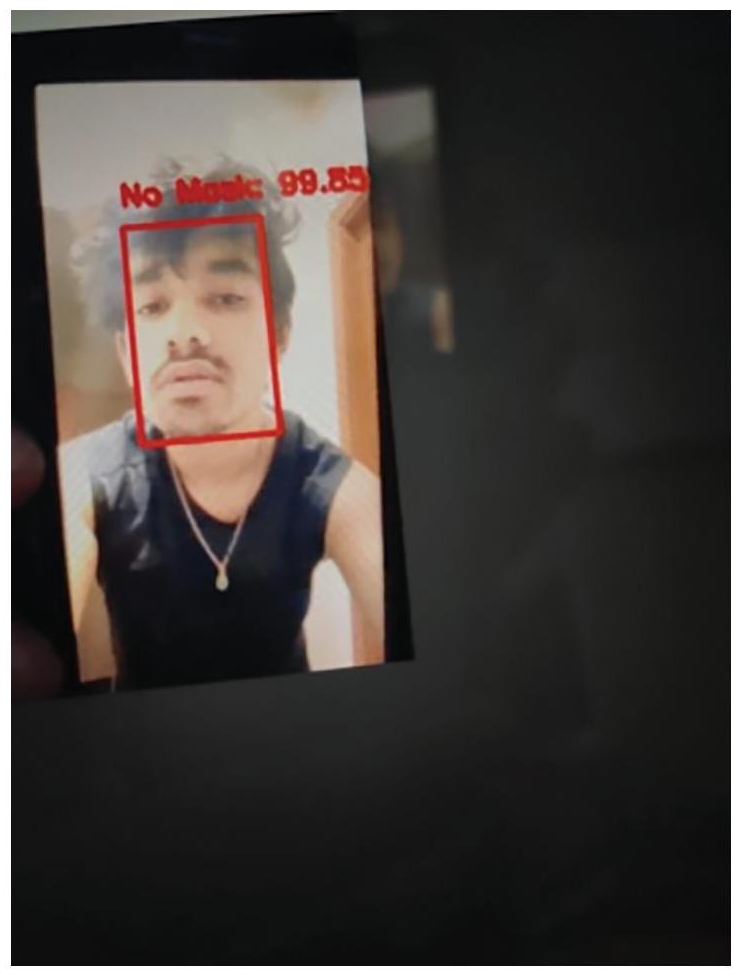

Figure 2.10 In real time, the person's face in the image is detected without mask with 99% accuracy.

Figure 2.11 In real time, the person's face in the image is detected with the mask with 99% accuracy.

Figure 2.12 In real time, the person's face in the image is detected without mask with 99% accuracy.

Figure 2.13 In real time, the person's face in the image is detected with the mask with 92% accuracy.
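The percentages in Figures 2.8 through 2.13 are presumably the classifier's confidence for the predicted class on each detected face. Under that assumption, the sketch below shows one way the two class probabilities could be turned into an on-screen caption and an alert flag. The function `describe_prediction`, the caption format, and the probabilities passed to it are hypothetical and are not taken from the chapter's implementation.

```python
from typing import Tuple


def describe_prediction(p_mask: float, p_no_mask: float) -> Tuple[str, str, bool]:
    """Return (label, caption, raise_alert) for one detected face.

    `p_mask` and `p_no_mask` are assumed to be the classifier's two
    output probabilities for the face region.
    """
    if p_mask > p_no_mask:
        label, confidence = "Mask", p_mask
    else:
        label, confidence = "No Mask", p_no_mask
    caption = f"{label}: {confidence * 100:.0f}%"
    raise_alert = label == "No Mask"  # e.g. to trigger a warning sound
    return label, caption, raise_alert


# Made-up probabilities for a single face.
label, caption, raise_alert = describe_prediction(p_mask=0.08, p_no_mask=0.92)
```

Keeping the alert decision separate from the caption makes it straightforward to later replace the simple comparison with a stricter rule, such as requiring a minimum confidence before an alert is raised.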

2.7 Conclusion and Future Work

In this chapter, we developed a model for detecting masks on persons in real time. Since the onset of the pandemic, more than half the world has made the wearing of face masks compulsory in order to limit the spread of the virus. The main aim of our project was to extend it to existing flash drives, IP cameras, and CCTV cameras in order to detect whether people are wearing masks and, if they are, whether the masks are being worn correctly. This detection mechanism can then be installed in different devices to obtain a live feed showing whether the people entering the premises are wearing masks. Further, a trigger such as a siren or a bell sound can be added for when someone enters without wearing a mask properly. This can be used to enhance vision-based monitoring systems for several applications, such as checking whether people are wearing masks as per stipulations or generating crowd statistics.

The proposed model can be used as a surveillance tool at public places to check whether a person is wearing a mask properly according to stipulations. Here, MobileNet is used as the backbone of the architecture and is suitable for both low- and high-computation scenarios. For the extraction of more robust features, transfer learning has been used to adopt weights from a similar task of face detection, which was trained on a very large dataset. We have used OpenCV, TensorFlow, Keras, SciPy, and Matplotlib for detecting whether a person is wearing a mask. The models were tested with images as well as real-time video streams. The accuracy of the model was improved by tuning the hyperparameters. By implementing the face mask detection framework, we can detect whether a person is wearing a face mask and whether it is being worn correctly and, based on this, decide whether the person should be allowed entry to a public place, which would be very useful for screening where large numbers of people enter.

The main limitation here, however, was that there were hardly any available datasets containing the data needed for training the models. However, since a lot of research is now being done in this area, this has led to the creation of custom-made datasets for training models of this type.

References

- 1. Cabani, A., Hammoudi, K., Benhabiles, H., Melkemi, M., MaskedFace-Net: A dataset of correctly/incorrectly masked face images in the context of COVID-19. Smart Health (Amst), 19, 100144, 2021.

- 2. Chiang, D., Detect faces and determine whether people are wearing mask, 2020. https://github.com/AIZOOTech/FaceMaskDetection.

- 3. Loey, M., Manogaran, G., Taha, M.H.N., Khalifa, N.E.M., A hybrid deep transfer learning model with machine learning methods for face mask detection in the era of the COVID-19 pandemic. Measurement (Lond), 167, 108288, 2021.

- 4. Khandelwal, P., Khandelwal, A., Agarwal, S., Using computer vision to enhance safety of workforce in manufacturing in a post COVID world, 2020. arXiv preprint arXiv:2005.05287.

- 5. Wang, Z., Wang, G., Huang, B., Xiong, Z., Hong, Q., Wu, H., Yi, P., Jiang, K., Wang, N., Pei, Y. et al., Masked face recognition dataset and application, 2020. arXiv preprint arXiv:2003.09093.

- 6. Qin, B. and Li, D., Identifying face mask wearing condition using image super-resolution with classification network to prevent COVID-19, Sensors, 18, pp. 5236, 2020.

- 7. https://docs.microsoft.com/en-us/azure/machine-learning/component-reference/normalize-data

- 8. https://matplotlib.org/stable/index.html, Matplotlib 3.5.2 documentation.

- 9. https://machinelearningmastery.com/machine-learning-with-python/, Machine Learning Mastery with Python.

Note

- * Corresponding author: [email protected]