Most existing methods of multi-class classification are either based on binary classifiers or reduce to them. The general idea is to train a set of binary classifiers that separate different groups of objects from each other, and then to combine their outputs with some voting scheme.

In the one-against-all strategy for N classes, N classifiers are trained, each of which separates its class from all other classes. At the recognition stage, the unknown vector X is fed to all N classifiers, and it is assigned to the class whose classifier gives the highest estimate. This approach can suffer from class imbalance. Even if the multi-class task is initially balanced (that is, it has the same number of training samples in each class), each binary problem sets one class against the other N - 1, so the ratio of negative to positive samples is (N - 1):1 and grows with the number of classes. The effect is significant for tasks with a large number of classes.
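The decision rule of the one-against-all strategy can be sketched in a few lines of C++. This is only a minimal illustration: the `Sample` and `BinaryScorer` types and both function names are hypothetical and do not come from any particular library, and the binary classifiers are assumed to be already trained and to return a real-valued estimate.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>

// Hypothetical types for illustration: a feature vector and a trained binary
// classifier that returns a real-valued estimate for "its" class.
using Sample = std::vector<double>;
using BinaryScorer = std::function<double(const Sample&)>;

// One-against-all prediction: evaluate all N scorers on x and return the
// index of the class whose scorer gave the highest estimate.
std::size_t PredictOneVsAll(const std::vector<BinaryScorer>& scorers,
                            const Sample& x) {
  assert(!scorers.empty());
  std::size_t best_class = 0;
  double best_score = scorers[0](x);
  for (std::size_t c = 1; c < scorers.size(); ++c) {
    const double score = scorers[c](x);
    if (score > best_score) {
      best_score = score;
      best_class = c;
    }
  }
  return best_class;
}

// Negative-to-positive sample ratio of each binary problem, assuming the
// original N-class task is balanced: (N - 1) : 1.
double NegativeToPositiveRatio(std::size_t num_classes) {
  assert(num_classes >= 2);
  return static_cast<double>(num_classes - 1);
}
```

For a balanced task with 10 classes, `NegativeToPositiveRatio(10)` evaluates to 9, which shows how quickly each binary problem becomes skewed.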

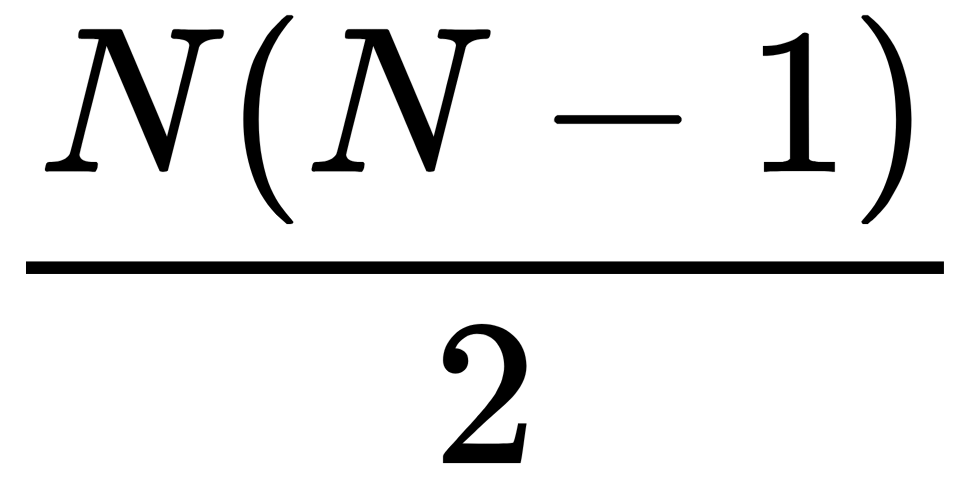

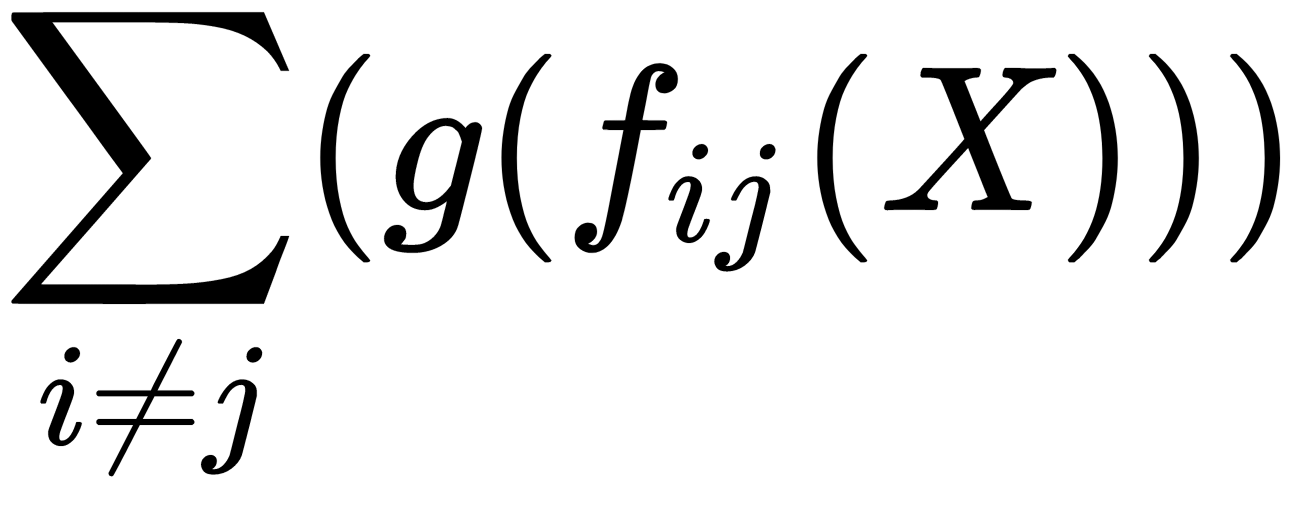

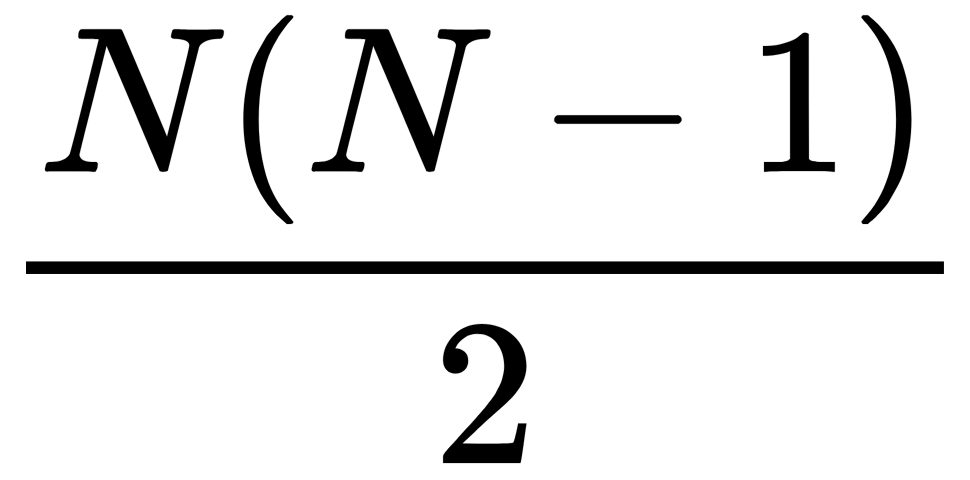

The each-against-each strategy allocates  classifiers. These classifiers are trained to distinguish all possible pairs of classes of each other. For the input vector, each classifier gives an estimate of

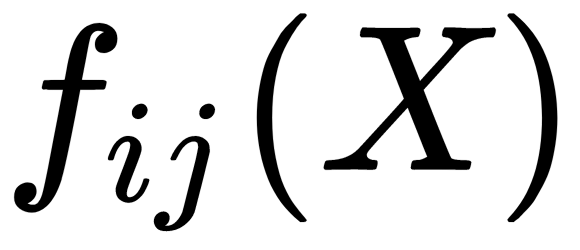

classifiers. These classifiers are trained to distinguish all possible pairs of classes of each other. For the input vector, each classifier gives an estimate of  , reflecting membership in the classes

, reflecting membership in the classes  and

and  . The result is a class with a maximum sum

. The result is a class with a maximum sum  , where g is a monotonically non-decreasing function—for example, identical or logistic.

, where g is a monotonically non-decreasing function—for example, identical or logistic.
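The each-against-each voting rule can be sketched as follows. The names `PairScorer`, `PredictEachVsEach`, and `NumPairwiseClassifiers` are hypothetical. The sketch also assumes that the pairwise estimates are antisymmetric (the estimate for the pair (j, i) is the negative of the estimate for (i, j)), so that one classifier per unordered pair is enough; this is a convention chosen for the example, not something the strategy requires.

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <functional>
#include <vector>

using Sample = std::vector<double>;
// Hypothetical pairwise classifier: returns the estimate for the pair (i, j).
// Positive values favour class i, negative values favour class j.
using PairScorer =
    std::function<double(std::size_t i, std::size_t j, const Sample&)>;

// Number of pairwise classifiers needed for N classes: N(N-1)/2.
std::size_t NumPairwiseClassifiers(std::size_t num_classes) {
  return num_classes * (num_classes - 1) / 2;
}

// One possible choice of the monotonically non-decreasing function g.
double Logistic(double t) { return 1.0 / (1.0 + std::exp(-t)); }

// Sum g(f_ij(x)) over all opponents j for every class i and return the class
// with the maximum sum. Assumes f_ji(x) = -f_ij(x).
std::size_t PredictEachVsEach(
    std::size_t num_classes, const PairScorer& f, const Sample& x,
    const std::function<double(double)>& g = Logistic) {
  assert(num_classes >= 2);
  std::vector<double> total(num_classes, 0.0);
  for (std::size_t i = 0; i < num_classes; ++i) {
    for (std::size_t j = i + 1; j < num_classes; ++j) {
      const double fij = f(i, j, x);
      total[i] += g(fij);
      total[j] += g(-fij);
    }
  }
  std::size_t best = 0;
  for (std::size_t c = 1; c < num_classes; ++c) {
    if (total[c] > total[best]) best = c;
  }
  return best;
}
```

Every pairwise classifier is evaluated for every input here, so the prediction cost grows quadratically with the number of classes.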

The shooting tournament strategy also involves training $N(N-1)/2$ classifiers that distinguish all possible pairs of classes. Unlike the previous strategy, at the stage of classifying the vector X, we arrange a tournament between classes. We create a tournament tree, where each class has one opponent and only the winner goes on to the next stage. So, at each step, a single classifier decides between two classes for the vector X, and the winning class then determines which classifier, for which pair of classes, is used next. The process continues until only one winning class is left, which is taken as the result.
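A knockout bracket is one way to realize such a tournament, and the sketch below assumes that layout. The `PairDecider` type and the `PredictTournament` function are hypothetical names, and giving a bye to the unpaired class in a round with an odd number of classes is a choice made for this example.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <numeric>
#include <utility>
#include <vector>

using Sample = std::vector<double>;
// Hypothetical pairwise classifier: given classes a and b, returns whichever
// of the two it assigns the sample to.
using PairDecider =
    std::function<std::size_t(std::size_t a, std::size_t b, const Sample&)>;

// Knockout tournament: in each round the remaining classes are paired up,
// one classifier decides each pair, and only the winners advance.
std::size_t PredictTournament(std::size_t num_classes, const PairDecider& duel,
                              const Sample& x) {
  assert(num_classes >= 1);
  std::vector<std::size_t> alive(num_classes);
  std::iota(alive.begin(), alive.end(), std::size_t{0});
  while (alive.size() > 1) {
    std::vector<std::size_t> winners;
    for (std::size_t k = 0; k + 1 < alive.size(); k += 2) {
      winners.push_back(duel(alive[k], alive[k + 1], x));
    }
    // With an odd number of classes, the unpaired one advances unopposed.
    if (alive.size() % 2 == 1) winners.push_back(alive.back());
    alive = std::move(winners);
  }
  return alive.front();
}
```

Each duel eliminates exactly one class, so a prediction evaluates only N - 1 of the trained classifiers, rather than all of them as in the each-against-each vote.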

Some methods handle multi-class classification natively, without any additional configuration or combination of binary classifiers. The kNN algorithm and neural networks are examples of such methods.

Now that we have become familiar with some of the most widespread classification algorithms, let's look at how to use them in different C++ libraries.