Fingerphoto Authentication Using Smartphone Camera Captured Under Varying Environmental Conditions

Aakarsh Malhotra; Anush Sankaran; Apoorva Mittal; Mayank Vatsa; Richa Singh IIIT Delhi, Delhi, India

Abstract

With the rapid growth of smartphone technology, there is a need to secure access to critical data using personal authentication. Existing access mechanisms such as PINs and passwords are vulnerable to shoulder-surfing attacks. Biometric modalities such as fingerprints are currently being explored in smartphones as a more secure authentication mechanism. Typically, fingerprint capture requires an extra sensor, which adds to the cost of the device and cannot be retrofitted to existing smartphones. Fingerphoto images captured with the rear camera offer a cheap alternative that needs no dedicated sensor. However, unlike fingerprints, fingerphoto images are captured in far less controlled environments, including outdoor conditions. Hence, fingerphoto matching faces many challenges, such as varying environmental illumination and surrounding background. We propose a novel end-to-end fingerphoto matching pipeline and study the effect of different environmental conditions on fingerphoto matching. The pipeline makes the following major contributions: (i) a segmentation technique to extract the fingerphoto region of interest from a varying background, (ii) an enhancement module to neutralize illumination imbalance and increase ridge–valley contrast, (iii) a scattering network based fingerphoto representation, invariant to geometric transformations, to handle pose variations, and (iv) a learning based matching technique to accommodate the wide variations occurring in fingerphoto images. To experimentally study challenging conditions such as background and illumination, we create a publicly available fingerphoto dataset, the IIITD SmartPhone Fingerphoto Database v1, along with the corresponding live-scan prints.
The experiments performed on the dataset show that the proposed matching pipeline improves performance compared with some existing approaches.

Keywords

Fingerphoto matching; Scattering networks; Outdoor identification; Smartphone authentication

Acknowledgements

This research work is partially funded by TCS PhD Fellowship and Visvesvaraya PhD fellowship from the Government of India. The database collection process was quite extensive and spanned two different sessions, hence we would like to thank all the subjects for participating and cooperating in the data collection process. We would also like to thank Dr. Saket Anand and Dr. Angshul Majumdar for their feedback during the research work.

6.1 Introduction

Biometrics is the science of uniquely identifying a human based on physiological or behavioral traits. Biometrics has the unique advantage of being “something that you are” instead of “something that you possess,” like a key or ID card, or “something that you know,” such as a password or PIN [1]. Some popular biometric modalities for human recognition include face, fingerprint, iris, voice, and gait. Recognizing individuals based on these modalities has been well explored in constrained environments [1]. With the growing demand for reliable personal authentication, biometric systems are used extensively in civil and commercial applications such as access control, transaction systems, and cross-border security. Recent advances in technology and data handling capacity have opened a new era of secure authentication and have allowed researchers to explore the use of biometrics in completely unconstrained environments. Biometric authentication on handheld devices such as smartphones is one such application that has gained significant attention.

Smartphones are increasingly becoming an integral part of human life and are currently recognized as the fastest spreading technology in the world [2]. As people depend on them more, a smartphone or other handheld device holds a great deal of personal and critical information, so it is essential to give users a usable and secure mechanism for accessing their data. Traditionally, smartphone user authentication is based on PINs, passwords, and patterns [3]. However, these methods are susceptible to shoulder-surfing attacks. Biometric modalities provide a more secure mechanism for mobile authentication, and among them, fingerprint based authentication is currently being explored in some recent smartphones. Existing fingerprint based mechanisms, such as those in Apple iPhone and Samsung devices, include a dedicated sensor, which increases the cost of the smartphone and cannot be retrofitted to existing devices. In 2012, about ![]() of all the cameras in use were on mobile devices [2]. Thus, using the ubiquitous rear camera of a smartphone as a sensor to capture images of a finger (known as fingerphoto images) offers a simple and universal alternative to fingerprints.


Fig. 6.1A demonstrates the method of capturing fingerphotos, and Fig. 6.1B shows a sample fingerphoto image captured using the rear camera of a smartphone. It can be observed that fingerphoto images can be captured in any kind of indoor or outdoor environment and varying illumination conditions such as broad daylight or nighttime. The challenges associated with smartphone camera based fingerphoto authentication can be summarized as follows:

1. Uncontrolled background. Uncontrolled real time capture of images may result in any kind of background in the image. Also, the nearest background object can be very close to the finger or very far, making segmentation a challenging task.

2. Varying illumination. Fingerphotos can be captured under controlled indoor illumination or under completely uncontrolled outdoor illumination. Further, the presence or absence of a flash during capture makes preprocessing difficult.

3. Feature extraction. Existing minutia extraction approaches may yield very noisy responses from fingerphoto images [4], as shown in Fig. 6.2.

4. Mobile camera. Cameras in different smartphones differ in features such as resolution, autofocus, and flash LEDs, which can affect the quality of the captured fingerphoto.

5. Finger position. Challenges arise from changes in finger orientation during capture and from the varying distance between the finger and the camera.

6. Skin texture. Skin texture might vary because of the presence of any kind of natural dirt, water, sweat, or other uncontrollable factors.

6.2 Literature Survey

Researchers have explored mobile fingerphoto based recognition in the literature, and Table 6.1 summarizes these approaches. Existing research has primarily focused on fingerphoto preprocessing to remove noise and enhance ridge patterns, and most existing work uses variations of minutiae based techniques to match fingerphotos. Lee et al. [5] studied this problem in 2005 and identified foreground segmentation of the region of interest and ridge orientation extraction as the two challenging problems. Later, as fingerphoto matching moved to more uncontrolled environments, Lee et al. [6] proposed an algorithm in 2008 that estimates the pose of the captured fingerphoto and normalizes it for matching. They also proposed a quality check algorithm to discard out-of-focus fingerphoto images. However, the four databases collected for their experiments were not made publicly available, limiting further research. In 2012, Stein et al. [7] proposed a segmentation and quality enhancement algorithm based on ridge edge density that works irrespective of finger position. Li et al. [4,9] created a database of fingerphoto images collected in both indoor and outdoor environments. They proposed a novel SVM based quality estimation algorithm for images with varying background and illumination, and used the quality metric to improve an existing minutiae based matching algorithm. However, the fingerphoto images were segmented manually, and this challenging dataset was not made publicly available for research. Stein et al. [10] studied the use of a sequence of fingerphoto images to prevent spoofing; their data was collected in a controlled environment and not made publicly available. The major limitations of existing research are as follows:

• Most existing fingerphoto matching algorithms use traditional minutiae based matching. However, Li et al. [9] showed that minutiae extracted from fingerphoto images are highly spurious. Hence, it is important to explore non-minutiae based matching algorithms.

• Existing research works have each focused on specific problems in fingerphoto matching. There is no end-to-end pipeline covering preprocessing, feature extraction, and matching that addresses multiple challenges together.

• There is no publicly available dataset and protocol to promote benchmarking in the important problem of smartphone based fingerphoto matching.

Table 6.1

A literature survey of existing algorithms for processing and matching fingerphoto images captured using mobile phones

| Research | Database | Challenges | Problems addressed | Limitations |
|---|---|---|---|---|
| Lee et al. 2005 [5] | 2 subsets of 400 and 840 images | Simulated resolution | Segmentation, ridge orientation extraction | No extensive experiments, data not made publicly available |
| Lee et al. 2008 [6] | Samsung DB-I, II, III, IV with 120 fingerphoto sequences, 1200 fingerprints | Finger position | Quality estimation | Controlled illumination, database not public |
| Stein et al. 2012 [7] | 41 subjects using two mobiles | Finger position | Quality estimation, segmentation, enhancement | No extensive experiments, data not made publicly available |
| Derawi et al. 2012 [8] | 1320 images using two different mobiles | -Nil- | Fingerphoto matching | Controlled illumination, external hardware used, database not public |
| Li et al. 2012 [9] | 2100 fingerphotos using three mobiles | Background, illumination | Fingerphoto matching | Manual segmentation, data not available |
| Li et al. 2013 [4] | 2100 fingerphotos using three mobiles | Background, illumination | Quality estimation | Manual segmentation, data not made publicly available |
| Stein et al. 2013 [10] | 990 fingerphoto images, 66 finger videos | Spoofing | Enhancement, matching | Controlled illumination and background, data not available |

6.3 IIITD SmartPhone Fingerphoto Database v1

One of the primary challenges limiting research and benchmarking in fingerphoto matching is the lack of a publicly available fingerphoto dataset incorporating multiple variations. To address this, we create a new public fingerphoto dataset, the IIITD SmartPhone Fingerphoto Database v1 (ISPFD-v1), to study the impact of background and surrounding illumination on fingerphoto matching. Table 6.2 summarizes the dataset, and Fig. 6.3 shows sample images from each subset. Data is collected from 64 subjects; for both the right index and right middle fingers, each subject contributes 8 fingerphoto samples in each of the four capture conditions and 8 live-scan impressions, resulting in 128 classes and over 5100 images in total. The dataset has three sets: varying background, varying illumination, and the corresponding live-scan impressions. The fingerphoto images are collected using an Apple iPhone 5 at 8 MP with autofocus on and the flash LED off, and the live-scan impressions are captured using a Lumidigm Venus IP65 sensor. The details of the three sets are explained below.

Table 6.2

A summary of the multiple subsets and their variations in the IIITD SmartPhone Fingerphoto Database v1

| Set | Background | Illumination | Subset | Classes | Images |
|---|---|---|---|---|---|
| Set 1: Background | White | Indoor | WI | 128 | 1024 |
| Set 1: Background | Natural | Indoor | NI | 128 | 1024 |
| Set 2: Illumination | White | Outdoor | WO | 128 | 1024 |
| Set 2: Illumination | Natural | Outdoor | NO | 128 | 1024 |
| Set 3: Live-scan | – | – | LS | 128 | 1024 |

6.3.1 Set 1: Background Variation

With this set, we aim to study the impact of background. The set was captured under controlled indoor illumination with both a white background and natural backgrounds. The white background was created using an A4 sheet of paper, while the natural backgrounds included a variety of objects typically found indoors. The Natural Indoor (NI) subset was collected to study the impact of changing backgrounds under constant, controlled indoor illumination. Both the White Indoor (WI) and Natural Indoor (NI) subsets have 8 images per subject for each finger (right index and right middle). Hence, the total number of images in this set is 64 subjects × 2 background types × 2 fingers × 8 samples = 2048 images. Fig. 6.3A and Fig. 6.3C show some sample images of this set.

6.3.2 Set 2: Illumination Variation

With this set, we aim to study the effect of illumination. This set was captured in a completely uncontrolled outdoor illumination environment with both white and natural backgrounds. The Natural Outdoor (NO) subset incorporates both challenges simultaneously, that is, changing background and uncontrolled outdoor illumination. The White Outdoor (WO) and Natural Outdoor (NO) subsets each contain 1024 images, for a total of 2048 images. Some sample images from this set are shown in Fig. 6.3B and Fig. 6.3D.

6.3.3 Set 3: Live-Scan Fingerprints

This set is created to evaluate the performance of matching systems where the gallery consists of live-scan fingerprints while the probe images are camera captured fingerphotos. We capture live-scan images using a Lumidigm Venus IP65 sensor. The number of images in this set is 64 subjects × 2 fingers × 8 samples = 1024 images. Some example images of this set are shown in Fig. 6.3E.

6.4 Proposed Fingerphoto Matching Algorithm

We propose a novel end-to-end pipeline for matching fingerphoto images under various challenges. As shown in Fig. 6.4, the proposed pipeline has four major steps: (i) fingerphoto segmentation, (ii) fingerphoto enhancement, (iii) feature representation, and (iv) fingerphoto matching. As shown in Fig. 6.5, the preprocessing stage consists of segmenting the region of interest and enhancing the fingerphoto. Two types of enhancement are attempted in this pipeline: (i) an image enhancement algorithm to improve ridge–valley contrast and (ii) Local Binary Pattern (LBP) based fingerphoto enhancement. As the performance of the matching system strongly depends on feature extraction, we propose a novel scattering network based fingerphoto representation for robustness. Further, we propose a learning based algorithm for fingerphoto matching that accounts for the variations present in the data, making the approach more generalizable.

6.4.1 Fingerphoto Segmentation

The aim of fingerphoto segmentation is to extract the relevant foreground information containing the finger ridge patterns. The major challenges for segmentation are the varying background and the varying distance between the finger and the camera. We observed that the skin color of the finger remains mostly consistent despite varying background and illumination. Following this observation, the fingerphoto is segmented from the background using an adaptive skin color thresholding algorithm. Given an input image, we convert it to the CMYK color space, whose magenta channel preserves most of the skin color. Otsu's method [11] is used to compute an adaptive binary threshold on the magenta (M) channel, which yields a binary mask of the fingerphoto. However, this mask contains both false negative and false positive errors: it leaves out certain finger regions and labels some background noise as foreground. We use the shape information of the finger to reduce this noise by applying morphological operations to the binary mask. A morphological opening with a square structuring element is applied twice to reduce false positives, and the largest connected component is then extracted using run-length encoding to minimize the false negative error. As a result of this coarse segmentation, a single connected binary mask corresponding to the finger region is obtained as the foreground. To find a rectangular region of interest (ROI) of the binary mask that is invariant to pose variation, a boundary trace of the binary mask is performed, assuming that the finger lies approximately at the center of the image. In the binary mask, we find the leftmost true pixel in the center row of the image.
We then traverse upward and downward, row by row, until we find a true pixel that is more than 10 pixels away from the previous true pixel. The final true pixel reached in each direction gives a leftmost extreme point of the ROI. We repeat the same procedure starting from the rightmost true pixel in the center row to find the boundary on the right side. Using these four points, a rectangular ROI is segmented around the finger. Only the pixels inside the rectangle are retained in the RGB image, while the rest of the image is blacked out.
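The segmentation steps above can be sketched in NumPy as follows. This is a minimal sketch under stated assumptions, not the authors' implementation: the naive RGB-to-CMYK formula, RGB input scaled to [0, 1], the 5 × 5 structuring element, and all helper names (`magenta_channel`, `otsu_threshold`, `binary_open`, `largest_component`, `trace_edge`, `segment_fingerphoto`) are hypothetical. The largest component is found with a breadth-first flood fill rather than the run-length encoding used in the text, and the ROI is assumed to be the bounding rectangle of the four traced points.

```python
from collections import deque

import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def magenta_channel(rgb):
    """M channel of a naive RGB -> CMYK conversion; RGB values in [0, 1] (assumption)."""
    rgb = np.asarray(rgb, dtype=float)
    k = 1.0 - rgb.max(axis=-1)
    denom = np.where(k < 1.0, 1.0 - k, 1.0)  # pure black pixels: avoid 0/0
    return (1.0 - rgb[..., 1] - k) / denom


def otsu_threshold(values, bins=256):
    """Otsu's method: the split of a [0, 1] histogram maximising between-class variance."""
    hist, edges = np.histogram(values, bins=bins, range=(0.0, 1.0))
    centres = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)  # pixels at or below each split
    w1 = w0[-1] - w0                     # pixels above each split
    s0 = np.cumsum(hist * centres)
    with np.errstate(divide="ignore", invalid="ignore"):
        between = w0 * w1 * (s0 / w0 - (s0[-1] - s0) / w1) ** 2
        between = np.nan_to_num(between)  # splits with an empty class score 0
    return edges[int(np.argmax(between)) + 1]


def binary_open(mask, size=5):
    """Opening (erosion then dilation) with a size x size square element."""
    pad = size // 2

    def windows(m):
        return sliding_window_view(np.pad(m, pad, constant_values=False), (size, size))

    return windows(windows(mask).all(axis=(-2, -1))).any(axis=(-2, -1))


def largest_component(mask):
    """Largest 4-connected foreground region, found by BFS flood fill."""
    h, w = mask.shape
    labels = np.zeros((h, w), dtype=np.int32)
    best, best_size, current = 0, 0, 0
    for r, c in zip(*np.nonzero(mask)):
        if labels[r, c]:
            continue
        current += 1
        labels[r, c] = current
        queue, size = deque([(r, c)]), 0
        while queue:
            y, x = queue.popleft()
            size += 1
            for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                if 0 <= ny < h and 0 <= nx < w and mask[ny, nx] and not labels[ny, nx]:
                    labels[ny, nx] = current
                    queue.append((ny, nx))
        if size > best_size:
            best, best_size = current, size
    return (labels == best) & (best > 0)


def trace_edge(mask, side, max_jump=10):
    """Follow the left or right finger edge up and down from the centre row."""
    def edge_col(row):
        cols = np.flatnonzero(mask[row])
        return None if cols.size == 0 else int(cols[0] if side == "left" else cols[-1])
    mid = mask.shape[0] // 2
    start = edge_col(mid)
    if start is None:
        raise ValueError("no foreground pixel in the centre row")
    ends = []
    for step in (-1, 1):  # upward, then downward
        row, prev = mid, start
        while 0 <= row + step < mask.shape[0]:
            col = edge_col(row + step)
            if col is None or abs(col - prev) > max_jump:
                break  # edge vanished or jumped by more than max_jump pixels
            row, prev = row + step, col
        ends.append((row, prev))
    return ends  # [(top_row, col), (bottom_row, col)]


def segment_fingerphoto(rgb, size=5):
    """Black out everything outside the rectangular finger ROI."""
    rgb = np.asarray(rgb)
    m = magenta_channel(rgb)
    mask = m >= otsu_threshold(m)
    for _ in range(2):  # opening applied twice, as in the text
        mask = binary_open(mask, size)
    mask = largest_component(mask)
    (lt, lb), (rt, rb) = trace_edge(mask, "left"), trace_edge(mask, "right")
    top, bottom = min(lt[0], rt[0]), max(lb[0], rb[0])
    left, right = min(lt[1], lb[1]), max(rt[1], rb[1])
    out = np.zeros_like(rgb)
    out[top:bottom + 1, left:right + 1] = rgb[top:bottom + 1, left:right + 1]
    return out, (top, bottom, left, right)
```

On a synthetic white image containing a single skin-colored rectangle, the returned box is exactly that rectangle; real fingerphotos would likely need the structuring-element size and jump tolerance tuned to the capture resolution.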

6.4.2 Fingerphoto Enhancement (Enh#1)

A robust enhancement algorithm is essential to handle challenges such as illumination and focus variation. The aim of this step is to improve the contrast between the ridges and valleys of the finger. The segmented RGB image obtained from the previous step is converted to grayscale, and a median filter is applied to reduce the effect of focus blur introduced during capture. Histogram equalization is then performed to normalize illumination variation and increase ridge–valley contrast. To make high frequency components such as ridges more prominent and reduce the impact of low frequency components, we sharpen the image by subtracting a Gaussian blurred copy (with ![]() ) from the original image. In the rest of this chapter, this enhancement approach is abbreviated as Enh#1; a sample output after segmentation and enhancement is shown in Fig. 6.6.

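A minimal NumPy sketch of the Enh#1 steps follows. The 3 × 3 median window and the Gaussian σ = 2 are placeholder values (the chapter's σ is not recoverable here), the ITU-R BT.601 grayscale weights are an assumption, and the helper names are hypothetical. The last step follows the text literally: subtracting the blurred image leaves the high-frequency residual, whereas classic unsharp masking would instead add a multiple of that residual back to the image.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def to_gray(rgb):
    """Grayscale via ITU-R BT.601 luma weights (RGB values in 0-255)."""
    return np.asarray(rgb, dtype=float) @ np.array([0.299, 0.587, 0.114])


def median_filter(img, size=3):
    """Median over each size x size window, with edge-replicated borders."""
    padded = np.pad(img, size // 2, mode="edge")
    return np.median(sliding_window_view(padded, (size, size)), axis=(-2, -1))


def equalize_histogram(img):
    """CDF-based histogram equalisation onto the 0-255 range."""
    levels = np.clip(np.rint(img), 0, 255).astype(np.uint8)
    cdf = np.cumsum(np.bincount(levels.ravel(), minlength=256))
    cdf_min = cdf[cdf > 0][0]
    if cdf[-1] == cdf_min:  # constant image: nothing to stretch
        return levels.astype(float)
    lut = np.clip((cdf - cdf_min) / (cdf[-1] - cdf_min) * 255.0, 0.0, 255.0)
    return lut[levels]


def gaussian_blur(img, sigma):
    """Separable Gaussian blur, kernel truncated at 3 sigma, reflected borders."""
    radius = max(1, int(np.ceil(3 * sigma)))
    x = np.arange(-radius, radius + 1)
    kernel = np.exp(-x ** 2 / (2 * sigma ** 2))
    kernel /= kernel.sum()
    padded = np.pad(img, radius, mode="reflect")
    rows = np.apply_along_axis(np.convolve, 1, padded, kernel, mode="valid")
    return np.apply_along_axis(np.convolve, 0, rows, kernel, mode="valid")


def enhance_enh1(rgb, median_size=3, sigma=2.0):
    """Enh#1 sketch: grayscale -> median -> equalise -> subtract Gaussian blur."""
    gray = median_filter(to_gray(rgb), median_size)
    equalized = equalize_histogram(gray)
    return equalized - gaussian_blur(equalized, sigma)  # high-frequency ridge detail
```

A uniform input produces an all-zero output, since equalization leaves it flat and the blur cannot remove any detail from it.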

6.4.3 LBP Based Enhancement (Enh#2)

Local Binary Pattern (LBP) is an effective texture descriptor that thresholds the neighboring pixels of each pixel against the value of that pixel [12]. LBP descriptors efficiently capture local spatial patterns and grayscale contrast in an image. As the segmented fingerphoto image in Fig. 6.6 shows, tracing the ridge lines requires exploiting the ridge–valley intensity contrast: the edge lines on the ridges have higher intensity than the neighboring valleys. LBP embeds this spatial structure into its descriptor, thereby tracing the ridge lines in a fingerphoto image. LBP has been widely used in many computer vision applications.1 Note, however, that LBP is commonly used as a feature descriptor, whereas we propose to use it as an image enhancement technique. Computing the LBP descriptor of an image is a four-step process, explained below.

1. For every pixel $(x, y)$ in an image $I$, choose $P$ neighboring pixels at a radius $R$.

2. Calculate the intensity difference between each of the $P$ neighboring pixels and the current pixel $(x, y)$.

3. Threshold the intensity differences such that negative differences are assigned 0 and the remaining (non-negative) differences are assigned 1, forming a $P$-bit vector.

4. Convert the $P$-bit vector to its decimal value and replace the intensity value at $(x, y)$ with this decimal value.

Thus, the LBP descriptor for every pixel is given as

$$\mathrm{LBP}_{P,R}(x, y) = \sum_{p=0}^{P-1} s(g_p - g_c)\, 2^{p}, \qquad s(z) = \begin{cases} 1, & z \ge 0 \\ 0, & z < 0 \end{cases}$$

where $g_c$ and $g_p$ denote the intensity of the current and the $p$th neighboring pixel, respectively, and $P$ is the number of neighboring pixels chosen at a radius $R$. Fig. 6.7 shows some sample LBP enhanced fingerphoto images.
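The four LBP steps above can be sketched in NumPy as below. Assumptions: neighbor offsets on the circle of radius $R$ are rounded to the nearest pixel instead of using the bilinear interpolation commonly applied to circular neighborhoods, image borders are edge-replicated, and `lbp_image` is a hypothetical name.

```python
import numpy as np


def lbp_image(gray, P=8, R=1):
    """Replace each pixel by its P-bit LBP code (neighbours at radius R)."""
    gray = np.asarray(gray, dtype=float)
    h, w = gray.shape
    pad = int(np.ceil(R))
    padded = np.pad(gray, pad, mode="edge")  # replicate borders
    centre = padded[pad:pad + h, pad:pad + w]
    codes = np.zeros((h, w), dtype=np.int64)
    for p in range(P):
        angle = 2 * np.pi * p / P
        dy = int(np.rint(-R * np.sin(angle)))  # nearest-pixel neighbour offset
        dx = int(np.rint(R * np.cos(angle)))
        neighbour = padded[pad + dy:pad + dy + h, pad + dx:pad + dx + w]
        # s(g_p - g_c): bit p is 1 when the neighbour is at least as bright as the centre
        codes |= (neighbour >= centre).astype(np.int64) << p
    return codes
```

For the default $P = 8$ the codes lie in 0–255, so the result can be viewed directly as an 8-bit enhanced image; an isolated bright pixel maps to 0 and an isolated dark pixel to 255.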

6.4.4 Scattering Network Based Feature Representation

Fingerphoto images captured using a smartphone camera are prone to geometric and resolution variations. Because of these challenges, minutiae based matching tends to perform poorly on fingerphotos. A feature representation is needed that is not only translation and rotation invariant but also keeps high frequency information intact, since we aim to preserve the ridge pattern of the fingerphoto. To address these challenges, we propose a novel feature representation technique using Scattering Networks (ScatNet) [13], which efficiently extract texture patterns from an image. ScatNet is a rotation and translation invariant feature representation built from a filter bank of wavelets [14]. To obtain a locally translation invariant representation, a low pass averaging filter $\phi_J$ is applied to the signal $x$ to obtain the zeroth order ScatNet features as follows:

$$S_0 x = x \ast \phi_J$$

Though these zeroth order features are translation invariant, they lack high frequency information. To extract the high frequency information, a filter bank $\psi_{j,\theta}$ is constructed from wavelets by varying the scale $j$ and the rotation parameter $\theta$. To obtain the first order ScatNet coefficients, the low pass filter $\phi_J$ is applied to the modulus of the high frequency components obtained with the wavelet filters:

$$S_1 x(j_1, \theta_1) = \left| x \ast \psi_{j_1,\theta_1} \right| \ast \phi_J$$

Higher order ScatNet coefficients can be extracted by recursively applying the wavelet filter banks. Higher orders preserve more high frequency components, but the dimension of the feature representation grows exponentially. As a trade-off between high frequency information and computation time on a smartphone, we stop at the second order, and the representation is the concatenation of all responses, i.e., $Sx = \{S_0 x, S_1 x, S_2 x\}$. Mathematically, with the wavelets $\psi_{j,\theta}$ and the low pass filter $\phi_J$, the second order coefficients are given as

$$S_2 x(j_1, \theta_1, j_2, \theta_2) = \Big| \left| x \ast \psi_{j_1,\theta_1} \right| \ast \psi_{j_2,\theta_2} \Big| \ast \phi_J$$
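The scattering cascade above can be illustrated with a small FFT-based NumPy sketch. It is neither the ScatNet toolbox [13] nor a faithful reimplementation: the Gabor wavelets (without the Morlet zero-mean correction), the parameters ξ = 3π/4 and σ₀ = 0.8, the choice of J = 2 scales and L = 4 orientations, and the helper names are illustrative assumptions. It computes $S_0$, $S_1$, and $S_2$ (keeping only paths with $j_2 > j_1$, a common convention), low-passes every map with $\phi_J$, and subsamples by $2^J$. The output is locally translation invariant; rotation invariance would need an additional step, such as pooling over θ, which is not shown.

```python
import numpy as np


def _freq_grid(shape):
    """Angular frequencies (radians/sample) laid out like np.fft.fft2 output."""
    wy = 2 * np.pi * np.fft.fftfreq(shape[0])
    wx = 2 * np.pi * np.fft.fftfreq(shape[1])
    return np.meshgrid(wy, wx, indexing="ij")


def lowpass_hat(shape, J, sigma0=0.8):
    """Fourier transform of a Gaussian averaging filter phi_J of width sigma0 * 2**J."""
    wy, wx = _freq_grid(shape)
    s = sigma0 * 2 ** J
    return np.exp(-(s ** 2) * (wy ** 2 + wx ** 2) / 2)


def gabor_hat(shape, j, theta, xi=3 * np.pi / 4, sigma0=0.8):
    """Fourier transform of a Gabor wavelet psi_{j,theta}: a Gaussian bump at
    frequency xi / 2**j in direction theta (no Morlet zero-mean correction)."""
    wy, wx = _freq_grid(shape)
    s = sigma0 * 2 ** j
    cy, cx = xi / 2 ** j * np.sin(theta), xi / 2 ** j * np.cos(theta)
    return np.exp(-(s ** 2) * ((wy - cy) ** 2 + (wx - cx) ** 2) / 2)


def scattering(x, J=2, L=4):
    """Stack [S0, S1..., S2...] of a 2-D scattering transform up to order 2.

    Every map is averaged by phi_J and subsampled by 2**J.
    """
    x = np.asarray(x, dtype=float)
    phi = lowpass_hat(x.shape, J)
    step = 2 ** J

    def average(u):
        return np.fft.ifft2(np.fft.fft2(u) * phi).real[::step, ::step]

    thetas = [np.pi * k / L for k in range(L)]
    x_hat = np.fft.fft2(x)
    first, second = [average(x)], []  # S0 x = x * phi_J
    for j1 in range(J):
        for t1 in thetas:
            u1 = np.abs(np.fft.ifft2(x_hat * gabor_hat(x.shape, j1, t1)))  # |x * psi|
            first.append(average(u1))                                      # S1
            u1_hat = np.fft.fft2(u1)
            for j2 in range(j1 + 1, J):  # only paths with j2 > j1
                for t2 in thetas:
                    u2 = np.abs(np.fft.ifft2(u1_hat * gabor_hat(x.shape, j2, t2)))
                    second.append(average(u2))                             # S2
    return np.stack(first + second)
```

With J = 2 and L = 4 this yields 1 + J·L + L²·J(J − 1)/2 = 25 maps, each of size (H/4) × (W/4) for an H × W input; flattening them gives a vector of the kind the PCA step then reduces.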

6.4.5 Matching Techniques

We perform verification, that is, we determine whether a presented finger matches the claimed identity by deciding whether a pair of fingerphoto images is a genuine or an impostor pair. To this end, we explore both distance based and learning based matching techniques. The ScatNet features are typically of very high dimension, so dimensionality reduction is necessary both to reduce matching and training time and to remove collinearity among the features. We use Principal Component Analysis (PCA) for this purpose, preserving ![]() of the eigenenergy to obtain a compact representation of the ScatNet features.

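Retaining a fixed fraction of the eigenenergy can be sketched with a singular value decomposition, as below. Because the chapter's retained fraction is not recoverable here, `energy` is a free parameter whose default is only a placeholder, and `fit_pca` and `project` are hypothetical names.

```python
import numpy as np


def fit_pca(X, energy=0.99):
    """Keep the fewest principal components whose eigenvalues sum to at least
    `energy` of the total (X: n_samples x n_features)."""
    X = np.asarray(X, dtype=float)
    mean = X.mean(axis=0)
    _, s, vt = np.linalg.svd(X - mean, full_matrices=False)
    eig = s ** 2                          # proportional to covariance eigenvalues
    cumulative = np.cumsum(eig) / eig.sum()
    k = int(np.searchsorted(cumulative, energy)) + 1
    return mean, vt[:k]


def project(X, mean, components):
    """Map feature rows onto the retained principal axes."""
    return (np.asarray(X, dtype=float) - mean) @ components.T
```

The same `mean` and `components` fitted on training features would be reused to project both probe and gallery features before matching.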

1. Distance based matching. Let $P$ and $G$ be the vectorized ScatNet representations of the probe and gallery images, respectively, each of dimension $d$. An L2-distance based matcher $M$ is given as follows:

$$M(P, G) = \begin{cases} 1 \ (\text{match}), & \lVert P - G \rVert_2 \le t \\ 0 \ (\text{non-match}), & \text{otherwise} \end{cases}$$

where $t$ is a threshold hyperparameter and $\lVert P - G \rVert_2 = \sqrt{\sum_{i=1}^{d} (P_i - G_i)^2}$.

2. Learning based matching. In this approach, the aim is to train a supervised binary classifier $C$ that verifies whether a pair of fingerphotos is a match or a non-match. The classifier can be viewed as a nonlinear function learnt over the L2-distance between the probe and gallery images:

$$M(P, G) = C\left(\lVert P - G \rVert_2\right)$$

In our proposed approach, a Random Decision Forest (RDF)2 is used as the nonlinear binary classifier [15,16]. In the presence of highly uncorrelated features, discriminative classifiers such as RDFs are known to perform competitively [17].
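The distance based matcher $M$ and the genuine/impostor pair labels used for verification can be sketched in NumPy as follows. The helper names are hypothetical, and `squared_difference_features` is an assumption about how pair features for a learned classifier might be formed: it keeps the per-dimension terms of the squared L2 distance rather than the single scalar distance, so that a classifier has more than one input. A random forest (for example scikit-learn's `RandomForestClassifier`) could then be fit on these features with the pair labels; that step is not shown.

```python
import numpy as np


def l2_distances(probes, gallery):
    """Pairwise L2 distances between probe rows and gallery rows."""
    diff = probes[:, None, :] - gallery[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def l2_match(probes, gallery, t):
    """Distance-based matcher M: 1 (match) where ||P - G||_2 <= t, else 0."""
    return (l2_distances(probes, gallery) <= t).astype(int)


def pair_labels(probe_ids, gallery_ids):
    """1 for genuine pairs (same finger class), 0 for impostor pairs."""
    return (np.asarray(probe_ids)[:, None] == np.asarray(gallery_ids)[None, :]).astype(int)


def squared_difference_features(probes, gallery):
    """One row per (probe, gallery) pair: per-dimension squared differences,
    whose sum is ||P - G||_2 ** 2 (assumed input for a learned classifier)."""
    diff = probes[:, None, :] - gallery[None, :, :]
    return (diff ** 2).reshape(-1, probes.shape[1])
```

Rows of `squared_difference_features` are ordered probe-major, matching `pair_labels(...).ravel()`.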

6.5 Experimental Results

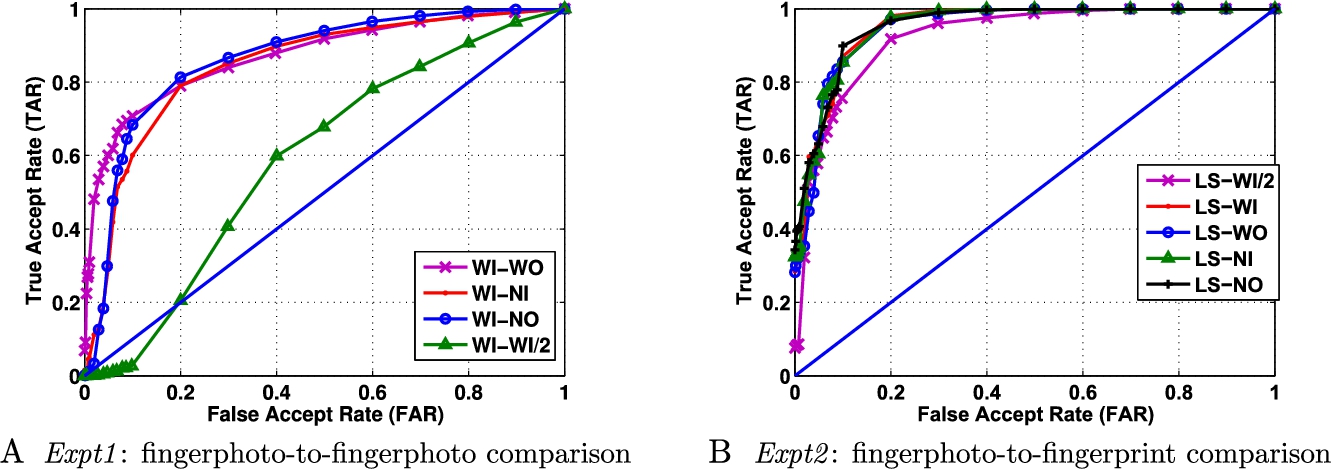

The primary aim of this section is to study the impact of each step and optimize it. We perform two kinds of experiments: (i) Expt1, fingerphoto-to-fingerphoto matching, where both the gallery and the probe are fingerphoto images, and (ii) Expt2, fingerphoto-to-fingerprint matching, where the gallery contains live-scan (LS) fingerprint images and the probes are fingerphotos. For Expt1, White Indoor (WI) images are treated as the gallery, and the other three subsets, White Outdoor (WO), Natural Indoor (NI), and Natural Outdoor (NO), are treated as probes. Assuming that WI images are the most stable and controlled fingerphoto captures, the WO, NI, and NO probe subsets capture the different illumination and background variations possible during matching. Another experiment, with half of the WI subset (WI/2) as the gallery and the other half as the probe, is performed to understand how controlled images compare with each other. For Expt2, live-scan (LS) images are treated as the gallery, and the WO, NI, NO, and WI fingerphoto subsets are taken as probes. With sensor captured live-scan images as the gallery, these subsets show the impact of various capture challenges on matching fingerphoto images to live-scan fingerprints. Also in Expt2, one more experiment matches half of WI as the probe (WI/2, the same probe set used in the last experiment of Expt1) against the entire set of LS images. These two experiments in Expt1 and Expt2, sharing the WI/2 probe set, provide a direct comparison between fingerphoto-to-fingerphoto and fingerphoto-to-fingerprint matching. For all learning based matching experiments, a 50–![]() train-test split protocol is followed to train the binary classifiers.


6.5.1 Performance of the Proposed Matching Pipeline

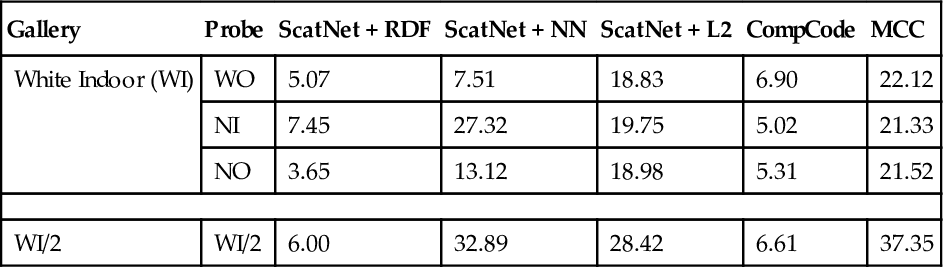

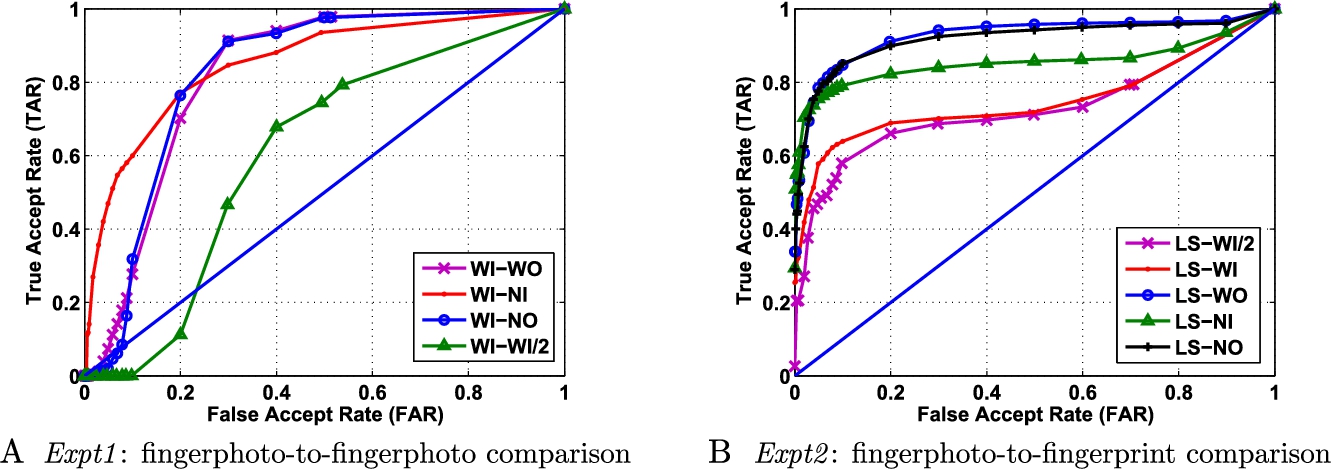

The proposed pipeline consists of the adaptive segmentation algorithm followed by the image based enhancement technique (Enh#1). Second order (level-2) scattering network features are extracted, and PCA is applied for dimensionality reduction to represent the fingerphoto image. A random decision forest (RDF) based matching algorithm is used to match the gallery and probe images. The Equal Error Rate (EER) is used as the evaluation metric, and the results of the proposed matching pipeline are tabulated in Table 6.3 and Table 6.4. The corresponding Receiver Operating Characteristic (ROC) curves are plotted in Fig. 6.8.
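The EER is the operating point at which the false accept rate (FAR) equals the false reject rate (FRR). A minimal sketch for computing it from genuine and impostor similarity scores is shown below. It assumes higher scores mean a more likely match (distances should be negated first), picks the threshold among the observed scores where FAR and FRR are closest, and reports their mean; other tools may interpolate along the ROC curve instead. The function name is hypothetical.

```python
import numpy as np


def equal_error_rate(genuine, impostor):
    """EER from similarity scores: higher score = more likely the same finger."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    thresholds = np.unique(np.concatenate([genuine, impostor]))
    # A pair is accepted when its score is >= the threshold.
    far = (impostor[None, :] >= thresholds[:, None]).mean(axis=1)  # impostors accepted
    frr = (genuine[None, :] < thresholds[:, None]).mean(axis=1)    # genuines rejected
    i = int(np.argmin(np.abs(far - frr)))
    return (far[i] + frr[i]) / 2
```

Multiplying the result by 100 gives a percentage in the same units as Tables 6.3 and 6.4.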

Table 6.3

Equal Error Rate (EER) for Expt1 using Enh#1 enhancement and matching using RDF based learning approach with ScatNet features

| Gallery | Probe | EER (%), ScatNet + RDF |
|---|---|---|
| White Indoor (WI) | WO | 5.07 |
| White Indoor (WI) | NI | 7.45 |
| White Indoor (WI) | NO | 3.65 |
| WI/2 | WI/2 | 6.00 |

Table 6.4

Equal Error Rate (EER) for Expt2 using Enh#1 enhancement and matching using RDF based learning approach with ScatNet features

| Gallery | Probe | EER (%), ScatNet + RDF |
|---|---|---|
| Live Scan (LS) | WI/2 | 5.53 |
| Live Scan (LS) | WI | 7.07 |
| Live Scan (LS) | WO | 7.12 |
| Live Scan (LS) | NI | 10.43 |
| Live Scan (LS) | NO | 10.38 |

The major observations made from the results are as follows:

• Same-domain matching in Expt1 generally performs better, with EERs of 3.65–7.45%. When fingerphoto images are matched to fingerprint images in Expt2, the error rates are somewhat higher, in the range of 5.53–10.43%.

• In Expt1, with WI as the gallery, the probe fingerphotos captured outdoors with a natural background (NO) give the best performance. Though counter-intuitive, this result may be explained by the balanced, diffused lighting in the outdoor environment, which makes enhancement easier. Also, outdoors the background objects are typically farther from the finger, making it easier for the adaptive segmentation algorithm to separate the foreground.

• The worst performing probe subset in Expt1 is NI. Indoors, the focused lighting from ceiling lights creates a shadowing effect on the fingerphoto images. Certain parts of the finger are therefore either blacked out or over-saturated, which loses captured information and degrades performance.

• In Expt2, as the gallery fingerprint images have no background or illumination challenges, the results in Table 6.4 are more intuitive: the WI probe subsets (WI/2 and WI) give the best matching performance, while the natural-background subsets NI and NO give the worst (10.43% and 10.38% EER, respectively).

6.5.2 Comparison of Matching Algorithms

To study the effectiveness of the proposed ScatNet + RDF matching algorithm, we compare it with four matching techniques: (i) ScatNet + NN, a learning based matching algorithm that uses ScatNet features with a neural network as the nonlinear classifier, (ii) ScatNet + L2, which matches ScatNet features using the L2 distance and a linear threshold, (iii) CompCode [18], one of the most successful methods in the literature for matching touchless fingerprint images, and (iv) Minutiae Cylinder Code (MCC) based matching [19], a robust fixed-length descriptor built on traditional minutia features. The CompCode and MCC based matching methods are explained in detail below.

Minutiae Based Feature Representation. Minutiae Cylinder Code (MCC) is one of the most successful minutiae based matching algorithms in the literature [20]. MCC builds its feature representation from minutiae and is computationally efficient to match. A fixed-radius neighborhood is defined around each minutia, within which a local structure called a cylinder is created. The cylinders are built from the distances and angles between each extracted minutia and its neighbors, which are invariant to translation and rotation. Let $T = \{m_1, m_2, \ldots, m_n\}$ be the set of extracted minutiae, where each minutia is a three-tuple $m = (x_m, y_m, \theta_m)$: the $x$ coordinate, the $y$ coordinate, and the ridge orientation $\theta_m$ at that point. With each minutia as the center of its base, a cylinder of radius $R$ and height $2\pi$ is built for every minutia. The cylinder is discretized by enclosing it inside a cuboid that is divided into cells, each identified by the coordinates $(i, j, k)$, where $k$ indexes the vertical axis. The center of each cell is projected onto the cylinder's base at location $p^m_{i,j}$. For each projected cell, a value $C_m(i, j, k)$ is calculated, which takes into account the contribution of each minutia inside the neighborhood $N_{p^m_{i,j}}$. The neighborhood $N_{p^m_{i,j}}$ has a radius of $3\sigma_S$, where $\sigma_S$ is the standard deviation of the Gaussian spatial contribution. Cappelli et al. [19] showed how $C_m(i, j, k)$ is calculated by summing the contributions of each minutia in $N_{p^m_{i,j}}$. This value signifies the likelihood of finding a minutia near the projected center $p^m_{i,j}$ for the cell identified by $(i, j, k)$. The minutiae are extracted using VeriFinger [21], a commercial ten-print matcher.
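To make the cylinder construction concrete, the sketch below computes a simplified MCC cylinder for one minutia. It follows the structure described above (cell centers projected onto the base, neighbors within $3\sigma_S$, contributions summed and squashed by a sigmoid), but it is not Cappelli et al.'s reference implementation: the directional term is a plain Gaussian on the angle difference rather than the integral over the angular cell used in MCC, cell validity only checks the cylinder radius, and the parameter values are illustrative assumptions.

```python
import numpy as np

def angle_diff(a, b):
    """Angle difference a - b wrapped into [-pi, pi)."""
    return (a - b + np.pi) % (2 * np.pi) - np.pi

def mcc_cylinder(minutiae, idx, R=70.0, n_s=16, n_d=6,
                 sigma_s=28 / 3, sigma_d=2 * np.pi / 9, mu=0.01, tau=400.0):
    """Simplified MCC cylinder for minutia `idx` (illustrative parameters).

    minutiae: (n, 3) array of (x, y, theta). Returns (values, valid) where
    values has shape (n_s, n_s, n_d) and valid marks cells inside radius R.
    """
    minutiae = np.asarray(minutiae, dtype=float)
    x_m, y_m, th_m = minutiae[idx]
    delta_s = 2 * R / n_s          # cell side on the base
    delta_d = 2 * np.pi / n_d      # cell height along the angular axis
    # Rotate the cell grid so that it is aligned with the minutia direction.
    rot = np.array([[np.cos(th_m), np.sin(th_m)],
                    [-np.sin(th_m), np.cos(th_m)]])
    others = np.delete(minutiae, idx, axis=0)
    d_theta = angle_diff(th_m, others[:, 2])   # neighbour direction relative to m
    values = np.zeros((n_s, n_s, n_d))
    valid = np.zeros((n_s, n_s), dtype=bool)
    for i in range(n_s):
        for j in range(n_s):
            offset = delta_s * np.array([i - (n_s - 1) / 2, j - (n_s - 1) / 2])
            p = np.array([x_m, y_m]) + rot @ offset   # projected cell center
            valid[i, j] = np.hypot(*(p - [x_m, y_m])) <= R
            if not valid[i, j]:
                continue
            d_s = np.hypot(others[:, 0] - p[0], others[:, 1] - p[1])
            near = d_s <= 3 * sigma_s                 # neighbourhood of radius 3*sigma_s
            c_s = np.exp(-d_s[near] ** 2 / (2 * sigma_s ** 2)) / (sigma_s * np.sqrt(2 * np.pi))
            for k in range(n_d):
                phi_k = -np.pi + (k + 0.5) * delta_d  # angle represented by cell k
                c_d = np.exp(-angle_diff(phi_k, d_theta[near]) ** 2 / (2 * sigma_d ** 2))
                total = np.sum(c_s * c_d)
                values[i, j, k] = 1.0 / (1.0 + np.exp(-tau * (total - mu)))  # sigmoid
    return values, valid
```

A cylinder for a minutia with no neighbors contains only the sigmoid's floor value in every valid cell, while cells near a neighbor with a similar direction approach 1; matching two fingerprints then compares such cylinders pairwise.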

CompCode Based Feature Representation. Competitive coding (CompCode) is one of the successful non-minutiae based feature representation techniques in the literature. CompCode extracts features using Gabor filters at $J$ different orientations, spaced at intervals of $\pi/J$ over the full range of orientations, i.e., $\theta_j = j\pi/J$ for $j = 0, 1, \ldots, J-1$. A Gabor filter is defined as follows:

$$G(x, y, \theta, f, \sigma) = \exp\left(-\frac{x'^2 + y'^2}{2\sigma^2}\right)\exp\left(i\,2\pi f x'\right), \qquad x' = x\cos\theta + y\sin\theta, \quad y' = -x\sin\theta + y\cos\theta$$

where the frequency of the sinusoidal factor is represented by $f$ and $\sigma$ denotes the standard deviation of the Gaussian envelope. Let the real part of the above Gabor filter be represented as $G_R(x, y, \theta_j)$ and let $I(x, y)$ represent the preprocessed image. The CompCode features $C(x, y)$ are extracted by convolving $I$ with $G_R$ at each orientation and keeping the index of the competing (minimum) response:

$$C(x, y) = \arg\min_{j} \left\{ I(x, y) * G_R(x, y, \theta_j) \right\}$$

The CompCode features obtained above are used for matching and are typically compared using the normalized Hamming distance.
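The competitive coding step can be sketched as follows: filter the image with the real part of a Gabor filter (Gaussian envelope with standard deviation σ times a cosine carrier of frequency f) at J orientations spaced π/J apart, keep the index of the minimum response at each pixel, and compare two code maps by the fraction of pixels whose codes differ. This is a minimal NumPy-only sketch under stated assumptions: the kernel size, J, f, and σ values are illustrative, the kernel is made zero-mean (a common choice, not confirmed by the text), and the Hamming distance here simply counts differing orientation indices rather than using any particular bit encoding.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def gabor_real(size, theta, f, sigma):
    """Real part of a Gabor filter: Gaussian envelope times cosine carrier."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    x_r = x * np.cos(theta) + y * np.sin(theta)
    y_r = -x * np.sin(theta) + y * np.cos(theta)
    g = np.exp(-(x_r ** 2 + y_r ** 2) / (2 * sigma ** 2)) * np.cos(2 * np.pi * f * x_r)
    return g - g.mean()  # zero DC component so flat regions give no response

def filter_same(img, kernel):
    """'Same'-size filtering with edge padding. The real Gabor kernel is
    point-symmetric, so correlation here equals convolution."""
    k = kernel.shape[0] // 2
    windows = sliding_window_view(np.pad(img, k, mode="edge"), kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)

def compcode(img, J=6, size=17, f=0.1, sigma=4.0):
    """Per-pixel index of the orientation with the minimum Gabor response."""
    thetas = np.arange(J) * np.pi / J
    responses = np.stack([filter_same(img, gabor_real(size, t, f, sigma)) for t in thetas])
    return np.argmin(responses, axis=0)

def normalized_hamming(code_a, code_b):
    """Fraction of pixels whose orientation codes differ (0 means identical)."""
    return float(np.mean(code_a != code_b))
```

A lower normalized Hamming distance indicates a more likely genuine pair; a practical matcher would also search over small translations and mask out background pixels.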

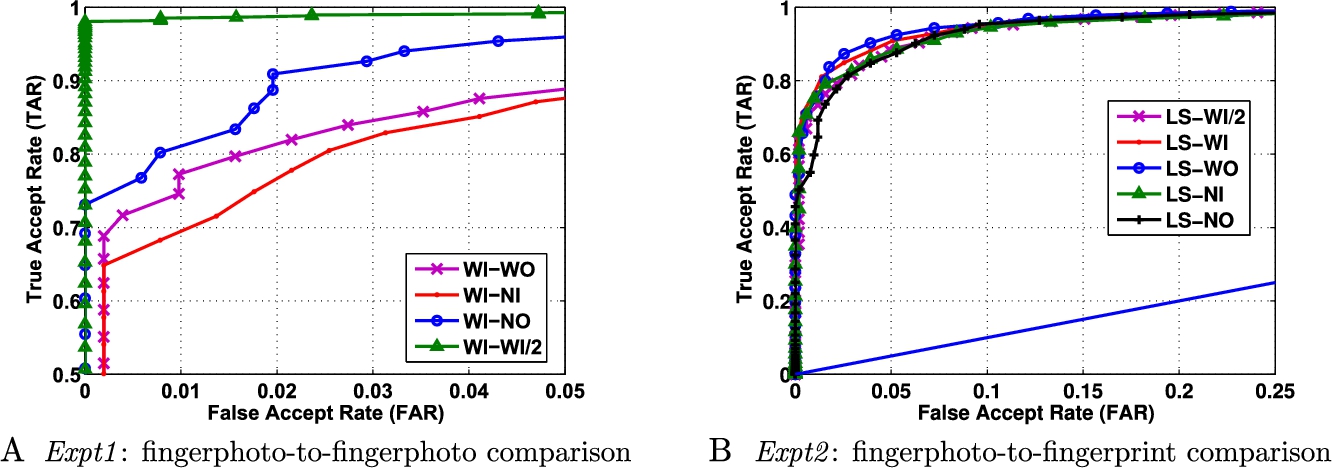

Tables 6.5 and 6.6 report the error rates of the various matching algorithms for Expt1 and Expt2, respectively, and the corresponding ROC curves are plotted in Figs. 6.9, 6.10, 6.11, and 6.12. ScatNet + RDF provides the best verification performance in almost all scenarios; the exception is the WI gallery with NI probes in Expt1, where CompCode achieves a lower EER (5.02% vs. 7.45%). RDF also performs much better than the neural network, a highly competitive classifier in the literature, when both use ScatNet features. In Expt1, when matching fingerphotos with fingerphotos, CompCode performs comparably to the proposed solution, as both rely on texture features. However, when matching fingerphotos with fingerprints (Expt2), the proposed solution is considerably more robust than CompCode (e.g., 5.53% vs. 21.07% EER for WI/2 probes), highlighting the generalization capability of our approach. Further, in both Expt1 and Expt2, the minutiae based approach performs poorly, suggesting that extracting minutiae from fingerphoto images is very challenging and yields many spurious minutiae.
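All results in the following tables are reported as equal error rates, the operating point where the false accept rate (FAR) equals the false reject rate (FRR). A minimal sketch of how an EER can be estimated from genuine and impostor scores is shown below; it assumes distance-like scores (lower means more similar), sweeps the observed scores as thresholds, and averages FAR and FRR where they are closest. This is a generic estimate, not necessarily the exact procedure used to produce the tables; for similarity scores such as a classifier's match probability, the scores would be negated first.

```python
import numpy as np

def equal_error_rate(genuine, impostor):
    """Estimate the EER (in %) for distance scores by a threshold sweep."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    best_gap, eer = np.inf, 0.0
    for t in np.unique(np.concatenate([genuine, impostor])):
        far = np.mean(impostor <= t)  # impostor pairs wrongly accepted
        frr = np.mean(genuine > t)    # genuine pairs wrongly rejected
        if abs(far - frr) < best_gap:
            best_gap, eer = abs(far - frr), (far + frr) / 2
    return 100.0 * eer
```

Perfectly separated score sets give an EER of 0%, and heavily overlapping ones approach 50%.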

Table 6.5

Equal Error Rate (EER, %) for Expt1 using Enh#1 enhancement and matching using the proposed RDF based method as well as other techniques

| Gallery | Probe | ScatNet + RDF | ScatNet + NN | ScatNet + L2 | CompCode | MCC |
|---|---|---|---|---|---|---|
| White Indoor (WI) | WO | 5.07 | 7.51 | 18.83 | 6.90 | 22.12 |
| WI | NI | 7.45 | 27.32 | 19.75 | 5.02 | 21.33 |
| WI | NO | 3.65 | 13.12 | 18.98 | 5.31 | 21.52 |
| WI/2 | WI/2 | 6.00 | 32.89 | 28.42 | 6.61 | 37.35 |

Table 6.6

Equal Error Rate (EER, %) for Expt2 using Enh#1 enhancement and matching using the proposed RDF based method as well as other techniques

| Gallery | Probe | ScatNet + RDF | ScatNet + NN | ScatNet + L2 | CompCode | MCC |
|---|---|---|---|---|---|---|
| Live Scan (LS) | WI/2 | 5.53 | 20.54 | 49.51 | 21.07 | 31.01 |
| LS | WI | 7.07 | 15.60 | 19.38 | 14.58 | 29.92 |
| LS | WO | 7.12 | 23.34 | 18.95 | 14.74 | 12.92 |
| LS | NI | 10.43 | 17.02 | 18.59 | 10.60 | 18.05 |
| LS | NO | 10.38 | 17.42 | 19.18 | 11.38 | 12.76 |

6.5.3 Comparison of Distance Metrics

In addition to the L2 distance metric used previously, several other distance metrics are evaluated for matching fingerphoto images using ScatNet. That is, the ScatNet representation of a probe image is compared with the ScatNet representation of each gallery image using each distance metric. Table 6.7 summarizes the distance metrics used for comparison. To find the best parameter for the Minkowski distance, ROC curves for varying values of $p$ are plotted in Fig. 6.13B. Accuracy drops as $p$ is increased, and $p = 2$ (Euclidean distance) gives the best performance. Fig. 6.13A shows the ROC curves of the various distance metrics, from which it can be concluded that, when learning based algorithms cannot be used, L2 distance based matching of ScatNet coefficients yields the best results for fingerphotos.

Table 6.7

Various distance metrics used in our experiments for matching fingerphotos

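| Distance metric | Equation |
|---|---|
| Chebyshev | $d(u, v) = \max_i \lvert u_i - v_i \rvert$ |
| Correlation | $d(u, v) = 1 - \dfrac{(u - \bar{u}) \cdot (v - \bar{v})}{\lVert u - \bar{u} \rVert_2 \, \lVert v - \bar{v} \rVert_2}$ |
| Cosine | $d(u, v) = 1 - \dfrac{u \cdot v}{\lVert u \rVert_2 \, \lVert v \rVert_2}$ |
| Minkowski | $d(u, v) = \left( \sum_i \lvert u_i - v_i \rvert^p \right)^{1/p}$ |
| L2 distance | $d(u, v) = \sqrt{\sum_i (u_i - v_i)^2}$ |
| Spearman's coefficient | $d(u, v) = 1 - \dfrac{(r_u - \bar{r}_u) \cdot (r_v - \bar{r}_v)}{\lVert r_u - \bar{r}_u \rVert_2 \, \lVert r_v - \bar{r}_v \rVert_2}$ |

Here $u$ and $v$ are the probe and gallery ScatNet feature vectors, $\bar{u}$ denotes the mean of the entries of $u$, and $r_u$, $r_v$ are the rank vectors of $u$ and $v$.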

6.5.4 Effect of Enhancement

As explained in Section 6.4.3, an LBP based enhancement technique (referred to as Enh#2) can also be used to improve the ridge–valley contrast in camera-captured fingerphoto images. In this pipeline, each image is preprocessed with adaptive background segmentation followed by LBP based enhancement. The enhanced images are then passed to the various feature extraction and matching algorithms, and the results are compared with those obtained using Enh#1 enhancement. Tables 6.8 and 6.9 report the EERs of the matching algorithms with LBP based enhancement, and the corresponding ROC curves are plotted in Figs. 6.14, 6.15, 6.16, 6.17, and 6.18. The results of Enh#2 + ScatNet + RDF are comparable to those of Enh#1 + ScatNet + RDF. When matching images from the same subset, such as WI/2 with WI/2, LBP based enhancement provides very high verification performance (1.47% EER). The most promising observation for LBP based enhancement comes from Expt2, where all probe subsets yield almost the same performance with the proposed matching pipeline (EERs between 6.44% and 7.61%). The ROC curves in Fig. 6.14 suggest the same: when the gallery contains fingerprint images, LBP based enhancement can neutralize the effect of varying illumination and background on the probe fingerphoto images. Thus, LBP based enhancement shows very promising results (i) when matching fingerphoto images from the same subset, and (ii) when matching fingerphoto images to fingerprint images using the proposed ScatNet + RDF matching pipeline.
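For readers unfamiliar with LBP, the sketch below shows only the basic 3×3 local binary pattern operator on which LBP based enhancement builds: each interior pixel receives an 8-bit code whose bits record whether each neighbor is at least as bright as the center. How Enh#2 turns these codes into an enhanced fingerphoto is described in Section 6.4.3 and is not reproduced here; the neighbor ordering below is an arbitrary but fixed convention.

```python
import numpy as np

def lbp_3x3(img) -> np.ndarray:
    """Basic 8-neighbour LBP code (0..255) for every interior pixel."""
    img = np.asarray(img, dtype=float)
    h, w = img.shape
    center = img[1:h - 1, 1:w - 1]
    # Neighbours visited clockwise from the top-left; each contributes one bit.
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1),
               (1, 1), (1, 0), (1, -1), (0, -1)]
    code = np.zeros(center.shape, dtype=np.int64)
    for bit, (dy, dx) in enumerate(offsets):
        neighbour = img[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        code += (neighbour >= center).astype(np.int64) << bit
    return code
```

Because each code depends only on the ordering of intensities in a neighborhood, it is unchanged by monotonic illumination changes, which is consistent with the observation that LBP based enhancement reduces the effect of illumination differences between probe subsets.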

Table 6.8

Equal Error Rate (EER, %) for Expt1 using LBP based enhancement (Enh#2) and matching using the proposed RDF based method along with other matching techniques

| Gallery | Probe | ScatNet + RDF | ScatNet + NN | ScatNet + L2 | CompCode | MCC |
|---|---|---|---|---|---|---|
| White Indoor (WI) | WO | 7.78 | 7.52 | 20.49 | 7.02 | 20.34 |
| WI | NI | 8.36 | 17.17 | 20.47 | 5.27 | 25.06 |
| WI | NO | 4.45 | 9.63 | 19.31 | 4.88 | 22.77 |
| WI/2 | WI/2 | 1.47 | 33.20 | 40.05 | 7.63 | 55.75 |

Table 6.9

Equal Error Rate (EER, %) for Expt2 using LBP based enhancement (Enh#2) and matching using the proposed RDF based method along with other matching techniques

| Gallery | Probe | ScatNet + RDF | ScatNet + NN | ScatNet + L2 | CompCode | MCC |
|---|---|---|---|---|---|---|
| Live Scan (LS) | WI/2 | 7.61 | 11.54 | 14.11 | 19.22 | 49.65 |
| LS | WI | 7.20 | 19.60 | 11.47 | 13.51 | 40.49 |
| LS | WO | 6.44 | 17.70 | 12.23 | 13.69 | 13.80 |
| LS | NI | 7.28 | 22.54 | 12.28 | 11.35 | 31.07 |
| LS | NO | 7.45 | 20.56 | 10.06 | 11.31 | 21.04 |

6.6 Conclusion

The research work presented in this chapter addresses the major challenges associated with fingerphoto verification and proposes a pipeline to perform verification efficiently. The pipeline consists of preprocessing (segmentation and enhancement), a ScatNet based translation and rotation invariant feature representation, and matching using an RDF classifier. To study each challenge individually, a database of 64 subjects with a total of 128 classes was collected. The database consists of 3 sets with more than 5100 images covering background and illumination variations, along with the corresponding live-scan impressions. We develop a segmentation algorithm for preprocessing fingerphotos and enhance the segmented images using two different methods. For feature representation, a novel ScatNet based translation and rotation invariant technique is proposed. We observe that the ScatNet representation of the LBP enhanced image, matched using an RDF classifier trained to classify matched and mismatched image pairs, gives the best matching performance. This algorithm performs better than the state-of-the-art minutiae based technique, Minutiae Cylinder Code (MCC), and than CompCode in most of the evaluated scenarios. The results show a considerable improvement over the existing algorithms for each of the challenges addressed in this work.

6.7 Future Work

Fingerphotos as a biometric modality may find many applications for user authentication in the near future. One direction for future work is a smartphone unlocking application based on user fingerphotos captured with the primary rear camera. Since smartphone cameras vary in resolution, a cross-resolution matching algorithm needs to be developed. Also, unlike live-scan impressions, which are always captured from the same frontal view, a probe fingerphoto may be captured from a different view. Addressing this challenge requires considering all degrees of freedom of the finger, namely, pitch, roll, and yaw.