Face Recognition Using an Outdoor Camera Network

Ching-Hui Chen; Rama Chellappa Department of Electrical and Computer Engineering, University of Maryland, College Park, MD, United States

Abstract

Face recognition in outdoor environment is a challenging task due to illumination changes, pose variations, and occlusions. In this chapter, we discuss several techniques for continuous tracking of faces acquired by an outdoor camera network as well as a face matching algorithm. Active camera networks are capable of reconfiguring the camera parameters to collaboratively capture the close-up views of face images. Robust face recognition methods can utilize compact representations extracted from multi-view videos. In the meantime, the constraints of consistent tracking of faces and the limitation of network resources should be satisfied. In this book chapter, we discuss several techniques for continuous tracking of faces acquired by a camera network as well as a face matching algorithm. We conclude this chapter with the discussion on the remaining challenges and emerging frameworks for face recognition in outdoor camera networks.

Keywords

Camera network; Outdoor; Face recognition; Person tracking; Active vision; Pan-tilt-zoom (PTZ) camera

2.1 Introduction

Outdoor camera networks have several applications in surveillance and scene understanding. Several prior works have investigated multiple person tracking [28,43,54], analysis of group behaviors [15,16], anomaly detection [49], person re-identification [17], and face recognition [8,18,19,29] in camera networks. Face recognition in outdoor camera networks is particularly of interest in surveillance system for identifying persons of interest. Besides, the identities of subjects in the monitored area can be useful information for high-level understanding and description of scenes [60]. As persons in the monitored area are non-cooperative, the face of a person is only visible to a subset of cameras. Hence, information collected from each camera should be jointly utilized to determine the identity of the subject. Unlike person re-identification, face recognition usually requires high-resolution images for extracting the detailed features of the face. As human faces possess a semi-rigid structure, this enables the face recognition method to develop 3D face models and multi-view descriptors for robust face representation.

Camera networks can be categorized into static camera networks and active camera networks. In static camera networks, cameras are placed around the monitored area with preset field of views (FOVs). The appearance of a face depends on the relative viewpoints observed from the camera sensors and the potential occlusion in the scene, which has direct impact on the performance of recognition algorithms. Hence, prior work in [64] has proposed a method for optimal placement of static cameras in the scene based on the visibility of objects. Active vision techniques have shown improvements for the task of low-level image understanding than conventional passive vision techniques [2] by allocating the resources based on current observations. Active camera networks usually comprise of a mixture of static cameras and pan-tilt-zoom (PTZ) cameras. During operation, PTZ cameras are continuously reconfigured such that the coverage, resolution (target coverage), informative view, and the risk of missing the target are properly managed to maximize the utility of the application [18,19].

A recent research survey on active camera network is provided in [47], and the authors propose a high-level framework for dynamic reconfiguration of camera networks. This framework consists of local cameras, fusion unit, and a reconfiguration unit. The local cameras capture information in the environment and submit all the information to the fusion unit. The fusion unit abstracts the manipulation of information from local cameras in a centralized or distributed processing framework and outputs the fused information. The reconfiguration unit optimizes the reconfiguration parameters based on the fused information, resource constraints, and objectives. In centralized processing frameworks, the information from each camera is conveyed to a central node for predicting the states of the observations and reconfiguring the local cameras. On the other hand, the distributed processing of the camera networks becomes desirable when the bandwidth and power resources are limited. In this scenario, each camera node receives information from its neighboring nodes and performs the tasks of prediction and reconfiguration locally.

Face association across video frames is an important component in any face recognition algorithm that processes videos. When there are multiple faces appearing in a camera view, robust face-to-face association methods should track the multiple faces across the frames and avoid the potential of identity switching. Also, face images observed from multiple views should be properly associated for effective face recognition. When the cameras are calibrated, the correspondence of face images observed in multiple views can be established by geometric localization methods, e.g., triangulation. Nevertheless, geometric localization methods demand accurate calibration and synchronization among the cameras, and they usually require the target to be observed by at least two calibrated cameras. Hence, these methods are not suitable for associating the face images captured by a single PTZ camera operating at various zoom settings. Alternatively, the association between face images observed in multiple views can be established by utilizing the appearance of upper body [8,22,23]. This method is effective as the human body is more perceivable than the face. Besides, the visibility of human body is not restricted to certain view angle as the human face does. Based on this fact, a face-to-person technique has been developed in [8] to associate the face in the zoomed-in mode with the person in the zoomed-out mode. In order to effectively utilize all the captured face images for robust recognition, face-to-face and face-to-person associations become the fundamental modules to ensure that the face images captured from different cameras and various FOVs are correctly associated.

Face images captured by cameras in outdoor environments are often not as constrained as mug shots since persons in the scene are typically non-cooperative. Furthermore, the face images captured by cameras deployed in outdoor environments can be affected by illumination changes, pose variations, dynamic backgrounds, and occlusions. Moreover, the sudden changes in PTZ settings in active camera networks can introduce motion blur. Although constructing a 3D face model from face images enables synthesis of different views for pose-invariant recognition, it typically relies on accurate camera calibration, synchronization, and high-resolution images. Hence, we address several issues that come up while designing a face recognition algorithm for outdoor camera systems. The objective is to extract diverse and compact face representation from multi-view videos for robust recognition. Also, context information, such as gaze, activity, clothing appearance, and unique presence, can provide additional cues for improving the recognition performance.

In this chapter, we first review the taxonomy of camera networks in Section 2.2. Techniques for face association are discussed in Section 2.3. Several issues for face recognition using images and videos captured in outdoor environments are discussed in Section 2.4. In Section 2.5, we investigate camera network systems related to face recognition. The remaining challenges in outdoor camera networks are presented in Section 2.6. We conclude this chapter in Section 2.7.

2.2 Taxonomy of Camera Networks

Several designs of camera networks have been developed to facilitate multiple cameras for various application scenarios. Camera networks can be categorized into static camera networks and active camera networks. Characteristics of camera networks, such as the centralized/distributed processing framework and overlapping/non-overlapping camera network, will be discussed in this section.

2.2.1 Static Camera Networks

Static camera networks typically consist of multiple cameras mounted in fixed locations, and the preset FOVs of the cameras are not reconfigurable during operation. Static camera networks have been used in multiple person tracking [28,43,54] and person re-identification [17]. In order to enhance the coverage area, an omnidirectional camera has been utilized along with the regular perspective camera [12]. There are very few works utilizing the static camera networks for face recognition in outdoor environments since a static camera lacks the zooming capability to capture the close-up view of faces. Some of the designs preset the static camera to the known walking path of pedestrians for capturing the facial details [40]. In practice, static camera networks for face recognition require densely distributed cameras to opportunistically capture the face images in a wide area. Prior work reported in [64] has proposed a strategy for optimal placement of cameras to ensure that a face of interest is visible to at least two cameras. The objective is to maximize the visibility function among all the camera setup parameters (locations and FOVs) in consideration for the potential occlusions in the scene. As the static camera often lacks the zooming capability to capture the close-up view, face images captured from a remote camera may not have sufficient resolution and good quality. Hence, remote face recognition [46] becomes one of the important issues in static camera networks.

2.2.2 Active Camera Networks

In active camera networks, cameras are reconfigurable during operation to maximize the utility of a certain application. Most of the active camera networks utilize a hybrid of static cameras and PTZ cameras, and the utility function can be formulated as the coverage for the face of interest or the appearance quality of faces [19]. A common setup in active camera networks is the master and slave camera. Static cameras observe the wide area for performing the task of detection and localization. The PTZ cameras possess the flexibility to capture the close-up views of faces. The master and slave camera networks usually adopt a centralized processing framework to reconfigure the slave cameras based on observations from the master camera. Active distributed PTZ camera networks have been proposed to collaboratively and opportunistically capture the informative views and satisfy the coverage constraints [18,19].

2.2.3 Characteristics of Camera Networks

The information collected by multiple cameras can be processed in a centralized or distributed framework. In the centralized processing framework, information from all the camera sensors is conveyed to a base station to estimate the tracking states and determine the identities. The distributed framework can reduce the amount of data transfer by processing the information locally and then convey the succinct information to other nodes. Given the limited resources of bandwidth and power in distributed camera networks, exchanging visual data between sensor nodes is not preferred. Hence, each sensor node only conveys modest information extracted from visual content to other sensor nodes. Based on the received information and its own visual content, each sensor node computes local optimal settings, e.g., PTZ settings of camera, to achieve the common goal in the networks.

For distributed camera networks in a wide area, cameras do not always have overlapping FOVs. Hence, the camera topology (connectivity between non-overlapping FOVs of cameras) should be established by exploring the statistical dependency on the entry and exit activities between cameras [57,67]. Besides, spatial-temporal constraints and relative appearance of the persons can be utilized for persistent tracking in non-overlapping FOVs [7,39]. With the topology of a non-overlapping camera network, faces and persons appearing from one view to another can be successfully associated for robust recognition.

2.3 Face Association in Camera Networks

Face association relies on persistent person tracking and face acquisition in outdoor camera networks. In this section, we investigate face-to-face and face-to-person associations [8], which enable robust recognition in long-term and wide-area monitoring scenarios.

2.3.1 Face-to-Face Association

A successful face-to-face association algorithm can continuously track the movement and appearance changes of faces over time. Nevertheless, face-to-face association is challenging since multiple faces appearing in the scene can introduce ambiguities. Especially, faces of a group of people are likely to be occluded by each other when the face images are captured from a single viewpoint. Hence, it is essential to correctly associate the face images to form face tracks, and then recognition can be performed effectively for each track based on the assumption that a face track only consists of face images captured from the same subject.

In general, a multiple face tracking algorithm handles the initialization of face tracks, simultaneous tracking of multiple faces, and the termination of a face track. There are several challenges to be addressed while designing a multiple face tracking algorithm. Face tracks that are spatially close to each other can lead to identity switching. The drift of face tracks can result due to large pose variations of faces. Besides, face tracks become fragmented due to occlusion and unreliable face detection. Moreover, videos captured by the hand-held cameras can be affected by unexpected camera motion, which makes the association of face images difficult. Given the recent advancements in multiple object tracking (MOT) [6,25,61], several methods have utilized the framework of MOT for multiple face tracking [48,58].

Roth et al. [48] adapted the framework of multiple object tracking to multiple face tracking based on tracklet linking, and several face-specific metrics and constraints were used for enhancing tracking reliability. Wu et al. [58] modeled the face clustering and tracklet linking steps using a Markov Random Field (MRF), and the fragmented face tracks resulting from occlusion or unreliable face detection were then associated to produce reliable face tracks. Duffner and Odobez [25] proposed a multi-face Markov Chain Monte Carlo (MCMC) particle filter and a Hidden Markov Model (HMM)-based method for track management. The track management strategy includes the creation and termination of tracklets. A recent work in [14] proposed to manage the track from the continuous face detection output without relying on long-term observations. In unconstrained scenarios, the camera can be affected by abrupt movements, which makes consistent tracking of faces challenging. Du and Chellappa proposed a conditional random field (CRF) framework to associate faces in two consecutive frames by utilizing the affinity of facial features, location, motion, and clothing appearance [22,23].

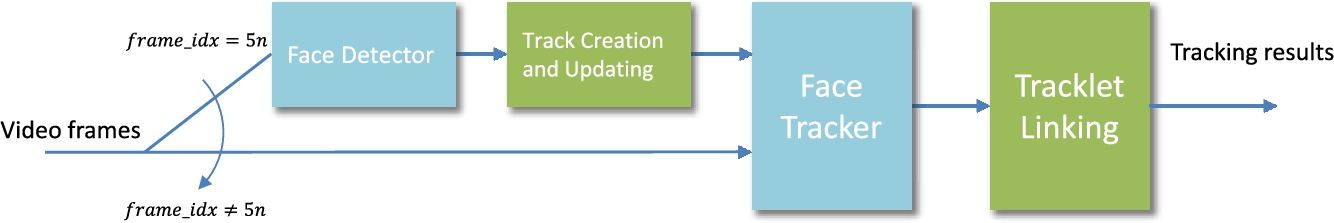

Although linking of tracklets from the bounding boxes provided in face detection has shown some robustness in multiple face tracking, performing face detection for every frame is not feasible due to high demands on computational resources. As shown in Fig. 2.1, the face association method in [11] detects faces for every 5 frames and uses the Kanade–Lucas–Tomasi (KLT) feature tracker [51] for short-term tracking. The bounding boxes provided by detection and KLT tracking serve as inputs for the tracklet linking algorithm [4].

2.3.2 Face-to-Person Association

Face recognition in camera networks requires the persistently tracked person and correct association of captured faces. In overlapping camera networks, the correspondence of faces captured from multiple views can be established from geometric localization methods, i.e., triangulation. Nevertheless, these techniques may not be applicable for non-overlapping camera networks.

For PTZ cameras in a distributed camera network, each PTZ camera actively performs face acquisition operating at different FOVs. It is essential to perform face-to-person association since the number of faces and the number of persons in the field of view may not be consistent when switching between zoomed-out and zoomed-in mode. Face-to-person association ensures that face images of a target captured from various FOVs can be registered with the same person for identification. The appearance of face images captured by the zoomed-in mode can be quite different from that of full-body images captured by the zoomed-out mode since the close-up views only capture a portion of the full-body images. Hence, the HSV color histogram is used to model the appearance of upper-body in different zoom ratio [8], and the Hungarian algorithm [1] is employed to find the optimal assignment between faces and persons.

2.4 Face Recognition in Outdoor Environment

In this section, we discuss several issues when performing the recognition task on images and videos captured by outdoor camera networks.

2.4.1 Robust Descriptors for Face Recognition

Several techniques have been proposed to overcome the challenges due to pose variations by extracting handcrafted features around the local landmarks of face images, and a discriminative distance metric is learned such that a pair of face images from the same person will induce a smaller distance than that from different persons. Chen et al. [10] used multi-scale and densely sampled local binary pattern (LBP) features and trained the joint Bayesian distance metric [9]. Simonyan et al. performed Fisher Vector (FV) encoding on densely sampled SIFT feature [52] to select highly representative features. Li et al. [33] proposed a pose-robust verification technique by utilizing the probabilistic elastic part (PEP) model, and thus the impact of pose variations could be alleviated by establishing the correspondence between local appearance features (e.g., SIFT, LBP, etc.) of the two face images. Recently, Li and Hua [32] proposed the hierarchical PEP model to exploit the fine-grained structure of face images, which outperforms their original PEP model. Besides those methods based on handcrafted features, deep learning methods [55,56] have shown significantly improved performance. However, learning the deep features usually requires a large number of labeled training data.

As face images captured in outdoor environments suffer from low-resolution and occlusion, face alignment becomes challenging. Liao et al. [35] have proposed an alignment-free face recognition using multi-keypoint descriptors, and the size of the descriptor can adapt to the actual content.

2.4.2 Video-Based Face Recognition

In camera networks, sequences of face images in videos can capture diverse views and facial variations of an individual (assuming a face track only consists of face images from one person). Hence, several works have proposed video-based methods for effective representations. Zhou et al. [65,66] proposed to simultaneously characterize the appearance, kinematics and identity of human face using particle filters. Lee et al. [30] constructed the pose manifold from k-means clustering of face tracks and established the connectivity across the pose manifold for representing the face images in the video. Chen et al. [13] proposed to cluster a face track into several partitions, and dictionaries learned from each partition were used for capturing the pose variations of a subject. Li et al. [34] adopted the PEP model for constructing the video-level representation of face images. The video-level representation was computed by performing the pixel-level-mean of the PEP-representation of video frames. Most video-based verification techniques have extracted the diverse and compact frame-level information for constructing the video-level representation.

2.4.3 Multi-view and 3D Face Recognition

Robust face recognition depends on effective descriptions of faces. Several prior works have investigated the affine invariant features that are robust to slight pose variations or view changes. Nevertheless, the 2D model fails to represent large pose variations due to self-occlusion and the perspective distortion introduced when the face is close to cameras. The 3D model overcomes these disadvantages of the 2D model by describing the features on the 3D structure. Given the estimated pose of the face, the features of a face collected from multiple views can be jointly registered onto a 3D structure, and thus the features are no longer dependent on the pose variation of the face itself. In face tracking or recognition, the variation of the head structure is modest. Hence, the 3D feature can be densely constructed by mapping facial textures onto a generic 3D structure. Nevertheless, successful modeling of 3D faces requires reliable camera calibration for accurate registration, which is generally not sufficiently precise in real world surveillance scenarios. In the following, we review prior works that exploit the multiple views and 3D face models for recognition.

An et al. [3] adopted the dynamic Bayesian network (DBN) for face recognition in camera surveillance network by encoding the temporal information and features from multiple views. The DBN consists of a root node and several camera nodes in a time slice. Fig. 2.2 shows the DBN structure using three cameras of three time slices. The root nodes capture the distribution of the subjects in the gallery, and the camera nodes contain the features of a face observed from each camera. The time slices enable the DBN to encode the temporal variations of a face. Du et al. [24] proposed a robust face recognition method based on the spherical harmonic (SH) representation for the texture-mapped multi-view face images on a 3D sphere. Fig. 2.3 shows the texture mapping on a 3D sphere from three cameras. The textured-mapped 3D sphere was used for computing the SH representation. The method is pose-invariant since the spectrum of the SH coefficients is invariant to the rotation of head pose.

Besides, several prior works have utilized structure-from-motion techniques to reconstruct the 3D model for face recognition from multiple face images [38,40].

2.4.4 Face Recognition with Context Information

Context features, such as clothing, activity, attributes, and gait, can serve as additional cues for improving the performance of face recognition algorithms [62]. Moreover, the uniqueness constraint can be utilized to improve the recognition accuracy since two persons presenting in a venue should not be identified as the same subject. Liu and Sarkar [37] proposed a recognition framework by fusing the gait and face information, and several fusion strategies for integrating these two biometric modalities were evaluated. Their experimental results show that the combination of one face and one gait per person gives better result than two face probes per person and two gait probes per person. This shows that different biometric modalities can be fused to further improve the recognition accuracy.

2.4.5 Incremental Learning of Face Recognition

Besides, the outdoor environment can change due to time of day, weather, etc., and thus the distribution of data can change. As a result, the model should adapt to the current captured data for effective face recognition. A recent work in [45] has proposed an adaptive ensemble method to alleviate the impact of environmental changes on face recognition by utilizing diversified learned models. The method first performed change detection to distinguish if the current input significantly differs from the learned model. Otherwise, a corresponding model is selected for recognition. Long-term memory was then used to store the parameters for identifying new concepts and training new model-specific classifier of each subject. The short-term memory holds the validation data for referencing. The system model can be updated by adopting the boosting-based method for learning independent classifiers and performing weighted fusion.

As the outdoor scene is an open environment, it is common that a subject does not belong to any subject in the gallery. Several works have addressed the issue of open set recognition [31,50]. Subjects that have not been seen in the gallery should be rejected, and the captured face images can be potentially used for learning models of new subjects.

2.5 Outdoor Camera Systems

In this section, we review several camera networks deployed in outdoor environments.

2.5.1 Static Camera Approach

Medioni et al. [40] used two static cameras to monitor a chosen region of interest. In this work, one of the static cameras provided high-resolution face images with a narrow FOV, and the other camera captured the full body of pedestrians in the scene with a wide FOV. A 3D face model was constructed from multi-view stereo technique operating on the sequences of face images. Stereo pair of wide baseline can be challenging for establishing correspondence but often provide better disparity resolution. On the other hand, it is easy to establish correspondence for a short baseline stereo pair, but the disparity resolution might be insufficient. The task involved key frame selection to form multiple stereo pairs from near frontal images within −10 to 10 degrees. Each pairwise stereo pair contributed to a disparity map that represents the height of the 3D face surface. The mesh descriptor of the 3D face model was obtained from integrating the multiple disparity maps, and outliers of disparity were rejected by surface self-consistency. The 3D face models and 2D face images were used for biometric recognition. Although this approach is capable of reconstructing the 3D face from outdoor video sequences, their experimental results show that the performance of 3D face recognition degrades as the resolution of face images is reduced due to the increase in distance. This reveals that face recognition based on 3D modeling in outdoor environments remains a challenging task.

2.5.2 Single PTZ Camera Approach

Face recognition systems using a single PTZ camera are challenging to design since the persistent tracking of a person, camera control to follow the identity, and recognizing the identity from face images should be performed simultaneously. Dinh et al. [21] proposed a single PTZ camera acquisition strategy for extracting high-resolution face sequences of a single person. Their method employs a pedestrian detector in the wide FOV to detect face of interest. Once a pedestrian is detected, the pan-tilt parameters of camera are adjusted to bring the face of a pedestrian to the center of the image and the zoom parameter is preset to ensure sufficient resolution of the face images. As the face detector localizes a face, the active tracking mode is initiated by performing face tracking with the bounding box provided by the face detector. In the meantime, camera control is initiated to follow the face simultaneously. Since the tracked face consistently moves in the scene, the camera control module in [20] is employed to follow the target precisely and smoothly by sending commands to reconfigure the pan-tilt parameters. The zoom parameter is dynamically adjusted to ensure that a face in the FOV has a proper size. When the face drifts out of the FOV of a camera, the one-step-back strategy camera control is adopted by decreasing the focal length for one-step until the face reappears in the FOV.

Cai et al. [8] employed a single PTZ camera for face acquisition for multiple persons in the scene. The PTZ camera switches between zoomed-in and zoomed-out mode for obtaining narrow and wide FOV, respectively. In the zoomed-out mode, person-to-person association was employed to track multiple persons in the scene. When the camera is switched from zoomed-out mode to zoomed-in mode to obtain the close-up view of a particular person, the face-to-person association was performed to ensure that the detected faces in the zoomed-in mode are correctly associated with the person in the zoomed-out mode. The face-to-face and face-to-person associations are employed when switching between zoomed-in and zoomed-out modes. The camera scheduling is based on a weighted round-robin mode to acquire close-up views of each person in the scene.

2.5.3 Master and Slave Camera Approach

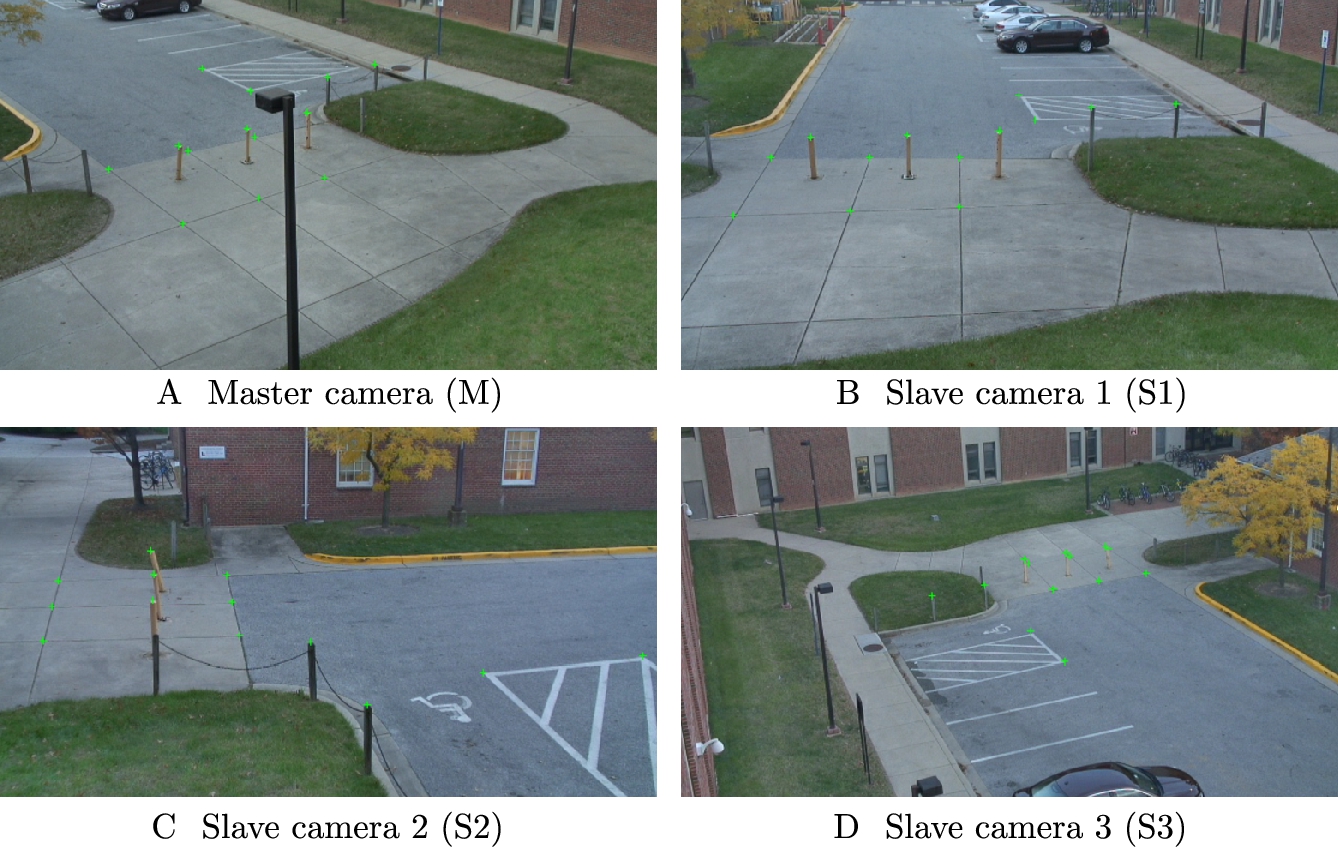

In the University of Maryland (UMD), an outdoor camera network comprising of four sets of the off-the-shelf PTZ network IP cameras (Sony SNC-RH164) is employed to acquire face images in the open area in front of a campus building. A master and slave camera framework is adopted in the outdoor camera system. The PTZ cameras are deployed on the roof and side walls of the building as shown in Fig. 2.4, and their corresponding locations seen from the bird view are marked in the world map of Fig. 2.5. One of the PTZ camera serves as the master camera (M) and other three cameras serve as slave cameras (S1, S2, and S3). The resolution of the video stream from each camera is ![]() pixels, and the frame rate is 15 frames/second.

pixels, and the frame rate is 15 frames/second.

The proposed system consists of several modules, including foreground detection [26], blob tracking, face detection, face recognition, and the surveillance interface.

2.5.3.1 Camera Calibration

Using the steerable functionality of PTZ camera, we calibrate the intrinsic parameters of each camera using techniques presented in [59] without using a known pattern [63]. During calibration, we steer all the PTZ cameras to look at a common overlapping area, and all the cameras are zoomed out to maximize the overlapping FOVs. Since the perspectives are quite different across the different PTZ cameras, we manually select the common corresponding points (Fig. 2.6) for extrinsic calibration [27]. The extrinsic parameters of the PTZ cameras are computed by the Bundler toolkit [53] to obtain the rotation and translation matrices relative to the master camera. Moreover, we assume that the pedestrian movement can be modeled as planer motion on the ground plane, and thus we simply mark the location of the pedestrian in the world map. By using at least three (manually selected) 3D coordinates on the ground, we obtain the planar equation of the ground plane

and the unit normal vector of the ground plane is denoted as ![]() .

.

2.5.3.2 Camera Control

The objective of the outdoor camera system is to recognize the identity of a pedestrian in the area being monitored from a set of subjects in the gallery, and we report its location and identity in the world map as shown in Fig. 2.5. In the view of the master camera, the moving pedestrians are first detected by the foreground detection, and then tracked by the blob trackers. We use the foreground detection and blob tracking methods provided in OpenCV [44]. The image coordinate at the standpoint of a pedestrian ![]() is converted into the 3D world coordinate

is converted into the 3D world coordinate ![]() to indicate the 3D coordinate of the foot of the pedestrian. The 3D world coordinate

to indicate the 3D coordinate of the foot of the pedestrian. The 3D world coordinate ![]() is computed by intersecting the ray along the homogeneous coordinates

is computed by intersecting the ray along the homogeneous coordinates ![]() of the master view with the planner equation of the ground in (2.1). In order to capture the high-resolution face images for recognition, the slave cameras are steered to point at the 3D coordinate of the head such that the head of the pedestrian are brought to the image center. We compute the rough 3D coordinate of the head

of the master view with the planner equation of the ground in (2.1). In order to capture the high-resolution face images for recognition, the slave cameras are steered to point at the 3D coordinate of the head such that the head of the pedestrian are brought to the image center. We compute the rough 3D coordinate of the head ![]() in the world as

in the world as

where h is the average human height of a pedestrian in the scene. In the system, it is empirically set as a constant. However, a more precise height of a pedestrian in the scene can be computed from a reference object of known height and the vanishing point [42].

A simple camera scheduling strategy is implemented to steer all the slave cameras to point at the head of a person simultaneously. When there is more than one person in the monitored area, each person is sequentially observed by all the slave cameras with a time interval of 4 s. Sophisticated camera scheduling algorithm, such as in [19], can be implemented to allocate the PTZ cameras to optimally capture the most informative views. Hence, PTZ cameras can be individually steered to capture the face images from different persons in parallel.

2.5.3.3 Face Recognition

The sequence of face images detected in the camera views are recognized by the video-based face recognition method developed by Chen et al. [13]. The dictionaries for the 8 subjects in the gallery are trained offline from two sessions of videos captured from three slave cameras. In the training stage, each face image in grayscale is resized to 30 × 30 pixels, and each face image is then vectorized into feature vector of dimension 900. Feature vectors of each subject are clustered into ten partitions using k-means clustering. Fig. 2.7 shows a subset of partitions from three subjects in the gallery. There are ten sub-dictionaries of each subject learned from the ten partitions to build the compact face representation of each subject. In the testing stage, the identity of a face image in each frame is predicted by assigning the identity of the sub-dictionary that yields the minimum reconstruction error of the face image. The identity of the pedestrian is then determined by using a majority voting that accounts for the predicted identity of face images of previous frames. The location and identity of each pedestrian is continuously updated on the world map.

2.5.4 Distributed Active Camera Networks

In master and slave camera networks, the functionality of each camera is assigned throughout the operation. On the other hand, in the collaborative and opportunistic PTZ camera networks, the tasks of tracking in wide FOV and capturing high-resolution images in narrow FOV are dynamically reconfigured based on the current observations. Each PTZ camera is capable of low-level processing, including target tracking and common consensus state estimation.

Ding et al. [19] have implemented distributed active camera networks of 5 and 9 PTZ cameras, which provide the dynamic coverage of the monitored area. The configuration of the PTZ settings relies on a distributed tracking method based on the Kalman-Consensus filter [43,54]. Neighboring cameras can communicate with each other and negotiate with neighboring nodes before taking an action. The framework optimizes the distributed camera configurations by maximizing the utility based on the specified tracking accuracy, informative shot, and image quality, in the active distributive camera network. The utility function can model the area coverage and target coverage. Another framework in [18] uses a camera network of 215 PTZ cameras to opportunistically retrieve informative views. A Bayesian framework is utilized to perform the trade-off between the reward of informative view and the cost of missing a target. Besides, a framework proposed by Morye et al. [41] continuously changes the camera parameters to satisfy the tracking constraint and opportunistically capturing the high-resolution faces. Image quality is formulated as a function of the target resolution and its relative pose with respect to the view camera.

2.6 Remaining Challenges and Emerging Techniques

Video surveillance in complex scenarios remains a challenging task since existing computer vision algorithms cannot adequately address the challenges due to pose variations, severe occlusions, illumination changes, and ambiguity between identities of similar appearance. Although the 3D structure of face can provide distinct features, the issues of synchronization error, calibration error, insufficient imaging resolution of remote identity, make it difficult to recover the 3D face model. The challenges of designing a video surveillance systems do not depend on a single factor, and the performance of one stage can potentially suffer from unreliable results in previous stages. All these factors make face recognition in outdoor camera networks a challenging task.

Face recognition in mobile camera network is an emerging research topic [36]. In this scenario, each visual sensor is mounted on a mobile agent and works cooperatively with other visual sensors in the mobile networks. Given the limited bandwidth and power in the mobile networks, exchanging visual data between sensors becomes infeasible. Hence, each sensor node only conveys a modest amount of information extracted from a particular camera to other sensor nodes. Based on the received information and its own visual data, each sensor node computes an optimal setting such as the moving direction of the mobile agent or the PTZ setting of camera to achieve the common goal in the networks. With the low cost of drones, cameras mounted on flying mobile agents have been utilized for face recognition [5]. As compared to conventional mobile agents, drones are less restricted by the geographic constraints. Nevertheless, sophisticated drone stabilization techniques, camera controls, and communication techniques should be developed for conveying informative and stable face images for face recognition in drone-based video surveillance.

With the prevailing use of personal mobile devices, the utilization of camera sensors embedding GPS and orientation sensor remains an open problem to solve. Unlike typical mobile networks where the algorithm gets full control on the steering of mobile agent, the visual sensors on personal device usually acquire visual data passively. Hence, crowd-based services as part of the mobile camera network should take into account the behavior of user and human interaction to opportunistically collect information for face recognition in large-scale and unrestricted environments.

2.7 Conclusions

In this chapter, we first discussed the usefulness of camera frameworks for face recognition in outdoor environments. The static camera networks are suitable for densely distributed wide area, but they are not as flexible as the active camera networks. The active camera networks can take advantages of the PTZ capability to opportunistically capture high-resolution face images. Nevertheless, the face images captured in outdoor environments are unconstrained, and the quality is usually affected by pose variations, illumination changes, occlusions, and motion blur. Effective multi-view video-based methods should be employed to build diverse and compact face representations. We reviewed several issues relevant to the design of camera network systems for face recognition deployed in the outdoor environments. Remaining challenges such as handling real-time operation, synchronization, etc., should be overcome to make the outdoor camera network systems for face recognition pervasive and reliable. Finally, we discussed the emerging camera frameworks for face recognition.