Virtualization concepts

This chapter describes virtualization concepts as they apply to the DS8880.

This chapter covers the following topics:

4.1 Virtualization definition

For the purposes of this chapter, virtualization is the abstraction process from the physical drives to one or more logical volumes that are presented to hosts and systems in a way that they appear as though each were a physical drive.

4.2 Benefits of virtualization

The DS8880 physical and logical architecture provides highly configurable enterprise storage virtualization. DS8880 virtualization includes the following benefits:

•Flexible logical volume configuration:

– Multiple RAID types (RAID 5, RAID 6, and RAID 10)

– Storage types (count key data (CKD) and fixed-block architecture (FB)) aggregated into separate extent pools

– Volumes are allocated from extents of the extent pool

– Storage pool striping

– Dynamically add and remove volumes

– Logical volume configuration states

– Dynamic Volume Expansion (DVE)

– Extent space-efficient (ESE) volumes for thin provisioning of FB and CKD volumes

– Support for small and large extents

– Extended address volumes (EAVs) (CKD)

– Parallel Access Volumes across LCUs (Super PAV for CKD)

– Dynamic extent pool merging for IBM Easy Tier

– Dynamic volume relocation for Easy Tier

– Easy Tier Heat Map Transfer

•Flexible logical volume sizes:

– CKD: up to 1 TB (1,182,006 cylinders) by using EAVs

– FB: up to 16 TB (limit of 2 TB when used with Copy Services, limit of 1 TB with small extents).

•Flexible number of logical volumes:

– Up to 65280 (CKD)

– Up to 65280 (FB)

– 65280 total for mixed CKD + FB

•No strict relationship between RAID ranks and logical subsystems (LSSs)

•LSS definition allows flexible configuration of the number and size of devices for each LSS:

– With larger devices, fewer LSSs can be used.

– Volumes for a particular application can be kept in a single LSS.

– Smaller LSSs can be defined, if required (for applications that require less storage).

– Test systems can have their own LSSs with fewer volumes than production systems.

4.3 Abstraction layers for drive virtualization

Virtualization in the DS8880 refers to the process of preparing physical drives for storing data that belongs to a volume that is used by a host. This process allows the host to think that it is using a storage device that belongs to it, but it is really being implemented in the storage system. In open systems, this process is known as creating logical unit numbers (LUNs). In IBM z Systems, it refers to the creation of 3390 volumes.

The basis for virtualization begins with the physical drives, which are mounted in storage enclosures and connected to the internal storage servers. To learn more about the drive options and their connectivity to the internal storage servers, see 2.5, “Storage enclosures and drives” on page 46.

Virtualization builds upon the physical drives in a series of layers:

•Array sites

•Arrays

•Ranks

•Extent pools

•Logical volumes

•Logical subsystems

4.3.1 Array sites

An array site is formed from a group of eight identical drives with the same capacity, speed, and drive class. In high-performance flash enclosures (HPFEs), an array site can contain either 7 or 8 flash cards. The specific drives that are assigned to an array site are automatically chosen by the system at installation time to balance the array site capacity across both drive enclosures in a pair, and across the connections to both storage servers. The array site also determines the drives that are required to be reserved as spares. No predetermined storage server affinity exists for array sites.

Figure 4-1 shows an example of the physical representation of an array site. The disk drives are dual-connected to SAS-FC bridges. An array has drives from two chains.

Figure 4-1 Array site across two chains

|

Note: One HPFE is equivalent to one standard enclosure pair.

|

Figure 4-2 shows an example of the physical representation of an HPFE array site.

Figure 4-2 Array site (high-performance flash enclosure)

Array sites are the building blocks that are used to define arrays.

4.3.2 Arrays

An array is created from one array site. When an array is created, its Redundant Array of Independent Disks (RAID) level, array type, and array configuration are defined. This process is also called defining an array.

The following RAID levels are supported in DS8880:

•RAID 5

•RAID 6

•RAID 10

For more information, see “RAID 5 implementation in DS8880” on page 81, “RAID 6 implementation in the DS8880” on page 83, and “RAID 10 implementation in DS8880” on page 84.

|

Note: Before the R8.1 code level, the flash cards and the HPFE supported only RAID 5. Starting with DS8880 R8.1 code, the HPFE also supports RAID 10.

Important: RAID configuration information changes occasionally. Consult your IBM service support representative (SSR) for the latest information about supported RAID configurations. For more information about important restrictions for DS8880 RAID configurations, see 3.5.1, “RAID configurations” on page 78.

Important: In all DS8000 series implementations, one array is always defined as using one array site.

|

According to the sparing algorithm of the DS8880, 0 - 2 spares can be taken from the array site. For more information, see 3.5.9, “Spare creation” on page 84.

Figure 4-3 shows the creation of a RAID 5 array with one spare, which is also called a 6+P+S array. It has a capacity of six drives for data, a capacity of one drive for parity, and a spare drive. According to the RAID 5 rules, parity is distributed across all seven drives in this example. On the right side of Figure 4-3, the terms D1, D2, D3, and so on, stand for the set of data that is contained on one drive within a stripe on the array. For example, if 1 GB of data is written, it is distributed across all of the drives of the array.

Figure 4-3 Creation of an array
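The 6+P layout can be illustrated with a small sketch. It is not the DS8880 implementation: it only shows the general RAID 5 idea that the parity block of a stripe is the XOR of the six data blocks, so any single lost block can be recomputed from the survivors. The helper names and the simple parity rotation (one position per stripe) are assumptions made for illustration.

```python
from functools import reduce

def xor_blocks(blocks):
    """Byte-wise XOR of equally sized blocks."""
    return bytes(reduce(lambda a, b: a ^ b, col) for col in zip(*blocks))

def make_stripe(data_blocks, parity_slot):
    """Return one stripe: the data blocks with their parity inserted at parity_slot."""
    stripe = list(data_blocks)
    stripe.insert(parity_slot, xor_blocks(data_blocks))
    return stripe

def rebuild(stripe, lost_slot):
    """Recompute the block at lost_slot from the six surviving blocks."""
    survivors = [blk for i, blk in enumerate(stripe) if i != lost_slot]
    return xor_blocks(survivors)

# Six data blocks (D1..D6) per stripe on seven drives.
# Hypothetical rotation: the parity slot moves by one drive for each stripe.
data = [bytes([i] * 4) for i in range(1, 7)]
stripes = [make_stripe(data, parity_slot=n % 7) for n in range(7)]
```

Because the XOR of all seven blocks in a stripe is zero, `rebuild` returns the missing block regardless of whether it held data or parity.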

Depending on the selected RAID level and sparing requirements, seven types of arrays are possible, as shown in Figure 4-4.

Figure 4-4 RAID array types

|

Tip: Remember that larger drives have a longer rebuild time. Only RAID 6 can recover from a second error during a rebuild due to the extra parity. RAID 6 is the best choice for systems that require high availability.

|

4.3.3 Ranks

After the arrays are created, the next task is to define a rank. A rank is a logical representation of the physical array formatted for use as FB or CKD storage types. In the DS8880, ranks are defined in a one-to-one relationship to arrays. Before you define any ranks, you must decide whether you will encrypt the data.

Encryption group

All drives that are offered in the DS8880 are Full Disk Encryption (FDE)-capable to secure critical data. In the DS8880, the Encryption Authorization license is included in the Base Function license group.

If you plan to use encryption, you must define an encryption group before any ranks are created. The DS8880 supports only one encryption group. All ranks must be in this encryption group. The encryption group is an attribute of a rank. Therefore, your choice is to encrypt everything or nothing. If you want to enable encryption later (create an encryption group), all ranks must be deleted and re-created, which means that your data is also deleted.

For more information, see the latest version of IBM DS8880 Data-at-rest Encryption, REDP-4500.

Defining ranks

When a new rank is defined, its name is chosen by the data storage (DS) Management graphical user interface (GUI) or data storage command-line interface (DS CLI), for example, R1, R2, or R3. The rank is then associated with an array.

|

Important: In all DS8000 series implementations, a rank is defined as using only one array. Therefore, rank and array can be treated as synonyms.

|

The process of defining a rank accomplishes the following objectives:

•The array is formatted for FB data for open systems or CKD for z Systems data. This formatting determines the size of the set of data that is contained on one drive within a stripe on the array.

•The capacity of the array is subdivided into partitions, which are called extents. The extent size depends on the extent type, which is FB or CKD. The extents are the building blocks of the logical volumes. An extent is striped across all drives of an array, as shown in Figure 4-5 on page 99, and indicated by the small squares in Figure 4-6 on page 101.

•Starting with the DS8880 R8.1 code, you can choose between large extents and small extents.

A fixed-block rank features an extent size of either 1 GB (more precisely, 1 GiB, a gibibyte or binary gigabyte, which equals 2^30 bytes), which is called a large extent, or 16 MiB, which is called a small extent.

z Systems users or administrators typically do not deal with gigabytes or gibibytes. Instead, storage is defined in terms of the original 3390 volume sizes. A 3390 Model 3 is three times the size of a Model 1. A Model 1 features 1113 cylinders, which are about 0.94 GB. The large extent size of a CKD rank is one 3390 Model 1, or 1113 cylinders. The small CKD extent size is 21 cylinders. This size corresponds to the z/OS allocation unit for EAV volumes larger than 65520 cylinders, where software changes addressing modes and allocates storage in 21-cylinder units.

An extent can be assigned to only one volume. Although you can define a CKD volume with a capacity that is an integral multiple of one cylinder or a fixed-block LUN with a capacity that is an integral multiple of 128 logical blocks (64 KB), if you define a volume this way, you might waste the unused capacity in the last extent that is assigned to the volume.

For example, the DS8880 theoretically supports a minimum CKD volume size of one cylinder. But the volume still claims one full extent: 1113 cylinders if large extents are used, or 21 cylinders if small extents are used. So, 1112 cylinders are wasted if large extents are used, and 20 cylinders are wasted if small extents are used.
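The arithmetic behind this example can be sketched as follows. The function name is illustrative, and the bytes-per-cylinder figure is an assumption taken from standard 3390 geometry (15 tracks of 56,664 bytes), which this chapter does not state.

```python
CYLS_PER_LARGE_EXTENT = 1113       # one 3390 Model 1
CYLS_PER_SMALL_EXTENT = 21
BYTES_PER_CYLINDER = 15 * 56_664   # assumed 3390 geometry: 15 tracks of 56,664 bytes

def ckd_allocation(cylinders, extent_cyls):
    """Return (extents claimed, cylinders left unused in the last extent)."""
    extents = -(-cylinders // extent_cyls)   # ceiling division
    return extents, extents * extent_cyls - cylinders

one_cyl_large = ckd_allocation(1, CYLS_PER_LARGE_EXTENT)          # (1, 1112)
one_cyl_small = ckd_allocation(1, CYLS_PER_SMALL_EXTENT)          # (1, 20)
largest_eav = ckd_allocation(1_182_006, CYLS_PER_LARGE_EXTENT)    # (1062, 0)
model1_gb = CYLS_PER_LARGE_EXTENT * BYTES_PER_CYLINDER / 1e9      # about 0.946
```

Under these assumptions, the largest EAV (1,182,006 cylinders) is an exact multiple of both extent sizes, so it wastes no capacity with either one.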

|

Note: An important change in the DS8880 firmware is that all volumes now have a common metadata structure. All volumes now have the metadata structure of ESE volumes, whether the volumes are thin-provisioned or fully provisioned. ESE is described in 4.4.4, “Volume allocation and metadata” on page 114.

|

Figure 4-5 shows an example of an array that is formatted for FB data with 1-GB extents. The squares in the rank indicate that the extent consists of several blocks from separate drives.

Figure 4-5 Forming an FB rank with 1-GB extents

Small or large extents

Whether to use small or large extents depends on the goals you want to achieve. Small extents provide a better capacity utilization, particularly for thin-provisioned storage. However, managing many small extents causes some performance degradation during initial allocation. For example, a format write of 1 GB requires one storage allocation with large extents, but 64 storage allocations with small extents. Otherwise, host performance should not be adversely affected.
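The trade-off can be made concrete with a short calculation. This is a sketch with illustrative names: it counts how many extents a given amount of written data touches, which is both the number of storage allocations during a format write and the physical capacity that a thin-provisioned volume consumes.

```python
GIB = 2**30
MIB = 2**20
LARGE_EXTENT = 1 * GIB
SMALL_EXTENT = 16 * MIB

def extents_touched(nbytes, extent_bytes):
    """Number of extents that must be allocated to hold nbytes of data."""
    return -(-nbytes // extent_bytes)   # ceiling division

# Format write of 1 GiB: one allocation versus 64 allocations.
allocs_large = extents_touched(GIB, LARGE_EXTENT)
allocs_small = extents_touched(GIB, SMALL_EXTENT)

# A thin-provisioned volume that holds only 100 MiB of data.
used_large = extents_touched(100 * MIB, LARGE_EXTENT) * LARGE_EXTENT   # 1 GiB
used_small = extents_touched(100 * MIB, SMALL_EXTENT) * SMALL_EXTENT   # 112 MiB
```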

4.4 Extent pools

An extent pool is a logical construct to aggregate the extents from a set of ranks, and it forms a domain for extent allocation to a logical volume. Originally, extent pools were used to separate drives with different revolutions per minute (RPM) and capacity in different pools that have homogeneous characteristics. You still might want to use extent pools for this purpose.

No rank or array affinity to an internal server (central processor complex (CPC)) is predefined. The affinity of the rank (and its associated array) to a server is determined when it is assigned to an extent pool.

One or more ranks with the same extent type (FB or CKD) can be assigned to an extent pool.

|

Important: Because a rank is formatted to have small or large extents, the first rank that is assigned to an extent pool determines whether the extent pool is a pool of all small or all large extents. You cannot have a pool with a mixture of small and large extents. You cannot change the extent size of an extent pool.

|

If you want Easy Tier to automatically optimize rank utilization, configure more than one rank in an extent pool. A rank can be assigned to only one extent pool. As many extent pools as ranks can exist.
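The assignment rules for ranks and extent pools can be summarized in a small model. This is only a sketch of the constraints that are described in this section, not of DS8880 internals; the class and field names are hypothetical.

```python
from dataclasses import dataclass, field

@dataclass(frozen=True)
class Rank:
    name: str
    storage_type: str   # "FB" or "CKD"
    extent_size: str    # "small" or "large"

@dataclass
class ExtentPool:
    name: str
    server: int                          # internal server affinity: 0 or 1
    ranks: list = field(default_factory=list)

    def add_rank(self, rank, assigned):
        """Add a rank; `assigned` maps rank names to the pool that owns them."""
        if rank.name in assigned:
            raise ValueError(f"{rank.name} already belongs to {assigned[rank.name]}")
        if self.ranks:
            first = self.ranks[0]        # the first rank fixes type and extent size
            if rank.storage_type != first.storage_type:
                raise ValueError("extent pool cannot mix FB and CKD ranks")
            if rank.extent_size != first.extent_size:
                raise ValueError("extent pool cannot mix small and large extents")
        self.ranks.append(rank)
        assigned[rank.name] = self.name

assigned = {}
pool = ExtentPool("P0", server=0)
pool.add_rank(Rank("R1", "FB", "large"), assigned)
pool.add_rank(Rank("R2", "FB", "large"), assigned)   # second rank lets Easy Tier balance
```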

Heterogeneous extent pools, with a mix of flash cards, flash drives, enterprise serial-attached Small Computer System Interface (SCSI) (SAS) drives, and nearline drives can take advantage of the capabilities of Easy Tier to optimize I/O throughput. Easy Tier moves data across different storage tiering levels to optimize the placement of the data within the extent pool.

With storage pool striping, you can create logical volumes that are striped across multiple ranks to enhance performance. To benefit from storage pool striping, more than one rank in an extent pool is required. For more information, see “Storage pool striping: Extent rotation” on page 109.

Storage pool striping can enhance performance significantly. However, in the unlikely event that a whole RAID array fails, the loss of the associated rank affects the entire extent pool because data is striped across all ranks in the pool. For data protection, consider mirroring your data to another DS8000 family storage system.

With the availability of Easy Tier, storage pool striping is somewhat irrelevant. It is a much better approach to let Easy Tier manage extents across ranks (arrays).

When an extent pool is defined, it must be assigned with the following attributes:

•Internal storage server affinity

•Extent type (FB or CKD)

•Encryption group

As with ranks, extent pools are also assigned to encryption group 0 or 1, where group 0 is non-encrypted, and group 1 is encrypted. The DS8880 supports only one encryption group, and all extent pools must use the same encryption setting as was used for the ranks.

A minimum of two extent pools must be configured to balance the capacity and workload between the two servers. One extent pool is assigned to internal server 0. The other extent pool is assigned to internal server 1. In a system with both FB and CKD volumes, four extent pools provide one FB pool for each server and one CKD pool for each server.

With Release 8.1, if you plan on using small extents for ESE volumes, you must create additional pools with small extents. Indeed, small and large extents cannot reside in the same pool.

Figure 4-6 shows an example of a mixed environment that features CKD and FB extent pools.

Extent pools can be expanded by adding more ranks to the pool. All ranks that belong to extent pools with the same internal server affinity are called a rank group. Ranks are organized in two rank groups. Rank group 0 is controlled by server 0, and rank group 1 is controlled by server 1.

Figure 4-6 Extent pools

4.4.1 Dynamic extent pool merge

Dynamic extent pool merge is a capability that is provided by the Easy Tier manual mode facility. It allows one extent pool to be merged into another extent pool if they meet these criteria:

•Have extents of the same size

•Have the same storage type (FB or CKD)

•Have the same DS8880 internal server affinity

The logical volumes in both extent pools remain accessible to the host systems. Dynamic extent pool merge can be used for the following reasons:

•Consolidation of two smaller extent pools with the equivalent storage type (FB or CKD) and extent size into a larger extent pool. Creating a larger extent pool allows logical volumes to be distributed over a greater number of ranks, which improves overall performance in the presence of skewed workloads. Newly created volumes in the merged extent pool allocate capacity as specified by the selected extent allocation algorithm. Logical volumes that existed in either the source or the target extent pool can be redistributed over the set of ranks in the merged extent pool by using the Migrate Volume function.

•Consolidating extent pools with different storage tiers to create a merged extent pool with a mix of storage drive technologies. This type of an extent pool is called a hybrid pool and is a prerequisite for using the Easy Tier automatic mode feature.

The Easy Tier manual mode volume migration is shown in Figure 4-7.

Figure 4-7 Easy Tier migration types

|

Important: Volume migration (or Dynamic Volume Relocation) within the same extent pool is not supported in hybrid (or multi-tiered) pools. Easy Tier automatic mode rebalances the volumes’ extents within the hybrid extent pool automatically based on activity. However, you can also use the Easy Tier application to manually place entire volumes in designated tiers. For more information, see DS8870 Easy Tier Application, REDP-5014.

|

Dynamic extent pool merge is allowed only among extent pools with the same internal server affinity or rank group. Additionally, the dynamic extent pool merge is not allowed in the following circumstances:

•If source and target pools feature different storage types (FB and CKD)

•If source and target pools feature different extent sizes

•If you selected an extent pool that contains volumes that are being migrated

•If the combined extent pools include 2 PB or more of ESE logical capacity (virtual capacity)
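The merge criteria and restrictions can be expressed as one pre-check. The following sketch uses hypothetical names and assumes that ESE virtual capacity is tracked in PB; whether the 2 PB limit is decimal or binary is not stated here, so the value is treated as an opaque number.

```python
from dataclasses import dataclass

ESE_LIMIT_PB = 2.0   # merge is refused at 2 PB or more of combined ESE virtual capacity

@dataclass
class Pool:
    storage_type: str          # "FB" or "CKD"
    extent_size: str           # "small" or "large"
    server: int                # internal server affinity (rank group): 0 or 1
    ese_virtual_pb: float = 0.0
    migrating: bool = False    # True while volumes in the pool are being migrated

def merge_blockers(source, target):
    """Return the reasons why two pools cannot be merged (empty list if allowed)."""
    reasons = []
    if source.server != target.server:
        reasons.append("different internal server affinity")
    if source.storage_type != target.storage_type:
        reasons.append("different storage types")
    if source.extent_size != target.extent_size:
        reasons.append("different extent sizes")
    if source.migrating or target.migrating:
        reasons.append("volumes are being migrated")
    if source.ese_virtual_pb + target.ese_virtual_pb >= ESE_LIMIT_PB:
        reasons.append("combined ESE virtual capacity is 2 PB or more")
    return reasons

ok = merge_blockers(Pool("FB", "large", 0, 0.5), Pool("FB", "large", 0, 0.25))
blocked = merge_blockers(Pool("FB", "large", 0, 1.0), Pool("CKD", "large", 1, 1.0))
```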

For more information about Easy Tier, see IBM DS8000 EasyTier, REDP-4667.

4.4.2 Logical volumes

A logical volume consists of a set of extents from one extent pool. The DS8880 supports up to 65,280 logical volumes (64 K CKD, or 64 K FB volumes, or a mixture of both, up to a maximum of 64 K total volumes). The abbreviation 64 K is used in this section, even though it is 65,536 minus 256, which is not quite 64 K in binary.

Fixed-block LUNs

A logical volume that is composed of fixed-block extents is called a LUN. A fixed-block LUN is composed of one or more 1 GiB (2^30 bytes) large extents or one or more 16 MiB small extents from one FB extent pool. A LUN cannot span multiple extent pools, but a LUN can have extents from multiple ranks within the same extent pool. You can construct LUNs up to a size of 16 TiB (16 x 2^40 bytes, or 2^44 bytes) when using large extents.

|

Important: DS8880 Copy Services do not support FB logical volumes larger than 2 TiB (2 x 2^40 bytes). Do not create a LUN that is larger than 2 TiB if you want to use Copy Services for the LUN, unless the LUN is integrated as Managed Disks in an IBM SAN Volume Controller. In that case, use SAN Volume Controller Copy Services instead.

|

LUNs can be allocated in binary GiB (2^30 bytes), decimal GB (10^9 bytes), or 512-byte or 520-byte blocks. However, the physical capacity that is allocated is a multiple of 1 GiB for large extents, or a multiple of 16 MiB for small extents. Therefore, it is a good idea to use LUN sizes that are a multiple of 1 GiB or a multiple of 16 MiB. If you define a LUN with a size that is not a multiple of 1 GiB (for example, 25.5 GiB) in a pool with large extents, the LUN size is 25.5 GiB. However, 26 GiB are physically allocated, of which 0.5 GiB is unusable. When you want to specify a LUN size that is not a multiple of 1 GiB, specify the number of blocks. A 16 MiB extent has 32768 blocks.
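The 25.5 GiB example can be checked with a few lines of arithmetic. The sketch below assumes 512-byte blocks and uses an illustrative function name; it reports how many extents a LUN of a given block count claims and how many blocks of the last extent stay unused.

```python
BLOCK_BYTES = 512
GIB = 2**30
MIB = 2**20

def fb_allocation(blocks, extent_bytes):
    """Return (extents claimed, unused blocks in the last extent)."""
    extent_blocks = extent_bytes // BLOCK_BYTES
    extents = -(-blocks // extent_blocks)   # ceiling division
    return extents, extents * extent_blocks - blocks

blocks_25_5_gib = int(25.5 * GIB) // BLOCK_BYTES            # 53,477,376 blocks
large = fb_allocation(blocks_25_5_gib, 1 * GIB)             # 26 extents, 0.5 GiB unused
small = fb_allocation(blocks_25_5_gib, 16 * MIB)            # 1632 extents, nothing unused
unused_gib_large = large[1] * BLOCK_BYTES / GIB
```

With small extents, the same LUN fits exactly because 25.5 GiB is a multiple of 16 MiB.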

The allocation process for FB volumes is illustrated in Figure 4-8.

Figure 4-8 Creation of a fixed-block LUN

With small extents, waste of storage is not an issue.

A fixed-block LUN must be managed by an LSS. One LSS can manage up to 256 LUNs. The LSSs are created and managed by the DS8880, as required. A total of 255 LSSs can be created in the DS8880.
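The volume limit follows directly from these two numbers. The sketch below also splits a DS CLI volume ID into its parts; it assumes the usual DS8000 convention that a volume ID is four hexadecimal digits, where the first two digits name the LSS and the last two digits name the volume within that LSS. The function name is illustrative.

```python
ADDRESSES_PER_LSS = 256
MAX_LSS = 255
MAX_VOLUMES = MAX_LSS * ADDRESSES_PER_LSS   # 65,280, which is 65,536 minus 256

def split_volume_id(volume_id):
    """Split a 4-hex-digit volume ID into (LSS number, volume number in the LSS)."""
    if len(volume_id) != 4:
        raise ValueError("expected four hexadecimal digits")
    return int(volume_id[:2], 16), int(volume_id[2:], 16)

lss, vol = split_volume_id("5413")   # LSS 0x54, volume 0x13
```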

IBM i logical unit numbers

IBM i LUNs are also composed of fixed-block 1 GiB extents. However, special aspects need to be considered with IBM System i LUNs. LUNs that are created on a DS8880 are always RAID-protected. LUNs are based on RAID 5, RAID 6, or RAID 10 arrays. However, you might want to deceive IBM i and tell it that the LUN is not RAID-protected. This deception causes the IBM i to conduct its own mirroring. IBM i LUNs can have the unprotected attribute, in which case the DS8880 reports that the LUN is not RAID-protected. This selection of the protected or unprotected attribute does not affect the RAID protection that is used by the DS8880 on the open volume, however.

IBM i LUNs expose a 520-byte block to the host. The operating system uses eight of these bytes, so the usable space is still 512 bytes like other SCSI LUNs. The capacities that are quoted for the IBM i LUNs are in terms of the 512-byte block capacity, and they are expressed in GB (10^9 bytes). Convert these capacities to GiB (2^30 bytes) when you consider the effective usage of 1 GiB (2^30 bytes) extents.
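The conversion can be sketched as follows. Given the number of logical block addresses of an IBM i LUN, the function (an illustrative name) derives the number of 1 GiB extents, the unusable space in the last extent, and the usable percentage. These are the quantities that Table 4-1 lists for the fixed volume sizes.

```python
GIB = 2**30
USABLE_BYTES_PER_BLOCK = 512   # 520-byte block minus the 8 bytes that IBM i uses

def ibm_i_usage(lbas):
    """Return (extents, unusable GiB, usable percent) for a LUN on 1 GiB extents."""
    usable_gib = lbas * USABLE_BYTES_PER_BLOCK / GIB
    extents = -(-lbas * USABLE_BYTES_PER_BLOCK // GIB)   # ceiling division
    return extents, extents - usable_gib, 100 * usable_gib / extents

a01 = ibm_i_usage(16_777_216)   # exactly 8 GiB, nothing wasted
a02 = ibm_i_usage(34_275_328)   # 16.34375 GiB in 17 extents
```

For the second volume, about 0.66 GiB of the seventeenth extent cannot be used, which gives a usable share of about 96.14%.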

|

Important: The DS8880 supports IBM i variable volume (LUN) sizes, in addition to fixed volume sizes.

|

IBM i volume enhancement adds flexibility for volume sizes and can optimize the DS8880 capacity usage for IBM i environments. For instance, Table 4-1 shows the fixed volume sizes that were supported for the DS8000, and the amount of space that was wasted because the fixed volume sizes did not match an exact number of GiB extents.

Table 4-1 IBM i fixed volume sizes

| Model type (unprotected) | Model type (protected) | IBM i device size (GB) | Number of logical block addresses (LBAs) | Extents | Unusable space (GiB) (1) | Usable space (%) |
|---|---|---|---|---|---|---|
| 2107-A81 | 2107-A01 | 8.5 | 16,777,216 | 8 | 0.00 | 100.00 |
| 2107-A82 | 2107-A02 | 17.5 | 34,275,328 | 17 | 0.66 | 96.14 |
| 2107-A85 | 2107-A05 | 35.1 | 68,681,728 | 33 | 0.25 | 99.24 |
| 2107-A84 | 2107-A04 | 70.5 | 137,822,208 | 66 | 0.28 | 99.57 |
| 2107-A86 | 2107-A06 | 141.1 | 275,644,416 | 132 | 0.56 | 99.57 |
| 2107-A87 | 2107-A07 | 282.2 | 551,288,832 | 263 | 0.13 | 99.95 |

(1) GiB represents “binary gigabytes” (2^30 bytes), and GB represents “decimal gigabytes” (10^9 bytes).

The DS8880 supports IBM i variable volume data types A50, an unprotected variable size volume, and A99, a protected variable size volume. See Table 4-2.

Table 4-2 System i variable volume sizes

|

Model type

|

IBM i device size (GB)

|

Number of logical block addresses (LBAs)

|

Extents

|

Unusable space (GiB1)

|

Usable space%

|

|

|

Unprotected

|

Protected

|

|||||

|

2107-050

|

2107-099

|

Variable

|

0.00

|

Variable

|

||

Example 4-1 demonstrates the creation of both a protected and an unprotected IBM i variable size volume by using the DS CLI.

Example 4-1 Creating the System i variable size for unprotected and protected volumes

dscli> mkfbvol -os400 050 -extpool P4 -name itso_iVarUnProt1 -cap 10 5413

CMUC00025I mkfbvol: FB volume 5413 successfully created.

dscli> mkfbvol -os400 099 -extpool P4 -name itso_iVarProt1 -cap 10 5417

CMUC00025I mkfbvol: FB volume 5417 successfully created.

|

Important: The creation of IBM i variable size volumes is only supported by using DS CLI commands. Currently, no support exists for this task in the GUI.

|

When you plan for new capacity for an existing IBM i system, the larger the LUN, the more data it might have, which causes more input/output operations per second (IOPS) to be driven to it. Therefore, mixing different drive sizes within the same system might lead to hot spots.

|

Note: IBM i fixed volume sizes continue to be supported in current and future DS8880 code levels. Consider the best option for your environment between fixed and variable size volumes.

|

T10 data integrity field support

The ANSI T10 standard provides a way to check the integrity of data that is read and written from the application or the host bus adapter (HBA) to the drive and back through the SAN fabric. This check is implemented through the data integrity field (DIF) that is defined in the T10 standard. This support adds protection information that consists of cyclic redundancy check (CRC), LBA, and host application tags to each sector of FB data on a logical volume.

A T10 DIF-capable LUN uses 520-byte sectors instead of the common 512-byte sector size. Eight bytes are added to the standard 512-byte data field. The 8-byte DIF consists of 2 bytes of CRC data, a 4-byte Reference Tag (to protect against misdirected writes), and a 2-byte Application Tag for applications that might use it.

On a write, the DIF is generated by the HBA, which is based on the block data and LBA. The DIF field is added to the end of the data block, and the data is sent through the fabric to the storage target. The storage system validates the CRC and Reference Tag and, if correct, stores the data block and DIF on the physical media. If the CRC does not match the data, the data was corrupted during the write. The write operation is returned to the host with a write error code. The host records the error and retransmits the data to the target. In this way, data corruption is detected immediately on a write, and the corrupt data is never committed to the physical media.
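The DIF layout and the write-side check can be sketched in a few lines. This is an illustration, not DS8880 or HBA code. It assumes the CRC-16 T10 DIF parameters (polynomial 0x8BB7, initial value 0, no bit reflection), the field order that the T10 standard uses on the wire (guard CRC, Application Tag, Reference Tag, all big-endian), and a Reference Tag that holds the low 32 bits of the LBA. The function names are hypothetical.

```python
import struct

def crc16_t10dif(data):
    """Bitwise CRC-16 with polynomial 0x8BB7, initial value 0, MSB first."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def make_dif(block, lba, app_tag=0):
    """Build the 8-byte DIF: 2-byte CRC, 2-byte Application Tag, 4-byte Reference Tag."""
    return struct.pack(">HHI", crc16_t10dif(block), app_tag, lba & 0xFFFFFFFF)

def verify(sector, expected_lba):
    """Check a 520-byte sector the way a storage target would on a write."""
    block, dif = sector[:512], sector[512:]
    guard, _app_tag, ref_tag = struct.unpack(">HHI", dif)
    return guard == crc16_t10dif(block) and ref_tag == (expected_lba & 0xFFFFFFFF)

block = bytes(range(256)) * 2                 # 512 bytes of sample data
sector = block + make_dif(block, lba=1234)    # 520-byte sector as sent by the host
```

A sector that fails `verify` corresponds to the case in which the write is rejected with an error and the host retransmits the data.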

On a read, the DIF is returned with the data block to the host, which validates the CRC and Reference Tags. This validation adds a small amount of latency to each I/O, which might affect overall response time for small-block transactions (I/Os of less than 4 KB).

The DS8880 supports the T10 DIF standard for FB volumes that are accessed by the Fibre Channel Protocol (FCP) channels that are used by Linux on z Systems. You can define LUNs with an option to instruct the DS8880 to use the CRC-16 T10 DIF algorithm to store the data.

You can also create T10 DIF-capable LUNs for operating systems that do not yet support this feature (except for IBM System i). However, active protection is available only for Linux on z Systems and AIX on Power Systems.

When you create an FB LUN with the mkfbvol DS CLI command, add the option -t10dif. If you query a LUN with the showfbvol command, the data type is shown to be FB 512T instead of the standard FB 512 type.

|

Important: Because the DS8880 internally always uses 520-byte sectors (to be able to support IBM i volumes), no capacity difference needs to be considered between standard and T10 DIF-capable volumes.

Target LUN: When FlashCopy for a T10 DIF LUN is used, the target LUN must also be a T10 DIF-type LUN. This restriction does not apply to mirroring.

|

Count key data volumes

A z Systems CKD volume is composed of one or more extents from one CKD extent pool. Large CKD extents are the size of a 3390 Model 1, which features 1113 cylinders. Small CKD extents are 21 cylinders. When you define a z Systems CKD volume, specify the size of the volume as a multiple of 3390 Model 1 volumes or as the number of cylinders that you want for the volume.

Before a CKD volume can be created, an LCU must be defined that provides up to 256 possible addresses that can be used for CKD volumes. Up to 255 LCUs can be defined. For more information about LCUs, which also are called LSSs, see 4.4.5, “Logical subsystems” on page 119.

On a DS8880 and previous models that start with the DS8000 LIC Release 6.1, you can define CKD volumes with up to 1,182,006 cylinders, or about 1 TB. This volume capacity is called an EAV, and it is supported by the 3390 Model A.

A CKD volume cannot span multiple extent pools, but a volume can have extents from different ranks in the same extent pool. You also can stripe a volume across the ranks. For more information, see “Storage pool striping: Extent rotation” on page 109.

Figure 4-9 shows an example of how a logical volume is allocated with a CKD volume.

Figure 4-9 Allocation of a count-key-data logical volume

CKD alias volumes

Another type of CKD volume is the PAV alias volume. PAVs are used by z/OS to send parallel I/Os to the same base CKD volume. Alias volumes are defined within the same LCU as their corresponding base volumes. Although they have no size, each alias volume needs an address, which is linked to a base volume. The total of base and alias addresses cannot exceed the maximum of 256 for an LCU.

SuperPAV

With HyperPAV, the alias can be assigned to access a base device within the same LCU on an I/O basis. However, the system can exhaust the alias resources that are needed within an LCU and cause an increase in IOS queue time (the time that an I/O waits in software for a device to which it can be issued). In this case, you need more aliases to access hot volumes.

In DS8000 code releases before R8.1, to start an I/O to a base volume, z/OS could select any alias address from the same LCU as the base address to perform the I/O. Starting with DS8880 R8.1 code, the concept of SuperPAV, or cross-LCU HyperPAV alias devices, is introduced. With SuperPAV support in the DS8880 and in z/OS (check for SuperPAV APARs; support is introduced with APARs OA49090 and OA49110), the operating system can use alias addresses from other LCUs to perform an I/O for a base address.

Figure 4-10 shows how SuperPAV works.

Figure 4-10 SuperPAV

The restriction is that the LCU of the alias address must belong to the same DS8880 server as the LCU of the base address. In other words, if the base address is from an even LCU, the alias address that z/OS selects must also be from an even LCU, and likewise for odd LCUs. In addition, the LCU of the base volume and the LCU of the alias volume must be in the same path group. z/OS prefers alias addresses from the same LCU as the base address, but if no alias address is free, z/OS looks for free alias addresses in LCUs of the same Alias Management Group. An Alias Management Group is the set of all LCUs that have affinity to the same DS8880 internal server and that have the same paths to the DS8880. SMF and RMF (APAR OA49415) will be enhanced to provide reports at the Alias Management Group level.
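The selection order can be sketched as a small function. It models only the rules that are stated above and is not the z/OS algorithm: the data structures, the function name, and the choice of the lowest-numbered eligible LCU are assumptions made for illustration.

```python
def pick_alias(base_lcu, free_aliases, paths):
    """Pick a free alias for an I/O to a base volume in base_lcu.

    free_aliases maps an LCU number to its list of free alias addresses.
    paths maps an LCU number to an identifier of its set of paths.
    Returns (lcu, alias), or None if no eligible alias is free.
    """
    own = free_aliases.get(base_lcu)
    if own:                                   # prefer an alias from the same LCU
        return base_lcu, own.pop(0)
    for lcu in sorted(free_aliases):          # otherwise search the Alias Management Group
        same_server = lcu % 2 == base_lcu % 2            # even or odd LCU must match
        same_paths = paths.get(lcu) == paths.get(base_lcu)
        if lcu != base_lcu and same_server and same_paths and free_aliases[lcu]:
            return lcu, free_aliases[lcu].pop(0)
    return None

free = {0x56: [], 0x57: [0x41], 0x58: [0x40]}
paths = {0x56: "PG1", 0x57: "PG1", 0x58: "PG1"}
first = pick_alias(0x56, free, paths)    # borrows from even LCU 0x58
second = pick_alias(0x56, free, paths)   # only odd LCU 0x57 has a free alias
```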

It is still a requirement that initially each alias address is assigned to a base address. Therefore, it is not possible to define an LCU with only alias addresses.

As with PAV and HyperPAV, SuperPAV must be enabled. SuperPAV is enabled by the HYPERPAV=XPAV statement in the IECIOSxx parmlib member or by the SETIOS HYPERPAV=XPAV command. The D M=DEV(address) and the D M=CU(address) display commands show whether XPAV is enabled. With the D M=CU(address) command, you can check whether aliases from other LCUs are currently being used (see Example 4-2).

Example 4-2 Display information about Super PAV (XPAV)

D M=CU(4E02)

IEE174I 11.26.55 DISPLAY M 511

CONTROL UNIT 4E02

CHP 65 5D 34 5E

ENTRY LINK ADDRESS 98 .. 434B ..

DEST LINK ADDRESS FA 0D 200F 0D

CHP PHYSICALLY ONLINE Y Y Y Y

PATH VALIDATED Y Y Y Y

MANAGED N N N N

ZHPF - CHPID Y Y Y Y

ZHPF - CU INTERFACE Y Y Y Y

MAXIMUM MANAGED CHPID(S) ALLOWED = 0

DESTINATION CU LOGICAL ADDRESS = 56

CU ND = 002107.981.IBM.75.0000000FXF41.0330

CU NED = 002107.981.IBM.75.0000000FXF41.5600

TOKEN NED = 002107.900.IBM.75.0000000FXF41.5600

FUNCTIONS ENABLED = ZHPF, XPAV

XPAV CU PEERS = 4802, 4A02, 4C02, 4E02

DEFINED DEVICES

04E00-04E07

DEFINED PAV ALIASES

14E40-14E47

With cross LCU HyperPAV / SuperPAV, the number of alias addresses can further be reduced while the pool of available alias addresses to handle I/O bursts to volumes is increased.

4.4.3 Allocation, deletion, and modification of LUNs and CKD volumes

Extents of ranks that are assigned to an extent pool are independently available for allocation to logical volumes. The extents for a LUN or volume are logically ordered, but they do not have to come from one rank. The extents do not have to be contiguous on a rank.

This construction method of using fixed extents to form a logical volume in the DS8880 allows flexibility in the management of the logical volumes. You can delete LUNs or CKD volumes, resize LUNs or volumes, and reuse the extents of those LUNs to create other LUNs or volumes, including ones of different sizes. One logical volume can be removed without affecting the other logical volumes that are defined on the same extent pool.

The extents are cleaned after you delete a LUN or CKD volume. The reformatting of the extents is a background process, and it can take time until these extents are available for reallocation.

Two extent allocation methods (EAMs) are available for the DS8880: Storage pool striping (rotate extents) and rotate volumes.

Storage pool striping: Extent rotation

The storage allocation method is chosen when a LUN or volume is created. The extents of a volume can be striped across several ranks. An extent pool with more than one rank is needed to use this storage allocation method.

The DS8880 maintains a sequence of ranks. The first rank in the list is randomly picked at each power-on of the storage system. The DS8880 tracks the rank in which the last allocation started. The allocation of the first extent for the next volume starts from the next rank in that sequence.

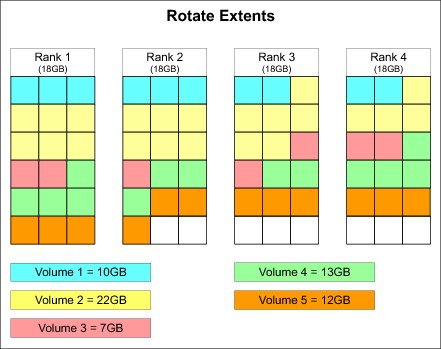

The next extent for that volume is taken from the next rank in sequence, and so on. Therefore, the system rotates the extents across the ranks, as shown in Figure 4-11.

|

Note: Although the preferred storage allocation method was storage pool striping, it is now a better choice to just let Easy Tier manage the storage pool extents. This chapter describes rotate extents for the sake of completeness, but it is now mostly irrelevant.

|

Figure 4-11 Rotate extents
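The rotation that is described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the DS8880 microcode: the function name `rotate_extents`, the rank names, and the free-extent counts are hypothetical, and the sketch assumes that full ranks are skipped.

```python
from typing import Dict, List


def rotate_extents(free: Dict[str, int], ranks: List[str],
                   start: int, n_extents: int) -> List[str]:
    """Place n_extents one by one, moving to the next rank after each extent.

    free maps a rank name to its number of free extents and is updated in place.
    start is the index of the rank where this allocation begins.
    Returns the rank that holds each extent of the volume, in logical order.
    """
    if n_extents > sum(free[r] for r in ranks):
        raise ValueError("not enough free extents in the pool")
    placement: List[str] = []
    i = start
    while len(placement) < n_extents:
        rank = ranks[i % len(ranks)]
        if free[rank] > 0:  # a full rank is skipped (assumption of this sketch)
            free[rank] -= 1
            placement.append(rank)
        i += 1
    return placement


ranks = ["R1", "R2", "R3"]
free = {"R1": 4, "R2": 4, "R3": 4}
vol_a = rotate_extents(free, ranks, start=0, n_extents=4)
# The next volume starts from the next rank in the sequence.
vol_b = rotate_extents(free, ranks, start=1, n_extents=3)
print(vol_a, vol_b)
```

In this hypothetical pool, the first volume wraps around to R1 for its fourth extent, and the second volume begins on R2.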

Rotate volumes allocation method

Extents can be allocated sequentially. In this case, all extents are taken from the same rank until enough extents are allocated for the requested volume size or the rank is full. If the rank is full, the allocation continues with the next rank in the extent pool.

If more than one volume is created in one operation, the allocation for each volume starts in another rank. When several volumes are allocated, the allocation rotates through the ranks, as shown in Figure 4-12.

Figure 4-12 Rotate volumes

You might want to consider this allocation method when you prefer to manage performance manually. The workload of one volume is going to one rank. This configuration makes the identification of performance bottlenecks easier. However, by putting all the volumes’ data onto one rank, you might introduce a bottleneck, depending on your actual workload.
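For comparison with rotate extents, the following minimal Python sketch shows the rotate volumes behavior: a volume stays on one rank and spills to the next rank only when the current rank is full. The function name, rank names, and extent counts are hypothetical and serve only to illustrate the description.

```python
from typing import Dict, List


def rotate_volumes(free: Dict[str, int], ranks: List[str],
                   start: int, n_extents: int) -> List[str]:
    """Take extents from one rank until the volume is complete or the rank is full.

    free maps a rank name to its number of free extents and is updated in place.
    Returns the rank that holds each extent of the volume, in logical order.
    """
    if n_extents > sum(free[r] for r in ranks):
        raise ValueError("not enough free extents in the pool")
    placement: List[str] = []
    i = start
    while len(placement) < n_extents:
        rank = ranks[i % len(ranks)]
        take = min(free[rank], n_extents - len(placement))
        free[rank] -= take
        placement.extend([rank] * take)
        i += 1  # continue with the next rank only if more extents are needed
    return placement


ranks = ["R1", "R2"]
free = {"R1": 3, "R2": 3}
# Each volume that is created in the same operation starts on another rank.
print(rotate_volumes(free, ranks, start=0, n_extents=2))
print(rotate_volumes(free, ranks, start=1, n_extents=4))
```

The second volume fills R2 and then takes its last extent from R1, which mirrors the "rank is full" case in the text.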

|

Important: The rotate extents and rotate volumes EAMs both distribute volumes over ranks. Rotate extents performs this distribution at the granularity of a single extent (1 GiB or 16 MiB), and is the better method to minimize hot spots and improve overall performance.

However, as previously stated, Easy Tier is really the preferred choice for managing the storage pool extents.

|

In a mixed-drive-characteristic (or hybrid) extent pool that contains different classes (or tiers) of ranks, the storage pool striping EAM is used independently of the requested EAM, and EAM is set to managed.

For extent pools that contain flash ranks, extent allocation is performed initially on enterprise or nearline ranks while space remains available. Easy Tier algorithms migrate the extents as needed to flash ranks. For extent pools that contain a mix of enterprise and nearline ranks, initial extent allocation is performed on enterprise ranks first.

This was the allocation rule for DS8000 code up to R8.0. With the DS8880 R8.1 code, there is an option for the whole Storage Facility to change the allocation preference to start the allocation at the highest flash/SSD tier. The chsi command can be used to change the ETAOMode parameter to High Utilization or to High Performance.

When you create striped volumes and non-striped volumes in an extent pool, a rank might be filled before the others. A full rank is skipped when you create new striped volumes.

By using striped volumes, you distribute the I/O load of a LUN or CKD volume to more than one set of eight drives, which can enhance performance for a logical volume. In particular, operating systems that do not include a volume manager with striping capability benefit most from this allocation method.

Small extents can increase the parallelism of sequential writes. With large extents, the system stays within one rank until 1 GiB has been written; with small extents, it moves to the next rank after 16 MiB. This configuration uses more disk drives when performing sequential writes.

|

Important: If you must add capacity to an extent pool because it is nearly full, it is better to add several ranks at the same time, not just one rank. This method allows new volumes to be striped across the newly added ranks.

With the Easy Tier manual mode facility, if the extent pool is a non-hybrid pool, the user can request an extent pool merge followed by a volume relocation with striping to run the same function. For a hybrid-managed extent pool, extents are automatically relocated over time, according to performance needs. For more information, see IBM DS8000 EasyTier, REDP-4667.

Rotate volume EAM: The rotate volume EAM is not allowed if one extent pool is composed of flash drives and configured for virtual capacity.

|

Dynamic volume expansion

The DS8880 supports expanding the size of a LUN or CKD volume without data loss, by adding extents to the volume. The operating system must support resizing.

A logical volume includes the attribute of being striped across the ranks or not. If the volume was created as striped across the ranks of the extent pool, the extents that are used to increase the size of the volume are striped. If a volume was created without striping, the system tries to allocate the additional extents within the same rank that the volume was created from originally.

|

Important: Before you can expand a volume, you must delete any Copy Services relationship that involves that volume.

|

Because most operating systems have no means of moving data from the end of the physical drive off to unused space at the beginning of the drive, and because of the risk of data corruption, IBM does not support shrinking a volume. The DS8880 DS CLI and DS GUI interfaces will not allow reducing the size of a volume.

Dynamic volume migration

Dynamic volume migration or dynamic volume relocation (DVR) is a capability that is provided as part of the Easy Tier manual mode facility.

DVR allows data that is stored on a logical volume to be migrated from its currently allocated storage to newly allocated storage while the logical volume remains accessible to attached hosts. The user can request DVR by using the Migrate Volume function that is available through the DS Storage Manager GUI (DS GUI) or DS CLI. DVR allows the user to specify a target extent pool and an EAM. The target extent pool can be a different extent pool from the one where the volume is located. It can also be the same extent pool, but only if it is a non-hybrid or single-tier pool. However, the target extent pool must be managed by the same DS8880 internal server.

|

Important: DVR in the same extent pool is not allowed in the case of a managed pool. In managed extent pools, Easy Tier automatic mode automatically relocates extents within the ranks to allow performance rebalancing. The Easy Tier application can also be used to manually place volumes in designated tiers within a managed pool. For more information, see DS8870 Easy Tier Application, REDP-5014.

Restriction: In the current implementation (DS8880 R8.1 code), you can move volumes only between pools of the same extent size.

|

Dynamic volume migration provides the following capabilities:

•The ability to change the extent pool in which a logical volume is provisioned. This ability provides a mechanism to change the underlying storage characteristics of the logical volume to include the drive class (Flash, Enterprise, or Nearline), drive RPM, and RAID array type. Volume migration can also be used to migrate a logical volume into or out of an extent pool.

•The ability to specify the extent allocation method for a volume migration that allows the extent allocation method to be changed between the available extent allocation methods any time after volume creation. Volume migration that specifies the rotate extents EAM can also be used (in non-hybrid extent pools) to redistribute a logical volume’s extent allocations across the existing ranks in the extent pool if more ranks are added to an extent pool.

Each logical volume has a configuration state. To begin a volume migration, the logical volume initially must be in the normal configuration state.

More functions are associated with volume migration that allow the user to pause, resume, or cancel a volume migration. Any or all logical volumes can be requested to be migrated at any time if available capacity is sufficient to support the reallocation of the migrating logical volumes in their specified target extent pool. For more information, see IBM DS8000 EasyTier, REDP-4667.

4.4.4 Volume allocation and metadata

|

Important: With the DS8880, a new internal data layout is introduced for all volumes.

|

In earlier DS8000 systems, metadata allocation differed between fully provisioned or thin provisioned (extent space efficient (ESE)) volumes:

•For fully provisioned volumes, metadata was contained in system-reserved areas of the physical arrays, outside of the client data logical extent structure.

Figure 4-13 illustrates the extent layout in the DS8870 and earlier DS8000 models, for standard, fully provisioned volumes. Note the size of the reserved area (not to scale), which includes volume metadata. The rest of the space is composed of 1 GB extents.

Figure 4-13 DS8000 standard volumes

•For ESE volumes, several logical extents, which are designated as auxiliary rank extents, are allocated to contain volume metadata.

Figure 4-14 shows the extent layout in the DS8870 and earlier, for ESE volumes. In addition to the reserved area, auxiliary 1 GB extents are also allocated to store metadata for the ESE volumes.

Figure 4-14 DS8870 extent space-efficient volumes

In the DS8880, the logical extent structure changed to move to a single volume structure for all volumes. All volumes now use an improved metadata extent structure, similar to what was previously used for ESE volumes. This unified extent structure greatly simplifies the internal management of logical volumes and their metadata. No area is fixed and reserved for volume metadata, and this capacity is added to the space that is available for use.

The metadata is allocated in the Storage Pool when volumes are created, and the space that is used by metadata is referred to as the Pool Overhead. Pool Overhead means that the amount of space that can be allocated by volumes is variable and depends on both the number of volumes and the logical capacity of these volumes.

|

Tips: The following characteristics apply to the DS8880:

•Metadata is variable in size. Metadata is used only as logical volumes are defined.

•The total size of the metadata depends on the number and size of the logical volumes that are being defined.

•As volumes are defined, the metadata is immediately allocated and reduces the size of the available user extents in the storage pool.

•As you define new logical volumes, you must allow for the fact that this metadata space continues to use user extent space.

|

For storage pools with large extents, metadata is also allocated as large extents (1 GiB for FB pools or 1113 cylinders for CKD pools). Large extents that are allocated for metadata are subdivided into subextents, which are also referred to as metadata extents, of 16 MiB for FB volumes or 21 cylinders for CKD volumes. For extent pools with small extents, metadata extents are also small extents. Sixty-four metadata subextents are in each large metadata extent for FB, and 53 metadata subextents are in each large metadata extent for CKD.

For each FB volume that is allocated, an initial 16 MiB metadata subextent or metadata small extent is allocated, and an additional 16 MiB metadata subextent or metadata small extent is allocated for every 10 GiB of allocated capacity or portion of allocated capacity.

For each CKD volume that is allocated, an initial 21 cylinders metadata subextent or metadata small extent is allocated, and an additional 21 cylinders metadata subextent or metadata small extent is allocated for every 11130 cylinders (or 10 3390 Model 1) of allocated capacity or portion of allocated capacity.

For example, a 3390-3 (that is, 3339 cylinders or about 3 GB) or 3390-9 (that is, 10,017 cylinders or 10 GB) volume takes two metadata extents (one metadata extent for the volume and another metadata extent for any portion of the first 10 GB). A 128 GB FB volume takes 14 metadata extents (one metadata extent for the volume and another 13 metadata extents to account for the 128 GB).
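The per-volume rule can be written as a short calculation. The following Python sketch is an illustration only: the function names are hypothetical, and it assumes that FB capacities are given in GiB and that any started portion of 10 GiB (or 11130 cylinders) counts as a full unit, as the text describes.

```python
import math

FB_GIB_PER_METADATA_EXTENT = 10      # one more metadata extent per 10 GiB
CKD_CYL_PER_METADATA_EXTENT = 11130  # one more metadata extent per 11130 cylinders


def fb_metadata_extents(capacity_gib: float) -> int:
    """One initial metadata extent plus one per started 10 GiB of capacity."""
    return 1 + math.ceil(capacity_gib / FB_GIB_PER_METADATA_EXTENT)


def ckd_metadata_extents(cylinders: int) -> int:
    """One initial metadata extent plus one per started 11130 cylinders."""
    return 1 + math.ceil(cylinders / CKD_CYL_PER_METADATA_EXTENT)


print(ckd_metadata_extents(3339))   # 3390-3
print(ckd_metadata_extents(10017))  # 3390-9
print(fb_metadata_extents(128))     # 128 GiB FB volume
```

For the volumes that are named in the text, the sketch reproduces the stated counts: two metadata extents for the 3390-3 and the 3390-9, and 14 for the 128 GB FB volume.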

Figure 4-15 shows an illustration of 3 GB and 12 GB FB volumes for a storage pool with large extents. In an extent pool with small extents, there is no concept of sub-extents. You just have user extents, unused extents, and metadata extents.

Figure 4-15 Metadata allocation

Metadata extents with free space can be used for metadata by any volume in the extent pool.

|

Important: In a multitier extent pool, volume metadata is created in the highest available tier. It is recommended to have some flash/SSD storage for the volume metadata.

Typically, ten percent of flash storage in an extent pool results in all volume metadata being stored on flash, assuming no overprovisioning. With overprovisioning, a corresponding larger percentage of flash would be required.

|

With this concept of metadata extents and user extents within an extent pool, some planning and calculations are required. This is particularly true in a mainframe environment where often thousands of volumes are defined and often the whole capacity is allocated during the initial configuration. You must calculate the capacity that is used by the metadata to get the capacity that can be used for user data. This calculation is important only when fully provisioned volumes are used. Thin-provisioned volumes consume no user data space when they are created; only metadata is allocated, and user extents are consumed when data is actually written.

For extent pools with small extents, the number of available user data extents can be estimated as follows:

(user extents) = (pool extents) - (number of volumes) - (total virtual capacity)/10
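As a hedged illustration of this estimate, the following Python sketch applies the formula to an FB pool with small extents. It assumes that the total virtual capacity is expressed in GiB (one metadata extent per 10 GiB, as described earlier) and rounds the capacity term up; the function name and the sample numbers are hypothetical.

```python
import math


def small_extent_user_extents(pool_extents: int, n_volumes: int,
                              total_virtual_capacity_gib: float) -> int:
    """Estimate user extents: pool extents minus one metadata extent per volume
    minus one metadata extent per started 10 GiB of total virtual capacity."""
    metadata_extents = n_volumes + math.ceil(total_virtual_capacity_gib / 10)
    return pool_extents - metadata_extents


# Hypothetical pool: 64,000 small extents, 100 volumes, 900 GiB in total.
print(small_extent_user_extents(64000, 100, 900))
```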

For extent pools with regular 1 GiB extents, when the details of the volume configuration are not known, you can estimate the number of metadata extents from the number of volumes and the total number of volume extents only. The calculations are performed as shown:

•FB Pool Overhead = (Number of volumes x 2 + total volume extents/10)/64 and rounded up to the nearest integer

•CKD Pool Overhead = (Number of volumes x 2 + total volume extents/10)/53 and rounded up to the nearest integer

This formula overestimates the space that is used by the metadata by a small amount because it assumes wastage on every volume. However, the precise size of each volume does not need to be known.

Two examples are explained:

•A CKD storage pool has 6,190 extents in which you expect to allocate all of the space on 700 volumes. Your Pool Overhead is 39 extents by using this calculation:

(700 x 2 + 6190/10)/53 = 38.09

•An FB storage pool has 6,190 extents in which you expect to use thin provisioning and allocate up to 12,380 extents (2:1 overprovisioning) on 100 volumes. Your Pool Overhead is 23 extents by using this calculation:

(100 x 2 + 12380/10)/64 = 22.47
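Both examples can be checked with a small Python sketch of the Pool Overhead estimate. The function and constant names are hypothetical; the arithmetic follows the two formulas above.

```python
import math

FB_SUBEXTENTS_PER_EXTENT = 64   # 16 MiB subextents in a 1 GiB extent
CKD_SUBEXTENTS_PER_EXTENT = 53  # 21-cylinder subextents in a 1113-cylinder extent


def pool_overhead(n_volumes: int, total_volume_extents: int,
                  subextents_per_extent: int) -> int:
    """Estimated number of large extents that are used for metadata, rounded up."""
    subextents = n_volumes * 2 + total_volume_extents / 10
    return math.ceil(subextents / subextents_per_extent)


print(pool_overhead(700, 6190, CKD_SUBEXTENTS_PER_EXTENT))   # CKD example
print(pool_overhead(100, 12380, FB_SUBEXTENTS_PER_EXTENT))   # FB example
```

The sketch returns 39 extents for the CKD example and 23 extents for the FB example, which matches the rounded-up values in the text.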

Space for Easy Tier

The Easy Tier function of DS8000 monitors access to extents transparently to host applications. Easy Tier moves extents to higher or lower tiers depending on the access frequency or heat of an extent. To move extents, some free space or free extents must be available in an extent pool. You can monitor this manually, but a better option is to let the system reserve some space for Easy Tier extent movements. The chsi command can be used to change the ETSRMode parameter to Enabled. This setting causes the storage system to reserve some capacity for Easy Tier. When this mode is enabled, the capacity that is available for new volume allocations is reduced accordingly.

Extent space efficient volumes

Volumes can be created as thin-provisioned or fully provisioned.

Space for a thin-provisioned volume is allocated when a write occurs. More precisely, it is allocated when a destage from the cache occurs and insufficient free space is left on the currently allocated extent.

Therefore, thin provisioning allows a volume to exist that is larger than the physical space in the extent pool that it belongs to. This approach allows the “host” to work with the volume at its defined capacity, even though insufficient physical space might exist to fill the volume with data.

The assumption is that either the volume will never be filled, or as the DS8880 runs low on physical capacity, more will be added. This approach also assumes that the DS8880 is not at its maximum capacity.

Thin-provisioned volume support is contained in the Base Function license group. This provisioning is an attribute that you specify when creating a volume.

|

Note: The DS8880 does not support track space efficient (TSE) volumes.

|

|

Important: If thin provisioning is used, the metadata is allocated for the entire volume (virtual capacity) when the volume is created, not when extents are used.

|

Extent space efficient capacity controls for thin provisioning

Use of thin provisioning can affect the amount of storage capacity that you choose to order. Use ESE capacity controls to allocate storage correctly.

With the mixture of thin-provisioned (ESE) and fully provisioned (non-ESE) volumes in an extent pool, a method is needed to dedicate part of the extent-pool storage capacity for ESE user data usage, and to limit the ESE user data usage within the extent pool.

Also, you need to detect when the storage space that is available to ESE volumes within the extent pool is running out.

ESE capacity controls provide extent pool attributes to limit the maximum extent pool storage that is available for ESE user data usage. They also guarantee that a proportion of the extent pool storage will be available for ESE user data usage.

The following controls are available to limit the use of extents in an extent pool:

•Reserve capacity in an extent pool by enabling the extent pool limit function with the chextpool -extentlimit enable -limit extent_limit_percentage pool_ID command

•You can reserve space for the sole use of ESE volumes by creating a repository with the mksestg -repcap capacity pool_id command.

Figure 4-16 shows the areas in an extent pool.

Figure 4-16 Spaces in an extent pool

Capacity controls exist for an extent pool and also for a repository, if it is defined. There are system-defined warning thresholds at 15% and 0% free capacity left, and you can set your own user-defined threshold for the whole extent pool or for the ESE repository. Thresholds for an extent pool are set with the DS CLI chextpool -threshold (or mkextpool) command and for a repository with the chsestg -repcapthreshold (or mksestg) command.

The threshold exceeded status refers to the user-defined threshold.

The extent pool or repository status shows one of the three following values:

•0: The percent of available capacity is greater than the extent pool / repository threshold.

•1: The percent of available capacity is greater than zero but less than or equal to the extent pool / repository threshold.

•10: The percent of available capacity is zero.
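The three status values can be expressed as a simple decision, shown here as a Python sketch. The function name and the sample percentages are hypothetical; only the three conditions come from the list above.

```python
def capacity_status(available_pct: float, threshold_pct: float) -> int:
    """Map the available capacity (in percent) to the status value 0, 1, or 10."""
    if available_pct <= 0:
        return 10  # no available capacity is left
    if available_pct <= threshold_pct:
        return 1   # at or below the threshold, but not yet empty
    return 0       # above the threshold


print(capacity_status(40.0, 15.0))
print(capacity_status(15.0, 15.0))
print(capacity_status(0.0, 15.0))
```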

An SNMP trap is associated with the extent pool / repository capacity controls that notifies you when the extent usage in the pool exceeds a user-defined threshold, and when the extent pool is out of extents for user data.

When the size of the extent pool remains fixed or when it is increased, the available physical capacity remains greater than or equal to the allocated physical capacity. However, a reduction in the size of the extent pool can cause the available physical capacity to become less than the allocated physical capacity in certain cases.

For example, if the user requests that one of the ranks in an extent pool is depopulated, the data on that rank is moved to the remaining ranks in the pool. This process causes the rank to become not allocated and removed from the pool. The user is advised to inspect the limits and threshold on the extent pool after any changes to the size of the extent pool to ensure that the specified values are still consistent with the user’s intentions.

4.4.5 Logical subsystems

An LSS is another logical construct. It can also be referred to as an LCU. In reality, it is licensed internal code (LIC) that is used to manage up to 256 logical volumes. The term LSS is used in association with FB volumes. The term LCU is used in association with CKD volumes. A maximum of 255 LSSs can exist in the DS8880. They each have an identifier from 00 - FE. An individual LSS must manage either FB or CKD volumes.

All even-numbered LSSs (X'00', X'02', X'04', up to X'FE') are handled by internal server 0, and all odd-numbered LSSs (X'01', X'03', X'05', up to X'FD') are handled by internal server 1. LSS X'FF' is reserved. This configuration allows both servers to handle host commands to the volumes in the DS8880 if the configuration takes advantage of this capability. If either server is not available, the remaining operational server handles all LSSs. LSSs are also placed in address groups of 16 LSSs, except for the last group that has 15 LSSs. The first address group is 00 - 0F, and so on, until the last group, which is F0 - FE.
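The even/odd server affinity and the grouping into address groups follow directly from the LSS number. The following Python sketch illustrates the rule; the function names are hypothetical.

```python
def lss_server(lss: int) -> int:
    """Return the internal server (0 or 1) that normally handles the given LSS."""
    if not 0x00 <= lss <= 0xFE:  # X'FF' is reserved
        raise ValueError("LSS must be in the range X'00' to X'FE'")
    return lss % 2  # even LSSs: server 0, odd LSSs: server 1


def lss_address_group(lss: int) -> int:
    """Return the address group (0 to 15) of the given LSS: its high-order hex digit."""
    if not 0x00 <= lss <= 0xFE:
        raise ValueError("LSS must be in the range X'00' to X'FE'")
    return lss >> 4


for lss in (0x00, 0x21, 0x56, 0xFE):
    print(f"LSS X'{lss:02X}': server {lss_server(lss)}, "
          f"address group {lss_address_group(lss):X}")
```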

Because the LSSs manage volumes, an individual LSS must manage the same type of volumes. An address group must also manage the same type of volumes. The first volume (either FB or CKD) that is assigned to an LSS in any address group sets that group to manage those types of volumes. For more information, see “Address groups” on page 121.

Volumes are created in extent pools that are associated with either internal server 0 or 1. Extent pools are also formatted to support either FB or CKD volumes. Therefore, volumes in any internal server 0 extent pools can be managed by any even-numbered LSS, if the LSS and extent pool match the volume type. Volumes in any internal server 1 extent pools can be managed by any odd-numbered LSS, if the LSS and extent pool match the volume type.

Volumes also have an identifier that ranges from 00 - FF. The first volume that is assigned to an LSS has an identifier of 00. The second volume is 01, and so on, up to FF, if 256 volumes are assigned to the LSS.

For FB volumes, the LSSs that are used to manage them are not significant if you spread the volumes between odd and even LSSs. When the volume is assigned to a host (in the DS8880 configuration), a LUN is assigned to it that includes the LSS and Volume ID. This LUN is sent to the host when it first communicates with the DS8880, so it can include the LUN in the “frame” that is sent to the DS8880 when it wants to run an I/O operation on the volume. This method is how the DS8880 knows which volume to run the operation on.

Conversely, for CKD volumes, the LCU is significant. The LCU must be defined in a configuration that is called the input/output configuration data set (IOCDS) on the host. The LCU definition includes a control unit address (CUADD). This CUADD must match the LCU ID in the DS8880. The IOCDS also includes a device definition for each volume, which contains a unit address (UA). This UA needs to match the volume ID of the device. The host must include the CUADD and UA in the “frame” that is sent to the DS8880 when it wants to run an I/O operation on the volume. This method is how the DS8880 knows which volume to run the operation on.

For both FB and CKD volumes, when the “frame” that is sent from the host arrives at a host adapter port in the DS8880, the adapter checks the LSS or LCU identifier to know which internal server to pass the request to inside the DS8880. For more information about host access to volumes, see 4.4.6, “Volume access” on page 122.

Fixed-block LSSs are created automatically when the first fixed-block logical volume on the LSS is created. Fixed-block LSSs are deleted automatically when the last fixed-block logical volume on the LSS is deleted. CKD LCUs require user parameters to be specified and must be created before the first CKD logical volume can be created on the LCU. They must be deleted manually after the last CKD logical volume on the LCU is deleted.

Certain management actions in Metro Mirror, Global Mirror, or Global Copy operate at the LSS level. For example, the freezing of pairs to preserve data consistency across all pairs, in case a problem occurs with one of the pairs, is performed at the LSS level.

The option to put all or most of the volumes of a certain application in one LSS makes the management of remote copy operations easier, as shown in Figure 4-17.

Figure 4-17 Grouping of volumes in LSSs

Address groups

Address groups are created automatically when the first LSS that is associated with the address group is created. The groups are deleted automatically when the last LSS in the address group is deleted.

All devices in an LSS must be CKD or FB. This restriction goes even further. LSSs are grouped into address groups of 16 LSSs. LSSs are numbered X'ab', where a is the address group and b denotes an LSS within the address group. For example, X'10' to X'1F' are LSSs in address group 1.

All LSSs within one address group must be of the same type (CKD or FB). The first LSS that is defined in an address group sets the type of that address group.

|

Important: z Systems users who still want to use IBM Enterprise Systems Connection (ESCON) to attach hosts through ESCON/Fibre Channel connection (FICON) converters to the DS8880 need to be aware that ESCON supports only the 16 LSSs of address group 0 (LSS X'00' to X'0F'). Therefore, reserve this address group for CKD devices that attach to ESCON in this case, and do not use it for FB LSSs. The DS8880 does not support native ESCON channels.

|

Figure 4-18 shows the concept of LSSs and address groups.

Figure 4-18 Logical storage subsystems

The LUN identifications X'gabb' are composed of the address group X'g', and the LSS number within the address group X'a', and the ID of the LUN within the LSS X'bb'. For example, FB LUN X'2101' denotes the second (X'01') LUN in LSS X'21' of address group 2.
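The X'gabb' layout can be decoded with a few bit operations. The following Python sketch is an illustration only; the function name is hypothetical, and it takes the identifier as a four-digit hexadecimal string.

```python
from typing import Tuple


def decompose_volume_id(volume_id: str) -> Tuple[int, int, int]:
    """Split a four-digit hex ID X'gabb' into (address group, LSS, volume in LSS)."""
    if len(volume_id) != 4:
        raise ValueError("expected four hexadecimal digits")
    value = int(volume_id, 16)
    address_group = value >> 12  # g
    lss = value >> 8             # ga
    volume = value & 0xFF        # bb
    if lss == 0xFF:
        raise ValueError("LSS X'FF' is reserved")
    return address_group, lss, volume


group, lss, volume = decompose_volume_id("2101")
print(f"address group {group:X}, LSS X'{lss:02X}', volume X'{volume:02X}'")
```

For X'2101', the sketch yields address group 2, LSS X'21', and volume X'01', as in the example in the text.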

An extent pool can have volumes that are managed by multiple address groups. The example in Figure 4-18 shows only one address group that is used with each extent pool.

4.4.6 Volume access

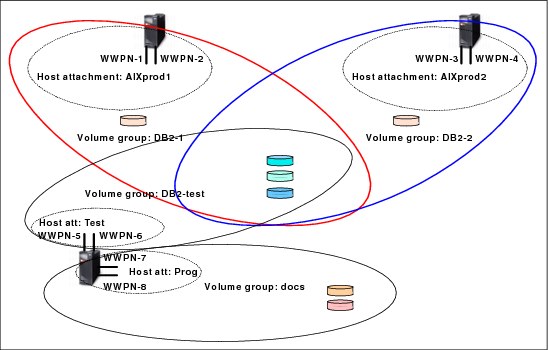

The DS8880 provides mechanisms to control host access to LUNs. In most cases, a host system features two or more HBAs and the system needs access to a group of LUNs. For easy management of system access to logical volumes, the DS8880 introduced the concept of host attachments and volume groups.

Host attachment

HBAs are identified to the DS8880 in a host attachment construct that specifies the worldwide port names (WWPNs) of a host’s HBAs. A set of host ports can be associated through a port group attribute that allows a set of HBAs to be managed collectively. This port group is referred to as a host attachment within the configuration.

Each host attachment can be associated with a volume group to define the LUNs that the host is allowed to access. Multiple host attachments can share the volume group. The host attachment can also specify a port mask that controls the DS8880 I/O ports that the host HBA is allowed to log in to. Whichever ports the HBA logs in on, it sees the same volume group that is defined on the host attachment that is associated with this HBA.

The maximum number of host attachments on a DS8880 is 8,192. This host definition is only required for open systems hosts. Any z Systems servers can access any CKD volume in a DS8880 if its IOCDS is correct.

Volume group

A volume group is a named construct that defines a set of logical volumes. A volume group is only required for FB volumes. When a volume group is used with CKD hosts, a default volume group contains all CKD volumes. Any CKD host that logs in to a FICON I/O port has access to the volumes in this volume group. CKD logical volumes are automatically added to this volume group when they are created and are automatically removed from this volume group when they are deleted.

When a host attachment object is used with open systems hosts, a host attachment object that identifies the HBA is linked to a specific volume group. You must define the volume group by indicating the FB volumes that are to be placed in the volume group. Logical volumes can be added to or removed from any volume group dynamically.

|

Important: Volume group management is available only with the DS CLI. In the DS GUI, users define hosts and assign volumes to hosts. A volume group is defined in the background. No volume group object can be defined in the DS GUI.

|

Two types of volume groups are used with open systems hosts. The type determines how the logical volume number is converted to a host addressable LUN_ID in the Fibre Channel SCSI interface. A SCSI map volume group type is used with FC SCSI host types that poll for LUNs by walking the address range on the SCSI interface. This type of volume group can map any FB logical volume numbers to 256 LUN IDs that have zeros in the last 6 bytes and the first 2 bytes in the range of X'0000' to X'00FF'.

A SCSI mask volume group type is used with FC SCSI host types that use the Report LUNs command to determine the LUN IDs that are accessible. This type of volume group can allow any FB logical volume numbers to be accessed by the host where the mask is a bitmap that specifies the LUNs that are accessible. For this volume group type, the logical volume number X'abcd' is mapped to LUN_ID X'40ab40cd00000000'. The volume group type also controls whether 512-byte block LUNs or 520-byte block LUNs can be configured in the volume group.
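The two LUN_ID layouts can be illustrated with simple string formatting. The following Python sketch only reproduces the formats that are described above; the function names are hypothetical, and for the SCSI map type the choice of which volume is assigned to which of the 256 LUN IDs is left to the caller.

```python
def mask_lun_id(volume_number: int) -> str:
    """SCSI mask type: logical volume X'abcd' maps to LUN_ID X'40ab40cd00000000'."""
    if not 0x0000 <= volume_number <= 0xFFFF:
        raise ValueError("volume number must fit in four hexadecimal digits")
    ab = volume_number >> 8
    cd = volume_number & 0xFF
    return f"40{ab:02X}40{cd:02X}00000000"


def map_lun_id(slot: int) -> str:
    """SCSI map type: first 2 bytes X'0000' to X'00FF', last 6 bytes zero."""
    if not 0x00 <= slot <= 0xFF:
        raise ValueError("only 256 LUN IDs are available in a SCSI map volume group")
    return f"{slot:04X}" + "00" * 6


print(mask_lun_id(0x2101))
print(map_lun_id(0x0A))
```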

When a host attachment is associated with a volume group, the host attachment contains attributes that define the logical block size and the Address Discovery Method (LUN Polling or Report LUNs) that is used by the host HBA. These attributes must be consistent with the volume group type of the volume group that is assigned to the host attachment. This consistency ensures that HBAs that share a volume group have a consistent interpretation of the volume group definition and have access to a consistent set of logical volume types. The DS Storage Manager GUI typically sets these values for the HBA based on your specification of a host type. You must consider what volume group type to create when a volume group is set up for a particular HBA.

FB logical volumes can be defined in one or more volume groups. This definition allows a LUN to be shared by host HBAs that are configured to separate volume groups. An FB logical volume is automatically removed from all volume groups when it is deleted.

The DS8880 supports a maximum of 8,320 volume groups.

Figure 4-19 shows the relationships between host attachments and volume groups. Host AIXprod1 has two HBAs, which are grouped in one host attachment and both HBAs are granted access to volume group DB2-1. Most of the volumes in volume group DB2-1 are also in volume group DB2-2, which is accessed by the system AIXprod2.

However, one volume in each group is not shared in Figure 4-19. The system in the lower-left part of the figure features four HBAs, and they are divided into two distinct host attachments. One HBA can access volumes that are shared with AIXprod1 and AIXprod2. The other HBAs have access to a volume group that is called docs.

Figure 4-19 Host attachments and volume groups

4.4.7 Virtualization hierarchy summary

As you view the virtualization hierarchy (as shown in Figure 4-20), start with many drives that are grouped in array sites. The array sites are created automatically when the drives are installed. Complete the following steps as a user:

1. Associate an array site with a RAID array, and allocate spare drives, as required.

2. Associate the array with a rank with small or large extents formatted for FB or CKD data.

3. Add the extents from the selected ranks to an extent pool. The combined extents from the ranks in the extent pool are used for subsequent allocation for one or more logical volumes. Within the extent pool, you can reserve space for ESE volumes. ESE volumes require virtual capacity to be available in the extent pool.

4. Create logical volumes within the extent pools (by default, striping the volumes), and assign them a logical volume number that determines the logical subsystem that they are associated with and the internal server that manages them. The LUNs are assigned to one or more volume groups.

5. Configure the host HBAs into a host attachment associated with a volume group.

Figure 4-20 shows the virtualization hierarchy.

Figure 4-20 Virtualization hierarchy

This virtualization concept provides a high degree of flexibility. Logical volumes can be dynamically created, deleted, and resized. They can be grouped logically to simplify storage management. Large LUNs and CKD volumes reduce the total number of volumes, which contributes to the reduction of management effort.
