Volumes

This chapter describes how to create and provision volumes for IBM Spectrum Virtualize systems. In this context, a volume is a logical disk that is provisioned out of a storage pool and is recognized by a host by its unique identifier (UID) field and a parameter list.

The first part of this chapter provides a brief overview of IBM Spectrum Virtualize volumes, the classes of volumes available, and the topologies that they are associated with. It also provides an overview of the advanced customization available.

The second part describes how to create volumes using the GUI and shows you how to map these volumes to defined hosts.

The third part provides an introduction to the new volume manipulation commands, which are designed to facilitate the creation and administration of volumes that are used for IBM HyperSwap topologies.


7.1 Introduction to volumes

A volume is a logical disk that the system presents to attached hosts. For an IBM Spectrum Virtualize system, the volume presented is from a virtual disk (VDisk). A VDisk is a discrete area of usable storage that has been virtualized, using IBM Spectrum Virtualize code, from storage area network (SAN) storage that is managed by the IBM Spectrum Virtualize cluster. The term virtual is used because the volume presented does not necessarily exist on a single physical entity.

Volumes have the following characteristics or attributes:

•Volumes can be created and deleted.

•Volumes can be resized (expanded or shrunk).

•Volume extents can be migrated at run time to another MDisk or storage pool.

•Volumes can be created as fully allocated or thin-provisioned. A conversion from a fully allocated to a thin-provisioned volume and vice versa can be done at run time.

•Volumes can be stored in multiple storage pools (mirrored) to make them resistant to disk subsystem failures or to improve the read performance.

•Volumes can be replicated to another system synchronously or, for longer distances, asynchronously. An IBM Spectrum Virtualize system can run active remote copy relationships to a maximum of three other IBM Spectrum Virtualize systems, but not from the same volume.

•Volumes can be copied by using FlashCopy. Multiple snapshots and quick restore from snapshots (reverse FlashCopy) are supported.

•Volumes can be compressed.

•Volumes can be virtual. The system supports VMware vSphere Virtual Volumes, sometimes referred to as VVols, which allow VMware vCenter to manage system objects, such as volumes and pools. The system administrator can create these objects and assign ownership to VMware administrators to simplify management of these objects.


Note: A managed disk (MDisk) is a logical unit of physical storage. MDisks are either Redundant Arrays of Independent Disks (RAID) from internal storage, or external physical disks that are presented as a single logical disk on the SAN. Each MDisk is divided into several extents, which are numbered, from 0, sequentially from the start to the end of the MDisk. The extent size is a property of the storage pools that the MDisks are added to.

Attention: MDisks are not visible to host systems.


Volumes have two major modes: Managed mode and image mode. Managed mode volumes have two policies: The sequential policy and the striped policy. Policies define how the extents of a volume are allocated from a storage pool.

The type attribute of a volume defines the allocation of extents that make up the volume copy:

•A striped volume contains a volume copy that has one extent allocated in turn from each MDisk that is in the storage pool. This is the default option. However, you can also supply a list of MDisks to use as the stripe set as shown in Figure 7-1.


Attention: By default, striped volume copies are striped across all MDisks in the storage pool. If some of the MDisks are smaller than others, the extents on the smaller MDisks are used up before the larger MDisks run out of extents. Manually specifying the stripe set in this case might result in the volume copy not being created.

If you are unsure whether sufficient free space is available to create a striped volume copy, select one of the following options:

•Check the free space on each MDisk in the storage pool by using the lsfreeextents command.

•Let the system automatically create the volume copy by not supplying a specific stripe set.


Figure 7-1 Striped extent allocation

•A sequential volume contains a volume copy that has extents that are allocated sequentially on one MDisk.

•Image-mode volumes are a special type of volume that has a direct relationship with one MDisk.
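The Attention note on striped volume copies comes down to simple extent arithmetic. The following Python sketch checks whether a manually chosen stripe set has enough free extents for a new volume copy. It assumes that the per-MDisk free-extent counts are already known (for example, read from lsfreeextents output) and that the allocator skips MDisks that have run out of extents, as described in 7.1.2, so only the total across the stripe set matters. The function names and MDisk names are hypothetical.

```python
def extents_needed(capacity_mib: int, extent_size_mib: int) -> int:
    """Extents required for a volume copy; a partial extent still consumes a whole one."""
    return -(-capacity_mib // extent_size_mib)  # ceiling division

def stripe_set_can_hold(free_extents: dict[str, int], stripe_set: list[str],
                        capacity_mib: int, extent_size_mib: int) -> bool:
    """Return True if the MDisks in the stripe set have enough free extents in total.

    Assumes the allocator skips an MDisk that has no free extents left, so the
    total number of free extents across the stripe set is what matters.
    """
    total_free = sum(free_extents[mdisk] for mdisk in stripe_set)
    return total_free >= extents_needed(capacity_mib, extent_size_mib)

# Hypothetical pool with 1 GiB extents: one MDisk is much smaller than the others.
free = {"mdisk0": 100, "mdisk1": 20, "mdisk2": 100}
print(stripe_set_can_hold(free, ["mdisk0", "mdisk1"], 150 * 1024, 1024))            # False
print(stripe_set_can_hold(free, ["mdisk0", "mdisk1", "mdisk2"], 150 * 1024, 1024))  # True
```

Letting the system choose the stripe set (the second option in the Attention note) corresponds to the second call, where every MDisk in the pool contributes its free extents.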

7.1.1 Image mode volumes

Image mode volumes are used to migrate LUNs that were previously mapped directly to host servers over to the control of the IBM Spectrum Virtualize system. Image mode provides a one-to-one mapping of logical block addresses (LBAs) between a volume and an MDisk. Image mode volumes have a minimum size of one block (512 bytes) and always occupy at least one extent.

An image mode MDisk is mapped to one, and only one, image mode volume.

The volume capacity that is specified must be equal to the size of the image mode MDisk. When you create an image mode volume, the specified MDisk must be in unmanaged mode and must not be a member of a storage pool. The MDisk is made a member of the specified storage pool (Storage Pool_IMG_xxx) as a result of creating the image mode volume.

IBM Spectrum Virtualize also supports the reverse process, in which a managed mode volume is migrated to an image mode volume. If a volume is migrated to another MDisk, it is represented as being in managed mode during the migration. It is represented as an image mode volume only after it reaches the state where it is a straight-through mapping.

An image mode MDisk is associated with exactly one volume. If the size of the image mode MDisk is not a multiple of the storage pool’s extent size, the last extent is partial (not filled). An image mode volume is a pass-through one-to-one map of its MDisk. It cannot be a quorum disk, and it does not have any metadata extents assigned to it from the IBM Spectrum Virtualize system. Managed or image mode MDisks are always members of a storage pool.
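To illustrate the partial last extent, this hypothetical helper computes how many extents an image mode MDisk occupies and how much of the last extent is filled. It is a sketch of the arithmetic only, assuming sizes in bytes; it is not an IBM Spectrum Virtualize interface.

```python
MiB = 1024 * 1024
BLOCK = 512  # minimum image mode volume size, in bytes

def image_mode_extents(mdisk_bytes: int, extent_bytes: int) -> tuple[int, int]:
    """Return (extents occupied, bytes used in the last extent) for an image mode MDisk."""
    if mdisk_bytes < BLOCK:
        raise ValueError("an image mode volume is at least one 512-byte block")
    full, remainder = divmod(mdisk_bytes, extent_bytes)
    if remainder == 0:
        return full, extent_bytes  # size is an exact multiple of the extent size
    return full + 1, remainder     # last extent is partial (not filled)

# A 10 GiB + 100 MiB LUN in a pool with 1 GiB extents occupies 11 extents,
# and only 100 MiB of the eleventh extent is used.
print(image_mode_extents(10 * 1024 * MiB + 100 * MiB, 1024 * MiB))  # (11, 104857600)
```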

It is a preferred practice to put image mode MDisks in a dedicated storage pool and use a special name for it (for example, Storage Pool_IMG_xxx). The extent size that is chosen for this storage pool must be the same as the extent size of the storage pool into which you plan to migrate the data. All of the IBM Spectrum Virtualize copy services functions can be applied to image mode disks. See Figure 7-2.

Figure 7-2 Image mode volume versus striped volume

7.1.2 Managed mode volumes

Volumes operating in managed mode provide a full set of virtualization functions. Within a storage pool, IBM Spectrum Virtualize supports an arbitrary relationship between extents on (managed mode) volumes and extents on MDisks. Each volume extent maps to exactly one MDisk extent.

Figure 7-3 shows this mapping. It also shows a volume that consists of several extents that are shown as V0 - V7. Each of these extents is mapped to an extent on one of the MDisks: A, B, or C. The mapping table stores the details of this indirection.

Several of the MDisk extents are unused. No volume extent maps to them. These unused extents are available for use in creating volumes, migration, expansion, and so on.

Figure 7-3 Simple view of block virtualization

The allocation of a specific number of extents from a specific set of MDisks is performed by the following algorithm:

•If the set of MDisks from which to allocate extents contains more than one MDisk, extents are allocated from MDisks in a round-robin fashion.

•If an MDisk has no free extents when its turn arrives, its turn is missed and the round-robin moves to the next MDisk in the set that has a free extent.

When a volume is created, the first MDisk from which to allocate an extent is chosen pseudo-randomly rather than by simply taking the next MDisk in the round-robin sequence. The pseudo-random choice avoids a striping effect, inherent in a plain round-robin algorithm, that places the first extent of many volumes on the same MDisk.

Placing the first extent of several volumes on the same MDisk can lead to poor performance for workloads that place a large I/O load on the first extent of each volume, or that create multiple sequential streams.
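The allocation rules above can be sketched in Python as follows. This is a simplified model, not the product's implementation: free extents are held in a dictionary keyed by hypothetical MDisk names, and random.Random stands in for whatever pseudo-random choice the system actually uses for the first MDisk.

```python
import random

def allocate_extents(free: dict[str, int], count: int, rng: random.Random) -> list[str]:
    """Allocate `count` extents round-robin over the MDisks in `free`.

    `free` maps MDisk name -> free extents (in stripe-set order) and is updated
    in place. Returns the MDisk that holds each volume extent, in order.
    """
    if count == 0:
        return []
    if sum(free.values()) < count:
        raise ValueError("not enough free extents in this set of MDisks")
    mdisks = list(free)
    turn = rng.randrange(len(mdisks))  # pseudo-random first MDisk, not "next in turn"
    placement: list[str] = []
    while len(placement) < count:
        mdisk = mdisks[turn]
        if free[mdisk] > 0:            # an MDisk with no free extents misses its turn
            free[mdisk] -= 1
            placement.append(mdisk)
        turn = (turn + 1) % len(mdisks)
    return placement

rng = random.Random(7)
pool = {"A": 2, "B": 4, "C": 4}
print(allocate_extents(pool, 8, rng))  # "A" appears at most twice; its later turns are skipped
```

Because the starting MDisk differs from volume to volume, the first extents of many small volumes tend to spread across the MDisks instead of piling up on one.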

7.1.3 Cache mode for volumes

It is also possible to define the cache characteristics of a volume. Under normal conditions, a volume’s read and write data is held in the cache of its preferred node, with a mirrored copy of write data held in the partner node of the same I/O Group. However, it is possible to create a volume with cache disabled. This setting means that the I/Os are passed directly through to the back-end storage controller rather than being held in the node’s cache.

Having cache-disabled volumes makes it possible to use the native copy services in the underlying RAID array controller for MDisks (LUNs) that are used as IBM Spectrum Virtualize image mode volumes. However, using IBM Spectrum Virtualize Copy Services rather than the copy services of the underlying disk controller generally gives better results.

Cache characteristics of a volume can have any of the following settings:

•readwrite. All read and write I/O operations that are performed by the volume are stored in cache. This is the default cache mode for all volumes.

•readonly. All read I/O operations that are performed by the volume are stored in cache.

•disabled. No read or write I/O operations that are performed by the volume are stored in cache. Instead of being held in the cache of the preferred node (and mirrored to the partner node of the same I/O Group), the I/Os are passed directly through to the back-end storage controller.
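The three settings can be summarized as a small lookup, shown here as a Python sketch. The CacheMode enum and the is_cached function are hypothetical names that model the behavior described above; they do not represent a product API or the exact CLI spelling of the settings.

```python
from enum import Enum

class CacheMode(Enum):
    READWRITE = "readwrite"  # default: reads and writes are held in cache
    READONLY = "readonly"    # only reads are held in cache
    DISABLED = "disabled"    # nothing is cached; I/O goes straight to back-end storage

def is_cached(mode: CacheMode, op: str) -> bool:
    """Return True if an I/O of kind `op` ("read" or "write") is held in the node's cache."""
    if op not in ("read", "write"):
        raise ValueError(f"unknown operation: {op!r}")
    if mode is CacheMode.READWRITE:
        return True
    if mode is CacheMode.READONLY:
        return op == "read"
    return False  # CacheMode.DISABLED

for mode in CacheMode:
    print(mode.value, is_cached(mode, "read"), is_cached(mode, "write"))
```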


Note: Having cache-disabled volumes makes it possible to use the native copy services in the underlying RAID array controller for MDisks (LUNs) that are used as IBM Spectrum Virtualize image mode volumes. Consult IBM Support before turning off the cache for volumes in your production environment to avoid any performance degradation.


7.1.4 Mirrored volumes

The mirrored volume feature provides a simple RAID 1 function, so a volume has two physical copies of its data. This approach enables the volume to remain online and accessible even if one of the MDisks sustains a failure that causes it to become inaccessible.

The two copies of the volume often are allocated from separate storage pools or by using image-mode copies. The volume can participate in FlashCopy and remote copy relationships. It is serviced by an I/O Group, and has a preferred node.

Each copy is not a separate object and cannot be created or manipulated except in the context of the volume. Copies are identified through the configuration interface with a copy ID of their parent volume. This copy ID can be 0 or 1.

This feature also provides a point-in-time copy function that is achieved by “splitting” a copy from the volume. However, the mirrored volume feature does not address other forms of mirroring that are based on remote copy (such as IBM HyperSwap), which mirror volumes across I/O Groups or clustered systems. It is also not intended to manage mirroring or remote copy functions in back-end controllers.

Figure 7-4 provides an overview of volume mirroring.

Figure 7-4 Volume mirroring overview

A second copy can be added to a volume with a single copy or removed from a volume with two copies. Checks prevent the accidental removal of the only remaining copy of a volume. A newly created, unformatted volume with two copies initially has the two copies in an out-of-synchronization state. The primary copy is defined as “fresh” and the secondary copy is defined as “stale.”

The synchronization process updates the secondary copy until it is fully synchronized. This update is done at the default synchronization rate or at a rate that is defined when the volume is created or modified. The synchronization status for mirrored volumes is recorded on the quorum disk.

If a two-copy mirrored volume is created with the format parameter, both copies are formatted in parallel. The volume comes online when both operations are complete with the copies in sync.

If mirrored volumes are expanded or shrunk, all of their copies are also expanded or shrunk.

If it is known that MDisk space (which is used for creating copies) is already formatted or if the user does not require read stability, a no synchronization option can be selected that declares the copies as synchronized (even when they are not).

To minimize the time that is required to resynchronize a copy that is out of sync, only the 256 kibibyte (KiB) grains that were written to since the synchronization was lost are copied. This approach is known as an incremental synchronization. Only the changed grains must be copied to restore synchronization.
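Incremental synchronization relies on tracking written grains, one bit per 256 KiB grain. The following Python class is a minimal sketch of that bookkeeping, assuming writes are reported as a byte offset and length; the class and method names are hypothetical, and the copy step itself is only counted, not performed.

```python
GRAIN = 256 * 1024  # bytes per grain

class MirrorSyncTracker:
    """Tracks grains written while the copies of a mirrored volume are out of sync."""

    def __init__(self, volume_bytes: int):
        self.grains = -(-volume_bytes // GRAIN)        # ceiling division
        self.bitmap = bytearray(-(-self.grains // 8))  # 1 bit per grain

    def record_write(self, offset: int, length: int) -> None:
        """Set the bit for every grain touched by a write of `length` bytes at `offset`."""
        if length <= 0:
            return
        for g in range(offset // GRAIN, (offset + length - 1) // GRAIN + 1):
            self.bitmap[g // 8] |= 1 << (g % 8)

    def dirty_grains(self) -> list[int]:
        """Grains that must be copied to restore synchronization."""
        return [g for g in range(self.grains) if (self.bitmap[g // 8] >> (g % 8)) & 1]

    def resync(self) -> int:
        """Copy only the dirty grains (simulated), clear the bitmap, and return the count."""
        copied = len(self.dirty_grains())
        self.bitmap = bytearray(len(self.bitmap))
        return copied

tracker = MirrorSyncTracker(1024**3)         # 1 GiB volume -> 4096 grains
tracker.record_write(0, 4096)                # touches grain 0
tracker.record_write(GRAIN - 1, 2)           # straddles grains 0 and 1
tracker.record_write(10 * GRAIN, 3 * GRAIN)  # grains 10, 11, 12
print(tracker.dirty_grains())                # [0, 1, 10, 11, 12]
print(tracker.resync())                      # 5
```

Only 5 of the 4096 grains are copied here, which is the point of incremental synchronization.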


Important: An unmirrored volume can be migrated from one location to another by adding a second copy to the wanted destination, waiting for the two copies to synchronize, and then removing the original copy 0. This operation can be stopped at any time. The two copies can be in separate storage pools with separate extent sizes.


When there are two copies of a volume, one copy is known as the primary copy. If the primary is available and synchronized, reads from the volume are directed to it. The user can select the primary when the volume is created or can change it later.

Placing the primary copy on a high-performance controller maximizes the read performance of the volume.

Write I/O operations data flow with a mirrored volume

For write I/O operations to a mirrored volume, the IBM Spectrum Virtualize preferred node definition, with the multipathing driver on the host, is used to determine the preferred path. The host routes the I/Os through the preferred path, and the corresponding node is responsible for further destaging written data from cache to both volume copies. Figure 7-5 shows the data flow for write I/O processing when volume mirroring is used.

Figure 7-5 Data flow for write I/O processing in a mirrored volume

As shown in Figure 7-5, all the writes are sent by the host to the preferred node for each volume (1). Then, the data is mirrored to the cache of the partner node in the I/O Group (2), and acknowledgment of the write operation is sent to the host (3). The preferred node then destages the written data to the two volume copies (4).

A volume with copies can be checked to see whether all of the copies are identical or consistent. If a medium error is encountered while it is reading from one copy, it is repaired by using data from the other copy. This consistency check is performed asynchronously with host I/O.


Important: Mirrored volumes can be taken offline if no quorum disk is available. This behavior occurs because the synchronization status for mirrored volumes is recorded on the quorum disk.


Mirrored volumes use bitmap space at a rate of 1 bit per 256 KiB grain, which translates to 1 MiB of bitmap space supporting 2 TiB of mirrored volumes. The default allocation of bitmap space is 20 MiB, which supports 40 TiB of mirrored volumes. If all 512 MiB of variable bitmap space is allocated to mirrored volumes, 1 PiB of mirrored volumes can be supported.
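The figures above follow directly from the 1-bit-per-grain rate. This Python sketch reproduces them; the helper names are hypothetical.

```python
KiB, MiB, TiB, PiB = 2**10, 2**20, 2**40, 2**50
GRAIN = 256 * KiB  # one bitmap bit covers one 256 KiB grain

def capacity_covered(bitmap_bytes: int) -> int:
    """Mirrored volume capacity (bytes) that a bitmap of `bitmap_bytes` can track."""
    return bitmap_bytes * 8 * GRAIN

def bitmap_needed(capacity_bytes: int) -> int:
    """Bitmap bytes required to track `capacity_bytes` of mirrored volumes."""
    bits = -(-capacity_bytes // GRAIN)  # one bit per (possibly partial) grain
    return -(-bits // 8)

print(capacity_covered(1 * MiB) // TiB)    # 2  (TiB)
print(capacity_covered(20 * MiB) // TiB)   # 40 (TiB, the default allocation)
print(capacity_covered(512 * MiB) // PiB)  # 1  (PiB, the maximum)
```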

Table 7-1 shows you the bitmap space default configuration.

Table 7-1 Bitmap space default configuration

Copy service | Minimum allocated bitmap space | Default allocated bitmap space | Maximum allocated bitmap space | Minimum functionality when using the default values (1)
Remote copy (2) | 0 | 20 MiB | 512 MiB | 40 TiB of remote mirroring volume capacity
FlashCopy (3) | 0 | 20 MiB | 2 GiB | 10 TiB of FlashCopy source volume capacity; 5 TiB of incremental FlashCopy source volume capacity
Volume mirroring | 0 | 20 MiB | 512 MiB | 40 TiB of mirrored volumes
RAID | 0 | 40 MiB | 512 MiB | 80 TiB array capacity using RAID 0, 1, or 10; 80 TiB array capacity in three-disk RAID 5 array; slightly less than 120 TiB array capacity in five-disk RAID 6 array

(1) The actual amount of functionality might increase based on settings such as grain size and strip size. RAID is subject to a 15% margin of error.

(2) Remote copy includes Metro Mirror, Global Mirror, and active-active relationships.

(3) FlashCopy includes the FlashCopy function, Global Mirror with change volumes, and active-active relationships.

The sum of all bitmap memory allocation for all functions except FlashCopy must not exceed 552 MiB.

7.1.5 Thin-provisioned volumes

Volumes can be configured to be thin-provisioned or fully allocated. With respect to application reads and writes, a thin-provisioned volume behaves as though it were fully allocated. When a thin-provisioned volume is created, the user specifies two capacities:

•The real physical capacity that is allocated to the volume from the storage pool

•The virtual capacity that is available to the host

In a fully allocated volume, these two values are the same.

The real capacity determines the quantity of MDisk extents that are initially allocated to the volume. The virtual capacity is the capacity of the volume that is reported to all other IBM Spectrum Virtualize components (for example, FlashCopy, cache, and remote copy) and to the host servers.

The real capacity is used to store the user data and the metadata for the thin-provisioned volume. The real capacity can be specified as an absolute value, or as a percentage of the virtual capacity.

Thin-provisioned volumes can be used as volumes that are assigned to the host, by FlashCopy to implement thin-provisioned FlashCopy targets, and with the mirrored volumes feature.

When a thin-provisioned volume is initially created, a small amount of the real capacity is used for initial metadata. When I/Os are written to grains of the thin volume that were not previously written, grains of the real capacity are used to store metadata and the actual user data. When I/Os are written to grains that were previously written, the grain where the data was previously written is updated.
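The allocate-on-first-write behavior can be modeled with a small Python class. This toy model assumes whole-grain writes, keeps the virtual-to-real grain map in a dictionary (standing in for the volume metadata), hands out real grains in ascending order, and returns zeros for grains that were never written, as the Thin-provisioned volume format note later in this section describes. All names are hypothetical.

```python
class ThinVolume:
    """Toy thin-provisioned volume: real grains are allocated on first write."""

    def __init__(self, virtual_grains: int, grain_size: int = 256 * 1024):
        self.virtual_grains = virtual_grains
        self.grain_size = grain_size
        self.grain_map: dict[int, int] = {}  # virtual grain -> real grain (metadata)
        self.real: list[bytes] = []          # real capacity, consumed in ascending order

    def write(self, vgrain: int, data: bytes) -> None:
        if not 0 <= vgrain < self.virtual_grains:
            raise IndexError("write beyond the virtual capacity")
        if len(data) != self.grain_size:
            raise ValueError("this sketch only models whole-grain writes")
        if vgrain in self.grain_map:          # previously written: update that grain
            self.real[self.grain_map[vgrain]] = data
        else:                                 # first write: use the next real grain
            self.grain_map[vgrain] = len(self.real)
            self.real.append(data)

    def read(self, vgrain: int) -> bytes:
        if vgrain in self.grain_map:
            return self.real[self.grain_map[vgrain]]
        return bytes(self.grain_size)         # never written: reads return zeros

vol = ThinVolume(virtual_grains=1000, grain_size=4)
vol.write(500, b"abcd")
vol.write(3, b"wxyz")
vol.write(500, b"ABCD")                       # overwrite: no new real grain
print(len(vol.real), vol.read(500), vol.read(7))  # 2 b'ABCD' b'\x00\x00\x00\x00'
```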

The grain size is defined when the volume is created. The grain size can be 32 KiB, 64 KiB, 128 KiB, or 256 KiB. The default grain size is 256 KiB, which is the preferred option. If you select 32 KiB for the grain size, the volume size cannot exceed 260 TiB. The grain size cannot be changed after the thin-provisioned volume is created. Generally, smaller grain sizes save space, but they require more metadata access, which can adversely affect performance.

When you use a thin-provisioned volume as a FlashCopy source or target volume, use a 256 KiB grain size to maximize performance, and specify the same grain size for the volume and for the FlashCopy function.

Figure 7-6 shows the thin-provisioning concept.

Figure 7-6 Conceptual diagram of thin-provisioned volume

Thin-provisioned volumes store user data and metadata. Each grain of data requires metadata to be stored. Therefore, the I/O rates that are obtained from thin-provisioned volumes are less than the I/O rates that are obtained from fully allocated volumes.

The metadata storage used is never greater than 0.1% of the user data. The resource usage is independent of the virtual capacity of the volume. If you are using the thin-provisioned volume directly with a host system, use a small grain size.


Thin-provisioned volume format: Thin-provisioned volumes do not need formatting. A read I/O that requests data from deallocated data space returns zeros. When a write I/O causes space to be allocated, the grain is “zeroed” before use.


The real capacity of a thin volume can be changed if the volume is not in image mode. Increasing the real capacity enables a larger amount of data and metadata to be stored on the volume. Thin-provisioned volumes use the real capacity that is provided in ascending order as new data is written to the volume. If the user initially assigns too much real capacity to the volume, the real capacity can be reduced to free storage for other uses.

A thin-provisioned volume can be configured to autoexpand. This feature causes IBM Spectrum Virtualize to automatically add a fixed amount of extra real capacity to the thin volume as required. Therefore, autoexpand attempts to maintain a fixed amount of unused real capacity for the volume, which is known as the contingency capacity.

The contingency capacity is initially set to the real capacity that is assigned when the volume is created. If the user modifies the real capacity, the contingency capacity is reset to be the difference between the used capacity and real capacity.

A volume that is created without the autoexpand feature, and therefore has a zero contingency capacity, goes offline when its real capacity is used up and it must expand.

Autoexpand does not cause the real capacity to grow much beyond the virtual capacity. The real capacity can be manually expanded to more than the maximum that is required by the current virtual capacity, and the contingency capacity is recalculated.
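The contingency capacity rules can be sketched as follows. This Python model is a simplification under stated assumptions: capacities are plain integers in arbitrary units, autoexpand is modeled as topping up the real capacity after each write, and capping the real capacity at the virtual capacity stands in for the statement that autoexpand does not grow the real capacity much beyond the virtual capacity. Class and method names are hypothetical.

```python
class ThinCapacity:
    """Toy model of real, used, and contingency capacity for a thin-provisioned volume."""

    def __init__(self, virtual: int, real: int, autoexpand: bool):
        self.virtual, self.real, self.used = virtual, real, 0
        self.autoexpand = autoexpand
        # Contingency starts as the real capacity at creation; zero without autoexpand.
        self.contingency = real if autoexpand else 0
        self.online = True

    def set_real(self, real: int) -> None:
        """Manually change the real capacity; the contingency is recalculated."""
        if real < self.used:
            raise ValueError("real capacity cannot be less than used capacity")
        self.real = real
        if self.autoexpand:
            self.contingency = self.real - self.used

    def write_new_data(self, amount: int) -> None:
        """Consume `amount` units of real capacity for newly written grains."""
        if not self.online:
            raise RuntimeError("volume is offline")
        needed = self.used + amount
        if needed > self.virtual:
            raise ValueError("write beyond the virtual capacity")
        if needed > self.real and not self.autoexpand:
            self.online = False  # real capacity used up and the volume cannot expand
            return
        self.used = needed
        if self.autoexpand:
            # Top up so that `contingency` stays unused, capped at the virtual capacity.
            self.real = max(self.real, min(self.used + self.contingency, self.virtual))

auto = ThinCapacity(virtual=100, real=10, autoexpand=True)
auto.write_new_data(8)
print(auto.real, auto.used)  # 18 8  (10 units of real capacity remain unused)

fixed = ThinCapacity(virtual=100, real=10, autoexpand=False)
fixed.write_new_data(10)
fixed.write_new_data(1)
print(fixed.online)          # False
```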

To support the auto expansion of thin-provisioned volumes, the storage pools from which they are allocated have a configurable capacity warning. When the used capacity of the pool exceeds the warning capacity, a warning event is logged. For example, if a warning of 80% is specified, the event is logged when only 20% of the pool capacity remains free.
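The warning condition is a simple threshold comparison, sketched here in Python with a hypothetical function name. Integer arithmetic avoids rounding issues with percentages.

```python
def warning_logged(pool_capacity: int, used: int, warning_percent: int) -> bool:
    """True once the pool's used capacity exceeds its warning threshold."""
    # used / capacity > percent / 100, rearranged to stay in integer arithmetic
    return used * 100 > pool_capacity * warning_percent

# With an 80% warning on a 1000 GiB pool, the event fires once less than 200 GiB is free.
print(warning_logged(1000, 800, 80), warning_logged(1000, 801, 80))  # False True
```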

A thin-provisioned volume can be converted nondisruptively to a fully allocated volume (or vice versa) by using the volume mirroring function. For example, the system allows a user to add a thin-provisioned copy to a fully allocated primary volume, and then remove the fully allocated copy from the volume after they are synchronized.

The fully allocated-to-thin-provisioned migration procedure uses a zero-detection algorithm so that grains that contain all zeros do not cause any real capacity to be used.
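Zero detection can be illustrated by scanning a buffer grain by grain and counting only grains that contain a non-zero byte. The following Python sketch assumes the data is available as a bytes object; it models the idea, not the system's actual algorithm, and the function name is hypothetical.

```python
def grains_to_allocate(data: bytes, grain_size: int = 256 * 1024) -> list[int]:
    """Indices of grains that hold non-zero data and therefore need real capacity."""
    needed = []
    for start in range(0, len(data), grain_size):
        if any(data[start:start + grain_size]):  # any non-zero byte means the grain is kept
            needed.append(start // grain_size)
    return needed

# Four 4-byte grains; only the third contains non-zero data.
source = bytes(8) + b"\x00\x01\x00\x00" + bytes(4)
print(grains_to_allocate(source, grain_size=4))  # [2]
```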

7.1.6 Compressed volumes

This is a custom type of volume where data is compressed as it is written to disk, saving additional space. To use the compression function, you must obtain the IBM Real-time Compression license.

7.1.7 Volumes for various topologies

A Basic volume is the simplest form of volume. It consists of a single volume copy, made up of extents striped across all MDisks in a storage pool. It services I/O by using readwrite cache and is classified as fully allocated (reported real capacity and virtual capacity are equal). You can create other forms of volumes, depending on the type of topology that is configured on your system:

•With standard topology, which is a single-site configuration, you can create a basic volume or a mirrored volume.

By using volume mirroring, a volume can have two physical copies. Each volume copy can belong to a different pool, and each copy has the same virtual capacity as the volume. In the management GUI, an asterisk indicates the primary copy of the mirrored volume. The primary copy indicates the preferred volume for read requests.

•With HyperSwap topology, which is a three-site HA configuration, you can create a basic volume or a HyperSwap volume.

HyperSwap volumes create copies on separate sites for systems that are configured with HyperSwap topology. Data that is written to a HyperSwap volume is automatically sent to both copies so that either site can provide access to the volume if the other site becomes unavailable.

Note: IBM Storwize V7000 supports the IBM HyperSwap topology. However, IBM Storwize V7000 does not support Stretched Cluster or Enhanced Stretched Cluster topologies. The Stretched Cluster and Enhanced Stretched Cluster topologies are supported only in an IBM SAN Volume Controller environment.

•Virtual Volumes: The IBM Spectrum Virtualize V7.6 release also introduced Virtual Volumes. These volumes are available in a system configuration that supports VMware vSphere VVols. They allow VMware vCenter to manage system objects, such as volumes and pools. IBM Spectrum Virtualize system administrators can create volume objects of this class and assign ownership to VMware administrators to simplify management.

For more information about configuring VVols with IBM Spectrum Virtualize, see Configuring VMware Virtual Volumes for Systems Powered by IBM Spectrum Virtualize, SG24-8328.

Note: With V7.4 and later, it is possible to prevent accidental deletion of volumes that have recently performed any I/O operations. This feature, called Volume protection, prevents active volumes or host mappings from being deleted inadvertently. It is controlled by a global system setting. For more information, see IBM Knowledge Center:

7.2 Create Volumes menu

The GUI is the simplest means of volume creation, and presents different options in the Create Volumes menu depending on the topology of the system.

To start the process of creating a volume, navigate to the Volumes menu and click the Volumes option of the IBM Spectrum Virtualize graphical user interface as shown in Figure 7-7.

Figure 7-7 Volumes menu

A list of existing volumes along with their state, capacity, and associated storage pools is displayed.

Figure 7-8 Create Volume window

The Create Volumes tab opens the Create Volumes menu, which displays three potential creation methods: Basic, Mirrored, and Custom.


Note: The volume classes that are displayed on the Create Volumes menu depend on the topology of the system.


The Create Volumes menu is shown in Figure 7-9.

Figure 7-9 Basic, Mirrored, and Custom Volume Creation options

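In the previous example, the Create Volumes menu showed submenus that allow the creation of Basic, Mirrored, and Custom volumes (in standard topology). With HyperSwap topology, the Create Volumes menu offers HyperSwap volumes, as shown in Figure 7-10.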

Figure 7-10 Create Volumes menu with HyperSwap Topology

Independent of the topology of the system, the Create Volumes menu displays a Basic volume menu and a Custom volume menu that can be used to customize the parameters of volumes. Custom volumes are described in more detail later in this section.


Notes:

•A Basic volume is a volume whose data is striped across all available managed disks (MDisks) in one storage pool.

•A Mirrored volume is a volume with two physical copies, where each volume copy can belong to a different storage pool.

•A Custom volume, in the context of this menu, is either a Basic or Mirrored volume with customization from the default parameters.

•The Create Volumes menu also provides, using the Capacity Savings parameter, the ability to change the default provisioning of a Basic or Mirrored Volume to Thin-provisioned or Compressed. For more information, see “Volume Creation with Capacity Saving options” on page 259.


Volume migration is described in 7.8, “Migrating a volume to another storage pool” on page 278, and creating volume copies in 7.3.2, “Creating Mirrored volumes” on page 256.

7.3 Creating volumes

This section focuses on using the Create Volumes menu to create Basic and Mirrored volumes in a system with standard topology. It also covers creating host-to-volume mappings. As previously stated, volume creation is available for four different volume classes:

•Basic

•Mirrored

•HyperSwap

•Custom

Note: The GUI simplifies the creation and configuration of HyperSwap volumes by using the mkvolume command.

7.3.1 Creating Basic volumes

The most commonly used type of volume is the Basic volume. This type of volume is fully allocated, with the entire size dedicated to the defined volume. The host and the IBM Spectrum Virtualize system see the fully allocated space.

Create a Basic volume by clicking Basic as shown in Figure 7-9 on page 252. This action opens the Basic volume menu, where you can define the following information:

•Pool: The pool in which the volume is created (drop-down)

•Quantity: The number of volumes to be created (numeric up/down)

•Capacity: Size of the volume in units (drop-down)

•Capacity Savings (drop-down):

– None

– Thin-provisioned

– Compressed

•Name: Name of the volume (cannot start with a numeric)

•I/O group

The Basic volume creation process is shown in Figure 7-11.

Figure 7-11 Creating Basic volume

Use an appropriate naming convention for volumes so that their association with a host or host cluster is easy to identify. At a minimum, the name should contain the name of the pool or a tag that identifies the underlying storage subsystem. It can also contain the name of the host that the volume is mapped to, or the content of the volume (for example, the names of the applications to be installed).
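As an illustration, a naming convention like the one described above can be generated programmatically. The pool tag, host, content, and sequence pattern in this Python sketch is a hypothetical example, not an IBM requirement; the only rule it enforces from this section is that a volume name cannot start with a numeric character.

```python
def volume_name(pool_tag: str, host: str, content: str, seq: int) -> str:
    """Build a name following a hypothetical <pool tag>_<host>_<content>_<nn> convention."""
    name = f"{pool_tag}_{host}_{content}_{seq:02d}"
    if name[0].isdigit():
        raise ValueError("a volume name cannot start with a numeric character")
    return name

print(volume_name("V7K01", "esx01", "sqldata", 1))  # V7K01_esx01_sqldata_01
```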

When all of the characteristics of the Basic volume have been defined, it can be created by selecting one of the following options:

•Create

•Create and Map

Note: The plus sign (+) icon, highlighted in green in Figure 7-11, can be used to create more volumes in the same instance of the volume creation wizard.

In this example, the Create option has been selected (the volume-to-host mapping can be performed later). At the end of the volume creation, a confirmation window appears, as shown in Figure 7-12.

Figure 7-12 Create Volume Task Completion window: Success

Success is also indicated by the state of the Basic volume being reported as formatting in the Volumes pane as shown in Figure 7-13.

Figure 7-13 Basic Volume Fast-Format


Notes:

•Fully allocated volumes are automatically formatted through the quick initialization process after the volume is created. This process makes fully allocated volumes available for use immediately.

•Quick initialization requires a small amount of I/O to complete, and limits the number of volumes that can be initialized at the same time. Some volume actions, such as moving, expanding, shrinking, or adding a volume copy, are disabled when the specified volume is initializing. Those actions are available after the initialization process completes.

•The quick initialization process can be disabled in circumstances where it is not necessary. For example, if the volume is the target of a Copy Services function, the Copy Services operation formats the volume. The quick initialization process can also be disabled for performance testing so that the measurements of the raw system capabilities can take place without waiting for the process to complete.

For more information, see the Fully allocated volumes topic in IBM Knowledge Center:


7.3.2 Creating Mirrored volumes

IBM Spectrum Virtualize offers the capability to mirror volumes, which means a single volume, presented to a host, can have two physical copies. Each volume copy can belong to a different pool, and each copy has the same virtual capacity as the volume. When a server writes to a mirrored volume, the system writes the data to both copies. When a server reads a mirrored volume, the system picks one of the copies to read.

Normally, this is the primary copy (as indicated in the management GUI by an asterisk (*)). If one of the mirrored volume copies is temporarily unavailable (for example, because the storage system that provides the pool is unavailable), the volume remains accessible to servers. The system remembers which areas of the volume are written and resynchronizes these areas when both copies are available.

The use of mirrored volumes results in the following outcomes:

•Improves availability of volumes by protecting them from a single storage system failure

•Provides concurrent maintenance of a storage system that does not natively support concurrent maintenance

•Provides an alternative method of data migration with better availability characteristics

•Converts between fully allocated volumes and thin-provisioned volumes

|

Note: Volume mirroring is not a true disaster recovery (DR) solution because both copies are accessed by the same node pair and addressable by only a single cluster. However, it can improve availability.

|

To create a mirrored volume, complete the following steps:

1. In the Create Volumes window, click Mirrored and choose the Pool of Copy1 and Copy2 by using the drop-down menus. Although the mirrored volume can be created in the same pool, this setup is not typical. Next, enter the Volume Details: Quantity, Capacity, Capacity savings, and Name.

Generally, keep mirrored volumes on a separate set of physical disks (Pools). Leave the I/O group option at its default setting of Automatic (see Figure 7-14).

Figure 7-14 Mirrored Volume creation

2. Click Create (or Create and Map).

3. Next, the GUI displays the underlying CLI commands being run to create the mirrored volume and indicates completion as shown in Figure 7-15.

Figure 7-15 Task complete: creating Mirrored volume

|

Note: When creating a Mirrored volume using this menu, you are not required to specify the Mirrored Sync rate. It defaults to 2 MBps. Customization of the synchronization rate can be done by using the Custom menu.

|
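As a rough worked example of what the 2 MBps default means in practice, the following Python sketch estimates initial synchronization time. It assumes that MBps means MiB per second and that the rate is sustained for the whole copy. The function names are hypothetical, and real synchronization time also depends on host I/O and system load.

```python
def sync_duration_seconds(capacity_gib, rate_mib_per_s=2.0):
    """Estimate the time to copy capacity_gib at a fixed synchronization rate.

    Treats the rate as a sustained throughput in MiB/s and ignores host I/O,
    so the result is only a rough estimate.
    """
    if rate_mib_per_s <= 0:
        raise ValueError("rate must be positive")
    return capacity_gib * 1024 / rate_mib_per_s


def as_hours_minutes(seconds):
    """Convert seconds to (hours, minutes), rounded to the nearest minute."""
    minutes = round(seconds / 60)
    return divmod(minutes, 60)
```

At the default rate, a 10 GiB volume needs about 1 hour 25 minutes (5,120 seconds), and a 1 TiB volume about six days, which is why a higher rate can be set for important volumes through the Custom menu.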

Volume Creation with Capacity Saving options

In the Create Volumes menu, the Capacity Savings option changes the provisioning of a Basic or Mirrored volume to Thin-provisioned or Compressed. Select either Thin-provisioned or Compressed from the drop-down menu, as shown in Figure 7-16.

Figure 7-16 Volume Creation with Capacity Saving option

7.4 Mapping a volume to the host

After a volume is created, it can be mapped to a host:

1. From the Volumes menu, highlight the volume that you want to create a mapping for and then select Actions from the menu bar.

|

Tip: An alternative way of opening the Actions menu is to highlight (select) a volume and use the right mouse button.

|

2. Select Map to Host or Host Cluster, as shown in Figure 7-17.

Figure 7-17 Map to Host or Host Cluster

3. This action opens a Create Mapping window. In this window, use the radio buttons to select whether to create a mapping to Hosts or Host Clusters. Then, select the hosts or host clusters to map the volume to. You can also choose whether to assign the SCSI LUN IDs yourself (Self Assign) or let the system assign them (System Assign). In this example, we map a single volume to an iSCSI host and let the system assign the SCSI LUN IDs, as shown in Figure 7-18. Click Next.

Figure 7-18 Mapping a Volume to Host

4. A summary of the proposed volume mappings is displayed. To confirm the mappings, click Map Volumes, as shown in Figure 7-19.

Figure 7-19 Map volumes summary

5. The Modify Mappings window displays the command details and then a Task completed message as shown in Figure 7-20.

Figure 7-20 Successful completion of Host to Volume mapping
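When the system assigns SCSI LUN IDs, a simple policy is to use the lowest ID that is still free. The following Python sketch assumes that policy, and also assumes that a mapping to a host cluster needs an ID that is free on every host in the cluster. The actual assignment rules of IBM Spectrum Virtualize may differ, and next_scsi_id is a hypothetical name.

```python
def next_scsi_id(used_ids_per_host):
    """Lowest SCSI LUN ID that is not in use on any of the given hosts.

    used_ids_per_host: one set of already-used IDs per host that receives
    the mapping (a single host, or every host in a host cluster).
    """
    in_use = set()
    for ids in used_ids_per_host:
        in_use.update(ids)
    candidate = 0
    while candidate in in_use:
        candidate += 1
    return candidate
```

For example, a host that already uses IDs 0, 1, and 3 would receive ID 2, while a two-host cluster whose hosts use {0, 1} and {2} would receive ID 3.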

7.5 Creating Custom volumes

The Create Volumes window also supports Custom volume creation. The Custom menu provides an alternative way to define Capacity savings options, such as Thin-provisioning and Compression, and it also exposes settings beyond the defaults that are used for Basic and Mirrored volumes. With a Custom volume, you can set the Mirror sync rate, Cache mode, and Fast-Format options.

The Custom menu consists of several submenus:

•Volume Location (Mandatory, defines the Pools to be used and I/O group preferences)

•Volume Details (Mandatory, defines the Capacity savings option)

•Thin Provisioning (defines Thin Provisioning settings if selected above)

•Compressed (defines Compression settings if selected above)

•General (for changing default options for Cache mode and Formatting)

•Summary

Work through these submenus to customize the volume as needed, and then click Create to commit the changes, as shown in Figure 7-21.

Figure 7-21 Customization submenus

7.5.1 Creating a custom thin-provisioned volume

A thin-provisioned volume can be defined and created by using the Custom menu. Regarding application reads and writes, thin-provisioned volumes behave as though they were fully allocated. When creating a thin-provisioned volume, you can specify two capacities:

•The real physical capacity that is allocated to the volume from the storage pool. The real capacity determines the quantity of extents that are initially allocated to the volume.

•The virtual capacity that is available to the host. The virtual capacity is the capacity of the volume that is reported to all other components (for example, FlashCopy, cache, and remote copy) and to the hosts.

To create a thin-provisioned volume, complete the following steps:

1. From the Create Volumes window, select the Custom menu. In the Volume Location subsection, enter the Volume copy type, Pool, Caching I/O group, Preferred node, and Accessible I/O groups. In the Volume Details subsection, enter the Quantity, Capacity (virtual), Capacity Savings (choose Thin-provisioned from the drop-down menu), and Name of the volume being created (Figure 7-22).

Figure 7-22 Create a thin-provisioned volume

2. In the Thin Provisioning subsection, enter the Real capacity, Automatically expand setting, Warning threshold, and Grain size (Figure 7-23).

Figure 7-23 Thin Provisioning settings

The Thin Provisioning options are as follows (defaults are displayed in parentheses):

– Real capacity: (2%) Specify the size of the real capacity space used during creation.

– Automatically Expand: (Enabled) This option enables the real capacity to expand automatically as more capacity needs to be allocated.

– Warning threshold: (Enabled) Enter a threshold for receiving capacity alerts.

– Grain Size: (256 kibibytes (KiB)) Specify the grain size for real capacity. This option describes the size of the chunk of storage to be added to used capacity. For example, when the host writes 1 megabyte (MB) of new data, the capacity is increased by adding four chunks of 256 KiB each.
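The grain arithmetic in the Grain Size option can be checked with a short Python function. It is a sketch that counts the grains a write of new data touches, assuming each touched grain must be allocated; grains_touched is a hypothetical helper, not part of the product. An unaligned write can touch one more grain than an aligned write of the same length.

```python
GRAIN_DEFAULT_KIB = 256


def grains_touched(offset_bytes, length_bytes, grain_kib=GRAIN_DEFAULT_KIB):
    """Number of grains that a write of new data touches (each may need allocating)."""
    if length_bytes <= 0:
        raise ValueError("length must be positive")
    grain_bytes = grain_kib * 1024
    first = offset_bytes // grain_bytes
    last = (offset_bytes + length_bytes - 1) // grain_bytes
    return last - first + 1
```

A 1 MiB write that starts on a grain boundary touches four 256 KiB grains, matching the example above; the same write shifted by 4 KiB touches five, and with a 32 KiB grain size it touches 32.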

|

Important: If you do not use the autoexpand feature, the volume will go offline after reaching its real capacity. The default grain size is 256 KiB. The optimum choice of grain size depends on volume use type. Consider these points:

•If you are not going to use the thin-provisioned volume as a FlashCopy source or target volume, use 256 KiB to maximize performance.

•If you are going to use the thin-provisioned volume as a FlashCopy source or target volume, specify the same grain size for the volume and for the FlashCopy function.

For more information, see the “Performance Problem When Using EasyTier With Thin Provisioned Volumes” topic:

|
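The difference between using and not using autoexpand can be modeled as follows. This is a simplified Python sketch: the class ThinVolumeCopy is hypothetical, capacities are whole MiB, and real capacity here grows by exactly the amount needed, whereas the real system allocates extents from the storage pool.

```python
class ThinVolumeCopy:
    """Toy model of a thin-provisioned volume copy (illustration only)."""

    def __init__(self, virtual_mib, real_mib, autoexpand):
        self.virtual_mib = virtual_mib
        self.real_mib = real_mib
        self.used_mib = 0
        self.autoexpand = autoexpand
        self.online = True

    def write_new_data(self, mib):
        """Allocate mib of new data; return False if the copy goes offline."""
        if not self.online:
            raise RuntimeError("volume copy is offline")
        needed = self.used_mib + mib
        if needed > self.virtual_mib:
            raise ValueError("write exceeds the virtual capacity")
        if needed > self.real_mib:
            if not self.autoexpand:
                # Without autoexpand, running out of real capacity takes the copy offline.
                self.online = False
                return False
            # With autoexpand, real capacity grows (never past the virtual capacity).
            self.real_mib = needed
        self.used_mib = needed
        return True
```

With a 10 GiB virtual capacity and the default 2% real capacity (204 MiB after rounding down), a copy without autoexpand goes offline once writes need more than 204 MiB, while a copy with autoexpand keeps growing.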

3. Confirm the settings and click Create to define the volume. A Task completed message is displayed as shown in Figure 7-24.

Figure 7-24 Task completed, the thin-provisioned volume is created

4. Alternatively, you can create and immediately map this volume to a host by using the Create and Map option instead.

7.5.2 Creating Custom Compressed volumes

The configuration of compressed volumes is similar to thin-provisioned volumes. To create a Compressed volume, complete the following steps:

1. From the Create Volumes window, select the Custom menu. In the Volume Location subsection, enter the Volume copy type, Pool, Caching I/O group, Preferred node, and Accessible I/O groups. In the Volume Details subsection, enter the Quantity, Capacity (virtual), Capacity Savings (choose Compressed from the drop-down menu), and Name of the volume being created (Figure 7-25).

Figure 7-25 Defining a volume as compressed using the Capacity savings option

2. Open the Compression subsection and verify that Real Capacity is set to at least the default value of 2%. Leave all other parameters at their defaults. See Figure 7-26.

Figure 7-26 Compression settings

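3. Confirm the settings and click Create to define the volume. A Task completed message is displayed, as shown in Figure 7-27.

Figure 7-27 Task completed, the compressed volume is created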

7.5.3 Custom Mirrored Volumes

The Custom option in the Create Volumes window is used to customize volume creation. Using this feature, the default options can be overridden and volume creation can be tailored to the specifics of the client's environment.

Modifying the Mirror sync rate

The Mirror sync rate can be changed from the default setting by using the Custom option, changing the Volume copy type to Mirrored in the Volume Location subsection of the Create Volumes window. This option sets the priority of copy synchronization progress, enabling a preferential rate to be set for more important volumes (Figure 7-28).

Figure 7-28 Customization of Mirrored sync rate

The progress of formatting and synchronization of a newly created Mirrored Volume can be checked from the Running Tasks menu. This menu reports the progress of all currently running tasks, including Volume Format and Volume Synchronization (Figure 7-29).

Figure 7-29 Progress of all running tasks

Creating a Custom Thin-provisioned Mirrored volume

To create a thin-provisioned mirrored volume, select the Custom option in the Create Volumes window, change the Volume copy type to Mirrored in the Volume Location subsection, and choose Thin-provisioned as the Capacity savings option in the Volume Details subsection. The Mirror sync rate can also be adjusted here to give more important volumes a preferential synchronization rate.

The summary shows you the capacity information and the allocated space. You can click Custom and customize the thin-provision settings or the mirror synchronization rate. After you create the volume, the confirmation window opens as shown in Figure 7-30.

Figure 7-30 Creating a thin provisioned mirrored custom volume

The initial synchronization of thin-mirrored volumes is fast when a small amount of real and virtual capacity is used.

7.6 HyperSwap and the mkvolume command

HyperSwap volume configuration is not possible until site awareness has been configured.

When the HyperSwap topology is configured, the GUI uses the mkvolume command to create HyperSwap volumes instead of the traditional mkvdisk command. The GUI continues to use mkvdisk when all other classes of volumes are created. This section describes the mkvolume command and how the GUI uses it when the HyperSwap topology has been configured.

|

Note: It is still possible to create HyperSwap volumes as in the V7.5 release, as described in the following white paper:

You can also get more information in IBM Storwize V7000, Spectrum Virtualize, HyperSwap, and VMware Implementation, SG24-8317.

|

HyperSwap volumes are a new type of high availability (HA) volume that is supported by IBM Spectrum Virtualize. They are built from two existing IBM Spectrum Virtualize technologies:

•Metro Mirror

•(VDisk) Volume Mirroring

These technologies have been combined in an active-active configuration deployed by using Change Volumes (as used in Global Mirror with Change Volumes) to create a Single Volume (from a host perspective) in an HA form. The volume presented is a combination of four “traditional” volumes, but is a single entity from a host (and administrative) perspective as shown in Figure 7-31.

Figure 7-31 What makes up a HyperSwap Volume

The GUI simplifies the complexity of HyperSwap volume creation by only presenting the volume class of HyperSwap as a volume creation option after HyperSwap topology has been configured.

In the following example, a HyperSwap topology has been configured and the Create Volumes window is being used to define a HyperSwap Volume as shown in Figure 7-32.

The capacity and name characteristics are defined as for a Basic volume (in the Volume Details section) and the mirroring characteristics are defined by the HyperSwap site parameters (in the Hyperswap Details section).

Figure 7-32 HyperSwap Volume creation with summary of actions

A summary (lower left of the creation window) indicates the actions that are carried out when the Create option is selected. As shown in Figure 7-32, a single volume is created, with volume copies in site1 and site2. This volume is in an active-active (Metro Mirror) relationship with extra resilience provided by two change volumes.

The command that is issued to create this volume is shown in Figure 7-33 and can be summarized as follows:

svctask mkvolume -name <name_of_volume> -pool <X:Y> -size <size_of_volume> -unit <units>

Figure 7-33 Example mkvolume command

7.6.1 Volume manipulation commands

Five new CLI commands for administering volumes were released in IBM Spectrum Virtualize V7.6. However, the GUI continues to use existing commands for all volume administration, except for HyperSwap volume creation (mkvolume) and deletion (rmvolume). The following CLI commands are available for administering volumes:

•mkvolume

•mkimagevolume

•addvolumecopy

•rmvolumecopy

•rmvolume

In addition, the lsvdisk command and the GUI provide extended functionality. The lsvdisk command now includes volume_id, volume_name, and function fields that identify the individual VDisks that make up a HyperSwap volume. These views are “rolled up” in the GUI to reflect the client’s view of the HyperSwap volume and its site-dependent copies, as opposed to the “low-level” VDisks and VDisk Change Volumes.

As shown in Figure 7-34, Volumes → Volumes shows the HyperSwap Volume ITSO_HS_VOL with an expanded view opened to reveal two volume copies: ITSO_HS_VOL (site1) (Master VDisk) and ITSO_HS_VOL (site2) (Auxiliary VDisk). The VDisk Change Volumes are not shown.

Figure 7-34 Hidden Change Volumes

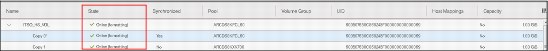

Likewise, the status of the HyperSwap volume is reported at a “parent” level. If one of the copies is syncing or offline, the parent HyperSwap volume reflects this state as shown in Figure 7-35.

Figure 7-35 Parent volume reflects state of copy volume
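The roll-up of VDisks into one client-visible HyperSwap volume can be sketched in Python. The sketch groups VDisks by volume_id, hides change volumes, and reports the worst copy state at the parent level. It assumes function values of master, aux, master_change, and aux_change, treats syncing as a separate status for simplicity, and uses hypothetical VDisk names; check lsvdisk output on your system for the actual values.

```python
from dataclasses import dataclass

# Worse states win when copies disagree (simplified ordering, an assumption).
SEVERITY = {"online": 0, "syncing": 1, "offline": 2}


@dataclass
class VDisk:
    name: str
    volume_id: int
    volume_name: str
    function: str  # assumed: "master", "aux", "master_change", "aux_change", or ""
    status: str    # "online", "syncing", or "offline" in this sketch


def roll_up(vdisks):
    """Group VDisks into client-visible volumes and hide change volumes."""
    volumes = {}
    for v in vdisks:
        entry = volumes.setdefault(
            v.volume_id, {"name": v.volume_name, "copies": [], "status": "online"}
        )
        if v.function.endswith("_change"):
            continue  # change volumes are not shown in the rolled-up view
        entry["copies"].append(v.name)
        if SEVERITY[v.status] > SEVERITY[entry["status"]]:
            entry["status"] = v.status
    return volumes
```

Four VDisks with volume_id 0 thus collapse into one volume with two visible copies, and an offline auxiliary copy makes the parent report offline.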

Individual commands related to HyperSwap are described briefly here:

•mkvolume

Create an empty volume by using storage from existing storage pools. The type of volume created is determined by the system topology and the number of storage pools specified. The volume is always formatted (zeroed). This command can be used to create these items:

– Basic volume: Any topology

– Mirrored volume: Standard topology

– HyperSwap volume: HyperSwap topology

•rmvolume

Remove a volume. For a HyperSwap volume, this process includes deleting the active-active relationship and the change volumes.

The -force parameter with rmvdisk is replaced by individual override parameters, making it clearer to the user exactly what protection they are bypassing.

•mkimagevolume

Create an image mode volume. This command can be used to import a volume, preserving existing data. It is implemented as a separate command to provide greater differentiation between the action of creating an empty volume and creating a volume by importing data on an existing MDisk.

•addvolumecopy

Add a copy to an existing volume. The new copy is always synchronized from the existing copy. For HyperSwap topology systems, this process creates a highly available volume. This command can be used to create the following volume types:

– Mirrored volume: Standard topology

– HyperSwap volume: HyperSwap topology

•rmvolumecopy

Remove a copy of a volume. This command leaves the volume intact. It also converts a Mirrored or HyperSwap volume into a basic volume. For a HyperSwap volume, this process includes deleting the active-active relationship and the change volumes.

This command enables a copy to be identified simply by its site.

The -force parameter with rmvdiskcopy is replaced by individual override parameters, making it clearer to the user exactly what protection they are bypassing.
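The rules listed for mkvolume, addvolumecopy, and rmvolumecopy can be summarized as a small lookup. This Python sketch encodes only the combinations stated in this section; the function names are hypothetical, and other topologies are deliberately rejected rather than guessed.

```python
def mkvolume_type(topology, pool_count):
    """Class of volume that mkvolume creates for a topology and number of pools."""
    if pool_count == 1:
        return "basic"  # any topology
    if pool_count == 2 and topology == "standard":
        return "mirrored"
    if pool_count == 2 and topology == "hyperswap":
        return "hyperswap"
    raise ValueError("combination not covered by this sketch")


def after_addvolumecopy(topology, current):
    """addvolumecopy turns a basic volume into a mirrored or HyperSwap volume."""
    if current != "basic":
        raise ValueError("sketch only handles adding a copy to a basic volume")
    return mkvolume_type(topology, 2)


def after_rmvolumecopy(current):
    """rmvolumecopy leaves the volume intact but converts it to a basic volume."""
    if current not in ("mirrored", "hyperswap"):
        raise ValueError("only mirrored or HyperSwap volumes have a copy to remove")
    return "basic"
```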

See IBM Knowledge Center for more details:

7.7 Mapping Volumes to Host after volume creation

A newly created volume can be mapped to the host at creation time, or later. If the volume was not mapped to a host during creation, map it by completing the steps in 7.7.1, “Mapping newly created volumes to the host using the wizard” on page 274.

7.7.1 Mapping newly created volumes to the host using the wizard

This section explains how to map a volume that was created in 7.3, “Creating volumes” on page 253. It is assumed that you followed that procedure and the Volumes menu is displayed showing a list of volumes, as shown in Figure 7-36.

Figure 7-36 Volume list

To map volumes to a host or host cluster, complete the following steps:

1. Right-click the volume to be mapped and select Map to Host or Host Cluster, as shown in Figure 7-37.

Figure 7-37 Mapping a volume to a host

2. Select the host or host cluster to which the new volume should be mapped, as shown in Figure 7-38. Click Next.

Figure 7-38 Select a host or host cluster

3. A summary window is displayed showing the volume to be mapped along with existing volumes already mapped to the host or host cluster, as shown in Figure 7-39. Click Map Volumes.

Figure 7-39 Map volume to host cluster summary

4. The confirmation window shows the result of the volume mapping task, as shown in Figure 7-40.

Figure 7-40 Confirmation of volume to host mapping

5. After the task completes, the wizard returns to the Volumes window. You can verify the host mappings for a volume by navigating to Hosts → Hosts or Hosts → Host Clusters, as shown in Figure 7-41.

Figure 7-41 Accessing the host clusters menu

6. Right-click the host or host cluster to which the volume was mapped and select Modify Volume Mappings, or Modify Shared Volume Mappings for host clusters, as shown in Figure 7-42.

Figure 7-42 Modify shared volume host mappings

7. A window is displayed showing a list of volumes already mapped to the host or host cluster, as shown in Figure 7-43.

Figure 7-43 Volumes that are mapped to host cluster

The host is now able to access the volumes and store data on them. For host clusters, all hosts in the cluster are able to access the shared volumes. See 7.8, “Migrating a volume to another storage pool” on page 278 for information about discovering the volumes on the host and making additional host settings, if required.

Multiple volumes can also be created in preparation for discovering them and customizing their mappings later.

7.8 Migrating a volume to another storage pool

IBM Spectrum Virtualize provides online volume migration while applications are running. Storage pools are managed disk groups, as described in Chapter 6, “Storage pools” on page 185. With volume migration, data can be moved between storage pools, regardless of whether the pool is an internal pool or a pool on another external storage system. This migration is done without the server and application knowing that it even occurred.

The migration process runs at low priority, which keeps its effect on the performance of the IBM Spectrum Virtualize system small. The process moves one extent after another to the new storage pool. After the migration completes, the performance of the volume depends on the performance of the new storage pool.
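Extent-by-extent migration, and the extent size restriction that applies to it, can be illustrated with a toy Python model. It assumes that a volume copy is a list of extents tagged with their pool; extent_count and migrate_extents are hypothetical helpers, and the real process also copies each extent's data before remapping it.

```python
import math


def extent_count(capacity_mib, extent_mib):
    """Extents needed to hold a volume copy; a partial last extent still counts."""
    return math.ceil(capacity_mib / extent_mib)


def migrate_extents(extent_pools, target_pool, source_extent_mib, target_extent_mib):
    """Move extents to target_pool one at a time; return progress (%) after each move."""
    if source_extent_mib != target_extent_mib:
        raise ValueError("extent sizes differ: use the volume copy method instead")
    progress = []
    for i in range(len(extent_pools)):
        extent_pools[i] = target_pool  # the volume stays online throughout
        progress.append(round(100 * (i + 1) / len(extent_pools)))
    return progress
```

A 10 GiB copy in a pool with 1 GiB extents therefore moves as ten separate steps, and the progress list corresponds to what Monitoring → Background Tasks reports as the migration advances.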

To migrate a volume to another storage pool, complete the following steps:

1. In the Volumes menu, highlight the volume that you want to migrate, then select Actions followed by Migrate to Another Pool, as shown in Figure 7-44.

Figure 7-44 Migrate Volume Copy window: Select copy

2. The Migrate Volume Copy window opens. If your volume consists of more than one copy, select the copy that you want to migrate to another storage pool, as shown in Figure 7-45. If the selected volume consists of one copy, this selection menu is not available.

Figure 7-45 Migrate Volume Copy: Selecting the volume copy

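3. Select the target storage pool for the volume copy and start the migration, as shown in Figure 7-46.

Figure 7-46 Migrate Volume Copy: Selecting the target pool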

4. The volume copy migration starts as shown in Figure 7-47. Click Close to return to the Volumes menu.

Figure 7-47 Migrate Volume Copy started

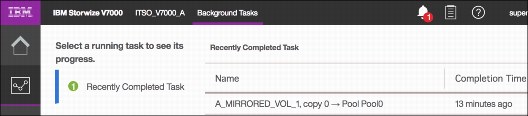

The time that it takes for the migration process to complete depends on the size of the volume. The status of the migration can be monitored by navigating to Monitoring → Background Tasks, as shown in Figure 7-48.

Figure 7-48 Migration progress

After the migration task completes, the Background Tasks menu displays a Recently Completed Task. Figure 7-49 shows that the volume was migrated to Pool0.

Figure 7-49 Migration complete

In the Pools → Volumes By Pool menu, the volume is now displayed in the target storage pool, as shown in Figure 7-50.

Figure 7-50 Volume copy after migration

The volume copy has now been migrated without any host or application downtime to the new storage pool. It is also possible to migrate both volume copies to other online pools.

Another way to migrate volumes to another pool is by performing the migration by using the volume copy feature, as described in 7.9, “Migrating volumes using the volume copy feature” on page 281.

|

Note: Migrating a volume between storage pools with different extent sizes is not supported. If you need to migrate a volume to a storage pool with a different extent size, use the volume copy feature instead.

|

7.9 Migrating volumes using the volume copy feature

IBM Spectrum Virtualize supports creating, synchronizing, splitting, and deleting volume copies. A combination of these tasks can be used to migrate volumes to other storage pools. The easiest way to migrate volume copies is to use the migration feature that is described in 7.8, “Migrating a volume to another storage pool” on page 278. If you use this feature, one extent after another is migrated to the new storage pool. However, the migration of a volume can also be achieved by first making a new copy of the volume and then removing the old copy.

To migrate a volume using the volume copy feature, complete the following steps:

1. Select the volume to create a copy of, and in the Actions menu select Add Volume Copy, as shown in Figure 7-51.

Figure 7-51 Adding a volume copy to another pool

2. Create a second copy of your volume in the target storage pool as shown in Figure 7-52. In this example, a compressed copy of the volume is created in target pool Pool1. Click Add.

Figure 7-52 Defining the new volume copy

Wait until the copies are synchronized, as shown in Figure 7-53.

Figure 7-53 Waiting for the volume copies to synchronize

3. Change the roles of the volume copies by making the new copy the primary copy as shown in Figure 7-54. The current primary copy is displayed with an asterisk next to its name.

Figure 7-54 Making the new copy in a different storage pool the primary

4. Split or delete the old copy from the volume as shown in Figure 7-55.

Figure 7-55 Deleting the old volume copy

5. Ensure that the new copy is in the target storage pool by double-clicking the volume or highlighting the volume and selecting Properties from the Actions menu. The properties of the volume in the target storage pool are shown in Figure 7-56.

Figure 7-56 Verifying the new copy in the target storage pool

The migration of volumes using the volume copy feature requires more user interaction, but provides some benefits for particular use cases. One such example is migrating a volume from a tier 1 storage pool to a lower performance tier 2 storage pool. First, the volume copy feature can be used to create a copy on the tier 2 pool (steps 1 and 2). All reads are still served by the primary copy in the tier 1 pool. After the volume copies have synchronized (end of step 2), all writes are destaged to both pools, but reads are still done only from the primary copy.

Now the roles of the volume copies can be switched so that the new copy is the primary (step 3), to test the performance of the new pool. If testing of the lower performance pool is successful, the old copy in tier 1 can be split or deleted (step 4). If the performance testing of the tier 2 pool is unsuccessful, the old copy can be made the primary again to switch volume reads back to the tier 1 copy.
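The copy-based migration and its rollback path can be written down as a CLI plan. The following Python sketch only builds command strings. It assumes that the existing copy has copy ID 0 and that the new copy receives copy ID 1, and the syntax of addvdiskcopy, lsvdisksyncprogress, chvdisk -primary, rmvdiskcopy, and splitvdiskcopy should be verified against the CLI reference for your code level before use.

```python
def copy_migration_plan(volume, target_pool, old_copy=0, new_copy=1, split_name=None):
    """Build the CLI steps for migrating a volume by using a second copy (sketch)."""
    plan = [
        # Steps 1-2: add a copy in the target pool, then wait for synchronization.
        f"addvdiskcopy -mdiskgrp {target_pool} {volume}",
        f"lsvdisksyncprogress {volume}",  # repeat until the new copy reports 100%
        # Step 3: make the new copy the primary so reads come from the target pool.
        f"chvdisk -primary {new_copy} {volume}",
    ]
    # Step 4: keep the old copy as a separate volume, or delete it.
    if split_name:
        plan.append(f"splitvdiskcopy -copy {old_copy} -name {split_name} {volume}")
    else:
        plan.append(f"rmvdiskcopy -copy {old_copy} {volume}")
    return plan


def rollback_command(volume, old_copy=0):
    """Before step 4, switch reads back to the original copy if testing fails."""
    return f"chvdisk -primary {old_copy} {volume}"
```

Printing copy_migration_plan("Tiger", "Pool1") lists the four commands in order; keeping the old copy until the new pool has been tested is what makes rollback_command available.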

7.10 Volume operations using the CLI

This section describes various configuration and administrative tasks that can be carried out on volumes by using the command-line interface (CLI). For more information, see IBM Knowledge Center:

See Appendix B, “CLI setup” on page 745 for how to set up SAN boot.

7.10.1 Creating a volume

The mkvdisk command creates sequential, striped, or image mode volume objects. When they are mapped to a host object, these objects are seen as disk drives with which the host can perform I/O operations. When a volume is created, you must enter several parameters at the CLI. Mandatory and optional parameters are available.

|

Creating an image mode disk: If you do not specify the -size parameter when you create an image mode disk, the entire MDisk capacity is used.

|

You must know the following information before you start to create the volume:

•In which storage pool the volume has its extents

•From which I/O Group the volume is accessed

•Which IBM Spectrum Virtualize node is the preferred node for the volume

•Size of the volume

•Name of the volume

•Type of the volume

•Whether this volume is managed by IBM Easy Tier to optimize its performance

When you are ready to create your striped volume, use the mkvdisk command. In Example 7-1, this command creates a 10 gigabyte (GB) striped volume named Tiger within the storage pool Pool0 and assigns it to the io_grp0 I/O Group. The system assigns volume ID 8. Because no preferred node is specified, the system selects one; in this case, node 2 (preferred_node_id 2 in Example 7-2).

Example 7-1 The mkvdisk command

IBM_Storwize:ITSO:superuser>mkvdisk -mdiskgrp Pool0 -iogrp io_grp0 -size 10 -unit gb -name Tiger

Virtual Disk, id [8], successfully created

To verify the results, use the lsvdisk command, as shown in Example 7-2.

Example 7-2 The lsvdisk command

IBM_Storwize:ITSO:superuser>lsvdisk 8

id 8

name Tiger

IO_group_id 0

IO_group_name io_grp0

status online

mdisk_grp_id 0

mdisk_grp_name Pool0

capacity 10.00GB

type striped

formatted no

formatting yes

mdisk_id

mdisk_name

FC_id

FC_name

RC_id

RC_name

vdisk_UID 6005076400F580049800000000000010

preferred_node_id 2

fast_write_state not_empty

cache readwrite

udid

fc_map_count 0

sync_rate 50

copy_count 1

se_copy_count 0

filesystem

mirror_write_priority latency

RC_change no

compressed_copy_count 0

access_IO_group_count 1

last_access_time

parent_mdisk_grp_id 0

parent_mdisk_grp_name Pool0

owner_type none

owner_id

owner_name

encrypt yes

volume_id 8

volume_name Tiger

function

throttle_id

throttle_name

IOPs_limit

bandwidth_limit_MB

volume_group_id

volume_group_name

cloud_backup_enabled no

cloud_account_id

cloud_account_name

backup_status off

last_backup_time

restore_status none

backup_grain_size

deduplicated_copy_count 0

copy_id 0

status online

sync yes

auto_delete no

primary yes

mdisk_grp_id 0

mdisk_grp_name Pool0

type striped

mdisk_id

mdisk_name

fast_write_state not_empty

used_capacity 10.00GB

real_capacity 10.00GB

free_capacity 0.00MB

overallocation 100

autoexpand

warning

grainsize

se_copy no

easy_tier on

easy_tier_status balanced

tier tier0_flash

tier_capacity 0.00MB

tier tier1_flash

tier_capacity 0.00MB

tier tier_enterprise

tier_capacity 0.00MB

tier tier_nearline

tier_capacity 10.00GB

compressed_copy no

uncompressed_used_capacity 10.00GB

parent_mdisk_grp_id 0

parent_mdisk_grp_name Pool0

encrypt yes

deduplicated_copy no

used_capacity_before_reduction 0.00MB

The required tasks to create a volume are complete.

7.10.2 Volume information

Use the lsvdisk command to display summary information about all volumes that are defined within the IBM Spectrum Virtualize environment, as shown in Example 7-3. To display more detailed information about a specific volume, run the command again with the volume name or volume ID as a parameter, as in Example 7-2.

Example 7-3 The lsvdisk command

IBM_Storwize:ITSO:superuser>lsvdisk -delim ' '

id name IO_group_id IO_group_name status mdisk_grp_id mdisk_grp_name capacity type FC_id FC_name RC_id RC_name vdisk_UID fc_map_count copy_count fast_write_state se_copy_count RC_change compressed_copy_count parent_mdisk_grp_id parent_mdisk_grp_name formatting encrypt volume_id volume_name function

0 A_MIRRORED_VOL_1 0 io_grp0 online many many 10.00GB many 6005076400F580049800000000000002 0 2 empty 0 no 0 many many no yes 0 A_MIRRORED_VOL_1

1 COMPRESSED_VOL_1 0 io_grp0 online 1 Pool1 15.00GB striped 6005076400F580049800000000000003 0 1 empty 0 no 1 1 Pool1 no yes 1 COMPRESSED_VOL_1

2 vdisk0 0 io_grp0 online 0 Pool0 10.00GB striped 6005076400F580049800000000000004 0 1 empty 0 no 0 0 Pool0 no yes 2 vdisk0

3 THIN_PROVISION_VOL_1 0 io_grp0 online 0 Pool0 100.00GB striped 6005076400F580049800000000000005 0 1 empty 1 no 0 0 Pool0 no yes 3 THIN_PROVISION_VOL_1

4 COMPRESSED_VOL_2 0 io_grp0 online 1 Pool1 30.00GB striped 6005076400F580049800000000000006 0 1 empty 0 no 1 1 Pool1 no yes 4 COMPRESSED_VOL_2

5 COMPRESS_VOL_3 0 io_grp0 online 1 Pool1 30.00GB striped 6005076400F580049800000000000007 0 1 empty 0 no 1 1 Pool1 no yes 5 COMPRESS_VOL_3

6 MIRRORED_SYNC_RATE_16 0 io_grp0 online many many 10.00GB many 6005076400F580049800000000000008 0 2 empty 0 no 0 many many no yes 6 MIRRORED_SYNC_RATE_16

7 THIN_PROVISION_MIRRORED_VOL 0 io_grp0 online many many 10.00GB many 6005076400F580049800000000000009 0 2 empty 2 no 0 many many no yes 7 THIN_PROVISION_MIRRORED_VOL

8 Tiger 0 io_grp0 online 0 Pool0 10.00GB striped 6005076400F580049800000000000010 0 1 not_empty 0 no 0 0 Pool0 yes yes 8 Tiger

12 vdisk0_restore 0 io_grp0 online 0 Pool0 10.00GB striped 6005076400F58004980000000000000E 0 1 empty 0 no 0 0 Pool0 no yes 12 vdisk0_restore

13 vdisk0_restore1 0 io_grp0 online 0 Pool0 10.00GB striped 6005076400F58004980000000000000F 0 1 empty 0 no 0 0 Pool0 no yes 13 vdisk0_restore1
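With -delim ' ', empty fields such as FC_id and RC_name become runs of spaces, which are easily lost when output is copied, as in the flattened rows of Example 7-3. A non-space delimiter, for example -delim :, keeps empty fields visible. The following Python sketch parses such output into dictionaries; parse_delimited is a hypothetical helper, and the sample uses a shortened, illustrative subset of the lsvdisk columns.

```python
def parse_delimited(text, delim=":"):
    """Parse header-plus-rows CLI output into a list of dicts, keeping empty fields."""
    lines = [ln for ln in text.splitlines() if ln.strip()]
    header = lines[0].split(delim)
    rows = []
    for ln in lines[1:]:
        values = ln.split(delim)  # str.split(delim) preserves empty fields
        if len(values) != len(header):
            raise ValueError(f"expected {len(header)} fields, got {len(values)}: {ln!r}")
        rows.append(dict(zip(header, values)))
    return rows


sample = (
    "id:name:IO_group_name:status:capacity:FC_id:function\n"
    "8:Tiger:io_grp0:online:10.00GB::\n"
)
rows = parse_delimited(sample)
```

Here rows[0]["FC_id"] is an empty string rather than a shifted neighboring value, which is the failure mode of splitting space-delimited output on whitespace.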

7.10.3 Creating a thin-provisioned volume

Example 7-4 shows how to create a thin-provisioned volume. In addition to the normal parameters, consider the following parameters:

-rsize This parameter makes the volume a thin-provisioned volume; without it, the volume is fully allocated (mandatory for thin-provisioned volumes).

-autoexpand This parameter specifies that thin-provisioned volume copies automatically expand their real capacities by allocating new extents from their storage pool (optional).

-grainsize This parameter sets the grain size in kilobytes (KB) for a thin-provisioned volume (optional).

Example 7-4 Using the command mkvdisk

IBM_Storwize:ITSO:superuser>mkvdisk -mdiskgrp Pool0 -iogrp 0 -vtype striped -size 10 -unit gb -rsize 50% -autoexpand -grainsize 256

Virtual Disk, id [9], successfully created

This command creates a 10 GB thin-provisioned volume. The volume belongs to the storage pool that is named Pool0 and is owned by I/O Group io_grp0 (-iogrp 0). The initial real capacity is 50% of the virtual capacity (5 GB), and it automatically expands until the volume size of 10 GB is reached. The grain size is set to 256 KB, which is the default.

|

Disk size: When the -rsize parameter is used, you have the following options: disk_size, disk_size_percentage, and auto.

Specify the disk_size_percentage value by using an integer, or an integer that is immediately followed by the percent (%) symbol.

Specify the units for a disk_size integer by using the -unit parameter. The default is MB. The -rsize value can be greater than, equal to, or less than the size of the volume.

The auto option creates a volume copy that uses the entire size of the MDisk. If you specify the -rsize auto option, you must also specify the -vtype image option.

An entry of 1 GB uses 1,024 MB.

|
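The -rsize rules in the box above can be expressed as a small Python function that returns the initial real capacity in MB. It is a sketch under stated assumptions: a value ending in % is a percentage of the virtual capacity, any other integer is a size in the -unit units (MB by default, 1 GB = 1,024 MB), and only the mb, gb, and tb units are handled. real_capacity_mb is a hypothetical name.

```python
UNIT_MB = {"mb": 1, "gb": 1024, "tb": 1024 * 1024}


def real_capacity_mb(rsize, virtual_mb, unit="mb"):
    """Initial real capacity (MB) for an -rsize value and a virtual capacity in MB."""
    text = str(rsize).strip().lower()
    if text == "auto":
        # auto uses the whole MDisk and applies only to image mode copies.
        raise ValueError("-rsize auto requires an image mode copy (-vtype image)")
    if text.endswith("%"):
        return virtual_mb * int(text[:-1]) / 100  # percentage of virtual capacity
    return int(text) * UNIT_MB[unit.lower()]  # absolute size in -unit units
```

For Example 7-4 (-size 10 -unit gb -rsize 50%), the function gives 5,120 MB, that is, 5 GB of initial real capacity.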

7.10.4 Creating a volume in image mode

This virtualization type enables an image mode volume to be created when an MDisk has data on it, perhaps from a pre-virtualized subsystem. When an image mode volume is created, it directly corresponds to the previously unmanaged MDisk from which it was created. Therefore, except for a thin-provisioned image mode volume, logical block address (LBA) x of the volume equals LBA x of the MDisk.

You can use an image mode volume to bring a non-virtualized disk under the control of the clustered system. After the disk is under the control of the clustered system, you can migrate the volume from the single managed disk.

When the first MDisk extent is migrated, the volume is no longer an image mode volume. You can add an image mode volume to an already populated storage pool with other types of volumes, such as striped or sequential volumes.


Size: An image mode volume must be at least 512 bytes (the capacity cannot be 0). In practice, the minimum size that can be specified for an image mode volume is the extent size of the storage pool to which it is added, with a minimum of 16 MiB.


You must use the -mdisk parameter to specify an MDisk that has a mode of unmanaged. The -fmtdisk parameter cannot be used to create an image mode volume.


Capacity: If you create a mirrored volume from two image mode MDisks without specifying a -size value, the capacity of the resulting volume is the smaller of the two MDisks. The remaining space on the larger MDisk is inaccessible.

If you do not specify the -size parameter when you create an image mode volume, the entire MDisk capacity is used.

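The capacity rule for a volume that is mirrored from two image mode MDisks reduces to taking the minimum of the two MDisk sizes. The following Python sketch shows the arithmetic; the helper name and the MB sizes in the usage line are hypothetical.

```python
def mirrored_image_capacity(mdisk_a_mb: int, mdisk_b_mb: int) -> tuple[int, int]:
    """Hypothetical helper: capacity of a volume mirrored from two image mode
    MDisks when no size is specified, and the space left inaccessible on the
    larger MDisk."""
    capacity = min(mdisk_a_mb, mdisk_b_mb)  # the smaller MDisk sets the size
    inaccessible = max(mdisk_a_mb, mdisk_b_mb) - capacity
    return capacity, inaccessible


print(mirrored_image_capacity(1024, 1536))  # → (1024, 512)
```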

Use the mkvdisk command to create an image mode volume, as shown in Example 7-5.

Example 7-5 The mkvdisk (image mode) command

IBM_Storwize:ITSO:superuser>mkvdisk -mdiskgrp ITSO_Pool1 -iogrp 0 -mdisk mdisk25 -vtype image -name Image_Volume_A

Virtual Disk, id [6], successfully created

This command creates an image mode volume that is called Image_Volume_A that uses the mdisk25 MDisk. The volume belongs to the storage pool ITSO_Pool1, and the volume is owned by the io_grp0 I/O Group.

If you run the lsvdisk command again, the volume that is named Image_Volume_A has a type of image, as shown in Example 7-6.

Example 7-6 The lsvdisk command

IBM_Storwize:ITSO:superuser>lsvdisk -filtervalue type=image

id name IO_group_id IO_group_name status mdisk_grp_id mdisk_grp_name capacity type FC_id FC_name RC_id RC_name vdisk_UID fc_map_count copy_count fast_write_state se_copy_count RC_change compressed_copy_count parent_mdisk_grp_id parent_mdisk_grp_name formatting encrypt volume_id volume_name function

6 Image_Volume_A 0 io_grp0 online 5 ITSO_Pool1 1.00GB image 6005076801FE80840800000000000021 0 1 empty 0 no 0 5 ITSO_Pool1 no no 6 Image_Volume_A

7.10.5 Adding a mirrored volume copy

You can create a mirrored copy of a volume, which keeps the volume accessible even when the MDisk on which one of its copies depends becomes unavailable. The copy can be placed in a separate storage pool, or it can be created as an image mode copy of the volume. Copies increase the availability of data, but they are not separate objects: you can create or change mirrored copies only through the volume.

In addition, you can use volume mirroring as an alternative method of migrating volumes between storage pools. For example, if you have a non-mirrored volume in one storage pool and want to migrate that volume to another storage pool, you can add a copy of the volume and specify the second storage pool. After the copies are synchronized, you can delete the copy in the first storage pool. The volume remains online while it is copied to the second storage pool.

To create a mirrored copy of a volume, use the addvdiskcopy command. This command adds a copy of the chosen volume to the selected storage pool, which changes a non-mirrored volume into a mirrored volume.

The following scenario shows the creation of a mirrored volume copy from one storage pool to another storage pool. As you can see in Example 7-7, the volume has a single copy with copy_id 0 in pool Pool0.

Example 7-7 The lsvdisk command

IBM_Storwize:ITSO:superuser>lsvdisk 2

id 2

name vdisk0

IO_group_id 0

IO_group_name io_grp0

status online

mdisk_grp_id 0

mdisk_grp_name Pool0

capacity 10.00GB

type striped

formatted yes

formatting no

mdisk_id

mdisk_name

FC_id

FC_name

RC_id

RC_name

vdisk_UID 6005076400F580049800000000000004

preferred_node_id 2

fast_write_state empty

cache readonly

udid

fc_map_count 0

sync_rate 50

copy_count 1

se_copy_count 0

filesystem

mirror_write_priority latency

RC_change no

compressed_copy_count 0

access_IO_group_count 1

last_access_time

parent_mdisk_grp_id 0

parent_mdisk_grp_name Pool0

owner_type none

owner_id

owner_name

encrypt yes

volume_id 2

volume_name vdisk0

function

throttle_id

throttle_name

IOPs_limit

bandwidth_limit_MB

volume_group_id

volume_group_name

cloud_backup_enabled no

cloud_account_id

cloud_account_name

backup_status off

last_backup_time

restore_status none

backup_grain_size

deduplicated_copy_count 0

copy_id 0

status online

sync yes

auto_delete no

primary yes

mdisk_grp_id 0

mdisk_grp_name Pool0

type striped

mdisk_id

mdisk_name

fast_write_state empty

used_capacity 10.00GB

real_capacity 10.00GB

free_capacity 0.00MB

overallocation 100

autoexpand

warning

grainsize

se_copy no

easy_tier on

easy_tier_status balanced

tier tier0_flash

tier_capacity 0.00MB

tier tier1_flash

tier_capacity 0.00MB

tier tier_enterprise

tier_capacity 0.00MB

tier tier_nearline

tier_capacity 10.00GB

compressed_copy no

uncompressed_used_capacity 10.00GB

parent_mdisk_grp_id 0

parent_mdisk_grp_name Pool0

encrypt yes

deduplicated_copy no

used_capacity_before_reduction 0.00MB

Example 7-8 shows adding the volume copy mirror by using the addvdiskcopy command.

Example 7-8 The addvdiskcopy command

IBM_Storwize:ITSO:superuser>addvdiskcopy -mdiskgrp Pool1 -vtype striped -unit gb vdisk0

Vdisk [2] copy [1] successfully created

During the synchronization process, you can check the status by using the lsvdisksyncprogress command. As shown in Example 7-9, the first time that the status is checked, the synchronization progress is at 0%, and the estimated completion time is 171018232305. The estimated completion time is displayed in the YYMMDDHHMMSS format; in this example, it is 2017, Oct-18 23:23:05. The second time that the command is run, the progress status is at 100%, and the synchronization is complete.

Example 7-9 Synchronization

IBM_Storwize:ITSO:superuser>lsvdisksyncprogress

vdisk_id vdisk_name copy_id progress estimated_completion_time

2 vdisk0 1 0 171018232305

IBM_Storwize:ITSO:superuser>lsvdisksyncprogress

vdisk_id vdisk_name copy_id progress estimated_completion_time

2 vdisk0 1 100
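The estimated_completion_time value can also be decoded in a script. The following Python sketch parses lsvdisksyncprogress output that was captured as text (for example, over SSH) and converts the YYMMDDHHMMSS value into a datetime. The function name parse_sync_progress is hypothetical. The sketch assumes that a fully synchronized copy simply omits the last column, as in the second run of Example 7-9.

```python
from datetime import datetime

# Text as printed by lsvdisksyncprogress in Example 7-9 (first run).
SAMPLE = """\
vdisk_id vdisk_name copy_id progress estimated_completion_time
2 vdisk0 1 0 171018232305
"""


def parse_sync_progress(text: str) -> list[dict]:
    """Hypothetical helper: turn lsvdisksyncprogress output into dictionaries."""
    lines = [line for line in text.splitlines() if line.strip()]
    header = lines[0].split()
    rows = []
    for line in lines[1:]:
        fields = line.split()
        # A fully synchronized copy shows no completion time, so pad the row.
        fields += [""] * (len(header) - len(fields))
        row = dict(zip(header, fields))
        row["progress"] = int(row["progress"])
        stamp = row["estimated_completion_time"]
        # YYMMDDHHMMSS; Python's %y maps 00-68 to 2000-2068.
        row["estimated_completion_time"] = (
            datetime.strptime(stamp, "%y%m%d%H%M%S") if stamp else None
        )
        rows.append(row)
    return rows


for row in parse_sync_progress(SAMPLE):
    print(row["vdisk_name"], row["progress"], row["estimated_completion_time"])
    # → vdisk0 0 2017-10-18 23:23:05
```

A monitoring script could call lsvdisksyncprogress periodically and stop when progress reaches 100 and the completion time is None.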

As you can see in Example 7-10, the new mirrored volume copy (copy_id 1) was added and can be seen by using the lsvdisk command.

Example 7-10 The lsvdisk command

IBM_Storwize:ITSO:superuser>lsvdisk vdisk0

id 2

name vdisk0

IO_group_id 0

IO_group_name io_grp0

status online

mdisk_grp_id many

mdisk_grp_name many

capacity 10.00GB

type many

formatted yes

formatting no

mdisk_id many

mdisk_name many

FC_id

FC_name

RC_id

RC_name

vdisk_UID 6005076400F580049800000000000004

preferred_node_id 2

fast_write_state empty

cache readonly

udid

fc_map_count 0

sync_rate 50

copy_count 2

se_copy_count 0

filesystem

mirror_write_priority latency

RC_change no

compressed_copy_count 0

access_IO_group_count 1

last_access_time

parent_mdisk_grp_id many

parent_mdisk_grp_name many

owner_type none

owner_id

owner_name

encrypt yes

volume_id 2

volume_name vdisk0

function

throttle_id

throttle_name

IOPs_limit

bandwidth_limit_MB

volume_group_id

volume_group_name

cloud_backup_enabled no

cloud_account_id

cloud_account_name

backup_status off

last_backup_time

restore_status none

backup_grain_size

deduplicated_copy_count 0

copy_id 0

status online

sync yes

auto_delete no

primary yes

mdisk_grp_id 0

mdisk_grp_name Pool0

type striped

mdisk_id

mdisk_name

fast_write_state empty

used_capacity 10.00GB

real_capacity 10.00GB

free_capacity 0.00MB

overallocation 100

autoexpand

warning

grainsize

se_copy no

easy_tier on

easy_tier_status balanced

tier tier0_flash

tier_capacity 0.00MB

tier tier1_flash

tier_capacity 0.00MB

tier tier_enterprise

tier_capacity 0.00MB

tier tier_nearline

tier_capacity 10.00GB

compressed_copy no

uncompressed_used_capacity 10.00GB

parent_mdisk_grp_id 0

parent_mdisk_grp_name Pool0

encrypt yes

deduplicated_copy no

used_capacity_before_reduction 0.00MB

copy_id 1

status online

sync yes

auto_delete no

primary no

mdisk_grp_id 1

mdisk_grp_name Pool1

type striped

mdisk_id

mdisk_name

fast_write_state empty

used_capacity 10.00GB

real_capacity 10.00GB

free_capacity 0.00MB

overallocation 100

autoexpand

warning

grainsize

se_copy no

easy_tier on

easy_tier_status balanced

tier tier0_flash

tier_capacity 0.00MB

tier tier1_flash

tier_capacity 0.00MB

tier tier_enterprise

tier_capacity 0.00MB

tier tier_nearline

tier_capacity 10.00GB

compressed_copy no

uncompressed_used_capacity 10.00GB

parent_mdisk_grp_id 1

parent_mdisk_grp_name Pool1

encrypt yes

deduplicated_copy no

used_capacity_before_reduction 0.00MB
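In the detailed view, fields such as status, sync, primary, and mdisk_grp_name repeat once for the volume and once for each copy. A script that reads this output must therefore track which copy section each line belongs to. The following Python sketch assumes that the output was captured as text with the prompt line removed and that every copy section begins with a copy_id line, as in Example 7-10. It skips blank lines and keeps the repeating tier and tier_capacity pairs as a list. The function name and the abbreviated sample are illustrative.

```python
# Abbreviated detailed lsvdisk output from Example 7-10 (prompt line removed).
SAMPLE = """\
id 2
name vdisk0
copy_count 2
FC_id
copy_id 0
sync yes
primary yes
mdisk_grp_name Pool0
tier tier_nearline
tier_capacity 10.00GB
copy_id 1
sync yes
primary no
mdisk_grp_name Pool1
tier tier_nearline
tier_capacity 10.00GB
"""


def parse_lsvdisk_detail(text: str) -> tuple[dict, list[dict]]:
    """Hypothetical helper: split detailed lsvdisk output into the volume-level
    fields and one dictionary per volume copy."""
    volume: dict = {}
    copies: list[dict] = []
    current = volume
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank separator lines
        # Each line is "<field> <value>"; the value can be empty (e.g. FC_id).
        key, _, value = line.partition(" ")
        if key == "copy_id":
            # Every copy_id line starts a new per-copy section.
            current = {}
            copies.append(current)
        if key == "tier":
            # tier/tier_capacity pairs repeat, so collect them as a list.
            current.setdefault("tiers", []).append([value, None])
        elif key == "tier_capacity":
            current["tiers"][-1][1] = value
        else:
            current[key] = value
    return volume, copies


volume, copies = parse_lsvdisk_detail(SAMPLE)
for entry in copies:
    print(entry["copy_id"], entry["mdisk_grp_name"], entry["primary"], entry["sync"])
# → 0 Pool0 yes yes
# → 1 Pool1 no yes
```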

When you add a volume copy mirror, you can define it with parameters that differ from those of the original volume copy. For example, you can add a thin-provisioned copy to a fully allocated volume to migrate a fully allocated volume to a thin-provisioned volume, and vice versa.


Volume copy mirror parameters: To change the parameters of a volume copy mirror, you must delete the volume copy and redefine it with the new values.


Now, you can rename the mirrored volume by using the chvdisk command. In Example 7-11, a volume is renamed from VOL_NO_MIRROR to VOL_WITH_MIRROR.

Example 7-11 Volume name changes

IBM_Storwize:ITSO:superuser>chvdisk -name VOL_WITH_MIRROR VOL_NO_MIRROR

IBM_Storwize:ITSO:superuser>

7.10.6 Adding a compressed volume copy

Use the addvdiskcopy command to add a compressed copy to an existing volume.

This example shows the usage of addvdiskcopy with the -autodelete flag set. The -autodelete flag specifies that the primary copy is deleted after the secondary copy is synchronized.

Example 7-12 shows a shortened lsvdisk output of an uncompressed volume.

Example 7-12 An uncompressed volume

IBM_Storwize:ITSO:superuser>lsvdisk UNCOMPRESSED_VOL

id 9

name UNCOMPRESSED_VOL

IO_group_id 0

IO_group_name io_grp0

status online

...

copy_id 0

status online

sync yes

auto_delete no

primary yes

...

compressed_copy no

...

Example 7-13 adds a compressed copy with the -autodelete flag set.

Example 7-13 Compressed copy

IBM_Storwize:ITSO:superuser>addvdiskcopy -autodelete -rsize 2 -mdiskgrp 0 -compressed UNCOMPRESSED_VOL

Vdisk [9] copy [1] successfully created

Example 7-14 shows the lsvdisk output with an additional compressed volume copy (copy 1) and with volume copy 0 set to auto_delete yes.

Example 7-14 The lsvdisk command output

IBM_Storwize:ITSO:superuser>lsvdisk UNCOMPRESSED_VOL

id 9

name UNCOMPRESSED_VOL

IO_group_id 0

IO_group_name io_grp0

status online

...

compressed_copy_count 2

...

copy_id 0

status online

sync yes

auto_delete yes

primary yes

...

copy_id 1

status online

sync no

auto_delete no

primary no

...