Vulnerability Disclosure

Introduction

As a security professional, you’ll find it difficult to work with computers without finding vulnerabilities. Of course, if you’re actively looking, you’ll find more. Regardless of how you find the information, you have to decide what to do with it.

There are many factors that determine how much detail you supply, and to whom. First of all, the amount of detail you can provide depends on the amount of time you have to spend on the issue, as well as your interest level. If you aren’t interested in doing all of the research yourself, there are ways to basically pass the information along to other researchers, which are also discussed in this chapter. You may have gotten as far as fully developing an exploit, or the problem may be so easy to exploit that no special code is required. In that instance, you have some decisions to make—such as whether you plan to publish the exploit, and when.

How much detail to publish, up to and including whether to publish exploit code, is the subject of much debate at present. There is debate, because by making vulnerability details public, you are not only allowing security professionals to check their own systems for the problems, but you are also providing this same information to malicious hackers or cyber adversaries. Because there is no easy and effective way to contain the security knowledge by teaching only well-meaning people how to find security problems, cyber adversaries also learn by using the same information. But, recall that some malicious hackers already have access to such information and share it among themselves. It is unlikely that everyone will agree on a single answer anytime soon. In this chapter, we discuss the pros and cons, rights and wrongs, of the various options.

Vulnerability Disclosure and Cyber Adversaries

Hundreds of new vulnerabilities are discovered and published every month, often complete with exploit code, each offering cyber adversaries new tools to add to their arsenal and therefore augmenting their adversarial capability. The marked increase in the discovery of software vulnerabilities can be partly attributed to the marked increase in software development by private companies and individuals, who are developing new technologies and improving on aging protocol implementations such as FTP (File Transfer Protocol) servers. Although the evidence to support this argument is plentiful, there has also been a marked increase in the exploration of software vulnerabilities and the development of techniques to take advantage of them, knowledge that through the end of the 20th century remained something of a black art. Although many of those publishing information pertaining to software vulnerabilities do so with good intentions, the same well-intentioned information can be, and has been many times over, used for adversarial purposes. For this reason, heated discussions have been commonplace at security conferences and within online groups regarding what individuals see as the “right” way to do things. One school of thought deems it wrong to publish detailed vulnerability information to the public domain, preferring to inform the software vendor of the issue so that it is fixed “silently” in a subsequent software version. Another school of thought believes that all vulnerability information should be “free,” published to the public at the same time the information is passed to its vendor. Although there is no real justification for the view, this full-disclosure approach is described by some as the “black hat” approach.

To gain an understanding of some of the more common disclosure procedures, the next few pages will examine the semantics of these procedures and the benefits and drawbacks of each, from the perspective of both the adversary (offender) and defender. Through doing this, we ultimately hope to glean an insight into the ways in which various vulnerability disclosure procedures benefit the cyber adversary’s abilities and to gain an improved understanding of the problems that organizations such as the CERT® Coordination Center and software vendors face when addressing vulnerabilities.

“Free For All”: Full Disclosure

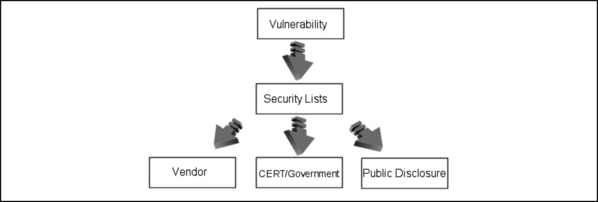

Figure 7.1 depicts what some view as the more reckless of the vulnerability disclosure processes. The ethic that tends to drive this form of disclosure is usually that of free speech, that is, that all information pertaining to a vulnerability should be available to all via a noncensored medium.

The distribution medium for this form of information is more often than not the growing number of noncensored security mailing lists such as “Full Disclosure,” and in cases where the list moderator has deemed that information contained within a post is suitable, the Bugtraq mailing list operated by Symantec Corp. Because vulnerabilities are released to a single point (such as a mailing list), the time it takes for the information to be picked up by organizations such as CERT and the respective vendor depends on how frequently the relevant bodies check that list. In spite of the great efforts made by CERT and other advisory councils, it can be days or even weeks before information posted to less mainstream security lists is noticed.

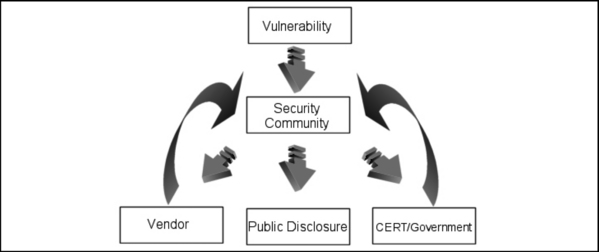

Due to the nature of organizations such as CERT, a vendor’s first notification that a vulnerability in one of its products has been announced via one of these lists will often come from CERT itself. It is within the remit of CERT (and organizations like it) to ensure that the respective vendor is aware of an issue and, ultimately, that the issue is remedied, allowing a CERT advisory to be released, complete with information about the fix that the vendor has come up with. The way in which advisory councils respond to emerging threats and feed information back into the computer security community is depicted in Figure 7.2.

“This Process Takes Time”

In some cases, weeks may pass before a vendor will respond to an issue. The reasons can range from issues related to the complexity of the vulnerability, resulting in extended time being required to remedy the problem, to the quality assurance process, which the now-fixed software component may go through prior to it being released into the public domain. The less security-savvy vendor can take prolonged periods of time to even acknowledge the presence of an exploitable condition.

Unfixed Vulnerability Attack Capability and Attack Inhibition Considerations

Any one, or a combination, of the previously mentioned events leaves the skilled cyber adversary with a window of time, which in many cases will result in a greatly increased capability against the target. This window of opportunity will remain open until changes are made to the target to inhibit the attack. Such changes may include, but are not limited to, the vulnerability being fixed by the vendor. Other attack inhibitors (or risk mitigators) that may be introduced at this point include “workarounds.” Workarounds attempt to mitigate the risk of a vulnerability being exploited in cases where vendor fixes are not yet available or cannot be installed for operational reasons. An example of a workaround for a network software daemon may be to deny access to the vulnerable network daemon from untrusted networks such as the Internet. Although this and many other such workarounds may not prevent an attack from occurring, they do create a situation where the adversary must consume additional resources. For example, the adversary may need to gain access to networks that remain trusted by the vulnerable network daemon for this particular attack. Depending on the adversary’s motivation and capability, such a shift in the resources required to succeed may force the adversary into pursuing alternate attack options that are more likely to succeed, or force a decision that the target is no longer worth engaging.
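The network-daemon workaround described above amounts to a source-address allowlist. The following is a minimal sketch in Python, assuming hypothetical trusted ranges (`TRUSTED_NETWORKS`) and a hypothetical helper `is_allowed`; neither comes from the text, and in practice such a rule would more likely live in a firewall or in the daemon's own access-control configuration.

```python
import ipaddress

# Hypothetical trusted ranges, chosen only for illustration.
TRUSTED_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("192.168.10.0/24"),
]


def is_allowed(source_ip: str) -> bool:
    """Return True only if the connection originates from a trusted network."""
    address = ipaddress.ip_address(source_ip)
    return any(address in network for network in TRUSTED_NETWORKS)
```

A rule of this kind does not remove the flaw. It only forces the adversary to first obtain a foothold inside one of the trusted ranges, which is the additional resource cost the paragraph describes.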

Although attack inhibitors may be used as an effective countermeasure, during the period of time between a vendor fix being released and information pertaining to a vulnerability being available to the masses, we make three assumptions when considering an inhibitor’s capability of preventing a successful attack:

- That we (the defender) have actually been informed about the vulnerability.
- That the information provided is sufficient to implement the discussed attack inhibitor.
- That the adversary we are trying to stop knew about the vulnerability and gained the capability to take advantage of (exploit) it (a) at the same time as the implementation of the inhibitor or (b) after implementation of the inhibitor.

In spite of a defender’s ability to throw attack inhibitors into the attack equation, in almost all cases where vulnerability information is disclosed, the adversary has the upper hand, if only for a certain period of time.

We hypothesize that an adversary may have the capability to exploit a recently announced vulnerability and that there is currently no vendor fix to remedy it. We also hypothesize that the adversary has the motivation to engage a target affected by this vulnerability. Although there are multiple variables, there are several observations we can make regarding the adversarial risks associated with the attack. (These risks relate to the inhibitor object within the attacker properties.) Before we outline the impact of the risks as a result of this situation, let’s review the known adversarial resources (see Table 7.1).

Table 7.1

| Resource | Value |
| --- | --- |
| Time | Potentially limited due to vulnerability information being in the public domain |
| Technologies | Sufficient to take advantage of disclosed flaw |
| Finance | Unknown |
| Initial access | Sufficient to take advantage of disclosed flaw as long as the target state does not change |

Probability of Success Given an Attempt

The adversary’s probability of success is high, given his or her elevated capability against the target. Probability of success will be impacted by the amount of time between the vulnerability’s disclosure and time of attack. The more time that has passed, the more likely it is that the target’s state will have changed, requiring the adversary to use additional resources to offset any inhibitors that may have been introduced.

Probability of Detection Given an Attempt

Probability of detection will be low to begin with. But as time goes by, the chance of detection given an attempt will increase, because it becomes more likely that the type of attack will be recognized.

Figure 7.3 depicts a typical shift in probability of success, given the attempt versus probability of detection over a four-day period. Note that day 0 is considered to be the day that the vulnerability is released into the public domain and not the more traditional interpretation of the “0 day.” As you can see, the probability of success decreases over time as the probability of detection increases. We postulate that day 3 may represent the day on which a vendor fix is released (perhaps with accompanying CERT advisory), resulting in the decrease in the probability of success given an attempt [P(S/A)] and an increase in the probability of detection given an attempt [P(D/A)]. Note that unless the adversary can observe a change in state of the target (that it is no longer vulnerable to his attack), the P(S/A) calculation will never reach zero.
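The shape of the two curves described above can be sketched numerically. The following Python example is illustrative only: the function name `perceived_probabilities`, the starting values, the daily drift, the size of the step on the fix day, and the floor and ceiling are all assumptions made for this sketch and are not read from Figure 7.3.

```python
def perceived_probabilities(day: int, fix_day: int = 3) -> tuple[float, float]:
    """Return (P(S/A), P(D/A)) for a given day after public disclosure.

    All constants are hypothetical and exist only to reproduce the
    qualitative behavior described in the text.
    """
    p_success = 0.9 - 0.05 * day   # success drifts down as targets change state
    p_detect = 0.1 + 0.05 * day    # detection drifts up as the attack becomes known
    if day >= fix_day:
        # A vendor fix (assumed here to arrive on day 3) shifts both values sharply.
        p_success -= 0.4
        p_detect += 0.3
    # The adversary cannot observe that the target is fixed, so P(S/A) never hits zero.
    p_success = max(p_success, 0.05)
    p_detect = min(p_detect, 0.95)
    return p_success, p_detect


for day in range(5):
    p_s, p_d = perceived_probabilities(day)
    print(f"day {day}: P(S/A)={p_s:.2f}  P(D/A)={p_d:.2f}")
```

The floor on P(S/A) encodes the final observation in the paragraph: without evidence that the target's state has changed, the adversary's estimate of success shrinks but does not vanish.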

“Symmetric” Full Disclosure

A “symmetric” full disclosure describes the disclosure procedures that attempt to ensure a symmetric, full disclosure of a vulnerability (and often an exploit) to all reachable information security communities, without first notifying any specific group of individuals.

This kind of disclosure procedure is perhaps most commonly followed by those who lack an understanding of responsible disclosure procedures, often due to a lack of experience coordinating vulnerability releases with software firms or those capable of providing an official remedy for the issue. In the past, it has also been commonplace for this form of disclosure to be employed by those wanting to publicly discredit software vendors, or those responsible for maintaining the software concerned. It’s noteworthy that when this occurs, exploit code is often published with the information pertaining to the vulnerability, in order to increase the impact of the vulnerability on the information technology community, in an attempt to discredit the software vendor further.

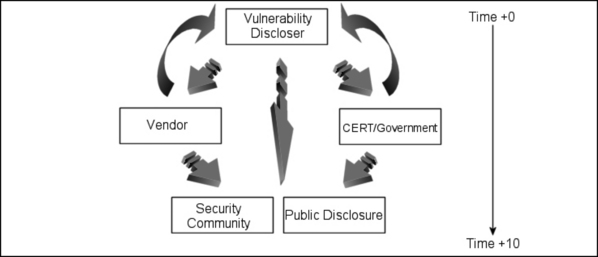

Figure 7.4 attempts to demonstrate the symmetric information flow, which occurs when a vulnerability is released into the public domain using an “equal-opportunity,” full-disclosure type procedure (or lack of procedure as is often the case). It’s important to note that public disclosure is defined (at least in this context) as the point at which information pertaining to a vulnerability enters the public domain on a nonsubscription basis—the point at which the vulnerability information appears on security or news Web sites, for example. Although most security mailing lists such as Bugtraq and Vuln-Dev are available to the general public, they operate on a subscription and therefore not fully public basis. Although the procedure depicted in Figure 7.1 does not constitute responsible disclosure in the minds of most responsible disclosure advocates and software vendors, the vulnerability is being disclosed to the vendor, government, and the rest of the security community at the same point in time.

The result of this type of disclosure is that the software vendor and government vulnerability advisory authorities such as CERT stand a fighting chance of conducting their own vulnerability assessment, working on a fix or workaround for the issue, and ultimately releasing their own advisory in a reasonable time scale, before the issue draws the attention of the general public and perhaps more importantly these days, the media.

Although this form of disclosure procedure leaves those responsible for finding a remedy for the disclosed issues a certain amount of time to ensure that critical assets are protected from the new threat, the ball (at least for a short time) is in the court of the cyber adversary. Depending on the skill of the individual and quality of information disclosed, it can take a highly skilled and motivated adversary as little as an hour to acquire the capability to exploit the disclosed vulnerability.

Responsible Restricted “Need to Know” Disclosure

When we talk about restricted disclosure, we are not necessarily referring to restrictions that are placed on the distribution of information pertaining to vulnerabilities, but rather the restrictive nature of the information provided in an advisory or vulnerability alert release. For the purposes of characterizing disclosure types, we define responsible, restricted disclosure as the procedure used when partial information is released, while ensuring that the software or service vendor has been given a reasonable opportunity to remedy the issue and save face prior to disclosure. It will usually also imply that organizations such as CERT/CC and MITRE have been notified of the issue and given the opportunity to prepare for the disclosure. Note that restricted disclosure does not imply responsible disclosure. The primary arguments for following a restricted disclosure model follow from the belief that the disclosure of “too much” vulnerability information is unnecessary for most end users and encourages the development of proof of concept code to take advantage of (exploit) the disclosed issue. Secondary reasons for following a restricted disclosure model can include the individual disclosing the vulnerability having an insufficient research capability to release detailed vulnerability information.

Restricted disclosure is becoming an increasingly less common practice due to a recognition of its lack of benefits; however, many closed-source software and service vendors believe it is better to release partial information for vulnerabilities that have been discovered “in-house,” since the public doesn’t “need to know” the vulnerability details—just how to fix it.

Responsible, Partial Disclosure and Attack Inhibition Considerations

Unless additional resources are invested in an attack, the capabilities (given an attempt) of a cyber adversary who aims to exploit a flaw using partial vulnerability information, responsibly disclosed and retrieved from a forum such as Bugtraq or Vuln-Dev, will be severely impaired compared to our first two full disclosure–oriented procedures. The two obvious reasons for this are (1) that due to the nature of the disclosure, by the time the adversary intercepts the information, a vendor fix is already available for the issue and (2) that only partial information regarding the vulnerability has been released, setting aside the possibility that proof of concept code was also released by the original vulnerability source. Even if the adversary develops proof of concept code (to take advantage of the issue on a vulnerable target), this takes time (a resource), which has been seriously curtailed by the vulnerability being “known.” Therefore, a high likelihood exists that the state of a potentially vulnerable target will change sooner rather than later. Also, because the vulnerability is “known” and possible attack vectors are also known, the likelihood of detection is greatly heightened.

Figure 7.5 plots comparative values of probability of success given an attempt versus probability of detection given an attempt. Note that because of the previously discussed facts, driven by the nature of the vulnerability disclosure, the initial probability of success is greatly reduced, and the probability of detection is heightened.

“Responsible” Full Disclosure

A common misconception amongst many involved in the information technology industry is that providing “full” disclosure implies recklessness or a lack of responsibility.

Full, responsible disclosure is the term we use to refer to disclosure procedures that provide the security communities with all (“full”) information held by the discloser pertaining to a disclosed vulnerability and also make provisions to ensure that considerable effort is made to inform the product or service vendor/provider (respectively) of the issues affecting them.

So-called full-disclosure policies adopted by many independent security enthusiasts and large security firms alike often specify a multistage approach for contacting the parties responsible for maintaining the product or service, up to a point that the vulnerability has been remedied or (in less frequent cases) the vendor/provider is deemed to have no interest in fixing the problem. Responsible, full-disclosure policies tend to differ on their approach to contacting organizations such as CERT/CC and MITRE; however, it is more common than not that such organizations will be contacted prior to the (full) disclosure of information to the security community (and ultimately the public).

Figure 7.6 depicts the timeline of a typical full, responsible vulnerability disclosure. As previously discussed, the vendor and more often than not CERT, MITRE, and other such organizations will be contacted prior to public disclosure. Typically, during the time period between vendor/CERT/MITRE notification and public disclosure, a substantial amount of coordination will occur between the respective party and the source of the vulnerability information. The purpose of these communications is usually twofold: to clarify details of the vulnerability, which at this stage may remain unclear, and to provide the original discloser with updated information regarding the status of any remedies that the vendor or service provider may have implemented. Such information is often published by the original discloser alongside the information they eventually publish to the security communities and public. This follows the full disclosure principle of disclosing “all” information, whether it be in regard to the vulnerability itself or vendor fix details.

Although in many cases the vulnerability discloser will contact the vendor, organizations such as CERT and MITRE ensure that the vendor has been contacted with appropriate information to provision for the less-common cases where an individual has contacted such an organization and not the vendor or service provider.

Responsible, Full Disclosure Capability and Attack Inhibition Considerations

The adversary’s perceived capability would take a significant blow if an increased level of technical data pertaining to a vulnerability were also offered and available through the full disclosure of unfixed vulnerabilities. This would increase the likelihood that the state of the target has changed, and would thus modify the perceived likelihood of a successful attack. In addition, the likelihood of detection increases, due to the high probability that the security community would detect attack vectors at an early stage (see Figure 7.7).

In spite of adverse changes in the two attack inhibitors discussed, the initial probability of success given an attempt remains high, due to the likelihood that the information disclosed either included proof of concept code or alluded to ways in which the issue could be exploited in a robust manner. This makes the exploit-writing adversary’s task much easier than if only a few abstract details regarding exploitation were disclosed.

Although the perceived probability of success given an attempt is high at first, it tapers off at a greater rate than the hypothetical data used to represent P(S/A) for the restricted responsible disclosure model.

Although many systems may remain theoretically vulnerable for days—even weeks post-disclosure—the detail of the disclosure may have allowed effective workarounds (or threat mitigators) to be introduced to protect the target from this specific threat. In the case of a large production environment, this is a likely scenario, because certain mission-critical systems cannot always be taken out of service at the instant a new vulnerability is disclosed.

Although the use of the responsible full-disclosure model (the full disclosure of vulnerability information and possible attack vectors) results in an initially high perceived probability of success given an attempt, that perceived probability falls at a far greater rate over time. Eventually, the value of P(S/A) coupled with P(D/A) may very well result in the attack being aborted due to the adverse conditions introduced by the disclosure procedures.
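The abort decision described above can be sketched as a simple threshold test. This is a minimal illustration only: the function name `should_abort`, both threshold values, and the choice to abort when either condition is met are assumptions introduced here, not values or rules given in the text.

```python
def should_abort(p_success: float, p_detect: float,
                 min_success: float = 0.3, max_detect: float = 0.6) -> bool:
    """Return True when conditions are adverse enough to abandon the attack.

    Both thresholds are hypothetical; a real adversary's tolerance would
    depend on motivation and available resources.
    """
    return p_success < min_success or p_detect > max_detect
```

For example, under these assumed thresholds an adversary perceiving P(S/A) = 0.9 and P(D/A) = 0.1 on the day of disclosure would proceed, while the same adversary perceiving P(S/A) = 0.2 some days later would not.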

Security Firm “Value Added” Disclosure Model

A growing number of information security firms, already well known for their disclosure advisories into the public domain, have begun to offer a “value-added” vulnerability alert and advisory service. Typically, such services will involve a vulnerability being found in-house, or paid for by a provider of such services, and the disclosure of the vulnerability is made in the form of an alert or full advisory to a closed group of private and/or public “customers” who pay a subscription-driven fee for the service.

The time at which the affected vendor or service provider will be notified of the issue will vary, depending on the policy of the advisory service provider. However, it is often after an initial alert has been sent out to paying customers. Certain security firms will also use such alert services to leverage the sale of professional services for clients who are concerned about a disclosed vulnerability and want to mitigate the risk of the vulnerability being exploited. After disclosure to paying customers (and, conditionally, the vendor), it is the norm that the vulnerability will then be disclosed to the remainder of the security community and ultimately the general public. Again, the nature of disclosures to paying clients and the security community will vary, based upon the policies of the security firm providing the services; however, most appear to be adopting the full-disclosure model.

Although we will deal with the semantics of prepublic disclosure issues further on in this chapter, the value-added disclosure model creates the possibility for several scenarios—unique to this disclosure model—to arise. The risk of adversarial insiders within large organizations is a very real one. One of the possible scenarios caused by the value-add disclosure model is in the lack of control the provider of the value-add service has over a disclosed advisory (and often exploit code) once a vulnerability has been disclosed to its paying customer base.

Value-Add Disclosure Model Capability and Attack Inhibition Considerations

Often, value-add advisories and alerts will be circulated throughout the systems administration and operations groups within the organizational structures of firms paying for the service. Although the providers of value-add vulnerability alerting services will go to great lengths to ensure legally tight nondisclosure contracts are in place between themselves and their customers, this is seldom sufficient to prevent disclosed information from entering the (strategically) “wrong” hands.

Although there are only a few publicly documented instances where a value-add vulnerability advisory provider has published an advisory to a paying client, resulting in an insider within the paying client using the information to carry out or augment an adversarial act, let’s hypothesize about how the previously discussed attack inhibitors may be impacted in such a scenario.

The values displayed in Figure 7.8 are rather arbitrary because the conclusions we assume the adversary will draw (such as the adversary’s ability to enumerate the target’s capability to detect the attack given an attempt) will depend upon other data to which we do not necessarily have access, such as available skill-related resources.

This said, it does represent the kind of perceived probability of success (given an attempt) and perceived probability of detection (given an attempt) that would be typical of an adversary who has insider access to vulnerability data provided by a value-add vulnerability information provider.

Regardless of other resources available to the adversary, we are able to observe a greatly elevated initial perceived probability of success given an attempt, due to the low probability that the target’s properties include sufficient countermeasures to mitigate the attack, and a low initial perceived probability of detection given an attempt, due to the low likelihood that the target’s properties include the capability to detect the attack. Of course, even in the most extreme cases, the perceived probability of detection given an attempt will never be zero, and likewise, the probability of success will never be certain, due to the existence of the uncertainty inhibitor. The adversary may very well be uncertain regarding issues such as the possibility of the attack being detected through intrusion-detection heuristics or even whether the maintainers of the target also subscribe to the very same value-add vulnerability notification service.

Non-Disclosure

In many cases, it wouldn’t be exaggerating to state that “For every vulnerability that is found and disclosed to the computer security community, five are found and silently fixed by the respective software or service vendor.”

As the software vendor and Internet service provider industries become more aware of the damage that could occur through the disclosure of problems inherent to their products, they are forced into taking a hard line regarding how they, as businesses, will handle newly discovered problems in their products. For the handful of software and service vendors who choose to remain relatively open regarding the disclosure of information pertaining to vulnerabilities discovered “in-house,” a vast number remain who will fix such vulnerabilities without informing the security community, the public, or their customers of said issues with their product or service.

Common reasons for employing this type of policy include a fear that disclosing the issue will cause irreparable damage to the company’s profile and the fear that the disclosure of any vulnerability details, as few and abstract as they may be, may lead to the compromise of vulnerable customer sites and ultimately an adverse impact on the reputation of the software vendor or service provider.

In contrast to the nondisclosing vendor phenomenon, a vast community of computer security enthusiasts equally choose not to disclose information pertaining to vulnerabilities they have discovered to anyone other than those closest to them. More often than not, they keep the vulnerability secret because the longer it is kept “private,” the longer potential target systems will remain vulnerable, hence heightening the perceived probability of success given an attempt.

The Vulnerability Disclosure Pyramid Metric

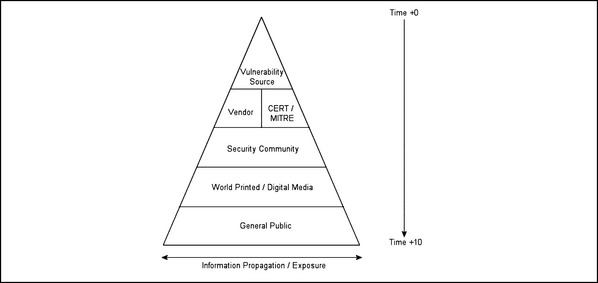

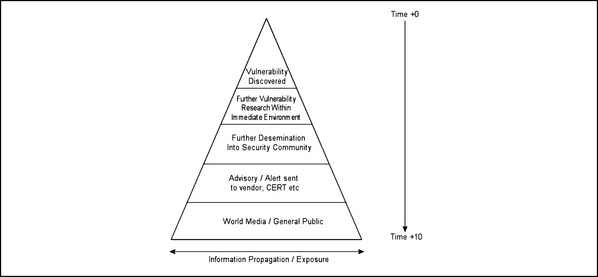

When we talk about vulnerability disclosure, one of the first models we tend to look to is the “pyramid metric.” A pyramid is used because its increasing breadth from top to bottom (the X axis) is said to be representative of the increased distribution of information pertaining to a vulnerability, in relation to time, which is therefore represented by the Y axis. In simple terms, the wider the pyramid gets, the more people know about any given vulnerability. Figure 7.9 depicts the disclosure pyramid metric in the light of a vulnerability being disclosed via a practice such as full responsible disclosure.

The pyramid metric clearly works well as a visual aid; however, up until now, we have dealt with vulnerability disclosure and the consequences of vulnerability disclosure in the light of an individual placed at a lower point in the pyramid than the “source” of the vulnerability (those who make the original disclosure). In doing this, we make a fatal error—the discloser of a vulnerability is hardly ever the actual source of the vulnerability (he or she who discovered its existence).

To remedy this error, let us consider the following improved version of the disclosure pyramid (see Figure 7.10).

Figure 7.10 depicts the new and improved version of the disclosure pyramid, which includes two additional primary information dissemination points:

- The vulnerability’s actual discovery
- The research that takes place on the discovery of a vulnerability, along with further investigations into details such as possible ways in which the vulnerability could be exploited (attack vectors) and, more often than not, the development of a program (known as an exploit) to prove the original concept that the issue can indeed be exploited, therefore constituting a software flaw rather than “just a software bug”

Pyramid Metric Capability and Attack Inhibition

We hypothesize that for any given vulnerability, the higher the level at which an individual resides within the pyramid metric, the higher the individual’s perceived (and more often than not, actual) capability will be. The theory behind this is based around two points, both of which pertain to an individual’s position within the pyramid:

The higher an individual is in the pyramid, the more time they have to perform an adversarial act against a target prior to the availability of a fix or workaround (attack inhibitors). And, as we discussed earlier in this chapter, the earlier in the disclosure timeline the adversary resides, the higher the perceived probability of success given an attempt and the lower the perceived probability of detection given an attempt will be.

The higher in the disclosure pyramid an individual resides, the fewer “other” people know about a given issue. In other words, the higher in the pyramid an adversary resides, the less likely it is that the attack will be detected (because fewer people know about the attack) and the more likely it is to succeed, given that countermeasures have probably not yet been introduced.

To summarize, the higher in the disclosure pyramid an adversary is, the higher the perceived probability of success given an attempt [P(S/A)] and the lower the perceived probability of detection given an attempt [P(D/A)].

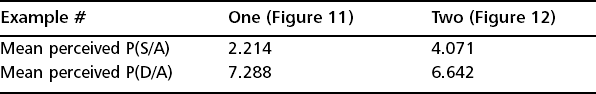

Pyramid Metric and Capability—A Composite Picture Pyramid

Due to the nature of vulnerability disclosure, it would be somewhat naive to presume that a given adversary falls at the same point in the disclosure pyramid for every vulnerability. We must, however, remind ourselves that when we perform a characterization of an adversary, it is on a per-attack basis. In other words, what we are really trying to assess is the “average” or composite placing of the adversary within the disclosure pyramid in order to measure typical inhibitor levels such as the perceived probability of success given an attempt. To demonstrate this point more clearly, Figure 7.11 and Figure 7.12 depict the composite placing for two entirely different adversary types.

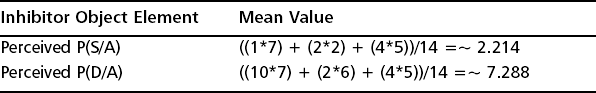

Figure 7.11 depicts a disclosure pyramid complete with the points at which a single adversary was placed for multiple vulnerabilities. This kind of result is typical of adversaries who have a degree of interaction with the security community but not to the extent that they are discovering their own vulnerabilities. Although not implied, they are more than likely not developing their own proof of concept (exploit) code to take advantage of the depicted vulnerabilities. We postulate that through the ability to determine the “mean” placing, we are also able to determine the “mean” values for inhibitors, which are impacted upon by technical capability. For purposes of demonstrating this theory, score values have been attributed to the respective sections into which the pyramid diagram has been divided. In addition, some example values of the previously discussed inhibitors have been attributed to the values displayed within the pyramid for the respective categories. (Note that a high number represents a high perceived probability.)

Given the 14 vulnerability placings displayed in Figure 7.11 and the data in Table 7.2, the mean inhibitor values for the adversary depicted in Figure 7.11 are illustrated in Table 7.3.

Table 7.2

| Pyramid Category Value | Perceived Probability of Success Given an Attempt | Perceived Probability of Detection Given an Attempt |
| --- | --- | --- |
| 1 | 1 (Low) | 10 (High) |
| 2 | 2 | 6 |
| 3 | 4 | 5 |
| 4 | 6 | 2 |
| 5 | 9 (High) | 1 (Low) |

Admittedly, these numbers are rather arbitrary until we put them into context through a comparison with a second example of an adversary whose placings within the disclosure pyramid are more typical of an individual who is deeply embedded within the information security community and is far more likely to be involved in the development of proof of concept code to take advantage of newly discovered vulnerabilities. Note that in spite of the adversary’s involvement in the security community, a number of vulnerability placings remain toward the bottom of the disclosure pyramid. This is because it’s nearly impossible for a single adversary to be involved in the discovery or research of each and every vulnerability, which would be a prerequisite if every placing were to be toward the top of the pyramid metric. The mean inhibitor values for Figure 7.12 are shown in Table 7.4.

Table 7.4

Mean Inhibitor Values for Figure 7.12

| Inhibitor Object Element | Mean Value |
| --- | --- |
| Perceived P(S/A) | ((6*1) + (5*3) + (3*6) + (2*9))/14 ≈ 4.071 |
| Perceived P(D/A) | ((6*10) + (5*5) + (3*2) + (2*1))/14 ≈ 6.643 |
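The composite calculation can be sketched in a few lines of Python. This is an illustrative sketch only: the score values come from Table 7.2, but the two sets of placings are hypothetical (they are not read from Figure 7.11 or Figure 7.12), and the helper name `mean_inhibitors` is our own. It also assumes that a higher category value corresponds to a higher position in the pyramid, as the scores in Table 7.2 suggest.

```python
# Table 7.2 from the text: pyramid category -> (perceived P(S/A), perceived P(D/A)).
TABLE_7_2 = {
    1: (1, 10),
    2: (2, 6),
    3: (4, 5),
    4: (6, 2),
    5: (9, 1),
}


def mean_inhibitors(placings):
    """Return (mean P(S/A), mean P(D/A)) for a list of pyramid category values.

    Each entry in `placings` is the category (1-5) at which the adversary
    sat in the disclosure pyramid for one vulnerability.
    """
    if not placings:
        raise ValueError("at least one placing is required")
    n = len(placings)
    mean_success = sum(TABLE_7_2[c][0] for c in placings) / n
    mean_detection = sum(TABLE_7_2[c][1] for c in placings) / n
    return mean_success, mean_detection


# Hypothetical placings (assumptions for illustration), 14 vulnerabilities each.
low_placed = [1] * 6 + [2] * 4 + [3] * 3 + [4] * 1
high_placed = [1] * 2 + [3] * 3 + [4] * 4 + [5] * 5

for label, placings in (("low-placed", low_placed), ("high-placed", high_placed)):
    success, detection = mean_inhibitors(placings)
    print(f"{label}: mean P(S/A) = {success:.3f}, mean P(D/A) = {detection:.3f}")
```

With these assumed placings, the adversary who sits higher in the pyramid ends up with a higher mean perceived probability of success and a lower mean perceived probability of detection, which is the relationship the two example adversaries are meant to illustrate.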

Comparison of Mean Inhibitor Object Element Values

The higher an adversary’s composite placing within the vulnerability disclosure pyramid, the lower the mean perceived probability of detection given an attempt, and the higher the mean perceived probability of success given an attempt, as demonstrated in Table 7.5.

Do not mistakenly assume that the disclosure pyramid metric is also an indicator of the threat posed by an individual who is often placed at a high location within the disclosure pyramid. Threat is measured by observing both the motivations and capabilities of an adversary against a defined asset, and the pyramid metric is not any kind of measure of adversarial motivation. It does, however, play an important role in outlining one of the key relationships between an adversary’s technical resources and perceived attack preference observations, such as perceived probability of success given an attempt.

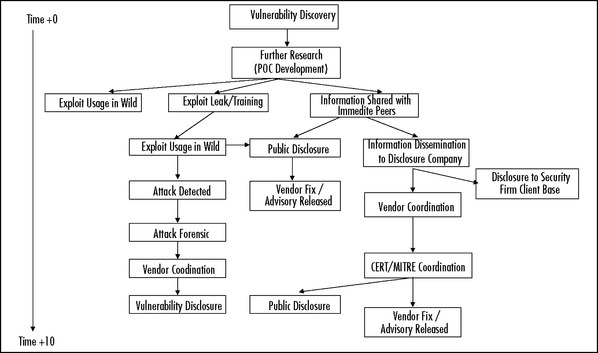

The Disclosure Food Chain

As we allude to the existence of multiple possible procedures and eventualities that may play a part in the life cycle of data pertaining to system vulnerabilities, the disclosure pyramid becomes increasingly complex, eventually outliving its usefulness. This resulted in the birth of what is now known as the “disclosure food chain,” a pyramid-like web chart. Similar to the disclosure pyramid, it depicts the dissemination of vulnerability data to the public domain over time, but it also attempts to demonstrate the multiple directions in which vulnerability data can flow, depending on the way in which the vulnerability data was disclosed. A partial disclosure food chain is depicted in Figure 7.13, outlining some of the more common routes that vulnerability information takes post-discovery.

Security Advisories and Misinformation

Amongst the plethora of invaluable pearls of security wisdom available on forums such as the full-disclosure and even Bugtraq mailing lists, the more informed reader may find a few advisories and purported security alerts that for some reason just don’t seem to make sense. Although there’s a chance the information you’ve been reading was in fact written by a 14-year-old more interested in sending out “shoutz” to his hacker friends than in conveying any kind of useful information, there’s an equal chance that the information you’ve read is part of a plot to misinform the computer security community in some shape or form.

As corporate security rose higher on the agendas of IT managers around the globe and was more frequently discussed within the world media, people’s perceptions about secure communications also changed.

People’s fear of what they have heard about on TV but fail to understand is the very artifact played upon by an increasing number of groups and individuals publishing misleading or otherwise entirely false information to forums such as the full-disclosure mailing list. Past examples have included false advisories concerning non-existent vulnerabilities in the popular server software OpenSSH, which caused panic among the ill-advised systems administration communities, and the perhaps more significant GOBBLES RIAA/mpg123 advisory. In January 2003, the infamous group GOBBLES posted an advisory to Bugtraq detailing a specific vulnerability in a relatively unknown media player called mpg123.

In the advisory, the author made claims that the group had been hired by the Recording Industry Association of America (RIAA) to author a software worm to take advantage of vulnerabilities in more popular media players (such as the Microsoft Windows Media Player). The aim of this alleged worm was to infect all users of file-sharing platforms such as KaZaa and WinMX, ultimately taking down all those who share copyrighted music (the copyright holders being clients of the RIAA). Of course, all but the information pertaining to the mpg123 vulnerability within the advisory was totally fabricated. At the time, however, the RIAA was well known for its grudge against the growing number of users of file-sharing software, and the mpg123 vulnerability was indeed real. This resulted in a huge number of individuals believing every word of the advisory. It wasn’t long before multiple large media networks caught on to the story, reciting the information contained within the original GOBBLES advisory as gospel and reaching an audience an order of magnitude larger than the entire security community put together. Naturally, the RIAA was quick to rebut the story as being entirely false. In turn, news stories were published to retract the previously posted (mis)information, but it proved a point: at the time, the public was extremely vulnerable to a form of social engineering that played on fear of the unknown and the fear of insecurity.

The following are extracts from the original GOBBLES advisory. Please note that certain statements have been removed due to lack of relevance and that GOBBLES is not in fact working for the RIAA.

“Several months ago, GOBBLES Security was recruited by the RIAA (riaa.org) to invent, create, and finally deploy the future of antipiracy tools. We focused on creating virii/worm hybrids to infect and spread over p2p nets. Until we became RIAA contracters, the best they could do was to passively monitor traffic. Our contributions to the RIAA have given them the power to actively control the majority of hosts using these networks. We focused our research on vulnerabilities in audio and video players. The idea was to come up with holes in various programs, so that we could spread malicious media through the p2p networks, and gain access to the host when the media was viewed.

During our research, we auditted and developed our hydra for the following media tools:

1. mplayer (www.mplayerhq.org)

2. WinAMP (www.winamp.com)

3. Windows Media Player (www.microsoft.com)

4. xine (xine.sourceforge.net)

5. mpg123 (http://www.mpg123.de)

6. xmms (http://www.xmms.org)

After developing robust exploits for each, we presented this first part of our research to the RIAA. They were pleased, and approved us to continue to phase two of the project – development of the mechanism by which the infection will spread.

It took us about a month to develop the complex hydra, and another month to bring it up to the standards of excellence that the RIAA demanded of us. In the end, we submitted them what is perhaps the most sophisticated tool for compromising millions of computers in moments.

Our system works by first infecting a single host. It then fingerprints a connecting host on the p2p network via passive traffic analysis, and determines what the best possible method of infection for that host would be. Then, the proper search results are sent back to the “victim” (not the hard-working artists who p2p technology rapes, and the RIAA protects). The user will then (hopefully) download the infected media file off the RIAA server, and later play it on their own machine.

When the player is exploited, a few things happen. First, all p2p-serving software on the machine is infected, which will allow it to infect other hosts on the p2p network. Next, all media on the machine is cataloged, and the full list is sent back to the RIAA headquarters (through specially crafted requests over the p2p networks), where it is added to their records and stored until a later time, when it can be used as evidence in criminal proceedings against those criminals who think it’s OK to break the law.

Our software worked better than even we hoped, and current reports indicate that nearly 95% of all p2p-participating hosts are now infected with the software that we developed for the RIAA.

If you participate in illegal file-sharing networks, your computer now belongs to the RIAA.

Your BlackIce Defender(tm) firewall will not help you.

Snort, RealSecure, Dragon, NFR, and all that other crap cannot detect this attack, or this type of attack.

Don’t fuck with the RIAA again, scriptkids.

We have our own private version of this hydra actively infecting p2p users, and building one giant ddosnet.

However, as a demonstration of how this system works, we’re providing the academic security community with a single example exploit, for a mpg123 bug that was found independently of our work for the RIAA, and is not covered under our agreement with the establishment.”

Summary

In this chapter, we have covered various real-world semantics of the vulnerability disclosure process, and in turn, how those semantics affect the adversarial capability. But what relevance does it have in the context of theoretical characterization?

Just as it is of great value to be able to understand the ways in which adversaries in the kinetic world acquire weapons (and their ability to make acquisitions), it is also of huge value to be able to characterize the lengths to which a cyber adversary must go in order to acquire technical capabilities. The vulnerability disclosure process and the structure of the vulnerability research communities play a vital role in determining the ease (or not) with which technical capabilities are acquired. As we have also seen, an ability to assess the ease with which an adversary may acquire technical capabilities through their placement in the disclosure food chain also gives us insight into the adversary’s attitude to attack inhibitors, such as the adversary’s perceived probability of success given an attempt and perceived probability of detection given an attempt.

Frequently Asked Questions

The following Frequently Asked Questions, answered by the authors of this book, are designed to both measure your understanding of the concepts presented in this chapter and to assist you with real-life implementation of these concepts. To have your questions about this chapter answered by the author, browse to www.syngress.com/solutions and click on the “Ask the Author” form. You will also gain access to thousands of other FAQs at ITFAQnet.com

Q: I want to make sure I keep my systems secure ahead of the curve. How can I keep up with the latest vulnerabilities?

A: The best way is to subscribe to the Bugtraq mailing list, which you can do by sending a blank e-mail to [email protected]. Once you reply to the confirmation, your subscription will begin.

For Windows-based security holes, subscribe to NTBugtraq by sending an e-mail to [email protected]. In the body of your message, include the phrase “SUBSCRIBE ntbugtraq Firstname Lastname” using your first name and last name in the areas specified.

Q: I’ve found an aberration and I’m not sure if it is a vulnerability or not, or I’m fairly certain I have found a vulnerability, but I don’t have the time to perform the appropriate research and write up. What should I do?

A: You can submit undeveloped or questionable vulnerabilities to the vuln-dev mailing list by sending e-mail to [email protected]. This mailing list exists to allow people to report potential or undeveloped vulnerabilities. The idea is to help people who lack the expertise, time, or information needed to research a vulnerability. To subscribe to vuln-dev, send an e-mail to [email protected] with a blank message body. The mailing list will then send you a confirmation message for you to reply to before your subscription begins. You should be aware that by posting the potential or undeveloped vulnerability to the mailing list, you are in essence making it public.

Q: I was checking my system for a newly released vulnerability and I’ve discovered that the vulnerability is farther-reaching than the publisher described. Should I make a new posting of the information I’ve discovered?

A: Probably not. In a case like this, or if you find a similar and related vulnerability, first contact the person who first reported the vulnerability and compare notes. To limit the number of sources of input for a single vulnerability, you may decide that the original discoverer should issue the revised vulnerability information (while giving you due credit, of course). If the original posting was made anonymously, then you should consider a supplementary posting that includes documentation of your additional discoveries.

Q: I think I’ve found a problem. Should I test it somewhere besides my own system? (For example, Hotmail is at present a unique, proprietary system. How do you test Hotmail holes?)

A: In most countries, including the United States, it is illegal for you to break into computer systems or even attempt to do so, even if your intent is simply to test a vulnerability for the greater good. By testing the vulnerability on someone else’s system, you could potentially damage it or leave it open to attack by others. Before you test a vulnerability on someone else’s system, you must first obtain written permission. For legal purposes, your written permission should come from the owner of the system you plan to “attack.” Make sure you coordinate with that person so that he or she can monitor the system during your testing in case he or she needs to intervene to recover it after the test. If you can’t find someone who will allow you to test his or her system, you can try asking for help on the vuln-dev mailing list or some of the other vulnerability mailing lists. Members of those lists tend to be more open about such things. As for testing services like Hotmail, it can’t legally be done without the express written permission of Hotmail, and you may even be subject to a DMCA violation (see the sidebar earlier in the chapter), depending on the creativity of the vendor’s legal staff.

Q: I’ve attempted to report a security problem to a vendor, but they require you to have a support contract to report problems. What can I do?

A: Try calling their customer service line anyway, and explain to them that this security problem potentially affects all their customers. If that doesn’t work, try finding a customer of the vendor who does have a service contract. If you are having trouble finding such a person, look in any forums that may deal with the affected product or service. If you still come up empty-handed, it’s obvious the vendor does not provide an easy way to report security problems, so you should probably skip them and release the information to the public.