Mind model

Abstract

The technology of building a mind model, often called mind modeling, aims to explore and study the mechanisms of human thinking.

Keywords

Mind model; Turing machine; physical symbol system; SOAR model; ACT-R model; CAM model; cognitive cycle; PMJ model

The mind means all the spiritual activities of human beings, including emotion, will, sensibility, perception, representation, learning, memory, thought, intuition, etc. The mind issue is one of the most fundamental and significant issues of intelligence science [1]. People use modern scientific methods to study the form, process, and law of the integration of human irrational psychology and rational cognition. The technology of building a mind model is often called mind modeling, which aims to explore and study the human thinking mechanism, especially the human information processing mechanism, and provide new architecture and technical methods for designing a corresponding artificial intelligence system.

4.1 Mind

In intelligence science, “mind” means a series of cognitive abilities that enable individuals to be conscious, sense the outside world, think, make judgments, and remember things [2]. The mind is a human characteristic; however, other living creatures may also have minds.

The phenomenal and psychological aspects of the mind have long been intertwined. The phenomenal concept of mind is that of a consciously experienced mental state. This is the most puzzling aspect of the mind. The other is the psychological concept of mind, which treats the mind as the causal or explanatory basis of behavior. In this sense, a state is mental if it plays an appropriate causal role in producing behavior, or at least plays a proper role in explaining behavior.

According to the phenomenal concept, the mind is characterized by the way it feels, while according to the psychological concept, the mind is characterized by what it does. These two concepts of mind do not compete. Either of them may be a correct analysis of the mind. They cover different domains of phenomena, both of which are quite real.

A particular mental concept can often be analyzed as a phenomenal concept, a psychological concept, or a combination of both. For example, sensation is best seen as a phenomenal concept in its core meaning: to have a sensation is to be in a state with a certain feel. On the other hand, learning and memory are best viewed as psychological concepts. Roughly speaking, to learn something is to have one’s capacity to respond to certain environmental stimuli adjusted in an appropriate way. In general, phenomenal features of the mind are characterized by the way they seem to the subjects who have them, whereas psychological features are characterized by the roles they play in the causation or explanation of behavior.

The states of mind are psychological states, including beliefs, abilities, intentions, expectations, motivations, commitments, and so on. They are important factors in determining intelligent social and individual behavior. The concept of the human mind is related to thought and consciousness. It is the product of the development of human consciousness at a certain stage. Anthropologists believe that all creatures have some kind of mind and that mind development went through four stages: (1) Simple reflex stage: for example, the pupil contracts when the eye is stimulated by strong light, a response that cannot be controlled by consciousness. (2) Conditioned reflex stage: Pavlov’s famous experiment showed that a stimulus can come to make a dog salivate. (3) Tool stage: chimpanzees can get fruit from a tree using sticks. (4) Symbol stage: the ability to use language symbols to communicate with the outside world, which only humans possess. Therefore, compared with the animal mind at relatively early stages, the human mind is the product of mind development at the highest stage. The generation and development of human intelligence cannot be separated from humankind’s symbolic language.

4.1.1 Philosophy issues of mind

For a long time, people have tried to understand what the mind is from the perspectives of philosophy, religion, psychology, and cognitive science and to explore its unique nature. Many famous philosophers have studied this domain, including Plato, Aristotle, R. Descartes, G. W. Leibniz, I. Kant, M. Heidegger, J. R. Searle, D. Dennett, et al. Some psychologists, including S. Freud and W. James, also set up a series of influential theories about the nature of the human mind from the perspective of psychology in order to represent and define the mind. In the late 20th and early 21st centuries, scientists established and developed a variety of methods to describe the mind and its phenomena in the field of cognitive science. In another field, artificial intelligence began to explore the possibility of nonhuman minds by combining control theory and information theory. It also sought ways to reproduce the workings of the human mind in machines.

In recent years, philosophy of mind has developed rapidly and has become a fundamental and pivotal subject in philosophy. If the movement from modern to contemporary philosophy went through a kind of Copernican revolution, in which linguistic philosophy replaced epistemology as the hallmark of contemporary philosophy, then philosophy of mind has become the foundation of contemporary schools of thought. If the problems of ontology and epistemology cannot be solved without linguistic philosophy, then the problems of linguistic philosophy in turn depend on the exploration and development of philosophy of mind. For example, to explain the meaning, reference, nature, and characteristics of language, we must appeal to the intentionality of mental states (though not as the only factor). What is striking is the large number of works, the depth and breadth of the problems, the novel and unique insights, the fierce debates, and the rapid progress in this domain.

The study of philosophy of mind mainly concerns the form, scope, nature, and characteristics of the mind, the relationship between mind and body, mental content and its sources, and philosophical reflection [3] on the explanatory model of folk psychology. As cognitive science deepens, philosophy of mind has changed, and is still changing, traditional psychology. Because psychological phenomena are an important part of the structure of the cosmos, the newest explorations have touched on fundamental cosmological notions such as supervenience, dependence, determination, covariance, reduction, law, and so on. There are indications that philosophy of mind will be one of the most important sources and driving forces of future philosophy. From this point of view, philosophy of mind is not a narrow body of knowledge about the mental but a profound domain with a stable core, fuzzy boundaries, an open character, and a broad future. Its current focal issues are discussed next.

4.1.1.1 Mind–body problem

The mind–body problem involves the nature of psychological phenomenon and the relationship between the mind and body. The current debate is mainly concentrated on reductionism, functionalism, and the dilemma of realizationism and physicalism.

The core of the problem is the relationship between the mind and the brain and nervous system. The discussion concerns the mind–body dichotomy: whether the mind is to some extent independent of the human body (dualism), or whether mental phenomena can be regarded as physical phenomena, including neural activity (physicalism). It also concerns whether the mind is identical with our brain and its activity. Another question is whether only humans have minds, whether all or some animals and other creatures have minds, or even whether a human-made machine could have a mind.

Regardless of the relationship between the mind and the body, it is generally believed that the mind enables an individual to make subjective and intentional judgments about the environment. Accordingly, an individual can perceive and respond to stimulation via some medium and, at the same time, can think and feel.

4.1.1.2 Consciousness

Consciousness here is used in a limited sense, meaning the common awareness that runs through the various psychological phenomena of humans. Scientists have formed a special, independent research field around consciousness, and some have also put forward their own particular concepts of “consciousness.” The numerous problems of consciousness can be classified into two kinds: hard problems and easy problems. The former refers to the problem of experience, which attracts the lion’s share of attention.

4.1.1.3 Sensibility

Sensibility, here meaning qualia, is the subjective characteristic or phenomenological nature of a person’s experience of a psychological state of feeling. Nonphysicalists argue that physicalism has been able to assimilate all kinds of counterexamples except qualia. On this view, qualia form a new region of the psychological world, something nonphysical that cannot be explained by physical principles. Physicalists, however, counter these arguments in their effort to move physicalism forward.

4.1.1.4 Supervenience

Supervenience is a new problem and a new paradigm in philosophy of mind, referring to the characteristic that psychological phenomena depend on physical phenomena. It can also be generalized to other relations or attributes, becoming a universal philosophical category. Through this study, people try to grasp more deeply the essence and characteristics of mental phenomena and their status in the structure of the cosmos. They also look for a middle path between reductionism and dualism. All of this bears on the crucial problems of determination, reduction, necessity, and psychophysical laws in philosophy of mind and cosmology.

4.1.1.5 The language of thought

The language of thought is an internal, machine-language-like code that differs from natural language and serves as the real medium of thought in the human brain. Many people hold that natural language is ambiguous, so it cannot itself be the medium the brain uses for processing.

4.1.1.6 Intentionality and content theory

This is an old yet new problem at the intersection of linguistic philosophy and philosophy of mind. Many people think that the meaning of natural language is related to mental states and that the latter are rooted in the semantics of both the language of thought and mental representation. But what are the semantics of the language of thought? What is their relationship to the functions of mental states? What is their relationship to the syntax of psychological statements? All of these are focuses of debate.

4.1.1.7 Mental representation

Mental representation is the form in which people express, store, and process the information they acquire as they become acquainted with the world and with themselves. These studies reach deep into the internal structure, operation, and mechanisms of the mental world.

4.1.1.8 Machine mind

The machine mind raises the problem of “other minds”: is there any mind other than the human mind? If one exists, how can we recognize and prove that it exists? What are its basis, grounds, and process? On what basis can we judge whether an object such as a robot is intelligent? The debate focuses on skepticism about other minds and the argument from analogy.

Philosophers of mind have long argued about the relationship among phenomenal consciousness, the brain, and the mind. Recently, neuroscientists and philosophers have discussed which neural activity in the brain constitutes phenomenal consciousness and how to distinguish the neural correlates of consciousness from the neural tissue that constitutes it. What levels of neural activity in the brain constitute consciousness? Which phenomena are good candidates for study, such as binocular rivalry, attention, memory, emotion, pain, dreams, and coma? What should a science of consciousness know and explain in this field? How should the constitutive relationship be applied to the brain and mind, and how does it relate to other relationships such as identity, nature, understanding, generation, and causal preference? The literature [4] brings together the interdisciplinary discussion of these problems by neuroscientists and philosophers.

Experimental philosophy [5] is a new movement of the 21st century. In just over a decade, like a powerful cyclone, it has shaken philosophers’ confidence in the rationality of traditional research methods and sparked reflection on philosophical methodology. Amid heated debate, its influence has spread rapidly around the world.

4.1.2 Mind modeling

In intelligence science, research on the brain and on intelligence is closely related to the computational theory of mind. Generally, models are used to express how the mind works and to understand its working mechanisms. In 1980, Newell first proposed criteria for mind modeling [6]. In 1990, Newell described the human mind as a set of functional constraints and proposed 13 criteria for the mind [7]. In 2003, on the basis of Newell’s 13 criteria, Anderson et al. put forward the Newell test [8], which specifies the criteria a model of the human mind must meet and the conditions under which it works better. In 2013, the literature [9] analyzed the standards for mind models and, in order to construct a better mind model, proposed criteria for mind modeling.

4.1.2.1 To behave flexibly

The first criterion is that a system must behave flexibly as a function of the environment. Newell made clear that this requires computational universality and that it is the most important criterion for the human mind. Newell recognized the true flexibility in the human mind that made it deserving of this identification with computational universality, even as the modern computer is characterized as a Turing-equivalent device despite its physical limitations and occasional errors.

Although universality of computation is a feature of human cognition, it does not rule out specialized facilities for particular cognitive functions, just as a computer can have specialized processors. Nor does it mean that all things are equally easy to learn: some things are much easier for people to learn than others. In the field of language, “natural language” is emphasized, and natural languages are much easier to learn than non-natural ones. Commonly used artifacts are only a small fraction of the possible unnatural systems. Although people may approach the universality of computation, they acquire and execute only a small fraction of the computable functions.

4.1.2.2 Adaptive behavior

Adaptive behavior means that behavior must serve goals and be rationally related to things in the environment. Humans do not just perform arbitrary intelligent computations; they choose the ones that meet their needs. In 1991 Anderson proposed two levels of adaptivity: one concerns whether the basic processes of the architecture and their related forms provide useful functions; the other concerns whether the system as a whole, in its overall computation, meets people’s needs.

4.1.2.3 Real time

For a cognitive theory, flexibility alone is not enough. The theory must also explain how people can compute in real time, where “real time” means the time scale of human performance. For those who think in terms of neural networks, this places clear limits on cognition. Real time constrains both learning and performance: if it takes a lifetime to learn something, then in principle this kind of learning is not useful.

4.1.2.4 Large-scale knowledge base

One key to human adaptivity is that we can access a great deal of knowledge. Perhaps the biggest difference between human cognition and various “expert systems” is that in most cases people have the knowledge needed to take appropriate action. However, a large-scale knowledge base can cause problems. Not all knowledge is equally reliable or relevant, and knowledge relevant to the current situation can quickly become irrelevant. There may be serious problems in successfully storing all this knowledge and retrieving the relevant parts in a reasonable time.

4.1.2.5 Dynamic behavior

The real world is not as simple as solving mazes or the Tower of Hanoi. Changes in the world are beyond our expectation and control. Even when people’s actions are aimed at controlling the world, they have unexpected effects. Dealing with a dynamic and unpredictable environment is a precondition for the survival of all organisms. Given how much complexity people have built into their own environment, the need for dynamic response is mainly a cognitive problem. To deal with dynamic behavior, we need a theory of perception and action as well as a theory of cognition. Work on situated cognition emphasizes how cognition is structured by the external world. Supporters of this position argue that all cognition reflects the outside world. This is in sharp contrast to the earlier view that cognition can ignore the external world [10].

4.1.2.6 Knowledge integration

Newell called this criterion “symbols and abstraction.” Newell’s comment on it appears in his book Unified Theories of Cognition: the mind can use symbols and abstractions [7]. We know this just from observing ourselves. He never seemed to admit that there was any debate on the issue. Newell held that the existence of external symbols, such as written symbols and equations, is rarely disputed. He regarded symbols as concrete instances of the symbols of list-processing languages. Many of these symbols have no direct meaning, which differs from the sense of “symbol” in philosophical discussion or computation. In this narrow sense, symbols might seem unable to serve as a criterion for classifying theories. However, if we pay attention to his definition of physical symbols, we will understand the rationality of this criterion.

4.1.2.7 Use language

The seventh criterion is to use language, both natural and artificial. Newell claimed that language is a central feature of human mind–like activity and that language depends on symbol manipulation.

4.1.2.8 Consciousness

Newell acknowledged the importance of consciousness to human cognition as a whole. Newell asked us to consider all the criteria, not just one of them. Consciousness includes subconscious perception, implicit learning, memory, and metacognition.

4.1.2.9 Learning

Learning is another inescapable criterion for a theory of human cognition. A satisfactory cognitive theory must explain how human beings acquire their competence. People must have many different kinds of learning abilities, including semantic memory, episodic memory, skills, priming, and conditioning. There may be more than one way to learn.

4.1.2.10 Development

Development is the first of three constraints in Newell’s initial list for cognitive architectures. Although many cognitive theories imagine their functions as fully mature from the outset, in the real world human cognition is constrained by the growth of the organism and the corresponding experience. Human development is not an ability but a constraint.

4.1.2.11 Evolution

Human cognitive abilities must have arisen through evolution. Various elements have been proposed, such as specific capabilities like the ability to detect cheaters or the constraints of natural language, which evolved at specific times in the history of human evolution. A variant of the evolutionary constraint is the comparative constraint: how does the architecture of human cognition differ from that of other mammals? We have taken cognitive plasticity as one characteristic of human cognition, and language is certainly another. What is the basis of the human cognitive system, and what are its unique cognitive attributes?

4.1.2.12 Brain

The last constraint is the neural realization of cognition. Recent research has greatly increased the functional data on specific brain regions, which can be used to constrain cognitive theories.

The world of the mind is much more complex than the possible world described with mathematics and logic. How might we move from the finite, noncontradictory use of the deductive method to construct relatively simple possible worlds into the infinite, contradictory use of a variety of logic and cognitive methods in a more complex mind world?

The goal of intelligence science is to uncover the mysteries of the human mind. Its research can not only promote the development of artificial intelligence and reveal the essence and significance of life but also have extraordinary significance in promoting the development of modern science, especially psychology, physiology, linguistics, logic, cognitive science, brain science, mathematics, computer science, and even philosophy.

4.2 Turing machine

In 1936 the British scientist Turing submitted his famous paper “On Computable Numbers, with an Application to the Entscheidungsproblem” [11]. He put forward an abstract computation model that precisely defines the computable functions. In this pioneering paper, Turing gave “computable” a strict mathematical definition and introduced the famous Turing machine. The Turing machine is an abstract model rather than a material machine. It describes a very simple but powerful computing device that can compute every function that has ever been recognized as computable. Structurally, it resembles a finite state machine equipped with an unbounded tape.

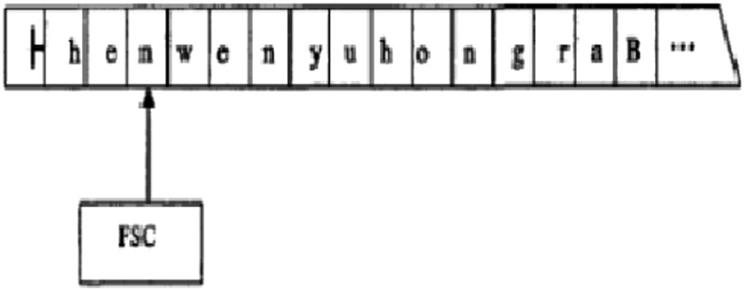

Fig. 4.1 shows that the Turing machine has a finite state controller (FSC) and an external storage device, an infinite tape that extends indefinitely to the right. (The tape head is shown at the top left of the figure.) The tape is divided into cells. Each cell can be blank or can contain a symbol from some finite alphabet. For convenience, we use a special symbol B, which is not in the alphabet, to represent the blank symbol. The FSC interacts with the tape through a head that can read and write. Generally, the cells to the right of the input are marked with B.


At any given moment, the FSC is in a certain state, and the read/write head scans one cell. Depending on the current state and the scanned symbol, the Turing machine performs an action: it changes the state of the FSC, erases the symbol in the scanned cell and writes a new symbol in its place (the new symbol may be the same as the old one, leaving the cell content unchanged), and moves the read/write head one cell to the left or right or keeps it stationary.

The Turing machine can be defined as a 5-tuple

$$M = (Q, \Sigma, q_0, q_a, \delta) \tag{4.1}$$

where

Q is a finite set of states;

Σ is a finite set of alphabet symbols on the tape, with the augmented set Σ′=Σ∪{B};

q0∈Q is the initial state;

qa∈Q is an accept state;

δ: Q × Σ′ → Q × Σ′ × {L, R, N} is the state transition function.

Generally, the transition function (or the rules of the Turing machine) is written as:

$$\delta(q, x) = (q', w, d), \quad d \in \{L, R, N\} \tag{4.2}$$

where x, w ∈ Σ ∪ {B}. The rule means that if the machine is in state q and scans symbol x, it moves to state q′, writes the new symbol w in the scanned cell, and moves the read/write head to the left (L), to the right (R), or not at all (N), as indicated by d.
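To make the 5-tuple and the rule format concrete, here is a minimal Python simulator. It is a sketch under stated assumptions, not Turing’s own formulation: δ is encoded as a dictionary keyed by (state, scanned symbol), the machine halts when no rule applies, and a left move at the leftmost cell leaves the head in place because the tape extends only to the right. The function run and the example machine UNARY_ADD, which adds two numbers written in unary such as 111+11, are hypothetical illustrations.

```python
from typing import Dict, Tuple

BLANK = "B"  # the blank symbol B, which is not in the alphabet Σ

# δ: Q × Σ′ → Q × Σ′ × {L, R, N}, encoded as a dictionary (an assumed encoding)
Delta = Dict[Tuple[str, str], Tuple[str, str, str]]

MOVES = {"L": -1, "R": 1, "N": 0}


def run(delta: Delta, tape_input: str, q0: str, qa: str,
        max_steps: int = 10_000) -> Tuple[bool, str]:
    """Simulate M = (Q, Σ, q0, qa, δ); return (accepted, final tape contents)."""
    tape = dict(enumerate(tape_input))  # cell index -> symbol; unset cells are blank
    head, state = 0, q0
    for _ in range(max_steps):
        if state == qa:
            break
        key = (state, tape.get(head, BLANK))  # current state and scanned symbol
        if key not in delta:  # no applicable rule: the machine halts
            break
        state, write, move = delta[key]
        tape[head] = write  # erase the scanned symbol and write the new one
        # The tape extends only to the right; assume a left move at cell 0 stays put.
        head = max(0, head + MOVES[move])
    cells = "".join(tape.get(i, BLANK) for i in range(max(tape, default=-1) + 1))
    return state == qa, cells.rstrip(BLANK)


# Hypothetical example machine: unary addition, e.g. "111+11" -> "11111".
UNARY_ADD: Delta = {
    ("q0", "1"): ("q0", "1", "R"),      # skip over the first operand
    ("q0", "+"): ("q1", "1", "R"),      # replace "+" with "1"
    ("q1", "1"): ("q1", "1", "R"),      # skip over the second operand
    ("q1", BLANK): ("q2", BLANK, "L"),  # reached the end; step back one cell
    ("q2", "1"): ("qa", BLANK, "N"),    # erase the surplus "1" and accept
}

if __name__ == "__main__":
    print(run(UNARY_ADD, "111+11", "q0", "qa"))  # (True, '11111')
```

Each dictionary entry corresponds to one rule of the form δ(q, x) = (q′, w, d). Keeping δ as data rather than code means other machines can be tried without changing the simulator.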

4.3 Physical symbol system

Simon defined a physical symbol system (SS) as a machine that, as it moves through time, produces an evolving collection of symbol structures. Symbol structures can and commonly do serve as internal representations (e.g., “mental images”) of the environment to which the SS is seeking to adapt [12]. A SS possesses a number of simple processes that operate upon symbol structures: processes that create, modify, copy, and destroy symbols. It must have a means for acquiring information from the external environment that can be encoded into internal symbols, as well as a means for producing symbols that initiate action upon the environment. Thus it must use symbols to designate objects, relations, and actions in the world external to the system.

Symbol systems are called “physical” to remind the reader that they exist in real-world devices, fabricated of glass and metal (computers) or flesh and blood (brains). In the past, we have been more accustomed to thinking of the SSs of mathematics and logic as abstract and disembodied, leaving out of account the paper and pencil and human minds that were required to actually bring them to life. Computers have transported SSs from the platonic heaven of ideas to the empirical world of actual processes carried out by machines or brains or by the two of them working together.

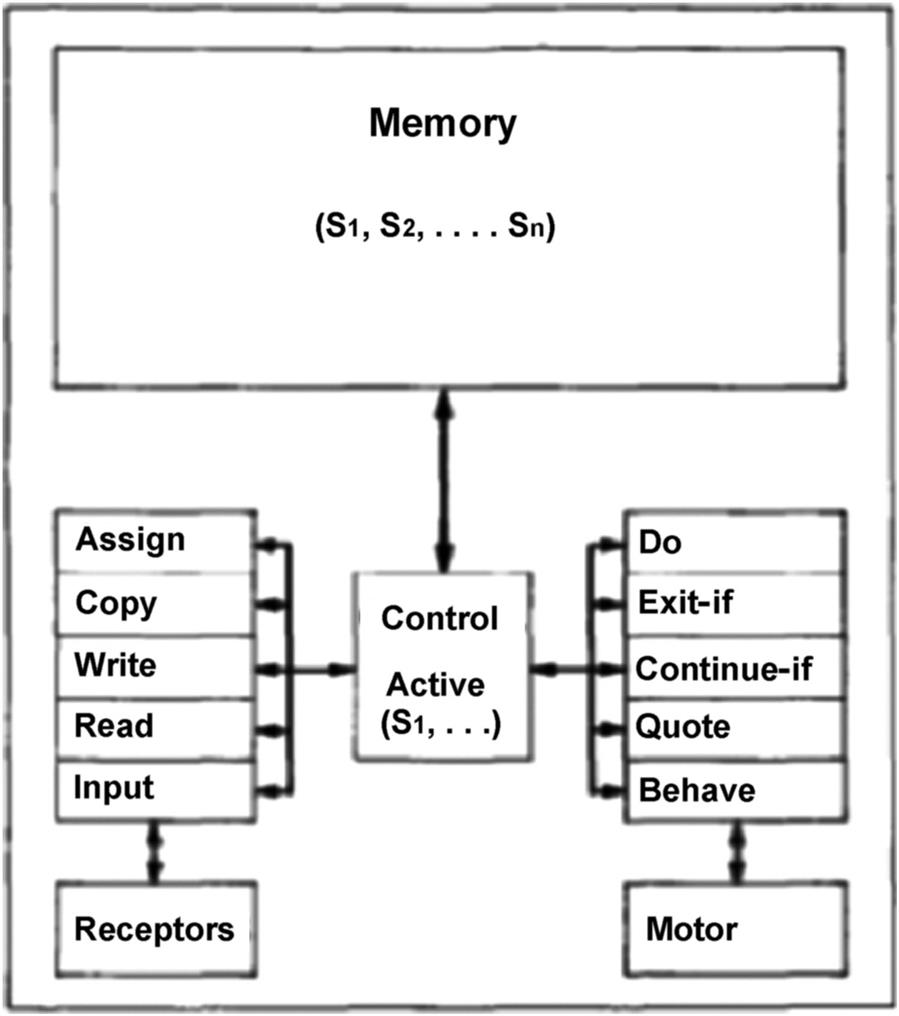

A SS consists of a memory, a set of operators, a control, an input, and an output. Its inputs are the objects in certain locations; its outputs are the modification or creation of objects in certain (usually different) locations. Its external behavior, then, consists of the outputs it produces as a function of its inputs. The larger system of environment plus SS forms a closed system, since the output objects either become or affect later input objects. The SS’s internal state consists of the state of its memory and the state of the control, and its internal behavior consists of the variation in this internal state over time. Fig. 4.2 shows the framework of a SS [6].

Two notions are central to this structure of expressions, symbols, and objects: designation and interpretation. Designation means that an expression designates an object if, given the expression, the system can either affect the object itself or behave in ways dependent on the object. Interpretation has been defined such that the system can interpret an expression if the expression designates a process and if, given the expression, the system can carry out the process. Interpretation implies a special form of dependent action: Given an expression, the system can perform the indicated process, which is to say it can evoke and execute its own processes from expressions that designate them.
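The five components of a SS (memory, operators, control, input, output) and the two notions of designation and interpretation can be illustrated by a small Python sketch. It assumes that symbol structures are tuples of strings kept under names in a dictionary and that an expression designates a process through its first symbol; the class PhysicalSymbolSystem, its methods, and the traffic-light example are hypothetical illustrations, not an implementation from the literature. The point to notice is that the programs the control runs (on-red, on-other) are themselves symbol structures in memory, which the system interprets.

```python
from typing import Callable, Dict, List, Tuple

Structure = Tuple[str, ...]  # a symbol structure: an ordered tuple of symbols


class PhysicalSymbolSystem:
    """Hypothetical toy SS: memory, operators, control, input, and output."""

    def __init__(self) -> None:
        self.memory: Dict[str, Structure] = {}  # named symbol structures
        # An expression whose first symbol names one of these processes designates it.
        self.processes: Dict[str, Callable[..., None]] = {
            "create": self.create,
            "modify": self.modify,
            "copy": self.copy,
            "destroy": self.destroy,
        }

    # Operators that create, modify, copy, and destroy symbol structures.
    def create(self, name: str, *symbols: str) -> None:
        self.memory[name] = tuple(symbols)

    def modify(self, name: str, index: str, symbol: str) -> None:
        parts = list(self.memory[name])
        parts[int(index)] = symbol
        self.memory[name] = tuple(parts)

    def copy(self, src: str, dst: str) -> None:
        self.memory[dst] = self.memory[src]

    def destroy(self, name: str) -> None:
        del self.memory[name]

    def designate(self, name: str) -> Structure:
        """Designation: given a symbol, the system can access the object it names."""
        return self.memory[name]

    def interpret(self, expression: Structure) -> None:
        """Interpretation: evoke and execute the process the expression designates."""
        process, *args = expression
        self.processes[process](*args)

    def perceive(self, obj: Dict[str, str]) -> None:
        """Input: encode an external object as an internal symbol structure."""
        self.create("percept", *(f"{k}={v}" for k, v in sorted(obj.items())))

    def control(self) -> List[str]:
        """Control and output: pick a stored program, interpret it, emit the action."""
        percept = self.memory.get("percept", ())
        program = self.designate("on-red" if "light=red" in percept else "on-other")
        self.interpret(program)
        if "percept" in self.memory:
            self.destroy("percept")  # the processed percept is discarded
        return list(self.memory.get("action", ()))


ss = PhysicalSymbolSystem()
ss.create("on-red", "create", "action", "stop")  # stored expressions designating processes
ss.create("on-other", "create", "action", "go")
ss.perceive({"light": "red"})
print(ss.control())  # ['stop']
```

Because programs live in the same memory as any other symbol structure, the operators could in principle also rewrite them, which is one way to read the claim that a SS can evoke and execute its own processes from expressions that designate them.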

The 1975 Association for Computing Machinery (ACM) Turing Award was presented jointly to Allen Newell and Herbert A. Simon at the ACM Annual Conference in Minneapolis on October 20. They gave a Turing lecture entitled “Computer Science as Empirical Inquiry: Symbols and Search.” In this lecture, they presented a general scientific hypothesis, a law of qualitative structure for SSs: the Physical Symbol System Hypothesis [13]. A physical SS has the necessary and sufficient means for general intelligent action. By “necessary” they mean that any system that exhibits general intelligence will prove upon analysis to be a physical SS. By “sufficient” they mean that any physical SS of sufficient size can be organized further to exhibit general intelligence. By “general intelligent action” they mean the same scope of intelligence as we see in human action: that in any real situation, behavior appropriate to the ends of the system and adaptive to the demands of the environment can occur, within some limits of speed and complexity. The Physical Symbol System Hypothesis clearly is a law of qualitative structure. It specifies a general class of systems within which one will find those capable of intelligent action. The main points of the hypothesis are as follows:

- 1. A physical SS is the necessary and sufficient condition for a physical system to exhibit intelligent action.

- 2. Necessity means that any physical system that exhibits intelligence is an instance of a physical SS.

- 3. Sufficiency means that any physical SS can exhibit intelligent action through further organization.

- 4. Intelligent behaviors are those that humans exhibit: within some physical limits, they are the behaviors that actually occur, serve the system’s purposes, and meet the demands of the environment.

Given these points, since the human being has intelligence, a human being is a physical SS. A human being can observe and recognize outside objects, take intelligence tests, and pass examinations. All of these are manifestations of human intelligence. Humans can exhibit intelligence because of their information-processing procedures. This is the first deduction from the hypothesis of the physical SS. The second inference is that, since the computer is a physical SS, it can exhibit intelligence, which is the basic premise of artificial intelligence. The third inference is that, since the human being is a physical SS and the computer is also a physical SS, we can simulate human actions with a computer. We can describe the procedure of human action or establish a theory to describe the whole procedure of human activity.

4.4 SOAR

SOAR is the abbreviation of State, Operator And Result, which stand for the state, the operator, and the result. It reflects the basic principle of using a weak method to apply operators to states continually and obtain new results. SOAR is a theoretical cognitive model. It models human cognition from the perspective of psychology and proposes a general problem-solving architecture.

By the end of the 1950s, a model of storage structure had been invented that used one kind of signal to mark other signals in neuron simulation. This is an early form of the concept of chunks. A chess master keeps in memory chunks of experience from playing chess in different circumstances. In the early 1980s, Newell and Rosenbloom proposed that system performance can be improved by acquiring knowledge of a model problem in a task environment and that memory chunks can be regarded as the foundation for simulating human behavior. By observing problem solving and acquiring memory chunks from experience, the system replaces the complex processing of each subgoal with a chunk, which speeds up its problem solving and lays a solid foundation for learning from experience.

4.4.1 Basic SOAR architecture

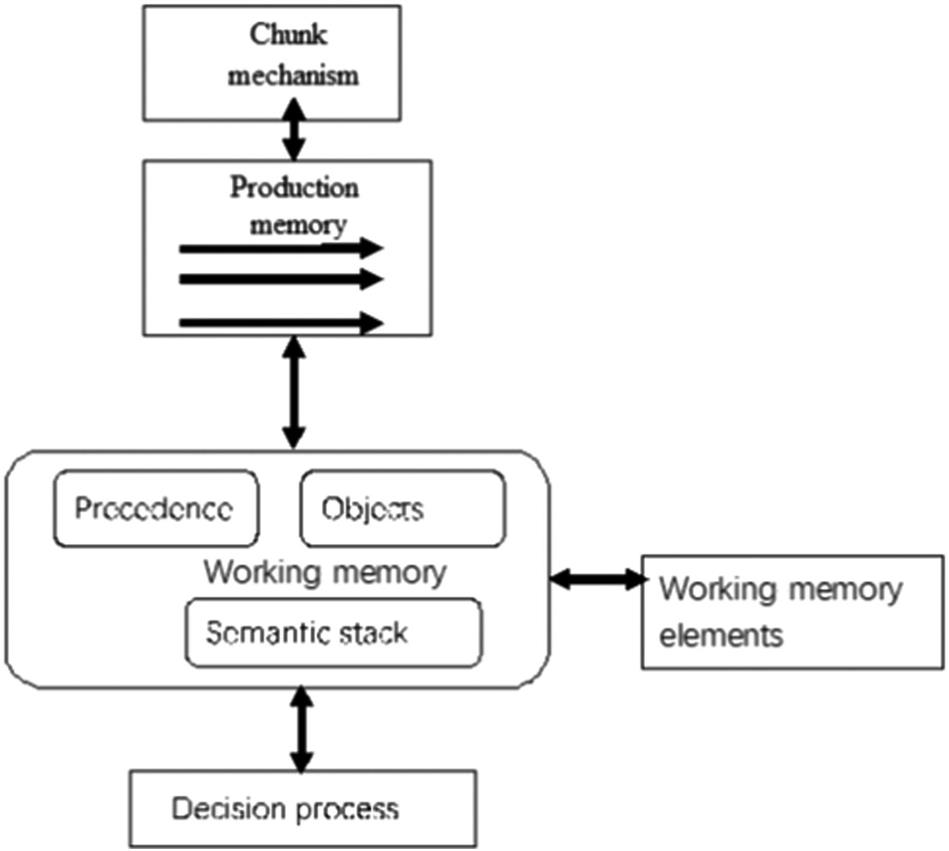

In 1987 J. E. Laird of the University of Michigan, Paul S. Rosenbloom of Stanford University, and A. Newell of Carnegie Mellon University developed the SOAR system [14], whose learning mechanism learns general control knowledge under the guidance of an outside expert. The outside guidance can be direct advice or a simple, intuitive problem. The system converts the high-level information from the outside expert into internal representations and learns memory chunks from its search [15]. Fig. 4.3 presents the architecture of SOAR.

The processing structure is composed of production memory and a decision process. Production memory contains production rules, which are used to make control decisions. The first step is elaboration: all rules are matched against working memory in order to determine priorities, which parts of the context should be changed, and how to change them. The second step is to decide which segment and goal in the context stack need to be revised.

Problem solving can be roughly described as a search through a problem space for a goal state. This is implemented by searching for the states that bring the system gradually closer to its goal. Each move consists of a decision cycle, which has an elaboration phase and a decision procedure.
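The abstract idea of a problem space (states, operators, and a goal test) can be illustrated independently of SOAR’s rule machinery. The sketch below is only that: SOAR selects operators through production rules and decision cycles rather than blind enumeration, whereas this Python example uses plain breadth-first search, itself a weak method. It assumes a toy problem space whose states are integers and whose hypothetical operators are add1 and double.

```python
from collections import deque
from typing import Callable, Dict, List, Optional, Tuple

# Hypothetical toy problem space: states are integers, operators map state -> state.
OPERATORS: Dict[str, Callable[[int], int]] = {
    "add1": lambda s: s + 1,
    "double": lambda s: s * 2,
}


def search_problem_space(initial: int, goal: int,
                         max_state: int = 1000) -> Optional[List[str]]:
    """Breadth-first search from the initial state to the goal state.

    Returns the sequence of operator names, or None if the goal is unreachable
    within the bounded space (states above max_state are pruned).
    """
    frontier = deque([initial])
    parent: Dict[int, Tuple[int, str]] = {}  # state -> (previous state, operator)
    seen = {initial}
    while frontier:
        state = frontier.popleft()
        if state == goal:  # goal test
            path: List[str] = []
            while state != initial:
                state, op = parent[state]
                path.append(op)
            return path[::-1]
        for name, apply_op in OPERATORS.items():  # apply every operator
            nxt = apply_op(state)
            if nxt <= max_state and nxt not in seen:
                seen.add(nxt)
                parent[nxt] = (state, name)
                frontier.append(nxt)
    return None


print(search_problem_space(1, 10))  # ['add1', 'double', 'add1', 'double']
```

Each step of the returned path moves from one state of the problem space to the next (1, 2, 4, 5, 10); in SOAR, each such move would be made by one decision cycle.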

SOAR originally stood for State, Operator And Result, reflecting this representation of problem solving as the application of an operator to a state in order to get a result. According to the project FAQ, the Soar development community no longer regards SOAR as an acronym, so it is no longer spelled in all caps, though it is still representative of the core of the implementation.

If the decision procedure just described is not able to determine a unique course of action, Soar may use different strategies, known as weak methods to solve the impasse. These methods are appropriate to situations in which knowledge is not abundant. When a solution is found by one of these methods, Soar uses a learning technique called chunking to transform the course of action taken into a new rule. The new rule can then be applied whenever Soar encounters the situation again.

In SOAR problem solving, it is especially important to use the knowledge in the problem space. Using knowledge to control SOAR's behavior is essentially a three-phase process of analysis, decision, and execution.

- 1. Analysis phase

Input: objects in the library.

Task: bring objects from the library into the current environment, and increase the information about the objects in the current environment.

Control: repeat this process until it is complete.

- 2. Decision phase

Input: objects in the library.

Task: accept, oppose, or veto objects in the library, and select a new object to replace objects of the same kind.

Control: acceptance and opposition are processed simultaneously.

- 3. Execution phase

Input: the current state and operator.

Task: apply the current operator to the current state. If a new state results, put it into the library and use it to replace the original current state.

Control: this is a basic action that cannot be divided.
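The decision phase ("agree" and "oppose") can be sketched as preference resolution. This is a simplified, hypothetical model loosely based on Soar's impasse types. It uses only three preference kinds: acceptable, reject, and better. Real Soar has more, such as require, prohibit, best, worst and indifferent, and its exact semantics differ. The function name `resolve` and the tuple encoding are assumptions made for this illustration.

```python
from typing import Iterable, Tuple


def resolve(preferences: Iterable[Tuple[str, ...]]) -> Tuple[str, object]:
    """Simplified Soar-style decision procedure over operator preferences.

    Each preference is ("acceptable", op), ("reject", op), or ("better", a, b).
    Returns ("selected", op) or ("impasse", kind).
    """
    acceptable, rejected, better = set(), set(), set()
    for pref in preferences:
        kind = pref[0]
        if kind == "acceptable":
            acceptable.add(pref[1])
        elif kind == "reject":
            rejected.add(pref[1])
        elif kind == "better":
            better.add((pref[1], pref[2]))
        else:
            raise ValueError(f"unknown preference type: {kind}")

    candidates = acceptable - rejected           # agreed minus opposed
    if not candidates:
        return ("impasse", "state no-change")    # nothing left to choose
    dominated = {worse for (winner, worse) in better if winner in candidates}
    survivors = candidates - dominated
    if not survivors:
        return ("impasse", "operator conflict")  # e.g. a > b and b > a
    if len(survivors) > 1:
        return ("impasse", "operator tie")       # knowledge cannot break the tie
    return ("selected", next(iter(survivors)))
```

Returning an impasse instead of choosing arbitrarily mirrors the text: when knowledge cannot single out one operator, Soar falls back on weak methods in a subgoal.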

The memory chunk is the key to learning in the Soar system: working memory elements (w-m-e) are used to collect conditions and construct memory chunks. When a subgoal is created to solve a simple problem or to assess advice from experts, the current state is stored in w-m-e. After the subgoal is solved, the system takes the initial state of the subgoal from w-m-e as the conditions and the solution operators as the concluding action. The production rule generated in this way is the memory chunk. If the subgoal is similar to a subgoal of the initial problem, the memory chunk can be applied to the initial problem, so what has been learned on one problem transfers to another.
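Chunk construction can be illustrated as follows. This is a deliberately simplified sketch. Real Soar chunking traces dependencies through the rule firings in the subgoal, but here a caller-supplied list `tested` stands in for the w-m-es that the subgoal's processing depended on. The names `Chunk`, `build_chunk` and the triple encoding of w-m-es are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, Iterable, Set, Tuple

WME = Tuple[str, str, str]  # (identifier, attribute, value)


@dataclass(frozen=True)
class Chunk:
    conditions: FrozenSet[WME]  # w-m-es that must be present for the chunk to fire
    action: str                 # result produced by the solved subgoal

    def matches(self, working_memory: Set[WME]) -> bool:
        return self.conditions <= working_memory


def build_chunk(wm_before: Set[WME], tested: Iterable[WME], result: str) -> Chunk:
    """Keep only the w-m-es that existed before the subgoal and were actually
    tested while solving it; elements created inside the subgoal are dropped."""
    conditions = frozenset(w for w in tested if w in wm_before)
    return Chunk(conditions, result)
```

Because untested elements, such as a block's color in a pick-up problem, never become conditions, the learned rule also fires in situations that differ only in irrelevant details. This is how one problem's experience transfers to another.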

The formation of a memory chunk depends on explaining the subgoal (explanation-based learning). Learning by instruction is applied when converting the instructions of experts or simple problems into a machine-executable format. Finally, experience obtained from solving simple, intuitive problems is applied to the initial problem, which involves learning by analogy. Therefore, learning in the Soar system is a comprehensive combination of several learning methods.

4.4.2 Extended version of SOAR

Over the years, the Soar architecture has evolved and been refined substantially. During this evolution, the basic approach of pure symbolic processing was maintained, with all long-term knowledge represented as production rules. Soar proved to be a general and flexible architecture for research in cognitive modeling across a wide variety of behavioral and learning phenomena. It also proved useful for creating knowledge-rich agents that could generate diverse, intelligent behavior in complex, dynamic environments.

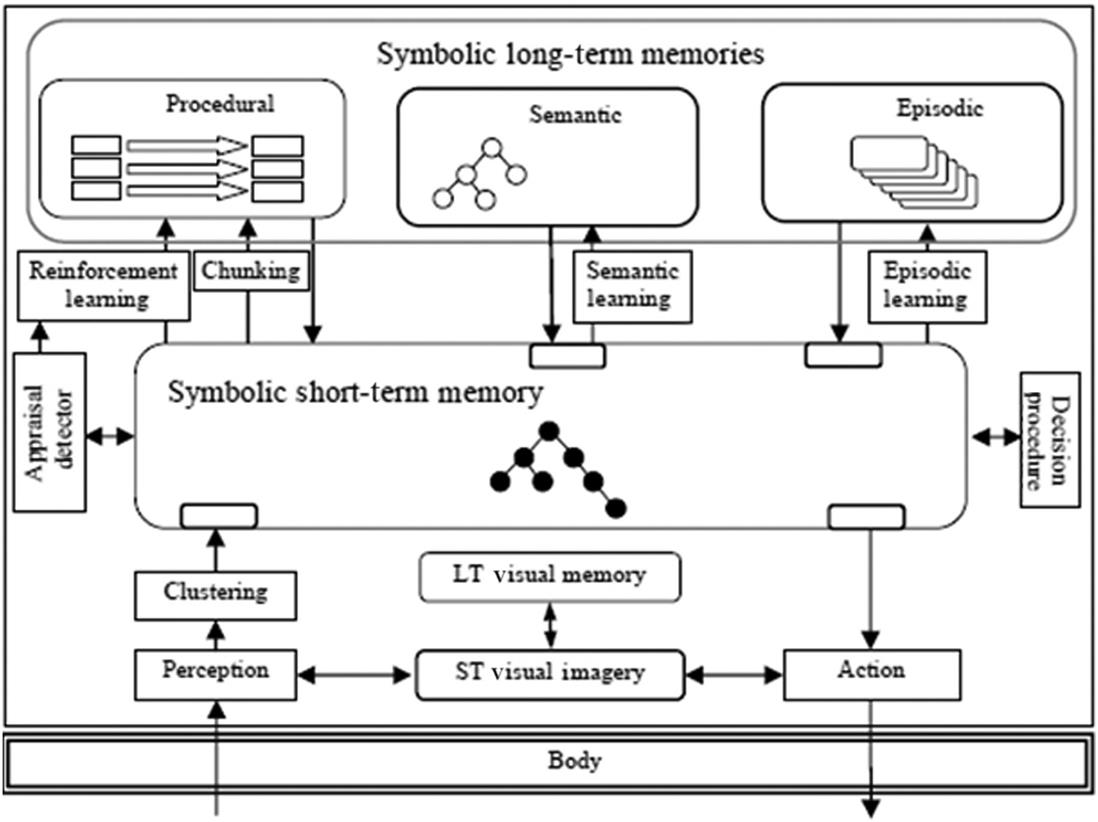

Fig. 4.4 shows the block diagram of SOAR 9 [16]. SOAR’s processing cycle is still driven by procedural knowledge encoded as production rules. The new components influence decision making indirectly by retrieving or creating structures in symbolic working memory that cause rules to match and fire. In the remainder of this section, we will give descriptions of these new components and discuss briefly their value and why their functionality would be very difficult to achieve by existing mechanisms.

4.4.2.1 Working memory activation

SOAR 9 added activation to Soar’s working memory. Activation provides meta-information about the recency of a working memory element and its relevance, which is computed from when the element matched rules that fired. Soar fires all rules that match, so this information is not used to determine which rules to fire. Instead, it is stored as part of episodic memories and biases their retrieval, so that the episode retrieved is the most relevant to the current situation. Empirical results show that working memory activation significantly improves episodic memory retrieval. Working memory activation is also intended for use in semantic memory retrieval and emotion.

4.4.2.2 Reinforcement learning

Reinforcement learning (RL) involves adjusting the selection of actions in an attempt to maximize reward. In early versions of Soar, all preferences for selecting operators were symbolic, so there was no way to represent or adjust such knowledge. Numeric preferences were therefore added, which specify the expected value of an operator for the current state. During operator selection, all numeric preferences for an operator are combined, and an epsilon-greedy algorithm is used to select the next operator. This makes RL in Soar straightforward: it adjusts the numeric values in the actions of rules that create numeric preferences for selected operators. After an operator applies, all of the rules that created numeric preferences for that operator are updated. The update is based on any new reward and on the expected future reward, which is simply the summed value of the numeric preferences for the next selected operator. RL in Soar applies across all goals, including impasse-generated subgoals.
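The selection and update rule described above amounts to epsilon-greedy choice plus an on-policy (SARSA-style) temporal-difference update. The sketch below is hypothetical. It stores a single value per (state, operator) pair to stand for the summed numeric preferences, whereas Soar keeps values in several rules and spreads the update among them. The parameter values `alpha=0.1` and `gamma=0.9` are illustrative defaults.

```python
import random
from typing import Dict, Hashable, Sequence, Tuple

QTable = Dict[Tuple[Hashable, Hashable], float]


def select_operator(q: QTable, state, operators: Sequence, epsilon: float,
                    rng: random.Random):
    """Epsilon-greedy choice over the combined numeric preference of each operator."""
    if rng.random() < epsilon:
        return rng.choice(list(operators))                        # explore
    return max(operators, key=lambda op: q.get((state, op), 0.0))  # exploit


def sarsa_update(q: QTable, state, op, reward: float, next_state, next_op,
                 alpha: float = 0.1, gamma: float = 0.9) -> float:
    """Move Q(state, op) toward reward + gamma * Q(next_state, next_op),
    where next_op is the operator actually selected next."""
    current = q.get((state, op), 0.0)
    target = reward + gamma * q.get((next_state, next_op), 0.0)
    q[(state, op)] = current + alpha * (target - current)
    return q[(state, op)]
```

The expected future reward is read from the operator that is actually selected next, as the text describes, rather than from the best possible operator. That is what makes the update on-policy.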

4.4.2.3 Semantic memory

In addition to procedural knowledge, which is encoded as rules in Soar, there is declarative knowledge. It can be split into things that are known, such as facts, and things that are remembered, such as episodic experiences. Semantic learning and memory provide the ability to store and retrieve declarative facts about the world, such as that tables have legs, dogs are animals, and Ann Arbor is in Michigan. This capability has been central to ACT-R’s ability to model a wide variety of human data, and adding it to Soar should enhance the ability to create agents that reason with and use general knowledge about the world. In Soar, semantic memory is built up from structures that occur in working memory. A structure is retrieved from semantic memory by creating a cue in a special buffer in working memory. The cue is then used to search for the best partial match in semantic memory, which is then retrieved into working memory.
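Cue-based best-partial-match retrieval can be sketched with a small, hypothetical fact store keyed by concept. The store and the function `retrieve` are assumptions made for illustration. Scoring is simply the count of cue attributes that agree, and the real Soar matcher is more elaborate.

```python
from typing import Dict, Optional

# Hypothetical semantic store: concept -> attribute/value facts.
SEMANTIC_MEMORY: Dict[str, Dict[str, str]] = {
    "dog": {"isa": "animal", "legs": "4", "sound": "bark"},
    "table": {"isa": "furniture", "legs": "4"},
    "ann-arbor": {"isa": "city", "located-in": "Michigan"},
}


def retrieve(memory: Dict[str, Dict[str, str]], cue: Dict[str, str]) -> Optional[str]:
    """Return the concept whose facts agree with the most cue attributes
    (best partial match), or None if nothing matches at all."""
    best_name, best_score = None, 0
    for name, facts in memory.items():
        score = sum(1 for attr, value in cue.items() if facts.get(attr) == value)
        if score > best_score:      # ties keep the earlier entry
            best_name, best_score = name, score
    return best_name
```

The cue `{"isa": "animal", "legs": "4"}` partially matches both `dog` and `table`. It retrieves `dog` because more of the cue agrees with it. A rule-based encoding that needs an exact match would find neither if the cue contained one extra attribute.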

Before semantic memory was added, declarative facts could be learned in Soar only through data chunking. Because the knowledge is then encoded in rules, retrieval requires an exact match of the cue, which limits the generality of what is learned. These factors made data chunking difficult to use in new domains and raised the question of how it would arise naturally in a generally intelligent agent.

4.4.2.4 Episodic memory

In Soar, episodic memory includes specific instances of the structures that occur in working memory at the same time, providing the ability to remember the context of past experiences as well as the temporal relationships between experiences [17]. An episode is retrieved by the deliberate creation of a cue, which is a partial specification of working memory in a special buffer. Once a cue is created, the best partial match is found (biased by recency and working memory activation) and retrieved into a separate working memory buffer. The next episode can also be retrieved, providing the ability to replay an experience as a sequence of retrieved episodes.
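Episodic storage, retrieval and replay can be sketched as follows. In this hypothetical toy, each episode is a frozen snapshot of (attribute, value) pairs. Retrieval prefers the episode matching the most cue elements and breaks ties by recency. The working memory activation bias mentioned in the text is left out for brevity. The class and method names are invented for this example.

```python
from typing import FrozenSet, List, Optional, Set, Tuple

Element = Tuple[str, str]  # (attribute, value) in a working memory snapshot


class EpisodicMemory:
    def __init__(self) -> None:
        self.episodes: List[FrozenSet[Element]] = []

    def record(self, working_memory: Set[Element]) -> None:
        """Store a snapshot of working memory as a new episode."""
        self.episodes.append(frozenset(working_memory))

    def retrieve(self, cue: Set[Element]) -> Optional[int]:
        """Best partial match: most cue elements matched; ties go to the most recent."""
        best_index, best_key = None, None
        for index, episode in enumerate(self.episodes):
            matched = len(cue & episode)
            if matched == 0:
                continue
            key = (matched, index)  # a later index means a more recent episode
            if best_key is None or key > best_key:
                best_index, best_key = index, key
        return best_index

    def next_episode(self, index: int) -> Optional[int]:
        """Step forward in time to replay the experience."""
        return index + 1 if index + 1 < len(self.episodes) else None
```

Because whole snapshots are stored in temporal order, the memory is task-independent: any cue can be matched against any episode. Calling `next_episode` repeatedly replays the experience that followed.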

Although similar mechanisms have been studied in case-based reasoning, episodic memory is distinguished by the fact that it is task-independent and thus available for every problem, providing a memory of experience not available from other mechanisms. Episodic learning is so simple that it is often dismissed in AI as not worthy of study. Although simple, one has only to imagine what life is like for amnesiacs to appreciate its importance for general intelligence.

Episodic memory would be even more difficult to implement using chunking than semantic memory because it requires capturing a snapshot of working memory and using working memory activation to bias partial matching for retrieval.

4.4.2.5 Visual imagery

All of the previous extensions depend on Soar’s existing symbolic short-term memory to represent the agent’s understanding of the current situation, and with good reason. The generality and power of symbolic representations and processing are unmatched, and the ability to compose symbolic structures is a hallmark of human-level intelligence. However, for some constrained forms of processing, other representations can be much more efficient. One compelling example is visual imagery, which is useful for visual-feature and visual-spatial reasoning. The Soar developers added a set of modules that support visual imagery. These include a short-term memory where images are constructed and manipulated, a long-term memory that contains images that can be retrieved into the short-term memory, processes that manipulate images in short-term memory, and processes that create symbolic structures from the visual images. Although not shown in the figure, these extensions support both a depictive representation, in which space is inherent to the representation, and an intermediate, quantitative representation that combines symbolic and numeric representations. Visual imagery is controlled by the symbolic system, which issues commands to construct, manipulate, and examine visual images.

With the addition of visual imagery, it is possible to solve spatial reasoning problems orders of magnitude faster than without it and using significantly less procedural knowledge.

4.5 ACT-R model

ACT-R (Adaptive Control of Thought–Rational) is a cognitive architecture mainly developed by John Robert Anderson at Carnegie Mellon University. Like any cognitive architecture, ACT-R aims to define the basic and irreducible cognitive and perceptual operations that enable the human mind. In theory, each task that humans can perform should consist of a series of these discrete operations.

The roots of ACT-R can be traced back to the HAM (human associative memory) model of memory, described by John R. Anderson and Gordon Bower in 1973. The HAM model was later expanded into the first version of the ACT theory. This was the first time procedural memory was added to the original declarative memory system, introducing a computational dichotomy that was later shown to hold in the human brain. The theory was then further extended into the ACT* model of human cognition.

In the late 1980s, Anderson devoted himself to exploring and outlining a mathematical approach to cognition that he named rational analysis. The basic assumption of rational analysis is that cognition is optimally adaptive and that precise estimates of cognitive functions mirror statistical properties of the environment. Later, he returned to the development of the ACT theory, using rational analysis as a unifying framework for the underlying calculations. To highlight the importance of the new approach in shaping the architecture, its name was changed to ACT-R, with the “R” standing for rational.

In 1993 Anderson met Christian Lebiere, a researcher in connectionist models best known for developing the cascade correlation learning algorithm with Scott Fahlman. Their joint work culminated in the release of ACT-R 4.0, which included optional perceptual and motor capabilities. These were mostly inspired by the EPIC architecture and greatly expanded the possible applications of the theory.

After the release of ACT-R 4.0, John Anderson became increasingly interested in the underlying neural plausibility of his lifetime theory. He began to use brain imaging techniques in pursuit of his goal of understanding the computational underpinnings of the human mind. The need to account for brain localization pushed for a major revision of the theory. ACT-R 5.0 introduced the concept of modules, specialized sets of procedural and declarative representations that could be mapped to known brain systems. In addition, the interaction between procedural and declarative knowledge was mediated by newly introduced buffers, specialized structures for holding temporarily active information (described later in this section). Buffers were thought to reflect cortical activity, and a subsequent series of studies confirmed that activations in cortical regions could be successfully related to computational operations over buffers.

A new version of the code, completely rewritten, was presented in 2005 as ACT-R 6.0. It also included significant improvements in the ACT-R coding language.

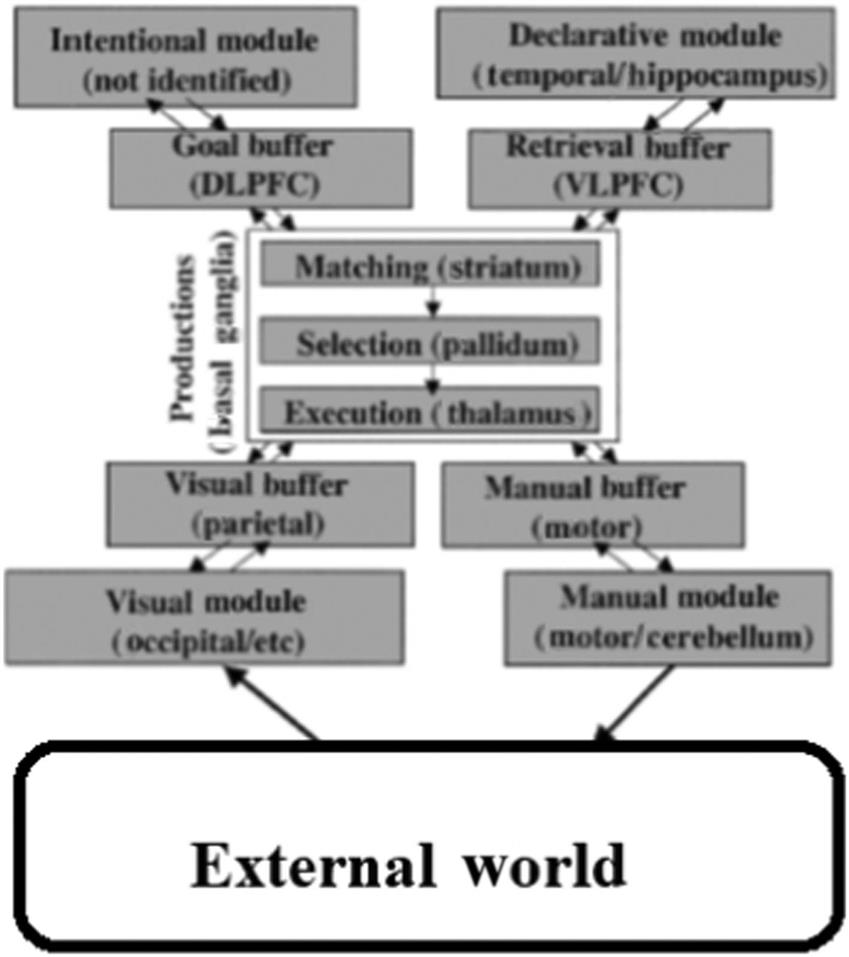

Fig. 4.5 illustrates the basic architecture of ACT-R 5.0 which contains some of the modules in the system: a visual module for identifying objects in the visual field, a manual module for controlling the hands, a declarative module for retrieving information from memory, and a goal module for keeping track of current goals and intentions [18]. Coordination of the behavior of these modules is achieved through a central production system. This central production system is not sensitive to most of the activities of these modules but rather can only respond to a limited amount of information that is deposited in the buffers of these modules. The core production system can recognize patterns in these buffers and make changes to them. The information in these modules is largely encapsulated, and the modules communicate only through the information they make available in their buffers.

The architecture assumes a mixture of parallel and serial processing. Within each module, there is a great deal of parallelism. For instance, the visual system is simultaneously processing the whole visual field, and the declarative system is executing a parallel search through many memories in response to a retrieval request. Also, the processes within different modules can go on in parallel and asynchronously.

ACT-R has three main components: modules, buffers, and a pattern matcher. The workflow of ACT-R is shown in Fig. 4.6.

- 1. Modules: There are two types of modules. (a) Perceptual-motor modules take care of the interface with the real world (i.e., with a simulation of the real world). The most well-developed perceptual-motor modules in ACT-R are the visual and the manual modules. (b) Memory modules come in two kinds in ACT-R. Declarative memory consists of facts such as Washington, D.C. is the capital of the United States, or 2+3=5. Procedural memory is made of productions. Productions represent knowledge about how we do things: for instance, knowledge about how to type the letter “Q” on a keyboard, about how to drive, or about how to perform addition.

- 2. Buffers: ACT-R accesses its modules through buffers. For each module, a dedicated buffer serves as the interface with that module. The contents of the buffers at a given moment represent the state of ACT-R at that moment.

- 3. Pattern matcher: The pattern matcher searches for a production that matches the current state of the buffers. Only one such production can be executed at a given moment. That production, when executed, can modify the buffers and thus change the state of the system. Thus, in ACT-R cognition unfolds as a succession of production firings.
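The interplay of buffers and the pattern matcher can be sketched with a small counting model. The sketch is hypothetical and does not use ACT-R's own modeling language. Buffers are plain dictionaries, and the declarative module is reduced to an instant lookup in a made-up `COUNT_FACTS` table. The productions only read and write buffers, and exactly one production fires per cycle. Conflict resolution here just takes the first match, whereas ACT-R uses utilities.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

Buffers = Dict[str, dict]

# Hypothetical declarative chunks of the form "n is followed by n + 1".
COUNT_FACTS = {1: 2, 2: 3, 3: 4, 4: 5}


@dataclass
class Production:
    name: str
    condition: Callable[[Buffers], bool]  # tests buffer contents only
    action: Callable[[Buffers], None]     # modifies buffer contents


def request_next(b: Buffers) -> None:
    # Stand-in for a retrieval request answered by the declarative module.
    n = b["goal"]["current"]
    b["retrieval"] = {"first": n, "second": COUNT_FACTS[n]}


def increment(b: Buffers) -> None:
    b["goal"]["current"] = b["retrieval"]["second"]
    b["retrieval"] = {}


PRODUCTIONS = [
    Production("request-next",
               lambda b: b["goal"]["state"] == "counting"
               and b["goal"]["current"] != b["goal"]["end"] and not b["retrieval"],
               request_next),
    Production("increment",
               lambda b: b["goal"]["state"] == "counting"
               and b["retrieval"].get("first") == b["goal"]["current"],
               increment),
    Production("stop",
               lambda b: b["goal"]["state"] == "counting"
               and b["goal"]["current"] == b["goal"]["end"],
               lambda b: b["goal"].update(state="done")),
]


def run(productions: List[Production], buffers: Buffers, max_cycles: int = 50) -> List[str]:
    """Each cycle the pattern matcher finds matching productions and fires one."""
    fired = []
    for _ in range(max_cycles):
        matching = [p for p in productions if p.condition(buffers)]
        if not matching:
            break
        chosen = matching[0]  # simplification; ACT-R resolves conflicts by utility
        chosen.action(buffers)
        fired.append(chosen.name)
    return fired
```

Starting from a goal buffer `{"state": "counting", "current": 2, "end": 4}` and an empty retrieval buffer, cognition unfolds as a succession of firings. The sequence is request-next, increment, request-next, increment, stop.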

ACT-R is a hybrid cognitive architecture. Its symbolic structure is a production system; the subsymbolic structure is represented by a set of massively parallel processes that can be summarized by several mathematical equations. The subsymbolic equations control many of the symbolic processes. For instance, if several productions match the state of the buffers, a subsymbolic utility equation estimates the relative cost and benefit associated with each production and decides to select for execution the production with the highest utility. Similarly, whether (or how fast) a fact can be retrieved from declarative memory depends on subsymbolic retrieval equations, which consider the context and the history of usage of that fact. Subsymbolic mechanisms are also responsible for most learning processes in ACT-R.
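The subsymbolic equations mentioned above can be written down compactly. The sketch follows the forms commonly given in the ACT-R literature. Base-level activation is B = ln(sum of (now - t_j)^-d). Retrieval probability is a logistic function of activation relative to a threshold tau with noise scale s. Latency is F e^(-A), and production selection uses expected gain P*G - C. Noise is omitted, the parameter defaults are illustrative, and later ACT-R versions learn utilities from rewards instead of using P*G - C. The function names are hypothetical.

```python
import math
from typing import Dict, Iterable, Tuple


def base_level_activation(use_times: Iterable[float], now: float, d: float = 0.5) -> float:
    """B = ln(sum_j (now - t_j)^-d): frequent and recent use raises activation."""
    lags = [now - t for t in use_times]
    if not lags or min(lags) <= 0:
        raise ValueError("need at least one past use strictly before `now`")
    return math.log(sum(lag ** -d for lag in lags))


def retrieval_probability(activation: float, tau: float = 0.0, s: float = 0.4) -> float:
    """Chance that activation exceeds threshold tau under logistic noise of scale s."""
    return 1.0 / (1.0 + math.exp(-(activation - tau) / s))


def retrieval_latency(activation: float, f: float = 1.0) -> float:
    """Higher activation means faster retrieval: T = F * e^(-A)."""
    return f * math.exp(-activation)


def select_production(candidates: Dict[str, Tuple[float, float]],
                      goal_value: float = 20.0) -> str:
    """Pick the matching production with the highest expected gain P*G - C.
    candidates maps production name -> (P success probability, C cost)."""
    return max(candidates, key=lambda n: candidates[n][0] * goal_value - candidates[n][1])
```

With uses 1 and 4 time units ago, for example, B = ln(1 + 0.5) ≈ 0.405. A fact used often and recently is therefore both more likely to be retrieved and retrieved faster, which is how the history of usage shapes symbolic behavior.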

ACT-R has been used successfully to create models in domains such as learning and memory, problem solving and decision making, language and communication, perception and attention, cognitive development, or individual differences.

Besides its applications in cognitive psychology, ACT-R has been used in four further areas. In human–computer interaction, it produces user models that can assess different computer interfaces. In education (cognitive tutoring systems), it is used to “guess” the difficulties that students may have and provide focused help. In computer-generated forces, it provides cognitive agents that inhabit training environments. In neuropsychology, it is used to interpret fMRI data.

4.6 CAM model

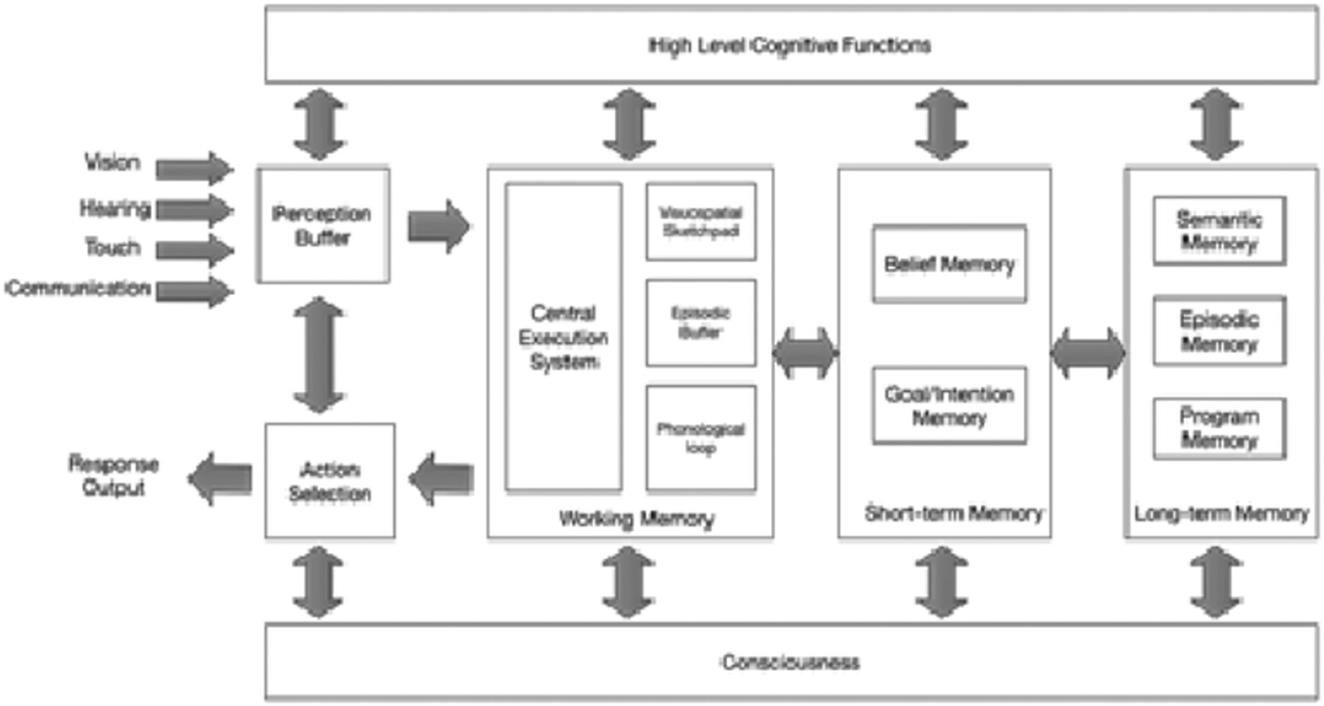

In mind activities, memory and consciousness play the most important roles. Memory stores all kinds of important information and knowledge. Consciousness gives humans a concept of self, lets them set goals according to their needs and preferences, and enables them to carry out all kinds of cognitive activity based on information in memory. Therefore, the mind model CAM (Consciousness And Memory) places its main emphasis on memory functions and consciousness functions [19]. Fig. 4.7 shows the architecture of CAM, which includes 10 main modules, briefly introduced as follows.

4.6.1 Vision

Human senses include vision, hearing, touch, smell, and taste; the CAM model focuses on vision and hearing. The visual system gives an organism the ability of visual perception, using visible light to build a perception of the world. Discovering from an image what objects are in the surrounding scene and where they are located is a process of finding a useful symbolic description of the image. The visual system can reconstruct a three-dimensional world from two-dimensional projections of the outside world. Note that objects in the visible light spectrum can be perceived at different locations.

When the retina processes images of outside objects, the retinal photoreceptor cells (rods and cones) convert the light stimulus into electrical signals. These signals pass through the bipolar cells to the retinal ganglion cells, where they form nerve impulses, that is, visual information. Visual information is transmitted to the brain via the optic nerve. Bipolar cells can be regarded as the Level 1 neurons of the visual pathway, and ganglion cells as the Level 2 neurons. The nerve fibers sent out by the many ganglion cells gather into the thick optic nerve, which leaves the back of the eye and enters the cranial cavity. There, the optic nerves of the left and right sides partially cross; the crossing is called the optic chiasm. The optic tract at the base of the brain connects to the lateral geniculate body, an important relay station for visual information that contains the Level 3 neurons. These neurons send out a large number of fibers that form the so-called optic radiation, which projects to the visual center of the occipital lobe, the visual cortex. There the visual information is processed and analyzed and finally forms the subjective visual experience.

The visual cortex is the part of the cerebral cortex responsible for processing visual information. It is located at the rear of the occipital lobe. The human visual cortex includes the primary visual cortex (V1, also known as the striate cortex) and the extrastriate cortex (V2, V3, V4, V5, etc.). The primary visual cortex corresponds to Brodmann area 17; the extrastriate cortex comprises areas 18 and 19.

The output of the primary visual cortex (V1) is sent forward along two pathways, the dorsal stream and the ventral stream. The dorsal stream begins at V1, passes through V2 into the dorsomedial area and the middle temporal area (MT, also known as V5), and then reaches the inferior parietal lobule. The dorsal stream is often called the “where” pathway. It is involved in the spatial location of objects and related motor control, such as saccades and reaching. The ventral stream begins at V1, passes through V2 and V4, and enters the inferior temporal lobe. This pathway is often called the “what” pathway and participates in object recognition, such as face recognition. It is also related to long-term memory.

4.6.2 Hearing

The human ability to hear sound and understand speech depends on the integrity of the entire auditory pathway. This pathway includes the external ear, middle ear, inner ear, auditory nerve, and central auditory system. The part of the auditory pathway outside the central nervous system is called the peripheral auditory system. The part inside the central nervous system is called the auditory center or central auditory system. The central auditory system spans the brainstem, midbrain, thalamus, and cerebral cortex and is one of the longest central pathways of the sensory systems.

Sound information is conducted from the peripheral auditory system to the central auditory system. The central auditory system processes and analyzes sound, for example perceiving timbre, pitch, and intensity and determining the direction of a sound. Specialized cells respond to the onset and offset of a sound. Auditory information that reaches the cerebral cortex is connected with the brain's language centers, which manage reading, writing, and speaking.

4.6.3 Perception buffer

The perception buffer, also known as sensory memory or instantaneous memory, holds the first direct impression that sensory information makes on the sensory organs. It can cache information from all sensory organs for tens to hundreds of milliseconds. Information in the perception buffer that is noticed becomes significant after encoding and may proceed to the next phase of processing. Sensory information that is not noticed or not encoded automatically fades.
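The notice-or-fade behavior of the perception buffer can be sketched as a time-stamped store. This is a hypothetical illustration. The single `lifetime_ms` of 250 ms is an assumed value within the tens-to-hundreds-of-milliseconds range given above, and a fuller model would use a separate lifetime for each modality. The class and method names are invented.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Tuple

Item = Tuple[float, str, str]  # (arrival time in ms, modality, content)


@dataclass
class PerceptionBuffer:
    lifetime_ms: float = 250.0  # assumed value within the range cited in the text
    items: List[Item] = field(default_factory=list)

    def sense(self, t_ms: float, modality: str, content: str) -> None:
        """A sensory organ deposits a raw, unencoded trace."""
        self.items.append((t_ms, modality, content))

    def decay(self, t_ms: float) -> None:
        """Traces older than the lifetime fade away automatically."""
        self.items = [it for it in self.items if t_ms - it[0] < self.lifetime_ms]

    def attend(self, t_ms: float, noticed: Callable[[Item], bool]) -> List[Item]:
        """Encode the noticed traces that are still alive; they leave the buffer
        and pass on to the next processing stage."""
        self.decay(t_ms)
        encoded = [it for it in self.items if noticed(it)]
        self.items = [it for it in self.items if not noticed(it)]
        return encoded
```

If a visual trace arrives at 0 ms and an auditory one at 100 ms, attending to everything at 300 ms encodes only the auditory trace. The visual trace has already faded without ever being encoded.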

Each kind of sensory information is stored in the perception buffer in its own form for a period of time and continues to take effect. These forms are visual representations and sound representations, called visual images and sound images. Such imagery can be regarded as direct, primitive memory. Imagery exists for only a short period of time; even the most distinctive visual image can be kept for only tens of seconds. Perception memory has the following characteristics:

4.6.4 Working memory

Working memory consists of a central executive, a visuospatial sketchpad, a phonological loop, and an episodic buffer. The central executive is the core of working memory. It links the subsystems with long-term memory, coordinates attentional resources, and handles strategy selection, planning, and so on. The visuospatial sketchpad is mainly responsible for storing and processing visual-spatial information and contains visual and spatial subsystems. The phonological loop is responsible for the sound-based storage and control of information, including phonological storage and articulatory control. Through silent rehearsal, as in silent reading, fading phonological representations can be reactivated to prevent decay, and written words can be converted into speech-based codes. The episodic buffer connects information across domains to form integrated visual, spatial, and verbal units in chronological order, such as the memory of a story or a movie scene. The episodic buffer also connects to long-term memory and semantic memory.

4.6.5 Short-term memory

Short-term memory stores beliefs, goals, and intentions, and responds to the rapidly changing environment and the operations of agents. In short-term memory, the perceptual coding schemes and experiential coding schemes of related objects serve as prior knowledge.

4.6.6 Long-term memory

Long-term memory is a container with large capacity. In long-term memory, information is maintained for a long time. According to the stored contents, long-term memory can be divided into semantic memory, episodic memory, and procedural memory.

- 1. Semantic memory stores words, concepts, and general rules that make up the general knowledge system. It is general and does not depend on time, place, or conditions. It is relatively stable and is not easily disturbed by external factors.

- 2. Episodic memory stores personal experience. It is the memory about events that are taking place in a certain time and place. It is easily interfered with by a variety of factors.

- 3. Procedural memory refers to memory for skills, procedures, or how to do things. It is generally resistant to change and can be exercised automatically and unconsciously. It can be a simple reflex action or a more complex series of acts. Examples of procedural memory include riding a bicycle, typing, using an instrument, or swimming. Once internalized, procedural memory can be very persistent.

4.6.7 Consciousness

Consciousness is a complex biological phenomenon. Philosophers, physicians, and psychologists may understand the concept of consciousness differently. From the viewpoint of intelligence science, consciousness is a subjective experience that integrates the outside world, bodily experience, and psychological experience. Consciousness is an “instinct” or “function” possessed by the brain. It is a “state” arising from the combination of a number of brain structures in various organisms. In CAM, consciousness concerns automatic control, motivation, metacognition, attention, and other issues.

4.6.8 High-level cognition function

High-level cognitive brain functions include learning, memory, language, thinking, decision making, and emotion. Learning is the process of continually receiving stimuli via the nervous system, acquiring new behaviors and habits, and accumulating experience. Memory refers to retaining and reproducing the behavior and knowledge acquired through learning; it underlies the intelligent activities we perform every day. Language and higher-order thinking are the most important factors that differentiate humans from other animals. Decision making is the process of finding an optimal solution by analyzing and comparing several alternatives; decisions may also be made under uncertainty to deal with unexpected events. Emotion is an attitudinal experience that arises when objective things or events meet or fail to meet a person's needs.

4.6.9 Action selection

Action selection means composing atomic actions into a complex action in order to achieve a particular task. It can be divided into two steps. The first step is atomic action selection, which chooses the relevant atomic actions from the action library. Then, planning strategies compose the selected atomic actions into complex actions. The action selection mechanism is implemented by a spiking basal ganglia model.

4.6.10 Response output

Response output is organized according to the overall goal and can be influenced by emotional or motivational input. Based on the control signal, the primary motor cortex directly generates muscle movements to carry out an internally given motor command.

If you want to understand the CAM model in detail, please refer to the book Mind Computation [20].

4.7 Cognitive cycle

The cognitive cycle is the basic procedure of mental activity at the cognitive level; it consists of the basic steps of cognitive-level mind activity. Human cognition unfolds as a cascade of recurring cycles of brain events. In CAM, each cognitive cycle perceives the current situation according to the intended goal. It then constitutes an internal or external flow of actions to respond to that goal [21], as shown in Fig. 4.8. The CAM cognitive cycle is divided into three phases: perception, motivation, and action planning. In the perception phase, environmental awareness is achieved through sensory input. Sensory input and the information in working memory serve as cues, and a local association is created through automatic retrieval from episodic memory and declarative memory. The motivation phase focuses on the learner’s needs in terms of beliefs, expectations, ranking, and understanding. The motivation system is built from motivation factors such as activation, opportunities, continuity of actions, persistence, interruptions, and preferential combination. In the action planning phase, an action plan is produced through action selection and planning.
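The three phases can be sketched as one loop iteration of a toy agent. This is not the CAM implementation. Needs are represented as urgency numbers, episodes are dictionaries with a context, goal and action, and the fallback action "explore" is invented. The class `CycleAgent` and all of its fields are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Episode = Dict[str, object]  # {"context": frozenset, "goal": str, "action": str}


@dataclass
class CycleAgent:
    needs: Dict[str, float]  # need -> urgency
    episodic: List[Episode] = field(default_factory=list)
    working_memory: Dict[str, str] = field(default_factory=dict)

    def perceive(self, stimulus: Dict[str, str]) -> List[Episode]:
        """Perception: sensory input updates working memory, and its contents act as
        cues for associative retrieval from episodic memory."""
        self.working_memory.update(stimulus)
        cues = set(self.working_memory.items())
        return [ep for ep in self.episodic if cues & ep["context"]]

    def motivate(self) -> str:
        """Motivation: adopt the goal of the most urgent need."""
        return max(self.needs, key=self.needs.get)

    def plan(self, goal: str, recalled: List[Episode]) -> str:
        """Action planning: reuse the most recent recalled action for this goal."""
        for ep in reversed(recalled):
            if ep["goal"] == goal:
                return ep["action"]
        return "explore"  # hypothetical default when nothing relevant is recalled

    def cycle(self, stimulus: Dict[str, str]) -> Tuple[str, str]:
        recalled = self.perceive(stimulus)
        goal = self.motivate()
        return goal, self.plan(goal, recalled)
```

An agent whose most urgent need is hunger and that remembers opening the fridge in the kitchen will return `("hunger", "open-fridge")` when it perceives the kitchen. In an unfamiliar place it falls back to exploring.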

4.7.1 Perception phase

The perception phase achieves awareness or understanding of the environment by organizing and interpreting sensory information. The sensory organs receive an external or internal stimulus, which marks the beginning of meaning creation in the perception phase. Awareness is the state or ability of feeling, perceiving, or being conscious of events. At this level of consciousness, the observer can confirm the sensory data, but this does not necessarily imply understanding. In biological psychology, awareness is defined as a human’s or animal’s perception of and cognitive response to external conditions or events.

4.7.2 Motivation phase

The motivation phase in CAM determines explicit goals according to needs. A goal includes a set of subgoals, which can be formally described as:

4.7.3 Action planning phase

Action planning is the process of building a complex action out of atomic actions to achieve a task. It can be divided into two steps. The first is action selection, which selects the relevant actions from the action library. The second is the use of planning strategies to integrate the selected actions. Action selection either instantiates an action flow or selects an action from a previous action flow. There are many action selection methods, most of them based on similarity matching between goals and behaviors. Planning provides an extensible and effective method for action composition. It allows an action request to be expressed as a combination of goal conditions, subject to a set of constraints and preferences. In CAM, dynamic logic is used to formally describe actions and to design action planning algorithms.
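The two steps, selection followed by composition, can be sketched with STRIPS-style actions. This swaps in a different technique from CAM's dynamic logic formalism and is only an illustration. Step 1 uses a simple effect-overlap test as a stand-in for similarity matching between goals and actions. Step 2 composes the selected actions with breadth-first forward search. The `Action` type and the function names are hypothetical.

```python
from collections import deque
from dataclasses import dataclass
from typing import FrozenSet, Iterable, List, Optional, Set


@dataclass(frozen=True)
class Action:
    name: str
    pre: FrozenSet[str]
    add: FrozenSet[str]
    delete: FrozenSet[str] = frozenset()


def select_relevant(library: Iterable[Action], goal: FrozenSet[str]) -> Set[Action]:
    """Step 1: keep actions whose effects overlap what is still needed,
    growing the 'needed' set with their preconditions until nothing changes."""
    library = list(library)
    relevant: Set[Action] = set()
    needed = set(goal)
    changed = True
    while changed:
        changed = False
        for a in library:
            if a not in relevant and a.add & needed:
                relevant.add(a)
                needed |= a.pre
                changed = True
    return relevant


def plan(state: Iterable[str], goal: FrozenSet[str],
         actions: Iterable[Action]) -> Optional[List[str]]:
    """Step 2: breadth-first forward search composes the selected actions."""
    ordered = sorted(actions, key=lambda a: a.name)  # deterministic expansion order
    start = frozenset(state)
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        current, path = frontier.popleft()
        if goal <= current:
            return path
        for a in ordered:
            if a.pre <= current:
                nxt = (current - a.delete) | a.add
                if nxt not in seen:
                    seen.add(nxt)
                    frontier.append((nxt, path + [a.name]))
    return None
```

Filtering the library first keeps the search small: actions that cannot contribute to the goal, such as turning on a TV when the goal is to fetch milk, never enter the search space.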

4.8 Perception, memory, and judgment model

The research results of cognitive psychology and cognitive neuroscience provide much experimental evidence and many theoretical viewpoints for clarifying the human cognitive mechanism and the main stages and pathways of the cognitive process. The stages are perception, memory, and judgment (PMJ), and the pathways are the fast processing pathway, the fine processing pathway, and the feedback processing pathway. Fu et al. constructed the PMJ mind model [22], as shown in Fig. 4.9. The dotted box in the figure is the mind model, which summarizes the main cognitive processes. These include the three PMJ stages (represented by the gear circles) and three types of pathways (represented by numbered arrows): rapid processing, fine processing, and feedback processing. In each stage, under the constraints of various cognitive mechanisms, the cognitive system receives information from other stages, completes specific information processing tasks, and outputs the processed information to other stages. The stages cooperate with each other to realize a complete information processing process. Each processing pathway represents a route along which processed information is transmitted. The model also gives the correspondence between cognition and computation: the perception stage corresponds to analysis, the memory stage to modeling, and the judgment stage to decision making in the computation process.

According to existing research results, the PMJ model classifies the main pathways of cognitive processing into three kinds. These are the fast processing pathway (analogous to the magnocellular pathway and its related cortical pathways), the fine processing pathway (analogous to the parvocellular pathway and its related cortical pathways), and the feedback pathway (top-down feedback).

4.8.1 Fast processing pathway

The fast processing pathway refers to processing from the perception stage directly to the judgment stage (shown as ⑧ in Fig. 4.9), which realizes perception-based judgment. This process requires little participation of knowledge and experience. It mainly processes the global features, contours, and low-spatial-frequency information of the stimulus. On this basis, it performs primary, coarse processing of the input and makes rapid classifications and judgments. Visually salient features can be classified and judged through the fast processing pathway.
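Coarse judgment from low spatial frequencies can be illustrated with a tiny image classifier. In this hypothetical sketch, block averaging acts as a crude low-pass filter that keeps only global structure. Classification is nearest-prototype matching on the coarse image. The image, prototypes and function names are invented, and the PMJ model does not prescribe this algorithm.

```python
from typing import Dict, List

Image = List[List[float]]


def block_average(img: Image, k: int) -> Image:
    """Low-pass the image by averaging k x k blocks, keeping only coarse,
    low-spatial-frequency structure (height and width must be multiples of k)."""
    h, w = len(img), len(img[0])
    if h % k or w % k:
        raise ValueError("image size must be a multiple of k")
    return [[sum(img[r][c] for r in range(i, i + k) for c in range(j, j + k)) / (k * k)
             for j in range(0, w, k)]
            for i in range(0, h, k)]


def fast_classify(img: Image, prototypes: Dict[str, Image], k: int) -> str:
    """Rapid judgment: nearest coarse prototype by squared distance."""
    coarse = block_average(img, k)

    def dist(p: Image) -> float:
        return sum((a - b) ** 2
                   for row_a, row_b in zip(coarse, p)
                   for a, b in zip(row_a, row_b))

    return min(prototypes, key=lambda name: dist(prototypes[name]))
```

A 4 x 4 image with a fine checker texture in a bright upper half still averages to a bright-over-dark 2 x 2 pattern. The fine detail is discarded, and the judgment rests on global layout alone.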

4.8.2 Fine processing pathway

The fine processing pathway refers to processing from the perception stage to the memory stage, and then from the memory stage to the perception and judgment stages (shown as ④, ⑤, and ⑦ in Fig. 4.9). It realizes memory-based perception and judgment. This process relies on existing knowledge and experience and mainly processes the local features, details, and high-spatial-frequency information of the stimulus. It precisely matches the input against the knowledge stored in long-term memory and then makes a classification and judgment. People’s perception of the outside world is usually inseparable from attention. Useful information must be filtered from a great deal of other information through attention, stored in the memory system, and then formed into a representation. In this adaptive, dynamic memory system, the stored representations are constructed and changed dynamically along with cognitive processing activities.

4.8.3 Feedback processing pathway

The feedback processing pathway refers to the processing from the judgment stage to the memory stage, or from the judgment stage to the perception stage (such as ⑥ or ⑨ in Fig. 4.9) in order to realize judgment-based perception and memory. The cognitive system corrects the knowledge stored in the short-term or long-term memory according to the output results of the judgment stage; the output results of the judgment stage also provide clues to the perception stage, making information processing in the perception stage more accurate and efficient.
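The fine pathway's memory-based judgment and the feedback pathway's correction of stored knowledge can be sketched together. This hypothetical toy stores one detailed feature prototype per category in long-term memory and judges by nearest prototype. The feedback step moves the confirmed prototype toward the input, a learning-vector-quantization-style update chosen here for illustration. Only feedback into memory is modeled, not feedback into perception. The class `FineMemory` and the rate `0.2` are assumptions.

```python
from typing import Dict, List

Vector = List[float]


def sq_dist(a: Vector, b: Vector) -> float:
    return sum((x - y) ** 2 for x, y in zip(a, b))


class FineMemory:
    """Long-term store of detailed feature prototypes (hypothetical representation)."""

    def __init__(self, prototypes: Dict[str, Vector], rate: float = 0.2) -> None:
        self.prototypes = {label: list(vec) for label, vec in prototypes.items()}
        self.rate = rate

    def judge(self, features: Vector) -> str:
        """Fine pathway: match detailed features against stored knowledge."""
        return min(self.prototypes, key=lambda label: sq_dist(self.prototypes[label], features))

    def feedback(self, features: Vector, confirmed_label: str) -> None:
        """Feedback pathway: the judgment outcome corrects the stored prototype,
        moving it a fraction `rate` toward the confirmed example."""
        proto = self.prototypes[confirmed_label]
        for i, x in enumerate(features):
            proto[i] += self.rate * (x - proto[i])
```

After feedback, the corrected prototype changes later judgments. This is one simple way the output of the judgment stage can make subsequent memory-based processing more accurate.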