Chapter 4. How Attacks Are Waged

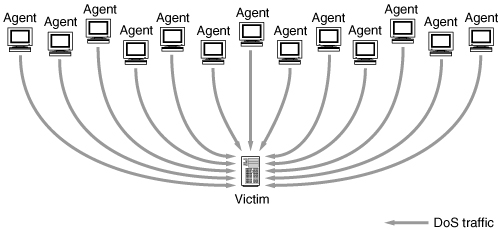

A DDoS attack has to be carefully prepared by the attacker. She first recruits the agent army by looking for vulnerable machines, breaking into them, and installing her attack code. The attacker next establishes communication channels between machines, so that they can be controlled and engaged in a coordinated manner. This is done using either a handler/agent architecture or an IRC-based command and control channel. Once the DDoS network is built, it can be used to attack various targets as many times as desired.

4.1 Recruitment of the Agent Network

Depending on the type of denial of service planned, the attacker needs to find a sufficiently large number of vulnerable machines to use for attacking. This can be done manually, semi-automatically, or in a completely automated manner. In the cases of two well-known DDoS tools, trinoo [Ditf] and Shaft [DLD00], only the installation process was automated, while discovery and compromise of vulnerable machines were performed manually. Nowadays, attackers use scripts that automate the entire process, or even use scanning to identify already compromised machines to take over (e.g., Slammer-, MyDoom-, or Bagle-infected hosts). It has been speculated that some worms may be used explicitly to create a fertile harvesting ground for building bot networks that are later used for various malicious purposes, including DDoS attacks. If the owners did not notice the worm infection, they will likely not notice the bot that later takes over their machines!

4.1.1 Finding Vulnerable Machines

The attacker needs to find machines that she can compromise. To maximize the yield, she will want to recruit machines that have good connectivity and ample resources and are poorly maintained. Unfortunately, many of these exist within the pool of millions of Internet hosts.

In the early days of DDoS, hosts with high-availability connections were found only in universities and scientific and government institutions. These hosts also tended to have fairly lax security and no firewalls, so they were easily compromised by an attacker. The recent popularity of cable modem and digital subscriber line (DSL) high-speed Internet for business and home use has brought high-availability connections into almost every home and office. This has vastly enlarged the pool of lightly administered, well-provisioned hosts that are often connected and running around the clock: ideal targets for DDoS recruitment. This shift in the population of potential DDoS agents was followed by a shift in DDoS tools. The early tools ran mostly on Unix-based hosts, whereas recent DDoS code mostly runs on Windows-based systems. In some cases, such as the Kaiten and Knight bots, the same original Unix source code was simply recompiled using the Cygwin POSIX compatibility library.

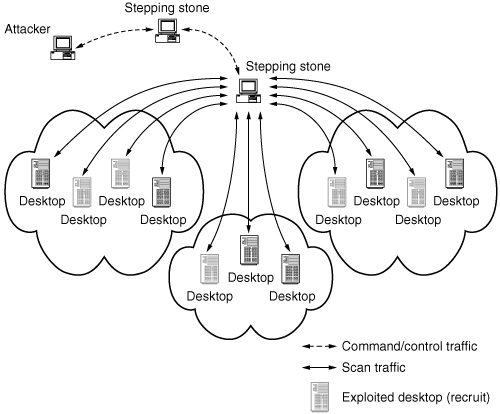

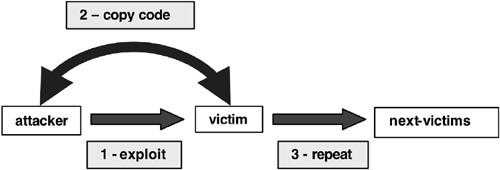

The process of looking for vulnerable machines is called scanning. Figure 4.1 depicts the simple scanning process. The attacker sends a few packets to the chosen target to see whether it is alive and vulnerable. If so, the attacker will attempt to break into the machine.

Figure 4.1. Recruitment of agent army

Scanning was initially a manual process performed by the attacker using crude tools. Over time, scanning tools improved and scanning functions were integrated and made automatic. Two examples of this are “blended threats” and worms.

Blended threats are individual programs or groups of programs that provide many services, in this case command and control using an IRC bot and vulnerability scanning.

A bot (derived from “robot”) is a client program that runs in the background on a compromised host and watches for certain strings to show up in an IRC channel. These strings represent encoded commands that the bot program executes, such as inviting someone into an IRC channel, giving the user channel operator permissions, scanning a block of addresses (netblock), or performing a DoS attack. Netblock scans are initiated in certain bots, such as Power [Dita], by specifying the first few octets of the network address (e.g., 192.168 may mean to scan everything from 192.168.0.0 to 192.168.255.255). Once bots get a list of vulnerable hosts, they report it to the attacker through the botnet (a network of bots that all synchronize through communication in an IRC channel). The attacker retrieves the list and adds it to her list of vulnerable hosts. Some programs add these hosts to the vulnerable host list automatically, thereby constantly reconstituting the attack network. Network blocks for scanning are sometimes chosen randomly by attackers. Other times they are chosen deliberately (e.g., netblocks owned by DSL providers and universities are far more “target-rich environments” than those owned by large businesses and are less risky than a military site).
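The octet-prefix notation used for netblock scans maps directly onto CIDR notation: each octet given fixes 8 bits of the address. The sketch below makes that mapping concrete using Python's standard `ipaddress` module. The helper name `netblock_from_octets` is hypothetical and is not taken from any bot's code.

```python
import ipaddress

def netblock_from_octets(prefix: str) -> ipaddress.IPv4Network:
    """Expand a dotted prefix of 1 to 3 octets (e.g. "192.168") into the
    CIDR network it denotes (e.g. 192.168.0.0/16). Hypothetical helper."""
    octets = prefix.split(".")
    if not 1 <= len(octets) <= 3:
        raise ValueError("expected 1 to 3 octets")
    # Pad the missing octets with zeros; each given octet fixes 8 prefix bits.
    padded = octets + ["0"] * (4 - len(octets))
    return ipaddress.ip_network(".".join(padded) + f"/{8 * len(octets)}")

net = netblock_from_octets("192.168")
print(net, net[0], net[-1], net.num_addresses)
# 192.168.0.0/16 192.168.0.0 192.168.255.255 65536
```

A two-octet prefix therefore covers 65,536 addresses and a one-octet prefix covers about 16.8 million, which is why the choice of netblock matters so much to how long a scan takes.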

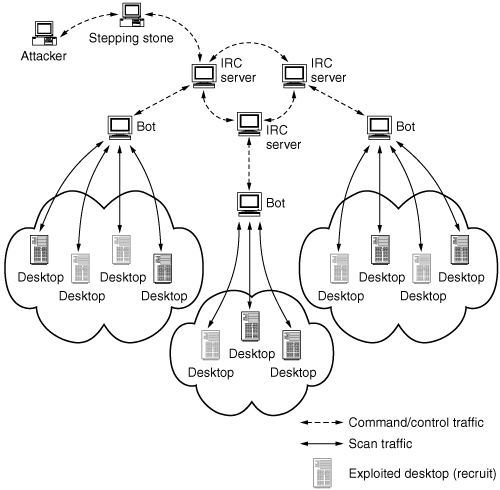

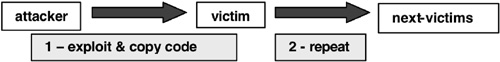

The scanning can be performed with separate programs that are simply “plugged in” to the blended threat kit or, as is the case with Phatbot, built into the program as a module. Scanning by an IRC bot is depicted in Figure 4.2.

Figure 4.2. Sophisticated scanning for recruitment

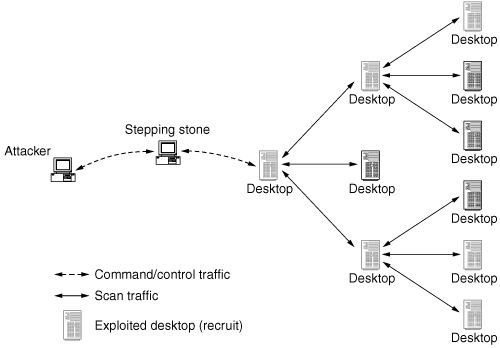

Another program that employs scanning to identify vulnerable hosts is an Internet worm. Internet worms are automated programs that propagate from one vulnerable host to another, in a manner similar to biological viruses (e.g., the flu). A worm has three distinct primary functions: (1) scanning, to look for vulnerable machines; (2) exploitation, which compromises machines and establishes remote control; and (3) a payload (code it executes upon compromise to achieve some attack function). Since the worm is designed to propagate, once it infects a machine, the scan/infect cycle repeats on both the infected and infecting machines. The payload can be simply a copy of the worm (in memory or written to the file system), or it may be a complete set of programs loaded into the file system. Internet worms are an increasingly popular method of recruiting DDoS agents, so the worm payload frequently includes DDoS attack code. Figure 4.3 illustrates worm propagation.

Figure 4.3. Worm scanning for recruitment

Worms choose the addresses to scan using several methods.

• Completely randomly. Randomly choose all 32 bits of the IP address (if using IPv4) for targets, effectively scanning the entire Internet indiscriminately.

• Within a randomly selected address range. Randomly choose only the first 8 or 16 bits of the IP address, then iterate through every address in that range (e.g., from x.y.0.0 through x.y.255.255 when the first 16 bits are fixed). This tends to scan single networks, or groups of networks, at a time.

• Using a hitlist. Take a small list of network blocks that are “target rich” and preferentially scan them, while ignoring any address range that appears to be empty or highly secured. This speeds up propagation tremendously and minimizes the time wasted scanning large unused address ranges.

• Using information found on the infected machine. Upon infecting a machine, the worm examines files that record the machine’s communication activity, looking for addresses to scan. For instance, a Web browser’s history contains addresses of recently visited Web sites, and the known_hosts file contains addresses of destinations contacted through the SSH (Secure Shell) protocol.
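Why a hitlist "speeds things up tremendously" can be seen with simple probability. If a worm probes uniformly at random (with replacement) in an address space of S addresses containing V vulnerable hosts, each probe succeeds with probability V/S, so the expected number of probes until the first hit is S/V, the mean of a geometric distribution. The population sizes below are hypothetical, chosen only to show the ratio.

```python
def expected_probes(space_size: int, vulnerable: int) -> float:
    """Mean number of uniformly random probes (with replacement) needed to
    hit one vulnerable address: the mean of a geometric distribution."""
    if not 0 < vulnerable <= space_size:
        raise ValueError("need 0 < vulnerable <= space_size")
    return space_size / vulnerable

# Hypothetical: 100,000 vulnerable hosts spread over all of IPv4 ...
print(round(expected_probes(2**32, 100_000)))   # 42950
# ... versus a hitlist covering 2**24 addresses that holds 50,000 of them.
print(round(expected_probes(2**24, 50_000)))    # 336
```

Concentrating probes on a dense region cuts the expected work per infection by two orders of magnitude in this example, before even counting the empty ranges that a hitlist skips.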

Worms spread extremely fast because of their parallel propagation pattern. Assume that a single copy successfully infects five machines in one second. In the next second, all six copies (the original one and five new copies) will try to propagate further. As the worm spreads, the number of infected machines and number of worm copies swarming over the Internet grow exponentially. Frequently, this huge amount of scanning/attacking traffic clogs edge networks and creates a DoS effect for many users. Some worms carry DDoS payloads as well, allowing the attacker who controls the compromised machines to carry out more intentional and targeted attacks after the worm has finished spreading. Since history suggests that worms are often not completely cleaned up (for example, Code Red–infected hosts still exist in the Internet, years after Code Red first appeared), some infected machines might continue serving as DDoS agents indefinitely.
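The growth pattern described above can be modeled in a few lines. This is a deliberately idealized sketch: it assumes every copy infects exactly `per_copy` fresh hosts each second (no two copies ever hit the same host) until the vulnerable population is exhausted. Real outbreaks slow down well before that point, so the numbers show the shape of the curve, not a prediction.

```python
def infected_over_time(per_copy: int, seconds: int, population: int) -> list[int]:
    """Infected-host count at each second, starting from one copy.
    Idealized: each copy infects `per_copy` new hosts per second, capped
    at the size of the vulnerable population."""
    counts = [1]
    for _ in range(seconds):
        current = counts[-1]
        # Existing copies stay active and each adds `per_copy` new infections.
        counts.append(min(current + current * per_copy, population))
    return counts

print(infected_over_time(5, 5, 1_000_000))
# [1, 6, 36, 216, 1296, 7776]
```

With five infections per copy per second, the count multiplies by six every second (6 to the power t after t seconds), matching the one-becomes-six step in the text.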

4.1.2 Breaking into Vulnerable Machines

The attacker needs to exploit a vulnerability in the machines that she is intending to recruit in order to gain access to them. You will find this referred to as “owning” the machine. The vast majority of vulnerabilities provide an attacker with administrative access to the system, and she can add/delete/change files or system settings at will.

Exploits typically follow a vulnerability exploitation cycle.

1. A new vulnerability has been discovered in attacker circles and is being exploited in a limited fashion.

2. The vulnerability makes it outside of this circle and gets exploited at a wider scale.

3. Automated tools appear, and nonexperts (script kiddies) are running the tools.

4. A patch for the vulnerability appears and gets applied.

5. Exploits for a given vulnerability decline.

Once one or more vulnerabilities have been identified, the attacker incorporates the exploits for those vulnerabilities into his DDoS toolkit. Some DDoS tools actually take advantage of several vulnerabilities to propagate their code to as many machines as possible. These are often referred to as propagation vectors.

Frequently, the attackers patch the vulnerability they exploited to break into the machine. This is done to prevent other attackers from gaining access in the same manner and seizing control of the agent machine. To facilitate his future access to the compromised machine, the attacker will start a program that listens for incoming connection attempts on a specific port. This program is called a backdoor. Access through the backdoor is sometimes protected by a strong password, and in other cases is wide open and will respond to any connection request.

One vulnerability that is not mitigated by patching, which some blended threats take advantage of, is weak passwords. Some exploits contain a list of common passwords. They try these passwords in a brute-force or iterative manner, one after another. This sometimes exceeds system limits for failed logins and causes a lockout condition (a safe fallback for the system, but disruptive to legitimate users who then cannot log in). All too often, these exploits succeed in finding a weak login/password combination and gain unauthorized access to the system. Users often think that leaving no password on the Administrator account is reasonable, or that “password” or some other simple word is sufficient to protect the account. They are mistaken.
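The lockout behavior mentioned above can be sketched as a per-account counter of consecutive failures. This is a minimal illustration under simple assumptions (a fixed threshold and no timed unlock; real systems usually add an observation window and an unlock delay). The class and method names are hypothetical.

```python
from collections import defaultdict

class LockoutPolicy:
    """Lock an account after `threshold` consecutive failed logins.
    Hypothetical sketch: no timed unlock or administrator reset."""

    def __init__(self, threshold: int = 5):
        self.threshold = threshold
        self.failures = defaultdict(int)   # account -> consecutive failures

    def is_locked(self, account: str) -> bool:
        return self.failures[account] >= self.threshold

    def record(self, account: str, success: bool) -> bool:
        """Record one login attempt; return True if the account is locked."""
        if self.is_locked(account):
            return True                    # locked accounts ignore further attempts
        if success:
            self.failures[account] = 0     # a good login resets the counter
        else:
            self.failures[account] += 1
        return self.is_locked(account)

policy = LockoutPolicy(threshold=3)
for guess in ["password", "admin", "123456"]:     # a tiny dictionary attack
    locked = policy.record("Administrator", success=False)
print(locked)                                      # True
print(policy.record("Administrator", success=True))  # True: the real user is locked out too
```

The last line illustrates the trade-off in the text: lockout stops the guessing program, but it also denies access to the legitimate owner until the account is unlocked.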

4.1.3 Malware Propagation Methods

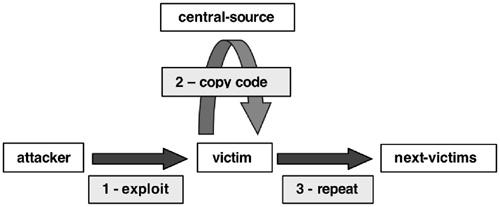

The attacker needs to decide on a propagation model for installing his malware. A simple model is the central repository, or cache, approach: The attacker places the malware in a file repository (e.g., an FTP server) or a Web site, and each compromised host downloads the code from this repository. One advantage of the caching model for the defender is that such central repositories can be easily identified and removed. Attackers installing trinoo [Ditf] and Shaft [DLD00] agents used such centralized approaches in the early days. In 2001, W32/Leaves [CER01c] used a variant of this model in which the cache sites could be reconfigured, as did the W32/SoBig mass-mailing worm in 2003. Figure 4.4 illustrates propagation with a central repository.

Figure 4.4. Propagation with central repository. (Reprinted from [HWLT01] with permission of the CERT Coordination Center.)

Another model is the back-chaining, or pull, approach, wherein the attacker carries his tools from an initially compromised host to subsequent machines that this host compromises. Figure 4.5 illustrates propagation with back-chaining.

Figure 4.5. Propagation with back chaining. (Reprinted from [HWLT01] with permission of the CERT Coordination Center.)

Finally, the autonomous, push, or forward propagation approach combines propagation and exploit into one process. This approach differs from back-chaining in that the exploit itself contains the malware to be propagated to the new site, rather than copying that malware over after compromising the site. The worm carries a DDoS tool as a payload and plants it on each infected machine. Recent worms have incorporated exploit and attack code protected by weak encryption based on linear feedback shift registers (LFSRs). The encryption is used to defeat detection of well-known exploit code sequences (e.g., the buffer overflow “sled,” a long series of no-operation (NOP) instructions [Sko02]) by antivirus or personal firewall software. Once on the machine, the code decrypts itself and resumes its propagation. Figure 4.6 illustrates autonomous propagation.
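The sketch below shows why LFSR-based encryption hides byte signatures yet is still weak. A linear feedback shift register produces a pseudorandom keystream, and XORing data with that keystream both encrypts and decrypts. This is a generic textbook 16-bit Galois LFSR (taps 0xB400, a commonly cited maximal-length configuration). It is an assumption for illustration, not the scheme any particular worm used.

```python
def lfsr_keystream(seed: int, length: int) -> bytes:
    """Generate `length` keystream bytes from a 16-bit Galois LFSR
    with taps 0xB400 (maximal period 65535 for any nonzero seed)."""
    if not 0 < seed < 0x10000:
        raise ValueError("seed must be a nonzero 16-bit value")
    state = seed
    out = bytearray()
    for _ in range(length):
        byte = 0
        for _ in range(8):
            lsb = state & 1            # output bit
            state >>= 1
            if lsb:
                state ^= 0xB400        # apply the feedback taps
            byte = (byte << 1) | lsb
        out.append(byte)
    return bytes(out)

def xor_crypt(data: bytes, seed: int) -> bytes:
    """XOR data with the LFSR keystream; the same call encrypts and decrypts."""
    return bytes(d ^ k for d, k in zip(data, lfsr_keystream(seed, len(data))))

sled = b"\x90" * 16                            # a run of x86 NOP bytes, as in a sled
hidden = xor_crypt(sled, seed=0xACE1)
assert hidden != sled                          # ciphertext no longer matches the NOP run
assert xor_crypt(hidden, seed=0xACE1) == sled  # applying it again restores the sled
```

A scanner looking for a long run of 0x90 bytes will not match the ciphertext. The scheme is weak, however: with only 65,535 possible nonzero seeds, an analyst can try every key, and any known plaintext immediately reveals the keystream.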

Figure 4.6. Autonomous propagation. (Reprinted from [HWLT01] with permission of the CERT Coordination Center.)

All of the preceding propagation methods are described in more detail in [HWLT01].

Other complexities of attack tools and toolkits include such features as antiforensics and encryption. Methods of analyzing DDoS tools are described in Chapter 6.

4.2 Controlling the DDoS Agent Network

When the agent army has been recruited in sufficiently large numbers, the attacker communicates with the agents using special “many-to-many” communication tools. The purpose of this communication is twofold.

1. The attacker commands the beginning/end and specifics of the attack.

2. The attacker gathers statistics on agent behavior.

Note here that “sufficiently large” depends on the frame of reference: Early tools like trinoo, Tribe Flood Network (TFN), and Shaft dealt with hundreds and low thousands of agents, but nowadays it is not uncommon to see sets of agents (or botnets) of tens of thousands being traded on IRC. Phatbot networks as large as 400,000 hosts have reportedly been witnessed (http://www.securityfocus.com/news/8573).

4.2.1 Direct Commands

Some DDoS tools like trinoo [Dith] build a handler/agent network, where the attacker controls the network by issuing commands to the handler, which in turn relays commands (sometimes using a different command set) to the agents. Commands may consist of unencrypted (clear) text, obfuscated or encrypted text, or numeric (binary) byte sequences. Analysis of the command and control traffic between handlers and agents can give insight into the capabilities of the tools without having access to the malware executable or its source code, as demonstrated with Shaft [DLD00].

In order for some handlers and agents, such as trinoo, Stacheldraht, and Shaft, to function, the handler must learn the addresses of the agents and “remember” them across reboots or program restarts. Early DDoS tools hard-coded the IP address of a handler, and agents would report to this handler upon recruitment. Usually the agent list is kept in a file that the handler maintains in order to keep state information about the DDoS network. In some cases, there is no authentication of the handler (in fact, any computer can send commands to some DDoS agents, and they will respond). The early analyses of trinoo, TFN, Stacheldraht, Shaft, and mstream all showed ways in which handlers and agents could be detected or controlled. Early DDoS attacks were waged between underground groups fighting for supremacy and ownership of IRC channels. Attackers would sometimes act to take over another’s DDoS network if access to it was not somehow protected.1 For instance, an attacker who captures a plaintext message sent to another’s agent can control this same agent by modifying the necessary message fields and resending it. Likewise, a defender can issue a command that stops the attack. Some DDoS tools that used a handler/agent architecture protected remote access to the handler using passwords, and some tried to protect the handler/agent communication through passwords or encryption using shared secrets. The first handlers even encrypted their list of agents (using the RC4 and Blowfish ciphers; see [MvOV96] for an excellent reference on applied cryptography) to avoid disclosing the identity of the agents if the handler was examined by investigators. Even so, replaying the commands could expose the list of agents, or the file could sometimes be decrypted using keys obtained from forensic analysis or reverse engineering of the handler.

Other tools like Stacheldraht [Ditg] allowed encryption of the command channel between the attacker and the handler, but not between handlers and agents. Over time, these handlers became traceable and were, in most cases, removable.

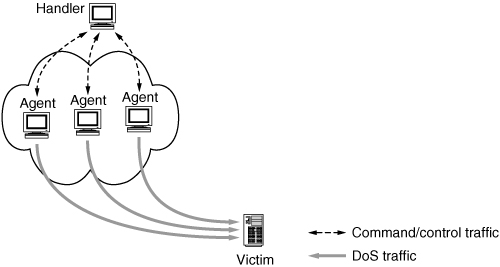

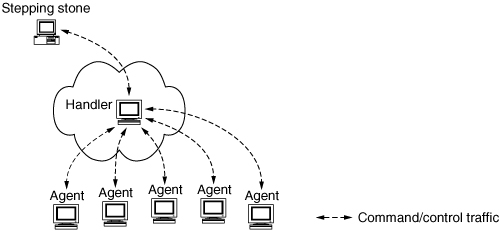

Figures 4.7 and 4.8 illustrate the control and attack traffic visible from the site hosting an agent and from the site hosting a handler, respectively.

Figure 4.7. Illustration of control traffic seen from site hosting agents

Figure 4.8. Illustration of control traffic seen from site hosting a handler

4.2.2 Indirect Commands

Direct communication had a few downsides for the attackers. Because the handlers needed to store agents’ identities and, frequently, an agent machine would store the identity of the handler, once investigators captured one machine the whole DDoS network could be identified. Further, direct communication patterns generated anomalous events on network monitors (imagine a Web server that suddenly initiates communication with a foreign machine on an obscure port), which network operators were quick to spot. By investigating captured messages, they could identify the foreign peer’s address. Operators could detect agent or handler processes even when no messages were actively flowing. For direct communication, both agents and handlers had to be “ready” to receive messages at all times, which meant that the attack process had to open a port and listen on it. Operators could spot this by looking at the list of currently open ports, and unidentified listening processes were promptly investigated. Finally, the attacker needed to write his own code for command and control.
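The open-port check that operators relied on can be sketched for a Linux host. Linux exposes IPv4 TCP sockets in `/proc/net/tcp`, where the local address is printed as hex `IP:PORT` and state `0A` means LISTEN. The function names and the allowlist are hypothetical. IPv6 sockets (listed in `/proc/net/tcp6`) and UDP listeners, which some DDoS agents used, are not covered by this sketch.

```python
def listening_ports(proc_net_tcp: str) -> set[int]:
    """Return local TCP ports in the LISTEN state (st == 0A), parsed from
    the text of Linux's /proc/net/tcp."""
    ports = set()
    for line in proc_net_tcp.splitlines()[1:]:      # skip the header row
        fields = line.split()
        if len(fields) < 4:
            continue
        local_address, state = fields[1], fields[3]
        if state == "0A":
            ports.add(int(local_address.rsplit(":", 1)[1], 16))
    return ports

def unexpected_listeners(proc_net_tcp: str, allowed: set[int]) -> set[int]:
    """Listening ports that are not on the operator's allowlist."""
    return listening_ports(proc_net_tcp) - allowed

# Abbreviated sample in /proc/net/tcp layout; port 0x3E79 is an obscure listener.
sample = """  sl  local_address rem_address   st tx_queue rx_queue tr tm->when retrnsmt   uid  timeout inode
   0: 00000000:0016 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1001 1
   1: 00000000:0050 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1002 1
   2: 00000000:3E79 00000000:0000 0A 00000000:00000000 00:00000000 00000000     0        0 1003 1
   3: 0100007F:0050 0100007F:C350 01 00000000:00000000 00:00000000 00000000     0        0 1004 1
"""
print(sorted(unexpected_listeners(sample, allowed={22, 80})))   # [15993]
```

On a live system, the text would come from `open("/proc/net/tcp").read()`. The fourth sample row is an established connection (state `01`) and is ignored.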

A drawback of the original handler/agent design that limited the size of DDoS networks was the number of open file descriptors required for TCP connections between the handler and agents. Many versions of Unix limit the number of open file descriptors each process can have, as well as the total for the kernel itself. Even if these limits could be increased, some DDoS tools would simply stop being able to add new agents after reaching 1024, a typical per-process limit on open file descriptors in many operating systems.
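The arithmetic behind this ceiling can be sketched as follows. A handler that keeps one TCP connection per agent consumes one descriptor per agent, plus a few for itself. The sketch reads the process's real limits with Python's Unix-only `resource` module. The `reserved` count of four (stdin, stdout, stderr, and one listening socket) is an assumption, and the helper name is hypothetical.

```python
import resource

def max_agent_connections(soft_limit: int, reserved: int = 4) -> int:
    """Upper bound on simultaneous agent connections for a handler that
    holds one descriptor per agent. `reserved` is an assumed count of
    descriptors the handler needs for itself."""
    return max(soft_limit - reserved, 0)

# The current process's descriptor limits (Unix only).
soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
print("soft limit:", soft, "hard limit:", hard)

print(max_agent_connections(1024))   # 1020
```

With the common soft limit of 1024, such a handler stalls at just under 1024 agents. Programs built around `select()` run into a similar ceiling, because `FD_SETSIZE` is commonly 1024 as well.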

Since many of the authors of DDoS tools were developing them to fight battles on IRC, and since they were already programming bots for other purposes, they began to extend existing code for IRC bots to implement DDoS functions and scanning. An early example of this was the Kaiten bot, programmed originally for Unix systems. Another example coded for the Windows operating environment is the Power bot. Rather than running a separate program that listens for incoming connections on an attacker-specified port, both the DDoS agents (the bots) and the attacker connect to an IRC server like any other IRC client. Since most sites would allow IRC as a communications channel for users, the DDoS communication does not create anomalous events. The role of the handler is played by a simple channel on an IRC server, often protected with a password. There is typically a default channel hard-coded into the bot, where it connects initially to learn where the current control channel is really located. The bot then jumps to the control channel. Channel hopping, even across IRC networks, can be implemented this way. Once in the current control channel, the bots are ready to respond to attacker commands to scan, DDoS someone, update themselves, shut down, etc.

Communicating via IRC offers the attacker several advantages. The server already exists and is maintained by others. The channel is not easily discovered among thousands of other chat channels (although it might be unusual for a channel full of real humans to suddenly have 10,000 “people” in it in a few minutes). Even when discovered, the channel can be removed only with the cooperation of the server’s administrators, which may be difficult to obtain in the case of foreign servers. Due to the distributed nature of IRC, not all clients have to access the same IRC server to get to the “handler channel”; they merely have to access a server within the same IRC network or alliance. Most tools appearing after Trinity (discussed in [DLD00]) take advantage of this alternate communication mechanism.
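The telltale sign noted above, a channel suddenly gaining thousands of members, can be checked from an IRC server's event stream. The sketch below counts JOIN messages per channel in a sliding time window. It assumes relayed JOIN lines of the form `:nick!user@host JOIN #channel`, where some servers prefix the channel with `:`. The function name, the 300-second window, and the 1,000-join threshold are hypothetical tuning choices.

```python
from collections import defaultdict, deque

def join_burst_channels(events, window_seconds=300, threshold=1000):
    """events: iterable of (timestamp_seconds, raw_irc_line) in time order.
    Return the channels that received at least `threshold` JOINs within
    some span of `window_seconds`."""
    recent = defaultdict(deque)   # channel -> timestamps of recent JOINs
    flagged = set()
    for ts, line in events:
        parts = line.split()
        # Expected shape: ":nick!user@host JOIN #channel" (channel may start with ':').
        if len(parts) >= 3 and parts[1].upper() == "JOIN":
            channel = parts[2].lstrip(":").lower()   # channel names are case-insensitive
            q = recent[channel]
            q.append(ts)
            while q and ts - q[0] > window_seconds:  # drop JOINs outside the window
                q.popleft()
            if len(q) >= threshold:
                flagged.add(channel)
    return flagged

# Hypothetical traffic: 2,000 bots joining in 200 seconds, plus a slow human channel.
events = [(i * 0.1, f":bot{i}!x@10.0.0.{i % 250} JOIN #hidden") for i in range(2000)]
events += [(i * 60.0, f":user{i}!y@example.net JOIN #chat") for i in range(20)]
events.sort()
print(join_burst_channels(events))   # {'#hidden'}
```

A real deployment would also track PART and QUIT messages and account for legitimately popular channels. The sketch shows only the rate test.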

As a means of hardening IRC-based communications, attackers regularly compromise hosts and turn them into rogue IRC servers, often using nonstandard ports (instead of the typical 6667/tcp that regular IRC servers use). Another mechanism, made trivial by Phatbot, is to turn some of the bots into TCP proxies on nonstandard ports, which in turn connect to the real IRC servers on standard ports. Either way, the added stepping stone in the command and control channel easily defeats many incident responders trying to identify and disable botnets.

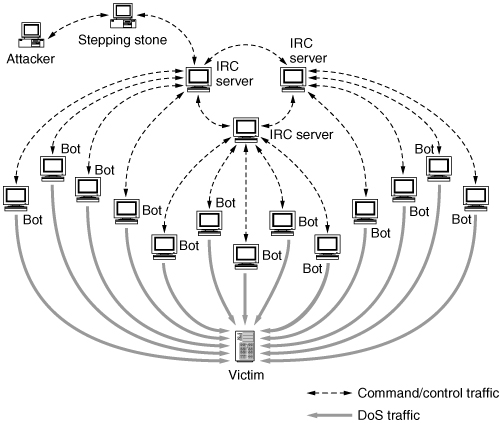

The attacker’s communication with agents via IRC is illustrated in Figure 4.9.

Figure 4.9. IRC-based DDoS network

4.2.3 Malware Update

Like everyone else, attackers need to update the code for their tools. DDoS attackers essentially want a software update mechanism analogous to the update function available in many common desktop operating systems, though, of course, without the machine owner controlling the update process. Using the same mechanism to perform updates as was used for initial recruitment (scanning for machines with attack code and planting new code) is noisy and not always effective, since some attack tools patch the vulnerability they used to gain entrance to ensure that no one else can take over their agents. The attacker then cannot break in the same way as before. Many existing tools and bots instead distribute updates by sending a command that tells each agent to download a newer version of the code from some source, such as a Web server.

With the increased use of peer-to-peer networks, attackers are already looking at using peer-to-peer mechanisms for malicious activity. The Linux Slapper worm, for example, used a peer-to-peer mechanism that was claimed to handle millions of peers. More recently, Phatbot has adopted peer-to-peer communication using the “WASTE” protocol, linking to other peers by using Gnutella caching servers to appear to be a Gnutella client (see http://www.lurhq.com/phatbot.html). Using this mechanism, attackers can organize their agents into peer-to-peer networks to propagate new code versions or even to control the attack. The robustness and reliability of peer-to-peer communication could make such DDoS networks even more threatening and harder to dismantle than today’s.

4.2.4 Unwitting Agent Scenario

There is also a class of DDoS attacks that engage computers with vulnerabilities whose exploitation does not necessarily require installing any malicious software on the machine but, instead, allows the attacker to control these hosts to make them generate the attack traffic. The attacker assembles a list of vulnerable systems and, at the time of the attack, has agents iterate through this list sending exploit commands to initiate the traffic flow. The generated traffic appears to be legitimate. For example, the attacker can misuse a vulnerability present in a Web server to cause it to run the PING.EXE program.2 Some researchers have called these unwitting agents.

The distinction between an “unwitting agent” and other DDoS attack scenarios is subtle. Rather than a remotely executable vulnerability being used to install malicious software, the vulnerability is used to execute legitimate software already on the system. A more complete description can be found in a talk by David Dittrich (see http://staff.washington.edu/dittrich/talks/first/).

Although attacks generated by unwitting agents, like the Web server vulnerability used to run PING.EXE, are similar in some respects to reflection attacks, they are not identical. In most reflection attacks, the attacker misuses a perfectly legitimate service, generating legitimate requests with a fake source address. In unwitting agent attacks, the misused service possesses a remotely exploitable vulnerability that enables the attacker to initiate attack traffic. Patching this vulnerability will immunize unwitting agents against misuse, while defense against reflection attacks is far more complex and difficult.

Unwitting agents cannot be identified by remote port-scanning tools (e.g., RID [Bru] or Zombie Zapper [Tea]), nor can they be found by running file system scanners such as NIPC’s find_ddos or antivirus software. This is because there is no malicious software or obscure open ports, just a remotely exploitable vulnerability. The vulnerable machines may be identified by monitoring network traffic, looking for DDoS attack traffic. They can also be discovered by doing typical vulnerability scans with programs such as Nessus (http://www.nessus.org).

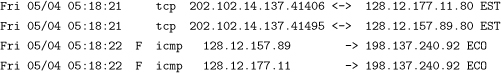

An example of an unwitting agent attack is the ICMP Echo Request (ping) flood leveled at www.whitehouse.gov on May 4, 2001. The attack misused a Microsoft IIS server vulnerability to trigger a ping application on unwitting agents and start the flood. It was reported that hundreds of systems worldwide were taking part in the flood. The systems were identified as running Windows 2000 and NT, and some administrators found PING.EXE running on their systems, targeting the IP address of www.whitehouse.gov. Since ping is a legitimate application, antivirus software cannot help in detecting or disabling this attack. A message to the UNISOG mailing list shown in the sidebar provides some more technical information about the attack.

The Power bot used the same mechanism for some of its flooding. The recruited bots would use the scanning techniques described earlier to identify unwitting agents. When an attack was initiated, the bots would send exploit code to the agent’s vulnerable Web server to start the flood. The exploit details are given in the sidebar “Excerpt from ‘Power Bot’ Analysis.”

4.2.5 Attack Phase

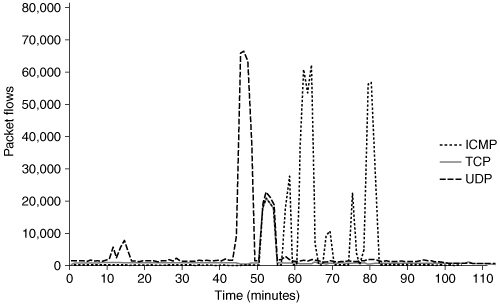

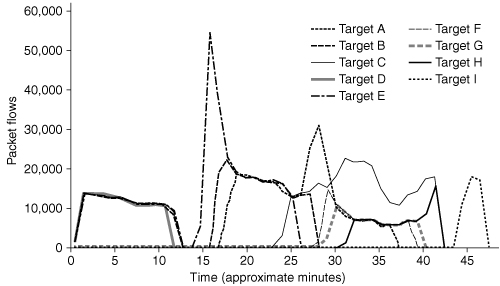

Some attacks are prescheduled—hard-coded in the propagated code. However, most attacks occur when an attacker disseminates a command from his handlers to the agents. During the attack, the control traffic has mostly subsided. Depending on the type of attack tool used, the attacker may or may not be able to stop the ongoing attack. The duration of the attack is either specified in the attacker’s command or controlled by default variable settings (e.g., 10 minutes of flooding). It could very well be that the attacker has moved on by the time the flooding has started. However, it is likely that the attacker is observing the ongoing attack, looking for its effects on test targets. Some tools, like Shaft [DLD00], have the ability to provide feedback on flood statistics. Figure 4.10 shows the initial compromise and test runs of the Shaft tool. The attacker is testing several attack types, such as ICMP, TCP SYN, and UDP floods, which we will discuss in Section 4.3, prior to the real full-fledged attack aimed at multiple targets shown in Figure 4.11.

Figure 4.10. Illustration of test runs of the Shaft tool

Figure 4.11. Illustration of full-scale attack with the Shaft tool

Date: Fri, 04 May 2001 14:26:29 -0700

From: Computer Security Officer <[email protected]>

Subject: [unisog] DDoS against www.whitehouse.gov

The attack exploited vulnerable IIS5 servers on Win2K and WinNT systems.

Immediately prior to the attack we see an incoming port 80 connection from IP address 202.102.14.137 (CHINANET Jiangsu province network) to each of the systems that subsequently began pinging 198.137.240.92. The argus log looks in part like this.

Each of the systems reviewed so far had two ping processes running. One of the hosts had the following in its IIS log file.

12:21:36 202.102.14.137 GET /scripts/../../winnt/system32/ping.exe 200

12:29:29 202.102.14.137 GET /scripts/../../winnt/system32/ping.exe 200

While I am surprised that such a simple exploit could work, it looks like it may be exactly what happened.

The attack was targeted at less than 2% of the total residence network population so it was probably mapped out earlier. ZDNet has a story running that indicated that we were not the only one used in this way.

We are issuing an alert to our dorm network users to update their systems with the relevant security patches. We’ve been working so hard at cleaning up the Linux boxes that we’ve tended to ignore the Windows boxes. Not any more.

Stephen

Excerpt from “Power Bot” Analysis

The HTTP GET request exploiting the Web server vulnerability (as seen by the ngrep utility from http://www.packetfactory.net/Projects/Ngrep/) and the corresponding flood traffic generated by the request:

T 2001/06/08 02:20:09.406262 10.0.90.35:2585 -> 192.168.64.225:80 [AP]

GET /scripts/..%c1%9c../winnt/system32/cmd.exe?/c+ping.exe+"-v"+igmp+"

-t"+"-l"+30000+10.2.88.84+"-n"+9999+"-w"+10..

I 2001/06/08 02:20:09.430676 192.168.64.225 -> 10.2.88.84 8:0 7303@0:1480

...c....abcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnop

qrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopq

rstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqr

stuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrs

tuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrst

uvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstu

vwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuv

wabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvw

abcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwa

bcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwabcdefghijklmnopqrstuvwab

..........

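The exploit is contained in the embedded sequence %c1%9c, an overlong (and therefore invalid) UTF-8 encoding of the backslash character. The vulnerable IIS server decoded this sequence only after checking the path for directory traversal, so the request escaped the /scripts directory and executed the command shell /winnt/system32/cmd.exe.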
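The decoding trick in the sidebar can be reproduced directly. A two-byte UTF-8 sequence carries 11 payload bits, so a lax decoder that does not reject overlong forms turns 0xC1 0x9C into 0x5C (a backslash) and 0xC0 0xAF into 0x2F (a forward slash). A strict decoder, such as Python's, refuses both sequences. The defensive check below flags request paths that are invalid UTF-8 after percent-decoding or that contain traversal sequences or system executables. The helper names and the pattern list are hypothetical heuristics, not a complete intrusion detection rule.

```python
import re
from urllib.parse import unquote_to_bytes

def lax_decode_two_byte(b1: int, b2: int) -> str:
    """Decode a 2-byte UTF-8 sequence the way a lax decoder might, without
    rejecting overlong forms (strict UTF-8 forbids lead bytes 0xC0 and 0xC1)."""
    return chr(((b1 & 0x1F) << 6) | (b2 & 0x3F))

print(repr(lax_decode_two_byte(0xC1, 0x9C)))   # '\\'  (a backslash)
print(repr(lax_decode_two_byte(0xC0, 0xAF)))   # '/'

# Heuristic patterns: traversal sequences or requests for system executables.
SUSPICIOUS = re.compile(r"\.\./|\.\.\\|(cmd|ping)\.exe", re.IGNORECASE)

def suspicious_request(path: str) -> bool:
    """Flag a path that is invalid UTF-8 once percent-decoded (overlong forms
    included) or that matches one of the suspicious patterns."""
    raw = unquote_to_bytes(path)
    try:
        decoded = raw.decode("utf-8")   # strict decoding rejects overlong forms
    except UnicodeDecodeError:
        return True
    return SUSPICIOUS.search(decoded) is not None

for p in ["/scripts/..%c1%9c../winnt/system32/cmd.exe",
          "/scripts/../../winnt/system32/ping.exe",
          "/index.html"]:
    print(p, suspicious_request(p))   # True, True, False
```

Checking the path after full, strict decoding is the key design point. The IIS flaw arose because the traversal check and the decoding happened in the opposite order.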

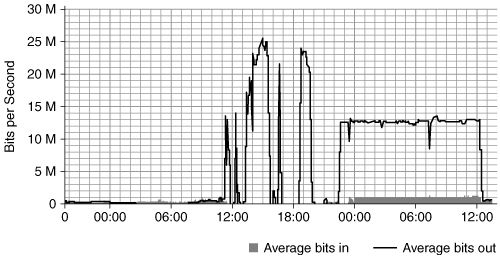

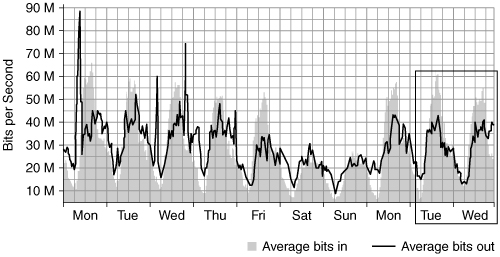

During the attack phase, levels of network activity can be above normal, depending on the type of attack. In a flooding attack, the effect is felt most strongly at the aggregation point, that is, the target, where the flows from all agents converge. This is illustrated in Figure 4.12.

Figure 4.12. Floods from the perspective of the victim

In another example, you can observe unusual levels of traffic in Figure 4.13, as seen from the victim’s perspective. The quick fluctuations between 12:00 and 18:00 represent a full-scale attack, whereas the somewhat tamed flood between 00:00 and 12:00 reflects a repeated attack, only this time mitigated by a defense mechanism. The aforementioned fluctuations are not due to variations in the attack, but solely to the measuring equipment collapsing under the load.

Figure 4.13. Illustration of a full-scale attack as seen by a victim. Average bits out denote the traffic received by the victim.

From a different perspective, the same attack viewed from an upstream provider a few hops into the Internet (Figure 4.14) barely changes the observed traffic levels. (The box on the right side of Figure 4.14 indicates the attack timeframe shown in Figure 4.13.) It is important to realize that traffic that overwhelms the target or victim can have little impact on an upstream provider, and thus may produce no anomalous observations there.

Figure 4.14. Illustration of a full-scale attack seen further upstream. Average bits in denote the traffic sent to the victim.

4.3 Semantic Levels of DDoS Attacks

There are several methods of causing a denial of service. Creating a DoS effect is all about breaking things or making them fail. There are many ways to make something fail, and often multiple vulnerabilities will exist in a system and an attacker will try to exploit (or target) several of them until she gets the desired result: The target goes offline.

4.3.1 Exploiting a Vulnerability

As mentioned in Chapter 2, vulnerability attacks involve sending a few well-crafted packets that take advantage of an existing vulnerability in the target machine. For example, there was a bug in Windows 95 and NT, and in some Linux kernels, in handling improperly fragmented packets. Generally, when a packet is too large for a given network, it is divided into two (or more) smaller packets, and each of them is marked as a fragment. The mark indicates where the fragment's first and last bytes fall within the original packet. At the receiver, fragments are reassembled into the original packet before processing, so the fragment marks must fit together properly. The vulnerability in the above kernels causes the machine to become unstable when improperly fragmented packets are received, causing it to hang, crash, or reboot. This vulnerability can be exploited by sending two malformed UDP packets to the victim. There were several variations of this exploit (fragments that indicate a small overlap, a negative offset that overlaps the second packet before the start of the header in the first packet, and so on), known as the bonk, boink, teardrop, and newtear exploits [CER98b].
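The arithmetic behind the overlapping-fragment bugs can be sketched as follows. This is a simplified model, not code from any of the affected kernels: the `Fragment` type, the helper names, and the fragment sizes are hypothetical, chosen in the spirit of teardrop (a second fragment that lies entirely inside the first). A reassembly routine that trims the overlap and then computes the remaining length without a sanity check ends up with a negative length, which an unsigned C variable turns into an enormous copy size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Fragment:
    offset: int  # position of the fragment's first data byte in the original
    length: int  # number of data bytes carried by this fragment

    @property
    def end(self) -> int:
        return self.offset + self.length


def naive_copy_len(prev: Fragment, frag: Fragment) -> int:
    """Trim the overlap with prev, then compute the copy length unchecked.

    The result is masked to 32 bits to mimic storing it in an unsigned C int.
    """
    start = max(frag.offset, prev.end)      # skip bytes prev already supplied
    return (frag.end - start) & 0xFFFFFFFF  # negative lengths wrap around


def checked_copy_len(prev: Fragment, frag: Fragment) -> int:
    """Same trimming, but a fragment wholly inside prev contributes nothing."""
    start = max(frag.offset, prev.end)
    return max(frag.end - start, 0)


first, second = Fragment(0, 36), Fragment(24, 4)  # hypothetical sizes
print(naive_copy_len(first, second))    # 4294967288
print(checked_copy_len(first, second))  # 0
```

A C routine that trusted the unchecked value would try to copy roughly 4 GB into a small buffer; the checked version simply discards the redundant fragment.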

Vulnerability attacks are particularly dangerous because they can crash or hang a machine with just one or two carefully chosen packets. However, once the vulnerability is patched, the original attack becomes completely ineffective.

4.3.2 Attacking a Protocol

A classic example of a protocol attack is the TCP SYN flood. We first explain this attack and then describe the general features of protocol attacks.

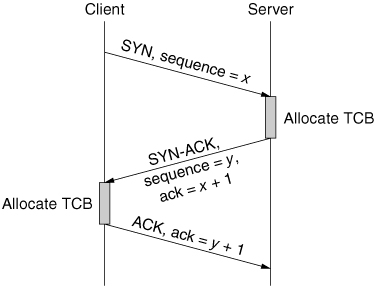

A TCP session starts with negotiation of session parameters between a client and a server. The client sends a TCP SYN packet to the server, requesting some service. In the SYN packet header, the client provides his initial sequence number, a unique per-connection number that will be used to keep count of data sent to the server (so the server can recognize and handle missing, misordered, or repeated data). Upon receipt of the SYN packet, the server allocates a transmission control block (TCB), storing information about the client. It then replies with a SYN-ACK, informing the client that its service request will be granted, acknowledging the client’s sequence number and sending information about the server’s initial sequence number. The client, which allocated its own transmission control block when it sent the SYN, then replies with an ACK to the server, which completes the opening of the connection. This message exchange is called a three-way handshake and is depicted in Figure 4.15.

Figure 4.15. Opening of TCP connection: three-way handshake

The potential for abuse lies in the early allocation of the server’s resources. When the server allocates his TCB and replies with a SYN-ACK, the connection is said to be half-open. The server’s allocated resources will be tied up until the client sends an ACK packet, closes the connection (by sending an RST packet), or until a timeout expires and the server closes the connection, releasing the buffer space. During a TCP SYN flooding attack, the attacker generates a multitude of half-open connections by sending SYN packets with spoofed source IP addresses, so that no final ACK ever arrives. These requests quickly exhaust the server’s TCB memory, and the server can accept no more incoming connection requests. Established TCP connections usually experience no degradation in service; however, as they complete and close, the TCB space they were using will be claimed by the attack rather than by new legitimate connections. In rare cases, the server machine crashes, exhausts its memory, or is otherwise rendered inoperative. In order to keep buffer space occupied for the desired time, the attacker needs to generate a steady stream of SYN packets toward the victim (to reserve again those resources that have been freed by timeouts or completion of normal TCP sessions).
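The mechanics can be sketched with a toy model of the half-open connection table. This is a minimal sketch under stated assumptions: a fixed-size table (`backlog`), a fixed timeout, and spoofed sources that never answer. The class, its methods, and the numbers are hypothetical and do not correspond to any real TCP stack.

```python
class HalfOpenTable:
    """Toy model of a server's table of half-open connections (hypothetical)."""

    def __init__(self, backlog: int, timeout: float):
        self.backlog = backlog   # maximum number of half-open connections
        self.timeout = timeout   # seconds before an unanswered SYN-ACK is dropped
        self.entries = {}        # (src, sport) -> arrival time of the SYN

    def _expire(self, now: float) -> None:
        for key, arrived in list(self.entries.items()):
            if now - arrived >= self.timeout:
                del self.entries[key]  # timeout releases the TCB

    def on_syn(self, src: str, sport: int, now: float) -> bool:
        """Return True if a TCB is allocated (SYN-ACK sent), False if dropped."""
        self._expire(now)
        if len(self.entries) >= self.backlog:
            return False
        self.entries[(src, sport)] = now
        return True

    def on_ack(self, src: str, sport: int, now: float) -> bool:
        """Final ACK of the handshake: the connection leaves the half-open table."""
        self._expire(now)
        return self.entries.pop((src, sport), None) is not None


table = HalfOpenTable(backlog=8, timeout=80.0)
# One spoofed SYN every 10 s; the forged sources never send the final ACK.
flood_ok = [table.on_syn(f"203.0.113.{k}", 1024 + k, now=10.0 * k) for k in range(31)]
print(all(flood_ok))                                   # True: every spoofed SYN got a TCB
print(table.on_syn("198.51.100.7", 40000, now=305.0))  # False: legitimate client refused
```

Once the table is full, a legitimate SYN succeeds only if it happens to arrive in the short gap after an entry times out and before the attacker's next SYN claims the freed slot.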

The TCP SYN flooding attack is described in detail in [CER96, SKK+97]. This is an especially vicious attack, as servers expect to see large numbers of legitimate SYN packets and cannot easily distinguish the legitimate from the attack traffic. No simple filtering rule can handle the TCP SYN flooding attack because legitimate traffic will suffer collateral damage. Several solutions for TCP SYN flooding have been proposed, and their detailed description is given in [SKK+97].

In order to perform a successful TCP SYN flooding attack, the attacker needs to locate an open TCP port at the victim. Then he generates a relatively low-volume packet stream; as few as ten packets per minute [SKK+97] can effectively tie up the victim’s resources. Another version of the TCP SYN flooding attack, random port TCP SYN flooding, is much less common. In it, the attacker generates a large volume of TCP SYN packets targeting random ports at the victim, with the goal of overwhelming the victim’s network resources rather than filling his buffer space.
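A rough way to see why such a low rate suffices: if the victim can hold at most $B$ half-open connections and each is released only after a timeout $T$, the attacker keeps the table full by sending at least $B$ spoofed SYNs every $T$ seconds. The values of $B$ and $T$ below are hypothetical, chosen only for illustration.

$$
r_{\min} = \frac{B}{T} = \frac{8}{80\ \text{s}} = 0.1\ \text{SYN/s} = 6\ \text{SYN/min}
$$

Smaller tables or longer timeouts lower the required rate further.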

Protocol attacks are often difficult to fix—if the fix requires changing the protocol, both the sender and receiver must use the new version of the protocol. Changing commonly used Internet protocols for any reason has proven difficult. In a few cases, clever use of the existing protocol can solve the problem. For example, TCP SYN cookies (described in Chapters 5 and 6) deal with the SYN flood attack without changing the protocol specification, only how the server’s stack processes the connections.
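The idea behind SYN cookies can be sketched briefly: rather than storing a TCB when a SYN arrives, the server encodes the connection's identity in its own initial sequence number and checks it against the acknowledgment number of the final ACK. This is a simplified sketch, not the encoding used by any particular operating system (real implementations also encode, for example, the negotiated MSS). The secret, the 64-second time slot, the 8/24-bit split, and the function names are all assumptions.

```python
import hashlib
import hmac

SECRET = b"hypothetical-server-secret"  # assumption: random key known only to the server
SLOT_SECONDS = 64                       # assumption: granularity of the time counter


def _cookie(src: str, sport: int, dst: str, dport: int, slot: int) -> int:
    """32-bit value: low 8 bits of the time slot, then a 24-bit keyed MAC."""
    msg = f"{src}|{sport}|{dst}|{dport}|{slot}".encode()
    mac = int.from_bytes(hmac.new(SECRET, msg, hashlib.sha256).digest()[:3], "big")
    return ((slot & 0xFF) << 24) | mac


def syn_ack_isn(src: str, sport: int, dst: str, dport: int, now: float) -> int:
    """On SYN: pick the server's ISN for the SYN-ACK; no TCB is allocated."""
    return _cookie(src, sport, dst, dport, int(now) // SLOT_SECONDS)


def ack_is_valid(src: str, sport: int, dst: str, dport: int, ack: int,
                 now: float, max_age: int = 1) -> bool:
    """On the final ACK: recompute the cookie and compare it with ack - 1."""
    cookie = (ack - 1) % 2**32
    slot = int(now) // SLOT_SECONDS
    return any(_cookie(src, sport, dst, dport, slot - age) == cookie
               for age in range(max_age + 1))


isn = syn_ack_isn("198.51.100.7", 40000, "192.0.2.10", 80, now=1000.0)
print(ack_is_valid("198.51.100.7", 40000, "192.0.2.10", 80, isn + 1, now=1010.0))  # True
print(ack_is_valid("198.51.100.7", 40000, "192.0.2.10", 80, 12345, now=1010.0))    # False
```

Only a client whose ACK carries a valid cookie ever gets a TCB, so spoofed SYNs consume no table space. The price is that any connection state not packed into those 32 bits is lost.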

Simple Nomad of the RAZOR team at BindView came up with an attack that targets the same resource as a SYN flood, the connection state record, but in a novel manner. Instead of creating lots of half-open connections, the attacker actually completes the three-way handshake and creates many established connection state records in the kernel’s TCB table. The trick that lets the attacker quickly set up a large number of established connections without using up the agent’s TCB memory is to program the attack with custom-crafted packets rather than the TCP API [Wright]. The agent machine never allocates a TCB. Instead, it listens promiscuously on the Ethernet card and responds to TCP packets based on header flags alone. From the victim’s replies, the agent infers the TCP header information that the victim expects to see in future packets. For instance, if the victim has sent a SYN-ACK packet with SEQ number 1232 and ACK number 540, the agent infers that it should next send an ACK packet with SEQ 540 and ACK 1233. While the server uses TCBs, the attacker does not. This attack can even forge the source address to make it look like packets are coming from a nonexistent host on the local network. Since the attacker is listening to communications on the local network in promiscuous mode, he will be able to hear and reply to the victim’s packets, even though they are sent to the spoofed address.
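The header arithmetic the agent performs can be sketched as a pure function of the received header. This is a minimal illustration of the SEQ/ACK inference described above, not code from the actual Naptha tools; the `Tcp` record and `stateless_reply` function are hypothetical, and only the SYN-ACK case is handled.

```python
from typing import NamedTuple, Optional


class Tcp(NamedTuple):
    flags: frozenset  # e.g. frozenset({"SYN", "ACK"})
    seq: int
    ack: int


def stateless_reply(pkt: Tcp) -> Optional[Tcp]:
    """Derive the next packet from the received header alone; no TCB is kept."""
    if pkt.flags == {"SYN", "ACK"}:
        # Our SEQ is whatever the victim acknowledged; we acknowledge its SYN,
        # which consumes one sequence number, hence seq + 1 (modulo 2**32).
        return Tcp(frozenset({"ACK"}), seq=pkt.ack, ack=(pkt.seq + 1) % 2**32)
    return None  # other packet types are not handled in this sketch


print(stateless_reply(Tcp(frozenset({"SYN", "ACK"}), seq=1232, ack=540)))
# Tcp(flags=frozenset({'ACK'}), seq=540, ack=1233)
```

Because everything needed for the reply is in the incoming header, the agent can sustain any number of connections at essentially no memory cost, which is exactly the asymmetry the attack exploits.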

The attacker leaves established TCP connections idle but responds to keep-alive packets so that the connections do not time out and free up kernel resources. He can even rate-limit the connection establishment to avoid SYN flooding protections within the stack [CER00]. This attack is called the Naptha attack [Bin00]. TCP SYN cookies are an ineffective defense against it, because they only avoid committing state to half-open connections, while Naptha completes every handshake.

To generalize, protocol attacks target an asymmetry inherent in certain protocols. This asymmetry enables the attacker to create a large amount of work and consume substantial resources at the server while sparing her own resources. Generally, the only fix that works against protocol attacks is a protocol patch that rebalances the asymmetry in the server’s favor.

4.3.3 Attacking Middleware

Attacks can be made on algorithms used by middleware, such as hash tables, which normally insert or look up each subsequent entry in constant time. By injecting values that force worst-case conditions to exist [CW03], such as all values hashing into the same bucket, the attacker can make the application spend time proportional to the number of stored entries on each subsequent entry, so that the total work grows quadratically rather than linearly [MvOV96].

As long as the attacker can freely send data that is processed using the vulnerable hash function, she can cause the CPU utilization of the server to exceed capacity and degrade what would normally be a subsecond operation into one that takes several minutes to complete. It does not take a very large number of requests to overwhelm some applications this way and render them unusable by legitimate users.
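The effect of forcing every key into one bucket can be sketched with a toy separate-chaining hash table. The deliberately weak hash (the sum of the characters' code points) is a hypothetical stand-in, chosen because anagrams collide under it; real attacks [CW03] target the actual hash function of the victim application. Inserting n colliding keys costs 0 + 1 + … + (n − 1) = n(n − 1)/2 comparisons instead of roughly n.

```python
from itertools import permutations


class ChainedHashTable:
    """Separate-chaining table that counts key comparisons."""

    def __init__(self, n_buckets: int = 1024):
        self.buckets = [[] for _ in range(n_buckets)]
        self.comparisons = 0

    @staticmethod
    def weak_hash(key: str) -> int:
        # Hypothetical weak hash: ignores character order, so anagrams collide.
        return sum(map(ord, key))

    def insert(self, key: str, value) -> None:
        bucket = self.buckets[self.weak_hash(key) % len(self.buckets)]
        for i, (k, _) in enumerate(bucket):
            self.comparisons += 1
            if k == key:
                bucket[i] = (key, value)  # existing key: overwrite
                return
        bucket.append((key, value))


table = ChainedHashTable()
attack_keys = ["".join(p) for p in permutations("abcdef")]  # 720 anagrams
for key in attack_keys:
    table.insert(key, None)
print(table.comparisons)  # 258840, i.e. 720 * 719 / 2
```

Doubling the number of attacker-supplied keys roughly quadruples the work, which is how a modest number of requests can turn a subsecond operation into minutes of CPU time.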

The victim can immunize the host from this kind of attack by changing (or even removing) the middleware to eliminate the vulnerability. Doing so may not always be easy, and if the attacker has truly found a previously unknown vulnerability, even detecting what he has done to cause the slowdown may be a challenge. Also, the middleware may not be changeable by the victim himself, who may need to wait for an update from the author or manufacturer of the middleware. Further, if the middleware is vital to the victim’s proper operation, disabling or removing it may prove to be as damaging as the attack itself.

Note that a node under a successful middleware attack might not appear to be in any trouble at all, except that those of its services that rely on the middleware are slow. The number of packets it receives might be unexceptional, other unrelated services on the node might be behaving properly, and there might be few telltale signs of an ongoing DoS attack, beyond services not working. Further, many DDoS defense mechanisms that aim to defend against flooding might be unable to help with this kind of attack.

4.3.4 Attacking an Application

The attacker may target a specific application and send packets to reach the limit of service requests this application can handle. For example, Web servers take a certain amount of time to serve normal Web page requests, and thus there will exist some finite number of maximum requests per second that they can sustain. If we assume that the Web server can process 1,000 requests per second to retrieve the files that make up a company’s home page, then at most 1,000 customers’ requests can be processed per second. For the sake of argument, let’s say the normal load a Web server sees daily is 100 requests per second (one tenth of capacity).

But what if an attacker controls 10,000 hosts, and can force each one of them to make one request every 10 seconds to the Web server? That is an average of 1,000 requests per second, and added to the normal traffic results in 110% of the server’s capacity. Now a large portion of the legitimate requests will not make it through because the server is saturated.

As with middleware attacks, an application attack might not cripple the entire host or appear as a vast quantity of packets. So, again, many defenses are not able to help defend against this kind of attack.

4.3.5 Attacking a Resource

The attacker may target a specific resource such as CPU cycles or router switching capacity. In January 2001, Microsoft suffered an outage that was reported to have been caused by a network configuration error, as described in Chapter 3. This outage disrupted a large number of Microsoft properties. When news of the outage came out, it was discovered that all of Microsoft’s DNS servers were on the same network segment, served by the same router. Attackers then targeted the routing infrastructure in front of these servers and brought down all of Microsoft’s online services.

Microsoft quickly moved to disperse its name servers geographically and to provide redundant routing paths to the servers to make it more difficult for someone to take them all out of service at once. Removing bottlenecks and increasing capacity can address resource attacks, though, again, an attacker may respond with a stronger attack. For companies with fewer resources than Microsoft, overprovisioning and geographically dispersing services may not be a financially viable option.

Attacking Infrastructure Resources

Infrastructure resources are particularly attractive targets because attacks on them can have effects on large segments of the Internet population. Routing is a key Internet infrastructure service that could be (and, indeed, sometimes has been) targeted by DoS attacks. Briefly, this infrastructure maintains the information required to deliver packets to their destinations, and thus is critical for Internet operation. At a high level, the service operates by exchanging information about paths through the Internet among routers, which build tables that help decide where to send packets. As a packet enters a router, the table is consulted to determine where to send the packet next.

This infrastructure can be attacked in many ways to cause denial of service [Gre02] [ZPW+02] [MV02] [Jou00]. In addition to flooding routers, the protocols used to exchange information have various potential vulnerabilities that could be exploited to deny service. These are not only bugs in the implementations of the protocols, but also characteristics of how the protocols were designed that could be misused by an attacker. For example, there are various methods by which an attacker could try to fill up a router’s tables with unimportant entries, perhaps leaving no room for critical entries or making the router run much slower. A router could be instructed by an attacker to send packets to the wrong place, preventing their delivery.3 Or the routing infrastructure could be made unstable by forcing extremely frequent changes in its routing information. There are a number of fairly effective approaches to countering these attacks [Tho] [Ope], and research is ongoing into even better methods of protecting this critical infrastructure [KLS00].

4.3.6 Pure Flooding

Given a sufficiently large number of agents, it is possible to simply send any type of packets as fast as possible from each machine and consume all available network bandwidth at the victim. This is a bandwidth consumption attack. The victim cannot defend against this attack on her own, since the legitimate packets get dropped on the upstream link, between the ISP and the victim network. Thus, frequently the victim requests help from its ISP to filter out offending traffic.
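To put a rough number on "sufficiently large": if the victim's access link has capacity $C$ and each agent can send at most $r$, the link is saturated once $N r \ge C$. Both values below are hypothetical, and the estimate ignores legitimate traffic and any losses upstream of the link.

$$
N \ge \frac{C}{r} = \frac{1\ \text{Gbit/s}}{1\ \text{Mbit/s}} = 1{,}000 \ \text{agents}
$$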

It is not unusual for the victim’s ISP to be affected by the attack as well (at least on the “customer attach router” connecting the victim to the ISP’s network). The ISP may have to filter on its upstream routers and even get its upstream provider or peers to filter traffic coming into their networks. In some cases, the attack traffic is very easy to identify and filter out (e.g., large-packet UDP traffic to unused ports, or packets with an IP protocol value of 255). In other cases, it may be very difficult to identify specific packet header fields that could be used for filtering (e.g., reflected DNS traffic, which could look like responses to legitimate DNS queries from clients within your network, or a flood of widely dispersed, legitimate-looking HTTP requests). If this happens, coarse filtering will save both the victim’s and the ISP’s resources, but it will also bring the victim’s legitimate customer traffic down to zero, achieving the DoS effect anyway.
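A filter for the easy cases mentioned above can be sketched as a simple predicate over packet header fields. The `Pkt` record, the set of UDP ports the victim actually serves, and the 1,000-byte size threshold are all hypothetical; a real deployment would express this as router access-control rules.

```python
from typing import NamedTuple


class Pkt(NamedTuple):
    proto: int     # IP protocol number (6 = TCP, 17 = UDP)
    dst_port: int  # destination port, 0 if the protocol has none
    length: int    # total IP packet length in bytes


SERVED_UDP_PORTS = {53, 123}  # hypothetical: UDP services the victim really runs
LARGE_UDP_BYTES = 1000        # hypothetical size threshold


def should_drop(p: Pkt) -> bool:
    if p.proto == 255:  # IP protocol 255 is reserved; no legitimate host sends it
        return True
    if p.proto == 17 and p.dst_port not in SERVED_UDP_PORTS and p.length >= LARGE_UDP_BYTES:
        return True  # large UDP packets aimed at ports nothing listens on
    return False


print(should_drop(Pkt(17, 31337, 1400)))  # True
print(should_drop(Pkt(6, 80, 60)))        # False: ordinary HTTP traffic passes
```

Reflected DNS traffic or legitimate-looking HTTP requests would pass such a predicate untouched, which is why the hard cases cannot be handled this way.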

4.4 Attack Toolkits

While some attackers are sophisticated enough to create their own attack code, far more commonly they use code written by others. Such code is typically built into a general, easily used package called an attack toolkit. It is very common today for attackers to bundle a large number of programs into a single archive file, often with scripts that automate its installation. Such a bundle is known as a blended threat, as discussed in Section 4.4.2.

4.4.1 Some Popular DDoS Programs

While there are numerous scripts that are used for scanning, compromise and infection of vulnerable machines, there are only a handful of DDoS attack tools that have been used to carry out the actual attacks. A detailed overview of these tools, along with a timeline of their appearance, is given in [HWLT01]. DDoS attack tools mostly differ in the communication mechanism deployed between handlers and agents, and in the customizations they provide for attack traffic generation. The following paragraphs provide a brief overview of these popular tools. The reader should bear in mind that features discussed in this overview are those that have been observed in instances of attack code detected on some infected machines. Many variations may (and will) exist that have not yet been discovered and analyzed.

Trinoo [Ditf] uses a handler/agent architecture, wherein an attacker sends commands to the handler via TCP and handlers and agents communicate via UDP. Both handler and agents are password protected to try to prevent them from being taken over by another attacker. Trinoo generates UDP packets of a given size to random ports on one or multiple target addresses, during a specified attack interval.

Tribe Flood Network (TFN) [Dith] uses a different type of handler/agent architecture. Commands are sent from the handler to all of the agents from the command line. The attacker does not “log in” to the handler as with trinoo or Stacheldraht. Agents can wage a UDP flood, TCP SYN flood, ICMP Echo flood, and Smurf attacks at specified or random victim ports. The attacker runs commands from the handler using any of a number of connection methods (e.g., remote shell bound to a TCP port, UDP-based client/server remote shells, ICMP-based client/server shells such as LOKI [rou97], SSH terminal sessions, or normal telnet TCP terminal sessions). Remote control of TFN agents is accomplished via ICMP Echo Reply packets. All commands sent from handler to agents through ICMP packets are coded, not cleartext, which hinders detection.

Stacheldraht [Ditg] (German for “barbed wire”) combines features of trinoo and TFN tools and adds encrypted communication between the attacker and the handlers. Stacheldraht uses TCP for encrypted communication between the attacker and the handlers, and TCP or ICMP for communication between handler and agents. Another added feature is the ability to perform automatic updates of agent code. Available attacks are UDP flood, TCP SYN flood, ICMP Echo flood, and Smurf attacks.

Shaft [DLD00] is a DDoS tool that shares a combination of features similar to those in trinoo, TFN, and Stacheldraht. Added features are the ability to switch handler and agent ports on the fly (thus hindering detection of the tool by intrusion detection systems), a “ticket” mechanism to link transactions, and a particular interest in packet statistics. Shaft uses UDP for communication between handlers and agents. Remote control is achieved via a simple telnet connection from the attacker to the handler. Shaft uses “tickets” for keeping track of its individual agents. Each command sent to the agent contains a password and a ticket. Both passwords and ticket numbers have to match for the agent to execute the request. Simple letter shifting (a Caesar cipher) is used to obscure passwords in sent commands. Agents can generate a UDP flood, TCP SYN flood, ICMP flood, or all three attack types. The flooding occurs in bursts of 100 packets per host (this number is hard-coded), with the source port and source address randomized. Handlers can issue a special command to agents to obtain statistics on malicious traffic generated by each agent. It is suspected that this is used to calculate the yield of a DDoS network.
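Letter shifting of the kind attributed to Shaft can be sketched as follows. The text does not give the shift Shaft used, so the value 3 and the password below are hypothetical. The sketch also shows why this obscures rather than protects: anyone who captures a command can undo the shift, at worst by trying all 26 possibilities.

```python
SHIFT = 3  # hypothetical shift; the actual value used by Shaft is not given here


def shift_letters(text: str, shift: int = SHIFT) -> str:
    """Caesar cipher: rotate letters by `shift`, leave other characters alone."""
    out = []
    for ch in text:
        if "a" <= ch <= "z":
            out.append(chr((ord(ch) - ord("a") + shift) % 26 + ord("a")))
        elif "A" <= ch <= "Z":
            out.append(chr((ord(ch) - ord("A") + shift) % 26 + ord("A")))
        else:
            out.append(ch)  # digits and punctuation pass through unchanged
    return "".join(out)


obscured = shift_letters("letmein")    # hypothetical password
print(obscured)                        # ohwphlq
print(shift_letters(obscured, -SHIFT)) # letmein
```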

Tribe Flood Network 2000 (TFN2K) [CERb] is an improved version of the TFN attack tool. It includes several features designed specifically to make TFN2K traffic difficult to recognize and filter; to execute commands remotely; to obfuscate the true source of the traffic; to transport TFN2K traffic over multiple protocols, including UDP, TCP, and ICMP; and to send “decoy” packets to confuse attempts to locate other nodes in a TFN2K network. TFN2K obfuscates the true traffic source by spoofing source addresses. Attackers can choose between random spoofing and spoofing within a specified range of addresses. In addition to flooding, TFN2K can also perform some vulnerability attacks by sending malformed or invalid packets, as described in [CER98a, CERa].

Mstream [CER01b, DWDL] generates a flood of TCP packets with the ACK bit set. Handlers can be controlled remotely by one or more attackers using a password-protected interactive login. The communication channels between attacker and handlers, and between handlers and agents, are configurable at compile time and have varied significantly from incident to incident. Source addresses in attack packets are spoofed at random. The TCP ACK attack exhausts network resources and will likely cause the victim to send a TCP RST to each spoofed source address (potentially also consuming the victim’s outgoing bandwidth).

Trinity is the first DDoS tool that is controlled via IRC. Upon compromise and infection by Trinity, each machine joins a specified IRC channel and waits for commands. Use of a legitimate IRC service for communication between attacker and agents replaces the classic independent handler and elevates the level of the threat, as explained in Section 4.2.2. Trinity is capable of launching several types of flooding attacks on a victim site, including UDP, IP fragment, TCP SYN, TCP RST, TCP ACK, and other floods.

From late 1999 through 2001, the Stacheldraht and TFN2K attack tools were the most popular. The Stacheldraht agent was bundled into versions of the t0rnkit rootkit and a variant of the 2001 Ramen worm. The 1i0n worm included the TFN2K agent code.

On the Windows side, a large number of blended threat rootkit bundles include the knight.c or kaiten.c DDoS bots. TFN2K was coded specifically to compile on Windows NT, and versions of the trinoo agent have also been seen on Windows systems. In fact, knight.c was originally coded for Unix systems, but can be compiled with the Cygwin development libraries. Using this method, nearly any Unix DDoS program could reasonably be ported to Windows, and in fact some Windows blended threat bundles are delivered in Unix tar-formatted archives that are unpacked with the Cygwin-compiled version of GNU tar [Dev].

Agobot and its descendant Phatbot saw very widespread use in 2003 and 2004. This blended threat is packed into a single program that some have called a “Swiss army knife” of attack tools. Phatbot implements two types of SYN floods, a UDP flood, an ICMP flood, the Targa flood (random IP protocol, fragmentation and fragment offset values, and spoofed source address), the wonk flood (one SYN packet followed by 1,023 ACK packets), and either a recursive HTTP GET flood or a single HTTP GET flood with a built-in delay in hours (either set by the user or randomly chosen). The latter, when distributed across a network of tens or hundreds of thousands of hosts, would look like a normal pattern of HTTP traffic that some defense mechanisms would find very difficult to detect and block.

4.4.2 Blended Threat Toolkits

Blended threats typically include some or all of the following components, which can vary due to operating system, degree of automation (for example, worms), author, etc.

• A Windows network service program. A tool commonly found in Windows blended threats is a program called Firedaemon. Firedaemon is responsible for registering programs to be run as servers, so they can listen on network sockets for incoming connections. Firedaemon would typically control the FTP server, IRC bounce program, and/or backdoor shell.

• Scanners. Various network scanners are included to help the attacker reconnoiter the local network and find other hosts to attack. These may be simple SYN scanners like synscan, TCP banner grabbers like mscan, or more full-featured scanners like nmap (http://www.nmap.org).

• Single-threaded DoS programs. While these programs may seem old-fashioned, a simple UDP or SYN flooder such as synk4 can still be effective against some systems. The attacker must log in to the host and run these commands from the command line, or use an IRC bot that is capable of running external commands, such as the Power bot.

• An FTP server. Installing an FTP server, such as Serv-U FTP daemon, allows an attacker (or software/media pirate who doubles as DDoS attacker) to upload files to the compromised host. These files are then served up by the next category of programs, the Warez bot.

• An IRC file service (Warez) bot. Pirated media files (music and video) and software programs are known as Warez. Bots that serve Warez are known as—you guessed it—Warez bots. Bots and IRC clients are able to transfer files using a feature of IRC called the Direct Client-to-Client (DCC) protocol.

One of the most popular Warez bots is called the iroffer bot. Large bot networks using the iroffer form XDCC Warez bot nets (a peer-to-peer DCC network) and rely on Serv-U FTP daemons for uploading gigabytes of pirated movies.4

• An IRC DDoS bot or DDoS agent. As mentioned earlier, standard DDoS tools like Stacheldraht or TFN, or IRC bots like GTbot or knight, are typically found in blended threats. These programs may be managed by Firedaemon on Windows hosts, or inetd or cron on Unix hosts.

• Local exploit programs. Since these kits are used for convenience, they often include some method of performing privilege escalation on the system, in the event they are loaded into a normal user account that was compromised through password sniffing. This allows the attacker to gain full administrative rights, at which point all the programs can then be installed completely on the compromised host.

• Remote exploit programs. Going along with the scanner program will often be a set of remote exploits that can be used to extend the attacker’s reach into your network, or use your host as a stepping stone to go attack another site. Scripts that automate the scanning and remote exploitation are often used, making the process as simple as running a single command and giving just the first one, two, or three octets of a network address.

• System log cleaners. Once the intruder has gained access to the system, she often wants to wipe out any evidence that she ever connected to the host. There are log cleaners for standard text log files (e.g., Unix syslog or Apache log files), or for binary log files (e.g., Windows Event Logs or Unix wtmp and lastlog files).

• Trojan Horse operating system program replacements. To provide backdoors to regain access to the system, or to make the system “lie” about the presence of the attackers’ running programs, network connections, and files/directories, attackers often replace some of the operating system’s external commands. On Unix systems, the candidate programs for replacement would typically be ls and find (replaced to hide files), ps and top (replaced to hide processes), netstat (replaced to hide network connections), and ifconfig (replaced to hide the fact the network interface is in promiscuous mode for sniffing).

• Sniffers. Installing a sniffer allows the attacker to steal more login account names and passwords, extending his reach into your network. Most sniffers look for commonly used protocols that put passwords in cleartext form on the network, such as telnet, ftp, and the IMAP and POP e-mail protocols. Some sniffers allow logging of the sniffed data to an “unlinked” (or removed) file that will not show up in directory listings, possibly encrypted, or even located on a remote host (Phantom sniffer).

As described earlier, Phatbot implements a large percentage of these functions in a single program, including its own propagation.

4.4.3 Implications

Security sites such as PacketStormSecurity.org have assembled large numbers of malicious programs. Some of the tools are clearly written for reuse and allow easy adaptation for a specific purpose, and others are clearly crippled so that script kiddies cannot easily apply them.

Hacker Web sites offer readily downloadable DDoS toolkits. This code can frequently be used without modification or real understanding, just by specifying a command to start recruiting agents and then, at the time of the attack, specifying another command with the target address and type of the attack. As a result, those who wish to use existing tools, or craft their own, have a ready supply of code with which to work. They must still learn how to recruit an attack network, how to keep it from being stolen by others, how to target their victims, and how to get around any defenses. With dedication and time, or money to buy these skills, this is not a significant obstacle.

4.5 What Is IP Spoofing?

One tactic used in malicious attacks, particularly in DDoS, is IP spoofing. In normal IP communications, the source and destination IP addresses in the packet header are set by the operating system’s standard network socket operations. IP spoofing occurs when a malicious program creates its own packets and does not set the true source IP address in the packet’s header. It is easy to craft individual packets with full control over the IP header and send them out over the network if one has sufficient privileges within the operating system. This is referred to as raw socket access.

A natural question to ask is why this capability exists in the TCP/IP stack at all. There are perfectly legitimate reasons to craft packets by hand and transmit them on the network, rather than only use functions provided by a given TCP/IP stack’s API: for example, in mobile IP environments, where a roaming host must use a “home” IP address in a foreign network [Per02], or in virtual private networks that set the host IP to an address local to the organization’s network. Regardless of the advisability of allowing use of raw sockets in the TCP/IP API, current operating systems lacking mandatory access control [Bell] and other advanced security features will have ways of getting around such limitations. Removal of raw sockets from the Windows API was proposed in 2001, and researchers showed many examples of programmers getting around the lack of raw sockets in earlier versions of Windows (e.g., the l0pht implemented a workaround for a lack of raw sockets in Windows 9x in l0phtcrack 3; PCAUSA provides a raw sockets implementation for Windows 9x, see http://www.pcausa.com/; WinPcap implements a means of sending raw packets, see http://netgroup-serv.polito.it/winpcap/). With the release of Windows XP Service Pack 2 in August 2004, Microsoft restricted the raw sockets API, which broke applications like the freely available nmap port scanner. In just a few days, a workaround was produced restoring the ability of nmap to craft custom packets. See http://seclists.org/lists/nmap-hackers/2004/Jul-Sep/0003.html. We must also live with the fact that even if the ability to spoof addresses did not exist in the first place, DDoS would still be not only possible but just as damaging. There are simply too many ways to perform viable denial-of-service attacks.

There are several levels of spoofing.

• Fully random IP addresses. The host generates IP addresses that are taken at random from the entire IPv4 space, 0.0.0.0–255.255.255.255. This strategy will sometimes generate addresses that should never appear on the public Internet, such as those in the 192.168.0.0/16 range reserved for private networks, as well as other nonroutable addresses, multicast addresses, broadcast addresses, and invalid addresses (e.g., 0.2.45.6). Some of these exotic addresses cause significant problems in their own right for routing hardware, which must process the spoofed packets, and in some cases they crash or lock up the router. However, most randomly generated addresses will be valid and routable. If the attacker cares little about whether a particular packet is delivered, she can send large numbers of randomly spoofed packets and expect that most of them will be delivered.

A frequently proposed defense against random spoofing is some form of ingress and egress filtering [FS00]. (See Sidebar “Ingress/Egress Filtering” for a complete description of these sometimes confusing terms and how they apply to the problem of IP spoofing.) This technique compares each packet’s source address with the range of IP addresses assigned to its source or destination network, depending on where the filtering router sits, and drops packets whose source addresses do not match, since they appear to be spoofed.

While ingress/egress filtering has the potential to greatly limit attackers’ ability to generate spoofed packets, it has to be widely deployed to really affect the DDoS threat. An edge network that performs this filtering mostly scrutinizes its own traffic to protect others and gains little direct benefit itself, which may explain why this type of filtering is still not widely deployed.

• Subnet spoofing. If a host resides in, say, the 192.168.1.0/24 network, it is relatively easy for it to spoof a neighbor on the same network. For example, 192.168.1.2 can easily spoof 192.168.1.45 or any other address in the 192.168.1.0/24 range, unless the network administrators have put in place protective measures that prevent an assigned Ethernet hardware address (MAC address) from being associated with any IP address other than its administratively assigned one. Such a fix is expensive to administer.

If the host is part of a larger network (for example, a /16 network that contains 65,536 addresses), then it could spoof addresses from the larger domain, assuming the protective measures outlined in the previous point have not been implemented.

Packets spoofed at the subnet level can be filtered by ingress/egress filtering only at the subnet level, since their source address is, by definition, from an assigned address range for a given network. Even with such filtering at the subnet level, spoofing can still make it difficult to identify the flooding host.

Ingress/Egress Filtering

The terms ingress and egress mean, respectively, the acts of entering and exiting. In an interconnected network of networks, such as the Internet, what leaves (egresses) one network will enter (ingress) another. To avoid confusion, it is extremely important to define clearly where the filtering is done with respect to the network whose traffic is being filtered.

If there were one “Big I” Internet, and we all connected our hosts directly to “The Internet,” life would be simple: we could just say “ingress means entering the Internet” and “egress means leaving the Internet,” and everything would be clear. There would be only one perspective. But there is no single “Internet” to which we all connect, and to make matters worse there are tier 1 and tier 2 network providers, as well as leaf networks (e.g., university and enterprise networks).

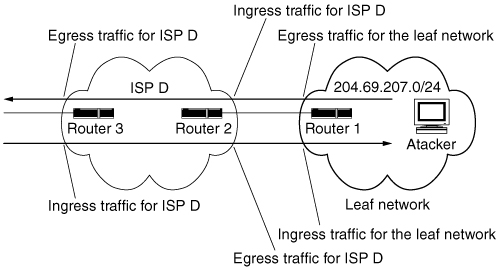

To see how confusion can occur, take the example given in RFC 2827 [FS00]. It discusses ingress filtering in terms of an attacker’s packets coming into an edge router of ISP D, as illustrated in the following figure.

Ingress/egress filtering example from RFC 2827

The authors of the RFC describe an attacker residing within the 204.69.207.0/24 address space, which is provided Internet connectivity by ISP D, as shown in the figure above. They describe an input traffic filter applied on the ingress (input) link of Router 2, which provides connectivity to the attacker’s network. This filter allows only packets with source addresses within the 204.69.207.0/24 prefix, prohibiting the attacker from spoofing addresses of any network other than 204.69.207.0/24.

The authors of this RFC discuss only ISP D filtering the traffic that comes into its edge router from its customer, shown in the figure as Router 2. So in their example, ISP D does ingress filtering to prevent an attacker from using “invalid” source addresses that appear to come from networks other than the customer’s address range.

The RFC does not consider the same filtering also being done by the customer on the router the customer owns, Router 1. (Such a customer is known as an edge network, an end customer of a network service provider, or simply a leaf network.) If outbound packets are filtered on Router 1 so that packets with source addresses from networks other than 204.69.207.0/24 cannot leave this leaf network, the customer is doing egress filtering.

It would be very confusing to always say “ingress means packets going to the ISP” and then explain that customer 204.69.207.0/24 does ingress filtering to block packets leaving its network, especially when the description spans more than one network (e.g., moving to the left in the figure).

Another possible source of confusion in the use of these terms is that the filtering to be performed could be on any criteria, not just on source addresses. While discussion of these terms often focuses on the issue of mitigating IP spoofing, the filtering could be on protocol type, port number, or any other criteria of importance.

Within the context of handling IP spoofing, there are two important reasons for making the ingress/egress distinction. Both have to do with the same filtering being done, for the same reason, at two locations (perhaps simultaneously): the customer network’s egress router and the ingress router of the ISP that connects to the customer’s network.

1. It can be argued that egress filtering of traffic leaving a leaf network is less expensive, CPU-wise, than equivalent ingress filtering of that same traffic as it comes into a tier 2 network provider. (It is even worse one level up, at tier 1 or backbone providers, which are not the place to do ingress filtering to stop spoofed packets coming in from their peers.)* Given this argument, egress filtering on leaf networks to stop attackers from spoofing off-network hosts is more desirable.

2. Spoofing can happen in either direction. Attackers outside your network can send packets inbound to your network pretending to be hosts on your network, and attackers inside your network can send packets outbound from your network pretending to be from hosts outside your network. This means that leaf networks should do both ingress and egress filtering to protect their network as well as other networks. Even if your ISP says it will filter packets coming into your network to prevent spoofing, you may want to do redundant filtering of those same packets to make sure that spoofing cannot take place. After all, don’t things fail sometimes?

In this book, we will be careful to use ingress filtering to mean filtering the traffic coming into your network and egress filtering to mean filtering the traffic leaving your network. We will be explicit when talking about filtering being done by an ISP or tier 1 provider versus filtering being done by a leaf network. Where the filters are applied and why really does matter.

• Spoofing the victim’s addresses. This is the reflection attack scenario. The host forges the source address in service request packets (for example, a SYN packet for a TCP connection) so that a flood of service replies is sent to the victim. If packets are not filtered as they leave the source network or as they enter the upstream ISP’s router, these forged packets make a reflection DoS attack possible.
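The three levels of spoofing can be compared against prefix-based filtering with a short Python sketch using the standard `ipaddress` module. The 192.168.0.0/16 site and the host’s 192.168.1.0/24 subnet follow the examples above; the other addresses come from documentation ranges and are purely illustrative, and the helper names are hypothetical. The filter is a simple prefix-membership test of the kind RFC 2827 describes, not a router configuration. The “plausible unicast” test relies on the module’s `is_private`/`is_reserved` flags, whose exact coverage can vary slightly between Python versions.

```python
import ipaddress
import random

# Hypothetical site from the text: a /16 network whose host sits in one /24.
SITE = ipaddress.ip_network("192.168.0.0/16")
SUBNET = ipaddress.ip_network("192.168.1.0/24")


def passes_filter(src: str, allowed: ipaddress.IPv4Network) -> bool:
    """Egress check: forward only if the source lies in the allowed prefix."""
    return ipaddress.ip_address(src) in allowed


def is_plausible_unicast(addr: ipaddress.IPv4Address) -> bool:
    """True unless the address is multicast, private, reserved, loopback, etc."""
    return not (addr.is_multicast or addr.is_private or addr.is_reserved
                or addr.is_loopback or addr.is_link_local or addr.is_unspecified)


def random_spoofing_stats(n: int, seed: int = 1):
    """Fractions of uniformly random sources that look routable / are dropped at SITE's edge."""
    rng = random.Random(seed)
    plausible = dropped = 0
    for _ in range(n):
        addr = ipaddress.IPv4Address(rng.getrandbits(32))
        plausible += is_plausible_unicast(addr)
        dropped += not passes_filter(str(addr), SITE)
    return plausible / n, dropped / n


if __name__ == "__main__":
    cases = {
        "random source": "203.0.113.9",
        "victim address (reflection)": "198.51.100.20",
        "subnet spoof, same /24": "192.168.1.45",
        "subnet spoof, elsewhere in /16": "192.168.200.7",
    }
    for label, src in cases.items():
        print(label, "| /16 filter passes:", passes_filter(src, SITE),
              "| /24 filter passes:", passes_filter(src, SUBNET))
    plausible, dropped = random_spoofing_stats(10_000)
    print(f"random sources that look routable: {plausible:.1%}")
    print(f"random sources dropped at the site edge: {dropped:.1%}")
```

In this model, a prefix filter at the site edge drops almost every randomly spoofed packet and, when the victim is off-network, every victim-spoofed one; subnet spoofing anywhere inside the /16 passes the site filter and is caught only by filtering at the /24 level. The random sample also reflects the point made above: the large majority of uniformly random IPv4 addresses look like ordinary routable unicast addresses.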

4.5.1 Why Is IP Spoofing Defense Challenging?

There is nothing to prevent someone from spoofing IP addresses, since all that is required is privileged access to a raw network socket. Preventing it requires filters at the edge of every network that allow only packets with source addresses from that network to leave. For example, the network 192.168.1.0/24 (a /24 network containing 256 addresses, 254 of them usable host addresses) should allow only packets carrying a source address in the range 192.168.1.1–192.168.1.254 to leave the network. Note the deliberate omission of the network and broadcast addresses, 192.168.1.0 and 192.168.1.255, which are used in conjunction with spoofing in a Smurf attack. If a network does not put this form of filtering in place on its router, machines in that network can spoof any IP address.
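The egress rule just described, together with the sidebar’s point that a leaf network should also filter inbound packets that claim to come from inside it, can be sketched as two small predicates. This is an illustration of the decision logic only, written in Python with the standard `ipaddress` module; the function names are hypothetical, real routers express these rules as access lists, and the ingress rule assumes it is applied on the interface facing the ISP.

```python
import ipaddress

# The leaf network used as an example in the text.
LOCAL = ipaddress.ip_network("192.168.1.0/24")


def egress_permits(src: str) -> bool:
    """Outbound rule: source must be a usable host address (.1 to .254) of LOCAL."""
    addr = ipaddress.ip_address(src)
    return (addr in LOCAL
            and addr != LOCAL.network_address     # 192.168.1.0
            and addr != LOCAL.broadcast_address)  # 192.168.1.255


def ingress_permits(src: str) -> bool:
    """Inbound rule: traffic arriving from outside must not claim a LOCAL source."""
    return ipaddress.ip_address(src) not in LOCAL


if __name__ == "__main__":
    for src in ("192.168.1.1", "192.168.1.254", "192.168.1.255",
                "192.168.1.0", "203.0.113.9"):
        print(src, "outbound:", egress_permits(src), "inbound:", ingress_permits(src))
```

Iterating over all 256 addresses of `LOCAL` and applying `egress_permits` admits exactly the 254 addresses that `LOCAL.hosts()` yields, matching the 192.168.1.1–192.168.1.254 range given above.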

There are sometimes good reasons not to turn this form of filtering on in your network. It requires a little extra network administration, and configuration mistakes may temporarily shut off your Internet access. Anti-spoofing egress filtering may also break mobile IP support. It may still be worth deploying for other security reasons, but you must realize that it mainly contributes to other networks’ security, not your own. Preventing spoofed traffic from reaching you would require expensive solutions; some ideas are discussed in Chapter 7.

There are research results suggesting that it may be possible to detect many spoofed packets in the core of the Internet [PL01, LMW+01, FS00]. However, these approaches are immature, they require deployment in core Internet routers, and there is no reason to believe any of them will be adopted in the foreseeable future.

4.5.2 Why DDoS Attacks Use IP Spoofing

IP spoofing is not necessary for a successful DDoS attack, since the attacker can exhaust the victim’s resources with a sufficiently large packet flood, regardless of the validity of source addresses. However, some DDoS attacks do use IP spoofing for several reasons.

• Hiding the location of agent machines. In single-point DoS attacks, spoofing was used to hide the location of the attacking host. Without knowing the real source, network operators find it hard to block the attack, remove the offending host from the network, or even clean it. In DDoS attacks, the agents are the path to the handler, which in turn provides an additional layer of indirection for the attacker. Hiding agents means hiding a quick path to the attacker.

• Reflector attacks. Reflector attacks require spoofing to be accomplished. The agents must be able to spoof the victim’s addresses in service requests directed at legitimate servers, to invoke replies that flood the victim.

• Bypassing DDoS defenses. Some DDoS defenses build a list of legitimate clients that are accessing the network, and give these clients preferential treatment during an attack. Other defenses attempt to share resources fairly among all current clients, assigning each source IP address its fair share. In both scenarios, IP spoofing defeats the separation of clients based on their IP addresses and lets attack packets assume the IP addresses of legitimate clients. Of course, this applies mainly to UDP-based attacks: an attacker typically cannot spoof source addresses on connection-based TCP services to bypass DDoS defenses, because the spoofed host never receives the SYN-ACK needed to complete the three-way handshake.
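To see why spoofing defeats per-source fairness, consider a toy model that assumes the defense divides the victim’s capacity among distinct source addresses using max-min fairness (each source gets an equal share, capped at its demand, with leftovers redistributed). The capacity, client counts, and rates are invented for illustration, the helper names are hypothetical, and real fair-queueing defenses are considerably more elaborate.

```python
def max_min_fair(capacity: float, demands: dict) -> dict:
    """Max-min fair allocation: every source gets an equal share, capped at its demand."""
    alloc = {}
    remaining = dict(demands)
    while remaining:
        share = capacity / len(remaining)
        satisfied = {s: d for s, d in remaining.items() if d <= share}
        if not satisfied:               # everyone left wants more than an equal share
            for s in remaining:
                alloc[s] = share
            break
        for s, d in satisfied.items():  # grant small demands in full, redistribute the rest
            alloc[s] = d
            capacity -= d
            del remaining[s]
    return alloc


CAPACITY = 1000                                 # hypothetical packets/s the victim can serve
LEGIT = {f"client{i}": 50 for i in range(10)}   # 10 legitimate clients, 50 pkt/s each


def legit_throughput(attack: dict) -> float:
    """Total rate granted to the legitimate clients when `attack` sources compete with them."""
    alloc = max_min_fair(CAPACITY, {**LEGIT, **attack})
    return sum(alloc[c] for c in LEGIT)


if __name__ == "__main__":
    # Attacker floods 10,000 pkt/s from a single real address.
    print(legit_throughput({"attacker": 10_000}))
    # The same flood spread over 1,000 spoofed source addresses, 10 pkt/s each.
    print(legit_throughput({f"spoof{i}": 10 for i in range(1000)}))
```

With one real address, the attacker is held to the capacity the legitimate clients leave unused, and all 10 clients receive their full 50 pkt/s. Once the same flood is spread across 1,000 spoofed sources, the legitimate clients’ combined share falls to about 9.9 pkt/s of the 500 pkt/s they need.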

4.5.3 Spoofing Is Irrelevant at 10,000+ Hosts

Looking at the history of DDoS attacks, one quickly realizes that given the sheer number of agents in a DDoS network, spoofing is not really necessary in a flooding attack, as described in Section 4.3.6. Agent networks of 10,000 hosts or more are not uncommon and are easily traded on IRC networks, where some malicious attackers use them as “currency.” As shown in Section 2.5.2, botnets of 400,000 hosts are not impossible to obtain. At this scale, eliminating spoofing entirely would help some DDoS defense approaches handle some forms of attack, but it would not by itself eliminate the DDoS problem.
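A back-of-the-envelope calculation makes the point concrete. Assuming, purely for illustration, that each agent contributes 128 kbit/s of upstream bandwidth (an invented figure, not a measurement from this book), the aggregate flood from agent networks of the sizes mentioned above is:

```latex
\[
10\,000 \times 128\ \text{kbit/s} = 1.28\ \text{Gbit/s},
\qquad
400\,000 \times 128\ \text{kbit/s} = 51.2\ \text{Gbit/s}.
\]
```

Even the smaller figure exceeds the capacity of a 1 Gbit/s link, whether or not the source addresses are genuine.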

4.6 DDoS Attack Trends

There is a constant arms race between the attackers and the defenders. As soon as there are effective defenses against a certain type of attack, the attackers change tactics to find a way around them. In response to improved security practices such as ingress/egress filtering, attackers have improved their tools, adding the option of specifying the spoofing level or spoofing netmask. A large number of attacks nowadays use subnet spoofing, as discussed in Section 4.5, which slips past anti-spoofing ingress/egress filters applied at the network edge.

The defenses that detect command and control traffic based on network signatures of known DDoS tools have led the attackers to start encrypting this traffic. As an added benefit, this encryption prevents the DDoS networks from being easily taken over by competing attacker groups, as well as from being easily discovered and dismantled.

New anti-analysis and anti-forensics techniques make it difficult to discover a tool’s purpose. Obfuscation of running code by encryption exists in both the Windows and Unix worlds. Code obfuscators such as burneye, Shiva, and burneye2 are under scrutiny by security analysts.

The trend of making DDoS tools and attack strategies more advanced in response to advanced defenses will likely continue. This was predicted in the original trinoo analysis [Ditf], and the trend has continued unabated. There are a variety of potential DDoS scenarios that would be very difficult for defense mechanisms to handle, yet painfully simple for attackers to perpetrate. Some of these were detailed in a CERT Coordination Center publication on DDoS attack trends [HWLT01].

To prevail, the defenders must always keep in mind that they have intelligent and agile adversaries. They themselves need to be intelligent and agile in response. While it is unlikely that we will ever design a perfect defense which handles all possible DDoS attacks, making determined progress in handling simple scenarios will discourage all but the most sophisticated attackers, and dramatically reduce the incidence of attacks.

In defending against an advanced attacker, one needs to get into the mindset of the attacker and pay close attention to DDoS attack tool capabilities and features. Attackers often must test their attack method in advance or probe your network for weaknesses, and they may also need to check whether their attack has succeeded. Evidence of this activity can be found in logs, or collected during an attack as you adjust your defenses. Maintaining this kind of awareness of what is happening on your network is known as “network situational awareness.”

When facing an advanced DDoS attack, one would be wise to study Boyd’s OODA Loop (which stands for Observe, Orient, Decide, and Act) [Boy]. Although the concept is complex, its essential aspects are observing what is happening on your networks and hosts; orienting yourself using a body of knowledge about DDoS attack tools and behavior, such as the one provided in this book; deciding among the available actions that can counter an attack (and the results you expect from each); and finally acting to counter the attack. Once you act, you immediately return to observation to determine whether you obtained the expected results and, if not, go through the loop again to choose another course of action.