Chapter 5. An Overview of DDoS Defenses

How can we defend against the difficult problems raised by distributed denial-of-service attacks? As discussed in Chapter 4, there are two classes of victims of DDoS attacks: the owners of machines that have been compromised to serve as DDoS agents and the final targets of DDoS attacks. Defending against the former attack is the same as defending against any other attempt to compromise your machine. We will concentrate in this chapter on the issue of defending the final target of the DDoS attack—the machine or network that the attacker wishes to deny service to.

We will begin by discussing the aspects of DDoS attacks that make defending against them difficult. We will then discuss the types of challenges a DDoS defense solution must overcome, and then cover basic concepts of defense: prevention versus detection and reaction, the basic goals to be achieved by a defense system, and where to locate the defenses in the network.

In spite of several years of intense research, these attacks still inflict a large amount of damage to Internet users. Why are these attacks possible? Can we identify some feature in the Internet design or in its core protocols, such as TCP and IP, that facilitates DoS attacks? Can we then remove or modify this feature to resolve the problem? Like all histories, the history of DDoS attacks discussed in Chapter 3 does not represent a final state, but is merely the prelude to the future. We have presented publically known details on exactly how today’s attacks are perpetrated, which has set the stage for discussing what you must do to counter them. Remember, however, that the current DDoS attack trends suggest, more than anything else, continued and rapid change for the future. Early analyses of DDoS attack tools like trinoo, TFN, Stacheldraht, and Shaft all made predictions about future development trends based on past history. Attackers continued in the directions identified, as well as going in new directions (e.g., using IRC for command and control, and integration of several other malicious functions). We should expect both the number and sophistication of attack tools to grow steadily. Therefore, the tools attackers will use in upcoming years and the methods used to defend against them will progress from the current states we describe in this book, requiring defenders to keep up to date on new trends and defense methods.

Another big problem in the arms race between the attackers and the defenders is the imbalance of the effort needed to take another step. Developing DDoS solutions is costly and they usually work for a small range of attacks. The attacker needs only to change a few lines of code, or gather more agents (hardly any effort at all) to bypass or overwhelm the existing defenses. The defenders, on the other hand, spend an immense amount of time and resources to augment their systems for handling new attacks. It seems like an unfair competition. But does it have to be so, or is there something we have overlooked that could restore the balance?

5.1 Why DDoS Is a Hard Problem

The victim of a vulnerability attack (see Chapter 2) usually crashes, deadlocks, or has some key resource tied up. Vulnerability attacks need only a few packets to be effective, and therefore can be launched from one or very few agents. In a flooding attack, the resource is tied up as long as the attack packets keep coming in, and is reclaimed when the attack is aborted. Flooding attacks thus need a constant flow of the attack packets into the victim network to be effective.

Vulnerability attacks target protocol or implementation bugs in the victim’s systems. They base their success on much the same premise as intrusion attempts and worms do, relying on the presence of protocol and implementation bugs in the victim’s software that can be exploited for the attacker’s purpose. While intruders and worm writers simply want to break into the machine, the aim of the vulnerability attack is to crash it or otherwise cripple it. Future security mechanisms for defending against intrusions and worms and better software writing standards are likely to help address DDoS vulnerability attacks. In the meantime, patching and updating server machines and filtering malformed packets offer a significant immunity to known vulnerability attacks. A resourceful attacker could still bypass these defenses by detecting new vulnerabilities in the latest software releases and crafting new types of packets to exploit them. This is a subtle attack that requires a lot of skill and effort on the part of the attacker, and is not very common. There are much easier ways to deny service.

Flooding attacks target a specific resource and simply generate a lot of packets that consume it. Naturally, if the attack packets stood out in any way (e.g., they had a specific value in one of the header fields), defense mechanisms could easily filter them out. Since a flooding attack does not need any specific packets, attackers create a varied mixture of traffic that blends with the legitimate users’ traffic. They also use IP spoofing to create a greater variety of packet sources and hide agent identities. The victim perceives the flooding attack as a sudden flood of requests for service from numerous (potentially legitimate) users, and attempts to serve all of them, ultimately exhausting its resources and dropping any surplus traffic it cannot handle. As there are many more attack packets than the legitimate ones, legitimate traffic stands a very low chance of obtaining a share of the resource, and a good portion of it gets dropped. But the legitimate traffic does not lose only because of the high attack volume. It is usually congestion-responsive traffic—it perceives packet drops as a sign of congestion and reduces its sending rate. This decreases the chance of obtaining resources even further, resulting in more legitimate drops. The following characteristics of DDoS flooding attacks make these attacks very effective for the attacker’s purpose and extremely challenging for the defense:

• Simplicity. There are many DDoS tools that can be easily downloaded or otherwise obtained and set into action. They make agent recruitment and activation automatic, and can be used by inexperienced users. These tools are exceedingly simple, and some of them have been around for years. Still, they generate effective attacks with little or no tweaking.

• Traffic variety. The similarity of the attack traffic to legitimate traffic makes separation and filtering extremely hard. Unlike other security threats that need specially crafted packets (e.g., intrusions, worms, viruses), flooding attacks need only a high traffic volume and can vary packet contents and header values at will.

• IP spoofing. IP spoofing makes the attack traffic appear as if it comes from numerous legitimate clients. This defeats many resource-sharing approaches that identify a client by his IP address. If IP spoofing were eliminated, agents could potentially be distinguished from the legitimate clients by their aggressive sending patterns, and their traffic could be filtered. In the presence of IP spoofing, the victim sees a lot of service initiation requests from numerous seemingly legitimate users. While the victim could easily tell those packets apart from ongoing communications with the legitimate users, it cannot discern new legitimate requests for service from the attack ones. Thus, the victim cannot serve any new users during the attack. If the attack is long, the damage to victim’s business is obvious.

• High-volume traffic. The high volume of the attack traffic at the victim not only overwhelms the targeted resource, but makes traffic profiling hard. At such high packet rates, the defense mechanism can do only simple per-packet processing. The main challenge of DDoS defense is to discern the legitimate from the attack traffic, at high packet speeds.

• Numerous agent machines. The strength of a DDoS attack lies in the numerous agent machines distributed all over the Internet. With so many agents, the attacker can take on even the largest networks, and she can vary her attack by deploying subsets of agents at a time or sending very few packets from each agent machine. Varying attack strategies defeat many defense mechanisms that attempt to trace back the attack to its source. Even in the cases when the attacker does not vary the attacking machines, the mere number of agents involved makes traceback an unattractive solution. What if we knew the identities of 10,000 machines that are attacking our network? This would hardly get us any closer to stopping the attack. The situation would clearly be simplified if the attacker were not able to recruit so many agents. As mentioned above, the general increase of Internet hosts and, more recently, the high percentage of novice Internet users suggest that the pool of potential agents will only increase in the future. Furthermore, the distributed Internet management model makes it unlikely that any security mechanism will be widely deployed. Thus, even if we found ways to secure machines permanently and make them impervious to the attacker’s intrusion attempts, it would take many years until these mechanisms would be sufficiently deployed to impact the DDoS threat.1

• Weak spots in the Internet topology. The current Internet hub-and-spoke topology has a handful of highly connected and very well provisioned spots that relay traffic for the rest of the Internet. These hubs are highly provisioned to handle heavy traffic in the first place, but if these few spots were taken down by an attacker or heavily congested, the Internet would grind to a halt. Amassing a large number of agent machines and generating heavy traffic passing through those hot spots would have a devastating effect on global connectivity. For further discussion of this threat, see [GOM03] or [AJB00, Bar02].

Let’s face it: A flooding DDoS attack seems like a perfect crime in the Internet realm. Means (attack tools) and accomplices (agent machines) are abundant and easily obtainable. A sufficient attack volume is likely to bring the strongest victim to its knees and the right mixture of the attack traffic, along with IP spoofing, will defeat attack filtering attempts. Since numerous businesses rely heavily on online access, taking that away is sure to inflict considerable damage to the victim. Finally, IP spoofing, numerous agent machines and lack of automated tracing mechanisms across the networks guarantee little to no risk to perpetrators of being caught.

The seriousness of the DDoS problem and the increased frequency, sophistication and strength of attacks have led to the advent of numerous defense mechanisms. Yet, although a great effort has been invested in research and development, the problem is hardly dented, let alone solved. Why is this so?

5.2 DDoS Defense Challenges

The challenges in designing DDoS defense systems fall roughly into two categories: technical challenges and social challenges. Technical challenges encompass problems associated with the current Internet protocols and characteristics of the DDoS threat. Social challenges, on the other hand, largely pertain to the manner in which a successful technical solution will be introduced to Internet users, and accepted and widely deployed by these users.

The main problem that permeates both technical and social issues is the problem of large scale. DDoS is a distributed threat that requires a myriad of overlapping “solutions” to various aspects of the DDoS problem, which must be spread across the Internet because attacking machines may be spread all over the Internet. Clearly, attack streams can only be controlled if there is a point of defense between the agents and the victims. One approach is to place one defense system close to the victim so that it monitors and controls all of the incoming traffic. This approach has many deficiencies, the main one being that the system must be able to efficiently handle and process huge traffic volumes. The other approach is to divide this workload by deploying distributed defenses. Defense systems must then be deployed in a widespread manner to ensure effective action for any combination of agent and victim machines. As widespread deployment cannot be guaranteed, the technical challenge lies in designing effective defenses that can provide reasonable performance even if they are sparsely deployed. The social challenge lies in designing an economic model of a defense system in a manner that motivates large-scale deployment in the Internet.

5.2.1 Technical Challenges

The distributed nature of DDoS attacks, similarity of the attack packets to the legitimate ones, and the use of IP spoofing represent the main technical challenges to designing effective DDoS defense systems, as discussed in Section 5.1. In addition to that, the advance of DDoS defense research has historically been hindered by the lack of attack information and absence of standardized evaluation and testing approaches. The following list summarizes and discusses technical challenges for DDoS defense.

• Need for a distributed response at many points in the Internet. There are many possible DDoS attacks, very few of which can be handled only by the victim. Thus, it is necessary to have a distributed, possibly coordinated, response system. It is also crucial that the response be deployed at many points in the Internet to cover diverse choices of agents and victims. Since the Internet is administered in a distributed manner, wide deployment of any defense system (or even various systems that could cooperate) cannot be enforced or guaranteed. This discourages many researchers from even considering distributed solutions.

• Lack of detailed attack information. It is widely believed that reporting occurrences of attacks damages the business reputation of the victim network. Therefore, very limited information exists about various attacks, and incidents are reported only to government organizations under obligation to keep them secret. It is difficult to design imaginative solutions to the problem if one cannot become familiar with it. Note that the attack information should not be confused with attack tool information, which is publicly available at many Internet sites. Attack information would include the attack type, time and duration of the attack, number of agents involved (if this information is known), attempted response and its effectiveness, and damages suffered. Appendix C summarizes the limited amount of publicly available attack information.

• Lack of defense system benchmarks. Many vendors make bold claims that their solution completely handles the DDoS problem. There is currently no standardized approach for testing DDoS defense systems that would enable their comparison and characterization. This has two detrimental influences on DDoS research: (1) Since there is no attack benchmark, defense designers are allowed to present those tests that are most advantageous to their system; and (2) researchers cannot compare actual performance of their solutions to existing defenses; instead, they can only comment on design issues.

• Difficulty of large-scale testing. DDoS defenses need to be tested in a realistic environment. This is currently impossible due to the lack of large-scale test beds, safe ways to perform live distributed experiments across the Internet, or detailed and realistic simulation tools that can support several thousand nodes. Claims about defense system performance are thus made based on small-scale experiments or simulations and are not credible.

This situation, however, is likely to change soon. The National Science Foundation and the Department of Homeland Security are currently funding a development of a large-scale test bed and have sponsored research efforts to design benchmarking suites and measurement methodology for security systems evaluation [USC]. We expect that this will greatly improve quality of research in DDoS defense field. Some test beds are in use right now by DDoS researchers (e.g. PlanetLab [BBC+04] and Emulab/Netbed [WLS+02]).

5.2.2 Social Challenges

Many DDoS defense systems require certain deployment patterns to be effective. Those patterns fall into several categories.

• Complete deployment. A given system is deployed at each host, router, or network in the Internet.

• Contiguous deployment. A given system is deployed at hosts (or routers) that are directly connected.

• Large-scale, widespread deployment. The majority of hosts (or routers) in the Internet deploy a given system.

• Complete deployment at specified points in the Internet. There is a set of carefully selected deployment points. All points must deploy the proposed defense to achieve the desired security.

• Modification of widely deployed Internet protocols, such as TCP, IP or HTTP.

• All (legitimate) clients of the protected target deploy defenses.

None of the preceding deployment patterns are practical in the general case of protecting a generic end network from DDoS attacks (although some may work well to protect an important server or application that communicates with a selected set of clients). The Internet is extremely large and is managed in a distributed manner. No solution, no matter how effective, can be deployed simultaneously in hundreds of millions of disparate places. However, there have been quite a few cases of an Internet product (a protocol, an application, or a system) that has become so popular after release that it was very widely deployed within a short time. Examples include Kazaa, the SSH (Secure Shell) protocol, Internet Explorer, and Windows OS. The following factors determine a product’s chances for wide deployment:

• Good performance. A product must meet the needs of customers. The performance requirement is not stringent, and any product that improves the current state is good enough.

• Good economic model. Each customer must gain direct economic benefit, or at least reduce the risk of economic loss, by deploying the product. Alternately, the customer must be able to charge others for improved services resulting from deployment.

• Incremental benefit. As the degree of deployment increases, customers might experience increased benefits. However, a product must offer considerable benefit to its customers even under sparse partial deployment.

Development of better patch management solutions, better end-host integrity and configuration management solutions, and better host-based incident response and forensic analysis solutions will help solve the first phase of DDoS problems—the ability to recruit a large agent network. Building a DDoS defense system that is itself distributed, with good performance at sparse deployment, with a solid economic model and an incremental benefit to its customers, is likely to ensure its wide deployment and make an impact on second-phase DDoS threat—defending the target from an ongoing attack.

In the remainder of this chapter we discuss basic DDoS defense approaches at a high level. In Chapter 6, we get very detailed and describe what steps you should take today to make your computer, network, or company less vulnerable to DDoS attacks, and what to do if you are the target of such an attack. In Chapter 7, we provide deeper technical details of actual research implementations of various defense approaches. This chapter is intended to familiarize you with the basics and to outline the options at a high conceptual level.

5.3 Prevention versus Protection and Reaction

As with handling other computer security threats, there are two basic styles of protecting the target of a DDoS attack: We can try to prevent the attacks from happening at all, or we can try to detect and then react effectively when they do occur.

5.3.1 Preventive Measures

Prevention is clearly desirable, when it can be done. A simple and effective way to make it impossible to perform a DDoS attack on any Internet site would be the best solution, but it does not appear practical. However, there is still value in preventive measures that make some simple DDoS attacks impossible, or that make many DDoS attacks more difficult. Reasonably effective preventive defenses deter attackers: If their attack is unlikely to succeed, they may choose not to launch it, or at least choose a more vulnerable victim. (Remember, however, that if the attacker is highly motivated to hit you in particular, making the attack a bit more difficult might not deter her.)

There are two ways to prevent DDoS attacks: (1) We can prevent attackers from launching an attack, and (2) we can improve our system’s capacity, resiliency, and ability to adjust to increased load so that an ongoing attack fails to prevent our system from continuing to offer service to its legitimate clients.

Measures intended to make DDoS attacks impossible include making it hard for attackers to compromise enough machines to launch effective DDoS attacks, charging for network usage (so that sending enough packets to perform an effective DDoS attack becomes economically infeasible), or limiting the number of packets forwarded from any source to any particular destination during a particular period of time. Such measures are not necessarily easy to implement, and some of them go against the original spirit of the Internet, but they do illustrate ways in which the basis of the DoS effect could be undermined, at least in principle.

Hardening the typical node to make it less likely to become a DDoS agent is clearly worthwhile. Past experience and common sense suggest, however, that this approach can never be completely effective. Even if the typical user’s or administrator’s vigilance and care increase significantly, there will always be machines that are not running the most recently patched version of their software, or that have left open ports that permit attackers to compromise them. Nonetheless, any improvement in this area will provide definite benefits in defending against DDoS attacks, and many other security threats such as intrusions and worms. More effective ways to prevent the compromise of machines would be extremely valuable. Similarly, methods that might limit the degree of damage that an attacker can cause from a site after compromising it might help, provided that the damage limitation included preventing the compromised site from sending vast numbers of packets.

Hardening a node or an entire installation to protect it from swelling the ranks of a DDoS army is no different from hardening them to protect from other network threats. Essentially, this is a question of computer and network hygiene. Entire books are written on this subject, and many of the necessary steps depend very much on the particular operating system and other software the user is running. If the reader does not already have access to such a book, many good ones can be found on the shelves of a typical bookstore that stocks computer books. So other than reiterating the vital importance of making it hard for an attacker to take control of your node, for complete details we refer the reader to resources specific to the kinds of machines, operating systems, and applications deployed.

While perfectly secure systems are a fantasy, not a feature of the next release of your favorite operating system, there are known things that can be done to improve the security of systems under development. More widespread use of these techniques will improve the security of our operating systems and applications, thus making our machines less likely to be compromised. Again, these are beyond the scope of this book and are subjects worthy of their own extended treatment. Possible avenues toward building more secure systems that might help us all avoid becoming unwilling draftees in a DDoS army in the future include the following:

• Better programmer education will lead to a generally higher level of application and operating system security. There are well-known methods to avoid common security bugs like buffer overflows, yet such problems are commonplace. A bettereducated programmer workforce might reduce the frequency of such problems.

• Improvements in software development and testing tools will make it easier for programmers to write code that does not have obvious security flaws, and for testers to find security problems early in the development process.

• Improvements in operating system security, both from a code quality point of view and from better designed security models for the system, will help. In addition to making systems harder to break into, these improvements might make it harder for an attacker to make complete use of a system shortly after she manages to run any piece of code on it, by compartmentalizing privileges or by having a higher awareness of proper and improper system operations.

• Automated tools for program verification will improve in their ability to find problems in code, allowing software developers to make stronger statements about the security of their code. This would allow consumers to choose to purchase more secure products, based on more than the word and reputation of the vendor. Similarly, development of better security metrics and benchmarks for security could give consumers more information about the risks they take when using a particular piece of software.

Beyond hardening nodes against compromise, prevention measures may be difficult to bring to bear against the DDoS problem. Many other types of prevention measures have the unfortunate characteristic of fundamentally changing the model of the Internet’s operation. Charging for packet sending or always throttling or metering packet flows might succeed in preventing many DDoS attacks, but they might also stifle innovative uses of the Internet. Anything based on charging for packets opens the Internet to new forms of attacks based on emptying people’s bank accounts by falsely sending packets under their identities. From a practical point of view, these types of prevention measures are unrealistic because they would require wholesale changes in the existing base of installed user machines, routers, firewalls, proxies, and other Internet equipment. Unless they can provide significant benefit to some segments of the Internet with more realistic partial deployment, they are unlikely to see real use.

Immunity to some forms of DDoS attack can potentially be achieved in a number of ways. For example, a server can be so heavily provisioned that it can withstand any amount of traffic that the backbone network could possibly deliver to it. Or the server and its router might accept packets from only a small number of trusted sites that will not participate in a DDoS attack. Of course, when designing a solution based on immunity, one must remember that the entire path to your installation must be made immune. It does little good to make it challenging to overload your server if it is trivial to flood your upstream ISP connection.

Some sites have largely protected themselves from the DDoS threat by these kinds of immunity measures, so they are not merely theoretical. For example, during the DDoS attacks on the DNS root servers in October 2002, all of the DNS servers were able to keep up with the DNS requests that reached them, since they all were sufficiently provisioned with processing power and memory [Nar]. Some of them, however, did not have enough incoming bandwidth to carry both the attack traffic and the legitimate requests. Those servers thus did not see all of the DNS requests that were sent to them. Other root servers were able to keep up with both the DDoS traffic (which consisted of a randomized mixture of ICMP packets, TCP SYN requests, fragmented TCP packets, and UDP packets) and the legitimate requests because these sites had ample incoming bandwidth, had mirrored their content at multiple locations, or had hardware-switched load balancing that prevented individual links from being overloaded. A number of DDoS attacks on large sites have failed because they targeted companies’ sites that have high-bandwidth provisions to handle the normal periodic business demand for download of new software products, patches, and upgrades.

The major flaw to the immunity methods as an overall solution to the DDoS problem is that known immunity methods are either very expensive or greatly limit the functionality of a network node, often in ways that are incompatible with the node’s mission. For example, limiting the nodes that can communicate with a small business’s Web site limits its customer base and makes it impossible for new customers to browse through its wares. Further, many immunity mechanisms protect only against certain classes of attacks or against attacks up to a particular volume. An immunity mechanism that rejects all UDP packets does not protect against attacks based on floods of HTTP requests, for example, and investing in immunity by buying bandwidth equal to that of your ISP’s own links will be a poor investment if the attacker generates more traffic than the ISP can accept. If attackers switch to a different type of DDoS attack or recruit vastly larger numbers of agents, the supposed immunity might suddenly vanish.

5.3.2 Reactive Measures

If one cannot prevent an attack, one must react to it. In many cases, reactive measures are better than preventive ones. While there are many DDoS attacks on an Internet-wide basis, many nodes will never experience a DDoS attack, or will be attacked only rarely. If attacks are rare and the costs of preventing them are high, it may be better to invest less in prevention and more in reaction. A good reactive defense might incur little or no cost except in the rare cases where it is actually engaged.

Reaction does not mean no preparation. Your reaction may require you to contact other parties to enlist their assistance or to refer the matter to legal authorities. If you know who to contact, what they can do for you, and what kind of information they will need to do it, your reaction will be faster and more effective. If your reaction includes locally deployed technical mechanisms that expect advice or confirmation from your system administrators, understanding how to interact with them and the likely implications of following (or not following) their recommendations will undoubtedly pay off when an attack hits. Certainly, with the current state of DDoS defense mechanisms, your preparation should include some ability to analyze what’s going on in your network. As discussed in Chapter 6, many sites have assumed a DDoS attack when actually there was a different problem, and their responses have thus been slow, expensive, and ineffective. Being well prepared to detect and react to DDoS attacks will prove far more helpful than anything you can buy or install.

Unlike preventive measures, reactive measures require attack detection. No reaction can take place until a problem is noticed and understood. Thus, the effectiveness of reactive measures to DDoS attacks depends not only on how well they reduce the DoS effect once they are deployed, but also on the accuracy of the system that determines which defenses are required to deal with a particular attack, when to invoke them, and where to deploy them. False positives, signals that DDoS attacks are occurring when actually they are not, may be an issue for the detection mechanism, especially if some undesirable costs or inconveniences are incurred when the reactive defense is deployed. At the extreme, if the detection mechanism falsely indicates that the reactive defense needs to be employed all the time, a supposedly reactive mechanism effectively becomes a preventive one, probably at a higher cost than having designed it to prevent attacks in the first place.

Reactive defenses should take effect as quickly as possible once the detection mechanism requests them. Taking effect does not mean merely being turned on, but reaching the point where they effectively stop (or, at least, reduce) the DoS effect. Presuming that there is some cost to engaging the reactive defense, this defense should be turned off as soon as the DoS attack is over. On the other hand, the defense must not be turned off so quickly that an attacker can achieve the DoS effect by stopping his attack and resuming it after a brief while, repeating the cycle as necessary.

Regardless of the form of defense chosen, the designers and users of the defenses must keep their real aim in mind. Any DoS attack, including distributed DoS attacks, aims to cripple the normal operation of its target. The attack’s goal is not really to deliver vast numbers of attack packets to the target, but to prevent the target from servicing most or all of its legitimate traffic. Thus, defenses must not only stop the attack traffic, but must let legitimate traffic through. If one does not care about handling legitimate traffic, a wonderful preventive defense is to pull out the network cable from one’s computer. Certainly, attackers will not be able to flood your computer with attack packets, but neither can your legitimate customers reach you. A defensive mechanism that, in effect, “pulls the network cable” for both good and bad traffic is usually no better than the attack itself. However, in cases in which restoring internal network operations is more important than allowing continued connectivity to the Internet, pulling the cable, either literally or figuratively, may be the lesser of two evils.

5.4 DDoS Defense Goals

Whether our DDoS defense strategy is preventive, reactive, or a combination of both, there are some basic goals we want it to achieve.

• Effectiveness. A good DDoS defense should actually defend. It should provide either effective prevention that really makes attacks impossible or effective reaction ensuring that the DoS effect goes away. In the case of reactive mechanisms, the response should be sufficiently quick to ensure that the target does not suffer seriously from the attack.

• Completeness. A good DDoS defense should handle all possible attacks. If that degree of perfection is impossible, it should at least handle a large number of them. A mechanism that is capable of handling an attack based on TCP SYN flooding, but cannot offer any assistance if a ping flood arrives, is clearly less valuable than a defense that can handle both styles of attack. Thus, a preventive measure like TCP SYN cookies helps but is not sufficient unless coupled with other defense mechanisms. Completeness is also required in detection and reaction. If our detection mechanism does not recognize a particular pattern of incoming packets as an attack, presumably it will not invoke any response and the attack will succeed.

While completeness is an obvious goal, it is extremely hard to achieve, since attackers are likely to develop new types of attacks specifically designed to bypass existing defenses. Defensive mechanisms that target the fundamental basis of DoS attacks are somewhat more likely to achieve completeness than those targeted at characteristics of particular attacks, even if those are popular attacks. For example, a mechanism that validates which packets are legitimate with high accuracy and concentrates on delivering only as many such packets as the target can handle is more likely to be complete than a mechanism that filters out packets based on knowledge of how a particular popular DDoS toolkit chooses its spoofed source addresses. However, it is often easier to counter a particular attack than to close basic vulnerabilities in networks and operating systems. Virus detection programs have shown that fairly complete defenses can be built by combining a large number of very specific defenses. A similar approach might solve the practical DDoS problem, even if it did not theoretically handle all possible DDoS attacks.

• Provide service to all legitimate traffic. As mentioned earlier, the core goal of DDoS defense is not to stop DDoS attack packets, but to ensure that the legitimate users can continue to perform their normal activities despite the presence of a DDoS attack. Clearly, a good defense mechanism must achieve that goal.

Some legitimate traffic may be flowing from sites that are also sending attack traffic. Other legitimate traffic is destined for nodes on the same network as the target node. There may be legitimate traffic that is neither coming from an attack machine nor being delivered to the target’s network, but perhaps shares some portion of its path through the Internet with some of the attack traffic. And some legitimate traffic may share other characteristics with the attack traffic, such as application protocol or destination port, potentially making it difficult to distinguish between them. None of these legitimate traffic categories should be disturbed by the DDoS defense mechanism. Legitimate traffic dropped by a DDoS defense mechanism has suffered collateral damage. (Collateral damage is also used to refer to cases where a third party who is not actually the target of the attack suffers damage from the attack.) Since DDoS attackers often strive to conceal their attack traffic in the legitimate traffic stream, it is common for legitimate traffic to closely resemble the attack packets, so the problem of collateral damage is real and serious.

Consider a DDoS defense mechanism that detects that a DDoS attack stream is coming from a local machine and then shuts down all outgoing traffic from that machine. Assuming high accuracy and sufficient deployment, such a mechanism would indeed stop the DDoS attack, but it would also stop much legitimate traffic. As mentioned early in this chapter, many machines that send DDoS attack streams are themselves victims of the true perpetrators of the attacks. It would be undesirable to shut down their perfectly legitimate activities simply because they have been taken over by a malicious adversary.2

If a DDoS defense mechanism develops some form of signature by which it distinguishes attack packets from nonattack packets, then unfortunate legitimate packets that happen to share that signature are likely to suffer at the hands of the DDoS defense mechanism. For example, a Web server might be flooded by HTTP request packets. If a DDoS defense mechanism decides that all HTTP request packets are attack packets, using that as the signature to determine which packets to drop, not only will the packets attacking the Web server be dropped, but so will all of the server’s legitimate traffic.

Many proposed DDoS defenses inflict significant collateral damage in some situations. While all collateral damage is bad, damage done to true third parties, who are neither at the sending nor receiving end of the attack, is probably the worst form of collateral damage. Any defense mechanism that deploys filtering, rate limiting, or other technologies that impede normal packet handling in the core of the network must be carefully designed to avoid all such third-party collateral damage.

• Low false-positive rates. Good DDoS defense mechanisms should target only true DDoS attacks. Preventive mechanisms should not have the effect of hurting other forms of network traffic. Reactive mechanisms should be activated only when a DDoS attack is actually under way. False positives may cause collateral damage in many cases, but there are other undesirable properties of high false-positive rates. For example, when a reactive system detects and responds to a DDoS attack, it might signal the system administrator of the targeted system that it is taking action. If most such signals prove to be false, the system administrator will start to ignore them, and might even choose to turn the defense mechanism off. Also, reactive mechanisms are likely to have costs of some sort. Perhaps they use some fraction of a system’s processing power, perhaps they induce some delay on all packets, or, in the longer term, perhaps a sufficiently frequent occurrence of reactions demands investment in a more powerful piece of defensive equipment. If these costs are frequently paid when no attack is under way, then the costs of running the defense system may outweigh the benefits achieved in those rare cases when an attack actually occurs.

• Low deployment and operational costs. DDoS defenses are meant to allow systems to continue operations during DDoS attacks, which, despite being very harmful, occur relatively rarely. The costs associated with the defense system must be commensurate with the benefits provided by it. For commercial solutions, there is an obvious economic cost of buying the hardware and software required to run it. Usually, there are also significant system administration costs with setting up new security equipment or software. Depending on the character of the DDoS defense mechanism, it may require frequent ongoing administration. For example, a mechanism based on detecting signatures of particular attacks will need to receive updates as new attacks are characterized, requiring either manual or automated actions.

Other operational costs relate to overheads imposed by the defense system. A system that performs stateful inspection of all incoming packets may delay each packet, for example. Or a system that throttles data streams from suspicious sources may slow down any legitimate interactions with those sources. Unless such costs are extremely low or extremely rarely paid, they must be balanced against the benefits of achieving some degree of protection against DDoS attacks.

You must further remember that part of the cost you will need to pay to protect yourself against DDoS attacks will not be in delays or CPU cycles, nor even in money spent to purchase a piece of hardware or software. Nothing beats preparation, and preparation takes time. You need to spend time carefully analyzing your network, developing an emergency plan, training your employees to recognize and deal with a DDoS attack, contacting and negotiating with your ISP and other parties who may need to help you in the case of an attack, and taking many other steps to be ready. The cost of any proposed DDoS solution must take these elements into account.

5.5 DDoS Defense Locations

The DDoS threat can be countered at different locations in the network. A DDoS attack consists of several streams of attack packets originating at different source networks. Each stream flows out of a machine; through a server or router into the Internet; across one or more core Internet routers; into the router, server, or firewall machine that controls access to the target machine’s network; and finally to the target itself. Defense mechanisms can be placed at some or all of these locations. Each possible location has its strengths and weaknesses, which we discuss in this section.

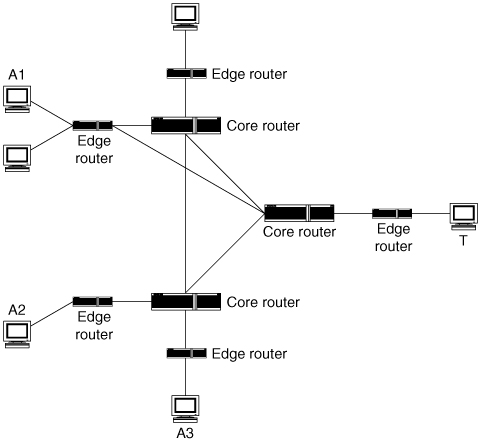

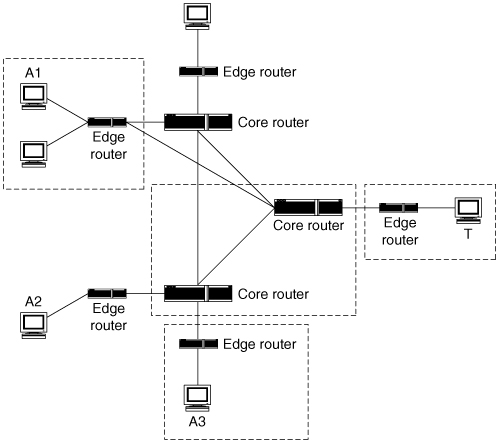

Figure 5.1 shows a highly simplified network with several user machines at different locations, border routers that attach local area networks to the overall network, and a few core routers. This figure will be used to illustrate various defensive locations. In this and later figures, the node at the right marked T is the target of the DDoS attack, and nodes A1, A2, and A3 are sources of attack streams.

Figure 5.1. A simplified network

5.5.1 Near the Target

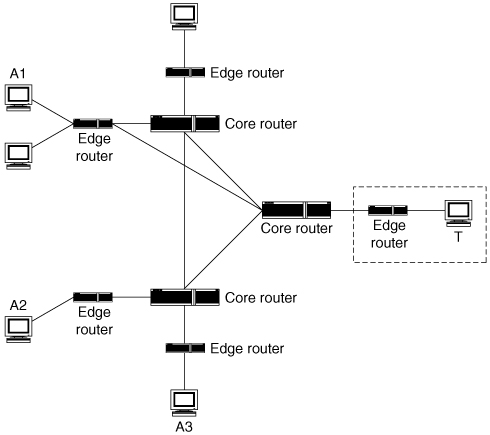

The most obvious location for a DDoS defense system is near the target (the area surrounded by a dashed rectangle in Figure 5.2). Defenses could be located on the target’s own machine, or at a router, firewall, gateway, proxy, or other machine that is very close to the target. Most existing defense mechanisms that protect against other network threats tend to be located near the target, for very good reasons. Many of those reasons are equally applicable to DDoS defense. Nodes near the target are in good positions to know when an attack is ongoing. They might be able to directly observe the attack, but even if they cannot, they are quite close to the target and often have a trust relationship with that target. The target can often tell them when it is under attack. Also, the target is the single node in the network that receives the most complete information about the characteristics of the attack, since all of the attack packets are observed there. Mechanisms located elsewhere will see only a partial picture and might need to take action based on incomplete knowledge.

Figure 5.2. Deployment near the attack’s target

Another advantage of locating a defense near the target is deployment motivation. Those who are particularly worried about the danger of DDoS attacks will pay the price of deploying such a defense mechanism, while those who are unaware or do not care about the threat need not pay. Further, the benefit of deploying the mechanism accrues directly to the entity that paid for it. Historically, mechanisms with these characteristics (such as firewalls and intrusion detection systems) have proved to be more widely accepted than mechanisms that require wide deployment for the common good (such as ingress/egress filtering of spoofed IP packets).

A further advantage of deployment near the target is maximum control by the entity receiving protection. If the defense mechanism proves to be flawed, perhaps generating large numbers of false positives, the target machine that suffers from those flaws can turn off or adjust the defense mechanism fairly easily. Similarly, different users who choose different trade-offs between the price they pay for defense and the amount of protection they receive can independently implement those choices when the defense mechanisms are close to them and under their control. (Note that this advantage assumes a rather knowledgeable and careful user. It is far more common for users to install a piece of software or hardware using whatever defaults it specifies, and then never touch it again unless problems arise.)

But there are also serious disadvantages to defense mechanisms located close to the target. A major disadvantage is that a DDoS attack, by definition, overwhelms the target with its volume. Unless the defense mechanism can handle this load more cheaply than the target, or is much better provisioned than the target, it is in danger of being similarly overloaded. Instead of spending a great deal of money to heavily provision a defense box whose only benefit is to help out during DDoS attacks, one might be better off spending the same money to increase the power of the target machine itself. In some cases where the defense mechanism is just a little bit upstream of the potential target, we may gain advantages by sharing the defense mechanism among many different potential targets, somewhat lessening this problem, since several entities can pool the resources they are willing to devote to DDoS defense on a more powerful mechanism.

A less obvious problem with this location is that the target may be in a poor position to perform actions that require complex analysis and differentiation of legitimate and attack packets. The defense mechanism in this location is, as noted above, itself in danger of being overwhelmed. Unless it is very heavily provisioned, it will need to perform rather limited per-packet analysis to differentiate good packets from attack traffic. Such mechanisms are thus at risk of throwing away the good packets with the bad.

A further potential disadvantage is that, unless the solution is totally automated and completely effective, some human being at the target will have to help in the analysis and defense deployment. If you do not have a person on your staff capable of doing that, you will have to enlist the assistance of others who are not at your site, which limits the advantages of the defense being purely local. Further, if the flood is large and the necessary countermeasures are not obvious, many of your local resources could well be overwhelmed, not least of which are the human resources you need to adjust your defenses to the attack. This problem may not be too serious for very large sites that maintain many highly trained system and network administrators, but it could be critical for a small site that has few or no trained computer professionals on its regular staff.

A final disadvantage is that deployment near each potential target benefits only that target. Every edge network that needs protection must independently deploy its own defense, gaining little benefit from any defense deployed by other edge networks. The overall cost of protecting all nodes in the Internet using this pattern of deployment might prove higher than the costs of deploying mechanisms at other locations that provide protection to wider groups of nodes.

5.5.2 Near the Attacker

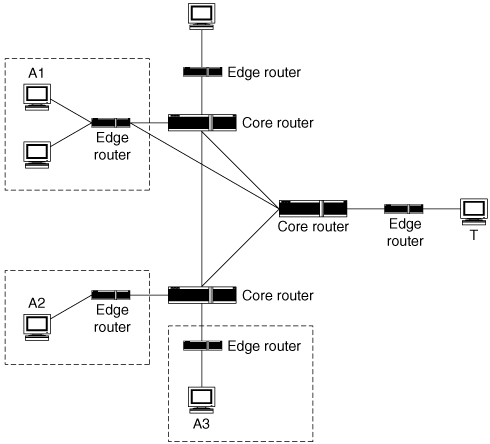

Figure 5.3 illustrates the option of deploying a defense mechanism near attack sources. Such a defense could be statically deployed at most or all locations from which attacks could possibly originate or could be dynamically created at locations close to where streams belonging to a particular ongoing attack actually are occurring. The multiple dotted rectangles in Figure 5.3 suggest one important characteristic of locating the defense near the attacker. An effective defense close to the attacker must actually be located close to all or most of the attackers. If the attack is coming from A1 and A2, but the defense is deployed only at A3, it will not be able to stop this attack. Even if it is deployed also at A2, the attack streams coming out of A1 will not be affected by the defense.

Figure 5.3. Deployment near attack sources

One advantage of this deployment location is that DDoS attack streams are not highly aggregated close to the source, unlike close to the attack’s target. They are of a much lower volume, allowing more processing to be devoted to detecting and characterizing them than is possible close to the target. This low volume and lack of aggregation may also prove helpful in separating the packets participating in an attack from those that are innocent traffic.

There are also disadvantages. Typically, a host that originates a DDoS attack stream suffers little direct adverse effect from doing so.3 Its attack stream is a tiny fraction of the huge flood that will swamp the target, and thus will rarely cause problems to its own network. A DDoS defense system located close to a source might have trouble determining that there is an attack going on. Even if it does know that an attack is being sent out of its network, the defense mechanism must determine which of its packets belong to attack streams. Existing research has shown that some legitimate traffic can be differentiated from attack traffic at this point, but it is not clear that all traffic can be confidently characterized as legitimate or harmful.

The second disadvantage is deployment motivation. A DDoS defense node close to a source will provide its benefits to other nodes and networks, not to the node where the attack originates or to its local network. Thus, there are few direct economic advantages to deploying a DDoS defense node of this kind, leading to a variant on the tragedy of the commons.4 While everyone might be better off if all participants deployed an effective DDoS defense system at the exit point of their own network, nobody benefits much from his own deployment of such a system. The benefits derive from the overall deployment by everyone, with no incremental benefit accruing to the individual who must perform each deployment.

If there were advantages to deploying a source-end defense system, then this problem might be overcome. Proponents of these kinds of solutions have thus devoted some effort to finding such advantages. One possible advantage is that a target-end defense might form a trust relationship with the source-end network that polices its own traffic. During an attack, this trust relationship may bring privileged status to this source-end network, delivering its packets despite the DDoS attack. Another possible advantage is that one might avoid legal liability by preventing DDoS flows from originating in one’s network, though it is unclear if existing law would impose any such liability. Finally, there is the advantage that accrues from being known as a good network citizen. However, these motivations have not been sufficient to ensure widespread deployment of other defense mechanisms with a similar character. For example, egress filtering at the exit router of the originator’s local network can detect most packets with spoofed IP source addresses before they get outside that network (see Chapter 4 for details on ingress and egress filtering). However, despite the feature’s being available on popular routing platforms and recommendations from knowledgeable sources to enable it, many installers do not turn it on.5 DDoS defense mechanisms designed to operate close to potential sources would need to overcome similar reluctance.

The reluctance will be even greater if the defense mechanism does not have superb discrimination. If the defense’s ability to separate attack traffic from good traffic is poor, it will harm many legitimate packets. Assuming that the mechanism either drops or delays packets that it classifies as part of the attack, anyone who chooses to deploy the mechanism will suddenly see some of her legitimate traffic being harmed. Perhaps the defense mechanism will even start dropping good packets when no attack stream is actually coming out of the local network. If so, it would be quickly turned off and discarded.

A final disadvantage is the deployment scale required for this approach to be effective. If attack streams emanate from 10,000 sources to converge on one poor victim, this style of defense mechanism would need to be deployed close to a significant fraction of those 10,000 sources to do much good. A DDoS defense mechanism that is only applied to 5 to 10% of the attack packets will very likely do no good. The attacker would merely need to recruit 5 to 10% more machines to perform his attack, not a very challenging task. Unless the defense mechanism in question is located near a large fraction of all possible sites, it would not have enough coverage to be effective.

5.5.3 In the Middle

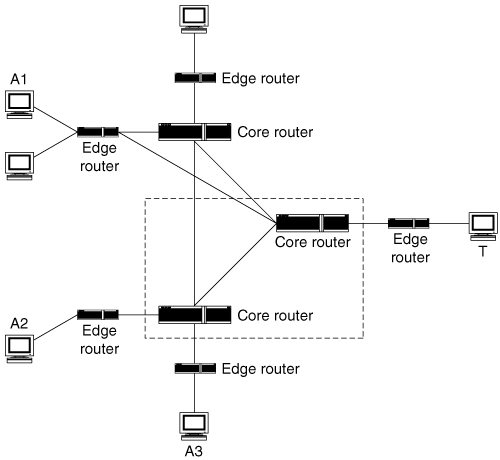

Deployments in the middle of the network generally refer to defenses living at core Internet routers (depicted in Figure 5.4). As a rule, such defenses are deployed at more than one core router, as the figure suggests. However, deployment “in the middle” might also refer to routers and other network nodes that are close to the target but not part of the target’s network, such as an ISP. At some point, “middle” blends into “edges,” and the deployment location is really near the target or near the attacker, having the characteristics of those locations. For true core deployments, there are obvious advantages and disadvantages.

Figure 5.4. Deployment in the middle of the Internet

The vast bulk of the Internet’s traffic goes through a relatively small number of core Autonomous Systems (ASs), each of which deploys a large, but not immense, number of routers to carry that traffic. Thus, any defense located at a reasonably large number of well-chosen ASs can get excellent coverage. To the degree that the defense is effective, it can provide its benefit to practically every node attached to the Internet. In Figure 5.4, if the defense is located at the two routers shown, all traffic coming from the three attack sources will pass through it. Further, even if there were a different victim (say T1, located in the same network as A3), the same two deployment points would offer protection to that victim against all attack traffic except that originating in its own network. (Core defenses inherently cannot protect against attacks that do not traverse the core; most attacks do, however.) If core defenses were effective, accurate, cheap, and easy to deploy, they could thus completely solve the problem of DDoS attacks.

These caveats suggest the disadvantages of deploying DDoS defense in the middle of the network. First, routers at core ASs are very busy machines. They cannot devote any substantial resources to handling or analyzing individual packets. Thus, a core defense mechanism cannot perform any but the most cursory per-packet inspection, and cannot perform any serious packet-level analysis to determine the presence, characteristics, or origins of a DDoS attack, even assuming we had analysis methods that could do so.

The basic problem in DDoS defense is, again, separating the huge volume of DDoS traffic from the relatively tiny volume of legitimate traffic. DDoS defenses at core routers cannot afford to devote many resources to making such differentiation decisions. They must have simple, cheap rules for dealing with the vast majority of the packets they see.

A second problem arises because core routers could inflict massive collateral damage if they are not exceptionally accurate in discriminating DDoS traffic from legitimate traffic. If they make mistakes at a rate that might be acceptable for a victim-side deployment, they could easily drop a huge amount of legitimate traffic. Those running core routers consider dropping legitimate traffic as extremely undesirable. Combined with their lack of resources to perform careful examination of packets, we thus would require the core defense to provide high accuracy with little analysis, a very challenging task.

Another problem with this deployment location is that core routers are unlikely to notice DDoS attacks. They themselves are unlikely to be overwhelmed, and they cannot afford to keep statistics on packets coming through on a per-destination basis. Perhaps they can afford to look for DDoS attacks by a statistical method that examines a tiny fraction of the total packets, looking for suspiciously high numbers of packets to a single destination, but one node’s overwhelming DDoS attack is another node’s ordinary daily business. There is ongoing research on using measurements of entropy in packet traffic to detect DDoS attacks in the core. However, proven methods applied at core routers are not likely to pinpoint all DDoS attacks without generating unacceptable levels of false positives.

Deployment incentives are also problematic for core-located DDoS defense mechanisms. By and large, the routers comprising the Internet backbone are not likely targets of DDoS attacks. They are heavily provisioned, are designed to perform well under high load, and are not easy to send packets to directly. Attackers are likely to need to deduce which network paths pass through such a router if they want to target it, which is not always easy. Thus, the companies running these machines would probably not receive direct benefit from deploying DDoS defenses. They would receive indirect benefit, since they typically try to minimize the time a packet travels through their system (and quickly dropping a packet because it is part of a DDoS flow certainly minimizes that time), and because their business ultimately depends on the usability of the Internet as a whole. On the other hand, their equipment is expensive and must operate correctly even under conditions of heavy strain, so they are generally little inclined to install unproven hardware and software. A very compelling case for the need for a particular defense mechanism, its correctness, and the acceptability of its performance would be required before there would be any hope of deployment in the core.

If a core router defense performs badly, many users would be affected. Yet, unlike defenses located in their own domains (whether source-side or victim-side), users would have no power to turn the defense mechanisms off or adjust them. Those running the Internet backbone cannot afford to field calls from every ISP or, worse, every user who is having her legitimate packets dropped by a core-deployed DDoS defense mechanism.

A final point against this form of defensive deployment is based on the respected end-to-end argument, which states that network functionality should be deployed at the endpoints of a network connection, not at nodes in the middle, unless it cannot be achieved at the endpoints or is so ubiquitously required by all traffic that it clearly belongs in the middle. While the end-to-end argument should not be regarded as the final deciding word in any discussion of network functionality, its careful application is arguably an important factor in the success of the Internet. Core-deployed DDoS defense mechanisms tend to run counter to the end-to-end argument, unless one can make a strong case for the impossibility of achieving similar results at the endpoints.

5.5.4 Multiple Deployment Locations

Some researchers have argued that an inherently distributed problem like DDoS requires a distributed solution. In the most trivial sense, we must have distributed solutions, unless someone comes up with a scheme that protects all potential targets against all possible attacks by deploying something at only one machine in the Internet. Most commercial solutions are, in this trivial sense, distributed, since each network that wants protection deploys its own solution. There are actually nontrivial distributed system problems related to this kind of deployment for other cyberdefenses, as exemplified by the issue of updating virus protection databases. Similarly, updating all target-side deployments to inform them of a new DDoS toolkit’s signatures would be such a distributed system problem even for this trivial form of distribution.

Some source end solutions operate purely autonomously to control a single network’s traffic, and these are distributed in the same trivial sense. All other types of defense schemes suggested to date are distributed in a less trivial sense. Some of those require defense deployment at the source and at the victim, with the defense systems communicating during an attack. Others require deployment at multiple core routers, which may also cooperate among themselves. Some require defense nodes scattered at the edge networks to cooperate. All these schemes will be discussed in more detail in Chapter 7.

There’s a simple argument for why distributed solutions are necessary. Source-side nondistributed deployments just will not happen at a high enough rate to solve the problem. Target-side deployments cannot handle high-volume flooding attacks. There is no single location in the network core where one can capture all attacks, since not all packets pass any single point in the Internet. What is left? A solution that is deployed in more than one place, or multiple cooperating solutions at different places. Hence, a distributed solution.

Perhaps each instance of such a solution can work independently, rendering its distributed nature nearly trivial. However, this seems unlikely, since the common characteristic of the flooding attacks that force distributed solutions is that you cannot observe all the traffic except at a point where there is too much of it to do anything with. Unless each instance can independently, based on its own local information, reach a conclusion on the character of the attack that is generally the same as the conclusion reached by other instances, independent defense points might not engage their defenses against enough attack traffic. Most likely, some information exchange between instances will be required to reach a common agreement on the presence and character of attacks and the nature of the response, leading to true distributed characteristics.

With a good design, a distributed defense could exploit the strong points of each defense location while minimizing its weaknesses. For example, locations at aggregation points near the target are in a good position to recognize attacks. Locations near the attackers are well positioned to differentiate between good and bad packets. Locations in the center of the network can achieve high defensive coverage with relatively few deployment points. One approach to solving the DDoS problem is stitching together a defensive network spanning these locations. One such distributed deployment is shown in Figure 5.5. This approach must avoid the pitfall of accumulating the weaknesses of the various defensive locations, in addition to their strengths. For example, if locations near potential attackers are reluctant to deploy defensive mechanisms because they see no direct benefit and core router owners hesitate because they are unwilling to take the risk of damaging many users’ traffic, a defense mechanism requiring deployments in both locations might be even less likely to be installed than one requiring deployment in only one of these locations.

Figure 5.5. Distributed deployment

Generally speaking, a defensive scheme that deploys cooperating mechanisms at multiple locations requires handling the many well-known difficulties of properly designing a distributed system. Distributed systems, while potentially powerful, are notorious for being bug-ridden and prone to unpredicted performance problems. Given that a distributed DDoS defense scheme is likely to be a tempting target for attackers, it must carefully resolve all distributed system problems that may create weak points in the defense system. These problems include standard distributed system issues (such as synchronization of various participants and behavior in the face of partial failures) and security concerns of distributed systems (such as handling misbehavior by some supposedly legitimate participants).

5.6 Defense Approaches

Given the basic dichotomy between prevention and reaction, the goals of DDoS defense, and the three types of locations where defenses can be located, we will now discuss the basic options on how to defend against DDoS attacks that have been investigated, to date. The discussion here is at a high level, with few examples of actual systems that have been built or actions that you can take, since the purpose of this material is to lay out for you the entire range of options. Doing so will then make it easier for you to understand and evaluate the more detailed defense information presented in subsequent chapters.

Some DDoS defenses concentrate on protecting you against DDoS. They try to ensure that your network and system never suffer the DDoS effect. Other defenses concentrate on detecting attacks when they occur and responding to them to reduce the DDoS effect on your site. We will discuss each in turn.

Most of these approaches are not mutually exclusive, and one can build a more effective overall defense by combining several of them. Using a layered approach that combines several types of defenses, at several different locations, can be more flexible and harder for an attacker to completely bypass. This layering includes host-level tuning and adequate resources, close-proximity network-level defenses, as well as border- or perimeter-level network defenses.

5.6.1 Protection

Some protection approaches focus on eliminating the possibility of the attack. These attack prevention approaches introduce changes into Internet protocols, applications and hosts, to strengthen them against DDoS attempts. They patch existing vulnerabilities, correct bad protocol design, manage resource usage, and reduce the incidence of intrusions and exploits. Some approaches also advocate limiting computer versatility and disallowing certain functions within the network stack (see, for example, [BR00, BR01]). These approaches aspire to make the machines that deploy them impervious to DDoS attempts. Attack prevention completely eliminates some vulnerability attacks, impedes the attacker’s attempts to gain a large agent army, and generally pushes the bar for the attacker higher, making her work harder to achieve a DoS effect. However, while necessary for improving Internet security, prevention does not eliminate the DDoS threat.

Other protection approaches focus on enduring the attack without creating the DoS effect. These endurance approaches increase and redistribute a victim’s resources, enabling it to serve both legitimate and malicious requests during the attack, thus canceling the DoS effect. The increase is achieved either statically, by purchasing more resources, or dynamically, by acquiring resources at the sign of a possible attack from a set of distributed public servers and replicating the target service. Endurance approaches can significantly enhance a target’s resistance to DDoS—the attacker must now work exceptionally hard to deny the service. However, the effectiveness of endurance approaches is limited to cases in which increased resources are greater than the attack volume. Since an attacker can potentially gather hundreds of thousands of agent machines, endurance is not likely to offer a complete solution to the DDoS problem, particularly for individuals and small businesses that cannot afford to purchase the quantities of network resources required to withstand a large attack.

Hygiene Hygiene approaches try to close as many opportunities for DDoS attacks in your computers and networks as possible, on the generally sound theory that the best way to enhance security is to keep your network simple, well organized, and well maintained.

Fixing Host Vulnerabilities Vulnerability DDoS attacks target a software bug or an error in protocol or application design to deny service. Thus, the first step in maintaining network hygiene is keeping software packages patched and up to date. In addition, applications can also be run in a contained environment (for instance, see Provos’ Systrace [Pro03]), and closely observed to detect anomalous behavior or excess resource consumption.

Even when all software patches are applied as soon as they are available, it is impossible to guarantee the absence of bugs in software. To protect critical applications from denial of service, they can be duplicated on several servers, each running a different operating system and/or application version akin to biodiversity. This, however, greatly increases administrative requirements.

As described in Chapters 2 and 4, another major vulnerability that requires attention is more social than technical: weak or no passwords for remotely accessible services, such as Windows remote access for file services. Even a fully patched host behind a good firewall can be compromised if arbitrary IP addresses are allowed to connect to a system with a weak password on such a service. Malware, such as Phatbot, automates the identification and compromise of hosts that are vulnerable due to such password problems. Any good book on computer security or network administration should give you guidance on checking for and improving the quality of passwords on your system.

Fixing Network Organization Well-organized networks have no bottlenecks or hot spots that can become an easy target for a DDoS attack. A good way to organize a network is to spread critical applications across several servers, located in different subnetworks. The attacker then has to overwhelm all the servers to achieve denial of service. Providing path redundancy among network points creates a robust topology that cannot be easily disconnected. Network organization should be as simple as possible to facilitate easy understanding and management. (Note, however, that path redundancy and simplicity are not necessarily compatible goals, since multiple paths are inherently more complex than single paths. One must make a trade-off on these issues.)

A good network organization not only repels many attack attempts, it also increases robustness and minimizes the damage when attacks do occur. Since critical services are replicated throughout the network, machines affected by the attack can be quarantined and replaced by the healthy ones without service loss.

Filtering Dangerous Packets Most vulnerability attacks send specifically crafted packets to exploit a vulnerability on the target. Defenses against such attacks at least require inspection of packet headers, and often even deeper into the data portion of packets, in order to recognize the malicious traffic. However, data inspection cannot be done with most firewalls and routers. At the same time, filtering requires the use of an inline device. When there are features of packets that can be recognized with these devices, there are often reasons against such use. For example, a lot of rapid changes to firewall rules and router ACLs is often frowned upon for stability reasons (e.g., what if an accident leaves your firewall wide open?) Some types of Intrusion Prevention Systems (IPS), which act like an IDS in recognition of packets by signature and then filter or alter them in transit, could be used, but may be problematic and/or costly on very high bandwidth networks.

Source Validation

Source validation approaches verify the user’s identity prior to granting his service request. In some cases, these approaches are intended merely to combat IP spoofing. While the attacker can still exhaust the server’s resources by deploying a huge number of agents, this form of source validation prevents him from using IP spoofing, thus simplifying DDoS defense.

More ambitious source validation approaches seek to ensure that a human user (rather than DDoS agent software) is at the other end of a network connection, typically by performing so-called Reverse Turing tests.6 The most commonly used type of Reverse Turing test displays a slightly blurred or distorted picture and asks the user to type in the depicted symbols (see [vABHL03] for more details). This task is trivial for humans, yet very hard for computers. These approaches work well for Web-based queries, but could be hard to deploy for nongraphical terminals. Besides, imagine that you had to decipher some picture every time you needed to access an online service. Wouldn’t that be annoying? Further, this approach cannot work when the communications in question are not supposed to be handled directly by a human. If your server responds directly to any kind of request that is not typically generated by a person, Reverse Turing tests do not solve your problem. Pings, e-mail transfers between mail servers, time synchronization protocols, routing protocol updates, and DNS lookups are a few examples of computer-to-computer interactions that could not be protected by Reverse Turing tests.

Finally, some approaches verify the user’s legitimacy. In basic systems, this verification can be no more than checking the user’s IP address against a list of legitimate addresses. To achieve higher assurance, some systems require that the user present a certificate, issued by some well-known authority, that grants him access to the service, preferably for a limited time only. Since certificate verification is a cryptographic activity, it consumes a fair amount of the server’s resources and opens the possibility for another type of DDoS attack. In this attack, the attacker generates many bogus certificates and forces the server to spend resources verifying them.

Note that any agent machine that is capable of proving its legitimacy to the target will pass these tests. If nothing more is done by the target machine, once the test is passed an agent machine can perpetrate the DDoS attack at will. So an attacker who can recruit sufficient legitimate clients of his target as agents can defeat such systems. If you run an Internet business selling to the general public, you may have a huge number of clients who are able to prove their legitimacy, making the attacker’s recruitment problem not very challenging.

This difficulty can perhaps be addressed by requiring a bit more from machines that want to communicate with your site, using a technique called proof of work.

Proof of Work

Some protocols are asymmetric—they consume more resources on the server side than on the side of the client. Those protocols can be misused for denial of service. The attacker generates many service requests and ties up the server’s resources. If the protocol is such that the resources are released after a certain time, the attacker simply repeats the attack to keep the server’s resources constantly occupied.

One approach to protect against attacks on such asymmetric protocols is to redesign the protocols to delay commitment of the server’s resources. The protocol is balanced by introducing another asymmetric step, this time in the server’s favor, before committing the server’s resources. The server requires a proof of work from the client.

The asymmetric step should ensure that the client has spent sufficient resources for the communication before the server spends its own resources. A commonly used approach is to send a client some puzzle to solve (e.g., [JB99, CER96]). The puzzle is such that solving it takes a fair amount of time and resources, while verifying the correctness of the answer is fast and cheap. Such puzzles are called one-way functions or trapdoor functions [MvOV96]. For example, a server could easily generate a large number and ask the client to factor it. Factoring of large numbers is a hard problem and it takes a lot of time. Once the client provides the answer, it is easy to multiply all the factors and see that they produce the number from the puzzle. After verifying the answer, the server can send another puzzle or grant the service request. Of course, the client machine runs software that automatically performs the work requested of it, so the human user is never explicitly aware of the need to solve the puzzle.

The use of proof-of-work techniques ensures that the client has to spend a lot more resources than the server before his request is granted. The amount of work required must not be sufficiently onerous for legitimate clients to mind or even usually notice, but it must be sufficient to slow down DDoS agents very heavily, making it difficult or perhaps impossible for them to send enough messages to the target to cause a DDoS effect.