Chapter 3. History of DoS and DDoS

In this chapter we will discuss the origins of Internet denial of service, based on the historical aspects of the Internet and its design principles, as well as the events that led to major DDoS attacks on Internet sites and beyond, up to today. We describe the motivations of both the Internet designers and the attackers.

3.1 Motivation

It is human nature that when groups of people get together, there is bound to be disagreement and conflict. This conflict can take many forms: glaring at someone who is crowding you in line to get them to back off, cutting someone off in traffic, or using the favorite national hand gesture that shows the utmost contempt possible for them. Or even worse acts: slashing someone’s tires, pouring sugar in their gas tank to make their car fail, or throwing a bundle of money into a public square or street, causing a riot and obstructing passage. As it happens, all of these are physical-world forms of DoS; the last three are examples of denial of transportation.

As the Internet gained popularity as a virtual meeting place, it also became a place of conflict. Usenet newsgroups that bring together people with like interests degrade into flame-filled series of tirade after tirade among arguing members. Or someone who feels wronged goes “trolling” [Wik]—making inflammatory statements, calling someone names, asking a blatantly off-topic question—anything to purposely cause flame wars and degrade conversation in a newsgroup or e-mail list. Someone who trolls can cause dozens, even hundreds, of useless e-mail messages saying, “Stop this!,” “You’re just an idiot and should leave this group,” “Can’t someone ban this jerk from our newsgroup?”, etc. In some cases, it gets so bad that people unsubscribe and leave the group permanently. The degradation of discourse is another form of DoS—some kind of interference that prevents a computer user from doing something that he or she would otherwise have been able to do had there been no interference, but one that often cannot be maintained very long.

Articles like Suler and Phillips’ “The Bad Boys of Cyberspace” [SP98] and a study titled “The Experience of Bad Behavior in Online Social Spaces: A Survey of Online Users,” by Davis [JPD] show that people can sometimes behave quite differently, often in very antisocial ways, when interacting in the Internet as opposed to when they interact with people face to face. They may misinterpret things because they lack nonverbal cues or because they lack detail or context. They may be quicker to anger than if speaking to someone face to face, and because they cannot see the person they are speaking to, they may react more strongly. Anonymity may give them a sense of invisibility, and they may consider the icons that represent other users as being unreal and disassociated from another person.

This point is important. Some people consider online chat rooms to be just like real rooms, and they can form a picture in their mind that gives these other participants identity. Other people in the same chat room will only see the words on the screen, and they will themselves feel invisible and invincible because they sit in the comfort of their own room and can turn off the computer whenever they want. The other world (and everyone in it) then ceases to exist, just like the TV world vanishes when the set is turned off. Unlike the physical world, where two people having a conflict are often standing toe to toe, in the Internet the conflict takes place with an intermediary network that is effectively a black box to the parties involved. There is only a keyboard and monitor in front of each person, and their respective moral and ethical frameworks to guide them in how they act. This disassociation and lack of physical proximity encourage people to participate in illegal activities in the Internet, such as hacking, denial of service, or collecting copyrighted material. They do not feel that in reality they are doing any serious harm.

Typical end users do not care about the intrinsics of network communications in the Internet. Instead, they are merely interested in the benefits the Internet provides them with, such as e-commerce or Internet banking. However, those who have that detailed knowledge of network specifics and can abuse it to exclude and effectively deny the services to others feel greatly empowered. That is the point at which DoS programs enter the scene.

Over the years, DoS attacks in the Internet have predominantly been associated with communication mechanisms such as newsgroups, chat rooms, online games, etc. Some of these, such as Usenet newsgroups and e-mail lists, are asynchronous communication mechanisms, meaning that there is no direct and immediate acknowledgment of receipt, and no real-time dialogue. E-mail gets delivered when it gets delivered, and messages can come in out of order and get mixed in with all the rest. Asynchronous mechanisms can be attacked by trolling or by flooding with bogus messages, but these attack mechanisms do not have a direct effect and can fairly easily be dealt with by filtering. Because of the inherent delay, the attacker does not get instant gratification.

DoS attacks that cause servers to crash or fill networks with useless traffic, on the other hand, do provide immediate satisfaction. They directly affect a system and, if combined with a threat immediately beforehand, increase the potency and satisfaction for the attacker. They work best on synchronous means of communication, like real-time chat or Web activity that involves a sustained series of interactions between a browser and a Web server.

For example, if Jane wants to hurt NotARealSiteForPuppies.com, to really scare them, she might first send them a threatening e-mail that states, “You people are scum! I am going to take your site down for three hours, and then I’m going after your little dog, too!” She waits until she gets a reply saying this is being reported to the ISP of the account that sent the message (most likely a stolen account), and then she immediately begins the promised attack. She then checks to see if the Web page comes up, and sees that the browser reports, “Timeout connecting to server.” Mission accomplished!

Synchronous communication mechanisms like online games [Gam] and Internet Relay Chat (IRC [vLL]), as opposed to Usenet newsgroups and mailing lists, are more often subjected to DoS attacks because of this direct effect. Not only can you directly affect an individual user, causing them to get knocked off of IRC channels, but you can also disrupt an entire IRC network. It is important to understand these attacks (even if you don’t use or have anything to do with IRC) because the tools and techniques are just as effective against a Web server, or a corporation’s external Domain Name System (DNS) or mail servers.

Early attacks on the IRC network were known to a few security experts, such as coauthor Sven Dietrich, in the early 1990s. DoS attacks, which in one instance took the form of a TCP RST flood, caused IRC servers to “split” (i.e., to lose track of who owns a channel). A remote user, being the only one left in that channel, would then “own” one or more chat channels, since the legitimate owner was split from the local network. When the networks would join again, legitimate and illegitimate owners would have a face-off, which could lead to further retribution. Larger-scale attacks were also used to remove unwelcome users from chat channels, as an effective method of kicking them off forcefully. These problems were known to some IRC operators at Boston University at the time.

Over the years, IRC has been one of the main motivators for development and use of DoS and DDoS tools, as well as being their major target. This relationship between IRC and DDoS attacks shares some similarities with the development of the HIV/AIDS crisis in the 1980s.1 When HIV/AIDS was first discovered, many considered it a problem for only gays or Haitians or intravenous drug users. As long as you were not in one of those groups, why should you worry about HIV/AIDS? Research into treatments and cures did not start early enough, and as a result HIV/AIDS spread across the world, to the point where today, the world’s most populous country, China, has cases in all levels of society throughout the entire country.

Similarly, DoS and DDoS were originally seen as an IRC-related problem, affecting only IRC servers and IRC users. Some sites even banned IRC servers on their campus, or moved IRC servers outside of the main network to a DMZ (DeMilitarized Zone) “free fire zone” that wouldn’t impact the main network, all with the belief that this would “solve” the problem of DoS. (In fact, it just pushed it away, allowing it to continue to develop and outpace defense capabilities.) In 2003, the same issue involved in DDoS, a large flood of packets, began to occur as a result of worms, taking down many of the largest networks in the world, whose operators had had nearly five years to understand the problem and prepare for it but chose not to.

In this same time, the attack tools themselves have grown in power, capabilities, ability to spread, and sophistication to the point where they are today being used in sophisticated attacks with financial motivations by organized criminal gangs. How did all this happen?

We begin our quest for answers by examining the assumptions and principles on which the Internet was built.

3.2 Design Principles of the Internet

The predecessor to today’s Internet, called ARPANET (Advanced Research Projects Agency Network), was born in the late 1960s when computers were not present in every home and office.2 Rather, they existed in universities and research institutions, and were used by experienced and knowledgeable staff for scientific calculations. Computer security was viewed as purely host security, not network security, as most hosts were not networked yet. As those calculations became more advanced and computers started gaining a significant presence in research activities, people realized that interconnecting research networks and enabling them to talk to each other in a common language would advance scientific progress. As it turned out, the Internet advanced more than the field of science: it transformed and revolutionized all aspects of human life, and introduced a few new problems along the way.

3.2.1 Packet-Switched Networks

The key idea in the design of the Internet was the idea of the packet-switched network [Kle61, Bar64]. The birth of the Internet happened in the middle of the Cold War, when the threat of global war was hanging over the world. Network communications were already crucial for many critical operations in the national defense, and were performed over dedicated lines through a circuit-switched network. This was an expensive and vulnerable design. Government agencies had to pay to set up the network infrastructure everywhere they had critical nodes, then set up communication channels for every pair of nodes that wanted to talk to each other. During this communication, the route from the sender to the receiver was fixed. The intermediate network reserved resources for the communication that could not be shared by information from other source-destination pairs. These resources could only be freed once the communication ended. Thus, if the sender and the receiver talked infrequently but in high-volume bursts, they would tie down a considerable amount of resources that would lie unutilized most of the time.

The bigger problem was the vulnerability of the communication to intermediate node failures. The dedicated communication line was as reliable as the weakest of the intermediate nodes comprising it. Single node or link failure led to the tear-down of the whole communication line. If another line was available, a new channel between the sender and the receiver had to be set up from the start. Nodes in circuit-switched networks had only a few lines available, which were high-quality leased lines dedicated to point-to-point connectivity between computers. These were not only very expensive, but made the network topology extremely vulnerable to physical node and link failures and consequently could not provide reliable communications in case of targeted physical attack. A report [Bar64] not only discussed this in detail, but also offered simulation results that showed that adding more nodes and links to a circuit-switched network only marginally improves the situation.

The packet-switched network emerged as a new paradigm of network design. This network consists of numerous low-cost, unreliable nodes and links that connect senders and receivers. The low cost of network resources facilitates building a much larger and more tightly connected network than in the circuit-switched case, providing redundancy of paths. The reliability of communication over the unreliable fabric is achieved through link and node redundancy and robust transport protocols. Instead of having dedicated channels between the sender and the receiver, network resources are shared among many communication pairs. The senders and receivers communicate through packets, with each packet carrying the origin and the destination address, and some control information in its header. Intermediate nodes queue and interleave packets from many communications and send them out as fast as possible on the best available path to their destination. If the current path becomes unavailable due to node or link failure, a new route is quickly discovered by the intermediate nodes and subsequent packets are sent on this new route. To use the communication resource more efficiently, links may have variable data rates. To compensate for the occasional discrepancy in the incoming and outgoing link rates, and to accommodate traffic bursts, intermediate nodes use store-and-forward switching—they store packets in a buffer until the link leading to the destination becomes available. Experiments have shown that store-and-forward switching can achieve a significant advantage with very little storage at the intermediate nodes [Bar64].
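The buffering behavior described above can be sketched in a few lines of Python. This is a toy model under stated assumptions, not a description of any real router: the function name `forward`, the tick-based timing, and the sample traffic pattern are all hypothetical. It counts how many packets a node sends and drops when bursts arrive faster than the outgoing link can carry them.

```python
def forward(arrivals, link_rate, buffer_size):
    """Return (sent, dropped) packet counts for a toy store-and-forward node.

    arrivals    -- packets arriving in each time tick (hypothetical traffic)
    link_rate   -- packets the outgoing link can carry per tick
    buffer_size -- packets the node can hold while waiting for the link
    """
    queued = 0
    sent = 0
    dropped = 0
    for arriving in arrivals:
        queued += arriving
        # Transmit as much as the outgoing link allows in this tick.
        out = min(queued, link_rate)
        sent += out
        queued -= out
        # Anything that does not fit in the buffer is lost.
        if queued > buffer_size:
            dropped += queued - buffer_size
            queued = buffer_size
    # Packets still buffered at the end are sent in later ticks.
    sent += queued
    return sent, dropped


if __name__ == "__main__":
    bursty = [6, 0, 0, 6, 0, 0]  # two bursts separated by idle ticks
    for size in (0, 4):
        sent, dropped = forward(bursty, link_rate=2, buffer_size=size)
        print(f"buffer={size}: sent={sent}, dropped={dropped}")
```

In this made-up scenario, a buffer of only four packets is enough to carry both bursts without loss, while a node with no buffer loses most of each burst.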

The packet-switched network paradigm revolutionized communication. All of its design principles greatly improved transmission speed and reliability and decreased communication cost, leading to the Internet we know today—cheap, fast, and extremely reliable. However, they also created a fertile ground for misuse by malicious participants. Let’s take a closer look at the design principles of the packet-switched network.

• There are no dedicated resources between the sender and the receiver. This idea allowed a manifold increase in network throughput by multiplexing packets from many different communications. Instead of providing a dedicated channel with the peak bandwidth for each communication, packet-switched links can support numerous communications by taking advantage of the fact that peaks do not occur in all of them at once. A downside of this design is that there is no resource guarantee, and this is exactly why aggressive DDoS attack traffic can take over resources from legitimate users. Much research has been done in fair resource sharing and resource reservation at the intermediate nodes, to offer service guarantees to legitimate traffic in the presence of malicious users. While resource reservation and fair sharing ensure balanced use among legitimate users, they do not solve the DDoS problem. Resource reservation protocols are sparsely deployed and thus cannot make a large impact on DDoS traffic. Resource-sharing approaches assign a fair share of a resource to each user (e.g., bandwidth, CPU time, disk space). In the Internet context, the problem of establishing user identity is escalated due to IP spoofing. An attacker can thus fake as many identities as he wants and monopolize resources in spite of the fair sharing mechanism. Even if these problems were solved, an attacker could effectively slow service to legitimate users to an unacceptable rate by compromising enough machines and using their “fair shares” of the resources.

• Packets can travel on any route between the sender and the receiver. Packet-switched network design facilitates the development of dynamic routing algorithms that quickly discover an alternative route if the primary one fails. This greatly enhances communication robustness as packets in flight can take a different route to the receiver from the one that was valid when they were sent. The route change and selection process are performed by the intermediate nodes directly affected by the route failure, and are transparent to the other participants, including the sender and the receiver. This facilitates fast packet forwarding and low routing message overhead, but has a side effect that no single network node knows the complete route between the packet origin and its destination.

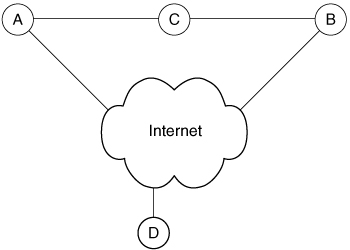

To illustrate this, let us observe the network in Figure 3.1. Assume that the path from A to B leads over one node, C, and that a node D is somewhere on the other side of the Internet. If D ever sees a packet from A to B, it cannot infer if the source address (A) is fake, or if C somehow failed and the packet is trying to follow an alternative path. Therefore, D will happily forward the packet to B. This example illustrates why it is difficult to detect and filter spoofed packets.3 IP spoofing is one of the centerpieces of the DDoS problem. It is not necessary for many DDoS attacks, but it significantly aggravates the problem and challenges many DDoS defense approaches.

Figure 3.1. Routing in a packet-switched network

• Different links have different data rates. This is a logical design principle, as some links will naturally be more heavily used than others. The Internet’s usage patterns caused it to evolve a specific topology, resembling a spider with many legs. Nodes in the Internet core (the body of the spider) are heavily interconnected, while edge nodes (on the spider’s legs) usually have one or two paths connecting them to the core. Core links provide sufficient bandwidth to accommodate heavy traffic from many different sources to many destinations, while the links closer to the edges need only support the end network traffic and need less bandwidth. A side effect of this design is that the traffic from the high-bandwidth core link can overwhelm the low-bandwidth edge link if many sources attempt to talk to the same destination simultaneously, which is exactly what happens during DDoS attacks.
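The bandwidth mismatch described in the last item can be made concrete with a little arithmetic. The following Python sketch is illustrative only: the function name, the link capacity, and the traffic rates are assumptions rather than measurements, and it assumes the edge router drops excess packets without regard to sender, so every sender keeps the same fraction of its offered load.

```python
def legitimate_goodput(edge_capacity_mbps, legit_mbps, agents, per_agent_mbps):
    """Return the legitimate traffic (Mbps) that survives a congested edge link.

    Assumes indiscriminate dropping: when the link is overloaded, each
    sender keeps the same fraction of what it offered.
    """
    attack_mbps = agents * per_agent_mbps
    offered = legit_mbps + attack_mbps
    if offered <= edge_capacity_mbps:
        return legit_mbps  # no congestion: everything gets through
    surviving_fraction = edge_capacity_mbps / offered
    return legit_mbps * surviving_fraction


if __name__ == "__main__":
    # Hypothetical numbers: a 100 Mbps edge link carrying 40 Mbps of
    # legitimate traffic, attacked by agents that send 0.5 Mbps each.
    for agents in (0, 100, 1000, 10000):
        goodput = legitimate_goodput(100, 40, agents, 0.5)
        print(f"{agents:>6} agents: {goodput:.1f} Mbps of legitimate traffic delivered")
```

Under these assumptions, no single agent needs a fast connection. The modest per-agent rate is easily carried by the core, and only the sum arriving at the edge link matters.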

3.2.2 Best-Effort Service Model and End-to-End Paradigm

The main purpose of the Internet is to move packets from source to destination quickly and at a low cost. In this endeavor, all packets are treated as equal and no service guarantees are given. This is the best-effort service model, one of the key principles of the Internet design. Since routers need only focus on traffic forwarding, their design is simple and highly specialized for this function.

It was understood early on that the Internet would likely be used for a variety of services, some of which were unpredictable. In order to keep the interconnection network scalable and to support all the services a user may need now and in the future, the Internet creators decided to make it simple. The end-to-end paradigm states that application-specific requirements, such as reliable delivery (i.e., assurance that no packet loss occurred), packet reorder detection, error detection and correction, quality-of-service requirements, encryption, and similar services, should not be supported by the interconnection network but by the higher-level transport protocols deployed at the end hosts, that is, the sender and the receiver. Thus, when a new application emerges, only the interested end hosts need to deploy the necessary services, while the interconnection network remains simple and invariant. The Internet Protocol (IP) manages basic packet manipulation, and is supported by all routers and end hosts in the Internet. End hosts additionally deploy a myriad of other higher-level protocols to get specific service guarantees: Transmission Control Protocol (TCP) for reliable packet delivery; User Datagram Protocol (UDP) for simple traffic streaming; Real-time Transport Protocol (RTP), RTP Control Protocol (RTCP), and Real Time Streaming Protocol (RTSP) for streaming media traffic; and Internet Control Message Protocol (ICMP) for control messages. Even higher-level services are built on these, such as file transfer, Web browsing, instant messaging, e-mail, and videoconferencing.

The end-to-end argument is frequently understood as justification not to add new functionalities to the interconnection network. Following this reasoning, DDoS defenses located in the interconnection network would not be acceptable. However, the end-to-end argument, as originally stated, did not claim that the interior of the network should never again be augmented with any functionality, nor that all future changes in network behavior had to be implemented only on the ends. The crux of this argument was that only services that were required for all or most traffic belonged in the center of the network. Services that were specific to particular kinds of traffic were better placed at the edge of the network.

Another component of the end-to-end argument was that security functions (which include access control and response functions to mitigate attacks) were the responsibility of the edge devices (i.e., end hosts) and not something the network should do. This argument assumes that owners of end hosts:

• Have the resources, including time, skills, and tools, to ensure the security of every end host

• Will be able to notice malicious activity themselves and take response actions quickly

It also assumes that compromised hosts will themselves not become a threat to the availability of the network to other hosts.

These assumptions have increasingly proven to be incorrect, and network stability became a serious problem in 2003 and 2004 due to rampant worms and bot networks. (The mstream DDoS program, for example, caused routers to crash as a result of the way it spoofed source addresses, as did the Slammer worm.)

Taking that into account, there is a good case for putting DDoS defense mechanisms in the core of the network, since DDoS attacks can leverage any sort of packet whatsoever, and pure flooding attacks cannot be handled at an edge once they achieve a volume greater than the edge connection’s bandwidth. DDoS defense mechanisms that add general defenses against attacks using any kind of traffic are not out of bounds by the definitions of the end-to-end argument, and should be considered, provided they can be demonstrated to be safe, effective, and cheap—the latter especially—for ordinary traffic when no attack is going on. It is not clear that any of the currently proposed DDoS defense mechanisms requiring core deployment meet those requirements yet, and obviously any serious candidate for such deployment must do so before it should even be considered for actual insertion into the routers making up the core of the Internet. However, both proponents and critics of core DDoS defenses should remember that the authors of the original end-to-end argument put it forward as a useful design principle, not absolute truth.

Critiques of DDoS defense solutions based solely on violation of the end-to-end argument miss the point. On the other hand, critiques of particular components of DDoS defense solutions on the basis that they could be performed as well or better on the edges are proper uses of the end-to-end argument.

These two above-mentioned ideas, the best-effort service model and the end-to-end paradigm, essentially define the same design principle: The core network should be kept simple; all the complexity should be pushed to the edge nodes. Thanks to this simplicity and division of functionalities between the core and the edges, the Internet easily met challenges of scale, the introduction of new applications and protocols, and a manifold increase in traffic while remaining a robust and cheap medium with ever-increasing bandwidth and speed. A downside of this simple design becomes evident when one of the parties in the end-to-end model is malicious and acts to damage the other party. Since the interconnection network is simple, intermediate nodes do not have the functionality necessary to step in and police the violator’s traffic.

This is exactly what happens in DDoS attacks, IP spoofing, and congestion incidents. The problem first became evident in October 1986 when the Internet suffered a series of congestion collapses [Nag84]. End hosts were simply sending more traffic than could be supported by the interconnection network. The problem was quickly addressed by the design and deployment of several TCP congestion control mechanisms [Flo00]. These mechanisms augment end-host TCP implementations to detect packet drops as a sign of congestion and respond to them by rapidly reducing the sending rate. However, it soon became clear that end-to-end flow management cannot ensure a fair allocation of resources in the presence of aggressive flows. In other words, those users who would not deploy congestion control were able to easily steal bandwidth from well-behaved congestion-responsive flows. As congestion builds up and congestion-responsive flows reduce their sending rate, more bandwidth becomes available for the aggressive flows that keep on pounding.
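The bandwidth-stealing effect can be illustrated with a deliberately simplified simulation. The sketch below is not real TCP: it assumes a single congestion-responsive flow that adds one unit to its rate per round and halves it whenever the link is overloaded, sharing the link with one flow that sends at a constant rate no matter what. The function name `simulate`, the rate units, and the round count are hypothetical.

```python
def simulate(capacity, aggressive_rate, rounds=200):
    """Average sending rate of a responsive flow sharing a link.

    The responsive flow uses additive increase and multiplicative
    decrease; the aggressive flow never backs off. Units are arbitrary.
    """
    responsive = 1.0
    total = 0.0
    for _ in range(rounds):
        congested = responsive + aggressive_rate > capacity
        if congested:
            # Treat overload as packet loss: halve the rate (with a small floor).
            responsive = max(responsive / 2, 0.1)
        else:
            # No loss seen: probe for more bandwidth.
            responsive += 1.0
        total += responsive
    return total / rounds


if __name__ == "__main__":
    for aggressive in (0, 80, 100):
        avg = simulate(capacity=100, aggressive_rate=aggressive)
        print(f"aggressive flow at {aggressive}: responsive flow averages {avg:.1f}")
```

In this toy model, the responsive flow's average rate shrinks as the unresponsive flow grows, and it is pinned near its floor once the unresponsive flow alone fills the link.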

This problem was finally handled by violating the end-to-end paradigm and enlisting the help of intermediate routers to monitor and police bandwidth allocation among flows to ensure fairness. There are two major mechanisms deployed in today’s routers for congestion avoidance purposes—active queue management and fair scheduling algorithms [BCC+98]. A similar approach may be needed to completely address the DDoS problem. We discuss this further in Section 5.5.3.
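One way to picture what fair scheduling aims for is max-min fair allocation, a common idealization in which no flow receives more than it asks for and unused capacity is split evenly among the flows that still want more. The Python sketch below is an illustration under that assumption, not the algorithm of any particular router; the function name and the flow labels are hypothetical. The second scenario also shows the limitation noted earlier in this chapter: fairness per flow does little good when an attacker controls many flows.

```python
def max_min_fair(capacity, demands):
    """Max-min fair split of `capacity` among flows with the given demands."""
    allocation = {flow: 0.0 for flow in demands}
    remaining = dict(demands)
    left = float(capacity)
    while remaining and left > 1e-12:
        share = left / len(remaining)
        # Flows asking for no more than an equal share are fully satisfied.
        satisfied = {f: d for f, d in remaining.items() if d <= share}
        if not satisfied:
            # Every remaining flow wants more than its share: split evenly.
            for flow in remaining:
                allocation[flow] = share
            return allocation
        for flow, demand in satisfied.items():
            allocation[flow] = demand
            left -= demand
            del remaining[flow]
    return allocation


if __name__ == "__main__":
    # One aggressive flow is capped; the well-behaved flows get what they ask for.
    print(max_min_fair(100, {"web": 10, "video": 40, "flood": 500}))

    # The same attack spread over 98 agents, each with a modest demand.
    many = {"web": 10, "video": 40}
    many.update({f"agent{i}": 5 for i in range(98)})
    result = max_min_fair(100, many)
    print(result["web"], result["video"])
```

With a single flooding source, the legitimate flows are fully served. When the attack is spread across many agents, each agent's "fair share" is small, yet together they crowd the legitimate flows down to a fraction of what they need.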

3.2.3 Internet Evolution

The Internet has experienced immense growth in size and popularity since its creation. The number of Internet hosts has been growing exponentially and currently (in 2004) there are over 170 million computers online. Thanks to its cheap and fast message delivery, the Internet has become extremely popular and its use has spread from scientific institutions into companies, government, public works, schools, homes, banks, and many other places.

This immense growth has also brought on several issues that affect Internet security.

• Scale. In the early days of the ARPANET, there was a maximum of 64 hosts allowed in the network, and if a new host needed to be added to the network, another had to leave. In 1971, there were 23 hosts and 15 connection hosts (nowadays called routers). In August 1981, when these restrictions no longer applied (NCP, with 6-bit address fields allowing only 64 hosts, was being phased out—it was being replaced by TCP, which was specified in 1974 and initially deployed in 1975), there were only 213 hosts online. By 1983, there were more than 1,000, by 1987 more than 10,000, and by 1989 (when the ARPANET was shut down) more than 100,000 hosts. In January 2003, there were more than 170 million Internet hosts. It is quite feasible to manage several hundred hosts, but it is impossible to manage 170 million of them. Poorly managed machines tend to be easy to compromise. Consequently, in spite of continuing efforts to secure online machines, the pool of vulnerable (easily compromised) hosts does not get any smaller. This means that attackers can easily enlist hundreds or thousands of agents for DDoS attacks, and will be able to obtain even more in the future.

• User profile. A common ARPANET user in the 1970s was a scientist who had a fair knowledge of computers and accessed a small, fairly constant set of machines at remote sites that typically ran a well-known and static set of software. A large number of today’s Internet users are home users who need Internet access for Web browsing, game downloads, e-mail, and chat. Those users usually lack knowledge to properly secure and administer their machines. Moreover, they commonly download binary files (e.g., games) from unknown Internet sites or receive them in e-mail. A very effective way for the attacker to spread his malicious code is to disguise it to look like a useful application (a Trojan horse), and post it online or send it in an e-mail. The unwitting user executes the code and his machine gets compromised and recruited into the agent army. An ever-growing percentage of the Internet users are home users whose machines are constantly online and poorly secured, representing an easy recruiting pool for an attacker assembling a DDoS agent army.

• Popularity. Today, Internet use is no longer limited to universities and research institutions, but permeates many aspects of everyday life. Since connectivity plays an important role in many businesses and infrastructure functions, it is an attractive target for the attackers. Internet attacks inflict great financial damage and affect many daily activities.

The evolution of the Internet from a wide-area research network into the global communication backbone exposed security flaws inherent in the Internet design and made the task of correcting them both pressing and extremely challenging.

3.2.4 Internet Management

The way the Internet is managed creates additional challenges for DDoS defense. The Internet is not a hierarchy but a community of numerous networks, interconnected to provide global access to their customers. As early as the days of NSFnet,4 there existed little islands of self-managed networks as part of the noncommercial network. Each of the Internet networks is managed locally and run according to policies defined by its owners. There is no central authority. Thanks to this management approach, the Internet has remained a free medium where any opinion can be heard. On the other hand, there is no way to enforce global deployment of any particular security mechanism or policy. Many promising DDoS defense solutions need to be deployed at numerous points in the Internet to be effective, as illustrated in Chapter 5. IP spoofing is another problem that will likely need a distributed solution. The distributed nature of these threats will make it very difficult for single-node solutions to counteract them. However, the impossibility of enforcing global deployment makes highly distributed solutions unattractive. See Chapter 5 for a detailed discussion of defense solutions and their deployment patterns.

Due to privacy and business concerns, network service providers typically do not wish to provide information on cross-network traffic behavior and may be reluctant to cooperate in tracing attacks (see [Lip02] for further discussion of Internet tracing challenges and possible solutions, as well as the legal issues in Chapter 8). Furthermore, there is no automated support for tracing attacks across several networks. Each request needs to be authorized and put into effect by a human at each network. This introduces large delays. Since many DDoS attacks are shorter than a few hours, they will likely end before agent machines can be located.

3.3 DoS and DDoS Evolution

There are many security problems in today’s Internet. E-mail viruses lurk to infect machines and spread further; computer worms, sometimes silently, swarm the Internet; competitors and your neighbor’s kids attempt to break into company machines and networks and steal industrial secrets; and DDoS attacks knock down online services. The list goes on and on. None of these problems have been completely solved so far, but many have been significantly lessened through practical technological solutions. Firewalls have greatly helped reduce the danger from intrusions by blocking all but necessary incoming traffic. Antivirus programs prevent the execution of known worms and viruses on the protected machine, thus defeating infection and stopping the spread. Applications and operating systems check for software updates and patch themselves automatically, greatly reducing the number of vulnerabilities that can be exploited to compromise a host machine.

However, the DoS problem remains largely unhandled, in spite of great academic and commercial efforts to solve it. Sophisticated firewalls, the latest antivirus software, automatic updates, and a myriad of other security solutions improve the situation only slightly, defending the victim from only the crudest attacks. It is, however, strikingly easy to generate a successful DDoS attack that bypasses these defenses and takes the victim down for as long as the attacker wants. And there is often nothing a victim can do about it.

How did these tools develop, and how are they being used? We will now look at a combined history of the development of network-based DoS and DDoS attack tools, in relation to the attacks waged with them.

3.3.1 History of Network-Based Denial of Service

The developmental progression of DoS and DDoS tools and associated attacks can give great insight into likely trends for the future, as well as allowing an organization to gauge the kinds of defenses they need to consider based on what is realistic to expect from various attackers, from less skilled up to advanced attackers.

These are only some representative tools and attacks, not necessarily all of the significant attacks. For more stories, see http://staff.washington.edu/dittrich/misc/ddos/.

The Late 1980s

The CERT Coordination Center (CERT/CC, which was originally founded by DARPA as the Computer Emergency Response Team) at Carnegie Mellon University’s Software Engineering Institute was established in 1988 in response to the so-called Morris worm, which brought the Internet to its knees.5 The CERT Coordination Center has a long-established expertise in handling and responding to incidents in the Internet, analyzing and reporting vulnerabilities within systems, as well as research in computer and network security, and networked system survivability. Figure 3.2 presents a CERT Coordination Center–created summary of the trends in attacker tools over the last few years.

Figure 3.2. Over time, attacks on networked computer systems have become more sophisticated, but the knowledge attackers need has decreased. Intruder tools have become more sophisticated and easy to use. Thus, more people can become “successful intruders” even though they have less technical knowledge. (Reprinted with permission of the CERT Coordination Center.)

The Early 1990s

After the story of the Morris worm incident died out, the Internet continued to grow through the early 1990s into a fun place, with lots of free information and services. More and more sites were added, and Robert Metcalfe stated his now famous law: The usefulness, or utility, of a network is proportional to the square of the number of users. But as we saw earlier in Section 3.1, some percentage of these new users will not be nice, friendly users.

In the mid-1990s, remote DoS attack programs appeared and started to cause problems. In order to use these programs, one needed an account on a big computer, on a fast network, to have maximum impact. This led to rampant account theft at universities, as attackers sought stolen accounts from which to run DoS programs. Because such accounts were easy to identify and shut down, they were often considered “throwaway” accounts, which drove a market for installing sniffers.6 These accounts would be traded for pirated software, access to hard-to-reach networks, stolen computers, credit card numbers, cash, etc. At the time, flat thick-wire and thin-wire Ethernet networks were popular, as was the use of telnet and ftp (both of which suffered from a clear-text password problem). The result of this architectural model, combined with vulnerable network services, was networks that were easy prey to attackers running network sniffers.

1996

In 1996, a vulnerability in the TCP/IP stack was discovered that allowed a flood of packets with only the SYN bit set (known as a SYN flood; see Chapter 4 for detailed description). This became a popular and effective tool to use to make servers unavailable, even with moderate bandwidth available to an attacker (which was a good thing for attackers, as modems at this time were very slow). Small groups used these tools at first, and they circulated in closed groups for a time.

1997

Large DoS attacks on IRC networks began to occur in late 1996 and early 1997. In one attack, vulnerabilities in Windows systems were exploited by an attacker who took out large numbers of IRC users directly by crashing their systems [Val]. DoS programs with names like teardrop, boink, and bonk allowed an attacker to crash unpatched Windows systems at will. In another attack, a Romanian hacker took out portions of IRC network Undernet’s server network with a SYN flood [KC]. SYN flood attacks on IRC networks are still prevalent today. A noteworthy event in 1997 was the complete shutdown of the Internet due to (nonmalicious) false route advertisement by a single router [Bon97].

For the most part, these DoS vulnerabilities are simple bugs that were fixed in subsequent releases of the affected operating systems. For example, there was a series of bugs in the way the Microsoft Windows TCP/IP stack handled fragmented packets. One such bug caused the stack to mishandle fragmented packets whose offsets and lengths did not match, so that the fragments overlapped. The TCP/IP stack code authors expected packets to be properly fragmented and didn’t properly check start/end/offset conditions. Specially crafted overlapping packets would cause the stack to allocate a huge amount of memory and hang or crash the system.7 As these bugs were fixed, attackers had to develop new means of performing DoS attacks to up the ante and increase the disruption capability past the point where it could be stopped by simple patches.
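The start/end/offset checks that the stack authors omitted can be illustrated with a short sketch. This is only a minimal illustration in Python, not the code of any real TCP/IP stack: the names Fragment and find_fragment_problems are hypothetical, offsets are treated as plain byte counts for simplicity (real IP headers express them in 8-byte units), and 65,535 is used as a loose upper bound on datagram size.

```python
from dataclasses import dataclass

# Loose upper bound: the largest total length an IPv4 datagram can declare.
MAX_IP_DATAGRAM = 65535


@dataclass(frozen=True)
class Fragment:
    """Hypothetical, simplified view of one received fragment."""
    offset: int  # byte offset of this fragment's data in the original datagram
    length: int  # number of data bytes carried by this fragment


def find_fragment_problems(fragments):
    """Return a list of problem descriptions; an empty list means no problem was found."""
    problems = []
    previous_end = 0
    # Examine fragments in offset order so each one is compared with the data before it.
    for frag in sorted(fragments, key=lambda f: f.offset):
        if frag.offset < 0 or frag.length <= 0:
            problems.append(f"bad offset or length: {frag}")
            continue
        end = frag.offset + frag.length
        if end > MAX_IP_DATAGRAM:
            problems.append(f"fragment runs past maximum datagram size: {frag}")
        if frag.offset < previous_end:
            problems.append(f"fragment overlaps earlier data: {frag}")
        previous_end = max(previous_end, end)
    return problems


if __name__ == "__main__":
    # A well-formed pair, then a pair whose second fragment starts inside the first.
    print(find_fragment_problems([Fragment(0, 8), Fragment(8, 8)]))
    print(find_fragment_problems([Fragment(0, 36), Fragment(24, 4)]))
```

A reassembly routine that rejected any fragment set for which such a function reports a problem would discard the crafted packets instead of acting on inconsistent lengths.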

Another effective technique that appeared around 1997 was a form of reflected, amplified DoS attack, called a Smurf attack. Smurf attacks allowed for amplification from a single source. By bouncing packets off a misconfigured network, attackers could amplify the number of packets destined for a victim by a factor of up to 200 or so for a so-called Class C or /24 network, or by a factor of several thousand for a moderately populated Class B or /16 network. The attacker would simply craft packets with the return address of the intended victim, and send those packets to the broadcast address of a network. These packets would effectively reach all available and responsive hosts on that particular network and elicit a response from them. Since the return address of the requests was forged, or spoofed, the response would be sent to the victim.8
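The amplification factors quoted above follow from simple arithmetic on network size. The sketch below is a rough estimate only: the function names are hypothetical, and the responsive fractions (80% of a /24, 5% of a /16) are illustrative assumptions, not measured values. It assumes each responsive host sends exactly one reply per spoofed request.

```python
def usable_hosts(prefix_len: int) -> int:
    """Usable IPv4 host addresses in a network, excluding network and broadcast addresses."""
    if not 0 <= prefix_len <= 30:
        raise ValueError("prefix_len must be between 0 and 30")
    return 2 ** (32 - prefix_len) - 2


def estimated_amplification(prefix_len: int, responsive_fraction: float) -> int:
    """Estimated replies per spoofed request, assuming one reply per responsive host."""
    if not 0.0 <= responsive_fraction <= 1.0:
        raise ValueError("responsive_fraction must be between 0 and 1")
    return int(usable_hosts(prefix_len) * responsive_fraction)


if __name__ == "__main__":
    # Assumed fractions: a well-populated /24 and a sparsely populated /16.
    print("/24:", usable_hosts(24), "hosts, factor about", estimated_amplification(24, 0.8))
    print("/16:", usable_hosts(16), "hosts, factor about", estimated_amplification(16, 0.05))
```

A /24 has 254 usable addresses and a /16 has 65,534, so a factor near 200 corresponds to a nearly full /24, while a factor in the thousands needs only a small fraction of a /16 to respond.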

Attackers next decided to explore another avenue for crashing machines. Instead of exploiting a vulnerability, they just sent a lot of packets to the target. If the target was on a relatively slow dial-up connection (say, 14.4Kbps), but the attacker was using a stolen university computer on a 1Mbps connection, she could trivially overwhelm the dial-up connection and the computer would be lagged, or slowed down to the point of being useless (each keystroke typed could take 10 seconds or more to be received and echoed).
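The mismatch in this example can be put in numbers. The following sketch uses the two link speeds from the text; the function names are hypothetical, and it makes the simplifying assumption that when a link is overloaded, traffic is dropped in proportion to what is offered, so legitimate packets fare no better than flood packets.

```python
def overload_ratio(offered_bps: float, link_bps: float) -> float:
    """How many times the offered traffic exceeds what the link can carry."""
    if offered_bps < 0 or link_bps <= 0:
        raise ValueError("rates must be non-negative and link capacity positive")
    return offered_bps / link_bps


def fraction_delivered(offered_bps: float, link_bps: float) -> float:
    """Share of offered traffic that fits through the link (1.0 if not overloaded)."""
    if offered_bps <= link_bps:
        return 1.0
    return link_bps / offered_bps


if __name__ == "__main__":
    attacker_bps = 1_000_000  # stolen university host on a 1Mbps connection
    victim_bps = 14_400       # target on a 14.4Kbps dial-up connection
    print(f"overload ratio: {overload_ratio(attacker_bps, victim_bps):.1f}x")
    print(f"fraction delivered: {fraction_delivered(attacker_bps, victim_bps):.2%}")
```

Under these assumptions the flood offers roughly 69 times what the dial-up link can carry, so only about 1.4% of arriving traffic gets through, which is consistent with the multi-second keystroke delays described above.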

1998

As the bandwidth between attacker and target became more equal, and more network operators learned to deal with simple Smurf attacks, the ability to send enough traffic at the target to lag it became harder and harder to achieve. The next step was to add the ability to control a large number of remotely located computers—someone else’s computers—and to direct them all to send massive amounts of useless traffic across the network and flood the victim (or victims). Attackers began organizing themselves into coordinated groups, performing an attack in concert on a victim. The added packets, whether in sheer numbers or by overlapping attack types, obtained the desired effect.

Prototypes of DDoS tools (most notably fapi, see [CER99]) were developed in mid-1998, serving as examples of how to create client/server DDoS networks. Rather than relying on a single source, attackers could now attack from every host they were able to compromise. These early programs had many limitations and didn’t see widespread use, but did prove that coordination of computers in an attack was possible.

Vulnerability-based attacks did not simply go away, and in fact continued to be possible due to a constant stream of newly discovered bugs. Successful DoS attacks using the relatively simple fragmented packet vulnerability targeting tens to hundreds of thousands of vulnerable hosts have been waged in the past. For example, the attacks in 1998 took advantage of fragmented packet vulnerabilities but added scripts to prepare the list of vulnerable hosts in advance and then rapidly fired exploit packets at those systems. This attacker prepared the list by probing potential victims for the correct operating system (by a technique called OS fingerprinting, which can be defeated by packet normalization [SMJ00]) and for the existence of the vulnerability, and creating a list of those that “pass the test.” The University of Washington network was one of the victims of the attack. In computer labs across campus, some with hundreds of PCs being busily used by students doing their homework, the sound of keystrokes turned to dead silence as every screen in the lab went blue, only to be replaced seconds later with the chatter of “Hey, did your computer just crash too?” A large civilian U.S.-based space agency was a similar victim of these attacks during the same time period. Users at many locations were subjected to immediate patching, since subsequent controlled tests clearly turned unpatched systems’ screens (and their respective users) blue.

The next step to counter the patching issue, which made it harder for an attacker to predict which DoS attack would be effective, was to combine multiple DoS exploits into one tool, using Unix shell scripts. This increased the speed at which an effective DoS attack could be waged. One such tool, named rape (written in 1998, according to the code), integrates the following DoS attacks into a single shell script:

A tool like this has the advantage of allowing an attacker to give a single IP address and have multiple attacks be launched (increasing the probability of a successful attack), but it also means having to have a complete set of precompiled versions of each individual exploit packaged up in a Unix “tar” format archive for convenient transfer to a stolen account from which to launch the attack.

To still allow multiple DoS exploits to be used, but with a single precompiled program that is easier to store, transfer, and use quickly, programs like targa.c by Mixter were developed (this same strategy was used again in 2003 by Agobot/Phatbot). Targa combines all of the following exploits in a single C source program:

Even combined DoS tools like targa still only allowed one attacker to DoS one IP address at a time, and they required the use of stolen accounts on systems with maximum bandwidth (predominantly university systems). To increase the effectiveness of these attacks, groups of attackers, using IRC channels or telephone “voice bridges” for communication, could coordinate attacks, each person attacking a different system using a different stolen account. This same coordination was being seen in probing for vulnerabilities, and in system compromise and control using multiple backdoors and “root kits.”

The years 1998 and 1999 saw a significant increase in the ability to attack computer systems, which came as a direct result of programming and engineering efforts, the same ones that were bringing the Internet to the world: automation, increases in network bandwidth, client/server communications, and global chat networks.

1999

In 1999, two major developments grew slowly out of the computer underground and began to take shape as everyday attack methods: distributed computing (in the forms of distributed sniffing, distributed scanning, and distributed denial of service), and a rebirth of the worm (the worm simply being the integration and automation of all aspects of intrusion: reconnaissance scanning, target identification, compromise, embedding, and attacker control). In fact, many worms today (e.g., Nimda, Code Red, Deloder, Lion, and Blaster) either implement a DDoS attack or bundle in DDoS tools.

The summer of 1999 saw the first large-scale use of new DDoS tools: trinoo [Ditf], Tribe Flood Network (TFN) [Dith], and Stacheldraht [Ditg]. These were all simple client/server style programs—handlers and agents, as mentioned earlier—that performed only DDoS-related functions of command and control, various types of DoS attacks, and automatic update in some cases. They required other programs to propagate them and build attack networks, and the most successful groups using these tools also used automation for scanning, target identification, exploitation, and installation of the DDoS payload. The targets of nearly all the attacks in 1999 were IRC clients and IRC servers.

One prominent attack against the IRC server at the University of Minnesota and a dozen or more IRC clients scattered around the globe was large enough to keep the University’s network unusable for almost three full days. This attack used the trinoo DDoS tool, which generated a flood of UDP packets with a 2-byte payload and did not use IP spoofing. The University of Minnesota counted 2,500 attacking hosts, but the logs were not able to keep up with the flood, so that count was an underestimate.9 These hosts were used in several DDoS networks of 100 to 400 hosts each, in rolling attacks that activated and deactivated groups of hosts. As a result, locating, identifying, and cleaning up the agents took anywhere from several hours to a couple of days. The latency in cleanup contributed to the attack’s duration.

The change to using distributed tools was inevitable. The growth of network bandwidth that came about through the development of Internet2 [Con] (officially launched in 2000) made simple point-to-point tools less effective against well-provisioned networks, and attacks that used single hosts for flooding were easy to filter out and easy to track back to their source and stop. Scripting of vulnerability scans (which are also made faster because of the same bandwidth increase) and scripting of attacks made it much easier to compromise hundreds, thousands, even tens of thousands of hosts in just a few hours. If you control thousands of computers, why not program them to work in a coordinated manner for leverage? It wasn’t a large leap to automate the installation of attack tools, back doors, sniffers—whatever the attackers wanted to add.

Private communications with some of the authors of the early DDoS tools10 indicated that the motivation for this automation was to let a smaller group of attackers counter attacks waged against it by a larger group (using the classic DoS tools described above, and manual coordination). The smaller group could not wield the same number of manual tools and thus resorted to automation. In almost all cases, these were first coding efforts, and even with bugs they were quite effective at waging large-scale attacks. The counterattacks were strikingly effective, and the smaller group was able to retake all their channels and strike back at the larger group.

Similar attacks, although on a slightly smaller scale, continued through the late fall of 1999, this time using newer tools that spoofed source addresses, making identification of attackers even harder. Nearly all of these attacks were directed at IRC networks and clients, and there was very little news coverage of them. Tribe Flood Network (or TFN), Stacheldraht, and then Tribe Flood Network 2000 (TFN2K) saw popular use, while Shaft showed up in more limited attacks.

In November of 1999, the CERT Coordination Center sponsored (for the first time ever) a workshop to discuss and develop a response to a situation they saw as a significant problem of the day, in this case Distributed Systems Intruder Tools (including distributed scanners, distributed sniffers, and distributed denial of service tools). The product of the workshop was a report [CER99], which covered the issue from multiple perspectives (those of managers, system administrators, Internet service providers, and incident response teams). This report is still one of the best places to start in understanding DDoS, and what to do about it in the immediate time frame (< 30 days), medium term (30–180 days) and long term (> 180 days). Ironically, only days after this workshop, a new tool was discovered that was from a different strain of evolution, yet obeyed the same attack principles as trinoo, TFN, and Stacheldraht [Ditf, Dith, Ditg]. The Shaft [DLD00] tool, as discussed in Chapter 4, was wreaking havoc with only small numbers of agents across Europe, the United States, and the Pacific Rim, and caught the attention of some analysts.

The next generation of tools included features such as encryption of communications (to harden the DDoS tools against counterattack and detection), multiplicity of attack methods, integrated chat functions, and reporting of packet flood rates. This latter function is used by the attacker to make it easier to determine the yield of the DDoS attack network, and hence when it is large enough to obtain the desired goal and when (due to attrition) it needs to be reinforced. (The attackers, at this point, knew more about how much bandwidth they were using than many network operators knew was being consumed. Refer to the discussion of Shaft in Chapter 4 for more on this statistics feature, and the OODA Loop in Chapter 4 to understand how this affects the attacker/defender balance of power.) As more and more groups learned of the power of DDoS to engage in successful warfare against IRC sites, more DDoS tools were developed.

As Y2K Eve approached, many security professionals feared that widespread DDoS attacks would disrupt the telecommunications infrastructure, making it look like Y2K failures were occurring and panicking the public [SSSW]. Thankfully, these attacks never happened. That did not mean that attack incidence slowed down, however.

2000

On January 18, 2000, a local ISP in Seattle, Washington, named Oz.net was attacked [Ric]. This appeared to be a Smurf attack (or possibly an ICMP Echo reply flood with a program like Stacheldraht). The unique element of this attack was that it was directed not only against the servers at Oz.net, but also against their routers, the routers of their upstream provider Semaphore, and the routers of the upstream’s provider, UUNet. It was estimated that the slowdown in network traffic affected some 70% of the region surrounding Seattle.

Within weeks of this attack, in February 2000, a number of DDoS attacks were successfully perpetrated against several well-provisioned and carefully run sites—many of the major “brand names” of the Internet. They included the online auction company eBay (with 10 million customers), the Internet portal Yahoo (36 million visitors in December 1999), online brokerage E*Trade, online retailer Buy.com (1.3 million customers), online bookselling pioneer Amazon.com, Web portal Excite.com, and the widely consulted news site CNN [Har]. Many of these sites were used to high, fluctuating traffic volume, so they were heavily provisioned to provide for those fluctuations. They were also frequent targets of other types of cyberattacks, so they kept their security software up to date and maintained a staff of network administration professionals to deal with any problems that arose. If anyone on the Internet should have been immune to DDoS problems, these were the sites. (A detailed timeline of some of the lesser known events surrounding the first public awareness of DDoS, leading up to the first major DDoS attacks on e-commerce sites, is provided in the sidebar at the end of this chapter.)

However, the attacks against all of them were quite successful, despite being rather unsophisticated. For example, the attack against Yahoo in February 2000 prevented users from having steady connectivity to that site for three hours. As a result, Yahoo, which relies on advertising for much of its revenue, lost an estimated $500,000 because its users were unable to access Yahoo’s Web pages and the advertisements they carried [Kes]. The attack method used was not sophisticated, and thus Yahoo was eventually able to filter out the attack traffic from its legitimate traffic, but a significant amount of money was lost in the process. Even the FBI’s own Web site was taken out of service for three hours in February of 2000 by a DDoS attack [CNN].

2001

In January 2001, a reflection DDoS attack (described in Section 2.3.3) [Pax01] on futuresite.register.com [el] used false DNS requests sent to many DNS servers throughout the world to generate its traffic. The attacker sent many requests, forged to appear to come from the victim, for a particularly large DNS record to a large number of DNS servers. These servers obediently sent the unrequested information to the victim. The amount of traffic inbound to the victim’s IP address was reported to be 60 to 90 Mbps, with one site reporting approximately 220 DNS requests per minute per DNS server.

This attack lasted about a week, and could not be filtered easily at the victim network because simple methods of doing so would have disabled all DNS lookup for the victim’s customers. Because the attack was reflected off many DNS servers, it was hard to characterize which ones were producing only attack traffic that should be filtered out.

Arguably, the DNS servers responding to this query really shouldn’t have done so, since it would not ordinarily be their business to answer DNS queries on random records from sites not even in their domain. That they did so could be considered a bug in the way DNS functions, a misconfiguration of DNS servers, or perhaps both, but it certainly underscores the complexity of Internet protocols and protocol implementations and inherent design issues that can lead to DoS vulnerabilities.

The number and scope of DDoS attacks continued to increase, but most people (at least those who didn’t use IRC) were not aware of them. In 2001 David Moore, Geoffrey Voelker, and Stefan Savage published a paper titled “Inferring Internet Denial-of-Service Activity” [MVS01]. This work investigates Internet-wide DDoS activity and is described in detail in Appendix C. The technique for measuring DDoS activity underestimates the attack frequency, because it cannot detect all occurring attacks. But even with these limitations, the authors were able to detect approximately 4,000 attacks per week for the three-week period they analyzed. Similar techniques for attack detection and evaluation were already in use among network operators and network security analysts in 1999 and 2000. What many operators flagged as “RST scans” were known to insiders as “echoes” of DDoS attacks [DLD00].

Microsoft’s prominent position in the industry has made it a frequent target of attacks, including DDoS attacks. Some DDoS attacks on Microsoft have failed, in part because Microsoft heavily provisions its networks to deal with the immense load generated when they release an important new product or when an upgrade becomes available. But some of the attacks succeeded, often by cleverly finding some resource other than pure bandwidth for the attack to exhaust. For example, in January 2001 someone launched a DDoS attack on one of Microsoft’s routers, preventing it from routing normal traffic at the required speeds [Del]. This attack would have had relatively little overall effect on Microsoft’s Internet presence were it not for the fact that all of the DNS servers for Microsoft’s online properties were located behind the router under attack. While practically all of Microsoft’s network was up and available for use, many users could not get to it because their requests to translate Microsoft Web site names into IP addresses were dropped by the overloaded router. This attack was so successful that the fraction of Web requests that Microsoft was able to handle dropped to 2%.

2002

Perhaps inspired by this success, in October 2002 an attacker went a step further and tried to perform a DDoS attack on the complete set of the Internet’s DNS root servers. DNS is a critical service for many Internet users and many measures have been taken to make it robust and highly available. DNS data is replicated at 13 root servers, which are themselves well provisioned and maintained, and it is heavily cached in nonroot servers throughout the Internet. An attacker attempted to deny service to all 13 of these root servers using a very simple form of DDoS attack. At various points during the attack, 9 of the 13 root servers were unable to respond to DNS requests, and only 4 remained fully operational throughout the attack. The attack lasted only one hour, after which the agents stopped. Thanks to the robust design of the DNS and the attack’s short duration, there was no serious impact on the Internet as a whole. A longer, stronger attack, however, might have been extremely harmful.

2003

It wasn’t until 2003 that a major shift in attack motivations and methodologies began to show up. This shift coincided with several rapid and widespread worm events, which have now become intertwined with DDoS.

First, spammers began to use distributed networks in the same ways as DDoS attackers to set up distributed spam networks [KP]. As antispam sites tried to counter these “spambots,” the spammers’ hired guns retaliated by attacking several antispam sites [AJ]. Using standard DDoS tools, and even worms like W32/Sobig [JL], they waged constant attacks against those who they perceived were threatening their very lucrative business.

Second, other financial crimes began to involve the use of DDoS. In some cases, online retailers with gross sales on the order of tens of thousands of dollars a day (as opposed to larger sites like the ones attacked in February 2000) were also attacked, using a form of DDoS involving seemingly normal Web requests. These attacks were just large enough to bring the Web server to a crawl, yet not large enough to disrupt the networks of the upstream providers. As with the reflected DNS attacks described earlier, it was difficult (if not impossible) to filter out the malicious Web requests, as they appeared to be legitimate. Similar attacks were waged against online gambling sites and pornography sites. Again, in some cases extortion attempts were made for sums in the tens of thousands of dollars to stop the attacks (a new form of protection racketeering) [CN].

DDoS had finally become a component of widespread financial crimes and high-dollar e-commerce, and the trend is likely to only increase in the near future. DDoS continued to also be used as in prior years, including for political reasons.

During the Iraq War in 2003, a DDoS attack was launched on the Qatar-based Al-Jazeera news organization, which broadcast pictures of captured American soldiers. Al-Jazeera attempted to outprovision the attackers by purchasing more bandwidth, but the attackers merely ratcheted up the attack. The Web site was largely unreachable for two days, following which someone hijacked its DNS name, redirecting requests to another Web site that promoted the American cause.

In May 2003, then several more times in December, SCO’s Web site experienced DDoS attacks that took it offline for prolonged periods of time. Statements by SCO management indicated the belief that the attacks were in response to SCO’s legal battle over Linux source code and statements criticizing the Open Source community’s role in these lawsuits [Lem].

In mid-2003, Clickbank (an electronic banking service) and Spamcop (a company that filters e-mail to remove spam) were subjected to powerful DDoS attacks [Zor]. The attacks seemingly involved thousands of attack machines. After some days, the companies were able to install sophisticated filtering software that dropped the DDoS attack traffic before it reached a bottleneck point.

2004

Financially motivated attacks continue, along with speculation that worm attacks in 2004 were used to install Trojan software on hundreds of thousands of hosts, creating massive bot networks. Two popular programs—Agobot, and its successor Phatbot [LTIG]—have been implicated in distributed spam delivery and DDoS; in some cases networks of these bots are even being sold on the black market [Leya]. Phatbot is described in more detail in Chapter 4.

Agobot and Phatbot both share many of the features that were predicted in 2000 by Michal Zalewski [Zal] in his write-up on features of a “super-worm.” This paper was written in response to the hype surrounding the “I Love You” virus. Zalewski lists such features as portability (Phatbot runs on both Windows and Linux), polymorphism, self-update, anti-debugging, and usability (Phatbot comes with usage documentation and online help commands). Since Phatbot preys on previously infected hosts, other parts of Zalewski’s predictions are also possible (via programming how Phatbot executes) and thus Phatbot is one of the most advanced forms of automation seen to date in the category of DDoS (or blended threat, actually) tools.

Like all histories, this history of DDoS attacks does not represent a final state, but is merely the prelude to the future. In the next chapter, we will provide more details on exactly how today’s attacks are perpetrated, which will then set the stage for discussing what you must do to counter them. Remember, however, that the history we’ve just covered suggests, more than anything else, continued and rapid change for the future. Early analyses of DDoS attack tools like trinoo, Tribe Flood Network, Stacheldraht, and Shaft all made predictions about future development trends based on past history, but attackers proved more nimble in integrating new attack methods into existing tools than those predictions suggested. We should expect both the number and sophistication of attack tools to grow steadily, and perhaps more rapidly than anyone predicts. Therefore, the tools attackers will use in upcoming years and the methods used to defend against them will progress from the current states we describe in the upcoming chapters, requiring defenders to keep up to date on new trends and defense methods.

Early DDoS Attack Tool Time Line

There was a flurry of media attention paid to the potential for DoS attacks to be used around the time of Y2K Eve. These attacks were expected to mimic anticipated Y2K bug failures and potentially attract much public attention and invoke panic. This potential generated, rightly from a protective role, a lot of attention from within the government and organizations that support the government. Many stories covering the possibility of a DoS attack were published in the media. The media attention continued at a low level after Y2K, then picked up sharply after the first major DDoS attacks against e-commerce sites in February 2000. Many of the authors of then-published stories could not benefit from behind-the-scenes activity or information that was unpublished at the time. This created an impression that DDoS is a phenomenon that was largely neglected by incident responders. Later, some articles took very critical positions against federal government agencies, mostly due to the reporters not knowing all of the events leading up to February 2000. Those articles can be obtained following links at http://security.royans.net/info/articles/feb_attack.shtml and http://staff.washington.edu/dittrich/misc/ddos/.

The time line below follows a talk given at the Usenix Security Symposium in 2000 on DDoS attacks (see http://www.computerworld.com/news/2000/story/0,11280,48796,00.html and http://staff.washington.edu/dittrich/talks/sec2000/) and provides more information about early DoS/DDoS incidents.

• May/June, 1998. First primitive DDoS tools developed in the underground—small networks, only mildly worse than coordinated point-to-point DoS attacks. These tools did not see widespread use at the time, but classic point-to-point DoS attacks continued to cause problems, as well as increasing “Smurf” amplification attacks (see Section 2.3.3).

• July 22, 1999. The CERT Coordination Center released Incident Note 99-04 mentioning widespread intrusions on Solaris RPC services. (See http://www.cert.org/incident_notes/IN-99-04.html.)

• August 5, 1999. First evidence seen at sites around the Internet of programs being installed on Solaris systems in what appeared to be “mass” intrusions using massive autorooters and using multiple attack vectors. (This was a precursor to some of today’s worms and blended threats, such as Phatbot.) It was not clear at first how these incidents were related.

• August 17, 1999. Attack on the University of Minnesota reported to University of Washington (among many others) network operations and security teams. The University of Minnesota reported as many source networks as it could identify (but netflow records were overwhelming their storage capacity, so some records were dropped).

• September 2, 1999. Contents of a stolen account used to cache files were recovered. Over the next month, a detailed analysis of the files was performed. An initial draft analysis of trinoo was circulated privately at first.

• October 21, 1999. Final drafts of trinoo and TFN analyses were finished. (See http://packetstormsecurity.nl/distributed/trinoo.analysis and http://packetstormsecurity.nl/distributed/tfn.analysis.)

• November 2–4, 1999. The Distributed Systems Intruder Tools (DSIT) workshop, organized by the CERT Coordination Center, was held near the Carnegie Mellon University campus in Pittsburgh. It was agreed by attendees that it was important not to panic people, but instead provide meaningful steps to deal with this new threat. All attendees agreed to keep information about DDoS programs private until the attendees finished a report on how to respond, and not to release other information provided to them without permission of the sources.

• November 18, 1999. The CERT Coordination Center released Incident Note 99-07 “Distributed Denial of Service Tools,” mentioning DDoS tools. (See http://www.cert.org/incident_notes/IN-99-07.html.)

• November 29, 1999. SANS NewsBytes, Vol. 1, Num. 35, mentioned trinoo/TFN in the context of widespread Solaris intrusion reports it was receiving that were consistent with the CERT Coordination Center IN-99-07 and involved ICMP Echo Reply packets.

• Late November/Early December, 1999. Evidence of another DDoS agent (Shaft) was found in Europe. (An analysis by Sven Dietrich, Neil Long, and David Dittrich wasn’t published until March 13, 2000. See http://www.adelphi.edu/~spock/shaft_analysis.txt.)

• December 4, 1999. Massive attack using Shaft was detected. (Data acquired during this attack was analyzed in early 2000 and presented by Sven Dietrich in a paper at the USENIX LISA 2000 conference. See http://www.adelphi.edu/~spock/lisa2000-shaft.pdf.)

• December 7, 1999. ISS released an advisory on trinoo/TFN after the first nontechnical mention of DDoS tools in a USA Today article. The CERT Coordination Center released the final report of the DSIT workshop. David Dittrich sent his analyses of trinoo and TFN to the BUGTRAQ e-mail list. (See http://www.usatoday.com/life/cyber/tech/review/crg681.htm, http://www.cert.org/reports/dsit_workshop-final.html, http://packetstormsecurity.nl/distributed/trinoo.analysis, and http://packetstormsecurity.nl/distributed/tfn.analysis.)

• Remainder of December 1999 and into January 2000. Many conference calls were held within a group of government, academic, and private industry participants who worked cooperatively and constructively in helping develop responses to the DDoS threat. Fears of Y2K-related attacks helped drive the urgency. (See http://news.com.com/2100-1001-234678.html and http://staff.washington.edu/dittrich/misc/corrections/cnet.html.)

• December 8, 1999. According to a USA Today article, NIPC sent a note briefing FBI director Louis Freeh on DDoS tools.

(See http://www.usatoday.com/life/cyber/tech/cth523.htm.)

• December 17, 1999. According to a USA Today article, NIPC director Michael Vatis briefed Attorney General Janet Reno as part of an overview of preparations being made for Y2K.

(See http://www.usatoday.com/life/cyber/tech/cth523.htm.)

• December 27, 1999. As final work on the analysis of Stacheldraht was being performed by David Dittrich, with help from Sven Dietrich, Neil Long, and others, Dittrich scanned the University of Washington network with gag (the scanner included in the Stacheldraht analysis). Three active agents were found and traced to a handler in the southern United States. The ISP and its upstream provider were able to use the Stacheldraht analysis to identify over 100 agents in this network and take it down.

• December 28, 1999. The CERT Coordination Center released Advisory CA-1999-17 “Denial-of-Service Tools” (covering TFN2K and a MacOS 9 DoS exploit).

(See http://www.cert.org/advisories/CA-1999-17.html.)

• December 30, 1999. Analysis of Stacheldraht posted to the BUGTRAQ e-mail list. NIPC issued a press release on DDoS programs and released “Distributed Denial of Service Attack Information (trinoo/Tribe Flood Network),” including a tool for scanning local file systems/memory for DDoS programs. (See http://packetstormsecurity.nl/distributed/stacheldraht.analysis, http://www.fbi.gov/pressrm/pressrel/pressrel99/prtrinoo.htm, and http://www.fbi.gov/nipc/trinoo.htm.)

• January 3, 2000. The CERT Coordination Center and FedCIRC jointly published Advisory CA-2000-01 “Denial-of-Service Developments,” discussing Stacheldraht and the NIPC scanning tool. (See http://www.cert.org/advisories/CA-2000-01.html.)

• January 4, 2000. SANS asked its membership to use published DDoS detection tools to determine how widely DDoS attack tools were being used. Reports of successful searches started coming in within hours. Several DDoS networks were discovered and taken down.

• January 5, 2000. Sun released bulletin #00193, “Distributed Denial-of-Service Tools.”

(See http://packetstorm.securify.com/advisories/sms/sms.193.ddos.)

• January 14, 2000. Attack on OZ.net in Seattle affected Semaphore and UUNET customers (affecting as much as 70% of Puget Sound Internet users, and possibly other sites in the United States—no national press attention until January 18). This attack targeted the network infrastructure, as well as end servers.

• January 17, 2000. ICSA.net organized a Birds of a Feather (BoF) session on DDoS attacks at the RSA 2000 conference in San Jose.

• February 7, 2000. A talk was given by Steve Bellovin on DDoS attacks, and another ICSA.net DDoS BoF was organized at a NANOG [Ope] meeting in San Jose. Coincidentally (although not necessarily related), the first attacks on e-commerce sites began.

• February 10, 2000. Jason Barlow and Woody Thrower of the AXENT Security Team published an analysis of TFN2K.

• February 8–12, 2000. Attacks on e-commerce sites continued. Popular media began to widely publish reports on these DDoS attacks. To this day, most stories incorrectly refer to this as the beginning of DDoS.

While the government was criticized heavily in some early media reports, the following points are considered important in understanding these stories.

• Technical details of the developing DDoS tools did not start circulating, even privately, until October 1999.

• The CERT Coordination Center released IN-99-07 mentioning DDoS tools on November 18, 1999, and published CA-1999-17 on December 28, 1999. Any sites paying attention to the CERT Coordination Center Incident Notes and Advisories learned of trinoo, TFN, and TFN2K in November and December 1999.

• Anyone reading BUGTRAQ learned of trinoo and TFN on December 7, 1999, and Stacheldraht on December 30, 1999.

• NIPC’s advisory and tool came out just after the technical analyses were published, but because all three commonly used DDoS tools were discussed publicly by late December 1999, it seems overly critical to say the government “failed to warn” e-commerce sites before February 7, 2000. The e-commerce sites could have learned about the possible attacks from the CERT Coordination Center Incident Note, the DSIT Workshop Report, SANS publications, and postings to BUGTRAQ in November and December 1999.

• Several news stories mentioned all of these information sources in December 1999 through February 2000.

• Various agencies distributed the analyses of DDoS tools as widely as necessary, so the problem was known and being handled privately long before any media stories were published.