C H A P T E R 9

![]()

Working with Data

In this chapter, we are going to look at what has changed in terms of working with data in .NET 4.5 and Visual Studio 2012. Most of the action is around Entity Framework, but there have also been some changes made to the SqlClient data provider around streaming and asynchronous programming. Also with this release we see the introduction of LocalDb.

LocalDb

With Visual Studio 2012 (and SQL Server 2012), there is a new version of SQL Server Express call SQL Server Express LocalDb, or LocalDb for short. LocalDb has been created specifically for developers providing the same T-SQL, programming surface, and client-side providers as SQL Server Express but without having to install and manage a full instance of SQL Server Express or SQL Server.

Creating a Database with LocalDb

From within Visual Studio 2012, there are a few different ways that a LocalDb database can be created depending on the job at hand. The thing to be aware of is that the location of the resulting .mdf file differs depending on which method you use.

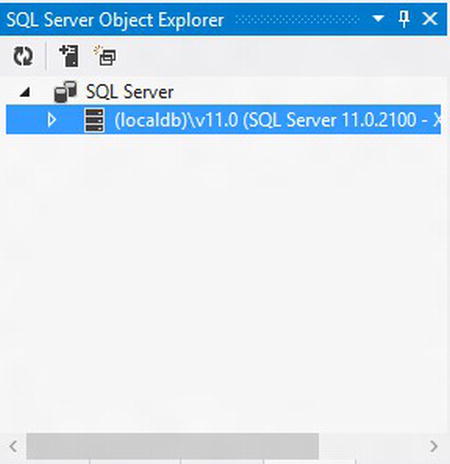

From the SQL Server Object Explorer

Within Visual Studio, open the new SQL Server Object Explorer (Ctrl +,Ctrl +s) and click on the Add SQL Server icon. When the Connect to Server dialog box displays, enter (localdb)v11.0 as the server name and click Connect. Right-click on the localdb icon (see Figure 9-1) and select New Query.

Figure 9-1. SQL Server Object Explorer

The simplest command to create a database is the following:

Create database TestDb1

This will create a new .mdf file which will be located in c:Usersuser-name (type %userprofile% in the File Explorer address bar to get there). This is probably not the best place to store your database files, so the Microsoft recommendation when creating database files is to specify the location. Enter the following and click the Execute button:

Create database TestDb1 on (name='TestDb1', filename='c:DbFilesTestDb1.mdf')

While you are in the SQL Server Object Explorer, you can also create a new database by right-clicking on the Databases folder just below the localdb icon and select “Add New Database”. A new database gets created but this time, if you go looking for it, the .mdf and .ldf files will be located in the folder C:Usersuser-nameAppDataLocalMicrosoftMicrosoft SQL Server Local DBInstancesv11.0.

Creating a New Database Project

With the release of SQL Server 2012, a new set of database developer tools for Visual Studio was released, and these tools have been integrated into Visual Studio 2012. These tools make use of LocalDb to allow developers to do database development offline without having to have a full version of SQL Server installed.

When you create a new SQL Server database project, as part of the project, two things happen in relation to LocalDb:

- First, a new instance folder is created under

C:Usersuser-nameAppDataLocalMicrosoftMicrosoft SQL Server Local DBthat will be named after the solution in which you have created the project. This folder will contain the system databases, logs, and so forth. - Second, within the project a folder named

Sandboxwill be created (select Show All Files to see it), which will contain the .mdf file that you will work against and which takes the name of the project.

If you want to access this database from another project, the data source for the connection string will be (localdb)<solution name>.

Adding a Database to an Application

If you are using a file-based database as part of your application, you can add a new database by selecting Add ![]() New Item, and then from the Data node select Service-based Database. This will create a LocalDb .mdf file within the project folder and add the corresponding connection string to the

New Item, and then from the Data node select Service-based Database. This will create a LocalDb .mdf file within the project folder and add the corresponding connection string to the app.config file.

SqlClient Data Provider

With .Net 4.5 some new features, both small and large, have been added to SqlClient. Some of these are in line with the release of SQL Server 2012 or are to catch-up with SQL Server 2008, especially around support for sparse columns. Probably the two new additions that will have the most impact are the implementation of the Task Parallel Library (TPL) to provide a more robust model for handling data asynchronously, and the inclusion of streaming support for unstructured data.

In this next section, we will take a brief look at this two new features as well as the new SqlCredential class and finish off with a short summary of some of the other changes that are part of this release.

Asynchronous Programming

Previously, asynchronous programming with the SqlClient data provider was achieved by adding Asynchronous Processing = true to the connection string and using SqlCommand.BeginExecuteNonQuery() or SqlCommand.BeginExecuteReader(). These methods still exist in .NET 4.5 (though you no longer need the asynchronous command in the connection string), but like elsewhere in the framework, the TPL has been implemented in SqlConnection, SqlCommand, SqlDataReader, and SqlBulkCopier (as well as their underlying abstract classes) to provide asynchronous versions of existing synchronous methods.

The new methods follow the standard pattern of appending Async to the method name so, for example, SqlConnection.Open() now has as a corresponding asynchronous method SqlConnection.OpenAsync(). A full list of all the new methods can be found at http://msdn.microsoft.com/en-us/library/hh211418(v=vs.110).aspx.

The following contrived example demonstrates some of the async methods that are available. If you want to try the code, modify the connection string to point to the database of your choice and modify the select statement accordingly.

class Program

{

public static void Main()

{

string connString = "Data Source=(local); Initial Catalog=DummyDb; Integrated

Security=SSPI";

var cts = new CancellationTokenSource();

using (var conn = new SqlConnection(connString))

{

var command = new SqlCommand("WAITFOR DELAY '00:00:05';select FirstName from

FakePeople", conn);

ExecuteNonQueryAsync(conn, command)

.ContinueWith(t =>

{

Console.WriteLine("Start reader");

command.ExecuteReaderAsync(cts.Token)

.ContinueWith(async t2 =>

{

if (t2.Status == TaskStatus.Canceled)

Console.WriteLine("Read was cancelled");

while (await t2.Result.ReadAsync())

Console.WriteLine(t2.Result[0]);

});

Console.WriteLine("Do something else while getting the results");

});

Console.WriteLine("Waiting...");

Console.ReadKey();

}

}

public static async Task<int> ExecuteNonQueryAsync(SqlConnection conn, SqlCommand cmd)

{

await conn.OpenAsync();

Console.WriteLine("Connection open");

await cmd.ExecuteNonQueryAsync();

Console.WriteLine("Query completed");

return 1;

}

}

Streaming

We have clearly reached a point where databases store more than just dates, numbers, and strings. Documents, images, media files, and the like are part of the wonderful world of data that our applications need to retrieve and store. In recognition of this, streaming support to and from SQL Server has been added to support scenarios involving unstructured data that is stored on the server. This addition should simplify writing applications that stream data by not having to fully load the data into memory, and it will also improve the scalability of the middle-tier applications especially in cases that require interaction with SQL Azure.

To facilitate streaming from SQL Server, new methods have been added to the DbDataReader and implemented in SqlDataReader. These methods include the following:

For streaming to SQL Server, the SqlParameter class has been modified so that it can accept and react to Stream, XmlReader, and TextReader objects depending on the SqlDbType you are targeting.

Combined with the new asynchronous methods in SqlClient, it will become easier to manage moving unstructured data between applications and SQL Server. If you are interested in exploring this further, some simple but reasonably comprehensive examples can be found at http://msdn.microsoft.com/en-us/library/hh556234(v=vs.110).aspx.

SqlCredential

Even though we all know integrated security is always the preferred option when connecting to a database, there are cases (SQL Azure springs to mind) when you need to use a username and password. In these cases, we needed to keep the username and password with the connection string, which left them vulnerable to being exposed in a crash dump or a page file in a page swap. With .NET4.5, this problem can now be mitigated with the use of the SqlCredential class.

The SqlCredential class exposes just two properties, UserId and Password, the latter of which is of the type SecureString. The SqlConnection has, in turn, been modified and now has a Credential property of the type SqlCredential as well as a modified constructor that takes an instance of SqlCredential as a parameter. Here is an example of how it can be used:

private void LoginButton_Click(object sender, RoutedEventArgs e)

{

var connString = "Data Source=(local);Initial Catalog=DummyDb";

var password = PasswordText.SecurePassword;

password.MakeReadOnly();

var sqlCredential = new SqlCredential(UsernameText.Text,password);

string message = "Successfully logged in";

try

{

using (var connection = new SqlConnection(connString,sqlCredential))

{

connection.Open();

}

}

catch (Exception ex)

{

message = "failed to log in";

}

MessageBox.Show(message);

}

The key thing to note is that the SqlCredential constructor will only allow you to pass an instance of SecureString that has been marked as read only.

A blog post from the ADO.NET team that covers this in a bit more detail can be found at http://blogs.msdn.com/b/adonet/archive/2012/03/09/safer-passwords-with-sqlcredential.aspx.

And the Other Stuff

In order to round off this section on the SqlClient, here is quick list of some of the other changes and features you will find with this release of the framework:

- Support for high availability, disaster recovery is available. Always On Availability Groups is a feature that was introduced with SQL Server 2012. If you are using SQL Server 2012 and have this feature enabled with SqlClient, on the connection property you can now specify the availability group listener for an availability group or a SQL Server 2012 Failover Cluster Instance. For more information, see

http://msdn.microsoft.com/en-us/library/hh205662(v=vs.110).aspx. - Connection failures will now be logged in the extended events log.

- SqlClient now supports extended protection.

- Sparse columns, a feature that was added in SQL Server 2008, are now supported in SqlClient. In addition, if you use the method

SqlDataReader.GetSchemaTable(), you can check if a column is a sparse column or not by checking itsIsColumnSetproperty. - When

TrustServerCertificateis false andEncryptis true, the connection attempt will fail unless the server name or IP address specified in the SQL Server’s SSL certificate exactly matches the one used in the connection string.

Entity Framework

Writing about what is new in the Entity Framework (EF) in .NET 4.5 is not as simple as it looks. The main reason is that the EF team is gradually working toward removing the Entity Framework from the .NET Framework so that they can provide a more responsive release cycle. The consequence of this is that even though there have been some new EF features added to .NET 4.5, other features such as code-first and DbContext (which were released in EF 4.1) have not been rolled into it and are still only available via the EF NuGet package.

The simplest way to install EF 5.0 NuGet package, which requires .NET 4.5, is to open the Package Manager Console and enter the following command:

Install-Package EntityFramework

This will install the latest version of the Entity Framework.

Support for Enums

One of the criticisms that have been leveled at the Entity Framework has been its lack of support for enums. With the release of .NET4.5, enums are now supported and can be implemented using either Code, Model, or Database First workflows. In the following example, we will use code-first to create our entities.

- First, create a new console application project, ensuring it targets the .NET 4.5 Framework, and then add the EF 5.0 NuGet package.

- Next, add a new class and call it

Employee. Open the resultingEmployee.csfile and add the following code:public class Employee

{

public int Id { get; set; }

public string FirstName { get; set; }

public string LastName { get; set; }

public Positions Position { get; set; }

}

public enum Positions

{

CEO,

CTO,

SeniorDeveloper,

Developer,

Tester

}

public class EmployeesContext: DbContext

{

public DbSet<Employee> Employees { get; set; }

} - Next, open up the

Program.csfile and modify theMain()method using the following code as a guide:

static void Main(string[] args)

{

using (var context = new EmployeesContext())

{

context.Employees.Add(new Employee

{

Position = Positions.CEO,

FirstName = "James",

LastName = "Brown"

});

context.Employees.Add(new Employee

{

Position = Positions.CTO,

FirstName = "Sly",

LastName = "Stone"

});

context.SaveChanges();

var ceo = context.Employees.Where(e => e.Position == Positions.CEO)

.FirstOrDefault();

if (ceo != null)

Console.WriteLine("The CEO is {0} {1}", ceo.FirstName, ceo.LastName);

else

Console.WriteLine("No CEO was found");

Console.ReadKey();

}

}

As you can see, it is a fairly painless process using enums with the Entity Framework both in terms of using them when persisting data and in LINQ queries. Setting them up in Model First or Database First requires a few more steps but again provides exactly the same functionality. If you are interested in a Model First example take a look at this blog post: http://msdn.microsoft.com/en-us/library/hh770088(v=vs.103).aspx.

![]() Note If this is your first look at the code-first workflow, you may be wondering where the database is. When the NuGet package is installed, it checks which database server is installed and then uses a configuration setting to set the default database server for creating databases from the code-first workflow. If SqlExpress is running, it will be used. Otherwise, LocalDb will be used. If you are using LocalDb with the previous example, you can find the database by opening the SQL Server Object Explorer, click on the add new server icon, in the Connect to Server dialog box enter (localdb)v11.0, and click connect. In the SQL Server Object Explorer expand the Databases node below the LocalDb node and you should see the database that was created.

Note If this is your first look at the code-first workflow, you may be wondering where the database is. When the NuGet package is installed, it checks which database server is installed and then uses a configuration setting to set the default database server for creating databases from the code-first workflow. If SqlExpress is running, it will be used. Otherwise, LocalDb will be used. If you are using LocalDb with the previous example, you can find the database by opening the SQL Server Object Explorer, click on the add new server icon, in the Connect to Server dialog box enter (localdb)v11.0, and click connect. In the SQL Server Object Explorer expand the Databases node below the LocalDb node and you should see the database that was created.

Support for Multi-Result Stored Procedures

In the past, with Entity Framework you could call a stored procedure that had multiple result sets, but it would only return the first result set. This has been changed in EF 5, and it is now possible to access all the result sets returned by a stored procedure. There is one catch though. This feature, though supported by EF’s plumbing, is not exposed as an option by the modeling tools so that the only way to actually implement it is by manually editing the EDMX.

To show what is involved, we will walk through a simple implementation. You can follow along working against you own database or, if you want, you can download the database project for the sample database used here by going to http://tinyurl.com/7grkrys and downloading the file AirTavelDb.zip or the completed solution containing the code for this and the next two sections: entityFrameworkExamples.zip.

![]() Note The following setup for this example is basically the same for the next two sections so we will go into more detail here.

Note The following setup for this example is basically the same for the next two sections so we will go into more detail here.

- You need to set up a few things to begin. First, create a new console project and call it

MultiResultStoredProcExample. - Next, open the Package Manager Console window and install the latest Entity Framework package:

Install-Package EntityFramework - Now add a new entity data model and name it

AirTraveland click the button labeled Add. - On the Choose Model Contents dialog box, select Generate from database and click Next and then select the connection for the

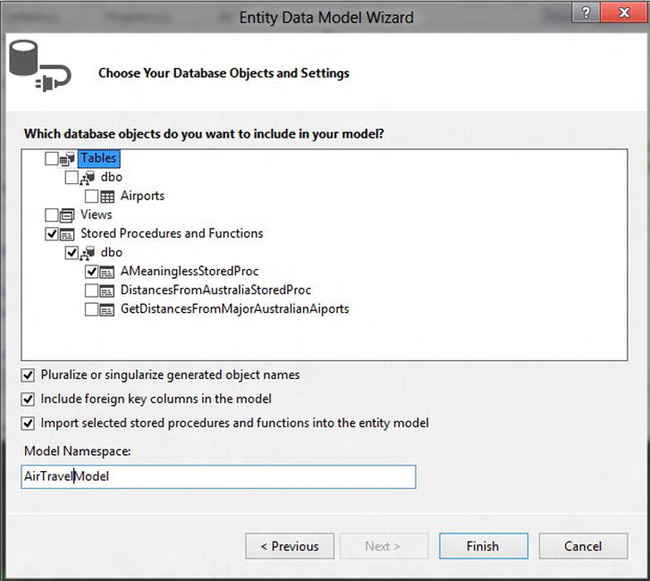

AirTravelDb. If you are using LocalDb for the database you will probably need to add a new connection. To do this, click on the New Connection button and in the Connection Properties enter (localDb)<YourSolutionNameHere>. Click Refresh and then select the database name. - In the next screen (Figure 9-2), where you select the database objects you want, drill down into the stored procedures node and select the stored procedure

AMeaninglessStoredProc, change the model namespace toAirTravelModel, and click the Finish button.

Figure 9-2. Selecting the stored procedure

At this point, you should have an empty designer, but since you didn’t select any tables, that’s okay.

- To be able to work with the DbContext API, you need to get the DbContext Generator template. Right-click on the designer and select “Add Code Generation Item…” from the context menu.

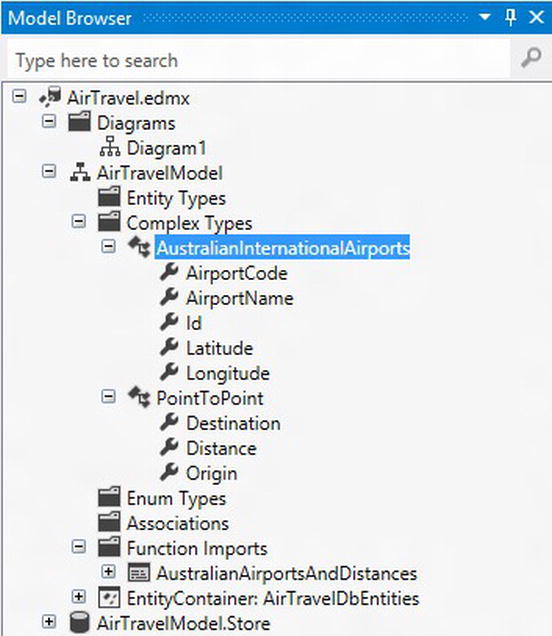

- Now right-click on the designer and select Model Browser from the context menu. In the Model Browser window opening the Complex Types folder, you should find a class called

AMeaninglessStoredProc_Result. This complex type was generated off the data returned from the first result set in your stored procedure. To better represent the data being returned, rename itAustralianInternationalAirports. - Next, open the Function Imports folder. You want to rename the function import

AMeaninglessStoredProcso right-click on it and select Edit. In the dialog box that displays, rename the function toAustralianAirportsAndDistances. - Finally, since a complex type wasn’t generated for your second result set, you need to create one. Right-click on the Complex Types folder and select Add New Complex Type. Rename it

PointToPointand then add the following scalar properties:- Origin: string

- Destination: string

- Distance: double

Once that is done, your complex types should be similar to Figure 9-3.

Figure 9-3. Model Browser after editing

Now, at this point, if you called the function

AustralianAirportsAndDistances, you would only get one result set back. So that you can return both result sets, you will now need to edit the EDMX: - Right-click on

AirTravel.edmx, select Open With, and then select XML (Text) Editor. - Next, you need to edit the function import in the CSDL content. Look for the following XML:

<FunctionImport Name="AustralianAirportsAndDistances"

ReturnType="Collection(AirTravelModel.AustralianInternationalAirports)">

<Parameter Name="AirportCode" Mode="In" Type="String" />

</FunctionImport> - Now you need to modify this so that the function will return both result sets. Update this section to the following:

<FunctionImport Name="AustralianAirportsAndDistances" >

<ReturnType Type="Collection(AirTravelModel.AustralianInternationalAirports)"/>

<ReturnType Type="Collection(AirTravelModel.PointToPoint)"/>

<Parameter Name="AirportCode" Mode="In" Type="String" />

</FunctionImport> - Next, you need to add a new result mapping for your

PointToPointcomplex type to the function import mapping. Scroll down to the C-S mapping content section and update theFunctionImportMappingso it matches this:

<FunctionImportMapping FunctionImportName="AustralianAirportsAndDistances"

FunctionName="AirTravelModel.Store.AMeaninglessStoredProc">

<ResultMapping>

<ComplexTypeMapping TypeName="AirTravelModel.AustralianInternationalAirports">

<ScalarProperty Name="Id" ColumnName="Id" />

<ScalarProperty Name="AirportCode" ColumnName="AirportCode" />

<ScalarProperty Name="AirportName" ColumnName="AirportName" />

<ScalarProperty Name="Latitude" ColumnName="Latitude" />

<ScalarProperty Name="Longitude" ColumnName="Longitude" />

</ComplexTypeMapping>

</ResultMapping>

<ResultMapping>

<ComplexTypeMapping TypeName="AirTravelModel.PointToPoint" >

<ScalarProperty Name="Origin" ColumnName="Origin"/>

<ScalarProperty Name="Destination" ColumnName="Destination" />

<ScalarProperty Name="Distance" ColumnName="Distance" />

</ComplexTypeMapping>

</ResultMapping>

</FunctionImportMapping>

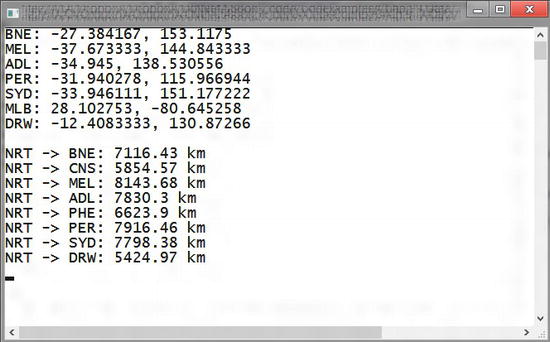

When that is all done, you can now retrieve both result sets. The following code will give you an idea of how it is done (see Figure 9-4 for the output):

static void Main(string[] args)

{

string distinationAirportCode = "NRT"; //Narita airport;

using (var context = new AirTravelDbEntities())

{

var result = context.AustralianAirportsAndDistances(distinationAirportCode);

foreach (var ap in result)

{

Console.WriteLine("{0}: {1}, {2}", ap.AirportCode, ap.Latitude, ap.Longitude);

}

Console.WriteLine();

//to get the second result we need to call GetNextResult()

var result2 = result.GetNextResult<PointToPoint>();

foreach (var ptp in result2)

{

Console.WriteLine("{0} -> {1}: {2} km", ptp.Origin, ptp.Destination, ptp.Distance);

}

}

Console.ReadKey();

}

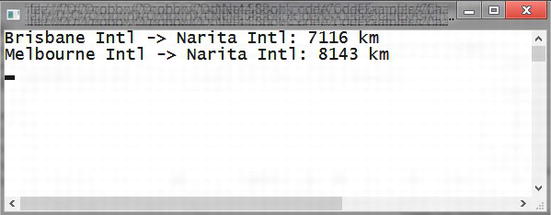

Figure 9-4. Output from both result sets

At the time of writing, it is clear that this feature is not as easy to use as should be, but it can be done. If you are thinking of using of it, plan ahead before implementing it.

Working with Spatial Data Types

Spatial data types, which were introduced with SQL Server 2008, are now supported in the Entity Framework with the classes DbGeography and DbGeometry in the namespace System.Data.Spatial. This means you can store and query against the spatial data types Geometry and Geography, whether you take a code-first, model-first, or database-first approach to constructing your entities.

The real power that comes with the inclusion of these classes is that using them with LINQ provides an easy way to perform complex spatial calculations against the database since all of the properties and methods associated with the SQL spatial data types are exposed through the corresponding EF classes.

To give you a small taste of what this means, in the following excerpt we want to find all of the airports within 15 kilometers from a given location. (Note: If you are following on from the previous example you will need to add the Airports table as an entity to the model. Otherwise, this example is included in the completed solution mentioned earlier.)

static void Main(string[] args)

{

var newYorkLocation = DbGeography.FromText("Point( -74.006605 40.714623)");

var searchDistanceInMeters = 15000;

using (var context = new AirTravelDbEntities())

{

var airports = from a in context.Airports

where a.Location.Distance(newYorkLocation) < searchDistanceInMeters

select new { airportName = a.AirportName,

Code = a.AirportCode,

Distance = Math.Round(a.Location.Distance(newYorkLocation).Value/1000,2) };

Console.WriteLine("Aiports within {0} kilometres of New York:", searchDistanceInMeters / 1000);

foreach (var airport in airports)

{

Console.WriteLine("{0} ({1}) {2} km", airport.airportName, airport.Code,airport.Distance);

}

Console.ReadKey();

}

}

The SQL generated by the LINQ query looks like this:

SELECT

1 AS [C1],

[Extent1].[AirportName] AS [AirportName],

[Extent1].[AirportCode] AS [AirportCode],

ROUND(([Extent1].[Location].STDistance(@p__linq__2)) / cast(1000 as float(53)),

2) AS [C2]

FROM [dbo].[Airports] AS [Extent1]

WHERE ([Extent1].[Location].STDistance(@p__linq__0)) < @p__linq__1

As you can in the SQL statement that is generated by Entity Framework the method Distance() on the geography object Location is translated to the STDistance comparative method in SQL.

Table-Value Function Support

Though this will only work with a database-first workflow, you can now add table-value functions (TVFs) to your entity data model. When you import a table-value function, Entity Framework, as it does for stored procedures, will create an import function and a complex type that will map to the schema of the table returned. Where TVFs have the advantage over stored procedures is that the import function created for the TVF is composable, which means that you can use LINQ and Entity SQL against them.

For the following example, we will use the AirTravel database we used in the previous two sections.

- If you are creating a new solution, import the

AirTravelDbproject. - Add a new console window project.

- Add a new ADO.NET Entity Data Model and select Generate from Database.

- When you are prompted to select database objects, drill down into the Stored Procedures and Functions node and select

GetDistancesFromMajorAirports. - From the Data Model Designer page, open the Model Browser.

- Open the Complex Types folder and rename the class

GetDistancesFromMajorAustralianAiports_ResulttoDistancesFromAustralianAirports(you don’t have to do this, it’s just a little neater). - Return to the Solution Explorer and open the

Program.csfile and add the following code to theMain()method:

using (var context = new AirTravelDbEntities())

{

var result = context.GetDistancesFromMajorAustralianAiports("NRT")

.Where(a => a.StartPointAirportCode == "BNE" || a.StartPointAirportCode == "MEL");

foreach (var item in result)

{

Console.WriteLine("{0} -> {1}: {2} km", item.StartPoint, item.EndPoint, item.Distance);

}

Console.ReadKey();

If you now run application, you should get the output in Figure 9-6.

Figure 9-6. Output from table function example

As you can see, it is fairly easy to implement a table-value function in Entity Framework that allows you to use it directly in a LINQ query—definitely an advantage over using stored procedures.

Performance

Using any ORM will introduce overhead to data access. With this in mind, the ADO.NET team has made improving the performance of Entity Framework one of their goals with this release (see the ADO.NET team blog at http://blogs.msdn.com/b/adonet/archive/2012/02/14/sneak-preview-entity-framework-5-0-performance-improvements.aspx). One of the ways the team has improved performance is by introducing automatic compilation of LINQ to Entities queries.

Up until this version, when you created a LINQ to Entity the Entity Framework would walk the expression tree generated by the compiler to translate it into SQL. This process involved some overhead, especially for complex queries, and would be repeated every time the query was called. You could reduce this overhead by using the CompiledQuery class, which would allow you to compile the query once, returning you a delegate that pointed to the compiled version in the Entity Framework cache. The problem with this is that you had to know about it and you needed to explicitly implement it.

With the introduction of the auto-compiled LINQ queries feature, every LINQ query you execute gets automatically compiled and placed in the query cache. Therefore, every time you run the query, it will be retrieved from the cache negating the need to go through the compilation process again.

Entity Model Designer

Not a lot has changed with the designer, but in VS2012 you find will the following new features:

- Multiple diagrams per model. Especially for complex models, this feature will be useful since it allows you to visualize subsections of the overall model.

- Assign colors to shapes. Shapes in the designer can now be assigned different colors, which again is useful when dealing with complex models. To change the color of a shape, select it and then in the properties window under the Diagram node, you will see the property Fill Color where you can change the color from either the dropdown palette or by directly entering the RGB values for the color you want.

- Batch import of stored procedures. In VS 2010, you could load multiple stored procedures when creating your model, but then for each stored procedure you had to explicitly create a function import and complex type for each one. Not a problem if you only have a few to contend with, but increase that number by any reasonable amount, and you have a lot more work on your hands. With VS2012, the Entity Framework will now not only import the stored procedures (and functions) in one hit, but it will also create corresponding function imports and associated complex types.

Conclusion

It is very few developers who do not interact in some way or form with data and for a lot of us this usually means working with SQL Server, which is what everything in this chapter essentially leads back to. The question is whether these new features that we have covered in this chapter help or hinder us.

When developing applications, the question of what comes first—the database or the application—has tended, whichever position you took, to cause friction. The addition of LocalDb (and the associated SQL Server Data Tools that have been included in VS 2012) has the potential to change the way we think about this by providing a more iterative and flexible approach to both application and database development. Another area where LocalDb will be an interesting option is integration and functional testing. Coupled with the inclusion of a database project, spinning up and initializing data independent of a SQL Server instance may reduce some of the frustration that testing against a shared SQL Server instance can cause.

The changes to the SqlClient Data Provider, with the introduction of asynchronous and streaming support, will ensure that data access does not become the thorn in the side of developing responsive applications.

Finally, there is the Entity Framework. Having gotten off to a rocky start when it was first introduced, it is clear that the ADO.NET team is putting in the work to make it a viable option in the ORM space.