Chapter 9

Digital communication

9.1 Introduction

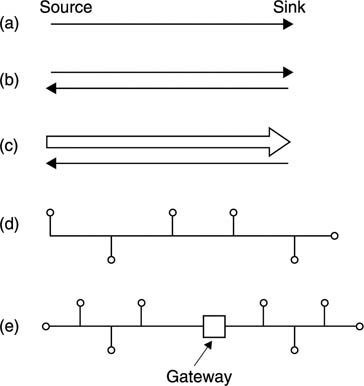

Digital communication includes any system which can deliver data over distance. Figure 9.1 shows some of the ways in which the subject can be classified. The simplest is a unidirectional point-to-point signal path shown at (a). This is common in digital production equipment and includes the AES/EBU digital audio interface and the serial digital interface (SDI) for digital video. Bidirectional point-to-point signals include the RS-232 and RS-422 duplex systems. Bidirectional signal paths may be symmetrical, i.e. have the same capacity in both directions (b), or asymmetrical, having more capacity in one direction than the other (c). In this case the low-capacity direction may be known as a back channel.

Figure 9.1 Some ways of classifying communications systems. At (a) the unidirectional point-to-point connection used in many digital audio and video interconnects. (b) Symmetrical bidirectional point-to-point system. (c) Asymmetrical point-to-point system. (d) A network must have some switching or addressing ability in addition to delivering data. (e) Networks can be connected by gateways.

Back channels are useful in a number of applications. Video-on-demand and interactive video are both systems in which the inputs from the viewer are relatively small, but result in extensive data delivery to the viewer. Archives and databases have similar characteristics.

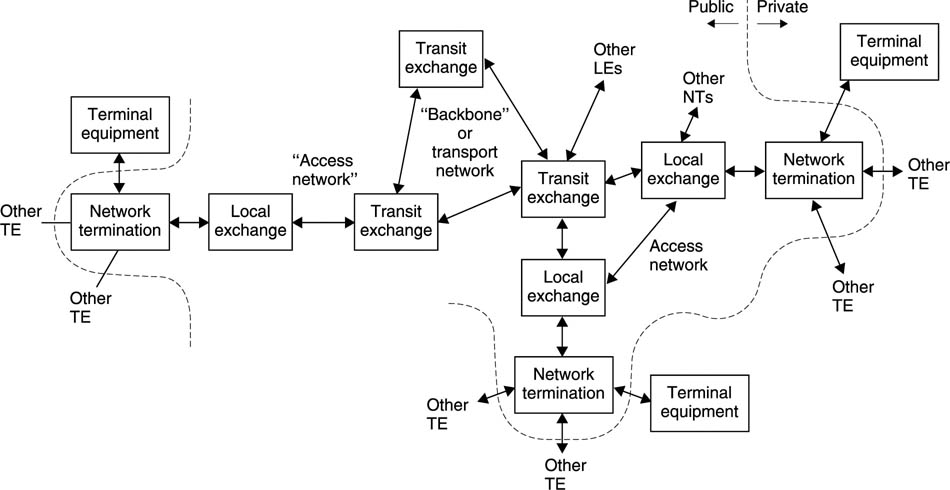

When more than two devices can be interconnected in such a way that any one can communicate at will with any other, the result is a network as in Figure 9.1(d). The traditional telephone system is a network, and although the original infrastructure assumed analog speech transmission, subsequent developments in modems have allowed data transmission.

The computer industry has developed its own network technology, a long-serving example being Ethernet. Computer networks can work over various distances, giving rise to LANs (local area networks), MANs (metropolitan area networks) and WANs (wide area networks). Such networks can be connected together to form internetworks or internets for short, including the Internet. A private network, linking all employees of a given company, for example, may be referred to as an intranet.

Figure 9.1(e) shows that networks are connected together by gateways. In this example a private network (typically a local area network (LAN) within an office block) is interfaced to an access network (typically a metropolitan area network (MAN) with a radius of the order of a few kilometres) which in turn connects to the transport network. The access networks and the transport network together form a public network.

The different requirements of networks of different sizes have led to different protocols being developed. Where a gateway exists between two such networks, the gateway will often be required to perform protocol conversion. Protocol conversion represents unnecessary cost and delay, and recent protocols such as ATM are sufficiently flexible that they can be adopted in any type of network to avoid conversion.

Networks also exist which are optimized for storage devices. These range from the standard buses linking hard drives with their controllers to SANs (storage area networks) in which distributed storage devices behave as one large store.

Communication must also include broadcasting, which initially was analog, but has also adopted digital techniques so that transmitters effectively radiate data. Traditional analog broadcasting was unidirectional, but with the advent of digital techniques, various means for providing a back channel have been developed.

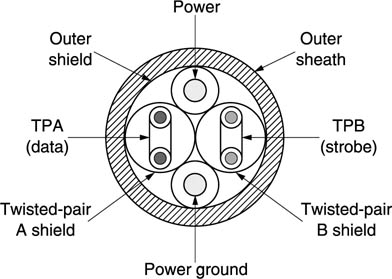

To have an understanding of communications it is important to appreciate the concept of layers shown in Figure 9.2(a). The lowest layer is the physical medium dependent layer. In the case of a cabled interface, this layer would specify the dimensions of the plugs and sockets so that a connection could be made, and the use of a particular type of conductor such as co-axial, STP (screened twisted pair) or UTP (unscreened twisted pair). The impedance of the cable may also be specified. The medium may also be optical fibre which will need standardization of the terminations and the wavelength(s) in use.

Figure 9.2 (a) Layers are important in communications because they have a degree of independence such that one can be replaced by another leaving the remainder undisturbed. (b) The functions of a network protocol. See text.

Once a connection is made, the physical medium dependent layer standardizes the voltage of the transmitted signal and the frequency at which the voltage changes (the channel bit rate). This may be fixed at a single value, chosen from a set of fixed values, or, rarely, variable. Practical interfaces need some form of channel coding (see Chapter 6) in order to embed a bit clock in the data transmission.

The physical medium dependent layer allows binary transmission, but this needs to be structured or formatted. The transmission convergence layer takes the binary signalling of the physical medium dependent layer and builds a packet or cell structure. This consists at least of some form of synchronization system so that the start and end of serialized messages can be recognized and an addressing or labelling scheme so that packets can reliably be routed and recognized. Real cables and optical fibres run at fixed bit rates and a further function of the transmission convergence layer is the insertion of null or stuffing packets where insufficient user data exist.
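The stuffing function of the transmission convergence layer can be illustrated with a minimal Python sketch. It assumes MPEG-2 transport-stream conventions: 188-byte packets that begin with the sync byte 0x47 and carry a 13-bit label (the PID), with PID 0x1FFF reserved for null packets. The slot model and the helper names (`make_packet`, `pid_of`, `fill_slots`) are illustrative assumptions, not a complete multiplexer.

```python
from collections import deque

PACKET_SIZE = 188   # MPEG-2 transport packet length in bytes
SYNC_BYTE = 0x47    # lets the receiver find packet boundaries
NULL_PID = 0x1FFF   # label reserved for stuffing (null) packets

def make_packet(pid: int, payload: bytes = b"") -> bytes:
    """Minimal packet: sync byte, 13-bit PID label, payload padded with 0xFF."""
    # Byte 3 = 0x10: payload only, continuity counter 0 (simplified header).
    header = bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10])
    body = payload[:PACKET_SIZE - len(header)]
    return header + body + b"\xff" * (PACKET_SIZE - len(header) - len(body))

def pid_of(packet: bytes) -> int:
    """Recover the 13-bit label from the header."""
    return ((packet[1] & 0x1F) << 8) | packet[2]

def fill_slots(user_packets, n_slots: int) -> list:
    """Emit exactly n_slots packets, using null packets when no user data wait."""
    queue = deque(user_packets)
    out = []
    for _ in range(n_slots):
        out.append(queue.popleft() if queue else make_packet(NULL_PID))
    return out

slots = fill_slots([make_packet(0x100, b"video")], 4)
print([hex(pid_of(p)) for p in slots])  # ['0x100', '0x1fff', '0x1fff', '0x1fff']
user_data = [p for p in slots if pid_of(p) != NULL_PID]  # receiver discards stuffing
```

The fixed cable rate appears here as a fixed number of slots: the receiver recognizes the stuffing by its label alone and simply discards it.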

In broadcasting, the physical medium dependent layer may be one which contains some form of radio signal and a modulation scheme. The modulation scheme will be a function of the kind of service, for example a satellite modulation scheme would be quite different from one used in a terrestrial service.

In all real networks requests for transmission will arise randomly. Network resources need to be applied to these requests in a structured way to prevent chaos, data loss or lack of throughput. This raises the requirement for a protocol layer. TCP (transmission control protocol) and ATM (asynchronous transfer mode) are protocols. A protocol is an agreed set of actions in given circumstances. In a point-to-point interface the protocol is trivial, but in a network it is complex. Figure 9.2(b) shows some of the functions of a network protocol. There must be an addressing mechanism so that the sender can direct the data to the desired location, and a mechanism by which the receiving device confirms that all the data have been correctly received. In more advanced systems the protocol may allow variations in quality of service whereby the user can select (and pay for) various criteria such as packet delay and delay variation and the packet error rate. This allows the system to deliver isochronous (near-real-time) MPEG data alongside asynchronous (non-time-critical) data such as e-mail by appropriately prioritizing packets.
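Prioritization by quality of service can be sketched as a strict-priority output queue. In this Python sketch the two service classes `ISOCHRONOUS` and `ASYNCHRONOUS` and the class `PriorityLink` are hypothetical names; real protocols use richer schemes, since strict priority can starve low-priority traffic under heavy load.

```python
import heapq
from itertools import count

# Hypothetical service classes: a lower number is served first.
ISOCHRONOUS = 0   # e.g. near-real-time MPEG packets
ASYNCHRONOUS = 1  # e.g. e-mail

class PriorityLink:
    """Toy output port that always sends the most urgent waiting packet."""

    def __init__(self):
        self._heap = []
        self._seq = count()  # preserves arrival order within a class

    def submit(self, service_class: int, packet: str) -> None:
        heapq.heappush(self._heap, (service_class, next(self._seq), packet))

    def send_next(self):
        """Return the next packet to transmit, or None if nothing is waiting."""
        if not self._heap:
            return None
        return heapq.heappop(self._heap)[2]

link = PriorityLink()
link.submit(ASYNCHRONOUS, "mail-1")
link.submit(ISOCHRONOUS, "mpeg-1")
link.submit(ASYNCHRONOUS, "mail-2")
link.submit(ISOCHRONOUS, "mpeg-2")
print([link.send_next() for _ in range(4)])  # ['mpeg-1', 'mpeg-2', 'mail-1', 'mail-2']
```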

The protocol layer arbitrates between demands on the network and delivers packets at the required quality of service. The user data will not necessarily have been packetized, or, if they were, the packet sizes may be different from those used in the network. This situation arises, for example, when MPEG transport packets are to be sent via ATM. The solution is to use an adaptation layer.

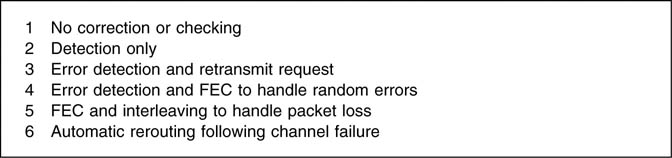

Adaptation layers reformat the original data into the packet structure needed by the network at the sending device, and reverse the process at the destination device. Practical networks must have error checking/correction. Figure 9.3 shows some of the possibilities. In short interfaces, no errors are expected and a simple parity check or checksum with an error indication is adequate. In bidirectional applications a checksum failure would result in a retransmission request or cause the receiver to fail to acknowledge the transmission so that the sender would try again. In real-time systems, there may not be time for a retransmission, and an FEC (forward error correction) system will be needed in which enough redundancy is included with every data block to permit on-the-fly correction at the receiver. The sensitivity to error is a function of the type of data, and so it is a further function of the adaptation layer to take steps such as interleaving and the addition of FEC codes.
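The checksum-and-retry approach for bidirectional links can be sketched as a stop-and-wait loop. In this Python sketch the 8-bit additive checksum, the `channel` callable and the helper `make_flaky_channel` are assumptions made for illustration; an acknowledgement is modelled simply as the receiver's checksum test passing.

```python
def checksum(data: bytes) -> int:
    """Simple 8-bit additive checksum."""
    return sum(data) & 0xFF

def send_with_retry(block: bytes, channel, max_attempts: int = 3):
    """Stop-and-wait: resend the block until the receiver's checksum test passes."""
    frame = block + bytes([checksum(block)])
    for attempt in range(1, max_attempts + 1):
        received = channel(frame)
        data, check = received[:-1], received[-1]
        if checksum(data) == check:
            return data, attempt  # receiver acknowledges
        # Checksum failure: no acknowledgement, so the sender tries again.
    raise IOError("block not delivered after retries")

def make_flaky_channel():
    """Hypothetical channel that corrupts one bit of the first transmission only."""
    calls = {"n": 0}
    def channel(frame: bytes) -> bytes:
        calls["n"] += 1
        if calls["n"] == 1:
            return bytes([frame[0] ^ 0x01]) + frame[1:]
        return frame
    return channel

print(send_with_retry(b"hello", make_flaky_channel()))  # (b'hello', 2)
```

In a real-time system the second attempt may arrive too late to be useful, which is why FEC replaces retransmission there.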

Figure 9.3 Different approaches to error checking used in various communications systems.

9.2 Production-related interfaces

As audio and video production equipment made the transition from analog to digital technology, computers and networks were still another world, and the potential of the digital domain was largely neglected: the digital interfaces that were developed simply copied analog practice, transmitting binary numbers instead of the original signal waveform. These interfaces are simple and have no addressing or handshaking ability. Creating a network requires switching devices called routers, which are controlled independently of the signals themselves. Although these standards are obsolescent, substantial amounts of equipment adhering to them remain in service and will continue to be used for some time.

The AES/EBU (Audio Engineering Society/European Broadcasting Union) interface was developed to provide a short-distance point-to-point connection for PCM digital audio and subsequently evolved to handle compressed audio data.

The serial digital interface (SDI) was developed to allow up to ten-bit samples of standard-definition interlaced component or composite digital video to be communicated serially.¹ Component signals in 16:9 format with an 18 MHz sampling rate can also be handled. SDI as first standardized had no error-detection ability at all. This was remedied by a later option known as EDH (error detection and handling). The interface allows ancillary data, including transparent conveyance of embedded AES/EBU digital audio channels during video blanking periods.

SDI is highly specific to two broadcast television formats. Subsequently the electrical and channel coding layer of SDI was used to create SDTI (serial data transport interface) which is used for transmitting, among other things, elementary streams from video compressors. ASI (asynchronous serial interface) uses only the electrical interface of SDI but with a different channel code and protocol and is used for transmitting MPEG transport streams through SDI-based equipment.

9.3 SDI

The serial digital interface was designed to allow easy conversion to and from traditional analog component video for production purposes. Only 525/59.94/2:1 and 625/50/2:1 formats are supported with 4:2:2 sampling. The sampling structure of SDI was detailed in section 7.14 and only the transmission technique will be considered here.

Chapter 6 introduced the concepts of DC components and uncontrolled clock content in serial data for recording and the same issues are important in interfacing, leading to a coding requirement. SDI uses convolutional randomizing, as shown in section 6.13, in which the signal sent down the channel is the serial data waveform which has been convolved with the impulse response of a digital filter. On reception the signal is deconvolved to restore the original data.
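The convolution and deconvolution can be written as polynomial division and multiplication over single bits. This Python sketch assumes the scrambling polynomial G1(x) = x^9 + x^4 + 1 given in SMPTE 259M; tap numbering in a verbal description depends on how register stages are counted. The descrambler is feed-forward, so it is self-synchronizing: any error in its initial register contents is flushed out after nine bits.

```python
import random

TAPS = (4, 9)  # delays for G1(x) = x^9 + x^4 + 1

def scramble(bits, taps=TAPS):
    """Divide the data by G1(x): y[n] = x[n] ^ y[n-4] ^ y[n-9] (recursive)."""
    history = [0] * max(taps)  # history[k] holds y[n-1-k]
    out = []
    for x in bits:
        y = x
        for t in taps:
            y ^= history[t - 1]
        out.append(y)
        history = [y] + history[:-1]
    return out

def descramble(bits, taps=TAPS, history=None):
    """Multiply by G1(x): x[n] = y[n] ^ y[n-4] ^ y[n-9] (feed-forward)."""
    history = list(history) if history is not None else [0] * max(taps)
    out = []
    for y in bits:
        x = y
        for t in taps:
            x ^= history[t - 1]
        out.append(x)
        history = [y] + history[:-1]  # register fills with received bits
    return out

random.seed(1)
data = [random.randint(0, 1) for _ in range(1000)]
sent = scramble(data)
assert descramble(sent) == data
# A receiver starting with the wrong register contents is wrong for at most
# the first nine bits, then locks on without any reset.
assert descramble(sent, history=[1] * 9)[9:] == data[9:]
```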

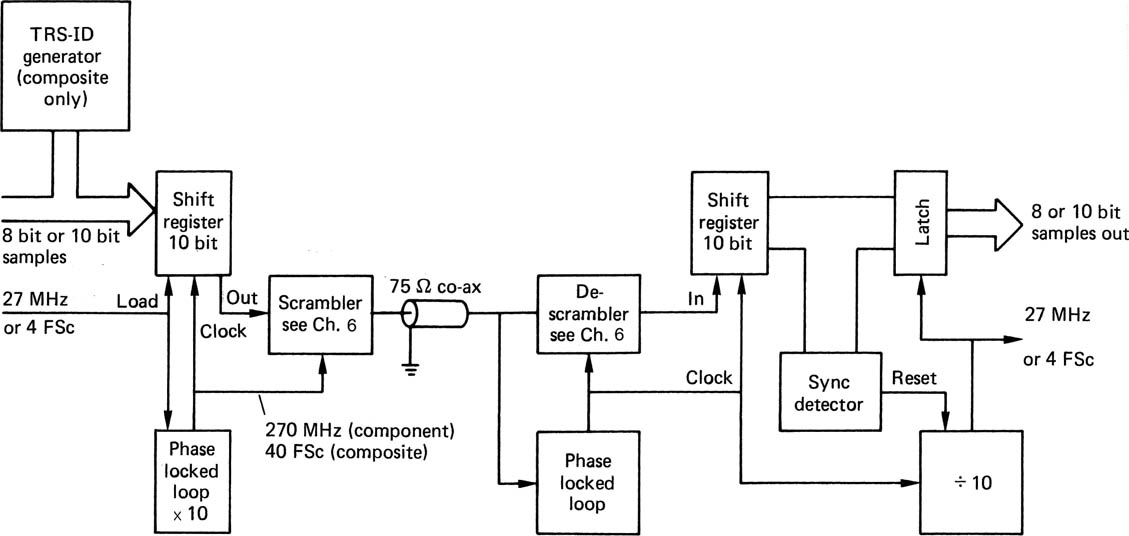

The components necessary for an SDI link are shown in Figure 9.4. Parallel component or composite data having a wordlength of up to ten bits form the input. These are fed to a ten-bit shift register which is clocked at ten times the input rate, which will be 270 MHz or 40 × Fsc. If there are only eight bits in the input words, the missing bits are forced to zero for transmission except for the all ones condition which will be forced to ten ones. The serial data from the shift register are then passed through the scrambler, in which a given bit is converted to the exclusive-OR of itself and two bits which are five and nine clocks ahead. This is followed by another stage, which converts channel ones into transitions. The resulting signal is fed to a line driver which converts the logic level into an alternating waveform of 800 mV peak-to-peak. The driver output impedance is carefully matched so that the signal can be fed down 75 Ohm co-axial cable using BNC connectors.
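The word handling and the "ones into transitions" stage can be sketched as follows. The eight-bit rule follows the text; the LSB-first serial order is an assumption of this Python sketch (it is how SMPTE 259M is usually described), and the helper names are hypothetical.

```python
def to_ten_bits(word: int, width: int) -> int:
    """Left-justify an 8- or 10-bit word in ten bits.

    Missing low-order bits are forced to zero, except that an all-ones
    8-bit word becomes ten ones.
    """
    if width == 10:
        return word & 0x3FF
    if width != 8:
        raise ValueError("only 8- and 10-bit words are handled here")
    return 0x3FF if word == 0xFF else (word & 0xFF) << 2

def serialize(words):
    """Shift each ten-bit word out LSB first (assumed bit order)."""
    return [(w >> k) & 1 for w in words for k in range(10)]

def nrzi(bits, level=0):
    """Convert each channel one into a transition of the line level."""
    out = []
    for b in bits:
        level ^= b
        out.append(level)
    return out

def un_nrzi(levels, previous=0):
    """Receiver: any change of level becomes a one."""
    out = []
    for lv in levels:
        out.append(lv ^ previous)
        previous = lv
    return out

print(hex(to_ten_bits(0x80, 8)), hex(to_ten_bits(0xFF, 8)))  # 0x200 0x3ff
bits = serialize([to_ten_bits(0x80, 8)])
line = nrzi(bits)
assert un_nrzi(line) == bits
assert un_nrzi([1 - v for v in line], previous=1) == bits  # polarity is irrelevant
print(27_000_000 * 10)  # 270000000: ten bits per 27 MHz word
```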

The scrambling process at the encoder spreads the signal spectrum and makes that spectrum reasonably constant and independent of the picture content. It is possible to assess the degree of equalization necessary by comparing the energy in a low-frequency band with that in higher frequencies. The greater the disparity, the more equalization is needed. Thus fully automatic cable equalization is easily achieved. The receiver must generate a bit clock at 270 MHz or 40 × Fsc from the input signal, and this clock drives the input sampler and slicer which converts the cable waveform back to serial binary. The local bit clock also drives a circuit which simply reverses the scrambling at the transmitter. The first stage returns transitions to ones, and the second stage is a mirror image of the encoder which reverses the exclusive-OR calculation to output the original data. Since transmission is serial, it is necessary to obtain word synchronization, so that correct deserialization can take place.

Figure 9.4 Major components of a serial scrambled link. Input samples are converted to serial form in a shift register clocked at ten times the sample rate. The serial data are then scrambled for transmission. On reception, a phase-locked loop recreates the bit rate clock and drives the de-scrambler and serial-to-parallel conversion. On detection of the sync pattern, the divide-by-ten counter is rephased to load parallel samples correctly into the latch. For composite working the bit rate will be 40 times subcarrier, and a sync pattern generator (top left) is needed to inject TRS-ID into the composite data stream.

In the component parallel input, the SAV and EAV sync patterns are present and the all-ones and all-zeros bit patterns these contain can be detected in the thirty-bit shift register and used to reset the deserializer.

On detection of the synchronizing symbols, a divide-by-ten circuit is reset, and the output of this will clock words out of the shift register at the correct times. This output will also become the output word clock.
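Word synchronization can be sketched by sliding a 30-bit window along the descrambled serial data until it matches the ten ones and twenty zeros of 3FF 000 000. This Python sketch assumes LSB-first order and relies on those values being excluded from video data (as in ITU-R BT.656), which is what makes the match unambiguous; the helper names are hypothetical.

```python
TRS = [1] * 10 + [0] * 20  # 3FF 000 000 as serial bits

def serialize(words):
    """Ten-bit words to a bit list, LSB first (assumed transmission order)."""
    return [(w >> k) & 1 for w in words for k in range(10)]

def find_word_phase(bits):
    """Slide a 30-bit window (the 'thirty-bit shift register') along the
    stream; return the index of the first bit after the pattern, or None."""
    n = len(TRS)
    for i in range(len(bits) - n + 1):
        if bits[i:i + n] == TRS:
            return i + n
    return None

def deserialize(bits, start):
    """Divide-by-ten from the sync point: rebuild ten-bit words, LSB first."""
    return [sum(b << k for k, b in enumerate(bits[i:i + 10]))
            for i in range(start, len(bits) - 9, 10)]

stream = [1, 0, 1] + serialize([0x3FF, 0x000, 0x000, 0x2AC, 0x123])
start = find_word_phase(stream)
print(start, [hex(w) for w in deserialize(stream, start)])  # 33 ['0x2ac', '0x123']
```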

It is a characteristic of all randomizing techniques that certain data patterns will interact badly with the randomizing algorithm to produce a channel waveform which is low in clock content. These so-called pathological data patterns² are extremely rare in real program material, but can be specially generated for testing purposes.

9.4 SDTI

SDI is closely specified and is only suitable for transmitting 2:1 interlaced 4:2:2 digital video in 525/60 or 625/50 systems. Since the development of SDI, it has become economically possible to compress digital video, and the SDI standard cannot carry the resulting data. SDTI (serial data transport interface) is designed to overcome that problem by converting SDI into an interface which can carry a variety of data types whilst retaining compatibility with existing SDI router infrastructures.

SDTI³ sources produce a signal which is electrically identical to an SDI signal and which has the same timing structure. However, the digital active line of SDI becomes a data packet or item in SDTI. Figure 9.5 shows how SDTI fits into the existing SDI timing. Between EAV and SAV (horizontal blanking in SDI) an ancillary data block is incorporated. The structure of this meets the SDI standard, and the data within describe the contents of the following digital active line.

Figure 9.5 SDTI is a variation of SDI which allows transmission of generic data. This can include compressed video and non-real-time transfer.

The data capacity of SDTI is about 200 Mbits/s because some of the 270 Mbits/s is lost due to the retention of the SDI timing structure. Each digital active line finishes with a CRCC (cyclic redundancy check character) to check for correct transmission.

SDTI raises a number of opportunities, including the transmission of compressed data at faster than real time. If a video signal is compressed at 4:1, then one quarter as much data would result. If sent in real time the bandwidth required would be one quarter of that needed by uncompressed video. However, if the same bandwidth is available, the compressed data could be sent in 1/4 of the usual time. This is particularly advantageous for data transfer between compressed camcorders and non-linear editing workstations. Alternatively, four different 50 Mbit/s signals could be conveyed simultaneously.
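The trade-off between faster-than-real-time transfer and simultaneous streams is simple arithmetic. This Python sketch takes the approximate 200 Mbit/s SDTI capacity from the text; the function names are hypothetical.

```python
SDTI_PAYLOAD_MBPS = 200.0  # approximate SDTI capacity quoted in the text

def transfer_time_s(duration_s: float, stream_mbps: float,
                    link_mbps: float = SDTI_PAYLOAD_MBPS) -> float:
    """Time to move `duration_s` of material coded at `stream_mbps` over the link."""
    return duration_s * stream_mbps / link_mbps

def streams_that_fit(stream_mbps: float, link_mbps: float = SDTI_PAYLOAD_MBPS) -> int:
    """How many streams of this rate can share the link simultaneously."""
    return int(link_mbps // stream_mbps)

print(transfer_time_s(60, 50))  # 15.0: one minute of 50 Mbit/s material
print(streams_that_fit(50))     # 4
```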

Thus an SDTI transmitter takes the form of a multiplexer which assembles packets for transmission from input buffers. The transmitted data can be encoded according to MPEG, MotionJPEG, Digital Betacam or DVC formats and all that is necessary is that compatible devices exist at each end of the interface. In this case the data are transferred with bit accuracy and so there is no generation loss associated with the transfer. If the source and destination are different, i.e. having different formats or, in MPEG, different group structures, then a conversion process with attendant generation loss would be needed.

9.5 ASI

The asynchronous serial interface is designed to allow MPEG transport streams to be transmitted over standard SDI cabling and routers. ASI offers higher performance than SDTI because it does not adhere to the SDI timing structure. Transport stream data do not have the same statistics as PCM video and so the scrambling technique of SDI cannot be used. Instead ASI uses an 8/10 group code (see section 6.12) to eliminate DC components and ensure adequate clock content.

SDI equipment is designed to run at a closely defined bit rate of 270 Mbits/s and has phase-locked loops in receiving and repeating devices which are intended to remove jitter. These will lose lock if the channel bit rate changes. Transport streams are fundamentally variable in bit rate and to retain compatibility with SDI routing equipment ASI uses stuffing bits to keep the transmitted bit rate constant.

The use of an 8/10 code means that although the channel bit rate is 270 Mbits/s, the data bit rate is only 80 per cent of that, i.e. 216 Mbits/s. A small amount of this is lost to overheads.
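The rate arithmetic and the amount of stuffing needed can be sketched in Python. The sketch models stuffing as whole ten-bit symbols (one data byte per symbol otherwise) and ignores the protocol overheads mentioned above; both are simplifying assumptions, and the function names are hypothetical.

```python
CHANNEL_RATE = 270_000_000        # bits/s on the cable, as for SDI
SYMBOL_RATE = CHANNEL_RATE // 10  # 8/10 code: one data byte per ten-bit symbol

def asi_data_rate() -> int:
    """Data bit rate before overheads: 8 of every 10 channel bits."""
    return CHANNEL_RATE * 8 // 10

def stuffing_symbols_per_second(ts_rate_bps: float) -> float:
    """Symbols per second that must carry stuffing so the cable stays at
    270 Mbit/s whatever the transport stream rate."""
    data_symbols = ts_rate_bps / 8
    if data_symbols > SYMBOL_RATE:
        raise ValueError("transport stream faster than the link can carry")
    return SYMBOL_RATE - data_symbols

print(asi_data_rate())                          # 216000000
print(stuffing_symbols_per_second(38_000_000))  # 22250000.0
```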

9.6 AES/EBU

The AES/EBU digital audio interface, originally published in 1985,⁴ was proposed to embrace all the functions of existing formats in one standard. The goal was to ensure interconnection of professional digital audio equipment irrespective of origin. The EBU ratified the AES proposal with the proviso that the optional transformer coupling be made mandatory. This led to the term AES/EBU interface, also called EBU/AES by some Europeans, and the interface was standardized as IEC 958.

The interface has to be self-clocking and self-synchronizing, i.e. the single signal must carry enough information to allow the boundaries between individual bits, words and blocks to be detected reliably. To fulfil these requirements, the FM channel code is used (see Chapter 6) which is DC-free, strongly self-clocking and capable of working with a changing sampling rate. Synchronization of deserialization is achieved by violating the usual encoding rules.
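The FM (biphase-mark) code can be sketched in a few lines of Python. Each bit occupies two half-cells; there is a transition at every cell boundary and an extra mid-cell transition for a one. Because decoding only asks whether the two half-cells differ, an inverted signal decodes identically. The function names are hypothetical.

```python
def fm_encode(bits, level=0):
    """Biphase-mark: two half-cells per bit."""
    halves = []
    for b in bits:
        level ^= 1      # transition at the start of every bit cell
        halves.append(level)
        if b:
            level ^= 1  # extra mid-cell transition for a one
        halves.append(level)
    return halves

def fm_decode(halves):
    """A bit is one if its two half-cells differ; polarity is irrelevant."""
    return [int(halves[i] != halves[i + 1]) for i in range(0, len(halves) - 1, 2)]

data = [1, 0, 1, 1, 0, 0]
line = fm_encode(data)
print(line)  # [1, 0, 1, 1, 0, 1, 0, 1, 0, 0, 1, 1]
assert fm_decode(line) == data
assert fm_decode([1 - h for h in line]) == data  # inverted pair still decodes
```

A run of ones produces a square wave with one cycle per bit, so the channel frequency equals the bit rate; no data sequence can hold the level for more than one bit period.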

The use of FM means that the channel frequency is the same as the bit rate when sending data ones. Tests showed that in typical analog audio-cabling installations, sufficient bandwidth was available to convey two digital audio channels in one twisted pair. The standard driver and receiver chips for RS-422A⁵ data communication (or the equivalent CCITT-V.11) are employed for professional use, but work by the BBC⁶ suggested that equalization and transformer coupling were desirable for longer cable runs, particularly if several twisted pairs occupy a common shield. Successful transmission up to 350 m has been achieved with these techniques.⁷ Figure 9.6 shows the standard configuration. The output impedance of the drivers will be about 110 Ohms, and the impedance of the cable and receiver should be similar at the frequencies of interest. The driver was specified in AES-3-1985 to produce between 3 and 10 V peak-to-peak into such an impedance, but this was changed to between 2 and 7 V in AES-3-1992 to better reflect the characteristics of actual RS-422 driver chips.

Figure 9.6 Recommended electrical circuit for use with the standard two-channel interface.

In Figure 9.7, the specification of the receiver is shown in terms of the minimum eye pattern (see section 6.9) which can be detected without error. It will be noted that the voltage of 200 mV specifies the height of the eye opening at a width of half a channel bit period. The actual signal amplitude will need to be larger than this, and even larger if the signal contains noise. Figure 9.8 shows the recommended equalization characteristic which can be applied to signals received over long lines.

Figure 9.7 The minimum eye pattern acceptable for correct decoding of standard two-channel data.

Figure 9.8 EQ characteristic recommended by the AES to improve reception in the case of long lines.

The purpose of the standard is to allow the use of existing analog cabling, and as an adequate connector in the shape of the XLR is already in wide service, the connector made to IEC 268 Part 12 has been adopted for digital audio use. Effectively, existing analog audio cables having XLR connectors can be used without alteration for digital connections.

There is a separate standard⁸ for a professional interface using coaxial cable for distances of around 1000 m. This is simply the AES/EBU protocol but with a 75 Ohm coaxial cable carrying a one volt signal so that it can be handled by analog video distribution amplifiers. Impedance converting transformers allow balanced 110 Ohm to unbalanced 75 Ohm matching.

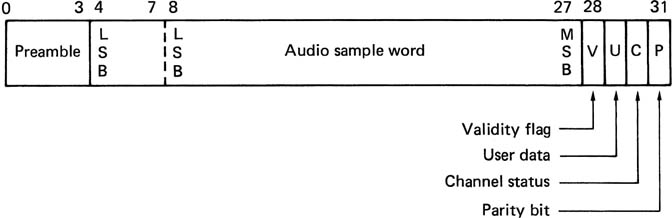

In Figure 9.9 the basic structure of the professional and consumer formats can be seen. One subframe consists of 32 bit-cells, of which four will be used by a synchronizing pattern. Subframes from the two audio channels, A and B, alternate on a time-division basis, with the least significant bit sent first. Up to twenty-four-bit sample wordlength can be used, which should cater for all conceivable future developments, but normally twenty-bit maximum length samples will be available with four auxiliary data bits, which can be used for a voice-grade channel in a professional application.

Figure 9.9 The basic subframe structure of the AES/EBU format. Sample can be twenty bits with four auxiliary bits, or twenty-four bits. LSB is transmitted first.

The format specifies that audio data must be in two’s complement coding. If different wordlengths are used, the MSBs must always be in the same bit position, otherwise the polarity will be misinterpreted. Thus the MSB has to be in bit 27 irrespective of wordlength. Shorter words are leading-zero filled up to the twenty-bit capacity, i.e. the unused low-order bit positions, which are transmitted first, are set to zero. From AES-3-1992, the channel status data included signalling of the actual audio wordlength used, so that receiving devices could adjust the digital dithering level needed to shorten a received word which is too long, or pack samples onto a storage device more efficiently.

Four status bits accompany each subframe. The validity flag will be reset if the associated sample is reliable. Whilst there have been many aspirations regarding what the Vbit could be used for, in practice a single bit cannot specify much, and if combined with other Vbits to make a word, the time resolution is lost. AES-3-1992 described the Vbit as indicating that the information in the associated subframe is ‘suitable for conversion to an analog signal’. Thus it might be reset if the interface was being used for non-PCM audio data such as the output of an audio compressor.

The parity bit produces even parity over the subframe, such that the total number of ones in the subframe is even. This allows for simple detection of an odd number of bits in error, but its main purpose is that it makes successive sync patterns have the same polarity, which can be used to improve the probability of detection of sync. The user and channel-status bits are discussed later.
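The subframe layout can be sketched as a list of 32 time slots. This Python sketch assumes the usual slot assignment (preamble 0-3, auxiliary 4-7, audio 8-27, then V, U, C and P in 28-31), handles samples of up to twenty bits only, and leaves the preamble slots empty because the preamble is not binary data. The function name is hypothetical.

```python
def build_subframe(sample: int, wordlength: int = 20, aux: int = 0,
                   validity: int = 0, user: int = 0, status: int = 0) -> list:
    """Return the 32 time slots of one subframe, slot 0 first."""
    if not 1 <= wordlength <= 20:
        raise ValueError("this sketch handles up to 20-bit samples")
    if not -(1 << (wordlength - 1)) <= sample < (1 << (wordlength - 1)):
        raise ValueError("sample out of range for the wordlength")
    # Two's complement code, MSB-justified so the MSB always lands in slot 27.
    code = (sample & ((1 << wordlength) - 1)) << (20 - wordlength)
    slots = [None] * 4                             # slots 0-3: preamble
    slots += [(aux >> k) & 1 for k in range(4)]    # slots 4-7: auxiliary
    slots += [(code >> k) & 1 for k in range(20)]  # slots 8-27, LSB sent first
    slots += [validity, user, status]              # slots 28-30: V, U, C
    slots.append(sum(slots[4:31]) % 2)             # slot 31: even parity over 4-31
    return slots

sf = build_subframe(-1, wordlength=16)
print(sf[8:12], sf[27], sf[31])  # [0, 0, 0, 0] 1 0
```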

Two of the subframes described above make one frame, which repeats at the sampling rate in use. The first subframe will contain the sample from channel A, or from the left channel in stereo working. The second subframe will contain the sample from channel B, or the right channel in stereo. At 48 kHz, the bit rate will be 3.072 MHz, but as the sampling rate can vary, the clock rate will vary in proportion.
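Since one frame of two 32-slot subframes is sent per sampling period, the interface bit rate is simply 64 times the sampling rate. A short Python check, with hypothetical names:

```python
SUBFRAMES_PER_FRAME = 2  # channel A and channel B
SLOTS_PER_SUBFRAME = 32  # bit cells per subframe

def interface_bit_rate(fs_hz: int) -> int:
    """Bits per second on the interface: one frame per sampling period."""
    return fs_hz * SUBFRAMES_PER_FRAME * SLOTS_PER_SUBFRAME

for fs in (32_000, 44_100, 48_000):
    print(fs, interface_bit_rate(fs))
# 32000 2048000
# 44100 2822400
# 48000 3072000
```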

In order to separate the audio channels on receipt the synchronizing patterns for the two subframes are different as Figure 9.10 shows. These sync patterns begin with a run length of 1.5 bits which violates the FM channel coding rules and so cannot occur due to any data combination. The type of sync pattern is denoted by the position of the second transition which can be 0.5, 1.0 or 1.5 bits away from the first. The third transition is designed to make the sync patterns DC-free.

Figure 9.10 Three different preambles (X, Y and Z) are used to synchronize a receiver at the start of subframes.

The channel status and user bits in each subframe form serial data streams with one bit of each per audio channel per frame. The channel status bits are given a block structure and synchronized every 192 frames, which at 48 kHz gives a block rate of 250 Hz, corresponding to a period of 4 ms. In order to synchronize the channel-status blocks, the channel A sync pattern is replaced for one frame only by a third sync pattern which is also shown in Figure 9.10. The AES standard refers to these as X, Y and Z whereas IEC 958 calls them M, W and B. As stated, there is a parity bit in each subframe, which means that the binary level at the end of a subframe will always be the same as at the beginning. Since the sync patterns have the same characteristic, the effect is that sync patterns always have the same polarity and the receiver can use that information to reject noise. The polarity of transmission is not specified, and indeed an accidental inversion in a twisted pair is of no consequence, since it is only the transition that is of importance, not the direction.
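Preamble recognition can be sketched on half-cells. The eight half-cell patterns below are the commonly quoted AES3 forms (treat them as an assumption to check against the standard). After the 1.5-bit run, the next transition comes 1.5, 1.0 or 0.5 bits later for X, Y and Z respectively. Inverting the input first makes the classifier independent of cable polarity. The names are hypothetical.

```python
# Half-cell patterns, written starting high; inverted forms are equally valid.
PREAMBLES = {
    (1, 1, 1, 0, 0, 0, 1, 0): "X",  # channel A subframe
    (1, 1, 1, 0, 0, 1, 0, 0): "Y",  # channel B subframe
    (1, 1, 1, 0, 1, 0, 0, 0): "Z",  # channel A subframe starting a status block
}
FRAMES_PER_BLOCK = 192

def classify_preamble(halves):
    """Return 'X', 'Y', 'Z' or None for eight half-cells, whatever the polarity."""
    cells = tuple(halves)
    if cells and cells[0] == 0:
        cells = tuple(1 - c for c in cells)  # only transitions matter, not direction
    return PREAMBLES.get(cells)

def block_rate_hz(fs_hz: float) -> float:
    """Channel-status blocks per second: one block every 192 frames."""
    return fs_hz / FRAMES_PER_BLOCK

print(classify_preamble([0, 0, 0, 1, 0, 1, 1, 1]))       # Z (inverted polarity)
print(block_rate_hz(48_000), 1 / block_rate_hz(48_000))  # 250.0 0.004
```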

In both the professional and consumer formats, the sequence of channel-status bits over 192 subframes builds up a 24-byte channel-status block. However, the contents of the channel status data are completely different between the two applications. The professional channel status structure is shown in Figure 9.11. Byte 0 determines the use of emphasis and the sampling rate. Byte 1 determines the channel usage mode, i.e. whether the data transmitted are a stereo pair, two unrelated mono signals or a single mono signal, and details the user bit handling, and byte 2 determines wordlength. Byte 3 is applicable only to multichannel applications. Byte 4 indicates the suitability of the signal as a sampling rate reference. There are two slots of four bytes each which are used for alphanumeric source and destination codes. These can be used for routing. The bytes contain seven-bit ASCII characters (printable characters only) sent LSB first with the eighth bit set to zero according to AES-3-1992. The destination code can be used to operate an automatic router, and the source code will allow the origin of the audio and other remarks to be displayed at the destination.
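Assembling the block and reading the alphanumeric codes can be sketched in Python. The byte positions (source in bytes 6-9, destination in bytes 10-13) are assumed from the AES3 layout rather than stated in the text above, and treating zero bytes as unused positions is likewise an assumption. The helper names are hypothetical.

```python
SOURCE_BYTES = range(6, 10)  # assumed position of the source code
DEST_BYTES = range(10, 14)   # assumed position of the destination code

def status_bits_to_bytes(bits) -> bytes:
    """Pack 192 channel-status bits (frame 0 first) into 24 bytes, LSB first."""
    if len(bits) != 192:
        raise ValueError("a channel-status block has 192 bits")
    return bytes(sum(bits[8 * n + k] << k for k in range(8)) for n in range(24))

def ascii_field(block: bytes, positions) -> str:
    """Decode a four-byte alphanumeric slot: seven-bit ASCII, eighth bit zero."""
    chars = []
    for n in positions:
        b = block[n]
        if b & 0x80:
            raise ValueError("eighth bit must be zero")
        if b:  # treat zero bytes as unused positions (an assumption)
            chars.append(chr(b))
    return "".join(chars)

# Build a test block, serialize it LSB first, then recover the codes.
block = bytes(6) + b"STU1" + b"MIX2" + bytes(10)
bits = [(byte >> k) & 1 for byte in block for k in range(8)]
recovered = status_bits_to_bytes(bits)
print(ascii_field(recovered, SOURCE_BYTES), ascii_field(recovered, DEST_BYTES))  # STU1 MIX2
```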

Figure 9.11 Overall format of the professional channel-status block.

Bytes 14-17 convey a thirty-two-bit sample address which increments every channel status frame. It effectively numbers the samples in a relative manner from an arbitrary starting point. Bytes 18-21 convey a similar number, but this is a time-of-day count, which starts from zero at midnight. As many digital audio devices do not have real-time clocks built in, this cannot be relied upon. AES-3-1992 specified that the time-of-day bytes should convey the real time at which a recording was made, making it rather like timecode. There are enough combinations in thirty-two bits to allow a sample count over 24 hours at 48 kHz. The sample count has the advantage that it is universal and independent of local supply frequency. In theory, if the sampling rate is known, conventional hours, minutes, seconds, frames timecode can be calculated from the sample count, but in practice it is a lengthy computation, and users have proposed alternative formats in which the data from EBU or SMPTE timecode are transmitted directly in these bytes. Some of these proposals are in service as de facto standards.
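Both claims can be checked in Python: 24 hours at 48 kHz needs 4,147,200,000 counts, which fits below 2^32, and the timecode conversion is a chain of integer divisions once the samples per frame are known. This sketch assumes 25 frames/s non-drop-frame counting with an integer number of samples per frame (1920 at 48 kHz); the function names are hypothetical.

```python
def fits_in_32_bits(fs_hz: int, seconds: int = 24 * 3600) -> bool:
    """Can a 32-bit counter number every sample in `seconds` at fs_hz?"""
    return fs_hz * seconds < 2 ** 32

def samples_to_timecode(count: int, fs_hz: int = 48_000, fps: int = 25) -> str:
    """Convert a sample count since midnight to HH:MM:SS:FF."""
    if fs_hz % fps:
        raise ValueError("non-integer samples per frame not handled in this sketch")
    frames_total = count // (fs_hz // fps)
    frames = frames_total % fps
    seconds_total = frames_total // fps
    hh, rem = divmod(seconds_total, 3600)
    mm, ss = divmod(rem, 60)
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{frames:02d}"

print(fits_in_32_bits(48_000), 48_000 * 86_400, 2 ** 32)  # True 4147200000 4294967296
print(samples_to_timecode(48_000 * 3661 + 1920 * 5))      # 01:01:01:05
```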

The penultimate byte contains four flags which indicate that certain sections of the channel-status information are unreliable. This allows the transmission of an incomplete channel-status block where the entire structure is not needed or where the information is not available. The final byte in the message is a CRCC which converts the entire channel-status block into a codeword (see Chapter 6). The channel status message takes 4 ms at 48 kHz, and in this time a router could have switched to another signal source. This would damage the transmission, but would also result in a CRCC failure, so the corrupt block is not used.
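A CRCC check of the block can be sketched in Python. The parameters are assumptions: the generator x^8 + x^4 + x^3 + x^2 + 1 with the register preset to all ones, which is how AES3 is usually described, with bits fed LSB first. The mapping of the register into byte 23 is simplified here and may not match the standard's exact bit order. The function names are hypothetical.

```python
GENERATOR = 0x1D  # x^8 + x^4 + x^3 + x^2 + 1, with the x^8 term implied

def status_crcc(data: bytes) -> int:
    """CRC-8 over channel-status bytes 0-22, bits fed LSB first,
    register preset to all ones."""
    reg = 0xFF
    for byte in data:
        for k in range(8):
            feedback = ((reg >> 7) & 1) ^ ((byte >> k) & 1)
            reg = (reg << 1) & 0xFF
            if feedback:
                reg ^= GENERATOR
    return reg

def block_is_valid(block: bytes) -> bool:
    """Compare the received final byte with a CRCC recomputed over bytes 0-22."""
    return len(block) == 24 and status_crcc(block[:23]) == block[23]

good = bytes(range(23))
good += bytes([status_crcc(good)])
corrupt = bytes([good[0] ^ 0x04]) + good[1:]  # one bit changed, e.g. by a router cut
print(block_is_valid(good), block_is_valid(corrupt))  # True False
```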

9.7 Telephone-based systems

The success of the telephone has led to a vast number of subscribers being connected with copper wires, and this is a valuable network infrastructure. As technology has developed, the telephone has become part of a global telecommunications industry. Simple economics suggests that in many cases improving the existing telephone cabling with modern modulation schemes is a good way of providing new communications services.

For economic reasons, there are fewer paths through the telephone system than there are subscribers. This is because telephones were not used continuously until teenagers discovered them. Before a call can be made, the exchange has to find a free path and assign it to the calling telephone. Traditionally this was done electromechanically. A path which was already in use would be carrying loop current. When the exchange sensed that a handset was off-hook, a rotary switch would advance and sample all the paths until it found one without loop current where it would stop. This was signalled to the calling telephone by sending a dial tone.

The development of electronics revolutionized telephone exchanges. Whilst the loop current, AC ringing and hook switch sensing remained for compatibility, the electromechanical exchange gave way to electronic exchanges where the dial pulses were interpreted by digital counters which then drove crosspoint switches to route the call. The communication remained analog.

The next advance permitted by electronic exchanges was touch-tone dialling, also called DTMF. Touch-tone dialling is based on seven discrete frequencies shown in Figure 9.12. The telephone contains tone generators, and tuned filters in the exchange can detect each frequency individually. The numbers 0 through 9 and two non-numerical symbols, asterisk and hash, can be transmitted using twelve unique tone pairs. A tone pair can reliably be detected in about 100 ms and this makes dialling much faster than the pulse system.

Figure 9.12 DTMF dialling works on tone pairs.

The frequencies chosen for DTMF are logarithmically spaced so that the filters can have constant bandwidth and response time, but they do not correspond to the conventional musical scale. In addition to dialling speed, because the DTMF tones are within the telephone audio bandwidth, they can also be used for signalling during a call.
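Tone-pair generation and detection can be sketched in Python. The sketch assumes the standard DTMF frequencies (rows 697, 770, 852 and 941 Hz; columns 1209, 1336 and 1477 Hz) and uses the Goertzel algorithm, a swapped-in technique chosen here, as a software stand-in for the exchange's bank of tuned filters. The helper names are hypothetical.

```python
import math

ROWS = (697, 770, 852, 941)   # Hz
COLS = (1209, 1336, 1477)     # Hz
KEYS = ("123", "456", "789", "*0#")
FS = 8000                     # telephone sampling rate, Hz

def dtmf_tone(key: str, duration_s: float = 0.05, fs: int = FS):
    """Sum of the row and column sinusoids for one key."""
    r = next(i for i, row in enumerate(KEYS) if key in row)
    c = KEYS[r].index(key)
    n = int(duration_s * fs)
    return [0.5 * math.sin(2 * math.pi * ROWS[r] * t / fs)
            + 0.5 * math.sin(2 * math.pi * COLS[c] * t / fs) for t in range(n)]

def goertzel_power(samples, freq: float, fs: int = FS) -> float:
    """Power at `freq` via the Goertzel recurrence (a single tuned filter)."""
    coeff = 2 * math.cos(2 * math.pi * freq / fs)
    s1 = s2 = 0.0
    for x in samples:
        s1, s2 = x + coeff * s1 - s2, s1
    return s1 * s1 + s2 * s2 - coeff * s1 * s2

def detect_key(samples, fs: int = FS) -> str:
    """Pick the strongest row filter and the strongest column filter."""
    r = max(range(len(ROWS)), key=lambda i: goertzel_power(samples, ROWS[i], fs))
    c = max(range(len(COLS)), key=lambda i: goertzel_power(samples, COLS[i], fs))
    return KEYS[r][c]

print(detect_key(dtmf_tone("5")))  # 5
```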

The first electronic exchanges simply used digital logic to perform the routing function. The next step was to use a fully digital system where the copper wires from each subscriber terminate in an interface or line card containing ADCs and DACs. The sampling rate of 8 kHz retains the traditional analog bandwidth, and eight-bit quantizing is used. This is not linear, but uses logarithmically sized quantizing steps so that the quantizing error is greater on larger signals. The result is a 64 kbit/s data rate in each direction.
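Logarithmic quantizing can be sketched with the continuous μ-law curve (μ = 255). Telephone systems actually use segmented, piecewise-linear approximations (μ-law or A-law), so this Python sketch only illustrates the principle: the quantizing interval widens as amplitude grows. The constants and helper names are assumptions.

```python
import math

MU = 255            # mu-law parameter
LEVELS = 127        # codes -127..+127 in this sketch (8-bit sign/magnitude)
FS, BITS = 8000, 8  # sampling rate and word length from the text

def compress(x: float) -> int:
    """Map x in [-1, 1] to an integer code on a logarithmic scale."""
    x = max(-1.0, min(1.0, x))
    y = math.copysign(math.log1p(MU * abs(x)) / math.log1p(MU), x)
    return round(y * LEVELS)

def expand(code: int) -> float:
    """Inverse mapping from code back to a linear amplitude."""
    y = code / LEVELS
    return math.copysign(((1 + MU) ** abs(y) - 1) / MU, y)

def step_size(x: float) -> float:
    """Width of the quantizing interval around amplitude x (x >= 0)."""
    c = compress(x)
    return expand(c + 1) - expand(c)

print(step_size(0.9) > 10 * step_size(0.01))  # True: larger signals, larger error
print(FS * BITS)                              # 64000 bit/s in each direction
```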

Packets of data can be time-division multiplexed into high bit-rate data buses which can carry many calls simultaneously. The routing function becomes simply one of watching the bus until the right packet comes along for the selected destination. 64 kbit/s data switching came to be known as IDN (Integrated Digital Network). As a data bus doesn’t care whether it carries 64 kbit/s of speech or 64 kbit/s of something else, communications systems based on IDN tend to be based on multiples of that rate.
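A minimal time-division sketch, assuming a hypothetical frame that carries one byte from every call every 125 µs (8000 frames/s). Routing reduces to reading the same slot from every frame; the function names and frame layout are illustrative, not any particular exchange's bus format.

```python
FRAME_RATE = 8000      # frames per second: one byte from each call per frame


def multiplex(calls):
    """Interleave one byte per call into each frame (simple time-division multiplex)."""
    return [bytes(samples[i] for samples in calls) for i in range(len(calls[0]))]


def select_slot(frames, slot):
    """Routing by 'watching the bus': take the byte in the chosen slot of each frame."""
    return bytes(frame[slot] for frame in frames)


def bus_rate(n_slots):
    """Bus bit rate when every slot carries one 64 kbit/s call."""
    return n_slots * 8 * FRAME_RATE
```

Because every slot is the same size, the bus rate is always a whole multiple of 64 kbit/s; a 32-slot bus, for instance, runs at 2.048 Mbit/s.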

Such a system is called ISDN (integrated services digital network) which is basically a use of the telephone system that allows dial-up data transfer between subscribers in much the same way as a conventional phone call is made.

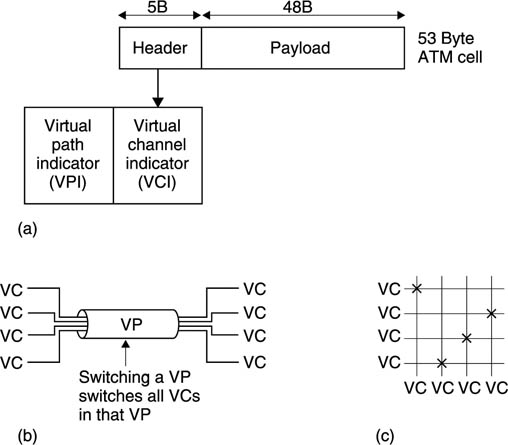

With the subsequent development of broadband networks (B-ISDN) the original ISDN is now known as N-ISDN where the N stands for narrow-band. B-ISDN is the ultimate convergent network able to carry any type of data and uses the well-known ATM (asynchronous transfer mode) protocol. Broadband and ATM are considered in a later section.

One of the difficulties of the AMI coding used in N-ISDN is that the data rate is limited and new cabling is needed to the exchange. ADSL (asymmetric digital subscriber line) is an advanced coding scheme which obtains high bit rate delivery and a back channel down existing subscriber telephone wiring.

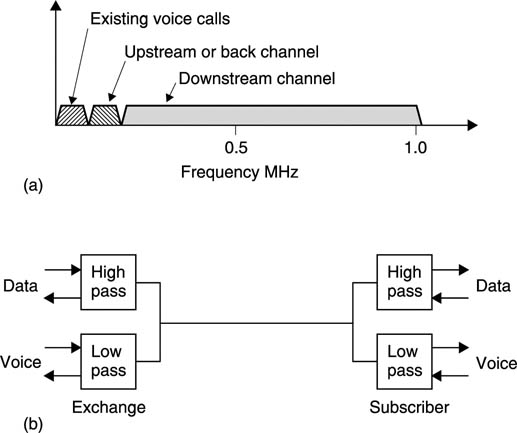

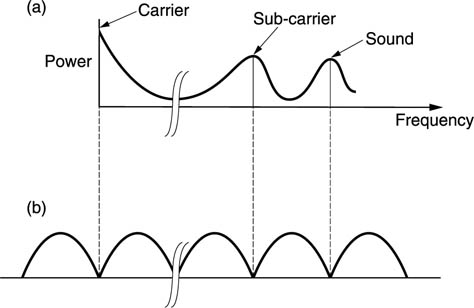

ADSL works on frequency-division multiplexing using 4 kHz wide channels; 249 of these provide the delivery or downstream channel and 25 provide the back channel. Figure 9.13(a) shows that the existing bandwidth used by the traditional analog telephone is retained. The back channel occupies the lowest-frequency channels, with the downstream channels above. Figure 9.13(b) shows that at each end of the existing telephone wiring a device called a splitter is needed. This is basically a high-pass/low-pass filter which directs audio frequency signals to the telephones and high-frequency signals to the modems.

Figure 9.13 (a) ADSL allows the existing analog telephone to be retained, but adds delivery and back channels at higher frequencies. (b) A splitter is needed at each end of the subscriber’s line.

Telephone wiring was never designed to support high-frequency signalling and is non-ideal. There will be reflections due to impedance mismatches which will cause an irregular frequency response in addition to high-frequency losses and noise which will all vary with cable length. ADSL can operate under these circumstances because it constantly monitors the conditions in each channel. If a given channel has adequate signal level and low noise, the full bit rate can be used, but in another channel there may be attenuation and the bit rate will have to be reduced. By independently coding the channels, the optimum data throughput for a given cable is obtained.

Each channel is modulated using DMT (discrete multitone technique) in which combinations of discrete frequencies are used. Within one channel symbol, there are 2¹⁵ combinations of tones and so the coding achieves 15 bits/s/Hz. With a symbol rate of 4 kHz, each channel can deliver 60 kbits/s, making 14.9 Mbits/s for the downstream channel and 1.5 Mbits/s for the back channel. It should be stressed that these figures are theoretical maxima which are not reached in real cables. Practical ADSL systems deliver multiples of the ISDN channel rate up to about 6 Mbits/s, enough to deliver MPEG-2 coded video.
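The per-channel adaptation and the theoretical maxima can both be sketched in a few lines. This assumes each channel's bit count is chosen from its measured SNR with the common "SNR gap" rule of thumb, bits = floor(log2(1 + SNR/Γ)), capped at 15; the 9.8 dB gap and the function names are illustrative assumptions rather than values taken from the ADSL standard.

```python
import math

SYMBOL_RATE = 4000    # symbols per second on each 4 kHz channel
MAX_BITS = 15         # per-channel ceiling, i.e. 15 bits/s/Hz
GAP_DB = 9.8          # assumed SNR gap to capacity; illustrative only


def bits_for_channel(snr_db):
    """Bits per symbol one channel can carry at a measured SNR (gap approximation)."""
    snr = 10 ** ((snr_db - GAP_DB) / 10)
    return max(0, min(MAX_BITS, int(math.log2(1 + snr))))


def line_rate(snrs_db):
    """Total bit rate when every channel is loaded independently."""
    return SYMBOL_RATE * sum(bits_for_channel(s) for s in snrs_db)


downstream_max = 249 * MAX_BITS * SYMBOL_RATE   # 14.94 Mbit/s
upstream_max = 25 * MAX_BITS * SYMBOL_RATE      # 1.5 Mbit/s
```

An attenuated or noisy channel simply gets fewer bits (or none) while good channels run at the ceiling, which is how the modem finds the best throughput for a given cable.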

Over shorter distances, VDSL can reach up to 50 Mbits/s. Where ADSL and VDSL are being referred to as a common technology, the term xDSL will be found.

9.8 Digital television broadcasting

Digital television broadcasting relies on the combination of a number of fundamental technologies. These are: MPEG-2 compression to reduce the bit rate, multiplexing to combine picture and sound data into a common bitstream, digital modulation schemes to reduce the RF bandwidth needed by a given bit rate and error correction to reduce the error statistics of the channel down to a value acceptable to MPEG data.

MPEG compressed video is highly sensitive to bit errors, primarily because they confuse the recognition of variable length codes so that the decoder loses synchronization. However, MPEG is a compression and multiplexing standard and does not specify how error correction should be performed. Consequently a transmission standard must define a system which has to correct essentially all errors such that the delivery mechanism is transparent.

Essentially a transmission standard specifies all the additional steps needed to deliver an MPEG transport stream from one place to another. This transport stream will consist of a number of elementary streams of video and audio, where the audio may be coded according to the MPEG audio standard or AC-3. In a system working within its capabilities, the picture and sound quality will be determined only by the performance of the compression system and not by the RF transmission channel.

Whilst in one sense an MPEG transport stream is only data, it differs from generic data in that it must be presented to the viewer at a particular rate. Generic data are usually asynchronous, whereas baseband video and audio are synchronous. However, after compression and multiplexing audio and video are no longer precisely synchronous and so the term isochronous is used. This means a signal that was at one time synchronous and will be displayed synchronously, but which uses buffering at transmitter and receiver to accommodate moderate timing errors in the transmission.

Clearly another mechanism is needed so that the time axis of the original signal can be re-created on reception. The time stamp and program clock reference system of MPEG does this.

Figure 9.14 shows that the concepts involved in digital television broadcasting exist at various levels which have an independence not found in analog technology. In a given configuration a transmitter can radiate a given payload data bit rate. This represents the useful bit rate and does not include the necessary overheads needed by error correction, multiplexing or synchronizing. It is fundamental that the transmission system does not care what this payload bit rate is used for. The entire capacity may be used up by one high-definition channel, or a large number of heavily compressed channels may be carried. The details of this data usage are the domain of the transport stream. The multiplexing of transport streams is defined by the MPEG standards, but these do not define any error-correction or transmission technique.

Figure 9.14 Source coder doesn’t know delivery mechanism and delivery doesn’t need to know what the data mean.

At the lowest level in Figure 9.15 the source coding scheme, in this case MPEG compression, results in one or more elementary streams, each of which carries a video or audio channel. Elementary streams are multiplexed into a transport stream. The viewer then selects the desired elementary stream from the transport stream. Metadata in the transport stream ensure that when a video elementary stream is chosen, the appropriate audio elementary stream will automatically be selected.

Figure 9.15 Program Specific Information helps the demultiplexer to select the required program.

9.9 MPEG packets and time stamps

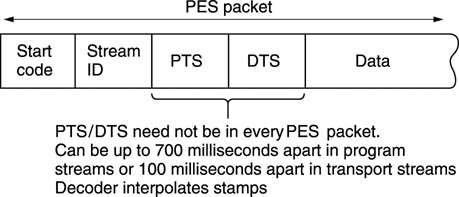

The video elementary stream is an endless bitstream representing pictures which take a variable length of time to transmit. Bidirectional coding means that pictures are not necessarily in the correct order. Storage and transmission systems prefer discrete blocks of data and so elementary streams are packetized to form a PES (packetized elementary stream). Audio elementary streams are also packetized. A packet is shown in Figure 9.16. It begins with a header containing a unique packet start code and a code which identifies the type of data stream. Optionally the packet header also may contain one or more time stamps which are used for synchronizing the video decoder to real time and for obtaining lip-sync.

Figure 9.16 A PES packet structure is used to break up the continuous elementary stream.
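The start of a PES header can be recognized with a short parser. This sketch assumes the MPEG-2 layout of a 24-bit start code prefix (0x000001), an 8-bit stream_id and a 16-bit packet length, with stream_id values 0xC0 to 0xDF for audio and 0xE0 to 0xEF for video; the optional fields, including time stamps, are not decoded.

```python
STREAM_KINDS = [
    (0xC0, 0xDF, "audio"),
    (0xE0, 0xEF, "video"),
]


def parse_pes_header(data: bytes):
    """Return (stream kind, stream_id, PES packet length) from the first six bytes."""
    if len(data) < 6 or data[0:3] != b"\x00\x00\x01":
        raise ValueError("no PES start code prefix")
    stream_id = data[3]
    length = (data[4] << 8) | data[5]    # 0 means 'unbounded', allowed for video in a TS
    kind = next((name for lo, hi, name in STREAM_KINDS if lo <= stream_id <= hi), "other")
    return kind, stream_id, length
```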

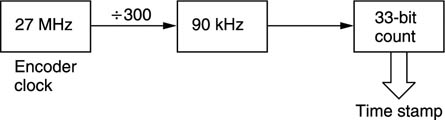

Figure 9.17 shows that a time stamp is a sample of the state of a counter which is driven by a 90 kHz clock. This is obtained by dividing down the master 27 MHz clock of MPEG-2. This 27 MHz clock must be locked to the video frame rate and the audio sampling rate of the program concerned. There are two types of time stamp: PTS and DTS. These are abbreviations for presentation time stamp and decode time stamp. A presentation time stamp determines when the associated picture should be displayed on the screen, whereas a decode time stamp determines when it should be decoded. In bidirectional coding these times can be quite different.

Figure 9.17 Time stamps are the result of sampling a counter driven by the encoder clock.
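The relationship between transmission order, DTS and PTS can be sketched for a simple group of pictures. This assumes 33-bit time stamps counting a 90 kHz clock (27 MHz ÷ 300), a frame rate that divides 90 000 exactly (25 Hz gives 3600 ticks per picture), and a decoder that needs one picture period of reordering delay; the function name is hypothetical.

```python
SYSTEM_CLOCK = 27_000_000
TS_CLOCK = SYSTEM_CLOCK // 300    # 90 kHz time-stamp clock
WRAP = 2 ** 33                    # time stamps are 33-bit counts


def stamps(display_types, frame_rate=25, start=0):
    """Return (type, display index, DTS, PTS) in transmission order for a simple GOP."""
    period = TS_CLOCK // frame_rate
    # Each anchor (I or P) is sent before the B pictures that precede it in display order.
    coded, pending_b = [], []
    for d, t in enumerate(display_types):
        if t == "B":
            pending_b.append(d)
        else:
            coded.append(d)
            coded.extend(pending_b)
            pending_b = []
    coded.extend(pending_b)
    out = []
    for n, d in enumerate(coded):
        dts = (start + n * period) % WRAP            # decoded in transmission order
        pts = (start + (d + 1) * period) % WRAP      # shown in display order, one picture later
        out.append((display_types[d], d, dts, pts))
    return out
```

For the display order IBBPBBP the pictures are sent as IPBBPBB; a B picture is displayed as soon as it is decoded (PTS equals DTS), whereas the P picture is decoded three picture periods before it is shown.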

Audio packets have only presentation time stamps. Clearly if lip-sync is to be obtained, the audio sampling rate of a given program must have been locked to the same master 27 MHz clock as the video and the time stamps must have come from the same counter driven by that clock.

In practice, the time between input pictures is constant and so there is a certain amount of redundancy in the time stamps. Consequently PTS/DTS need not appear in every PES packet. Time stamps can be up to 100 ms apart in transport streams. As each picture type (I, P or B) is flagged in the bitstream, the decoder can infer the PTS/DTS for every picture from the ones actually transmitted.

The MPEG-2 transport stream is intended to be a multiplex of many TV programs with their associated sound and data channels, although a single program transport stream (SPTS) is possible. The transport stream is based upon packets of constant size so that multiplexing, adding error-correction codes and interleaving in a higher layer is eased. Figure 9.18 shows that these are always 188 bytes long.

Figure 9.18 Transport stream packets are always 188 bytes long to facilitate multiplexing and error correction.

Transport stream packets always begin with a header. The remainder of the packet carries data known as the payload. For efficiency, the normal header is relatively small, but for special purposes the header may be extended. In this case the payload gets smaller so that the overall size of the packet is unchanged. Transport stream packets should not be confused with PES packets which are larger and vary in size. PES packets are broken up to form the payload of the transport stream packets.

The header begins with a sync byte which is a unique pattern detected by a demultiplexer. A transport stream may contain many different elementary streams and these are identified by giving each a unique thirteen-bit Packet Identification Code or PID which is included in the header. A demultiplexer seeking a particular elementary stream simply checks the PID of every packet and accepts only those which match.

In a multiplex there may be many packets from other programs in between packets of a given PID. To help the demultiplexer, the packet header contains a continuity count. This is a four-bit value which increments at each new packet having a given PID.
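PID filtering and the continuity check can be sketched as follows. This assumes the MPEG-2 four-byte header (sync byte 0x47, PID in the low five bits of byte 1 plus byte 2, continuity count in the low four bits of byte 3). It simplifies the real rules, which, for example, do not increment the count on packets that carry no payload; `make_packet` is a hypothetical helper for building test packets.

```python
SYNC_BYTE = 0x47
PACKET_SIZE = 188


def make_packet(pid, cc):
    """Hypothetical helper: a payload-only packet with the given PID and continuity count."""
    return bytes([SYNC_BYTE, (pid >> 8) & 0x1F, pid & 0xFF, 0x10 | (cc & 0x0F)]) + bytes(184)


def parse_ts_header(pkt: bytes):
    """Extract the fields a demultiplexer needs from a transport packet header."""
    if len(pkt) != PACKET_SIZE or pkt[0] != SYNC_BYTE:
        raise ValueError("not a 188-byte packet starting with the sync byte")
    pid = ((pkt[1] & 0x1F) << 8) | pkt[2]      # thirteen-bit PID
    payload_start = bool(pkt[1] & 0x40)
    continuity = pkt[3] & 0x0F                 # four-bit continuity count
    return pid, payload_start, continuity


def demux(packets, wanted_pid):
    """Keep packets with the wanted PID and count breaks in the continuity count."""
    kept, expected, gaps = [], None, 0
    for pkt in packets:
        pid, _, cc = parse_ts_header(pkt)
        if pid != wanted_pid:
            continue                           # another program's packet: ignore it
        if expected is not None and cc != expected:
            gaps += 1                          # at least one packet was lost
        expected = (cc + 1) % 16
        kept.append(pkt)
    return kept, gaps
```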

This approach allows statistical multiplexing as it does not matter how many or how few packets have a given PID; the demux will still find them. Statistical multiplexing has the problem that it is virtually impossible to make the sum of the input bit rates constant. Instead the multiplexer aims to make the average data bit rate slightly less than the maximum and the overall bit rate is kept constant by adding ‘stuffing’ or null packets. These packets have no meaning, but simply keep the bit rate constant. Null packets always have a PID of 8191 (all ones) and the demultiplexer discards them.
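Stuffing can be sketched as filling a fixed number of packet slots per interval: real packets go first and null packets (PID 0x1FFF) make up the rest, so the output packet rate never changes. The slot count and function names are hypothetical; the null packet payload content is arbitrary because the demultiplexer discards it.

```python
NULL_PID = 0x1FFF     # 8191, all ones


def null_packet():
    """Sync byte, PID 0x1FFF, payload only, continuity count 0; payload is ignored."""
    return bytes([0x47, 0x1F, 0xFF, 0x10]) + bytes([0xFF] * 184)


def stuff(queue, slots):
    """Fill a fixed number of packet slots: real packets first, null packets for the rest."""
    out = list(queue[:slots])
    out.extend(null_packet() for _ in range(slots - len(out)))
    return out


def drop_nulls(packets):
    """What the demultiplexer does: discard every packet carrying PID 8191."""
    return [p for p in packets if (((p[1] & 0x1F) << 8) | p[2]) != NULL_PID]
```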

9.10 Program clock reference

A transport stream is a multiplex of several TV programs and these may have originated from widely different locations. It is impractical to expect all the programs in a transport stream to be genlocked and so the stream is designed from the outset to allow unlocked programs. A decoder running from a transport stream has to genlock to the encoder and the transport stream has to have a mechanism to allow this to be done independently for each program. The synchronizing mechanism is called Program Clock Reference (PCR).

Figure 9.19 shows how the PCR system works. The goal is to re-create at the decoder a 27 MHz clock which is synchronous with that at the encoder. The encoder clock drives a forty-eight-bit counter which continuously counts up to the maximum value before overflowing and beginning again.

Figure 9.19 Program or System Clock Reference codes regenerate a clock at the decoder. See text for details.

A transport stream multiplexer will periodically sample the counter and place the state of the count in an extended packet header as a PCR (see Figure 9.18). The demultiplexer selects only the PIDs of the required program, and it will extract the PCRs from the packets in which they were inserted.

The PCR codes are used to control a numerically locked loop (NLL) described in section 2.9. The NLL contains a 27 MHz VCXO (voltage controlled crystal oscillator), a variable-frequency oscillator based on a crystal which has a relatively small frequency range.

The VCXO drives a forty-eight-bit counter in the same way as in the encoder. The state of the counter is compared with the contents of the PCR and the difference is used to modify the VCXO frequency. When the loop reaches lock, the decoder counter would arrive at the same value as is contained in the PCR and no change in the VCXO would then occur. In practice the transport stream packets will suffer from transmission jitter and this will create phase noise in the loop. This is removed by the loop filter so that the VCXO effectively averages a large number of phase errors.

A heavily damped loop will reject jitter well, but will take a long time to lock. Lockup time can be reduced when switching to a new program if the decoder counter is jammed to the value of the first PCR received in the new program. The loop filter may also have its time constants shortened during lockup.
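The loop can be simulated to show the trade-off between lock time and loop bandwidth. This is a sketch under several assumptions: a proportional-plus-integral loop filter, a PCR every 40 ms, no counter wraparound, and gains chosen so the loop is well damped. All names and numbers are hypothetical. The decoder counter is jammed to the first PCR, as described above.

```python
import random

NOMINAL_HZ = 27_000_000.0
PCR_INTERVAL = 0.04       # assumed seconds between PCRs


def run_nll(encoder_hz, gain=0.1, steps=3000, jitter_ticks=0.0, seed=1):
    """Simulate a PCR-driven numerically locked loop; return the VCXO frequency after each PCR.

    A smaller gain gives a narrower loop: better jitter rejection, slower lock.
    """
    rng = random.Random(seed)
    kp = gain / PCR_INTERVAL                  # Hz of correction per tick of phase error
    ki = (gain * gain / 4) / PCR_INTERVAL     # integral term remembers the frequency offset
    enc_count = encoder_hz * PCR_INTERVAL
    dec_count = enc_count                     # jam the decoder counter to the first PCR
    vcxo_hz, integ, history = NOMINAL_HZ, 0.0, []
    for _ in range(steps):
        enc_count += encoder_hz * PCR_INTERVAL            # encoder counter advances
        pcr = enc_count + rng.gauss(0.0, jitter_ticks)    # PCR as received, with jitter
        dec_count += vcxo_hz * PCR_INTERVAL               # decoder counter driven by the VCXO
        err = pcr - dec_count                             # phase error in 27 MHz ticks
        integ += ki * err
        vcxo_hz = NOMINAL_HZ + kp * err + integ           # loop filter steers the VCXO
        history.append(vcxo_hz)
    return history


def lock_step(history, encoder_hz, tolerance_hz=1.0):
    """Index after which the VCXO stays within tolerance of the encoder clock."""
    outside = [i for i, f in enumerate(history) if abs(f - encoder_hz) >= tolerance_hz]
    return outside[-1] + 1 if outside else 0
```

With an encoder running 30 ppm fast (810 Hz), both loop settings converge, but the narrow loop takes several times as many PCRs to do so.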

Once a synchronous 27 MHz clock is available at the decoder, this can be divided down to provide the 90 kHz clock which drives the time stamp mechanism.

The entire timebase stability of the decoder is no better than the stability of the clock derived from PCR. MPEG-2 sets standards for the maximum amount of jitter which can be present in PCRs in a real transport stream.

Clearly, if the 27 MHz clock in the receiver is locked to one encoder it can only receive elementary streams encoded with that clock. If it is attempted to decode, for example, an audio stream generated from a different clock, the result will be periodic buffer overflows or underflows in the decoder. Thus MPEG defines a program in a manner which relates to timing. A program is a set of elementary streams which have been encoded with the same master clock.

9.11 Program Specific Information (PSI)

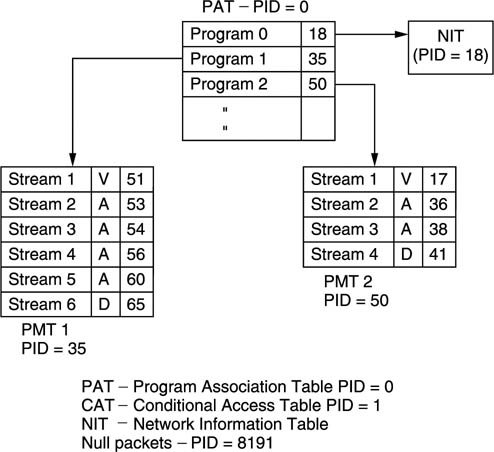

In a real transport stream, each elementary stream has a different PID, but the demultiplexer has to be told what these PIDs are and what audio belongs with what video before it can operate. This is the function of PSI which is a form of metadata. Figure 9.20 shows the structure of PSI. When a decoder powers up, it knows nothing about the incoming transport stream except that it must search for all packets with a PID of zero. PID zero is reserved for the Program Association Table (PAT). The PAT is transmitted at regular intervals and contains a list of all the programs in this transport stream. Each program is further described by its own Program Map Table (PMT) and the PIDs of the PMTs are contained in the PAT.

Figure 9.20 also shows that the PMTs fully describe each program. The PID of the video elementary stream is defined, along with the PID(s) of the associated audio and data streams. Consequently when the viewer selects a particular program, the demultiplexer looks up the program number in the PAT, finds the right PMT and reads the audio, video and data PIDs. It then selects elementary streams having these PIDs from the transport stream and routes them to the decoders.

Figure 9.20 MPEG-2 Program Specific Information (PSI) is used to tell a demultiplexer what the transport stream contains.
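The PAT-to-PMT lookup can be sketched with the tables already decoded into dictionaries. The table contents and PID values below are hypothetical; a real decoder would build them from section data carried in the stream.

```python
PAT_PID = 0x0000     # reserved for the Program Association Table
CAT_PID = 0x0001     # reserved for the Conditional Access Table
NULL_PID = 0x1FFF    # reserved for null packets

# Hypothetical decoded tables: program number -> PMT PID (program 0 points at the NIT).
pat = {0: 0x0010, 1: 0x0100, 2: 0x0200}
pmts = {
    0x0100: {"video": 0x0101, "audio": [0x0102, 0x0103], "data": []},
    0x0200: {"video": 0x0201, "audio": [0x0202], "data": [0x0204]},
}


def pids_for_program(program_number):
    """Follow PAT -> PMT to find every elementary-stream PID the demultiplexer must accept."""
    if program_number == 0:
        raise ValueError("program 0 points at the NIT, not a viewable program")
    pmt = pmts[pat[program_number]]
    return {pmt["video"], *pmt["audio"], *pmt["data"]}
```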

Program 0 of the PAT contains the PID of the Network Information Table (NIT). This contains information about what other transport streams are available. For example, in the case of a satellite broadcast, the NIT would detail the orbital position, the polarization, carrier frequency and modulation scheme. Using the NIT a set-top box could automatically switch between transport streams.

Apart from 0 and 8191, a PID of 1 is also reserved for the Conditional Access Table (CAT). This is part of the access control mechanism needed to support pay per view or subscription viewing.

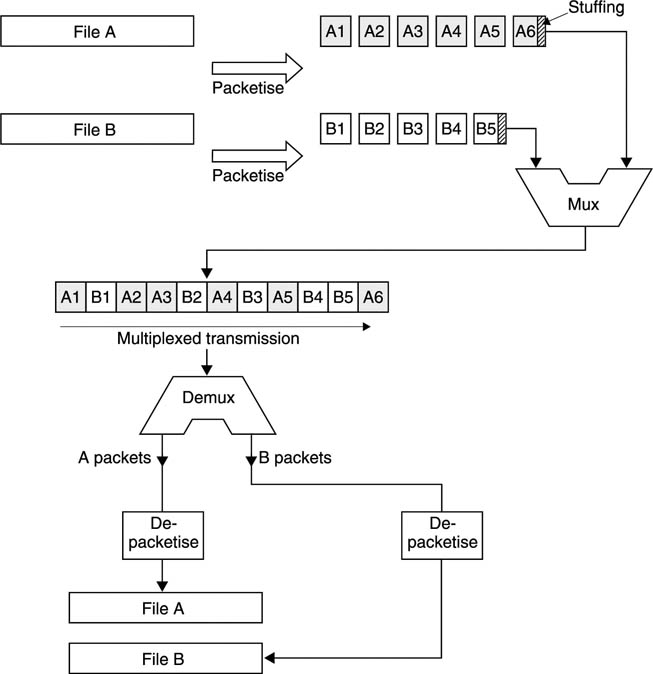

9.12 Transport stream multiplexing

A transport stream multiplexer is a complex device because of the number of functions it must perform. A fixed multiplexer will be considered first. In a fixed multiplexer, the bit rate of each of the programs must be specified so that the sum does not exceed the payload bit rate of the transport stream. The payload bit rate is the overall bit rate less the packet headers and PSI rate.

In practice, the programs will not be synchronous to one another, but the transport stream must produce a constant packet rate given by the bit rate divided by the packet length of 188 bytes (1504 bits). Figure 9.21 shows how this is handled. Each elementary stream entering the multiplexer passes through a buffer which is divided into payload-sized areas. Note that periodically the payload area is made smaller because of the requirement to insert PCR.

Figure 9.21 A transport stream multiplexer can handle several programs which are asynchronous to one another and to the transport stream clock. See text for details.

MPEG-2 decoders also have a quantity of buffer memory. The challenge to the multiplexer is to take packets from each program in such a way that neither its own buffers nor the buffers in any decoder either overflow or underflow. This requirement is met by sending packets from all programs as evenly as possible rather than bunching together a lot of packets from one program. When the bit rates of the programs are different, the only way this can be handled is to use the buffer contents indicators. The fuller a buffer is, the more likely it should be that a packet will be read from it. Thus a buffer-content arbitrator can decide which program should have a packet allocated next.

If the sum of the input bit rates is correct, the buffers should all slowly empty because the overall input bit rate has to be less than the payload bit rate. This allows for the insertion of Program Specific Information. Whilst PATs and PMTs are being transmitted, the program buffers will fill up again. The multiplexer can also fill the buffers by sending more PCRs as this reduces the payload of each packet. In the event that the multiplexer has sent enough of everything but still can’t fill a packet then it will send a null packet with a PID of 8191. Decoders will discard null packets and as they convey no useful data, the multiplexer buffers will all fill whilst null packets are being transmitted.
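A fixed multiplexer can be sketched as a loop over packet slots. This assumes a "fullest buffer wins" arbitrator, a 184-byte payload (no adaptation field) and hypothetical input rates; PSI and PCR insertion are left out. When no buffer holds a full payload, the slot goes to a null packet.

```python
PACKET_BYTES = 188
PAYLOAD_BYTES = 184    # assumes the minimum 4-byte header


def packet_rate(ts_bit_rate):
    """Packets per second for a constant-rate transport stream."""
    return ts_bit_rate / (PACKET_BYTES * 8)


def multiplex(input_rates, ts_bit_rate, n_slots):
    """Give each slot to the fullest buffer holding a full payload, else send a null packet.

    Returns the program index sent in each slot (None marks a null packet)
    and the final buffer fill in bytes.
    """
    slot_time = 1 / packet_rate(ts_bit_rate)
    fill = [0.0] * len(input_rates)
    sent = []
    for _ in range(n_slots):
        for i, rate in enumerate(input_rates):
            fill[i] += rate / 8 * slot_time             # data arriving during one slot
        ready = [i for i, f in enumerate(fill) if f >= PAYLOAD_BYTES]
        if ready:
            i = max(ready, key=lambda k: fill[k])        # fullest buffer wins the slot
            fill[i] -= PAYLOAD_BYTES
            sent.append(i)
        else:
            sent.append(None)                            # null packet, PID 8191
    return sent, fill
```

With 4 and 2 Mbit/s programs in an 8 Mbit/s stream, the first program receives about twice as many packets as the second, the buffers stay bounded, and the spare capacity appears as null packets.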

The use of null packets means that the bit rates of the elementary streams do not need to be synchronous with one another or with the transport stream bit rate. As each elementary stream can have its own PCR, it is not necessary for the different programs in a transport stream to be genlocked to one another; in fact they don’t even need to have the same frame rate.

This approach allows the transport stream bit rate to be accurately defined and independent of the timing of the data carried. This is important because the transport stream bit rate determines the spectrum of the transmitter and this must not vary.

In a statistical multiplexer or statmux, the bit rate allocated to each program can vary dynamically. Figure 9.22 shows that there must be a tight connection between the statmux and the associated compressors. Each compressor has a buffer memory which is emptied by a demand clock from the statmux. In a normal fixed-bit-rate coder the buffer content feeds back and controls the requantizer. In statmuxing this process is less severe and only takes place if the buffer is very close to full, because the degree of coding difficulty is also fed to the statmux.

Figure 9.22 A statistical multiplexer contains an arbitrator which allocates bit rate to each program as a function of program difficulty.

The statmux contains an arbitrator which allocates more packets to the program with the greatest coding difficulty. Thus if a particular program encounters difficult material it will produce large prediction errors and begin to fill its output buffer. As the statmux has allocated more packets to that program, more data will be read out of that buffer, preventing overflow. Of course this is only possible if the other programs in the transport stream are handling typical video.

In the event that several programs encounter difficult material at once, clearly the buffer contents will rise and the requantizing mechanism will have to operate.
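The statmux arbitrator can be sketched as sharing a fixed number of packets per interval in proportion to each coder's reported difficulty. The difficulty score, the largest-remainder rounding and the function name are assumptions for illustration; real statmuxes use their own allocation policies.

```python
def allocate(difficulty, packets_per_interval):
    """Share packet slots in proportion to coding difficulty (largest-remainder rounding)."""
    n = len(difficulty)
    weights = difficulty if sum(difficulty) > 0 else [1] * n
    total = sum(weights)
    shares = [w * packets_per_interval / total for w in weights]
    alloc = [int(s) for s in shares]
    leftover = packets_per_interval - sum(alloc)
    # Hand the remaining packets to the programs with the largest fractional shares.
    order = sorted(range(n), key=lambda i: shares[i] - alloc[i], reverse=True)
    for i in order[:leftover]:
        alloc[i] += 1
    return alloc
```

A single difficult program gets most of the packets; when every program is equally difficult, each gets an equal share and the coders must fall back on requantizing.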

9.13 Broadcast modulation techniques

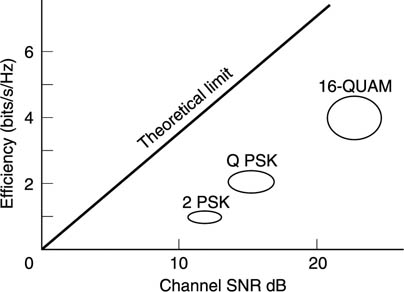

A key difference between analog and digital transmission is that the transmitter output is switched between a number of discrete states rather than continuously varying. A good code minimizes the channel bandwidth needed for a given bit rate. This quality of the code is measured in bits/s/Hz and is the equivalent of the density ratio in recording. Figure 9.23 shows, not surprisingly, that the less bandwidth required, the better the signal-to-noise ratio has to be. The figure shows the theoretical limit as well as the performance of a number of codes which offer different balances of bandwidth/noise performance.

Figure 9.23 Where a better SNR exists, more data can be sent down a given bandwidth channel.
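Assuming the theoretical limit plotted in Figure 9.23 is the Shannon capacity bound, C = B log₂(1 + SNR), the minimum SNR for a given number of bits/s/Hz follows directly. The function names are hypothetical.

```python
import math


def min_snr_db(bits_per_s_per_hz):
    """Shannon limit: the lowest SNR at which a given spectral efficiency is possible."""
    return 10 * math.log10(2 ** bits_per_s_per_hz - 1)


def capacity(bandwidth_hz, snr_db):
    """Shannon capacity C = B log2(1 + SNR), in bit/s."""
    return bandwidth_hz * math.log2(1 + 10 ** (snr_db / 10))
```

One bit/s/Hz needs at least 0 dB, two bits/s/Hz about 4.8 dB and six bits/s/Hz about 18 dB: each extra bit per hertz roughly doubles the required signal power.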

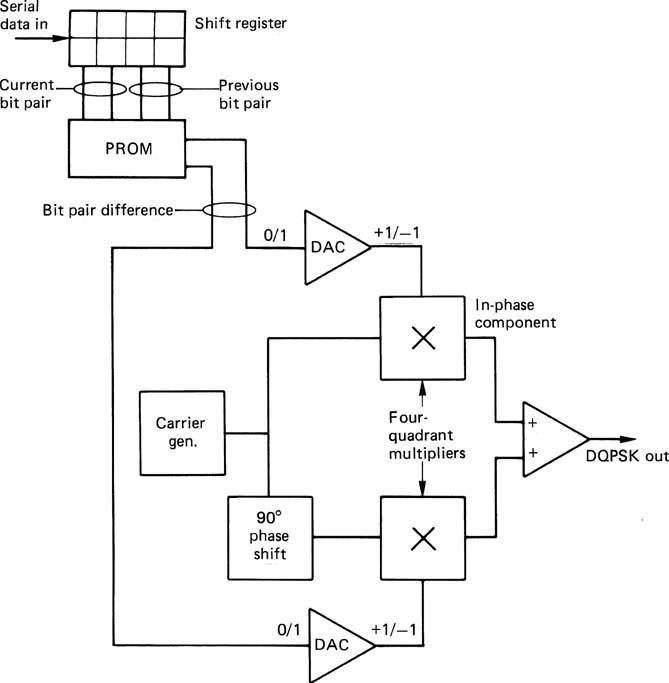

Where the SNR is poor, as in satellite broadcasting, the amplitude of the signal will be unstable, and phase modulation is used. Figure 9.24 shows that phase-shift keying (PSK) can use two or more phases. When four phases in quadrature are used, the result is Quadrature Phase Shift Keying or QPSK. Each period of the transmitted waveform can have one of four phases and therefore conveys the value of two data bits. 8-PSK uses eight phases and can carry three bits per symbol where the SNR is adequate. PSK is generally encoded in such a way that a knowledge of absolute phase is not needed at the receiver. Instead of encoding the signal phase directly, the data determine the magnitude of the phase shift between symbols. A QPSK coder is shown in Figure 9.25.

Figure 9.24 Differential quadrature phase shift keying (DQPSK).

Figure 9.25 A QPSK coder conveys two bits for each modulation period. See text for details.
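Differential QPSK can be sketched with complex baseband symbols: each pair of bits selects a phase change of 0°, 90°, 180° or 270°, and the receiver looks only at the phase difference between successive symbols. The bit-pair-to-phase-step table is an assumed Gray mapping and may not match the assignment in Figure 9.25.

```python
import cmath
import math

# Assumed Gray mapping from bit pairs to phase change, in quarter turns.
PHASE_STEP = {(0, 0): 0, (0, 1): 1, (1, 1): 2, (1, 0): 3}
BITS_FOR_STEP = {v: k for k, v in PHASE_STEP.items()}


def dqpsk_modulate(bits):
    """Turn an even-length bit list into unit-amplitude symbols; data set the phase change."""
    phase, symbols = 0, [1 + 0j]                     # reference symbol
    for i in range(0, len(bits), 2):
        phase = (phase + PHASE_STEP[(bits[i], bits[i + 1])]) % 4
        symbols.append(cmath.exp(1j * math.pi / 2 * phase))
    return symbols


def dqpsk_demodulate(symbols):
    """Recover bits from the phase difference between successive symbols."""
    bits = []
    for prev, cur in zip(symbols, symbols[1:]):
        diff = cmath.phase(cur * prev.conjugate())   # angle between symbols, -pi..pi
        step = round(diff / (math.pi / 2)) % 4
        bits.extend(BITS_FOR_STEP[step])
    return bits
```

Rotating every received symbol by an arbitrary fixed angle leaves the decoded bits unchanged, which is why absolute phase need not be known.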

In terrestrial transmission more power is available than, for example, from a satellite and so a stronger signal can be delivered to the receiver. Where a better SNR exists, an increase in data rate can be had using multi-level signalling or m-ary coding instead of binary. Figure 9.26 shows that the ATSC system uses an eight-level signal (8-VSB) allowing three bits to be sent per symbol. Four of the levels exist with normal carrier phase and four with inverted phase so that a phase-sensitive rectifier is needed in the receiver. Clearly, the data separator must have a three-bit ADC which can resolve the eight signal levels. The gain and offset of the signal must be precisely set so that the quantizing levels register precisely with the centres of the eyes. The transmitted signal contains sync pulses which are encoded using specified code levels so that the data separator can set its gain and offset.

Figure 9.26 In 8-VSB the transmitter operates in eight different states enabling three bits to be sent per symbol.

Multi-level signalling systems have the characteristic that the bits in the symbol have different error probability. Figure 9.27 shows that a small noise level will corrupt the low-order bit, whereas twice as much noise will be needed to corrupt the middle bit and four times as much will be needed to corrupt the high-order bit. In ATSC the solution is that the lower two bits are encoded together in an inner error-correcting scheme so that they represent only one bit with similar reliability to the top bit. As a result the 8-VSB system actually delivers two data bits per symbol even though eight-level signalling is used.

Figure 9.27 In multi-level signalling the error probability is not the same for each bit.
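The unequal reliability of the bits can be illustrated by counting which bits a one-level slicing error corrupts. This sketch assumes a natural-binary mapping of three-bit values onto the levels −7, −5, …, +7, which may differ from the mapping used in ATSC; the function names are hypothetical.

```python
LEVELS = [2 * v - 7 for v in range(8)]    # levels for values 0..7 (natural binary)


def slice_level(x):
    """Eight-level data separator: nearest level, returned as a three-bit value."""
    return min(range(8), key=lambda v: abs(x - LEVELS[v]))


def flips_per_bit():
    """For each bit, count how many one-level slicing errors corrupt it."""
    counts = [0, 0, 0]                    # [low-order, middle, high-order]
    for v in range(8):
        for wrong in (v - 1, v + 1):      # noise pushes the sample into a neighbouring eye
            if 0 <= wrong < 8:
                diff = v ^ wrong
                for b in range(3):
                    counts[b] += (diff >> b) & 1
    return counts
```

Every one of the 14 possible one-level errors corrupts the low-order bit, only 6 disturb the middle bit and only 2 reach the high-order bit.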

The modulation of the carrier results in a double-sideband spectrum, but following analog TV practice most of the lower sideband is filtered off leaving a vestigial sideband only, hence the term 8-VSB. A small DC offset is injected into the modulator signal so that the four in-phase levels are slightly higher than the four out-of-phase levels. This has the effect of creating a small pilot at the carrier frequency to help receiver locking.

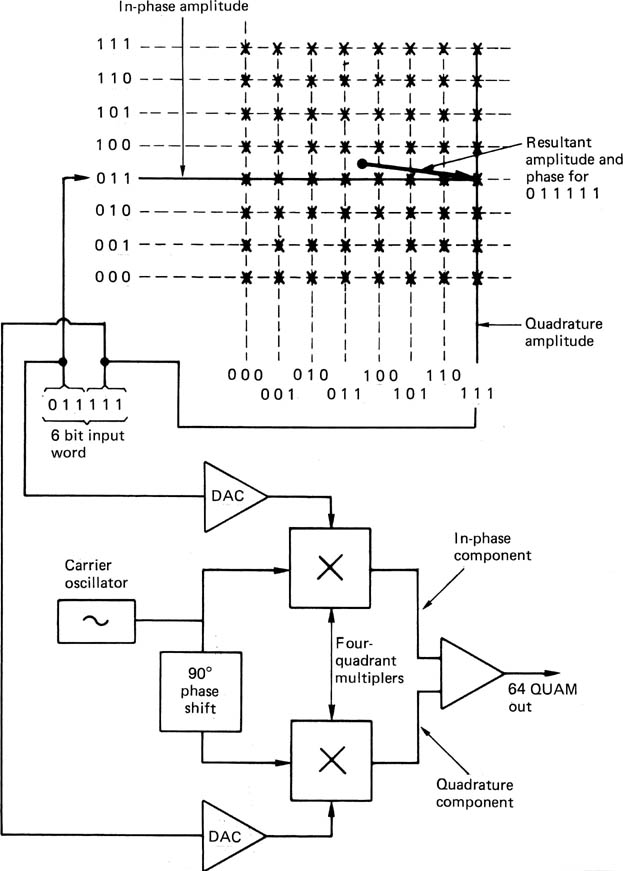

Multi-level signalling can be combined with PSK to obtain multi-level Quadrature Amplitude Modulation (QUAM). Figure 9.28 shows an example of 64-QUAM. Incoming six-bit data words are split into two three-bit words and each is used to amplitude modulate one of a pair of sinusoidal carriers which are generated in quadrature. The modulators are four-quadrant devices such that 2³ amplitudes are available, four of which are in phase with the carrier and four of which are antiphase. The two AM carriers are linearly added and the result is a signal which has 2⁶ or 64 combinations of amplitude and phase. There is a great deal of similarity between QUAM and the colour subcarrier used in analog television in which the two colour difference signals are encoded into one amplitude- and phase-modulated waveform. On reception, the waveform is sampled twice per cycle in phase with the two original carriers and the result is a pair of eight-level signals. 16-QUAM is also possible, delivering only four bits per symbol but requiring a lower SNR.

Figure 9.28 In 64-QUAM, two carriers are generated with a quadrature relationship. These are independently amplitude modulated to eight discrete levels in four quadrant multipliers. Adding the signals produces a QUAM signal having 64 unique combinations of amplitude and phase. Decoding requires the waveform to be sampled in quadrature like a colour TV subcarrier.
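The 64-QUAM process can be sketched directly from the description: split the six-bit word, drive two quadrature carriers with eight-level amplitudes, add them, and sample the sum at the peaks of each carrier. The level values (−7 to +7) and the function names are assumptions for illustration.

```python
import math

LEVELS = [2 * v - 7 for v in range(8)]    # eight amplitudes: four positive, four negative


def quam64_symbol(word):
    """Split a six-bit word into two three-bit words and map each to an amplitude."""
    if not 0 <= word < 64:
        raise ValueError("need a six-bit word")
    return LEVELS[word >> 3], LEVELS[word & 0b111]


def waveform_sample(i_amp, q_amp, theta):
    """Sum of the two amplitude-modulated quadrature carriers at carrier phase theta."""
    return i_amp * math.cos(theta) + q_amp * math.sin(theta)


def nearest_level(x):
    """Slice one eight-level signal back to a three-bit value."""
    return min(range(8), key=lambda v: abs(x - LEVELS[v]))


def quam64_decode(sample_at_0, sample_at_90):
    """Sample in phase with each carrier and slice both eight-level signals."""
    return (nearest_level(sample_at_0) << 3) | nearest_level(sample_at_90)
```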

The data bit patterns to be transmitted can have any combinations whatsoever, and if nothing were done, the transmitted spectrum would be non-uniform. This is undesirable because peaks cause interference with other services, whereas energy troughs allow external interference in. The randomizing technique of section 6.13 is used to overcome the problem. The process is known as energy dispersal. The signal energy is spread uniformly throughout the allowable channel bandwidth so that it has less energy at a given frequency.

A pseudo-random sequence generator is used to generate the randomizing sequence. Figure 9.29 shows the randomizer used in DVB. This sixteen-bit device has a maximum sequence length of 65 535 bits, and is preset to a standard value at the beginning of each set of eight transport stream packets. The serialized data are XORed with the LSB of the Galois field, which randomizes the output which then goes to the modulator. The spectrum of the transmission is now determined by the spectrum of the pseudo-random sequence.

Figure 9.29 (a) The randomizer of DVB is preset to the initial condition once every 8 transport stream packets. The maximum length of the sequence is 65 535 bits, but only the first 12 024 bits are used before resetting again (b).
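Energy dispersal can be sketched with a sixteen-bit maximal-length shift register. The taps used here (16, 14, 13, 11) and the seed are a textbook maximal-length example chosen for illustration; they are an assumption and are not claimed to be the generator polynomial or preset value of the DVB randomizer. Because the same sequence is XORed in at both ends, applying the process twice restores the data.

```python
def lfsr_step(state):
    """One step of a 16-bit Fibonacci LFSR with taps 16, 14, 13, 11 (illustrative choice)."""
    bit = (state ^ (state >> 2) ^ (state >> 3) ^ (state >> 5)) & 1
    return (state >> 1) | (bit << 15)


def lfsr_period(seed=0xACE1):
    """Number of steps before the register returns to its starting state."""
    state, n = lfsr_step(seed), 1
    while state != seed:
        state, n = lfsr_step(state), n + 1
    return n


def randomize(data: bytes, seed=0xACE1):
    """XOR serialized data with the pseudo-random sequence, restarting from the preset seed."""
    state, out = seed, bytearray()
    for byte in data:
        mask = 0
        for _ in range(8):
            state = lfsr_step(state)
            mask = (mask << 1) | (state & 1)
        out.append(byte ^ mask)
    return bytes(out)
```

Even an all-zero payload, which would otherwise produce a spectral spike, comes out as a busy pseudo-random pattern.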

9.14 OFDM

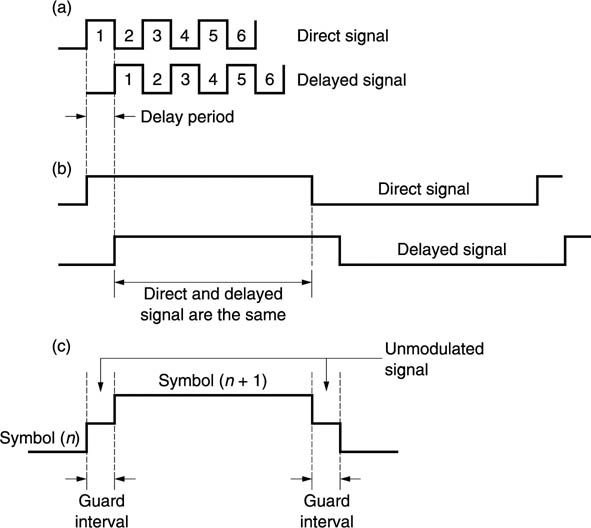

The way that radio signals interact with obstacles is a function of the relative magnitude of the wavelength and the size of the object. AM sound radio transmissions with a wavelength of several hundred metres can easily diffract around large objects. The shorter the wavelength of a transmission, the larger objects in the environment appear to it, and these objects can then become reflectors. Reflecting objects produce a delayed signal at the receiver in addition to the direct signal. In analog television transmissions this causes the familiar ghosting. In digital transmissions, the symbol rate may be so high that the reflected signal may be one or more symbols behind the direct signal, causing intersymbol interference. As the reflection may be continuous, the result may be that almost every symbol is corrupted. No error-correction system can handle this. Raising the transmitter power is no help at all as it simply raises the power of the reflection in proportion.

The only solution is to change the characteristics of the RF channel in some way to either prevent the multipath reception or stop it being a problem. The RF channel includes the modulator, transmitter, antennae, receiver and demodulator.

As with analog UHF TV transmissions, a directional antenna is useful with digital transmission as it can reject reflections. However, directional antennae tend to be large and they require a skilled permanent installation. Mobile use on a vehicle or vessel is simply impractical.

Another possibility is to incorporate a ghost canceller in the receiver. The transmitter periodically sends a standardized waveform known as a training sequence. The receiver knows what this waveform looks like and compares it with the received signal. In theory it is possible for the receiver to compute the delay and relative level of a reflection and so insert an opposing one. In practice if the reflection is strong it may prevent the receiver finding the training sequence.
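The principle of locating a reflection with a training sequence can be sketched by cross-correlation: the largest correlation peak marks the direct signal, and the next strongest later peak gives the delay and relative level of the echo. The 13-element Barker sequence used here is an assumed training waveform chosen for its clean autocorrelation, not the sequence specified by any broadcast standard.

```python
BARKER_13 = [1, 1, 1, 1, 1, -1, -1, 1, 1, -1, 1, -1, 1]    # assumed training sequence


def correlate(received, training):
    """Cross-correlation of the received samples against the known training sequence."""
    n = len(training)
    return [sum(received[lag + k] * training[k] for k in range(n))
            for lag in range(len(received) - n + 1)]


def estimate_echo(received, training, min_separation=1):
    """Return (delay in samples, relative level) of the strongest reflection."""
    c = correlate(received, training)
    direct = max(range(len(c)), key=lambda i: abs(c[i]))          # direct signal peak
    echo = max(range(direct + min_separation, len(c)), key=lambda i: abs(c[i]))
    return echo - direct, c[echo] / c[direct]
```

A canceller could then subtract a copy of the signal at that delay and level; if the reflection were as strong as the direct signal, the two peaks could not be told apart.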

The most elegant approach is to use a system in which multipath reception conditions cause only a small increase in error rate which the error-correction system can manage. This approach is used in DVB. Figure 9.30(a) shows that when using one carrier with a high bit rate, reflections can easily be delayed by one or more bit periods, causing interference between the bits. Figure 9.30(b) shows that instead, OFDM sends many carriers each having a low bit rate. When a low bit rate is used, the energy in the reflection will arrive during the same bit period as the direct signal. Not only is the system immune to multipath reflections, but the energy in the reflections can actually be used. This characteristic can be enhanced by using guard intervals shown in Figure 9.30(c). These reduce multipath bit overlap even more.

Figure 9.30 (a) High bit rate transmissions are prone to corruption due to reflections. (b) If the bit rate is reduced the effect of reflections is eliminated, in fact reflected energy can be used. (c) Guard intervals may be inserted between symbols.

Note that OFDM is not a modulation scheme, and each of the carriers used in an OFDM system still needs to be modulated using any of the digital coding schemes described above. What OFDM does is to provide an efficient way of packing many carriers close together without mutual interference.

A serial data waveform basically contains a train of rectangular pulses. The Fourier transform of a rectangle is the function sin x/x and so the baseband pulse train has a sin x/x spectrum. When this waveform is used to modulate a carrier the result is a symmetrical sin x/x spectrum centred on the carrier frequency. Figure 9.31(a) shows that nulls in the spectrum appear spaced at multiples of the bit rate away from the carrier.

Figure 9.31 In OFDM the carrier spacing is critical, but when correct the carriers become independent and most efficient use is made of the spectrum. (a) Spectrum of bitstream has regular nulls. (b) Peak of one carrier occurs at null of another.

Further carriers can be placed at spacings such that each is centred at the nulls of the others, as shown in Figure 9.31(b). The distance between the carriers is then equal to the bit rate of each carrier, so that over one bit period each carrier completes exactly one more cycle than its lower neighbour. With this spacing the carriers are mutually orthogonal, hence the term ‘orthogonal frequency division’. A large number of such carriers (in practice, several thousand) will be interleaved to produce an overall spectrum which is almost rectangular and which fills the available transmission channel.
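Orthogonality can be demonstrated by correlating two carriers over one symbol period T. This sketch treats each carrier as a complex exponential (an assumption that covers any carrier phase) and approximates the integral with a midpoint sum. Carriers spaced by exactly 1/T give zero; a spacing of half that does not.

```python
import cmath
import math


def carrier_correlation(f1_hz, f2_hz, symbol_s, samples=4096):
    """|(1/T) * integral over one symbol of exp(j2πf1t) * conj(exp(j2πf2t)) dt|,
    approximated by a midpoint sum. Zero means the carriers are orthogonal."""
    dt = symbol_s / samples
    acc = 0j
    for n in range(samples):
        t = (n + 0.5) * dt
        acc += cmath.exp(2j * math.pi * (f1_hz - f2_hz) * t)
    return abs(acc * dt / symbol_s)


T = 1e-3  # assumed symbol period: 1 ms, i.e. 1 kbit/s per carrier
print(carrier_correlation(10_000, 10_000, T))  # same carrier
print(carrier_correlation(10_000, 11_000, T))  # spaced by 1/T
print(carrier_correlation(10_000, 10_500, T))  # spaced by 1/(2T)
```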

When guard intervals are used, the carrier returns to an unmodulated state between bits for a period longer than the delay of the reflections. The reflections from one transmitted bit then decay during the guard interval before the next bit is transmitted. The use of guard intervals reduces the bit rate of the carrier because for some of the time it is radiating carrier, not data. A typical reduction is to around 80 per cent of the capacity without guard intervals.
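If the guard interval is expressed as a fraction of the useful symbol period, the proportion of airtime carrying data is 1/(1 + ratio). The ratios below are the four guard intervals offered by DVB-T; the 80 per cent figure quoted above corresponds to the longest, 1/4.

```python
from fractions import Fraction


def useful_fraction(guard_ratio):
    """Fraction of airtime carrying data when a guard interval of
    guard_ratio * Tu is added to each useful symbol period Tu."""
    return 1 / (1 + guard_ratio)


DVB_T_GUARD_RATIOS = (Fraction(1, 4), Fraction(1, 8), Fraction(1, 16), Fraction(1, 32))

for g in DVB_T_GUARD_RATIOS:
    print(f"guard {g}: {float(useful_fraction(g)):.1%} of capacity")
```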

This capacity reduction does, however, improve the error statistics dramatically, such that much less redundancy is required in the error-correction system. Thus the effective transmission rate is improved. The use of guard intervals also moves more energy from the sidebands back to the carrier. The frequency spectrum of a set of carriers is no longer perfectly flat but contains a small peak at the centre of each carrier.

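The ability to work in the presence of multipath cancellation is one of the great strengths of OFDM. In DVB-T, single-transmitter systems can use the 2K mode, which has 1705 active carriers within a 2048-point FFT. Provided there is exact synchronism, several transmitters can radiate exactly the same signal so that a single-frequency network (SFN) can be created throughout a whole country. SFNs normally use a variation on OFDM with over 6800 active carriers (the 8K mode, with an 8192-point FFT). Its symbols are four times longer, so the guard interval can be long enough to absorb the delayed signals arriving from distant transmitters.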
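The benefit of more carriers for an SFN can be quantified by converting the guard interval into a path-length difference. This sketch assumes the DVB-T figures for an 8 MHz channel (elementary period 7/64 µs, so useful symbols of 224 µs in 2K mode and 896 µs in 8K mode) and rounds the speed of light to 3 × 10^8 m/s.

```python
# Assumed DVB-T parameters for an 8 MHz channel.
ELEMENTARY_PERIOD_S = 7 / 64 * 1e-6
FFT_SIZE = {"2K": 2048, "8K": 8192}
SPEED_OF_LIGHT_M_S = 3.0e8  # rounded


def guard_interval_s(mode, guard_ratio):
    """Guard interval duration: guard_ratio times the useful symbol period."""
    useful_symbol_s = FFT_SIZE[mode] * ELEMENTARY_PERIOD_S
    return guard_ratio * useful_symbol_s


def max_path_difference_km(mode, guard_ratio):
    """Largest extra path length an echo or distant transmitter may have
    while still arriving within the guard interval."""
    return guard_interval_s(mode, guard_ratio) * SPEED_OF_LIGHT_M_S / 1000


for mode in FFT_SIZE:
    print(mode, f"{max_path_difference_km(mode, 0.25):.1f} km with a 1/4 guard interval")
```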

With OFDM, directional antennae are not needed and, given sufficient field strength, mobile reception is perfectly feasible. Of course, directional antennae may still be used to boost the received signal outside of normal service areas or to enable the use of low-powered transmitters.

An OFDM receiver must perform fast Fourier transforms (FFTs) on the whole band at the symbol rate of one of the carriers. The amplitude and/or phase of the carrier at a given frequency effectively reflects the state of the transmitted symbol at that time slot and so the FFT partially demodulates as well.
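The relationship between transmitter and receiver can be sketched with a discrete Fourier transform: the transmitter places one symbol on each carrier and takes an inverse transform to obtain the time-domain waveform, and the receiver's forward transform recovers the per-carrier values, which are then sliced back to bits. This toy uses eight carriers, a plain O(N²) DFT in place of an FFT, and an assumed Gray-coded QPSK mapping; it ignores noise, guard intervals and pilots.

```python
import cmath

# Assumed Gray-coded QPSK mapping, for illustration only.
QPSK = {(0, 0): 1 + 1j, (0, 1): -1 + 1j, (1, 1): -1 - 1j, (1, 0): 1 - 1j}


def dft(x, inverse=False):
    """Plain discrete Fourier transform; an FFT computes the same result faster."""
    n = len(x)
    sign = 1 if inverse else -1
    out = []
    for k in range(n):
        acc = sum(x[m] * cmath.exp(sign * 2j * cmath.pi * k * m / n) for m in range(n))
        out.append(acc / n if inverse else acc)
    return out


def ofdm_modulate(carrier_symbols):
    """One OFDM symbol: carrier k carries carrier_symbols[k]."""
    return dft(carrier_symbols, inverse=True)


def ofdm_demodulate(samples):
    """The receiver's transform returns the amplitude and phase of every carrier."""
    return dft(samples)


def slice_qpsk(value):
    """Choose the constellation point nearest to the received value."""
    return min(QPSK, key=lambda bits: abs(QPSK[bits] - value))


bits = [(0, 0), (0, 1), (1, 1), (1, 0), (1, 1), (0, 0), (1, 0), (0, 1)]
received = ofdm_demodulate(ofdm_modulate([QPSK[b] for b in bits]))
print([slice_qpsk(v) for v in received] == bits)
```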

In order to assist with tuning in, the OFDM spectrum contains pilot signals. These are individual carriers which are transmitted with slightly more power than the remainder. The pilot carriers are spaced apart through the whole channel at agreed frequencies which form part of the transmission standard.

Practical reception conditions, including multipath reception, will cause significant variation in the received spectrum, so some equalization will be needed. Figure 9.32 shows what the received spectrum may look like in the presence of a powerful reflection: the signal has almost been cancelled at certain frequencies. However, the FFT performed in the receiver is effectively a spectral analysis of the signal, so the receiver obtains the received spectrum at no extra cost. In a flat spectrum the peak magnitude of all the coefficients would be the same (apart from the pilots), so equalization is easily performed by multiplying the coefficients by suitable constants until this characteristic is obtained.
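A minimal sketch of this per-carrier equalization follows. It assumes a two-path channel (direct signal plus one reflection at 0.8 of its amplitude) and, for simplicity, a reference symbol known on every carrier, standing in for the pilots and for interpolation between them. Each FFT coefficient is then multiplied by the reciprocal of the estimated channel response at that carrier. Noise is ignored; in practice the carriers in a deep notch come out noisy and are left to the error correction.

```python
import cmath


def two_path_channel(num_carriers, spacing_hz, echo_gain, echo_delay_s):
    """Per-carrier response of a direct path plus one delayed reflection."""
    return [1 + echo_gain * cmath.exp(-2j * cmath.pi * k * spacing_hz * echo_delay_s)
            for k in range(num_carriers)]


def estimate_channel(received_ref, known_ref):
    """Channel response at each carrier from a reference symbol the receiver knows."""
    return [r / t for r, t in zip(received_ref, known_ref)]


def equalize(received, channel_estimate):
    """Multiply each FFT coefficient by the reciprocal of its channel response."""
    return [r / h for r, h in zip(received, channel_estimate)]


N = 16
H = two_path_channel(N, spacing_hz=1000.0, echo_gain=0.8, echo_delay_s=0.25e-3)
reference = [1 + 0j] * N
data = [(1 + 1j) if k % 2 else (1 - 1j) for k in range(N)]

estimate = estimate_channel([h * s for h, s in zip(H, reference)], reference)
restored = equalize([h * s for h, s in zip(H, data)], estimate)
print("deepest notch:", round(min(abs(h) for h in H), 3))
```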

Figure 9.32 Multipath reception can place notches in the channel spectrum. This will require equalization at the receiver.

Although a transform-based receiver appears complex, the same FFT also provides effective equalization, so its complexity is not significantly higher than that of a conventional receiver, which needs a separate spectral analysis system just for equalization purposes.

The only drawback of OFDM is that the transmitter must be highly linear to prevent intermodulation between the carriers. This is readily achieved in terrestrial transmitters by derating the transmitter so that it runs at a lower power than it would in analog service. This is not practicable in satellite transmitters which are optimized for efficiency so OFDM is not really suitable for satellite use.

9.15 Error correction in digital television broadcasting

As in recording, broadcast data suffer from both random and burst errors and the error-correction strategies of digital television broadcasting have to reflect that. Figure 9.33 shows a typical system in which inner and outer codes are employed. Reed–Solomon codes are universally used as burst-correcting outer codes, along with an interleave which is convolutional rather than the block-based interleave used in recording media. The inner codes will not be R–S, as more suitable codes exist for the statistical conditions prevalent in broadcasting. DVB uses a parity-based variable-rate system in which the amount of redundancy can be adjusted according to reception conditions. ATSC uses a fixed-rate parity-based system along with trellis coding to overcome co-channel interference from analog NTSC transmitters.

Figure 9.33 Error-correcting strategy of digital television broadcasting systems.

9.16 DVB

The DVB system is subdivided into systems optimized for satellite, cable and terrestrial delivery. This section concentrates on the terrestrial delivery system. Figure 9.34 shows a block diagram of a DVB-T transmitter.

Figure 9.34 DVB-T transmitter block diagram. See text for details.

Incoming transport stream packets of 188 bytes each are first subject to R-S outer coding. This adds 16 bytes of redundancy to each packet, resulting in 204 bytes. Outer coding is followed by interleaving. The interleave mechanism is shown in Figure 9.35. Outer code blocks are commutated on a byte basis into twelve parallel channels. Each channel contains a different amount of delay, typically achieved by a ring-buffer RAM. The delays are integer multiples of 17 bytes, designed to skew the data by one outer block (12 × 17 = 204). Following the delays, a commutator reassembles interleaved outer blocks. These have 204 bytes as before, but the effect of the interleave is that adjacent bytes in the input are 17 bytes apart in the output. Each output block contains data from twelve input blocks making the data resistant to burst errors.
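The interleaver of Figure 9.35 and its complementary de-interleaver can be sketched with one FIFO per branch and a commutator that visits the branches in turn. Branch j of the interleaver holds j × 17 bytes and branch j of the de-interleaver holds (11 − j) × 17 bytes, so every byte suffers the same total delay. The function names are hypothetical and the FIFOs start filled with zeros.

```python
from collections import deque


def _commutate(data, delays, fill=0):
    """Feed bytes round-robin through FIFOs whose lengths are given in bytes."""
    fifos = [deque([fill] * d) for d in delays]
    out = []
    for i, byte in enumerate(data):
        fifo = fifos[i % len(fifos)]
        fifo.append(byte)
        out.append(fifo.popleft())
    return out


def interleave(data, branches=12, depth=17):
    # Branch 0 is undelayed, so bytes at multiples of 204 (the sync bytes) pass straight through.
    return _commutate(data, [j * depth for j in range(branches)])


def deinterleave(data, branches=12, depth=17):
    # Complementary delays make the total delay identical for every branch.
    return _commutate(data, [(branches - 1 - j) * depth for j in range(branches)])


TOTAL_DELAY = 11 * 17 * 12  # end-to-end delay in bytes: 2244

data = [i % 256 for i in range(6000)]
tx = interleave(data)
rx = deinterleave(tx)
print(rx[TOTAL_DELAY:TOTAL_DELAY + 8], data[:8])
```

A burst hitting twelve consecutive bytes of the interleaved stream is spread out by the de-interleaver so that the errored bytes end up at least 203 bytes apart, which is why each R–S block sees only a small share of the burst.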

Figure 9.35 The interleaver of DVB uses 12 incrementing delay channels to reorder the data. The sync byte passes through the undelayed channel and so is still at the head of the packet after interleave. However, the packet now contains non-adjacent bytes from 12 different packets.

The energy-dispersal process is applied to the transport stream before outer coding, which is why the receiver derandomizes only after R–S correction. The pseudo-random sequence runs over eight transport packets and is synchronized by inverting the transport stream packet sync symbol in every eighth packet. The packet sync symbols are not randomized.
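The sketch below follows the DVB arrangement as the author understands it from the standard: a 15-stage generator for 1 + x^14 + x^15, preset to 100101010000000 at the start of every group of eight packets. The first sync byte of each group is inverted (0x47 becomes 0xB8); during the other seven sync bytes the generator keeps running but its output is not applied. The initial state, the MSB-first bit order and all function names should be treated as assumptions.

```python
SYNC, INVERTED_SYNC = 0x47, 0xB8
INIT_STATE = [1, 0, 0, 1, 0, 1, 0, 1, 0, 0, 0, 0, 0, 0, 0]  # stages 1..15 (assumed preset)


def prbs_bits():
    """Generator for 1 + x^14 + x^15: each output bit is stage 14 XOR stage 15."""
    reg = list(INIT_STATE)
    while True:
        bit = reg[13] ^ reg[14]
        reg = [bit] + reg[:-1]
        yield bit


def next_byte(bits):
    value = 0
    for _ in range(8):
        value = (value << 1) | next(bits)  # first PRBS bit goes to the MSB
    return value


def randomize(packets):
    """XOR packet payloads with the PRBS, restarting it every eight 188-byte packets."""
    out = []
    for start in range(0, len(packets), 8):
        bits = prbs_bits()
        for k, pkt in enumerate(packets[start:start + 8]):
            if k == 0:
                sync = INVERTED_SYNC   # marks the start of the PRBS period
            else:
                sync = pkt[0]
                next_byte(bits)        # PRBS runs on but is not applied to the sync byte
            payload = bytes(b ^ next_byte(bits) for b in pkt[1:])
            out.append(bytes([sync]) + payload)
    return out


def derandomize(packets):
    """XOR is self-inverse; afterwards every sync byte is restored to 0x47."""
    return [bytes([SYNC]) + pkt[1:] for pkt in randomize(packets)]


packets = [bytes([SYNC]) + bytes((i * 7 + j) % 256 for j in range(187)) for i in range(16)]
print(derandomize(randomize(packets)) == packets)
```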

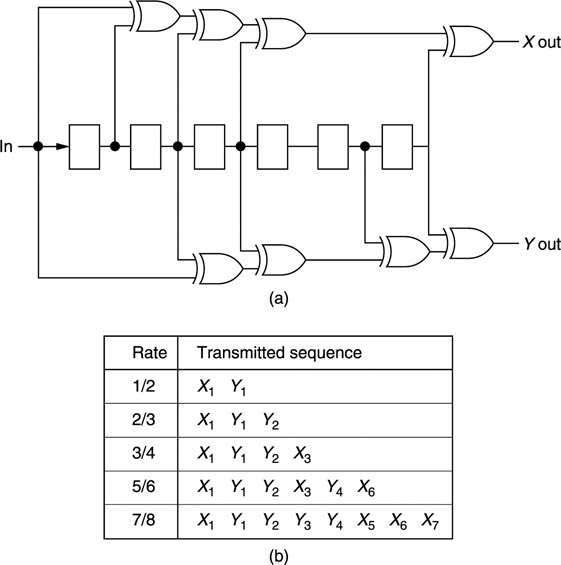

The inner coding process of DVB is shown in Figure 9.36. Input data are serialized and pass down a shift register. Exclusive-OR gates produce convolutional parity symbols X and Y, such that the output bit rate is twice the input bit rate. Under the worst reception conditions, this 100 per cent redundancy offers the most powerful correction with the penalty that a low data rate is delivered. However, Figure 9.36 also shows that a variety of inner redundancy factors can be used from 1/2 down to 1/8 of the transmitted bit rate. The X, Y data from the inner coder are subsampled, such that the coding is punctured.
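The mother coder and the puncturing can be sketched as follows. The generator polynomials (171 and 133 octal, seven-bit window) and the X/Y puncturing patterns are those the author believes DVB-T specifies; treat them, the tap ordering and the function names as assumptions. A "1" in a pattern means that bit is transmitted; the kept bits are sent X before Y at each position.

```python
G1, G2 = 0o171, 0o133  # assumed generators for X and Y (constraint length 7)

PUNCTURE = {  # (X pattern, Y pattern): 1 = transmit, 0 = delete
    "1/2": ("1", "1"),
    "2/3": ("10", "11"),
    "3/4": ("101", "110"),
    "5/6": ("10101", "11010"),
    "7/8": ("1000101", "1111010"),
}


def parity(value):
    return bin(value).count("1") & 1


def mother_encode(bits):
    """Rate-1/2 convolutional coder: exclusive-OR parity bits X and Y per input bit."""
    state, xs, ys = 0, [], []
    for b in bits:
        state = (state >> 1) | (b << 6)  # newest bit in the MSB of a 7-bit window
        xs.append(parity(state & G1))
        ys.append(parity(state & G2))
    return xs, ys


def puncture(xs, ys, rate):
    """Subsample the X and Y streams according to the chosen code rate."""
    px, py = PUNCTURE[rate]
    period = len(px)
    out = []
    for i, (x, y) in enumerate(zip(xs, ys)):
        if px[i % period] == "1":
            out.append(x)
        if py[i % period] == "1":
            out.append(y)
    return out


bits = [(i * 5 + i // 3) % 2 for i in range(210)]
xs, ys = mother_encode(bits)
for rate in PUNCTURE:
    print(rate, len(bits), "data bits ->", len(puncture(xs, ys, rate)), "channel bits")
```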

Figure 9.36 (a) The mother inner coder of DVB produces 100 per cent redundancy, but this can be punctured by subsampling the X and Y data to give five different code rates as (b) shows.

The DVB standard allows the use of QPSK, 16-QAM or 64-QAM coding in an OFDM system. There are five possible inner code rates and four different guard intervals which can be used with each modulation scheme. Thus for each modulation scheme there are twenty possible transport stream bit rates in the standard DVB channel, each of which requires a different receiver SNR. The broadcaster can select any suitable balance between transport stream bit rate and coverage area. For a given transmitter location and power, reception over a larger area may require a channel code with a smaller number of bits/s/Hz and this reduces the bit rate which can be delivered in a standard channel. Alternatively a higher amount of inner redundancy means that the proportion of the transmitted bit rate which is data goes down. Thus for wider coverage the broadcaster will have to send fewer programs in the multiplex or use higher compression factors.
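The twenty bit rates per modulation scheme can be computed directly. This sketch assumes the DVB-T figures for an 8 MHz channel in 2K mode: 1512 data-bearing carriers per symbol and a useful symbol period of 224 µs. The 8K mode gives the same rates, because it has four times the carriers and four times the symbol period. The payload per symbol is reduced by the inner code rate and by the outer code (188 data bytes in 204).

```python
from fractions import Fraction
from itertools import product

# Assumed DVB-T parameters: 8 MHz channel, 2K mode.
DATA_CARRIERS_2K = 1512
USEFUL_SYMBOL_S = 224e-6
RS_EFFICIENCY = Fraction(188, 204)
BITS_PER_CARRIER = {"QPSK": 2, "16-QAM": 4, "64-QAM": 6}
CODE_RATES = [Fraction(1, 2), Fraction(2, 3), Fraction(3, 4), Fraction(5, 6), Fraction(7, 8)]
GUARD_RATIOS = [Fraction(1, 4), Fraction(1, 8), Fraction(1, 16), Fraction(1, 32)]


def ts_bit_rate(modulation, code_rate, guard_ratio):
    """Transport stream bit rate in bit/s for one parameter combination."""
    payload_bits = DATA_CARRIERS_2K * BITS_PER_CARRIER[modulation] * code_rate * RS_EFFICIENCY
    symbol_s = USEFUL_SYMBOL_S * (1 + guard_ratio)
    return float(payload_bits) / symbol_s


table = {m: [ts_bit_rate(m, c, g) for c, g in product(CODE_RATES, GUARD_RATIOS)]
         for m in BITS_PER_CARRIER}
for m, rates in table.items():
    print(f"{m}: {len(rates)} rates, {min(rates)/1e6:.2f} to {max(rates)/1e6:.2f} Mbit/s")
```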

Figure 9.37 shows a block diagram of a DVB receiver. The off-air RF signal is fed to a mixer driven by the local oscillator. The IF output of the mixer is bandpass filtered and supplied to the ADC which outputs a digital IF signal for the FFT stage. The FFT is analysed initially to find the higher-level pilot signals. If these are not in the correct channels the local oscillator frequency is incorrect and it will be changed until the pilots emerge from the FFT in the right channels. The data in the pilots will be decoded in order to tell the receiver how many carriers, what inner code rate, guard interval and modulation scheme are in use in the remaining carriers. The FFT magnitude information is also a measure of the equalization required.

Figure 9.37 DVB receiver block diagram. See text for details.

The FFT outputs are demodulated into 2K or 8K bitstreams and these are multiplexed to produce a serial signal. This is subject to inner error correction which corrects random errors. The data are then de-interleaved to break up burst errors and then the outer R–S error-correction operates. The output of the R–S correction will then be derandomized to become an MPEG transport stream once more. The derandomizing is synchronized by the transmission of inverted sync patterns.

The receiver must first select packets with a PID of 0 and wait until a Program Association Table (PAT) is transmitted. This lists the available programs together with the PIDs of their Program Map Tables (PMTs). By looking for these packets the receiver can determine which PIDs to select to receive any video and audio elementary streams.
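The first step of this search can be sketched by reading the PID from each packet header and, on PID 0, unpacking the program loop of the PAT. This is a minimal sketch assuming the MPEG-2 systems layout, a PAT that fits in one packet and starts in it, and no CRC check; the function names are hypothetical.

```python
def parse_ts_header(packet):
    """Return (pid, payload_unit_start, payload) for one 188-byte packet."""
    if len(packet) != 188 or packet[0] != 0x47:
        raise ValueError("not an aligned 188-byte transport stream packet")
    pusi = bool(packet[1] & 0x40)
    pid = ((packet[1] & 0x1F) << 8) | packet[2]
    afc = (packet[3] >> 4) & 0x3           # adaptation_field_control
    start = 4
    if afc in (2, 3):                      # skip the adaptation field
        start += 1 + packet[4]
    return pid, pusi, packet[start:] if afc in (1, 3) else b""


def parse_pat(packet):
    """Return {program_number: pmt_pid} from a single-packet PAT (CRC not checked)."""
    pid, pusi, payload = parse_ts_header(packet)
    if pid != 0 or not pusi:
        raise ValueError("expected the start of a PAT section on PID 0")
    section = payload[1 + payload[0]:]     # skip pointer_field
    if section[0] != 0x00:
        raise ValueError("table_id is not 0x00 (PAT)")
    section_length = ((section[1] & 0x0F) << 8) | section[2]
    loop = section[8:3 + section_length - 4]   # after fixed header, before CRC_32
    programs = {}
    for i in range(0, len(loop), 4):
        number = (loop[i] << 8) | loop[i + 1]
        entry_pid = ((loop[i + 2] & 0x1F) << 8) | loop[i + 3]
        if number != 0:                    # program 0 points at the network PID instead
            programs[number] = entry_pid
    return programs


header = bytes([0x47, 0x40, 0x00, 0x10])   # PID 0, payload_unit_start set, payload only
section = bytes([0x00, 0xB0, 0x0D, 0x00, 0x01, 0xC1, 0x00, 0x00,
                 0x00, 0x01, 0xE1, 0x00,   # program 1 -> PMT on PID 0x100
                 0x00, 0x00, 0x00, 0x00])  # placeholder CRC_32
payload = bytes([0x00]) + section
packet = header + payload + bytes([0xFF]) * (188 - len(header) - len(payload))
print(parse_pat(packet))
```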

When an elementary stream is selected, some of the packets will have extended headers containing program clock reference (PCR). These codes are used to synchronize the 27 MHz clock in the receiver to the one in the MPEG encoder of the desired program. The 27 MHz clock is divided down to drive the time stamp counter so that audio and video emerge from the decoder at the correct rate and with lip sync.
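A PCR is carried as a 33-bit base counting at 90 kHz and a 9-bit extension counting the 27 MHz clock from 0 to 299, so the full value in 27 MHz ticks is base × 300 + extension. This sketch assumes the MPEG-2 systems adaptation-field layout (length byte, flags byte with the PCR flag at 0x10, then six PCR bytes); the function names are hypothetical.

```python
def read_pcr(packet):
    """Return the PCR in 27 MHz ticks, or None if the packet carries none."""
    afc = (packet[3] >> 4) & 0x3
    if afc not in (2, 3) or packet[4] == 0:   # no adaptation field, or an empty one
        return None
    if not packet[5] & 0x10:                  # PCR_flag
        return None
    raw = int.from_bytes(packet[6:12], "big") # base(33) reserved(6) extension(9)
    base = raw >> 15
    extension = raw & 0x1FF
    return base * 300 + extension


def pcr_seconds(pcr_ticks):
    return pcr_ticks / 27_000_000


def make_pcr_packet(base, extension):
    """Build a test packet carrying only an adaptation field with a PCR."""
    raw = (base << 15) | (0x3F << 9) | extension
    head = bytes([0x47, 0x01, 0x00, 0x20, 183, 0x10])
    return head + raw.to_bytes(6, "big") + bytes([0xFF]) * 176


pkt = make_pcr_packet(base=90_000, extension=0)
print(read_pcr(pkt), pcr_seconds(read_pcr(pkt)))
```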

It should be appreciated that time stamps are relative, not absolute. The time stamp count advances by a fixed amount each picture, but the exact count is meaningless. Thus the decoder can only establish the frame rate of the video from time stamps, but not the precise timing. In practice the receiver has finite buffering memory between the demultiplexer and the MPEG decoder. If the displayed video timing is too late, the buffer will tend to overflow whereas if the displayed video timing is too early the decoding may not be completed. The receiver can advance or retard the time stamp counter during lockup so that it places the output timing mid-way between these extremes.

9.17 ATSC

The ATSC system is an alternative way of delivering a transport stream, but it is considerably less sophisticated than DVB, and supports only one transport stream bit rate of 19.39 Mbit/s. Any change in the service area therefore requires a change in transmitter power.

Figure 9.38 shows a block diagram of an ATSC transmitter. Incoming transport stream packets are randomized, except for the sync pattern, for energy dispersal. Figure 9.39 shows the randomizer.

Figure 9.38 Block diagram of ATSC transmitter. See text for details.

Figure 9.39 The randomizer of ATSC. The twisted ring counter is preset to the initial state shown each data field. It is then clocked once per byte and the eight outputs D0-D7 are X-ORed with the data byte.

The outer correction code includes the whole packet except for the sync byte. Thus there are 187 bytes of data in each codeword. Twenty bytes of R-S redundancy are added to make a 207-byte codeword. After outer coding, a convolutional interleaver shown in Figure 9.40 is used. This reorders data over a time span of about 4 ms. Interleave simply exchanges content between packets, but without changing the packet structure.

Figure 9.40 The ATSC convolutional interleaver spreads adjacent bytes over a period of about 4 ms.

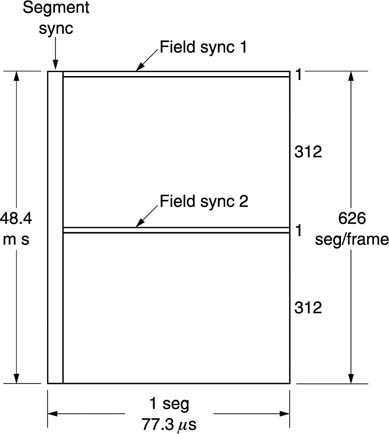

Figure 9.41 shows that the result of outer coding and interleave is a data frame which is divided into two fields of 313 segments each. The frame is transmitted by scanning it horizontally a segment at a time. There is some similarity with a traditional analog video signal here, because there is a sync pulse at the beginning of each segment and a field sync which occupies two segments of the frame. Data segment sync repeats every 77.3 µs, a segment rate of about 12935 Hz, whereas a frame has a period of 48.4 ms. The field sync segments contain a training sequence to drive the adaptive equalizer in the receiver.
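The segment and frame timing follow from the coding already described. Each 207-byte codeword becomes 828 eight-level symbols after 2/3 trellis coding; adding four segment sync symbols gives 832 symbols per segment. This sketch assumes the ATSC symbol rate of 4.5 MHz × 684/286 (about 10.76 Msymbol/s) and that 312 of the 313 segments in each field carry one 188-byte transport packet.

```python
SYMBOL_RATE_HZ = 4.5e6 * 684 / 286  # assumed ATSC symbol rate, about 10.76 Msymbol/s
PACKET_BYTES = 188
CODEWORD_BYTES = 207                # 187 data bytes + 20 R-S bytes
BITS_PER_SYMBOL = 3                 # eight levels carry Z2 Z1 Z0
SYNC_SYMBOLS = 4                    # segment sync at the start of each segment
SEGMENTS_PER_FIELD = 313            # one field-sync segment + 312 data segments

# Trellis coding turns every 2 data bits into 3 channel bits.
data_symbols = CODEWORD_BYTES * 8 * 3 // 2 // BITS_PER_SYMBOL
symbols_per_segment = data_symbols + SYNC_SYMBOLS

segment_rate_hz = SYMBOL_RATE_HZ / symbols_per_segment
segment_period_us = 1e6 / segment_rate_hz
frame_period_ms = 1e3 * 2 * SEGMENTS_PER_FIELD / segment_rate_hz
ts_rate_bps = (segment_rate_hz * (SEGMENTS_PER_FIELD - 1) / SEGMENTS_PER_FIELD
               * PACKET_BYTES * 8)

print(f"{symbols_per_segment} symbols/segment, {segment_rate_hz:.1f} Hz, "
      f"{segment_period_us:.2f} us, frame {frame_period_ms:.2f} ms, "
      f"TS {ts_rate_bps / 1e6:.2f} Mbit/s")
```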

Figure 9.41 The ATSC data frame is transmitted one segment at a time. Segment sync denotes the beginning of each segment and the segments are counted from the field sync signals.

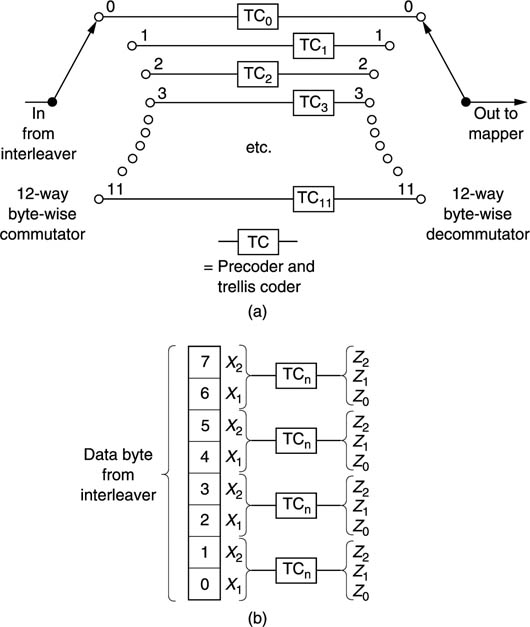

The data content of the frame is subject to trellis coding which converts each pair of data bits into three channel bits inside an inner interleave. The trellis coder is shown in Figure 9.42 and the interleave in Figure 9.43. Figure 9.42 also shows how the three channel bits map to the eight signal levels in the 8-VSB modulator.

Figure 9.42 (a) The precoder and trellis coder of ATSC converts two data bits X1, X2 to three output bits Z0, Z1, Z2. (b) The Z0, Z1, Z2 output bits map to the eight-level code as shown.

Figure 9.43 The inner interleave (a) of ATSC makes the trellis coding operate as twelve parallel channels working on every twelfth byte to improve error resistance. The interleave is byte-wise, and, as (b) shows, each byte is divided into four di-bits for coding into the tri-bits Z0, Z1, Z2.

Figure 9.44 shows the data segment after eight-level coding. The sync pattern of the transport stream packet, which was not included in the error-correction code, has been replaced by a segment sync waveform. This acts as a timing reference to allow deserializing of the segment, but as the two levels of the sync pulse are standardized, it also acts as an amplitude reference for the eight-level slicer in the receiver.

Figure 9.44 ATSC data segment. Note the sync pattern which acts as a timing and amplitude reference. The eight levels are shifted up by 1.25 to create a DC component resulting in a pilot at the carrier frequency.
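The mapping of Figure 9.42(b) and the pilot offset of Figure 9.44 can be sketched together. The mapping assumed here reads Z2 Z1 Z0 as a binary number selecting levels −7 to +7 in order, and the segment sync is assumed to be the four-symbol pattern +5, −5, −5, +5. Because the eight levels are symmetrical about zero, adding 1.25 to every symbol leaves a mean of 1.25, which is the DC component that appears as a pilot at the carrier frequency.

```python
import random

LEVELS = [-7, -5, -3, -1, 1, 3, 5, 7]  # assumed: index = Z2 Z1 Z0 read as binary
SEGMENT_SYNC = [5, -5, -5, 5]          # assumed two-level segment sync pattern
PILOT_OFFSET = 1.25                    # DC shift that produces the pilot


def map_tribit(z2, z1, z0):
    """Eight-level symbol for one tri-bit, including the pilot offset."""
    return LEVELS[(z2 << 2) | (z1 << 1) | z0] + PILOT_OFFSET


def build_segment(tribits):
    """Segment sync followed by 828 data symbols, all shifted by the pilot offset."""
    if len(tribits) != 828:
        raise ValueError("a data segment carries 828 symbols")
    sync = [s + PILOT_OFFSET for s in SEGMENT_SYNC]
    return sync + [map_tribit(*t) for t in tribits]


rng = random.Random(0)
tribits = [(rng.randint(0, 1), rng.randint(0, 1), rng.randint(0, 1)) for _ in range(828)]
segment = build_segment(tribits)
print(len(segment), "symbols, mean of data symbols:", round(sum(segment[4:]) / 828, 3))
```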