Chapter 2

Video principles

2.1 The eye

All television signals ultimately excite some response in the eye and the viewer can only describe the result subjectively. Familiarity with the operation and limitations of the eye is essential to an understanding of television principles.

The simple representation of Figure 2.1 shows that the eyeball is nearly spherical and is swivelled by muscles so that it can track movement. This has a large bearing on the way moving pictures are reproduced. The space between the cornea and the lens is filled with transparent fluid known as aqueous humour. The remainder of the eyeball is filled with a transparent jelly known as vitreous humour. Light enters the cornea, and the the amount of light admitted is controlled by the pupil in the iris. Light entering is involuntarily focused on the retina by the lens in a process called visual accommodation.

Figure 2.1 A simple representation of an eyeball; see text for details.

The retina is responsible for light sensing and contains a number of layers. The surface of the retina is covered with arteries, veins and nerve fibres and light has to penetrate these in order to reach the sensitive layer. This contains two types of discrete receptors known as rods and cones from their shape. The distribution and characteristics of these two receptors are quite different. Rods dominate the periphery of the retina whereas cones dominate a central area known as the fovea outside which their density drops off. Vision using the rods is monochromatic and has poor resolution but remains effective at very low light levels, whereas the cones provide high resolution and colour vision but require more light.

The cones in the fovea are densely packed and directly connected to the nervous system allowing the highest resolution. Resolution then falls off away from the fovea. As a result the eye must move to scan large areas of detail. The image perceived is not just a function of the retinal response, but is also affected by processing of the nerve signals. The overall acuity of the eye can be displayed as a graph of the response plotted against the degree of detail being viewed. Image detail is generally measured in lines per millimetre or cycles per picture height, but this takes no account of the distance from the image to the eye. A better unit for eye resolution is one based upon the subtended angle of detail as this will be independent of distance. Units of cycles per degree are then appropriate. Figure 2.2 shows the response of the eye to static detail. Note that the response to very low frequencies is also attenuated. An extension of this characteristic allows the vision system to ignore the fixed pattern of shadow on the retina due to the nerves and arteries.

Figure 2.2 Response of the eye to different degrees of detail.

The retina does not respond instantly to light, but requires between 0.15 and 0.3 second before the brain perceives an image. The resolution of the eye is primarily a spatio-temporal compromise. The eye is a spatial sampling device; the spacing of the rods and cones on the retina represents a spatial sampling frequency. The measured acuity of the eye exceeds the value calculated from the sample site spacing because a form of oversampling is used.

The eye is in a continuous state of unconscious vibration called saccadic motion. This causes the sampling sites to exist in more than one location, effectively increasing the spatial sampling rate provided there is a temporal filter which is able to integrate the information from the various different positions of the retina.

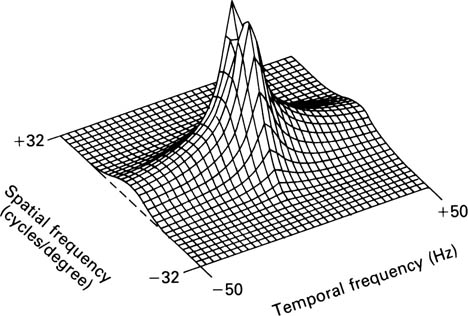

This temporal filtering is responsible for ‘persistence of vision’. Flashing lights are perceived to flicker until the critical flicker frequency (CFF) is reached; the light appears continuous for higher frequencies. The CFF is not constant but varies with brightness. Note that the field rate of European television at 50 fields per second is marginal with bright images. Film projected at 48 Hz works because cinemas are darkened and the screen brightnes is actually quite low. Figure 2.3 shows the two-dimensional or spatio-temporal response of the eye.

Figure 2.3 The response of the eye shown with respect to temporal and spatial frequencies. Note that even slow relative movement causes a serious loss of resolution. The eye tracks moving objects to prevent this loss.

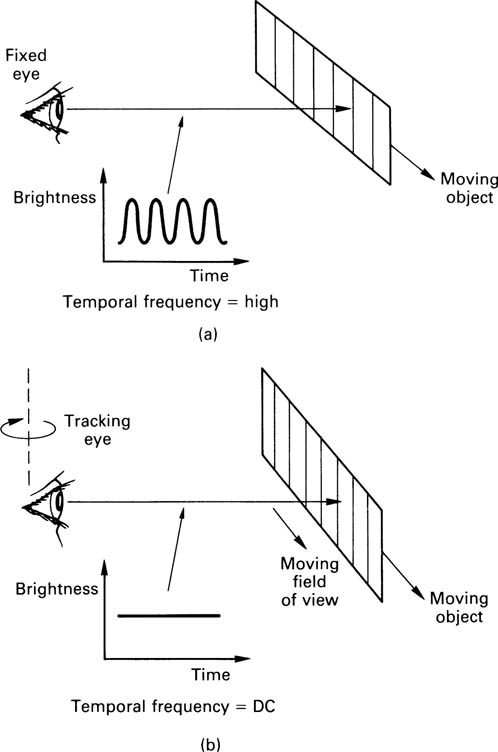

If the eye were static, a detailed object moving past it would give rise to temporal frequencies, as Figure 2.4(a) shows. The temporal frequency is given by the detail in the object, in lines per millimetre, multiplied by the speed. Clearly a highly detailed object can reach high temporal frequencies even at slow speeds, yet Figure 2.3 shows that the eye cannot respond to high temporal frequencies.

Figure 2.4 In (a) a detailed object moves past a fixed eye, causing temporal frequencies beyond the response of the eye. This is the cause of motion blur. In (b) the eye tracks the motion and the temporal frequency becomes zero. Motion blur cannot then occur.

However, the human viewer has an interactive visual system which causes the eyes to track the movement of any object of interest. Figure 2.4(b) shows that when eye tracking is considered, a moving object is rendered stationary with respect to the retina so that temporal frequencies fall to zero and much the same acuity to detail is available despite motion. This is known as dynamic resolution and it’s how humans judge the detail in real moving pictures. Dynamic resolution will be considered in section 2.10.

The contrast sensitivity of the eye is defined as the smallest brightness difference which is visible. In fact the contrast sensitivity is not constant, but increases proportionally to brightness. Thus whatever the brightness of an object, if that brightness changes by about 1 per cent it will be equally detectable.

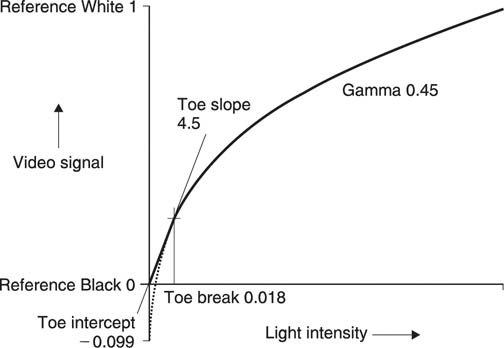

2.2 Gamma

The true brightness of a television picture can be affected by electrical noise on the video signal. As contrast sensitivity is proportional to brightness, noise is more visible in dark picture areas than in bright areas. For economic reasons, video signals have to be made non-linear to render noise less visible. An inverse gamma function takes place at the camera so that the video signal is non-linear for most of its journey. Figure 2.5 shows a reverse gamma function. As a true power function requires infinite gain near black, a linear segment is substituted. It will be seen that contrast variations near black result in larger signal amplitude than variations near white. The result is that noise picked up by the video signal has less effect on dark areas than on bright areas. After a gamma function at the display, noise at near-black levels is compressed with respect to noise at near-white levels. Thus a video transmission system using gamma has a lower perceived noise level than one without. Without gamma, vision signals would need around 30 dB better signal-to-noise ratio for the same perceived quality and digital video samples would need five or six extra bits.

Figure 2.5 CCIR Rec.709 reverse gamma function used at camera has a straight-line approximation at the lower part of the curve to avoid boosting camera noise. Note that the output amplitude is greater for modulation near black.

In practice the system is not rendered perfectly linear by gamma correction and a slight overall exponential effect is usually retained in order further to reduce the effect of noise in darker parts of the picture. A gamma correction factor of 0.45 may be used to achieve this effect. Clearly, image data which are intended to be displayed on a video system must have the correct gamma characteristic or the grey scale will not be correctly reproduced.

As all television signals, analog and digital, are subject to gamma correction, it is technically incorrect to refer to the Y signal as luminance, because this parameter is defined as linear in colorimetry. It has been suggested that the term luma should be used to describe luminance which has been gamma corrected.

In a CRT (cathode ray tube) the relationship between the tube drive voltage and the phosphor brightness is not linear, but an exponential function where the power is known as gamma. The power is the same for all CRTs as it is a function of the physics of the electron gun and it has a value of around 2.8. It is a happy but pure coincidence that the gamma function of a CRT follows roughly the same curve as human contrast sensitivity.

Consequently if video signals are predistorted at source by an inverse gamma, the gamma characteristic of the CRT will linearize the signal. Figure 2.6 shows the principle. CRT gamma is not a nuisance, but is actually used to enhance the noise performance of a system. If the CRT had no gamma characteristic, a gamma circuit would have been necessary ahead of it. As all standard video signals are inverse gamma processed, it follows that if a non-CRT display such as a plasma or LCD device is to be used, some gamma conversion will be required at the display.

Figure 2.6 The non-linear characteristic of tube (a) contrasted with the ideal response (b). Non-linearity may be opposed by gamma correction with a response (c).

2.3 Scanning

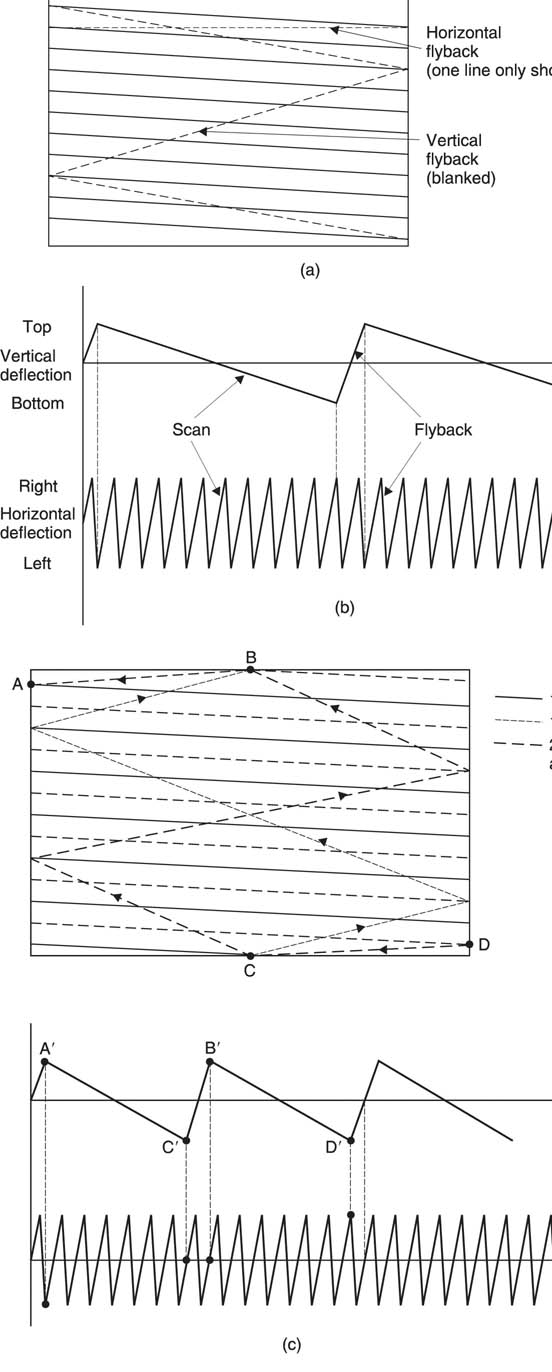

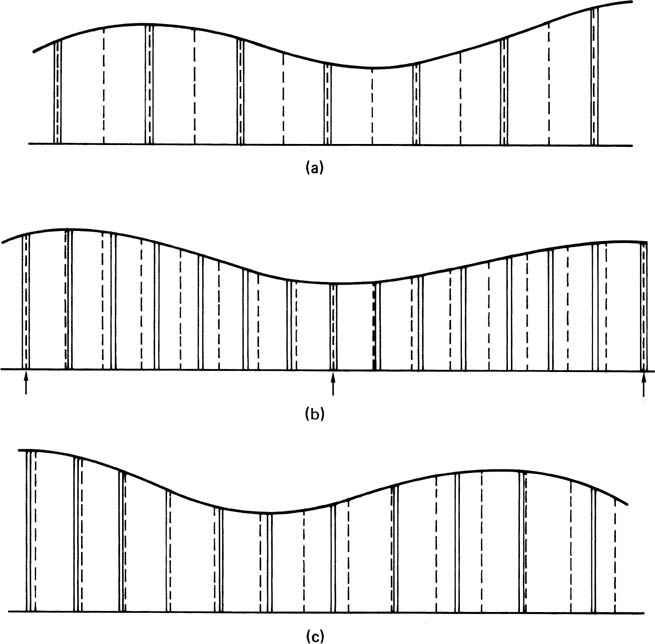

Figure 2.7(a) shows that the monochrome camera produces a video signal whose voltage is a function of the image brightness at a single point on the sensor. This voltage is converted back to the brightness of the same point on the display. The points on the sensor and display must be scanned synchronously if the picture is to be re-created properly. If this is done rapidly enough it is largely invisible to the eye. Figure 2.7(b) shows that the scanning is controlled by a triangular or sawtooth waveform in each dimension which causes a constant speed forward scan followed by a rapid return or flyback. As the horizontal scan is much more rapid than the vertical scan the image is broken up into lines which are not quite horizontal.

Figure 2.7 Scanning converts two-dimensional images into a signal which can be sent electrically. In (a) the scanning of camera and display must be identical. The scanning is controlled by horizontal and vertical sawtooth waveforms (b). Where two vertical scans are needed to complete a whole number of lines, the scan is interlaced as shown in (c). The frame is now split into two fields.

In the example of Figure 2.7(b), the horizontal scanning frequency or line rate, Fh, is an integer multiple of the vertical scanning frequency or frame rate and a progressive scan system results in which every frame is identical. Figure 2.7(c) shows an interlaced scan system in which there is an integer number of lines in two vertical scans or fields. The first field begins with a full line and ends on a half line and the second field begins with a half line and ends with a full line. The lines from the two fields interlace or mesh on the screen. Current analog broadcast systems such as PAL and NTSC use interlace, although in MPEG systems it is not necessary. The additional complication of interlace has both merits and drawbacks which will be discussed in section 2.11.

2.4 Synchronizing

The synchronizing or sync system must send timing information to the display alongside the video signal. In very early television equipment this was achieved using two quite separate or non-composite signals. Figure 2.8(a) shows one of the first (US) television signal standards in which the video waveform had an amplitude of 1 V peak-to-peak and the sync signal had an amplitude of 4 V peakto-peak. In practice, it was more convenient to combine both into a single electrical waveform then called composite video which carries the synchronizing information as well as the scanned brightness signal. The single signal is effectively shared by using some of the flyback period for synchronizing.

Figure 2.8 Early video used separate vision and sync signals shown in (a). The US one volt video waveform in (b) has 10:4 video/sync ratio. (c) European systems use 7:3 ratio to avoid odd voltages. (d) Sync separation relies on two voltage ranges in the signal.

The 4 V sync signal was attenuated by a factor of ten and added to the video to produce a 1.4 V peak-to-peak signal. This was the origin of the 10:4 video:sync relationship of US analog television practice. Later the amplitude was reduced to 1 V peak-to-peak so that the signal had the same range as the original non-composite video. The 10:4 ratio was retained. As Figure 2.8(b) shows, this ratio results in some rather odd voltages and to simplify matters, a new unit called the IRE unit (after the Institute of Radio Engineers) was devised. Originally this was defined as 1 per cent of the video voltage swing, independent of the actual amplitude in use, but it came in practice to mean 1 per cent of 0.714 V. In European analog systems shown in Figure 2.8(c) the messy numbers were avoided by using a 7:3 ratio and the waveforms are always measured in milliVolts. Whilst such a signal was originally called composite video, today it would be referred to as monochrome video or Ys, meaning luma carrying syncs although in practice the s is often omitted.

Figure 2.8(d) shows how the two signals are separated. The voltage swing needed to go from black to peak white is less than the total swing available. In a standard analog video signal the maximum amplitude is 1 V peak-to-peak. The upper part of the voltage range represents the variations in brightness of the image from black to white. Signals below that range are ‘blacker than black’ and cannot be seen on the display. These signals are used for synchronizing.

Figure 2.9(a) shows the line synchronizing system part-way through a field or frame. The part of the waveform which corresponds to the forward scan is called the active line and during the active line the voltage represents the brightness of the image. In between the active line periods are horizontal blanking intervals in which the signal voltage will be at or below black. Figure 2.9(b) shows that in some systems the active line voltage is superimposed on a pedestal or black level set-up voltage of 7.5 IRE. The purpose of this set-up is to ensure that the blanking interval signal is below black on simple displays so that it is guaranteed to be invisible on the screen. When set-up is used, black level and blanking level differ by the pedestal height. When set-up is not used, black level and blanking level are one and the same.

Figure 2.9 (a) Part of a video waveform with important features named. (b) Use of pedestal or set-up.

The blanking period immediately after the active line is known as the front porch, which is followed by the leading edge of sync. When the leading edge of sync passes through 50 per cent of its own amplitude, the horizontal retrace pulse is considered to have occurred. The flat part at the bottom of the horizontal sync pulse is known as sync tip and this is followed by the trailing edge of sync which returns the waveform to blanking level. The signal remains at blanking level during the back porch during which the display completes the horizontal flyback. The sync pulses have sloping edges because if they were square they would contain high frequencies which would go outside the allowable channel bandwidth on being broadcast.

The vertical synchronizing system is more complex because the vertical flyback period is much longer than the horizontal line period and horizontal synchronization must be maintained throughout it. The vertical synchronizing pulses are much longer than horizontal pulses so that they are readily distinguishable. Figure 2.10(a) shows a simple approach to vertical synchronizing. The signal remains predominantly at sync tip for several lines to indicate the vertical retrace, but returns to blanking level briefly immediately prior to the leading edges of the horizontal sync, which continues throughout. Figure 2.10(b) shows that the presence of interlace complicates matters, as in one vertical interval the vertical sync pulse coincides with a horizontal sync pulse whereas in the next the vertical sync pulse occurs half-way down a line.

Figure 2.10 (a) A simple vertical pulse is longer than a horizontal pulse. (b) In an interlaced system there are two relationships between H and V. (c) The use of equalizing pulses to balance the DC component of the signal.

In practice the long vertical sync pulses were found to disturb the average signal voltage too much and to reduce the effect extra equalizing pulses were put in, half-way between the horizontal sync pulses. The horizontal timebase system can ignore the equalizing pulses because it contains a flywheel circuit which only expects pulses roughly one line period apart. Figure 2.10(c) shows the final result of an interlaced system with equalizing pulses. The vertical blanking interval can be seen, with the vertical pulse itself towards the beginning.

In digital video signals it is possible to synchronize simply by digitizing the analog sync pulses. However, this is inefficient because many samples are needed to describe them. In practice the analog sync pulses are used to generate timing reference signals (TRS) which are special codes inserted in the video data which indicate the picture timing. In a manner analogous to the analog approach of dividing the video voltage range into two, one for syncs, the solution in the digital domain is the same: certain bit combinations are reserved for TRS codes and these cannot occur in legal video. TRS codes are detailed in Chapter 9.

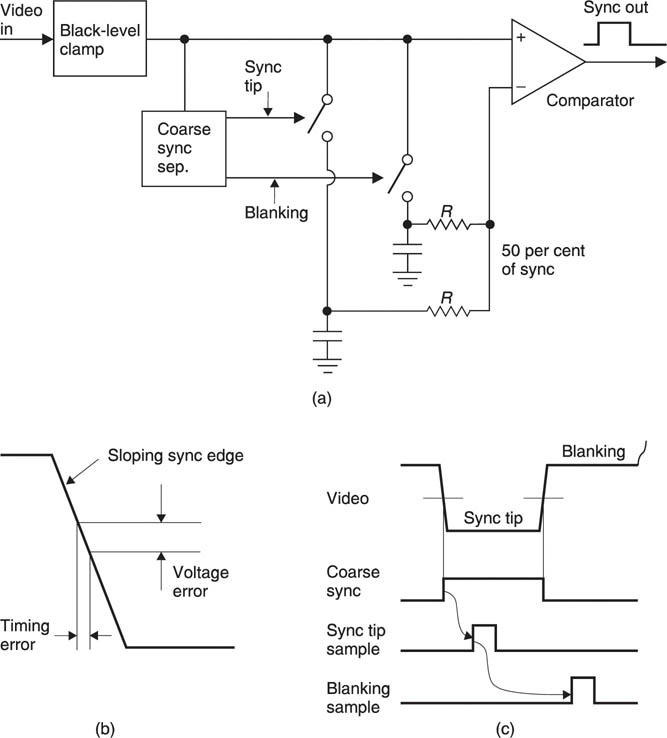

It is essential accurately to extract the timing or synchronizing information from a sync or Ys signal in order to control some process such as the generation of a digital sampling clock. Figure 2.11(a) shows a block diagram of a simple sync separator. The first stage will generally consist of a black level clamp which stabilizes the DC conditions in the separator. Figure 2.11(b) shows that if this is not done the presence of a DC shift on a sync edge can cause a timing error.

Figure 2.11 (a) Sync separator block diagram; see text for details. (b) Slicing at the wrong level introduces a timing error. (c) The timing of the sync separation process.

The sync time is defined as the instant when the leading edge passes through the 50 per cent level. The incoming signal should ideally have a sync amplitude of either 0.3 V peak-to-peak or 40 IRE, in which case it can be sliced or converted to a binary waveform by using a comparator with a reference of either 0.15 V or 20 IRE. However, if the sync amplitude is for any reason incorrect, the slicing level will be wrong. Figure 2.11(a) shows that the solution is to measure both blanking and sync tip voltages and to derive the slicing level from them with a potential divider. In this way the slicing level will always be 50 per cent of the input amplitude. In order to measure the sync tip and blanking levels, a coarse sync separator is required, which is accurate enough to generate sampling pulses for the voltage measurement system. Figure 2.11(c) shows the timing of the sampling process.

Once a binary signal has been extracted from the analog input, the horizontal and vertical synchronizing information can be separated. All falling edges are potential horizontal sync leading edges, but some are due to equalizing pulses and these must be rejected. This is easily done because equalizing pulses occur part-way down the line. A flywheel oscillator or phase-locked loop will lock to genuine horizontal sync pulses because they always occur exactly one line period apart. Edges at other spacings are eliminated. Vertical sync is detected with a timer whose period exceeds that of a normal horizontal sync pulse. If the sync waveform is still low when the timer expires, there must be a vertical pulse present. Once again a phase-locked loop may be used which will continue to run if the input is noisy or disturbed. This may take the form of a counter which counts the number of lines in a frame before resetting.

The sync separator can determine which type of field is beginning because in one the vertical and horizontal pulses coincide whereas in the other the vertical pulse begins in the middle of a line.

2.5 Bandwidth and definition

As the conventional analog television picture is made up of lines, the line structure determines the definition or the fineness of detail which can be portrayed in the vertical axis. The limit is reached in theory when alternate lines show black and white. In a 625-line picture there are roughly 600 unblanked lines. If 300 of these are white and 300 are black then there will be 300 complete cycles of detail in one picture height. One unit of resolution, which is a unit of spatial frequency, is c/ph or cycles per picture height. In practical displays the contrast will have fallen to virtually nothing at this ideal limit and the resolution actually achieved is around 70 per cent of the ideal, or about 210 c/ph. The degree to which the ideal is met is known as the Kell factor of the display.

Definition in one axis is wasted unless it is matched in the other and so the horizontal axis should be able to offer the same performance. As the aspect ratio of conventional television is 4:3 then it should be possible to display 400 cycles in one picture width, reduced to about 300 cycles by the Kell factor. As part of the line period is lost due to flyback, 300 cycles per picture width becomes about 360 cycles per line period.

In 625-line television, the frame rate is 25 Hz and so the line rate Fh will be:

If 360 cycles of video waveform must be carried in each line period, then the bandwidth required will be given by:

In the 525-line system, there are roughly 500 unblanked lines allowing 250 c/ph theoretical definition, or 175 lines allowing for the Kell factor. Allowing for the aspect ratio, equal horizontal definition requires about 230 cycles per picture width. Allowing for horizontal blanking this requires about 280 cycles per line period.

In 525-line video, Fh = 525 χ 30 = 15 750 Hz Thus the bandwidth required is:

If it is proposed to build a high-definition television system, one might start by doubling the number of lines and hence double the definition. Thus in a 1250-line format about 420 c/ph might be obtained. To achieve equal horizontal definition, bearing in mind the aspect ratio is now 16:9, then nearly 750 cycles per picture width will be needed. Allowing for horizontal blanking, then around 890 cycles per line period will be needed. The line frequency is now given by:

and the bandwidth required is given by

Note the dramatic increase in bandwidth. In general the bandwidth rises as the square of the resolution because there are more lines and more cycles needed in each line. It should be clear that, except for research purposes, high-definition television will never be broadcast as a conventional analog signal because the bandwidth required is simply uneconomic. If and when high-definition broadcasting becomes common, it will be compelled to use digital compression techniques to make it economic.

2.6 Aperture effect

The aperture effect will show up in many aspects of television in both the sampled and continuous domains. The image sensor has a finite aperture function. In tube cameras and in CRTs, the beam will have a finite radius with a Gaussian distribution of energy across its diameter. This results in a Gaussian spatial frequency response. Tube cameras often contain an aperture corrector which is a filter designed to boost the higher spatial frequencies that are attenuated by the Gaussian response. The horizontal filter is simple enough, but the vertical filter will require line delays in order to produce points above and below the line to be corrected. Aperture correctors also amplify aliasing products and an overcorrected signal may contain more vertical aliasing than resolution.

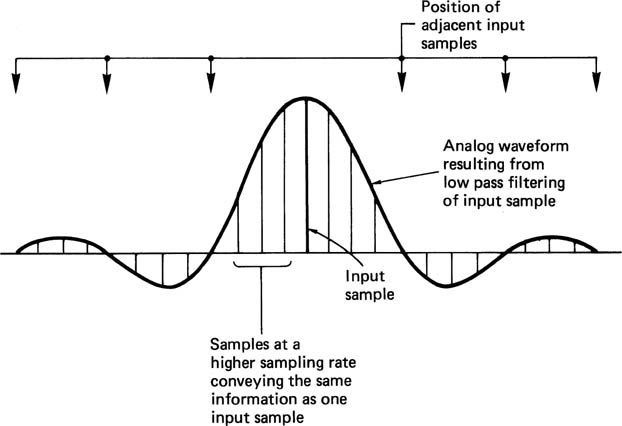

Some digital-to-analog convertors keep the signal constant for a substantial part of or even the whole sample period. In CCD cameras, the sensor is split into elements which may almost touch in some cases. The element integrates light falling on its surface. In both cases the aperture will be rectangular. The case where the pulses have been extended in width to become equal to the sample period is known as a zero-order hold system and has a 100 per cent aperture ratio.

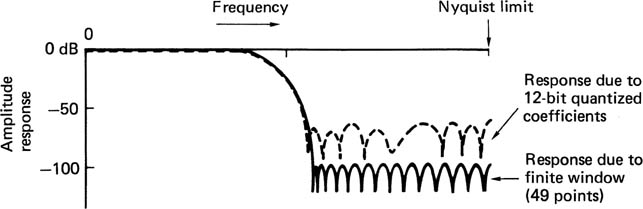

Rectangular apertures have a sinx/x spectrum which is shown in Figure 2.12. With a 100 per cent aperture ratio, the frequency response falls to a null at the sampling rate, and as a result is about 4 dB down at the edge of the baseband.

Figure 2.12 Frequency response with 100 per cent aperture nulls at multiples of sampling rate. Area of interest is up to half sampling rate.

The temporal aperture effect varies according to the equipment used. Tube cameras have a long integration time and thus a wide temporal aperture. Whilst this reduces temporal aliasing, it causes smear on moving objects. CCD cameras do not suffer from lag and as a result their temporal response is better. Some CCD cameras deliberately have a short temporal aperture as the time axis is resampled by a shutter. The intention is to reduce smear, hence the popularity of such devices for sporting events, but there will be more aliasing on certain subjects.

The eye has a temporal aperture effect which is known as persistence of vision, and the phosphors of CRTs continue to emit light after the electron beam has passed. These produce further temporal aperture effects in series with those in the camera.

2.7 Colour

Colour vision is made possible by the cones on the retina which occur in three different types, responding to different colours. Figure 2.13 shows that human vision is restricted to range of light wavelengths from 400 nanometres to 700 nanometres. Shorter wavelengths are called ultra-violet and longer wavelengths are called infra-red. Note that the response is not uniform, but peaks in the area of green. The response to blue is very poor and makes a nonsense of the traditional use of blue lights on emergency vehicles.

Figure 2.13 The luminous efficiency function shows the response of the HVS to light of different wavelengths.

Figure 2.14 shows an approximate response for each of the three types of cone. If light of a single wavelength is observed, the relative responses of the three sensors allows us to discern what we call the colour of the light. Note that at both ends of the visible spectrum there are areas in which only one receptor responds; all colours in those areas look the same. There is a great deal of variation in receptor response from one individual to the next and the curves used in television are the average of a great many tests. In a surprising number of people the single receptor zones are extended and discrimination between, for example, red and orange is difficult.

Figure 2.14 All human vision takes place over this range of wavelengths. The response is not uniform, but has a central peak. The three types of cone approximate to the three responses shown to give colour vision.

The full resolution of human vision is restricted to brightness variations. Our ability to resolve colour details is only about a quarter of that.

The triple receptor characteristic of the eye is extremely fortunate as it means that we can generate a range of colours by adding together light sources having just three different wavelengths in various proportions. This process is known as additive colour matching which should be clearly distinguished from the subtractive colour matching that occurs with paints and inks. Subtractive matching begins with white light and selectively removes parts of the spectrum by filtering. Additive matching uses coloured light sources which are combined.

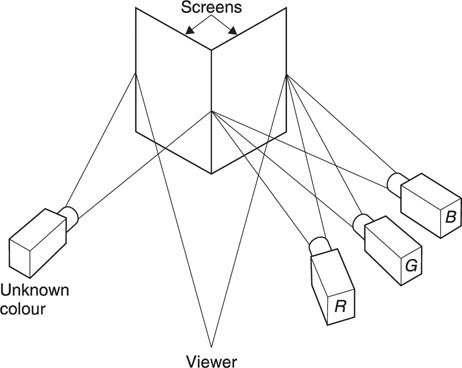

An effective colour television system can be made in which only three pure or single-wavelength colours or primaries can be generated. The primaries need to be similar in wavelength to the peaks of the three receptor responses, but need not be identical. Figure 2.15 shows a rudimentary colour television system. Note that the colour camera is in fact three cameras in one, where each is fitted with a different coloured filter. Three signals, R, G and B must be transmitted to the display which produces three images that must be superimposed to obtain a colour picture.

Figure 2.15 Simple colour television system. Camera image is split by three filters. Red, green and blue video signals are sent to three primary coloured displays whose images are combined.

In practice the primaries must be selected from available phosphor compounds. Once the primaries have been selected, the proportions needed to reproduce a given colour can be found using a colorimeter. Figure 2.16 shows a colorimeter which consists of two adjacent white screens. One screen is illuminated by three light sources, one of each of the selected primary colours. Initially, the second screen is illuminated with white light and the three sources are adjusted until the first screen displays the same white. The sources are then calibrated. Light of a single wavelength is then projected on the second screen. The primaries are once more adjusted until both screens appear to have the same colour. The proportions of the primaries are noted. This process is repeated for the whole visible spectrum, resulting in colour mixture curves shown in Figure 2.17. In some cases it will not be possible to find a match because an impossible negative contribution is needed. In this case we can simulate a negative contribution by shining some primary colour on the test screen until a match is obtained. If the primaries were ideal, monochromatic or single-wavelength sources, it would be possible to find three wavelengths at which two of the primaries were completely absent. However, practical phosphors are not monochromatic, but produce a distribution of wavelengths around the nominal value, and in order to make them spectrally pure other wavelengths have to be subtracted.

Figure 2.16 Simple colorimeter. Intensities of primaries on the right screen are adjusted to match the test colour on the left screen.

Figure 2.17 Colour mixture curves show how to mix primaries to obtain any spectral colour.

The colour-mixing curves dictate what the response of the three sensors in the colour camera must be. The primaries are determined in this way because it is easier to make camera filters to suit available CRT phosphors rather than the other way round.

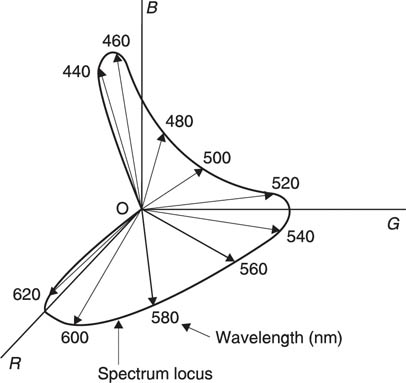

As there are three signals in a colour television system, they can only be simultaneously depicted in three dimensions. Figure 2.18 shows the RGB colour space which is basically a cube with black at the origin and white at the diagonally opposite corner. Figure 2.19 shows the colour mixture curves plotted in RGB space. For each visible wavelength a vector exists whose direction is determined by the proportions of the three primaries. If the brightness is allowed to vary this will affect all three primaries and thus the length of the vector in the same proportion.

Figure 2.18 RGB colour space is three-dimensional and not easy to draw.

Figure 2.19 Colour mixture curves plotted in RGB space result in a vector whose locus moves with wavelength in three dimensions.

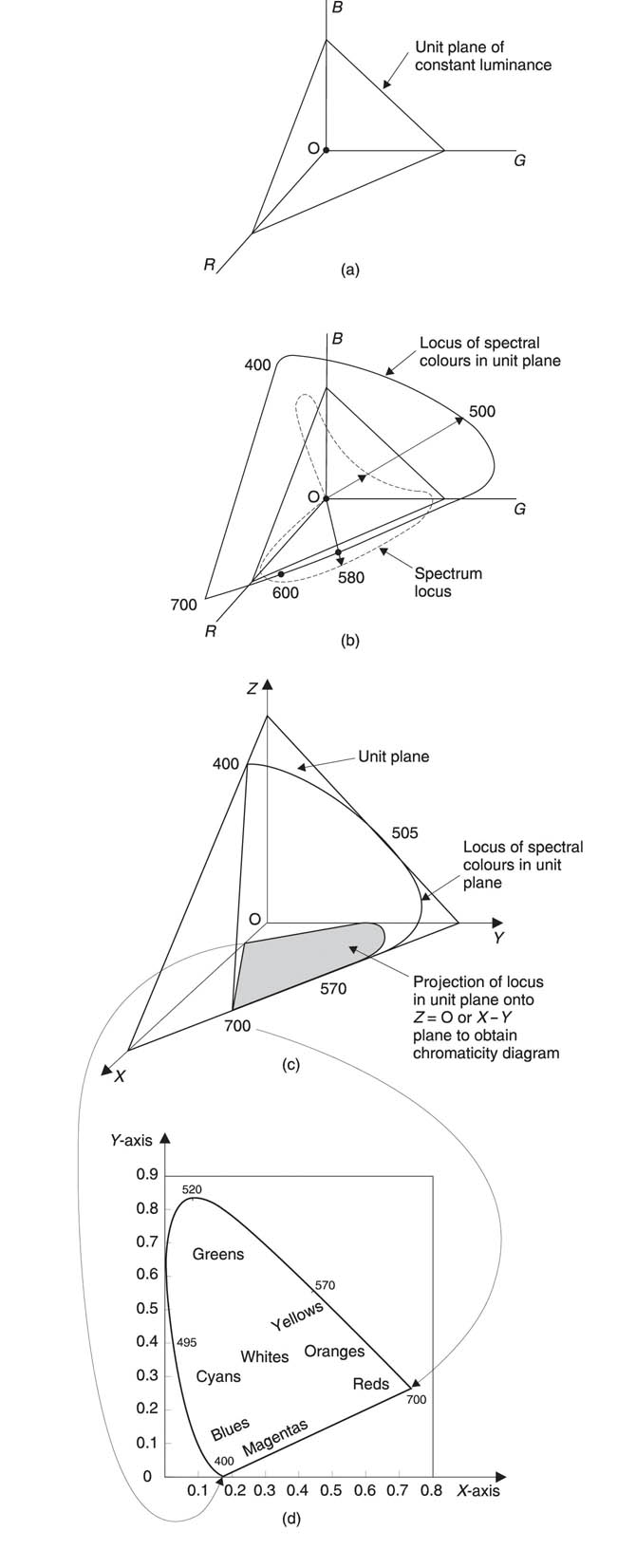

Depicting and visualizing the RGB colour space is not easy and it is also difficult to take objective measurements from it. The solution is to modify the diagram to allow it to be rendered in two dimensions on flat paper. This is done by eliminating luminance (brightness) changes and depicting only the colour at constant brightness. Figure 2.20(a) shows how a constant luminance unit plane intersects the RGB space at unity on each axis. At any point on the plane the three components add up to one. A two-dimensional plot results when vectors representing all colours intersect the plane. Vectors may be extended if necessary to allow intersection. Figure 2.20(b) shows that the 500 nm vector has to be produced (extended) to meet the unit plane, whereas the 580 nm vector naturally intersects. Any colour can now uniquely be specified in two dimensions.

Figure 2.20 (a) A constant luminance plane intersects RGB space, allowing colours to be studied in two dimensions only. (b) The intersection of the unit plane by vectors joining the origin and the spectrum locus produces the locus of spectral colours which requires negative values of R, G and B to describe it. In (c) a new coordinate system, X, Y, Z, is used so that only positive values are required. The spectrum locus now fits entirely in the triangular space where the unit plane intersects these axes. To obtain the CIE chromaticity diagram (d), the locus is projected onto the X–Y plane.

The points where the unit plane intersects the axes of RGB space form a triangle on the plot. The horseshoe-shaped locus of pure spectral colours goes outside this triangle because, as was seen above, the colour mixture curves require negative contributions for certain colours.

Having the spectral locus outside the triangle is a nuisance, and a larger triangle can be created by postulating new coordinates called X, Y and Z representing hypothetical primaries that cannot exist. This representation is shown in Figure 2.20(c).

The Commission Internationale d’Eclairage (CIE) standard chromaticity diagram shown in Figure 2.20(d) is obtained in this way by projecting the unity luminance plane onto the X, Y plane. This projection has the effect of bringing the red and blue primaries closer together. Note that the curved part of the locus is due to spectral or single-wavelength colours. The straight base is due to nonspectral colours obtained by additively mixing red and blue.

As negative light is impossible, only colours within the triangle joining the primaries can be reproduced and so practical television systems cannot reproduce all possible colours. Clearly, efforts should be made to obtain primaries which embrace as large an area as possible. Figure 2.21 shows how the colour range or gamut of television compares with paint and printing inks and illustrates that the comparison is favourable. Most everyday scenes fall within the colour gamut of television. Exceptions include saturated turquoise, spectrally pure iridescent colours formed by interference in a duck’s feathers or reflections in Compact Discs. For special purposes displays have been made having four primaries to give a wider colour range, but these are uncommon.

Figure 2.21 Comparison of the colour range of television and printing.

Figure 2.22 shows the primaries initially selected for NTSC. However, manufacturers looking for brighter displays substituted more efficient phosphors having a smaller colour range. This was later standardized as the SMPTE C phosphors which were also adopted for PAL.

Figure 2.22 The primary colours for NTSC were initially as shown. These were later changed to more efficient phosphors which were also adopted for PAL. See text.

Whites appear in the centre of the chromaticity diagram corresponding to roughly equal amounts of primary colour. Two terms are used to describe colours: hue and saturation. Colours having the same hue lie on a straight line between the white point and the perimeter of the primary triangle. The saturation of the colour increases with distance from the white point. As an example, pink is a desaturated red.

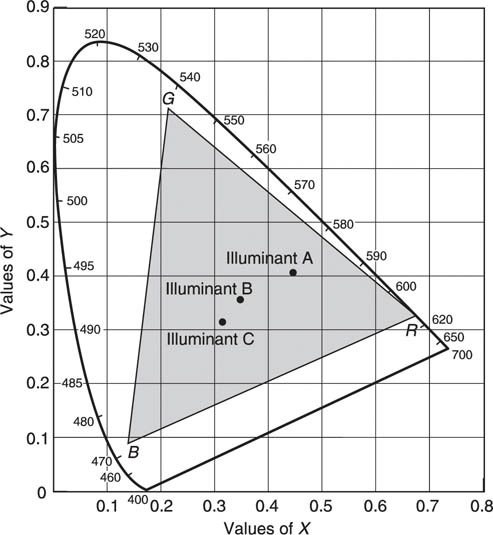

The apparent colour of an object is also a function of the illumination. The ‘true colour’ will only be revealed under ideal white light which in practice is uncommon. An ideal white object reflects all wavelengths equally and simply takes on the colour of the ambient illumination. Figure 2.23 shows the location of three ‘white’ sources or illuminants on the chromaticity diagram. Illuminant A corresponds to a tungsten filament lamp, illuminant B to midday sunlight and illuminant C to typical daylight which is bluer because it consists of a mixture of sunlight and light scattered by the atmosphere. In everyday life we accommodate automatically to the change in apparent colour of objects as the sun’s position or the amount of cloud changes and as we enter artificially lit buildings, but colour cameras accurately reproduce these colour changes. Attempting to edit a television program from recordings made at different times of day or indoors and outdoors would result in obvious and irritating colour changes unless some steps are taken to keep the white balance reasonably constant.

Figure 2.23 Position of three common illuminants on chromaticity diagram.

2.8 Colour displays

In order to display colour pictures, three simultaneous images must be generated, one for each primary colour. The colour CRT does this geometrically. Figure 2.24(a) shows that three electron beams pass down the tube from guns mounted in a triangular or delta array. Immediately before the tube face is mounted a perforated metal plate known as a shadow mask. The three beams approach holes in the shadow mask at a slightly different angle and so fall upon three different areas of phosphor which each produce a different primary colour. The sets of three phosphors are known as triads. Figure 2.24(b) shows an alternative arrangement in which the three electron guns are mounted in a straight line and the shadow mask is slotted and the triads are rectangular. This is known as a PIL (precision-in-line) tube. The triads can easily be seen upon close inspection of an operating CRT.

Figure 2.24 (a) Triads of phosphor dots are triangular and electron guns are arranged in a triangle. (b) Inline tube has strips of phosphor side by side.

In the plasma display the source of light is an electrical discharge in a gas at low pressure. This generates ultra-violet light which excites phosphors in the same way that a fluorescent light operates. Each pixel consists of three such elements, one for each primary colour. Figure 2.25 shows that the pixels are controlled by arranging the discharge to take place between electrodes which are arranged in rows and columns.

Figure 2.25 When a voltage is applied between a line or row electrode and a pixel electrode, a plasma discharge occurs. This excites a phosphor to produce visible light.

The advantage of the plasma display is that it can be made perfectly flat and it is very thin, even in large screen sizes. There is a size limit in CRTs beyond which they become very heavy. Plasma displays allow this limit to be exceeded.

The great difficulty with the plasma display is that the relationship between light output and drive voltage is highly non-linear. Below a certain voltage there is no discharge at all. Consequently the only way that the brightness can be varied is to modulate the time for which the discharge takes place. The electrode signals are pulse width modulated.

Eight-bit digital video has 256 different brightnesses and it is difficult to obtain such a scale by pulse width modulation as the increments of pulse length would need to be generated by a clock of fantastic frequency. It is common practice to break up the picture period into many pulses, each of which is modulated in width. Despite this, plasma displays often display contouring or posterizing, indicating a lack of sufficient brightness levels. Multiple pulse drive also has some temporal effects which may be visible on moving material unless motion compensation is used.

2.9 Colour difference signals

There are many different ways in which television signals can be carried and these will be considered here. A monochrome camera produces a single luma signal Y or Ys whereas a colour camera produces three signals, or components, R, G and B which are essentially monochrome video signals representing an image in each primary colour. In some systems sync is present on a separate signal (RGBS), rarely is it present on all three components, whereas most commonly it is only present on the green component leading to the term RGsB. The use of the green component for sync has led to suggestions that the components should be called GBR. As the original and long-standing term RGB or RGsB correctly reflects the sequence of the colours in the spectrum it remains to be seen whether GBR will achieve common usage. Like luma, RGsB signals may use 0.7 or 0.714 V signals, with or without set-up.

RGB and Y signals are incompatible, yet when colour television was introduced it was a practical necessity that it should be possible to display colour signals on a monochrome display and vice versa.

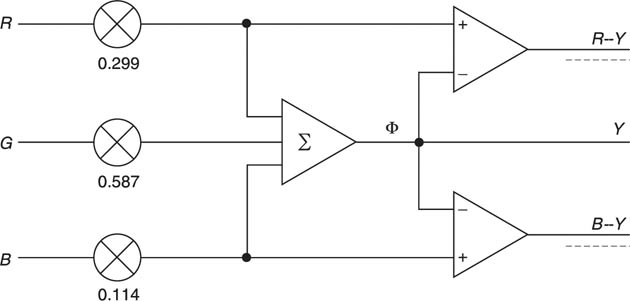

Creating or transcoding a luma signal from R, Gs and B is relatively easy. Figure 2.13 showed the spectral response of the eye which has a peak in the green region. Green objects will produce a larger stimulus than red objects of the same brightness, with blue objects producing the least stimulus. A luma signal can be obtained by adding R, G and B together, not in equal amounts, but in a sum which is weighted by the relative response of the eye. Thus:

Syncs may be regenerated, but will be identical to those on the Gs input and when added to Y result in Ys as required.

If Ys is derived in this way, a monochrome display will show nearly the same result as if a monochrome camera had been used in the first place. The results are not identical because of the non-linearities introduced by gamma correction.

As colour pictures require three signals, it should be possible to send Ys and two other signals which a colour display could arithmetically convert back to R, G and B. There are two important factors which restrict the form which the other two signals may take. One is to achieve reverse compatibility. If the source is a monochrome camera, it can only produce Ys and the other two signals will be completely absent. A colour display should be able to operate on the Ys signal only and show a monochrome picture. The other is the requirement to conserve bandwidth for economic reasons.

These requirements are met by sending two colour difference signals along with Ys. There are three possible colour difference signals, R–Y, B–Y and G. As the green signal makes the greatest contribution to, then the amplitude of G–Y would be the smallest and would be most susceptible to noise. Thus R–Y and B–Y are used in practice as Figure 2.26 shows.

Figure 2.26 Colour components are converted to colour difference signals by the transcoding shown here.

R and B are readily obtained by adding Y to the two colour difference signals. G is obtained by rearranging the expression for Y above such that:

If a colour CRT is being driven, it is possible to apply inverted luma to the cathodes and the R – Y and B – Y signals directly to two of the grids so that the tube performs some of the matrixing. It is then only necessary to obtain G – Y for the third grid, using the expression:

If a monochrome source having only a Ys output is supplied to a colour display, R–Y and B–Y will be zero. It is reasonably obvious that if there are no colour difference signals the colour signals cannot be different from one another and R = G = B. As a result the colour display can produce only a neutral picture.

The use of colour difference signals is essential for compatibility in both directions between colour and monochrome, but it has a further advantage that follows from the way in which the eye works. In order to produce the highest resolution in the fovea, the eye will use signals from all types of cone, regardless of colour. In order to determine colour the stimuli from three cones must be compared. There is evidence that the nervous system uses some form of colour difference processing to make this possible. As a result, the acuity of the human eye is available only in monochrome. Differences in colour cannot be resolved so well. A further factor is that the lens in the human eye is not achromatic and this means that the ends of the spectrum are not well focused. This is particularly noticeable on blue.

If the eye cannot resolve colour very well there is no point is expending valuable bandwidth sending high-resolution colour signals. Colour difference working allows the luma to be sent separately at full bandwidth. This determines the subjective sharpness of the picture. The colour difference signals can be sent with considerably reduced bandwidth, as little as one quarter that of luma, and the human eye is unable to tell.

In practice, analog component signals are never received perfectly, but suffer from slight differences in relative gain. In the case of RGB a gain error in one signal will cause a colour cast on the received picture. A gain error in Y causes no colour cast and gain errors in R – Y or B – Y cause much smaller perceived colour casts. Thus colour difference working is also more robust than RGB working.

The overwhelming advantages obtained by using colour difference signals mean that in broadcast and production facilities RGB is seldom used. The outputs from the RGB sensors in the camera are converted directly to Y, R – Y and B – Y in the camera control unit and output in that form. Standards exist for both analog and digital colour difference signals to ensure compatibility between equipment from various manufacturers. The M-II and Betacam formats record analog colour difference signals, and there are a number of colour difference digital formats.

Whilst signals such as Y, R, G and B are unipolar or positive only, it should be stressed that colour difference signals are bipolar and may meaningfully take on levels below zero volts.

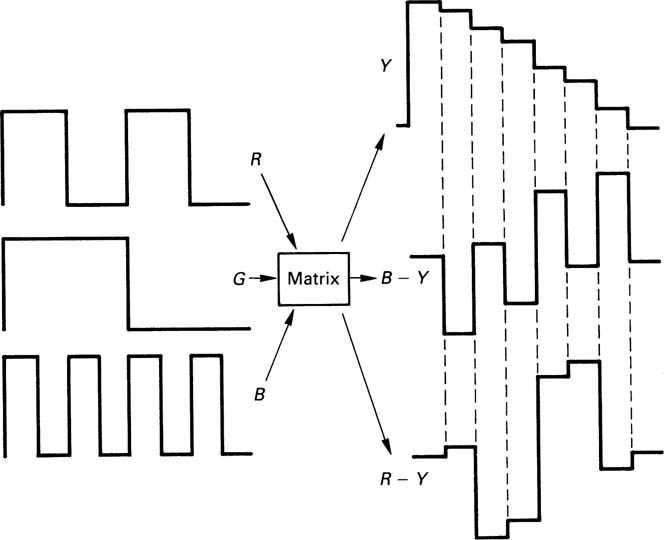

The wide use of colour difference signals has led to the development of test signals and equipment to display them. The most important of the test signals are the ubiquitous colour bars. Colour bars are used to set the gains and timing of signal components and to check that matrix operations are performed using the correct weighting factors. The origin of the colour bar test signal is shown in Figure 2.27. In 100 per cent amplitude bars, peak amplitude binary RGB signals are produced, having one, two and four cycles per screen width. When these are added together in a weighted sum, an eight-level luma staircase results because of the unequal weighting. The matrix also produces two colour difference signals, R – Y and B – Y as shown. Sometimes 75 per cent amplitude bars are generated by suitably reducing the RGB signal amplitude. Note that in both cases the colours are fully saturated; it is only the brightness which is reduced to 75 per cent. Sometimes the white bar of a 75 per cent bar signal is raised to 100 per cent to make calibration easier. Such a signal is sometimes erroneously called a 100 per cent bar signal.

Figure 2.27 Origin of colour difference signals representing colour bars. Adding R, G and B according to the weighting factors produces an irregular luminance staircase.

Figure 2.28(a) shows an SMPTE/EBU standard colour difference signal set in which the signals are called Ys, Ph and Pr. 0.3 V syncs are on luma only and all three video signals have a 0.7 V peak-to-peak swing with 100 per cent bars. In order to obtain these voltage swings, the following gain corrections are made to the components:

Figure 2.28 (a) 100 per cent colour bars represented by SMPTE/EBU standard colour difference signals. (b) Level comparison is easier in waveform monitors if the B-Y and R-Y signals are offset upwards.

Within waveform monitors, the colour difference signals may be offset by 350 mV as in Figure 2.28(b) to match the luma range for display purposes.

2.10 Motion portrayal and dynamic resolution

As the eye uses involuntary tracking at all times, the criterion for measuring the definition of moving-image portrayal systems has to be dynamic resolution, defined as the apparent resolution perceived by the viewer in an object moving within the limits of accurate eye tracking. The traditional metric of static resolution in film and television has to be abandoned as unrepresentative.

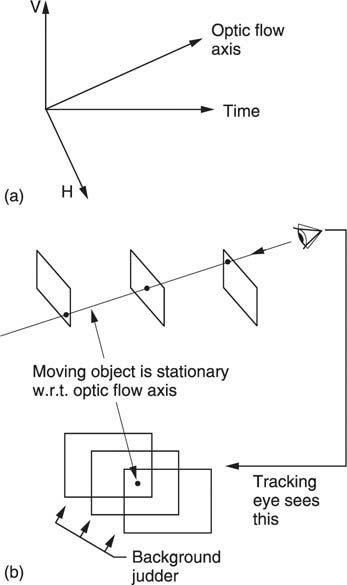

Figure 2.29(a) shows that when the moving eye tracks an object on the screen, the viewer is watching with respect to the optic flow axis, not the time axis, and these are not parallel when there is motion. The optic flow axis is defined as an imaginary axis in the spatio-temporal volume which joins the same points on objects in successive frames. Clearly, when many objects move independently there will be one optic flow axis for each.

Figure 2.29 The optic flow axis (a) joins points on a moving object in successive pictures. (b) When a tracking eye follows a moving object on a screen, that screen will be seen in a different place at each picture. This is the origin of background strobing.

The optic flow axis is identified by motion-compensated standards convertors to eliminate judder and also by MPEG compressors because the greatest similarity from one picture to the next is along that axis. The success of these devices is testimony to the importance of the theory.

Figure 2.29(b) shows that when the eye is tracking, successive pictures appear in different places with respect to the retina. In other words if an object is moving down the screen and followed by the eye, the raster is actually moving up with respect to the retina. Although the tracked object is stationary with respect to the retina and temporal frequencies are zero, the object is moving with respect to the sensor and the display and in those units high temporal frequencies will exist. If the motion of the object on the sensor is not correctly portrayed, dynamic resolution will suffer.

In real-life eye tracking, the motion of the background will be smooth, but in an image-portrayal system based on periodic presentation of frames, the background will be presented to the retina in a different position in each frame. The retina seperately perceives each impression of the background leading to an effect called background strobing.

The criterion for the selection of a display frame rate in an imaging system is sufficient reduction of background strobing. It is a complete myth that the display rate simply needs to exceed the critical flicker frequency. Manufacturers of graphics displays which use frame rates well in excess of those used in film and television are doing so for a valid reason: it gives better results! Note that the display rate and the transmission rate need not be the same in an advanced system.

Dynamic resolution analysis confirms that both interlaced television and conventionally projected cinema film are both seriously sub-optimal. In contrast, progressively scanned television systems have no such defects.

2.11 Progressive or interlaced scan?

Interlaced scanning is a crude compression technique which was developed empirically in the 1930s as a way of increasing the picture rate to reduce flicker without increasing the video bandwidth. Instead of transmitting entire frames, the lines of the frame are sorted into odd lines and even lines. Odd lines are transmitted in one field, even lines in the next. A pair of fields will interlace to produce a frame. Vertical detail such as an edge may only be present in one field of the pair and this results in frame rate flicker called ‘interlace twitter’.

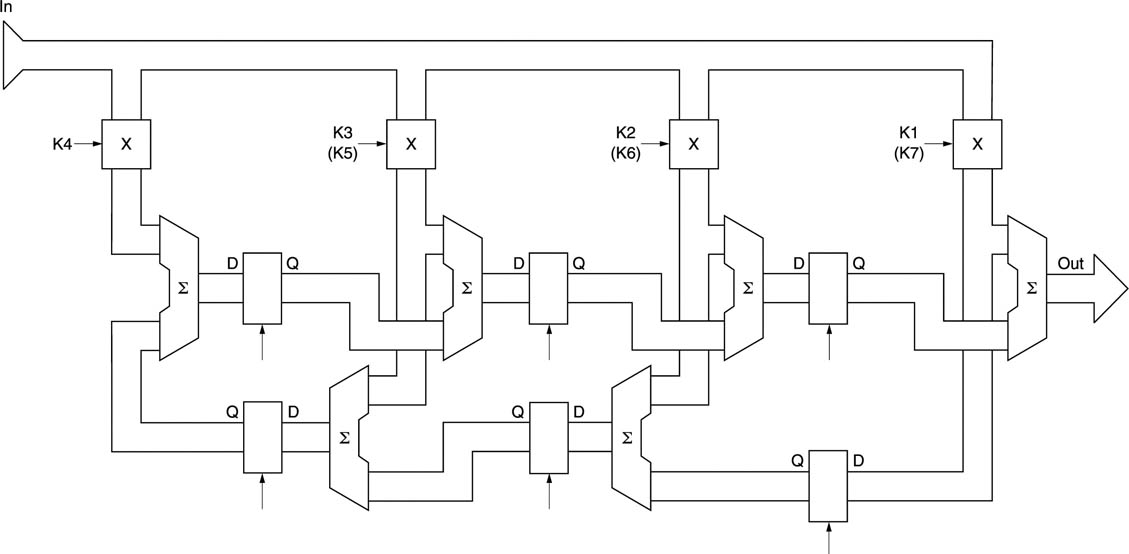

Figure 2.30(a) shows a dynamic resolution analysis of interlaced scanning. When there is no motion, the optic flow axis and the time axis are parallel and the apparent vertical sampling rate is the number of lines in a frame. However, when there is vertical motion, (b), the optic flow axis turns. In the case shown, the sampling structure due to interlace results in the vertical sampling rate falling to one half of its stationary value.

Figure 2.30 When an interlaced picture is stationary, viewing takes place along the time axis as shown in (a). When a vertical component of motion exists, viewing takes place along the optic flow axis, (b) The vertical sampling rate falls to one half its stationary value, (c) The halving in sampling rate causes high spatial frequencies to alias.

Consequently interlace does exactly what would be expected from a half-bandwidth filter. It halves the vertical resolution when any motion with a vertical component occurs. In a practical television system, there is no anti-aliasing filter in the vertical axis and so when the vertical sampling rate of an interlaced system is halved by motion, high spatial freqiencies will alias or heterodyne causing annoying artifacts in the picture. This is easily demonstrated.

Figure 2.30(c) shows how a vertical spatial frequency well within the static resolution of the system aliases when motion occurs. In a progressive scan system this effect is absent and the dynamic resolution due to scanning can be the same as the static case.

This analysis also illustrates why interlaced television systems have to have horizontal raster lines. This is because in real life, horizontal motion is more common than vertical. It is easy to calculate the vertical image motion velocity needed to obtain the half-bandwidth speed of interlace, because it amounts to one raster line per field. In 525/60 (NTSC) there are about 500 active lines, so motion as slow as one picture height in 8 seconds will halve the dynamic resolution. In 625/50 (PAL) there are about 600 lines, so the half-bandwidth speed falls to one picture height in 12 seconds. This is why NTSC, with fewer lines and lower bandwidth, doesn’t look as soft as it should compared to PAL, because it has better dynamic resolution.

The situation deteriorates rapidly if an attempt is made to use interlaced scanning in systems with a lot of lines. In 1250/50, the resolution is halved at a vertical speed of just one picture height in 24 seconds. In other words on real moving video a 1250/50 interlaced system has the same dynamic resolution as a 625/50 progressive system. By the same argument a 1080 I system has the same performance as a 480 P system.

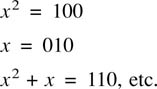

2.12 Binary codes

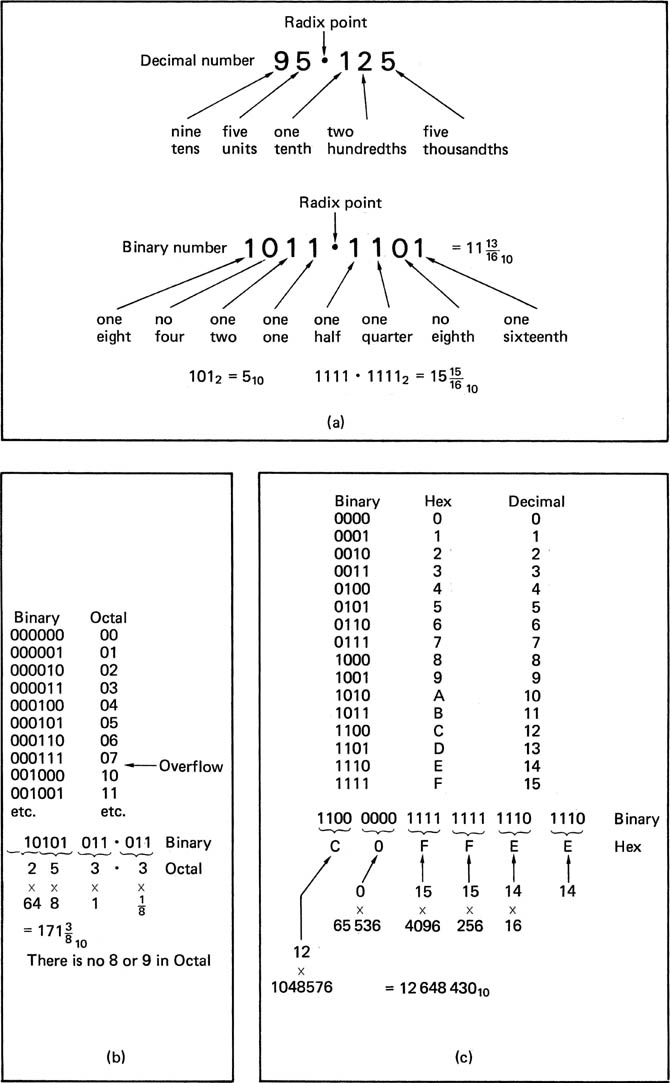

For digital video use, the prime purpose of binary numbers is to express the values of the samples which represent the original analog video waveform. Figure 2.31 shows some binary numbers and their equivalent in decimal. The radix point has the same significance in binary: symbols to the right of it represent one half, one quarter and so on. Binary is convenient for electronic circuits, which do not get tired, but numbers expressed in binary become very long, and writing them is tedious and error-prone. The octal and hexadecimal notations are both used for writing binary since conversion is so simple. Figure 2.31 also shows that a binary number is split into groups of three or four digits starting at the least significant end, and the groups are individually converted to octal or hexadecimal digits. Since sixteen different symbols are required in hex. the letters A – F are used for the numbers above nine.

Figure 2.31 (a) Binary and decimal; (b) In octal, groups of three bits make one symbol 0–7. (c) In hex, groups of four bits make one symbol O-F. Note how much shorter the number is in hex.

There will be a fixed number of bits in a PCM video sample, and this number determines the size of the quantizing range. In the eight-bit samples used in much digital video equipment, there are 256 different numbers. Each number represents a different analog signal voltage, and care must be taken during conversion to ensure that the signal does not go outside the convertor range, or it will be clipped. In Figure 2.32(a) it will be seen that in an eight-bit pure binary system, the number range goes from 00 hex, which represents the smallest voltage, through to FF hex, which represents the largest positive voltage. The video waveform must be accommodated within this voltage range, and (b) shows how this can be done for a PAL composite signal. A luminance signal is shown in (c). As component digital systems only handle the active line, the quantizing range is optimized to suit the gamut of the unblanked luminance. There is a small offset in order to handle slightly maladjusted inputs.

Figure 2.32 The unipolar quantizing range of an eight-bit pure binary system is shown at (a). The analog input must be shifted to fit into the quantizing range, as shown for PAL at (b). In component, sync pulses are not digitized, so the quantizing intervals can be smaller as at (c). An offset of half scale is used for colour difference signals (d).

Colour difference signals are bipolar and so blanking is in the centre of the signal range. In order to accommodate colour difference signals in the quantizing range, the blanking voltage level of the analog waveform has been shifted as in Figure 2.32(d) so that the positive and negative voltages in a real video signal can be expressed by binary numbers which are only positive. This approach is called offset binary. Strictly speaking, both the composite and luminance signals are also offset binary because the blanking level is part-way up the quantizing scale.

Offset binary is perfectly acceptable where the signal has been digitized only for recording or transmission from one place to another, after which it will be converted directly back to analog. Under these conditions it is not necessary for the quantizing steps to be uniform, provided both ADC and DAC are constructed to the same standard. In practice, it is the requirements of signal processing in the digital domain which make both non-uniform quantizing and offset binary unsuitable.

Figure 2.33 shows that analog video signal voltages are referred to blanking. The level of the signal is measured by how far the waveform deviates from blanking, and attenuation, gain and mixing all take place around blanking level. Digital vision mixing is achieved by adding sample values from two or more different sources, but unless all the quantizing intervals are of the same size and there is no offset, the sum of two sample values will not represent the sum of the two original analog voltages. Thus sample values which have been obtained by non-uniform or offset quantizing cannot readily be processed because the binary numbers are not proportional to the signal voltage.

Figure 2.33 All video signal voltages are referred to blanking and must be added with respect to that level.

If two offset binary sample streams are added together in an attempt to perform digital mixing, the result will be that the offsets are also added and this may lead to an overflow. Similarly, if an attempt is made to attenuate by, say, 6.02 dB by dividing all the sample values by two, Figure 2.34 shows that the offset is also divided and the waveform suffers a shifted baseline. This problem can be overcome with digital luminance signals simply by subtracting the offset from each sample before processing as this results in numbers truly proportional to the luminance voltage. This approach is not suitable for colour difference or composite signals because negative numbers would result when the analog voltage goes below blanking and pure binary coding cannot handle them. The problem with offset binary is that it works with reference to one end of the range. What is needed is a numbering system which operates symmetrically with reference to the centre of the range.

Figure 2.34 The result of an attempted attenuation in pure binary code is an offset. Pure binary cannot be used for digital video processing.

In the two’s complement system, the upper half of the pure binary number range has been redefined to represent negative quantities. If a pure binary counter is constantly incremented and allowed to overflow, it will produce all the numbers in the range permitted by the number of available bits, and these are shown for a four-bit example drawn around the circle in Figure 2.35. As a circle has no real beginning, it is possible to consider it to start wherever it is convenient. In two’s complement, the quantizing range represented by the circle of numbers does not start at zero, but starts on the diametrically opposite side of the circle. Zero is midrange, and all numbers with the MSB (most significant bit) set are considered negative. The MSB is thus the equivalent of a sign bit where 1 = minus. Two’s complement notation differs from pure binary in that the most significant bit is inverted in order to achieve the half-circle rotation.

Figure 2.35 In this example of a four-bit two’s complement code, the number range is from -8 to +7. Note that the MSB determines polarity.

Figure 2.36 shows how a real ADC is configured to produce two’s complement output. At (a) an analog offset voltage equal to one half the quantizing range is added to the bipolar analog signal in order to make it unipolar as at (b). The ADC produces positive only numbers at (c) which are proportional to the input voltage. The MSB is then inverted at (d) so that the all-zeros code moves to the centre of the quantizing range. The analog offset is often incorporated into the ADC as is the MSB inversion. Some convertors are designed to be used in either pure binary or two’s complement mode. In this case the designer must arrange the appropriate DC conditions at the input. The MSB inversion may be selectable by an external logic level. In the digital video interface standards the colour difference signals use offset binary because the codes of all zeros and all ones are at the end of the range and can be reserved for synchronizing. A digital vision mixer simply inverts the MSB of each colour difference sample to convert it to two’s complement.

Figure 2.36 A two’s complement ADC. At (a) an analog offset voltage equal to one-half the quantizing range is added to the bipolar analog signal in order to make it unipolar as at (b). The ADC produces positive only numbers at (c), but the MSB is then inverted at (d) to give a two’s complement output.

The two’s complement system allows two sample values to be added, or mixed in video parlance, and the result will be referred to the system midrange; this is analogous to adding analog signals in an operational amplifier.

Figure 2.37 illustrates how adding two’s complement samples simulates a bipolar mixing process. The waveform of input A is depicted by solid black samples, and that of B by samples with a solid outline. The result of mixing is the linear sum of the two waveforms obtained by adding pairs of sample values. The dashed lines depict the output values. Beneath each set of samples is the calculation which will be seen to give the correct result. Note that the calculations are pure binary. No special arithmetic is needed to handle two’s complement numbers.

Figure 2.37 Using two’s complement arithmetic, single values from two waveforms are added together with respect to midrange to give a correct mixing function.

It is sometimes necessary to phase reverse or invert a digital signal. The process of inversion in two’s complement is simple. All bits of the sample value are inverted to form the one’s complement, and one is added. This can be checked by mentally inverting some of the values in Figure 2.35. The inversion is transparent and performing a second inversion gives the original sample values.

Using inversion, signal subtraction can be performed using only adding logic. The inverted input is added to perform a subtraction, just as in the analog domain. This permits a significant saving in hardware complexity, since only carry logic is necessary and no borrow mechanism need be supported.

In summary, two’s complement notation is the most appropriate scheme for bipolar signals, and allows simple mixing in conventional binary adders. It is in virtually universal use in digital video and audio processing.

Two’s complement numbers can have a radix point and bits below it just as pure binary numbers can. It should, however, be noted that in two’s complement, if a radix point exists, numbers to the right of it are added. For example, 1100.1 is not −4.5, it is–4 + 0.5 = 3.5.

2.13 Introduction to digital logic

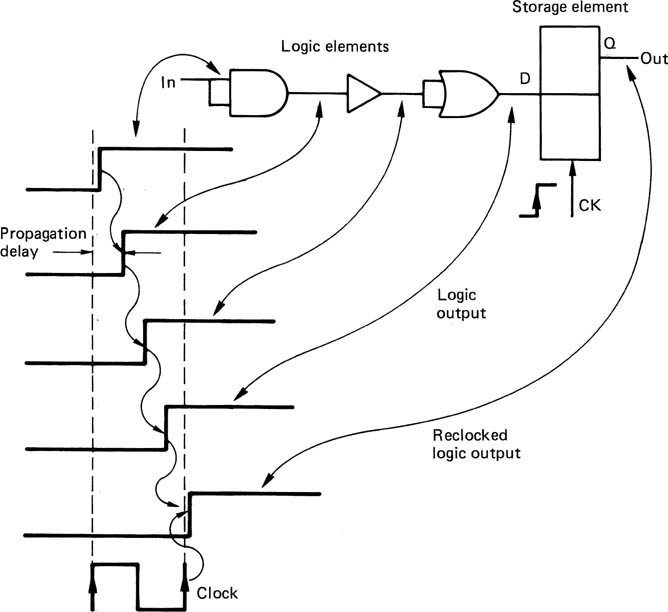

However complex a digital process, it can be broken down into smaller stages until finally one finds that there are really only two basic types of element in use, and these can be combined in some way and supplied with a clock to implement virtually any process. Figure 2.38 shows that the first type is a logic element. This produces an output which is a logical function of the input with minimal delay. The second type is a storage element which samples the state of the input(s) when clocked and holds or delays that state. The strength of binary logic is that the signal has only two states, and considerable noise and distortion of the binary waveform can be tolerated before the state becomes uncertain. At every logic element, the signal is compared with a threshold, and can thus can pass through any number of stages without being degraded.

Figure 2.38 Logic elements have a finite propagation delay between input and output and cascading them delays the signal an arbitrary amount. Storage elements sample the input on a clock edge and can return a signal to near coincidence with the system clock. This is known as reclocking. Reclocking eliminates variations in propagation delay in logic elements.

In addition, the use of a storage element at regular locations throughout logic circuits eliminates time variations or jitter. Figure 2.38 shows that if the inputs to a logic element change, the output will not change until the propagation delay of the element has elapsed. However, if the output of the logic element forms the input to a storage element, the output of that element will not change until the input is sampled at the next clock edge. In this way the signal edge is aligned to the system clock and the propagation delay of the logic becomes irrelevant. The process is known as reclocking.

The two states of the signal when measured with an oscilloscope are simply two voltages, usually referred to as high and low. As there are only two states, there can only be true or false meanings. The true state of the signal can be assigned by the designer to either voltage state. When a high voltage represents a true logic condition and a low voltage represents a false condition, the system is known as positive logic, or high true logic. This is the usual system, but sometimes the low voltage represents the true condition and the high voltage represents the false condition. This is known as negative logic or low true logic. Provided that everyone is aware of the logic convention in use, both work equally well.

In logic systems, all logical functions, however complex, can be configured from combinations of a few fundamental logic elements or gates. It is not profitable to spend too much time debating which are the truly fundamental ones, since most can be made from combinations of others. Figure 2.39 shows the important simple gates and their derivatives, and introduces the logical expressions to describe them, which can be compared with the truth-table notation. The figure also shows the important fact that when negative logic is used, the OR gate function interchanges with that of the AND gate.

Figure 2.39 The basic logic gates compared.

If numerical quantities need to be conveyed down the two-state signal paths described here, then the only appropriate numbering system is binary, which has only two symbols, 0 and 1. Just as positive or negative logic could be used for the truth of a logical binary signal, it can also be used for a numerical binary signal. Normally, a high voltage level will represent a binary 1 and a low voltage will represent a binary 0, described as a ‘high for a one’ system. Clearly a ‘low for a one’ system is just as feasible. Decimal numbers have several columns, each of which represents a different power of ten; in binary the column position specifies the power of two.

Several binary digits or bits are needed to express the value of a binary video sample. These bits can be conveyed at the same time by several signals to form a parallel system, which is most convenient inside equipment or for short distances because it is inexpensive, or one at a time down a single signal path, which is more complex, but convenient for cables between pieces of equipment because the connectors require fewer pins. When a binary system is used to convey numbers in this way, it can be called a digital system.

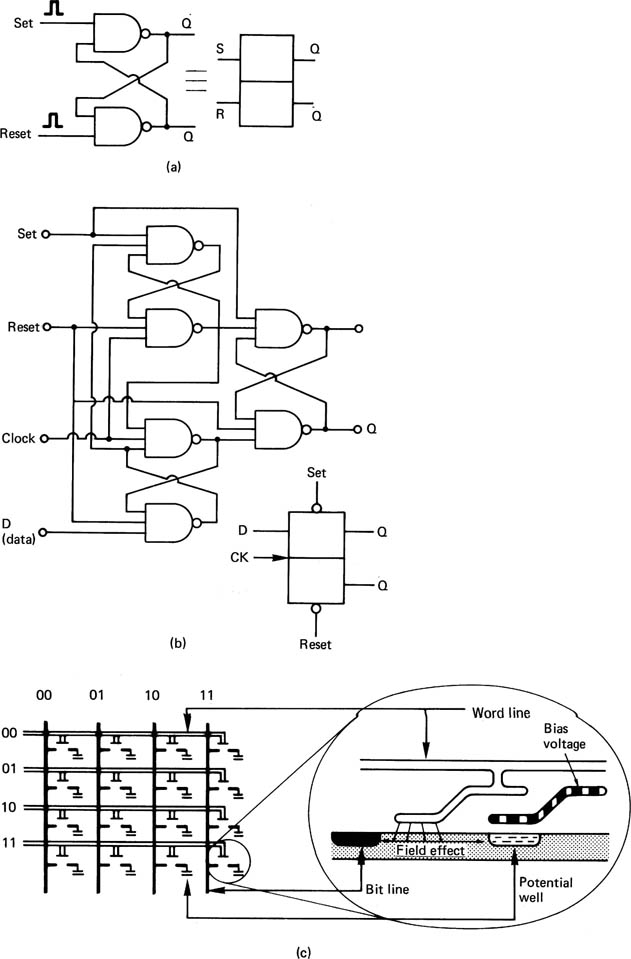

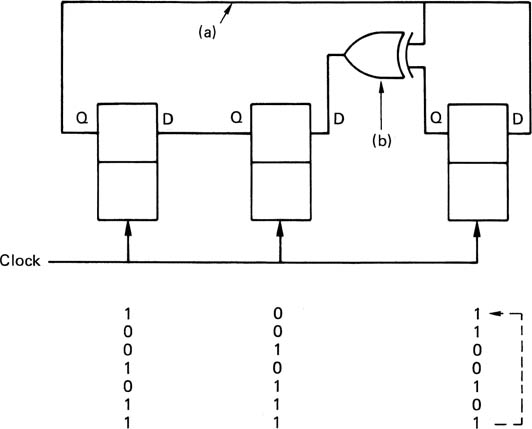

The basic memory element in logic circuits is the latch, which is constructed from two gates as shown in Figure 2.40(a), and which can be set or reset. A more useful variant is the D-type latch shown at (b) which remembers the state of the input at the time a separate clock either changes state for an edge-triggered device, or after it goes false for a level-triggered device. D-type latches are commonly available with four or eight latches to the chip. A shift register can be made from a series of latches by connecting the Q output of one latch to the D input of the next and connecting all the clock inputs in parallel. Data are delayed by the number of stages in the register. Shift registers are also useful for converting between serial and parallel data transmissions.

Figure 2.40 Digital semiconductor memory types. In (a), one data bit can be stored in a simple set-reset latch, which has little application because the D-type latch in (b) can store the state of the single data input when the clock occurs. These devices can be implemented with bipolar transistors or FETs, and are called static memories because they can store indefinitely. They consume a lot of power.

Figure 2.40 In (c), a bit is stored as the charge in a potential well in the substrate of a chip. It is accessed by connecting the bit line with the field effect from the word line. The single well where the two lines cross can then be written or read. These devices are called dynamic RAMs because the charge decays, and they must be read and rewritten (refreshed) periodically.

Where large numbers of bits are to be stored, cross-coupled latches are less suitable because they are more complicated to fabricate inside integrated circuits than dynamic memory, and consume more current.

In large random access memories (RAMs), the data bits are stored as the presence or absence of charge in a tiny capacitor as shown in Figure 2.40(c). The capacitor is formed by a metal electrode, insulated by a layer of silicon dioxide from a semiconductor substrate, hence the term MOS (metal oxide semiconductor). The charge will suffer leakage, and the value would become indeterminate after a few milliseconds. Where the delay needed is less than this, decay is of no consequence, as data will be read out before they have had a chance to decay. Where longer delays are necessary, such memories must be refreshed periodically by reading the bit value and writing it back to the same place. Most modern MOS RAM chips have suitable circuitry built-in. Large RAMs store thousands of bits, and it is clearly impractical to have a connection to each one. Instead, the desired bit has to be addressed before it can be read or written. The size of the chip package restricts the number of pins available, so that large memories use the same address pins more than once. The bits are arranged internally as rows and columns, and the row address and the column address are specified sequentially on the same pins.

The circuitry necessary for adding pure binary or two’s complement numbers is shown in Figure 2.41. Addition in binary requires two bits to be taken at a time from the same position in each word, starting at the least significant bit. Should both be ones, the output is zero, and there is a carry-out generated. Such a circuit is called a half adder, shown in Figure 2.41(a) and is suitable for the least significant bit of the calculation. All higher stages will require a circuit which can accept a carry input as well as two data inputs. This is known as a full adder (Figure 2.41(b)). Multibit full adders are available in chip form, and have carryin and carry-out terminals to allow them to be cascaded to operate on long wordlengths. Such a device is also convenient for inverting a two’s complement number, in conjunction with a set of invertors. The adder chip has one set of inputs grounded, and the carry-in permanently held true, such that it adds one to the one’s complement number from the invertor.

Figure 2.41 (a) Half adder; (b) full-adder circuit and truth table; (c) comparison of sign bits prevents wraparound on adder overflow by substituting clipping level.

When mixing by adding sample values, care has to be taken to ensure that if the sum of the two sample values exceeds the number range the result will be clipping rather than wraparound. In two’s complement, the action necessary depends on the polarities of the two signals. Clearly, if one positive and one negative number are added, the result cannot exceed the number range. If two positive numbers are added, the symptom of positive overflow is that the most significant bit sets, causing an erroneous negative result, whereas a negative overflow results in the most significant bit clearing. The overflow control circuit will be designed to detect these two conditions, and override the adder output. If the MSB of both inputs is zero, the numbers are both positive, thus if the sum has the MSB set, the output is replaced with the maximum positive code (0111 …). If the MSB of both inputs is set, the numbers are both negative, and if the sum has no MSB set, the output is replaced with the maximum negative code (1000 …). These conditions can also be connected to warning indicators. Figure 2.41(c) shows this system in hardware. The resultant clipping on overload is sudden, and sometimes a PROM is included which translates values around and beyond maximum to soft-clipped values below or equal to maximum.

A storage element can be combined with an adder to obtain a number of useful functional blocks which will crop up frequently in audio equipment. Figure 2.42(a) shows that a latch is connected in a feedback loop around an adder. The latch contents are added to the input each time it is clocked. The configuration is known as an accumulator in computation because it adds up or accumulates values fed into it. In filtering, it is known as an discrete time integrator. If the input is held at some constant value, the output increases by that amount on each clock. The output is thus a sampled ramp.

Figure 2.42 Two configurations which are common in processing. In (a) the feedback around the adder adds the previous sum to each input to perform accumulation or digital integration. In (b) an inverter allows the difference between successive inputs to be computed. This is differentiation.

Figure 2.42(b) shows that the addition of an invertor allows the difference between successive inputs to be obtained. This is digital differentiation. The output is proportional to the slope of the input.

2.14 The computer

The computer is now a vital part of digital video systems, being used both for control purposes and to process video signals as data. In control, the computer finds applications in database management, automation, editing, and in electromechanical systems such as tape drives and robotic cassette handling. Now that processing speeds have advanced sufficiently, computers are able to manipulate certain types of digital video in real time. Where very complex calculations are needed, real-time operation may not be possible and instead the computation proceeds as fast as it can in a process called rendering. The rendered data are stored so that they can be viewed in real time from a storage medium when the rendering is complete.

The computer is a programmable device in that its operation is not determined by its construction alone, but instead by a series of instructions forming a program. The program is supplied to the computer one instruction at a time so that the desired sequence of events takes place.

Programming of this kind has been used for over a century in electromechanical devices, including automated knitting machines and street organs which are programmed by punched cards. However, the computer differs from these devices in that the program is not fixed, but can be modified by the computer itself. This possibility led to the creation of the term software to suggest a contrast to the constancy of hardware.

Computer instructions are binary numbers each of which is interpreted in a specific way. As these instructions don’t differ from any other kind of data, they can be stored in RAM. The computer can change its own instructions by accessing the RAM. Most types of RAM are volatile, in that they lose data when power is removed. Clearly if a program is entirely stored in this way, the computer will not be able to recover fom a power failure. The solution is that a very simple starting or bootstrap program is stored in non-volatile ROM which will contain instructions that will bring in the main program from a storage system such as a disk drive after power is applied. As programs in ROM cannot be altered, they are sometimes referred to as firmware to indicate that they are classified between hardware and software.

Making a computer do useful work requires more than simply a program which performs the required computation. There is also a lot of mundane activity which does not differ significantly from one program to the next. This includes deciding which part of the RAM will be occupied by the program and which by the data, producing commands to the storage disk drive to read the input data from a file and write back the results. It would be very inefficient if all programs had to handle these processes themselves. Consequently the concept of an operating system was developed. This manages all the mundane decisions and creates an environment in which useful programs or applications can execute.

The ability of the computer to change its own instructions makes it very powerful, but it also makes it vulnerable to abuse. Programs exist which are deliberately written to do damage. These viruses are generally attached to plausible messages or data files and enter computers through storage media or communications paths.

There is also the possibility that programs contain logical errors such that in certain combinations of circumstances the wrong result is obtained. If this results in the unwitting modification of an instruction, the next time that instruction is accessed the computer will crash. In consumer-grade software, written for the vast personal computer market, this kind of thing is unfortunately accepted.

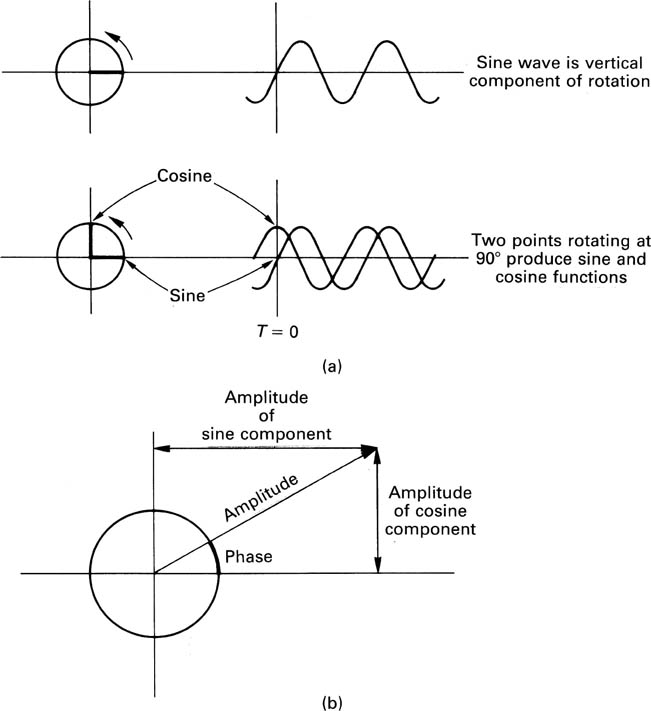

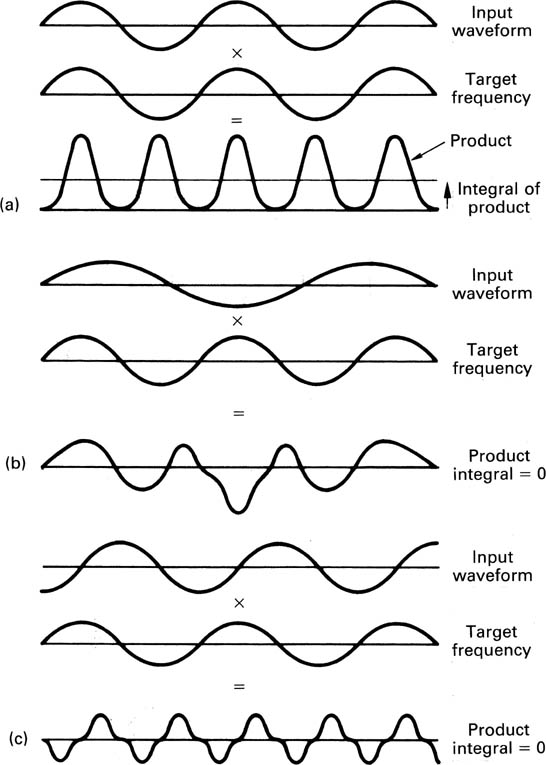

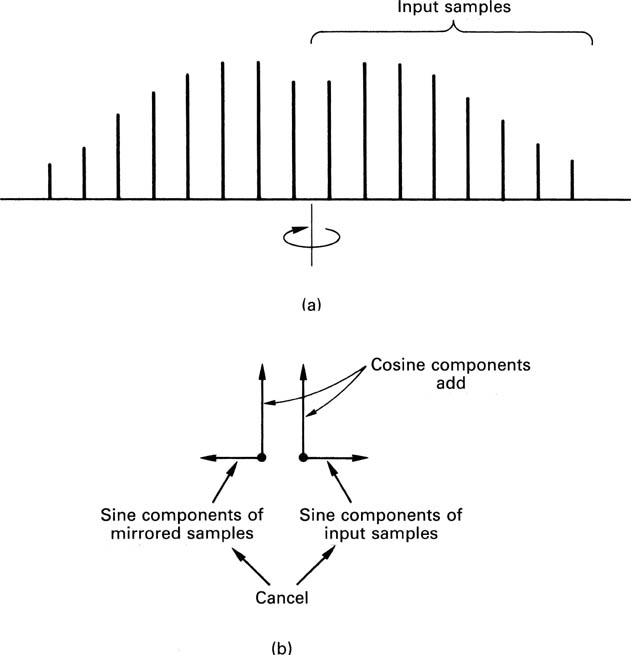

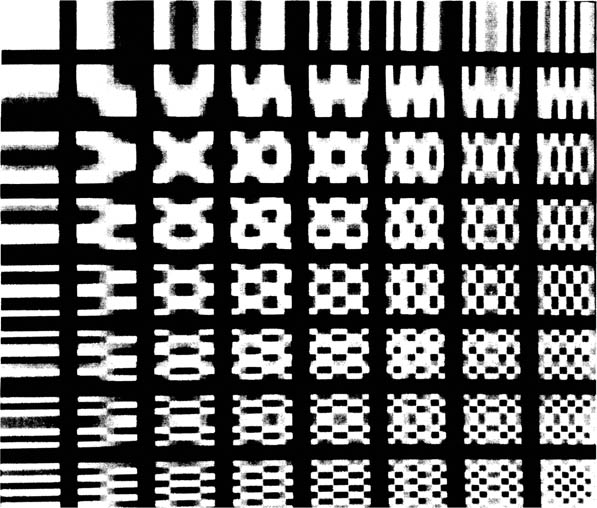

For critical applications, software must be verified. This is a process which can prove that a program can recover from absolutely every combination of circumstances and keep running properly. This is a non-trivial process, because the number of combinations of states a computer can get into is staggering. As a result most software is unverified.