Chapter 9

Service Delivery Maturity and the Service Engineering Lead

If you cannot see where you are going, ask someone who has been there before.

JJ Loren Norris

Delivering services effectively can be difficult. Many service delivery teams focus on the challenge of figuring out how to deliver what the customer wants seamlessly and cost-effectively. But customers need more than just a bunch of features. They also need services to have the “ilities” (described in the previous chapter) required to meet their outcomes. A lot of service delivery teams struggle to build the maturity to consistently and confidently provide those “ilities.” Growing ecosystem complexity only makes that maturity harder to achieve. As the size and number of teams, instances, and services grow, even seemingly great teams can struggle to maintain shared awareness and cross-organizational alignment.

Unfortunately, emerging tools and delivery approaches have mostly continued to focus on removing delivery friction. As a result, they often contribute little to ensuring that the information flow needed to stay aware of the ecosystem interactions that shape service “ilities” is sufficient. This means that small gaps and misalignments are free to spread around the ecosystem, often silently warping our mental model of service dynamics in ways that ultimately undermine the effectiveness of our decision making.

The best way for you and your team to overcome this challenge is to start assessing the maturity of the practices and behaviors you rely upon that impact information flow and situational awareness. Doing so can provide clues to your exposure to awareness problems and their likely sources so that you can begin the process of escaping the awareness fog that damages your decision making, and ultimately your customer’s service experience.

This chapter will introduce you to some approaches that I have found that can help you begin your process of discovery and get you on the right path to improvement. The first is a maturity model framework that can help you discern how well you know, and can effectively manage, the parts of your service ecosystem that affect your ability to deliver your services with the “ilities” that are expected. The framework relies on the knowability concepts from the previous chapter, and adheres to many sensible hygiene, architecture, and collaboration approaches that allow teams to better understand and improve their ability to deliver successfully within the means under their control.

As you progress, you will likely find that solving your awareness fog takes more than a handful of process and tool tweaks. Sometimes team members have such demanding workloads that they are constantly at risk of developing tunnel vision that obscures critical relationships between their area and the larger ecosystem. Other times the need for up-to-date, deeply technical information is so high due to rapid and complex ecosystem dynamics that it cannot be fully satisfied by even the most engaged and well-intentioned manager, architect, or project/program manager.

Such challenges are where the second piece fits in. It is a rotating mechanism that can more directly help boost team awareness and alignment. Depending upon how complex and fragmented your ecosystem is, as well as what capacity and ability your team has to improve, it can be used on either a temporary or more continual rolling basis. I like to call this mechanism the Service Engineering Lead, or SE Lead for short.

This chapter will cover what these mechanisms are, how they work, and some ideas for how they can be rolled out in your organization.

Modeling Service Delivery Maturity

Our industry is festooned with all sorts of maturity models. So many of them are little more than gimmicks to sell consulting services that even mentioning the concept can evoke groans from team members. However, there are some places where a well-structured model can be an effective way to measure and guide an organization toward more predictably, reliably, and efficiently delivering the capabilities and services necessary to satisfy their customers.

It might sound obvious, but one of the best ways to determine whether a given maturity model is likely to help is to look at what is being measured. A helpful model should teach you how to spot and then either mitigate or remove the root causes of any unpredictability and risk plaguing your organization. This differs from more-flawed maturity models, which tend to focus on counting outputs that, at best, only expose symptoms rather than their root causes.

The beauty of a maturity model is that, unlike a school assessment grade, you do not have to have a perfect score across every dimension to have the maturity level that is most practical for you. You may encounter situations, as I have, where some immaturity and variability in an area is perfectly acceptable as long as its causes and range are known and never affect the “ilities” customers depend upon.

In fact, any maturity model that insists success only comes when every score is perfect is inherently flawed. I regularly encounter organizations that seem to aim for high levels in a number of areas that not only are unnecessary but damage their ability to deliver the “ilities” that the customer actually needs. This is why your maturity model needs to be implemented in a way that is attuned to your customer outcomes and “ilities.”

One such example is the set of measures that aid service availability, such as supportability and coupling. Even without a maturity model in the way, availability measures often do not match what the customer actually needs. I have seen teams strain to meet some mythical 99.999% uptime number, not realizing that customers only need the services to be up during a predictable window during the business day. Customers generally do not care if a service is available when there is no chance they will need to use it. In fact, they likely would prefer a much simpler and cheaper service that is available when they need it over a dangerously over-engineered and always available service that is expensive and slow to adapt to their needs.
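The arithmetic behind that trade-off can be sketched quickly. The following is a minimal illustration, assuming a hypothetical business window of 10 hours a day, 5 days a week, and a hypothetical 99.9% target inside that window; neither figure comes from a real customer.

```python
MINUTES_PER_YEAR = 365 * 24 * 60  # 525,600 minutes in a non-leap year


def allowed_downtime_minutes(availability: float, window_minutes: float) -> float:
    """Minutes of downtime permitted inside a window at a given availability."""
    return window_minutes * (1.0 - availability)


# "Five nines" measured around the clock.
always_on = allowed_downtime_minutes(0.99999, MINUTES_PER_YEAR)

# Assumed business window: 10 hours a day, 5 days a week, 52 weeks a year.
business_window = 10 * 60 * 5 * 52  # 156,000 minutes
# An assumed, more modest 99.9% target measured only inside that window.
business_hours = allowed_downtime_minutes(0.999, business_window)

print(f"99.999% around the clock: {always_on:.1f} minutes/year")
print(f"99.9% in business window: {business_hours:.1f} minutes/year")
print(f"Share of the year in the window: {business_window / MINUTES_PER_YEAR:.0%}")
```

Under these assumed numbers, five nines around the clock leaves a little over five minutes of downtime a year, while the window covers roughly 30% of the year. Most of the effort spent protecting the remaining hours buys availability that nobody uses.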

To avoid such issues, I try to encourage teams to avoid treating maturity measures as an assessment, and instead use them as a guide to help improve their situational awareness of their service delivery ecosystem to meet the needs of their organization and customer base. Sometimes a maturity measure will expose ility shortfalls that need to be closed, or gaps in skills or information flow that the team might need help to address. Occasionally, teams need management or focused expert help, such as the Service Engineering Lead discussed later, to see and progress. By removing the fear of judgment, teams feel safe to share and ask for help to ultimately deliver effectively.

The Example of Measuring Code Quality

When measuring service delivery maturity, it is important to ensure that you are measuring an area in a way that will sensibly help you meet customer needs. You get what you measure, and it is easy to measure even an important area in a way in which resulting behaviors drift away from your intended goal. A good way to illustrate this is by taking a look at a common metric like code quality.

Code quality can tell you a lot about the likely stability of a given area and a team’s ability to change, extend, and support it. A common assumption is that code quality can be measured by counting and tracking the number of defects that have been registered against it. For this reason, there are entire industries devoted to counting, tracking, and graphing the trends for how defect numbers rise and fall over the lifecycle of various bits of code.

The problem with this metric is that the number of registered defects only captures those that were found and deemed important enough to record. There can be hundreds or even thousands of undiscovered defects. Some might live in unexplored but easily encounterable edge cases. Others might only express themselves with mundane symptoms that cause the team to discount counting them. There might also be defects where interactions between components or services cross the responsibility boundary between teams.

Another problem with this metric is that teams are often rewarded or punished on defect counts rather than the overall success or failure of the service. As you can only count what has been registered as an open defect, team members are encouraged to hide or claim something is a “feature and not a bug” instead of striving to achieve what is actually desired: having higher levels of code quality in the ecosystem in order to build customer confidence in your ability to deliver and support the services with the “ilities” they need to reach their target outcomes.

Instead of counting defects, a good maturity model should look at the ways you and your team proactively mitigate the creation of defects. One such metric is how frequently and extensively teams refactor code. Not only does regular refactoring of legacy code allow the team to reduce the number of bugs in the code base, it also helps members of the team to maintain a current and accurate understanding of the various pieces of code that you rely upon. This reduces the likelihood of erroneous assumptions about a particular code element causing defects to creep in. Factors like having team members rotate around the code base further improve everyone’s shared awareness.

Service Delivery Maturity Model Levels

An optimal service delivery maturity model works on the concept of maturity levels against capability areas of interest. I typically use a set of six levels going from 0 to 5. Besides conveniently aligning with the level count of most other maturity models, the number fits neatly with step changes in the span of shared awareness, learning, and friction management across the delivery ecosystem.

Here is the general outline of each of the levels:

Level 0: Pre-evidence starting point. This is a temporary designation where everyone starts out at the very beginning of onboarding the model before any details have been captured. At Level 0 the maturity model is explained and a plan is put together to find out and capture the level for each capability area of interest. It is not uncommon for a team to immediately leap to Level 2 or 3 from this designation.

Level 1: Emerging Best Practices. This is where the information for a capability area of interest has been captured and found to be immature or in a poor state. At this level a “Get Well” plan with a timeline and sensible owners for each action should be assembled to help put in place improvements to get the area to at least Level 2.

Level 2: Local or Component-Level Maturity. This is the level a team reaches when they know the state of a capability area of interest at a local or component level. They can track its state, understand what customer ilities it contributes or affects, understand how health and performance are monitored, and understand what can go wrong. There is a set of practices for managing activities around the capability that the team has adopted and can continually improve. Information and coordination beyond the local level is limited or immature.

Level 3: Cross-Component Continuous Practices. At this level the team has a firm understanding of which components, services, data elements, and teams their own components and services depend upon, and that understanding is tracked and kept up to date. There is a demonstrably good set of coordination practices and regular clear communication channels with the teams and organizations that provide and support dependencies. For software and services, any APIs are clean and well defined, data models are clear and agreed upon, data flows are known and tracked, testing and monitoring are stable and reliable, coupling between components is well managed and loosened wherever sensible, deployment models are considered and aligned, and monitoring and troubleshooting practices are clear with roles and escalations known and agreed upon to mitigate any coordination problems during major events.

Level 4: Cross-Journey Continuous Practices. At this level all meaningful service journeys traversed that include components and services of the delivery team are known end-to-end. This includes components and services that are outside the direct remit of the delivery team. There are few areas that are not either “known” or “known unknown” and dealt with accordingly. There is a clear understanding and tracking of the dependencies and level of coupling across all journeys, all cross-journey flows are monitored, failure types are documented and understood along with their effect on the customer and their desired outcomes, and troubleshooting, repair, and escalation are known, documented, and demonstrable. Communication and coordination with teams across the service journey are clear and regular. Instrumentation is in place to capture, understand, and improve customer service experience and usage patterns across the journey. Teams often find themselves helping each other, rallying others across the business to help and invest in weak areas that may not fall under their direct remit. This level is essential for smooth service delivery in ecosystems with either multiple service delivery teams or outside service dependencies.

Level 5: Continuously Optimized Service Delivery Practices. When delivery teams reach this level, everything works seamlessly. The delivery team can and does release on demand in close alignment whenever necessary with other teams and the customer. Continuous improvement measures are everywhere, and the team regularly challenges the business to improve and take on new challenges that actively help current customers and attract new customers to meet their target outcomes with the delivered services.

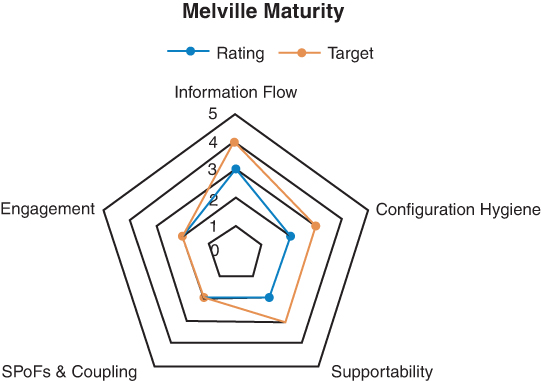

Maturity is typically measured across a number of dimensions, as the example in Figure 9.1 demonstrates. Measuring different dimensions, or areas of interest, rather than just having one maturity number is a great way to help teams expose potential risks and highlight the areas where they might need time and help to improve. Sometimes it might show that there is value in investing in architecture work to build in resiliency or loosen coupling with problematic dependencies. Other times it might expose places where a lot of effort is being spent that is not contributing to customer outcomes.

Figure 9.1

Maturity “spider” diagram representing current and target states.

One feature of this model is that each maturity level is cumulative. While it is possible to move up and down between maturity levels, most movement is caused by improving maturity through having a better understanding of what you have or by genuinely putting improvements in place, or reducing maturity when introducing new components, solutions, or teams to the service ecosystem.

Another benefit of this approach is that it provides a subtle yet effective nudge to improve communication flow and collaboration between teams and even other important parties outside the organization. This can be useful encouragement for more insular or combative teams. It also might highlight an unhealthy vendor relationship or, in the case of FastCo illustrated in Chapter 8, a problematic supplier service level mismatch that needs to be addressed.

To pull all of this together, the team usually works with the manager or a knowledgeable person from outside the team to collect, review, and track the evidence behind each capability measure. This makes it easier to audit the data from time to time, particularly in the unlikely chance some event occurs that does not seem to reflect the maturity level. This can help the team understand the root cause that has pulled maturity levels downward. I often encourage teams to build charts as well, like the example shown in Figure 9.1, to help track where they are, as well as where they aim to be at the end of their next improvement cycle.

One item to note is that it is generally rare for teams to confidently get to Level 5 on all measures. Level 5 is hard. It means that everything just works. Service delivery ecosystems are rarely so static that there is no longer a need to remediate arising problems and improve. Even at Level 5, the objective is for everyone to continually find new ways to more effectively meet target outcomes together.
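One lightweight way to keep the data behind a chart like Figure 9.1 is a small record per area of interest. The sketch below is only an illustration: the `AreaMaturity` type, its field names, and the levels shown are all assumptions, and the area names are the ones discussed in the next section.

```python
from dataclasses import dataclass


@dataclass
class AreaMaturity:
    area: str
    current: int        # level 0-5 supported by the evidence collected so far
    target: int         # level the team aims for in the next improvement cycle
    evidence: str = ""  # pointer to where the supporting evidence is kept

    def __post_init__(self) -> None:
        for level in (self.current, self.target):
            if not 0 <= level <= 5:
                raise ValueError(f"{self.area}: level {level} is outside 0-5")


def improvement_gaps(areas: list[AreaMaturity]) -> list[tuple[str, int]]:
    """Return (area, gap) pairs where target exceeds current, largest gap first."""
    gaps = [(a.area, a.target - a.current) for a in areas if a.target > a.current]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


# Illustrative values only; they do not come from a real assessment.
assessment = [
    AreaMaturity("Information flow and instrumentation", current=2, target=3),
    AreaMaturity("Configuration management and delivery hygiene", current=3, target=3),
    AreaMaturity("Supportability", current=1, target=3),
    AreaMaturity("SPoF mitigation and coupling management", current=2, target=4),
    AreaMaturity("Engagement", current=3, target=4),
]

for area, gap in improvement_gaps(assessment):
    print(f"{area}: {gap} level(s) below target")
```

Note that the target for an area does not have to be Level 5. Setting a target equal to the current level, as in the second entry, is a legitimate way of recording that an area is already where it needs to be.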

Service Delivery Maturity Areas of Interest

Now that you have a good idea of a workable measuring mechanism, it is worthwhile to discuss the dimensions of what is likely meaningful to measure and track in your ecosystem.

All ecosystems are different. However, there are a number of common useful areas to look to in order to measure service delivery maturity. Software-, data-, and systems-level hygiene are certainly one set, as are the ways the team approaches various architectural practices like coupling, redundancy, and failure management challenges. All of these are traditional technical areas where any lack of awareness can create a large and potentially destructive risk of failure.

There are other areas that are less obvious but have an important impact on a service delivery team’s awareness and ability to respond and improve effectively in the face of arising challenges. The list of interesting areas that I have found generally applicable to capturing the maturity of delivery capabilities in most organizations includes the following:

Information flow and instrumentation

Configuration management and delivery hygiene

Supportability

Single point of failure (SPoF) mitigation and coupling management

Engagement

Why this list? Let’s go into some detail on each to understand more.

Information Flow and Instrumentation

Figure 9.2

There is a lot of value in knowing how well data is flowing.

Effective and timely information flow across the ecosystem is critical for maintaining situational awareness. Information comes from people, the code and services delivered, as well as any platforms and mechanisms that support the creation, delivery, and operation of the code and services.

The intent of this measure is to capture how well situational awareness across the delivery and operational teams is maintained. This includes finding the answers to questions like:

What is the balance of proactive and reactive instrumentation in place to help the right people become aware of a development that requires attention? How many of those measures are necessarily reactive, and why are there no reasonable proactive alternatives?

How long are any potential time lags between a development occurring and the right people knowing about it?

What is the quality of information flowing?

How much expertise is needed to assess and understand a situation, and how widely available is that expertise in the team?

How well-known are team and service capabilities and weaknesses?
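Two of these questions, the time lag and the balance of proactive and reactive detection, lend themselves to simple measurement once events are recorded with timestamps. The following is a minimal sketch under that assumption; the event records and their fields are hypothetical.

```python
from datetime import datetime
from statistics import median

# Hypothetical records: (what happened, when it occurred, when the right
# people knew, how they found out). None of this is real data.
events = [
    ("queue depth alarm", datetime(2024, 3, 4, 9, 0), datetime(2024, 3, 4, 9, 2), "proactive"),
    ("disk nearly full", datetime(2024, 3, 5, 14, 0), datetime(2024, 3, 5, 14, 5), "proactive"),
    ("checkout errors", datetime(2024, 3, 6, 11, 0), datetime(2024, 3, 6, 11, 45), "reactive"),
    ("stale price data", datetime(2024, 3, 7, 8, 0), datetime(2024, 3, 7, 10, 0), "reactive"),
]


def lag_minutes(occurred: datetime, known: datetime) -> float:
    """Minutes between a development occurring and the right people knowing."""
    return (known - occurred).total_seconds() / 60


lags = [lag_minutes(occurred, known) for _, occurred, known, _ in events]
proactive_share = sum(1 for *_, how in events if how == "proactive") / len(events)

print(f"Median lag: {median(lags):.1f} minutes")
print(f"Worst lag: {max(lags):.0f} minutes")
print(f"Found proactively: {proactive_share:.0%}")
```

The numbers matter less than the conversation they start: a long lag on a reactively discovered event is a prompt to ask why no proactive signal existed.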

Measuring information flow starts small with capturing simple and obvious things like how inclusive team meetings and discussions are to the observations, ideas, and concerns of members of the team. It also includes identifying information and knowledge sources throughout the ecosystem.

The team’s ability to know who knows what, who has the knowledge to perform certain tasks, and how that knowledge can be shared and grown can tell you where there might be bottlenecks, single points of failure, or places where context can become distorted or lost. For instance, information flow that relies heavily on a manager or a subject matter expert to act as a bridge between a situation and the people needing to handle it can create unnecessary friction and information loss. Likewise, using chatrooms can be a great way of helping information flow. However, they can fall short if their membership is fragmented, if there is too much extraneous channel noise, or if the information is not captured in some way for future reference.

Measuring the flow and quality of information coming from ecosystem instrumentation is just as important. Inconsistent logging practices, little to no code and service instrumentation, noisy or unreliable monitoring, poorly tracked instance configuration state, missing or out-of-date documentation, and erratic incident tracking can limit the team’s ability to understand everything from service health and code interactions to customer usage patterns and journeys across the ecosystem. I regularly encounter teams that rely upon external service providers for key platform services like message queues, networking, and data services that do not really understand the usage patterns of those services and end up getting caught out exceeding provider caps. The resulting provider throttling, service cutoffs, and blown budgets are both embarrassing and can detrimentally affect the customer.

The value of metrics from the delivery process itself is also commonly overlooked. Data about everything from the amount of code churn, the distribution of code changes by developers across components, build/integration/testing statistics, versioning, packaging atomicity, and deployment configuration tracking to the restorability of an environment and its data can reveal risk areas and friction points that hinder the team’s ability to respond to events and to learn.
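As a small illustration of two of these metrics, code churn and the spread of changes across developers can be summarized per component. This is a sketch only: the commit records are hypothetical, and in practice they would be extracted from your version control history.

```python
from collections import defaultdict

# Hypothetical commit records: (component, author, lines added, lines deleted).
commits = [
    ("billing", "ana", 120, 30),
    ("billing", "ana", 45, 60),
    ("billing", "ana", 10, 5),
    ("search", "ben", 80, 20),
    ("search", "chloe", 15, 40),
    ("gateway", "ben", 5, 5),
]


def summarize(records):
    """Return {component: (total churn, number of distinct authors)}."""
    churn = defaultdict(int)
    authors = defaultdict(set)
    for component, author, added, deleted in records:
        churn[component] += added + deleted
        authors[component].add(author)
    return {c: (churn[c], len(authors[c])) for c in churn}


summary = summarize(commits)
for component, (churn, author_count) in sorted(summary.items()):
    flag = "  <- only one person changes this" if author_count == 1 else ""
    print(f"{component}: churn={churn}, authors={author_count}{flag}")
```

A component with high churn and a single author is worth a closer look, since it may be both a friction point and a knowledge single point of failure.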

It is also important to examine how, if at all, the team acquires information about customers and their needs and expectations. The way the team captures and learns about customer usage patterns, what “ilities” are important to them, and what outcomes they are trying to achieve can expose potential lags, gaps, and misdirection that can degrade vital situational awareness needed for decision making.

Many teams find gauging information flow maturity to be difficult. A big part of the reason is that information flow maturity is less about the amount of information flowing and more about its ability to provide enough context to align teams and improve decision efficacy.

Trying to answer the questions outlined earlier can provide a good start to help you and your team start on the road to information flow maturity. I often supplement those with a number of experiments that are geared toward testing how, and how quickly, information flows across the organization. Sometimes it can be as simple as asking the same questions to different teams and team members and then looking for clues in any differences in their answers. Other times I use what I call “tracers” (as mentioned in Chapter 6, “Situational Awareness”) to look for any flow friction, bottlenecks, and distortions as the information and resulting actions move across the team.

Over time, everyone on the team should be able to check the quality of the flow themselves, even if it is only to improve their own awareness and decision efficacy. Checking what information is flowing, where it is going to, and its overall accuracy should be done in such a way that the team clearly understands that it is intended to help support the team, not to judge them. Gaps, bottlenecks, and single points of failure form with the best of teams. Value comes from catching them quickly, understanding how they formed, and then seeking to prevent them from appearing in the future.

Configuration Management and Delivery Hygiene

Figure 9.3

Hygiene is important in your delivery ecosystem.

As anyone who has had to deal with a messy teenager or roommate can attest, a disorganized room makes it difficult to know with any certainty what is in it, what condition those items might be in, and what dependencies they might need in order to work as needed. This not only slows down the process of finding and using any items, it can also result in lost items, rework, and other costly mistakes. It might be a minor annoyance if it is their bedroom. However, it can be outright dangerous to your health if the disorder extends to the kitchen where hidden joys like rancid food and contaminated utensils can sicken or kill.

This works the same for delivery ecosystems. Just like cooking a meal, any missing or damaged element, wrong version or type, or incorrectly used or configured part of the technology stack can result in all sorts of unpleasant surprises that cause services to behave differently than the customer needs.

Like having an organized kitchen, putting in place good ecosystem hygiene practices makes it possible to understand the state of your service components in a way that will provide clues to how well they and the mechanisms you use to assemble and deliver them will contribute to customer ilities. For instance, you should be able to look at build and test metrics to spot potentially brittle code. These will be those places where things seemingly go wrong every time they are touched.
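A rough way to look for such places is to compare how often builds fail when a given component is touched. The sketch below assumes a hypothetical build history in which each build records the components changed and whether it passed; the `min_builds` cutoff is also an assumption, there to avoid judging a component on one or two builds.

```python
from collections import Counter

# Hypothetical build history: (components touched by the change, build passed?).
builds = [
    ({"billing"}, False),
    ({"billing", "search"}, False),
    ({"search"}, True),
    ({"billing"}, False),
    ({"gateway"}, True),
    ({"search"}, True),
    ({"billing"}, True),
]


def failure_rates(history, min_builds=3):
    """Failure rate per component, ignoring components with too few builds."""
    touched, failed = Counter(), Counter()
    for components, passed in history:
        for component in components:
            touched[component] += 1
            if not passed:
                failed[component] += 1
    return {
        c: failed[c] / touched[c]
        for c in touched
        if touched[c] >= min_builds
    }


rates = failure_rates(builds)
for component, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{component}: {rate:.0%} of builds touching it failed")
```

This only shows correlation. A failed build that touched two components counts against both, so a high rate is a cue to investigate rather than proof of brittleness.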

Similarly, being able to get a sense of how much and where change will likely take place within the environment can help you ascertain the level of potential risk in rolling out that change. This includes determining what ilities might be impacted by the change, as well as how reversible the change might be if something does go awry.

To measure the level of ecosystem hygiene, I typically look to have the team try to answer the following questions:

How does the delivery team manage their code and delivery environments? Is it done in a more mature way with good version control, atomic packaging, and tools that build and track configuration changes that can be easily audited for accuracy? Is it instead done in a less mature way with documentation that people might follow but where there is limited visibility in ensuring the final and current state?

Are continuous build and integration techniques in place, and if so, how continuously are they providing insight into the state of the code? How often is this checked and used to understand code health and its ability to meet customer ilities?

Does the development team do top-of-trunk development where all code gets checked into the same trunk and conflicts are dealt with immediately, or does the team have elaborate branching and patching/merging that means everyone’s work isn’t being integrated together all the time? It is easy for awareness details to be missed when sorting out merge conflicts.

Is software packaged, and if so, are packages atomic so that they can be easily uninstalled without leaving residue like hanging files, orphaned backups, or potentially mangled configurations?

Are all software and packages versioned, and are changes tracked over time? If so, is it possible to determine what configuration was in place in an environment at a given time, as well as what changes were made, and when, in order to trace down a bug or an unexpected behavioral change?

Is it possible to know who changed what, when they changed it, and why they changed it?

Is version compatibility known, tracked, and managed across components, and if so, how?
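Several of these questions come down to whether changes are recorded in a form that can be replayed. As a minimal sketch, assuming a hypothetical change log that records who changed which setting, when, and why, the configuration in place at any moment can be reconstructed like this:

```python
from datetime import datetime
from typing import NamedTuple, Optional


class Change(NamedTuple):
    when: datetime
    who: str
    key: str
    value: Optional[str]  # None means the setting was removed
    why: str


# Hypothetical change log for one environment.
log = [
    Change(datetime(2024, 5, 1, 10, 0), "ana", "app.version", "2.3.0", "monthly release"),
    Change(datetime(2024, 5, 1, 10, 0), "ana", "db.pool_size", "20", "monthly release"),
    Change(datetime(2024, 5, 9, 16, 30), "ben", "db.pool_size", "50", "load test follow-up"),
    Change(datetime(2024, 5, 14, 9, 15), "ana", "app.version", "2.4.0", "bug fix release"),
]


def config_at(changes: list[Change], moment: datetime) -> dict[str, str]:
    """Replay changes in time order up to and including `moment`."""
    state: dict[str, str] = {}
    for change in sorted(changes, key=lambda c: c.when):
        if change.when > moment:
            break
        if change.value is None:
            state.pop(change.key, None)
        else:
            state[change.key] = change.value
    return state


def history_of(changes: list[Change], key: str) -> list[Change]:
    """Who changed a setting, when, and why, oldest first."""
    return sorted((c for c in changes if c.key == key), key=lambda c: c.when)


print(config_at(log, datetime(2024, 5, 10)))
for change in history_of(log, "db.pool_size"):
    print(change.when, change.who, change.value, change.why)
```

If your tooling cannot answer these two queries for a real environment, that gap is itself useful evidence for the maturity assessment.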

The same needs to be done for environments:

Are all of their elements known and tracked or otherwise dealt with?

Does this include the hosting infrastructure?

How much variance is allowable in the hosting infrastructure without causing problems, and why?

Are variances known and tracked? If so, by whom and how often are they audited?

Is the stack reproducible, and if so, how much time and effort are required to reproduce it?

Does anyone authorized to build an environment have the ability to do it themselves?

How long does it take someone to come up to speed on how to build and configure an environment, and how to deploy and configure a release?

Are the differences between development, test, and production environments known, and if so, where are the differences tracked, where are the risk areas caused by differences between the environments, and how are they dealt with?
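The last question is easier to answer when each environment is described as data that can be compared. The following sketch assumes hypothetical environment descriptions held as simple key-value settings; real environments will need richer descriptions, but the comparison idea is the same.

```python
def environment_differences(a: dict, b: dict) -> dict:
    """Return {setting: (value in a, value in b)} for every setting that differs.

    A setting missing from one side is reported as None on that side, so a
    setting explicitly set to None cannot be told apart from a missing one.
    """
    return {
        key: (a.get(key), b.get(key))
        for key in sorted(set(a) | set(b))
        if a.get(key) != b.get(key)
    }


# Hypothetical environment descriptions.
test_env = {"os": "ubuntu-22.04", "db.version": "14.9", "replicas": 1, "debug": True}
prod_env = {"os": "ubuntu-22.04", "db.version": "14.7", "replicas": 6}

for setting, (in_test, in_prod) in environment_differences(test_env, prod_env).items():
    print(f"{setting}: test={in_test!r} production={in_prod!r}")
```

Each reported difference is a candidate risk area. Some, like replica counts, may be deliberate; others, like a database version mismatch, may explain why something passed in test and failed in production.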

Good configuration management and delivery hygiene is what enables continuous delivery tools and mechanisms to really shine. For this reason, it is the capability measure that can be most exploited by complementary operational tools and mechanisms.

Supportability

Figure 9.4

Are your services supportable, or an accident waiting to happen?

The level of supportability of a service is dependent on two important factors:

The extensiveness and usability of capabilities in the delivery ecosystem necessary to install, manage, and support the service effectively.

The amount of knowledge and awareness required by those operationally supporting the service. This includes understanding what might cause the service to degrade or fail and how it might behave when that starts to happen, how to troubleshoot and recover from problems when they occur, and how to scale the service both for growth and to handle occasional traffic bursts.

Supportability is an often misunderstood and neglected capability. For one, having a service ecosystem that has supportability mechanisms in place and some team members who potentially have the ability to use them does not necessarily mean that the capability is deployed effectively. Knowledge and awareness are often heavily fragmented across the team, with lower-tier operational support seeing what is going on but only having basic infrastructure and troubleshooting knowledge, while deeper skills sit in specialized silos such as front-end, back-end, and database teams. As you might expect, this slows down responsiveness while simultaneously making it difficult to see relationship patterns between disparate ecosystem elements.

Another challenge is that the concept of supportability can easily be misconstrued as the number of prepared processes and plans for handling incidents. I regularly encounter runbooks that expect their step-by-step processes to be mindlessly followed to contain a problem rather than encouraging staff to understand the problem so that they can find ways of proactively reducing the chances of such problems occurring in the future. On the other end of the spectrum are disaster recovery and business continuity plans that try to pre-plan the steps for handling specific catastrophic disasters. While documenting approaches for troubleshooting likely problems and executing recovery and business continuity procedures is sensible, counting on having captured and documented all failure and recovery paths in a way that they can be executed by anyone technical, regardless of the depth of their knowledge of the ecosystem they run in, is often dangerous. Not only is it unlikely that all paths will be captured, but they often do not account for an unforeseen combination of events, such as a couple of components degrading or a data corruption event, which are both more likely to occur and require deep ecosystem awareness to resolve.

There are a number of good ways to track supportability. One place to start is to measure how quickly someone can come up to speed on installing and supporting a component or service. I have done this in the past by instituting an onboarding exercise for new staff in which they were tasked with installing various key service components. The exercise involved finding out how to install the components, performing the installations, and documenting what they experienced. It was followed by a debrief in which the new starter could suggest what should be fixed and describe what value they believed their proposed fixes would bring.

At one company this exercise began by placing a new server fresh out of the box on the person’s desk. They then had to figure out not only how to install the service, but also how to get the server in a rack and networked, get the right operating system on it, and determine all the other configuration and support details. This was an impressively effective way of improving installation and configuration of services. Everyone who went through it could recall all the pain involved, and was motivated to find ways to continually simplify it.

It is easy to run similar exercises when services are delivered in the cloud, including guiding code through a delivery pipeline or trying to determine how to spin up new service instances. I know several companies do this by having new hires perform pushes to production as part of the onboarding process in order to help them become familiar with the delivery and push process, as well as to gain insight into ways they can be improved.

There is also value in putting in place similar processes that measure how quickly current team members can come fully up to speed on any parts of the ecosystem they have not previously worked on. This is especially beneficial in larger organizations that have a plethora of service components scattered across many areas. This not only can be eye opening, but it is a great way to encourage everyone to openly share and collaborate across team boundaries.

Tracking incidents can be another great window into the supportability of parts of your ecosystem. Like code quality, the value does not come from the number of incidents, but from what is happening in and around them. For instance, at one company we managed to find a major fault in the bearings inside a particular model of hard drive this way. As we had thousands of these drives, our proactive tracking saved us from lots of potential data loss and headache.

A good starting point to gauge team maturity is to have them try to answer the following questions:

Do certain incidents coincide with a change? This might indicate a situational awareness problem in your delivery ecosystem that needs to be remedied.

Do certain types of incidents seem to always be handled by the same small subset of the total team that handles incidents? This might indicate dangerous knowledge gaps, over-specialization, or single points of failure.

Are incidents so common in a particular area that team members have created a tool, or want to, that cleans up and restarts the process rather than fix the underlying problem? This can dangerously hide customer-affecting problems that can degrade your service delivery ilities.

Are there patterns of failures in or between one or more particular components or infrastructure types? This might provide clues of ecosystem brittleness or loss of information flow that need to be addressed.
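The first two questions above can be checked mechanically once incidents are recorded in a consistent form. The following is a minimal sketch rather than a prescribed tool. The `Incident` record, its field names, and the 24-hour window are all assumptions made for illustration; adapt them to whatever your incident tracker actually captures.

```python
from collections import Counter
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class Incident:
    # Hypothetical record shape; real trackers will have more fields.
    opened: datetime
    component: str
    handler: str


def incidents_near_changes(incidents, change_times, window=timedelta(hours=24)):
    """Return incidents opened within `window` after any recorded change."""
    return [
        i for i in incidents
        if any(timedelta(0) <= i.opened - c <= window for c in change_times)
    ]


def handler_share(incidents):
    """Return each handler's fraction of the incidents, grouped by component."""
    by_component = {}
    for i in incidents:
        by_component.setdefault(i.component, Counter())[i.handler] += 1
    return {
        comp: {h: n / sum(counts.values()) for h, n in counts.items()}
        for comp, counts in by_component.items()
    }
```

A component where one handler's share sits near 1.0 is worth a conversation about knowledge gaps, and a cluster of incidents shortly after changes is a prompt to look at how those changes were communicated. Neither number is a verdict on its own; both are starting points for the questions above.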

As in the case of installation, one way of proactively finding such awareness weaknesses during troubleshooting is by simulating failures in testing, then dropping in various people from the team to try to troubleshoot, stabilize, and recover. When done well, such activities can improve responsiveness and spark innovative new ways of resolving future problems.

Single Point of Failure Mitigation and Coupling Management

Figure 9.5

It was at that moment that Bill realized being a SPoF was no fun.

Single points of failure (SPoFs) are terrible. They happen in code, services, infrastructure, and people. These choke points limit the flexibility of the organization to respond to unfolding events. The desire with this measure is to capture where SPoFs might exist, why, and how they formed, so that you can then put in mechanisms to remove them and prevent them from occurring in the future.

People are arguably the most dangerous form of SPoF. They trap knowledge, awareness, and capabilities in a place the organization has the least ability to control. People can die, leave, or forget, leaving everyone exposed. SPoFs usually manifest themselves in individuals that the organization continually relies upon to solve the most troubling problems. These people not only are helpful, but often work long hours going out of their way to save the day. For those of you who have read the book The Phoenix Project,4 the character Brent Geller is a great example of one. When we encounter them in the wild, we often joke that they may have saved the day, but they did so by turning the ordinary into superhero mysteries.

4. Gene Kim, Kevin Behr, and George Spafford (IT Revolution Press, 2014).

Getting your Brent to do a task they’ve always done before feels safe. Who wants to risk a problem when you know that Brent can confidently do the task himself? That logic works until the day eventually comes when there is no Brent to ask.

People SPoFs can happen on both the development side and the operational side. Avoiding SPoFs is one of many reasons that I am a strong proponent of rotating team members around to different areas. Rotating duty roles like Queue Master and the SE Lead also helps reduce SPoFs. They not only are great mechanisms to help team members take a step back and spot dysfunction or discover some key detail that hasn’t yet been captured and shared adequately, but they are also a great way of reminding everyone to constantly share knowledge widely throughout the team. The practice of rotating roles and the sharing necessary to make them work also reinforces the value of regularly rotating all work around the team. Nothing is more annoying than getting stuck doing the same work time and again because you are the only person who knows how to do it, or being constantly bothered with questions about some detail of past work that no one else knows because you forgot to document it.

Code, service, and infrastructure SPoFs are also dangerous. These include places where components are tightly coupled. Tight coupling, where one component cannot be changed or removed without having serious or catastrophic consequences on another component, limits flexibility and responsiveness. Changes, maintenance, and upgrades often need to be carefully handled and closely tracked. Closely coupled components frequently have to be upgraded together or tested for backward compatibility.

Capturing and tracking these problems is important for highlighting the risks that they pose to the team so that the team can target and eliminate them. This can be done in a number of ways. I have had teams create rosters of tightly coupled components and ordering dependencies and their effects that are then highlighted every time they are touched.
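One lightweight way to keep such a roster is as plain data that is checked whenever a change is proposed. The sketch below is illustrative only: the component names, the roster layout, and the `coupling_warnings` helper are hypothetical, and a real roster would likely live alongside your change or deployment tooling.

```python
# Hypothetical roster: each entry names a set of tightly coupled components
# and the known effect of changing one without the others.
COUPLING_ROSTER = [
    {"components": {"order-api", "inventory-db"},
     "effect": "schema changes must ship together"},
    {"components": {"auth-service", "session-cache"},
     "effect": "restart auth-service after cache upgrades"},
]


def coupling_warnings(changed, roster=COUPLING_ROSTER):
    """Return one warning per roster entry touched by the changed components."""
    changed = set(changed)
    warnings = []
    for entry in roster:
        touched = entry["components"] & changed
        if not touched:
            continue
        # Components in the coupled set that are NOT part of this change.
        untouched = sorted(entry["components"] - changed)
        partners = ", ".join(untouched) or "components also in this change"
        warnings.append(
            f"Touched {', '.join(sorted(touched))}; "
            f"coupled with {partners}: {entry['effect']}"
        )
    return warnings
```

Calling `coupling_warnings(["order-api"])` against this example roster flags the coupling to `inventory-db`, which is the kind of highlight that can be surfaced in a review or release checklist each time a listed component is touched.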

For people SPoFs, I have team members trade roles and then document every case where they were unsure of something or needed to reach out to the other person for help. When someone is particularly possessive about an area, sometimes it is good to have the manager or the team recommend that the individual be sent away on training or vacation to expose the extent of the problem. The information gaps that can be identified and then closed while the person is away can be a great way to help people understand the value of reducing the SPoF risk.

Engagement

Knowing what is going on within the team does not always help your overall awareness across the rest of your delivery ecosystem. It is important to measure who the team engages with, how and how often they engage, as well as how consistent and collaborative those engagements are in order to find and eliminate any barriers that might reduce shared awareness and knowledge sharing. It is especially important to monitor and support engagement between the team and entities that they depend on. Examples may be another team that their components or services are closely coupled with or a supplier of critical services. It also includes operational teams that might be running and supporting your services, as well as one or more customers you and your team know are important parts of the user community you are ultimately delivering for.

The engagement metric is a means to measure the health of these engagements. Are collaboration and feedback regular and bidirectional? Are engagements more proactive or reactive? Are interactions friendly and structured well with clear objectives and dependencies or do they happen haphazardly or in an accusatory way?

How well do delivery and support teams work collaboratively with counterparts in nontechnical teams to coordinate activities with each other and with the customer? Do they happen smoothly, or does there seem to be a lot of haphazardness and finger-pointing?

If development and operations are in different groups, do the two teams think about, share, and collaboratively plan the work they do together or do they only consider their own silo? This can often be measured by the amount of resistance there is to change and the number of complaints about changes being “tossed over the wall at the last minute.”

Engagement with the customer in some form is also very important. Delivery teams do not necessarily have to directly engage with customers, but they should know enough about customers that they can craft and tune their work to at least try to fit customer needs. This includes understanding what actions and events might seriously impact customers, and whether there are time periods when such actions and events would be especially disruptive. Are there solutions in place that can minimize problems? Are there known times when maintenance activities can be done with minimal customer impact? How are these known, and how are these checked?

Maturing the team’s ability to engage is important for everyone’s success. It is also probably the area that, under the constant pressure of delivery, teams often neglect the most.

To help with engagement, I have looked for opportunities where beneficial information can be shared. For instance, I look to see if team members can go on a “ride-along” with Sales to a friendly customer to see their product in action. If there is a User Experience team, I look to have team members go to see UX studies in action. To encourage cross-team sharing, I encourage teams to invite other teams to brown-bag sessions where they discuss some task or component from their area. I also look for ways that people from different teams can work together on a particular problem so that they can build connections.

Sometimes, cross-team engagement needs more than informal engagement. This is one of the many places that the Service Engineering Lead pattern might be able to help.

The Service Engineering Lead

Figure 9.6

The Service Engineering Lead.

A lot of teams find it hard to balance the need to maintain situational awareness and improve their ability to deliver all while handling the flood of work coming in. Part of the reason for this is that many of us have long been conditioned to think about our jobs within the confines of the traditional roles and responsibilities of someone holding our title. We all also have a tendency to lose sight of the bigger picture when we are busy focusing on the tasks we need to complete.

Countering all of this often takes focused effort. This focus becomes even more critical when trying to improve delivery maturity and fill knowledge gaps. For these reasons, I have found it useful to put in place what I call the Service Engineering Lead.

The Service Engineering Lead (or SE Lead) is a duty role (meaning one that rotates around the team) that is tasked with facilitating ecosystem transparency throughout the service delivery lifecycle. One of its missions is to have someone who is for a time focused on some aspect of the team’s service delivery maturity to help it find, track, and close any gaps that are hindering the team’s ability to deliver effectively. This is particularly important for teams that get busy or tend to specialize into silos and need help to ensure enough effort gets set aside to help the team help themselves improve.

Another important area where a Service Engineering Lead can be a big help is when there is a need to improve engagement, information flow, and shared ownership across teams and specialist silos. This is particularly useful when such silos run deep in tightly interconnected ways, such as in a more traditional configuration of separate development and operations teams.

Why Have a Separate Rotating Role?

Some teams are fortunate to have a manager, architect, or project/product manager person or function who not only has all the time, skills, technical and operational prowess, team trust, and desire necessary to take on SE Lead responsibilities but already performs much of that role. If that is the case, creating the rotating SE Lead role might not be necessary.

However, few organizations are so fortunately blessed. If you think your organization might be, you should check for two important conditions before deciding to forgo an SE Lead rotation. The first is whether you have one particular person in mind. This, of course, risks creating a whole new single point of failure. Decide if you can cope or easily pivot to having an SE Lead if that person leaves.

The second check is whether the person or function is close enough to the details to have the situational awareness necessary to really help the rest of the team both maintain their situational awareness and learn and improve.

Some unfortunately mistake the SE Lead as being another name for a number of roles that fall far short of meeting these requirements. The most common include:

Another name for a project manager or coordinator. While the SE Lead does help, the whole team is responsible for improving service delivery maturity.

Another name for a helpdesk function. While an SE Lead will often pick up activities focused on improving information and workflow, they are not another name for a capture and routing function.

In the case of separate development and operations teams, another name for a delivery team’s dedicated IT Ops support person. The SE Lead might pick up and perform some of the operational tasks when doing so makes sense and does not distract from their primary focus of providing operational guidance and cross-team alignment.

There are a number of reasons why I prefer having the SE Lead rotating among technical peers who are well versed in both coding and service operations. The first is that by being part of the team, even if they are from an operations team and are rotating in as the SE Lead for a development team, they will already have intimate knowledge of their area. This can help bridge any potential knowledge gaps when building and delivering software, tooling, service infrastructure, and support capabilities. Having someone who is familiar with both code and service operations can ensure sensible design, deployment, and operational support choices that help deliver the service ilities needed.

Being part of the team also means the SE Lead has the necessary relationships with the team members to improve awareness and information flow. Knowing team members and their areas also increases the chances that the SE Lead will know who needs to be looped into conversations to understand or guide incoming changes or decisions.

Finally, having the SE Lead rotate among technical peers also increases the “skin in the game” necessary to improve the chances of success. They will have a chance to step back and look at what is going on within the team or with a particular delivery and know that any improvements they are able to help put in place will ultimately help them as well.

Rotating the role as a temporary “tour of duty” can also open people’s eyes to challenges, and to their causes and effects, that they might not have otherwise been aware of or fully appreciated. When deciding on the rotation rate, it is important to find the right balance between providing stability of context and minimizing the chances of creating a new single point of failure.

Under normal conditions, I usually rotate the SE Lead at a sensible boundary roughly a month in from when they start. A sensible boundary is usually after the end of a particular sprint, at a lull or change in focus point in the delivery, or simply at the end of week 4 or 5. The only time I might extend the length of the SE Lead period is at the initial discovery phase when onboarding the maturity model. At this time the team will need a lot of help. The Lead for that phase will need to be very knowledgeable about the model so that the team knows what the model is for and why it is important for helping them. For that reason, I usually handpick the Lead and have them work full time on the onboarding for double the normal length, or typically two months.

SE Leads can be a useful addition to guide more than just code and service deliveries. They are also useful in operational, business intelligence, data science, as well as artificial intelligence and machine learning activities. The nature of responsibilities might differ for SE Leads depending upon the type of delivery. What remains important throughout is that they actively work to improve awareness and alignment to ensure the delivery of the “ilities” expected from the service.

How the SE Lead Improves Awareness

In order to try to improve information flow and situational awareness, the SE Lead needs to be fully embedded in the team. This includes, whenever possible, attending all the meetings, standups, and other team sync points. This allows the SE Lead to stay attuned to the delivery, the “ilities” that the delivery team needs to deliver with the service, along with any dependencies and coordination points that might ultimately affect the successful delivery and operation of the service.

To do this, the SE Lead will work with the team to answer the following questions and identify what evidence the delivery team will likely need to collect to ensure that any required conditions are met:

What services and “ilities” are part of or might be affected by the delivery?

Which customers are likely to be involved and/or affected?

What are the minimum acceptable levels for affected “ilities,” and will the products of this delivery meet them?

How many of the required conditions are in place to meet stated and agreed upon contractual commitments?

Are there any infrastructural elements that might be required or affected by the change? If so, what “ilities” will they impact?

Are any new infrastructure, software, or service components being added that need to be acquired from outside vendors?

Are there any lead times that need to be considered for ordering and receiving external dependencies?

What configuration elements are going to be touched, and how are changes captured and tracked across the ecosystem?

Are there any specialist skills that might be needed for delivery that have to be drawn from outside the delivery team? If so, how will coordination and information flow be handled so that team awareness doesn’t degrade?

Is the delivery creating any notable changes to the way that the service will be operationally managed (new services, old ones going away, new things to monitor and track, new “ilities,” new or different troubleshooting steps, new or different management methods, etc.)? If so, how will they be captured and tracked?

Are there any legal, regulatory, or governance requirements that need to be considered or might require additional work to be done, processes followed, parties notified, and/or artifacts generated?

What are the security implications of the delivery? Are any elements improved or degraded, and if so, how and to what effect?

Are there any changes to data elements, data flow, data architecture or any classification and treatment adjustments that need to be made? If so, what are those data changes? Who is responsible for implementing them? How will they be managed and tracked?

Are there any known manageable “ilities” that need to be tested and documented?

Are there any known unmanageable “ilities” that the team needs to be aware of and defend against?

Are there any unknown “ilities” that might cause problems?

Are there any activities or changes that are being made by teams or organizations outside the team that might affect the team’s ability to deliver?

If so, what are they, who is delivering them, and how might they affect the team?

Who is coordinating those changes with the team, tracking them, and informing the team of their effects?

Are there any platform tools or technologies that might help the team deliver more effectively that could be integrated into the delivery? These are generally tools and technologies that can facilitate delivery and overall ecosystem hygiene.

If so, what are they, and who can help with educating the team about them and their use?

Can they be used in the delivery without either impacting the overall design and architecture of the service or unnecessarily derailing the delivery?

It might be difficult to get clear answers to many of these questions, particularly when you first start out and service engineering is not very mature within the organization. But having answers to them helps the SE Lead and others start the process of exposing the problem areas and building a roadmap for how to start to eliminate them.

Organizational Configurations with the SE Lead

Figure 9.7

Traditional team configuration.

Everything from history and internal politics to legal and regulatory rules can affect the layout of organizational structures and responsibilities. While these differences do not prevent you from having an SE Lead, they will likely affect the overall shape of the activities that make sense for a Lead to engage with.

To illustrate this, and to better understand what areas the SE Lead is likely to be able to help with within your organizational structure, let’s walk through the two most likely configurations.

Separate Development and IT Operations Teams

It is still quite common to have the responsibility of service operations handled by a team that is organizationally separate from development. Some organizations are legally or regulatorily required to have such a separation of duties. More often, the configuration has grown out of the different ways that organizations look at the responsibilities of development and operations, and the differences in skill sets those responsibilities require team members to have.

In such a configuration, it makes the most sense to draw the SE Lead from the IT Operations team, and then have each Lead rotate through the development team.

Having an Operations SE Lead in the development team has a number of distinct advantages. For one, it is far more likely that Leads will have the sorts of deep operational knowledge so often missing in the development team. Being part of the delivery team, the SE Lead can guide developers toward design and implementation decisions that are more likely to meet the outcomes and ilities that the customer wants.

Operational SE Leads are also a great way to ensure ecosystem information flow. Their experience with the service ecosystem can guide developers through the details that are important and need to be considered in order to make better technical design and implementation decisions. This allows developers to understand whether an easier-to-implement choice now will introduce extra risks or friction that may prove too costly in the long run.

Most IT Operations teams are also familiar with, if not actively engaging, any other development teams or suppliers who are delivering components or services that the development team might depend upon or otherwise be affected by. This creates a new conduit for the development team to obtain insights into existing or upcoming conditions they might need to consider or flag as potential risks for their own delivery. I have personally seen this happen a number of times, spurring conversations and proactive adjustments that prevented later headaches.

Likewise, SE Leads can help the rest of the Operations team get earlier and likely deeper insight into the nuances of an upcoming delivery. This reduces the likelihood of surprises, allowing the Operations team to more proactively prepare for installing and supporting any changes, and thus increasing their chances of operational success.

Importantly, IT Operations team members are far more likely to be familiar with the various cloud and DevOps tools and technologies. They should understand which ones are present or make the most sense to use within your service delivery ecosystem, and be able to help developers to take maximum advantage of them. If the Operations team has a Tools & Automation Engineering function (as described in Chapter 10, “Automation”), the SE Lead can help coordinate between the two.

Lastly, having the SE Lead participate as part of the delivery team is also far more likely to foster a feeling of shared ownership for service delivery success between the two organizations. By becoming part of the delivery team, the SE Lead can build relationships that help break down silo walls that so often disconnect teams from the shared outcomes that come from their decisions.

Challenges to Watch Out For

Placing a person from an external team in such an important role as SE Lead does have a number of challenges.

The first is that there will likely be some significant cultural differences between developers and IT Operations staff. Developers have long been encouraged to deliver quickly, while Operations staff have been pushed to minimize risk. Often these conflicting views have soured relationships between the two groups. To resolve this, managers on both sides should look to change incentive structures so that each side benefits from collaboratively delivering what actually matters: the target outcomes and ilities that the customer desires.

Another challenge is that traditionally few IT Operations staff have much experience with code and the software development end of the delivery lifecycle. Likewise, developers rarely have a full grasp of the entire service stack they are delivering into. Many are prone to feel embarrassed or try to hide these knowledge gaps, or worse be convinced that they do not exist or do not matter.

Both sides should look for opportunities to educate each other. While Operations Service Engineers do not necessarily need to become full coders, it is extremely beneficial for them to know basic scripting and understand some rudimentary coding and software delivery concepts like dependencies, coupling, inheritance, consistency, and version control. Not only will this help them engage more effectively with developers, it can alter how they think about troubleshooting and operating the production environment. Knowing how to script is useful in that it gets a person to think of ways to automate away boring repetitive tasks. Even if they do not end up coding up anything, thinking about places to do it is important to help the team find bandwidth to do the more valuable work.

Getting developers to better understand the service stack helps reduce the chances that they will hold flawed assumptions about it. They are also far more likely to design their software in ways that can use cloud and DevOps tools in more effective ways. Finally, seeing the operational side up close can encourage developers to think about how to instrument the services they produce to help everyone understand what is happening when they are deployed. Developers often are so used to being able to probe a development instance that they forget how difficult it is to do the same thing when there might be hundreds or thousands of instances of it deployed in production.

Incentivizing Collaboration and Improvement

Talented managers know that the most important measure of team success is how well they can deliver the outcomes that matter to the customer. This is where the maturity model can help. By incentivizing teams across the delivery lifecycle to improve the organization’s ability to deliver those outcomes and the ilities being sought, it can encourage team members to find ways to remove team barriers and collaborate.

One of the most compelling ways to do this is to look for sensible pairings of organizational activities that together improve delivery. One of the best examples is pairing improving packaging and configuration management with automated deployment and environment configuration management tools.

Developers and testers are always looking for good places to try out new code, but the options they have for doing so are far from ideal. The sandbox where most developers code rarely reflects production conditions. Even if one gets over infrastructure sizing differences, the sandbox is littered with extraneous cruft and usually lacks all the necessary dependencies. Test environments are typically only marginally better, and are often limited in number and in high demand. With the wide availability of cloud providers, building out infrastructure elements can be as easy as using a credit card. However, the means for deploying the rest of the service stack are far more troublesome. Some people try using shortcuts like snapshots of preconfigured containers and virtual machines that, without sufficient care, can themselves grow outdated and filled with extraneous code and configuration data that, as with Knight Capital, can cause problems.

An automated deployment and configuration system, particularly one that heavily encourages cleaner and more atomic software packaging, tokenized configurations, and audit functions, can dramatically reduce installation friction while making it far easier to find and track otherwise untraceable changes. This type of automated system can be combined with dashboards to show configurations of various environments and flag any differences or drift, making it far easier to spot potential risk areas and ultimately help manage environments more effectively.
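The drift check behind such a dashboard can be very small. The sketch below assumes each environment's configuration has already been exported as a flat dictionary of simple values; the `config_drift` name, the environment names, and the settings shown in the usage note are hypothetical. Nested or templated configurations would need flattening first.

```python
def config_drift(environments):
    """Map each setting that differs across environments to its per-environment values.

    `environments` maps an environment name to a flat dict of settings.
    A setting missing from an environment is reported as None, so a real
    value of None cannot be told apart from an absent key in this sketch.
    """
    all_keys = set().union(*(cfg.keys() for cfg in environments.values()))
    drift = {}
    for key in sorted(all_keys):
        values = {env: cfg.get(key) for env, cfg in environments.items()}
        # More than one distinct value means the environments disagree.
        if len(set(values.values())) > 1:
            drift[key] = values
    return drift
```

Given a staging export of `{"app_version": "2.4.1", "pool_size": 20, "tls": "on"}` and a production export of `{"app_version": "2.4.0", "pool_size": 20}`, the function reports `app_version` and `tls` as drifting and leaves `pool_size` out. Whether a given difference is intentional is still a judgment call for the team; the point is only to make the difference visible.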

Another great pairing is improving code instrumentation and service instrumentation hooks with production monitoring, telemetry, and troubleshooting tools. Many developers and testers either are unaware of what can be collected and presented with modern IT Operations monitoring and telemetry tools or do not see much personal benefit in extending the richness of information exposed to them. Expanding the availability of such tools can help build shared awareness across the delivery lifecycle, leading to more-educated ways of matching up conditions in the various environments to produce better services. If done well it can also help SE Leads better spot potentially problematic discrepancies earlier in the delivery lifecycle that can be investigated and either tackled or prepared for.

Developers Running Production Services

Figure 9.8

Trying to do it all.

An increasingly common configuration is to do away with IT Operations entirely and instead overlay service operations duties on top of the developers building the product. Some view this as true DevOps, or even NoOps. With no team boundary between service operation and development, there is not the issue of those two teams having conflicting priorities, nor is there the risk of information flow breakages between the two functions.

There are a couple of ways that you could implement the Service Engineering Lead in such a model. The most common would be to rotate the duty among members of the delivery team. This has the distinct advantage of eliminating any potential incentive conflicts and trust issues between teams. It also ensures that there is still someone who is looking out for maturity improvements to more effectively deliver the service ilities that the customer needs.

There are, however, some challenges that can potentially limit the value that having an SE Lead might bring. The first is that much of the value an SE Lead brings is being that person who can deliver that extra bit of focus to ensure information flow and situational awareness in order to deliver the service ilities the customer needs more effectively. Unless your team is working alongside other delivery teams where an SE Lead might be able to help improve coordination and alignment, most of the SE Lead’s attention will likely instead need to focus on the operational aspects of the service.

The problem with this is that few developers have sufficient operational experience to accurately translate operational service ilities into service design and delivery. This can be especially problematic when it comes to operational tools and the configuration and use of the infrastructure stack. A lack of knowledge in either of these areas often results in them not being used effectively, if at all.

I have personally encountered many teams in recent years that have suffered greatly from this problem. By the time I am asked in, they have usually managed to twist their production environment into unmanageable knots by misusing everything from Kubernetes and containers to AWS infrastructure services. In most cases this has had real effects not just on the organization’s ability to release changes, but also on what the customer experiences. Sometimes customer sessions are dropped, orders are lost or doubled unexpectedly, or worse.

This is obviously not a great way to meet customer target outcomes.

Larger organizations that have many delivery teams might find it advantageous to build the SE Lead capability into a central platform team, particularly one that is already providing core operational tooling. This configuration has the advantage of encouraging information sharing between teams while also promoting platform tool adoption.

Overcoming the Operational Experience Gap

While some delivery teams are fortunate enough to have one or more strong senior developers who have spent a significant amount of time in operational roles and can help frame up an SE Lead discipline, most are not so fortunate. To overcome this operational experience gap, you might want to consider hiring one or more people with a solid service operations and operational tooling background to seed your organization. This would not be a wasted hire. Once your team either learns how to perform the SE Lead tasks effectively or grows out of the need for an SE Lead, this person can rotate into a Tools & Automation Engineering position where they can enhance your operational tooling capabilities.

Once you have identified some people to help you begin your journey, those people should help the team devise a backlog of operational items that the team needs to put in place in order to build up the team’s maturity. These items might include such improvements as clean atomic packaging and automated deployment, identifying the key metrics and thresholds for service health, determining what instrumentation is needed to help with troubleshooting and understanding ecosystem dynamics, minimizing manual changes, capturing and proactively managing configurations and versioning of deployments, tracking what service versions are deployed and which are in use, having methods to ensure knowledge sharing and staying attuned to customer outcomes, and the like.
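As an illustration of one backlog item, identifying the key metrics and thresholds for service health, the following is a minimal sketch of how a team might write those thresholds down in a form that can be checked automatically. The metric names, the threshold values, and the `Threshold` and `service_health` helpers are all hypothetical, chosen only for the example; your own service will have different measures and limits.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Threshold:
    """One health metric with its warning and critical limits (illustrative)."""
    metric: str
    warn: float
    critical: float
    higher_is_worse: bool = True  # False for metrics such as free disk space


# Hypothetical metrics and limits; replace with what matters for your service.
THRESHOLDS = [
    Threshold("p95_latency_ms", warn=300, critical=800),
    Threshold("error_rate_pct", warn=1.0, critical=5.0),
    Threshold("free_disk_pct", warn=20, critical=10, higher_is_worse=False),
]


def evaluate(threshold, value):
    """Return 'ok', 'warn', or 'critical' for a single observed value."""
    warn, critical = threshold.warn, threshold.critical
    if not threshold.higher_is_worse:
        # Negate everything so the same "greater is worse" comparison applies.
        value, warn, critical = -value, -warn, -critical
    if value >= critical:
        return "critical"
    if value >= warn:
        return "warn"
    return "ok"


def service_health(observed):
    """Map each known metric to a status; metrics not reported are 'unknown'."""
    report = {}
    for threshold in THRESHOLDS:
        if threshold.metric in observed:
            report[threshold.metric] = evaluate(threshold, observed[threshold.metric])
        else:
            # A missing metric is itself an awareness gap worth surfacing.
            report[threshold.metric] = "unknown"
    return report


if __name__ == "__main__":
    sample = {"p95_latency_ms": 350, "error_rate_pct": 0.2}
    for metric, status in service_health(sample).items():
        print(f"{metric}: {status}")
```

Reporting a missing metric as "unknown" rather than "ok" is a deliberate choice in this sketch: a gap in instrumentation is exactly the sort of item that belongs on the maturity backlog.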

From there, the team needs to come up with a means of rotating the SE Lead duties around. Just as in the IT Operations model, the duties the team member takes on during their rotation should be reduced to allow them to step back and assume the role. This is where building in experience and a maturity backlog or checklist early on is extremely helpful. This allows for maturity improvements to be shared across the team, as well as for those who are inexperienced or uncomfortable with the operational side to have enough guidance and people to lean on to still be successful.
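One simple way to rotate the duty is a round-robin schedule. The sketch below assumes a fixed turn length of two weeks and made-up team member names; neither is prescribed here, and the `se_lead_rotation` helper is purely illustrative. Teams will want to adjust the turn length to fit their own delivery cadence.

```python
from datetime import date, timedelta


def se_lead_rotation(members, start, weeks_per_turn=2, turns=6):
    """Build a round-robin SE Lead schedule.

    Returns a list of (member, first_day, last_day) tuples. The turn length
    and number of turns are assumptions made for this example.
    """
    if not members:
        raise ValueError("at least one team member is required")
    schedule = []
    for turn in range(turns):
        first_day = start + timedelta(weeks=turn * weeks_per_turn)
        last_day = first_day + timedelta(weeks=weeks_per_turn) - timedelta(days=1)
        # Cycle through the team so that everyone takes the role in order.
        schedule.append((members[turn % len(members)], first_day, last_day))
    return schedule


if __name__ == "__main__":
    team = ["Ana", "Ben", "Chi"]  # hypothetical names
    for member, first_day, last_day in se_lead_rotation(team, date(2024, 1, 1), turns=4):
        print(f"{member}: {first_day} to {last_day}")
```

Publishing the schedule well ahead of time gives each person room to plan a reduced workload for their turn and to review the maturity backlog before stepping into the role.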

Another challenge that such double-duty teams tend to have is managing reactive, unplanned work. Having reactive work in the team will likely require a number of adjustments to the team’s way of working. The biggest of these is making the flow of work visible to everyone, particularly those within the team (see Chapter 12, “Operations Workflow”). There also needs to be a mechanism to minimize the amount of reactive work that hits the whole team (see Chapter 13, “Queue Master”).

Finally, as with all service delivery configurations, the team will ultimately benefit from having a small team of one or more people who are dedicated to building, acquiring, and improving the operational tools and automation the team uses (as discussed in Chapter 10, “Automation”). This allows the team to have capacity that can focus on making the tooling improvements that will be necessary to take full advantage of maturity improvements like atomic packaging and configuration management.

Summary

Putting in place a maturity model that measures and creates targets to help your team improve its ability to cut through the service delivery fog is an effective way to help ensure that you can deliver the outcomes and ilities that the customer desires. Creating a Service Engineering Lead rotation can further bolster progress by giving the delivery team someone who can take a step back and look for the awareness disconnects and inefficiencies that can form and get in the way.

To further bolster this journey, teams should look at how they manage and track their workloads. This is where workflow management and the Queue Master can help.