Chapter 22. Test Evaluation

“Program testing can be a very effective way to show the presence of bugs, but it is hopelessly inadequate for showing their absence.”

Edsger Dijkstra

This chapter describes the characteristics of good tests. These include being understandable to customers, not being fragile, and testing a single concept.

Test Facets

The following discussion of what to look for in tests draws partly on Gerard Meszaros [Meszaros01], Ward Cunningham, and Rick Mugridge [Cunningham01]. Above all, remember that the tests represent a shared understanding among the triad.

Understandable to Customers

Write the test in customer terms, using the ubiquitous language (Chapter 24, “Context and Domain Language”). The tables should represent the application domain. If the standard tables shown in the examples are not sufficient, create tables that the user can understand (Chapter 21, “Test Presentation”). Use whatever form of expression most closely matches the customer’s way of looking at things, and try several forms to see which one suits the customer best.

The bottom line is to use what is easiest for the customer. If a basic action table is not understandable enough, add graphics to make it look like a dialog box. If the customer needs something that looks like a printed form to understand the material, rather than just the data, use that form as the expected output. You can also have a simpler test of the data alone, which makes it easier to debug what might be wrong with the printed form (Chapter 12, “Development Review”).

Unless the customer wants numeric values, use names. For example, use Good and Excellent as customer types, not customer types 1 and 2.
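Where code backs these tables, the same advice can carry through. This minimal Python sketch assumes a hypothetical `CustomerType` enumeration and `parse_customer_type` helper (neither comes from this book); it keeps the customer's names visible instead of bare numbers.

```python
from enum import Enum


class CustomerType(Enum):
    # Values are the names the customer uses, not opaque codes like 1 and 2.
    GOOD = "Good"
    EXCELLENT = "Excellent"


def parse_customer_type(cell: str) -> CustomerType:
    """Translate a test-table cell such as 'Excellent' into a CustomerType.

    Raises ValueError for any name the triad has not agreed on.
    """
    return CustomerType(cell.strip())
```

A table cell reading "Excellent" then maps directly to `CustomerType.EXCELLENT`, and a stray code such as "2" is rejected rather than silently accepted.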

As presented in this book, acceptance tests are customer-defined tests created before implementation (see the book’s Introduction). You can execute them as unit tests, integration tests, or user interface tests. When acceptance tests are written in a unit testing framework, make them readable, as in the example in Chapter 4, “An Introductory Acceptance Test,” so that you can match them against the customer’s expectations.

Spell Checked

Spell-check your tests. Tests are meant for communication, and misspelled words hinder communication. The spell-check dictionary can contain triad-agreed-upon acronyms and abbreviations. Define these acronyms and abbreviations in a glossary.

Idempotent

Tests should be idempotent: they should behave the same way every time they run, either consistently passing or consistently failing. A non-idempotent test is erratic. Common causes of erratic tests are tests that interact by sharing data, and tests whose first execution differs from later ones because the first run changed some state in the system. Paying attention to setup (Chapter 31, “Test Setup”) can usually resolve erratic tests.

Not Fragile

Fragile tests are sensitive to changes in the state of the system and in the interfaces they interact with. The state of the system includes all code in the system and any internal data repositories.

Handle sensitivity to external interfaces by using test doubles as necessary (Chapter 11, “System Boundary”). In particular, functionality that depends on the date or time will most likely need a clock that can be controlled through a test double. For example, testing an end-of-the-month report may require the date to be set to the end of the month. Random events should be simulated so that they occur in the same sequence for testing.
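Here is a minimal Python sketch of both ideas. It assumes hypothetical `FixedClock`, `is_end_of_month`, and `pick_winners` names. Production code receives its clock and random-number generator instead of calling `date.today()` or the global `random` functions, so a test can pin the date and seed the sequence.

```python
import datetime
import random


class FixedClock:
    """Test double for a clock: the test decides what 'today' is."""

    def __init__(self, today: datetime.date) -> None:
        self._today = today

    def today(self) -> datetime.date:
        return self._today


def is_end_of_month(clock) -> bool:
    # Accepts any object with a today() method, so production code can pass
    # a real clock and tests can pass a FixedClock.
    tomorrow = clock.today() + datetime.timedelta(days=1)
    return tomorrow.month != clock.today().month


def pick_winners(entries: list, count: int, rng: random.Random) -> list:
    # The generator is injected so a test can seed it.
    return rng.sample(entries, count)


def test_end_of_month_detected():
    assert is_end_of_month(FixedClock(datetime.date(2024, 1, 31)))
    assert not is_end_of_month(FixedClock(datetime.date(2024, 1, 30)))


def test_random_sequence_is_repeatable():
    entries = ["A", "B", "C", "D", "E"]
    first = pick_winners(entries, 2, random.Random(42))
    second = pick_winners(entries, 2, random.Random(42))
    assert first == second
```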

Changes to a common setup can cause a test to fail. If the test requires something in particular, it can check the assumptions it makes about the condition of the system. If a condition is not satisfied, the test fails in setup rather than in the confirmation of the expected outcome, which makes it easier to diagnose why the failure occurred.
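One way to make a test fail in setup is a guard check at the end of the “Given” step. In this minimal Python sketch, `SetupError`, `require`, `common_setup`, and `rent` are hypothetical. A broken assumption raises a distinct setup error, and the outcome check compares against the value observed before the action rather than a number baked into the common setup.

```python
class SetupError(Exception):
    """The system is not in the state this test assumes."""


def require(condition: bool, message: str) -> None:
    # Guard for the "Given" part: a broken assumption fails here, in setup,
    # instead of surfacing later as a confusing wrong result.
    if not condition:
        raise SetupError(message)


def common_setup() -> dict:
    # Hypothetical shared fixture: copies in stock, keyed by CD title.
    return {"Beatles Greatest": 2, "Jazz Classics": 1}


def rent(inventory: dict, title: str) -> None:
    # Hypothetical operation under test.
    inventory[title] -= 1


def test_renting_reduces_copies_in_stock():
    inventory = common_setup()
    title = "Beatles Greatest"
    require(inventory.get(title, 0) >= 1,
            f"setup: test needs at least one copy of {title!r} in stock")
    before = inventory[title]
    rent(inventory, title)
    # Check only the one result this test is about.
    assert inventory[title] == before - 1
```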

Tests should check only the minimum set of expected results. This makes them less sensitive to side effects from other tests.

Test Sequence

Ideally, tests should be independent so that they can run in any sequence. To keep tests as independent as possible, use a common setup (Chapter 31) only when necessary. As noted in that chapter, the setup part of a test (“Given”) should examine the state of the system to see that it matches what the test requires. Internally, this can be done either by checking that the condition is as described or by making the condition be as described.
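The second approach, making the condition hold, might look like the following minimal Python sketch. The names `given_customer_has_no_rentals` and `rent_cd` are hypothetical. The “Given” step overwrites whatever state another test left behind instead of assuming that state is absent.

```python
def given_customer_has_no_rentals(rentals: dict, customer_id: str) -> None:
    # Make the condition true rather than assume it, so leftovers from
    # earlier tests or a changed common setup cannot leak into this test.
    rentals[customer_id] = []


def rent_cd(rentals: dict, customer_id: str, title: str) -> None:
    # Hypothetical operation under test.
    rentals.setdefault(customer_id, []).append(title)


def test_first_rental_is_recorded():
    rentals = {"C001": ["Old Rental"]}  # state left behind by some other test
    given_customer_has_no_rentals(rentals, "C001")
    rent_cd(rentals, "C001", "Beatles Greatest")
    assert rentals["C001"] == ["Beatles Greatest"]
```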

Workflow Tests

Often there are workflows: sequences of operations performed to reach a goal. Test each operation separately. Then you might add a workflow test. A workflow test includes multiple operations that need to run in a particular order, to demonstrate that the system processes the sequence correctly.

Use case tests should have a single action table in them. Workflows that have multiple use cases within them may have multiple action tables in their tests. Try not to have too many complicated workflow tests. They can be fragile or hard to maintain. See Chapter 28, “Case Study: A Library Print Server,” for an example of a workflow test.
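Here is a minimal Python sketch of the split. It assumes a hypothetical `RentalDesk` with two operations. Each operation has its own focused test, and a single workflow test checks only what depends on the order of operations.

```python
class RentalDesk:
    """Hypothetical system under test with two operations."""

    def __init__(self, copies: int) -> None:
        self.available = copies

    def rent(self) -> None:
        if self.available == 0:
            raise RuntimeError("no copies available")
        self.available -= 1

    def return_copy(self) -> None:
        self.available += 1


# Each operation tested on its own.
def test_rent_reduces_available_copies():
    desk = RentalDesk(copies=1)
    desk.rent()
    assert desk.available == 0


def test_return_increases_available_copies():
    desk = RentalDesk(copies=0)
    desk.return_copy()
    assert desk.available == 1


# One workflow test: what it checks depends on the sequence of operations.
def test_returned_copy_can_be_rented_again():
    desk = RentalDesk(copies=1)
    desk.rent()
    desk.return_copy()
    desk.rent()
    assert desk.available == 0
```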

Test Conditions

A set of tests should abide by these three conditions:1

• A test should fail for a well-defined reason. (That reason is what the test is checking.) The reason should be specific and easy to diagnose. Each test case has a scope and a purpose, and the failure reason relates to that purpose.

• No other test should fail for the same reason. Otherwise, you may have a redundant test. You may have a test fail at each level: an internal business rule test and a workflow test that uses one case of the business rule. If the business rule changes and its result changes, you will have two failing tests. You want to minimize overlapping tests. But if the customer wants more tests because they are familiar or meaningful to him, write them. Remember: the customer is in charge of the tests.

• A test should not fail for any other reason. This is the ideal, but it is often hard to achieve. Each test has a three-part sequence: setup, action/trigger, and expected result. The purpose of the test is to ensure that the actual result equals the expected result. A test may also fail because the setup did not work or the action/trigger did not function properly.

Separation of Concerns

The more that you can separate concerns, the easier it can be to maintain the tests. With separation, changes in the behavior of one aspect of the application do not affect other aspects. Here are some parts that can be separated, as shown earlier in this book:

• Separate business rules from the way the results of business rules are displayed (Chapter 14, “Separate View from Model”).

• Separate the calculation of a business rule, such as a rating, from the use of that business rule (Chapter 13, “Simplification by Separation”).

• Separate each use case or step in a workflow (Chapter 8, “Test Anatomy”).

• Separate validation of an entity from use of that entity (Chapter 16, “Developer Acceptance Tests”).

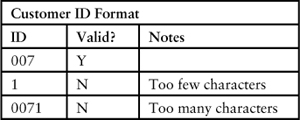

You can have separate tests for the simplest things. For example, the customer ID formatting functionality needs to be tested. The test can show the kinds of issues that the formatting deals with. If the same module is used anywhere a customer ID is used, other tests do not have to perform checks for invalid customer IDs. And if the same module is not used, you have a design issue. A test of ID might be as follows.
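Expressed in a unit testing framework, such a test might look like this minimal Python sketch. The six-digit rule is purely a hypothetical stand-in, since the real formatting rules belong in the triad's table. `is_valid_customer_id` plays the role of the single shared module.

```python
import re

# Hypothetical rule for illustration only: a customer ID is exactly six digits.
CUSTOMER_ID_PATTERN = re.compile(r"\d{6}")


def is_valid_customer_id(text: str) -> bool:
    """The one module every feature uses to check customer IDs."""
    return CUSTOMER_ID_PATTERN.fullmatch(text) is not None


# Table-style cases: (input, expected validity).
CUSTOMER_ID_CASES = [
    ("123456", True),
    ("12345", False),    # too short
    ("1234567", False),  # too long
    ("12A456", False),   # contains a letter
    ("", False),         # empty
]


def test_customer_id_format():
    for text, expected in CUSTOMER_ID_CASES:
        assert is_valid_customer_id(text) == expected, text
```

Because every feature calls the same function, other tests can simply use a valid ID and leave format checking to this one.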

Test Failure

As noted in Chapter 3, “Testing Strategy,” a passing test is a specification of how the system works, and a failing test indicates that a requirement has not been met. Initially, before an implementation is created, every acceptance test should fail. If a test passes, determine why it passed:

• Is the desired behavior that the test checks already covered by another test? If so, the new test is redundant.

• Does the implementation already cover the new requirement?

• Is the test really not testing anything? For example, the expected result may be the default output of the implementation.2

Test Redundancy

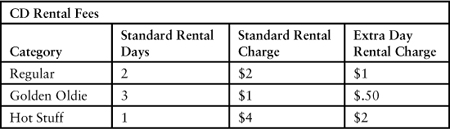

You want to avoid test redundancy. Redundancy often occurs when you have data-dependent calculations. For example, here are the rental fees for different category CDs that were shown in Chapter 10, “User Story Breakup.”

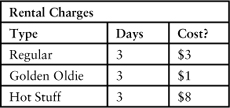

Here were the tests that were created.

Do you need all these tests? Are they equivalent? They all use the same underlying formula (Cost = Standard Rental Charge + (Number Rental Days – Standard Rental Days) * Extra Day Rental Charge).3 When the first test passes, the other tests also pass.
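The redundancy is easiest to see when the formula is written down once. In this minimal Python sketch, the `Category` fields mirror the terms in the formula and amounts are integer cents. The category names and figures are hypothetical, not the values from the Chapter 10 table. The sketch also assumes that the number of rental days is at least the standard rental days.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Category:
    standard_rental_charge: int   # cents
    standard_rental_days: int
    extra_day_rental_charge: int  # cents


def rental_cost(category: Category, rental_days: int) -> int:
    """Cost = Standard Rental Charge
            + (Number Rental Days - Standard Rental Days) * Extra Day Rental Charge

    Assumes rental_days >= category.standard_rental_days.
    """
    extra_days = rental_days - category.standard_rental_days
    return category.standard_rental_charge + extra_days * category.extra_day_rental_charge


# Hypothetical figures for illustration only.
CATEGORIES = {
    "Category A": Category(200, 2, 100),
    "Category B": Category(100, 3, 50),
}


def test_formula_with_one_category():
    # 5 days: 200 + (5 - 2) * 100 = 500 cents.
    assert rental_cost(CATEGORIES["Category A"], 5) == 500


def test_formula_with_another_category():
    # Exercises exactly the same code path as the test above, so it adds
    # assurance only if there is a specific risk in this category's data.
    assert rental_cost(CATEGORIES["Category B"], 5) == 200
```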

What if there are lots of categories? Say there are 100 different ones that all use this same formula. That would be a lot of redundant tests. If there were an identified risk in not running them, they might be necessary.4 A collaborative triad should be able to cut these tests down to the essential ones.

Test variations of business rules or calculations separately, unless they affect the flow through a use case or user story.

While avoiding redundancy, don’t shortchange the tests. Have at least one positive and one negative test. For example, there should be a test with a valid customer ID and one with an invalid customer ID. Be sure to test each exception. For example, with the CD rental limit of three, have a test for renting three CDs (the happy path) and one for renting four CDs (the exception path).
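Here is a minimal Python sketch of the happy-path and exception-path pair for the three-CD limit. `rent_cds` and `RentalLimitExceeded` are hypothetical names; only the limit of three comes from the text.

```python
RENTAL_LIMIT = 3  # a customer may rent at most three CDs


class RentalLimitExceeded(Exception):
    pass


def rent_cds(current: list, titles: list) -> list:
    # Hypothetical operation: returns the customer's new list of rentals.
    if len(current) + len(titles) > RENTAL_LIMIT:
        raise RentalLimitExceeded(f"limit is {RENTAL_LIMIT} CDs")
    return current + titles


def test_renting_three_cds_is_allowed():
    # Happy path: exactly at the limit.
    assert len(rent_cds([], ["CD1", "CD2", "CD3"])) == 3


def test_renting_four_cds_is_rejected():
    # Exception path: one CD over the limit.
    try:
        rent_cds([], ["CD1", "CD2", "CD3", "CD4"])
    except RentalLimitExceeded:
        return
    raise AssertionError("expected RentalLimitExceeded for a fourth CD")
```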

No Implementation Issues

Tests should require minimal maintenance. Make them depend on the behavior being tested, not on the implementation underneath. Unless the desired behavior has changed, the test should not have to change, regardless of any changes to the implementation underneath. To achieve this, design tests as if they had to work with multiple implementations.

Tests should not imply that you need a database underneath. The system could just as well be a slew of file clerks who take down the data, file it away in folders, and retrieve it when requested; it would just take a little longer. The tables in the tests, such as CD Data, represent the business view of the objects, not the persistence layer view. Because they will be used to create the database tables, there will be similarities between the two.
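Designing for multiple implementations can be taken literally. In this minimal Python sketch, the `CDCatalog` protocol and both implementations are hypothetical. One stores titles in a dictionary; the other mimics the file clerks by filing each CD in a folder keyed by the first character of its ID. The same behavioral check runs against both, and nothing in it mentions tables or SQL.

```python
from typing import Dict, Optional, Protocol


class CDCatalog(Protocol):
    """The behavior the tests care about, with no storage details."""

    def save(self, cd_id: str, title: str) -> None: ...

    def find(self, cd_id: str) -> Optional[str]: ...


class InMemoryCatalog:
    def __init__(self) -> None:
        self._titles: Dict[str, str] = {}

    def save(self, cd_id: str, title: str) -> None:
        self._titles[cd_id] = title

    def find(self, cd_id: str) -> Optional[str]:
        return self._titles.get(cd_id)


class FileClerkCatalog:
    """Mimics the file clerks: one folder per first character of the ID."""

    def __init__(self) -> None:
        self._folders: Dict[str, Dict[str, str]] = {}

    def save(self, cd_id: str, title: str) -> None:
        self._folders.setdefault(cd_id[:1], {})[cd_id] = title

    def find(self, cd_id: str) -> Optional[str]:
        return self._folders.get(cd_id[:1], {}).get(cd_id)


def check_saved_cd_can_be_found(catalog: CDCatalog) -> None:
    # Expresses behavior only: no tables, SQL, or folder layout.
    catalog.save("CD3", "Beatles Greatest")
    assert catalog.find("CD3") == "Beatles Greatest"
    assert catalog.find("CD9") is None


def test_catalog_behavior_with_every_implementation():
    for catalog in (InMemoryCatalog(), FileClerkCatalog()):
        check_saved_cd_can_be_found(catalog)
```

If the team later adds a database-backed catalog, the same check applies to it unchanged.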

Points to Remember

When creating and implementing tests, consider the following:

• Develop tests and automation separately. Understand the test first, and then explore how to automate it.

• Automate the tests so that they can be part of a continuous build. See Appendix C, “Test Framework Examples,” for examples of automation.

• Don’t put test logic in the production code. Tests should be completely separate from the production code.5

• As much as practical, cover 100% of the functional requirements in the acceptance tests.

In structuring tests, remember the following:

• Tests should follow the Given-When-Then or the Arrange-Act-Assert form [AAA01].

• Keep tests simple.

• Only have the essential detail in a test.

• Avoid lots of input and output columns. Break large tables into smaller ones, or show common values in the headers (Chapter 18, “Entities and Relationships”).

• Avoid logic in tests.

• Describe the intent of the test, not just a series of steps.
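Here is a minimal Python sketch of a test shaped by these points. It assumes a hypothetical `RentalCounter` class. The test name states the intent, the comments mark Given, When, and Then, and the body contains no loops or conditionals.

```python
class RentalCounter:
    """Hypothetical system under test."""

    def __init__(self) -> None:
        self._rentals: dict = {}

    def rent(self, customer_id: str, title: str) -> None:
        self._rentals.setdefault(customer_id, []).append(title)

    def rentals_for(self, customer_id: str) -> list:
        return list(self._rentals.get(customer_id, []))


def test_rented_cd_appears_in_customers_rentals():
    # Given a customer with no rentals
    counter = RentalCounter()
    # When the customer rents a CD
    counter.rent("C001", "Beatles Greatest")
    # Then that CD, and only that CD, is listed as rented
    assert counter.rentals_for("C001") == ["Beatles Greatest"]
```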

A test has costs: writing and checking it, executing it, and maintaining it. Tests deliver the benefits of communicating requirements and identifying defects. The incremental cost of a new test should be less than the incremental benefit that the test delivers.

Summary

• Make acceptance tests readable to customers.

• Separate concerns—test one concept with each test.

• Avoid test redundancy.