For many purposes threads are great, especially because we can still program in the familiar procedural, blocking-IO style. Their drawback is that they struggle when managing large numbers of simultaneous connections, because the server has to maintain a thread for each connection. Each thread consumes memory, and switching between threads incurs CPU overhead, because the OS has to perform a context switch each time. Although these costs aren't a problem for small numbers of threads, they can impact performance when there are many threads to manage. Multiprocessing suffers from similar problems.

An alternative to threading and multiprocessing is the event-driven model. In this model, instead of having the OS automatically switch between active threads or processes for us, we use a single thread which registers blocking objects, such as sockets, with the OS. When one of these objects becomes ready to leave the blocking state, for example when a socket receives some data, the OS notifies our program. Our program can then access the object in non-blocking mode, since it knows that the object is ready for immediate use. Calls made to objects in non-blocking mode always return immediately. We structure our application around a loop, in which we wait for the OS to notify us of activity on our blocking objects, handle that activity, and then go back to waiting. This loop is called the event loop.
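As a rough illustration of the loop just described, here is a minimal sketch of a single-threaded echo server built on the standard library's `selectors` module. The function names (`accept_client`, `echo`, `run_once`, `serve_forever`), the address `127.0.0.1:4000`, and the 4096-byte read size are all assumptions made for this example and not part of any particular framework.

```python
import selectors
import socket


def accept_client(sel, listener):
    """Callback for the listening socket: register the new connection."""
    conn, _addr = listener.accept()  # does not block: the OS reported it ready
    conn.setblocking(False)
    sel.register(conn, selectors.EVENT_READ, echo)


def echo(sel, conn):
    """Callback for a client socket: echo data back, or clean up on EOF."""
    data = conn.recv(4096)  # does not block: data (or EOF) is waiting
    if data:
        # Simplification: a full server would buffer any bytes that
        # send() could not write and wait for EVENT_WRITE before retrying.
        conn.send(data)
    else:
        sel.unregister(conn)
        conn.close()


def run_once(sel, timeout=None):
    """One pass of the event loop: wait for activity, then handle it."""
    events = sel.select(timeout)
    for key, _mask in events:
        callback = key.data  # the handler stored at registration time
        callback(sel, key.fileobj)
    return len(events)


def serve_forever(host="127.0.0.1", port=4000):
    """Register a listening socket with the OS and loop forever."""
    with selectors.DefaultSelector() as sel, \
            socket.create_server((host, port)) as listener:
        listener.setblocking(False)
        sel.register(listener, selectors.EVENT_READ, accept_client)
        while True:  # the event loop
            run_once(sel)
```

Calling `serve_forever()` would start the server. Every connection is handled by the same thread, and the only place the program waits is inside `sel.select()`.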

This approach provides comparable performance to threading and multiprocessing, but without the per-connection memory or context switching overheads, and hence allows for greater scaling on the same hardware. The challenge of engineering applications that can efficiently handle very large numbers of simultaneous connections has historically been called the c10k problem, referring to the handling of ten thousand concurrent connections on a single server. With the help of event-driven architectures, this problem was solved, though the term is still often used to refer to the challenges of scaling to many concurrent connections.

Note

On modern hardware it's actually possible to handle ten thousand concurrent connections using a multithreading approach as well. See this Stack Overflow question for some numbers: https://stackoverflow.com/questions/17593699/tcp-ip-solving-the-c10k-with-the-thread-per-client-approach.

The modern challenge is the "c10m problem", that is, ten million concurrent connections. Solving this involves some drastic software and even operating system architecture changes. Although this is unlikely to be manageable with Python any time soon, an interesting (though unfortunately incomplete) general introduction to the topic can be found at http://c10m.robertgraham.com/p/blog-page.html.

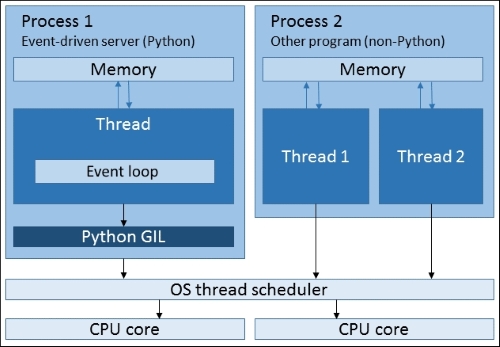

The following diagram shows the relationship of processes and threads in an event-driven server:

Although the GIL and the OS thread scheduler are shown here for completeness, in the case of an event-driven server, they have no impact on performance because the server only uses a single thread. The scheduling of I/O handling is done by the application.