5

Wireless User Hardware

5.1 Military and Commercial Enabling Technologies

I am not sure where to start in this chapter. The beginning is always a good idea but it is hard to settle on a date when wireless hardware first came into use. Arguably it could be the photophone that Alexander Graham Bell invented (referenced in Chapter 2) or before that early line-of-sight semaphore systems. The ancient Greeks invented the trumpet as a battlefield communications system and the Romans were fond of their beacons.1

In December 1907 Theodore Roosevelt dispatched the Great White Fleet, 16 battleships, six torpedo boat destroyers and 14 000 sailors and marines on a 45 000-mile tour of the world, an overt demonstration of US military maritime power and technology. The fleet was equipped with ship-to-shore telegraphy (Marconi equipment) and Lee De Forest’s arc transmitter radio telephony equipment. Although unreliable, this was probably the first serious use of radio telephony in maritime communication and was used for speech and music broadcasts from the fleet – an early attempt at propaganda facilitated by radio technology.

In 1908 valves were the new ‘enabling technology’ ushering in a new ‘era of smallness and power efficiency’. Marconi took these devices and produced tuned circuits – the birth of long-distance radio transmission. Contemporary events included the first Model T Ford and the issue of a patent to the Wright brothers for their aircraft design.

Between 1908 and the outbreak of war six years later there was an unprecedented investment in military hardware in Britain, the US and Germany that continued through the war years and translated into the development of radio broadcast and receiver technologies in the 1920s.

In the First World War portable Morse-code transceivers were used in battlefield communications and even in the occasional aircraft. Radio telegraphy was used in maritime communications including U-boats in the German navy. These events established a close link between military hardware development and radio system development. This relationship has been ever present over the past 100 years. Examples include the use of FM VHF radio in the US army in the Second World War (more resilient to jamming) and the military use of frequency hopping and spread spectrum techniques prior to their more widespread use in commercial radio systems.

However, it is not unusual for innovation to flow in the opposite direction. Radio and radar systems in the Second World War borrowed heavily from broadcast technologies developed in the 1930s.

When the British expeditionary force arrived in France in 1939 they discovered that the German infantry had significantly superior radio communications, using short wave for back to base communications and VHF for short-range exchanges.

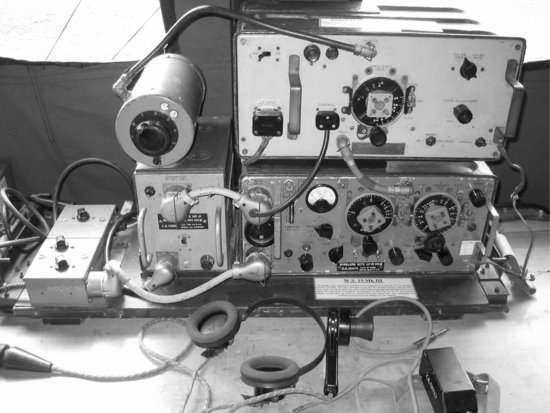

In the UK a rapid design project was put in place to produce a dualband short-wave/VHF radio that could offer similar capability. This has been described as ‘the radio that won the war’ and is certainly one of the earliest examples of a dualband dual-mode radio. The device shown in Figure 5.1 is a WS (wireless set) 19 with a separate RF amplifier No 2 producing 25 watts of RF power.

Figure 5.1 Pye WS19 dualband short-wave/VHF radio. Reproduced with permission of the Pye Telecom Historic Wireless Collection.2

Long procurement cycles and a need to amortise costs over a significant service period can also mean that military systems remain in use for longer than civilian systems, sometimes as long as thirty years. If this coincides with rapid development in the commercial sector then military handsets can seem bulky and poorly specified when compared for example to modern cellular phones.

Additionally, the development of a radio technology for military applications does not necessarily guarantee that the technology will be useful in a commercial context. Military radio equipment does not have the scale economy that cellular phones enjoy. On the other hand, military radios do not have the same cost constraints and may not have the same weight constraints and may be able to use more exotic battery technologies than cellular phones. Components can also be aggressively toleranced to improve performance.

Two examples illustrate the point: software-defined radio, a radio that can redefine its own modulation and channel coding, and cognitive radio, a radio that can scan for and then access available radio resources.

Software-defined radio is used extensively in military radio systems, partly to overcome interoperability issues. Translating software-defined radio techniques to cellular radio has, however, proved problematic, partly because of cost and performance issues including battery drain, but also because in the commercial sector interoperability issues have been addressed more effectively through harmonisation and standardisation, though this is now beginning to change.

Cognitive radio provides another example. Usually combined with software-defined radio, cognitive radio is useful in a military context because radio systems can be made flexible and opportunistic. This makes them less vulnerable to interception and jamming. Cognitive radio is presently being proposed for use in white-space devices in the US UHF TV band and in other markets including the UK. These devices are designed to sit in the interleaved bands not being used by TV broadcasting (covered in more detail in Chapter 15).

There is, however, an important difference. In a military context it does not necessarily matter if cognitive radios interfere with other radio systems. In a commercial context, a guarantee that radios will not create interference is a precondition of deployment. Interference avoidance in the white-space world is based on a composite of location awareness and channel scanning to detect the presence or absence of TV channels and beacon detection to detect the presence of wireless microphones.
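The composite check described above can be sketched as a simple decision rule. This is only an illustrative sketch: the class, field and function names are hypothetical, and the rule that any single indication of an incumbent blocks the channel is an assumption rather than a description of any regulator's actual procedure.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ChannelObservation:
    """Hypothetical per-channel inputs for a white-space device."""
    location_lookup_says_free: bool    # location awareness: channel unused at this position
    tv_signal_detected: bool           # channel scanning: a TV transmission was found
    microphone_beacon_detected: bool   # beacon detection: a wireless microphone is present


def channel_is_usable(obs: ChannelObservation) -> bool:
    # Assumed conservative rule: every check must agree that the channel is clear.
    return (obs.location_lookup_says_free
            and not obs.tv_signal_detected
            and not obs.microphone_beacon_detected)


def usable_channels(observations: Dict[int, ChannelObservation]) -> List[int]:
    """Return the channel numbers that pass all three checks, in ascending order."""
    return sorted(ch for ch, obs in observations.items() if channel_is_usable(obs))


if __name__ == "__main__":
    scan = {
        21: ChannelObservation(True, False, False),   # clear on all three checks
        22: ChannelObservation(True, True, False),    # TV signal found by scanning
        23: ChannelObservation(False, False, False),  # location lookup says occupied
        24: ChannelObservation(True, False, True),    # wireless microphone beacon heard
    }
    print(usable_channels(scan))
```

Requiring all three checks to agree reflects the commercial precondition that the device must not create interference, at the cost of leaving some usable spectrum idle.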

However robust these mechanisms might be, other users of the band, TV broadcasters, wireless microphones and more recently 700-MHz cellular operators at the top of the band and public safety users will need to be convinced that white-space devices do not present a threat either technically or commercially. We return to this topic in Chapter 15.

There are also similarities between military radios and specialist mobile radios including radio equipment used by public protection and disaster-relief agencies. This includes a need to ruggedise equipment, make equipment waterproof rather than splashproof, make equipment dustproof and secure. Encryption and access security are covered in Chapter 10 of 3G Handset and Network Design.

In the US specialist mobile radio user equipment and network equipment is standardised by the Association of Public-Safety Communications Officials (APCO). Analogue radios were specified as APCO 16 and digital radios are specified as APCO 25. In the UK and parts of Asia these radios comply with the TETRA radio standard, though a standard called TETRAPOL with narrower channel spacing is used in some countries including France. The physical layer implementation of these radios is covered in the wireless radio access network hardware of Chapter 12.

APCO-compliant user devices have 3-watt power amplifiers to deliver deep rural coverage and are relatively narrowband with narrow channel spacing in order to realise good sensitivity on the receive path. Other specialist low-volume but high-value markets include satellite phones. Inmarsat, for example, have a new portable handset3 designed by EMS Satcom (hardware) and Sasken (the protocol stack). This is illustrated in Figure 5.2.

Figure 5.2 Inmarsat IsatPhone Pro hand-held terminal. Reproduced with permission of Inmarsat Global Limited.

LightSquared4 have plans to use a version of this phone in a dual-mode network in the US combining terrestrial coverage with geostationary satellite coverage, covered in more detail in Chapter 16.

Other satellite networks, including the Iridium low-earth orbit satellite network, have radios that are optimised to work on a relatively long distance link – 35 000 kilometres for geostationary or 700 kilometres for low-earth orbit. These networks support voice services, data services and machine-to-machine applications (covered in more detail in Chapter 16).

Specialist phones for specialist markets include Jitterbug,5 a phone optimised for older people manufactured by Samsung and a phone being developed for blind women.6

5.2 Smart Phones

But this chapter was supposed to be predominantly addressing the evolution of mobile phone and smart phone form factors and our starting point has literally just arrived by group e mail from my friend Chetan Sharma in the US announcing that smart phone sales have just crossed the 50% share mark in the US and that the US now accounts for one third of all smart phone sales in the world (as at lunch time Monday 9 May 2011).7

The term smart phone has always been slightly awkward. Is the phone smart, or is it supposed to make us smart, or at least feel smart? And since all phones are smart to some degree, presumably we should have categories of smart, smarter and smartest phones.

Over the last twenty five years the evolution of smartness expressed in terms of user functionality can be described as shown in Table 5.1.

Table 5.1 Degrees of smartness

In the beginning, cellular phones were used mainly for business calls; they were expensive, and predominantly used for one-to-one voice communication.

Then text came along, then data, originally a 1200-bps or 2400-bps FFSK data channel in first-generation cellular phones, then audio, imaging and positioning and most recently a range of sensing capabilities. Smart phones were originally intended to help make us more efficient, predominantly in terms of business efficiency. Mobile phones have become tools that we use to improve our social efficiency and access to information and entertainment. Both in business and socially, modern mobile phones allow people to be egocentric. Text has become twitter and cameras and audio inputs enable us to produce rich media user-generated content. Positioning and sensing are facilitating another shift and producing a new generation of devices that allow us to interact with the physical world around us.

Who would have thought, for example, that a whole new generation of users would master SMS texting through a phone keypad, achieving communication speeds that are comparable with QWERTY key stroke entry, albeit with bizarre abbreviations?

The question to answer is how fast are these behavioural transforms happening and the answer to that can be found by looking at how quickly or slowly things have changed in the past.

A time line for cellular phone innovations can be found at the referenced web site8 but this is a summary.9 Cellular phones did not arrive out of the blue twenty five years ago. The principle of cellular phones and the associated concepts of frequency reuse were first described by DH Ring at the Bell Laboratories in 1947, a year before William Shockley invented the transistor.

In 1969 Bell introduced the first commercial system using cellular principles (handover and frequency reuse) on trains between Washington and New York. In 1971 Intel introduced the first commercial microprocessor. Microprocessors and microcontrollers were to prove to be key enablers in terms of realising low-cost mass market cellular end-user products.

In 1978 Standard Telecommunication Laboratories in Harlow introduced the world’s first single-chip radio pager using a direct conversion receiver, and the Bahrain Telephone company opened the first cellular telephone system using Panasonic equipment, followed by Japan, Australia, Kuwait and Scandinavia, the US and the UK.

In 1991 the first GSM network went live at Telecom Geneva.

In 1993 the first SMS phones became available.

In 1996 triband phone development was started and an initial WCDMA test bed was established.

In 1997 Nokia launched the 6110, the first ARM-powered mobile phone, also significant because it was very compact, had a long battery life and included games. The Bluetooth Special Interest Group was founded in September.

The world’s first dualband GSM phone the Motorola 8800 was launched in the spring.

The first triband GSM phone, the Motorola Timeport L7089, was launched at Telecom 99 in Geneva and included WAP, the Wireless Application Protocol, designed to support web access. WAP was slow and unusable. On 5 March 2002 Release 5 HSDPA was finalised.

Hutchison launched the ‘3’ 3G service on 3 March 2003.

In 2010 100 billion texts10 were sent from mobile phones.

The particular period we want to look at is from 2002/2003 onwards, comparing state-of-the-art handsets then and now. The objective is to identify the hardware innovations that have created significant additional value for the industry value chain and identify how and why they have added value. These include audio capabilities, imaging capabilities, macro- and micropositioning coupled with touch-screen interactive displays and last but not least sensing.

5.2.1 Audio Capabilities

Adding storage for music was an obvious enhancement that came along in parallel with adding games in phones and was the consequence of the rapid increase in storage density and rapidly decreasing cost of solid-state memory.

As with ring tones, operators and handset vendors, Nokia in particular, have failed despite substantial investment to capitalise on the audio market, which has largely been serviced by third parties such as iTunes and Spotify.

In February 2010 Apple announced that 10 billion songs had been downloaded from the iTunes store11 with users able to choose from 12 million songs, 55 000 TV episodes and 8500 movies, 2500 of which were high-definition video.

Given that it is easy to side load devices from a PC or Mac there seems to be insufficient incentive to download music off air. Even if it’s free it flattens the battery in a mobile device. There is even less incentive to download TV or movie clips. This is just as well, as the delivery cost to the operator would probably exceed the income from the download, which in any case goes to Apple not the operator.

Audio quality from in-built speakers as opposed to head phones has not improved substantially even in larger form factor products. Lap tops, including high-end lap tops, still have grim audio performance unless linked to external audio devices.

Innovations in 2002 included new flat speaker technologies actuated across their whole surface with NXT as the prime mover in the sector. NXT no longer exists as a company though a new Cambridge-based company Hi Wave Technologies PLC is working on similar concepts in which the display can double as a speaker and haptic input device.12

So much for audio outputs. How about audio inputs, in particular the microphone?

Emile Berliner is credited with having developed the first microphone in 1876 but microphone technology moved forwards in leaps and bounds with the growth of broadcasting in the 1920s.

George V talked to the Empire on medium-wave radio in 1924 from the Empire Exhibition at Wembley using a Round–Sykes Microphone developed by two employees of the BBC, Captain Round and AJ Sykes. Over time microphones came to be optimised for studio or outdoor use, the most significant innovations being the ribbon microphone and lip microphone.

Marconi–Reisz carbon microphones were a BBC standard from the late 1920s to the late 1930s and were then gradually supplanted by the ribbon microphone. Over the following decades an eclectic mix of microphone technologies evolved including condenser microphones, electromagnetic induction microphones and piezoelectric microphones.

Over the past ten years the condenser microphone has started to be replaced by MEMS-based devices sometimes described as solid-state or silicon microphones. The devices are usually integrated onto a CMOS chip with an A to D converter.

The space saved allows multiple microphones to be used in consumer devices and supports new functions such as speech recognition. These functions have existed for years but never previously worked very well. This new generation of microphones also enables directional sensitivity, noise cancellation and high-quality audio recording. Companies such as Wolfson Microelectronics, Analog Devices, Akustica, Infineon, NXP and Omron are active in this sector.

Audio value is probably more important than we realise. It has powerful emotional value. Audio is the last sense we lose before we die, and it is the only sensing system that gives us information on what’s happening behind us. Perceptions of video quality improve if audio quality is improved.

Ring tones also sound better. How and why ring tones became a multibillion pound business remains a complete mystery to me but then that’s why I don’t drive a Porsche and have time to write a book like this.

5.2.2 Positioning Capabilities

Positioning includes micropositioning and macropositioning. Many iPhone applications exploit information from a three-axis gyroscope, an accelerometer and a proximity sensor to perform functions such as screen reformatting. This is micropositioning. Macropositioning typically uses GPS usually combined with a digital compass.

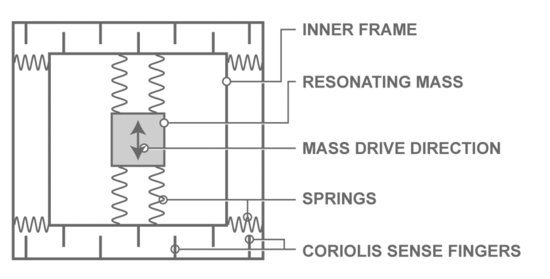

Gyroscopes are MEMS elements that sense change in an object’s axis of rotation including yaw, pitch and roll. Traditionally, a separate sensor would be needed for each axis but the example shown from STMicroelectronics in Figure 5.3 uses one MEMS-based module taking up 4 by 4 by 1 mm. An accelerometer takes up about 3 mm square. By the time you read this these two devices will probably be available in a single package. The devices have a direct cost and an indirect real-estate cost, but also a real-estate value. The value is realised in terms of additional user functionality. In the previous chapter we referenced products in which the display turns off when the phone is placed to the ear.

The gyroscope can be set at one of three sensitivity levels to allow speed to be traded against resolution. For example, for a gaming application it can capture movements at up to 2000 degrees per second but only at a resolution of 70 millidegrees per second per digit. For the point-and-click movement of a user interface – a wand or a wearable mouse – the gyroscope can pick up movements at up to 500 degrees per second at a resolution of up to 18 millidegrees per second per digit.
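The trade between speed and resolution can be made concrete with a small conversion sketch. It uses only the two settings quoted above and assumes, for illustration, that the sensor reports a signed integer count ('digit') and that angular rate is simply the count multiplied by the sensitivity. The function and table names are hypothetical and are not taken from any vendor driver.

```python
# Sensitivities quoted in the text, in millidegrees per second per digit,
# keyed by full-scale range in degrees per second. The third sensitivity
# level is not quoted in the text, so it is deliberately left out.
SENSITIVITY_MDPS_PER_DIGIT = {2000: 70.0, 500: 18.0}


def raw_to_dps(raw_count: int, full_scale_dps: int) -> float:
    """Convert a raw signed reading (in digits) to degrees per second."""
    sensitivity = SENSITIVITY_MDPS_PER_DIGIT[full_scale_dps]
    return raw_count * sensitivity / 1000.0


def digits_at_full_scale(full_scale_dps: int) -> float:
    """Number of digits needed to represent the full-scale rate."""
    return full_scale_dps * 1000.0 / SENSITIVITY_MDPS_PER_DIGIT[full_scale_dps]


if __name__ == "__main__":
    for full_scale in (2000, 500):
        print(full_scale, raw_to_dps(1000, full_scale), digits_at_full_scale(full_scale))
```

With these figures both settings need a little under 30 000 digits to reach full scale, which would fit a signed 16-bit output word; the word length is an assumption here, not something stated in the text.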

Figure 5.3 STMicroelectronics MEMS-based module. Reproduced with permission of STMicro-electronics.

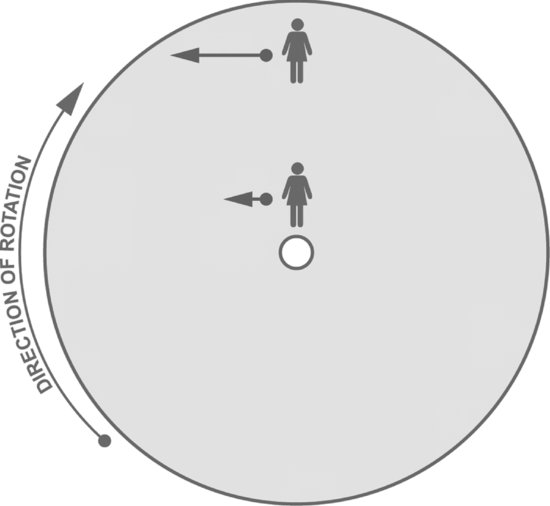

Figure 5.4 Coriolis acceleration. Reproduced with permission of Analog Devices.

The 3-axis gyroscope illustrated draws 6 milliamps compared to 5 milliamps for each of the three devices needed two years ago. The device can be put into sleep mode and woken up when needed within 20 milliseconds, for example to support dead reckoning when a GPS signal is lost. MEMS-based devices measure the angular rate of change using principles described by Gaspard G de Coriolis in 1835 and now known as the Coriolis effect.

The diagram in Figure 5.4 from an Analog Dialogue article13 shows that as a person or object moves out towards the edge of a rotating platform the speed increases relative to the ground, as shown by the longer blue arrow.
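The relationship can be checked numerically. The sketch below uses the standard textbook expressions, tangential speed v = ωr and Coriolis acceleration of magnitude 2ωv for an object moving radially at speed v on a platform rotating at ω. The rotation rate, radii and radial speed are illustrative assumptions and are not taken from the article.

```python
import math


def tangential_speed(omega_rad_s: float, radius_m: float) -> float:
    """Speed relative to the ground of a point at a given radius: v = omega * r."""
    return omega_rad_s * radius_m


def coriolis_acceleration(omega_rad_s: float, radial_speed_m_s: float) -> float:
    """Magnitude of the Coriolis acceleration for purely radial motion: a = 2 * omega * v."""
    return 2.0 * omega_rad_s * radial_speed_m_s


if __name__ == "__main__":
    omega = 2.0 * math.pi  # assumed platform rate: one revolution per second
    for radius in (1.0, 2.0):
        # Doubling the radius doubles the speed relative to the ground.
        print(radius, tangential_speed(omega, radius))
    # Assumed radial speed of 0.5 m/s towards the edge of the platform.
    print(coriolis_acceleration(omega, 0.5))
```

A MEMS gyroscope exploits the same effect in reverse: a mass is driven back and forth at a known velocity and the resulting Coriolis acceleration is measured to infer the rotation rate.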

The movement is captured by an arrangement of sensors, as shown in the springs and roundabouts diagram in Figure 5.5.

Figure 5.5 Springs and mechanical structure. Reproduced with permission of Analog Devices.

Integrating microsensing with GPS based macrosensing is covered in more detail in Chapter 16.

5.2.3 Imaging Capabilities

Imaging includes imaging capture through a camera or multiple cameras and image display. Camera functionality is also covered in Chapter 7. For the moment we want to just study the technology and business impact of display technologies. First, one that didn’t make it.

In Chapter 5 of 3G Handset and Network Design we featured a miniaturised thin cathode ray tube based on an array of microscopic cathodes from a company called Candescent. The thin CRT had a number of advantages over liquid crystal displays including 24-bit colour resolution, high luminance, wide-angle viewing and a response time of 5 milliseconds that compared with 25 milliseconds for contemporary LCDs. The company announced plans in 1998 to invest $450 million in a US-based manufacturing plant in association with Sony. Candescent filed for Chapter 11 protection in 2004. Candescent.com is now a web site for Japanese teeth whitening products.

The moral of this particular tale is that it is hazardous to compete with established legacy products (liquid crystal displays) that have significant market scale advantage that in turn translates into research and development and engineering investment to improve performance and lower cost. This is exactly what has happened to the liquid crystal display supply chain.

The LCD business has also scaled effectively both in terms of volume and screen size, with screens in excess of 100 inches, which as we shall see in Chapter 6 has a specific relevance to future smart phone functionality. The lap top market owes its existence to LCD technology, and lap tops as we know now come with embedded WiFi and either a 3G dongle or sometimes embedded 3G connectivity.

The global lap top market is of the order of 100 million units or so per year though the number depends on whether newer smaller form factors such as the iPad are counted as portable computers. A similar number of television sets are sold each year. Even combined, these numbers are dwarfed by mobile phone shipments some of which are low value, an increasing number of which are high value.

So the question is, will the iPad change the lap top market in the same way that the iPhone has changed the smart-phone market?

By March 2011 Apple claimed (3rd March press release) to have shipped a total of 100 million iPhones. iPad shipments since market launch nine months prior totalled 15 million. This is a modest volume compared to Nokia. Nokia have suffered massive share erosion from over 40 to 27% but this is still 27% of 1.4 billion handsets or 378 million handsets per year. A design win into an Apple product is, however, a trophy asset and fiercely fought over by baseband and RF and display component vendors.

This gives them a design influence that is disproportionate to their market volume, though perhaps not to their market value. Apple sales volume into the sector represents at time of writing about 4% of the global market by volume but captures something of the order of 50% of the gross margin achieved across the sector.

This means that component vendors are in turn under less price pressure. The iPhone has an effective realised price including subsidies and rebates that is higher than many lap tops and although the display is small relative to a lap top its added value is higher and its cost is lower. The display in an iPhone 4 for example is estimated14 to have a realised value of about ten dollars, rather more than the RF components used in the phone.

So while we are on the topic of imaging let’s skip to another value domain – display-based interaction.

5.2.4 Display-Based Interaction – A Game Changer

When Apple released its league table of the best-selling iPhone apps from its ‘first 10 billion’ downloads earlier this year, 8 out of 10 were games. Clearly games on mobile make money but the value realisable from games is at least partially dependent on the screen and screen input capabilities of the host device.

The iPhone and the iPad both use capacitive touch screen displays with a flexible transparent surface and transparent conductive layer. An oleophobic coating is used to stop greasy fingers leaving marks on the screen.

The capacitors are organised in a coordinate system. Changes in capacitance across the coordinate points are input into gesture interpretative software that is referenced against knowledge of the application that is running at the time. This makes possible all those thumb and finger pinch and spread features that people now take for granted. Simple really but no one had thought to do this prior to 2007 or rather no one had actually done it. Apple benefited substantially from having first-mover advantage.
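The idea of turning capacitance changes on a coordinate grid into gestures can be sketched in a few lines. This is a deliberately simplified illustration and not Apple's implementation: it assumes the controller supplies a grid of capacitance changes, estimates a touch position as a weighted centroid, and classifies a two-finger gesture by whether the fingers moved apart or together. All names and the tolerance value are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def touch_centroid(delta: List[List[float]], threshold: float = 0.0) -> Point:
    """Weighted centroid (row, column) of capacitance changes above a threshold."""
    total = row_sum = col_sum = 0.0
    for r, row in enumerate(delta):
        for c, value in enumerate(row):
            if value > threshold:
                total += value
                row_sum += r * value
                col_sum += c * value
    if total == 0.0:
        raise ValueError("no touch detected")
    return row_sum / total, col_sum / total


def classify_two_finger_gesture(before: Sequence[Point], after: Sequence[Point],
                                tolerance: float = 0.5) -> str:
    """Compare finger separation in two frames: 'spread', 'pinch' or 'none'."""
    d_before = math.dist(before[0], before[1])
    d_after = math.dist(after[0], after[1])
    if d_after > d_before + tolerance:
        return "spread"
    if d_after < d_before - tolerance:
        return "pinch"
    return "none"


if __name__ == "__main__":
    grid = [[0.0, 1.0, 0.0],
            [1.0, 4.0, 1.0],
            [0.0, 1.0, 0.0]]
    print(touch_centroid(grid))
    print(classify_two_finger_gesture([(0, 0), (0, 2)], [(0, 0), (0, 5)]))
```

A real gesture interpreter would also track touches over time and take account of the running application, as the text notes; the sketch covers only the geometric core.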

Table 5.2 shows some of the added feature sets across the first four generations of iPhone.

Table 5.2 Four generations of iPhone

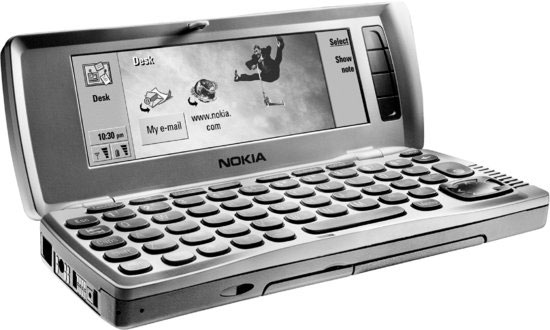

The iPhone 4 has a 3.5-inch diagonal 24-bit colour display with 960 by 640 pixel resolution at 326 ppi, an 800 to 1 contrast ratio, 500 candelas per sq metre brightness and a 5.25-watt hour battery. As a comparison, the Nokia 9210 (shown in Figure 5.6), which was a market-leading smart phone in 2002, had a 4000 colour (12 bit) 110 by 35 mm display at 150 dpi with a refresh driver cycle time of 50 milliseconds (limiting the frame rate to 12 frames per second). The device ran off a 1300-mAh lithium ion battery. A contemporary 2011 Nokia N8 smart phone by comparison has a 16.7 million colour capacitive touch screen display with orientation sensor (accelerometer) and compass (magnetometer), and supports high-definition video recording and playback with 16 GB of memory, all running off a 1200 mAh battery (Figure 6.4 in Chapter 6).

Figure 5.6 Nokia 9210 Introduced in 2002. Reproduced with permission of Nokia Corporation.

The iPad takes the same principles of the iPhone but translates them into a larger tablet form factor with a 9.7-inch diagonal display with 1024 by 768 pixel resolution. The device has back and front cameras and can do high-definition video recording at VGA resolution at up to 30 frames per second output to an HDTV. The device is powered by a 25-watt-hour lithium polymer battery.

5.2.5 Data Capabilities

Data is data but includes text, which includes as we said earlier Twitter and related text-based social-networking entities.

Much of the narrative of Chapter 5 and other chapters in our original 3G Handset and Network Design centred on the transition from delivery bandwidth added value to memory bandwidth added value.

This happened much as predicted but we missed the significance of search algorithms as a key associated enabling technology. This had been spotted in 1998 by a newly incorporated company called Google, now worth upwards of $150 billion and delivering content from over one million servers around the world, a topic we return to in our later chapters on network software.

Text is well documented as being a surprise success story of the mobile phone sector with the highest value per delivered bit of all traffic types and the lowest per bit delivery cost.

However, another chapter (literally) is unfolding in terms of text-based value with telecoms as part of the delivery process – the e book, a product sector created by the electrophoretic display. Electrophoretic displays were described in Chapter 5 of 3G Handset and Network Design but at that stage (2002) were not used in mainstream consumer appliance products. Electrophoretic displays do not have a backlight but rely on reflected light. This means they work well in sunlight or strong artificial light. Electrophoretic displays also do not consume power unless something changes. For this reason they are often described as bistable displays.

In an electrophoretic bistable display, titanium oxide particles about a micrometre in diameter are dispersed in a hydrocarbon oil into which a dark-coloured dye is introduced with surfactants and charging agents that cause the particles to take on an electric charge. This mixture is placed between two parallel, conductive plates. When a voltage is applied the particles migrate electrophoretically to the plate bearing the opposite charge. The particles that end up at the back of the display appear black because the incident light is absorbed by the dye; those at the front appear white because the light is reflected back by the titanium oxide particles.

These displays have enabled a new product sector to evolve, the e book, with the Kindle as, arguably, the exemplar product in the sector. Table 5.3 shows the typical performance today of one of these displays.

Table 5.3 Electrophoretic display

| Parameter | Value |
| --- | --- |
| Display type | Reflective electrophoretic |
| Colours | Black, white and grey-scale |
| Contrast ratio | 10 : 1 minimum |
| White state | 70 minimum |
| Dark state | 24 maximum |
| Bit depth | 4-bit grey, 16 levels |
| Reflectance | 40.7% |
| Viewing angle | Near 180 degrees |
| Image update time | 120 milliseconds |
| Size | 2 to 12 inches (diagonal) |
| Thickness | 1.2 mm |
| Resolution | Better than 200 dpi |
| Aspect ratio | Typically 4 : 3 |

The Kindle 1 was introduced at a Press Conference hosted by Jeff Bezos of Amazon on 19 November 2007 as a product that ‘kindles’ (sets fire to) the imagination (and sells books via Amazon in the process). The Kindle 2 was launched in February 2009, moving from a 4-level to 16-level grey display and packed into a substantially slimmer form factor.

Initial market launches were in the USA. The device had a CDMA 1xEV-DO transceiver supplied by Qualcomm. Later versions for Europe and rest of the world are 3G enabled.

The device can hold up to 3500 books; everything is free except the books, of which there are 650 000 to choose from, plus autodelivered newspapers, magazines and blogs. Some of the books are free as well. It takes about 60 seconds to download a book and your book collection is backed up remotely. Extracts can be shared with friends via an integrated Twitter or Facebook function. The QWERTY keyboard can also be used to annotate text and there is an in-built dictionary and access to Wikipedia plus an ability to edit PDF documents.

The differences between the Kindle 2 and the Kindle 1 include a redesigned keyboard. The ambition of the Kindle and other e books is to reinvent and enhance the reading experience and change the economics of book distribution by reducing the cost of delivery.

On 27 January 2011 Amazon.com Inc. announced a 36% rise in revenue for the final quarter of 2010 with revenue of $12.95 billion and a net income of $416 million or 91 cents per share compared to $384 million or 85 cents per share a year earlier.

Analysts were disappointed and suggested the costs associated with the Kindle were having an overall negative impact on the business, with each unit being shipped at a loss once product and distribution costs including payments to wireless carriers were factored in. Amazon has never published sales figures for the device, though it stated in a Press Conference that it had shipped more Kindle 3 units than the final book in the Harry Potter series. Additionally, operators are beginning to sell advertisement-supported Kindles with 3G connectivity at the same price as WiFi-only models.15

Given that this is a business with a market capitalisation of $90 billion it can be safely said that some short-term pressure on margins will probably turn out to be a good longer-term investment and this may well be an example of short-term investor sentiment conflicting with a sensible longer-term strategic plan. Only time will tell.

Time will also tell if other bistable display options prove to be technically and commercially viable. Qualcomm, the company that provides the EVDO and now 3G connectivity to the Kindle, has invested in a MEMS-based bistable display described technically as an interferometric modulator and known commercially by the brand name Mirasol.

The display consists of two conductive plates one of which is a thin-film stack on a glass substrate, the other a reflective membrane suspended over the substrate. There is an air gap between the two. It is in effect an optically resonant cavity. When no voltage is applied, the plates are separated and light hitting the substrate is reflected. When a small voltage is applied, the plates are pulled together by electrostatic attraction and the light is absorbed, turning the element black. When ambient light hits the structure, it is reflected both off the top of the thin-film stack and off the reflective membrane. Depending on the height of the optical cavity, light of certain wavelengths reflecting off the membrane will be slightly out of phase with the light reflecting off the thin-film structure. Based on the phase difference, some wavelengths will constructively interfere, while others will destructively interfere. Colour displays are realised by spatially ordering IMOD16 elements reflecting in the red, green and blue wavelengths. Given that these wavelengths are between 380 and 780 nanometres the membrane only has to move a few hundred nanometres to switch between two colours so the switching can be of the order of tens of microseconds. This supports a video rate capable display without motion-blur effects. As with the electrophoretic display if the image is unchanged the power usage is near zero.
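The scale of movement involved can be illustrated with a much simplified model. The sketch assumes light at normal incidence and ignores phase shifts at the reflecting surfaces and the detail of the thin-film stack, so that a wavelength is reinforced when the round trip across the air gap is a whole number of wavelengths, giving a gap of mλ/2. The three wavelengths are illustrative choices for red, green and blue, not Mirasol design values.

```python
def cavity_gap_nm(wavelength_nm: float, order: int = 1) -> float:
    """Air gap that reinforces a wavelength in the simplified model: gap = order * wavelength / 2."""
    if order < 1:
        raise ValueError("interference order must be a positive integer")
    return order * wavelength_nm / 2.0


if __name__ == "__main__":
    # Assumed representative wavelengths in nanometres.
    colours = {"red": 650.0, "green": 530.0, "blue": 450.0}
    gaps = {name: cavity_gap_nm(wl) for name, wl in colours.items()}
    for name, gap in gaps.items():
        print(name, gap)
    # Difference in gap between the red and blue elements in this model.
    print(gaps["red"] - gaps["blue"])
```

In this model the gaps differ by around a hundred nanometres between colours, which is consistent with the point that the membrane only needs to travel a very short distance and can therefore switch quickly.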

5.2.6 Batteries (Again) – Impact of Nanoscale Structures

One of the constraints in the design of portable systems is the run time between recharging. Battery drain is a function of all or certainly most of the components and functions covered in this chapter.

Batteries are a significant fraction of the size and weight of most portable devices. The improvement in battery technology over the last 30 years has been modest, with the capacity (watt-hours per kilogram) for the most advanced cells increasing only by a factor of five or six, to the present value of 190 Wh/kg for lithium-ion. Other performance metrics include capacity by volume, the speed of charge and discharge and how many duty cycles a battery can support before it fails to hold a charge, to recharge or to discharge. Self-discharge rates also vary depending on the choice of anode and cathode materials and electrolyte mix. For example, lithium-ion batteries using a liquid electrolyte have a high energy density but a relatively high self-discharge rate, about 8% per month. Lithium polymer batteries self-discharge at about 20% per month. As stated in Chapter 3 high-capacity batteries can have high internal resistance, which means they can be reluctant to release energy quickly. Lead acid batteries for example can deliver high current on demand, as you will have noticed if you have ever accidentally earthed a jump lead. Using alternative battery technologies such as lithium (same capacity but smaller volume and weight) requires some form of supercapacitor to provide a quick-start source of instant energy.
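To put the two self-discharge figures side by side, the sketch below compounds a fixed monthly loss over several months. It assumes the rate stays constant from month to month, which is a simplification, and the function name is ours.

```python
def remaining_fraction(monthly_loss, months):
    """Fraction of charge left after compounding a fixed monthly self-discharge."""
    return (1.0 - monthly_loss) ** months


# Monthly rates are the figures quoted in the text.
for name, loss in (("lithium-ion, liquid electrolyte", 0.08),
                   ("lithium polymer", 0.20)):
    print(name, [round(remaining_fraction(loss, m), 2) for m in (1, 3, 6)])
```

On this simple model a cell losing 8% per month retains roughly three fifths of its charge after six months, whereas a cell losing 20% per month retains roughly a quarter.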

The University of Texas17 for example is presently working on nanoscale carbon structures described generically as graphene that act like a superabsorbent sponge. This means that a supercapacitor could potentially hold an energy capacity similar to lead acid with a lifetime of 10 000 charge–discharge cycles. Although automotive applications are the most likely initial mass market application there may be scaled down applications in mobile devices that require intermittent bursts of power.

Energy density for more mainstream power sources in general is presently improving at about 10% per year. The energy density of high explosive is about 1000 Wh/kg so as energy density increases the stability of the electrochemical process becomes progressively more important.
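The two figures above invite a quick calculation: how long would compound growth of 10% per year take to move from today's lithium-ion value to the energy density of high explosive? The sketch below assumes, purely for illustration, that the 10% rate applies to the 190 Wh/kg lithium-ion figure quoted earlier and stays constant.

```python
import math


def years_to_reach(start_wh_per_kg, target_wh_per_kg, annual_growth):
    """Years of compound growth needed to go from start to target."""
    return math.log(target_wh_per_kg / start_wh_per_kg) / math.log(1.0 + annual_growth)


# 190 Wh/kg and 1000 Wh/kg are the figures quoted in the text.
print(round(years_to_reach(190.0, 1000.0, 0.10), 1))
```

Under these assumptions the answer is a little over 17 years, which gives a sense of the timescale over which electrochemical stability becomes a first-order design issue.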

The lead acid battery was invented by Gaston Planté in 1859. Batteries store and release energy through an electrochemical oxidation–reduction reaction that causes ions to flow in a particular direction between an anode and cathode with some form of electrolyte in between, for example dilute sulphuric acid in a lead acid battery. The first lithium-ion-based products were introduced by Sony in 1990 and have now become the workhorse of the cellular phone and lap top industry.

Lithium is the third element of the periodic table and the lightest metal known on earth. Most of the world’s lithium supply is under a desert in Bolivia dissolved in slushy brine that is extracted and allowed to dry in the sun. It is a highly reactive substance often combined with a combustible electrolyte constrained in a space. Much of the material cost, as much as 90%, is absorbed into overcharge/overdischarge protection. Even so, from time to time there are incidents when batteries ignite. In April 2006 Sony, Lenovo and Dell encountered battery problems and had to do a major product recall. The problem becomes more acute in applications requiring relatively large amounts of energy to be stored, electric vehicles being one example. Nonflammable electrolytes including polymer electrolytes are proposed as one solution. Petrol can be pretty explosive too, so this is one of those technical problems that can almost certainly be solved once industrial disincentives to innovate (sunk investment in petrol engine development) have evaporated. Similar industrial disincentives exist in the telecommunications industry. RF power-amplifier vendors for example are understandably reluctant to produce wideband amplifiers that mean six amplifiers are replaced with one amplifier, particularly if the unit cost stays the same.

But back to chemistry. Interestingly, electrochemical reactions at the nanoscale are different from electrochemical reactions at the macroscale. This opens up opportunities to use new or different materials. The small particle size of nanoscale materials allows short diffusion distances that means that active materials act faster. A larger surface area allows for faster absorption of lithium ions and increased reactivity without structural deterioration.

Shape changing includes structural change or surface modification, sometimes described as flaky surface morphology. A shape or surface change can increase volumetric capacity and/or increase charge and discharge rates.

For example, carbon nanotubes have been proposed as anodes. Silicon is a possible anode material with high capacity in terms of mAh/g, though the capacity fades quickly during initial cycling and the silicon can exhibit large changes in volume through the charge/discharge cycle. Film-based structures might mitigate these effects. Conventional thinking suggests that electrode materials must be electronically conducting with crystallographic voids through which lithium ions can diffuse. This is known as intercalation chemistry – the inclusion of one molecule between two other molecules. Some 3D metal oxide structures have been shown to have a reversible capacity of more than 1000 mAh/g. Low-cost materials like iron and manganese have also been proposed.

One problem with lithium-ion batteries has been cost and toxicity with early batteries using cobalt as a cathode material. Cobalt has now largely been replaced with lithium manganese. In 2002 other battery technologies being discussed included zinc air and methanol cells, but these have failed to make an impact on the mobile phone market for mainly practical reasons.

Batteries have some similarities with memory – both are storage devices and both achieve performance gain through materials innovation, packaging and structural innovation. Memory technologies are addressed in more detail in Chapter 7.

Batteries and memory are both significant markets in their own right both by volume and value. New and replacement sales of batteries into the mobile-phones sector alone are approaching two billion units per year and account for between 4 and 5% of the individual BOM of each phone. Even assuming say between five and ten dollars per phone this is a $10 to $20 billion market.

Memory represents a higher percentage of the BOM at around 9 to 10% though with less year-on-year replacement sales (apart from additional plug-in memory). We address the technology economics of memory in Chapter 7.

5.3 Smart Phones and the User Experience

Battery constraints are of course one of the two caveats that apply to the potential usability and profitability of smart phones. If a smart-phone battery runs flat by lunchtime then two things happen. The user gets annoyed and the operator loses an afternoon of potential revenue. The smarter smart phones deal with this by automatically switching off functions that are not being used. For example, there is no point in doing automatic searches for WiFi networks if the user never uses WiFi. There is no point in having a display powered up if the user cannot see it.

Smart phones as stated earlier can also hog a disproportionate amount of radio access bandwidth, a composite of the application bandwidth and signalling bandwidth needed to keep the application and multiple applications alive.

This does not matter if there is spare capacity, but if a network is running hot (close to full capacity) then voice calls and other user data sessions will be blocked or dropped. When blocked sessions or dropped sessions reach a level at which they become noticeably irritating to the user then customer support and retention costs will increase and ultimately churn rates will increase, which is very expensive for the industry.

As this paragraph was written (17 May 2011) Vodafone’s annual results were announced:18

Vodafone’s annual profits received a smart phone boost despite an overall fall in net profits of 7.8 per cent. Despite a £6 bn+ impairment charge on operations in its European “PIIGS” markets (Portugal, Ireland, Italy, Greece, Spain), a 26.4 per cent boost in mobile data revenue made a significant contribution to its overall profit of £9.5 bn for the year.

This suggests that smart phones are unalloyed good news for operators. However, this is only true if the operator can build additional capacity cost effectively. This may of course include network sharing or acquisition. However this is achieved it is wise to factor opportunity cost into any analysis of device economics. A device is only profitable if the income it generates is greater than the cost of delivery.

5.3.1 Standardised Chargers for Mobile Phones

Anyway, if a user’s battery goes flat at lunchtime, at least he/she will soon be able to borrow a charger to substitute for the one they left at home. 2011 was the year in which a common charger standard was agreed using the micro-USB connector already used for the data connection on many smart phones and finally overcoming the frustration and waste of multiple connection techniques. Hurrah we say.

5.4 Summary So Far

The thesis so far in this chapter is that innovations in display technologies have created two new market sectors.

Electrophoretic displays have created an e book sector that did not previously exist.

Displays with capacitive sensing and touch-screen ‘pinch and spread’ functionality have reinvented and repurposed the smart phone.

The Nokia 9210 featured by us in 2002 was essentially a business tool. Its purpose was to make us more efficient.

The iPhone’s use of capacitive sensing has transformed a business tool into a mass-market product that changes the way that people behave, interact with each other and manage and exploit information. The addition of proximity sensing, ambient light and temperature sensing, movement and direction sensing both at the micro- and macrolevel enables users to interact with the physical environment around them and if used intelligently can help battery life by turning off functions that cannot be, or are not being, used.

Fortuitously, wide-area connectivity is an essential part of the added value proposition of all of these newly emerged or emergent product sectors.

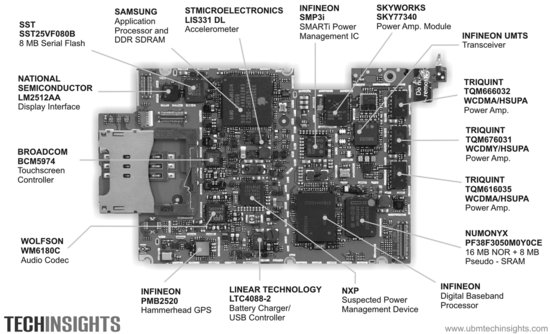

The iPhone has also changed the anatomy of the smart phone. Figure 5.7 shows the main components including RF functions.

The added value of these components is, however, realised by different entities.

Figure 5.7 Handset components. Reproduced with permission of UBM TechInsights.19

Some of the components support functionalities that help sell the phone. In the iPhone the accelerometer would be one example, the GPS receiver another. These components also realise value to companies selling applications from an APP store, for example a gaming application or navigation-based or presence-based application.

The memory functions are either user facing, supporting user applications or device facing, supporting protocol stack or physical layer processing in the phone (covered in more detail in Chapter 7).

The application processor, display interface, touch-screen controller, audio codec, battery charger and USB controller and power-management chip and digital baseband processor all perform functions that are necessary for and/or enhance the user experience and in some cases help sell the phone in the first place, for instance the display and audio functions.

The network operator is, however, predominantly interested in how at least some of this added value can be translated into network value. This brings us to connectivity that is the domain of the transceiver and power-amplifier modules with some help from the baseband processor and all the other components that are not identified but in practice determine the RF connectivity efficiency of the phone.

As with displays and other user-facing functions, RF efficiency and enhanced functionality is achieved through a combination of materials innovation, manufacturing innovation and algorithmic innovation. Manufacturing innovation includes silicon scaling but also provides opportunities to exploit changes in material behaviour when implemented at the nanoscale level.

We have noted the increasing use of MEMS in for example 3-axis gyroscopes. Gyroscopes, accelerometers and ink-jet printer heads all constitute a significant part of the established $45 billion market for MEMS devices.

MEMS (microelectromechanical systems) already determine the performance of some parts of the front end of a mobile phone. The SAW and/or FBAR filters used in the transmit and receive path are an example. NEMS (nanoelectromechanical systems) are a logical next step.

Nanoscale devices are generally referred to as structures sized between 1 and 100 nanometres in at least one dimension. The devices require processes that can be implemented at atomic or molecular scale.20 The deposition of a single layer of atoms on a substrate is one example. Structures exhibit quantum-mechanical effects at this scale. Materials behave differently. As a reminder a nanometre is one billionth of a metre. A microscale device is generally described as a structure sized between 1 and 1000 micrometres in one dimension. A micrometre is one millionth of a metre.

One of the traditional problems with many RF components is that they do not scale in the same way that semiconductor devices scale. Applying NEMS techniques to RF components may change this. But first, let’s remind ourselves of the problem that needs to be solved.

Mobile broadband data consumption is presently quadrupling annually and operators need to ensure that this data demand can be served cost efficiently. Part of the cost-efficiency equation is determined by the RF efficiency of the front end of the phone.

The increase in data consumption is motivating operators to acquire additional spectrum. However, this means that new bands have to be added to the phone. Each additional band supported in a traditional RF front end introduces potential added insertion loss and results in decreased isolation between multiple signal paths within the user’s device. Additionally, a market assumption that users require high peak data rates has resulted in changes to the physical layer that reduce rather than increase RF efficiency. Two examples are multiple antennas and channel bonding.

Economists remain convinced that scale economy in the mobile-phone business is not an issue, despite overwhelming industry evidence to the contrary. This has led to regulatory decisions and standards making that have produced a multiplicity of band plans and standards that are hard to support in the front end of a user’s device. The ‘front end’ is an industry term to describe the in and out signal paths including the antenna, filters, oscillators, mixers and amplifiers, a complex mix of active and passive components that are not always easily integrated. The physicality of these devices means they do not scale as efficiently as baseband devices.

These inefficiencies need to be resolved to ensure the long-term economic success of the mobile broadband transition. The band allocations are not easily changed so technology solutions are needed.

In Chapter 4 we listed the eleven ‘legacy bands’ and 25 bands now specified by 3GPP. The band combination most commonly supported in a 3G phone is the original 3G band at 1900/2100 MHz, described as Band 1 in the 3GPP numbering scheme, and the four GSM bands, 850, 900, 1800 and 1900 MHz (US PCS).

We also highlighted changes to existing allocations, for example the proposed extension of the US 850 band and the related one-dB performance cost incurred. This directly illustrated the relationship between operational bandwidth, the pass band expressed as a ratio of the centre frequency of the band, and RF efficiency.

Additionally in some form factors, for example tablets, slates and lap tops, we suggested a future need to integrate ATSC TV receive and/or DVB T or T2 receiver functionality in the 700-MHz band.

Within existing 3GPP band plan boundaries there are already significant differences in handset performance that are dependent on metrics such as the operational bandwidth as a percentage of centre frequency, the duplex gap and duplex spacing in FDD deployments and individual channel characteristics determined, for example, by band-edge proximity.

It is not therefore just the number of band plans that need to be taken into account but also the required operational bandwidths, duplex spacing and duplex gap of each option expressed as a percentage of the centre frequency combined with the amount of unwanted spectral energy likely to be introduced from proximate spectrum, a particular issue for the 700- and 800-MHz band plans or L band at 1.5 GHz (adjacent to the GPS receive band).
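The metrics named above are straightforward to compute once the band edges are known. The sketch below does so for three FDD bands, using the band edges of 3GPP Bands 1, 5 and 8 as commonly tabulated. The class and method names are ours, and the choice to express the pass band against the uplink centre frequency and the duplex gap against the centre of the whole allocation is an assumption made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FddBand:
    name: str
    ul_low: float   # uplink (handset transmit) lower edge, MHz
    ul_high: float  # uplink upper edge, MHz
    dl_low: float   # downlink (handset receive) lower edge, MHz
    dl_high: float  # downlink upper edge, MHz

    def passband_percent(self) -> float:
        """Uplink pass band as a percentage of its own centre frequency."""
        centre = (self.ul_low + self.ul_high) / 2.0
        return 100.0 * (self.ul_high - self.ul_low) / centre

    def duplex_spacing_mhz(self) -> float:
        """Offset between a transmit channel and its paired receive channel."""
        return self.dl_low - self.ul_low

    def duplex_gap_mhz(self) -> float:
        """Guard region between the top of the uplink and bottom of the downlink."""
        return self.dl_low - self.ul_high

    def duplex_gap_percent(self) -> float:
        """Duplex gap as a percentage of the centre of the whole allocation."""
        centre = (self.ul_low + self.dl_high) / 2.0
        return 100.0 * self.duplex_gap_mhz() / centre


BANDS = [
    FddBand("Band 1 (1900/2100)", 1920.0, 1980.0, 2110.0, 2170.0),
    FddBand("Band 5 (US 850)", 824.0, 849.0, 869.0, 894.0),
    FddBand("Band 8 (900)", 880.0, 915.0, 925.0, 960.0),
]

for band in BANDS:
    print(f"{band.name}: pass band {band.passband_percent():.2f}%, "
          f"spacing {band.duplex_spacing_mhz():.0f} MHz, "
          f"gap {band.duplex_gap_mhz():.0f} MHz "
          f"({band.duplex_gap_percent():.2f}% of centre)")
```

Expressed this way the contrast between bands becomes visible: Band 1 has a duplex gap of 130 MHz whereas the 900-MHz band has only 10 MHz, a much smaller percentage of centre frequency for the duplex filter to work with.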

The performance of a phone from one end of a band to the other can also vary by several dBs. Adaptive-matching techniques can help reduce but not necessarily eliminate this difference. At present, a user device parked on a mismatched channel on the receive path will be, or at least should be, rescued by the handover process but the associated cost will be a loss of network capacity and absorption of network power (a cost to others). So there are two issues there, a performance loss that translates into an increase in per bit delivery cost for the operator, and commercial risk introduced by either late availability or nonavailability of market competitive performance products capable of accessing these new bands.

The market risk is a function of the divide down effect that multiple standards and multiple bands have on RF design resource. The industry’s historic rate of progress with new band implementation has been slow. GSM phones were single band in 1991, dualband by 1993, triband a few years later, quadruple band a few years later and quintuple band a few years later. A five-band phone remains a common denominator product. Self-evidently, if a band per year had been added we would have twenty-band user devices by now. The average has been a new band every three years or so, yet here we have the international regulatory community assuming a transition from a five-band phone to a phone supporting ten bands or more within two to three years at the outside. This could only be done if a significant performance loss and significant increase in product cost was considered acceptable. Note that one operator’s ten-band phone will not be the same as another operator’s, further diluting available RF design and development capability.

The US 700-MHz band plan is particularly challenging for RF design engineers both in terms of user-device design and base-station design. We revisit this topic in Chapter 12. Staying with user-device design considerations, Figure 5.8 illustrates the complexity introduced even when a relatively small subset of 3GPP bands is supported.

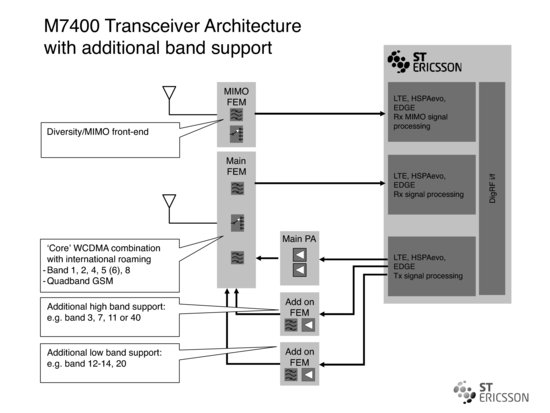

Figure 5.8 Block diagram of multiband multimode RF front end. Reproduced with permission of ST Ericsson.21

Table 5.4 shows the core bands supported and the additional bands and band (either or) combination options. Note the separate MIMO front-end module (FEM) to support the diversity receive path.

Table 5.4 Core band combination with international roaming support

To support progress to or beyond requires substantial RF component innovation. Although most of these components have already been introduced in Chapter 3 we now need to revisit present and future performance benchmarks and how these devices may potentially resolve the multiple band allocation problem described above and related cost and implementation issues.

5.5 RF Component Innovation

5.5.1 RF MEMS

The application of radio-frequency microelectromechanical system microscale devices is being expanded to include MEMS switches, resonators, oscillators and tuneable capacitors and (in the longer term) inductors that potentially provide the basis for tuneable filters.

RF MEMS are not a technical panacea and as with all physical devices have performance boundaries, particularly when used as filters. If any device is designed to tune over a large frequency range it will generally deliver a lower Q (and larger losses) than a device optimised for a narrow frequency band.

An alternative approach is to exploit the inherent smallness of RF MEMS devices to package together multiple components, for example packaging multiple digital capacitors on one die. The purpose of these devices to date has been to produce adaptive matching to changing capacitance conditions within a band rather than to tune over extended multiband frequency ranges.

In an ideal world additional switch paths would be avoided. They create loss and distortion and dissipate power. More bands and additional modes therefore add direct costs in terms of component costs and indirect costs in terms of a loss of sensitivity on the receive path and a loss of transmitted power on the transmit path. MEMS (microelectromechanical system)-based switches help to mitigate these effects.

There is also a potential power handling and temperature cycling issue. The high peak voltages implicit in the GSM TX path can lead to the dielectric breakdown of small structures, a problem that occurred with early generations of SAW filters. Because MEMS devices are mechanical, they will be inherently sensitive to temperature changes. This suggests a potential conflict between present ambitions to integrate the RF PA on to an RFIC and to integrate MEMS devices to reduce front-end component count and deliver a spectrally flexible phone.

The combination of RF power amplification and an RFIC is also problematic with GSM as the excess heat generated by the PA can cause excessive drift in the small signal stages of the receiver, including baseband gain stages and filtering. At the lower levels of UMTS and LTE this becomes less of a problem but still requires careful implementation. The balance between these two options will be an important design consideration. The optimal trade off is very likely to be frequency specific.

For example, if the design brief is to produce an ultralow-cost handset, then there are arguments in favour of integrating the RFPA on to the RFIC. However, this will make it difficult to integrate MEMS components onto the same device.

MEMS are also being suggested as potential replacements for present quartz crystal-based subsystems. The potential to use microelectromechanical resonators has been the subject of academic discussion for almost 40 years and the subject of practical research for almost as long.

The problem with realising a practical resonator in a MEMS device is the large frequency coefficient of silicon, ageing, material fatigue and contamination. A single atomic layer of contaminant will shift the resonant frequency of the device. As with MEMS switches and filters, the challenge is to achieve hermetically robust packaging that is at least as effective as the metal or ceramic enclosures used for quartz crystals, but without the size or weight constraint. There are products now available that use standard CMOS foundry processes and plastic moulded packaging.

While some 2G handsets make do with a lower-cost uncompensated crystal oscillator, 3G and LTE devices need the stability over temperature and time, accuracy and low phase noise that can only presently be achieved using a voltage-controlled temperature-compensated device. Temperature-compensated MEMS oscillators (TCMO), particularly solutions using analogue rather than digital compensation, are now becoming a credible alternative and potentially offer significant space and performance benefits. A MEMS resonator is a few tenths of a millimetre across. A quartz crystal is a few millimetres across, one hundred times the surface area.

MEMS resonator performance is a function of device geometry. As CMOS geometries reduce, the electrode gap reduces and the sense signal and signal-to-noise ratio will improve, giving the oscillators a better phase noise and jitter specification. As MEMS resonators get smaller they get less expensive. As quartz crystals get smaller they get more expensive. MEMS resonators therefore become increasingly attractive over time.22

SAW filters use semiconductor processes to produce combed electrodes that are a metallic deposit on a piezoelectric substrate. SAW devices are used as filters, resonators and oscillators and appear both in the RF and IF (intermediate frequency) stages of present cellular handset designs. SAW devices are now being joined by a newer generation of devices known as BAW (bulk acoustic wave) devices.

In a SAW device, the surface acoustic wave propagates, as the name suggests, over the surface of the device. In a BAW device, a thin film of piezoelectric material is sandwiched between two metal electrodes. When an electric field is created between these electrodes, an acoustic wave is launched into the structure. The vibrating part is either suspended over a substrate and manufactured on top of a sacrificial layer or supported around its perimeter as a stretched membrane, with the substrate etched away.

The devices are often referred to as film bulk acoustic resonators (FBAR). The piezoelectric film is made of aluminium nitride deposited to a thickness of a few tens of micrometres. The thinner the film, the higher the resonant frequency. BAW devices are useful in that they can be used to replace SAW or microwave ceramic filters and duplexers in a single component. BAW filters are smaller than microwave ceramic filters and have a lower height profile. They have better power-handling capability than SAW filters and achieve steeper roll-off characteristics. This results in better ‘edge of band’ performance.

The benefit apart from the roll-off characteristic and height profile is that BAW devices are inherently more temperature resilient than SAW devices and are therefore more tolerant of modules with densely populated heat sources (transceivers and power amplifiers). However, this does not mean they are temperature insensitive. BAW filters and SAW filters all drift with temperature and depending on operational requirements may require the application of temperature-compensation techniques. However, present compensation techniques compromise Q.

Twelve months ago a typical BAW duplexer took up a footprint of about 5 by 5 mm with an insertion height of 1.35 mm. In the US PCS band or 1900/2100 band these devices had an insertion loss of about 3.6 dB on the receive path and 2.7 dB on the transmit path and delivered RX/TX isolation of 57 dB in the TX band and 44 dB in the RX band.

A typical latest generation FBAR duplexer takes up a footprint of about 2 by 2.5 mm and has an insertion height of 0.95 mm max. In the US PCS band or UMTS1900 band these devices have a typical insertion loss of about 1.4 dB on the receive path and 1.4 dB on the transmit path and deliver RX/TX isolation of 61 dB in the TX band and 66 dB in the RX band. More miniaturised versions (smaller than 2 by 1.6 mm) are under development.
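Insertion loss figures in dB are easier to appreciate when converted to the fraction of power that actually gets through the duplexer. The sketch below applies the standard dB-to-power-ratio conversion to the figures quoted above; the function name is ours.

```python
def power_fraction_after_loss(insertion_loss_db):
    """Fraction of input power remaining after an insertion loss given in dB."""
    return 10.0 ** (-insertion_loss_db / 10.0)


# Insertion losses quoted in the text for the older and newer duplexers.
for label, loss_db in (("older duplexer, RX path", 3.6),
                       ("older duplexer, TX path", 2.7),
                       ("newer FBAR duplexer, RX or TX path", 1.4)):
    print(f"{label}: {loss_db} dB -> "
          f"{100.0 * power_fraction_after_loss(loss_db):.0f}% of power passed")
```

On the transmit path the improvement from 2.7 dB to 1.4 dB means that roughly 72% rather than 54% of the power-amplifier output reaches the antenna port, before any other front-end losses are counted.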

SAW filters also continue to improve and new packaging techniques (two filters in one package) offer space and cost savings.

FBAR filters and SAW filters could now be regarded as mature technologies but as such are both still capable of substantial further development. Developments include new packaging techniques. For example, FBAR devices are encapsulated using wafer-to-wafer bonding, a technique presently being extended to miniaturised RF point filters and RF amplifiers within a hermetically sealed 0402 package.

SAW filters are, however, also continuously improving and many handsets and present designs assume SAW filters rather than FBAR in all bands up to 2.1 GHz though not presently for the 2.6-GHz extension band.

Some RF PA vendors are integrating filters with the PA. This allows for optimised (lower than 50 ohm) power matching, though does imply an RF PA and filter module for each band. There are similar arguments for integrating the LNA and filter module as a way of improving noise matching and reducing component count and cost. A present example is the integration of a GPS LNA with FBAR GPS filters into a GPS front-end module.

RF PA vendors often ship more than just the power amplifier and it is always an objective to try and win additional value from adjacent or related functions, for example supplying GaAs-based power amplifiers with switches and SAW and BAW filter/duplexers.

In comparison to SAW, BAW and FBAR filters, RF MEMS realistically still have to be characterised as a new technology in terms of real-life applications in cellular phones and mobile broadband user equipment. RF MEMS hold out the promise of higher-Q filters with good temperature stability and low insertion loss and could potentially meet aggressive cost expectations and quality and reliability requirements.

However, the NRE costs and risks associated with RF MEMS are substantial and need to be amortised over significant market volumes. Recent commercial announcements suggest that there is a growing recognition in the RF component industry that RF MEMS are both a necessary next step and a potential profit opportunity. The issue is the rate at which it is technically and commercially viable to introduce these innovations.

5.5.2 Tuneable Capacitors and Adaptive TX and RX Matching Techniques

The rationale for adaptive matching is that it can deliver TX efficiency improvements by cancelling out the mismatch caused by physical changes in the way the phone is being used. A hand around a phone or the proximity of a head or the way a user holds a tablet or slate will change the antenna matching, particularly with internal and/or electrically undersized antennas. A gloved hand will have a different but similar effect.

Mismatches also happen as phones move from band to band and from one end to the other end of a band. TX matching is done by measuring the TX signal at the antenna. In practice, the antenna impedance match (VSWR) can be anything between 3 to 1 and 5 to 1 (and can approach 10 to 1). This reduces to between 1.1 and 1.5 to 1 when optimally matched.
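VSWR figures of this kind translate directly into reflected power and mismatch loss through the standard transmission-line relations. The sketch below applies them to a well-matched case and to progressively worse mismatches; the function names are ours, and the calculation assumes a lossless line between the amplifier and the antenna.

```python
import math


def reflection_coefficient(vswr):
    """Magnitude of the voltage reflection coefficient for a given VSWR."""
    return (vswr - 1.0) / (vswr + 1.0)


def reflected_power_fraction(vswr):
    """Fraction of forward power reflected back from the mismatch."""
    return reflection_coefficient(vswr) ** 2


def mismatch_loss_db(vswr):
    """Loss in delivered power, in dB, caused by the mismatch alone."""
    return -10.0 * math.log10(1.0 - reflected_power_fraction(vswr))


for vswr in (1.5, 3.0, 5.0, 10.0):
    print(f"VSWR {vswr}:1 -> {100.0 * reflected_power_fraction(vswr):.0f}% reflected, "
          f"{mismatch_loss_db(vswr):.2f} dB mismatch loss")
```

A VSWR of 3 to 1 reflects a quarter of the forward power, whereas 1.5 to 1 reflects only 4%, which is the gain that adaptive matching is trying to capture.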

Adaptive matching of the TX path is claimed to realise a 25% reduction in DC power drain in conditions where a severe mismatch has occurred and can be corrected. However, in a duplex spaced band matching the antenna on the TX path to 50 ohms could produce a significant mismatch on the RX path.

Ideally therefore, the RX path needs to be separately matched (noise matched rather than power matched). This is dependent on having a low-noise oscillator in the handset such that it can be assumed that any noise measured is from the front end of the phone rather than the oscillator. Matching can then be adjusted dynamically. Note that low phase noise is also needed to avoid reciprocal mixing and the desensitisation that results from it.

Adaptive matching is by present indications one of the more promising new techniques for use in cellular phones. The technique is essentially agnostic to the duplexing method used. It does, however, offer the promise of being able to support wider operational bandwidths though this in turn depends on the tuning range of the matching circuitry, the loss involved in achieving the tuning range and the bandwidth over which the tuning works effectively.

Note that most of these devices and system solutions depend on a mix of digital and analogue functions. A strict definition of tunability would imply an analogue function, whereas many present device solutions move in discrete steps – eighty switched capacitors on a single die being one example. Of course if the switch steps are of sufficiently fine resolution the end result can be very much the same.
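The point about step resolution can be made concrete with a toy model of a switched capacitor bank. The sketch below assumes a fixed minimum capacitance plus eighty identical unit capacitors that can each be switched in parallel. The bank structure and the capacitance values are hypothetical and are not taken from any particular device.

```python
def nearest_setting(target_pf, c_min_pf=0.5, c_unit_pf=0.05, n_units=80):
    """Return (units_switched_in, capacitance_pf) closest to target_pf.

    Hypothetical bank: c_min_pf fixed, plus n_units switchable capacitors
    of c_unit_pf each, all in parallel.
    """
    ideal_units = (target_pf - c_min_pf) / c_unit_pf
    # Clamp to the range the bank can actually provide.
    units = min(max(round(ideal_units), 0), n_units)
    return units, c_min_pf + units * c_unit_pf


for target in (0.3, 1.23, 2.0, 6.0):
    units, achieved = nearest_setting(target)
    print(f"target {target} pF -> {units} units, {achieved:.2f} pF, "
          f"error {abs(achieved - target):.3f} pF")
```

Within the range of the bank the error never exceeds half a unit step, so a sufficiently fine step behaves much like a continuously tuneable element. Outside the range the error grows without limit, which is the tuning-range constraint noted above.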

Some informed industry observers consider that a tuneable front end, defined strictly as a well-behaved device that can access any part of any band anywhere between 700 MHz and 4.2 GHz, could be twenty years away. This raises the question of what the difference would be between, say, a twenty-five-band device in twenty years’ time (assuming one band is added each year) and a device with a genuinely flexible 700 MHz to 4.2 GHz front end. From a user-experience perspective the answer is probably not a lot, but the inventory management savings of the infinitely flexible device would be substantial, suggesting that this is an end point still worth pursuing.

5.5.3 Power-Amplifier Material Options

RF efficiency is determined by the difficulty of matching the power amplifier to the antenna or multiple antennas and to the switch path or multiple switch paths across broad operational bandwidths. However, transmission efficiency is also influenced by the material and process used for the amplifier itself.

Commercialisation of processes such as gallium arsenide in the early 1990s provided gains in power efficiency to offset some of the efficiency loss implicit in working at higher frequencies, 1800 MHz and above, and needing to preserve wanted AM components in the modulated waveform.

In some markets, GSM for example, CMOS-based amplifiers helped to drive down costs and provided a good trade-off between cost and performance, but for most other applications GaAs provided a better cost/performance compromise.

This is still true today. WCDMA or LTE is a significantly more onerous design challenge than GSM but CMOS would be an attractive option in terms of power consumption, cost and integration capability if noise and power consumption could be reduced sufficiently.

Noise can be reduced by increasing operating voltage. This also helps increase the passive output matching bandwidth of the device. However, good high-frequency performance requires current to flow rapidly through the construction of the base area of the transistor. In CMOS devices this is achieved by reducing the thickness of the base to micrometres or submicrometres but this reduces the breakdown voltage of the device.

The two design objectives, higher voltage for lower noise and a thinner base for higher electron mobility, are therefore directly opposed. At least two vendors are actively pursuing the use of CMOS for LTE user devices but whether a cost/performance crossover point has been reached is still open to debate. Low-voltage PA operation is also a direct contributor to PA bandwidth restriction. Because it is output power that is specified, low supply voltages force the use of higher currents in the PA transistors. This can only be done by designing at low impedances, significantly below the nominal 50 ohms of radio interfaces.

Physics requires that impedance value shifts happen with bandwidth restrictions: larger impedance shifts involve increasingly restricted bandwidths. At a fixed power, broadband operation and low-voltage operation are in direct opposition.
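The link between supply voltage and impedance can be illustrated with the idealised load-line approximation, R = V²/(2P), which gives the load resistance a transistor needs to see in order to deliver a power P from a peak voltage swing V. The sketch below is a rough illustration only: it ignores the knee (saturation) voltage by default, and the 1 W output power and the supply voltages are assumed values rather than figures from any particular device.

```python
def load_resistance_ohms(p_out_w: float, v_supply: float, v_sat: float = 0.0) -> float:
    """Ideal load-line resistance for a given output power and supply voltage."""
    return (v_supply - v_sat) ** 2 / (2.0 * p_out_w)

def transformation_ratio(r_load: float, z0: float = 50.0) -> float:
    """Impedance ratio the output matching network must provide up to z0."""
    return z0 / r_load

P_OUT_W = 1.0  # assumed output power (30 dBm), for illustration only
for v_supply in (3.4, 5.0, 10.0):  # assumed supply voltages
    r = load_resistance_ohms(P_OUT_W, v_supply)
    print(f"{v_supply:4.1f} V supply: load {r:5.2f} ohms, "
          f"transformation to 50 ohms {transformation_ratio(r):.2f}:1")
```

Under these assumptions a 3.4 V supply needs a load of under 6 ohms, a transformation of more than 8:1, whereas at 10 V the load line is already 50 ohms and no transformation is needed. The larger the ratio, the narrower the bandwidth over which a passive matching network can hold the match.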

Gallium arsenide in comparison allows electrons to move faster and can therefore generally deliver a better compromise between efficiency, noise and stability, a function of input impedance. However, the material has a lower thermal conductivity than silicon and is more brittle. This means that it can only be manufactured in smaller wafers, which increases cost. The material is less common than silicon, the second most abundant element in the Earth’s crust, and demands careful and potentially costly environmental management. At the time of writing, gallium arsenide remains dominant in 3G user devices, is expected to stay so for the immediately foreseeable future, and is still the preferred choice for tier 1 PA vendors.

Gallium nitride, already used in base station amplifiers, is another alternative with electron mobility equivalent to GaAs but with higher power density, allowing smaller devices to handle more power. Gallium nitride is currently used in a number of military applications where efficient high-power RF is needed. However, gallium nitride devices exhibit memory effects that have to be understood and managed.

Silicon germanium is another option, inherently low noise but with significantly lower leakage current than GaAs and silicon. However, an additional thin base deposition of germanium (a higher electron mobility material) is required, which adds cost. Breakdown voltage is also generally low in these devices.

5.5.4 Broadband Power Amplifiers

Irrespective of the process used, it is plausible that a greater degree of PA reuse may be possible. For LTE 800 to use the same power amplifier as LTE 900 would imply covering an operational bandwidth of over 100 MHz (810 to 915 MHz) at a centre frequency of 862 MHz.

This is 11.6% of the centre frequency. PA operational bandwidths of up to 20% of centre frequency are now possible, though with some performance compromise.23 As a comparison the 1800 MHz band is 4.3%. Any efficiency loss for a UMTS/LTE 900 handset incurred as a consequence of needing to also support LTE 800 would probably be unacceptable. This implies incremental R&D spending in order to deliver an acceptable technical solution. The general consensus is that 15% is OK and 30% is stretching things too far, which would mean a power amplifier covering say 698 to 915 MHz would be unlikely to meet efficiency and/or EVM and/or spectral mask requirements. The PA will also need to be characterised differently for GSM (higher power) and for TDD LTE.
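The percentages above are fractional bandwidths: the span divided by the centre frequency. A minimal sketch of the arithmetic follows. The 810 to 915 MHz and 698 to 915 MHz edges are taken from the text; the 1710 to 1785 MHz edges for the 1800 MHz band are my assumption (the uplink allocation), chosen because they reproduce the 4.3% figure.

```python
def fractional_bandwidth(f_low_mhz: float, f_high_mhz: float) -> float:
    """Span divided by the arithmetic centre frequency."""
    centre = (f_low_mhz + f_high_mhz) / 2.0
    return (f_high_mhz - f_low_mhz) / centre

cases = {
    "LTE 800 + LTE 900 (810-915 MHz)": (810.0, 915.0),
    "1800 MHz uplink (1710-1785 MHz, assumed)": (1710.0, 1785.0),
    "700 MHz to 900 MHz (698-915 MHz)": (698.0, 915.0),
}
for label, (lo, hi) in cases.items():
    print(f"{label}: {100 * fractional_bandwidth(lo, hi):.1f}%")
```

Using the exact 810 and 915 MHz edges gives about 12.2%, slightly more than the 11.6% obtained from the rounded 100 MHz span. The 698 to 915 MHz case comes out at roughly 27%, close to the 30% that is generally considered a stretch too far.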

The power in full duplex has to be delivered through a highly specified (linear) RX/TX duplexer with a TDD switch with low harmonics. The design issues of the PA and switch paths are well understood, but if both FDD and TDD paths need to be supported then the design and implementation become problematic, particularly when all the other existing switch paths are taken into consideration.

Wideband amplifiers are also being promoted where one PA (with suitable linearisation and adaptive matching) can replace two or three existing power amplifiers. The approach promises overall reductions in DC power drain, heat dissipation, cost and board real estate but will need to be optimised for any or all of the process choices.

However, even if this broadband PA problem can be solved, within the foreseeable future each full-duplex band requires an individual duplexer. Connecting one broadband PA to multiple duplexers is a lossy proposition with complex circuitry. To match a good broadband PA, a switched (or tuneable) duplexer is required.

5.5.5 Path Switching and Multiplexing

Unlike power amplifiers that drive current into a low impedance, switches must tolerate high power in a 50-ohm system. If connected to an antenna with impedance other than 50 ohms the voltage will be even higher, commonly above 20 Vpk. The switch is in the area of highest voltage and has the most severe power-handling and linearity requirements with a need to have an IP3 of >70 dBm.
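The voltage figure can be checked with a simple calculation. In a matched 50-ohm system the peak voltage is √(2PZ₀); under mismatch the standing wave can raise the peak by a factor of up to (1 + |Γ|). The sketch below assumes a 33 dBm (2 W) forward power, typical of a GSM low-band transmitter, and assumes the worst case in which the voltage maximum falls at the switch. Both are illustrative assumptions rather than figures from Table 5.5.

```python
import math

def dbm_to_watts(p_dbm: float) -> float:
    """Convert a power in dBm to watts."""
    return 10.0 ** ((p_dbm - 30.0) / 10.0)

def peak_voltage(p_dbm: float, vswr: float = 1.0, z0: float = 50.0) -> float:
    """Worst-case peak RF voltage for a given forward power and load VSWR."""
    v_matched = math.sqrt(2.0 * dbm_to_watts(p_dbm) * z0)
    gamma = (vswr - 1.0) / (vswr + 1.0)
    return v_matched * (1.0 + gamma)  # standing-wave maximum

P_FORWARD_DBM = 33.0  # assumed forward power, for illustration only
for vswr in (1.0, 3.0, 5.0):
    print(f"VSWR {vswr:.0f}:1 -> {peak_voltage(P_FORWARD_DBM, vswr):.1f} V peak")
```

On these assumptions the matched case gives about 14 V peak, and a 3:1 mismatch is enough to take the switch above 20 V peak.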

Table 5.5 gives typical power-handling requirements.

Table 5.5 Power-handling requirements. Reproduced with permission of Peregrine Semiconductor24

Low-voltage FETs can be ‘stacked’ in series for high-voltage handling in the OFF condition, but this requires a low-loss insulating substrate, either GaAs or silicon on sapphire. Apart from power handling, switches must be highly linear and have low insertion loss.
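To see why an IP3 in the region of 70 dBm matters, the usual two-tone rule of thumb can be applied: for two equal tones of power P, each third-order intermodulation product appears at roughly 3P − 2·IP3 (all in dBm). The sketch below is a small-signal approximation that neglects insertion loss; the +20 dBm tone level is an assumed test condition, not a figure from the text.

```python
def im3_dbm(tone_dbm: float, ip3_dbm: float) -> float:
    """Third-order intermodulation product level for two equal tones (dBm)."""
    return 3.0 * tone_dbm - 2.0 * ip3_dbm

TONE_DBM = 20.0  # assumed level of each tone, for illustration only
for ip3 in (60.0, 65.0, 70.0):
    print(f"IP3 {ip3:.0f} dBm -> IM3 product at {im3_dbm(TONE_DBM, ip3):.0f} dBm")
```

Each 5 dB improvement in IP3 lowers the intermodulation product by 10 dB, which is why the switch linearity requirement is stated so aggressively.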

Switch losses have more than halved over the past five years, which has allowed for higher throw counts and cascaded switches, but substantial performance improvements are still needed due to the steadily increasing throw count of the antenna side switch, the addition of a PA side switch and the need to support bands at higher frequencies.

Table 5.6 shows the performance of a nine-throw switch implemented using a silicon on sapphire process. This provides good isolation and the use of CMOS means that the off-chip logic can be integrated, which is generally harder to achieve in GaAs switches. The switch also integrates the low-pass filters.

CMOS scaling combined with finer metal pitch technology and improved transistor performance should, however, realise future benefits both in terms of size and insertion loss: a 0.18-μm process would halve the insertion loss and core area and double the bandwidth. The insertion loss and size benefits are shown below. Note that on the basis of these measurements the insertion loss of silicon on sapphire has now achieved parity with GaAs.

Table 5.6 Silicon on sapphire process versus GaAs insertion loss comparison. Reproduced with permission of Peregrine Semiconductor

Similar flexibility options and cost and performance advantage can be achieved using their proprietary thin-film ceramic material, a doped version of barium strontium titanate (BST). The dielectric constant of the material varies with the application of a DC voltage. The device is claimed to have a Q of 100 at 1 GHz and better than 80 at 2 GHz, and is claimed to be highly linear and provide good harmonic performance with low power consumption, high capacitance density, an IP3 of greater than 70 dBm, fast switching speed and low current leakage.

5.6 Antenna Innovations25

5.6.1 Impact of Antenna Constraints on LTE in Sub-1-GHz Bands

The design of the antenna system for LTE mobile devices involves several challenges, in particular if the US 700 MHz bands (Bands 12, 13, 14, 17) have to be supported alongside the legacy cellular band frequencies in handset-size user equipment. In general, the upper frequency bands (≥1710 MHz) do not create particular difficulties for the antenna design.

The two main challenges are:

- Bandwidth: The antenna must have enough bandwidth to cover the additional part of the spectrum, and this is difficult to achieve in the 700-MHz bands as the size of the device is small compared to the wavelength.

- MIMO (multiple-input and multiple-output): Multiple antennas, at least two, must be integrated in the device to support the MIMO functionality. Below 1 GHz isolation becomes an issue and the correlation between the radiation patterns is high, limiting the MIMO performance.

The maximum theoretical bandwidth available for a small antenna is proportional to the volume, measured in wavelengths, of the sphere enclosing the antenna. A typical antenna for mobile devices, either PIFA or monopole, excites RF currents on its counterpoise, typically the main PCB of the device or a metal frame. The PCB/frame of the device therefore becomes part of the ‘antenna’, and the maximum linear dimension of the device should be used as the diameter of the sphere.

As a consequence, in the low-frequency bands the bandwidth of the antenna is proportional to the third power of the size of the device using the antenna, and as the frequency is reduced the bandwidth reduces quickly. More bandwidth can be obtained, but only at the expense of antenna efficiency.
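The cubic relationship can be made concrete with a simple scaling calculation. The sketch below compares the achievable bandwidth of a device against a reference case, treating bandwidth as proportional to the cube of the device size measured in wavelengths. It gives relative figures only; the 100 mm and 900 MHz reference and the 200 mm tablet dimension are assumed values for illustration, and bandwidth is interpreted here as fractional bandwidth.

```python
def relative_bandwidth(size_mm: float, freq_mhz: float,
                       ref_size_mm: float = 100.0,
                       ref_freq_mhz: float = 900.0) -> float:
    """Bandwidth relative to the reference, assuming BW ~ (size / wavelength)^3.

    Size in wavelengths is proportional to size * frequency, so the speed of
    light cancels out of the ratio.
    """
    electrical_size_ratio = (size_mm * freq_mhz) / (ref_size_mm * ref_freq_mhz)
    return electrical_size_ratio ** 3

print(f"100 mm handset at 750 MHz: {relative_bandwidth(100.0, 750.0):.2f} x reference")
print(f"200 mm tablet at 750 MHz:  {relative_bandwidth(200.0, 750.0):.2f} x reference")
```

On this scaling a 100 mm handset at 750 MHz has a little under 60% of the bandwidth available to the same handset at 900 MHz, whereas doubling the device length more than recovers the loss.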

For a typical handset with length around 100 mm, it can already be difficult to cover the legacy 3GPP Bands 5 and 8 (824–894 MHz and 880–960 MHz), so adding support for an additional 100 MHz (698–798 MHz) at an even lower frequency is a major challenge for small devices; for larger devices, for example tablets, the problem is reduced.

Although a tuneable/switchable antenna can greatly help in meeting the LTE requirements, it comes with its own set of challenges. In particular, the antenna has to satisfy stringent linearity requirements and introduces added cost.

Different choices of active elements are possible. Tuneable digital capacitor arrays can be used to realise ‘variable capacitors’ with ranges from fractions of a picofarad to tens of picofarads. Alternatively, conventional high-linearity RF switches can be used to create switchable antenna functionality. The active elements can be used in the matching circuit, in which case the function partly overlaps with that of the adaptive matching circuits for the PA discussed above, or can be part of the antenna, in which case the active element can be used to change the effective electrical length of the antenna.

In any case, the physical dimension of the antenna cannot be reduced below a certain point even using a tuneable antenna:

- The instantaneous bandwidth of the antenna must be well above that of the channel (20 MHz for bands above 1 GHz).

- If the bandwidth is narrow, a sophisticated feedback loop must be implemented to make sure that the antenna remains tuned correctly in all possible conditions where an external object (head, hand …) could shift the resonant frequency. The feedback loop is typically required if capacitor arrays are used, as the fabrication tolerance can be quite high.

- The above feedback loop is easier to implement in the uplink (UE TX) than the downlink (UE RX); however, the antenna must have enough instantaneous bandwidth so that if it is optimally tuned in the uplink it is automatically also tuned at the paired downlink frequency.

As the bandwidth of the antenna is mostly dictated by the size of the device through some fundamental physical relation, it is unlikely that innovations in materials can bring groundbreaking advancements in miniaturisation.

5.6.2 MIMO