If a number of OSDs have failed and you are unable to recover them with ceph-objectstore-tool, your cluster will most likely be in a state where most, if not all, RBD images are inaccessible. However, there is still a chance that you can recover RBD data from the disks in your Ceph cluster. There are tools that can search through the OSD data structure, find the object files relating to RBDs, and then assemble these objects back into a disk image resembling the original RBD image.

In this section, we will use a tool by Lennart Bader to recover a test RBD image from our test Ceph cluster. The tool recovers RBD images from the contents of Ceph OSDs, without any requirement that the OSD is in a running or usable state. Note that if the OSD was damaged by corruption of the underlying filesystem, the contents of the recovered RBD image may still be corrupt. The RBD recovery tool can be found in the following Git repository: https://gitlab.lbader.de/kryptur/ceph-recovery.

Before we start, make sure you have a small test RBD with a valid filesystem created on your Ceph cluster. Due to the size of the disks in the test environment that we created in Chapter 2, Deploying Ceph with Containers, it is recommended that the RBD is only a gigabyte in size.

We will perform the recovery on one of the monitor nodes, but in practice, this recovery procedure can be done from any node that can access the Ceph OSD disks. As well as being able to access the disks, the recovery server needs sufficient free space to hold the recovered data.
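A quick way to check the space requirement is to compare the free space in the working directory with the size of the image. The following is a minimal sketch, not part of the recovery tool: `REQUIRED_KB` is a hypothetical variable set here to the 1 GB test image, and the real requirement may be higher because the recovered objects and the assembled image both need room.

```shell
#!/bin/sh
# Hypothetical threshold: the 1 GB test image, expressed in KiB.
# Allow extra headroom in practice.
REQUIRED_KB=$((1024 * 1024))

# df -P prints one line per filesystem in the POSIX format;
# with -k, column 4 is the available space in 1024-byte blocks.
AVAIL_KB=$(df -Pk . | awk 'NR==2 {print $4}')

if [ "$AVAIL_KB" -ge "$REQUIRED_KB" ]; then
    echo "OK: ${AVAIL_KB} KiB available in $(pwd)"
else
    echo "WARNING: only ${AVAIL_KB} KiB available, ${REQUIRED_KB} KiB needed"
fi
```

Run it from the directory in which you intend to perform the recovery, since `df` reports on the filesystem that holds the current directory.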

In this example, we will mount the contents of the remote OSDs via sshfs, which allows you to mount remote directories over SSH. In real life, however, there is nothing to stop you from physically inserting the disks into another server, or using whatever other method is required. The tool only needs to be able to see the OSD data directories:

- Clone the Ceph recovery tool from the Git repository:

git clone https://gitlab.lbader.de/kryptur/ceph-recovery.git

The following screenshot is the output for the preceding command:

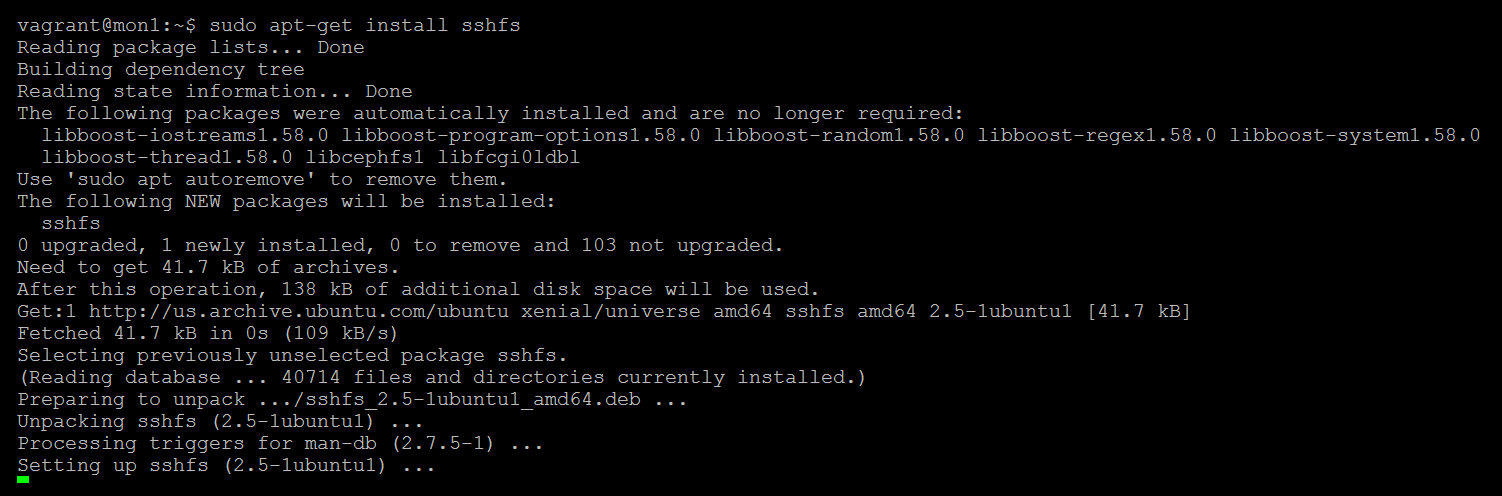

- Make sure you have sshfs installed:

sudo apt-get install sshfs


- Change into the cloned tool directory, and create the empty directories for each of the OSDs:

cd ceph-recovery

sudo mkdir osds

sudo mkdir osds/ceph-0

sudo mkdir osds/ceph-1

sudo mkdir osds/ceph-2
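With more than a handful of OSDs, the same directories can be created in a loop. This is a minimal sketch under stated assumptions: `OSD_IDS` is a hypothetical variable that you would set to the OSD IDs in your own cluster, and the script is run from inside the cloned `ceph-recovery` directory. Prefix the `mkdir` call with `sudo` if that directory is not writable by your user.

```shell
#!/bin/sh
# Hypothetical list of OSD IDs; adjust to match your cluster.
OSD_IDS="0 1 2"

# mkdir -p creates the parent osds directory if it is missing and
# does not fail when a directory already exists.
for id in $OSD_IDS; do
    mkdir -p "osds/ceph-$id"
done

# List the result so the layout can be checked by eye.
ls osds
```

The resulting layout, one empty `osds/ceph-N` directory per OSD, is the same as the one produced by the individual `mkdir` commands above.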