Python supports a lot of database operations using libraries such as psycopg2 and sqlalchemy. Both of them are quite comprehensive and useful when working with databases from a Python interface. However, they have their own paraphernalia, which sometimes gets too much information for simple querying tasks. Fortunately, there is a hidden gem in pandas called read_sql_query method. It does the following:

- Runs simple queries involving select, where, and so on.

- Runs all the queries that return a table or its subset in a tabular form.

- Can't use the INSERT, UPDATE, and DELETE statements.

- The output is a DataFrame and hence all the pandas methods can be used for further data processing.

Let's look at how we can make use of this method. To illustrate this, we will insert a dataset as a table into a database. To do this, you will need to install a PostgreSQL or SQL database to your local directory. If you already have a database set up, you can ignore the table creation process and jump to the queries process.

Let's download the World Happiness 2019 dataset from Kaggle, push it to a db, and perform various DB and pandas operations on it:

import pandas as pd

df = pd.read_csv('F:/world-happiness-report-2019.csv')

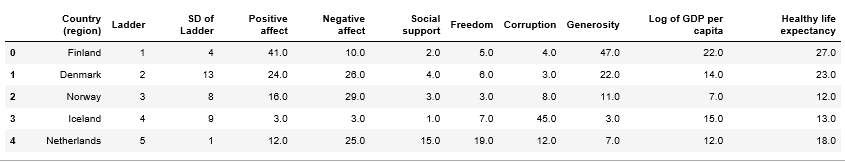

df.head()

The following data shows us the World Happiness report as a DataFrame:

Since we are going to directly create a table from the DataFame that we generated previously, it is necessary to change the column name to postgresql since it does not support column names with spaces:

# rename Country (region) to region

df= df.rename(columns={'Country (region)':'region'})

# push the dataframe to postgresql using sqlalchemy

# Syntax:engine = db.create_engine('dialect+driver://user:pass@host:port/db')

from sqlalchemy import create_engine

engine = create_engine('postgresql://postgres:[email protected]:5433/postgres')

df.to_sql('happy', engine,index=False)

In order to use the read_sql_query method, we need to make a connection with the database using either psycopg2 or sqlalchemy. Once the connection has been established, read_sql_query can be used in its full form:

import psycopg2

try:

connection = psycopg2.connect(user="[db-user_name]",

password="[db-pwd]",

host="127.0.0.1",

port="5433",

database="postgres")

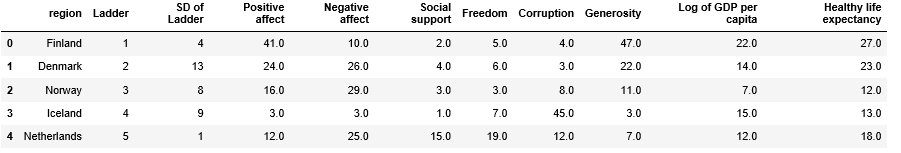

happy= pd.read_sql_query("select * from happy;",con=connection).head()

This results in the following output:

Take a look at the following code. This helps in running a SQL query.

posgrt40 = pd.read_sql_query('select * from happy where "Positive affect" > 40;',con=connection).head()

This results in the following output:

except (Exception, psycopg2.Error) as error :

print ("Error while fetching data from PostgreSQL", error)

The pd.read_sql_query() method returns the table as a DataFrame rather than requiring the programmer to intervene and convert the data into the necessary format.