CHAPTER 4

Software for Medical Systems

1. Introduction

Safety has long been a prominent concern with food, drugs, and devices. Medical devices are regulated by government in every important economy in the world. With the invention of the computer and the ability to embed computers and software in devices, it was only natural that software would become important in medicine. Sometimes the software is a medical device, such as image processing software making a breast cancer diagnosis from a mammogram would be. As software has gained more functionality and touched safety, such as in software systems that keep patients alive, regulatory bodies worldwide have gradually come to realize the importance of regulating software and its construction as well as the more traditional scope of regulating manufacturing processes.

The Food and Drug Administration (FDA), part of the Department of Health and Human Services, is the U.S. government agency with authority over the safety of food, drugs, medical devices, and radiation-producing equipment. To market any medical device or drug in the United States, you must have the approval of the FDA. The rest of the world has similar policies restricting their markets.

The eventual measurement of quality is success in the marketplace. Complex factors are at play in any economy, but by and large if a product meets customer needs they will buy it, and it will win out over its competitors. But this is a lengthy process—the product could spend years in the market and injure many people before it becomes evident that the rewards are not worth the risk. In the 1960s, thalidomide was thought safe and prescribed as an anti-nausea drug. Unfortunately, it was realized too late that if taken at a certain stage of pregnancy, it would cause birth defects. The objective of the regulatory environment is to prevent such disasters before they happen.

The governing law for current good manufacturing practices (cGMP) is found in Section 520 of the Food, Drug and Cosmetic Act. The FDA regulations are a nonprescriptive quality process. The actual regulation derived from the law is not very long. This results in the virtue of comprehensiveness but the vice of generality. Many paths can meet the same goal.

Because the eventual quality of a product is so hard to measure, takes too long, and is too risky, many quality programs do the next best thing, which is to audit compliance to the written policies. What the FDA expects is something similar. To the FDA, you are the experts in your device and your quality programs. Writing down the procedures is necessary—it is assumed that you know best what the procedures should be—but it is essential that you comply with your written procedures. At the same time, the methods cannot be too far off the beaten path, because then you will have to explain how they result in the same safety and effectiveness as standard practices in the rest of the safety-critical industry.

In the wake of political waves seeking to reduce regulation in the U.S. economy, the FDA promises to consider the “least burdensome approach in all areas of medical device regulation” [1]. Companies are free to adopt any policies they wish, but it is still contingent on them to establish with the FDA that the approaches they take will result in a product with adequate safeguards and quality. In practice, few companies are willing to accept the business risk of having their product launch locked up in a regulatory approval cycle.

The actual process for gaining regulatory approval in the United States is known as a premarket submission. Companies prepare documentation about the device, its software, the verification and validation activities, and the labeling that goes with the device. One aspect significant about the labeling is the claims for the device, that is, the conditions under which the device should be used, the types of patients and their illnesses that it should be used with, and the outcomes that should be expected. The claims are central to the regulatory process and the FDA thought process. These formulate customer needs and intended use. The standard is high; any company wishing to sell a device that is supposed to make people healthier must be able to prove it through scientific means. This notion of scientific proof underlies what the regulatory bodies are requesting and why they ask firms to do certain things.

Premarket submission must necessarily be late in the product development process, since the company will be documenting what the product can do. Part of this is to submit the product verification and validation documentation and also to perform the final design review, which occurs when the product design moves from development to manufacturing. The last thing a business could want is to have a product ready to manufacture and sell but held up by the approval process. The effect of this is to make companies conservative in their approach to quality programs so as to avoid a lengthy dialog with the FDA.

Rather like getting a legal opinion, in which the lawyer will only speak to the likelihood of an outcome from a court and cannot predict the final result, the FDA will not tell you beforehand which contents of a submission would be acceptable. FDA spokespersons want to avoid being in the position of having suggested that a method would be compliant before actually seeing the results.

This presents a challenge to the engineer developing software for a medical device, because the specifics of the methods for software construction are not spelled out. I will have more to say about this later, but the rigor of the methods and the amount of documentation required also varies with the “level of concern” for the safety of the software. Many of the methods described elsewhere in this volume are also appropriate for medical devices, and will be found acceptable to the medical regulatory bodies. This chapter hopes to inform the reader of methods the author has found that result in high-quality software and establish that quality to the regulatory bodies.

After all, it is not as if we are trying to get away with something. We all want software that works, software that—in the words of the FDA—is “fit for its intended purpose and meets customer needs.” We are obliged to build safe devices. In part, this is an ethical issue—as good engineers, we don’t want our devices to hurt anybody. But safety is also important to the business because of potential product liability. Practices that will result in the highest-quality software will also be acceptable to the regulatory bodies. For software development, aside from the level of documentation, there are not many practices required by the regulatory bodies over and above what engineers should be doing anyway.

Approaching regulatory bodies in an adversarial relationship is not helpful. They are a fact of life in modern economies; whether we like it or not, they serve a valuable function. Without regulations, markets will engage in a race to the bottom. Even if a company wants to be ethical, they will not remain competitive if their competitors are allowed to cut corners. Regulation establishes a floor for behavior beneath which companies cannot go.

1.1. Verification and Validation

Software verification and validation (V&V) forms a large part of the scope of software development for medical devices. Loosely, V&V is the activity of establishing that the software does what is intended. Often in informal usage, the words “verification and validation” are interchangeable or even redundant. Over the years a type of clarity has emerged, but one could still dispute where the line is drawn between the two.

The FDA definition of software validation is “confirmation . . . that software specifications conform to user needs and intended uses.” The phrases “user needs” and “intended uses” are commonplace in the FDA guidance documents. The FDA definition is related to the principle of showing effectiveness: not only did you accomplish what you set out to do, but your actions were the right thing to do. In other words, what you built met user needs.

Verification has a narrower definition. It is the act of demonstrating that design outputs match design inputs. The FDA sees software development (or any engineering development for that matter—see the discussion about design control in Section 3) as a step-wise refinement of project artifacts until development achieves the final deliverable, executable code. Each step starts with design input. The design is further refined, and the result is the design output. So, for example, one phase would be software coding. Coding starts with the software design as the design input. (The software design itself was the design output of the design phase.) Engineers then write the code that satisfies the design. Verification activities would be anything you did to verify that the code fulfills the intent of the design. This might be demonstration, such as dynamic testing. It might be a code review or inspection, in which the team examines the code and the design and ensures that the code implements the design, or that the design is changed to reflect the realities of the actual implementation. Activities performed in the normal course of software development such as requirements reviews, design reviews, unit testing, static tests, and build audits are all examples of verification.

Once you have passed through all the phases of development, the sum total of verification activities conducted at each phase sustain the conclusion that the software is validated. Verification comprises the individual steps, whereas validation is the sum of the whole.

Hence it is a bit mistaken to think that software validation is something that is done—or can be done—at the end of a project. To meet the standards expected by the regulatory bodies, the software will have had to be built with thorough verification activities right from the start. For this reason, it is difficult to subcontract software, because the subcontractor would have to satisfy regulatory requirements just as if you were implementing the development in house. So the problem becomes one of subcontractor management to regulatory standards. (This is part of the subject of the use of off-the-shelf or third-party software, about which I have more to say later on.)

Example 4.1 Precisely Wrong

While not a software failure, the development of the Hubble space telescope is a stellar example of the difference between validation and verification. The instrument had a troubled history—it was seriously behind schedule, and then was further delayed by holdups in the Space Shuttle program. When NASA finally flew the Hubble, they discovered that the mirror was wrong and could not focus [2].

Now this mirror is one of the most precise objects ever created. It was polished to within 10 nanometers—that is to say, 100 atom diameters—of its specification. This is 4 parts per billion in a 2.4-m mechanical structure. Yet when it made it to space, it didn’t work.

It turned out that a mistake had been made during its manufacture. A reference tool to establish the aspherical form of the mirror had been mismeasured and put in the wrong place. So when the technicians polished the mirror to within 100 atom diameters of precision, they polished it wrong. In other words, the mirror matched its verification—the output matched the input to an amazing degree. But the input was wrong, and the mirror failed at its primary purpose—to take clear pictures of the universe. This is a failure in validation.

(The NASA report [2] is interesting reading for the commonplace failures in the quality system that allowed the mistake to occur. Among those that also apply to software are ignoring the evidence when the system did not go together right, relying on a single measurement, failing to follow the quality plan, having QA people report to the project manger instead of being independent, and failing to specify the expected result, so that inexperienced personnel were unable to tell that something was amiss. I am happy to report that in later missions to the Hubble, astronauts were able to retrofit a focus adjustment device that allowed the telescope to take some of the most spectacular pictures of all time.)

To sum up:

• Verification is showing that you did what you intended to do.

• Validation is showing that what you did was the right thing to do.

What I have described is the meaning of verification and validation at the system level. Confusion on the part of some readers about the definition of verification and validation results from the FDA itself, in its description of software validation in the guidance document. I believe the FDA has overloaded the definition. There is validation, by which the FDA writers mean system validation as I have just described. There is also software validation, which is the sum total of verification activities—the construction of software according to software development policies. Technically, the definition for software validation is the same as any other kind of validation—the software conforms to “user needs and intended uses.” It is just that, in this case, the “user” is the rest of the system. If the software complies with its requirements, it has satisfied its “user’s needs.” Hence one could call, with complete legitimacy, the document with the tests in which you demonstrate that the software meets its requirements the software validation protocol. In fact, at a recent conference devoted to the development of medical device software, I informally polled the audience as to what was called the test protocol which established that the software meets its requirements. Half called it software validation, and the other half called it software verification.

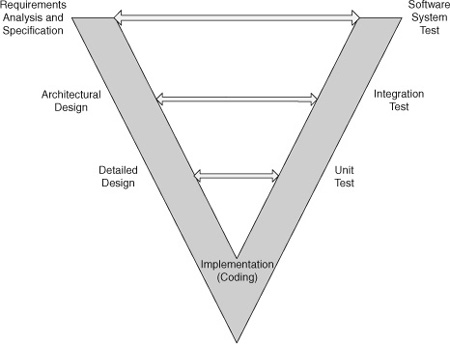

1.2. Life Cycle Model

While the FDA recognizes that “[t]here are a variety of life cycle models, such as . . . waterfall, spiral, evolutionary, incremental” [3], their methods for documenting the software development process assume a waterfall model. This makes sense; the waterfall model may not be accurate, but it is a useful method for describing the deliverables and the activities that occur during software development. (As they say, “All models are wrong; some models are useful.”) I will follow the same practice, partly for reasons of modeling my presentation after the FDA and ANSI methods, but also because the waterfall is a useful way to discuss process. To refresh the reader, the waterfall model is the classical software development life cycle. (See Fig. 4.1.) Software development begins with vague customer needs and wants. These are formalized into requirements during the requirements analysis phase. This is input to the architectural design phase, where the structure of the software is created. With a software architecture in hand, engineers next develop the detailed design. Once all the designs are known, class definitions complete, methods named and prototyped in pseudo code, and a data dictionary written, the engineers can begin the coding phase. After the code is complete, the software enters the test phase, and once the unit, integration, and software system testing is concluded, the software is deployed for use by the customer. After that, it is in the maintenance phase. This development sequence is represented by the light-colored arrows leading to the right in the diagram.

Figure 4.1: Waterfall model of the software life cycle.

The trouble with the waterfall model is that it does not match the reality of the way that systems in general and software in particular is developed. It is rarely possible to define requirements in sufficient detail up front, leave them alone while the team develops the design, and then put all that away and actually start writing code.

After all, requirements originate in the vague wishes of the customers; many things they can imagine or hope for cannot be done. We are constrained by time, resources, technology, competitive intellectual property, and sometimes even by the laws of physics. Moreover, who can imagine that technology, our knowledge, or the marketplace will stay still while we spend months in requirements analysis?

In my experience, we often do not know what we can build until we try to build it. Construction is the real test of requirements. For a software life cycle to have any hope of reflecting reality, it must acknowledge the iterative nature of development. Indeed, more recent descriptions of the waterfall model show backward-pointing arrows between the phases, as you can see in the diagram. The drawing looks complex because you can return to any of the previous phases at any time.

Moreover, the phases are not always that distinct. Requirements sometimes shade into design, and vice versa. For example, if I am writing a custom interface between an embedded controller and a Graphical User Interface running on a laptop, I have quite a bit of design freedom in what the data exchange looks like. But once decided upon, if one or the other components doesn’t precisely comply, the interface won’t work. So is this design or requirements? It depends on your point of view and what you need it for at the moment.

The regulatory bodies allow that you are not restricted to a waterfall model; you are free to choose a different life cycle model. “Medical device software may be produced using any of these or other models, as long as adequate risk management activities and feedback processes are incorporated into the model selected” [3]. So, the risk management, feedback, and quality assurance activities are the keys to being able to assert that the software is valid, not the technical details of the order in which all of this was accomplished.

More modern agile approaches that recognize and take advantage of the naturally iterative unfolding of software development are the ones more likely to succeed. Develop requirements in layers of gradually increasing detail to get an idea of the scope of the project. This enables negotiating the trade-off of features, time, and resources before investing in a huge amount of detailed analysis. Perform an architectural design to understand the responsibilities of subsystems and the way that project task partitioning will allocate across the team. Then implement in stages.

The FDA has been tasked by Congress to consider the “least burdensome approach in all areas of medical device regulation” [1]. They will consider alternatives, such as a process like extreme programming (XP), which de-emphasizes formal requirements analysis and design in favor of user stories. It becomes a function of the level of concern of the software; such methods might be appropriate if there is no risk of harm from the software system. However, when the level of concern is moderate or major, there will be the expectation that methods of sufficient rigor were used to ensure that risk management activities were carried out.

2. The Medical Regulatory Environment

The FDA is the regulatory body in the United States with oversight of medical devices, among other things. Given that the U.S. medical market is a large part of the world’s medical market, medical device manufacturers seeking maximum markets will be interacting with the FDA.

The worldwide standardization body is International Organization for Standardization, or ISO [4]. The name “ISO” is not actually an acronym for anything. It is instead derived from isos, the Greek word for “equal,” as in “isosceles triangle,” and reflects the organization’s aim to equalize standards between countries. More than 150 nations are members, including all of the developed countries, so it is truly international.

Closely related to the ISO is the International Electrotechnical Commission (IEC); in fact, the two have published many standards together. As indicated by the name, IEC is specific to electrical and electronic devices. ISO is more concerned with quality systems for a broad range of products, whereas the IEC has standards for particular devices. (The IEC has a standard for “rumble measurement on vinyl disc turntables,” for example.)

Example 4.2 Guidance Documents

The FDA has a very good website, www.fda.gov, with many useful documents concerning medical device development in general and software for medical systems in particular. The most useful of these are the guidance documents, which serve to further explain the meaning of the regulations.

General principles of software validation. Final guidance for industry and FDA staff. January 11, 2002. Available at: www.fda.gov/MedicalDevices/ |

This is the most important for software development. If you read none of the other guidance documents, you should still read this one. |

Design control guidance for medical device manufacturers. March 11, 1997. Available at: www.fda.gov/MedicalDevices/ |

This document provides detail about the design control process itself, which is of larger scope than just software development. It is a description of good engineering practices that the FDA expects to be followed in the development of any medical device. Software development has to follow the same guidelines, as well as further specialized activities. |

Guidance for the content of premarket submissions for software contained in medical devices. May 11, 2005. Available at: www.fda.gov/MedicalDevices/ |

This document contains the criteria for establishing the level of concern of the software under development. It also describes the documentation necessary for the premarket submission, not just the list of documents but the type of content that they should contain. This will be important if you are responsible for putting together the software documentation for the premarket submission. |

Guidance for industry, FDA reviewers and compliance on off-the-shelf software use in medical devices. September 9, 1999. Available at: www.fda.gov/MedicalDevices/ |

This document tells you what the FDA expects you to do if you use third-party software in your medical device. It also has the most thorough discussion of software hazard analysis among the guidance documents. |

ANSI/AAMI/IEC 62304:2006. Medical device software—Software life-cycle processes. Available from the webstore at www.iso.org/iso/store.htm. |

This is the official international standard. It restates much of the information in the FDA guidance documents, but is crisper and less subject to interpretation. This is a valuable addition to the FDA documents, but does require purchase. |

IEC Medical Standards

The standards important in the medical world begin with IEC 60601:

• IEC 60601-1 Medical electrical equipment—Part 1: General requirements for safety.

• IEC 60601-1-1 Medical electrical equipment—Part 1-1: General requirements for safety—collateral standard: Safety requirements for medical electrical system.

• IEC 60601-1-2 Medical electrical equipment—Part 1-2: General requirements for safety—Section 2: Collateral standard: electromagnetic compatibility—requirements and tests.

• IEC 60601-1-4 Medical electrical equipment—Part 1-4: General requirements for safety—collateral standard: programmable electrical medical systems.

• IEC 60601-1-8 Medical electrical equipment—Part 1-8: General requirements for safety—collateral standard: general requirements, tests, and guidance for alarm systems in medical electrical equipment and medical electrical systems. First edition in August 2003.

• IEC 60601-1-9 Medical electrical equipment—Part 1-9: General requirements for basic safety and essential performance—Collateral standard: requirements for environmentally conscious design. First edition in July 2007.

These are mostly hardware standards that your device would need to comply with in general. IEC 60601-1-4 is the relevant standard for devices containing microprocessors. IEC 60601-1-8 may also be relevant if your software or device has alarms. This document spells out international standards for physical features of alarms, such as the loudness and frequency characteristics of an audible alarm, its cadence for different alarm priorities, and the colors to use for LEDs.

Other IEC standards would come into play if your product used components from the list of IEC standards. For example, if your device used a lithium battery, you would need to comply with IEC 60086-4 Primary batteries—Part 4: Safety of lithium batteries.

Safety Laboratory Markings

Safety markings, such as the UL, CSA, and CE marks, are much more relevant to overall electrical system safety than to software concerns. Nevertheless, if you are producing an electrical product, some form of a safety mark is required by almost all countries. Underwriters Laboratory (UL) is preferred in the United States, CSA is the Canadian standard, and the CE mark is required for EU countries.

UL is largely a testing standard. The CE mark, on the other hand, can be obtained through documenting a quality process. It is not necessary to have ISO 9000 certification to get a CE mark, but if you do, you will have completed 80% of the effort for getting the mark [5].

One difference between the CE mark and the FDA is that it is sufficient to show that a product is safe for the CE mark. The FDA creates a greater burden of proof by requiring that the product also be shown to be effective; in other words, that using the product results in some benefit to the user. This sometimes results in products being released in Europe before the United States, because effectiveness is much harder to demonstrate.

Approval for the CE is similar to ISO certification. You find (and pay) a notified body (such as TUV or BSI) to represent you to the competent authority—a representative arm of the EU member-state that monitors the activities of the notified bodies. This body will assess your product and company in much the same way that the FDA would assess it, with greater scrutiny accruing to higher-risk devices. The same kinds of testing and design documentation would be suitable for both.

Worldwide regulatory bodies embarked upon a major effort in the 1990s to harmonize medical device regulatory requirements. This has taken the form of the Global Harmonization Task Force (GHTF); it includes “representatives of the Canadian Ministry of Health and Welfare; the Japanese Ministry of Health and Welfare; FDA; industry members from the European Union, Australia, Canada, Japan, and the United States, and a few delegates from observing countries” [6]. There are four subgroups; the task force working on harmonizing quality-system requirements has had the most success.

Many countries defer to ISO standards for quality systems and IEC standards for safety. The regulatory bodies have established cross-approval processes, so generally what has been approved by one agency is acceptable to the other, with perhaps some tweaking. For example, the FDA requires a risk analysis but does not specify the method; the European agencies expect the risk analysis to be conducted per ISO 14971. Following the precepts of ISO 14971 is completely acceptable to the FDA. Generally then, since you know you will need to follow ISO 14971 for Europe, you might as well use the same analysis and structure to satisfy the FDA. “ISO 9001 is the most comprehensive because it covers design, production, servicing, and corrective/preventive activities. The FDA GMP requirements are slightly more extensive because they include extensive coverage of labeling, [sic] and complaint handling” [7].

There are a couple of significant reasons the FDA regulations are still separate from the ISO standards. In the first place, the FDA regulations and guidance documents are free. The ISO/ANSI/IEC documents are protected by copyright and require purchase, typically around $100. Charging for the standards is even more inconvenient than it seems. A full set is not cheap, and, although they are available as electronic documents, you cannot create more than one copy. A company would prefer, of course, to share them to lower the cost, but they can’t just print them whenever someone needs to consult a copy.

The second issue is that “FDA does not believe that ISO 9000:1994 alone is sufficient to adequately protect the public health. . . . Through the many years of experience enforcing and evaluating compliance with the original CGMP regulation, FDA has found that it is necessary to clearly spell out its expectations” [8]. This is because the FDA sees itself as more of an enforcement agency than ISO seems to be. Thus, the FDA wants to define the practices that its staff expects to see, not just recommend good practice.

In the next section, I provide an overview of quality systems from the perspective of both FDA regulations and ISO simultaneously because there is much overlap. At the same time, this provides an opportunity to point out the ways that they differ. Details of the quality system and the submission process are really the bailiwick of a company’s regulatory compliance personnel. However, there are occasions when the quality system interfaces to software development, so it is useful to know the regulatory context in which a medical manufacturer must operate.

2.1. Worldwide Quality System Requirements

The actual regulations for medical manufacturers doing business in the United States are codified in U.S. 21 Code of Federal Regulations (CFR) 820, known as the QSR (for quality systems regulations). The ISO general quality system requirements are described in ISO 9001:2001. There is a supplement to the general standard in ISO 13485:2003, which comprise additional requirements for medical devices. Together, these two international standards are equivalent to the QSR. There are no extra requirements in ISO 13485 that have relevance to design engineers, or to software engineers in particular, that have not already been covered by the FDA’s QSR.

The regulations are very high-level requirements that medical manufacturers must implement in order to have satisfactory quality systems. Many of these requirements are specific to manufacturing processes, such as device configuration management, documentation control, process control, and material handling. Developers of medical device software will be only indirectly concerned with many of these policies, although the software development process has to fit into the general scheme. Of greatest interest to software engineers is §820.30, Design Control (Subpart C).

Most of this chapter is focused on design control and its application to medical software development. But first I wish to briefly cover general principles of the regulations for medical manufacturers and their interface to software development. These are the sorts of practices that any medical manufacturer will have to follow.

The general policies are known as good manufacturing practices, or GMP. (Since this has been revised in 1996, the current policies are known as cGMP for current good manufacturing practices.) In other words, the QSR codifies cGMP. These are principles of mature product development and manufacturing firms; while medical manufacturers must comply, most manufacturers would benefit from following these practices, for they by and large make sense for manufacturing quality products.

The QSR and the ISO standard cover much the same ground, but the organization of the standards differs slightly. I will use the organization of the QSR, summarizing the policies of both organizations in the following sections.

2.2. Subpart A: General Provisions

The first section in both documents is an introduction that covers scope and definitions, plus some legal information. The applicability of the QSR is “the design, manufacture, packaging, labeling, storage, installation, and servicing of all finished [medical] devices intended for human use” [8]. “Finished” means that it does not apply to companies which manufacture parts that go into a finished device, but they are nevertheless encouraged to follow the same guidelines, where appropriate.

2.3. Subpart B: Quality System Requirements

This part of the QSR and the ISO standard cover organizational principles. Any company that wishes to manufacture medical devices must establish a quality system. The term “establish” has special meaning to the FDA—it means define, document, and implement. The documentation step is important. Companies must document and follow their processes.

The quality system must state a quality policy, and the company has to have the organization to support the quality system. This includes trained personnel, with sufficient time to do the work. There needs to be enough time in the production schedule to allow for inspection, testing, and verification. The quality organization includes an executive to oversee the quality system. One of the executive’s responsibilities is management review. The FDA is more specific and has more details about management review of the quality system than ISO. The FDA encourages an internal review to evaluate compliance, but staff will not ask to see the results under normal circumstances, in order not to discourage forthright reports. One part of review is internal audits of the quality system. Normally, these would not be shown to the FDA. ISO-notified bodies such as the TUV are less adversarial, and can ask to see internal audits, since they are seeking to work with the manufacturer to create compliance.

Both QSR and ISO require the establishment of a quality plan. The guidance from ISO is much more specific; the FDA in this case accepts the ISO standards. This is a case where ISO goes further than FDA and the FDA finds that completely acceptable. In another case, personnel, the FDA goes further than ISO. The FDA specifically instructs that personnel who build or test the device be made aware of defects that may occur if they don’t do their jobs right, or the types of error they may see so as to be on the lookout for quality problems.

2.4. Subpart C—Design Controls

The next part of the QSR, Subpart C, concerns design controls. This is the most pertinent part of the regulation for software engineers. In fact, the point of this chapter is to explain how a software development process can be designed to comply with Subpart C. It deserves a section of its own, so discussion of design controls appears in a subsequent expanded section, and in the meantime I press on with an overview of cGMP.

2.5. Subpart D—Document Controls

Document control is an important function in any manufacturing organization: it is the principal method for controlling change. Controlling change is necessary because the reason for any change in a medical device must be understood. In some cases, the change has to be validated, that is to say, it is not sufficient to claim “new and improved”; you must actually be able to show “new and improved” with actual clinical data.

The purpose of document control is to collect all descriptive documents that say how to make a product. These are the drawings for how to make pieces of it, the vendor list of whom to buy components from, the bill of materials (BOM) that tells you how many of what to build the product, and the standard operating procedures (SOPs) that tell you how to manufacture it. The SOPs extend to describing the process for controlling the process, that is, the procedures to change the documents in document control, or other procedures having to do with the quality system itself.

Note: Software has its own set of SOPs that describe the software development process. These are an extension of the quality system SOPs.

Changes to the document set are often known as engineering change orders (ECOs). Various elaborate schemes for managing the ECO process exist, but they are beyond the scope of this chapter. There are electronic systems to manage documents as data elements in a database, complete with electronic signatures. They are expensive and complex, but convenient in many ways. If you are looking for one (or building your own), keep in mind the fact that, since they are part of the quality system, electronic document control systems must themselves be validated. See Section 6.1, Software of Unknown Provenance (SOUP), for guidelines of how conduct this validation. They must also comply with 21 CFR 11, Electronic Records; Electronic Signatures, which are policies to ensure the validity of electronic records.

The ultimate deliverable for document control is the device master record (DMR). This is the sum total of documentation that tells how to build the product. The goal would be to have enough detail (including capturing cultural knowledge, which would then no longer be cultural) that someone else could build the product from the documentation alone.

Document control is especially significant to the FDA since the documents support the ability to trace all of the materials that went into manufacturing a product. In the event of a product recall, the FDA would want to be able to trace all the concerned lot numbers so that deviating product could be sequestered or pulled from the market. You as the manufacturer would want to have enough detail retained about configurations to limit the extent of the recall.

2.5.1. The Interface of Software to Document Control

The interface among software development, software configuration management (SCM), and document control will vary with the business and the applications. Software has its own need for configuration management (see Section 3.11, Software Configuration Management Methods) that needs to accommodate a huge number of changes with a limited amount of overhead. Most of the time the ECO process is enormously more cumbersome than software development could use and still stay productive.

The way I’ve seen it work is for the interface between SCM and document control to be at very specific boundaries. For example, documents that are used outside the software group are good candidates for release to document control. The alphabet soup of development documents should be in document control—they define what the product does as much as the executable. Most documentation that will be included in the premarket submission will be in document control.

When software is a component in the medical device, the released software is treated as a virtual part under document control. It is given a part number and a place in the BOM. Revising the software requires an ECO.

If software is the medical device and your firm is not otherwise a manufacturer, other systems may work for you. It will, as always, be a function of the level of concern for the software. What is required is compliance with control methods. You have to be able to ensure that you know exactly what software configuration went into the device, and that a change cannot have occurred without proper approvals and validations, as necessary.

2.6. Subpart E—Purchasing Controls

Purchasing controls are procedures to make sure that purchased products conform to their specifications. The FDA is not regulating the component suppliers, so they explicitly require the device manufacturers to exercise control themselves. This mostly has to do with raw materials and components, and so on, but where it is important to software is that it also covers contractors and consultants, who may be providing software. It would also include off-the-shelf (OTS) software.

Firms who use contractors must document the selection of a contractor and why the contractor is able to perform the job. They must also define the control mechanisms they will use to ensure quality, and review the work of the contractor at intervals to confirm that requirements are being met. Finally, the contracting company must document and maintain quality records for acceptable contractors.

One form of the purchasing controls is in the data that the company uses to buy the specific items that will go into the medical device and the methods to ensure that what was delivered was what was agreed to. Purchasing controls like this would prevent something like the Chinese toy recall of 2007. For software, for instance, the purchase agreement would need to state which version of an OTS package was being used in the device.

The FDA regulations spell out that a supplier should notify the manufacturer of changes in the product they are providing so that the manufacturer has an opportunity to evaluate how the change may affect safety and effectiveness. They warn that suppliers who don’t notify the manufacturer may be unacceptable. ISO is slightly less strict in this regard, but ISO indicates that it is a good practice.

The degree to which you were to specify an exact version is a function of the safety or effectiveness of the finished device. For example, it may be possible to simply specify a generic version of Windows, such as Windows 2000 or XP. But because Windows comes in so many variants and installations, making testing each combination difficult or impossible, a generic specification is probably not suitable for the moderate or major level of concern, and may be unwise for minor-level-of-concern software.

2.7. Subpart F—Identification and Traceability

The purpose of identification is to prevent the use of the wrong component or material in manufacturing. The method to provide for this is to make each batch or unit traceable with a control number. For software, this implies version labels, although firmware could be identified with a part number on the program storage medium or board.

While it is not part of 21 CFR 820, the FDA also has regulations for tracking which devices go into which patients [9]. This is necessary so that if a problem is found in production, the manufacturer can find the products and warn the recipients or recall if needed. For example, Medtronic recently determined that some defibrillator leads were prone to fracture and issued a voluntary recall. By identifying the patients who had the leads, their doctors can plan to adjust the defibrillator settings at the next visit [10].

2.8. Subpart G—Production and Process Controls

Production and process controls are really a manufacturing subject. They comprise the methods that a manufacturer uses to make sure that the products they are making consistently meet specifications. The subpart covers manufacturing and inspection procedures, environmental control, cleanliness and personal practices of personnel, contamination control, building, and equipment.

Insofar as a manufacturer uses automated data processing systems for production or in the quality system, “it is necessary that software be validated to the extent possible to adequately ensure performance” [11]. This does not mean that you have to conduct software V&V to the extent that you would for product software. Often, source code and design documentation are unavailable. What you must do is confirm that the software meets your needs and is fit for the purpose you have in mind. This is a matter of determining your requirements for the OTS software and conducting black-box tests to verify “that it will perform as intended in its chosen application” [11]. You would not need to test every feature, but you would want to convince yourselves that the features important to correctly manufacturing or inspecting your device are working properly, or that you are using the device properly. For more about this, see Section 6.1.2, Third Party Validation.

2.9. Subpart H—Acceptance Activities, and Subpart I—Nonconforming Product

These subparts discuss receiving, in-process, and finished device acceptance testing and inspections. In an interesting difference between ISO and FDA, ISO allows release of product prior to full verification under an “urgent use provision,” provided that the manufacturer keeps track of it in case of a recall. The FDA does not permit urgent use; devices must have a completed set of final acceptance activities, including signed records, before release.

Presumably “urgent use” could include the release of beta software to a customer site to fix a serious problem, before the full software V&V had been completed. This would be allowed by ISO but is forbidden by FDA. To them, the advantage is not worth the risks.

If the inspection finds that a subcomponent, material, or product does not meet its specifications, the nonconforming product subpart deals with “the identification, documentation, evaluation, segregation, and disposition” [12] of such items, so that there is no danger of mixing it with released products. Generally, the nonconforming product must be specially tagged and kept in a protected area away from the acceptable product. The company must have a documented procedure for deciding what to do with the deviating items: reworked, accepted by concession, used in alternative applications, or scrapped. A nonconforming product accepted by concession must have a justification based on scientific evidence, signed by the person authorizing its use.

2.10. Subpart J—Corrective and Preventive Action

Methods to provide feedback when there have been problems are a part of any quality system. For the FDA, this is the corrective and preventive action process, also known as CAPA. The purpose is to identify root causes for quality problems and provide correctives so that they don’t happen again. This is risk based—problems deserve investigation equal to the significance and potential risk.

CAPA is a very serious matter to the FDA and other regulatory bodies; for one thing, it is subject to audit and almost always looked at by the auditor. It is a prime role belonging to the QA/RA (quality assurance/regulatory affairs) departments of a medical manufacturer. Any death associated with a device is relevant, even if caused by user error. This is because human factors should have been considered in the design of the device, and misuse is a failure of the human factors design. Manufacturers would be expected to evaluate whether a redesign or more cautions and warnings in the manuals are called for.

You could extend a software problem reporting process to cover all the issues that could occur in manufacturing a device and in handling customer complaints. Usually you would not want to; you don’t want to confound and overwhelm the quality data from the rest of the organization with minor software defects. At the same time, you don’t want to make the process of closing software defects so cumbersome that you discourage their reporting. The rigor for follow-through on a CAPA issue is often quite high, and CAPAs are supposed to be reviewed in the quality-system management review meeting. You may not wish to find yourself explaining to the CEO what a race condition is and how you verified that you had fixed it. (A race condition occurs when operations can occur in any order but must be done in a certain sequence to be successful.)

A tiered system is likely best, where only software defects discovered after release of the software go into the CAPA system. These defects would be duplicated into the CAPA system if they were determined to be of threshold severity; customer complaints or other quality issues needing a software change would go into the software problem reporting system to inform the software team of actions that need to be taken.

2.11. Subpart K—Labeling and Packaging Control

The one thing to note for software in this section is the special meaning of “labeling” and its importance to the FDA. This is not just the manufacturer’s label with the model and serial number of the device. Labeling is any textual or graphical material that is shipped with or references the device. It includes the user’s manual, physician’s guide, instructions for use, container labels, and advertising brochures. The text on a screen or in a help system in a software-controlled device is labeling.

Labeling is also part of the premarket submission process. (The premarket process is further discussed later in this chapter.) You want to be careful that you don’t make claims in the use of the product that have not been validated through clinical use. While doctors, based on their own judgment, can use devices as they see fit, the manufacturer cannot make claims for therapy that are not backed up by science.

Labeling is also an area where the FDA differs slightly from ISO. The FDA requires an examination for accuracy of the labeling, and a record that includes the signature of the inspector, documented in the device history record. The FDA does this because its data show that, even with the rules, there have been numerous recalls because of labeling errors. ISO is not so strict; while you can retain label inspection records, you are not required to do so.

2.12. Subpart L—Handling, Storage, Distribution, and Installation

Now we are into a part of the regulation that is really more about issues related to manufacturing and distributing devices. This doesn’t have much to do with designing the products or the software that goes into them. Policies about handling, storage, and distribution are designed to make sure that devices are properly taken care of before they arrive in customer’s hands, and that only a qualified released product is shipped.

As for installation, the manufacturer must have directions for correct installation and must ship them with the device or make them readily available. These should include test procedures where appropriate. The installer is also required to maintain records, subject to audit, that show the installation was correct and the test procedures were followed. In another difference from the FDA, ISO does not address installation as a separate subject.

If the medical device requires an elaborate software installation, these requirements could be an issue. If the manufacturer performs the installation, it would have to keep records. Third parties can do the installation, but then are considered to be manufacturers, and are subject to their own record requirements. You would want to provide comprehensive installation instructions—it would not be sufficient to assume that the user would know to double-click setup.exe. You could also provide a signature form with instructions to document the installation and retain the record. You might need to provide a small validation to confirm that the software installed correctly, and have the users sign, date, and keep the form in their records. Hospital bioengineering departments are usually comfortable with this.

2.13. Subpart M—Records

This is an area where the FDA has much more to say than the ISO. The FDA requirements would fit under ISO—there is nothing inconsistent—but ISO is much less specific. So if you intend to market in the United States, you would need to comply with the detail the FDA describes. For this reason, I will summarize the FDA record-keeping policies.

There are five classifications for record files:

• Design/device history file (DHF)

• Device master record (DMR)

• Device history record (DHR)

• Quality system record (QSR)

• Complaint file

The DHF is not discussed in this section, but is nevertheless an important record, and second only in importance to the DMR for design engineers. I have more to say about this in Section 3, on design controls, since the DHF is really the documentation of the design history. These are the records of design reviews, technical reviews, verification activities, analysis, and testing that collect the history of the design. ISO has no specific requirement for a DHF.

Creating the DMR is the whole point of the design phase. It is the collection of drawings, specifications, production processes, quality assurance methods and acceptance criteria, labeling, and installation and servicing procedures. In other words, it is the set of documentation that tells a manufacturer how to build a device. These specifications must all be controlled documents under the document control procedures. Software specifications are explicitly included as a component of the DMR.

The DHR comprises the data for each particular device. This must identify each device or lot, the labeling, dates of manufacture, and the acceptance tests used to show that the device was manufactured according to the DMR.

The QSR is the set of SOPs, compliant with FDA regulations that are not specific to a particular device. These describe the quality system itself, and the practices, policies, and procedures used to ensure quality in the device and its manufacture. It is important to occasionally analyze the procedures to determine whether they are inadequate, incorrect, or excessive [7].

The complaint file is a much bigger deal for the FDA than for the ISO. In the ISO guidance document, it is merely referenced as an example of a type of system record. ISO 13485 extends this to a near-equivalent of FDA requirements.

The FDA specifies that each manufacturer will maintain a complaint file and establish “procedures for receiving, reviewing, and evaluating complaints by a formally designated unit” [13]. “Any complaint involving the possible failure of a device, labeling, or packaging to meet its specifications shall be reviewed, evaluated, and investigated. . . .” [13] (emphasis added). Even if you decide an investigation is not warranted—because it has been reported already, for instance—you must document why no investigation was made and who made that decision.

Medical care providers are required to report any death, serious injury, or gross malfunction to the FDA and the manufacturer. The manufacturer must determine whether the device failed to meet its specifications and how the device might have contributed to the adverse event. Beyond this, there is also a specific list of the data that the manufacturer must keep with respect to complaints.

A software defect could show up as a customer complaint (also sometimes known as a customer experience report, or CER). Normally you would want the complaint handling to be separate from your software problem reporting system, because of the formality required to deal with customer complaints. But expect the complaint file to be the origin of some of the defects in the software problem reporting system.

All of these records need to be carefully maintained, backed up where electronic, and made readily available to the FDA for inspection. You should keep records for the expected lifetime of the device, but not less than 2 years from date of release.

2.14. Subpart N—Servicing and Subpart O Statistical Techniques

Where servicing is required by the product, both FDA and ISO require documented service procedures and records. The FDA is more specific than ISO about exactly what data go into the service report.

Servicing is an opportunity to collect metrics on the process capability and product characteristics. Collecting statistics is a recommended good practice, but it is up to the manufacturer to decide its appropriateness.

2.15. Post-Market Activities

2.15.1. Audits

A key practice of quality systems in general is the conduct of audits. The purpose of an audit is to have an independent reviewer assess how well the organization is following its procedures and meeting its quality goals. Both FDA and ISO recommend internal audits, at least annually. For ISO, it is necessary to have an independent audit by the notified body in order to retain certification. These are audits that you pay for, so they tend to be more congenial than an FDA audit. They are reviewing your compliance and suggesting ways to help you better comply with the letter and spirit of the quality systems.

The FDA, on the other hand, is fulfilling its regulatory duty. FDA staff take a more adversarial, even suspicious, approach to protect public safety. They are less likely to give you the benefit of the doubt. Plus they have had many years of experience with product safety and failure, and have learned what kinds of practices may lead to injury. They are an enforcement agency and are not to be disregarded. They have badges.

They can look at the DHF and the change records. “The holder of a 510(k) must have design control documentation available for FDA review during a site inspection. In addition, any changes to the device specifications or manufacturing processes must be made in accordance with the Quality System regulation” [14].

As a software engineer, it is unlikely that you would have direct interaction with an auditor in all but the smallest of companies. It is usually the responsibility of the QA/RA part of the organization. Nevertheless, if it does come up, there are a few things to remember about handling an audit.

It is best to think of it as a kind of legal interaction. First of all, don’t offer information. Answer the questions they ask, but don’t volunteer more than they are asking for. It can provide an opportunity for them to dig further or suggest ways in which your organization is weak.

Second, just answer the questions factually. Don’t speculate or offer an opinion about how effective a process is or how well something works. Don’t guess. It is okay to say, “I don’t know” (although that may cause them to write you up for poor employee training).

Finally, and in a related vein, don’t get caught up in hypothetical questions. If they ask you what you would do in a certain situation that has not occurred, the best answer is, “We have not dealt with that.” If they press, the answer is, “We would follow the procedure.” You don’t need to stonewall or be a jerk, but you also don’t need to express your concerns. That’s their job.

3. Design Control Explained

3.1. Purpose of Design Control

The quality system regulation describes at a high level the process for developing customer needs into a marketable medical device. This process is found in Subpart C of 21 CFR 820 [15] for the United States. It is known as design controls to the FDA but goes by the singular design control in ISO Q9001, Section 4.4. It encompasses designing a new product and transferring that design to manufacturing. The objective of design control is to “increase the likelihood that the design transferred to production will translate into a device that is appropriate for its intended use” [16].

Design control is an outline of a new product development process and is general to all aspects of product development, mechanical and electrical, not just software development. The regulation itself is not very long—about 700 words—and not very specific. The software development process needs to fit into the overall process, but software will have extra phases and detail.

Almost all Class I medical devices are exempt from design control, unless they contain software. (For a discussion of device risk classes, see Section 5.7, Device Risk Classes.) Class II and Class III devices are always subject to design control. Since the purpose of this chapter is to discuss software for medical devices, any process we propose will be subject to the design control regulation because any software-controlled medical device is subject to design control.

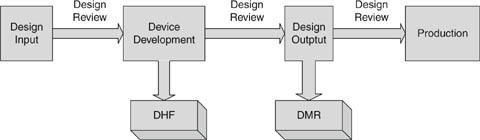

The design control process is like the waterfall model for software development but even simpler (Fig. 4.2). There are four phases: design input, where the customer needs are determined and described in engineering language; device development, where the design is fleshed out, and the design process captured in the DHF; design output, where the final design is captured in the DMR; and production, where the device is repeatedly and reliably manufactured for sale. The transition between phases is always qualified by a design review.

Figure 4.2: Design control process.

It is possible for a simple medical product with a handful of requirements to go through the design control process in a single phase of design input, device development, and design output. From the get-go, software has more phases, so it is only meaningful to discuss design input, device development, and design output as the boundaries between the phases in the waterfall model. One set of design outputs becomes design inputs to the next phase. Thus in a very high-level sense, software development follows the design control model, but sometimes people are confused because there is so much more detail in the software development process. The design control process was invented to encompass all kinds of projects. A simple project like the development of an extension to the application of a catheter (a new claim for what the catheter could do, with some minor changes) is something that might have a single design input/design output phase.

For more complex devices, this really becomes a stepwise refinement of specifications to more and more detail until the final device is fully specified. Once the team has reviewed the design input requirements and found them acceptable, for example, you can begin the iterative process of translating those requirements into a software architecture. Once the architecture is verified as a correct response to the high-level requirements, this output then becomes the input to the next phase of software design. “Each design input is converted into a new design output; each output is verified as conforming to its input; and it then becomes the design input for another step in the design process. In this manner, the design input requirements are translated into a device design conforming to those requirements.” [16]

I will first discuss design control as described in the regulation in the general sense in which it applies to product development as a whole. Further on, in Section 5, Software V&V in the Context of Design Control, I describe the way software development fits in to the design control process. (This is my own stepwise refinement of QSR → design control → software development process.)

In terms of the regulations, those who develop software to control a medical device “shall establish and maintain procedures to control the design of the device in order to make certain that specified design requirements are met” [17]. Notwithstanding language about the “least burdensome approach” and some freedom in selecting software-development life cycles, compliance to the design control regulations will probably look like a traditional, rigorous approach to the software development process, with a focus on documenting the process steps and holding reviews at each phase of development.

It is especially important that the design of software be carefully controlled. The FDA recognizes that the quality of software is not dependent on its manufacture, which is contrary to the case with physical devices. There is no process variability to duplicating software—its manufacture is usually trivial. What is critical is the quality of the construction in the first place.

Because it is so easy to change, some fall into the fallacy of thinking that software is easy to correct and thus does not require controls as stringent as the ones for hardware. The FDA holds the more sophisticated view that, “[i]n fact, the opposite is true. Because of its complexity, the development process for software should be even more tightly controlled than for hardware” [1]. Insofar as the goal of design control is to ensure that the design output meets customer needs and serves its intended purpose, controls are even more important. Software can more easily adapt to new knowledge about customer needs, which is a great strength, but the process must ensure that real needs continue to be met.

A feasibility study does not need to meet the requirements of design control, but when you have decided to produce a design, you will have to create a plan so that the device will meet the quality requirements. It is tempting to just develop the prototype, and then reverse engineer the design control into the project. This is not the best method. The FDA has found that “[u]nsafe and ineffective devices are often the result of informal development that does not ensure the proper establishment and assessment of design requirements that are necessary to develop a medical device that is safe and effective for the intended use of the device and that meets the needs of the user” [16, 18].

One final note: the FDA is prohibited by law from determining the safety or effectiveness of a device by looking at the design control procedures that a manufacturer uses—safety and effectiveness determination is the purpose of the premarket submission. However, they do audit the SOPs for an adequate implementation of design control. So the device could be safe and effective, but inadequate procedures could still land you in trouble.

3.2. Project Planning

The first step in design control is project planning. Project planning is necessary for the organization to determine where it is going, and even more important to have an idea what it might look like to arrive there. It is crucial to have the conception of what the project goals are, and what it means to meet customer needs and be fit for intended use.

It is not specified in the design control guidance, but I’ve always found it helpful if the project plan starts with a mission statement or vision of what accomplishing the project means. A mission statement is a description in 50 words or less of the goal of the project. GE HealthCare has a concept of CTQs—the list of features that are critical to quality. These are the features—no more than five or six—that the product must have to fulfill customer needs and achieve the product goals for the company.

The purpose of the project vision is to focus the development team on what needs to happen, and more important, on what does not need to happen. The essence of good design is deciding what to leave out. A strong vision statement can focus decisions when a room full of bright people start brainstorming all the things a product could do. Usually, doing everything in the world is not a very wise approach to medical product development. It takes longer, and delays the day when our products reach the market and start helping people. It adds to complexity, and higher complexity reduces reliability in devices that must be safe. And it drives engineers nuts.

It is not easy to write a good vision statement, and many are so meaningless that it may lead you to be cynical about the whole idea. They can end up as a mom-and-apple-pie statement that is hard to argue with. For example, a company could start a project to “build the world’s best word processor.” Unfortunately, that does not leave any basis for deciding what to leave out. Any idea that anybody comes up with must be included—we are trying to build the “best,” after all, and how can it be best if it doesn’t have every feature imaginable? But projects like this may never end, and even if they do, they are often late and cluttered with features that get in the way of the essential function.

Note: One way to screen out happy talk is to apply a test for information content. To do this, negate the statement and see if it is obviously false. So negate the original example, “We will not build the world’s best word processor.” Clearly, no one would set such a lousy goal.

A better vision would be to “build the word processor that allows writers to get words on paper in the easiest, fastest way.” Or “build the word processor that allows casual users to format the look of the document in the easiest way.” These have enough content that they communicate the decision about what the product is going to be and what it is not going to be.

A second purpose of planning is to reduce the number of false starts and distracted pathways. This is, of course, advantageous to the company; it is certainly not in the interest of the development organization to pay for development that will never be used. But it also enhances quality. Even though by its nature development will often be iterative, we don’t need to indulge more iterations than we have to. Change management is difficult and important to control, but the easiest way to control change is not to have any. Attention lags the tenth time you’ve done something, and errors creep into upstream documents when it is necessary to revise downstream documents in response to new knowledge.

Of course, there will always be new knowledge, and the last thing we want to do is ship the wrong product come hell or high water. No plan survives contact with reality, but that does not mean that planning is not useful.

Finally, the purpose of planning is to reduce the pressure to compromise quality when projects are behind schedule. The FDA recognizes that deadlines have contributed to defects introduced when designs were not carefully considered due to lack of time, and these defects have resulted in injury [16]. Project plans should emphasize accomplishing quality goals over calendar goals. Good plans let managers make supportable decisions when it is necessary to compromise the project to meet deadlines.

The amount of detail in the project plans is going to vary with organizational needs, the size and complexity of the project, the work habits and personalities of the team, and, as always, the level of concern of the device or software. It is not necessary to plan every last detail. In the FDA’s own words, “Each design control plan should be broad and complete rather than detailed and complete. The plan should include all major activities and assignments such as responsibility for developing and verifying the power supplies rather than detailing responsibility for selecting the power cords, fuseholders [sic] and transformers” [1].

It is important for the plan to describe the interfaces between the contributors and the stakeholders that have input to the design process. To be effective, the plan should establish the roles of the groups involved in the design and the information they share. A typical plan would include:

• Proposed quality practices

• Methods to assess quality

• Policies for record-keeping and documentation

• Sequence of events related to design.

3.3. Design Input

Once preliminary plans are formulated, the device design begins with the design input phase. The starting design input is the as-yet unformed user needs or amorphous marketing concepts. A vision statement will have gone a long way toward deciding what the product will be. The project vision statement, if you use one, will be the first formal description beginning to refine the vague wishes of customers into a product concept. With this, the task of the engineer is to turn the user needs into design input requirements with enough detail and formality that they can serve as input to subsequent phases in the development process.

A distinction that has caused me confusion is naming the phases. Design input is not a thing or deliverable, but a phase of the development process. It has inputs and deliverables (outputs), but they should not be confused with design input—what is really meant is the phase.

In the traditional software waterfall model, the design input phase maps to the requirements analysis phase. So the input is the customer needs and user requirements, and the output is the software requirements specification (SRS). From the project perspective, where design control is describing whole product development, not just software, the input to the design input phase is equivalent—customer needs. The output of the design input phase will vary with the complexity and nature of the project.

Projects of moderate complexity that involve both software and hardware have more elaborate needs for what has to happen in the design input phase. It is still requirements analysis, but now the requirements are for a more complex system containing elements of hardware and software that have to interact to satisfy the product requirements.

The output of the design input phase is design description documents. These documents define the product’s:

• Functional and performance characteristics

• Physical characteristics

• Safety and reliability requirements

• Environmental limits for safe use

• Applicable standards and regulatory requirements

• Labeling and packaging requirements

Human factors analysis and testing should be conducted and used as input to define the function and performance of both hardware and software, including testing the instructions for usability [19]. The specifications should be quantified whenever practical. Where that is difficult, the design document should identify the parts of the design that require further analysis and testing. The document should record any incomplete, ambiguous, or conflicting requirements and provide a mechanism for resolving them.

Developing the system design requirements document is often a function of a systems engineering group. Many organizations may not have the wherewithal to sustain an entire engineering department devoted to systems engineering, yet the systems engineering must occur. It is where the electrical engineers, mechanical engineers, and software engineers get together and allocate requirements to subsystems.

Often you have the design freedom to decide where best to implement a requirement. For example, if you had a requirement to make an alarm sound at multiple frequencies so that the aurally impaired stood a greater chance of hearing it, you could try to find a sound transducer that would produce the sound at the desired frequencies and loudness, or you could create sound waveforms in software in your main processor, or you could devote a special processor to it. Each has tradeoffs in product cost, development time, power usage, and so on. But if the process does not happen, it would be easy for a software development team to fail to schedule time to develop waveforms to output to a driver circuit that the hardware engineers had designed.

System engineering becomes especially important in the development of requirements around hazards. I’ll have more to say about the subject in Section 4 on risk management.

It can be difficult to collect all necessary and correct requirements during the design input phase. As Nancy Leveson has observed, “On many projects, requirements are not complete before software development begins. . . . To avoid costly redesign and recoding, the requirements specification and analysis should be as complete as possible as early as possible. Realistically, however, some of the analysis may need to be put off or redone as the software and system development proceeds” [20]. For this reason, write the system design requirements document so that it can change as issues are resolved and new knowledge gained. The document (or the design input process) should define what would trigger an update, and who is responsible. At the conclusion of the design input phase, the system design document is subject to design review and requires “the date and signatures of the individual(s) approving the requirements” [19].

The FDA realizes that “[a]s the physical design evolves, the specifications usually become more specific and more detailed” [21]. Nevertheless, if possible, the team should strive to get the upstream requirements analysis as correct as possible. As is commonly known, the later in the development process a defect is found, the more expensive it is to correct. (This is especially true for medical devices, which are subject to postmarket surveillance and complaint handling regulations, as explained in Section 2, The Medical Regulatory Environment.) Furthermore, correcting a defect later may have ramifications for the project as a whole. It is much easier to get it all right when thinking about it, especially for hazards, than to go back and reconstruct all the thinking that went on during the risk analysis. Often risks interact in complex ways; a superficially insignificant change in one subsystem may have influences on the rest of the system that are poorly understood.

At the same time, it is possible to spend almost unlimited time in analysis, contemplating behaviors and risk probabilities that cannot be known without some level of prototyping. Perhaps prototyping is needed before the design input phase is complete—even then, what you know about is a prototype and not necessarily the thing that will be built.

Better to not expect to resolve every to-be-determined (TBD) or think that you will capture every requirement. The first drafts of the requirements are documents useful for refining the project planning documents. It is beyond the scope of this chapter to discuss scheduling methods and project planning in depth. But generally what happens is a negotiation of the set of requirements—the features the product has—versus the time and resources available to develop them. During this negotiation it is vital to have a sense of what the requirements are and that is what I mean by the utility of the draft requirements as input to the project planning.

Stepwise refinement of the requirements will occur throughout the development of the project, but this will require effort to maintain consistency between the downstream documents and the upstream documents. Moreover, one of the deliverables at the end of the project is a traceability matrix (see below) in which you establish that all requirements have a corresponding test (and vice versa). So if you had discovered new requirements during development, you would want to be sure that they make their way back into the upstream documents.

One thing to make things easier is to resist tightening the bolts too early. A lot of this is just the use of standard good coding practices. Keep things decoupled. Use accessors and mutators to get at data so that the data itself can change. Keep the data abstraction at a high level. Use identifiers for constants; don’t use magic numbers. You can put off deciding a lot of things like thresholds because they will be easy to change in a solid software design. But you can’t put them off forever—sooner or later you will have to establish why something has one value and not another. While it can be easy to change in software, it can be difficult to validate that a change is justified. Changing the number may be trivial, but the validation of the value of the number is crucial. (Remember the Hubble example!) “The device specification will undergo changes and reviews as the device evolves. However, one goal of market research and initial design reviews is to establish complete device requirements and specifications that will minimize subsequent changes” [7].

There are several more things that I want to say here about requirements management.