4

The Digital Learning Environment in the Paradigm of Systemic Complexity Modeling

We have just highlighted the fragility of the current models when external conditions change, especially when these models are supposed to represent environments that have undergone significant technopedagogical transformations. Before advocating the application of complex systems theory to the modeling of new-generation DLEs (section 4.2), we will first clarify the founding concepts of this theory (section 4.1). This will make it easier to refer to them later when arguing this choice.

4.1. Complex systems theory and theoretical framework

After the theory of relativity and quantum physics, complex systems (and chaos) theory is probably the third scientific revolution of the 20th Century. It drastically changed our way of thinking, of perceiving the world and of predicting the effects of an event on the evolution of a system; in other words, how we do science. It is also referred to as systems science or complexity science. These new sciences “take their scientific status within the paradigm of constructivist epistemology; while normal sciences usually more readily refer to the paradigm of positivist epistemology” [LEM 99, p. 22].

There are many disciplines that in one way or another refer to the constructivist paradigm. Among them, beyond systems and complexity science, are education and communication sciences. For the latter, “the paradigms set up can be common to social sciences, or specific to communication sciences, such as the scientificity of the approach, the positioning of man at the center of reflection, the systemic nature of the objects analyzed or the constructivist dimension of reality, to name a few” [VIA 13, p. 4]. For education sciences, the constructivist paradigm can also be the basis for different theories of learning or knowledge development, from which various educational intervention models may result.

“It especially stems from Piaget’s epistemological and psychological dual perspective and Bachelard’s historical-criticism perspective from which didactics have borrowed the epistemological obstacle concept. These epistemological perspectives take the opposite view of positivism by emphasizing the role of the subject in the development of knowledge and on the impossibility to separate the facts from the interpretation models. Various learning theories can be registered in a constructivist paradigm while offering different explanatory models to account for the processes involved in learning: mental processing of information from the perspective of cognitive psychology; process of assimilation, accommodation and equilibration in the Piagetian perspective; process of gradual internalization of regulatory mechanisms of action and thought in the sociocultural context of Vygotsky, etc.” [LEG 04, p. 70]

The common characteristic of these different “constructivist visions” is the idea that knowledge of phenomena is the result of a construction made by the subject. The image of reality that the subject has, or the notions that build this image, are “a product of the human mind in interaction with this reality, and not a true reflection of reality itself”1.

With this theoretical background having been specified, let us return to systems and complexity science before addressing more specifically the paradigm of systemic modeling of complexity.

For Aurore Cambien, author of an impressive Certu study report2 on how to comprehend complexity:

“The birth of systemic thinking is intrinsically linked to the emergence of a reflection regarding the notion of complexity during the 20th century. Awareness of complexity in the world goes hand in hand with awareness of the deficiencies of so-called classic thought in offering the necessary means for comprehension and understanding of complexity on one hand, and action in response to complexity on the other.” [CAM 07, p. 9]

4.1.1. Definition of a complex system

A complex system is first and foremost a system. That is, it consists of a set of elements interacting with each other and with the environment in order to produce the services that correspond to its purpose. Beyond this overall systemic definition, complex systems are also, in essence, “open and heterogeneous systems in which interactions are non-linear and whose overall behavior cannot be obtained by a simple composition of individual behaviors” [ANI 06, p. 3].

Most of the formal properties that characterize a system (totality, feedback, equifinality, etc.) have already been defined and studied in section 1.2 in Chapter 1, when we discussed the interest in considering a DLE as a system of instrumented activity. This gave us the opportunity to return to the “General Systems Theory” formalized after World War II by Von Bertalanffy [VON 56]. Although these properties are still valid and active in complex systems, we will obviously not reconsider their definition. In this section, instead, we will exclusively discuss the system’s complex nature.

The word complex comes from the Latin word complexus meaning “what is woven together” and complecti, “something containing different elements” [FOR 05, p. 16]. Humans are rarely able to spontaneously understand complexity and it must be modeled and simulated in an attempt to identify it. Edgar Morin said that complexity exists when our level of knowledge does not allow us to tame all the information.

“A complex system is, by definition, a system built by the observer who is interested in it. This said person postulates the complexity of the phenomenon without forcing himself to believe in the natural existence of such a property in nature or reality. Complexity, which always involves some form of unpredictability, cannot easily be held for deterministic purposes. In general, however, complexity is represented by a tangle of interrelationships interacting.” [LEM 99, p. 24].

In his book, La Méthode, Edgar Morin [MOR 08] helps us to understand complexity: he reveals its underlying concepts, requirements, issues and challenges. “The eminent thinker displays knowledge of complexity to command a new approach and thinking reform” [FOR 05, back cover]. In fact, we can expect, following the work of Atlan [ATL 79] and Morin [MOR 08], complementing that of Varela [VAR 79] and Von Foerster [VON 60], that scientific advances in the field of the design and analysis of complex systems will be better taken into account.

Aware that a universally accepted definition of the complex nature of a system has yet to be formalized, we will study it based on four features that create general consensus3:

– the nature of its constituents (their type, their internal structure);

– its open and chaotic appearance (outside influences on different scales);

– its self-organizing and homeostatic features, dominated by interactions and feedback (non-linear interactions, often of different types);

– its ability to shift to new systems with emerging and unforeseen properties [MIT 09].

Finally, we will address the epistemology to which these systems adhere before differentiating algorithmic complexity from natural complexity.

4.1.1.1. Variety of components and links

The constituents of a complex system are of varied size, heterogeneous in nature (individuals, artifacts, symbols, etc.) and indistinct (they may evolve over time), and they have an internal structure. “A complex system does not behave as a simple aggregate of independent elements, it constitutes a complete coherent and indivisible structure” [TAR 86, p. 33]. This formal property brings us back to the concept of totality that we discussed in section 1.2.2 in Chapter 1.

“The two notions that characterize complexity ‘are the variety of elements and interaction between these elements’. A complex system is formed by a wide variety of components organized into arborescent levels. The different levels and components are interconnected by a variety of links.” [TAR 86, p. 33]

And it is the variety of interactions between its constituents and the variety of the constituents themselves that contribute to the overall evolution of complex systems.

4.1.1.2. A complex system is not a complicated system

A complex system is unpredictable: no calculation, however powerful, can predict the outcome of its processes or phenomena4.

But complex doesn’t mean complicated.

“For example, we are able to understand and predict when and why a car engine breaks down while we are unable to understand and predict all of the factors that influence a change or a social movement (e.g. for a revolution).” [BEG 13]

When the system is complicated, we are able to find one or more solutions, because a “complicated” system consists of a set of simple things. It just takes a bit of precision, knowledge and time to understand its function. The general method is first to break down and isolate each of the elements (in simple components or in simple notions) to then be able to reassemble them. “The mechanical function of a clock or a car engine, for example, may seem complicated to us but we are able to clearly explain how it works, because we know what impact each mechanical or electronic component has on the other” [BEG 13].

To illustrate the difference between a complex system and a complicated system, Snowden [SNO 02, p. 13] pertinently noted that “when rumors of reorganization appear in an organization, the human complex system begins to mutate in unpredictable ways and forms trends in anticipation of the change. Conversely, when one approaches a plane with a toolbox in hand, nothing changes!”5

4.1.1.3. Open system: between complexity and chaos

A complex system is also an “open system”, that is, a system in constant contact with its environment. In fact, “it undergoes external disturbances that are preconceived as unpredictable and unanalyzable. These disturbances, occurring in the environment, cause system adaptations that bring it back to a stationary state” [SNO 02, p. 32].

For those who study it, a closed system is more predictable than an open system in the sense that, being totally isolated from external influences, it will be subject only to internal modifications and will be found in a number of states defined by its initial conditions.

And if the latter are invariable regardless of the number of experiments, in other words, the number of times that the system’s behavior is observed from its initial phase to its final phase (which is very difficult to achieve), it will behave like an automaton executing a known program. In this sense, it cannot be considered complex, however complicated this program may be! Since knowledge of the various intermediate states is sufficient to define the system, its evolution and its finality become foreseeable and predictable.

But as we will see below, it is difficult to ensure the invariance of the initial conditions applied to a closed system, which in practice makes it an open system and therefore a potentially complex one (openness being a necessary but not sufficient condition for a system to be complex).

4.1.1.3.1. Sensitivity to initial conditions

In his time, Edward Lorenz came up against the difficulty of such predictions when he sought to forecast long-term weather events. Relying on computers and on the deterministic laws of Galileo and Newton (also known as predictive laws), according to which knowing the initial conditions makes it possible to determine the future state of a system, he set himself the objective of predicting the weather using computer simulations based on a mathematical model.

In 1963, his work revealed the fact that tiny variations between two initial situations could lead to final situations that are unrelated.

He claimed that it was not possible to correctly predict the weather over the long term (for example, one year) because an uncertainty of a millionth when entering data of the initial situation could lead to a totally incorrect prediction.

The “butterfly effect” is a famous metaphor that illustrates this observation. Popularized as part of “chaos theory”, it describes the fundamental phenomenon of sensitivity to initial conditions. This metaphor is sometimes simply expressed as a question: “Does the flap of a butterfly’s wings in Brazil set off a tornado in Texas?” This sensitivity can be summed up as follows: since the initial conditions can never be known with infinite accuracy, however small the remaining uncertainty, the system will eventually evolve in ways that cannot be predicted.
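The sensitivity that Lorenz observed can be reproduced in a few lines. The sketch below is only an illustration under stated assumptions: it uses the three-variable system that Lorenz published in 1963 with its usual parameter values (sigma = 10, rho = 28, beta = 8/3), a fourth-order Runge-Kutta integrator, and two starting points that differ by one millionth on a single coordinate. The function names, the step size and the starting point are choices made for this example and do not come from the works cited here.

```python
def lorenz_derivative(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz (1963) equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def shifted(state, slope, h):
    """Return state + h * slope, component by component."""
    return tuple(s + h * k for s, k in zip(state, slope))


def rk4_step(state, dt):
    """Advance the state by one time step with classical Runge-Kutta."""
    k1 = lorenz_derivative(state)
    k2 = lorenz_derivative(shifted(state, k1, dt / 2))
    k3 = lorenz_derivative(shifted(state, k2, dt / 2))
    k4 = lorenz_derivative(shifted(state, k3, dt))
    return tuple(
        s + dt / 6 * (a + 2 * b + 2 * c + d)
        for s, a, b, c, d in zip(state, k1, k2, k3, k4)
    )


def separation_over_time(delta=1e-6, steps=4000, dt=0.01):
    """Distance between two runs whose initial x differs by delta."""
    run_a = (1.0, 1.0, 1.0)          # illustrative starting point
    run_b = (1.0 + delta, 1.0, 1.0)  # same point, shifted by a millionth
    separations = []
    for _ in range(steps):
        run_a = rk4_step(run_a, dt)
        run_b = rk4_step(run_b, dt)
        distance = sum((p - q) ** 2 for p, q in zip(run_a, run_b)) ** 0.5
        separations.append(distance)
    return separations


if __name__ == "__main__":
    seps = separation_over_time()
    for step in (0, 1000, 2000, 3000, 3999):
        print(f"t = {step * 0.01:5.1f}  separation = {seps[step]:.3e}")
```

Printing the separation at a few instants shows the gap between the two runs growing by many orders of magnitude, although both obey exactly the same deterministic equations.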

4.1.1.3.2. Complex or chaotic system?

The sensitivity to initial conditions is not the only cause of the difficulty in predicting a complex system’s behavior. In an open system, the reciprocal influences between the activity of the system, its environment and its purposes continually alter its structure. “Random noise” from the environment or from the constituents of the system acts on the relationships that bind them. These events, which are difficult to control (randomness of noise), are disturbances that contribute to changing the system. Whether internal or external, noise alters the system by making it evolve toward new forms, most often unknown ones. Whether these systems are social or material, natural or artificial, it is very difficult to predict their behavior and their evolution because the world in which we live is mainly chaotic in nature and very rarely allows us to establish the equations of the system we want to study.

Another reason why it is difficult to predict the behavior of a complex system is our ignorance of the rules that tell us how the system will change, in other words, the impossibility of modeling this system using predictive, solvable equations.

But then, what good is there in studying a complex system if you cannot, in any reasonable way, predict its future behavior? The answer lies in the title of Henri Atlan’s book [ATL 79]: Entre le cristal et la fumée [Between the crystal and the smoke]. By this metaphor, the author tries to situate complexity between two extremes: the perfectly known model of the crystal and the unpredictable one of smoke [ATL 79, p. 5].

4.1.1.3.3. Algorithmic complexity and natural complexity

We can differentiate algorithmic complexity from natural complexity. The first is one that we can process with a computer, a program or an algorithm. The goal to be achieved is known, however complex it may be. The purpose is specified, and it determines the value of the procedure. Natural complexity is inherent to “systems not totally controlled by man because they are not built by man” [ATL 79, p. 5]: biological systems such as memory, and social systems. This distinction is fundamental insofar as the theory of self-organization that we will discuss is only justified in systems of natural complexity. These are a mix of knowledge and ignorance. They constitute a sort of “compromise between two extremes: a perfectly symmetrical repetitive order whose crystals are more classic physical models and an infinitely complex and unpredictable variety in details such as evanescent forms of smoke” [ATL 79, p. 5]. Simon (1974) had already shown in 1969 the difference in intelligibility between natural and artificial objects. Natural objects are analyzed with the assumption that “complexity is woven from simplicity; correctly analyzed complexity is only a mask concealing simplicity”. Therefore, it would be appropriate, according to Simon, to find the ordered shape that hides in the apparent disorder. The theory of fractals, introduced in 1975 by Benoît Mandelbrot, would be a recent illustration. “The Santa Fe Institute6 was created in 1984 in an attempt to study the conditions by which order occasionally emerges from chaos, which is exactly the opposite of a butterfly effect”7.

Let us go back and take a quick look at the foundations of information theory [SHA 48] to examine this issue in more detail.

According to this theory, every communication process can be represented by a communication channel between a transmitter and a receiver through which a message passes. The message may be subject to external disturbances that generate errors and thus modify it. We call “noise” any disturbance that modifies a message between its transmission and its reception. For example, each letter or symbol of the alphabet composing a noisy message is received with a certain probability, but not with certainty.

In Shannon’s formalization of the amount of information [SHA 48], this noise in the communication channel has a negative effect. However, instead of considering the transmission of information between the sender and the receiver, Atlan [ATL 79, p. 46] invites us to observe the total amount of information in the system (S) that includes this transmission path. In this case, the relevant transmission path is no longer the same: it runs between the system and the outside observer.

Figure 4.1. Zoom on a color representation of the Mandelbrot set. For a color version of this figure, see www.iste.co.uk/trestini/learning.zip

Figure 4.2. Message transmitted in a channel that leaves the system and leads to the observer

From this perspective, the ambiguity introduced by noise on a basic channel (the one considered by Shannon) can be a generator of information for the observer.

Intuitively, we perceive that the complexity notion of a system is intimately linked to the amount of information defined by Shannon.

“The more a system is composed of a large number of different items, the greater its quantity of information and the improbability of it forming ‘as it is’ by randomly assembling its constituents. That is why this quantity could be proposed as a measurement of the complexity of a system, in that it is a measurement of the degree of variety of elements that constitute it” [ATL 79, p. 45].

Complexity is often associated with newness (high amount of information), while the trivial is repeated (small amount of information).

“The quantity of information of a system, made up of parts, is then defined from the probabilities that we can assign to each kind of component on a set of supposed statistically homogeneous systems from each other; or even from all the combinations, where it is possible to achieve with its components, that form possible states of the system.” [ATL 79, p. 45].
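A minimal sketch of this measure, assuming that the probability of each kind of component is estimated from its observed frequency in the system: Shannon's quantity of information is then H = -sum(p * log2(p)), expressed in bits. The function name and the two sample systems are hypothetical and serve only to illustrate the idea of variety.

```python
from collections import Counter
from math import log2


def shannon_information(components):
    """Return H = -sum(p * log2(p)) in bits, with p taken from frequencies."""
    if not components:
        raise ValueError("the system must contain at least one component")
    total = len(components)
    counts = Counter(components)
    return -sum((n / total) * log2(n / total) for n in counts.values())


# Hypothetical systems, described only by the kind of each component.
uniform_system = ["learner"] * 8
varied_system = ["learner", "tutor", "forum", "quiz"] * 2

if __name__ == "__main__":
    print(shannon_information(uniform_system))
    print(shannon_information(varied_system))
```

A system made of a single kind of component carries no information in this sense, whereas four equally frequent kinds of component give two bits: the measure grows with the degree of variety.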

Errors caused by noise introduce a new variability within the system. Thus, the increase in the degree of variety (or degree of differentiation or complexity) of a system, based on a random disturbance from its environment, is measured by the increase in the amount of Shannonian information that it contains.
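This increase can be observed on a toy example. The sketch below assumes a perfectly repetitive message, a four-symbol alphabet and a random disturbance (noise, in Shannon's vocabulary) that replaces each symbol with a different one with a fixed probability; all of these choices, like the function names, are illustrative assumptions. It only measures the variety of the symbols and does not model the observer's channel described by Atlan.

```python
import random
from collections import Counter
from math import log2


def shannon_information(symbols):
    """Return H = -sum(p * log2(p)) in bits, with p taken from frequencies."""
    total = len(symbols)
    counts = Counter(symbols)
    return -sum((n / total) * log2(n / total) for n in counts.values())


def add_noise(message, alphabet, error_rate, rng):
    """Replace each symbol by a different one with probability error_rate."""
    noisy = []
    for symbol in message:
        if rng.random() < error_rate:
            alternatives = [a for a in alphabet if a != symbol]
            noisy.append(rng.choice(alternatives))
        else:
            noisy.append(symbol)
    return noisy


if __name__ == "__main__":
    rng = random.Random(0)      # fixed seed so that the run is repeatable
    clean = ["A"] * 1000        # perfectly ordered, fully redundant message
    noisy = add_noise(clean, "ABCD", 0.1, rng)
    print("before noise:", shannon_information(clean))
    print("after noise: ", shannon_information(noisy))
```

The ordered message has zero information; once disturbed, its symbols are more varied and the measured quantity becomes strictly positive.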

Henri Atlan transposes this increase in the amount of Shannonian information in a noisy system to the complexity of an organized system, such as a biological organism or a social organization. This transposition leads the author to conceive of “the evolution of organized systems or the phenomenon of self-organization as a process of increasing both structural and functional complexity resulting from a succession of disruptions caught, each time followed by a recovery to a level of greater variety and lower redundancy” [ATL 79, p. 49].

The increase in complexity “can be used to achieve greater performances, most notably with regard to the possibilities of adaptation to new situations, thanks to a variety of responses to diverse stimuli and randomness in the environment” [ATL 79, p. 45].

In fact, a very organized system, such as a system of algorithmic complexity, will be totally predictable and, consequently, poor in information content in the Shannonian sense. Conversely, if it is completely erratic and random, its predictability will be nil. It is then not complex, but chaotic.

“It is when the content information is intermediate in terms of predictability and average probability, that complex systems can be found.” [WAL 12, p. 1023]

It is in this limited space that it is possible to predict potential emerging phenomena with, undoubtedly, a certain degree of uncertainty. Other definitions of complex systems or associated concepts, mainly from thermodynamics, also exist and can be used depending on the circumstances, as we will see below.

4.1.1.4. Feedback, self-organization and homeostasis

4.1.1.4.1. Feedback

A fundamental feature of a complex system (already studied above) is its circular causation. In more explicit terms, the individual or collective behaviors of the system’s constituents feed back on themselves. These elements will individually or collectively change their environment, which in turn will constrain them and change their states or possible behaviors.

This circular process applied to systems, as opposed to the linear and unidirectional Shannon model, lies at the foundation of the social theory of communication. Extending cybernetic thinking to all living systems, of which our learners are a part, Bateson [BAT 77] uses this concept of feedback to explain a very broad set of phenomena, such as language, biological evolution and schizophrenia... but also learning. Thus, he treats learning as a feedback phenomenon: “change can define learning”, he says. For him, “the word ‘learning’ undoubtedly indicates a change of one kind or another” [BAT 77, p. 133].

What is essential in a complex system is therefore not so much the number of factors or dimensions (variables) related to the system constituents, but the fact that “each of them indirectly influence others, who themselves influence it in return, making the system’s behavior an irreducible whole”8. In other words, in a complex system, knowing the properties and behavior of isolated elements is useful but not sufficient in order to predict the overall behavior of the system. To predict this behavior, it is necessary to take them all into account, which usually involves making a simulation of the studied system, a simulation we will look at in detail in section 6.2 in Chapter 6.
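The point that isolated properties do not suffice can be illustrated with a deliberately small simulation. The sketch below is a toy model and not one of the simulations discussed in this book: it assumes one “element” and one “environment”, each described by a single number, where the element changes the environment and the environment constrains the element in return. The variable names, the linear coupling and the explicit Euler integration are all assumptions made for the example.

```python
def simulate(coupling, steps=1000, dt=0.01):
    """Return the successive states of the element.

    coupling = 0 means that the element and its environment are studied
    in isolation; coupling > 0 closes the feedback loop between them.
    """
    element, environment = 1.0, 0.0
    history = []
    for _ in range(steps):
        change_in_environment = coupling * element   # element acts on environment
        change_in_element = -coupling * environment  # environment constrains element
        element, environment = (
            element + dt * change_in_element,
            environment + dt * change_in_environment,
        )
        history.append(element)
    return history


if __name__ == "__main__":
    isolated = simulate(coupling=0.0)
    coupled = simulate(coupling=1.0)
    print("isolated element, min and max:", min(isolated), max(isolated))
    print("coupled element, min and max: ", min(coupled), max(coupled))
```

Studied in isolation, the element never changes. Once the loop is closed, it oscillates, a behavior that belongs to the coupled whole and that neither part exhibits alone.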

4.1.1.4.2. Self-organization

We have just explained that a system can be subject to external disturbances that generate errors, which modify it. These disturbances are called “noise”. When noise from the environment reaches the system, it can be the source of an increase in the complexity of the system. Initially, the system is disrupted and then it recovers by increasing its level of variety. As a system, it can expand this disorganization. The increase in the complexity of a system can produce a positive effect on it.

The increase of complexity “can be used to achieve greater performances, most notably with regard to the possibilities of adapting to new situations, thanks to a variety of responses to diverse stimuli and randomness in the environment” [ATL 79, p. 49]

Accordingly, when random noise from the environment reaches the system, the system integrates it and rebuilds itself in a new way. This is true regardless of the system, on the condition that it is a system of natural complexity (cognitive system, memory, DLEs, etc.). Although this mechanism is unknown to us in detail, it allows the system to do its own learning by seeking to increase its degree of complexity (or variety). It is also the reason why this noise is said to be organizational.

4.1.1.4.3. Homeostasis

In section 1.2.3 in Chapter 1, we defined homeostasis as a resistance to change specific to all complex systems. This can now be explained using self-organizing capabilities.

Social systems, like ecological and biological systems, and education even more so, are particularly homeostatic systems.

“They oppose change by all means at their disposal. If the system fails to restore its balance, it then enters a mode of operation with, at times, more drastic constraints than the previous, which may lead, if the disturbances continue, to the destruction of the whole group.” [DER 75, p. 129]

Atlan [ATL 79, p. 47] formally shows by what mechanisms these critical cases can occur. After a fairly long development, he concludes that we are dealing with two kinds of effects of the ambiguity generated by noise on the general organization of a system. He calls these effects “destructive ambiguity” and “ambiguity-autonomy”, the first being considered negatively, the second positively. A necessary condition for the two to coexist would be what Von Neumann [VON 66] called an “extremely highly complicated system” and what Edgar Morin [MOR 73] subsequently called, probably more correctly, a “hyper-complex system” [ATL 79, p. 48].

Between these two extremes, “homeostasis appears as an essential condition for stability and therefore survival for complex systems. Homeostatic systems are ultra-stable: all of their internal, structural, functional organization contribute to the maintenance of this same organization” [DER 75, p. 129].
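This resistance to change can be sketched as a negative feedback loop. The example below is an assumption borrowed from elementary control theory, not a mechanism described by the authors cited: at each step the system receives a disturbance and then corrects a fixed fraction (the gain) of its deviation from a reference value. The names `regulate`, `setpoint` and `gain`, and the numerical values, are hypothetical.

```python
def regulate(setpoint, disturbances, gain):
    """Return the successive states of a system that resists change.

    After each disturbance, the system corrects a fraction `gain` of the
    gap between its current state and its reference value `setpoint`.
    """
    state = setpoint
    trajectory = []
    for shock in disturbances:
        state += shock                       # disturbance from the environment
        state += gain * (setpoint - state)   # corrective (negative) feedback
        trajectory.append(state)
    return trajectory


# Ten quiet steps, one shock of +5, then fifty quiet steps.
shocks = [0.0] * 10 + [5.0] + [0.0] * 50

if __name__ == "__main__":
    regulated = regulate(setpoint=20.0, disturbances=shocks, gain=0.3)
    unregulated = regulate(setpoint=20.0, disturbances=shocks, gain=0.0)
    print("with feedback, final state:   ", regulated[-1])
    print("without feedback, final state:", unregulated[-1])
```

With the corrective loop, the state is pushed away by the shock and then drifts back toward its reference value; with a gain of zero, the disturbance is simply kept.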

4.1.1.5. Existence of emerging properties

Perpetual reorganizations of systems can sometimes produce unexpected and unpredictable behaviors. We say that such behavior is “‘counter intuitive’ in the words of Jay Forrester or counter-variant: when following a specific action, we expect a given reaction, although a completely unexpected and often opposite outcome is obtained. These are the games of interdependence and homeostasis. Politicians, businessmen or sociologists don’t know much about the effects” [DER 75, p. 129].

If there is one thing that characterizes many complex systems, it is certainly the existence of emerging properties, whether in the living or the inert world. These emerging phenomena are well known in the world of matter. Atoms, for example, bind to produce a new entity with new properties: the molecule. For example, who could have predicted that from gas molecules, such as dihydrogen (H2) and dioxygen (O2), we could produce a liquid substance with completely new characteristics, water (H2O)? In addition, these water molecules crystallize in the cold to produce an unpredictable new compound, ice crystals...

The first conclusion that can be drawn from these phenomena is that it is difficult to predict the overall behavior of the system from the mere knowledge of the properties and behavior of isolated elements.

A complex system has, in fact, new properties that go beyond those we would obtain by summing the properties of its constituents.

Let us also keep in mind from these examples that complex systems are not linear “since the new function or the new state does not appear gradually, but suddenly: water freezes, life appears” [WAL 12, p. 1022]. Many other emerging phenomena can be cited beyond those derived from the world of matter. Think, for example, of the human mind or consciousness... Do they not emerge from a “simple” organic aggregate?

“The obvious emerging phenomena that have been shown during the evolution of the physical and social universe are undeniably the appearance of life, the emergence of thought and the genesis of institutions (language, currency). However, we can observe a variety of emerging phenomena at a more modest pace such as turbulence in fluids, cell clusters, colonies of insects or the prices of goods. [...] These emerging phenomena can take various forms, ranging from simple combinations of properties of microscopic entities or relations between these entities concerning the appearance of characteristics or original macroscopic entities.” [WAL 09, p. 2].

Thus, emerging phenomena can occur “through all natural sciences, such as physics, chemistry, biology, but also at the level of those dealing with the environment, climate, society, energy distribution systems and the internet” [WAL 12, p. 1022].

It then becomes possible to imagine that social interactions within digital learning environments from the last generation could also be the cause of emerging phenomena... Could we not imagine, for example, MOOCs quickly becoming real parallel universities that compete with conventional universities, calling into question the methods and teachings of the latter, even while MOOCs were designed to be at the service of universities? Alternatively, could we not conceive of the opposite assumption, whereby MOOCs would finally be abandoned by users, like a fashion phenomenon, even though they currently seem fated to success?

4.1.2. Modeling of complex systems

“As soon as we go beyond description and classification to analyze ‘processes’, this implies a reference to a model, the definition of which should be all the more precise as the reasoning that we apply to it is more elaborate.” [GOG 16]

We discussed in section 2.2.1 in Chapter 2 the different functions of modeling as well as the nature of these models and their uses. In doing so, we very quickly positioned ourselves in this field by setting aside from our immediate research concerns the models known as “theoretical”, in order to keep only “object”-type models [LEG 93], and especially graphic and visual models. However, the latter allow the development of theories that bring back the phenomenon studied to a more general phenomenon, in agreement with experience and its confrontation [GOG 16]. Therefore, throughout our modeling approach, we will make reference to theories such as the modeling of complex systems [LEM 99], which we consider central (it is inspired by the previous theories, synthetic and relatively current). This is the reason why we refer to it frequently. However, from a pragmatic point of view, our models have no other ambition than to provide “a schematic representation of an object or a process that allows you to substitute a simpler system for a natural system” [GOG 16]. The theories solely help us to construct these models. In other words, our intention is not to construct a theory on the modeling of complexity, but to use the complexity theories to construct models that are expected to facilitate our analysis.

EXAMPLE.– The simplification of the “object” model to which we have just referred can be done by performing a series of reductions and simplifications (well-thought-out) of information9 that the conjunctive logic of “modeling of complex systems” (theoretical model) encourages us to do.

We will revisit in great detail this fundamental process of reduction and simplification of information (most notably through statistical methods), which systemic modeling (SM, as opposed to analytical modeling, AM) allows, in the section dealing with the arguments that lead us to apply complex systems theory to the modeling of new-generation DLEs (section 4.2).

Moreover, advancing through these different theories, we gradually found that a connection between systems theory and the theory of modeling became essential to the understanding of complexity. Le Moigne [LEM 77, LEM 94] explains why in his book entitled La théorie du système général [The theory of the general system]:

“The ‘General System’ concept was precisely forged to facilitate the apprehension, or understanding, of open processes rather than closed objects. Not a preconceived premise of existence (or rejection) of systems in nature (nature of things or human nature), but a project with lucid understanding: to represent the phenomenon considered as so by a system in general. This taking advantage of the accumulated modeler experience has taken place since the rhetoricians of Ancient Greece (‘inventio’ rhetoric) up to Condillac’s ‘Treatise on systems’10. By accepting this well thought-out and reasoned formulation of a ‘systems science’ project, we can legitimize and ensure this argument: ‘Systems science is understood as the science of systemic modeling’.” [LEM 94, p. 7].

DEFINITION.– A complex system is a model of a phenomenon perceived as complex that is built by systemic modeling [LEM 99, p. 41].

In a second book on the modeling of complex systems, this same author presents the classic methods of modeling and highlights their failures. Through examples, parables and historical reference points, he shows the limits of these models over time.

“The question posed by the author is whether one can truly solve complex problems with analytical models. To address this issue, he begins by distinguishing complexity and complication. It starts from two postulates:

- – the simplification of the complicated, when applied to the complex, results in an increase in complexity;

- – the modeling system projects are not given: they are built.

Next, modeling is defined. He introduces the notion of intelligibility, which does not have the same significance for a complicated system (explanation) as for a complex system (understanding) [...]. In other words, the author shows how, in the face of a problem, we develop models with which we reason.” [NON 00, paragraph 2]

The systemic modeling of complexity is developed throughout the book, with reference to the contributions of several thinkers, such as Jean Piaget11, Paul Valéry and Goethe, who were not satisfied with analytical models. The limits of analytical thinking in the face of complexity are also highlighted in numerous works and in varied disciplines such as biology, mathematics and neurophysiology, which are considered to be at the origin of the development of systemic thinking [CAM 07, p. 9].

4.1.2.1. Conjunctive versus disjunctive logic

“The analytical method needs disjunctive logic since the results of the breakdown must be definitively distinguished and separated” [LEM 99, p. 32]. However, according to the author and all the thinkers he cites, this logic does not “account for phenomena that we perceive in and by their complex conjunctions...” [LEM 99, p. 33]. He also criticizes the supporters of the analytical school for “seeking to adapt the problem available to models rather than seeking models of substitution that best meet the problem” [NON 00, paragraph 2]. The use of analytical models requires a simplification of the studied phenomenon, on the hypothesis that it can be broken down or divided. To address a complex situation, the register must therefore be changed: we have to move from the register of disciplined knowledge to that of active, enlightening methods of knowing.

To guide and support his reasoning, Le Moigne refers to some stable benchmarks that he calls axioms. He thus says that he gathers “the contemporary state of maturation of axiomatics based on the texts of Heraclitus, Archimedes, Leonardo da Vinci, G.B. Vico or Hegel” [LEM 99, p. 35] in the form of three axioms that fit well within a constructivist epistemology (the paradigm of the constructed universe). This position stands in radical opposition to positivist epistemology (the paradigm of the wired universe), which, according to the author, always relies on the Aristotelian axioms of analytical modeling and disjunctive logic.

Let us quote the three axioms of systemic modeling referred to by the author [LEM 99, p. 36]:

- – the axiom of teleological operationality or synchronicity: a modelable phenomenon is perceived as an intelligible action and therefore teleological (not erratic, showing some forms of regularity);

- – the axiom of teleological irreversibility (or diachrony): a modelable phenomenon is perceived as transforming, and as forming projects, over time;

- – the axiom of inseparability or recursion (or excluded outsiders, or conjunction, or autonomy): a modelable phenomenon is perceived inseparably joining the operation and its product, which can be a producer for itself.

By referring to these three axioms, it appears that we can actually ensure the precision and cognitive consistency of modeling reasoning [LEM 99, p. 35].

Each new theoretical contribution is then associated with “a canonical model that represents the new system” [NON 00, paragraph 2]. The first is the canonical form of the general system that leads the author to define four founding concepts, grouped in pairs in procedures: the cybernetic procedure includes active environment and project concepts, while the structuralist procedure includes synchronic operation and diachronic transformation. The inseparability of these concepts leads to the conceptualization of the general system, understood as “the representation of an active phenomenon perceived identifiable ‘by’ its projects in ‘an’ active environment in which it functions ‘and’ transforms teleologically” [LEM 99, p. 40].

At the beginning of Chapter 5, we will revisit the concepts underlying cybernetic and structuralist procedures. In a more mnemonic way, “the general system is described by an action (a tangle of actions) in an environment (‘carpeted with process’) for some projects (purposes) functioning (doing) and transforming (becoming)” [LEM 99, p. 40]. For the author, therefore, the basic concept of systemic modeling is not structure but action, which is represented by a black box or a symbolic processor that accounts for the action.

“Modeling a complex system first involves modeling a system of actions.” [LEM 99, p. 45].

4.1.2.2. A complex system and canonical model process

The modeling of a complex action is characterized by a general notion of process, which is defined by its exercise and its result. The author represents the process using three functions: temporal transfer, spatial transfer and morphological transformation (see Figure 5.21).

“We define a process by its exercise and result (an ‘implex’ therefore): there is a process when there is, over time (T), modification of the position in a ‘Space-Form’ reference, and a collection of ‘products’ identifiable by their morphology, and thus by their form (F). The preconceived conjunction of a temporal S transfer (moving in space: transport, for example) and a temporal F transformation (morphology modification: an industrial treatment transforming flour and water into bread, for example) is by definition a process. Its outcome can be recognized: a movement in a ‘Time-Space-Form’ reference, identified by its exercise.” [LEM 99, p. 47]

In this regard, Martin et al. noted:

“In this theory, the observed system is apprehended in the form of a collection of tangled actions, the process notion being conceptualized by that of action. If the process is a formal construction expressed in the language of mathematics (differential equation) in ‘Time–Space–Form’ references, the description of the action expresses itself in natural language in response to the question, ‘What does that do?’ Although this theory is based on the action concept, no formal description framework of an action is proposed (Larsen-Freeman and Cameron, 2008).” [MAR 12]

We will see later that these authors propose a formalism for the action concept we want to deepen and that will be very useful for modeling DLEs considered as complex systems.
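As a purely illustrative aside, the “Time-Space-Form” definition of a process quoted above can be sketched in a few lines of Python. This is neither the formalism of Martin et al. nor a notation used by Le Moigne: the names `State` and `run_process`, as well as the places and forms in the example, are assumptions introduced here only to show how a temporal transfer, a spatial transfer and a morphological transformation combine into a trajectory.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class State:
    """Position of a 'product' in a Time-Space-Form reference (hypothetical)."""
    t: int       # time (T)
    place: str   # position in space (S)
    form: str    # morphology (F)


def run_process(start: State,
                spatial_transfer: Callable[[str], str],
                form_transformation: Callable[[str], str],
                steps: int) -> List[State]:
    """Apply both functions at each time step and return the trajectory."""
    trajectory = [start]
    state = start
    for _ in range(steps):
        state = State(
            t=state.t + 1,                         # temporal transfer
            place=spatial_transfer(state.place),   # spatial transfer
            form=form_transformation(state.form),  # morphological transformation
        )
        trajectory.append(state)
    return trajectory


# Illustrative values only, echoing the flour-and-water example.
moves = {"mill": "bakery", "bakery": "shop"}
changes = {"flour and water": "dough", "dough": "bread"}

trajectory = run_process(
    State(t=0, place="mill", form="flour and water"),
    spatial_transfer=lambda s: moves.get(s, s),
    form_transformation=lambda f: changes.get(f, f),
    steps=2,
)
```

The result of the process is the trajectory itself, that is, the movement of the product in the reference, which is how the quotation proposes to recognize a process.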

4.1.2.3. Complex system and action interrelation

Returning to feedback concepts, Le Moigne characterizes each processor by its “inputs” and “outputs”, at every “ti” moment, before establishing three archetype processors that he adapts specifically into three transfer functions: temporal, spatial and morphological. He also distinguishes processors mainly processing symbols (or information) from others (mainly processing tangible goods) [LEM 99].

He then takes an interest in cases where the number of considered processors becomes high (frequent cases in complex systems) and then proposes differentiating the system into “many subsystems or ‘levels’, each level with the capability to be modeled by its network and relatively interpreted independently as soon as the inter-level coupling interrelationships have been carefully identified” [LEM 99, p. 53].

“We’ll have the opportunity to show [he says] that this deliberate a priori articulation of a complex system ‘in multiple functional levels’ (by intermediary projects) will lead to a very general canonical model which will often lead in turn to an intelligible modeling of its organization.” [LEM 99, p. 57]

4.1.2.4. An archetype model of the articulation of a nine-level complex system

K.E. Boulding [BOU 04a] and subsequently J.-L. Le Moigne [LEM 99, p. 58] offered a definition of an organization based on increasing levels of complexity of a system, each level also containing all the characteristics of the lower levels. The article ‘General System Theory, the Skeleton of Science’ (the “framework of science”) [BOU 04a] presents Boulding’s famous nine-level analysis, which we will quickly present here. The significance of each of these levels should be kept in mind when the approach is applied to DLE modeling, as we will see in Chapter 5. To avoid redundancy, we will cite here the way in which Le Moigne has interpreted them. What makes this nine-level analysis important is not so much the manner in which it is formulated, but the way it has contributed to “clarifying the terms of use of the systemic approach or methodology”12.

We will only cite briefly the first six levels, referring the interested reader to the description of other levels, either by the original document (op. cit.), the work of Tardieu, Nanci and Pascot [TAR 79], or the reinterpretations made by Le Moigne [LEM 99] in Chapter 5.

- – Level 1: “The first level is that of static structure”13 [BOU 04a, p. 202]. The phenomenon to model is identifiable but passive and without need.

- – Level 2: The phenomenon is active. It does, meaning it “‘transforms’ one or more ‘inflows’ into one or several ‘outflows’” [TAR 86, p. 60].

- – Level 3: It is active and regulated. “It presents ‘patterns of behavior’”.

- – Level 4: It inquires. “The information allows a processor to know something about the activity of another processor in the system or its environment. The regulation of a system, thanks to a flow of information, is the basic concept of cybernetics” [TAR 86, p. 60]. The system transmits regulation instructions, reflecting changes in the system’s state.

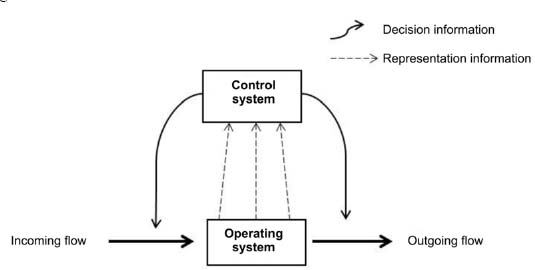

- – Level 5: It determines its activity. This level of complexity suggests links that will be established between a “control system” and an “operating system”, which we will define later. Here, the system is seen as capable of making decisions concerning its own activity.

We will come back fairly quickly to this fifth level of organization and cover level 6 in the section relative to the concept of organization as well (section 4.1.2.5). Note that at this time the definition of tree diagram concepts (seen in section 4.1.2.3) and the concepts of organization levels proposed by Boulding (seen in section 4.1.2.4) is a critical first step in modeling complex systems.

“From the first level corresponding to static and simple objects of physics and chemistry up to the last level of socio-culture, the movement is that of increasing complexification. The understanding of the system represented by the ninth level involves all the previous levels.” [CAM 07, p. 16].

“This presentation of complexity, in addition to its educational interest, offers a methodological interest. It illustrates the specific approach of a speaker (or curious person) when becoming acquainted with the object information system [...]. Social organizations, the goal of our modeling, are at the highest level; the ninth.” [TAR 86, p. 59]

4.1.2.5. Active organization: operating system and control system

If there is a central concept that allows us to carefully describe a complex system, it is that of active organization.

“Say that a business, a municipality, a family, a government, an industry, an ecosystem, a university, a hospital, a club, etc., are complex systems. This is already the application of some strong assumptions that we’ve recognized. It relates to a complexity of irreversible, recursive and teleological actions that we propose designating (or modeling). The organization of this modeling exercise is through a pivot concept (today very well clarified) known as active organization (that E. Morin proposes calling Organis-action and F. Perroux the Active Unit).” [LEM 99, p. 73]

The organization is considered “active” here because it determines its own activity. If the behavior of the system depended solely on external interventions, it would not be complex but simply predictable. Complexity appears with the emergence of autonomic capabilities within the system. The behavior of a complex system is regulated endogenously: the system itself checks that its behavior is consistent with what was expected and, where necessary, decides to act to modify it. The organization is therefore made up of, “on the one hand, an ‘operating system’ (OS), the place of activity allowing transformation of incoming and outgoing flows and, on the other hand, a ‘control system’ (CS) capable of, in view of the information representing the activity of the operating system, reacting according to a certain project (certain objectives) to make decisions [...]. The dynamics of the organization [active organization] concern, on one hand, ‘the functioning of the operating system’ and on the other hand, ‘the functioning of the management system’” [TAR 86, p. 61], as suggested in Figure 4.4.

Figure 4.4. Level 5 description of an organization: the object determines its own activity14

4.1.2.5.1. The functioning of the operating system

Metaphorically, the functioning of the operating system can be represented by a succession of images of each input–output pair, taken “intermittently”, which represent the state of the system at each successive moment, as seen from the outside. In fact, its functioning can be defined as “‘the chronicle of instantaneous observation’ by which the representation system15 has agreed to identify the object’s behavior” [TAR 86, p. 62]. However, this series of states reflects the observed functioning of the system, which is not necessarily the one that was expected. It then becomes useful to compare the states observed at each instant “T” with the desired operation. This leads us to consider, on the one hand, a functional structure resulting from a design (a program) that models the desired operation and, on the other, a model that describes the observed behavior. Immersed in its environment, the operating system will without a doubt be the target of unforeseen external disturbances (organizational noise) and will report these variations to the control system (representation information). The control system will then take the decisions necessary to ensure that the organization follows the objectives that have been set as closely as possible (decision information).

4.1.2.5.2. The functioning of the control system

The control system therefore receives as input the representation information regarding the functioning of the operating system and produces as output decisions intended to maintain or change that system’s behavior. This regulation is based on the organization project, whose details must obviously be known. We can also expect the control system itself to check that the organization project has been properly understood. The control system therefore has its own functioning, which can be modeled “as a chronicle of observation” [TAR 86, p. 63] of the pairs (inputs and outputs) of its own system and of its state. “This means that the association of representation information provided to the control system and decision information taken by it is a representation of the functioning of its internal logic” [TAR 86, p. 63]. Looking ahead to our future modeling, we may reasonably imagine a link between the decision to limit a MOOC to a six-week duration and the observed resilience of registered users.
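The coupling just described between the operating system and the control system can be illustrated by a minimal sketch. It rests on assumptions that are ours and not those of the cited authors: the state of the operating system is reduced to a single number, the project to a target value, and the decision to a correction proportional to the observed gap. All class, method and parameter names are hypothetical.

```python
from typing import List, Tuple


class OperatingSystem:
    """Place of activity, reduced here to a single numeric level."""

    def __init__(self, level: float) -> None:
        self.level = level

    def observe(self, disturbance: float = 0.0) -> float:
        # Representation information: the state as seen from the outside,
        # including a momentary external disturbance.
        return self.level + disturbance

    def apply(self, decision: float) -> None:
        # Decision information modifies the behavior of the operating system.
        self.level += decision


class ControlSystem:
    """Compares observations with the project (target) and decides."""

    def __init__(self, target: float, gain: float = 0.5) -> None:
        self.target = target
        self.gain = gain  # assumed proportional correction
        self.chronicle: List[Tuple[float, float]] = []

    def decide(self, observed: float) -> float:
        decision = self.gain * (self.target - observed)
        # The chronicle pairs each input with the decision taken on it.
        self.chronicle.append((observed, decision))
        return decision


def regulate(operating: OperatingSystem,
             control: ControlSystem,
             disturbances: List[float]) -> List[Tuple[float, float]]:
    """Run one observe-decide-apply cycle per disturbance."""
    for disturbance in disturbances:
        observed = operating.observe(disturbance)
        operating.apply(control.decide(observed))
    return control.chronicle


chronicle = regulate(OperatingSystem(level=4.0),
                     ControlSystem(target=10.0),
                     disturbances=[0.0] * 10)
```

In this sketch, the list `chronicle` plays the role of the “chronicle of observation” of the control system: each entry associates a piece of representation information with the decision it triggered.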

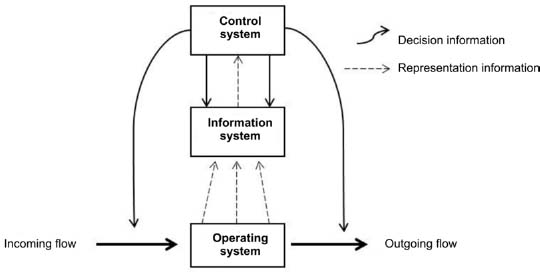

4.1.2.6. Information system

“Information allows the organization to adapt its behavior at every moment by regulating, transforming and rebalancing in order to be in harmony with the environment. Therefore, information leads to a process of permanent adjustment of the organization by channels (the system adapts by accommodation) and codes (the system adapts by assimilation) of communication with respect to a project.” [NON 00, paragraph 2]

At the sixth level of organization, modeling the information system (IS) is introduced. It ensures a coupling between the information considered during its storage and information considered at the time of its use. In fact, “in developing its decisions, the system does not consider instant information, such as, for example, a conventional thermostat, or a reflex arc; it also considers the information it has stored” [LEM 99, p. 61].

Figure 4.5. Level 6 description of an organization16

At this level, the control system can have representation information not only on the current state of the operating system (for example, who is exchanging information on a discussion thread, to return to our MOOC activity example), but also on its past states (for example, the number of registered users who were active on the discussion thread during the last training session).

The questions that now arise in order to complete level 6 concern, on the one hand, the dynamics of the organization’s information system (where interactivity between the information system and the operating system exists) and, on the other hand, the dynamics of the operating and control systems within the information system. We refer the interested reader to the details of these three representations in “the Merise method” [TAR 86, p. 65], seen in section 3.5 in Chapter 3.

Nevertheless, and essentially:

“The organization’s information system (IS):

- – records (in symbolic form) representations of the functions of the operating system (behavior of the complex system);

- – stores them;

- – makes them available, generally by means of an interactive form, to the decision-making system. Which, after having developed its action decisions (orders), also records and stores them through the IS, transmitting them ‘for action’ to the operating system.” [LEM 99, p. 87]

4.1.2.7. The canonical model of a three-level organization system: OS, IS and DS

It is possible to represent the organization with a model made up of three subsystems:

- – decision-making system;

- – information system;

- – operating system.

Figure 4.6 shows a possible diagram of this organization.

Le Moigne adds that “the canonical model of system organization, connecting the three levels: operation, information, decision-making, is universal. You can always start an organization’s modeling of a complex system using this genotype model” [LEM 99, p. 87].

Figure 4.6. Canonical model of a system and its three subsystems (OS, IS and DS)
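To make the articulation of the three subsystems more concrete, here is a minimal sketch of the record, store and make-available cycle described above. It is only an illustration under our own assumptions: the class names, the “activity” and “order” labels, the decision rule (comparing the latest observation with the mean of the stored ones) and the activity figures are all hypothetical and are not taken from Le Moigne or from the Merise method.

```python
from dataclasses import dataclass, field
from statistics import mean
from typing import List, Tuple


@dataclass
class InformationSystem:
    """Records, stores and makes available representations and orders."""
    records: List[Tuple[str, object]] = field(default_factory=list)

    def record(self, kind: str, value: object) -> None:
        self.records.append((kind, value))

    def history(self, kind: str) -> list:
        return [value for k, value in self.records if k == kind]


class DecisionSystem:
    """Decides from stored information, not only from the current state."""

    def decide(self, info: InformationSystem) -> str:
        past = info.history("activity")
        if len(past) < 2:
            order = "observe"  # not enough memory yet
        elif past[-1] < mean(past[:-1]):
            order = "stimulate"  # activity fell below its past average
        else:
            order = "maintain"
        info.record("order", order)  # orders are also stored through the IS
        return order


class OperatingSystem:
    """Receives orders 'for action'."""

    def __init__(self) -> None:
        self.orders_received: List[str] = []

    def act(self, order: str) -> None:
        self.orders_received.append(order)


info = InformationSystem()
decision_system = DecisionSystem()
operating = OperatingSystem()

# Hypothetical numbers of users active on a discussion thread, per session.
for activity in [100, 120, 60]:
    info.record("activity", activity)
    operating.act(decision_system.decide(info))
```

The difference with a level 5 description lies in the use of `history`: the decision takes into account the stored past states, and not only the instantaneous observation, as a conventional thermostat would.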

4.2. Argument in favor of the application of the complex systems theory to the modeling of latest-generation DLEs

4.2.1. Introduction

In Chapter 3, it was clearly shown that some DLE design or analysis models reach their limits depending on the context in which they are used. These contexts are subject to relatively significant sociotechnical transformations linked, for example, to the emergence of technopedagogical innovations.

As an example, let us first return to Engeström’s model in order to recall the first modifications that we planned to make, discuss them and develop arguments in favor of a possible new path, such as a radical change of paradigm in terms of modeling.

With the emergence of social networks, MOOCs, informal learning networks and connectivist learning approaches, we realized that the analysis of digital learning environments (DLEs) had become more complex to conduct than ever before. Let us look back on this significant development and the events that have accompanied it.

After we had developed each of the poles of this model “into clusters”, duplicated and stretched its internal structure into the three dimensions of space and added new relationships between its constituents (see sections 3.2 and 3.3 in Chapter 3), a new sense of incompleteness arose concerning this representation. It then seemed important to reconsider the systematic use of this triadic model, and even of its expansion, which we again found poorly adapted to these new objects of study.

This sense of incompleteness stemmed from the fact that we were running counter to the teachings of systemic modeling (see section 4.1.2) and to its contemporary explanatory efforts: to reason by joining rather than by separating.

The model structure17, made up of various divisions (subject, object, tool, community, division of labor, etc.), although practical in certain simple cases of analysis, reveals (in the art of reasoning) the adoption of the disjunctive logic specific to analytical modeling (AM)18. This conflicts with the conjunctive logic advocated by systemic modeling (SM). Therefore, in justifying a potentially infinite breakdown of system constituents-objects, we had the feeling of gradually distancing ourselves from the canonical form of a “general system” (see Chapter 4).

Engeström’s model [ENG 87] could certainly have benefited from the period in which new contributions to systems science were being established. It could also be identified with systematism, like many other so-called “systems analysis” models developed in that period (and in previous years). However, in light of the work on the systemic modeling of complexity, this model relies on a disjunctive axiom, since the results of the breakdown into the model’s constituent divisions must be definitively distinguished and separated.

This impression of infinite decomposition into subsets of ever finer constituents (and not of functions or specialized processes) was always present when we wanted to apply it to instructional design models (see section 2.4.1 in Chapter 2). Moreover, the latter have shown other inadequacies, for example, their difficulty in adapting to certain distance education courses, most notably collaborative learning [MEI 94] or situational problem learning [HEN 01]. Despite the enormous progress that has been made in this area, we had to accept that current EMLs were still struggling to represent properly collaborative activities built around situational problems, as evidenced by the work of the following authors: Pernin and Lejeune [PER 04b]; Ferraris et al. [FER 05]; Laforcade [LAF 05a]; Laforcade et al. [LAF 05b]. Furthermore, adopting a more “macro” approach appeared to us better suited to current research, which aims to take better account of all the activities present at the heart of DLEs [OUB 07, VIL 07, etc.]. This is the goal of so-called education engineering, which also takes into account management activities, for example (see section 2.4.2 in Chapter 2).

Finally, the current adaptive models (see section 2.4.3 in Chapter 2), which focus particularly on technopedagogical innovations such as MOOCs [BLA 13, BEJ 15], aim to anticipate learners’ needs and to provide an answer to them, regardless of the complexity of the system. However, in this specific case, are we not facing another challenge, namely that of wanting to design a system capable of adapting to all the situations encountered? In the words of Atlan [ATL 79, p. 5], “in this type of system, the goal to achieve is known, as complex as it may be”. The purpose is specified and determines the value of the procedure. Here, we typically recognize what Atlan called a system of algorithmic complexity. Natural complexity, by contrast, is inherent to “systems not totally controlled by man because they are not made by man” [ATL 79, p. 5]. There is nothing provocative in wanting to substitute models specific to the theory of complexity for those of the Canadian LICEF team [BEJ 15], which are based on ontological modeling: it is simply a way to “replace the research accuracy of materialistic ontology by a more uncertain phenomenology constructing approximated models, but that better correspond to entirely real phenomena, and which reductionism cannot account for” [ZIN 03].

As the organization of a complex system is considered “active” (a concept of SM), it is the organization itself (and not a program) that determines its own activity (see section 4.1.2). If the behavior of the system depended solely on external interventions, it would not be complex, and even less unpredictable. Complexity appears with the emergence of autonomic capabilities within the system. “In human groups, for example, and more particularly in the case of the emergence of panic or a rumor spreading among crowds19 [PRO 07], self-organization is not the result of a predetermined intention. Agents or entities in interaction, without a previously defined common goal, will create a certain form of organization without knowledge and by imitation. Therefore, what characterizes self-organized systems is the emergence and maintenance of global order without there being a conductor” [PRO 08, p. 2], and we assume that this maintenance of order cannot be preconceived. The theory of self-organized criticality proposed by Per Bak, Chao Tang and Kurt Wiesenfeld in 1987 [BAK 96] shows that it is impossible to predict and anticipate all the behaviors of a complex system.

However useful the design of “adaptive systems” (in the sense of education engineering) may be, we also believe in the ability of such systems (in the sense of the theory of ‘adaptive’ complex systems) to react better to disturbances, both external and internal, or to self-regulate. As advised by Davis [DEL 00], we should not fear complexity: “In wanting to simplify reality, do we not risk putting ourselves on the fringes of the wealth it offers?”

In summary, the hypothesis that we put forward to justify the adoption of the paradigm of systemic complexity modeling rests mainly on the recognition of complex phenomena acting at the very heart of our object of study (latest-generation DLEs) and on the alleged inadequacy of analytical modeling (AM) for solving the problem posed. However, other reasons reinforce this deliberate choice, for example:

- – the existence of many convergence points between connectivist learning design and the complex systems theory;

- – the existence of a conceptual analogy between the SM of the complexity and SM of the activity;

- – the existence of languages “better” adapted to the modeling of open complex systems and, in addition, open to the social sciences;

- – the pursuit of a forward-looking approach that allows answers to the many societal questions on the future of these digital environments, etc.

Below, we will develop and explain these few peripheral arguments, after having done the same thing with our two central arguments.

4.2.2. DLE: a complex system

Looking back at the different characteristics of a complex system from section 4.1, let us explain what leads us to consider a latest-generation DLE as a complex system.

4.2.2.1. A world of knowledge: a complex system

Setting out to model a world of knowledge, such as latest-generation DLEs, by referring to the constructivist paradigm of systemic complexity modeling may seem like an audacious exercise. Moreover, assuming that all acquisition of knowledge can adapt to a world not necessarily built for the sole purpose of learning may itself prove to be a challenge. However, we wanted to address this challenge by perceiving cognitive organization and the human cognitive system as self-organizing complex systems capable of learning in a world organized for this purpose (rather than built, in the formal sense of the term), by combining two shifts of thought whose union seemed, only a few years ago, utopian. Let us argue this choice here; the main development appears (at the personal request of Jean-Louis Le Moigne) in the “Cahier des Lectures MCX (Modélisation de la Complexité)” of the “Réseau Intelligence de la Complexité” [TRE 09b].

The first of these shifts is based on the complex systems theory, which assumes that any learning opportunity results from the disruption of a system subject to external disturbances. Whether this system be social or cognitive, it then reorganizes by increasing its degree of variety or complexity.

“[As a system, it is enriched by this disruption by the means of] a non-directed learning process, in the sense where this learning is by no means a program established in human memory, or in the natural or social environment of this memory. [...] The efficient cause of this learning is the random encounter of system memory and noise factors from its environment. [...] This learning product is a succession of psychological categories always finer or differentiated, whose list and construction methods are likely to be involved at any stage of the process.” [ANC 92]

We can intuitively understand this phenomenon by thinking of the classical theory of evolution: “Mutations, which are precisely DNA replication errors, are considered to be the source of the progressive increase of the diversity and complexity of living human beings” [ATL 98]. An increase in complexity in a system can have a positive effect on it; it becomes a source of learning. However, this theory also takes for granted that this self-organizing process can only operate in systems with natural complexity, that is, the complexity inherent to “systems not totally controlled by man because they are not made by man” [ATL 79, p. 5], such as biological systems like memory, social systems, etc.

The second shift, in contrast, takes an interest in the “building” of these worlds, hence the apparent paradox. By their prescriptive nature, teaching design (or Instructional Design) models are in fact essentially based on behaviorist-type epistemological approaches in order to “teach” the subject. Conversely, constructivist learning environments emphasize interactive environments that seek the personal experience of the subject, initially through collective activities, supported by the instructor and social group, and then through individual activities. The connectivist approach goes even further, referring mainly to the metacognitive, or even meta-metacognitive, processes that learners must access in order to learn through the network [SIE 05, BAT 77, p. 323]. In the latter cases, learning is no longer dependent on “teaching” stricto sensu. Formally, in constructivist, or even connectivist, models, the learning process operates independently of the cognitive situations constructed by the teacher or by the designer. At most, these environments require, on the part of the latter, the “creation” of “real” environments using the context in which the study is appropriate [JON 09]. Since this is a matter of “artificially” designing “real” learning situations or simulating situations connected to a natural context, instructional design models have gradually accommodated constructivist beliefs and practices. The constructivist paradigm has thus been added to the founding paradigms of instructional design models, after the behaviorist and then the cognitivist paradigms [TRE 08].

From our point of view, one of the keys to the success of “the application of the complex systems theory to the modeling of new generation DLEs” lies in the connection between these two shifts. For Lemire [LEM 08, p. 38], building a world of knowledge first involves “weaving links between approaches to the modeling of reality and a general system”. This is a way of explaining the changing world perceived as a system, in order to better understand it and change with it, without wanting to force it. It also means aspiring to take advantage of its evolution. It means learning more about the system in which we are making progress through the use of illuminating modeling, or learning to better recognize ourselves as a cognitive system integrated into this encompassing system. By modeling such systems that surround us or live in us, our actions become metacognitive acts. It is by “applying a methodology to the systemic approach that models constructed from perceived realities become the representation of what is received as and by a general system” [LEM 08, p. 37]. From these considerations, the objective is clearly formulated as an invitation to model what characterizes a real world, conducive to the self-construction of knowledge.

4.2.2.2. Latest-generation DLEs: an “open” system

Remember that what characterizes latest-generation DLEs, of which MOOCs are currently the best representatives, is primarily their openness to the world, as well as the consequences of this openness. The first ‘O’ (for Open) of the acronym MOOC shows this plainly: online courses become open to all. As we recall from our book l’Appropriation sociale des MOOC en France [The social appropriation of MOOCs in France] [TRE 16a, p. 15], registration restrictions (place, time, age, degree) as well as registration fees are dropped. Open means that everyone has access, unlike traditional online teaching (distance learning), which requires registration subject to access conditions that are difficult to negotiate (degree, availability, staff, language proficiency, registration fees, etc.). This is one of the major features of MOOCs, which confirms and undoubtedly strengthens their originality. We can also immediately judge the consequences of this openness, signaled by the letter “M” for Massive in the acronym. The massive aspect of a MOOC is certainly what makes the biggest impression when you discover one. Most people questioned on this matter begin by quoting impressive numbers (hundreds of thousands of people) corresponding to the number of registered users. When you ask a teacher to describe his teaching methods, he immediately mentions about 30 students in front of him, or more in the case of a university course. In a MOOC, there are not 30 or 50 registered learners, but 150,000 or even more. To our knowledge, the highest enrollment for a single MOOC to date has been 230,000 users. Imagine yourself in front of 230,000 students! This is roughly the size of the crowd that came to listen to Martin Luther King Jr. (MLK) deliver his famous “I have a dream” speech in front of the Lincoln Memorial in Washington on 28 August 1963.
Therefore, there exists an endless variety in learner profiles, in their geographical and socio-cultural origins, in their prerequisites, but also in their ways of learning, in teaching methods, in the assistive devices and support offered, etc. And since the complexity of a system is measured (even quantitatively) by its variety, we have no hesitation in considering a latest-generation DLE as a complex system. As Tardieu et al. wrote [TAR 86]: “The two notions that characterize complexity are the variety of elements and interactions between these elements”. Concerning interactions, we have seen that, within MOOCs, participants teach themselves and motivate one another in an interactive and animated space [CRI 12], which, in view of the variety of the system’s constituents, increases the variety of interactions between these participants. Remember also that George Siemens20 is convinced that “formal education no longer represents the majority of our learning in that there are a large variety of ways to learn today, through communities of practice, personal networks and through work-related tasks”.
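To give a rough sense of how variety can be expressed quantitatively, the following minimal sketch counts the distinct learner profiles in a population and the number of possible pairwise interactions between participants. The profile attributes (country, level, preferred activity), the sample data and the function names are hypothetical and chosen only for illustration; counting distinct states and taking a base-2 logarithm is one common convention for expressing variety, not necessarily the measure intended by the authors cited above.

```python
from math import log2


def variety(elements):
    """Return (number of distinct elements, that number expressed in bits)."""
    distinct = len(set(elements))
    bits = log2(distinct) if distinct > 0 else 0.0
    return distinct, bits


def pairwise_interactions(n):
    """Number of possible two-way interactions among n participants."""
    return n * (n - 1) // 2


# Hypothetical classroom: 30 learners sharing one and the same profile.
classroom = [("FR", "beginner", "lecture")] * 30

# Hypothetical MOOC sample: every learner has a different profile.
mooc_sample = [
    ("FR", "beginner", "video"),
    ("SN", "advanced", "forum"),
    ("CA", "beginner", "peer review"),
    ("FR", "advanced", "video"),
]

print(variety(classroom))              # (1, 0.0)
print(variety(mooc_sample))            # (4, 2.0)
print(pairwise_interactions(30))       # 435
print(pairwise_interactions(230_000))  # 26449885000
```

Even in this toy setting, moving from a class of 30 to 230,000 registered users raises the number of possible pairwise interactions from a few hundred to tens of billions, which illustrates why both the variety of elements and the variety of interactions grow with openness.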

4.2.2.3. Unpredictable nature of DLEs

It is also necessary to acknowledge the chaotic evolution of these environments, which is characteristic of complex systems. Their effects are unpredictable by nature. Going back to the exemplary case of MOOCs, nobody could in fact predict, in 2013, what they would become, and least of all what they were supposed to bring to French society. Some of the most serious newspapers and media outlets did not hesitate to predict the end of traditional university education: “a model that could revolutionize the education system; with no need to follow prestigious educational courses”.21 And it may not have been only the “visionary” qualities of Geneviève Fioraso22, then Secretary of Higher Education, that led the French government into the MOOC adventure in January 2014. It was rather the fear of missing out on future profits, without any certainty of ever receiving them. The approach was, therefore, to not risk missing “a moving train”, while we knew very well its destination. Even today, nothing allows us to say with certainty to what extent this major innovation will or will not change the landscape of the French academic world and beyond. This unpredictability allows us to say that a latest-generation DLE can be studied as a complex system and not as an ordinary or “merely” complicated system (see section 4.1.1.2). Furthermore, complex (or chaotic) does not mean random. Therein lies the interest in studying complex systems with systemic complexity modeling tools. As we have seen in section 4.1.1.3, between a completely predictable system and a chaotic (completely unpredictable) one lie complex systems (systems with average predictability).

The whole point of systemic complexity modeling is thus revealed: through modeling, the emergence of certain phenomena can be anticipated with a certain probability of accuracy.

Therefore, we can envisage all sorts of predictions that will be useful not only to developers and users of DLEs, but also to policymakers, who continually request enlightening reports on these social issues. This is why we devote a whole section to this topic (section 4.2.5).

4.2.2.4. Consequence of the limits of analytical modeling and disjunctive logic

Disjunctive logic, specific to analytical modeling (AM), as opposed to the conjunctive logic specific to systemic modeling (SM), aims to study the constituents of a system independently: “the breakdown results must be definitively distinguished AND separated” [LEM 99, p. 32]. In fact, it does not allow for “the realization of phenomena that we perceive in and by their complex conjunctions” [LEM 99, p. 33].

Let us take the example of the concepts of energy and work in the physical sciences. For a body subject to gravity (for example, a rock resting on our hand a meter from the ground), we define the potential energy of this body as the amount of work (in the physical sense of the term, that is, force times displacement) it is “potentially” capable of producing during its fall (if we drop the rock, of course). It is therefore easy to find the value of its potential energy: m·g·z (m·g being the weight of the mass m in a gravitational field g, and z the distance from the rock to the ground). We see through this example that it is impossible to define the concept of energy without talking about work, and vice versa. These concepts cannot be understood separately and can only be observed in their conjunction.
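As a worked illustration of the rock example, the potential energy can be written as the work done by the weight over the height of the fall. The mass of 1 kg and the value g ≈ 9.81 m/s² are assumptions chosen only for the arithmetic; the text specifies only the height of one meter.

```latex
\begin{align*}
E_p &= W = \int_0^{z} m g \,\mathrm{d}z' = m g z \\
    &\approx (1\ \mathrm{kg}) \times (9.81\ \mathrm{m/s^2}) \times (1\ \mathrm{m}) \approx 9.81\ \mathrm{J}
\end{align*}
```

The first equality is the point of the example: the energy is given a value only through the work it can produce.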

Many other examples can be cited to illustrate this need to conjoin rather than separate. In the case that concerns us, we may ask what the point is of separating the “subject” and the “tool”, or the “subject” and its “community”, as Engeström did in his model. Can these system constituents not also be seen in their conjunction? Bogdanov23 [BOG 81, p. 63], cited by Le Moigne [LEM 99, p. 33], considers the act of joining as fundamental, and separation as derivative: “the first is direct, the second is the result: all begins with the act of joining”.

Let us look back for a moment at Rabardel’s anthropocentric approach to techniques [RAB 95], seen in section 1.2.4 of Chapter 1. Is it not also intended to transcend this mind/matter dualism in order to approach the cognitive development of the subject by placing it in its social and material environment? Piaget and Vygotsky each also worked to overcome a Cartesian dualistic vision (mind/matter) by locating the thought mechanism and its development at the crossroads of an outside/inside, environment/subject loop.

According to Bogdanov:

“The two conceivable actions of man in nature, whether practical or cognitive actions, are to join and to separate. [...] Join his body or attention to the system to be considered; apply a conscious effort, which is still a conjunction. It is a similar situation for cognition: no distinction is possible without a preliminary comparison, which is still an act of conjunction. In this case, disjunction is also secondary.” [BOG 81, p. 63]

Bateson [BAT 77, p. 275] reinforces the idea of the inseparability of the “subject” and the “tool”. To do this, he takes the example of a blind man and the cane (tool) that helps guide him. “The cane is simply a way [he says] in which transformed differences are transmitted, in a way that the cutting of this channel removes a part of the systemic circuit that determines the possibility of locomotion for the blind man”. Michel Serres [SER 15] shares that view, using a French metaphor: “la tête de Saint Denis” (the head of Saint Denis). He draws an analogy between “our head” and “our computer”. We have, he says, “our head in our hands”, since we are more than ever engaged in externalizing our cognitive functions toward tools capable of memorizing and processing information in our place. These tools become part of ourselves (our head is in our hands) and are therefore inseparable from us: the subject and the tool are one.

But what should we then think of all these models that claim to be systemic and that, as we ourselves have done, represent only the components of a system, connected through relationships? Le Moigne provides an explanation:

“Between 1960 and 1980, many treatises outlined, under the name Systems Analysis (plural matter), a methodology of analytical modeling hidden under a systemic jargon that was able to, at the time, allude to the fact that before we agreed that this systems analysis only attributed to closed and complicated but not complex systems analysis modeling. But since 1975, we can consider that the systemic and fundamental engineering sciences have begun to emerge from their initial conceptualization and epistemological maturation phase, to enter into a strictly instrumental and methodological phase: it will then be possible to present systems modeling in its originality, without coating it in the finery of analytical modeling in order to improve its respectability.” [LEM 99, p. 7]

It is therefore unbeknownst to users, and very often “through scientific willingness” as the author emphasized [LEM 94, p. 8], “that the use of ‘analysis system’, ‘system approach’, and ‘application of systems theory’” concepts has almost always led them to practice analytical modeling that was systemic in name only. This reflects a lack of practice in the exercises of internal epistemic critique that should be familiar to any scientific and technical activity. However, it is not difficult to ask oneself first “what does that do, in what sense, for what, becoming what?” rather than starting with “what is it made from?”.

4.2.2.5. For a functional and non-organic approach

To model a DLE, we have chosen to give only secondary attention to its structure and to focus instead on “what it does”. We will represent each of its actions or complexes of actions by “a black box or the symbolic processor that takes action into account” [LEM 99, p. 46]. We will see in Chapters 5 and 6 that, in practice, this decision means entering the modeling process not through objects but through functions (“functional diagrams” in OMT and “use cases” in UML). This choice is also confirmed by the simplifications that we will have to make regarding the data to be processed. The use of multivariate descriptive statistical techniques, which aim to intelligently conjoin our data along factorial axes and to produce representative classes (clusters) of specific populations (which dendrograms help to identify), once again demonstrates the adoption of this conjunctive logic.

4.2.3. Complexity theory: at the heart of the connectivist learning design

We have said and shown this repeatedly: when a DLE model is subjected to too many significant variations in initial conditions (Chapter 3), adjusting the model to what it is intended to represent (learning, education, training, etc.) becomes very difficult, if not impossible. That is why we have proposed changing the paradigm and choosing a systemic modeling of complexity, which offers a more suitable framework. Here we will address this issue from the perspective of modeling forms of networked learning. Unlike traditional distance education, which seeks to reproduce within DLEs real, meaningful situations likely to promote learning, professionals (designers, researchers, teachers, etc.) working on MOOCs, or more generally on OpenCourseWare (OCW), implicitly make the (connectivist?) assumption that the meaning of the activities (pre)exists: the learner’s challenge is to recognize the recurring patterns that appear hidden [SIE 05] and that shape their decision-making. If the conditions underlying a decision change, this decision will no longer necessarily be correct. It was correct at the moment it was made, but it will no longer be so after this change [SIE 05]. Connectivism is based on the idea that the strategies we deploy to learn rest on unstable models and contexts whose foundations are shifting. The ability to recognize changes in context and adjust our strategies accordingly is an essential task of learning. Bateson [BAT 77, p. 307] wonderfully formalized this change in how we learn according to the signals emitted by the context, themselves dependent on the signals emitted by the individual. He establishes three levels of learning, each punctuated by a change of one kind or another. “Change can define learning”, he says.

“The word ‘learning’ undoubtedly indicates one kind of change or another but it is very difficult to say what ‘kind’ of change it is [...]. Change involves a process. But the processes themselves are exposed to change.” [BAT 77, p. 303]

We have already put forward the assumption [TRE 05, p. 66] that learning within a DLE must be “highly” facilitated by the acquisition of a “Level III Batesonian”. Let us look back for a moment at these three fundamental levels, which have lost none of their relevance in the learning contexts that concern us here.

Bateson [BAT 77, p. 307] develops the idea that all learning is to some extent “stochastic” (it contains sequences of trial and error). He introduced “Level 1” learning, which corresponds to a change in the specificity of the response through the correction of errors of choice within a set of possibilities. In a Level 1 learning context, the individual learns, and therefore corrects his actions, choices and mistakes, within a set of possibilities (a given conceptual framework).