Chapter 4

Asymmetries

And he had a helmet of brass upon his head, and he was armed with a coat of mail…greaves of brass upon his legs, and a target of brass between his shoulders… [And David] took thence a stone, and slang it, and smote the Philistine in his forehead…and he fell upon his face to the earth.

—1 Samuel 17

Some 3,000 years ago, Goliath took the field of battle securely armed and prepared for hand-to-hand combat. He then fell victim to perhaps the world's most famous remote attacker. David exploited an advantage in striking distance to strike one of Goliath's few exposed vulnerabilities. And had he missed, David would have surely launched the other four stones he held before Goliath could have closed the distance to engage. As Malcolm Gladwell noted in his 2013 book David and Goliath: Underdogs, Misfits, and the Art of Battling Giants (Little, Brown and Company), it was not a fair fight. Goliath was at a disadvantage because he did not understand the asymmetry of the encounter.

To understand the success of computer attacks and the failure of computer security, you must move beyond thinking in terms of a specific event or security failure and understand the properties of the space. To read the news, you would think that every time a company divulges its customers' personal data, exposes sensitive internal e-mails, or loses the design to yet another advanced weapons system, the compromise was inevitable. This attitude is lazy.

Warring technologies have historically leapfrogged each other: from cavalry over foot soldiers, to tanks over cavalry, to A-10s over tanks, which may someday be rendered ineffective by rail guns. Judging from the 600+ known data breaches in 2013 alone,1 Attackers in CNE have an empirical advantage, but is that guaranteed to last? Why or why not? Which imbalances are foregone conclusions and which are worth fighting? Load up your slingshot and let's take a look.

False Asymmetries

The oft-repeated refrain—excuse, actually—of the security community is along the lines of “The Attacker has to be right only once. The Defender has to be right all the time.” That is true, but the same is equally true for Fort Knox. After all, an Attacker needs to get through security only once to steal a gold bar from the U.S. supply. How is cyberspace different?

The oft-repeated answer is that cyberspace is inherently asymmetric, unlike the physical world. This is almost always followed by pointing out two things: cost and attribution. Neither is a true asymmetry.2

Cost

Attacking is supposedly cheap, whereas defending is expensive. The implication is that Attackers can just trip over their keyboard and produce a magic exploit that can defeat every organization's security. Perhaps in the early days of the Internet, this was not far from the truth, but today this is nonsense.

Attacking is cheap only in the way that driving a car a few miles is cheap. If you consider only the cost of the gasoline to the owner, driving costs just a few dollars. But add in the cost of manufacturing the car, and it jumps to tens of thousands. Yet even this is not the true cost. Billions of dollars have been invested over decades to build the mining operations, manufacturing plants, oil refineries, roads, gas stations, and more that all precipitate hopping in your car to go pick up milk.

Offensive operations are cheaper than building an entire automotive infrastructure, but the analogy is valid. Breaking into a particular network may be cheap after the tools and infrastructure are in place. Top off the gas and off you go. However, some aspects do not last much longer than that gallon of milk. Building and maintaining the infrastructure for a program of sustained operations requires targeting, research, hardware engineering, software development, analysis, and training. This is not cheap, nor is it a game of luck.

Attackers do not stumble into being “right once.” They put in the time and effort to build an infrastructure and then work through Thomas Edison's alleged “10,000 ways that won't work.” Cost is simply not as asymmetric as many contend.

Still, various sources put combined Defender spending on IT-related security at 50 to 70 billion dollars a year, a figure that surpasses what each country in the world, excluding the top 5, spends on their armed forces. And yet the defense still fails, rather spectacularly. This is because individual defense expenditures do not improve the overall security of the space. One company's improvements do not benefit others. Another company's hard-learned lessons do not permeate.

The supposed asymmetry of cost is actually just lack of defensive coordination.

Attribution

Attribution is typically mentioned as the next asymmetry. Perfect attribution is indeed difficult. The architecture of the Internet makes it such.

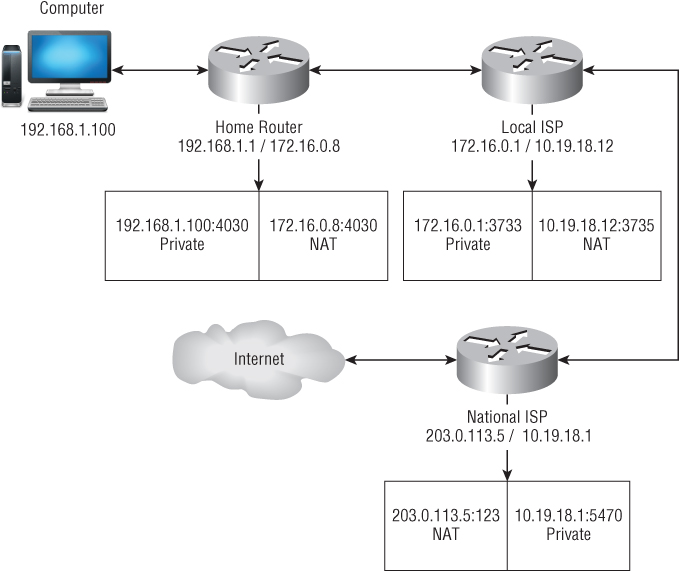

As data moves across the Internet, it is forwarded through proxies and subjected to network address translation (NAT). At a high level, NAT works as shown in Figure 4.1.

Figure 4.1 Network address translation

You can identify the NAT device from the outside, but tracing further inwards requires two things:

- The NAT device needs to store logs of the transaction.

- Whoever owns the NAT device needs to cooperate.

There are various technical methods of trying to distinguish different computers behind a NAT device based on the differences between operating systems, web browser configurations, timing, and so forth. While these methods allow someone to identify a unique computer, without logs of the transaction they still do not reveal the source address of the connection.
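The dependence on logs can be made concrete with a small Python sketch. It is a toy model, not how any particular product works: the `NatDevice` class, its `keep_logs` flag, and the addresses (drawn from documentation ranges) are all assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


@dataclass
class NatDevice:
    """Toy NAT: rewrites private source endpoints to one public address."""
    public_ip: str
    # A real NAT holds a live mapping while a connection is open; keep_logs
    # models whether any record survives for a later investigator.
    keep_logs: bool = True
    next_port: int = 40000
    # public port -> private endpoint (the "logs of the transaction")
    table: Dict[int, Endpoint] = field(default_factory=dict)

    def translate_outbound(self, private_src: Endpoint) -> Endpoint:
        """Return the source endpoint that the outside world sees."""
        public_port = self.next_port
        self.next_port += 1
        if self.keep_logs:
            self.table[public_port] = private_src
        return (self.public_ip, public_port)

    def trace_inward(self, public_src: Endpoint) -> Optional[Endpoint]:
        """Map an observed public endpoint back to the private one, if logged."""
        if public_src[0] != self.public_ip:
            return None
        return self.table.get(public_src[1])


logging_nat = NatDevice(public_ip="203.0.113.5", keep_logs=True)
silent_nat = NatDevice(public_ip="203.0.113.9", keep_logs=False)

# The same internal machine sends traffic through each device.
seen_a = logging_nat.translate_outbound(("192.168.1.20", 51515))
seen_b = silent_nat.translate_outbound(("192.168.1.20", 51515))

print(seen_a, "->", logging_nat.trace_inward(seen_a))
print(seen_b, "->", silent_nat.trace_inward(seen_b))
```

From the outside, both connections appear to come from the NAT device itself. Only the device that kept a record can map the public endpoint back to `192.168.1.20`; the other trace returns `None`.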

The real-world problem is even harder. NAT devices are often layered as shown in Figure 4.2.

Figure 4.2 Layered network address translation

For example, right now my computer is behind two layers of indirection: one for my home network and one for the ISP. It isn't until the third hop, after the source address has been rewritten twice, that a data packet leaving my computer reaches the public Internet. To attribute that packet to my home network, someone must first get the cooperation of the ISP. Then, to attribute it to this computer, someone would need my cooperation.

And therein lies the problem. Tracing a connection requires the cooperation of every entity from the public-facing IP address inward. This may be forthcoming in a criminal investigation if it is within a single country. (There's no question Verizon would give up my records if served a warrant from the FBI.)

Cooperation might be possible between allied countries. But it all breaks down as soon as you start crossing international boundaries between rival countries. Attribution then requires cooperation from state-controlled (or influenced) communications companies without any legal basis to compel them. (If anything, there may be legal issues that prevent them from cooperating.) The trail will stop there.
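A short Python sketch shows why a single uncooperative party ends the trace. Everything in it is hypothetical: the `NatLayer` class, the `send` and `trace` helpers, the owners, and the addresses are illustrative assumptions, not a description of any real network.

```python
from typing import Dict, List, Optional, Tuple

Endpoint = Tuple[str, int]  # (IP address, port)


class NatLayer:
    """One hypothetical NAT hop with its own private translation records."""

    def __init__(self, owner: str, public_ip: str, cooperates: bool) -> None:
        self.owner = owner
        self.public_ip = public_ip
        self.cooperates = cooperates
        self._records: Dict[Endpoint, Endpoint] = {}
        self._next_port = 40000

    def outbound(self, src: Endpoint) -> Endpoint:
        """Rewrite the source endpoint and remember the mapping."""
        rewritten = (self.public_ip, self._next_port)
        self._next_port += 1
        self._records[rewritten] = src
        return rewritten

    def lookup(self, seen: Endpoint) -> Optional[Endpoint]:
        """Reveal the inner endpoint only if the owner cooperates."""
        return self._records.get(seen) if self.cooperates else None


def send(src: Endpoint, layers: List[NatLayer]) -> Endpoint:
    """Pass a source endpoint outward through each layer, innermost first."""
    for layer in layers:
        src = layer.outbound(src)
    return src


def trace(seen: Endpoint, layers: List[NatLayer]) -> Tuple[Endpoint, Optional[str]]:
    """Walk inward, outermost layer first, stopping at the first refusal.

    Returns the deepest endpoint recovered and the owner who blocked
    further tracing (None if the trace reached the original source).
    """
    for layer in reversed(layers):
        inner = layer.lookup(seen)
        if inner is None:
            return seen, layer.owner
        seen = inner
    return seen, None


home = NatLayer("home owner", "100.64.7.2", cooperates=False)
isp = NatLayer("ISP", "198.51.100.10", cooperates=True)
layers = [home, isp]  # innermost first

public = send(("192.168.1.20", 51515), layers)
print(trace(public, layers))
```

With the ISP cooperating and the home owner refusing, the trace recovers the home router's address and stops there. Setting `isp.cooperates = False` stops it one hop earlier, at the public address that was visible to begin with.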

And this is just the basic Internet addressing system. It ignores the reality that the Attacker will take active steps to misdirect the Defender. These steps include forwarding connections through multiple countries, using fake and/or real companies, launching from or routing through cloud service providers such as Amazon Web Services, or leveraging innocent but compromised third-party networks. These and other techniques provide further protection against attribution.

Of course, even with careful offensive planning, some attributive characteristics can be determined over time, such as country of origin. More specific details may be found with mistakes or lapses in operational security. But these are the exception, and they often require months to piece together. It remains difficult to attribute the source of an attack in real time.

So, yes, attribution is more difficult than in the physical world. But so what?

Suppose attribution were not an issue. There is nothing so permanent about technology that ordains the current state of anonymity. Recently, Verizon and AT&T were revealed to be adding “Unique Identifier Headers” to their customers' browsing to de-anonymize and track it. They backed away when it became public, but you can easily imagine a larger shift occurring that slides all traffic away from anonymity. Many countries and advertising companies are attempting to do just that.

What does full attribution change? In the physical world, the North Koreans continued to deny sinking a South Korean naval vessel even after incontrovertible proof was presented. The Russian government maintained that it had no troops in Crimea despite the presence of Russian tanks, Russian-speaking uniformed soldiers with Russian weapons, and at least one soldier on film answering, “Yes” to the question, “Are you a Russian soldier?” And that is in a world with established international norms and laws of war. The virtual world doesn't have the benefit of having such precedents to ignore.

Nation-states maintain their innocence with an ever-weakening shield of plausible deniability as the mountains of evidence pile up against them. Report after report gives ever more detail about so-called “threat actors.” Still the attacks continue.

Perhaps attribution could cut into purely criminal activities. It might give some pause for CNA. But do not expect blame to slow down espionage. A true asymmetry provides one side an advantage of sorts. The difficulty of attribution provides no such benefit to espionage as evidenced by the fact that when caught, behavior does not change. Therefore, it is a false asymmetry.

Advantage Attacker

A true asymmetry provides a strategic advantage to one side. It is difficult to counter, but if successfully countered, it causes behavioral changes to the formerly advantaged side. Cost is not nearly as unbalanced as first perceived. Attribution, even if solved, is not going to make much impact on espionage. But just because these two are fakes does not preclude actual imbalances.

Here are some true asymmetries that are to the Attacker's advantage.

Motivation

Consider two lottery games. The first game, call it Fifty Plus, gives a 50 percent chance of winning. The cost of the ticket may be substantial, but odds are good you will recoup more than you invest. In the worst case, you lose the cost of the ticket. Fifty Plus is all upside.

The second game, call this one Zero Minus, gives a 0 percent chance of winning. Losing the cost of the ticket is the best possible outcome. The worst outcome is you lose the investment and some additional unknown amount of money, ranging from nothing to all your income. This loss happens all at once, over several years, or maybe never. Zero Minus is all downside.

Which game would you be excited to play? A nation-state is playing the first game. Sure there are costs, but there is a huge payoff potential and little risk. The gains are immediate and tangible.

The Defender is playing the second game. There is nothing to gain, only something to lose. And the nature of the loss is often intangible, ranging from nothing to catastrophic.
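The gap between the two games can be put in expected-value terms. The sketch below uses invented numbers: the ticket cost, the prize, and the loss probabilities are assumptions chosen only to show the shape of each game, not estimates of real attack or breach costs.

```python
from typing import List, Tuple

Outcome = Tuple[float, float]  # (probability, net payoff)


def expected_value(outcomes: List[Outcome]) -> float:
    """Sum of probability * payoff over all outcomes of a game."""
    total_probability = sum(p for p, _ in outcomes)
    if abs(total_probability - 1.0) > 1e-9:
        raise ValueError("probabilities must sum to 1")
    return sum(p * payoff for p, payoff in outcomes)


TICKET = 100  # hypothetical cost to play either game
PRIZE = 500   # hypothetical Fifty Plus prize

# Fifty Plus: win half the time; the worst case is losing the ticket.
fifty_plus = [(0.5, PRIZE - TICKET), (0.5, -TICKET)]

# Zero Minus: never win; the best case is losing only the ticket.
zero_minus = [
    (0.6, -TICKET),           # nothing further happens
    (0.3, -TICKET - 1_000),   # a modest additional loss
    (0.1, -TICKET - 50_000),  # a catastrophic loss
]

print("Fifty Plus:", expected_value(fifty_plus))
print("Zero Minus:", expected_value(zero_minus))
```

Whatever numbers are plugged in, the structure holds: the floor of Fifty Plus is the ceiling of Zero Minus.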

The difference between the two risk-reward trade-offs creates an imbalance in motivation. With attacking, the Attacker accomplishes something tangible: gaining access, retrieving some piece of data, or outsmarting some person or system. With defending, if the Defender is successful, nothing happens. That's hardly inspirational.

Of course, there is also a third game, call it the Crime Pays version. This version is variable risk and high reward. There's a good chance of success, but there is also a chance of getting arrested.

The stakes in Crime Pays vary widely. The probability of arrest depends on the criminal's home country. Some countries seem to tolerate, if not encourage, electronic theft. There is some risk, such as when Roman Valerevich Seleznev, a.k.a. “Track2,” an alleged trafficker in stolen financial information, was arrested in Guam.3 But that risk is small. You can safely bet Track2, a son of a Russian Parliament member, would have been protected from arrest had he stayed in Russia.

Other countries, like the United States with its FBI, do their best to track down what they can. The odds of arrest are much higher. Between the two extremes are countries like Romania, where criminal behavior is widespread, but occasionally someone gets taken down.

The potential personal risk in this game has an interesting selection effect: the unmotivated stay out. Only the highly motivated are willing to take the risk. This means that criminals, if anything, are likely to be the most motivated party out there. They are motivated both by success and fear of failure.

The Defender is also motivated by fear of failure, but people are simply not as viscerally inspired to protect some indeterminate company loss as they are to protect their own skin.

The stakes involved in the three versions are not the only issue. The Defender suffers from a further demotivator: monotony. Much of defending is repetitive. Checking logs, updating software, or applying patches are all tasks that need to be done over and over. Sure there is designing and expanding the network, integrating new systems, or finding and purging an intruder, but this only means that occasionally the Defender has the same level of motivation as the Attacker.

Of course, higher motivation for the Attacker in itself does not guarantee success: I am not going to beat Michael Jordan in a game of one-on-one no matter how much more motivated I may be. But motivation drives investment, recruitment, creativity, attention to detail, and a host of other things that make a substantial difference to which side prevails.

Initiative

Initiative is having the ability to make threats or take actions that require your opponent to react. It is different than motivation. Motivation describes mental state, whereas initiative measures ability.

The Attacker has the initiative. An exploit is released and a patch follows. An attack methodology becomes known, and an operating system mitigation is developed. A Trojan is found and a signature is created. By and large, the Attacker acts and the Defender reacts.

Having the initiative means that the Attacker can stay one step ahead. The Conficker worm provides an example. The Conficker worm was a piece of Microsoft Windows malware that infected millions of computers across industry and government starting at the end of 2008. The worm included an update mechanism that would reach out to potential Internet rendezvous points for new code. Each day the worm generated and contacted a list of 250 pseudorandom domain names for new updates.
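The idea of a date-driven list of rendezvous names can be sketched in a few lines of Python. This is not Conficker's actual algorithm: the hash construction, the seed string, and the list of top-level domains are assumptions chosen for illustration. The point is only that anyone who knows the algorithm, Attacker or Defender, can compute the same list for any given day.

```python
import hashlib
from datetime import date
from typing import List

TLDS = ["com", "net", "org", "info", "biz"]  # illustrative choice


def daily_domains(day: date, count: int = 250, seed: str = "example-seed") -> List[str]:
    """Derive a deterministic list of pseudorandom domain names for one day."""
    domains = []
    for index in range(count):
        material = f"{seed}|{day.isoformat()}|{index}".encode("ascii")
        digest = hashlib.sha256(material).digest()
        # Map the first 10 digest bytes to lowercase letters for the label.
        label = "".join(chr(ord("a") + b % 26) for b in digest[:10])
        # Use the next byte to pick a top-level domain.
        tld = TLDS[digest[10] % len(TLDS)]
        domains.append(f"{label}.{tld}")
    return domains


today = daily_domains(date(2009, 1, 1))
tomorrow = daily_domains(date(2009, 1, 2))

print(len(today), "names, for example", today[:3])
print("lists differ from day to day:", today != tomorrow)
```

Because the list is a pure function of the date, a Defender who has recovered the algorithm can compute future days' names in advance and arrange to block or register them. Until then, the names look like noise.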

The security community responded quickly. Within approximately 3 months, it had fully reverse engineered the name-generation algorithm and locked down all potential contact addresses. It was a momentous, timely, and effective effort.

But think about how much time that actually is: 3 months. Three months from discovery. The Attacker had 3 entire months to update Conficker and adapt while the Defender reacted. And update it they did, releasing B, C, D, and E variants at a rate of about one per month, each improving on the last.

How long had the exploit been used before that? Who knows? The flaw existed in Windows 2000 SP4 for at least 5 years before public discovery. There is simply no way to know if or how long one Attacker managed to keep it quiet before another made it into a worm.

Scenarios like this are fairly common. According to the 2012 Symantec study “Before We Knew It: An Empirical Study of Zero-Day Attacks in the Real World,” a previously unknown exploit (a Zero-Day) is used for between 19 days and 30 months, with an average of 10 months and a median of 8 months.4 Immunity Security published a report stating that the average was over 1 year. Either way, that means the Attacker can expect well over one-half year of initiative per exploit. That is quite an advantage.

The Defender can attempt to take the initiative, and it's not like the security community is sitting idly by. In 2011, Microsoft, in conjunction with the U.S. Marshals, FireEye security company, the University of Washington, and partners in the Netherlands and China, successfully launched a legal and technical counterattack that decapitated the Rustock botnet in one fell swoop.

This act of striking initiative undoubtedly took the botnet's masters by surprise. So the Defender can take initiative. It is just more difficult because it requires greater resources and coordination. The Attacker, however, does not require coordination. Therefore, until such time as this coordination is no longer required, the Attacker will maintain an asymmetric advantage in initiative.

Focus

The Attacker has a single mission and point of focus. Although that focus may be split among building capabilities and different operations, their focus is always on gaining, maintaining, and exploiting access.

Attackers also have positive feedback that reinforces focus. Attackers know when they gain access, when they fail, and when they lose access. This feedback enables them to adjust and try again, all while maintaining focus on the objective. Feedback keeps people focused and glued to the task—just ask the gambling and gaming industries.

Defenders, however, have their focus split between securing the network and running it. Similarly, technology companies have their focus split between developing secure versus timely and functional products.

Further, Defenders lack positive feedback. They know for certain only when they fail. Although you may detect one attack, there is no way to prove that you detected all attacks, that a successful intrusion did not occur. You cannot prove a negative. The feedback that the proverbial barn door is open comes only when Defenders notice the horse (that is, their data) galloping across the field. This difference in feedback and reinforcement serves only to exacerbate the existing difference in focus.

Therefore, due to singularity of purpose and feedback, Attackers have an asymmetric advantage in focus.

Effect of Failure

In classical war theory, when close combat dominated war, defense was considered the stronger form of warfare. It is easier to hold a prepared position than to advance into one. This conclusion contains a key assumption: fending off an attack has an impact on the Attacker. Each unsuccessful attack carries some risk and leads to fatigue, equipment damage, casualties, or other spent resources.

This assumption is invalid for computer operations. Preventing an attack may have no effect whatsoever on the Attacker.

The Defender may prevent an intrusion without ever detecting that an attack was attempted. Perhaps the attack was blocked outright by the firewall. Perhaps the web application was secure enough to withstand SQL injection attacks. Perhaps the luring e-mail was blocked by the spam filter. The failed attack may not even be logged. When Defenders do not know they were attacked, the only impact on the Attacker is loss of time.

The Defender may catch and stop the attack early in the process. Perhaps that malicious e-mail attachment is caught by antivirus as soon as it is opened. In this case, only the initial access tool is lost or, in the worst case, the outermost layer of the attack infrastructure. The exploit used to gain execution and the core infrastructure likely remain completely operational, as do the more advanced tools never installed that would have followed. Again, the only real loss to the Attacker is time.

This lack of impact on the Attacker is the theory behind honeypots. A honeypot is a computer network designed to entice Attackers in, to trick them into exposing a larger cadre of tools and methods in the hopes of inflicting a cost. Honeypots can be effective, but they have historically been expensive. Creating an entirely new network that is convincing enough to appear real is no small feat.

Generally though, an Attacker loses little in trying, failing, and waking up the next day to try again. There is no real impact on resources. Beyond that, the risk of legal or other consequences is essentially nonexistent. (Notwithstanding, the United States recently filed criminal charges against five members of the PLA. However, there is no expectation that this will immediately impact Chinese espionage; rather it is simply the first step in a broader program of enforcing consequences.)

By contrast, the effect of failing to prevent an attack can be devastating to the Defender. Trade secrets may be lost, customer confidence destroyed, money stolen, or business negotiating positions weakened, among other outcomes.

Unfortunately, even if the Defender successfully prevents an intrusion, they may be weakened. Attackers learn from their failures and adjust. Was the e-mail opened? What led to being stopped? Where are the public points of presence? Was the exploit stopped by a firewall? Which brand? How long did it take the Defender to react? Attackers may glean all this and more from an unsuccessful operation and put it to use on the next attempt.

The Defender has no such knowledge to gain from a single unsuccessful attack. Certainly you may learn how the Attacker attempted (or successfully gained) access the last time, but this tells you little about the next go-around. It requires a substantial amount of data across many attacks to even begin to make any kind of prediction about future methods.

The Attacker has little to lose and much to gain even in failure. The Defender has little to gain and much to lose. The effect of failure is asymmetric.

Knowledge of Technology

A well-funded Attacker knows more about common technology than the typical Defender. This is because the Attacker has a much broader base of experience having “worked on” more disparate networks than the typical system administrator. As you move from one network to the next, the commonalities become second nature. Whether it is router configurations, Windows Active Directory trees, LDAP configurations, types of mail servers, file servers, and such, there are but a limited number of typical setups, and the Attacker has seen them all.

This knowledge imbalance is even more certain for knowledge of how to break systems. Offense is the Attacker's full-time job. Some administrators may read about offense. Others may explore it as a hobby. But how many will spend most of their waking hours living and breathing offensive computer security like the Attacker? Few, and those few will be the exception.

Perhaps surprisingly, the Attacker is also more likely to understand defensive technologies, including how to effectively deploy them. Why? Defensive technology is part of both the Attacker's and the Defender's job, but there is a difference in motivation. The Defender must learn defensive methods and technologies to stay current and to maintain compliance. The Attacker must learn them to stay in business. You could, of course, argue the same thing for the Defender, but the urgency just is not there.

There is, however, one critical exception in the asymmetry of technical knowledge: if the Defender employs proprietary or rare technology. If no one else runs a particular package, then clearly the Attacker will know little about it at the onset. Here the Defender has an advantage, at least while their institutional knowledge of the system remains. This small plus, however, is often offset by the generally weaker security found in uncommon applications or by the additional complexity created by using nonstandard technology.

Knowledge of technology is clearly not an inherent asymmetry. A well-resourced Defender is capable of knowing just as much as the Attacker. In some sense though, it is just a reflection of motivation. At present, the Attacker is more motivated and as a consequence almost certainly more knowledgeable.

Analysis of Opponent

It is relatively easy for the Attacker to acquire and analyze defensive software and devices. For approximately $500, you can buy the top 10 personal security products that make up the majority of endpoint installations. For $500 K you can get the commercial versions and a consequential selection of defensive hardware. For $5 M—a lot for a small company but hardly a stretch for a large company or government—you can purchase practically every piece of software and a substantial portion of the hardware that is commercially available.

Of course, some things are not (easily) commercially available, such as high-end telecommunications gear, SCADA systems common in manufacturing, or other proprietary systems. Yet on balance, the bulk of any security is most likely made up of off-the-shelf components. So in general, Attackers can acquire, analyze, and test against these solutions before deploying their attack tools.

Also, the Defender's high-level technology choices tend to be quite stable, which can make analysis even easier. Switching between Cisco and Huawei hardware across an organization or between McAfee and Symantec software is a substantial undertaking. It will not be fast nor frequent. Attackers have basically as much time as they need for analyzing this high-level technology, limited only by how long a particular version of it will remain deployed.

The same ease of acquisition is not available to the Defender. Offensive tools are not for sale, at least not on open commercial markets. Yes, there are underground markets, where a single exploit purportedly can sell for $100,000 or more,5 but not all Defenders can pay this price, and few attack tools are generally available even if they could. The underground industry is smart enough to understand that if tools are available, security companies will just buy up the stock and protect against them. This would not be good for the purveyors' reputations or their business models.

In practical terms, offensive tools, at least the ones that are going to be used against you, cannot be purchased. They must be captured. This leads to a chicken-and-egg type problem: you must detect and capture a tool for analysis, but you must analyze the tool to detect and capture it. This circle often leaves the defensive industry stuck detecting the previous generation of tools.

Of course, it's not quite that cut and dried and hopeless. Defenders catch unknown tools all the time by looking for reused components and methodologies or variations of the two. This kind of analysis is done by pattern recognition and prediction. It works to an extent and is constantly improving, but it does not undo the fundamental imbalance. Defenders must guess and predict. Attackers simply purchase and know. Attackers have a fundamental advantage in analyzing their opponent.

Tailored Software

Consider the software at the so-called pointy end: software used to gain and maintain access and exfiltrate data for the offense, and software used to prevent this for the defense.

Attacker development is tailored to the task at hand. Whether it's a remote access tool, collector, or method of persisting, the Attacker controls the process from start to finish. There is only one set of users, one set of requirements, and one set of well-defined use cases. For anyone familiar with the process of software development, these limitations confer a huge advantage in speed.

The entire development life cycle can be compressed. Without outside customers, polish can be traded for functionality and reliability. Tools can be developed to meet the minimal requirements and then later expanded without worrying about how to attract customers. Testing can be done just-in-time when a new setup is encountered instead of having to test all potential situations up front. Training can be done across the entire user base. User feedback can be queried directly.

The Attacker's support tail is more flexible. Tools that outlive their usefulness can be abandoned entirely. Think about when a company discontinues a product line with an avid user base. Windows XP was released in 2001 and supported for 13 years. During 7 of those years, there was a replacement available (Windows Vista), and during 5 of those there was a decent replacement available (Windows 7). Cisco supports their hardware products for 5 years after they stop selling them entirely.

Commercial companies have to issue warnings of deprecation, develop upgrade paths, and provide long-term support. Attackers may do all this, or they may just throw the whole thing in the trash. There is no concern for damaging an external reputation. There is no need to convince users to spend more money for an upgrade. Attackers can make the most efficient decisions necessary without regard for losing market share.

Of course, just because Attackers can be efficient does not mean they will be. All the previously mentioned advantages presuppose nimble Attackers with decisive management and whose development and operational elements can effectively communicate. This ideal is hard to meet in organizations of any consequence. But the only thing limiting the Attackers' speed of development for pointy-end software is their resources and themselves.

This is in contrast to defensive tools. Defenders must wait for the commercial market to develop tools, a process that is typically a 1–2 year life cycle. Significant updates, in the best case, are quarterly. Nor are these out-of-the-box tools customized for Defenders. How could they be? Most tools need to appeal to a broad market to be profitable. So Defenders must take extra steps to adapt defensive tools to their particular scenario after delivery.

In short, Attackers have an advantage in creating and deploying pointy-end software. The development cycle can be condensed and it is under their own control. However, this advantage is not inherent. The defensive security market is actively researching and developing defensive architectures that can be quickly tailored to specific environments under the buzzword adaptive defense. Results so far have been muted, but it is in the early stages. If and when a true adaptive defense is achieved, the Attackers' advantage will dissipate.

Rate of Change

The fast pace of technological change works to the Attackers' advantage. The Attackers' superior focus, motivation, and initiative give them, almost by definition, the ability to act faster, to adapt, and to exploit. But that advantage has been covered in previous sections, so why call out rate of change separately? The rapidity of change yields another asymmetry: a shifting security foundation.

In the physical world, security is additive. If there is a locked safe, putting it in a locked room and then posting a guard at the entrance enhances the security of the safe. Each new layer builds upon the previous foundation and makes the whole system more effective.

This intuitive understanding of security does not translate to the virtual world. The massive increase in computing power and the corresponding increase in system and software complexity guarantee there is simply no solid foundation to build upon. The pace of change prevents it.

In 2014, the world was introduced to Heartbleed. This bug affected OpenSSL, a software program used across the world to establish secure connections between web browsers and websites. (You are using SSL or the Secure Sockets Layer when you see the secure lock icon or the green highlighting in your browser. OpenSSL is a popular implementation of this protocol.) The bug allowed Attackers to read information out of a server's memory, information like passwords and credit card numbers.

The bug was introduced in March 2012, and subsequently discovered and patched in April 2014. Putting aside the technical details of how it worked, consider only the chain of events. A software program, whose sole purpose is establishing trust, is made more vulnerable by an update, and it takes more than 2 years before anyone notices. Security is clearly not additive.

This simple fact is what makes the comparison of virtual security to the physical security of somewhere like Fort Knox invalid. When Fort Knox's defenses are updated, they are added to a well-established foundation. They do not weaken previously deployed defenses. However, when software is updated, especially if new features are added, history has shown there's a decent chance new vulnerabilities will be introduced.

The rate of change and the resultant shaky foundation it creates offer a renewing stream of vulnerabilities that is to the Attacker's advantage. The pace could theoretically work to the Defender's advantage if the Attacker fell behind. But so far this has not been an issue, and it is unlikely to become one while the Attacker maintains an advantage in motivation and focus.

Advantage Defender

The Attacker has many natural advantages over Defenders, but they do not hold all the cards. There are a few areas in which Defenders have their own favorable asymmetries.

Network Awareness

The network is the Defender's home court. User log-ons, network traffic, application use, and other information can all be logged, monitored, and analyzed. The Defender has the ability to have complete network awareness, and with that ability, the Defender is capable of ferreting out the Attacker.

Of course, exercising this ability requires time, money, expertise, and the adeptness to intelligently avoid information overload. There is a wide gap between the potential of total awareness and the reality of it that is rarely closed. For example, many companies do not even have a basic high-level drawing of their own network layout when asked for it by assessment teams. If you do not understand how your network is put together, there is no hope of finding an intrusion. Nonetheless, it is within the sole power of the Defender to close this gap, and at least in theory, it is always possible to detect the Attacker.

The Attacker lacks this level of capability. They simply cannot acquire the same level of detail with the same level of effort. Everything is more difficult. The Defender can read the manufacturer and model number of a router off the label, while the Attacker has to figure this out from surveys and profiling tools. The Defender can collect and search hard drives full of monitoring information, whereas the Attacker has no practical method of getting this information.

Now in some instances, the Attacker may actually understand the network better than administrators. As the Attacker enumerates and navigates the network, their information is dynamically refreshed. Meanwhile, the Defenders' information may grow stale. But this is merely an advantage of circumstance and effort in spite of the asymmetry, not because of it.

The Defender has full access to every switch, every router, every firewall configuration, and every computer. If leveraged with the right tools and expertise, this level of access and awareness can be a dominant advantage.
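As a small illustration of the kind of analysis only the Defender can run, here is a minimal sketch that compares new log-on events against a historical baseline and flags any user appearing on a machine they have never used before. The event format, a (user, host) pair, and every name in the sample data are assumptions made for the example; real log sources will look different.

```python
def find_unusual_logons(baseline, new_events):
    """Return new (user, host) events whose pairing never occurs in the baseline."""
    known = {}
    for user, host in baseline:
        known.setdefault(user, set()).add(host)
    # A user with no history at all is flagged on every host.
    return [(u, h) for u, h in new_events if h not in known.get(u, set())]


# Hypothetical data: past log-ons the Defender has already collected.
baseline = [("alice", "ws-01"), ("alice", "ws-01"), ("bob", "ws-02")]
# Hypothetical data: log-ons observed today.
new_events = [("alice", "ws-01"), ("bob", "db-07"), ("carol", "ws-03")]

unusual = find_unusual_logons(baseline, new_events)
```

Here the sketch flags bob on db-07 and carol on ws-03, neither of which has a precedent in the baseline. The Attacker has no equivalent: the history needed to build the baseline lives on the Defender's side.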

Network Posture

Defenders can change the constraints of the network at any time and in any manner. It is not just their home court. They can make and remake the court and its rules at will.

Posture changes can range from the simple, such as changing a password, to the complex, such as segmenting portions of the network. New network layouts, security technologies, auditing, or the tightening of technical and nontechnical policies and controls can all be introduced with impunity. The Attacker cannot prevent the Defender from doing anything.

By contrast, the Attacker must expend significant time and effort to keep from being blinded by changes in network posture. The introduction of a firewall rule, the decommissioning of a switch, or the updating of a single computer can set back Attackers if they are not careful.

This disparity of control opens a theoretical way of keeping the Attacker off balance. A moving target is harder to hit. The concept of keeping things in motion has been applied with some success on a microscale through antiexploitation technologies such as address space layout randomization (ASLR). At a high level, ASLR changes how programs are loaded, making certain classes of vulnerabilities much harder to exploit without affecting the program itself.
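The moving-target idea behind ASLR can be sketched with a toy simulation. This is not how any real loader works; the base address, page size, number of possible slots, and function offset below are all assumed values chosen for illustration. The point is only that an exploit built around one hardcoded address succeeds every time against a fixed layout and almost never against a randomized one, while the program's own internal offsets stay the same.

```python
import random

PAGE = 0x1000          # assumed page size
FIXED_BASE = 0x400000  # assumed default load address
SLOTS = 1 << 16        # assumed number of possible randomized positions


def load_program(rng, randomize):
    """Return the base address a toy loader picks for the program."""
    if not randomize:
        return FIXED_BASE
    return FIXED_BASE + rng.randrange(SLOTS) * PAGE


def exploit_succeeds(base, guessed_target, offset=0x1234):
    """A hardcoded-address exploit works only if the guess matches reality."""
    return base + offset == guessed_target


rng = random.Random(0)
guess = FIXED_BASE + 0x1234  # the address the Attacker baked into the exploit

fixed_hits = sum(exploit_succeeds(load_program(rng, False), guess) for _ in range(1000))
aslr_hits = sum(exploit_succeeds(load_program(rng, True), guess) for _ in range(1000))
```

Against the fixed layout, all 1,000 attempts land. Against the randomized layout, each attempt has a 1 in 65,536 chance under these assumptions, so hits are rare.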

Creating a moving target is a powerful idea. To date, however, there is little that leverages an analogous concept across a network. The challenge, of course, is how to effectively randomize an entire network in such a way that it still functions as expected for the user base but confuses the Attacker. Is this possible? It's an open question. Either way, more could be done to effectively leverage the asymmetric advantage of control of network posture.

Advantage Indeterminate

It's 6:00 p.m., and the local high school football game is about to start on a field that runs east-west. It's clear that one team is going to have the sun in its eyes the entire first half, and by the time the teams switch sides, the sun will have set and it will not be an issue for their opponents.

Some asymmetries are like that. One side will have an advantage, but until the coin is tossed, it's impossible to know which. These asymmetries are worth mentioning, if only to be aware of how factors outside one's control set the stage.

Time

Time constraints are rarely symmetric. For the Attacker, time is required to understand the target and hide, expand, and complete the objective. Every stage of the operation requires time. The Attacker also needs time to develop the technology and methodologies necessary to execute an operation.

The urgency of the operation varies considerably with the operational objective. If the objective is strategic in nature, such as stealing intellectual property, perhaps the Attacker has months or even years to infiltrate. They can just keep pounding away like waves against a sea wall until eventually a breakthrough is found. This is allegedly how China is operating within many U.S. industrial and technology sectors: slowly and patiently.

If the operation is tactical in nature, time may be more constrained. Interdicting a shipment of weapons or gaining insight into a country's political system can have rather strict deadlines.

With more time, Attackers can develop better capabilities and improve their access in a network, or their existing abilities can atrophy and they can become more exposed. Time can help and it can hurt.

By contrast, Defenders need time to maintain the network and update, upgrade, and expand it. They need time to build and maintain expertise, train new employees, and create and implement secure processes. Defenders also need time to find, analyze, and counter Attackers.

With more time, Defenders can strengthen their perimeter and internal controls or grow more complacent. They can seek out existing infiltrations, or remain blissfully unaware as the Attacker burrows ever deeper.

Which side has the advantage? There are simply too many variables to make a blanket assertion. What is certain is that time is not under the full control of either party. The Attacker dictates the Defender's time line and vice versa, while the technology drives both. It is an asymmetric advantage that is up for grabs.

Efficiency

Efficiency is a measure of how well an organization performs against some criteria within the given time constraints.

For an efficient Attacker, interim criteria may include the total number of simultaneous targets, the depth of penetration, the number of times detected, the speed of new technology development and deployment, or any number of other metrics. The ultimate criterion is the value of whatever information is gathered measured against the cost of gaining it, as shown in Figure 4.3.

Figure 4.3 Attacker efficiency criteria

Compromising 500 pizza delivery places sounds impressive but is unlikely to have the same value as the intellectual property of a major chemical manufacturer.

For the Defender, interim criteria may include the speed and efficacy of patching or the lag time between compromise and detection. The ultimate criterion is the value of whatever information is secured measured against the cost of securing it, as shown in Figure 4.4.

Figure 4.4 Defender efficiency criteria
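One possible reading of "value measured against cost" is a simple ratio. The sketch below takes that reading as an assumption, and every figure in it is hypothetical, in arbitrary units, chosen only to echo the pizza-versus-chemical-manufacturer comparison. The same function applies to a Defender by substituting the value secured and the cost of securing it.

```python
def efficiency(value: float, cost: float) -> float:
    """Value obtained (or protected) per unit of cost spent."""
    if cost <= 0:
        raise ValueError("cost must be positive")
    return value / cost


# Hypothetical figures in arbitrary units, for illustration only.
# 500 pizza places worth 1 unit each, compromised at a total cost of 250.
pizza_campaign = efficiency(value=500 * 1.0, cost=250.0)
# One chemical manufacturer's intellectual property, at a much higher cost.
chemical_ip = efficiency(value=10_000.0, cost=1_000.0)
```

With these made-up numbers, the single high-value target comes out five times as efficient as the 500 low-value ones, even though it cost four times as much to reach.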

Efficiency varies widely. Attackers may range from loose-knit criminal organizations to professional intelligence organizations, though which is more efficient is not a given. Larger organizations have the capability to be more efficient through scale (think Wal-Mart) but they can get bogged down under the weight of their own processes (think your cable company). Defenders can vary just the same.

It is impossible to generalize which side has the advantage. Are most Attackers more efficient than most Defenders? Yes, if you count the number of attacking organizations versus the number of organizations hacked. But what if you count the number of people who produce offensive technology versus the number who produce defensive technology? Then the answer is not so clear. Maybe the Attackers are not nearly as efficient as presumed.

While generalizing is impossible, one side will most certainly be more efficient for a given Attacker/Defender matchup. Therefore, efficiency is an indeterminate asymmetry. An organization must improve its efficiency in the hope of gaining an advantage over its opponent.

Summary

People commonly cite cost and attribution as the great asymmetries in cyberspace, but these are strategically irrelevant. Attacking is not as cheap as it seems, and attribution is unlikely to change behavior. The true asymmetries are in motivation, initiative, focus, and other areas broader than the specifics of the technology. Recognizing which asymmetries are intrinsic versus those that just reflect current circumstances is the essential first step in minimizing, maximizing, or reversing these advantages outright.

In the next chapter, we'll examine the things that impede the Attacker in spite of their many asymmetric advantages.