Chapter 6

Defender Frictions

It is possible to commit no mistakes and still lose.

—Captain Jean-Luc Picard

Defenders have their own set of impediments that occur repeatedly. At the top of the list is that they are consistently hacked, but stating this truth as a friction is not very helpful. What are some of the recurring issues that make defense more difficult? Identifying these and minimizing their effects is a must for any effective defensive strategy.

Mistakes

The Defender is human and therefore makes mistakes. Assuming the existence of a mistake-free environment can be your first mistake.

Not all mistakes are created equal. Accidentally leaving your workstation logged in while you go to lunch is a security issue, but unless someone walks by at that exact moment and installs something malicious, it's a harmless mistake.

Other mistakes may be caught and corrected before it's too late. A Goldman Sachs contractor accidentally e-mailed “highly confidential” account information to someone's @gmail.com account instead of the @gs.com account that was intended. Sure, they had to pay a lawyer to get a court order to get Google to delete the e-mail, but what could have been a breach of security was caught and fixed in time.1

Some mistakes have actual consequences. Misconfigure a firewall and suddenly the world can access the internal network. And access it they will. Fail to notice an alert, and what could have been an easily cordoned breach turns into millions of lost credit card numbers. This is the mistake the retailer Target committed in late 2013.

The story [sources] tell is of an alert system, installed to protect the bond between retailer and customer, that worked beautifully. But then, Target stood by as 40 million credit card numbers—and 70 million addresses, phone numbers, and other pieces of personal information—gushed out of its mainframes.2

In January 2014, the Chinese Great Firewall started redirecting millions of users to Dynamic Internet Technology, a company that sells anticensorship software for Chinese users.3 Oops! I would not want to be the guy who caused that. No doubt what we view as ironic, the Chinese government considered a critical security mistake.

The severity of a mistake's consequences depends on several factors, but a key one is whether a system is designed to fail “open” or fail “secure,” where failure in this case means human error or oversight. Put another way: does a mistake leave things more accessible than wanted or less?

Failing to add a new user is a mistake, but one that fails secure. Failing to remove a user is a similar mistake, but that error fails open, leaving an avenue for unauthorized access. For security, the trick is to minimize the number of potential systems and processes that fail open and to develop a response plan for those that remain.
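The difference can be made concrete with a minimal Python sketch. Everything in it is hypothetical: the user names, the two lists, and the function names are invented for illustration. It shows the same kind of oversight, a forgotten list update, under two designs. With an allowlist the omission locks someone out; with a blocklist it lets someone in.

```python
# Hypothetical illustration: the lists and names are invented for this sketch.

def allowed_by_allowlist(user, allowlist):
    """Grant access only to users who are explicitly listed."""
    return user in allowlist


def allowed_by_blocklist(user, blocklist):
    """Grant access to everyone who is not explicitly listed."""
    return user not in blocklist


# Oversight 1: the new hire "carol" was never added to the allowlist.
allowlist = {"alice", "bob"}

# Oversight 2: the departed contractor "mallory" was never added to the blocklist.
blocklist = {"eve"}

# The allowlist design fails secure: carol is inconvenienced, nothing is exposed.
carol_gets_in = allowed_by_allowlist("carol", allowlist)

# The blocklist design fails open: mallory still has access.
mallory_gets_in = allowed_by_blocklist("mallory", blocklist)
```

Neither design prevents the mistake. The allowlist only changes who pays for it: a legitimate user who will complain quickly, rather than an unauthorized one who will not.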

No matter how well prepared the Defender may be, mistakes and their effects are a key defensive friction.

Flawed Software

The Defender must deal with flawed software just like the Attacker. This much is obvious or security would be perfect, and offensive and defensive strategies would be irrelevant. Software is demonstrably flawed in all kinds of ways.

Software may be flawed in implementation, usually called a bug. The word bug simply means that a system has some behavior not intended by its developers. It may be that a particular program crashes if you try to open a menu while it is processing. It could be that a truncated document causes the program to corrupt its own memory. It may be that the program deadlocks, a condition in which different parts of a program are waiting on each other so that nothing ever completes. (This is sometimes what causes your mouse cursor to change into a spinning wheel.) It could be that the program fails to clean up after itself, slowly bringing the entire system to a halt. The list of bug manifestations goes on and on.

Software may be flawed by design. Do a search for “hardcoded backdoor,” and you can find lists of printers, routers, and software from Samsung, Dell, Barracuda, Netis, Cisco, and more where developers left in backdoors that require minimal skill to exploit. Even one of the FBI's wiretapping tools is vulnerable.4

Other flawed designs are not so egregious but involve flawed assumptions. For example, many industrial control system devices make the implicit assumption that they would never be connected to an externally accessible network. This was a reasonable assumption, especially since in many cases, at the time of initial development, the Internet did not exist yet. Fast-forward 10 or 20 years and someone bolts on a networking capability for remote management without reconsidering the consequences. There is no bug here. The system is performing exactly as intended and built. Yet it is hopelessly insecure.

Software may also be flawed by functional omission, meaning some feature or protection that should be there is not. Until September 2014, Apple's automatic mobile phone backup service, iCloud, allowed repeated failed login attempts with no consequences. To the dismay of many female Hollywood celebrities, this functional oversight allowed hackers to try millions of passwords, eventually logging in to the accounts by brute force. Once in, they restored the celebrities' phone backups to their own devices, downloading several intimate photographs in the process. Apple's security model was not broken, nor was there a bug. But its security failure by omission, and then quite a bit more, was laid bare for all to see.
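To show what the omitted protection might look like, here is a minimal Python sketch of a failed-login lockout. It is not a description of anything Apple deployed; the class name, the thresholds, and the injectable clock are all assumptions made for illustration.

```python
import time


class LoginThrottle:
    """Hypothetical sketch: lock an account after repeated failed logins."""

    def __init__(self, max_failures=5, lockout_seconds=900, clock=time.monotonic):
        self.max_failures = max_failures        # assumed threshold
        self.lockout_seconds = lockout_seconds  # assumed lockout duration
        self.clock = clock                      # injectable so it can be tested
        self._failures = {}       # account -> consecutive failed attempts
        self._locked_until = {}   # account -> clock value when lockout ends

    def is_locked(self, account):
        locked_until = self._locked_until.get(account)
        return locked_until is not None and self.clock() < locked_until

    def attempt(self, account, password_ok):
        """Record one login attempt; return True only if it is accepted."""
        if self.is_locked(account):
            # Rejected regardless of the password, so guessing gains nothing.
            return False
        if password_ok:
            self._failures.pop(account, None)
            return True
        count = self._failures.get(account, 0) + 1
        self._failures[account] = count
        if count >= self.max_failures:
            self._locked_until[account] = self.clock() + self.lockout_seconds
            self._failures.pop(account, None)
        return False
```

With these assumed defaults, an Attacker gets at most five guesses per account every 15 minutes, which turns millions of guesses into years of waiting. The trade-off is that anyone can now lock a legitimate user out by failing on purpose, so the protection creates a small friction of its own.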

Even security software is not immune. A quick search of the Common Vulnerabilities and Exposures database maintained by the U.S. government shows that between 6/2014 and 9/2014, a short 3-month time window, the security products companies Kaspersky, Symantec, McAfee, AVG, Panda Security, Cisco, and Juniper all had reported vulnerabilities in at least one of their products. Some created additional attack vectors that would not have been there if the product were not installed.

There is nothing special about the preceding company list or the time frame. Searching for practically any security company yields results, if not in those 3 months, then within the last year. Security vendors have every motivation to make products secure, but they, too, release flawed technology.

In short, regardless of intent or resources, decades of experience have taught us that all software has flaws and some percentage of those flaws affect security. So what can be done?

Simply removing flawed software is impractical. Even if flawed software could be recognized (all evidence suggests it can't), you cannot remove anything the Defender depends on even if it is egregiously insecure.

Upgrading may not be an option either. The original software developer may be out of business. It may be an embedded system that cannot be updated. It may be so intertwined into the call center, manufacturing equipment, inventory tracking, or other business critical system as to be unrealistic to replace. Upgrading, even when technically possible, may be simply cost prohibitive.

Patching is its own friction. Figure 6.1 shows what patching is like for one computer.

Figure 6.1 All Adobe Updates5

The potential confusion and frustration are only multiplied in a corporate environment. The Defender must not only spend time and resources applying patches as they arise, but must also spend precious time proactively testing updates lest they negatively impact the business. Failing that, the Defender must respond after the fact to any applied updates that break things.

Broken updates are not a theoretical issue. The September 2014 update to iOS 8.0.1 for iPhone prevented many devices from, well, working as a phone. It killed the ability to get cellular service. Not one month earlier, Microsoft released an update that caused many Windows systems to crash. And these updates are from well-funded companies.

Whether by implementation, design, or omission, flawed software is here to stay. In some cases, it cannot be updated, but even in those where it can, the updated versions will contain flaws. In the best case, these flaws will serve as a source of negligible unpredictability to the Defender; in the worst, a source of entry or expansion for the Attacker. They are an irremovable defensive friction.

Inertia

Law I. Every body perseveres in its state of rest, or of uniform motion in a right line, unless it is compelled to change that state by forces impressed thereon.

—Sir Isaac Newton from Philosophiæ Naturalis Principia Mathematica

Newton's First Law of Motion, or the law of inertia, is one of the foundations of physics. But it should be considered a foundation of human psychology and action as well.

Consider Windows XP. It is well over a decade old and has not been supported since April 2014. It is by definition insecure now, as every new vulnerability discovered will be permanent. It's not as if the original software developer went out of business. There is a replacement readily available, and Microsoft has expended untold effort to ensure Windows 7 and 8 are backward compatible.

Still XP persists in the millions. Why? Inertia: it requires “force” to change, where force is the resources and motivation to change and the knowledge that it is necessary.

The cost of upgrading is clear. It takes time and expertise to do the upgrade, money for the new operating system, and money to replace old hardware. It may also take time, expertise, and money to deal with any resulting incompatibilities.

What's the cost of not upgrading? At some point in the near or distant future, an Attacker may or may not get access to this computer, and then do something that may or may not cause anyone any heartache whatsoever. Tough choice? Not really. The system works. Leave it alone and go concentrate on something else.

This, of course, assumes people are even making that choice. Most people have automatic updates set for their computers, but how many people know if the firmware on their home router is up to date? How many know what firmware is? (It's the low-level software burned into the device that loads the operating system.) What are the risks if it is out of date? Answer: who cares? People have better things to worry about. They are quite justifiably ignorant, and this ignorance is a form of inertia.

Inertia is hard to overcome. “If it ain't broke, don't fix it” is a perfectly rational attitude, especially when fixing it requires time and money. Sometimes even “Well it is broke, but fixing it would be hard” wins the day as well. Google aptly demonstrated this attitude with a January 2015 announcement that they will not fix security issues in WebView, a component currently on 60% of Android phones.6 Even multibillion dollar companies have levels of inertia they are unwilling to overcome.

Until the cost of not upgrading is made more painful than neglecting other duties, inertia will remain a defensive friction.

The Security Community

The security community seeks out flaws to get them addressed. As discussed earlier, this is a clear Attacker friction. So how does discovery hurt the Defense?

What if a Defender cannot update due to incompatibilities or because the flaw is built into the hardware? Even if they could update, inertia and resource constraints may prevent all Defenders from updating in a timely fashion.

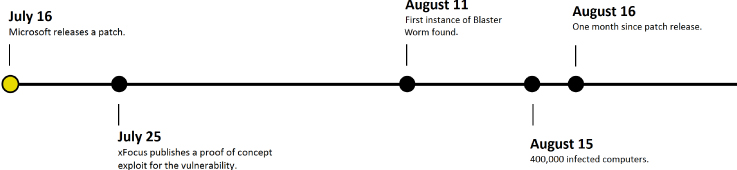

On July 16, 2003, Microsoft released a patch for a vulnerability in Windows (MS03-026). Prior to this, as far as anyone knows, no one outside of Microsoft was aware of the issue. By July 25th, the Chinese group xFocus had reverse engineered the patch to discover the vulnerability and published a proof of concept on how to exploit it. By August 11th, a worm was found spreading on the Internet. By August 15th, the now named Blaster Worm had infected more than 400,000 computers. As shown in the time line in Figure 6.2, that's less than one month from a released patch to a widespread worm.

Figure 6.2 Blaster Worm time line
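The intervals are easy to check with a few lines of Python. The sketch below uses only the four dates given in the text; the variable names and event labels are invented for illustration.

```python
from datetime import date

# Dates as given in the text; the labels are illustrative.
patch_released = date(2003, 7, 16)
events = {
    "proof of concept published": date(2003, 7, 25),
    "worm found spreading": date(2003, 8, 11),
    "more than 400,000 infections": date(2003, 8, 15),
}

# Days elapsed between the patch release and each later event.
days_after_patch = {
    name: (when - patch_released).days for name, when in events.items()
}

for name, days in days_after_patch.items():
    print(f"{name}: {days} days after the patch")
```

The gaps come to 9, 26, and 30 days. A Defender on a monthly patch cycle could have applied the fix on schedule and still been too late.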

The Attacker was handed a new attack vector by the patch. What could Microsoft have done differently? Nothing. Microsoft's actions improved security in the long run. The alternative of not releasing the patch would have left everyone insecure, not to mention exposing the company to untold liability.

For a more recent example, in September 2014, a critical flaw in the Linux program Bash was disclosed by Red Hat, a Linux development and support company. The Linux operating system powers more than one-half the world's websites, and Bash is a key component of almost every Linux system.

At the same time the flaw was disclosed, a fix was released. The fix itself was incomplete, but that did not actually matter. Within 24 hours, Attackers were actively scanning the Internet and using the vulnerability in the wild. Let me repeat: attacks were detected within 24 hours, a next-to-impossible deadline for any IT staff.

These are but two examples. Many smaller, less widespread flaws are reported all the time. In some cases, because they are less popular, they do not receive as much attention, and therefore Defenders are even more likely to delay or forgo updating.

In finding flaws and fixing them, the security community can make the Attackers' job paradoxically easier. The security community is therefore a source of friction to Defenders.

Complexity

Every technical aspect of the target is complex. Computers and networks are complicated things. They have architectures, operating systems, file systems, protocols, applications, and more. Typical users scratch only the surface of understanding the components that sit on their desks. My laptop has 400,000+ files and 50+ programs running on it when it first starts up. Which are necessary? Which have vulnerabilities? There is simply no way to tell. It is far too complicated. And that's only the computer in front of me.

Routers, the backbone of any network, are complex computers that are less visible but equally vulnerable. Until recently, few paid any attention to these devices, but that changed in 2014 when a worm was released for Linksys routers, a prolific home brand.7 This particular worm, dubbed “TheMoon,” was not very effective, but it could have been. Time and money are all that stood between a somewhat harmless annoyance and a full-featured home router toolkit that could redirect online banking, eavesdrop on insecure communications, or serve as an entry point into the network behind it.

Practically every network in the world also contains a printer. The dot matrix printers of yore were pretty simple, but a modern printer runs a full operating system. In September 2014, a researcher compromised a Canon Pixma printer and reprogrammed it to play the legendary game Doom on its tiny LCD screen.8 Does anyone doubt he could have instead surreptitiously copied and exfiltrated every printed document? Modern printer complexity makes them ripe for exploitation.

Given that printer flaws have been demonstrated for years now, I am surprised that my searches turn up no real-world examples of printer malware. Of course, this may have more to do with the fact that few, if any, defensive tools exist. If your printer was infected, how would you know? Answer: you wouldn't.

In 2000, Bruce Schneier wrote in his book Secrets and Lies, “Complexity is the worst enemy of security…. A more complex system is less secure on all fronts. It contains more weaknesses to start with, its modularity exacerbates those weaknesses, it's harder to test, it's harder to understand, and it's harder to analyze.”9

I'll add one more to the list: it's harder to fix, even if the vulnerability is understood. When, for example, Microsoft identifies, analyzes, and develops a fix for a security issue, it still has to retest it on thousands of operating system configurations, and despite best efforts, the fix is sometimes flawed.

In November 2014, Microsoft pushed out a patch for the SChannel flaw, a serious remote execution vulnerability affecting all versions of Windows since 2003. For some, the patch caused processes to hang, services to become unresponsive, issues with certain database applications, and more.10 Microsoft had to retract the patch and issue another one a couple of weeks later. Microsoft, a company with years of experience testing and issuing patches, missed the complex interactions the first time around.

The same problem exists for any major software vendor. Fixing complex systems is more costly and adds to the difficulty of defense.

Complexity also makes deployment mistakes more likely. Even if all software were flaw free in terms of vulnerabilities, it still requires someone to configure and use it. A firewall with a single misconfiguration can turn an otherwise impervious fortress into a Maginot Line.
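As a minimal sketch of how one such misconfiguration might be hunted for, the Python below scans a rule list for anything that allows the whole Internet to reach a port not meant to be public. The rule format, the port choices, and the function name are all hypothetical; real firewalls each have their own syntax and far more ways to go wrong.

```python
import ipaddress

# Hypothetical rule format, invented for this sketch.
RULES = [
    {"action": "allow", "source": "10.0.0.0/8", "port": 22},
    {"action": "allow", "source": "0.0.0.0/0", "port": 443},
    {"action": "allow", "source": "0.0.0.0/0", "port": 3389},  # the single mistake
    {"action": "deny", "source": "0.0.0.0/0", "port": None},   # None = all ports
]

# Ports this hypothetical organization intends to expose to the world.
PUBLIC_PORTS = {443}


def overly_permissive(rules, public_ports):
    """Return allow rules open to any source on ports not meant to be public."""
    flagged = []
    for rule in rules:
        if rule["action"] != "allow":
            continue
        network = ipaddress.ip_network(rule["source"])
        open_to_world = network.prefixlen == 0  # a /0 matches every address
        if open_to_world and rule["port"] not in public_ports:
            flagged.append(rule)
    return flagged


flagged_rules = overly_permissive(RULES, PUBLIC_PORTS)
```

A check like this catches only the mistakes someone thought to look for, which is rather the point: the more rules, devices, and exceptions there are, the more ways exist to be wrong that no audit anticipates.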

Finally, complexity is hard to avoid. Witness the recently developed U.S. government system for purchasing health care insurance, also known as Healthcare.gov. Unsubstantiated reports put it in the 3 million lines of code range for the site and all its various backend systems, databases, and interchanges. This number may or may not be true, but it's reasonable enough.

And that's the scary part: 3 million lines of code are reasonable for the scope of what needed to be accomplished. How many people believe that this system was adequately security tested before launch? While contemplating your answer, keep in mind it was not adequately functionally tested before launch. You might then ask, why was it so complicated? For the same reason most software is complicated. It's the only way to deliver the features that the market, or in this case the law, demanded.

(By the way, lest you think this is a political commentary, I would be far more concerned about the hundreds of millions of lines of code in the operating systems, databases, and network infrastructure that this and every other public service runs on top of. Oh, that and the insurance companies with actual medical information, not just eligibility information, that have already been hacked. The latest example is the insurance giant Anthem, which, almost as if on cue, had 80 million customer records stolen in early 2015, corresponding to tens of millions of customers.11)

On top of all this, not only is complexity hard to avoid creating, it's hard to simplify once created.

The Department of Veterans Affairs and the Department of Defense spent at least $1.3 billion during the last four years trying unsuccessfully to develop a single electronic health-records system between the two departments—leaving veterans' disability claims to continue piling up in paper files across the country, a News21 investigation shows.

This does not include billions of other dollars wasted during the last three decades, including $2 billion spent on a failed upgrade to the DOD's existing electronic health-records system.12

Attempting to combine and simplify two military systems was a complete failure. Starting from scratch is not necessarily much better either. Witness the Virtual Case File, a program designed to replace the FBI's legacy Automated Case Support system: $170 million later, the new system was abandoned entirely.

There's certainly plenty of blame to go around in every example of failed attempts to reduce complexity, and rest assured, fingers get pointed in all directions by a lot of people. However, these are just a small sample of the highly publicized ones. The larger point remains: complexity is the worst enemy of security, and it is not going anywhere. It is a friction for every aspect of defensive security.

Users

A great source of friction for the Defender is the user base. Users will ignore policy. They will find a way to undo security restrictions that they find cumbersome. They will ignore warning signs of compromise and chalk it up to “that's just what the computer does sometimes.”

Users will open unsolicited attachments. They will forward company proprietary information to their own web mail accounts. Even seemingly innocuous behavior, like bringing in a child's book report on a thumb drive for printing, can cause security headaches.

I have witnessed an employee bring in hard drives full of music and movies (and whatever malware may have gotten on to them) and connect the drives to the company network. Another hooked up a computer outside the company firewall to circumvent restrictions on downloads. The funny part is neither act was malicious. In the download case, there was actually a legitimate business reason for it. Yet neither user bothered to clear the insecure behavior through IT.

There is no end to the creative abuse that the user base can and will find. And this assumes no malice whatsoever.

What is the best way to deal with this? The security-conscious network administrator walks a fine line. Imposing strict controls without the ability to quickly deal with the inevitable exceptions just breeds a hostile user base. Imagine having to put in a request to browse the results of a Google search. I've seen one company try this. The rule lasted less than a day. Employees do not like to wait minutes, never mind hours or days, to gain access to something they feel is justified. The Internet is full of stories where employees feel that corporate IT just gets in the way.

Also, what should an administrator do when the “stupid user” who causes issues is the boss? According to one survey of security professionals by ThreatTrack Security, 40% of respondents said they “had to remove malware after a senior executive visited an infected pornographic website.”13 I have to believe most executives were warned not to browse porn at work at some point in their careers. And still, 40%.

So what's the right course of action here? Attempt to restrict the executive's access to contain the damage, or quietly let it pass and just deal with it? Making the correct security call should not involve weighing your career prospects, but in the real world it can.

There is no easy win with users. Short of introducing draconian consequences, the user base will ignore good practice and policy when it suits them and therefore will always remain a Defender friction.

Bad Luck

Murphy's law is not limited to the Attacker. Laptops will be stolen. Disgruntled employees will retaliate. Servers will suffer inopportune outages. Software that worked for years will stop working because of some unrelated update. The Defender's attention will be grabbed and held elsewhere to the detriment of security.

Not every friction can be named, predicted, or planned for despite all care. Bad luck will always play a role.

Summary

The effect of frictions can be reduced through expertise, training, process, and technologies, but the frictions themselves can never be eliminated. Both Attacker and Defender must craft a strategy that recognizes and accommodates their frictions while seeking to increase those of their opponent.

In the next chapter, we'll explore the framework of offensive principles necessary to maximize offensive asymmetric advantages and minimize frictions.