2

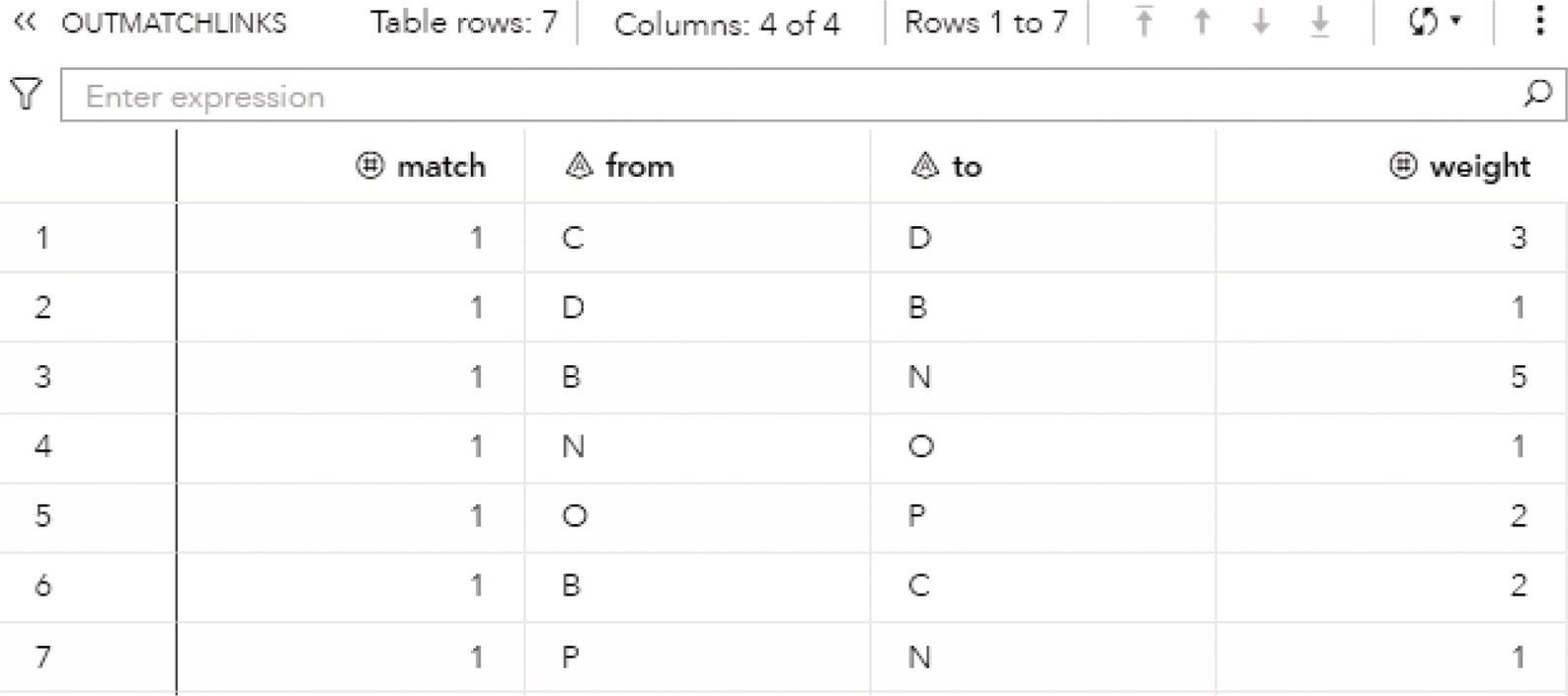

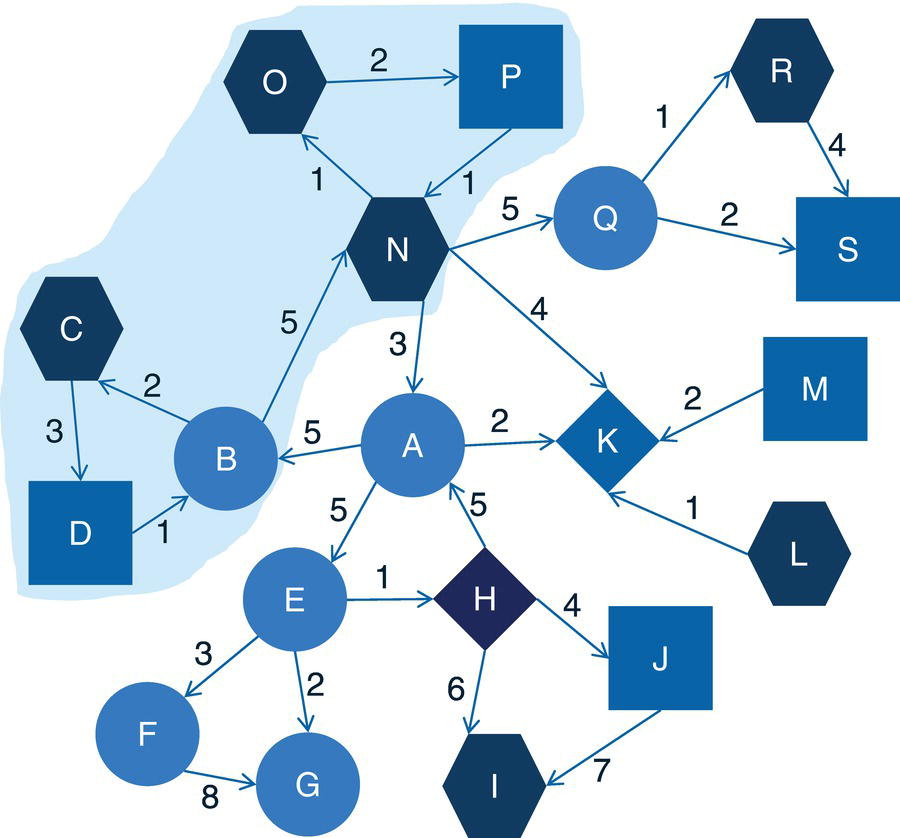

Subnetwork Analysis

2.1 Introduction

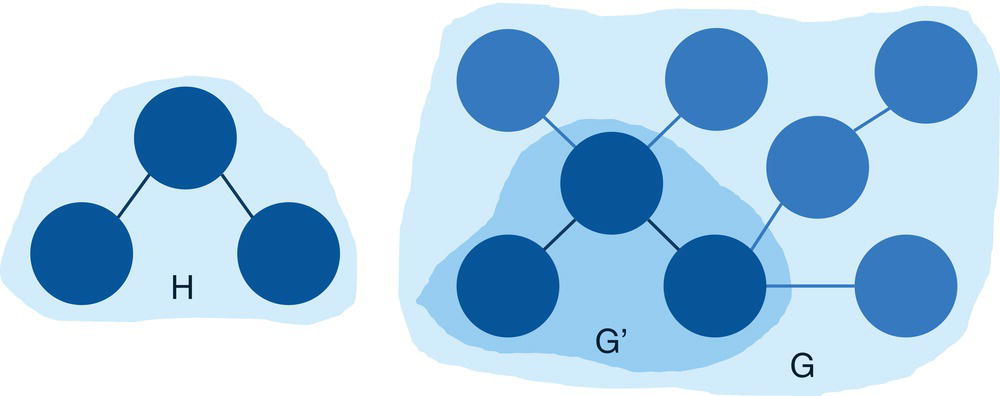

An important concept in graph theory is the subgraph. A subgraph of a graph is a smaller portion of that original graph. For example, if H is a subgraph of G and i and j are nodes of H, then by the definition of a subgraph, the nodes i and j are also nodes of the original graph G. However, one subtle point needs to be highlighted. If the nodes i and j are adjacent in G, which means that they are connected by a link, the definition of a subgraph does not require that the link joining i and j in G is also a link of H. If the subgraph H has the property that whenever two of its nodes are connected by a link in G, this link is also a link in H, then H is called an induced subgraph.

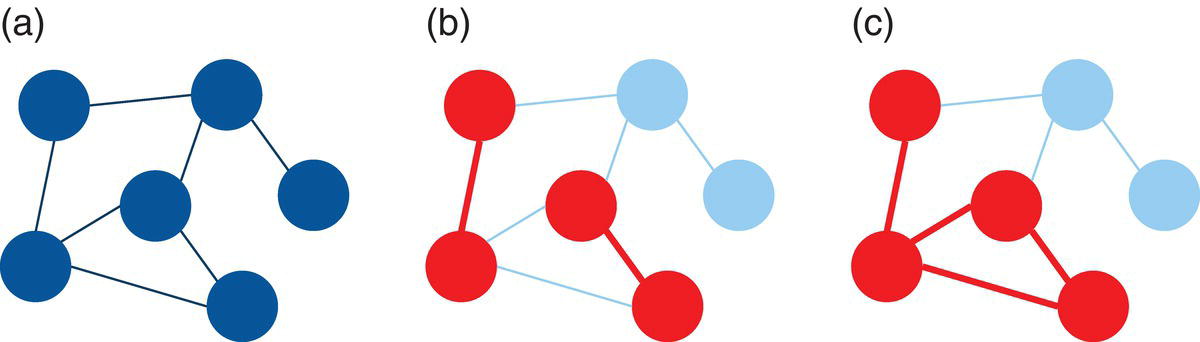

For example, the three graphs shown in Figure 2.1 describe (a) a graph, (b) a subgraph, and (c) an induced subgraph.

Notice that the subgraphs in (b) and (c) have the same subset of nodes. However, subgraph (b) is missing some of the original links between its nodes, whereas subgraph (c) keeps every link that the original graph has between the nodes of the subset. For that reason, subgraph (c) is an induced subgraph.

The idea of working with subgraphs is simple and straightforward. If all the nodes and links we are interested in analyzing are in the graph, we have the whole network to perform the analysis. However, from the entire data collected to perform the network analysis, we may often be interested in just some subset of the nodes, or a subset of the links, or in most cases, in both sets of nodes and links. In those cases, we don't need to work on the entire network. We can focus only on the subnetwork we are interested in, considering just the subset of nodes and links we want to analyze. This makes the entire analysis easier, faster, and more interpretable, as the outcomes are smaller and more concise.

We can think of a graph as a pair of sets: a set of nodes and a set of links. Based on set theory, it is always possible to consider only a subset of an original set. Since a graph is made of a set of nodes and a set of links, we can do the same and consider only a subset of its members. A subset of the original nodes, together with a subset of the original links that join those nodes, is still a graph, and we call it a subgraph. Based on that, if G = (N, L) is the original graph, then G′ = (N′, L′) is a subgraph of G when the nodes and links of G′ contain only elements of the original sets of nodes and links of G. This property is written as G′ ⊂ G, which we read as G′ is a subset of G. Since every element of the subset of nodes and links is also an element of the original set of nodes and links, we can also write N′ ⊂ N and L′ ⊂ L.
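The set view above can be sketched in a few lines of plain Python. This is only an illustration: the graph is hypothetical (it is not the graph in Figure 2.1), and the helper names `is_subgraph` and `induced_subgraph` are assumptions made for this sketch, not functions of any library.

```python
# Hypothetical undirected graph G = (N, L).
# Links are stored as frozensets so that A-B and B-A are the same link.
N = {"A", "B", "C", "D", "E"}
L = {frozenset(p) for p in [("A", "B"), ("B", "C"), ("C", "D"), ("D", "A"), ("D", "E")]}


def is_subgraph(n_sub, l_sub, n, l):
    """True if (n_sub, l_sub) is a subgraph of (n, l)."""
    # Every selected link must join two nodes of the selected node subset.
    links_inside = all(link <= n_sub for link in l_sub)
    return n_sub <= n and l_sub <= l and links_inside


def induced_subgraph(n_sub, l):
    """Keep every original link whose two end nodes are both in n_sub."""
    return {link for link in l if link <= n_sub}


n_sub = {"A", "B", "C", "D"}
l_partial = {frozenset(("A", "B")), frozenset(("C", "D"))}  # some links dropped
l_induced = induced_subgraph(n_sub, L)

print(is_subgraph(n_sub, l_partial, N, L))  # True: a valid subgraph
print(l_partial == l_induced)               # False: it is not the induced subgraph
print(len(l_induced))                       # 4 links: A-B, B-C, C-D, D-A
```

Both link sets pass the subset test, but only `l_induced` keeps every original link between the selected nodes.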

In Figure 2.1, the graph represented by (b) is also sometimes called a disconnected subgraph of the original graph, and the graph represented by (c) is called a connected subgraph of the original graph. The subgraph in (c) is well connected: all nodes are linked to each other, even if not through a direct connection. This subgraph captures all the relationships between the subset of nodes in the subgraph. It misses just the links to the nodes that are not included in the subgraph. Conversely, the subgraph in (b) has two isolated pairs of nodes. The nodes within each pair are linked to each other, but the pairs themselves are not. That means a node from one pair cannot reach a node in the other pair. The absence of these connections, which exist in the original graph, compromises the information power of the subgraph and makes it a disconnected subgraph.

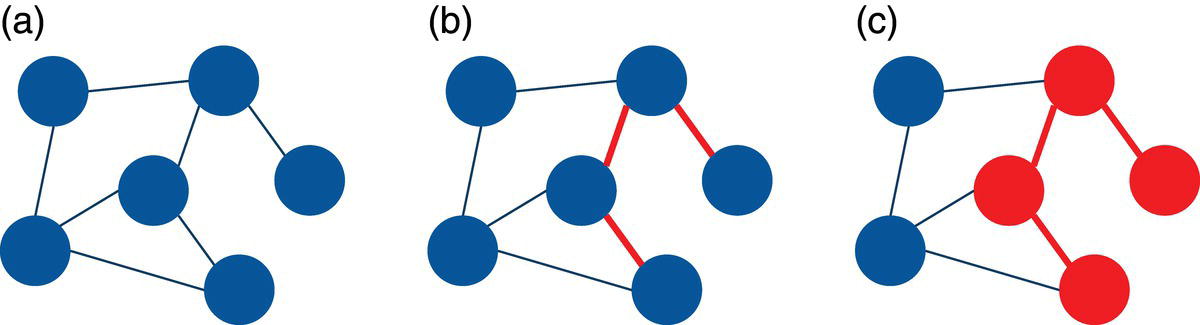

It is important to note that we can create a subgraph from a subset of nodes of the original graph, but also from a subset of its links. When selecting a subset of links from the original graph, we also select, as a consequence, the nodes connected by those links. Therefore, we also select a subset of nodes from the original set. For example, by selecting three particular links from the previous graph, we also select the four nodes connected by those links. This scenario is shown in Figure 2.2.

Figure 2.1 (a) Graph (b) Subgraph (c) Induced subgraph.

Figure 2.2 (a) Graph (b) Links selection (c) Subgraph by links selection.

Notice that the three links in (b), selected as part of the subgraph from the original graph in (a), also bring the four nodes connected by them, creating the subgraph in (c).

When a subgraph is defined based on a subset of nodes, which is quite common, it is called a node‐induced subgraph of the original graph. When a subgraph is defined by selecting a subset of links, it is called a link‐induced subgraph of the original graph.
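A link-induced subgraph can be sketched as follows, assuming a hypothetical link list and a helper named `link_induced_subgraph` that exists only for this illustration. The nodes are never selected directly; they are collected from the end points of the chosen links.

```python
# Hypothetical link list; each link is an (origin, destination) pair.
links = [("A", "B"), ("B", "C"), ("C", "D"), ("D", "E"), ("E", "A"), ("B", "E")]


def link_induced_subgraph(selected_links):
    """Return (nodes, links): the nodes are exactly the end points of the links."""
    nodes = set()
    for origin, destination in selected_links:
        nodes.update((origin, destination))
    return nodes, list(selected_links)


# Selecting the first three links also selects the four nodes they touch.
sub_nodes, sub_links = link_induced_subgraph(links[:3])
print(sorted(sub_nodes))  # ['A', 'B', 'C', 'D']
print(sub_links)          # [('A', 'B'), ('B', 'C'), ('C', 'D')]
```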

In addition to reducing the size of the original network, one of the most common reasons to use subgraphs when analyzing networks is to understand the impact of removing nodes and links from the original graph, that is, how the subnetwork would look if some actors or relationships were removed from the original network. This approach is quite important to better understand how significant a particular actor is, or how important specific relationships are. In some cases, removing an actor or a relationship splits the original network into multiple subnetworks that are disconnected from each other. That means the actor or relationship is crucial in the original network. In other cases, the network keeps a similar interconnectivity, still allowing the other actors to reach each other through other actors or other relationships. That means the actor or relationship is not too important, and removing it doesn't change the overall network connectivity much.

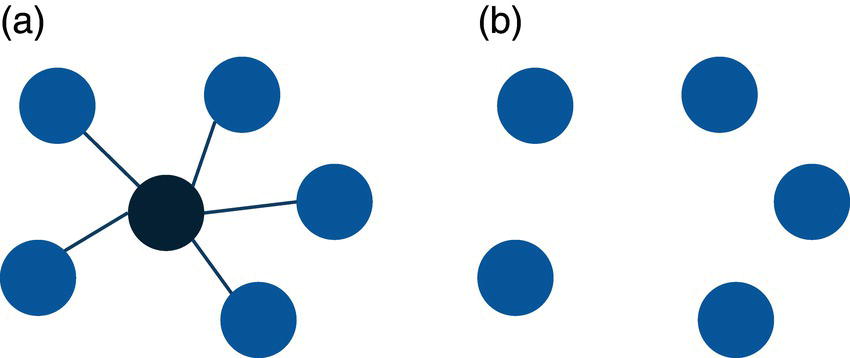

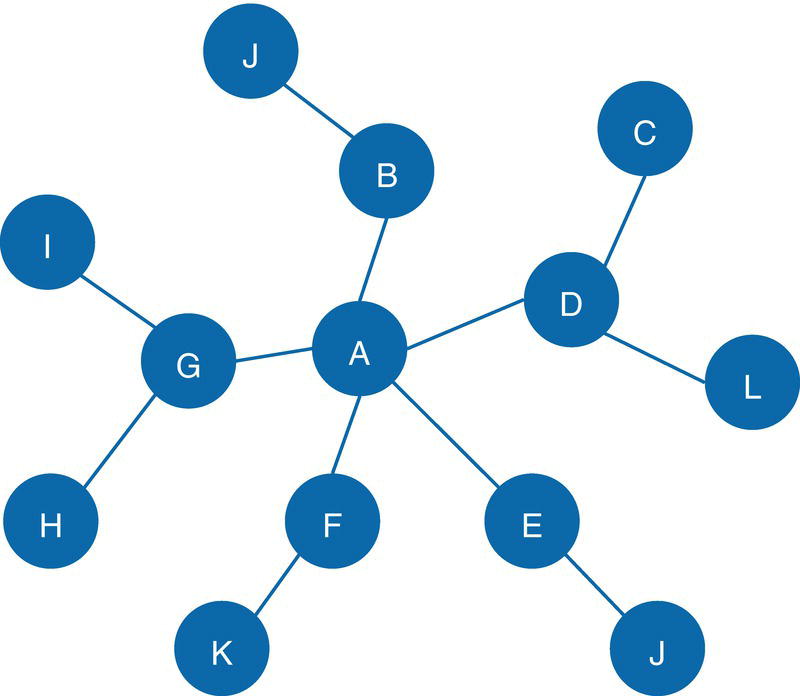

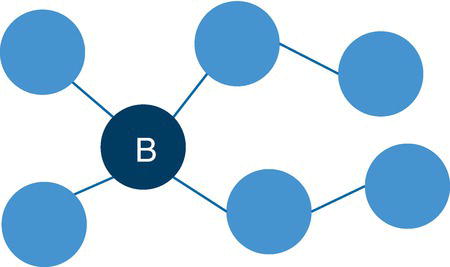

For example, in a star graph, the central node plays a very important role holding the network together. If the central node is removed from the original network, all satellite nodes will be isolated from each other. This case is shown in Figure 2.3.

Notice that the central node in the star graph connects all other nodes in the graph. If this node is removed from the original network, all other nodes will be isolated.
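The effect of removing the hub of a star can be checked with a small sketch. The star below is hypothetical (a hub `C` with five satellites, not necessarily the graph in Figure 2.3), and `count_components` and `remove_node` are helper names assumed for this illustration.

```python
from collections import deque


def count_components(nodes, links):
    """Count connected components of an undirected graph with breadth-first search."""
    neighbors = {n: set() for n in nodes}
    for a, b in links:
        neighbors[a].add(b)
        neighbors[b].add(a)
    seen, components = set(), 0
    for start in nodes:
        if start in seen:
            continue
        components += 1
        seen.add(start)
        queue = deque([start])
        while queue:
            current = queue.popleft()
            for nxt in neighbors[current] - seen:
                seen.add(nxt)
                queue.append(nxt)
    return components


def remove_node(nodes, links, node):
    """Return the subgraph left after deleting a node and its incident links."""
    kept_nodes = [n for n in nodes if n != node]
    kept_links = [(a, b) for a, b in links if node not in (a, b)]
    return kept_nodes, kept_links


# Hypothetical star: hub "C" linked to five satellites.
nodes = ["C", "S1", "S2", "S3", "S4", "S5"]
links = [("C", s) for s in nodes[1:]]

print(count_components(nodes, links))                      # 1
print(count_components(*remove_node(nodes, links, "C")))   # 5
print(count_components(*remove_node(nodes, links, "S1")))  # 1
```

Removing the hub leaves five isolated nodes, while removing a satellite leaves the rest of the star in a single piece.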

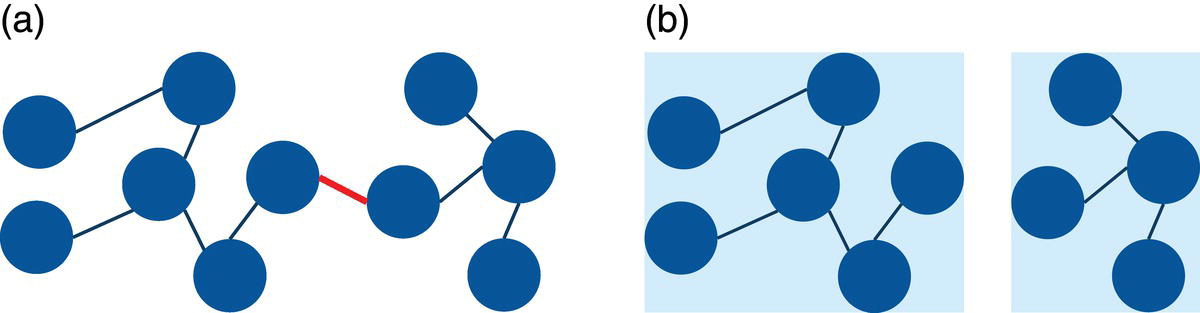

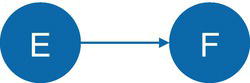

A specific connection can also be very important in a network. The following example shows that if the central connection highlighted in graph (a) is removed from the original graph, the graph splits into the two disconnected subgraphs in (b). In that case, one single connection can completely change the shape and the properties of the original network. This can be seen in Figure 2.4.
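The same kind of check works for links. The sketch below assumes a hypothetical graph made of two triangles joined by a single link C-D (not necessarily the graph in Figure 2.4); the function name `components` is an assumption for this illustration.

```python
def components(nodes, links):
    """Return the connected components (as sorted lists) of an undirected graph."""
    neighbors = {n: set() for n in nodes}
    for a, b in links:
        neighbors[a].add(b)
        neighbors[b].add(a)
    seen, result = set(), []
    for start in nodes:
        if start in seen:
            continue
        stack, group = [start], []
        seen.add(start)
        while stack:
            current = stack.pop()
            group.append(current)
            for nxt in neighbors[current]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        result.append(sorted(group))
    return result


# Hypothetical graph: two triangles joined by the single link C-D.
nodes = ["A", "B", "C", "D", "E", "F"]
links = [("A", "B"), ("B", "C"), ("C", "A"), ("C", "D"),
         ("D", "E"), ("E", "F"), ("F", "D")]

without_cd = [link for link in links if link != ("C", "D")]
print(components(nodes, links))       # [['A', 'B', 'C', 'D', 'E', 'F']]
print(components(nodes, without_cd))  # [['A', 'B', 'C'], ['D', 'E', 'F']]
```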

Figure 2.3 (a) Graph (b) Subgraph by removing nodes.

Removing nodes and links from the original network can therefore help us to understand the properties of the graph and the outcomes produced by these deletions. For example, how will the final topology and structure of the subnetworks look after removing particular nodes and links? Do the resulting subnetworks create communities, cores, cliques, or other types of groups from the original network? What would be the importance of the removed nodes? What would be the relevance of the removed links? All these questions guide us to better explore the properties of the original network and to identify hidden patterns within the nodes and, most importantly, within their relationships.

Figure 2.4 (a) Graph (b) Subgraphs by removing links.

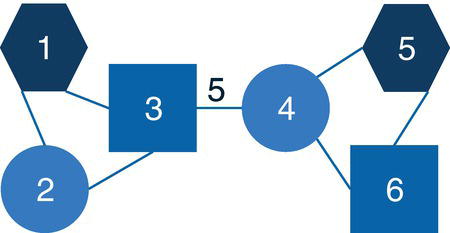

2.1.1 Isomorphism

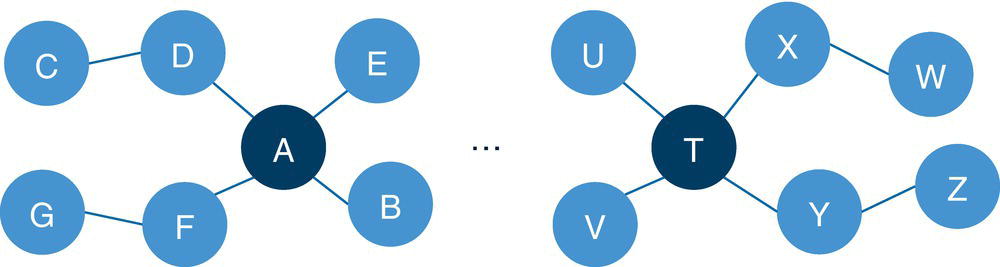

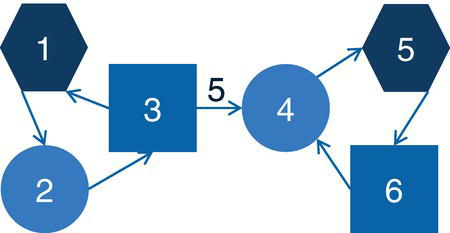

A frequent problem in network analysis and network optimization is known as the subgraph isomorphism problem. The subgraph isomorphism problem is a computational task that takes two graphs G and H as input. The problem is to determine whether G contains a subgraph that is isomorphic to H. Two graphs are isomorphic to each other if they have the same number of nodes and links, and the link connectivity is the same, that is, the nodes of one graph can be matched one to one with the nodes of the other so that two nodes are linked in one graph exactly when their matched nodes are linked in the other. The following graphs and subgraphs exemplify the subgraph isomorphism problem. The subgraph G′ of the graph G is isomorphic to the graph H. They have the same number of nodes and the same number of links. Also, the link connectivity is the same, which means the existing links connect the existing nodes within the graph and the subgraph in the same way. Figure 2.5 shows a case of isomorphism.

The subgraph isomorphism problem can be reduced to finding a fixed graph as a subgraph of a given graph. The answer to the subgraph isomorphism question attracts many researchers and practitioners because many graph properties are hereditary for subgraphs. This means that if a graph has a particular property, then all its subgraphs have the same property. However, the answer to this question can be computationally intensive. Finding maximal subgraphs of a certain type is often an NP‐complete problem. The subgraph isomorphism problem is a generalization of the maximum clique problem and of the problem of testing whether a graph contains a cycle of a given length, such as a Hamiltonian cycle. The clique problem and the Hamiltonian cycle problem are both NP‐complete.

NP is the set of decision problems that can be solved by a non‐deterministic Turing machine in polynomial time. Equivalently, NP is the set of problems for which the correctness of a proposed solution can be verified quickly, and for which a brute‐force search algorithm can find a solution by trying all candidate solutions. Heuristics are often used to reduce the computational time. NP‐complete problems are the most difficult problems in NP: an NP‐complete problem can be used to simulate every other problem for which a solution can be quickly verified. The subgraph isomorphism problem, particularly in large networks, can be very computationally intensive. For instance, in the previous example, the very small graph G, with just eight nodes, has five other subgraphs that are isomorphic to graph H, for a total of six. In a very large network, the search for all possible subgraphs can be computationally exhaustive.
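To make the brute-force idea concrete, here is a minimal sketch that tries every one-to-one assignment of the nodes of H to nodes of G. The inputs are hypothetical (a complete graph on four nodes and a triangle, not the graphs of Figure 2.5), the function name `isomorphic_subgraphs` is an assumption, and the sketch assumes undirected graphs in which H has no isolated nodes.

```python
from itertools import permutations


def isomorphic_subgraphs(g_nodes, g_links, h_nodes, h_links):
    """Brute force: try every injective mapping of H's nodes onto G's nodes.

    Returns the distinct sets of G links that form a copy of H.
    """
    g_set = {frozenset(link) for link in g_links}
    found = set()
    for image in permutations(g_nodes, len(h_nodes)):
        mapping = dict(zip(h_nodes, image))
        mapped = {frozenset((mapping[a], mapping[b])) for a, b in h_links}
        if mapped <= g_set:  # every link of H lands on a link of G
            found.add(frozenset(mapped))
    return found


# Hypothetical inputs: G is a complete graph on four nodes, H is a triangle.
g_nodes = ["1", "2", "3", "4"]
g_links = [("1", "2"), ("1", "3"), ("1", "4"), ("2", "3"), ("2", "4"), ("3", "4")]
h_nodes = ["x", "y", "z"]
h_links = [("x", "y"), ("y", "z"), ("z", "x")]

copies = isomorphic_subgraphs(g_nodes, g_links, h_nodes, h_links)
print(len(copies))  # 4 triangles in the complete graph on four nodes
```

The number of mappings tried grows as n!/(n - k)! for n nodes in G and k nodes in H, which is why this approach is only workable for very small graphs.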

Analyzing subgraphs instead of the entire original graph becomes more and more interesting, especially in applications like social network analysis. Finding patterns in groups of people instead of in the entire network can help data scientists, data analysts, and marketing analysts to better understand customers' relationships and the impact of these relationships on viral churn or on influential product and service adoption. Particularly for large networks, we can find multiple distinct patterns throughout the original network when looking deeper into groups of actors. Each group might have a specific pattern, and it can be vastly different from the other groups. Understanding these differences may be a key factor not just to interpret the network properties, but mostly to apply network analysis outcomes to drive business decisions and solve complex problems. The subgraph approach allows us to focus on specific groups of actors within the network, reducing the time to process the data and making the outcomes easier to interpret. The sequence of steps to divide the network into subnetworks, analyze less data, and more quickly interpret the outcomes can be automatically replicated to all groups within the network.

Figure 2.5 Subgraph G ′ isomorphic to graph H .

Many network problems rely on the concepts behind subgraphs. In this chapter we will focus on some of the most important subgraphs in network analysis and network optimization. This chapter therefore covers the concepts of connected components, biconnected components and the role of articulation points, communities, cores, reach networks (or ego networks), network projection, node similarity, and pattern matching. Some other concepts related to subgraphs, such as clique, cycle, minimum cut, and minimum spanning tree, will be covered in the network optimization chapter.

2.2 Connected Components

A connected component is a set of nodes that are all reachable from each other. For a directed graph, there are two types of components. The first type is a strongly connected component, in which there is a directed path from each node to every other node of the component. The second type is a weakly connected component, which ignores the direction of the links and requires only that a path exist between any two nodes of the component. In other words, if two nodes are in the same connected component, then there is a path connecting them. This path may have multiple steps going through other nodes within the graph. All nodes in the connected component are somehow linked, no matter the number of nodes in between any pair.

Connected components can be calculated for both directed and undirected graphs. A graph can have multiple connected components. A connected component is therefore an induced subgraph in which any two nodes are connected to each other by a path, and in which no node is connected to any node outside the component.

Connected components are commonly referred to as just the components of a graph. A common question in graph theory is whether or not a single node can be considered a connected component. By definition, the answer is yes. A graph can be formed by a single node with no link incident to it. A graph is connected if every pair of nodes in the graph can be connected by a path. Theoretically, a single node is connected to itself by the trivial path. Based on that, a single node in a graph is also considered a component, or a connected component, of that graph.

In proc network, the CONNECTEDCOMPONENTS statement invokes the algorithm that identifies all connected components within a given graph. The connected components can be identified for both directed and undirected graphs. Proc network offers three different algorithms to identify the connected components within a graph. The option ALGORITHM = accepts four values, either to specify one of the three algorithms available or to allow the procedure to automatically choose the best one.

- ALGORITHM = AUTOMATIC: uses the union‐find or the afforest algorithm if the network is defined as an undirected graph, and the depth‐first search algorithm if the network is defined as a directed graph.

- ALGORITHM = AFFOREST: forces proc network to use the afforest algorithm to find all connected components within an undirected graph.

- ALGORITHM = DFS: forces proc network to use the depth‐first search algorithm to find all connected components within a directed graph or an undirected graph.

- ALGORITHM = UNIONFIND: forces proc network to use the union‐find algorithm to find all connected components within an undirected graph.

The union‐find algorithm can also be executed in a distributed manner across multiple server nodes by using the proc network option DISTRIBUTED = TRUE. This option specifies whether or not proc network uses a distributed graph. By default, proc network doesn't use a distributed graph. The afforest algorithm usually scales well for exceptionally large graphs.
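To give a feel for how a union-find approach labels components in an undirected graph, here is a minimal textbook-style sketch in Python. It is an assumption-laden illustration, not the implementation used by proc network: the function name, the path-halving shortcut, and the numbering of components by order of first appearance are all choices made for this example.

```python
def connected_components_union_find(nodes, links):
    """Label each node with a component id using union-find (disjoint sets)."""
    parent = {n: n for n in nodes}

    def find(n):
        # Follow parent pointers to the root, halving the path along the way.
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    for a, b in links:
        root_a, root_b = find(a), find(b)
        if root_a != root_b:
            parent[root_b] = root_a  # merge the two sets

    # Number the components 1, 2, ... in order of first appearance.
    labels, component = {}, {}
    for n in nodes:
        root = find(n)
        labels.setdefault(root, len(labels) + 1)
        component[n] = labels[root]
    return component


# Hypothetical input: two separate groups and one isolated node.
nodes = ["A", "B", "C", "D", "E", "F"]
links = [("A", "B"), ("B", "C"), ("D", "E")]
print(connected_components_union_find(nodes, links))
# {'A': 1, 'B': 1, 'C': 1, 'D': 2, 'E': 2, 'F': 3}
```

Each link is processed once and link direction is ignored, which is consistent with the text restricting union-find to undirected graphs.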

The final results for the connected component algorithm in proc network are reported in the output tables defined by the options OUT =, OUTNODES =, and OUTLINKS =.

The option OUT = within the CONNECTEDCOMPONENTS statement produces an output containing all the connected components and the number of nodes in each component.

- concomp: the connected component identifier.

- nodes: the number of nodes contained in the connected component.

The option OUTNODES = within the PROC NETWORK statement produces an output containing all the nodes and the connected component to which each one of them belongs.

- node: the node identifier.

- concomp: the connected component identifier for the node.

The option OUTLINKS = within the PROC NETWORK statement produces an output containing all the links and the connected component each link belongs to.

- from: the origin node identifier.

- to: the destination node identifier.

- concomp: the connected component identifier for the link.

Let's see some examples of connected components in both directed and undirected graphs.

2.2.1 Finding the Connected Components

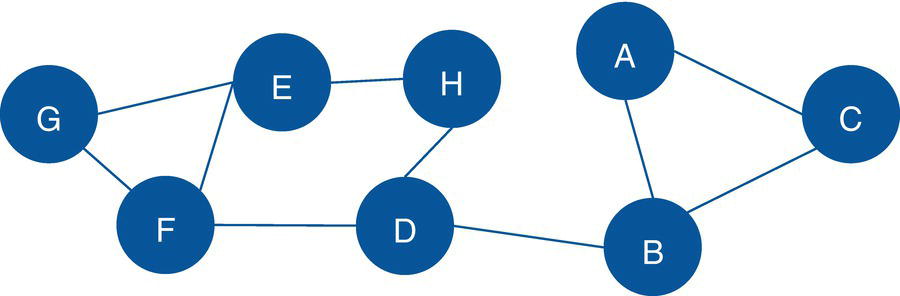

Let's consider a simple graph to demonstrate the connected components problem using proc network. Consider the graph presented in Figure 2.6. It shows a network with just 8 nodes and 10 links. Link weights are not relevant when searching for connected components, only the existence of a path between any two nodes. As in many graph algorithms, when the input graph grows too large, the process can become increasingly expensive, and the output tables can be extremely big.

The following code describes how to create the input dataset for the connected component problem. First, let's create only the links dataset, defining the connections between all nodes within the network. Later, we will see the impact of defining both links and nodes datasets to represent the input graph. The links dataset has only the node identifiers, with the from and to variables specifying the origin and the destination nodes. When the network is specified as undirected, the origin and destination are not distinguished in the search. The direction doesn't matter here. Another way to think of the undirected graph is that every link is duplicated to have both directions. For example, a unique link A→B turns into two links, A→B and B→A. This link definition doesn't provide a link weight, as it is not considered when searching for the connected components.

    data mycas.links;
       input from $ to $ @@;
       datalines;
    A B  B C  C A  B D  D H  H E  E F  E G  G F  F D
    ;
    run;

Once the input dataset is defined, we can invoke the connected component algorithm using proc network. The following code describes how this is done. Notice the links definition using the LINKSVAR statement. The variables from and to receive the origin and the destination nodes, respectively. As the names of the variables in the links dataset are exactly the names expected by the LINKSVAR statement, the LINKSVAR statement could be omitted. We are going to keep the definition in the code (and in all of the code throughout this book) because, in most cases, the names of the link variables are different from from and to, such as caller and callee, origin and destination, departure and arrival, or sender and receiver, among many others.

    proc network
       direction = undirected
       links = mycas.links
       outlinks = mycas.outlinks
       outnodes = mycas.outnodes
       ;
       linksvar
          from = from
          to = to
       ;
       connectedcomponents
          out = mycas.concompout
       ;
    run;

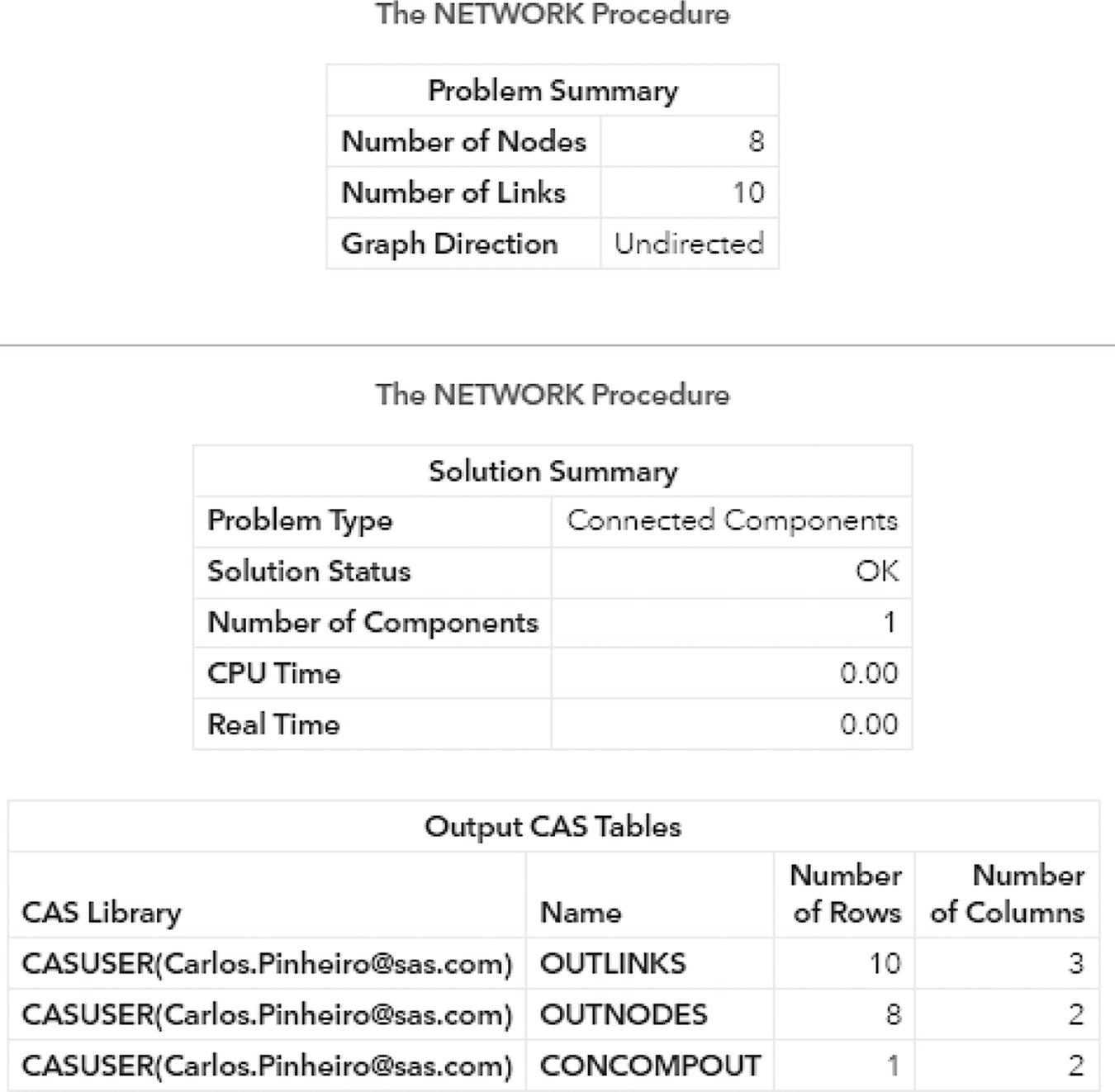

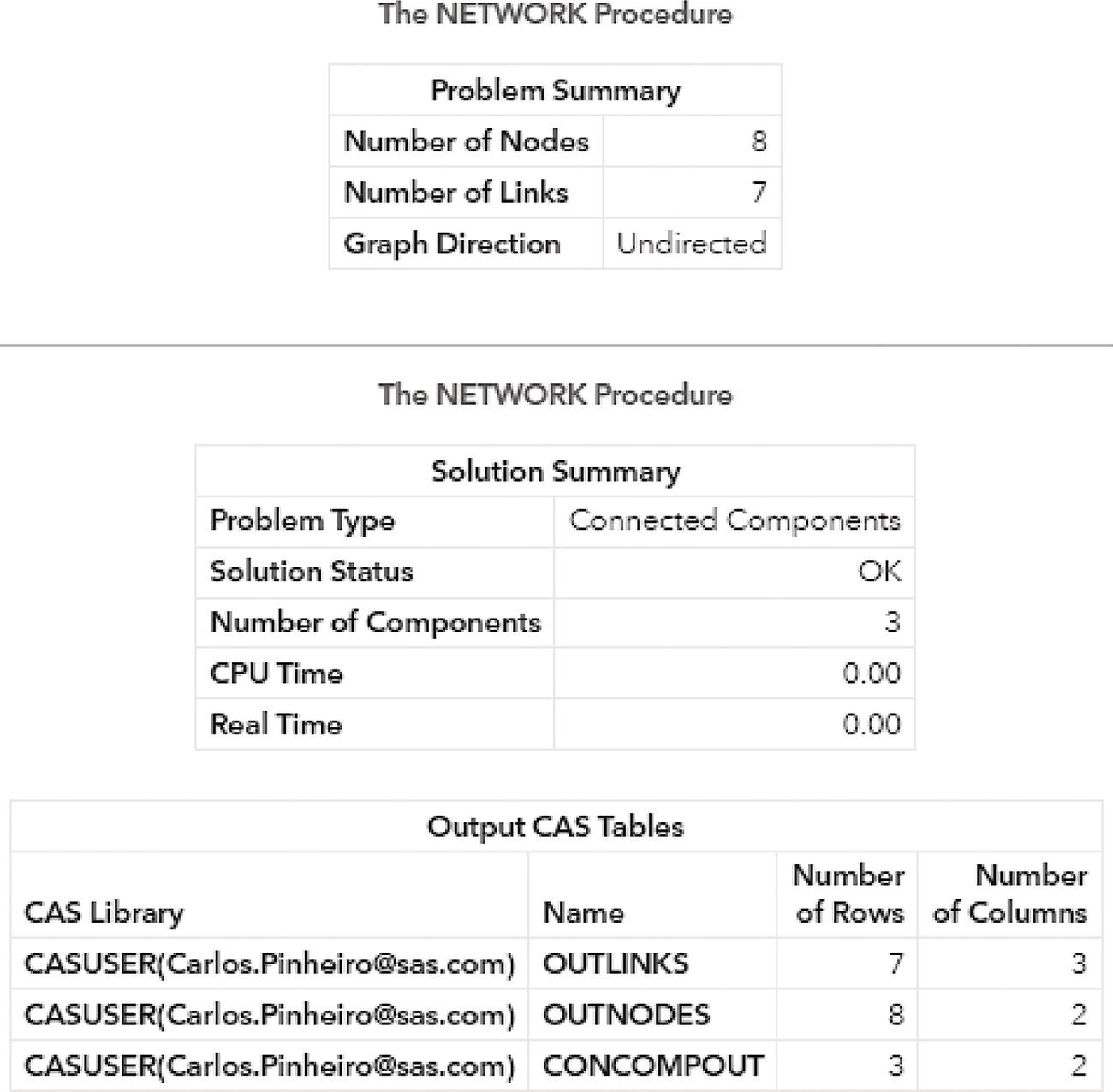

Figure 2.7 shows the outputs for the connected component algorithm. The first one reports a summary of the execution process. It provides a summary of the network, with 8 nodes and 10 links. Notice that we didn't provide a nodes dataset; proc network derives the set of nodes from the links dataset. It also reports the graph direction as undirected. The solution summary says that a solution was found, and only one connected component was identified. Finally, the output describes the three output tables created: the links output table, the nodes output table, and the connected components output table.

Figure 2.6 Input graph with undirected links.

Figure 2.7 Output results by proc network running the connected components algorithm upon an undirected input graph.

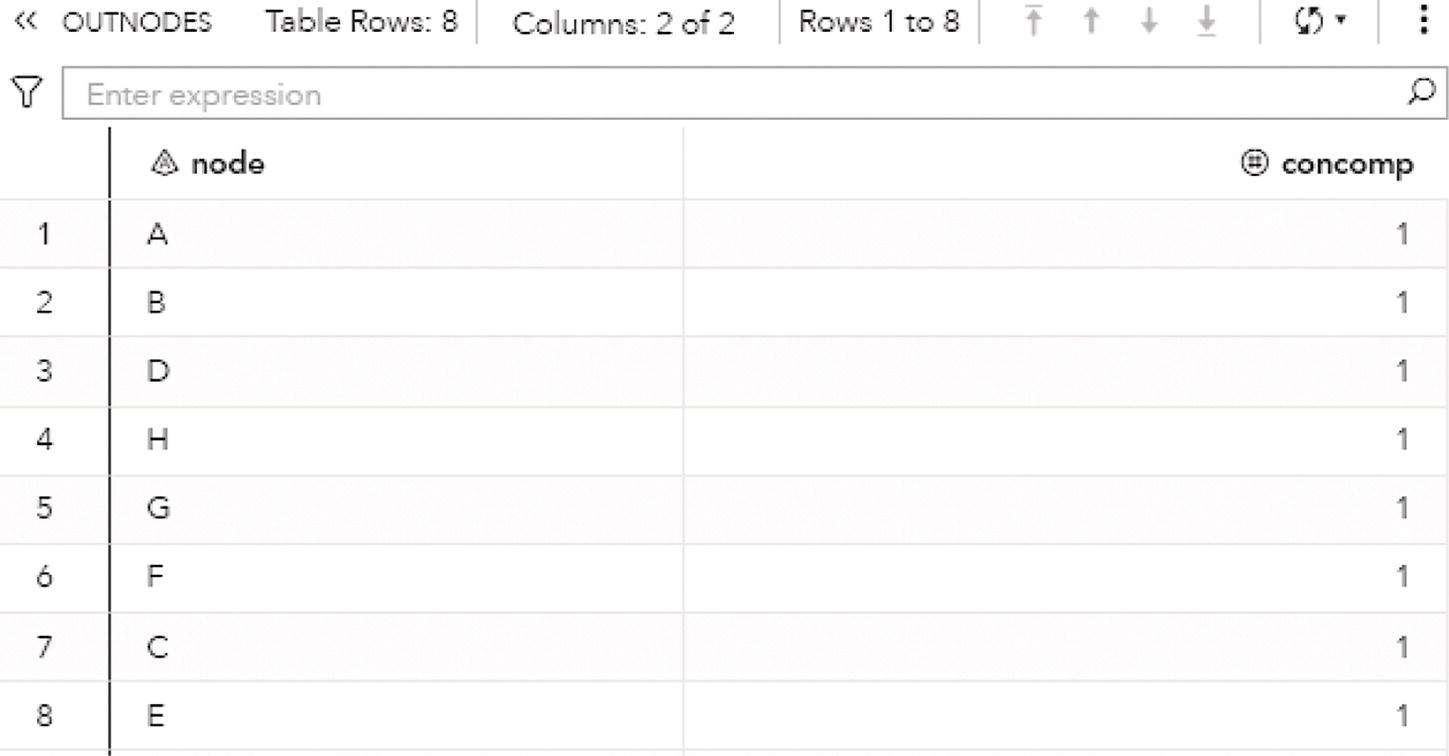

The following figures show the connected components results. Figure 2.8 presents the OUTNODES table, which describes the nodes and the connected components which each node belongs to.

Notice that there is one single connected component. The undirected graph in this example allows for every node to be reached by any other within the network, based on some particular path.

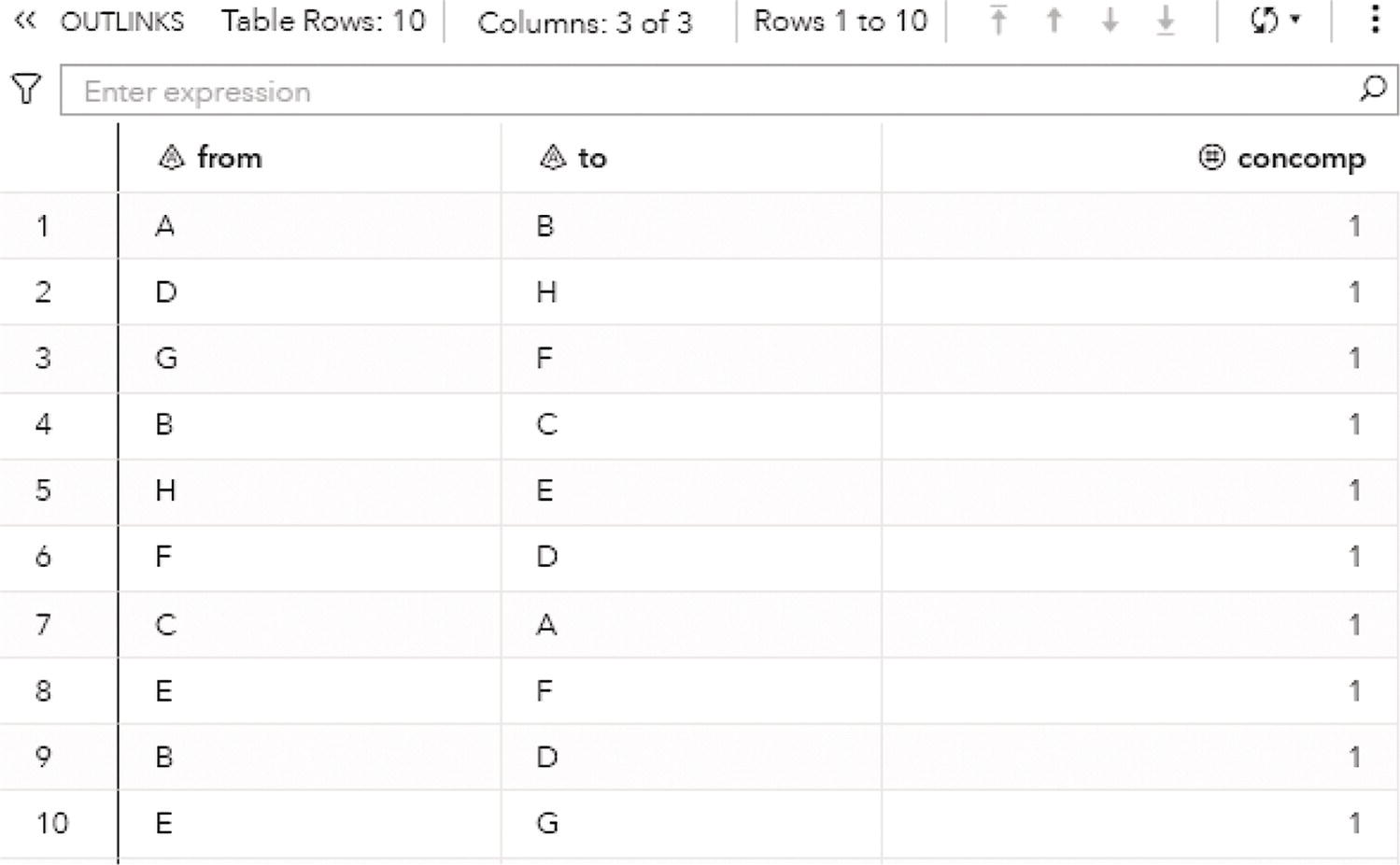

Figure 2.9 shows the OUTLINKS table, which presents the links and the connected components they belong to. The output table describes the original links variables, from and to, and the connected component assigned to the link.

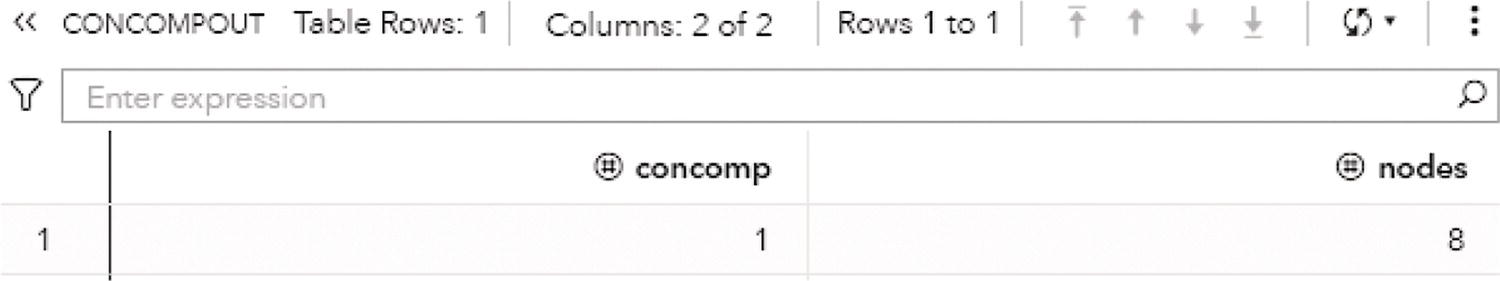

Finally, the output table CONCOMPOUT shows the summary of the connected component algorithm, presented in Figure 2.10. This table presents the connected components identifiers and the number of nodes in each component. As this undirected graph has one single connected component, this output table has one single row, showing the unique connected component 1 with all the eight nodes from the input graph.

As we can see, the original input graph, considering undirected links, presents one single connected component. What happens if we remove a few links from the original network? Let's recreate the links dataset without the three original links, H→E, D→H, and B→D. The following code shows how to recreate this new network based on that modified links dataset.

    data mycas.links;
       input from $ to $ @@;
       datalines;
    A B  B C  C A  E F  E G  G F  F D
    ;
    run;

Figure 2.8 Output nodes table for the connected components.

Figure 2.9 Output links table for the connected components.

In order to maintain the same set of nodes from the original network, we need to define a nodes dataset. Proc network infers the set of nodes from the provided set of links. As we remove the links incident to node H (H→E and D→H), node H is no longer in the links dataset and therefore would not be in the inferred set of nodes either. If we want to keep the same set of nodes, including node H from the original network, we need to provide the nodes dataset and force the presence of node H in the input graph. The following code creates the nodes dataset.

    data mycas.nodes;
       input node $ @@;
       datalines;
    A B C D E F G H
    ;
    run;

Figure 2.10 Output summary table for the connected components.

Once both the links and nodes datasets are created, we can rerun proc network and see how many connected components are identified with the new set of links and the declared set of nodes. The following code reruns proc network on the new links dataset and also considers the nodes dataset.

    proc network
       direction = undirected
       links = mycas.links
       nodes = mycas.nodes
       outlinks = mycas.outlinks
       outnodes = mycas.outnodes
       ;
       linksvar
          from = from
          to = to
       ;
       nodesvar
          node = node
       ;
       connectedcomponents
          out = mycas.concompout
       ;
    run;

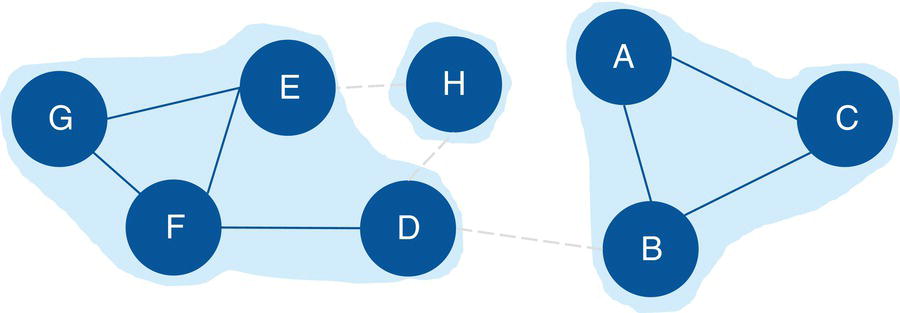

Without the three original links H→E, D→H, and B→D, the new network will present three connected components. One of the components will contain a single node.

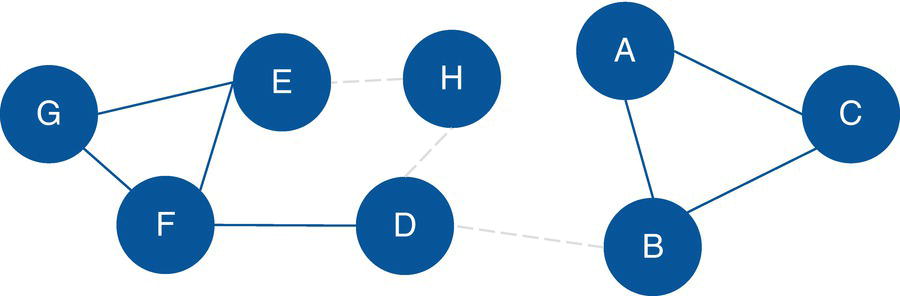

Figure 2.11 shows the new network without those three links and the declared set of nodes.

Proc network produces the output summary, stating that the input graph has eight nodes and now only seven links. A solution was found and three connected components were identified. The output tables OUTLINKS, OUTNODES, and CONCOMPOUT report all results. Figure 2.12 shows the summary results for the procedure.

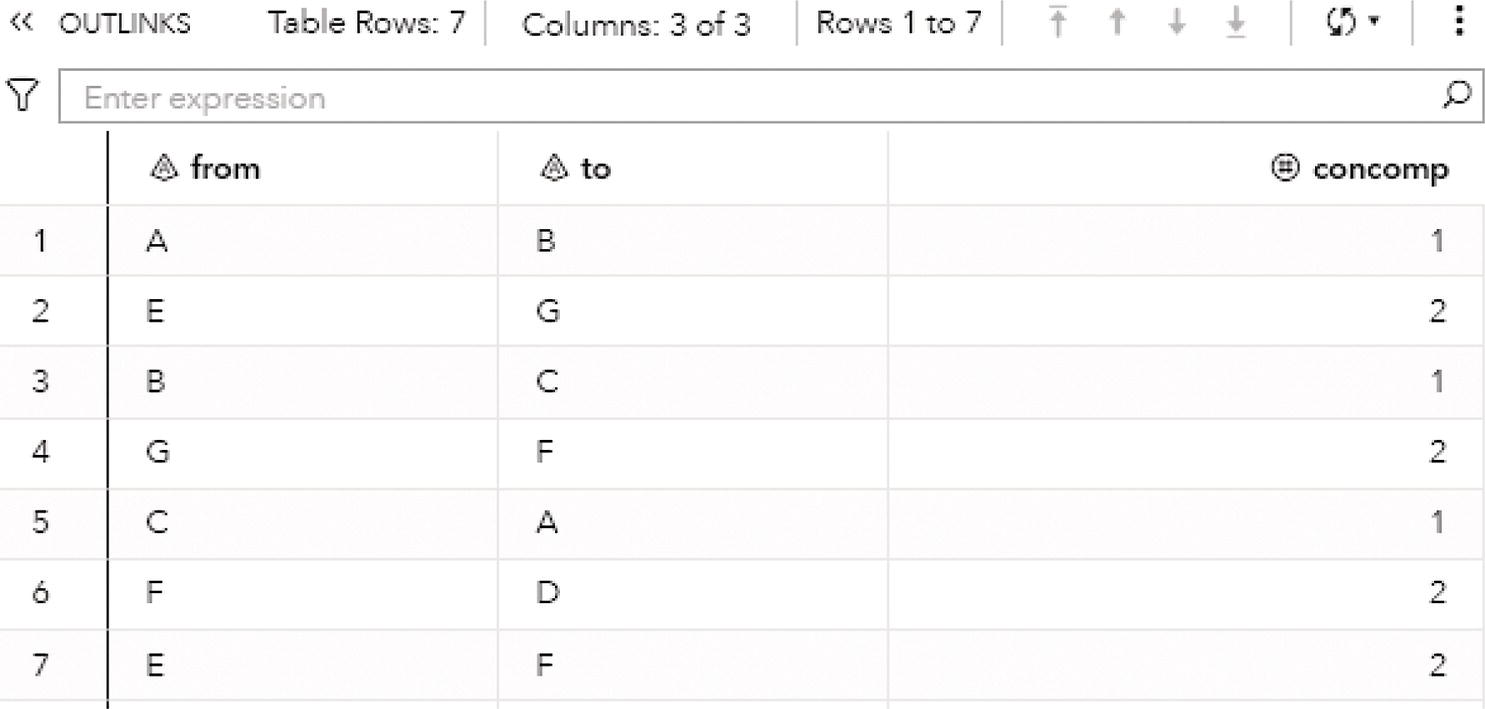

The output table OUTLINKS shows all seven links associated with the input graph, and the connected component each link belongs to. Figure 2.13 shows the output table for the links.

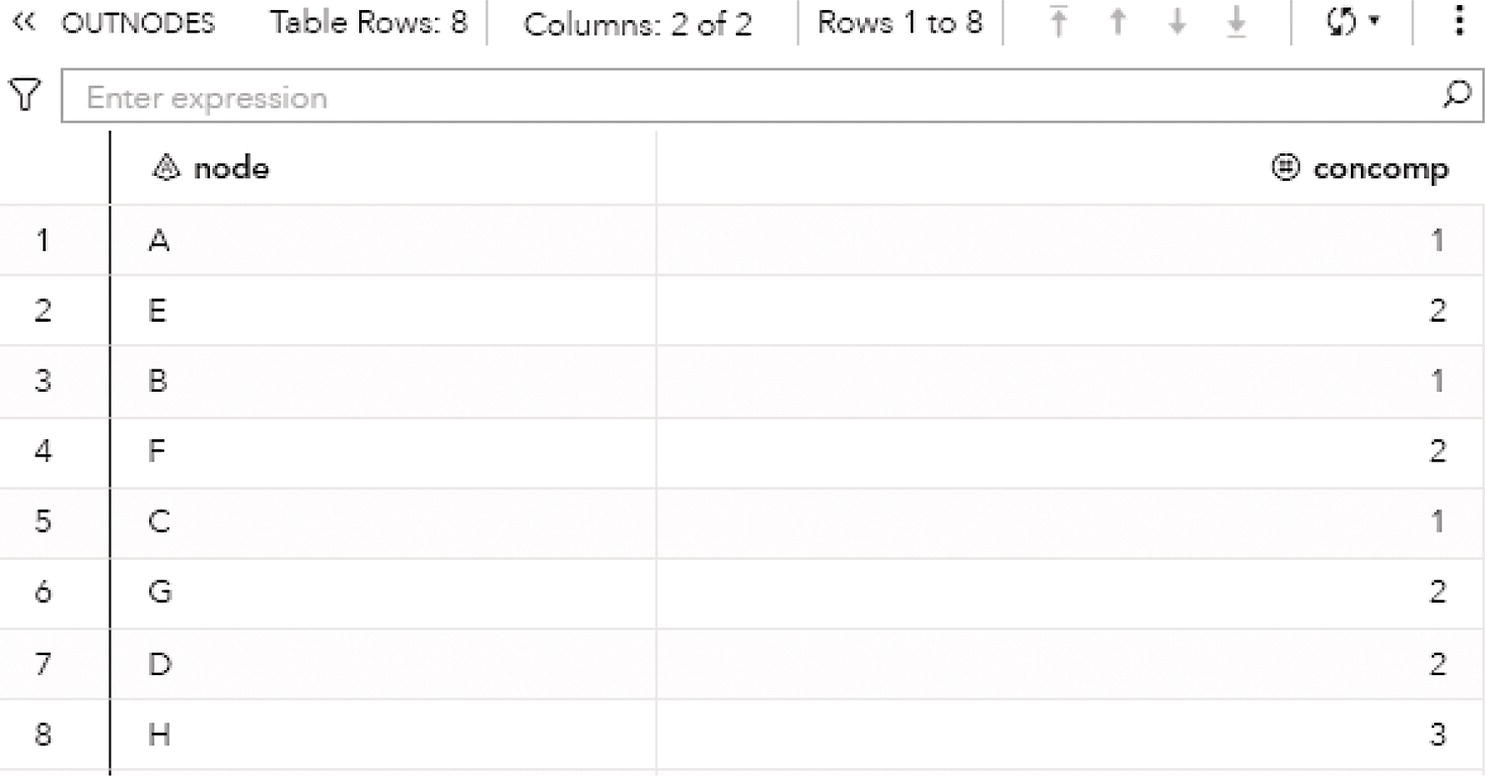

The output table OUTNODES shows all the eight nodes associated with the input graph, and the connected component each one belongs to. Notice that the connected component 1 has three nodes (A, B, and C), the connected component 2 has four nodes (E, F, G, and D), and the connected component 3 has one single node (H). Figure 2.14 shows the output table for the nodes.

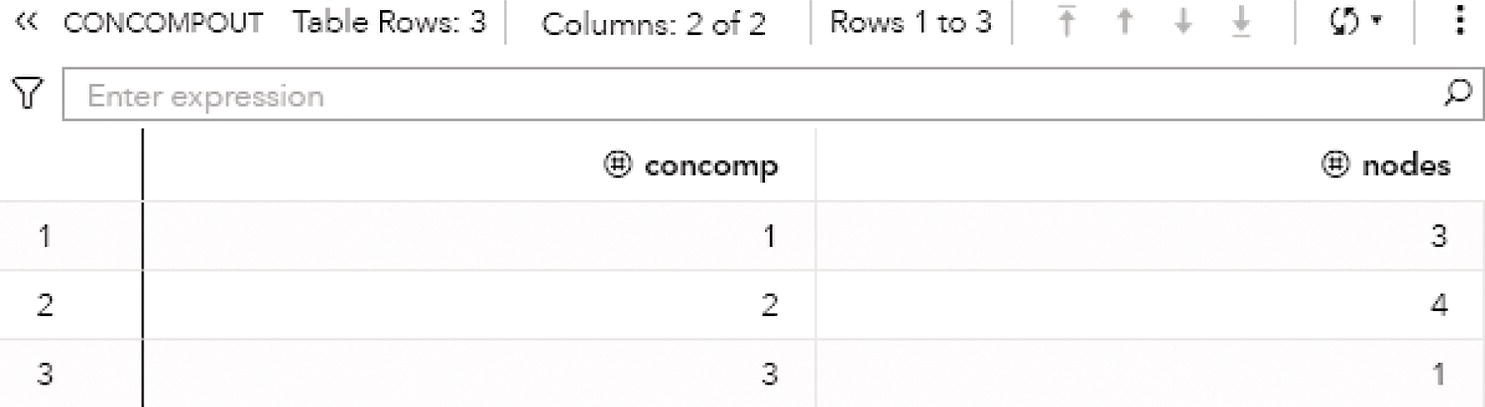

The output table CONCOMPOUT shows the connected components identified and the number of nodes of each component. The connected component 1 has three nodes, the connected component 2 has four nodes, and finally the connected component 3 has one single node. Figure 2.15 shows the output table for the connected components.
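The role of the nodes dataset in this example can be reproduced with a small Python sketch. The function `find_components` is a hypothetical helper written for this illustration, not part of proc network, and the component numbering is simply the order in which components are first encountered, which may differ from how proc network numbers them in general.

```python
def find_components(links, nodes=None):
    """Return {component id: [sorted nodes]} for an undirected graph.

    If no node list is given, the nodes are inferred from the links,
    so a node with no incident link is silently left out.
    """
    if nodes is None:
        nodes = []
        for a, b in links:
            for n in (a, b):
                if n not in nodes:
                    nodes.append(n)
    neighbors = {n: set() for n in nodes}
    for a, b in links:
        neighbors[a].add(b)
        neighbors[b].add(a)
    seen, result = set(), {}
    for start in nodes:
        if start in seen:
            continue
        stack, group = [start], []
        seen.add(start)
        while stack:
            current = stack.pop()
            group.append(current)
            for nxt in neighbors[current]:
                if nxt not in seen:
                    seen.add(nxt)
                    stack.append(nxt)
        result[len(result) + 1] = sorted(group)
    return result


# The seven links kept in the text, and the eight declared nodes.
links = [("A", "B"), ("B", "C"), ("C", "A"), ("E", "F"),
         ("E", "G"), ("G", "F"), ("F", "D")]
nodes = ["A", "B", "C", "D", "E", "F", "G", "H"]

print(find_components(links))
# {1: ['A', 'B', 'C'], 2: ['D', 'E', 'F', 'G']}
print(find_components(links, nodes))
# {1: ['A', 'B', 'C'], 2: ['D', 'E', 'F', 'G'], 3: ['H']}
```

Without the declared node list, node H never appears and only two components are found. With it, H forms a third component of size one, matching the sizes three, four, and one reported in the text.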

Figure 2.11 Input graph with undirected links.

Figure 2.16 shows the connected components highlighted within the original input graph.

Figure 2.12 Output results by proc network running connected components on undirected graph.

Figure 2.13 Output links table for the connected components.

Now, let's see how the connected components algorithm works upon a directed graph. In this example, we are going to use the exact same input graph defined at the beginning of this section, but the direction in proc network will be set as directed. The direction of each link will follow the links dataset definition.

The following code defines the set of links, and the from variable here actually specifies the origin or source node, and the to variable actually specifies the destination or sink node.

Figure 2.14 Output nodes table for the connected components.

Figure 2.15 Output summary table for the connected components.

    data mycas.links;
       input from $ to $ @@;
       datalines;
    A B  B C  C A  B D  D H  H E  E F  E G  G F  F D
    ;
    run;

The following code invokes proc network to identify the connected components within a directed graph. Notice the DIRECTION = DIRECTED option in the PROC NETWORK statement.

    proc network
       direction = directed
       links = mycas.links
       outlinks = mycas.outlinks
       outnodes = mycas.outnodes
       ;
       linksvar
          from = from
          to = to
       ;
       connectedcomponents
          out = mycas.concompout
       ;
    run;

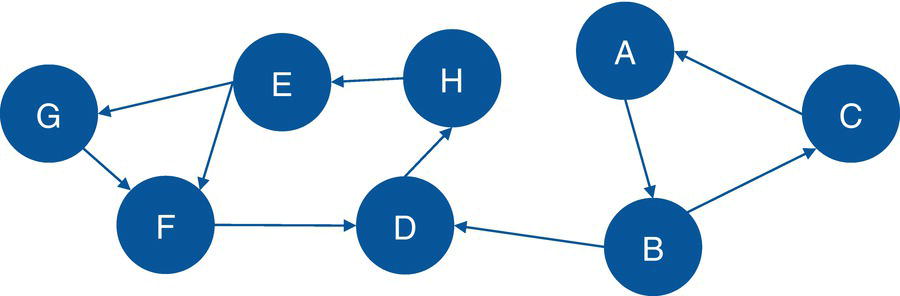

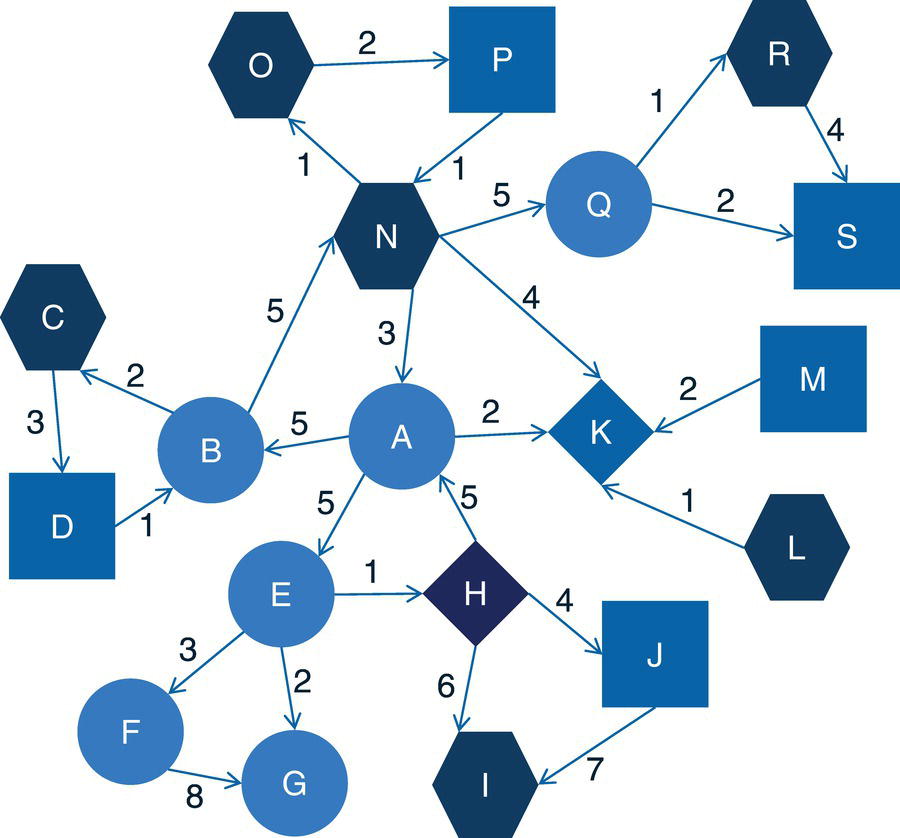

Figure 2.17 shows the original input graph with the directed links. Note that the graph is exactly the same as the one presented previously, except for the direction of the links between the nodes.

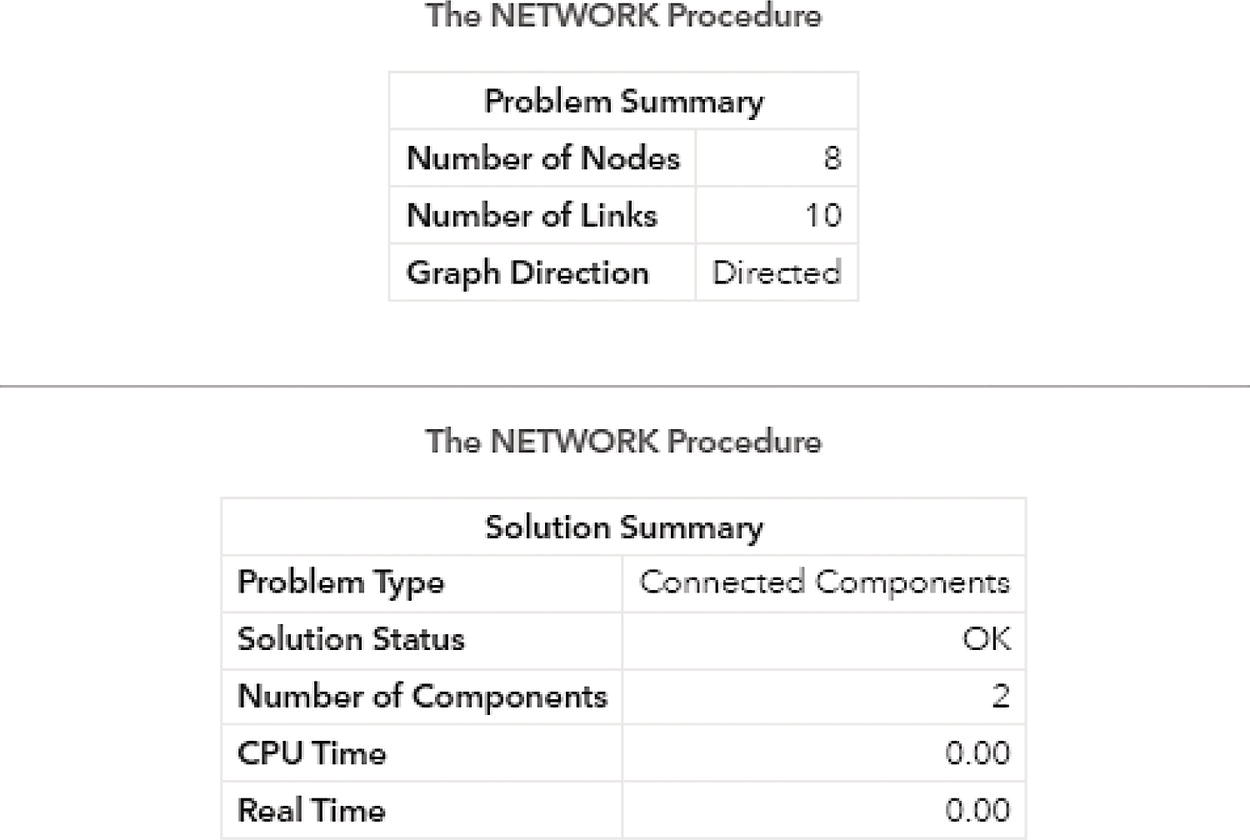

The output summary produced by proc network shows the results of the connected components upon a directed graph. It has the same number of nodes and links, but now instead of one single component, two components are identified. Figure 2.18 shows the summary results for the connected components algorithm.

Figure 2.16 Input graph with undirected links and the identified connected components.

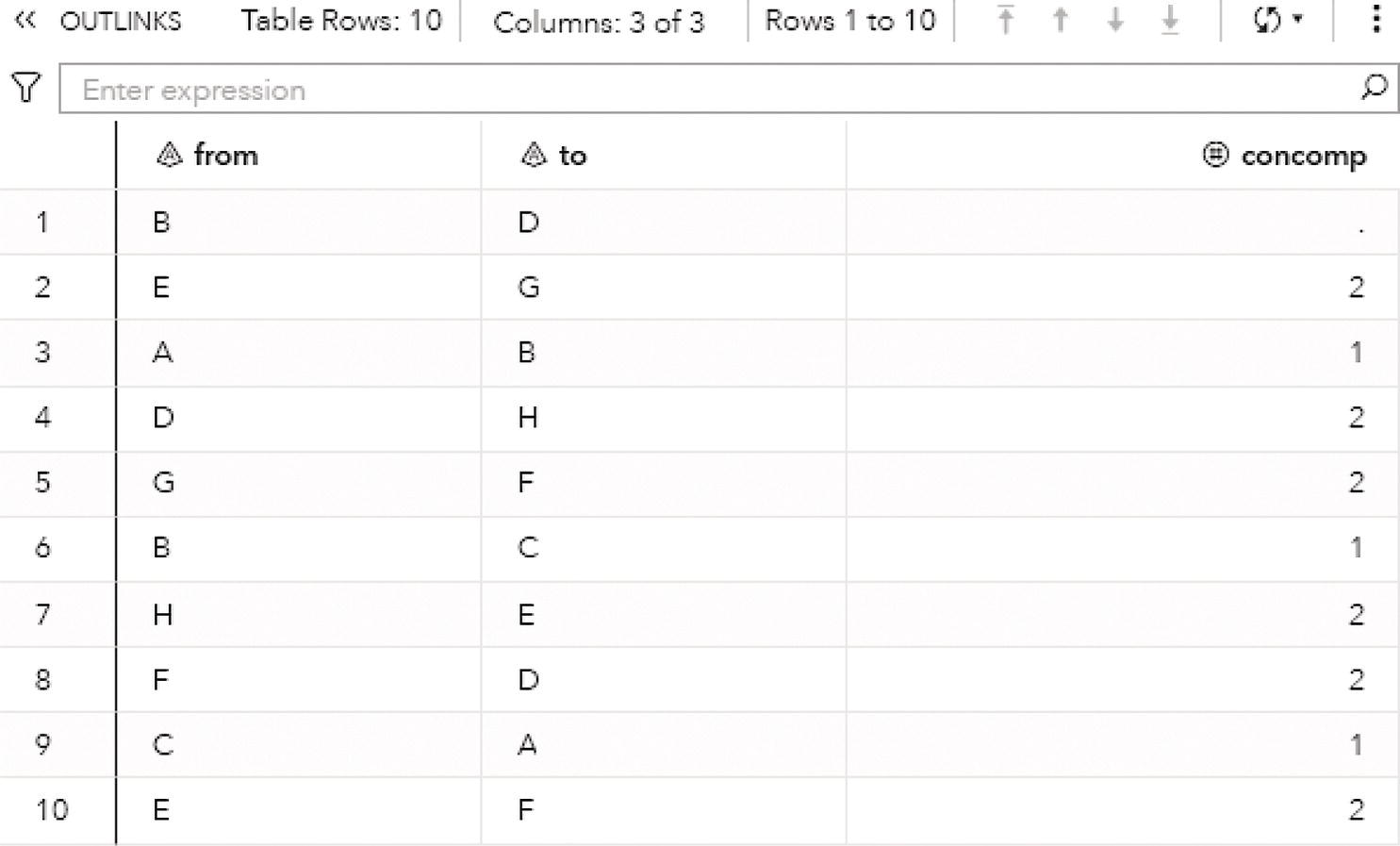

Figure 2.19 presents the output links for the connected components. The output table OUTLINKS shows all ten links associated with the input graph and the connected component each link belongs to. Notice that the link B→D does not belong to any connected component. This particular link is the one that breaks down the original directed input graph into two distinct components.

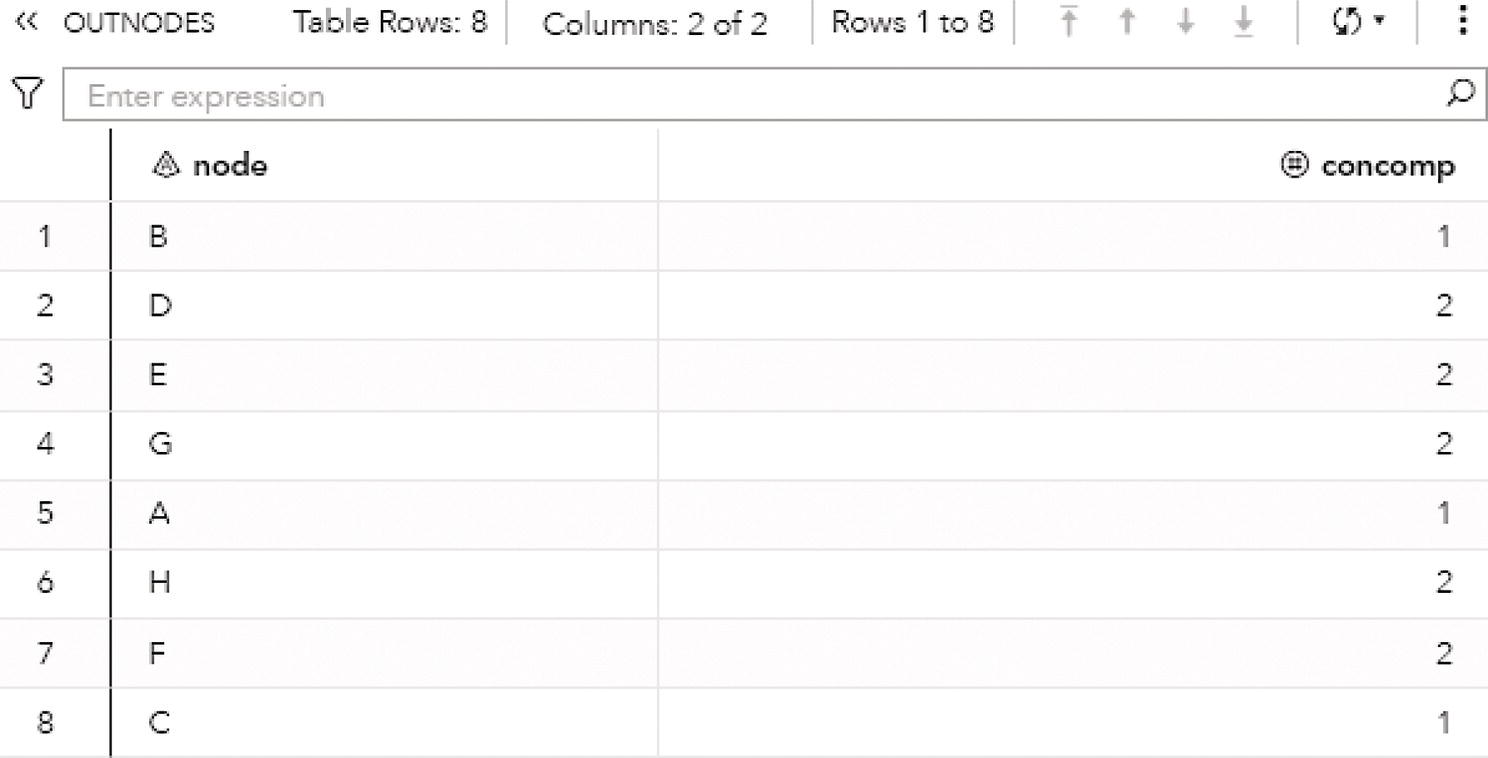

Figure 2.20 shows the output nodes for the connected components. The output table OUTNODES shows all the eight nodes associated with the input graph, and the connected component each one of them belongs to. Notice that the connected component 1 has three nodes (B, A, and C), and the connected component 2 has five nodes (D, E, G, H, and F).

Figure 2.17 Input graph with directed links.

Figure 2.21 shows the output connected components. The output table CONCOMPOUT shows the connected components identified and the number of nodes of each component. The connected component 1 has three nodes and the connected component 2 has five nodes.
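The directed result can be cross-checked outside of proc network with a short Python sketch. The text says only that a depth-first search is used for directed graphs; the sketch below assumes Kosaraju's two-pass algorithm as one possible depth-first approach to strongly connected components, and the function name and component numbering are choices made for this illustration.

```python
def strongly_connected_components(nodes, links):
    """Kosaraju's algorithm: two depth-first passes, the second on reversed links."""
    forward = {n: [] for n in nodes}
    backward = {n: [] for n in nodes}
    for origin, destination in links:
        forward[origin].append(destination)
        backward[destination].append(origin)

    visited, order = set(), []

    def visit(n):
        # First pass: record nodes in order of completion.
        visited.add(n)
        for nxt in forward[n]:
            if nxt not in visited:
                visit(nxt)
        order.append(n)

    for n in nodes:
        if n not in visited:
            visit(n)

    component = {}

    def assign(n, label):
        # Second pass: unlabeled nodes that can reach n share its label.
        component[n] = label
        for prev in backward[n]:
            if prev not in component:
                assign(prev, label)

    label = 0
    for n in reversed(order):
        if n not in component:
            label += 1
            assign(n, label)
    return component


# The ten directed links and eight nodes of the example in the text.
links = [("A", "B"), ("B", "C"), ("C", "A"), ("B", "D"), ("D", "H"),
         ("H", "E"), ("E", "F"), ("E", "G"), ("G", "F"), ("F", "D")]
nodes = ["A", "B", "C", "D", "E", "F", "G", "H"]

component = strongly_connected_components(nodes, links)
groups = {}
for n, label in component.items():
    groups.setdefault(label, []).append(n)
print({k: sorted(v) for k, v in groups.items()})
# {1: ['A', 'B', 'C'], 2: ['D', 'E', 'F', 'G', 'H']}

# A link whose end points fall in different components belongs to no component.
print([(a, b) for a, b in links if component[a] != component[b]])  # [('B', 'D')]
```

The recursion keeps the sketch short but limits it to small graphs; an iterative version would be needed for large inputs.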

Figure 2.22 shows the connected components highlighted within the original directed input graph.

When we analyze graphs, sometimes the focus is too much on the role of the nodes. In the next section, we clearly see this while we discuss the concept of biconnected components. However, the last example here also shows the importance of analyzing the roles of the links. The link B→D plays a fundamental role in splitting the original input graph into two disjoint components. In many practical cases and business scenarios, when analyzing graphs created by social or corporate interactions, we focus on the roles of the nodes. Nevertheless, it is important to keep in mind the significant role of the links. Sometimes, the links reveal more useful insights about the social or corporate networks than the nodes themselves. Sometimes it is not about the entities in the network, but about how these entities relate to each other.

Figure 2.18 Output results by proc network running connected components on a directed graph.

Figure 2.19 Output links table for the connected components.

Figure 2.20 Output nodes table for the connected components.

Figure 2.21 Output summary table for the connected components.

Figure 2.22 Input graph with directed links and the identified connected components.

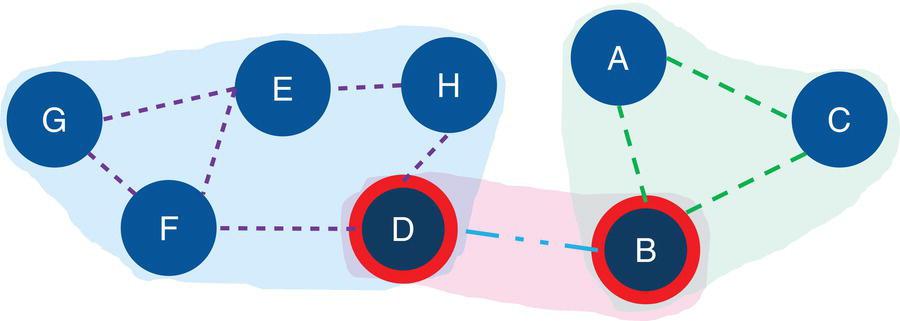

2.3 Biconnected Components

A biconnected component of a graph G = (N, L) is a subgraph that cannot be broken into disconnected pieces by deleting any single node and its incident links. If a component can be broken into smaller connected components, then it contains a node that, if removed from the graph, increases the number of connected components. This type of node is commonly called an articulation point.

Biconnected components can be seen as a variation of the connected components concept, meaning the graph may have some important nodes whose removal breaks a component into smaller components. Articulation points, also known as cut vertices, are sometimes loosely referred to as bridges, as these nodes create bridges between different groups or components within the network. In stricter graph theory usage, however, the term bridge denotes a link, rather than a node, whose removal disconnects the graph.

Articulation points often play an important role when analyzing graphs that represent any sort of communications network. For example, suppose a graph G = (N, L) and an articulation point i ∈ N. If the articulation point i is removed from the graph G, it breaks the graph into two components C₁ and C₂. All paths in G between nodes in C₁ and nodes in C₂ must pass through node i. The articulation point i is crucial to keep the communication flowing within the original component or graph.

Depending on the context, particularly in scenarios associated with communication networks, the articulation points can be viewed as points of vulnerability in a network. Roads and rails represented by graphs, supply chain processes, and social and political networks, among other types, are graphs where the articulation points represent vulnerabilities within the network, either breaking the components into more connected components or stopping the communication flow within the network. Imagine a bridge connecting two locations. These two locations can represent articulation points and can stop the network flow not just between these two locations, but among the locations that are adjacent to them. Terrorist networks are also a fitting example of the importance of the articulation points. Commonly, targeting and eliminating individuals that represent articulation points within the network can strategically break a cohesive component into smaller and disorganized groups.

In proc network, the BICONNECTEDCOMPONENTS statement invokes the algorithm that identifies all biconnected components within a given graph. Unlike the connected components, which can be identified in both directed and undirected graphs, the biconnected components can be identified only for undirected graphs. In order to compute and identify the biconnected components, proc network uses a variant of the depth‐first search algorithm. This algorithm can scale to large graphs.
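As an illustration of how a depth-first search can find articulation points and biconnected components, the following Python sketch uses the classic low-link technique. The text does not say which depth-first variant proc network uses, so this is an assumed textbook approach; the function name is hypothetical, and the sketch expects a simple undirected graph (no parallel links or self-loops). It is applied here to the eight-node, ten-link graph used throughout this chapter.

```python
def biconnected_components(nodes, links):
    """Return (articulation points, list of biconnected components as link lists)."""
    neighbors = {n: [] for n in nodes}
    for a, b in links:
        neighbors[a].append(b)
        neighbors[b].append(a)

    depth, low = {}, {}
    articulation, components, link_stack = set(), [], []

    def visit(node, parent, d):
        depth[node] = low[node] = d
        children = 0
        for nxt in neighbors[node]:
            if nxt == parent:
                continue
            if nxt not in depth:  # tree link to an unvisited node
                link_stack.append((node, nxt))
                children += 1
                visit(nxt, node, d + 1)
                low[node] = min(low[node], low[nxt])
                if low[nxt] >= depth[node]:  # nxt's subtree cannot bypass node
                    if parent is not None or children > 1:
                        articulation.add(node)
                    block = []
                    while True:
                        link = link_stack.pop()
                        block.append(link)
                        if link == (node, nxt):
                            break
                    components.append(block)
            elif depth[nxt] < depth[node]:  # back link to an ancestor
                link_stack.append((node, nxt))
                low[node] = min(low[node], depth[nxt])

    for n in nodes:
        if n not in depth:
            visit(n, None, 0)
    return articulation, components


links = [("A", "B"), ("B", "C"), ("C", "A"), ("B", "D"), ("D", "H"),
         ("H", "E"), ("E", "F"), ("E", "G"), ("G", "F"), ("F", "D")]
nodes = ["A", "B", "C", "D", "E", "F", "G", "H"]

points, blocks = biconnected_components(nodes, links)
print(sorted(points))                  # ['B', 'D']
print(sorted(len(b) for b in blocks))  # [1, 3, 6]
```

On this graph, nodes B and D are the articulation points, and the ten links split into three biconnected components: the triangle A, B, C (three links), the single link between B and D, and the remaining six links among D, E, F, G, and H.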

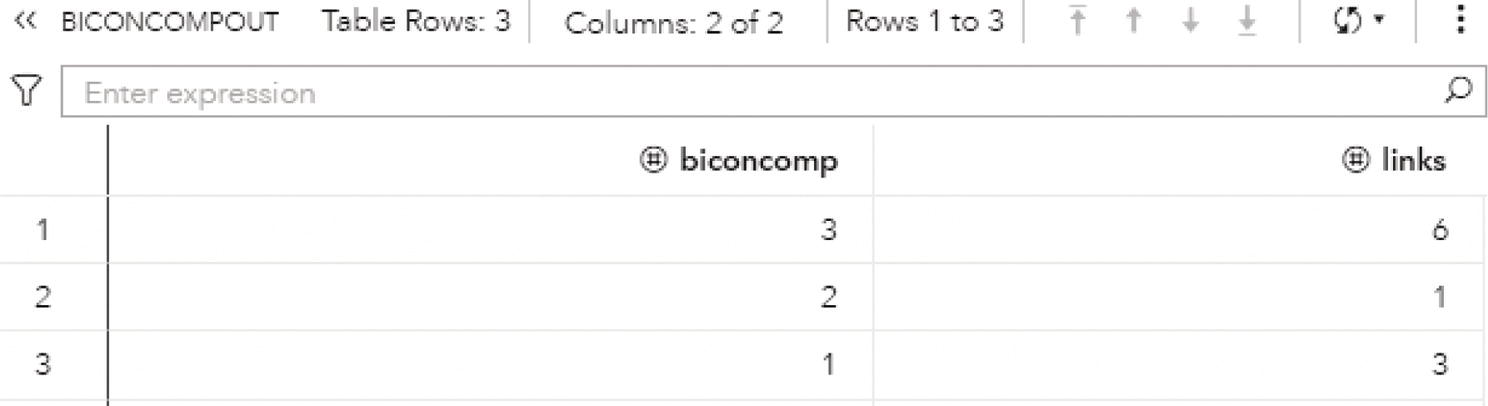

The final results for the biconnected component algorithm in proc network are reported in the output tables defined by the options OUT =, OUTNODES =, and OUTLINKS =. The option OUT = within the BICONNECTEDCOMPONENTS statement produces an output containing all the biconnected components and the number of links associated with each of them.

- biconcomp: the biconnected component identifier.

- links: the number of links contained in the biconnected component.

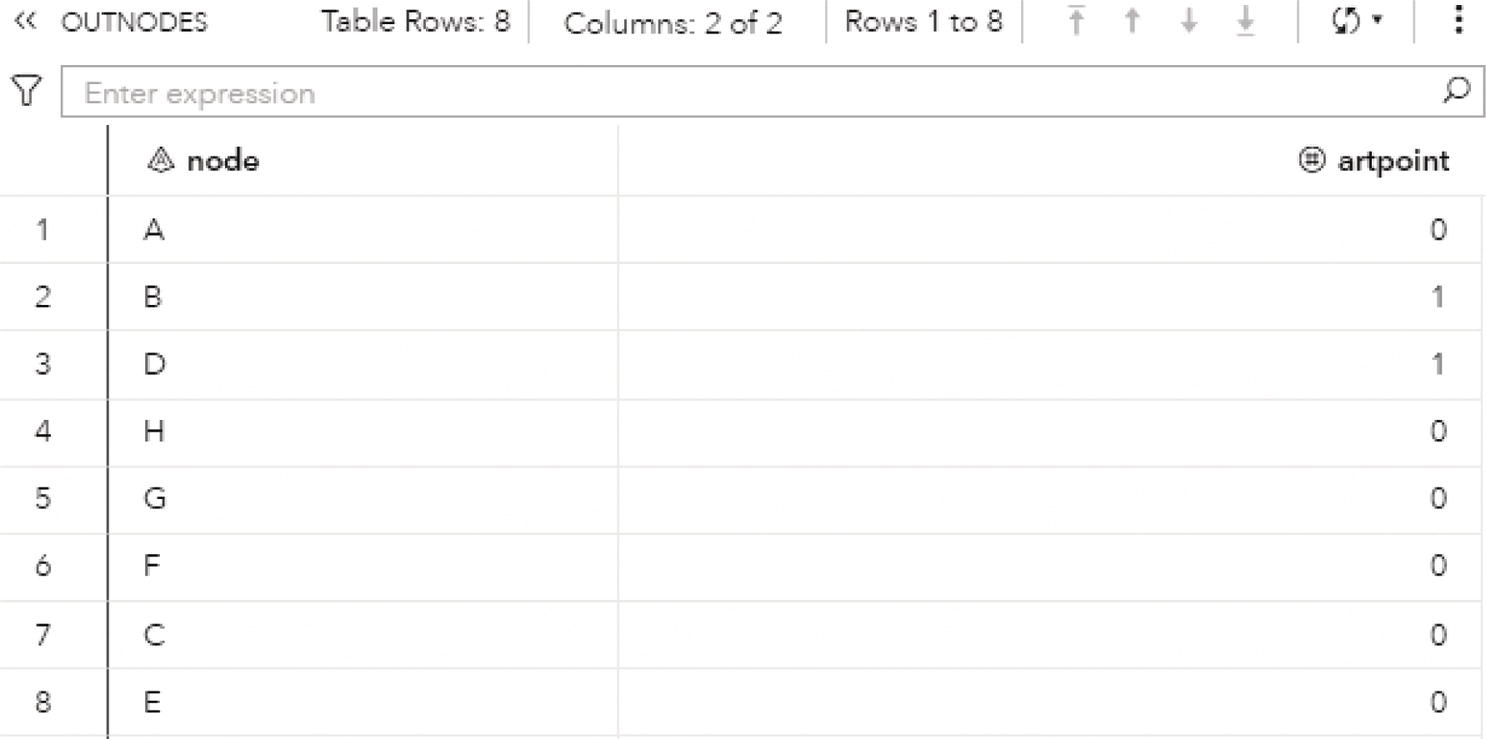

The biconnected components are more associated with the links within the graph than with the nodes. Therefore, the option OUTNODES = within the PROC NETWORK statement produces an output table containing the node identifiers and a flag that indicates whether or not each node is an articulation point.

- node: the node identifier.

- artpoint: identifies whether or not the node is an articulation point. A value of 1 indicates that the node is an articulation point, and a value of 0 indicates that it is not.

The option OUTLINKS = within the PROC NETWORK statement produces an output containing all the links and the biconnected component each link belongs to.

- from: the origin node identifier.

- to: the destination node identifier.

- biconcomp: the biconnected component identifier for the link.

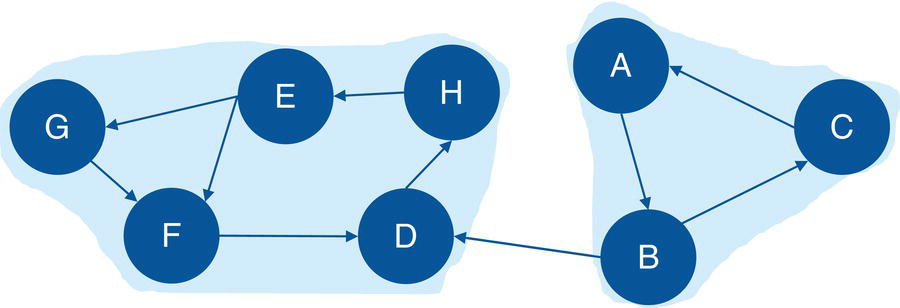

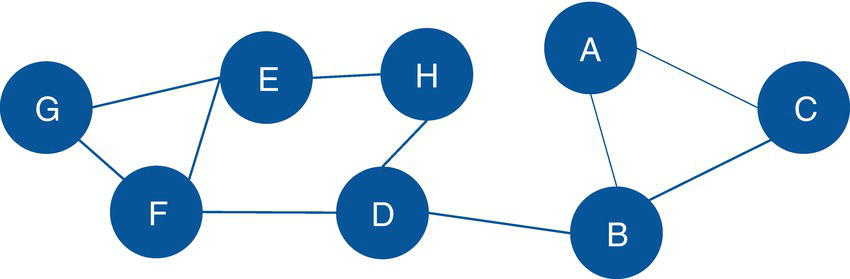

Figure 2.23 Input graph with undirected links for the biconnected components identification.

Let's see an example of biconnected components for an undirected graph.

2.3.1 Finding the Biconnected Components

Let's consider the same graph we have used for the connected components example. That graph is shown in Figure 2.23 and has 8 nodes and 10 links. As with the connected components, link weights are not required when searching for biconnected components.

The following code describes the links dataset creation. No weights are required, just the origin and destination nodes for the undirected graph.

    data mycas.links;
       input from $ to $ @@;
    datalines;
    A B B C C A B D D H H E E F E G G F F D
    ;
    run;

Based on the links dataset, we can invoke the biconnected component algorithm to search for the biconnected components and the articulation point nodes within the graph. The following code describes how to do that.

    proc network
       direction = undirected
       links = mycas.links
       outlinks = mycas.outlinks
       outnodes = mycas.outnodes
       ;
       linksvar
          from = from
          to = to
       ;
       biconnectedcomponents
          out = mycas.biconcompout
       ;
    run;

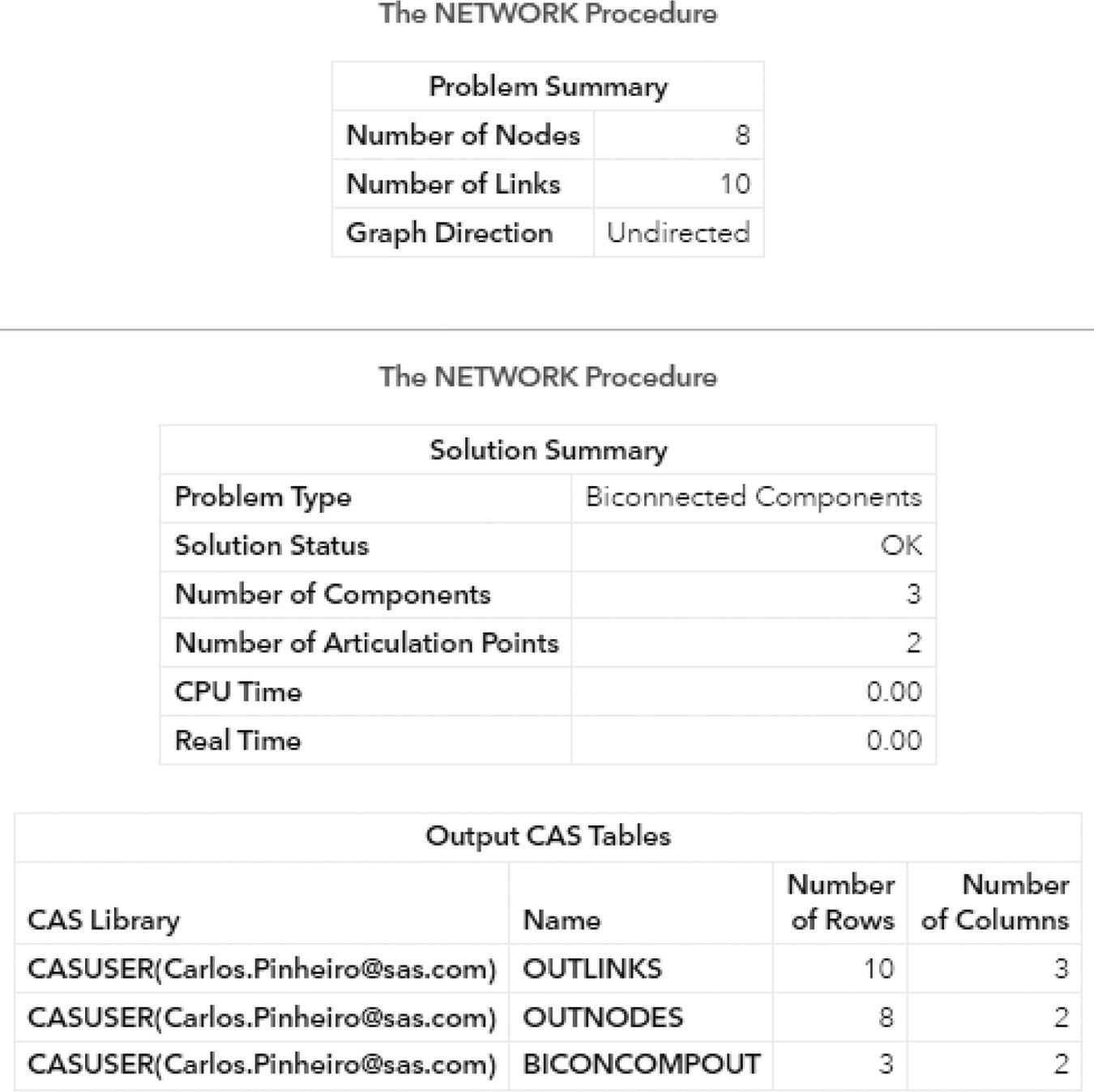

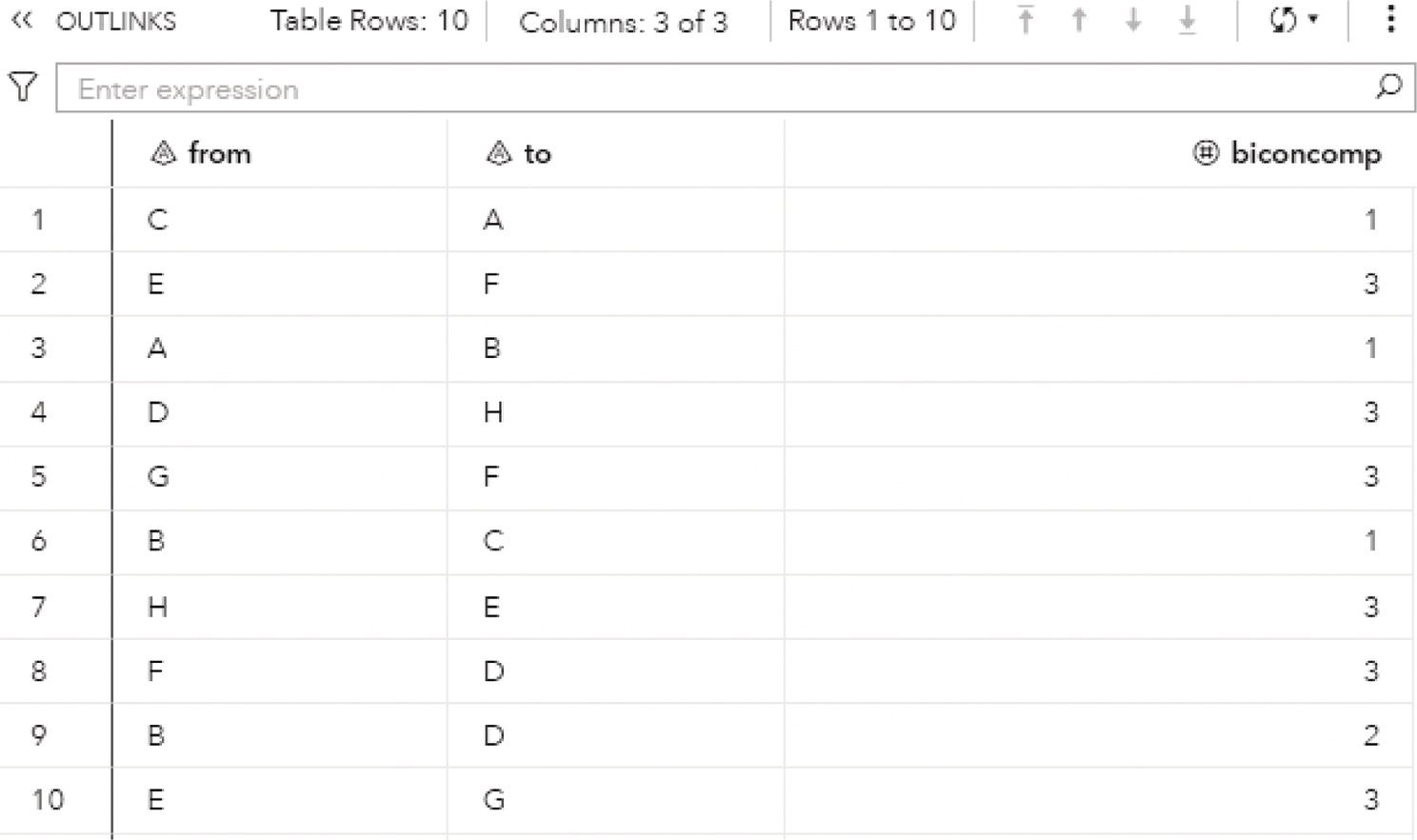

The following picture shows the outputs for the biconnected components algorithm. Figure 2.24 reports a summary of the execution process, showing the network composed of 8 nodes and 10 links, as an undirected graph. A solution was found with three biconnected components being identified and with two nodes being determined as articulation points. The last part of the output describes the three output tables created, the links output table, the nodes output table, and the biconnected components output table.

Figure 2.25 shows the OUTLINKS table, which describes the links and the biconnected components each link belongs to. The output table describes the original links variables from and to, and the biconnected component identifier assigned to the link.

Notice that there are three biconnected components. The biconnected component 1 comprises three links, C→A, A→B, and B→C. The biconnected component 2 comprises one single link, B→D. Finally, the biconnected component 3 comprises six links, E→F, D→H, G→F, H→E, F→D, and E→G.

Figure 2.26 shows the OUTNODES table, which describes the nodes and the articulation point flag.

Two nodes were identified as articulation points, which means they can break down the connected component into smaller components within the input graph. The nodes B and D can represent bridges in the original network splitting the original graph into multiple subgraphs.

Figure 2.24 Output results by proc network for the biconnected components algorithm.

Figure 2.25 Output links table for the biconnected components.

Finally, the output table BICONCOMPOUT shows the summary of the biconnected component algorithm. It is presented in Figure 2.27. This table presents the biconnected components identifiers and the number of links in each biconnected component. As described above, biconnected component 3 has six links, biconnected component 2 has one single link, and biconnected component 1 has three links.

Figure 2.26 Output nodes table for the biconnected components.

Figure 2.27 Output summary table for the biconnected components.

Figure 2.28 Input graph with the identified biconnected components and the articulation point nodes.

Figure 2.28 shows the biconnected components highlighted within the original input graph, as well as the articulation point nodes.

Notice on the left that six links create one biconnected component. On the right, three links create another biconnected component. In the middle of the graph, a single link creates the last biconnected component. On the left biconnected component, if we remove node E, nodes G and F can still reach node H through node D. If we remove node H, node D can still reach node E through node F. Therefore, nodes G, F, E, H, and D form a single biconnected component. Removing any one of these nodes does not disconnect the remaining nodes of that biconnected component from each other. The same thing happens for nodes A, B, and C. Removing any one of these nodes leaves the other two connected to each other. Only nodes D and B have the ability to break apart the original graph into smaller components. If we remove node D, the nodes G, F, E, and H will form a component, and nodes A, B, and C will form another one. If we remove node B, nodes A and C will form a component, and nodes G, F, E, H, and D will form another one. For this reason, nodes D and B are the articulation points within this input graph.
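The walkthrough above can be cross-checked outside proc network. The following is a minimal Python sketch of the classic depth-first search approach (low-link values plus a stack of visited links). It assumes a simple undirected graph with no parallel links; the function name is hypothetical and this is an illustration, not the implementation used by proc network.

```python
from collections import defaultdict

def biconnected_components(links):
    """Return (components, articulation_points) for an undirected graph.

    components is a list of link lists; articulation_points is a set of nodes.
    """
    adj = defaultdict(list)
    for u, v in links:
        adj[u].append(v)
        adj[v].append(u)

    disc, low = {}, {}      # discovery time and low-link value per node
    stack = []              # links visited but not yet assigned to a component
    components, art_points = [], set()
    counter = 0

    def dfs(u, parent):
        nonlocal counter
        counter += 1
        disc[u] = low[u] = counter
        children = 0
        for v in adj[u]:
            if v == parent:
                continue
            if v not in disc:                   # tree link
                children += 1
                stack.append((u, v))
                dfs(v, u)
                low[u] = min(low[u], low[v])
                if low[v] >= disc[u]:           # u separates v's subtree
                    if parent is not None or children > 1:
                        art_points.add(u)
                    comp = []
                    while True:                 # pop one whole component
                        link = stack.pop()
                        comp.append(link)
                        if link == (u, v):
                            break
                    components.append(comp)
            elif disc[v] < disc[u]:             # back link to an ancestor
                stack.append((u, v))
                low[u] = min(low[u], disc[v])

    for node in list(adj):
        if node not in disc:
            dfs(node, None)
    return components, art_points

# The same 10 links used in the example above.
links = [("A", "B"), ("B", "C"), ("C", "A"), ("B", "D"), ("D", "H"),
         ("H", "E"), ("E", "F"), ("E", "G"), ("G", "F"), ("F", "D")]
components, art_points = biconnected_components(links)
print(sorted(len(c) for c in components))   # [1, 3, 6]
print(sorted(art_points))                   # ['B', 'D']
```

The component sizes (one, three, and six links) and the two articulation points agree with the output tables described above.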

2.4 Community

Community detection is a crucial step in network analysis. It breaks down the entire network into smaller groups of nodes. The nodes in each group are more strongly connected to each other than to the nodes in other groups. The idea of community detection is to join nodes based on their average link weights. The average link weight inside the communities should be greater than the average link weight between the communities. Nodes are gathered based on the strength of their relationships in comparison to the rest of their connections.

Communities are densely connected subgraphs within the network, where nodes belonging to the same community are more likely to be connected to nodes within their own community than to nodes outside it. A network can contain as many communities as the number of nodes, or one single community representing the entire network. Often, the useful answer is something in between that captures the overall characteristics of different groups of nodes while keeping the network topology structure. Similar to clustering analysis, the community detection algorithm assigns each node to a unique community.

The idea of communities within networks is very intuitive and quite useful when analyzing large graphs. As groups of densely connected nodes, communities are commonly found in networks representing social interactions, chemical and biological structures, and grids of computers, among other fields. Community detection is important to identify customers consuming the same service, products being purchased together (like in association rules or market basket analysis), companies most frequently making transactions together, or just groups of people who are densely related to each other. Notice that community detection is not clustering analysis. Both techniques combine observations into groups. Clustering works on the similarity of the observations' attributes, for example, customers' demographics or consuming habits. Customers with no relation can fit into the same group just based on their similar attributes. Community detection combines observations (nodes) based on their relations, or the strength of their connections to each other. In fact, this is the fundamental difference between common machine learning or statistical models and network analysis. The first looks into the observations' attributes, and the second looks into the observations' connections.

There are two primary families of methods to detect communities within networks: agglomerative methods and divisive methods. Agglomerative methods add links one by one to subgraphs containing only nodes. The links are added from the highest link weight to the lowest. Divisive methods take the opposite approach: links are removed one by one from the subgraphs. Both methods can find multiple communities in the same network, with different numbers of members in them. It is hard to say which method is best, what the best number of communities within a network is, or what the average number of members in them should be. Mathematically, we can use metrics such as resolution and modularity to narrow this search, but in most cases domain knowledge is important to understand what makes sense for each particular network. For example, let's say that in a telecommunications network, the average number of relevant distinct connections for each subscriber is around 30. When detecting communities within this network, we should look for a number of communities that yields a similar number of members in each community on average. Suppose that the carrier has around 120 million subscribers. Finding 2 or 3 million communities wouldn't be absurd. That number would give us around 40‐60 members in each community on average. Community detection is important in understanding and evaluating the structure of large networks, like the ones associated with communications, social media, banking, and insurance, among others.

Community detection algorithms were initially created to be used on undirected graphs. Currently, there are some algorithms that provide alternative methods to compute community detection on directed graphs. If we are working on directed graphs and still want to perform community detection using algorithms for undirected graphs, we need to aggregate the links between each pair of nodes, considering both directions, and sum up their weights. Once the links are aggregated and the link weights are summed up, we can invoke a community detection algorithm designed to detect communities on undirected graphs. Figure 2.29 describes that scenario.
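The aggregation step can be sketched in a few lines of Python. This is a minimal illustration with hypothetical link data and a hypothetical function name: links between the same pair of nodes are collapsed into one undirected link whose weight is the sum over both directions.

```python
from collections import defaultdict

def aggregate_to_undirected(directed_links):
    """Sum the weights of the links between each node pair, ignoring direction."""
    totals = defaultdict(float)
    for source, target, weight in directed_links:
        key = tuple(sorted((source, target)))   # (A, B) and (B, A) share one key
        totals[key] += weight
    return dict(totals)

# Hypothetical directed links: (from, to, weight)
directed = [("A", "B", 3.0), ("B", "A", 2.0), ("A", "C", 1.0)]
undirected = aggregate_to_undirected(directed)
print(undirected)   # {('A', 'B'): 5.0, ('A', 'C'): 1.0}
```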

The method of partitioning the network into communities is an iterative process, as described before. Two algorithms can be used to partition a graph into subgraphs based on undirected links. Proc network implements both heuristic methods, called Label Propagation and Louvain. Proc network also implements a particular algorithm to partition a graph into communities based on directed links, called Parallel Label Propagation. All three methods are heuristic algorithms, which means they do not search exhaustively for the optimal solution. Instead, they speed up the search by employing practical methods that reach satisfactory solutions. The solution found is therefore not guaranteed to be the best possible partitioning.

The Louvain algorithm aims to optimize modularity, which is one of the most popular merit functions for community detection. Modularity is a measure of the quality of a division of a graph into communities. The modularity of a division is defined to be the fraction of the links that fall within the communities, minus the expected fraction if the links were distributed at random, assuming that the degree of each node does not change.

Figure 2.29 Aggregating directed links into undirected links.

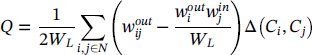

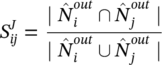

The modularity can be expressed by the following equation:
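A standard way to write it, assuming the common normalization by twice the total link weight and writing $w_i = \sum_{j} w_{ij}$ for the sum of the weights of the links incident to node $i$ (a symbol introduced here for brevity), is:

$$
Q = \frac{1}{2W} \sum_{i \in N} \sum_{j \in N} \left( w_{ij} - \frac{w_i \, w_j}{2W} \right) \Delta(C_i, C_j)
$$

The first term inside the parentheses counts the link weight that actually falls within communities, and the second term is the weight expected between nodes $i$ and $j$ if links were placed at random while preserving each node's weighted degree.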

where $Q$ is the modularity, $W$ is the sum of all link weights in the graph G = (N, L), $w_{ij}$ is the sum of the link weights between nodes $i$ and $j$, $C_i$ is the community to which node $i$ belongs, $C_j$ is the community to which node $j$ belongs, and $\Delta(C_i, C_j)$ is the Kronecker delta, which equals 1 when $C_i = C_j$ and 0 otherwise.

The following is a brief description of the Louvain algorithm:

- Initialize each node as its own community.

- Move each node from its current community to the neighboring community that increases modularity the most. Repeat this step until modularity cannot be improved.

- Group the nodes in each community into a super node. Construct a new graph based on super nodes. Repeat these steps until modularity cannot be further improved or the maximum number of iterations has been reached.
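The quantity that these steps try to increase can be evaluated directly for any candidate partition. The following Python sketch computes modularity from the definition given earlier (the fraction of link weight inside communities minus the fraction expected at random), grouped per community. It assumes an undirected graph without self-loops; the function name and the partition are illustrative assumptions, and the code only scores a partition rather than running the Louvain search itself.

```python
from collections import defaultdict

def modularity(links, community):
    """Modularity Q of a partition of a weighted, undirected graph.

    links: iterable of (node_i, node_j, weight); community: dict node -> label.
    """
    total_weight = 0.0                   # W: sum of all link weights
    internal = defaultdict(float)        # link weight inside each community
    degree = defaultdict(float)          # summed weighted degree per community
    for i, j, w in links:
        total_weight += w
        degree[community[i]] += w
        degree[community[j]] += w
        if community[i] == community[j]:
            internal[community[i]] += w
    return sum(
        internal[c] / total_weight - (degree[c] / (2 * total_weight)) ** 2
        for c in degree
    )

# The 10-link graph from Section 2.3.1, with unit weights.
pairs = [("A", "B"), ("B", "C"), ("C", "A"), ("B", "D"), ("D", "H"),
         ("H", "E"), ("E", "F"), ("E", "G"), ("G", "F"), ("F", "D")]
links = [(i, j, 1.0) for i, j in pairs]

two_groups = {n: 1 for n in "ABC"} | {n: 2 for n in "DEFGH"}
one_group = {n: 1 for n in "ABCDEFGH"}
q_two = modularity(links, two_groups)
q_one = modularity(links, one_group)
print(round(q_two, 3))   # 0.355
print(round(q_one, 3))   # 0.0
```

Splitting the graph at the link B–D scores higher than keeping everything in one community, which always has modularity 0.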

The label propagation algorithm moves a node to a community to which most of its neighbors belong. Extensive testing has empirically demonstrated that in most cases, the label propagation algorithm performs as well as the Louvain method.

The following is a brief description of the label propagation algorithm:

- Initialize each node as its own community.

- Move each node from its current community to the neighboring community that contains the largest number of its neighbors. Break ties randomly, if necessary. Repeat this step until there are no more movements.

- Group the nodes in each community into a super node. Construct a new graph based on super nodes. Repeat these steps until there are no more movements or the maximum number of iterations has been reached.
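The node-moving step can be sketched as follows. This is a simplified Python illustration, not proc network's implementation: it omits the super-node step, weighs each neighbor's vote by the link weight (which reduces to counting neighbors when all weights are 1), and, so that the run is repeatable, replaces the random tie-break with a deterministic one. All names and data are hypothetical.

```python
from collections import defaultdict

def label_propagation(links, max_iters=100):
    """Asynchronous label propagation on a weighted, undirected graph."""
    neighbors = defaultdict(dict)
    for i, j, w in links:
        neighbors[i][j] = neighbors[i].get(j, 0.0) + w
        neighbors[j][i] = neighbors[j].get(i, 0.0) + w

    label = {node: node for node in neighbors}      # each node starts alone
    for _ in range(max_iters):
        moved = False
        for node in sorted(neighbors):              # fixed order, for repeatability
            votes = defaultdict(float)
            for other, w in neighbors[node].items():
                votes[label[other]] += w
            top = max(votes.values())
            best = sorted(l for l, v in votes.items() if v == top)
            # Assumption: keep the current label on a tie, otherwise take the
            # smallest label (the description above breaks ties randomly).
            if label[node] not in best:
                label[node] = best[0]
                moved = True
        if not moved:
            break
    return label

# Two triangles with strong links (weight 3) joined by one weak link (weight 1).
links = [("A", "B", 3), ("B", "C", 3), ("C", "A", 3), ("C", "D", 1),
         ("D", "E", 3), ("E", "F", 3), ("F", "D", 3)]
labels = label_propagation(links)
print(labels)
# {'A': 'B', 'B': 'B', 'C': 'B', 'D': 'E', 'E': 'E', 'F': 'E'}
```

The two triangles end up with different labels because the weak link C–D is outvoted on both sides.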

The parallel label propagation algorithm is an extension of the basic label propagation algorithm. During each iteration, rather than updating node labels sequentially, nodes update their labels simultaneously by using the node label information from the previous iteration. In this approach, node labels can be updated in parallel. However, simultaneous updating of this nature often leads to oscillating labels because of the bipartite subgraph structure often present in large graphs. To address this issue, at each iteration the parallel algorithm skips the labeling step at some randomly chosen nodes in order to break the bipartite structure.

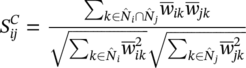

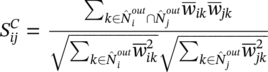

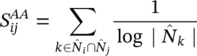

For directed graphs, the modularity equation changes slightly.

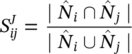

where $w_{ij}$ is the sum of the weights of the links departing from node $i$ and arriving at node $j$, $w_i^{out}$ is the sum of the weights of the links outgoing from node $i$, and $w_j^{in}$ is the sum of the weights of the links incoming to node $j$.

All three algorithms adopt a heuristic local optimization approach. The final result often depends on the sequence of nodes that are presented in the links input data set. Therefore, if the sequence of nodes in the links data set changes, the result can be different.

The graph direction is relevant in the community detection process. For undirected graphs, both algorithms, Louvain and label propagation, find communities based on the density of the subgraphs. For directed graphs, the parallel label propagation algorithm finds communities based on the information flow along the directed links. It propagates the community identification along the outgoing links of each node. Therefore, when using directed graphs, if the graph does not have a cyclic structure in which nodes are connected to each other along outgoing links, the nodes are likely to switch between communities during the computation. As a result, the algorithm might not converge well, and a good community structure within the graph might not be established.

We always need to think about the graph direction depending on the problem we are analyzing. For example, in a social network, friendship is represented by undirected links. In a telecommunications or banking network, calls, texts, or money transfers are represented by directed links. However, community detection can still be performed on both directed and undirected graphs. For instance, in the telecommunications network, even though the relationships between subscribers are represented by directed links, it makes sense to detect communities based on an undirected graph, as both directions for calls and texts ultimately create the overall relation among people. Perhaps for the banking network, detecting communities based on directed links makes more sense, as the groups of accounts would be based on the information flow, or the sequence of transactions.

A crucial step in community detection is always to analyze the outcomes and verify whether the groups found make sense in the context of the business or the problem to be solved. For example, if the parallel label propagation algorithm does not converge for a directed graph and poor communities are detected, a reasonable approach is to perform the community detection based on the undirected graph. If label propagation does not work well in finding proper communities in undirected graphs, we can always try the Louvain algorithm, and vice versa. Changing the parameters of the recursive option or the resolution used are also ways to detect more reasonable communities. Community detection is ultimately a trial and error process. We need to spend time executing multiple runs and analyzing the outcomes until we decide on the best structure and partitioning.

Another point of attention is the size of the network. For large networks, a power law distribution is commonly observed in terms of the average number of nodes within the communities, which results in few communities with a large number of members, and most of the communities with a small number of nodes. There are some approaches to reduce this effect, by using some of the parameters mentioned before, like the resolution list and the recursive methods. A combination of approaches can also be deployed, usually providing reliable results. For example, we can first perform community detection based on the resolution list, and then adjust the partitioning by using the recursive method considering solely the best resolution in the last step.

In proc network, the COMMUNITY statement invokes the algorithm to detect communities within an input graph. There are many options when running community detection in proc network. The first and probably the most important one is the decision about which algorithm to use. The option ALGORITHM = specifies the algorithm to be used during the community detection process, one of the three algorithms previously mentioned. ALGORITHM = LOUVAIN specifies that the community detection will be based on the Louvain algorithm. It runs only for undirected graphs. ALGORITHM = LABELPROP specifies that the community detection will be based on the label propagation algorithm. It can be used only for undirected graphs. Finally, the option ALGORITHM = PARALLELLABELPROP specifies that the community detection will be based on the parallel label propagation algorithm. Although this algorithm is designed for directed graphs, it can also run on undirected graphs.

The FIX = option specifies which variable in the nodes input table defines the groups of nodes that need to be together in a community. This option is only used for undirected graphs, which means it is available when the ALGORITHM = option is LOUVAIN or LABELPROP. The idea behind this option is to allow users to define a group of nodes to be together, in the same community, no matter the result of the community detection based on the partitioning algorithm. Usually, community detection algorithms start by assigning each node to its own community and then progressively move nodes between communities based on the type of the algorithm and the set of parameters defined. The variable specified in the FIX = option should be in the nodes input table defined in the NODES = option. This variable must contain nonnegative integer values. Nodes with the same value are forced to be in the same community. Regardless of the partitioning process, they cannot be split into different groups along the community detection process. The community that contains them can still grow or shrink as other nodes join or leave it, but the fixed nodes always stay together.

For example, a nodes dataset NodeSetIn has a variable called comm to determine the nodes that must be together. The option NODES = NodeSetIn defines the nodes input table. The option FIX = comm specifies the variable to be used during the partitioning process. Let's say nodes A, B, and C have comm equal to 1, and nodes X, Y, and Z have comm equal to 2. All other nodes have missing values for comm. During the community detection process, proc network will force nodes A, B, and C to fall into the same community, and nodes X, Y, and Z to fall into the same community as well. They can be in the same community (all of them together), or in two distinct communities (A/B/C together in one and X/Y/Z together in another), but neither group can be split across multiple communities.

The community detection algorithm basically treats the group of nodes that are required to be in the same community as a single unit, like a “supernode”. A straightforward consequence of fixing nodes to be in the same community by using the FIX = option is that proc network will have fewer options to move these nodes around into different communities. The overall number of node movements will be reduced according to the number of nodes set to be together. The other consequence is that fewer communities with more members may be found.

The WARMSTART = option works similarly to the FIX = option, at least at the beginning of the process. This option specifies which variable in the nodes input table defines the groups of nodes that need to be together when the partitioning process starts. In other words, it defines the initial partition for warm starting community detection. This initialization can be based on prior executions of community detection, or on existing knowledge about the data, the problem, or the business scenario involved. Similar to the FIX = option, the nodes that have the same value in the variable defined in the WARMSTART = option are placed in the same community. All other nodes, with missing values in that variable or not specified in the nodes input table, are placed in their own communities. The warm start just places the nodes together at the beginning of the partitioning process. During the community detection execution, those nodes can move around to different communities and can end up falling into distinct groups.

For example, a nodes dataset NodeSetIn has a variable called startcomm to determine the nodes that must be placed together when the graph partitioning process starts. The option NODES = NodeSetIn defines the nodes input table. The option WARMSTART = startcomm specifies the variable to be used during the community detection process. Let's say nodes A, B, and C have startcomm equal to 1, and nodes X, Y, and Z have startcomm equal to 2. All other nodes have missing values for startcomm. At the beginning of the partitioning, nodes A, B, and C will form a single community. Nodes X, Y, and Z will form another community. All other nodes will be their own community. Nodes A, B, and C, as well as X, Y, and Z, will start as the “supernodes” ABC and XYZ, respectively. They can change communities along the partitioning, but they are placed together at the beginning.

Notice that both FIX = and WARMSTART = options can be used at the same time. When both options are specified simultaneously, the values of the variables defined for fix and warm start are assumed to be related. For example, if nodes A and B have a value of 1 for the fix, and node C has a value of 1 for the warm start, then they are initialized in the same community. However, during the partitioning, the algorithm can move node C to a different community than nodes A and B, but nodes A and B are forced to move together to another community. At the end of the process, A and B are forced to be in the same community, while C can end up in a different one.

The RECURSIVE option recursively breaks down large communities into smaller groups until certain conditions are satisfied. The RECURSIVE option provides a combination of three possible suboptions: MAXCOMMSIZE, MAXDIAMETER, and RELATION. These suboptions can be used individually or simultaneously. For example, we can define RECURSIVE (MAXCOMMSIZE = 100) to define a tentative maximum of 100 members per community, or RECURSIVE (MAXCOMMSIZE = 500 MAXDIAMETER = 3 RELATION = AND) to define the maximum number of members for each community as 500 and the maximum distance between any pair of nodes in the community as 3. Both conditions must be satisfied during the graph partitioning, as the suboption RELATION is defined as AND. The suboption MAXCOMMSIZE defines the maximum number of nodes for each community. The suboption MAXDIAMETER defines the maximum number of links within the shortest paths between any pair of nodes within the community. The diameter represents the largest number of links to be traversed in order to travel from one node to another. It is the longest shortest path of a graph. The suboption RELATION defines the relationship between the other two suboptions, MAXCOMMSIZE and MAXDIAMETER. If RELATION = AND, the recursive partitioning continues until both constraints defined in the MAXCOMMSIZE and MAXDIAMETER suboptions are satisfied. If RELATION = OR, the partitioning continues until either the maximum number of members in any community is satisfied or the maximum diameter is reached.

At the first step, the community detection algorithm processes the network data with no recursive option. At the end of each step, the algorithm checks whether the community outcomes satisfy the criteria defined in the recursive option. If the criteria are satisfied, the recursive partitioning stops. If the criteria are not satisfied yet, each large community (one that does not satisfy the criteria) is treated as an independent subgraph, and the recursive partitioning is applied to each one of them. The criteria define the maximum number of members for each community and the maximum distance between any pair of nodes within each community. However, the recursive method only attempts to limit the size of the community and the maximum diameter. The criteria are not guaranteed to be satisfied because, in certain cases, some communities can no longer be split. Because of that, at the end of the process, some communities can have more members than defined in MAXCOMMSIZE or more links between a pair of nodes than defined in MAXDIAMETER.
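To make the stopping test concrete, the following Python sketch measures the diameter of one community with breadth-first search and then applies the AND/OR relation. It is only an illustration under stated assumptions: the community is connected, links are unweighted, and the function names and parameters are hypothetical rather than part of proc network.

```python
from collections import deque

def diameter(nodes, links):
    """Longest shortest path (in links) inside the subgraph induced by nodes."""
    nodes = set(nodes)
    adj = {n: set() for n in nodes}
    for i, j in links:
        if i in nodes and j in nodes:
            adj[i].add(j)
            adj[j].add(i)
    longest = 0
    for start in nodes:                  # one breadth-first search per node
        dist = {start: 0}
        queue = deque([start])
        while queue:
            u = queue.popleft()
            for v in adj[u]:
                if v not in dist:
                    dist[v] = dist[u] + 1
                    queue.append(v)
        longest = max(longest, max(dist.values()))
    return longest

def criteria_satisfied(nodes, links, max_size, max_diameter, relation="AND"):
    """True when the community no longer needs to be split."""
    size_ok = len(set(nodes)) <= max_size
    diameter_ok = diameter(nodes, links) <= max_diameter
    if relation == "AND":
        return size_ok and diameter_ok
    return size_ok or diameter_ok

# The five-node biconnected component from Section 2.3.1.
links = [("D", "H"), ("H", "E"), ("E", "F"), ("E", "G"), ("G", "F"), ("F", "D")]
community = ["D", "E", "F", "G", "H"]
print(diameter(community, links))                                    # 2
print(criteria_satisfied(community, links, 4, 2, relation="AND"))    # False
print(criteria_satisfied(community, links, 4, 2, relation="OR"))     # True
```

With a size limit of 4 and a diameter limit of 2, this community would be split again under RELATION = AND (it has five members) but accepted under RELATION = OR (its diameter is within the limit).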

The RESOLUTIONLIST option specifies a list of values that are used as a resolution measure during the recursive partitioning. The list of resolutions is interpreted differently depending on the algorithm used for the community detection. The Louvain algorithm uses all values defined in the resolution list and computes multiple levels of recursive partitioning. At the end of the process, the algorithm produces different numbers of communities, with different numbers of members in them. For example, a higher resolution tends to produce more communities with fewer members, and a lower resolution tends to produce fewer communities with more members. This algorithm detects communities at the highest level first, and then merges communities at lower levels, repeating the process until the lowest level is reached. All levels of resolution are computed simultaneously, in one single execution. This allows the process to be computed extremely fast, even for large graphs. On the other hand, the recursive option cannot be combined with a resolution list. If the recursive option is used in conjunction with the resolution list, only the default resolution value of 1 is used and the list of multiple resolutions is ignored. Also, because of the nature of this optimization algorithm, two executions with different resolution lists will most likely produce different results. For example, a first execution with a resolution list of 1.5, 1.0, and 0.5, and a second execution with a resolution list of 2.0 and 0.5 can produce different outcomes at the same resolution level of 0.5. This happens mostly because of the recursive merges happening throughout the process from higher resolutions to lower ones.

The parallel label propagation algorithm executes the recursive partitioning multiple times, once for each resolution specified. Because of that, the resolution list in the parallel label propagation algorithm is fully compatible with the recursive option. Every execution of the community detection algorithm will use one resolution value and the recursive criteria. In the parallel label propagation algorithm, the resolution value is used when the algorithm decides which neighboring community a node should join. A node cannot join a community if the density of the community, after adding the node, is less than the resolution value r. The density of a community is defined as the number of existing links in the community divided by the total number of possible links, considering the current set of nodes within it. As this algorithm runs in parallel when deciding which community a node should join, the final communities are not guaranteed to have densities larger than the resolution value at all times. The parallel label propagation algorithm may not converge properly if the resolution value specified is too large. The algorithm will probably create many small communities, and the nodes will tend to change between communities along the iterations. On the other hand, if the resolution value is too small, the algorithm will probably find a small number of large communities. The use of the recursive option could be an approach to break down those large communities into smaller ones. Notice that the larger the network, the longer it will take to properly run this process.
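The density rule can be illustrated with a small Python sketch. It assumes an undirected community, so that n nodes allow n(n − 1)/2 possible links; the function names and the numbers are hypothetical.

```python
def density(num_nodes, num_links):
    """Existing links divided by the possible links among num_nodes nodes."""
    possible = num_nodes * (num_nodes - 1) / 2
    return num_links / possible if possible else 0.0

def node_fits(num_nodes, num_links, links_to_community, resolution):
    """Would the community still meet the resolution after adding one node?"""
    new_density = density(num_nodes + 1, num_links + links_to_community)
    return new_density >= resolution

# A community with 4 nodes and 5 links; the candidate node has 2 links into it.
# After the move the community would have 5 nodes and 7 of 10 possible links.
print(density(5, 7))                              # 0.7
print(node_fits(4, 5, 2, resolution=0.5))         # True
print(node_fits(4, 5, 2, resolution=0.8))         # False
```

The same candidate move is accepted at a resolution of 0.5 and rejected at 0.8, which is why large resolution values tend to leave many small communities.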

The algorithm label propagation is not compatible with the resolution list option. If a resolution list is specified when the label propagation algorithm is defined, the default resolution value of 1 is used instead.

The resolution can be interpreted as a measure to merge communities. Two communities are merged if the sum of the weights of intercommunity links is at least r times the expected value of the same sum if the graph is reconfigured randomly. Large resolutions produce more communities with a smaller number of nodes. On the contrary, small resolutions produce fewer communities with a greater number of nodes. Particularly for the algorithm Louvain, the resolution list enables running multiple community detections simultaneously. At the end of the recursive partitioning process multiple communities can be found at the same execution. This approach is interesting when searching for the best topology, particularly in large networks. However, this approach requires further analysis on the outcomes in order to identify the best community distribution. There are two main approaches to analyze the community distribution. The first one is by looking at the modularity value for each resolution level. Mathematically, the higher the modularity, the better. The resolution that gives the modularity closest to 1 provides the best topology in terms of community distribution. The second approach relies on the domain knowledge and the context of the problem. The number of communities and the average number of members in each community can be driven based on previous executions or upon a business understanding of the problem. The best approach is commonly a combination of the mathematical and business methods looking for a good modularity but restricting the number of communities and the average number of members in each community based on the domain knowledge.

The LINKREMOVALRATIO = option specifies the percentage used to remove links with small weights from each node neighborhood. This option can dramatically reduce the execution time when running community detection on large networks by removing unimportant links between nodes. A link is removed if its weight is relatively smaller than the weights of the neighboring links. For example, suppose there are links A→B, A→C, and A→D with link weights 100, 80, and 1, respectively. When nodes are merged into communities, links A→B and A→C are more important than link A→D, as they contribute more to the overall modularity value. Because of that, link A→D can be removed from the network. By removing link A→D, node A will be connected only to nodes B and C. Node D will be disconnected from A when the algorithm merges nodes into communities. It doesn't mean that node D is removed from the network. Perhaps node D has strong links to other nodes in the graph and will be connected to them in a different community. If the weight of any link is less than the number specified in the LINKREMOVALRATIO = option divided by 100 and multiplied by the maximum link weight among all links incident to the node, that link will be removed from the network. The default value for the link removal ratio is 10.
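The threshold rule can be sketched in Python using the weights from the example. One detail is an assumption of this sketch rather than something stated above: a link is dropped when it falls below the threshold computed at either of its two endpoints. The function name is hypothetical, and the extra link D–E is added only to show that node D stays in the network.

```python
from collections import defaultdict

def remove_weak_links(links, ratio=10):
    """Drop links lighter than ratio% of the heaviest link at an endpoint."""
    heaviest = defaultdict(float)        # maximum incident link weight per node
    for i, j, w in links:
        heaviest[i] = max(heaviest[i], w)
        heaviest[j] = max(heaviest[j], w)
    kept = []
    for i, j, w in links:
        # Assumption: the stricter of the two endpoint thresholds applies.
        threshold = ratio / 100 * max(heaviest[i], heaviest[j])
        if w >= threshold:
            kept.append((i, j, w))
    return kept

links = [("A", "B", 100), ("A", "C", 80), ("A", "D", 1), ("D", "E", 50)]
kept = remove_weak_links(links, ratio=10)
print(kept)   # [('A', 'B', 100), ('A', 'C', 80), ('D', 'E', 50)]
```

With the default ratio of 10, the threshold at node A is 10, so the link A–D with weight 1 is removed while D keeps its strong link to E.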

The MAXITERS = option specifies the maximum number of iterations the community detection algorithm runs during the recursive partitioning process. For the Louvain algorithm, the default is 20 iterations. For the label propagation and the parallel label propagation algorithms, the default is 100 iterations.

The RANDOMFACTOR = option specifies the random factor for the parallel label propagation algorithm in order to prevent nodes from oscillating in parallel between communities. At each iteration of the recursive partitioning, the number specified in the option is the fraction of the nodes that are randomly skipped at that step. The number varies from 0 to 1. For example, 0.1 means that 10% of the nodes are not considered during each iteration of the algorithm.

The RANDOMSEED = option works together with the random factor in the parallel label propagation algorithm. The random factor defines the fraction of the nodes that will not be considered at each iteration of the partitioning. In order to consider a different set of random nodes at each iteration, a number must be specified to create the seed. The default seed is 1234.
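The interplay between the random factor and the seed can be pictured with a small Python sketch. It only illustrates the idea of skipping a seeded random subset of nodes at each iteration; how the procedure actually selects the nodes is not described in the text, so the selection scheme and the function name `nodes_to_skip` are assumptions.

```python
import random


def nodes_to_skip(nodes, random_factor, rng):
    """Pick round(random_factor * number of nodes) nodes to leave out
    of one iteration, using the supplied random generator."""
    k = round(random_factor * len(nodes))
    return set(rng.sample(nodes, k))


nodes = list("ABCDEFGHIJKLM")  # the 13 nodes of the example graph
rng = random.Random(1234)      # seeded generator, mirroring the default seed
for iteration in range(3):
    # A different subset can be drawn at each iteration from the same seed.
    print(iteration, sorted(nodes_to_skip(nodes, 0.1, rng)))
```

Reusing the same seed reproduces the same sequence of skipped nodes, which is what makes a run repeatable.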

The TOLERANCE = option or the MODULARITY = option specifies the tolerance value to stop the iterations when the algorithm searches for the optimal partitioning. The Louvain algorithm stops iterating when the fraction of modularity gain between two consecutive iterations is less than the number specified in the option. The label propagation and parallel label propagation algorithms stop iterating when the fraction of community changes for all nodes in the graph is less than the number specified in the option. The value for this option varies from 0 to 1. The default value for the Louvain and the label propagation algorithms is 0.001, and for the parallel label propagation algorithm it is 0.05.

The community detection process can produce up to six outputs as a result. These datasets describe the network structure in terms of the communities found based on the set of nodes, the set of links, the different levels of executions when the community detection uses the algorithm Louvain with multiple resolutions, the properties of the communities, the intensity of the nodes within the communities, and how the communities are connected. To produce all these outcomes, the community detection algorithm may consume considerable time and storage, particularly for large networks. In that case, we may consider suppressing some of these outcomes that are mostly intended for post community detection analysis.

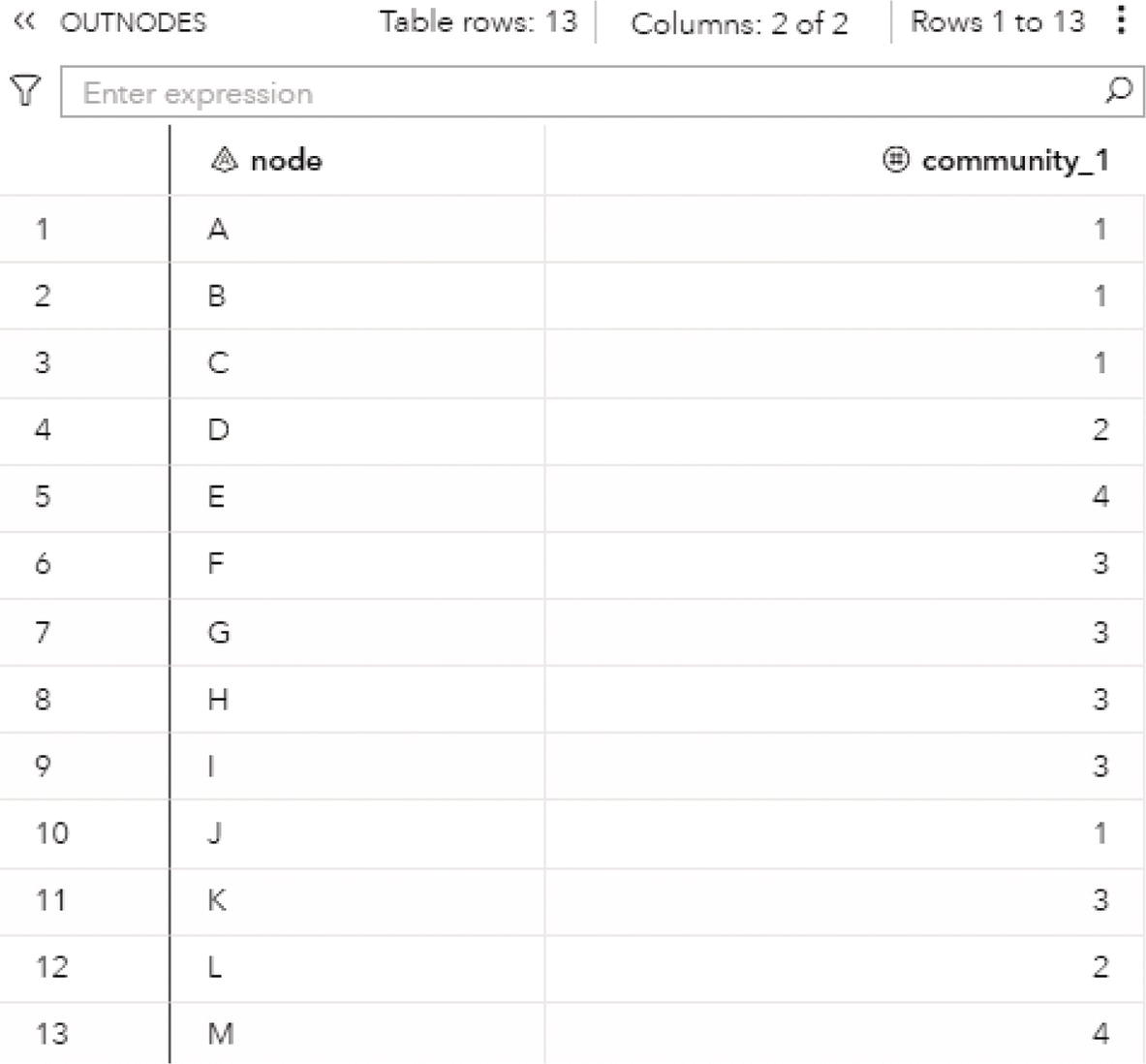

The option OUTNODES = within the COMMUNITY statement produces an output containing all nodes and their respective communities. In the case of multiple resolutions, the output reports the community for each node at each resolution level. After the topology is selected, every node is assigned to exactly one community at each resolution level. Post analysis of the communities can be performed based on the nodes and their communities at each level executed.

- node: identifies the node.

- community_i: identifies the community the node belongs to, where i identifies the resolution level defined in the resolution list, and i is ordered from the greatest resolution to the lowest in the list.

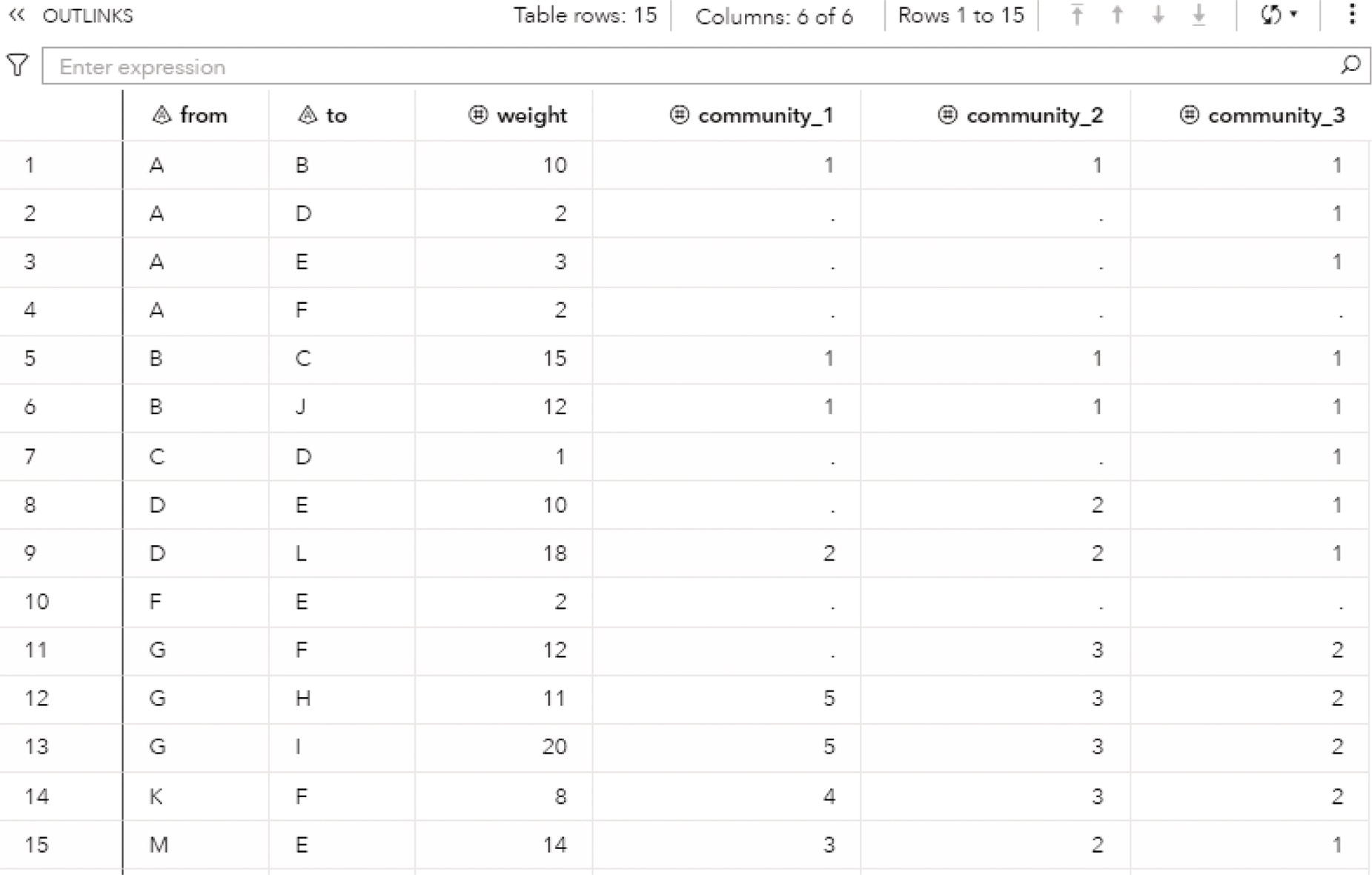

The option OUTLINKS = produces an output containing all links and their respective communities. Again, if multiple resolutions are specified during the community detection process, the output reports the community for each link at each resolution level. If the from node of a link is assigned to one community and the to node is assigned to a different community, the community identifier receives a missing value to indicate that the link is an intercommunity link.

- from: identifies the originating node.

- to: identifies the destination node.

- community_i: identifies the community the link belongs to, where i identifies the resolution level defined in the resolution list and is ordered from the greatest to the lowest resolution in the list.
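The rule for the link community identifier can be sketched in Python as follows. This is an illustration rather than the procedure's code: the function name `link_community` is hypothetical, `None` stands in for the missing value, and the assignment used is the three-community result reported for the example in Section 2.4.1.

```python
# Three-community assignment reported in the text for resolution 1.0.
LEVEL2 = {}
for label, members in ((1, "ABCJ"), (2, "DELM"), (3, "FGHIK")):
    for node in members:
        LEVEL2[node] = label


def link_community(from_node, to_node, community):
    """Return the shared community of a link, or None (missing) when the
    two end nodes belong to different communities."""
    c_from, c_to = community[from_node], community[to_node]
    return c_from if c_from == c_to else None


print(link_community("A", "B", LEVEL2))  # 1
print(link_community("A", "D", LEVEL2))  # None
```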

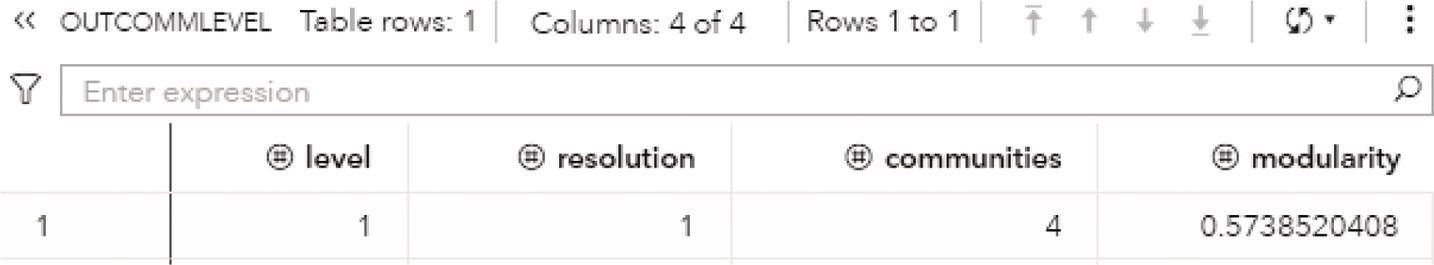

The option OUTLEVEL = contains the number of communities and the modularity values associated with the different resolution levels. This outcome is probably one of the first outputs to be analyzed in order to identify the possible network topology in terms of communities. The balance between the modularity value, the number of communities found, and the average number of members for all communities is evaluated at this point.

- level: identifies the resolution level.

- resolution: identifies the value in the resolution list.

- communities: identifies the number of communities detected at that resolution level.

- modularity: identifies the modularity achieved for the resolution level when the community detection process is executed.

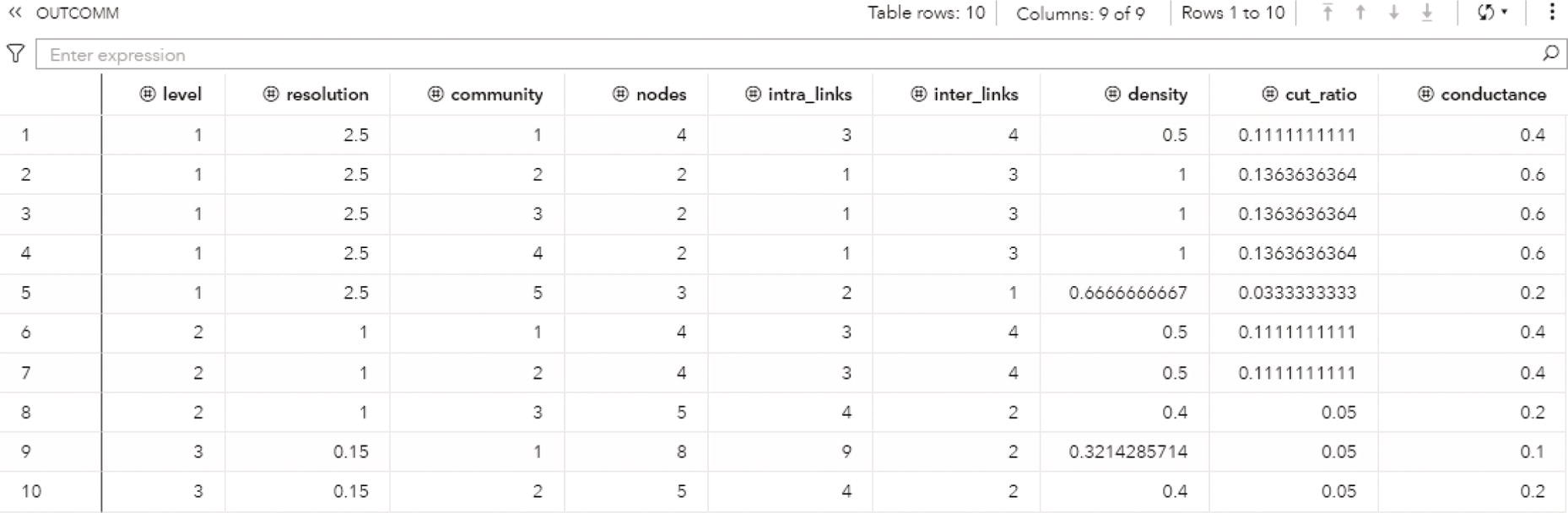

The option OUTCOMMUNITY = contains several attributes to describe the communities found during the community detection process.

- level: identifies the resolution level.

- resolution: identifies the value in the resolution list.

- community: identifies the community.

- nodes: identifies the number of nodes in the community.

- intra_links: identifies the number of links that connect two nodes in the community.

- inter_links: identifies the number of links that connect two nodes in different communities, that is, links whose from node is in one community and whose to node is in another.

- density: the intra_links divided by the total number of possible links within the community.

- cut_ratio: the inter_links divided by the total number of possible links from the community to nodes outside the community.

- conductance: the fraction of links from nodes in the community that connect to nodes in a different community. For undirected graphs, it is inter_links divided by the sum of inter_links and two times intra_links. For directed graphs, it is inter_links divided by the sum of inter_links and intra_links.

These measures are especially useful in analyzing the communities, particularly in large graphs. The density and the intra links show how dense and well connected the community is, regardless of its size or number of members. The inter links, the cut ratio, and the conductance describe how isolated the community is from the rest of the network, or how interconnected it is with other communities within the network. Communities that are more interconnected with the rest of the network tend to spread the information flow faster and more strongly. This characteristic can be used to diffuse marketing and sales information. Sparse or highly isolated communities can be unexpected and can indicate possible suspicious behaviors. All these analyses depend on the context of the problem.
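As a rough check of these definitions, the following Python sketch computes the density, cut ratio, and conductance for an undirected graph. It uses the links and the three-community assignment of the example in Section 2.4.1. Two details are assumptions rather than statements from the text: the number of possible links inside a community of n nodes is taken as n(n − 1)/2, and the number of possible links leaving it as n times the number of nodes outside. The function name `community_measures` is hypothetical.

```python
# Links (from, to, weight) of the example graph; weights are not needed here.
LINKS = [
    ("A", "B", 10), ("B", "C", 15), ("B", "J", 12),
    ("A", "F", 2), ("A", "E", 3), ("A", "D", 2), ("C", "D", 1), ("F", "E", 2),
    ("G", "F", 12), ("G", "I", 20), ("G", "H", 11), ("K", "F", 8),
    ("D", "L", 18), ("D", "E", 10), ("M", "E", 14),
]

LEVEL2 = {}
for label, members in ((1, "ABCJ"), (2, "DELM"), (3, "FGHIK")):
    for node in members:
        LEVEL2[node] = label


def community_measures(links, community, target):
    """Link counts and ratios for one community of an undirected graph.
    Assumes the community has at least two nodes and at least one link."""
    n_total = len(community)
    n_in = sum(1 for c in community.values() if c == target)
    intra = sum(1 for u, v, _ in links
                if community[u] == target and community[v] == target)
    inter = sum(1 for u, v, _ in links
                if (community[u] == target) != (community[v] == target))
    return {
        "nodes": n_in,
        "intra_links": intra,
        "inter_links": inter,
        "density": intra / (n_in * (n_in - 1) / 2),
        "cut_ratio": inter / (n_in * (n_total - n_in)),
        "conductance": inter / (inter + 2 * intra),
    }


for c in (1, 2, 3):
    print(c, community_measures(LINKS, LEVEL2, c))
```

For community 3 (nodes F, G, H, I, and K), for instance, this gives 4 intra links, 2 inter links, a density of 0.4, a cut ratio of 0.05, and a conductance of 0.2.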

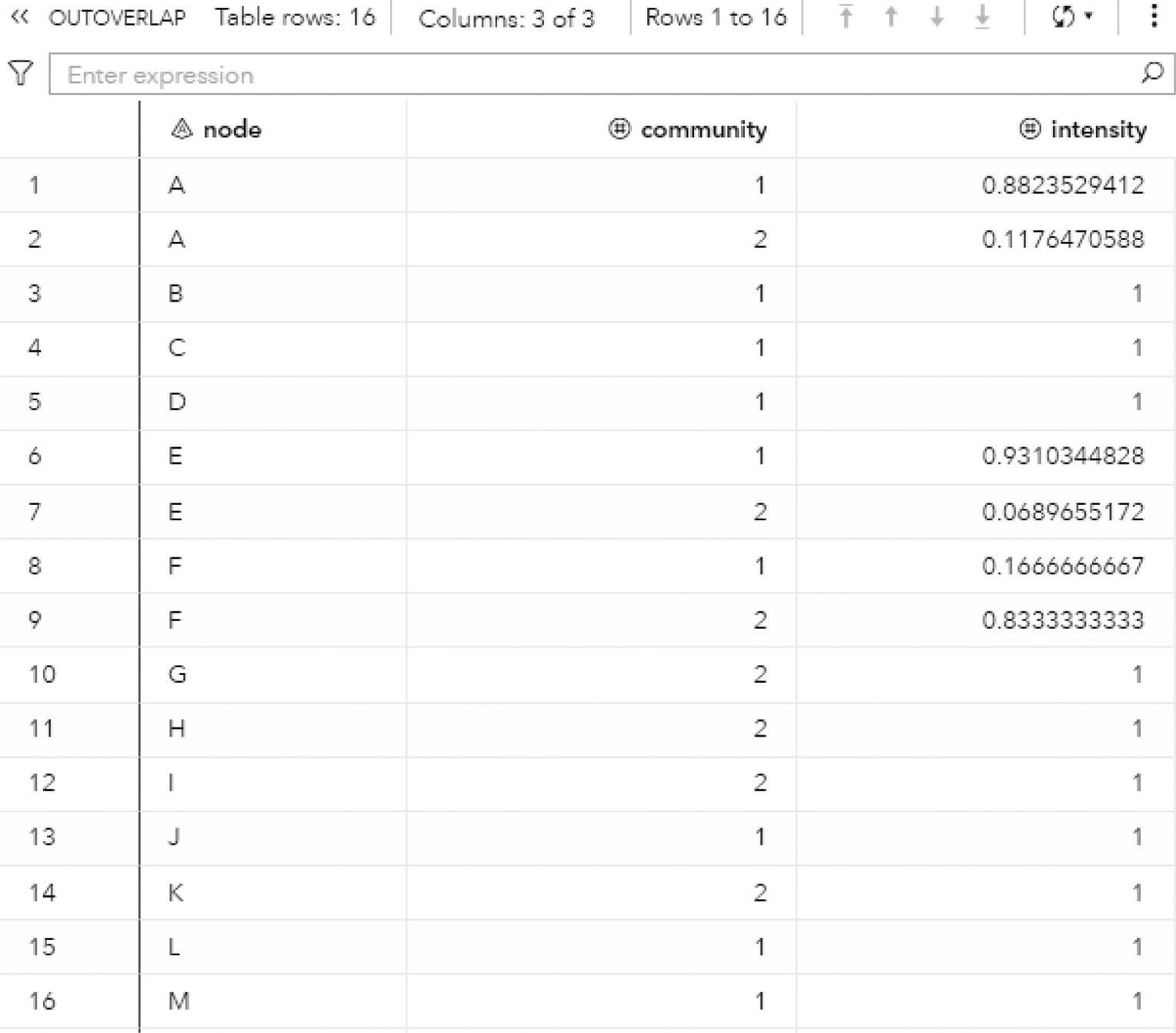

The option OUTOVERLAP = produces an output containing information about the intensity of each node. Even though a node belongs to a single community, it may have multiple links to other communities. The intensity of the node is calculated as the sum of the weights of the links it has with nodes within its community, divided by the sum of the weights of all its links (considering links to nodes in the same community and links to nodes in different communities). For directed graphs, only the outgoing links are taken into consideration. This output can be expensive to compute and store, particularly in large networks. For that reason, this output contains only the results achieved by the smallest value of the resolution list. This output table is one of the first options to be suppressed when working on large networks.

- node: identifies the node.

- community: identifies the community.

- intensity: identifies the intensity of the node that belongs to the community.

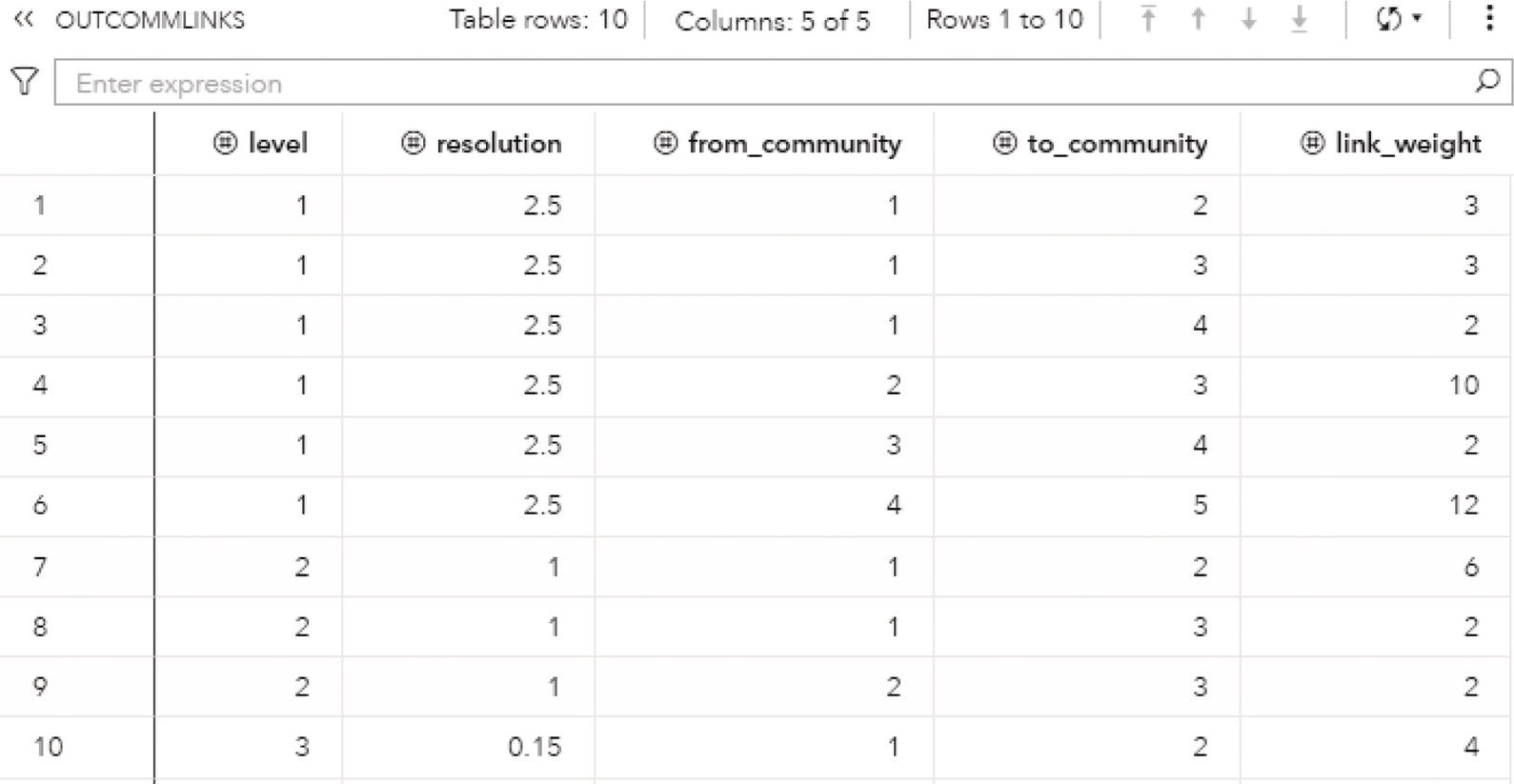

Finally, the option OUTCOMMLINKS = produces an output containing information about how the communities are interconnected. This information is important to understand how cohesive the network is from a community perspective.

- level: identifies the resolution level.

- resolution: identifies the value in the resolution list.

- from_community: identifies the community where the links originate.

- to_community: identifies the community where the links end.

- link_weight: identifies the sum of the link weights of all links between from and to communities.
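The aggregation behind link_weight can be sketched in Python as follows, using the links and the three-community assignment of the example in Section 2.4.1. This is an illustration only: the function name `community_link_weights` is hypothetical, the graph is treated as undirected (each pair of communities is reported once), and only links between different communities are summed, which may differ from the exact rows the procedure writes.

```python
LINKS = [
    ("A", "B", 10), ("B", "C", 15), ("B", "J", 12),
    ("A", "F", 2), ("A", "E", 3), ("A", "D", 2), ("C", "D", 1), ("F", "E", 2),
    ("G", "F", 12), ("G", "I", 20), ("G", "H", 11), ("K", "F", 8),
    ("D", "L", 18), ("D", "E", 10), ("M", "E", 14),
]

LEVEL2 = {}
for label, members in ((1, "ABCJ"), (2, "DELM"), (3, "FGHIK")):
    for node in members:
        LEVEL2[node] = label


def community_link_weights(links, community):
    """Sum the weights of the links that join two different communities,
    keyed by the (smaller, larger) pair of community identifiers."""
    totals = {}
    for u, v, w in links:
        cu, cv = community[u], community[v]
        if cu != cv:
            pair = (min(cu, cv), max(cu, cv))
            totals[pair] = totals.get(pair, 0) + w
    return totals


print(community_link_weights(LINKS, LEVEL2))
```

For this assignment, the links between communities 1 and 2 (A and E, A and D, C and D) sum to 6, while communities 1 and 3 and communities 2 and 3 are each joined by a single link of weight 2.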

Let's look into some different examples of community detection for both directed and undirected networks.

2.4.1 Finding Communities

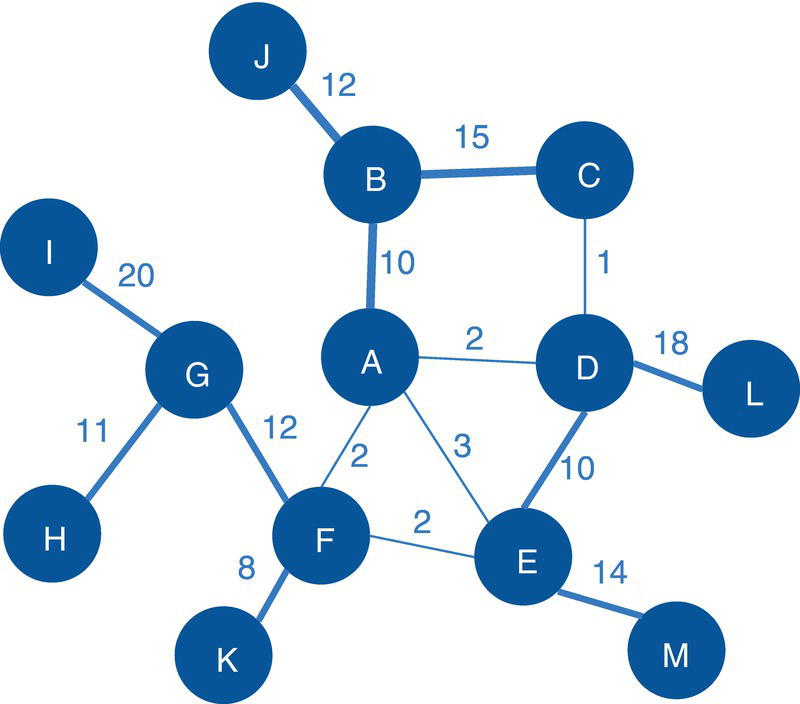

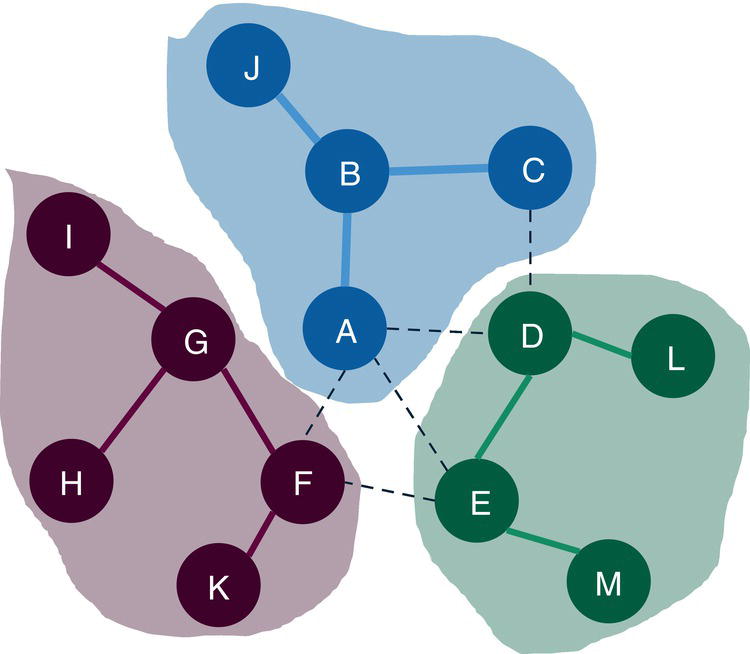

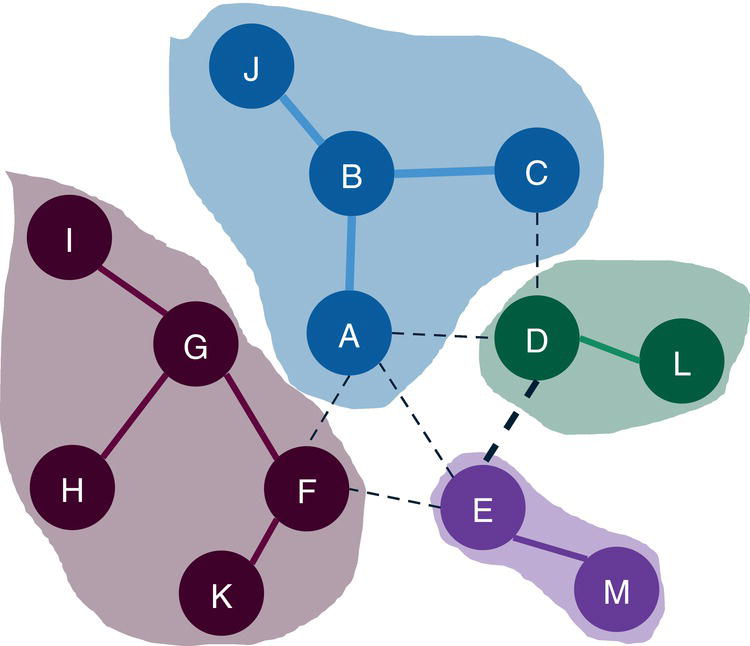

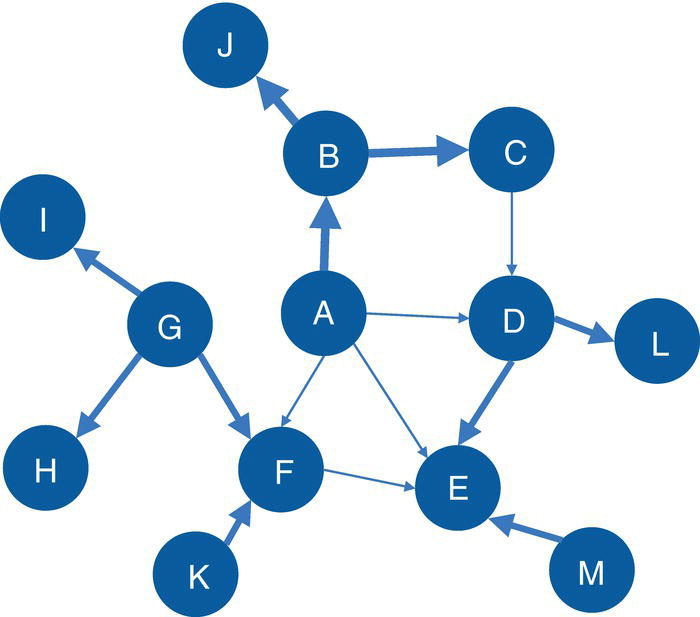

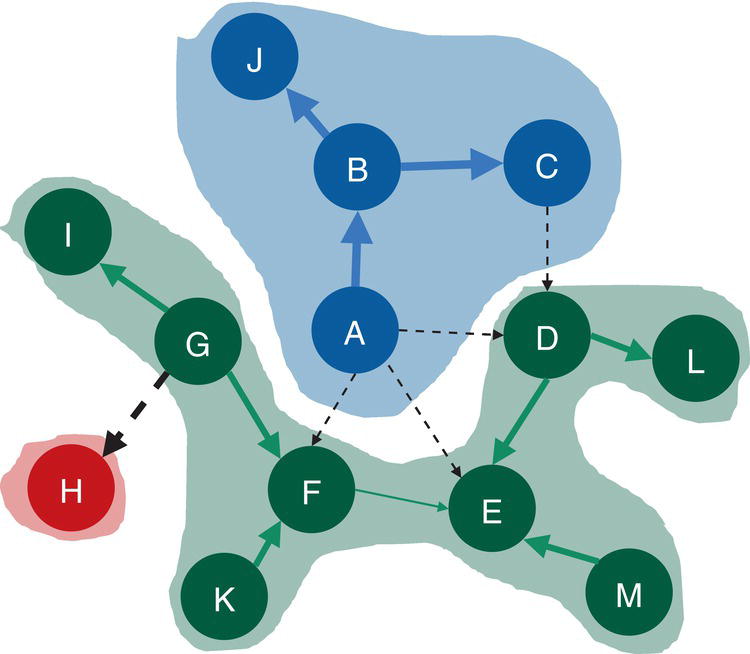

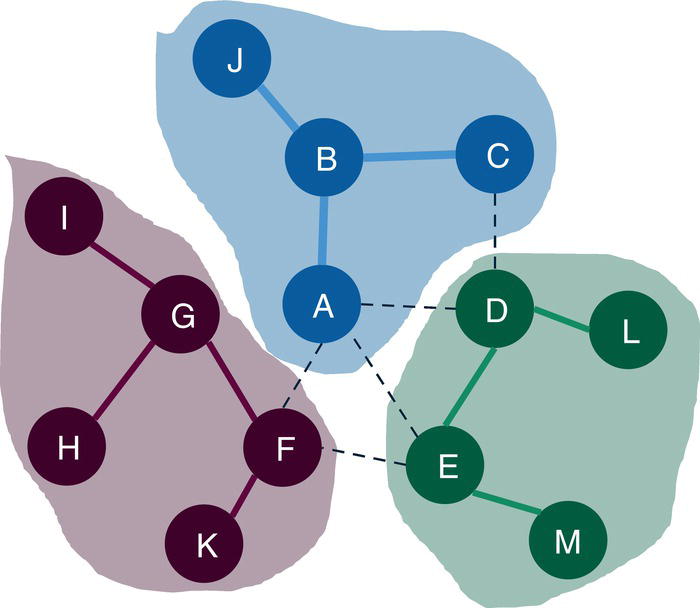

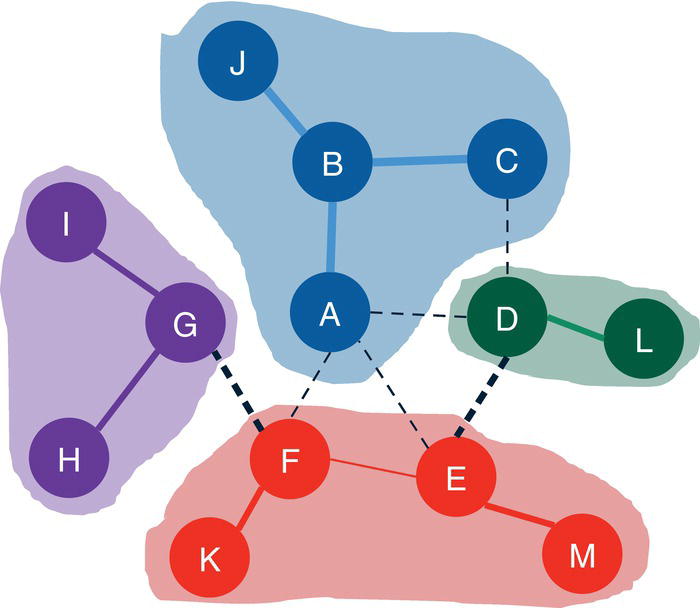

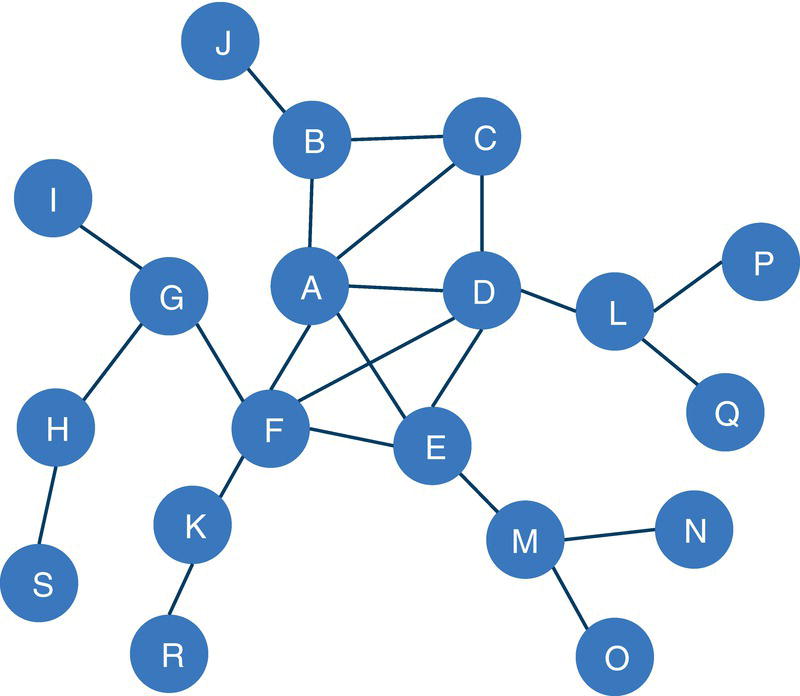

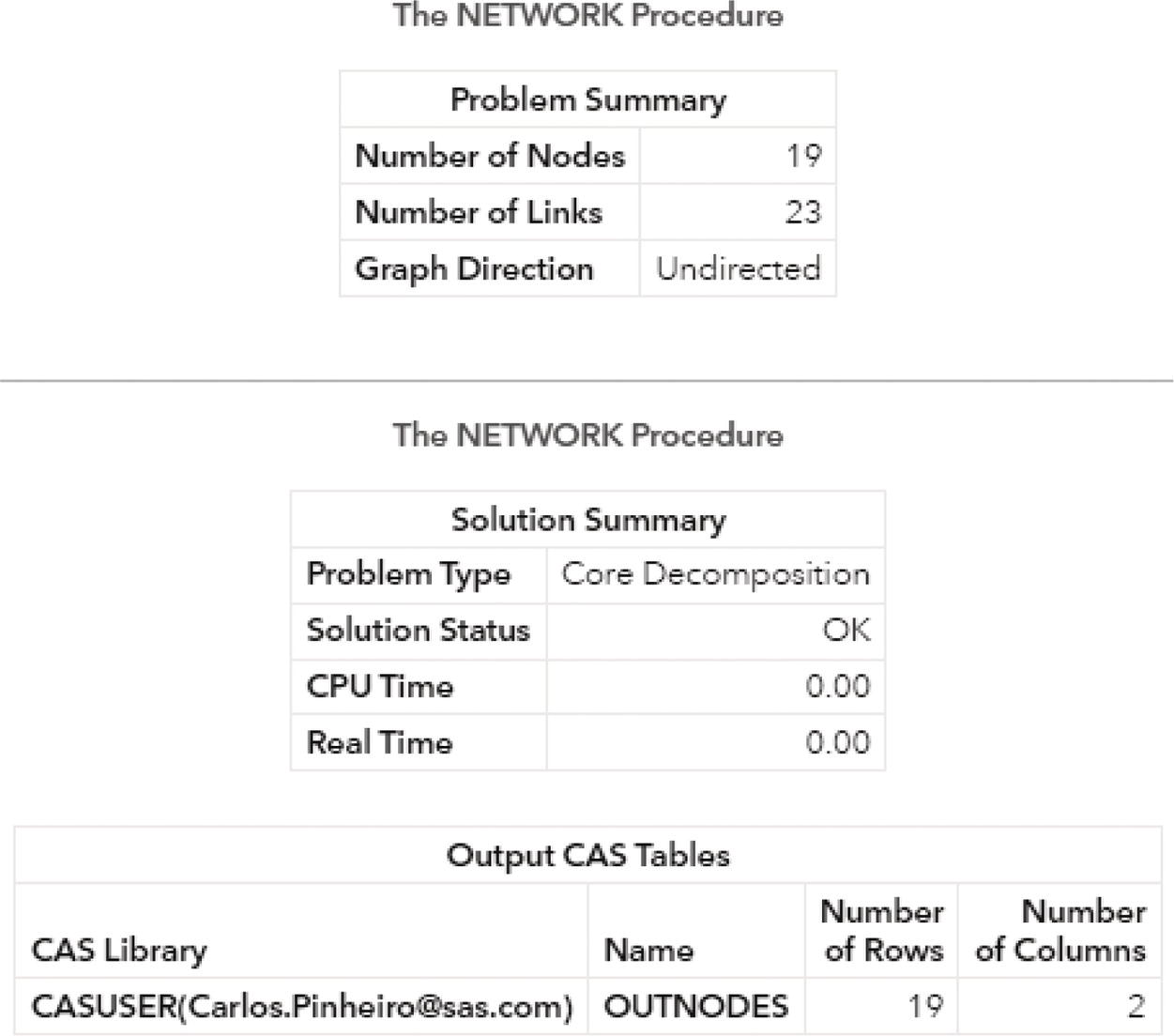

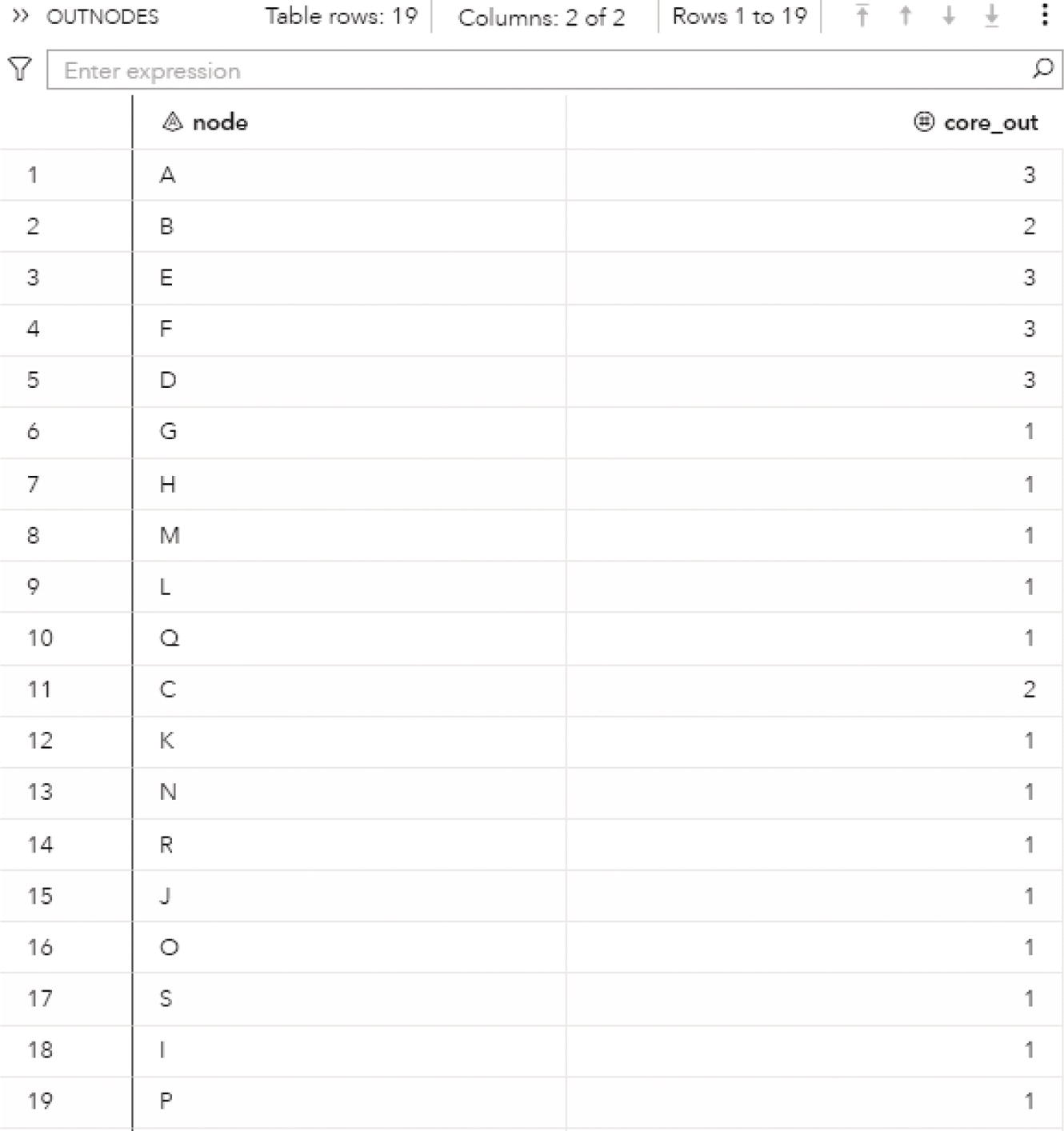

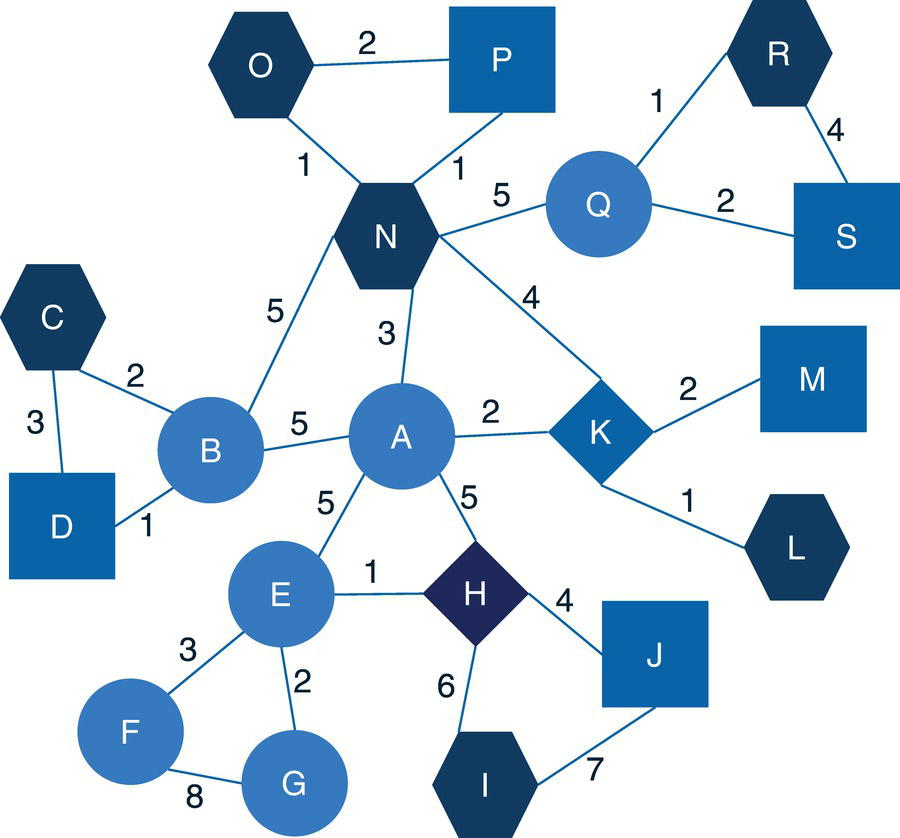

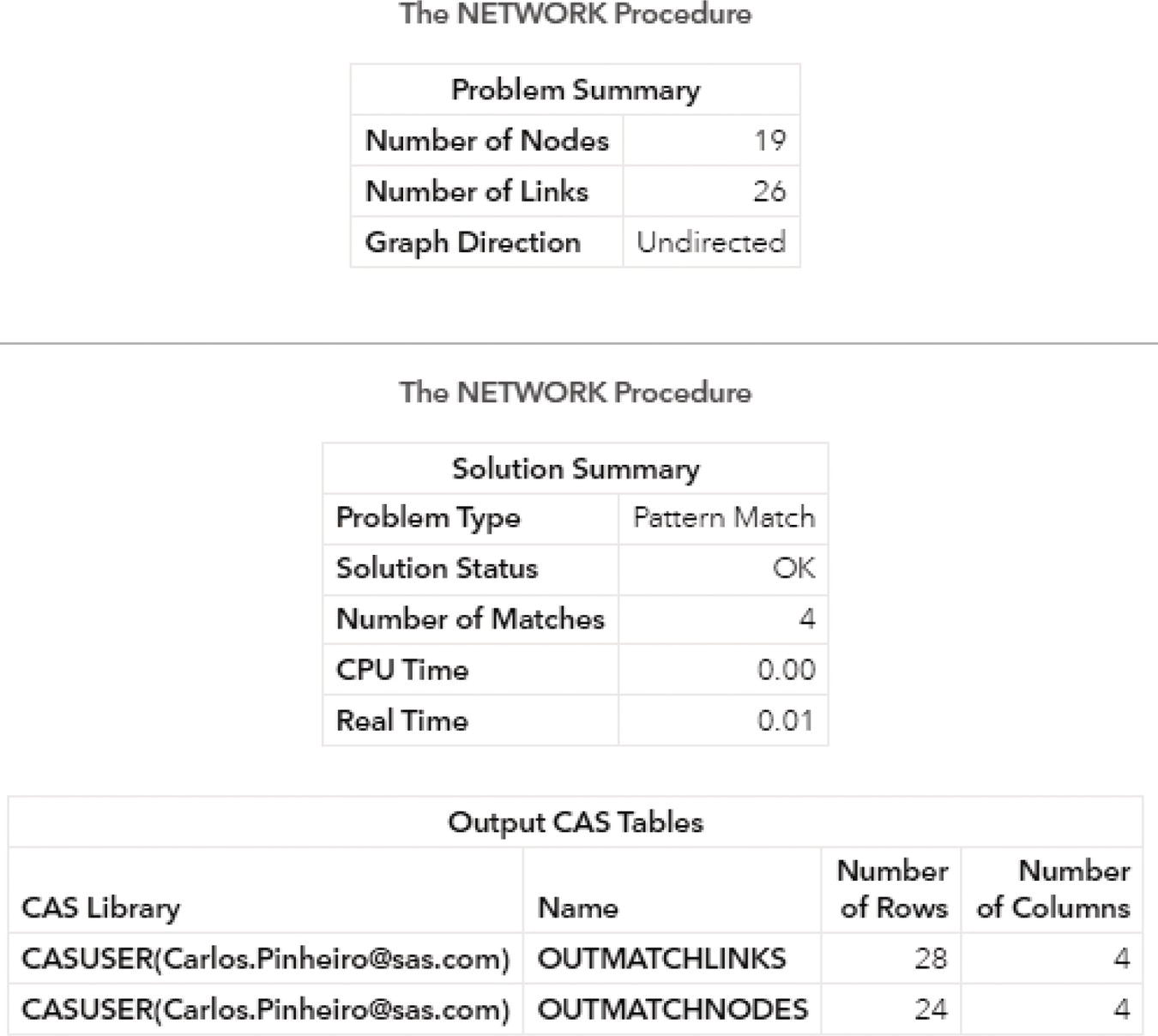

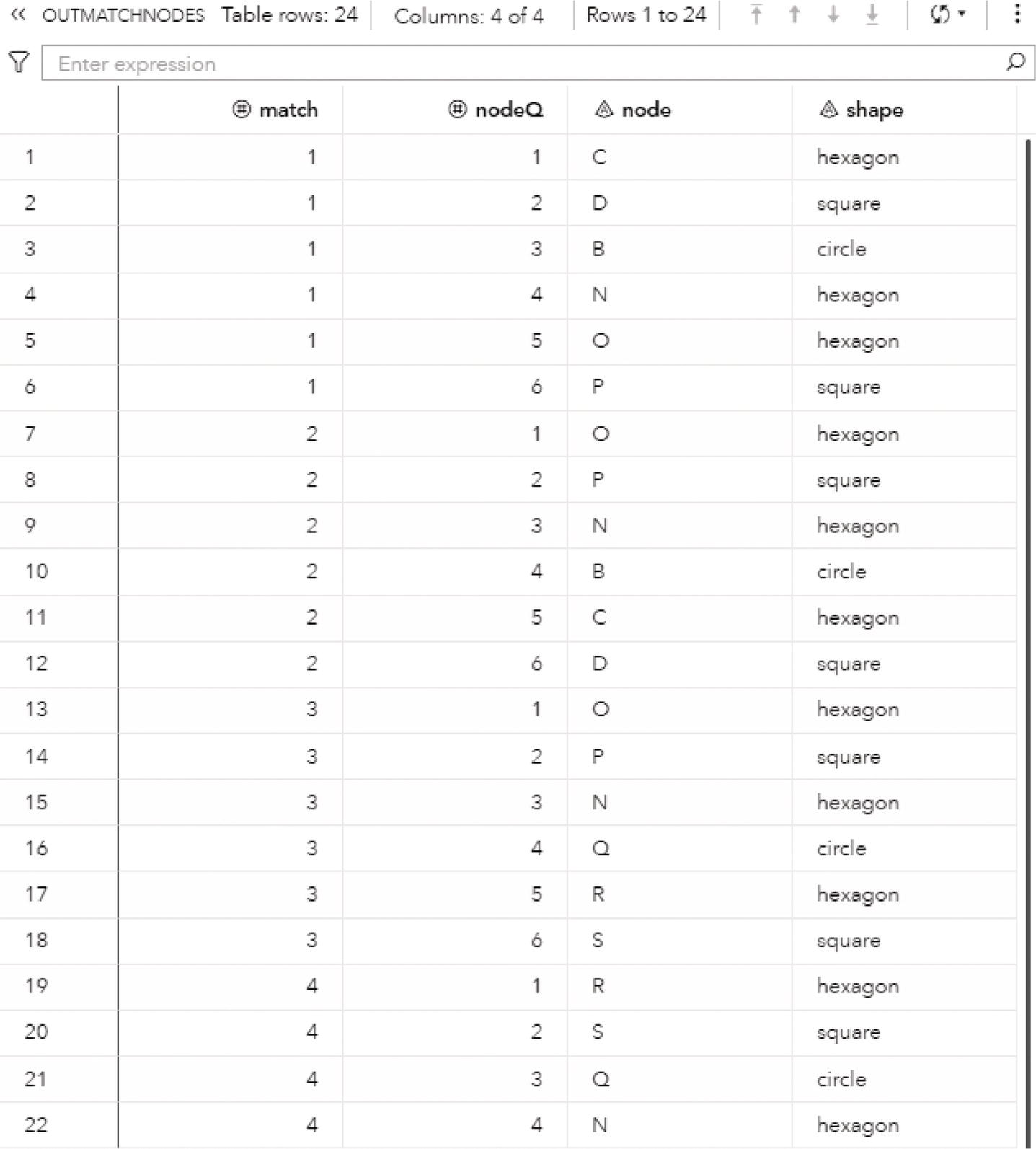

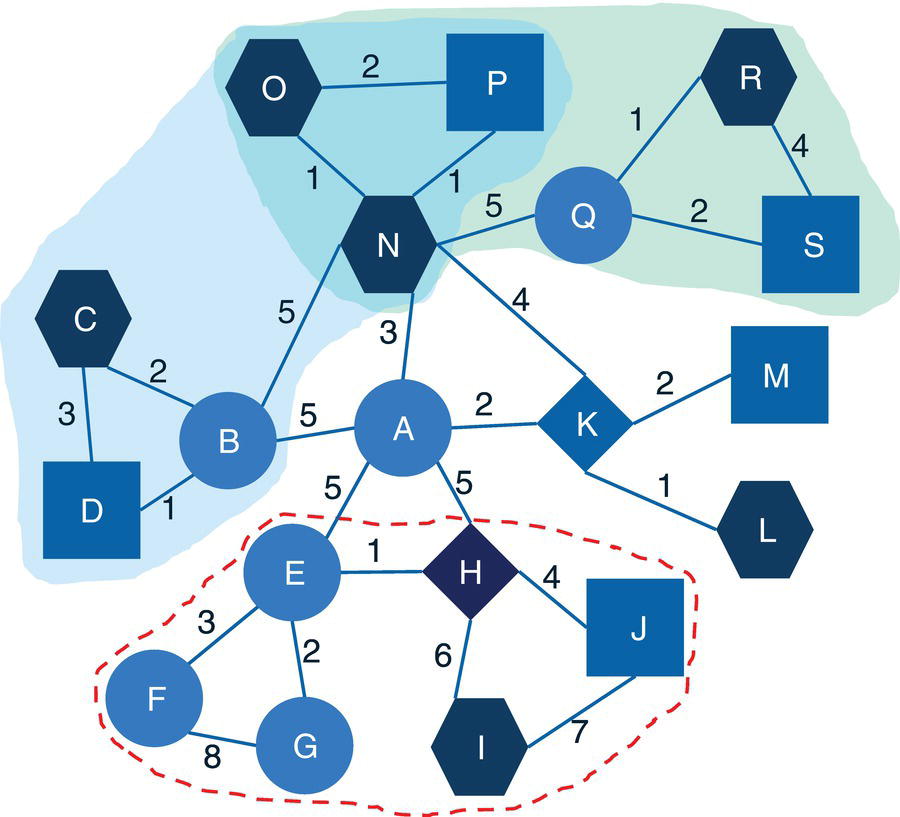

Let's consider the graph presented in Figure 2.30 to demonstrate the community detection algorithm. This graph has 13 nodes and 15 links. Link weights are crucial in community detection and define the recursive partitioning of the network into subgraphs. The links in this network are first considered undirected. Multiple algorithms will be used and, therefore, different communities will be detected.

Figure 2.30 Input graph with undirected weighted links.

The following code creates the input dataset for the community detection algorithm. The links dataset contains the origin and destination nodes plus the weight of the links. Both the Louvain and the label propagation algorithms are used. Later, the same network is used as a directed graph to demonstrate the parallel label propagation algorithm.

data mycas.links;
   input from $ to $ weight @@;
datalines;
A B 10 B C 15 B J 12
A F 2 A E 3 A D 2 C D 1 F E 2
G F 12 G I 20 G H 11 K F 8
D L 18 D E 10 M E 14
;
run;

Now that the input dataset is defined, we can invoke the community detection algorithm using proc network. The following code describes how to define the algorithm and other parameters when searching for communities. In the LINKSVAR statement, the variables from and to receive the origin and the destination nodes, even though the direction is not used in this first run. The variable weight receives the weight of the link. In the COMMUNITY statement, the ALGORITHM option defines the Louvain algorithm. The RESOLUTIONLIST option defines three resolutions to be used during the recursive partitioning. Notice that all three resolutions will be used in parallel, identifying distinct community topologies in a single run. Resolutions 2.5, 1.0, and 0.15 are defined for this search.

proc network
   direction = undirected
   links = mycas.links
   outlinks = mycas.outlinks
   outnodes = mycas.outnodes
   ;
   linksvar
      from = from
      to = to
   ;
   community
      algorithm = louvain
      resolutionlist = 2.5 1.0 0.15
      outlevel = mycas.outcommlevel
      outcommunity = mycas.outcomm
      outoverlap = mycas.outoverlap
      outcommLinks = mycas.outcommlinks
   ;
run;

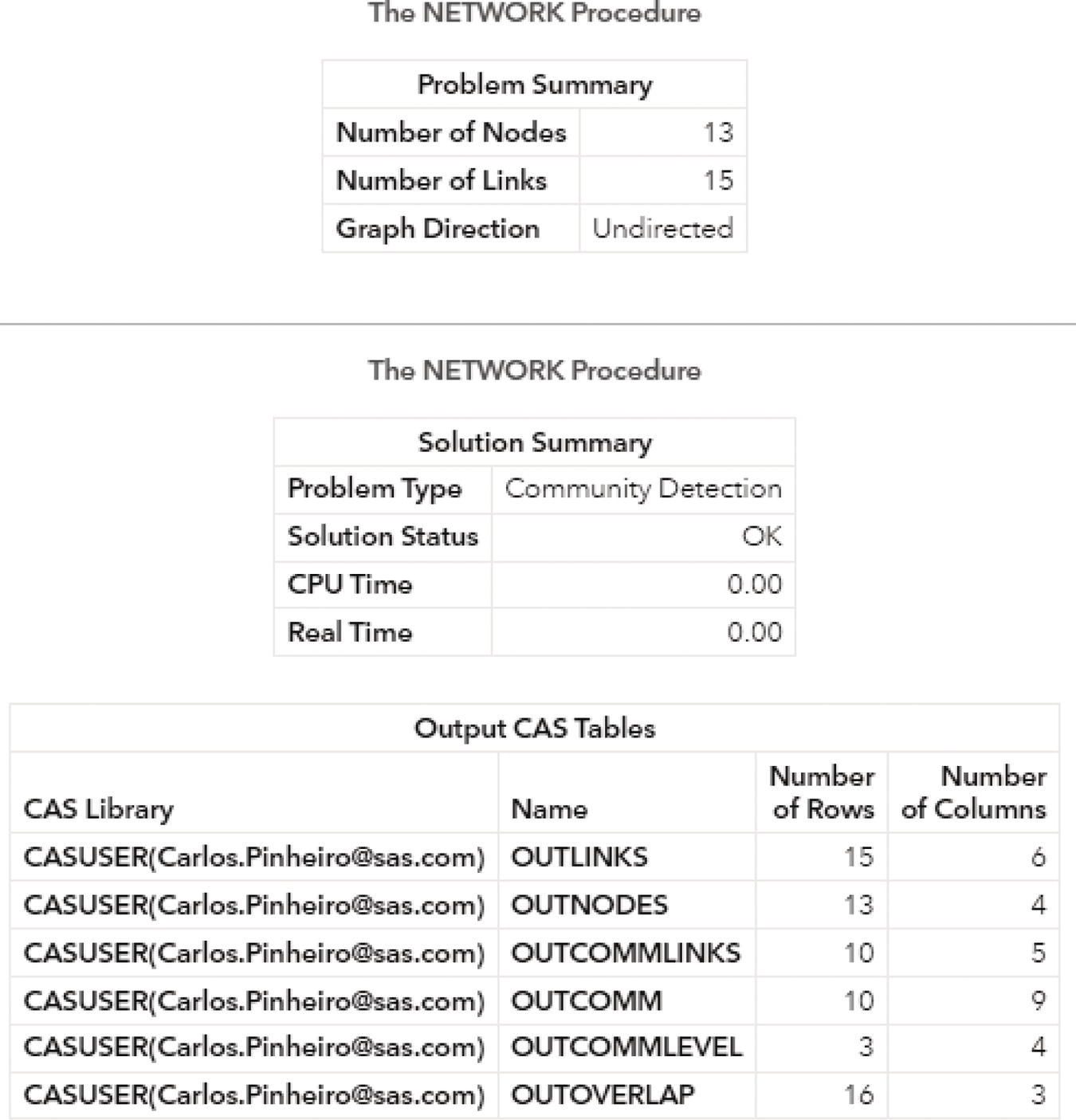

The following pictures show the several outputs for the community detection algorithm. Figure 2.31 reports the summary for the execution process. It provides the size of the network, with 13 nodes and 15 links, and the direction of the graph. The solution summary states that a solution was found and provides the name of all output tables generated during the process.

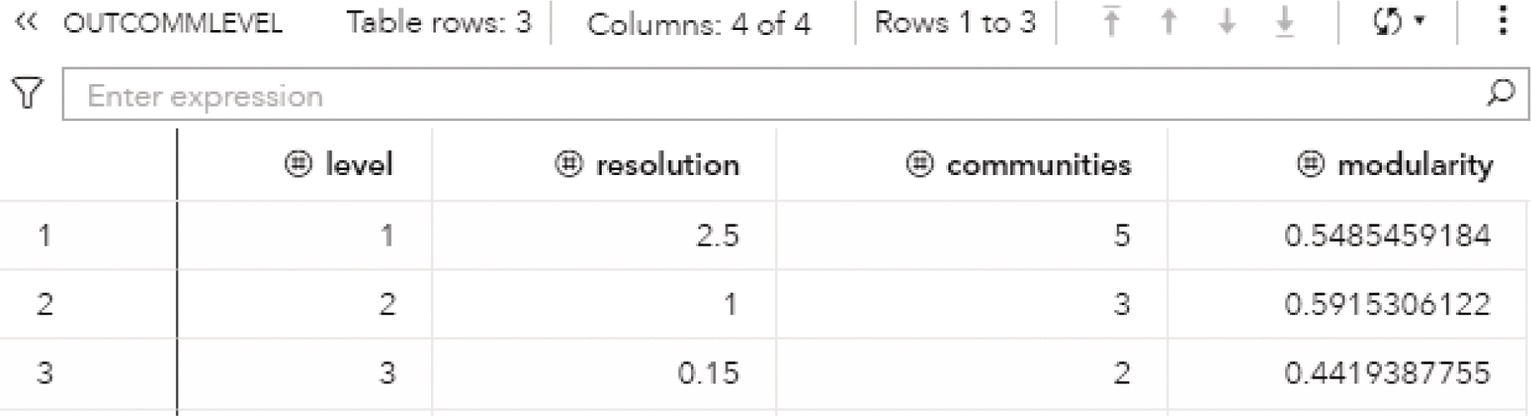

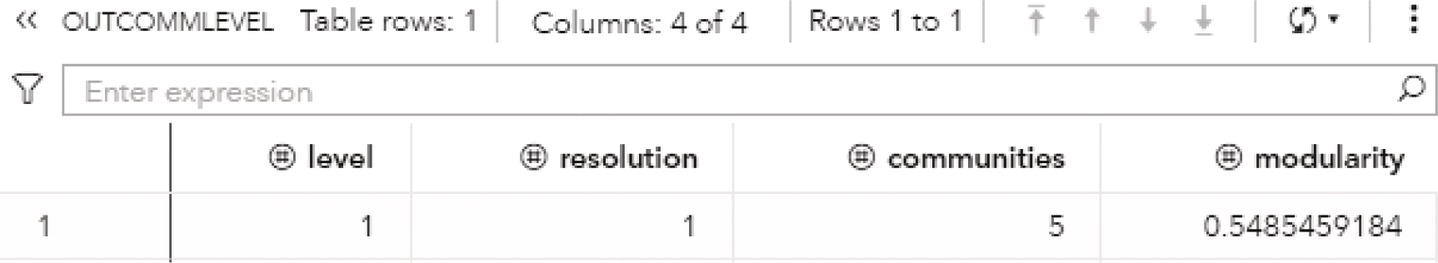

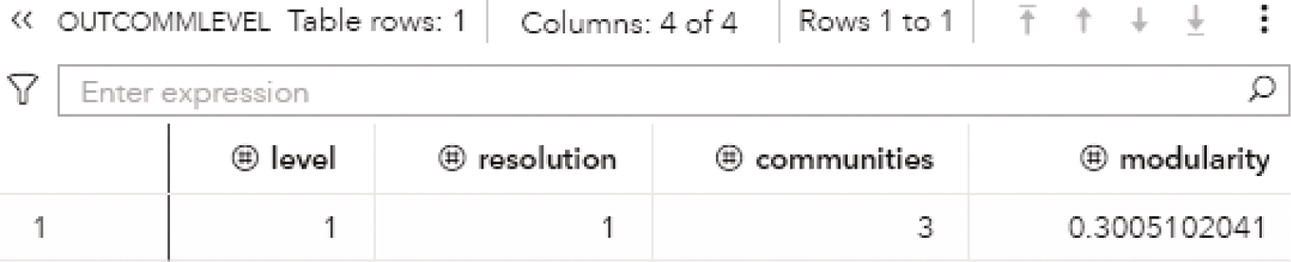

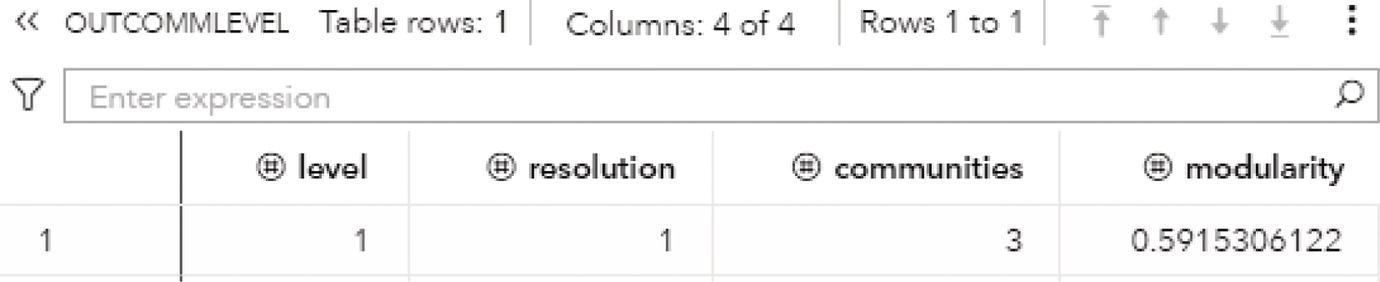

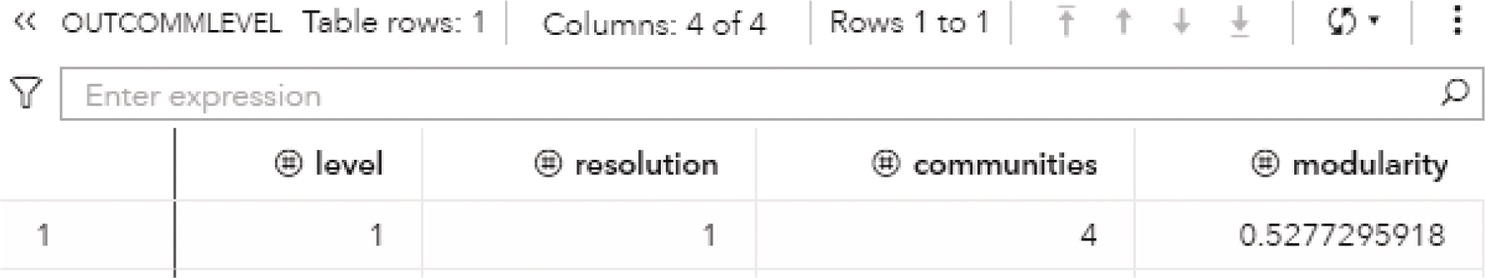

Particularly for the Louvain algorithm, when multiple resolutions are used, the first output table analyzed is the OUTCOMMLEVEL. This output shows the result of each resolution used, the number of communities found, and the modularity achieved. It is presented in Figure 2.32.

As described before, the higher the modularity (that is, the closer to 1), the better the distribution of the nodes across the communities identified. The highest modularity for this execution is 0.5915, achieved at level 2 with resolution 1.0, where three communities were found.

Figure 2.31 Output summary results for the community detection on an undirected graph.

Figure 2.32 Output communities level table for the community detection.

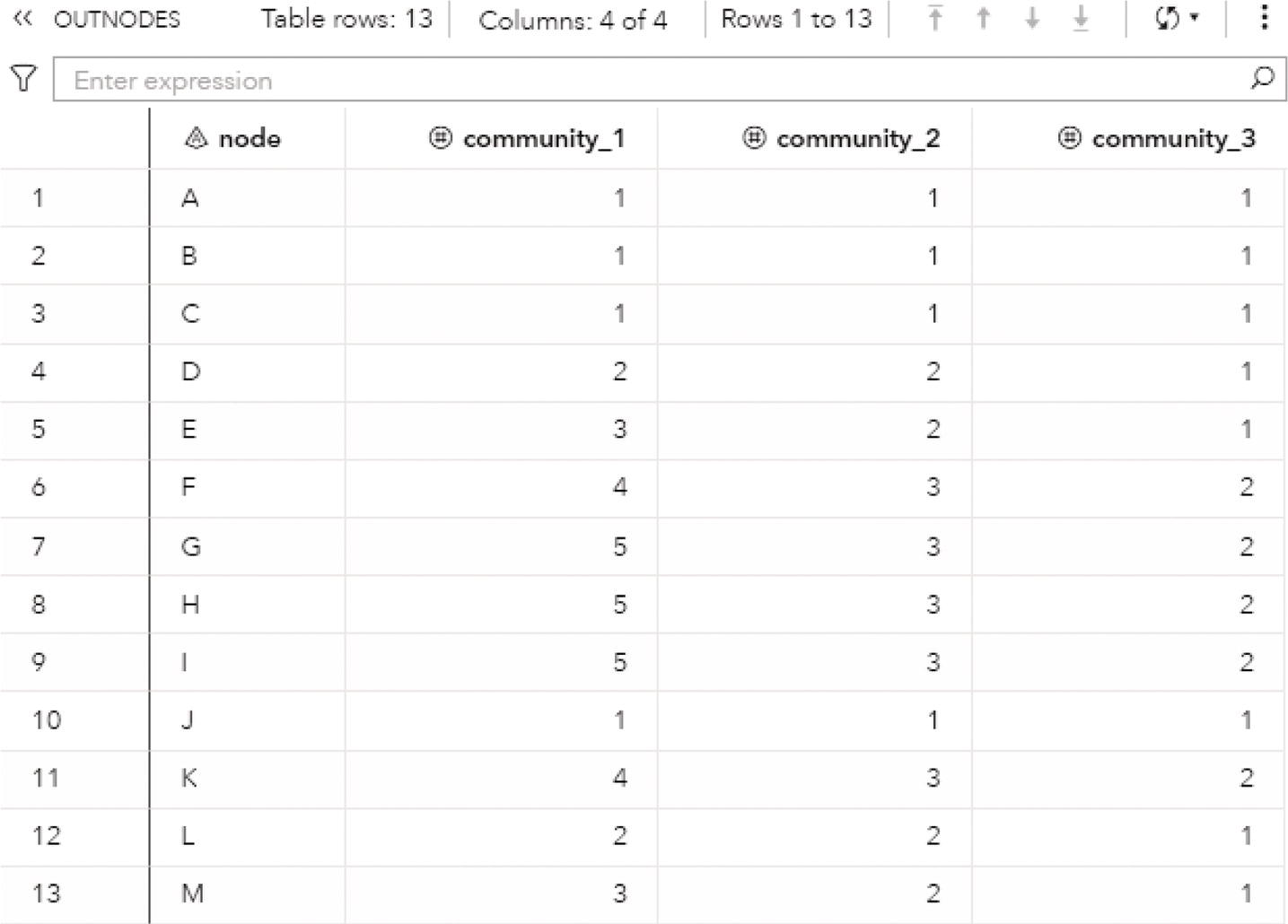

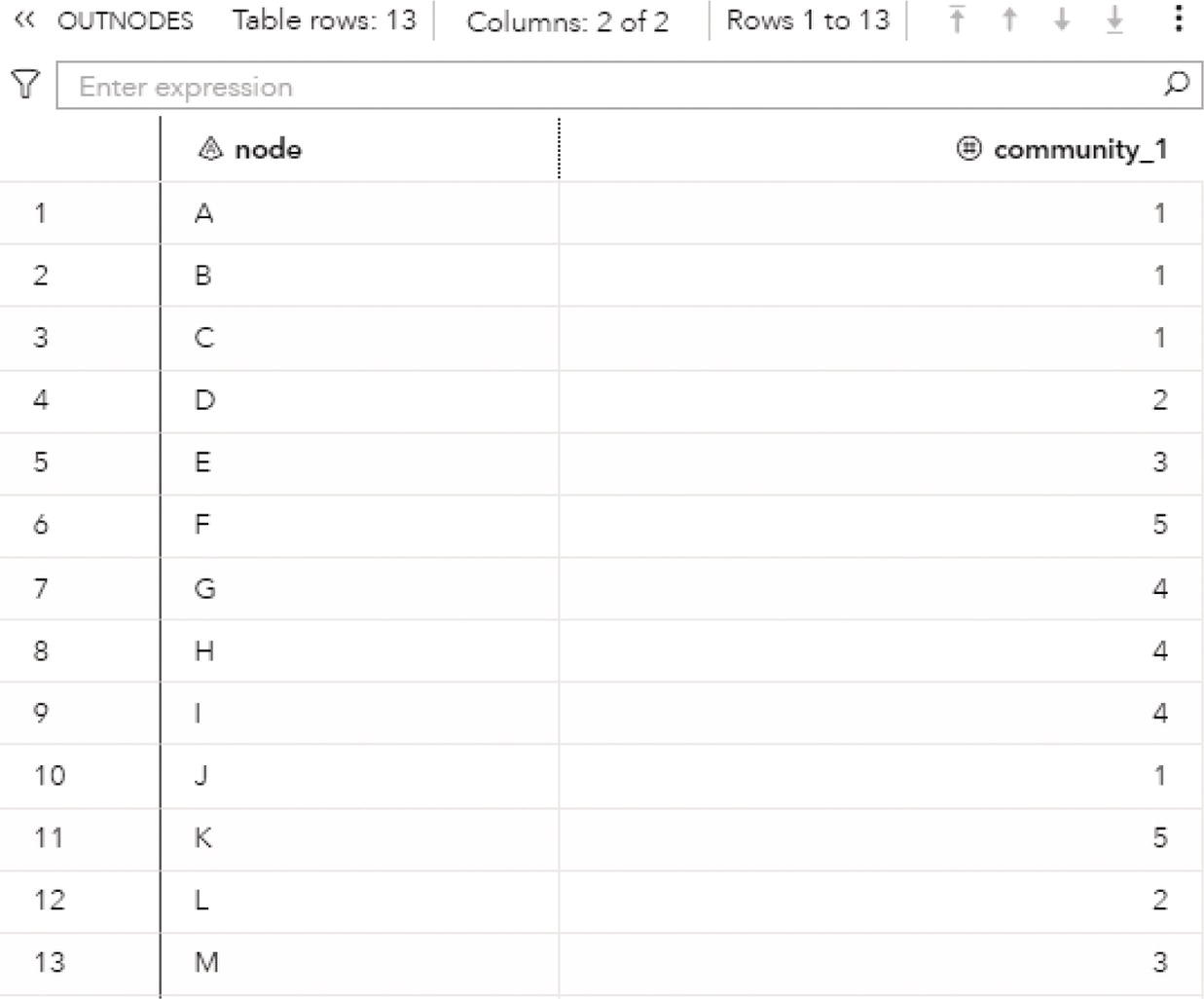

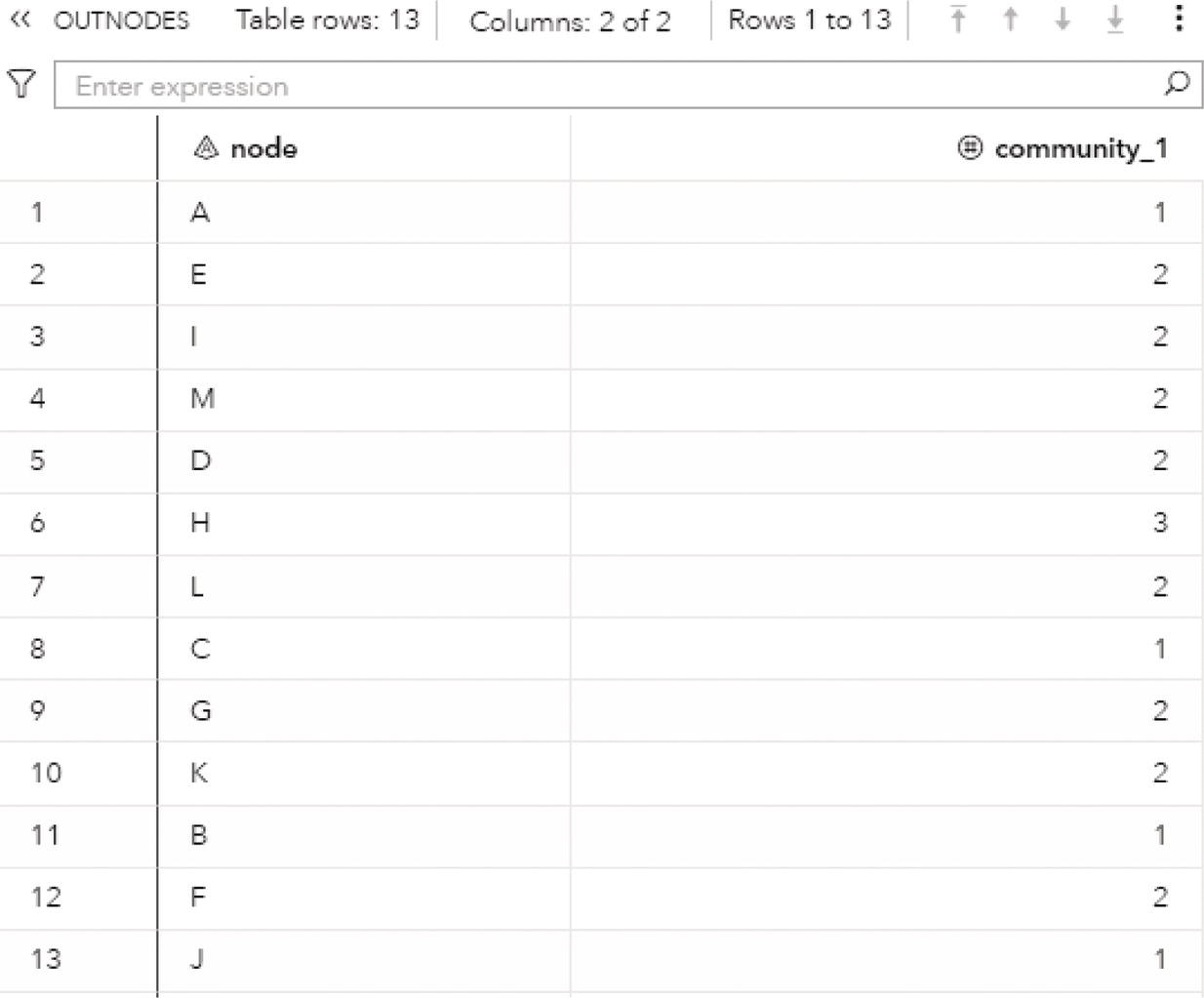

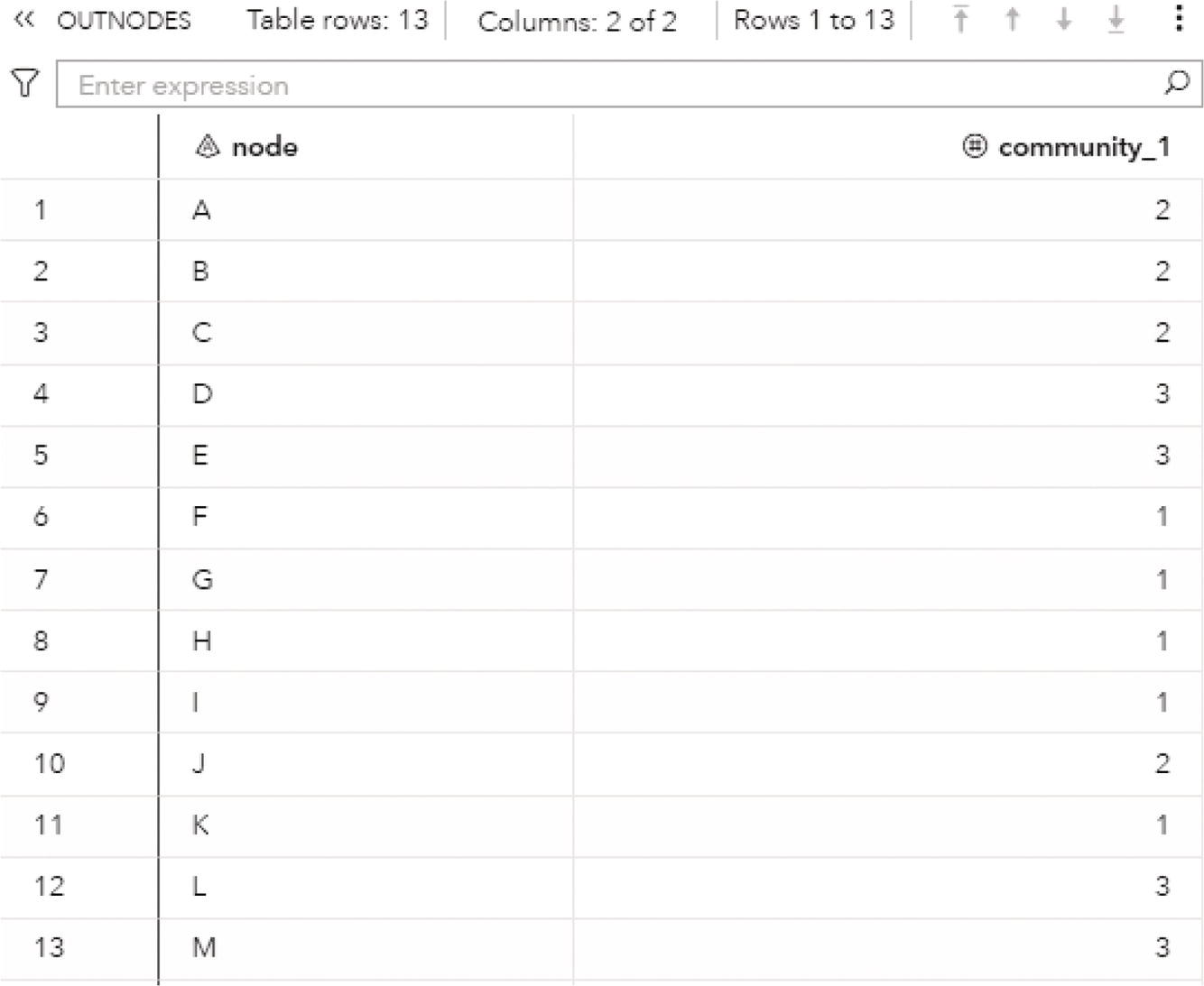

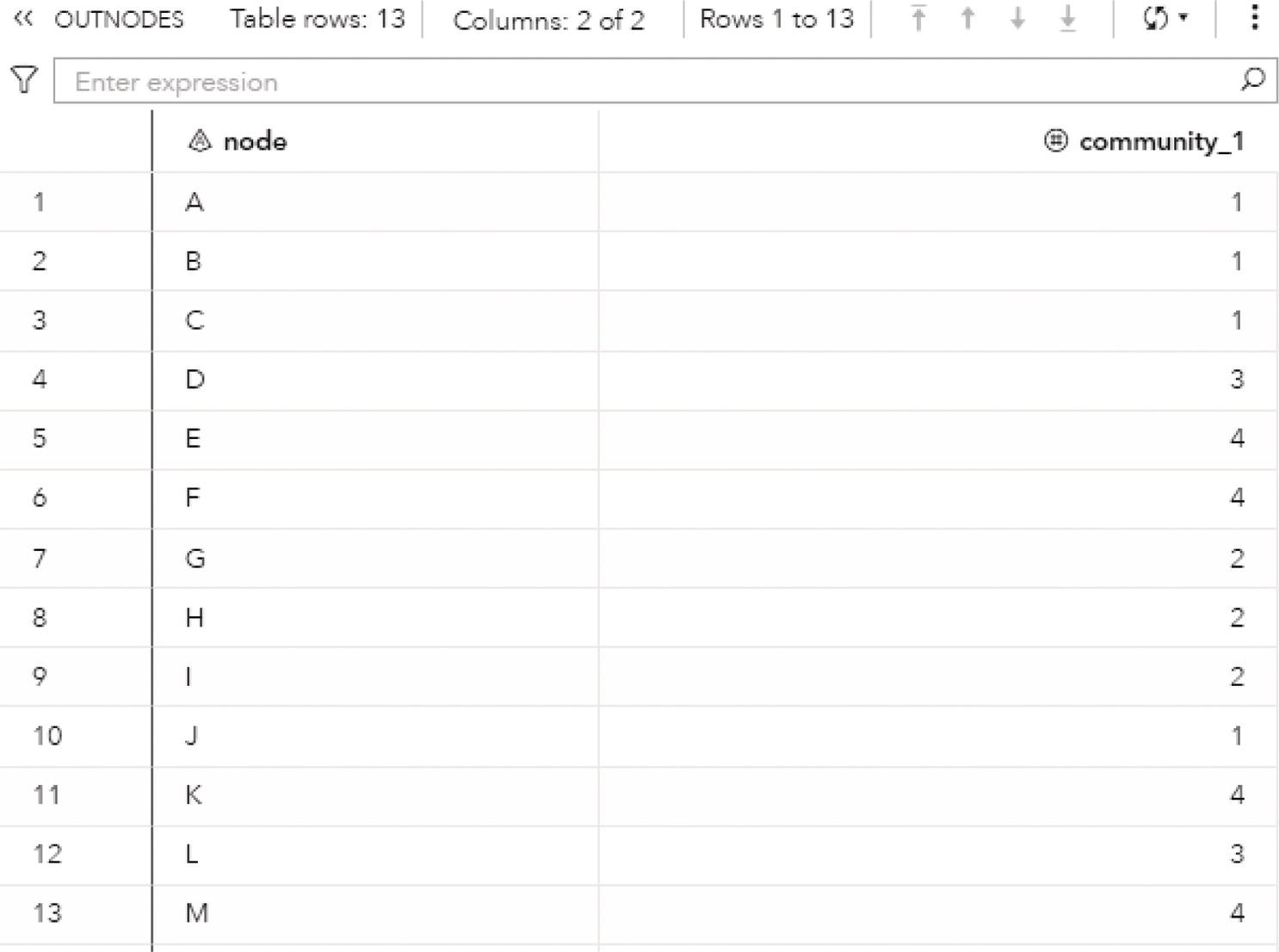

The OUTNODES table presented in Figure 2.33 shows the nodes and the communities they belong to for each resolution used. For example, for the best resolution (level 2), the variable community_2 specifies the community each node is assigned to.

At level 2, nodes A, B, C, and J fall into community 1; nodes D, E, L, and M fall into community 2; and nodes F, G, H, I, and K fall into community 3.
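The modularity reported for level 2 can be verified by hand from the link weights and this node assignment. The Python sketch below applies the standard weighted modularity formula for undirected graphs: for each community, the share of the total link weight that lies inside it, minus the square of its share of the total weighted degree. The function name `modularity` is hypothetical, and the sketch is a check on the reported value, not the procedure's own code.

```python
LINKS = [
    ("A", "B", 10), ("B", "C", 15), ("B", "J", 12),
    ("A", "F", 2), ("A", "E", 3), ("A", "D", 2), ("C", "D", 1), ("F", "E", 2),
    ("G", "F", 12), ("G", "I", 20), ("G", "H", 11), ("K", "F", 8),
    ("D", "L", 18), ("D", "E", 10), ("M", "E", 14),
]

LEVEL2 = {}
for label, members in ((1, "ABCJ"), (2, "DELM"), (3, "FGHIK")):
    for node in members:
        LEVEL2[node] = label


def modularity(links, community):
    """Weighted modularity of a partition of an undirected graph."""
    total = sum(w for _, _, w in links)  # total link weight (140 here)
    intra = {}     # weight of links inside each community
    strength = {}  # summed weighted degree of each community
    for u, v, w in links:
        cu, cv = community[u], community[v]
        strength[cu] = strength.get(cu, 0) + w
        strength[cv] = strength.get(cv, 0) + w
        if cu == cv:
            intra[cu] = intra.get(cu, 0) + w
    return sum(
        intra.get(c, 0) / total - (strength[c] / (2 * total)) ** 2
        for c in strength
    )


print(round(modularity(LINKS, LEVEL2), 4))  # 0.5915
```

The intra-community weights are 37, 42, and 51 out of a total of 140, and the community degree sums are 82, 92, and 106 out of 280, which gives 130/140 − 26424/78400 ≈ 0.5915.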

The OUTLINKS table presented in Figure 2.34 shows all links and the community they belong to for each resolution used. Notice that some links do not belong to any community and do not have a community identifier assigned. These links connect nodes that fall into different communities. For example, nodes A and D are connected in the original network but belong to different communities, 1 and 2. Then, these particular links do not belong to any community and receive a missing value.

The OUTOVERLAP table presented in Figure 2.35 shows the nodes, which community or communities they are linked to, and their intensity in each community. For example, node A belongs to community 1 with intensity 0.88 and to community 2 with intensity 0.11. Remember that the intensity of a node is the ratio of the sum of the weights of its links with nodes in a given community to the sum of the weights of all its links. The intensity of node A in community 1 is much higher than its intensity in community 2, so it makes more sense for node A to belong to community 1.
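The intensity calculation can be sketched in Python. The node-to-community assignment for the lowest resolution appears only in the figures, so the two-community assignment below is an assumption made for illustration (nodes A, B, C, J, D, E, L, and M in community 1, and nodes F, G, H, I, and K in community 2); the function name `community_shares` is likewise hypothetical. The graph is treated as undirected, so all links incident to the node are counted.

```python
LINKS = [
    ("A", "B", 10), ("B", "C", 15), ("B", "J", 12),
    ("A", "F", 2), ("A", "E", 3), ("A", "D", 2), ("C", "D", 1), ("F", "E", 2),
    ("G", "F", 12), ("G", "I", 20), ("G", "H", 11), ("K", "F", 8),
    ("D", "L", 18), ("D", "E", 10), ("M", "E", 14),
]

# ASSUMED two-community assignment, chosen for illustration only.
ASSUMED = {}
for label, members in ((1, "ABCJDELM"), (2, "FGHIK")):
    for node in members:
        ASSUMED[node] = label


def community_shares(node, links, community):
    """Share of a node's total link weight that goes to each community."""
    shares = {}
    total = 0
    for u, v, w in links:
        if node in (u, v):
            other = v if u == node else u
            shares[community[other]] = shares.get(community[other], 0) + w
            total += w
    return {c: s / total for c, s in shares.items()}


for c, share in sorted(community_shares("A", LINKS, ASSUMED).items()):
    print(c, round(share, 3))
```

Node A has links of weight 10, 3, and 2 to nodes B, E, and D, and a link of weight 2 to node F, out of a total weight of 17. Under the assumed assignment, this gives shares of about 0.88 and 0.12, close to the values quoted from the table.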

Figure 2.33 Output nodes table for the community detection.

Figure 2.34 Output links table for the community detection.

The OUTCOMM table presented in Figure 2.36 shows the attributes of the communities: the level, the resolution specified, the community identifier, the number of nodes, the intra links, the inter links, the density, the cut ratio, and the conductance.

Figure 2.35 Output overlap table for the community detection.

Figure 2.36 Output communities' attributes table for the community detection.

The OUTCOMMLINKS table presented in Figure 2.37 shows how the communities are connected, providing the links between the communities and the sum of the link weights.

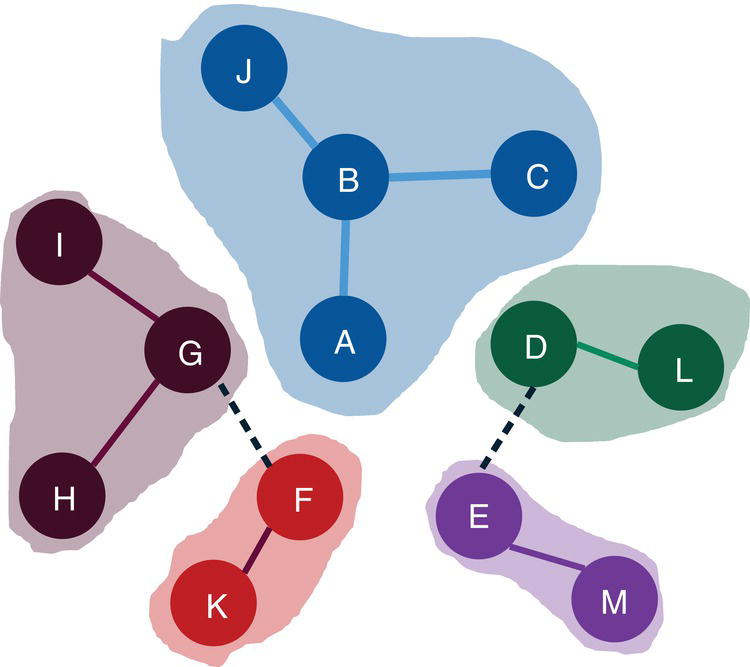

Figure 2.38 shows the best topology for the community detection, highlighting the three communities identified and their nodes.

The Louvain algorithm found three different community topologies based on three distinct resolutions. The best resolution, the one that achieved the highest modularity, identified three communities. Let's see how the other algorithms work and what happens when other options, like the recursive approach, are used.

Figure 2.37 Output communities' links table for the community detection.

Figure 2.38 Communities identified by the Louvain algorithm.

The following code describes how to invoke the label propagation algorithm. A list of resolutions can be defined, but only the first one is used. If the resolution list is not specified, the default value of 1 is used. To use any other value, specify it in the resolution list.

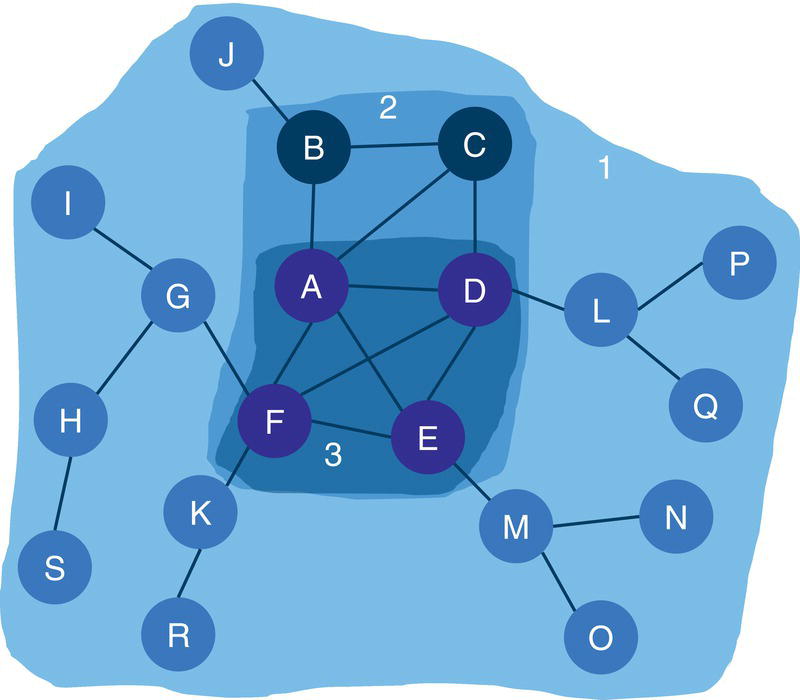

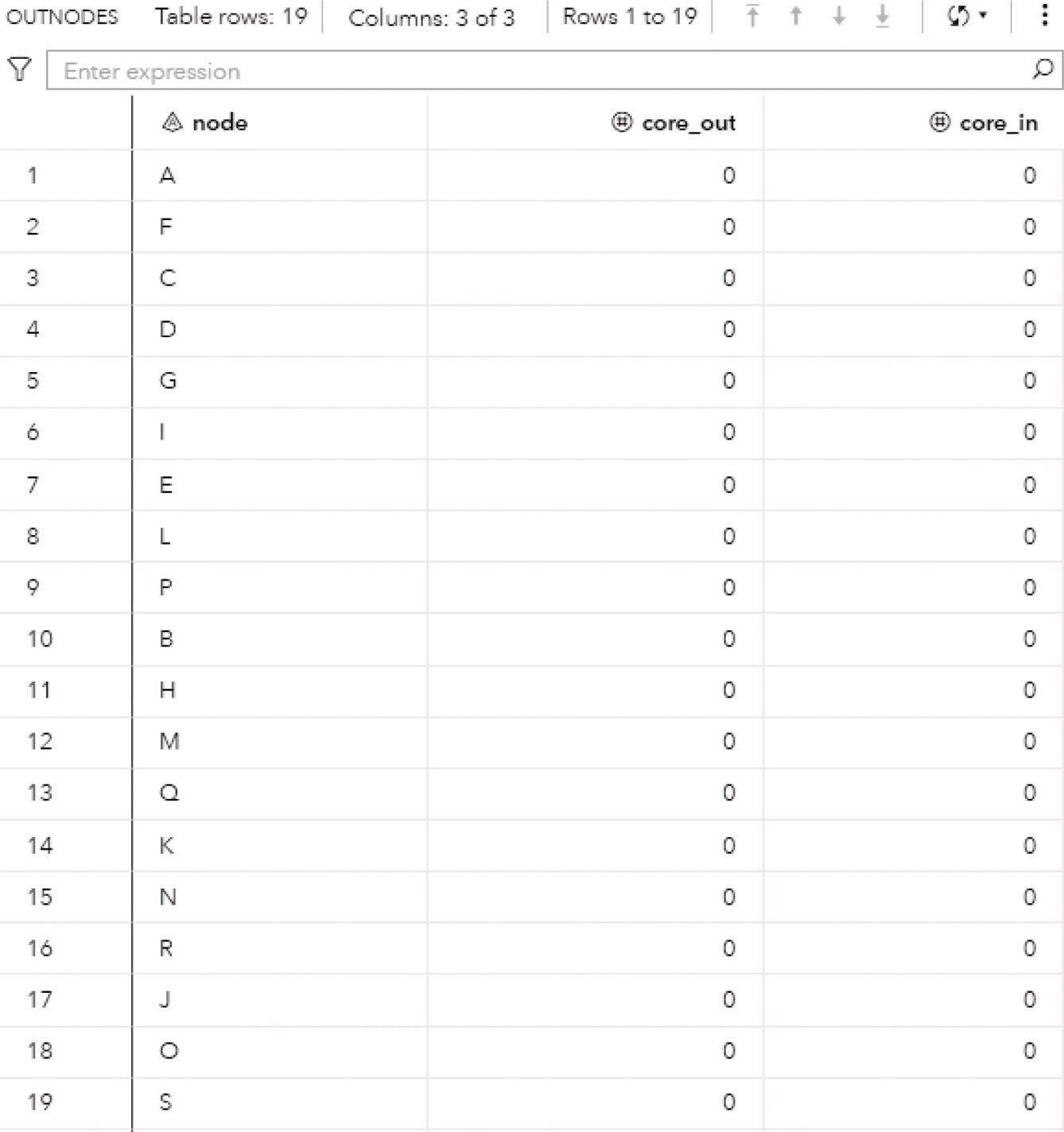

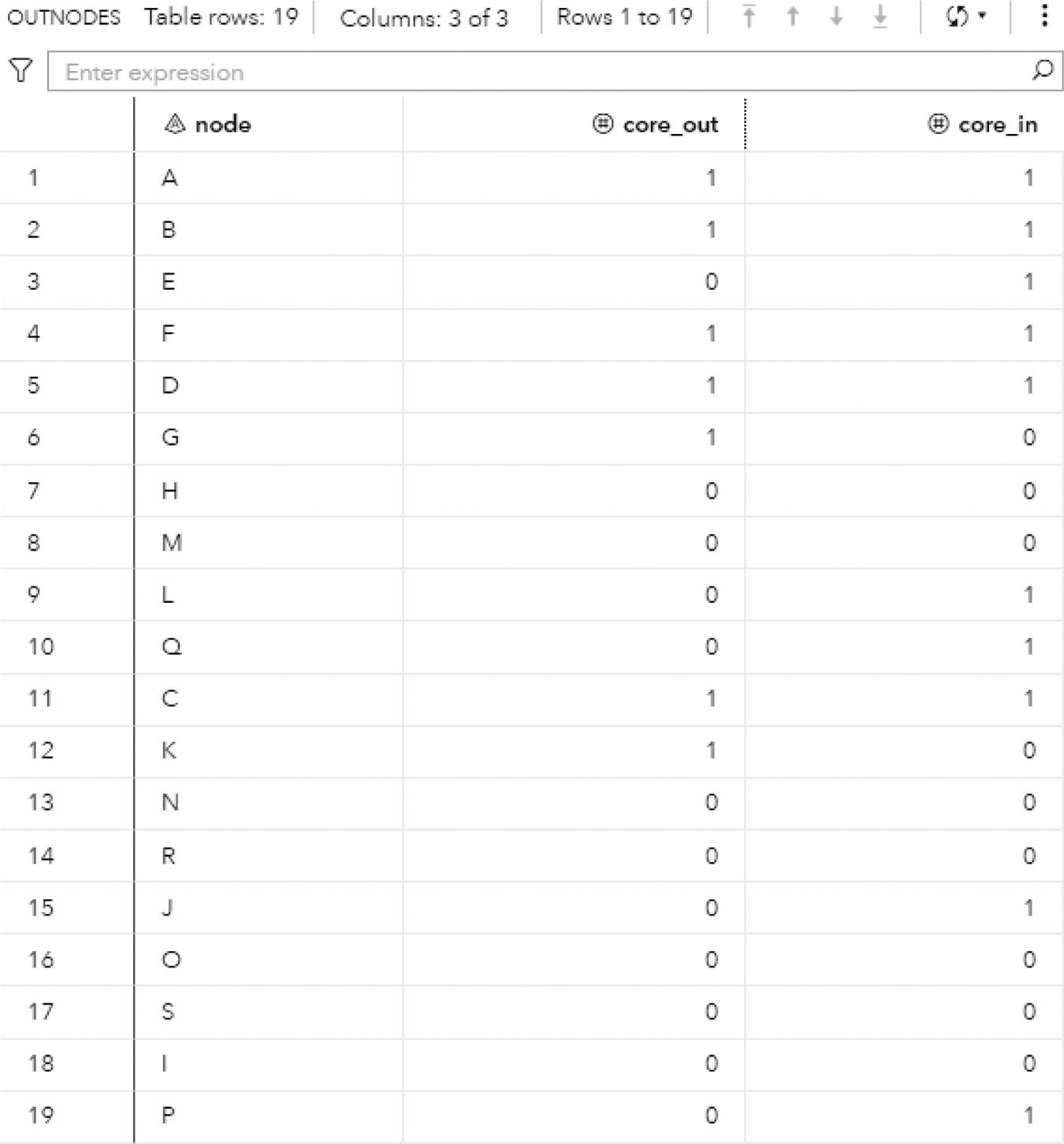

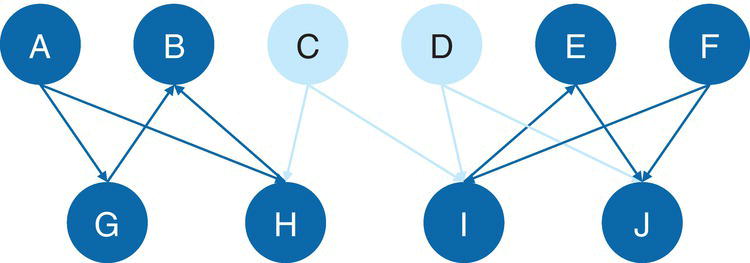

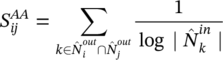

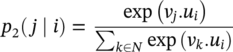

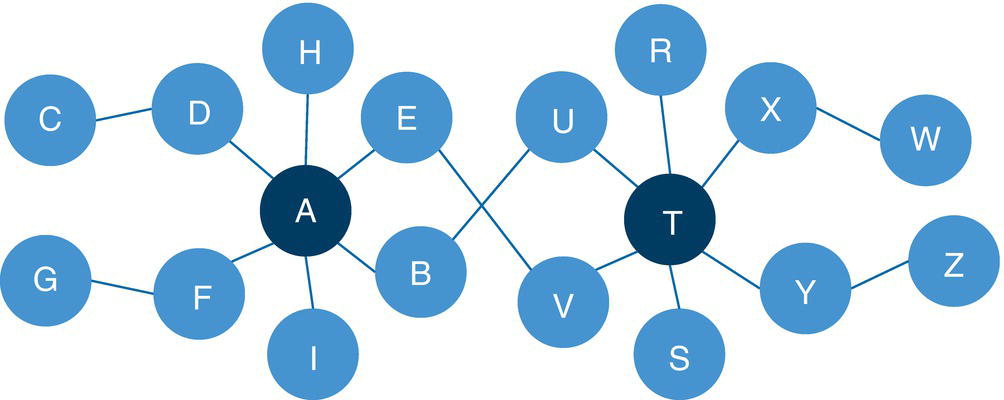

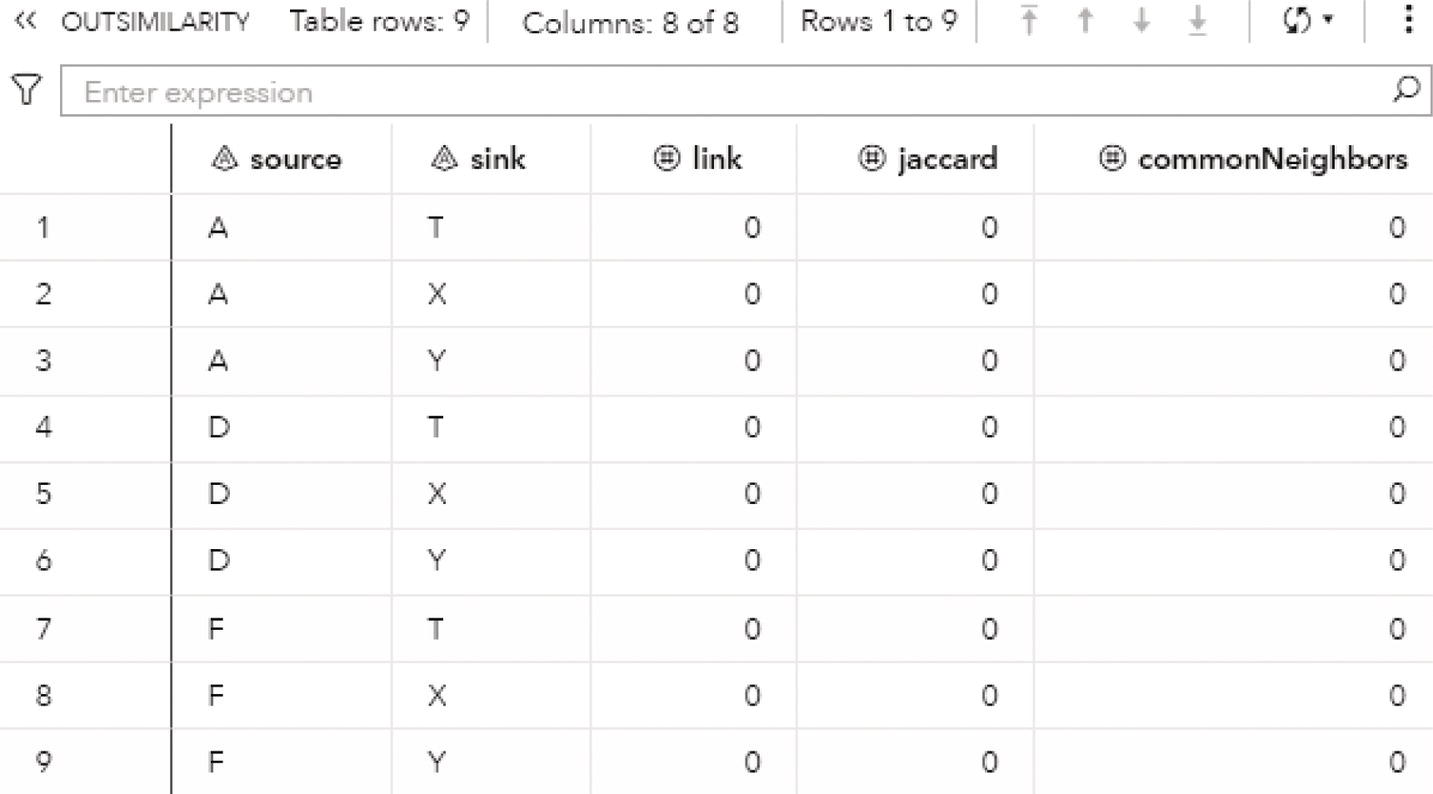

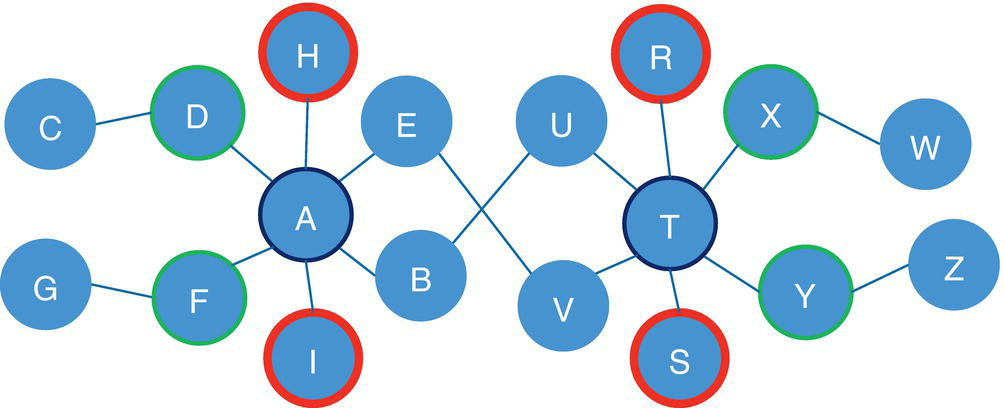

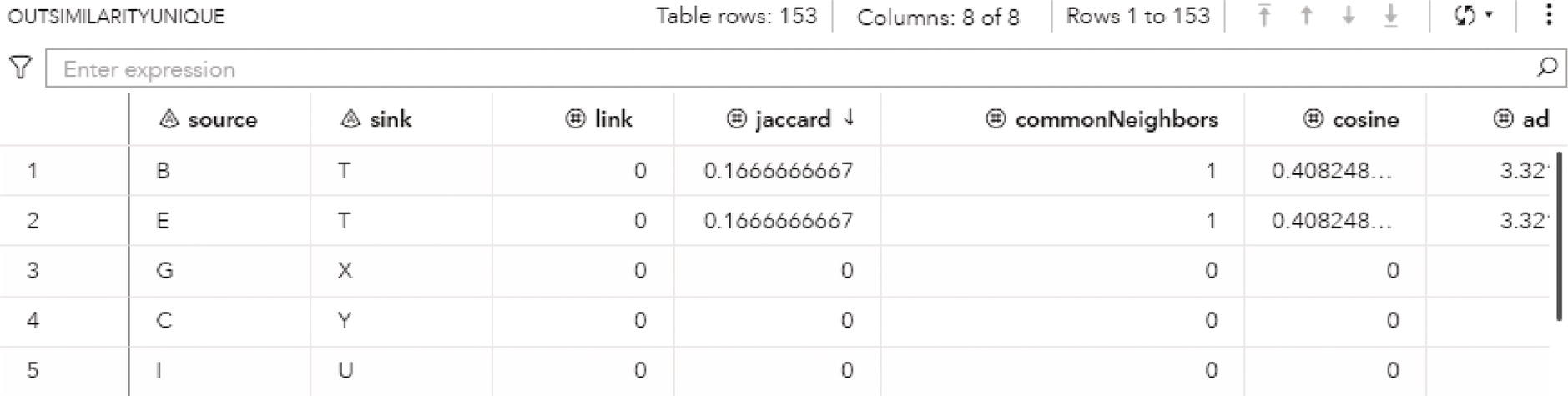

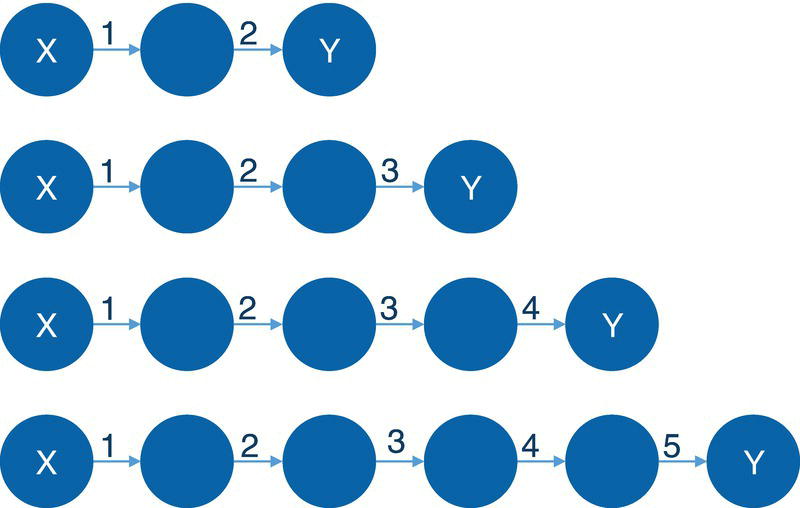

proc network
   direction = undirected
   links = mycas.links
   outlinks = mycas.outlinks
   outnodes = mycas.outnodes
   ;
   linksvar
      from = from
      to = to
   ;
   community
      algorithm = labelprop
      resolutionlist = 1.0
      outlevel = mycas.outcommlevel
      outcommunity = mycas.outcomm
      outoverlap = mycas.outoverlap
      outcommLinks = mycas.outcommlinks
   ;
run;