5

Real‐World Applications in Network Science

5.1 Introduction

This chapter presents use cases in which network analysis and network optimization algorithms were used to solve a particular problem, or at least formed part of an overall solution. The first two cases were created to demonstrate some of the network optimization capabilities, providing practical examples of using network algorithms to understand a particular scenario. The first case applies multiple network algorithms to the public transportation system in Paris, France, to understand its topology, its reachability, and the specific characteristics of the network created by tram, train, metro, and bus lines. On top of that, the case compares two solutions to the Traveling Salesman Problem (TSP) in Paris: one using the multimodal public transportation system and one just walking. A tourist wants to visit a set of places and can do so on foot or by using the available transportation lines. The case offers an overall perspective on the distance and time traveled.

The second case covers the vehicle routing problem (VRP). As in the first case on the TSP, the main idea is to demonstrate some crucial features of this important network optimization algorithm. The city chosen for this demonstration is Asheville, North Carolina, U.S. The city is known to have one of the highest ratios of breweries per capita in the country. The case demonstrates how a brewery can deliver the beer kegs demanded by its customers (bars and restaurants), considering the existing routes, one or multiple vehicles, and different vehicle capacities.

The third case is a real use case developed at the beginning of the COVID‐19 pandemic in early 2020. In this case, network analysis and network optimization algorithms play a crucial part in the overall solution to identify new outbreaks. Network algorithms were used to understand population movements across geographic locations, create correlation factors for virus spread, and ultimately feed supervised machine learning models to predict the likelihood of COVID‐19 outbreaks in specific regions.

Partially similar to the third case, the fourth one describes the overall urban mobility in a metropolitan area and how to use that outcome to improve distinct aspects of society, such as identifying the vectors of disease spread, better planning transportation networks, and strategically defining public surveillance tasks, among many others. Network analysis and network optimization algorithms play an important role in this case study by providing most of the outcomes used in further advanced statistical analysis.

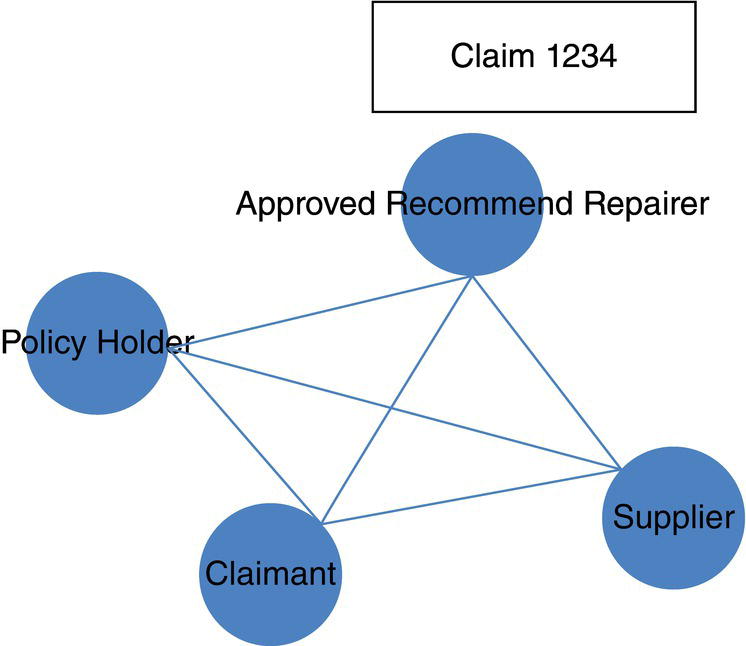

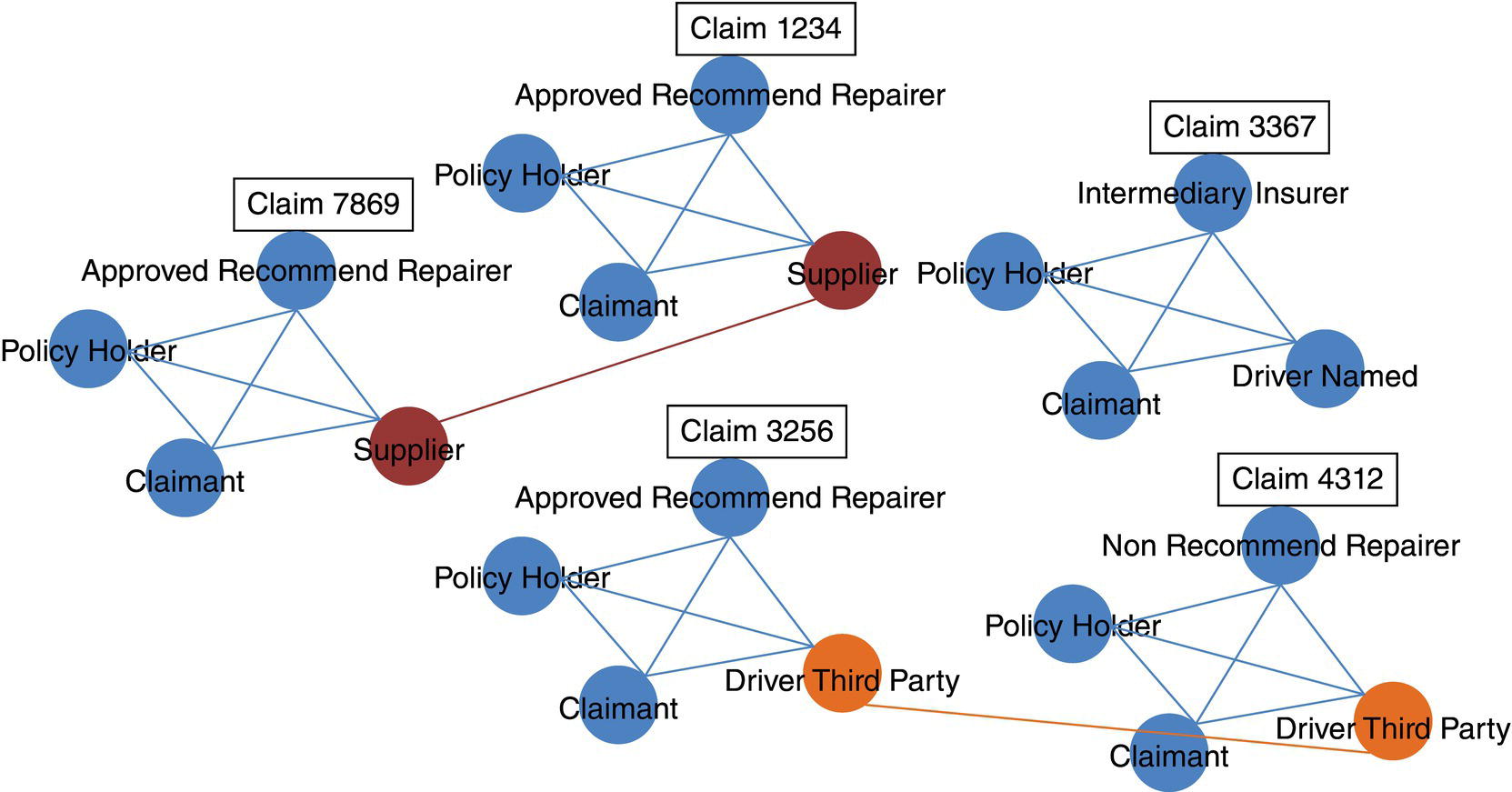

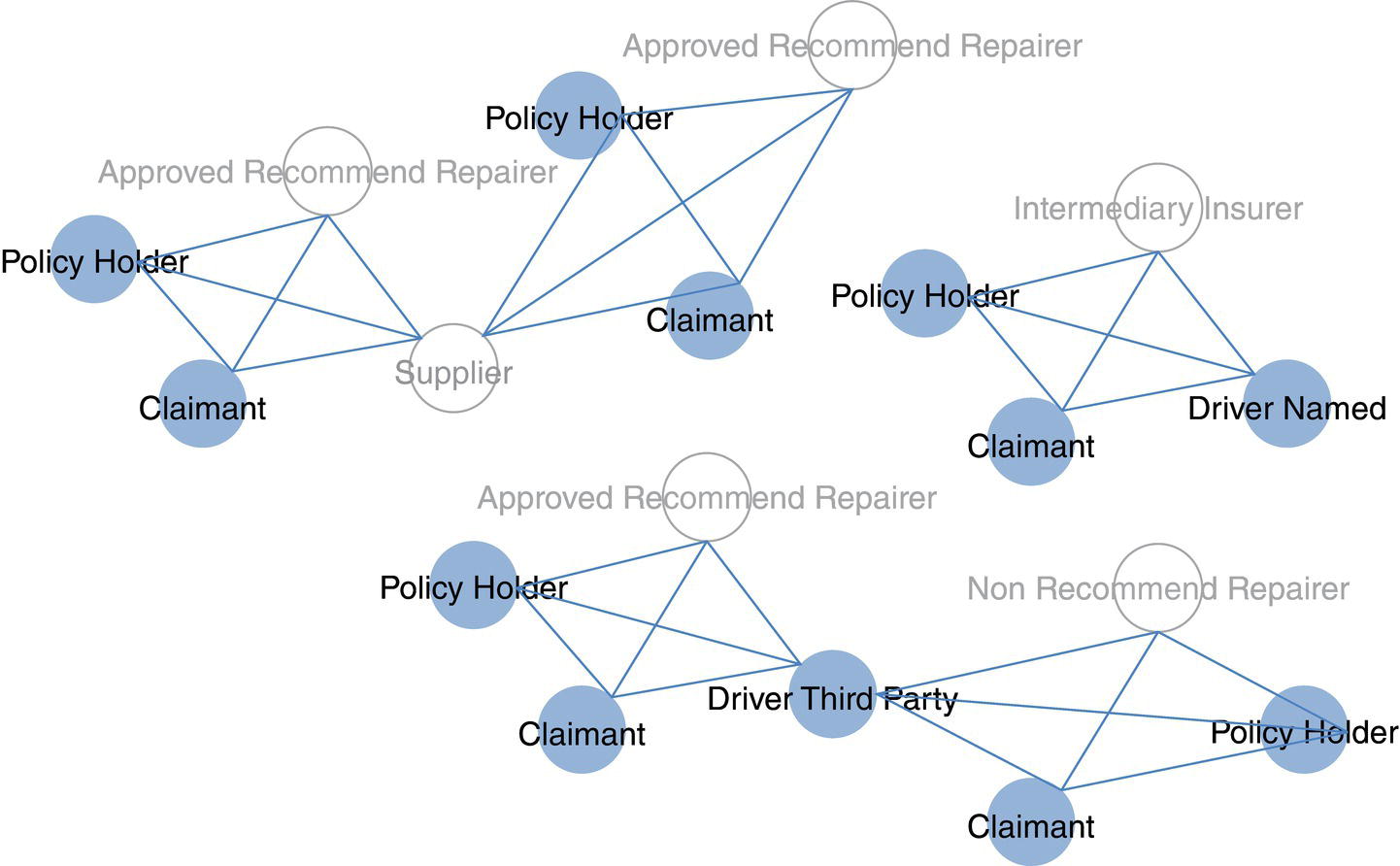

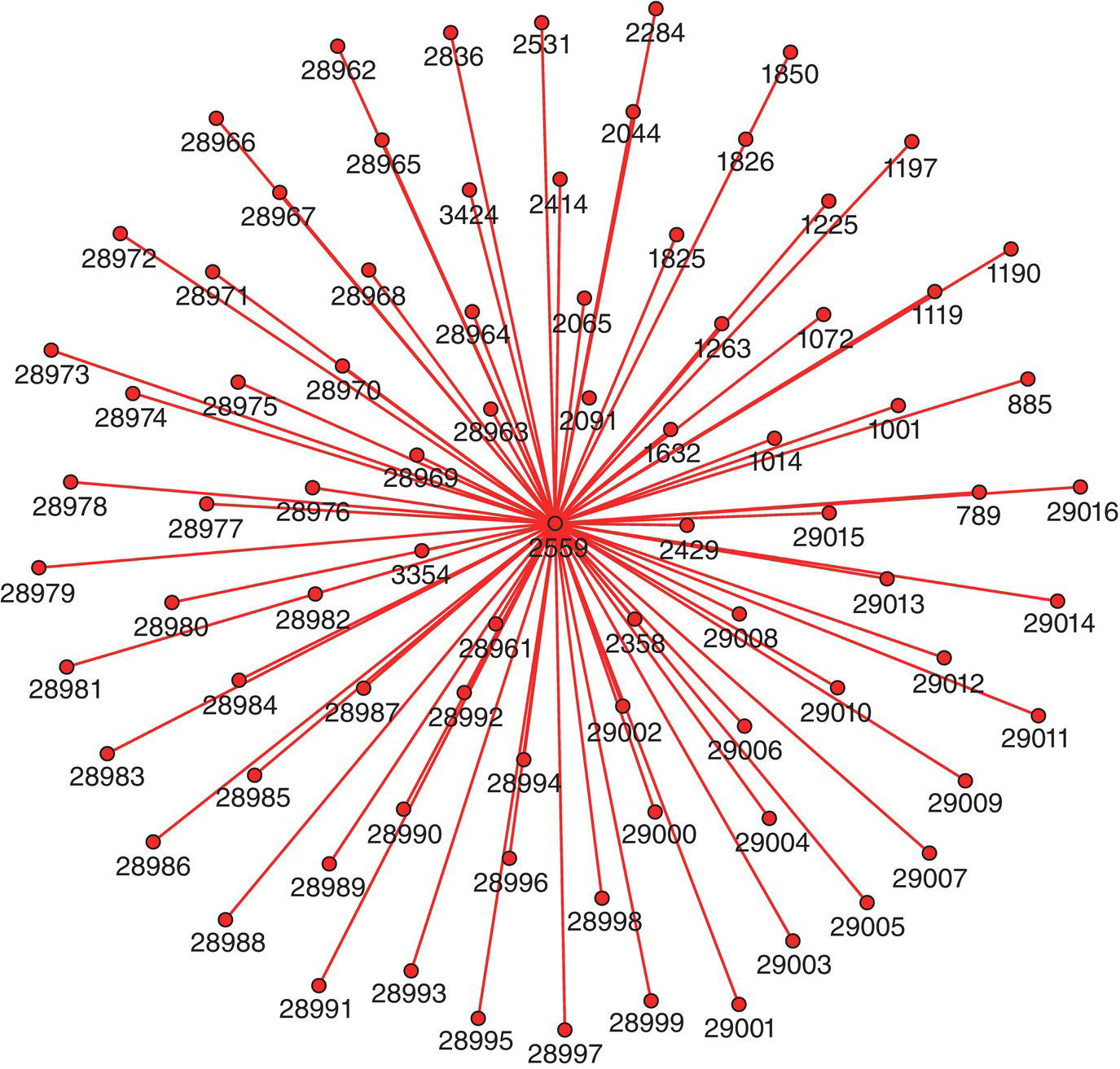

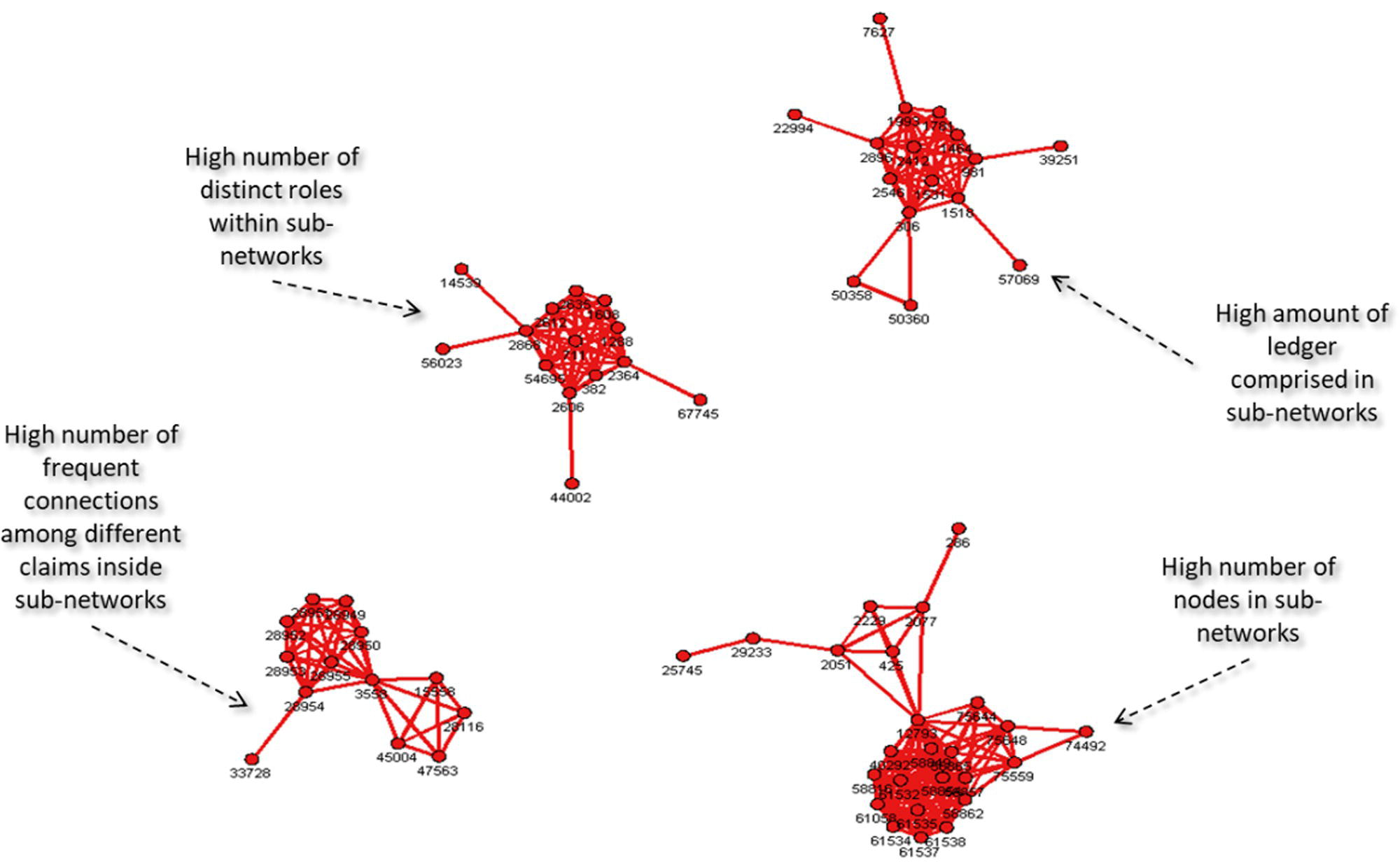

The remaining cases focus more on the network analysis algorithms, looking into multiple types of subgraph detection, centrality metrics calculation, and ultimately correlation analysis between those outcomes and specific business events like churn, default, fraud, or consumption, given the customer's profile in terms of social structures. The fifth case, for instance, focuses on the analysis of exaggeration in auto insurance. Based on the insurance transactions, a network is built associating all actors within the claims, like policy holders, drivers, witnesses, etc., and then connecting all similar actors throughout the claims within a particular period of time to build up the final social structure. On the final network, a series of subgroup algorithms is executed along with a set of network centrality metrics. Finally, an outlier analysis of the different types of subgroups, as well as of the centralities, is performed in order to highlight suspicious activities in the original claims.

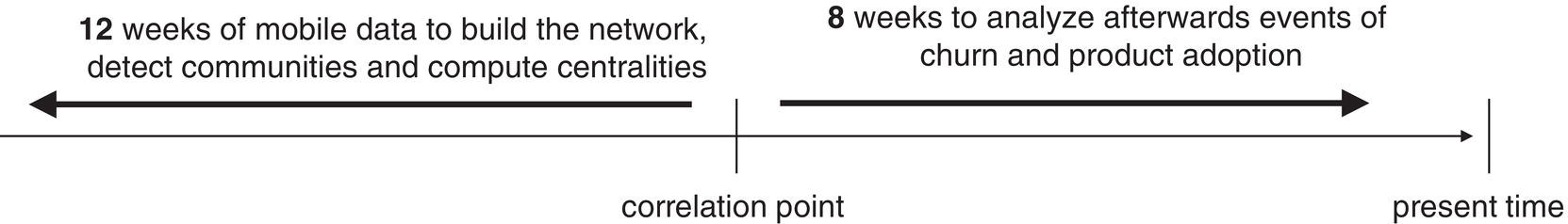

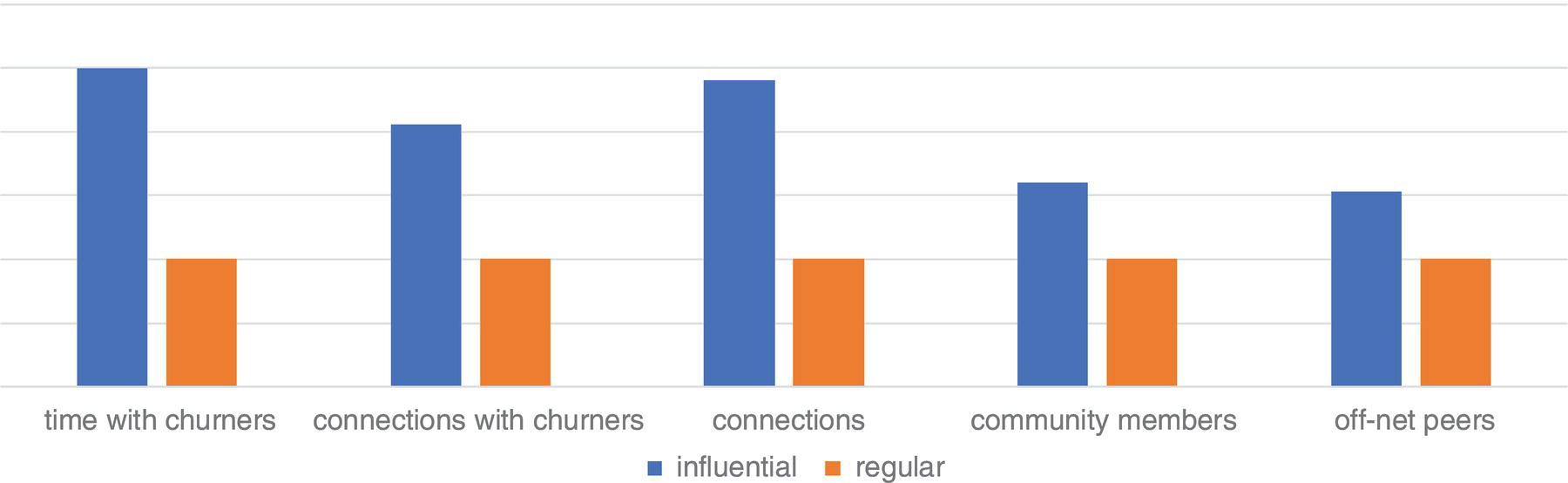

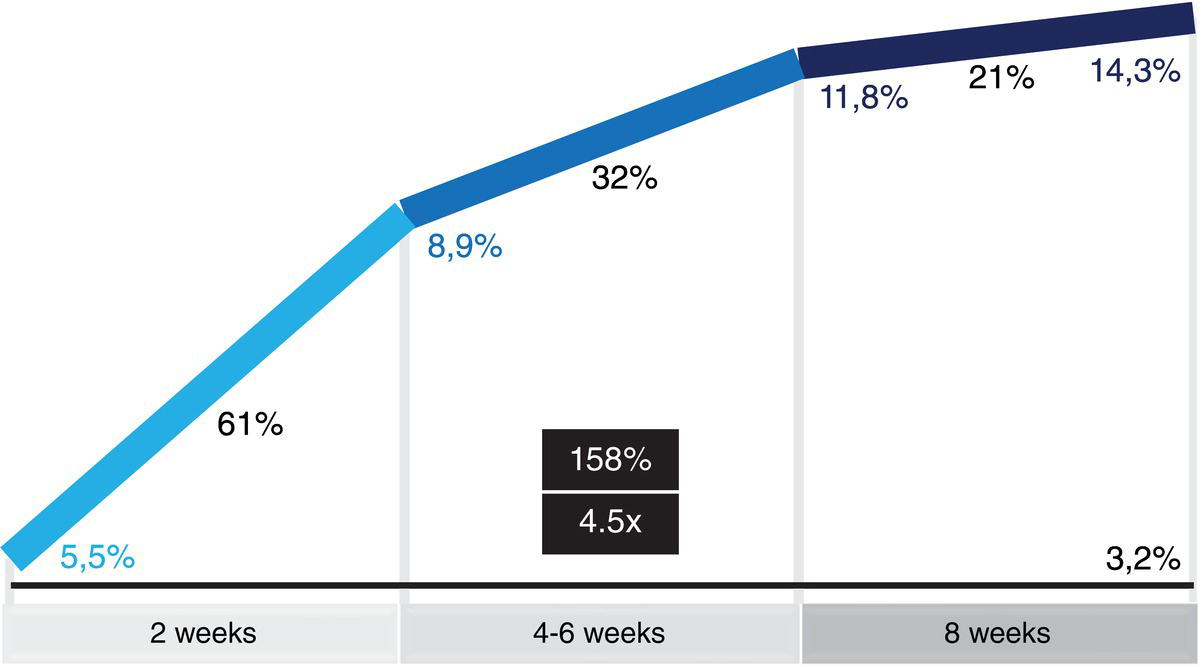

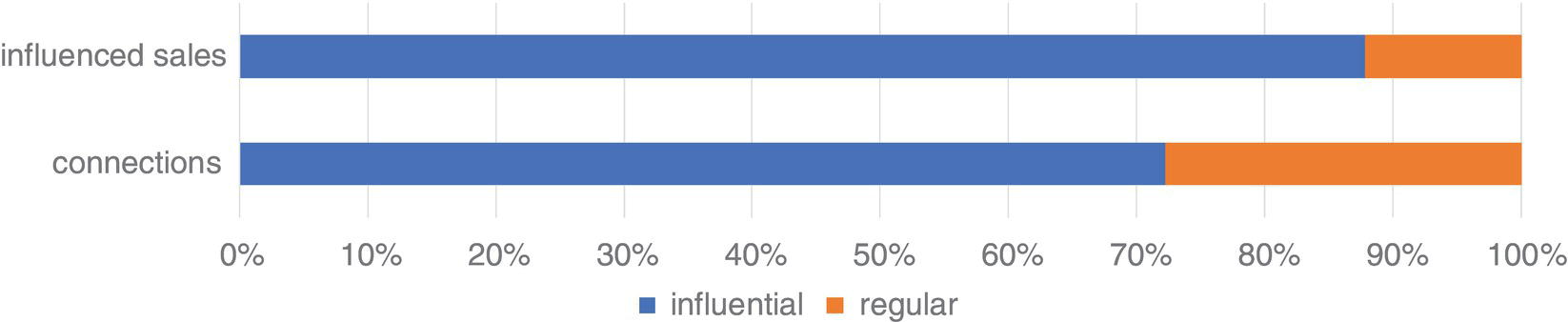

The sixth case describes the influential factors in business events like churn and product adoption within a communications company. Based on a correlation analysis over time between business events and the subscribers' profile in terms of network metrics, an influential factor is calculated to estimate the likelihood of customers affecting their peers on events such as churn and product adoption afterwards.

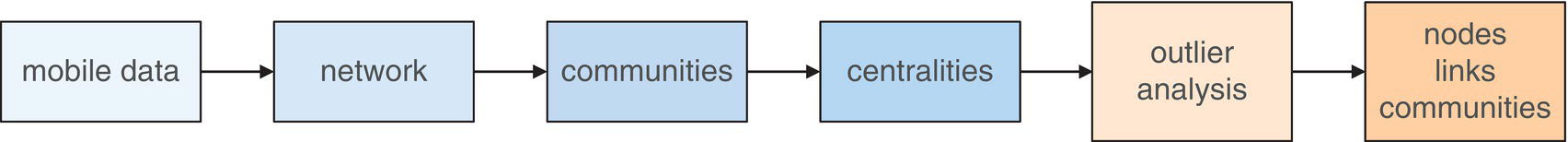

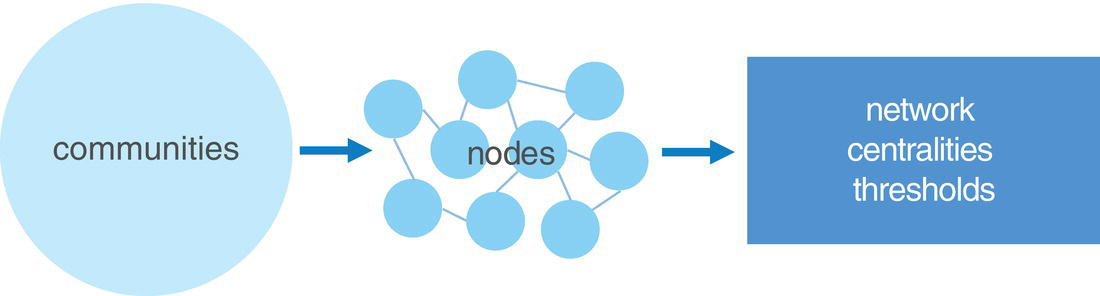

The seventh and last case study describes a methodology to detect fraud in telecommunications based on a combination of multiple analytical approaches. The first step is to create the network based on the mobile data. The second step is to detect the communities within the overall network. Then a set of network centralities is computed for each node considering its respective community. The average value of all these metrics is calculated and an outlier analysis is performed on them, highlighting the unusual communities in terms of relationships and connections between their members.

5.2 An Optimal Tour Considering a Multimodal Transportation System – The Traveling Salesman Problem Example in Paris

A good public transportation system is crucial in developing smart cities, particularly in large metropolitan areas. It helps the population commute efficiently and allows tourists to move around the city easily. Public transportation agencies around the globe open and share their data for application development and research. All these data can be used to explore the network topology in order to optimize tasks in terms of public transportation offerings. Network optimization algorithms can be applied to better understand urban mobility, particularly based on a multimodal public transportation network.

Various algorithms can be used to evaluate and understand a public transportation network. The Minimum Spanning Tree algorithm can reveal the most critical routes to be kept in order to maintain the same level of accessibility in the public transportation network. It basically identifies which train, metro, tram, and bus stations need to be kept in order to maintain the same reachability as the original network, considering all the stations available. The Minimum‐Cost Network Flow algorithm can describe an optimal way to move the population throughout the city, allowing better plans for commuting based on the available routes and their capacities, considering all possible types of transportation. The Path algorithm can reveal all possible routes between any pair of locations. This is particularly important in a network with a multimodal transportation system. It gives the transportation authorities a set of possible alternate routes in case of unexpected events. The Shortest Path algorithm can reveal an optimal route between any two locations in the city, allowing security agencies to establish faster emergency routes in case of special situations. The Transitive Closure algorithm identifies which pairs of locations are joined by a particular route, helping public transportation agencies to account for the reachability in the city.

A few algorithms are used to describe the overall topology of the network created by the public transportation system in Paris. However, the algorithm emphasized here is the TSP. It searches for the minimum‐cost tour within the network. In our case, the minimum cost is based on the distance traveled, considering a set of locations to be visited and all types of transportation available in the network. In particular, the cost is the walking distance, which means we try to minimize the distance walked in order to visit all the places we want.

Open public transportation data allows both companies and individuals to create applications that can help residents, tourists, and even government agencies in planning and deploying the best possible public services. In this case, we are going to use the open data provided by two transportation agencies in Paris: RATP (Régie Autonome des Transports Parisiens) and SNCF (Société Nationale des Chemins de fer Français).

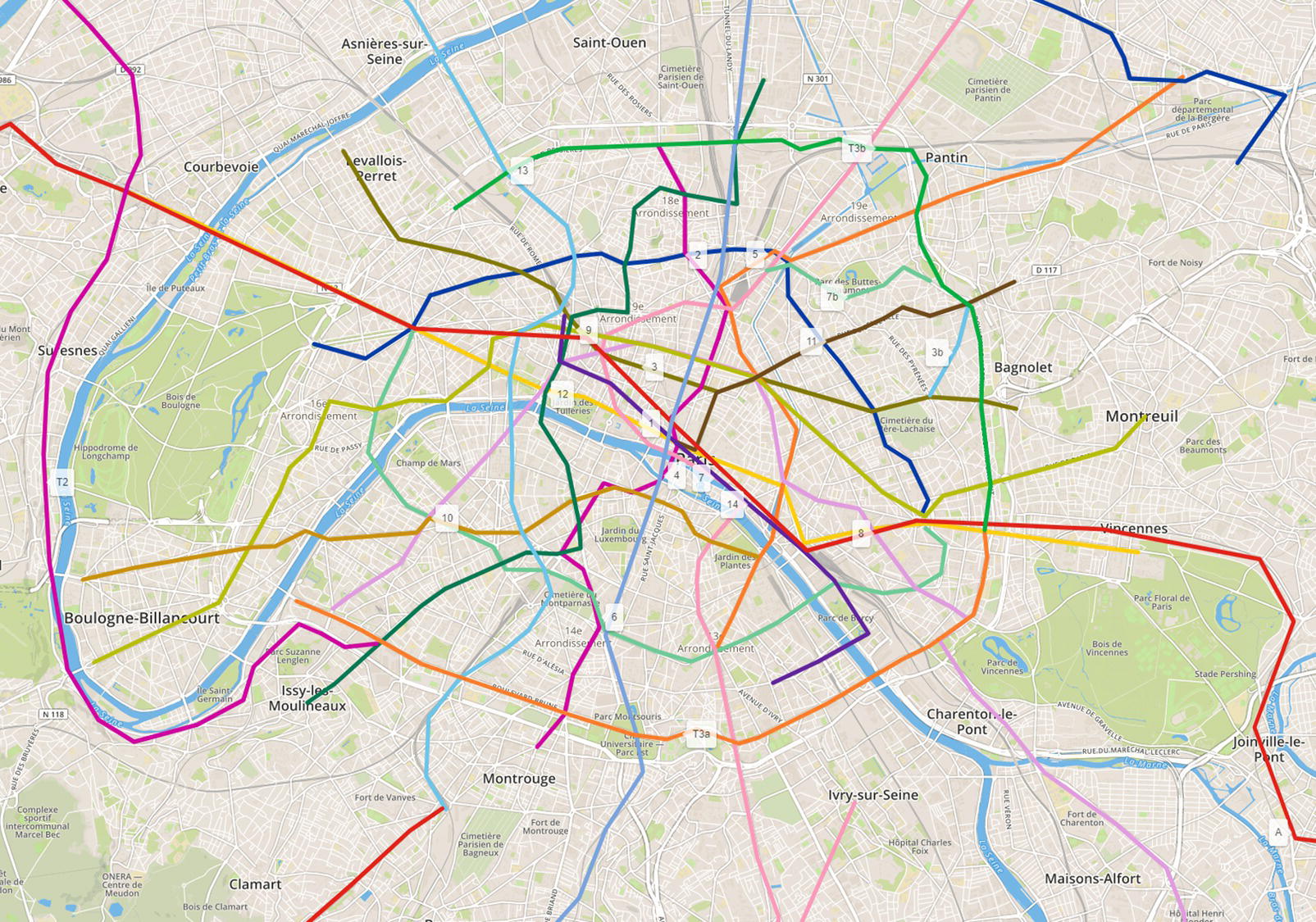

To represent the public transportation system as a graph, with stations as nodes and the multiple types of transport as links between the nodes, the first step is to evaluate and collect the appropriate open data provided by these agencies. This particular data contains information about 18 metro lines, 8 tram lines, and 2 major train lines. It comprises information about all lines, stations, timetables, and coordinates, among many others. This data was used to create the transportation network, identifying all possible stops and the sequence of steps performed when traveling throughout the city on the public transportation system.

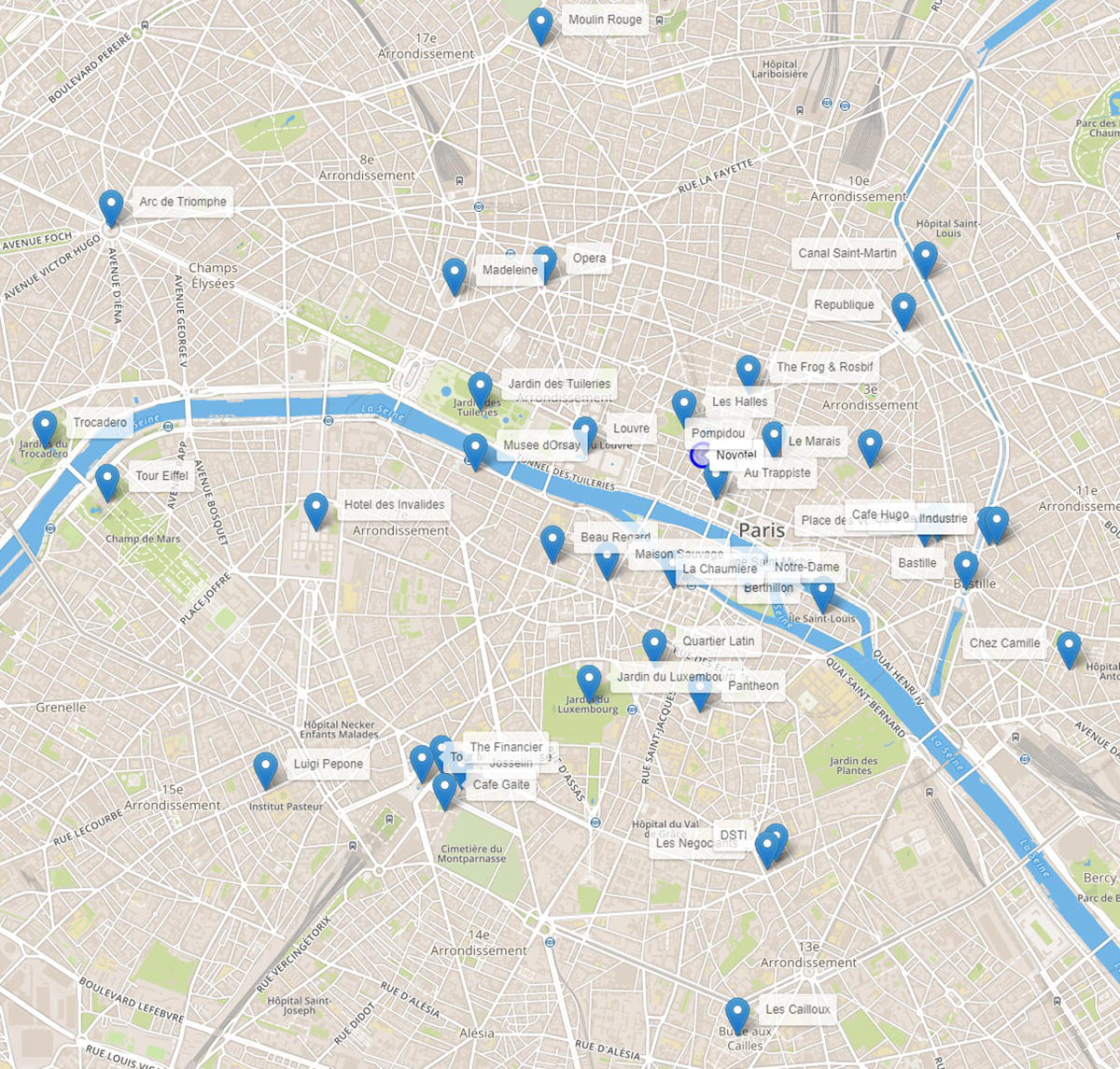

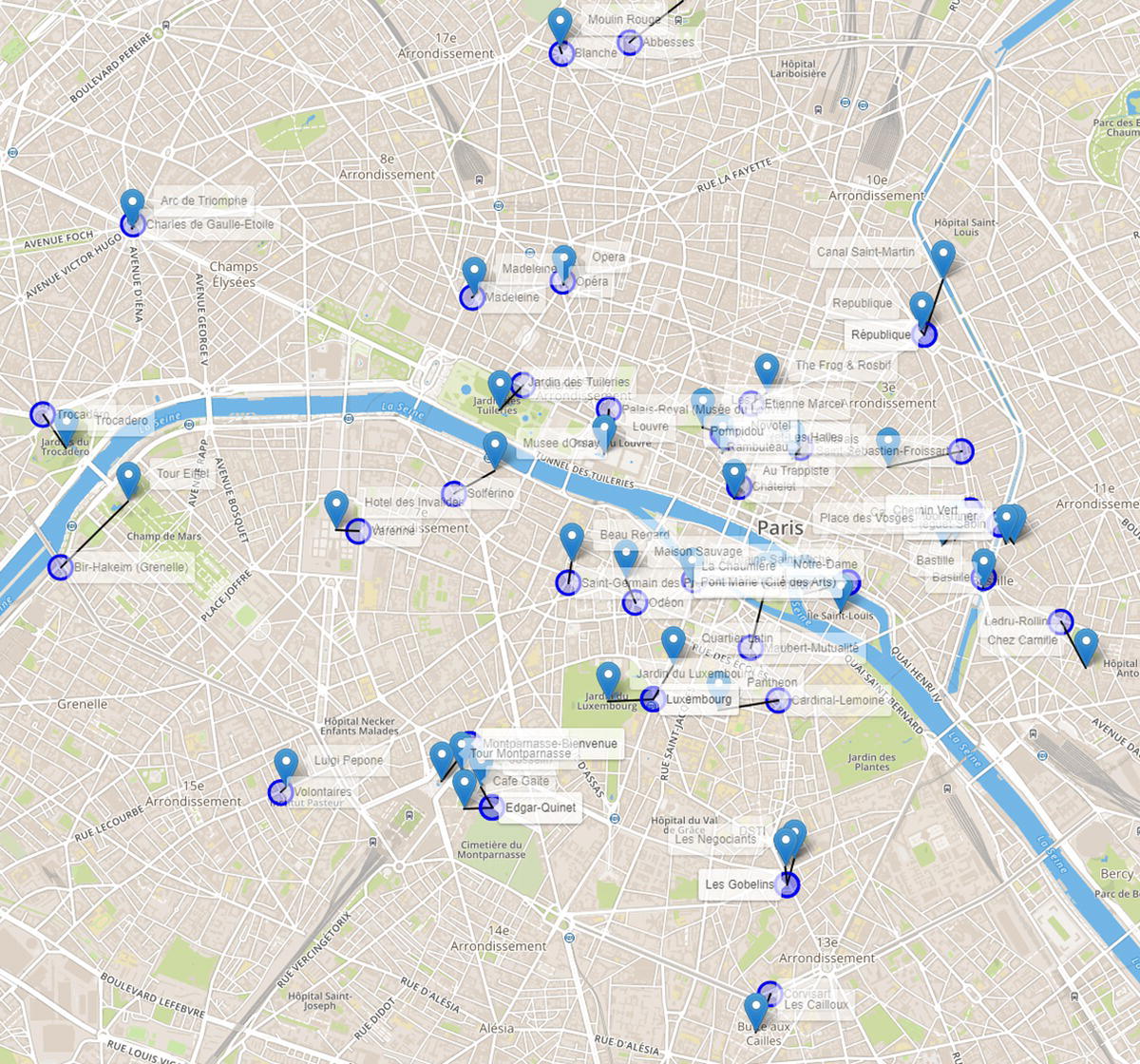

In addition to the network optimization algorithms that describe the overall public transportation topology, we particularly want to show the TSP algorithm. We selected a set of 42 places in Paris: a hotel in Les Halles, which works as the starting and ending point, and 41 places of interest, including the most popular tourist places in Paris and some popular cafes and restaurants. That is probably the hardest part of this problem, to pick only 41 cafes in Paris! In this case study, we are going to execute two different optimal tours. The first one is just by walking. It is a beautiful city, so nothing is better than just walking through the city of lights. The second tour considers the multimodal public transportation system. This tour does not help us to enjoy the wonderful view, but it surely helps us enjoy longer visits to the cafes, restaurants, and monuments.

The first step is to set up the 42 places based on x (latitude) and y (longitude) coordinates. The following code shows how to create the input data table with all places to visit.

    data places;
       length name $20;
       infile datalines delimiter=",";
       input name $ x y;
       datalines;
    Novotel,48.860886,2.346407
    Tour Eiffel,48.858093,2.294694
    Louvre,48.860819,2.33614
    Jardin des Tuileries,48.86336,2.327042
    Trocadero,48.861157,2.289276
    Arc de Triomphe,48.873748,2.295059
    Jardin du Luxembourg,48.846658,2.336451
    Fontaine Saint Michel,48.853218,2.343757
    Notre-Dame,48.852906,2.350114
    Le Marais,48.860085,2.360859
    Les Halles,48.862371,2.344731
    Sacre-Coeur,48.88678,2.343011
    Musee dOrsay,48.859852,2.326634
    Opera,48.87053,2.332621
    Pompidou,48.860554,2.352507
    Tour Montparnasse,48.842077,2.321967
    Moulin Rouge,48.884124,2.332304
    Pantheon,48.846128,2.346117
    Hotel des Invalides,48.856463,2.312762
    Madeleine,48.869853,2.32481
    Quartier Latin,48.848663,2.342126
    Bastille,48.853156,2.369158
    Republique,48.867877,2.363756
    Canal Saint-Martin,48.870834,2.365655
    Place des Vosges,48.855567,2.365558
    Luigi Pepone,48.841696,2.308398
    Josselin,48.841711,2.325384
    The Financier,48.842607,2.323681
    Berthillon,48.851721,2.35672
    The Frog & Rosbif,48.864309,2.350315
    Moonshiner,48.855677,2.371183
    Cafe de lIndustrie,48.855655,2.371812
    Chez Camille,48.84856,2.378099
    Beau Regard,48.854614,2.333307
    Maison Sauvage,48.853654,2.338045
    Les Negociants,48.837129,2.351927
    Les Cailloux,48.827689,2.34934
    Cafe Hugo,48.855913,2.36669
    La Chaumiere,48.852816,2.353542
    Cafe Gaite,48.84049,2.323984
    Au Trappiste,48.858295,2.347485
    ;
    run;

The following code shows how to use a SAS data step to create an HTML file that shows all the selected places on a map. The map is created with an open‐source package called Leaflet. The output file can be opened in any browser to show the places geographically. All the following maps are created with the same approach, using multiple features of Leaflet.

    data _null_;
       set places end=eof;
       file arq;
       length linha $1024.;
       k+1;
       if k=1 then
          do;
             put '<!DOCTYPE html>';
             put '<html>';
             put '<head>';
             put '<title>SAS Network Optimization</title>';
             put '<meta charset="utf-8"/>';
             put '<meta name="viewport" content="width=device-width, initial...
             put '<link rel="stylesheet" href=" https://unpkg.com/[email protected] ...
             put '<script src=" https://unpkg.com/[email protected]/dist/leaflet ....
             put '<style>body{padding:0;margin:0;}html,body,#mapid{height:10...
             put '</head>';
             put '<body>';
             put '<div id="mapid"></div>';
             put '<script>';
             put 'var mymap=L.map("mapid").setView([48.856358, 2.351632],14);';
             put 'L.tileLayer(" https://api.tiles.mapbox.com/v4/{id}/{z}/{x}/ ...
          end;
       /* one marker per place */
       linha='L.marker(['||x||','||y||']).addTo(mymap).bindTooltip("'||name...
       /* the hotel is highlighted with a circle */
       if name = 'Novotel' then
          do;
             linha='L.marker(['||x||','||y||']).addTo(mymap).bindTooltip("'|...
             linha='L.circle(['||x||','||y||'],{radius:75,color:"'||'blue'||...
          end;
       put linha;
       if eof then
          do;
             put '</script>';
             put '</body>';
             put '</html>';
          end;
    run;

Figure 5.1 shows the map with the places to be visited by the optimal tour.

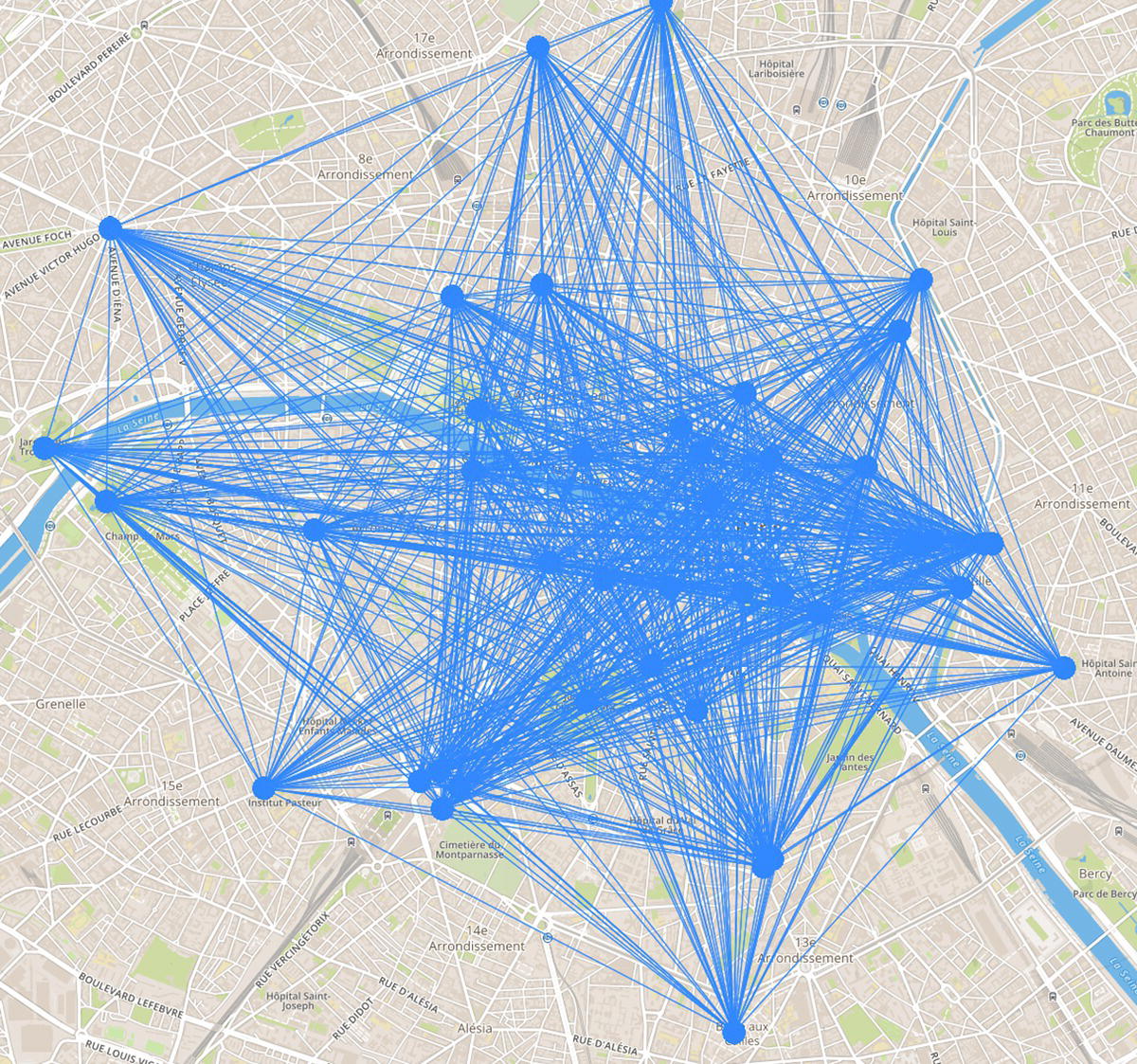

Considering the walking tour first, any possible connection between two places is a link between them. Based on that, there are 1722 possible steps (or links) to be considered when searching for the optimal tour. It is important to notice that here we are not considering existing possible routes. The distance between a pair of places, as well as the route between them, is just the Euclidian distance between the two points on the map. The next case study will consider the existing routes. The reason to do that in this first case is that we want to compare the walking tour with the multimodal public transportation tour. The transportation network does not follow the existing routes (streets, roads, highways, etc.), but it considers almost a straight line between the stations, similar to the Euclidian distance. To make a fair comparison between walking or taking the public transportation, we consider all distances as Euclidian distances instead of routing distances.

Figure 5.1 Places to be visited in the optimal tour in Paris.

The following code shows how to create the possible links between all pairs of places.

    proc sql;
       create table placeslinktmp as
          select a.name as org, a.x as xorg, a.y as yorg,
                 b.name as dst, b.x as xdst, b.y as ydst
          from places as a, places as b;
    quit;

Figure 5.2 shows the possible links within the network.

Definitely the number of possible links makes the job quite difficult. The TSP is the network optimization algorithm that searches for the optimal sequence of places to visit in order to minimize a particular objective function. The goal may be to minimize the total time, the total distance, or any type of costs associated with the tour. The cost here is the total distance traveled.

As defined previously, the distance between the places is computed based on the Euclidian distance, rather than the existing road distance. The following code shows how to compute the Euclidian distance for all links.

    data mycas.placesdist;
       set placeslink;
       distance=geodist(xdst,ydst,xorg,yorg,'M');
       output;
    run;
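For readers without a SAS session, the same two steps (pair every place with every other place, then attach a distance in miles) can be sketched in Python. This is only an illustration under stated assumptions: it uses the haversine formula on a sphere with an assumed mean Earth radius, so the values may differ slightly from those returned by `geodist`, and the function and variable names are hypothetical.

```python
import math

EARTH_RADIUS_MILES = 3958.8  # assumed mean Earth radius

def haversine_miles(lat1, lon1, lat2, lon2):
    """Great-circle distance in miles between two (lat, lon) points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(a))

# Three places taken from the input table above (x = latitude, y = longitude)
places = {
    "Novotel": (48.860886, 2.346407),
    "Louvre": (48.860819, 2.33614),
    "Tour Eiffel": (48.858093, 2.294694),
}

# One directed link per ordered pair of distinct places: n * (n - 1) links
links = [
    (org, dst, haversine_miles(*places[org], *places[dst]))
    for org in places
    for dst in places
    if org != dst
]
```

With all 42 places, the same pairing gives 42 × 41 = 1722 links, the number quoted earlier for the walking tour.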

Once the links are created, considering origin and destination places, and the distance between these places, we can compute the optimal tour to visit all places by minimizing the overall distance traveled.

Figure 5.2 Links considered in the network when searching for the optimal tour.

    proc optnetwork
       direction = directed
       links = mycas.placesdist
       out_nodes = mycas.placesnodes;
       linksvar
          from = org
          to = dst
          weight = distance;
       tsp
          cutstrategy = none
          heuristics = none
          milp = true
          out = mycas.placesTSP;
    run;
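To make the objective concrete, here is a minimal Python sketch that solves a tiny TSP exactly by trying every ordering of the places. It is not the method used by the procedure above, which solves a mixed integer linear program, and brute force is only practical for a handful of nodes. The five points and all names are hypothetical.

```python
import math
from itertools import permutations

def tour_length(tour, dist):
    """Total length of a closed tour given as a list that starts and ends at the same node."""
    return sum(dist[a][b] for a, b in zip(tour, tour[1:]))

def brute_force_tsp(nodes, dist, start):
    """Return the shortest closed tour from `start` that visits every node exactly once."""
    others = [n for n in nodes if n != start]
    best_tour, best_len = None, math.inf
    for perm in permutations(others):
        tour = [start, *perm, start]
        length = tour_length(tour, dist)
        if length < best_len:
            best_tour, best_len = tour, length
    return best_tour, best_len

# Hypothetical toy instance: points on a plane with straight-line distances
points = {"H": (0, 0), "A": (0, 1), "B": (1, 1), "C": (1, 0), "D": (2, 0)}
dist = {a: {b: math.dist(pa, pb) for b, pb in points.items()} for a, pa in points.items()}

tour, length = brute_force_tsp(list(points), dist, "H")
```

The number of orderings grows factorially with the number of places, which is why 42 places call for an optimization solver rather than enumeration.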

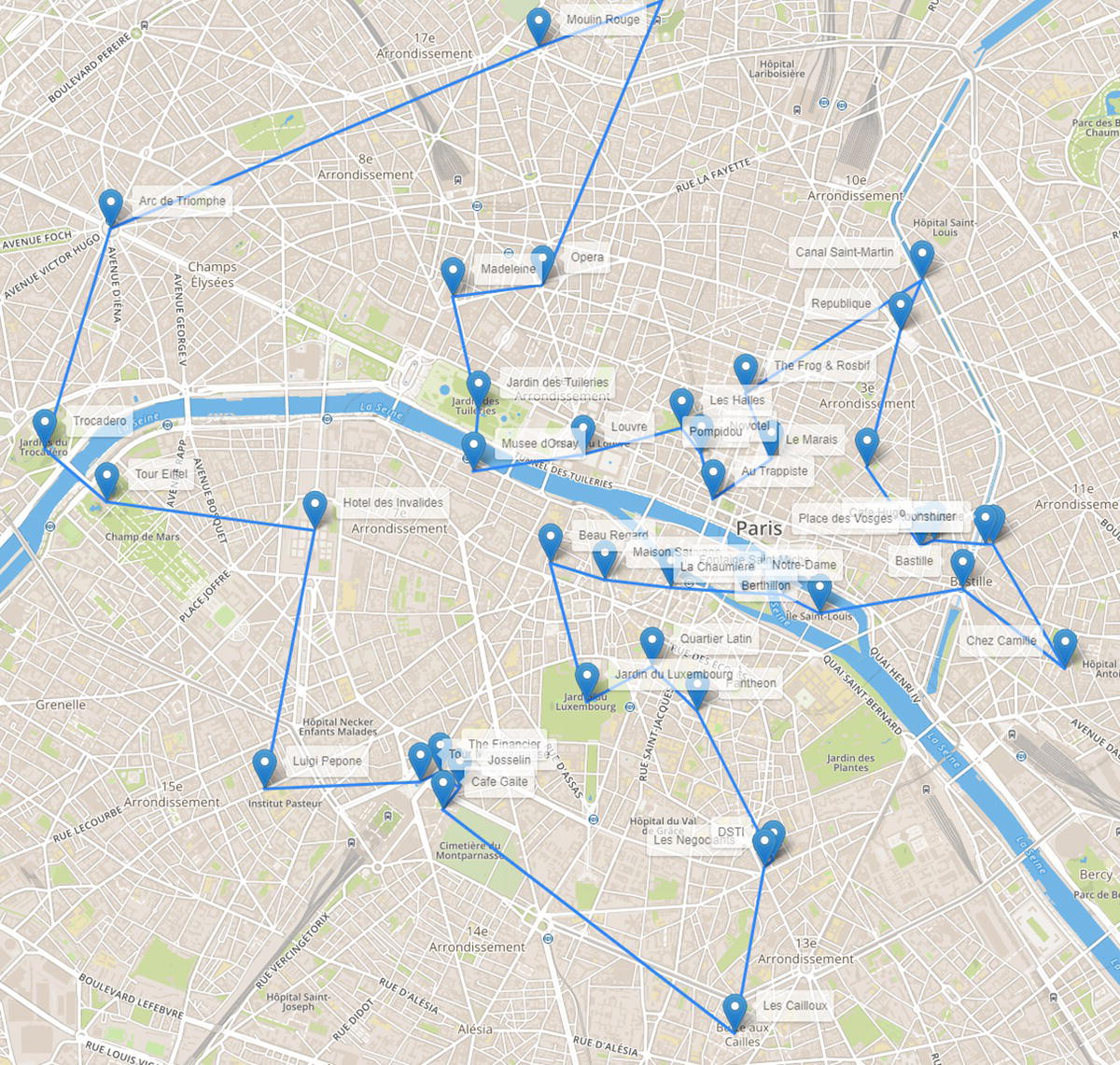

Figure 5.3 shows the optimal tour, or the sequence of places to be visited in order to minimize the overall distance traveled.

The best tour to visit those 41 locations, departing from and returning to the hotel, requires 19.4 miles of walking. This walking tour would take around six hours and 12 minutes.

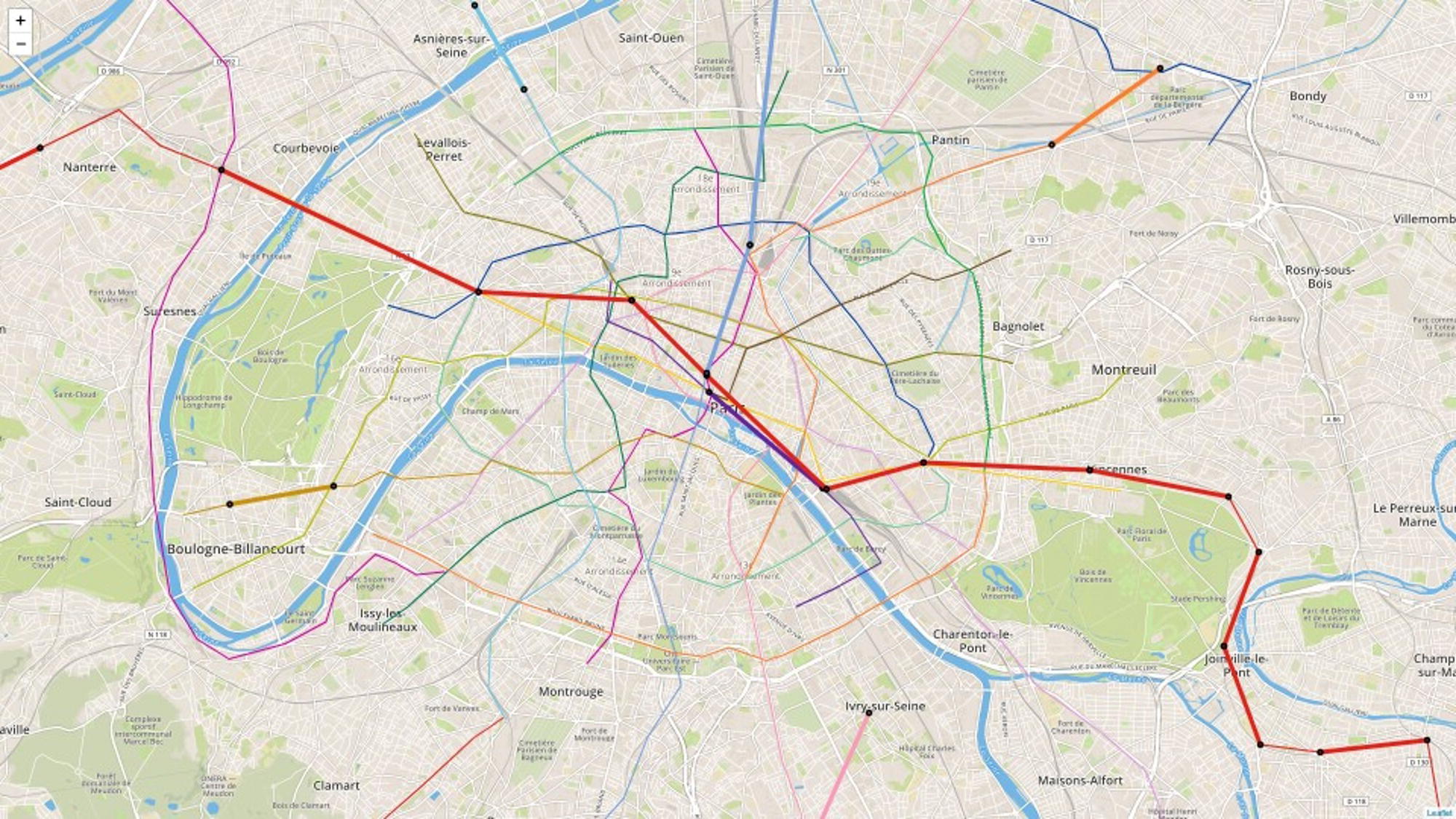

A feasible approach to reduce the walking distance is to use the public transportation system. In this case study, this transportation network considers train, tram, and metro. It could also consider the buses, but it is not the case here. The open data provided by the RATP is used to create the public transportation network, considering 27 lines (16 metro lines, 9 tram lines out of the existing 11, and 2 train lines out of the existing 5), and 518 stations.

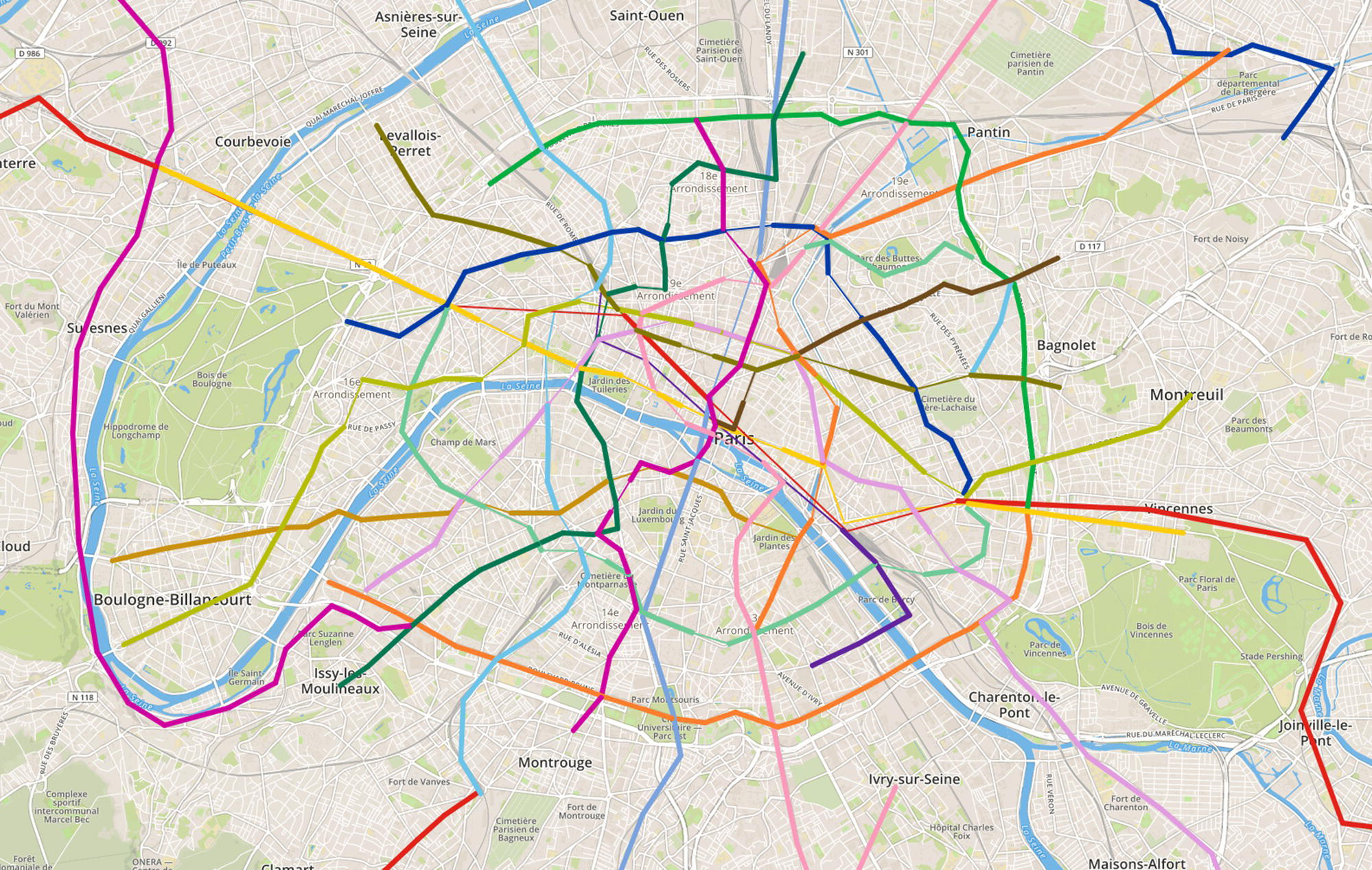

The code to access the open data and import into SAS is suppressed here. We can basically use two approaches to fetch the open data from RATP. The first one is downloading the available datasets and importing them into SAS. The second one is by using the available APIs to fetch the data automatically. SAS has procedures to perform this task without too much work. Once the data representing the transportation network is loaded, we can create the map showing all possible routes within the city, as shown in Figure 5.4.

Figure 5.3 The optimal tour when walking.

Figure 5.4 Transportation network.

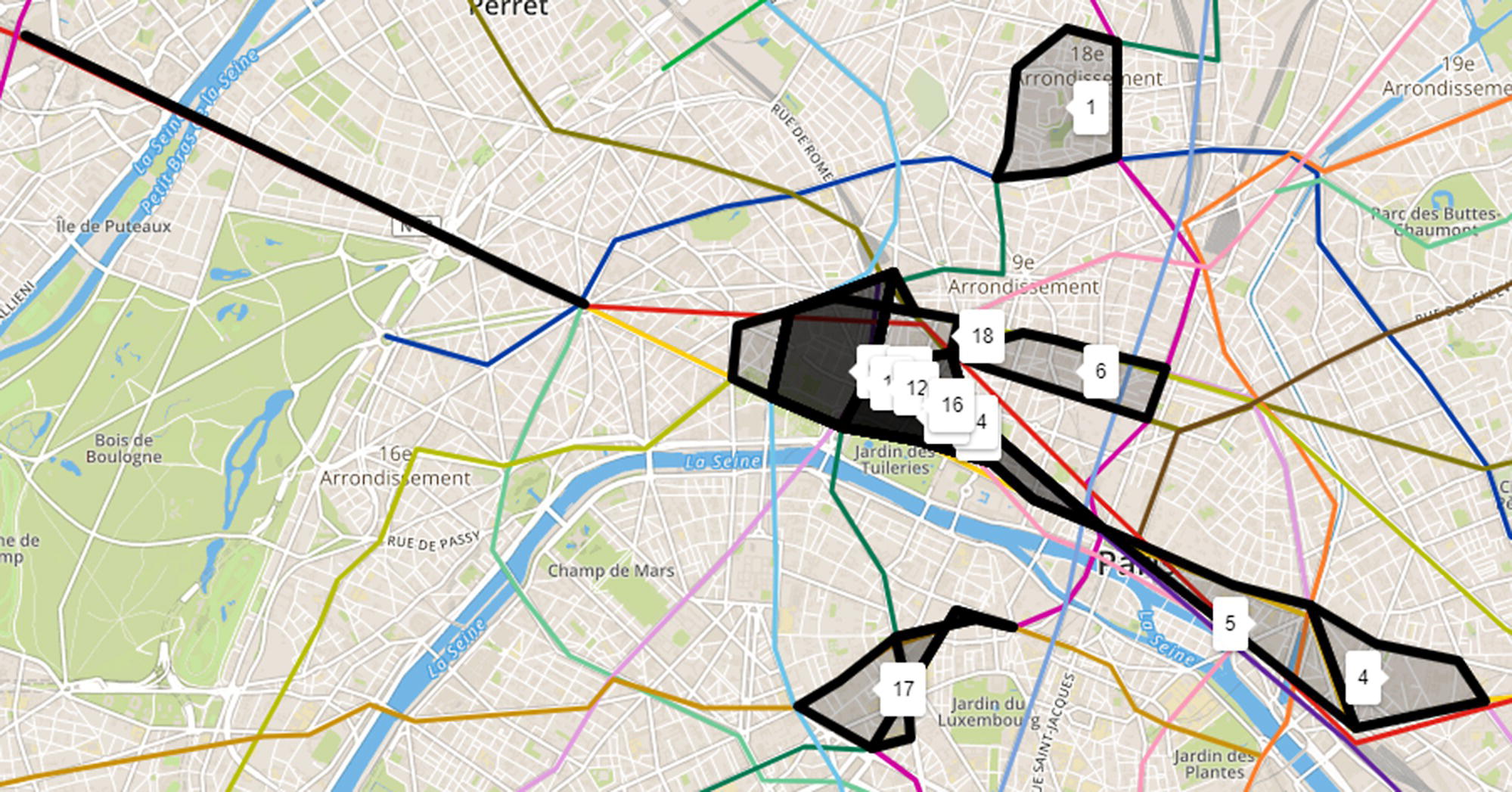

Figure 5.5 Cliques within the transportation network.

Before we move to the optimal tour considering the multimodal transportation systems, let us take a look at some other network optimization algorithms in order to describe the network topology we are about to use.

One way to find alternative stations in case of an outage in the transportation lines is to identify stations that can all be reached directly from each other, forming a complete graph. The Clique algorithm identifies such subgraphs within the network. To demonstrate the outcomes, the Clique algorithm was executed with a constraint of a minimum of three stations in the subgraphs, and eight distinct cliques were found. These subgraphs represent groups of stations completely connected to each other. Figure 5.5 shows the cliques within the transportation network.

The following code shows how to search for the cliques within the transportation network.

    proc optnetwork
       direction = undirected
       links = mycas.metroparislinks;
       linksvar
          from = org
          to = dst
          weight = dist;
       clique
          maxcliques = all
          minsize = 3
          out = mycas.cliquemetroparis;
    run;
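As an illustration of what a clique search returns, the following Python sketch enumerates maximal cliques with the basic Bron-Kerbosch recursion and keeps those with at least three stations. It is a textbook technique chosen for the example, not necessarily what the procedure runs internally, and the station names are hypothetical.

```python
def build_adjacency(links):
    """Turn a list of undirected (a, b) links into a node -> set of neighbors mapping."""
    adj = {}
    for a, b in links:
        adj.setdefault(a, set()).add(b)
        adj.setdefault(b, set()).add(a)
    return adj

def maximal_cliques(adj, min_size=3):
    """Enumerate maximal cliques of an undirected graph (basic Bron-Kerbosch)."""
    found = []

    def expand(r, p, x):
        # r: current clique, p: candidates that extend it, x: nodes already explored
        if not p and not x:
            if len(r) >= min_size:
                found.append(sorted(r))
            return
        for v in list(p):
            expand(r | {v}, p & adj[v], x & adj[v])
            p = p - {v}
            x = x | {v}

    expand(set(), set(adj), set())
    return sorted(found)

# Hypothetical stations: S1, S2, and S3 form a triangle; S4 hangs off S3
links = [("S1", "S2"), ("S2", "S3"), ("S1", "S3"), ("S3", "S4")]
cliques = maximal_cliques(build_adjacency(links), min_size=3)
```

Here the pair S3 and S4 is also a maximal clique, but it is dropped by the minimum size of three, mirroring the `minsize = 3` option above.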

The Cycle algorithm, with a constraint of a minimum of 5 and a maximum of 10 stations, found 18 cycles, that is, groups of stations that create a sequence of links starting at one specific point and ending at that same point. Figure 5.6 shows the cycles within the transportation network.

The following code shows how to search for the cycles within the transportation network.

Figure 5.6 Cycles within the transportation network.

    proc optnetwork
       direction = directed
       links = mycas.metroparislinks;
       linksvar
          from = org
          to = dst
          weight = dist;
       cycle
          algorithm = build
          maxcycles = all
          minlength = 5
          maxlength = 10
          out = mycas.cyclemetroparis;
    run;

The Minimum Spanning Tree algorithm found that 510 stations can keep the same reachability as all of the 620 existing stations in the transportation network. The minimum spanning tree finds the minimum set of links that keeps the same reachability as the original network. The stations associated with these links are the ones referred to previously. It is not a huge saving, but this difference tends to increase as the network gets more complex (for example, by adding buses). Figure 5.7 shows all necessary pairs of stations, or existing links, to keep the same reachability as the original public transportation network. The thicker lines in the figure represent the links that must be kept to maintain that reachability.

The following code shows how to search for the minimum spanning tree within the transportation network.

Figure 5.7 Minimum spanning tree within the transportation network.

    proc optnetwork
       direction = directed
       links = mycas.metroparislinks;
       linksvar
          from = org
          to = dst
          weight = dist;
       minspantree
          out = mycas.minspantreemetroparis;
    run;
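The idea of keeping only the links needed for reachability can be sketched with Kruskal's algorithm in Python. This is a standard way to build a minimum spanning tree on an undirected graph, used here as an assumption for illustration; the stations and distances are hypothetical.

```python
def minimum_spanning_tree(nodes, links):
    """Kruskal's algorithm; `links` holds (u, v, weight) tuples of an undirected graph."""
    parent = {n: n for n in nodes}

    def find(n):
        # Follow parent pointers to the component root, shortening the chain on the way
        while parent[n] != n:
            parent[n] = parent[parent[n]]
            n = parent[n]
        return n

    tree = []
    for u, v, w in sorted(links, key=lambda link: link[2]):
        root_u, root_v = find(u), find(v)
        if root_u != root_v:  # the link joins two separate components, so keep it
            parent[root_u] = root_v
            tree.append((u, v, w))
    return tree

# Hypothetical stations and distances in miles
nodes = ["A", "B", "C", "D"]
links = [("A", "B", 0.4), ("B", "C", 0.6), ("A", "C", 0.9), ("C", "D", 0.5), ("B", "D", 1.2)]

tree = minimum_spanning_tree(nodes, links)
total = sum(w for _, _, w in tree)
```

In this toy network, three of the five links are enough to keep every station reachable from every other one.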

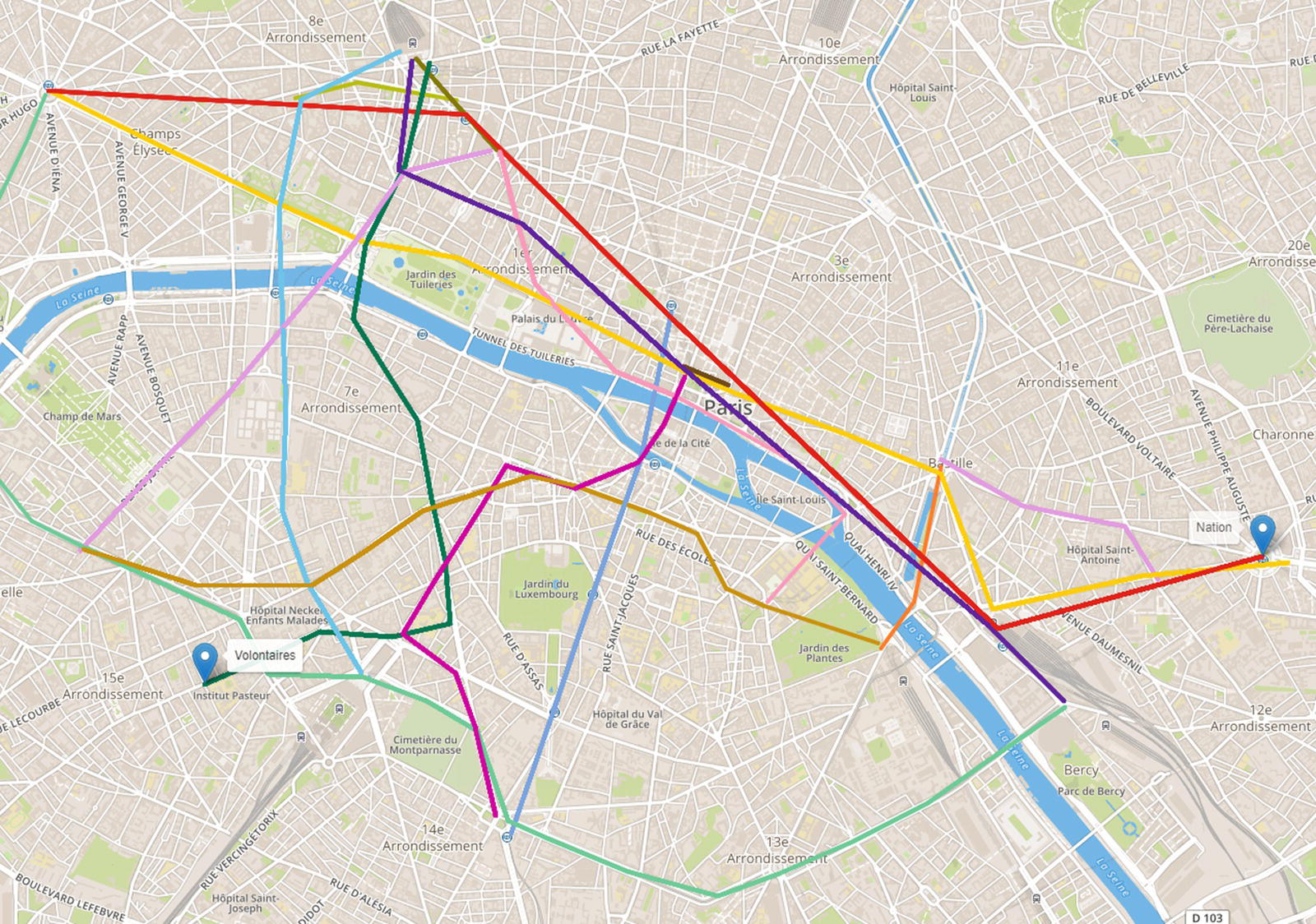

The transportation network in Paris is a dense network. It holds a great number of possible routes to connect a pair of locations. For example, considering two specific locations like Volontaires and Nation, there are 7875 possible routes to connect them, even with a constraint of 20 stations as the maximum length of the route. All possible paths between Volontaires and Nation can be found by running the Path algorithm, and they are presented in Figure 5.8. In the figure we cannot see all of the lines representing these paths, as most of the steps use, at least partially, the same lines between stations. There are multiple interchange stations in the transportation network, and one single different step between stations can represent a whole new path. For that reason, there is a huge number of possible paths between a pair of locations like Volontaires and Nation.

The following code shows how to search for the paths between two locations within the transportation network.

    proc optnetwork
       direction = directed
       links = mycas.metroparislinks;
       linksvar
          from = org
          to = dst
          weight = dist;
       path
          source = Volontaires
          sink = Nation
          maxlength = 20
          outpathslinks = mycas.pathmetroparis;
    run;
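A small Python sketch shows why the number of paths grows so quickly and how a maximum length prunes the search. It enumerates simple paths (no station visited twice) with a depth-first search; the graph and the function name are hypothetical, and the procedure above may enumerate paths differently.

```python
def simple_paths(adj, source, sink, max_length):
    """Yield every simple path from source to sink that uses at most max_length links."""
    stack = [(source, [source])]
    while stack:
        node, path = stack.pop()
        if node == sink:
            yield path
            continue
        if len(path) - 1 >= max_length:
            continue  # the path already uses the maximum number of links
        for nxt in adj.get(node, ()):
            if nxt not in path:  # never revisit a station within the same path
                stack.append((nxt, path + [nxt]))

# Hypothetical directed network
adj = {"A": ["B", "C"], "B": ["C", "D"], "C": ["D"], "D": []}

paths = sorted(simple_paths(adj, "A", "D", max_length=3))
short_paths = sorted(simple_paths(adj, "A", "D", max_length=2))
```

Even with four stations, one extra interchange (B to C) creates a third path, which is the effect described above for interchange stations.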

Figure 5.8 Paths within the transportation network between two locations.

As there are many possible paths between any pair of locations, it is good to know which one is the shortest. The Shortest Path algorithm reveals the best route between any pair of locations, which improves urban mobility within the city. For example, between Volontaires and Nation, there are paths varying from 11 steps to 20 (the constraint set in the Path algorithm), ranging from 4.8 to 11.7 miles. The shortest path has 16 steps totaling 4.8 miles. Figure 5.9 shows the shortest path between Volontaires and Nation. Notice that the shortest path uses multiple lines in order to minimize the distance traveled. Changing lines that often may increase the overall time to travel from one location to another. If the goal is to minimize the total travel time, information about departure and arrival times would need to be added.

The following code shows how to search for the shortest paths between two locations within the transportation network.

    proc optnetwork
       direction = directed
       links = mycas.metroparislinks;
       linksvar
          from = org
          to = dst
          weight = dist;
       shortestpath
          source = Volontaires
          sink = Nation
          outpaths = mycas.shortpathmetroparis;
    run;
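For intuition, a shortest path on a weighted network can be computed with Dijkstra's algorithm. The Python sketch below is a generic implementation on a hypothetical four-station network with distances in miles. It assumes non-negative weights and is not a description of the procedure's internals.

```python
import heapq

def shortest_path(adj, source, sink):
    """Dijkstra's algorithm; adj maps node -> list of (neighbor, non-negative weight)."""
    best = {source: 0.0}
    prev = {}
    heap = [(0.0, source)]
    while heap:
        d, node = heapq.heappop(heap)
        if node == sink:
            break
        if d > best.get(node, float("inf")):
            continue  # stale queue entry, a shorter route to this node was already found
        for nxt, w in adj.get(node, ()):
            nd = d + w
            if nd < best.get(nxt, float("inf")):
                best[nxt] = nd
                prev[nxt] = node
                heapq.heappush(heap, (nd, nxt))
    if sink not in best:
        return None, float("inf")
    # Walk the predecessor chain back from the sink to rebuild the route
    path = [sink]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return path[::-1], best[sink]

# Hypothetical network: the direct links are longer than the route with two changes
adj = {
    "A": [("B", 1.0), ("C", 4.0)],
    "B": [("C", 1.5), ("D", 5.0)],
    "C": [("D", 1.0)],
    "D": [],
}
path, miles = shortest_path(adj, "A", "D")
```

As in the Volontaires to Nation example, the shortest route here is not the one with the fewest steps.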

Figure 5.9 The shortest path between two locations within the transportation network.

Figure 5.10 The Pattern Match within the transportation network.

Finally, the Pattern Match algorithm searches for a pattern of interest within the network. For instance, one possible pattern is the distance between any two consecutive stations in the same line. Out of the 620 steps between stations in the same line, there are 44 steps over one mile of distance, as shown in Figure 5.10.

The following code shows how to create the query graph and then execute the pattern matching within the transportation network.

    data mycas.pmmetroparislinks;
       set mycas.metroparislinks;
       if dist ge 1 then
          weight = 1;
       else
          weight = 0;
    run;
    proc sql;
       select count(*) from mycas.pmmetroparislinks where weight eq 1;
    quit;
    data mycas.linksquery;
       input from to weight;
       datalines;
    1 2 1
    ;
    run;
    proc network
       direction = undirected
       links = mycas.pmmetroparislinks
       linksquery = mycas.linksquery;
       linksvar
          from = org
          to = dst
          weight = weight;
       linksqueryvar
          vars = (weight);
       patternmatch
          outmatchlinks = mycas.matchlinksmetroparis;
    run;

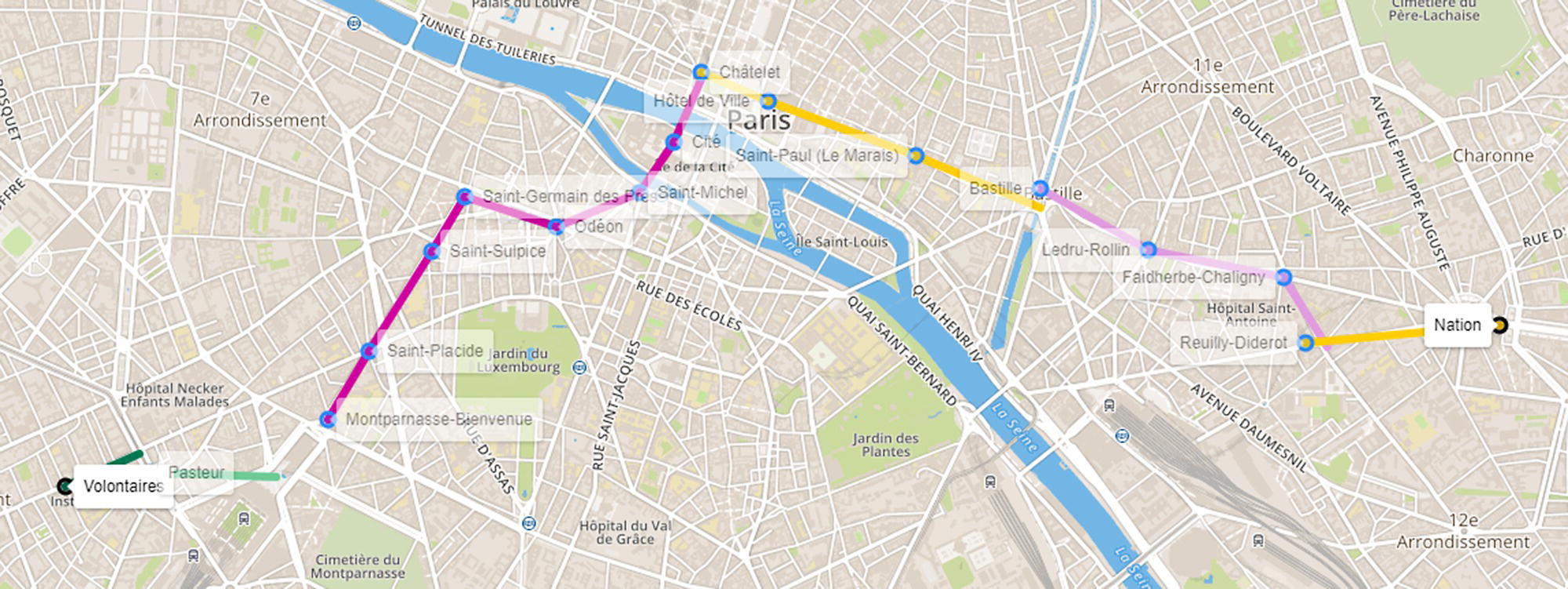

Now that we have a better understanding of the transportation network topology, we can enhance the optimal tour with the multimodal transportation system by adding the public lines as options to the walking tour. A simple constraint on this new optimal tour is that the distance we need to walk between two points of interest and their respective closest stations should be less than the distance between those two points. For example, suppose we want to go from place A to place B. If the distance from A to the station closest to A, plus the distance from the station closest to B to B, is greater than the distance to just walk from A to B, there is no reason to take public transportation, and we walk. If that distance is less than the distance from A to B, then we take public transportation. Remember that we are minimizing the walking distance, not the overall distance nor the time.
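The rule above can be written as a small Python function. This is only a sketch of the comparison described in the text. The function name and the tie-breaking choice (walk when both options require the same walking distance) are assumptions.

```python
def best_leg(walk_ab, walk_a_to_station, walk_station_to_b):
    """Return (mode, walking_distance) for one leg, minimizing walking distance only."""
    walk_with_transit = walk_a_to_station + walk_station_to_b
    if walk_with_transit < walk_ab:
        return "transit", walk_with_transit
    # Assumption: on a tie we simply walk
    return "walk", walk_ab

# Hypothetical distances in miles
far_apart = best_leg(walk_ab=1.0, walk_a_to_station=0.25, walk_station_to_b=0.5)
neighbors = best_leg(walk_ab=0.5, walk_a_to_station=0.25, walk_station_to_b=0.5)
```

The distance ridden on the transportation lines does not enter the comparison at all, which is why the multimodal tour can be longer overall while requiring far less walking.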

In order to account for this small constraint, we need to find the closest station to each point of interest we want to visit: which stations serve the places we want to go, and which one is the closest. For each possible step in our best tour, we need to verify whether it is better to walk or to take public transportation.

The following code shows how to find the closest stations for each place. We basically need to calculate the distance between places and places, places and stations, and stations and stations. Then we order the links and pick the pair with the shortest distance. Figure 5.11 shows the locations and their closest stations on the map.

    proc sql;
       create table placesstations as
          select a.name as place, a.x as xp, a.y as yp,
                 b.node as station, b.lat as xs, b.lon as ys
          from places as a, metronodes as b;
    quit;
    data placesstationsdist;
       set placesstations;
       distance=geodist(xs,ys,xp,yp,'M');
       output;
    run;
    proc sort data=placesstationsdist;
       by place distance;
    run;
    /* keep only the first (closest) station for each place */
    data stationplace;
       length ap $20.;
       ap=place;
       set placesstationsdist;
       if ap ne place then
          do;
             drop ap;
             output;
          end;
    run;
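The last two steps above (sort by place and distance, then keep the first row of each place) amount to picking the minimum distance per place. A minimal Python sketch of that selection follows, with hypothetical station names and distances:

```python
def closest_station(rows):
    """rows: iterable of (place, station, distance). Return {place: (station, distance)}."""
    closest = {}
    for place, station, distance in rows:
        if place not in closest or distance < closest[place][1]:
            closest[place] = (station, distance)
    return closest

# Hypothetical place-station distances in miles
rows = [
    ("Louvre", "Station X", 0.30),
    ("Louvre", "Station Y", 0.12),
    ("Opera", "Station X", 0.05),
    ("Opera", "Station Y", 0.40),
]
nearest = closest_station(rows)
```

A single pass like this avoids the sort, but the result is the same table of one closest station per place.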

Figure 5.11 The closest stations to the locations to be visited.

Once we calculate the distances between all the places we want to visit and those places to the closest stations, we can compare all possible steps in our paths in terms of shortest distances to see if we take the public transportation or if we just walk. We then execute the TSP algorithm once again to search for the optimal tour considering both walking and the public transportation options. At this point, we know the optimal sequence of places to visit in order to minimize the walking distance, and we also know when we need to walk and when we need to take the public transportation.

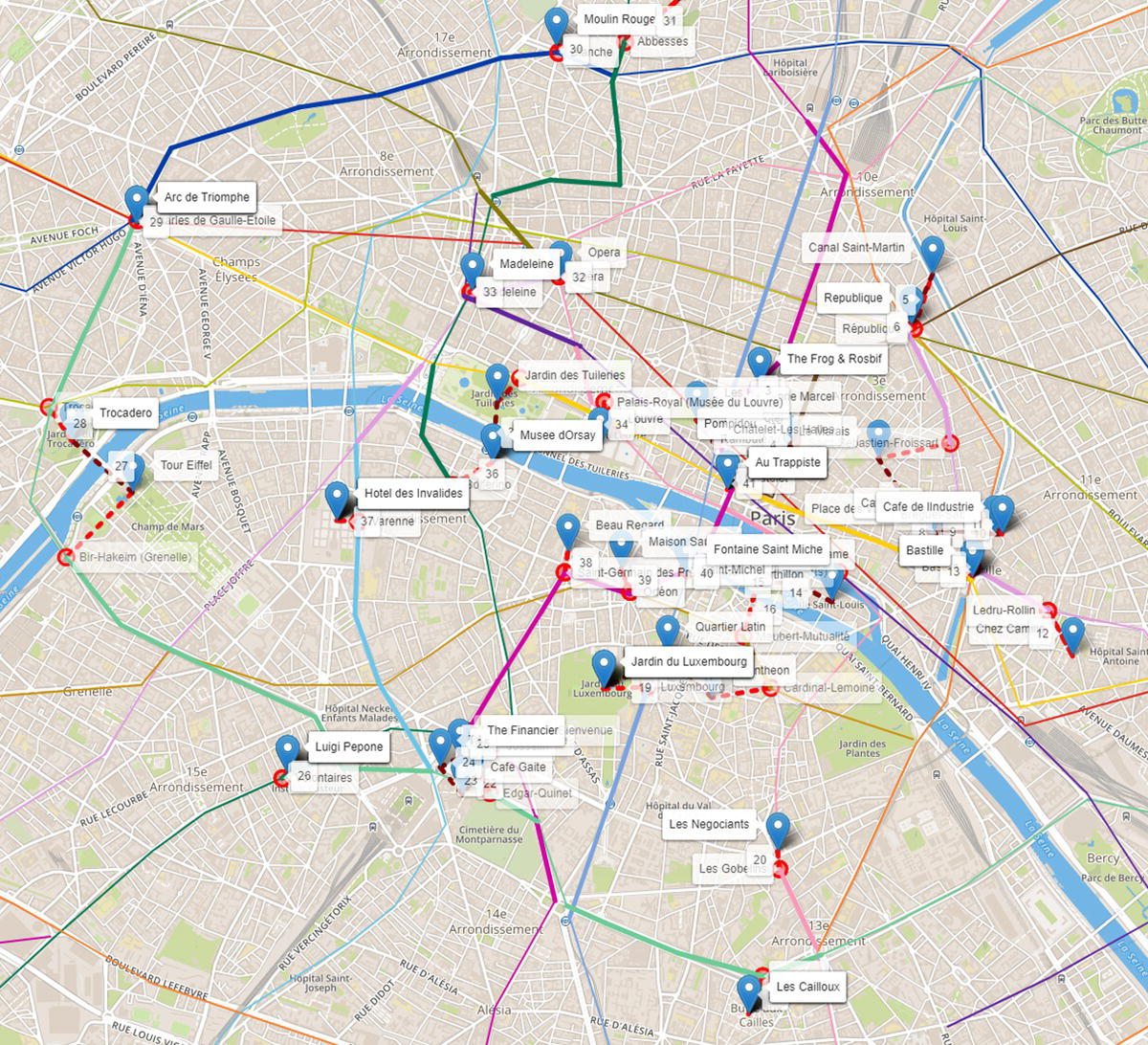

Figure 5.12 shows the final optimal tour considering the multimodal system.

Figure 5.12 The final optimal tour considering the transportation network.

The solid lines in different colors (according to the transportation line) represent the shortest path between a pair of locations (place‐station or station‐place) by taking public transportation. The dashed lines represent walking between a pair of locations (place‐station, station‐place, or place‐place).

For example, in step 21 of our tour, we stop at Les Cailloux, an Italian restaurant in the Butte aux Cailles district, an art deco architectural heritage neighborhood. From there we walk to the Corvisart station, and we take line 6 to Edgar Quinet. From there we walk to Josselin and we grab a crepe (one of the best in the area). Then we walk to Café Gaité and we enjoy a beer, just people watching. The concept of a shot of coffee lasting for hours at the outside tables works for the beer, too. It is a very nice area with lots of bars and restaurants. Then we walk to the Tour Montparnasse. It probably has the best view of Paris, because from there you can see the Tour Eiffel, and from the Tour Eiffel you cannot see the Tour Eiffel. We do not need to buy the ticket (18€) for the Observation Deck. We can go to the restaurant Ciel de Paris on the 56th floor and enjoy a coffee or a glass of wine. We may lose one or two floors of height, but we definitely save 13€ or 9€ depending on what we pick. From there we walk to the Financier, a nice pub. From there we walk to the Montparnasse station, and we take line 6 again. We get off at the Pasteur station and switch to line 12. We get off at the Volontaires station and we walk to the Pizzeria Luigi Pepone (the best pizza in Paris). And from there our tour continues. We are still in step 26, and we have 14 more steps to go.

The walking tour took us 19.4 miles and around six hours and 12 minutes. The multimodal tour takes us 27.6 miles (more than the first one) but we will walk just 2.8 miles. The entire tour will take about two hours and 30 minutes. Here is the dilemma. By stopping at so many restaurants and bars, we should walk more. But by walking more, we could not stop at so many bars and restaurants. Perhaps there is an optimization algorithm that helps us to solve this complex problem.
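The comparison can be made explicit with a few lines of arithmetic. The Python sketch below uses only the figures quoted above; the derived quantities (implied walking pace, distance ridden, share of walking avoided) are our own back-of-the-envelope calculations, not results reported by the optimization.

```python
# Figures quoted in the text
walk_miles, walk_hours = 19.4, 6 + 12 / 60                   # walking tour
multi_miles, multi_walk_miles, multi_hours = 27.6, 2.8, 2.5  # multimodal tour

walking_speed = walk_miles / walk_hours         # implied walking pace in mph
ride_miles = multi_miles - multi_walk_miles     # distance covered on the transportation lines
walk_saved = 1 - multi_walk_miles / walk_miles  # share of the walking avoided
time_saved_hours = walk_hours - multi_hours     # time saved by the multimodal tour
```

The implied walking pace is a little over 3 miles per hour. The multimodal tour covers about 8 more miles in total, but it avoids roughly 86% of the walking and saves about 3 hours and 42 minutes.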

5.3 An Optimal Beer Kegs Distribution – The Vehicle Routing Problem Example in Asheville

The VRP algorithm aims to find optimal routes for one or multiple vehicles visiting a set of locations and delivering a specific amount of goods demanded by these locations. Problems related to the distribution of goods, normally between warehouses and customers or stores, are generally considered a VRP. The VRP was first proposed by Dantzig and Ramser in the paper “The Truck Dispatching Problem,” published in 1959 in volume 6 of Management Science (INFORMS). The paper describes the search for the optimal routing of a fleet of gasoline delivery trucks between a bulk terminal and a large number of service stations supplied by the terminal. The shortest routes between any two points in the system are given, and a demand for one or several products is specified for a number of stations within the distribution system. The problem aims to find a way to assign stations to trucks in such a manner that station demands are satisfied and the total mileage covered by the fleet is a minimum.

The VRP is a generalization of the TSP. As in the TSP, the solution searches for the shortest route that passes through each point once. A major difference is that each point has a demand that needs to be supplied by the vehicle departing from the depot. Assuming that each pair of points is joined by a link, the total number of different routes passing through n points is $\frac{1}{2}n!$. Even for a small network, or a reduced number of points to visit, the total number of possible routes can be extremely large. The example in this case study illustrates that well. This exercise comprises only 22 points (n = 22). Assuming that a link exists between any pair of locations set up in this case, the total number of different possible routes is 562 000 363 888 803 840 000.
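The count quoted for n = 22 can be checked in a couple of lines of Python, assuming the number of distinct routes through n fully connected points is n!/2 (each ordering counted once regardless of direction of travel). The function name is hypothetical.

```python
import math

def route_count(n):
    """Number of distinct routes through n fully connected points, assuming n! / 2."""
    return math.factorial(n) // 2

routes_22 = route_count(22)
```

For 22 points, `routes_22` equals 562 000 363 888 803 840 000, the figure given in the text, which makes clear why enumeration is not an option.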


VRPs are computationally very expensive. They are categorized as NP‐hard, and real‐world problems add complex constraints like time windows, time‐dependent travel times, multiple depots to originate the supply, multiple vehicles, and different capacities for distinct types of vehicles, among others. Compared with the TSP, which minimizes a particular objective function like distance, time, or cost, the VRP is much more complex. The objective function for a VRP can vary considerably depending on the particular application, its constraints, and its resources. Common objective functions in VRPs include minimizing the global transportation cost based on the overall distance traveled by the fleet; minimizing the number of vehicles needed to supply all customers' demands; minimizing the overall travel time considering the fleet, the depots, the customers, and the loading and unloading times; minimizing penalties for time‐window restrictions in the delivery process; or even maximizing a particular objective function, like the profit of the overall trips, when it is not mandatory to visit all customers.

There are many VRP variants to accommodate the multiple constraints and resources associated with the problem, including:

- The Vehicle Routing Problem with Profits (VRPP), as described before.

- The Capacitated Vehicle Routing Problem (CVRP), where the vehicles have limited capacity to carry the goods to be delivered.

- The Vehicle Routing Problem with Time Windows (VRPTW), where the locations to be served by the vehicles have limited time windows to be visited.

- The Vehicle Routing Problem with Heterogeneous Fleets (HFVRP), where different vehicles have different capacities to carry the goods.

- The Time Dependent Vehicle Routing Problem (TDVRP), where customers are assigned to vehicles, which are assigned to routes, and the total time of the overall routes needs to be minimized.

- The Multi Depot Vehicle Routing Problem (MDVRP), where there are multiple depots from which vehicles can start and end the routes.

- The Open Vehicle Routing Problem (OVRP), where the vehicles are not required to return to the depot.

- The Vehicle Routing Problem with Pickup and Delivery (VRPPD), where goods need to be moved from some locations (pickup) to other locations (delivery), among others.

The example here is remarkably simple. We can consider one or more vehicles, but all vehicles will have the same capacity. The depot has a demand equal to zero. Each customer location is serviced by only one vehicle. Each customer demand is indivisible. Each vehicle cannot exceed its maximum capacity. Each vehicle starts and ends its route at the depot. There is one single depot to supply goods for all customers. Finally, customer demands, distances between customers and depot, and delivery costs are known.
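The assumptions listed above can be read as a checklist that any routing plan must satisfy. The Python sketch below is only an illustration of that checklist, not part of the SAS solution; the function `check_plan` and the data layout are hypothetical, while the place names and demands come from the list of places used in this case.

```python
def check_plan(routes, demand, depot, capacity):
    """Return the list of violated assumptions (empty if the plan is valid)."""
    problems = []
    served = []
    for i, route in enumerate(routes, start=1):
        # Each vehicle starts and ends its route at the depot
        if route[0] != depot or route[-1] != depot:
            problems.append(f"route {i} does not start and end at the depot")
        stops = route[1:-1]
        # Each vehicle cannot exceed its maximum capacity
        load = sum(demand[s] for s in stops)
        if load > capacity:
            problems.append(f"route {i} carries {load} kegs, above {capacity}")
        served.extend(stops)
    # Each customer is serviced exactly once (indivisible demand)
    for customer in demand:
        if served.count(customer) != 1:
            problems.append(f"{customer} is served {served.count(customer)} times")
    return problems

depot = "Zillicoach Beer"
demand = {"Asheville Club": 7, "Foggy Mountain": 10,
          "Barleys Taproom": 6, "Avenue M": 4}

good_plan = [[depot, "Asheville Club", "Foggy Mountain", "Barleys Taproom", depot],
             [depot, "Avenue M", depot]]
bad_plan = [[depot, "Asheville Club", "Foggy Mountain", "Barleys Taproom",
             "Avenue M", depot]]

print(check_plan(good_plan, demand, depot, capacity=23))
print(check_plan(bad_plan, demand, depot, capacity=23))
```

The first plan respects every assumption, while the second one loads 27 kegs into a truck that can carry only 23.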

In order to make this case more realistic, perhaps more pleasant, let us consider a brewery that needs to deliver its beer kegs to different bars and restaurants throughout multiple locations. Asheville, North Carolina, is a place quite famous for beer. The city is famous for other things, of course. It has a beautiful art scene and architecture, the colorful autumn, the historic Biltmore estate, the Blue Ridge Parkway, and the River Arts District, among many others. But Asheville has more breweries per capita than any city in the US, with 28.1 breweries per 100 000 residents.

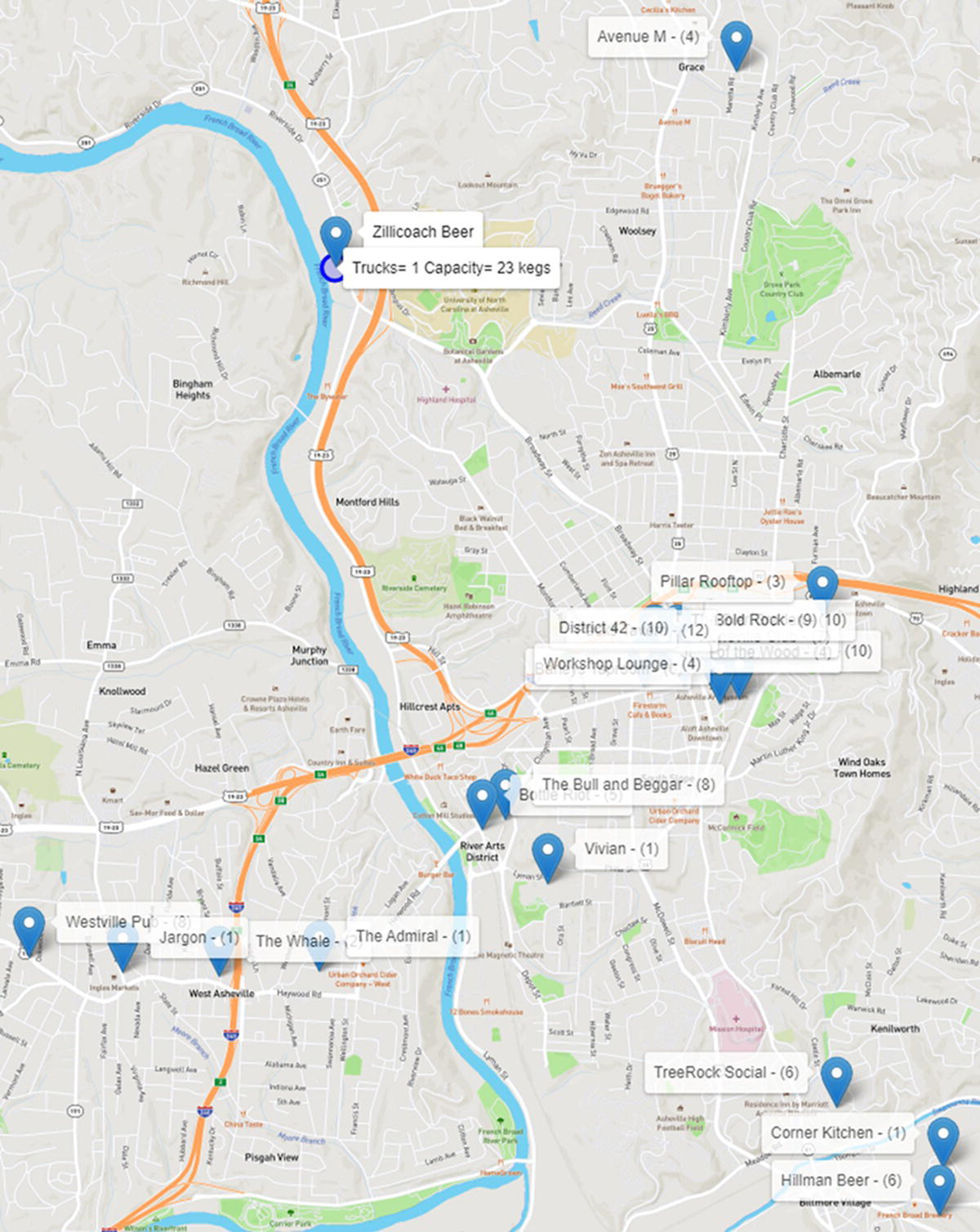

Let us select some real locations. These places are the customers in the case study. For the depot, let us select a brewery. This particular problem then has a total of 22 places: the depot and 21 restaurants and bars. Each customer has its own demand, and we are starting off with one single pickup truck with a limited capacity.

The following code creates the list of places, with the coordinates, and the demand. It also creates the macro variables so we can be more flexible along the way, changing the number of trucks available and the capacity of the trucks.

    %let depot = 'Zillicoach Beer';
    %let trucks = 4;
    %let capacity = 30;

    data places;
       length place $20;
       infile datalines delimiter=",";
       input place $ lat long demand;
       datalines;
    Zillicoach Beer,35.61727324024679,-82.57620125854477,12
    Barleys Taproom, 35.593508941040184,-82.55049904390695,6
    Foggy Mountain,35.594499248395294,-82.55286640671683,10
    Jack of the Wood,35.5944656344917,-82.55554641291447,4
    Asheville Club,35.595301953719876,-82.55427884441883,7
    The Bier Graden,35.59616807405638,-82.55487446973056,10
    Bold Rock,35.596168519758706,-82.5532906435109,9
    Packs Tavern,35.59563366969523,-82.54867278235423,12
    Bottle Riot,35.586701815340874,-82.5664137278939,5
    Hillman Beer,35.5657625849887,-82.53578181164393,6
    Westville Pub,35.5797705582317,-82.59669352112562,8
    District 42,35.59575560112859,-82.55142220123702,10
    Workshop Lounge,35.59381883030113,-82.54921206099571,4
    TreeRock Social,35.57164142260938,-82.54272668032107,6
    The Whale,35.57875963366179,-82.58401015401363,2
    Avenue M,35.62784935175343,-82.54935140167011,4
    Pillar Rooftop,35.59828820775747,-82.5436137644324,3
    The Bull and Beggar,35.58724435501913,-82.564799389471,8
    Jargon,35.5789624127538,-82.5903739015448,1
    The Admiral,35.57900392043434,-82.57730888246586,1
    Vivian,35.58378161962331,-82.56201676356083,1
    Corner Kitchen,35.56835364052998,-82.53558091251179,1
    ;
    run;

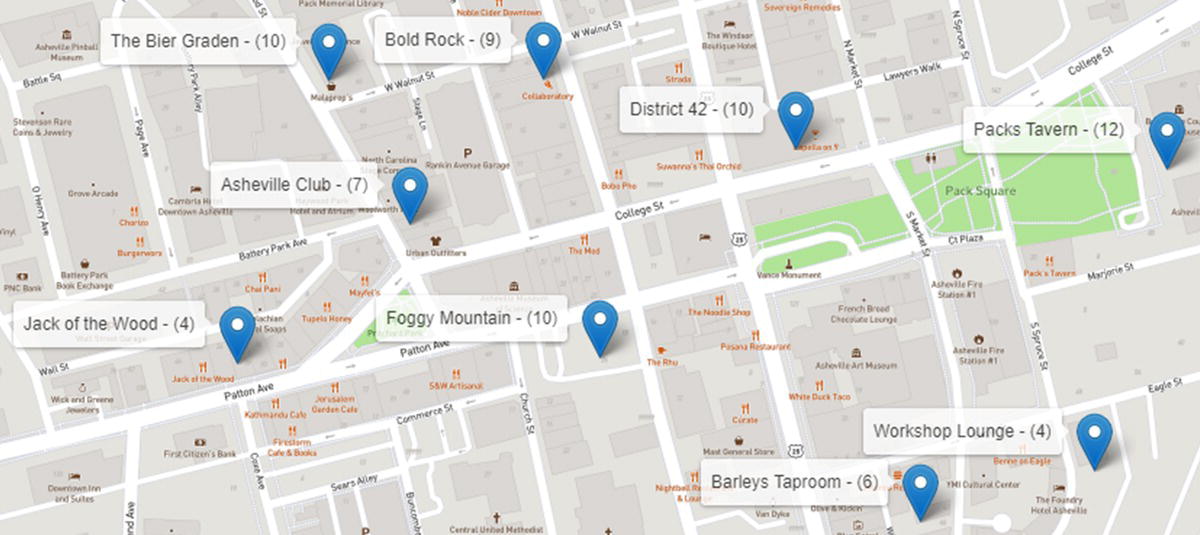

Here we are using the same open‐source package demonstrated in the previous case study to show the outcomes (places and routes) in a map. The code to create the HTML file showing the customers' locations and their demands is quite similar to the code presented before, and it is omitted here. This code generates the map shown in Figure 5.13. The map presents the depot (brewery) and the 21 places (bars and restaurants) demanding different amounts of beer kegs. Notice in parentheses the number of beer kegs required by each customer.

Most of the customers are located in the downtown area, as shown in Figure 5.14.

The next step is to define the possible links between each pair of locations and compute the distance between them. Here, in order to simplify the problem, we are calculating the Euclidean (straight‐line) distance between a pair of places, not the existing road distances. The VRP algorithm will take into account that Euclidean distance when searching for the optimal routing, trying to minimize the overall (Euclidean) distance for vehicle travel.

The following code shows how to create the links, compute the Euclidean distance, and create the customers' nodes without the depot.

Figure 5.13 The brewery and the customers with their demands of beer kegs.

Figure 5.14 Customers located in downtown.

    proc sql;
       create table placeslink as
          select a.place as org, a.lat as latorg, a.long as longorg,
                 b.place as dst, b.lat as latdst, b.long as longdst
             from places as a, places as b
                where a.place<b.place;
    quit;

    data mycas.links;
       set placeslink;
       distance=geodist(latdst,longdst,latorg,longorg,'M');
       output;
    run;

    data mycas.nodes;
       set places(where=(place ne &depot));
    run;
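To make the link creation step easier to follow outside SAS, here is a minimal Python sketch of the same idea: one undirected link per pair of places, weighted by a straight‐line distance in miles. It is an approximation under stated assumptions: the haversine formula and the Earth radius constant stand in for the geodist function, so the values may differ slightly from the SAS results, and only four of the 22 places are included to keep the example short.

```python
import math
from itertools import combinations

# A few of the places listed in this case: name -> (latitude, longitude)
places = {
    "Zillicoach Beer": (35.61727324024679, -82.57620125854477),
    "Barleys Taproom": (35.593508941040184, -82.55049904390695),
    "Foggy Mountain": (35.594499248395294, -82.55286640671683),
    "Avenue M": (35.62784935175343, -82.54935140167011),
}

EARTH_RADIUS_MILES = 3958.8  # assumed mean Earth radius

def haversine_miles(a, b):
    """Great-circle distance in miles between two (lat, long) points in degrees."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * EARTH_RADIUS_MILES * math.asin(math.sqrt(h))

# One undirected link per pair, mirroring the a.place < b.place condition
links = [
    (org, dst, haversine_miles(places[org], places[dst]))
    for org, dst in combinations(sorted(places), 2)
]

for org, dst, miles in links:
    print(f"{org} -> {dst}: {miles:.2f} miles")
```

With n places, this produces n(n − 1)/2 links, so the full set of 22 places yields 231 links.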

Once the proper input data is set, we can use proc optnetwork to invoke the VRP algorithm. The following code shows how to do that.

    proc optnetwork
       direction = undirected
       links = mycas.links
       nodes = mycas.nodes
       outnodes = mycas.nodesout;
       linksvar
          from = org
          to = dst
          weight = distance;
       nodesvar
          node = place
          lower = demand
          vars=(lat long);
       vrp
          depot = &depot
          capacity = &capacity
          out = mycas.routes;
    run;

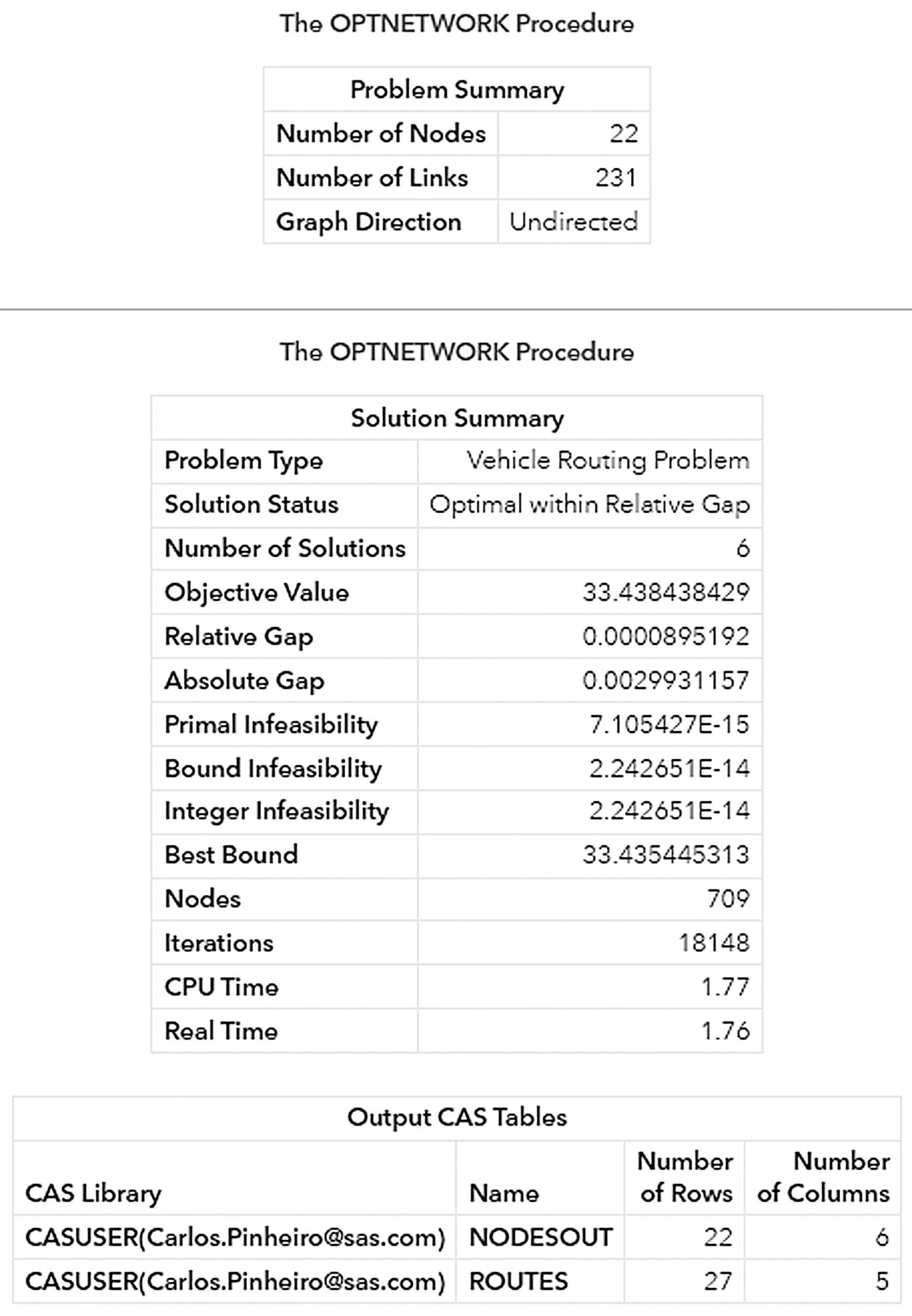

Figure 5.15 shows the results produced by proc optnetwork. It presents the size of the graph, the problem type, and details about the solution.

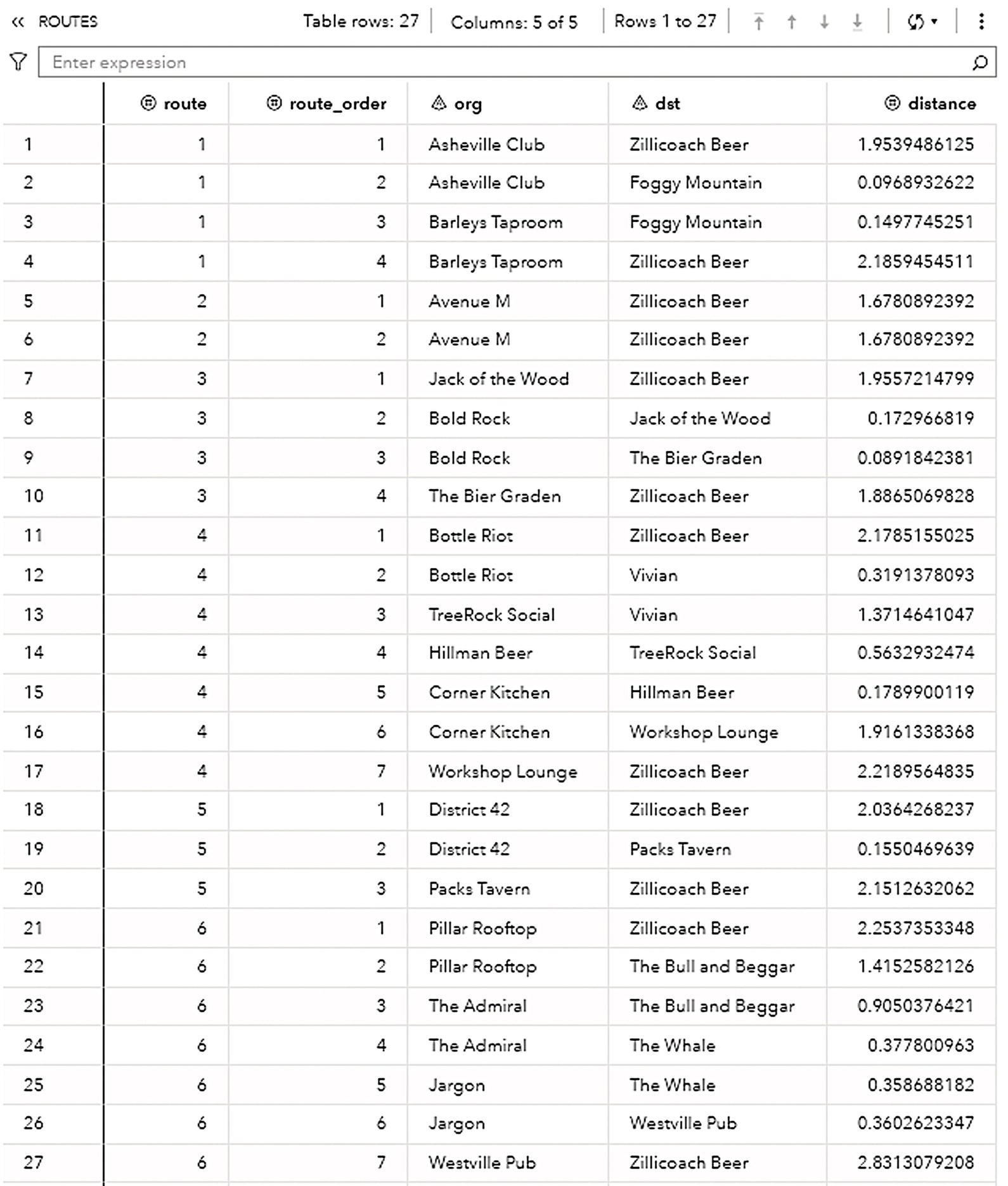

The output table ROUTES shown in Figure 5.16 presents the multiple trips the vehicle needs to perform in order to properly service all customers with their beer keg demands.

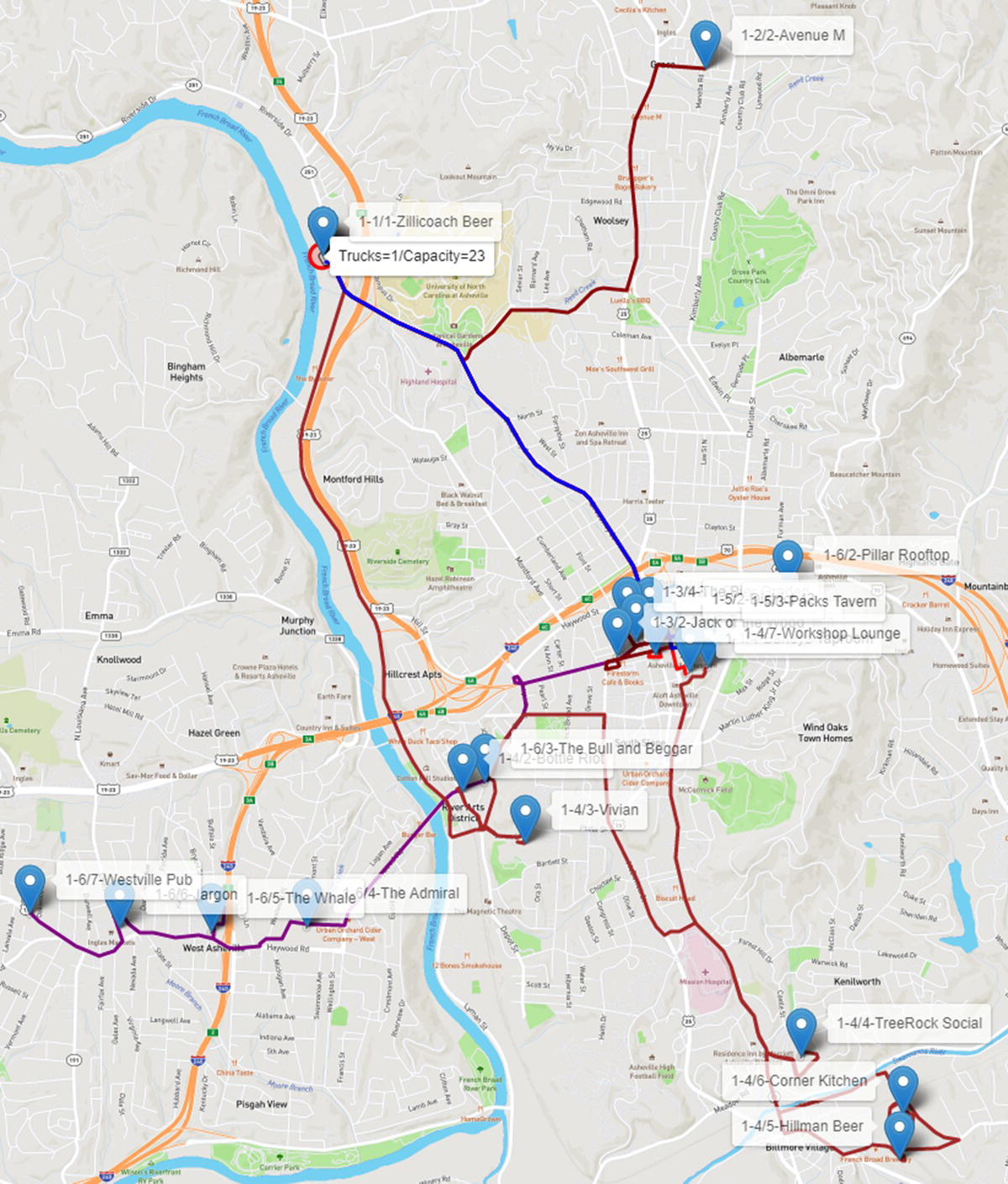

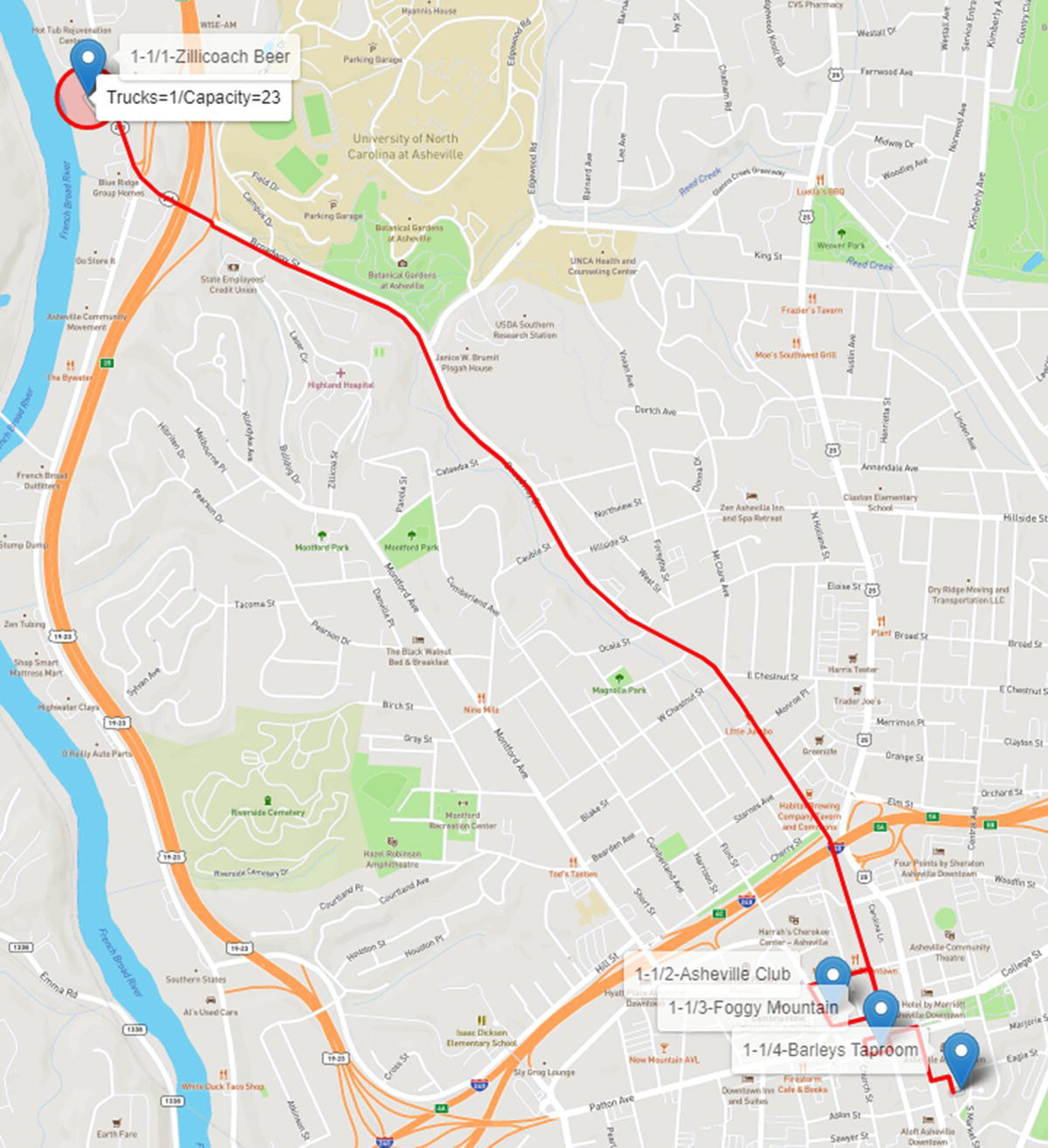

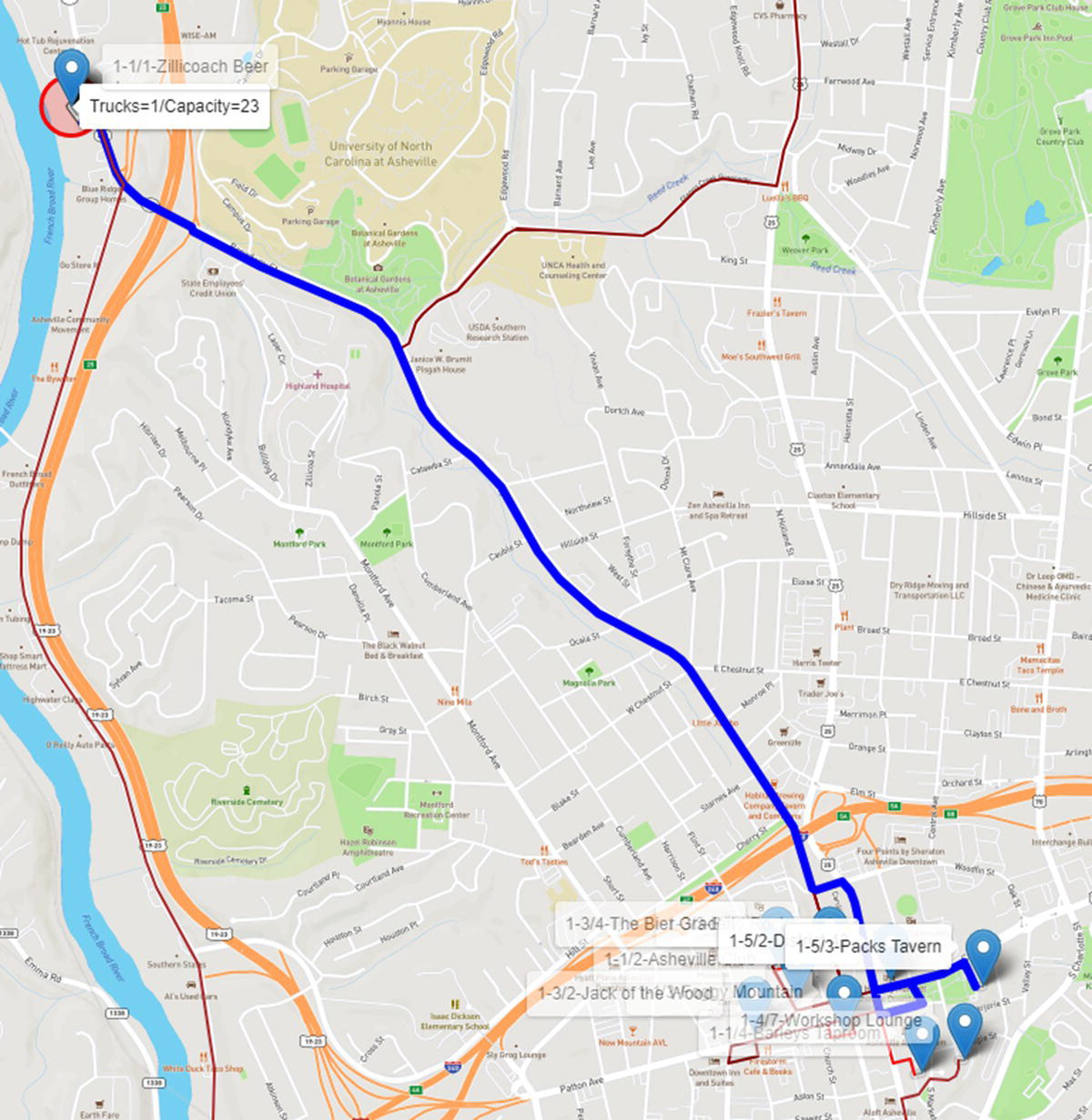

The VRP algorithm searches for the optimal number of trips the vehicle needs to perform, and the sequence of places to visit for each trip, in order to minimize the overall travel distance and properly supply the customers' demands. With one single vehicle, with a capacity of 23 beer kegs, this truck needs to perform six trips, going back and forth from the depot to the customers. Depending on the customers' demands, and the distances between them, some trips can be really short, like route 2 with one single visit to Avenue M, while some trips can be substantially longer, like routes 4 and 6 with six customer visits. Figure 5.17 shows the outcomes of the VRP algorithm, presenting the resulting routing plans in a map.
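A simple sanity check on the number of trips is the ratio between the total demand and the truck capacity, rounded up. The Python sketch below applies it to the 21 customer demands listed in the data step. It gives only a lower bound, because demands are indivisible and a perfect packing may not exist; in this case study the bound happens to match the six routes reported for a capacity of 23 and the four routes reported for a capacity of 30.

```python
# Demands of the 21 customers, in the order they appear in the data step
demands = [6, 10, 4, 7, 10, 9, 12, 5, 6, 8, 10, 4, 6, 2, 4, 3, 8, 1, 1, 1, 1]

def min_trips(demands, capacity):
    """Lower bound on the number of trips: total demand / capacity, rounded up."""
    total = sum(demands)
    return -(-total // capacity)  # integer ceiling division

total_demand = sum(demands)
print(total_demand)            # 118
print(min_trips(demands, 23))  # 6
print(min_trips(demands, 30))  # 4
```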

Let us take a step‐by‐step look at the overall routes. The first route departs from the brewery and serves three downtown restaurants. The truck delivers exactly 23 beer kegs and returns to the brewery to reload. Figure 5.18 shows the first route.

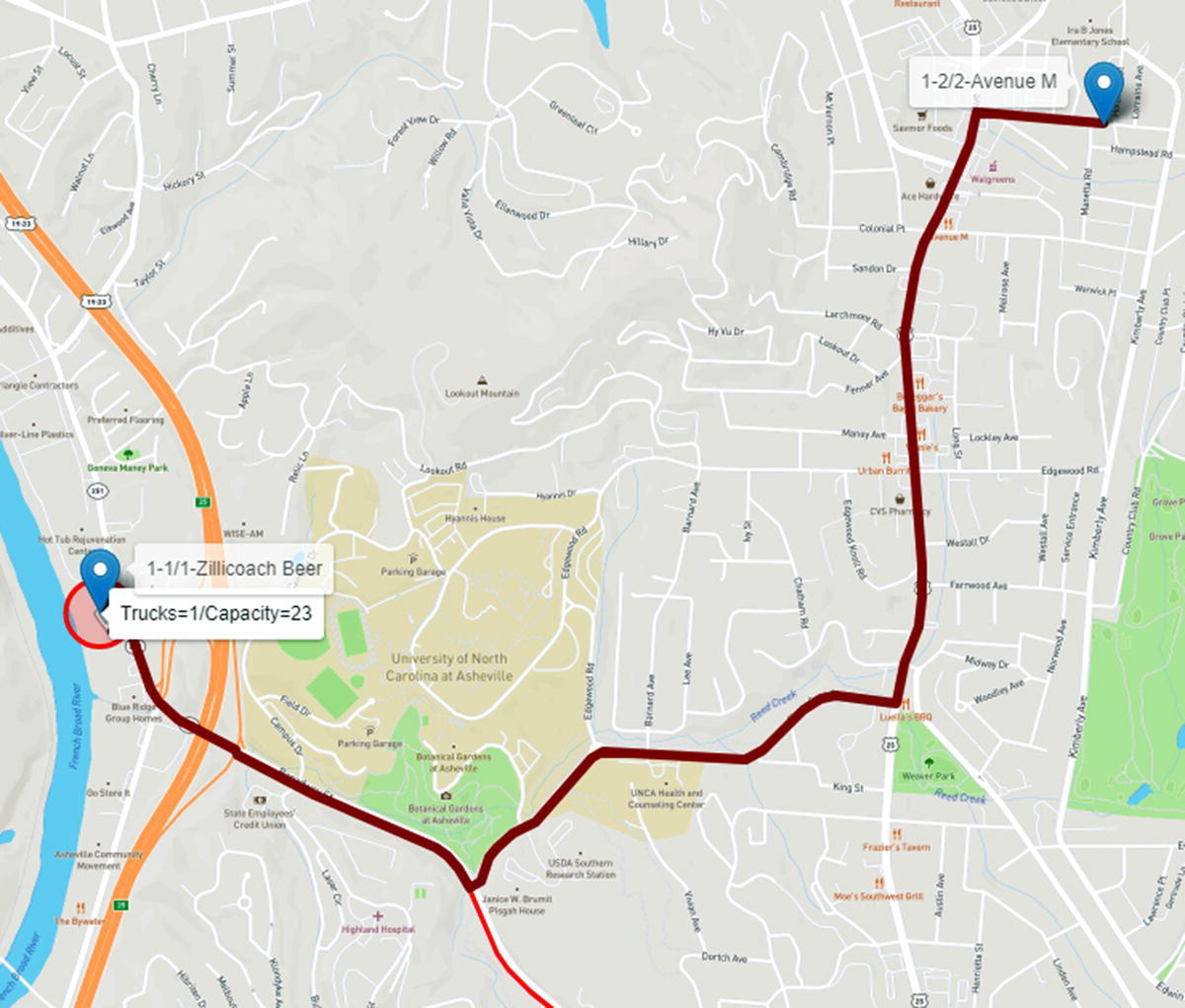

Logistically, the second route in the output table should be the last trip the truck makes, delivering the beer kegs that remain after the other customers' demands are supplied. This route has one single customer to visit, supplying only four beer kegs. To keep it simple, let us keep following the order of the routes produced by proc optnetwork, even though this route would be the last trip. The truck goes from the brewery to Avenue M and delivers four beer kegs. Figure 5.19 shows the second route.

Figure 5.15 Output results from the VRP algorithm in proc optnetwork.

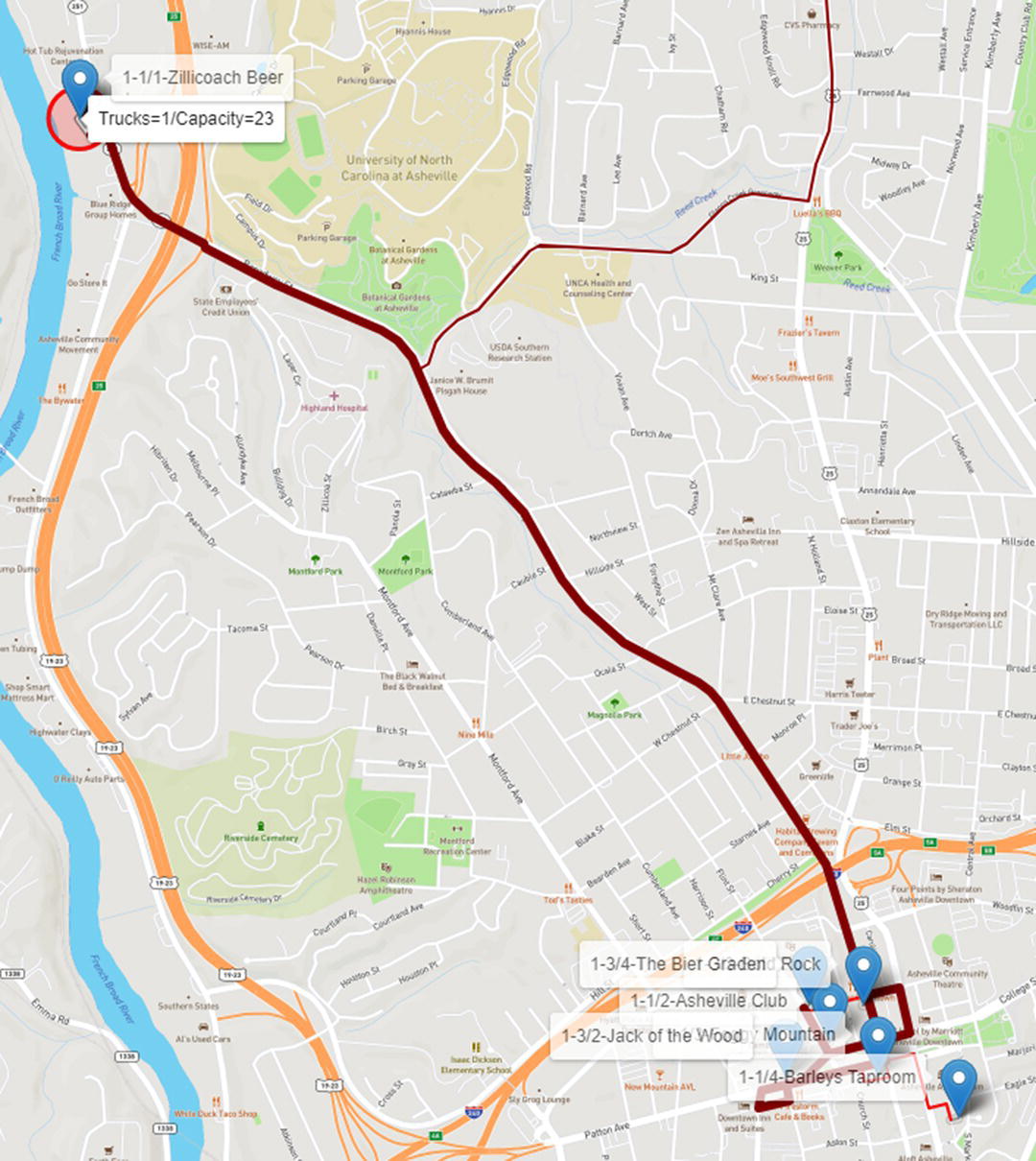

For the third route, the truck departs from the brewery and serves another three customers, delivering exactly 23 beer kegs (4, 9, and 10), which is the maximum capacity of the vehicle. Figure 5.20 shows the third route.

Notice that the initial route is similar to the first one, but now the truck is serving three different customers.

Once again, the truck returns to the brewery to reload and departs for the fourth route. In the fourth route, shown in Figure 5.21, the truck serves six customers, delivering 23 beer kegs (5, 1, 6, 6, 1, and 4).

Notice that the truck serves customers down to the south. It stops for two customers on the way to the south, continues on the routing serving another three customers down there, and before returning to the brewery to reload, it stops in the downtown to serve the last customer with the last four beer kegs.

Figure 5.16 Output table with each route and its sequence.

The fifth route is a short one, serving only two customers in downtown. The truck departs from the brewery but now not with full capacity as this route supplies only 22 beer kegs, 10 for the first customer, and 12 for the second. Figure 5.22 shows the fifth route.

Figure 5.17 Six routes for one truck with 23 capacity.

As most of the bars and restaurants are located downtown, and the vehicle has a limited capacity, the truck needs to make several trips there. Notice that, in all the figures, the different downtown routes are marked in different colors and thicknesses. The thicker dark line represents the fifth route, serving the customers in this particular trip.

The last trip serves customers in the west part of the town. However, there is still one customer in downtown to be served. Again, the truck serves six customers, delivering 23 beer kegs. Figure 5.23 shows the last route for one truck with a capacity of 23 beer kegs.

Notice that the truck departs from the brewery and goes straight to downtown to deliver the first three beer kegs to the Pillar Rooftop. Then, it goes to the west part of the town to continue delivering the kegs to the other restaurants.

As a tour, after delivering all the beer kegs and supplying all the customers' demands, the truck needs to return to the brewery.

As we can observe, with only one single vehicle, there are many trips to the downtown, where most of the customers are located. Eventually the brewery will realize that in order to optimize the delivery, it will need more trucks, or a truck with a bigger capacity. Let us see how these options work for the brewery.

Originally, the VRP algorithm in proc optnetwork computes the VRP considering only one single vehicle with a fixed capacity.

For this case study, to simplify the delivery process, we are just splitting the original routes by the available vehicles. Most of the work here is done while creating the map to identify which truck makes a particular set of trips. The following code shows how to present on the map the multiple trips performed by the available trucks.

Figure 5.18 Route 1 considering one truck with 23 capacity.

Figure 5.19 Route 2 considering one truck with 23 capacity.

Figure 5.20 Route 3 considering one truck with 23 capacity.

Figure 5.21 Route 4 considering one truck with 23 capacity.

Figure 5.22 Route 5 considering one truck with 23 capacity.

Figure 5.23 Route 6 considering one truck with 23 capacity.

    proc sql;
       create table routing as
          select a.place, a.demand,
                 case when a.route=. then 1 else a.route end as route,
                 a.route_order, b.lat, b.long
             from mycas.nodesout as a
                inner join places as b
                   on a.place=b.place
                      order by route, route_order;
    quit;

    proc sql noprint;
       select place, lat, long into :d, :latd, :longd
          from routing
             where demand=.;
    quit;

    proc sql noprint;
       select max(route)/&trucks+0.1 into :t from routing;
    quit;

    filename arq "&dm/routing.htm";

    data _null_;
       array color{20} $ color1-color20 ('red','blue','darkred','darkblue',…
       length linha $1024.;
       length linhaR $32767.;
       ar=route;
       set routing end=eof;
       retain linhaR;
       file arq;
       k+1;
       tn=int(route/&t)+1;
       if k=1 then
          do;
             put '<!DOCTYPE html>';
             put '<html>';
             put '<head>';
             put '<title>SAS Network Optimization</title>';
             put '<meta charset="utf-8"/>';
             put '<meta name="viewport" content="width=device-width, ...
             put '<link rel="stylesheet" href=" https://unpkg.com/leaf ...
             put '<script src=" https://unpkg.com/[email protected]/dist/l ...
             put '<link rel="stylesheet" href=" https://unpkg.com/leaf ...
             put '<script src=" https://unpkg.com/leaflet-routing-mach ...
             put '<style>body{padding:0;margin:0;}html,body,#mapid{he...
             put '</head>';
             put '<body>';
             put '<div id="mapid"></div>';
             put '<script>';
             put 'var mymap=L.map("mapid").setView([35.59560349262985...
             put 'L.tileLayer(" https://api.mapbox.com/styles/v1/{id}/ ...
             linha='L.circle(['||"&latd"||','||"&longd"||'],{radius:8...
             put linha;
             linhaR='L.Routing.control({waypoints:[L.latLng('||"&latd...
          end;
       if route>ar then
          do;
             if k > 1 then
                do;
                   linhaR=catt(linhaR,'],routeWhileDragging:fal...
                   put linhaR;
                   linhaR='L.Routing.control({waypoints:[L.latL...
                end;
          end;
       else
          linhaR=catt(linhaR,',L.latLng('||lat||','||long||')');
       linha='L.marker(['||lat||','||long||']).addTo(mymap).bindToolt"'("'|...
       put linha;
       if eof then
          do;
             linhaR=catt(linhaR,'],routeWhileDragging:false,showAlternatives:fals...
             put linhaR;
             put '</script>';
             put '</body>';
             put '</html>';
          end;
    run;
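The piece of this code that splits the routes among the trucks is the pair of expressions `max(route)/&trucks+0.1` and `int(route/&t)+1`. The Python sketch below reproduces just that arithmetic so the assignment can be checked by hand; the function name `assign_truck` is illustrative and is not part of the SAS program.

```python
def assign_truck(route: int, n_routes: int, trucks: int) -> int:
    """Truck number for a route, following t = max(route)/trucks + 0.1
    and tn = int(route/t) + 1 from the SAS data step."""
    t = n_routes / trucks + 0.1
    return int(route / t) + 1

# Six routes split between two trucks
two_trucks = [assign_truck(r, 6, 2) for r in range(1, 7)]
print(two_trucks)   # [1, 1, 1, 2, 2, 2]

# Four routes split among four trucks
four_trucks = [assign_truck(r, 4, 4) for r in range(1, 5)]
print(four_trucks)  # [1, 2, 3, 4]
```

The small offset of 0.1 keeps the last route of each block in the same truck as the routes before it, instead of spilling over to the next truck. Note that this is a sequential split of the routes already computed for a single vehicle, not a new optimization for multiple vehicles.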

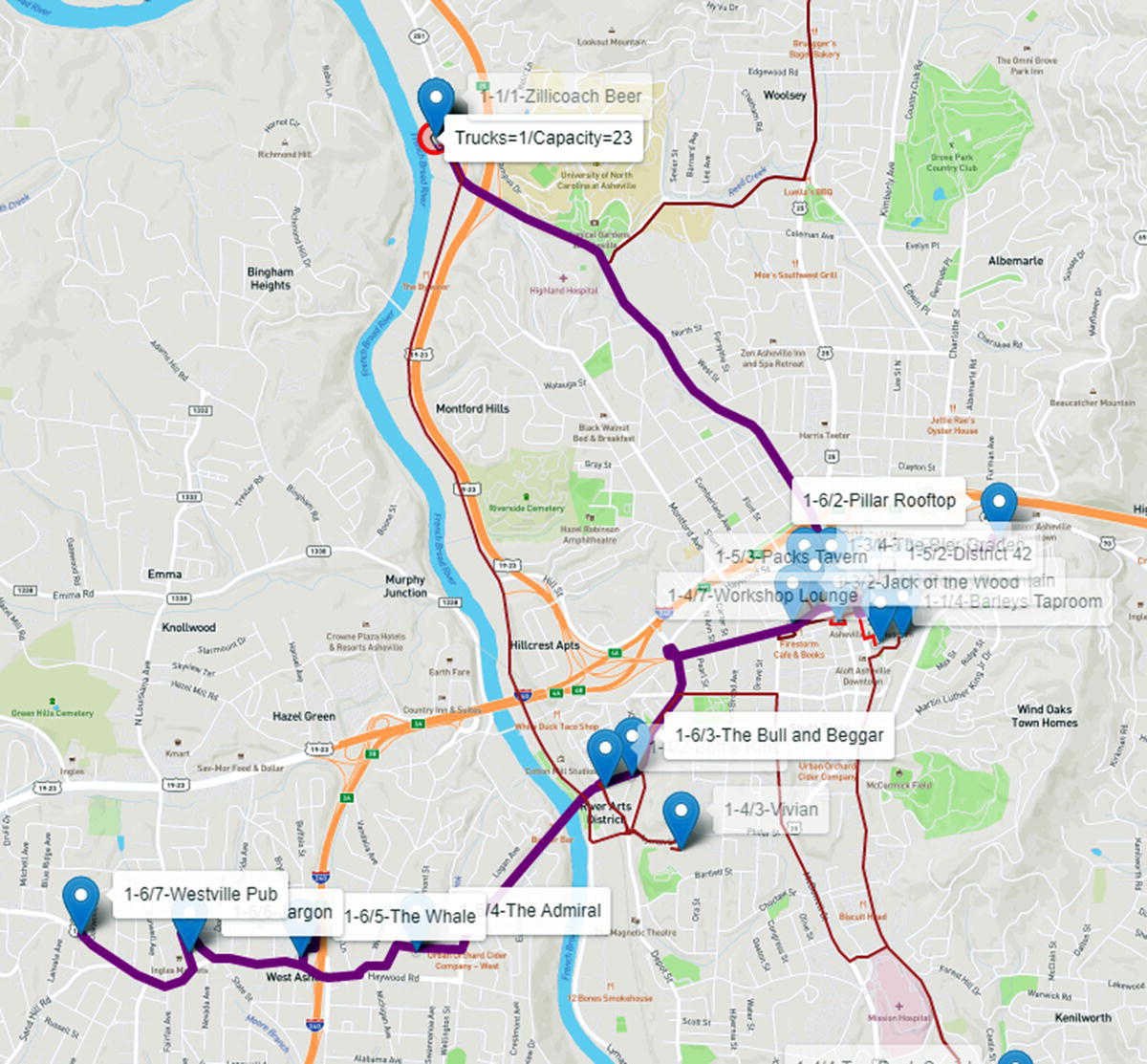

On the top of the map, where the brewery is located, we can see the number of trucks (2) and the capacity of the trucks (23) assigned to the depot. Now, each truck is represented by a different line size. First routes have thicker lines. Last routes have thinner lines so we can see the different trips performed by the distinct trucks, sometimes overlapping.

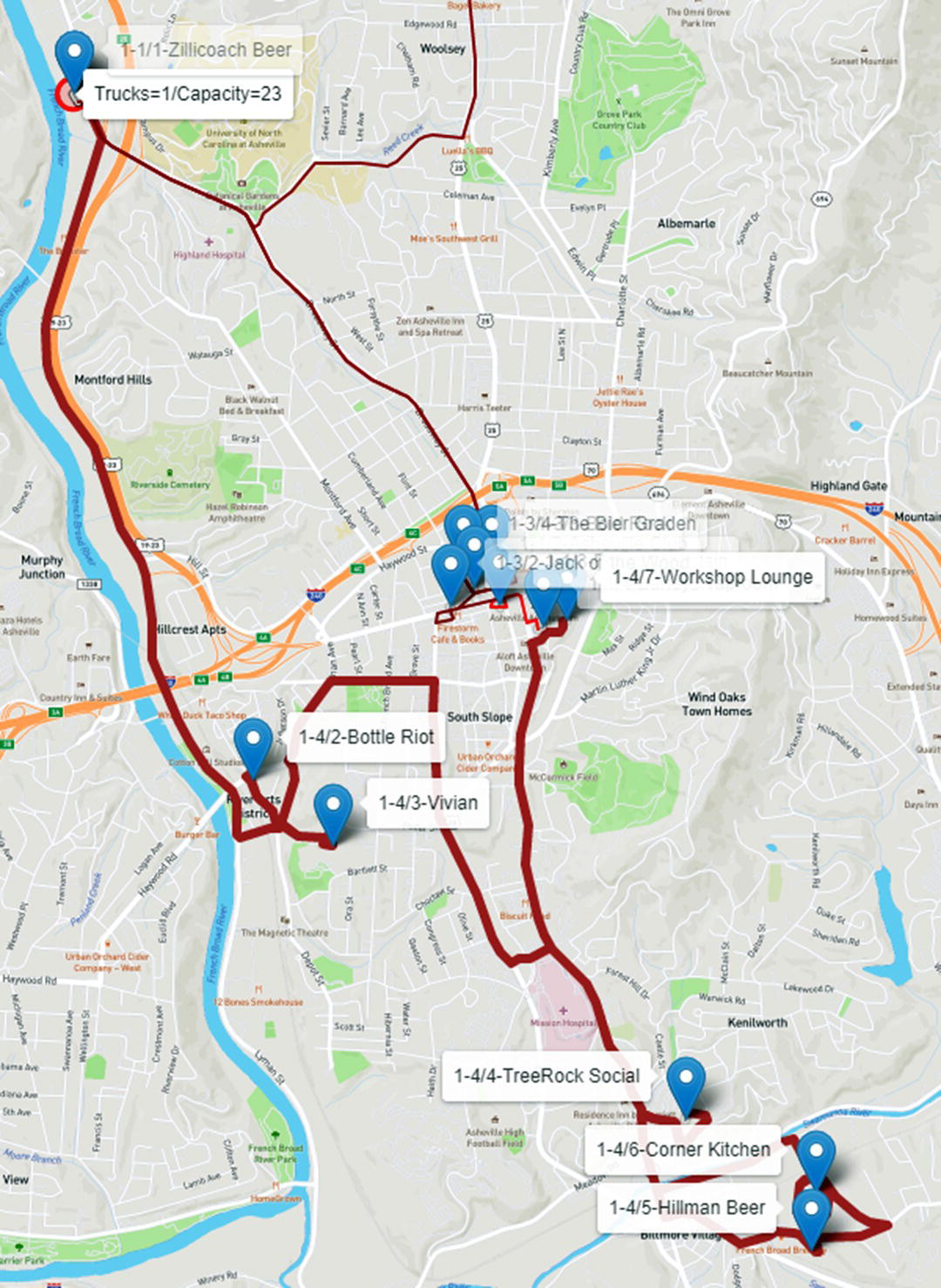

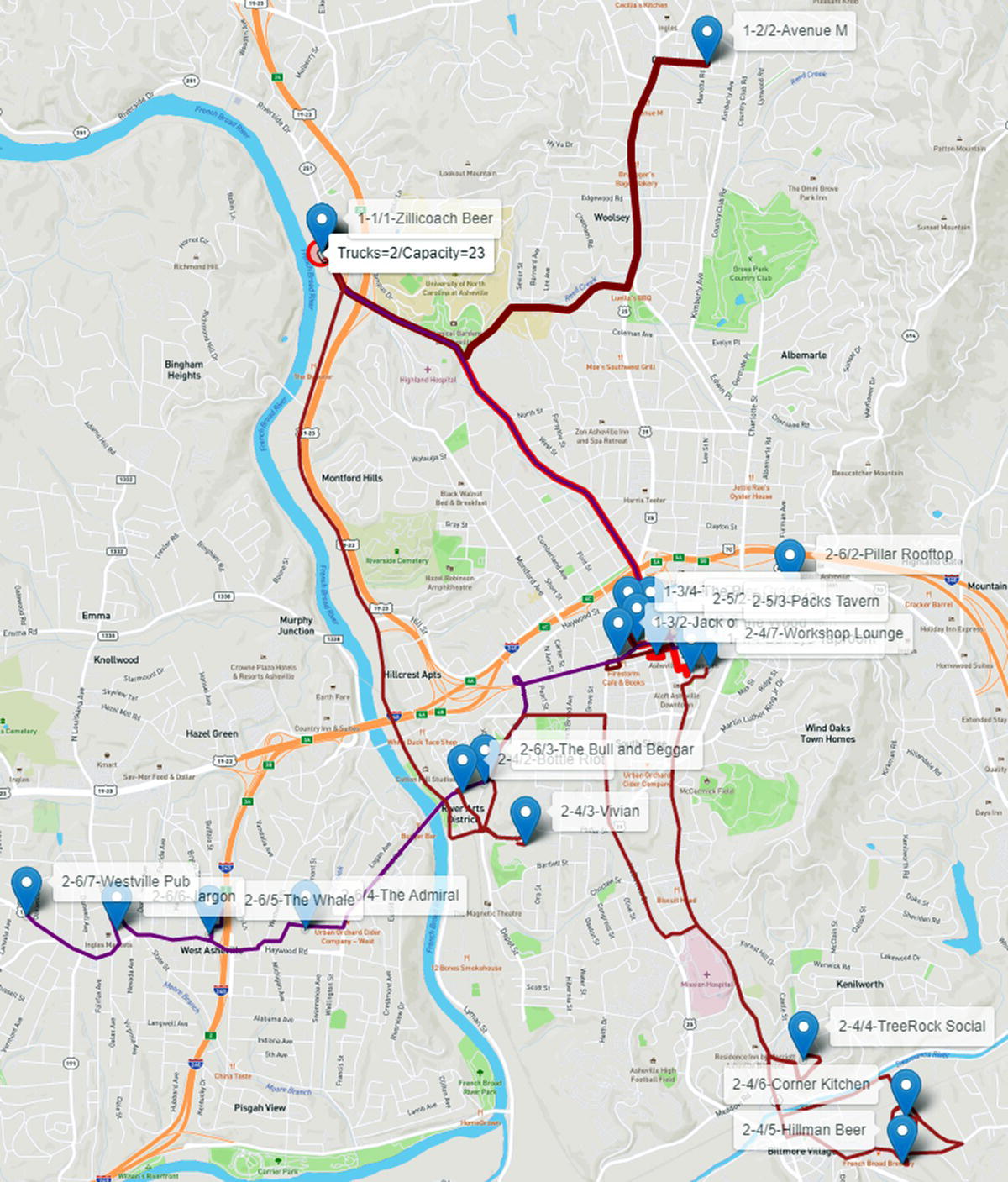

The first truck makes routes 1, 2, and 3. Route 1 (thicker red) visits three customers in downtown, identified by the labels 1‐1/2‐Asheville Club, 1‐1/3‐Foggy Mountain, and 1‐1/4‐Barleys Taproom. This truck returns to the brewery, reloads, and visits in route 2 (thicker dark red) one single customer in the north, identified by the label 1‐2/2‐Avenue M. The truck returns to the depot, reloads, and visits three other customers in route 3 (maroon), once again in downtown, identified by the labels 1‐3/2‐Jack of the Wood, 1‐3/3‐Bold Rock, and 1‐3/4‐The Bier Garden. It returns to the depot and ends its trips. In parallel, truck 2 goes west and down to the south in route 4 (brown), visiting six customers, labels 2‐4/2, 2‐4/3, 2‐4/4, 2‐4/5, 2‐4/6, and 2‐4/7. It returns to the depot, reloads, and goes for route 5 (violet) to visit two customers in downtown, identified by the labels 2‐5/2 and 2‐5/3. It returns to the brewery, reloads, and goes for route 6 (purple) to visit the final six customers: one to the east, label 2‐6/2, one to the west, label 2‐6/3, and four in the far western part of the city, labels 2‐6/4, 2‐6/5, 2‐6/6, and 2‐6/7. The trucks return to the brewery and all routes are done in much less time. Figure 5.24 shows the routes for both trucks.

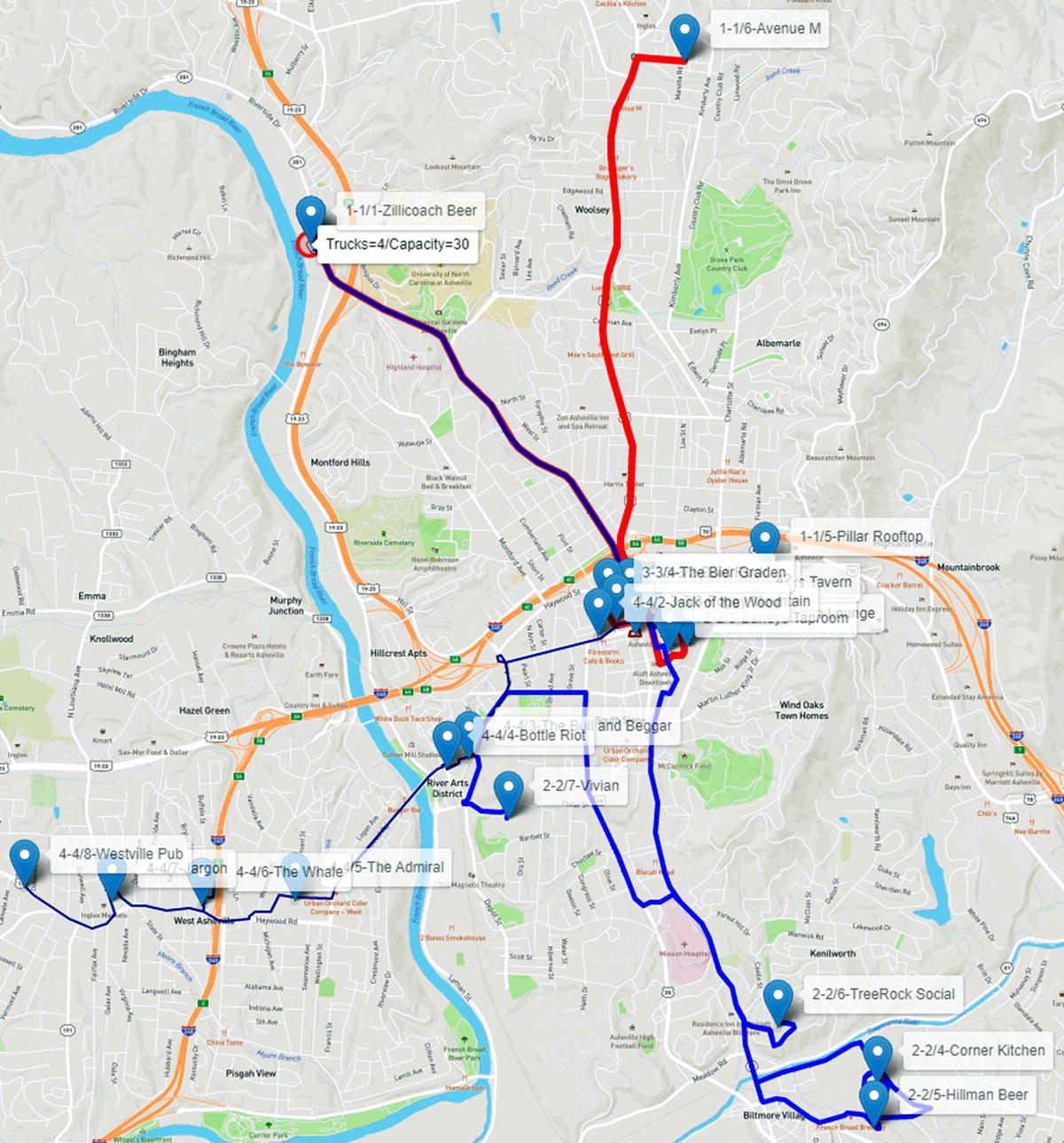

Finally, let us see what happens when we increase the number of trucks to 4 and the capacity of the trucks to 30 beer kegs. Now there are only four routes, and each truck will perform one trip to visit the customers.

The first truck takes route 1 (red) to downtown and the north part of the city, visiting five customers, labeled 1‐1/2 to 1‐1/6. The second truck takes route 2 (blue), visiting two customers in downtown, labeled 2‐2/2 and 2‐2/3, three customers in the south, labeled 2‐2/4 to 2‐2/6, and one customer in the west, labeled 2‐2/7, before returning to the depot. Truck 3 takes route 3 (dark red), visiting three customers in downtown, labeled 3‐3/2 to 3‐3/4. Finally, truck 4 takes route 4 (dark blue), visiting seven customers in the west and far west part of the city, labeled 4‐4/2 to 4‐4/8. Figure 5.25 shows the routes for all four trucks.

In this scenario, the downtown is served by trucks 1, 2, and 3, the north of the city is served by truck 1, while the south by truck 2. Finally, the west part of the city is served by truck 4.

Increasing the number of trucks and the capacity of the trucks certainly reduces the time to supply all customers' demands. For example, in this particular case study, considering just the routing time and not the time to load and unload the beer kegs, in the first scenario, with one single truck, the brewery would take 122 minutes to cover all six trips and supply the demand of the 21 customers. In the second scenario, with two trucks of the same capacity, the brewery would take 100 minutes to cover all six trips, three for each truck, an 18% reduction in the overall time. Finally, in the last scenario, with four trucks and a slightly higher capacity, the brewery would take only 60 minutes to cover all four trips, one for each truck, to serve all 21 customers around the city, a 51% reduction in the overall time.
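The reductions quoted above follow directly from the three routing times. As a small worked example in Python (the function name `reduction_pct` is only illustrative):

```python
def reduction_pct(baseline_minutes, new_minutes):
    """Percentage reduction of the routing time relative to the baseline."""
    return 100 * (baseline_minutes - new_minutes) / baseline_minutes

one_truck, two_trucks_time, four_trucks_time = 122, 100, 60

print(round(reduction_pct(one_truck, two_trucks_time)))   # 18
print(round(reduction_pct(one_truck, four_trucks_time)))  # 51
```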

Figure 5.24 Routes considering two trucks with 23 capacity.

The previous two case studies were created to advertise the SAS Viya Network Analytics features, particularly the new TSP and VRP algorithms. For that reason, all the code used to create the cases was added to the case study description. Also, both case studies are pretty simple and straightforward, using only the TSP and VRP algorithms, with minor data preparation and post‐analysis procedures. The following case studies were real implementations at customers, combining multiple analytical procedures, such as data preparation and feature extraction, machine learning models, and statistical analysis, among others. The code for these cases is substantially larger and much more complex than the code created for the previous cases. For all these reasons, the code created for the following case studies is omitted.

5.4 Network Analysis and Supervised Machine Learning Models to Predict COVID‐19 Outbreaks

Network analytics is the study of connected data. Every industry, in every domain, has information that can be analyzed in terms of linked data in a network perspective. Network analytics can be applied to understand the viral effects in some traditional business events, such as churn and product adoption in telecommunications, service consumption in retail, fraud in insurance, and money laundering in banking. In this case study, we are applying network analytics to correlate population movements to the spread of the coronavirus. At this stage, we attempt to identify specific areas to target for social containment policies, either to better define shelter‐in‐place measures or to gradually open locations for the new normal. Notice that this solution was developed at the beginning of the COVID‐19 pandemic, around April and May of 2020. At some point, identifying geographic locations associated with outbreaks became almost useless, as all locations around the globe were affected by the virus.

Figure 5.25 Routes considering four trucks with 30 capacity.

Using mobile data, cell phone penetration, carriers' market share, and population, we can infer the physical volume of movements over time between geographic areas. Based on this data, we use network algorithms to define relevant key performance indicators (KPIs) by geographic area to better understand the pattern of the spread of the virus according to the flow of people across locations.
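The exact inference used in this solution is not described here. As one plausible reading, and purely as an assumption, the number of devices a carrier observes moving between two areas can be scaled up by the share of the population that owns a cell phone and by the carrier's market share. The Python sketch below illustrates that scaling with made‐up numbers; the function name and its parameters are hypothetical.

```python
def estimate_people(observed_devices, penetration, market_share):
    """Scale devices seen by one carrier to an estimate of people moving.

    Assumption: the carrier's subscribers move like the rest of the population.
    penetration  = share of the population with a cell phone (0 to 1)
    market_share = share of cell phones served by this carrier (0 to 1)
    """
    return observed_devices / (penetration * market_share)

# Made-up example: 120 devices observed moving from area A to area B in one day
people_a_to_b = estimate_people(120, penetration=0.8, market_share=0.3)
print(round(people_a_to_b))  # 500
```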

These KPIs drive the creation of a set of interactive visualization dashboards and reports using visual analytics – which enable the investigation of mobility behavior and how key locations affect the spread of the virus across geographic areas over time.

Using network analytics and the KPIs, we can understand the network topology and how this topology is correlated to the spread of the virus. For example, one KPI can identify key locations that, according to the flow of people, contribute most to the velocity of the spread of the virus.

Another KPI can identify locations that serve as gatekeepers, locations that do not necessarily have a high number of positive cases but serve as bridges that spread the virus to other locations by flowing a substantial number of people across geographic regions. Another important KPI helps in understanding clusters of locations that have an elevated level of interconnectivity with respect to the mobility flow, and how these interconnected flows impact the spread of the virus among even distant geographic areas.
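To make the idea of a gatekeeper location concrete, the Python sketch below computes betweenness centrality, a common network metric for nodes that sit on many shortest paths. It is not the KPI definition used in this solution, which is not detailed here: it uses Brandes' algorithm on a small, unweighted, made‐up graph, whereas the real mobility network is weighted by the flow of people.

```python
from collections import deque

def betweenness(adj):
    """Brandes' algorithm for an unweighted directed graph.
    adj maps every node to the list of nodes it sends people to."""
    score = {v: 0.0 for v in adj}
    for s in adj:
        stack = []
        preds = {v: [] for v in adj}
        sigma = {v: 0 for v in adj}   # number of shortest paths from s
        dist = {v: -1 for v in adj}
        sigma[s], dist[s] = 1, 0
        queue = deque([s])
        while queue:                   # breadth-first search from s
            v = queue.popleft()
            stack.append(v)
            for w in adj[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        delta = {v: 0.0 for v in adj}
        while stack:                   # accumulate dependencies backwards
            w = stack.pop()
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != s:
                score[w] += delta[w]
    return score

# Hypothetical locations: two pairs of towns that only reach each other via "Hub"
adj = {
    "Hub": ["North1", "North2", "South1", "South2"],
    "North1": ["Hub", "North2"],
    "North2": ["Hub", "North1"],
    "South1": ["Hub", "South2"],
    "South2": ["Hub", "South1"],
}
scores = betweenness(adj)
print(max(scores, key=scores.get))  # Hub
```

In this toy network, every trip between the northern and southern towns passes through the hub, so the hub is the only location with a nonzero score, even though nothing in the graph says it has many positive cases.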

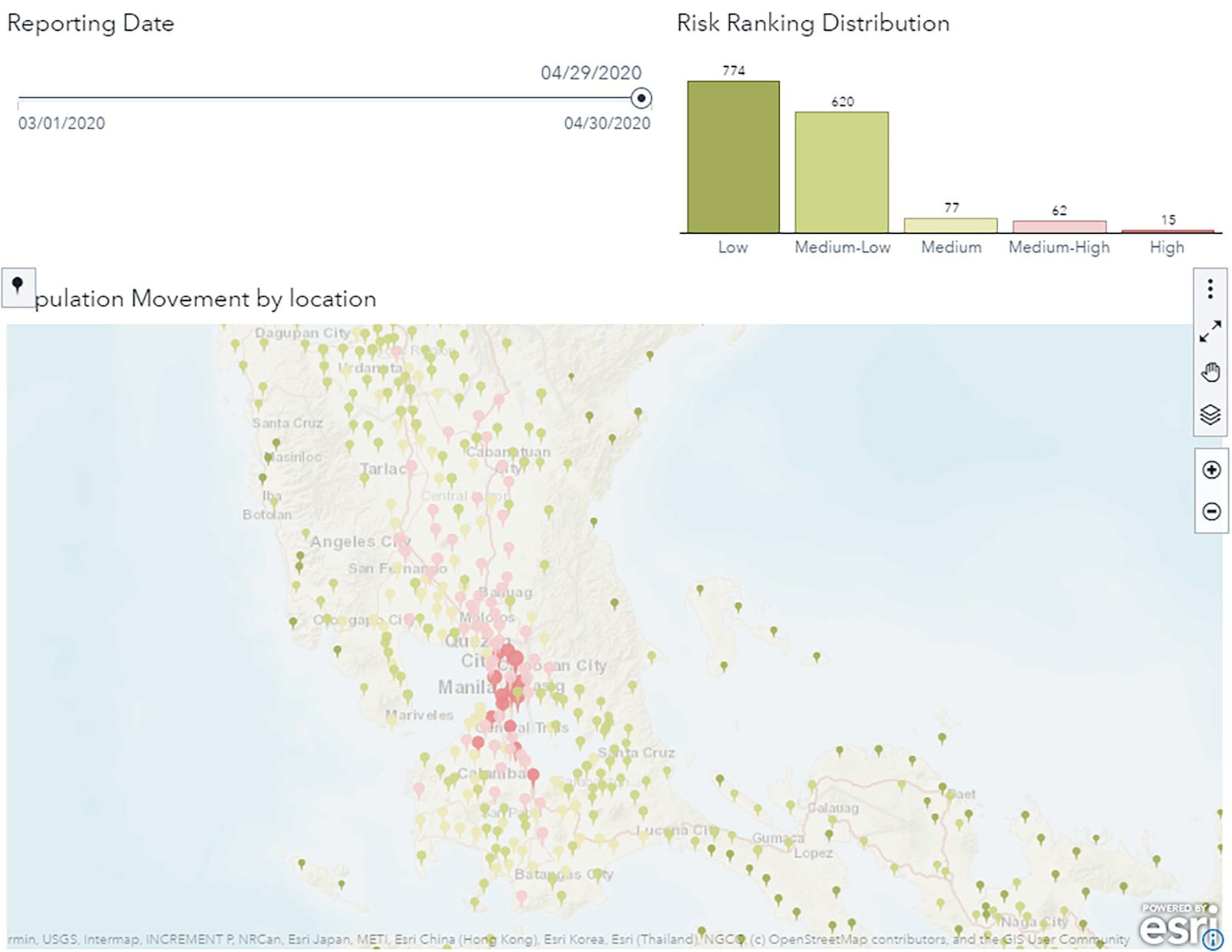

Several dashboards were created (in SAS Visual Analytics) in order to provide an interactive view, over time, of the mobility data and the health information, combined with the network KPIs, or the network metrics computed based on the mobility flows over time.

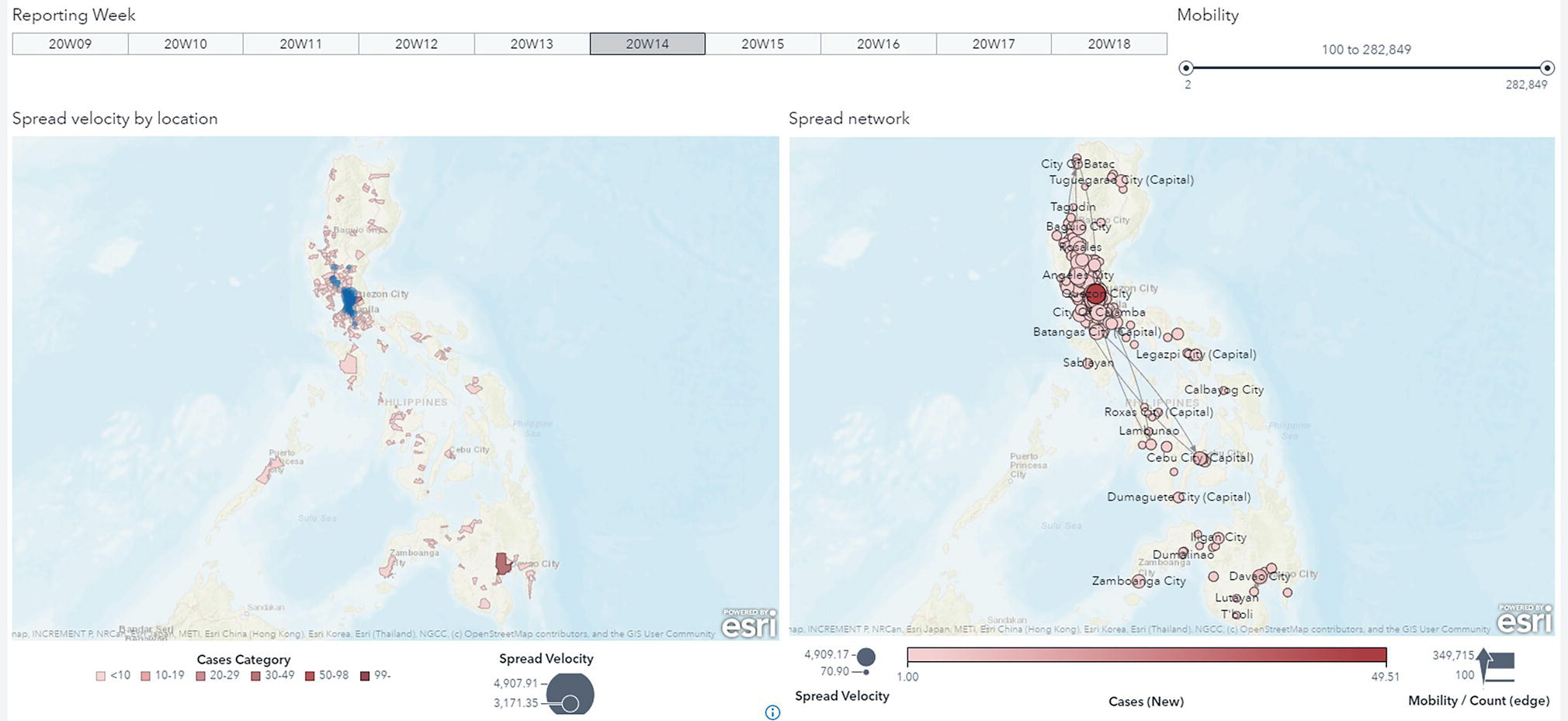

Figure 5.26 shows how population movements affect the COVID‐19 spread over the weeks in particular geographic locations. On the map, the blue circles indicate key locations identified by centrality metrics computed by the network algorithms. These locations play a key role in flowing people across regions. We can see the shades of red representing the number of positive cases over time. Notice that the key locations are also hot spots for the virus, presenting a substantial number of cases. These key locations are central to the flow of people in and out of geographic areas, even if those areas are distant from each other.

Figure 5.26 Population movement and COVID‐19 outbreaks over the weeks.

Notice that all those hot spots on the map are connected to each other by the flow of people. In other words, a substantial number of people flowing in and out between locations can affect the spread of the virus, even across a wide geographic region. The mobility behavior tells us how people travel between locations, and the population movement index basically tells us that a great volume of people flowing in and out increases the likelihood of the virus also flowing in and out between locations.

Here, we correlate the movement behavior and the spread of the virus over time. On the left side of the map, the areas in shades of red represent locations with positive cases, and the blue bubbles represent the spread velocity KPI. Note that most of the red areas are correlated to key locations highlighted by the KPI. On the right side of the map, you can see the in and out flows between all these locations, which in fact drive the creation of this KPI. You can easily see how the flows between even distant locations can also carry the virus across widespread geographic areas.

On the right side, the shades of red indicate how important these locations are in spreading the virus. These locations play an important role in connecting geographic regions by flowing people in and out over time. The right‐side map shows how all those hot spots on the left‐side map are connected to each other by the flow of people. The side‐by‐side maps show how movements between locations affect the spread of the virus.

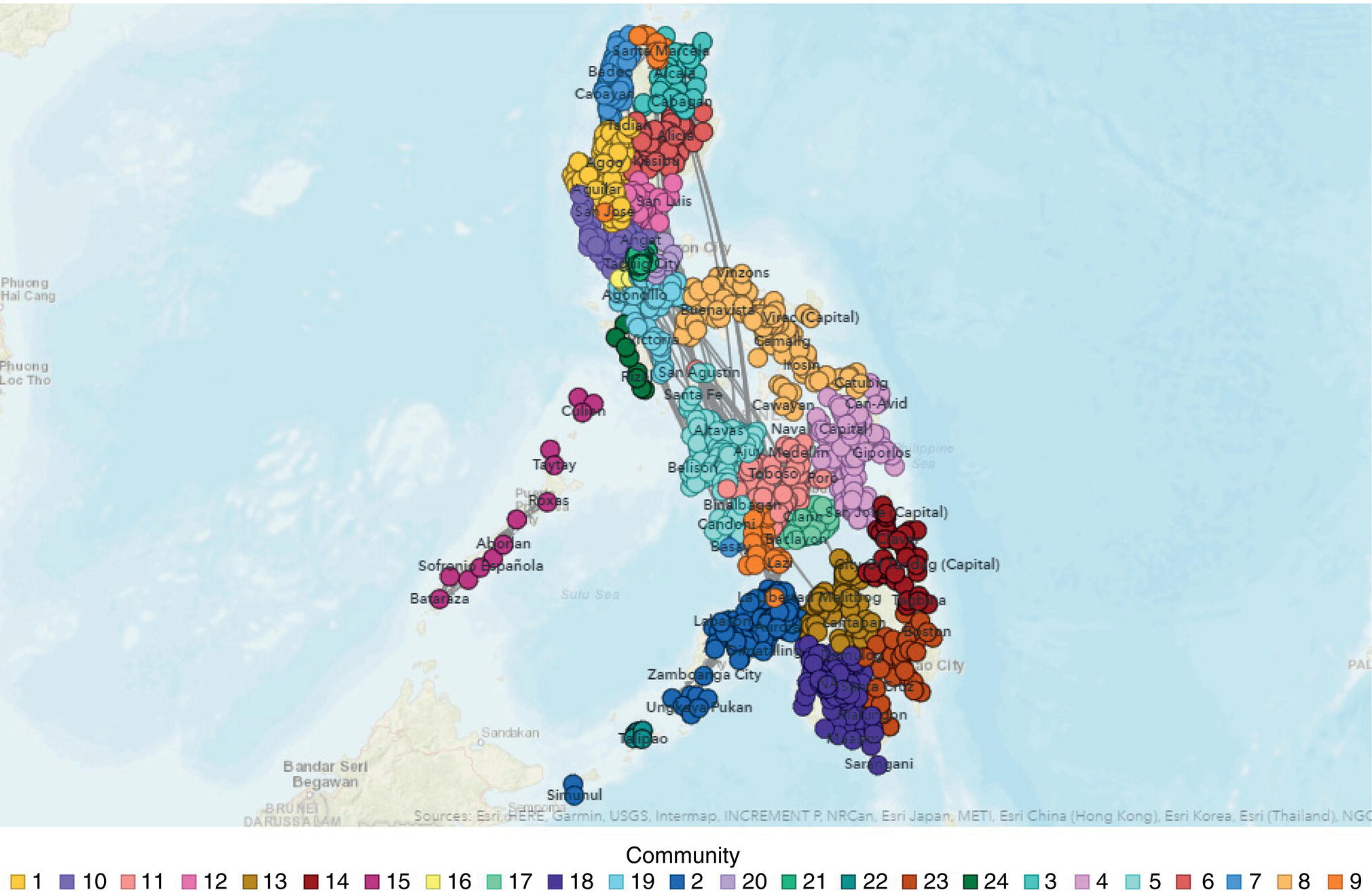

As we commonly do when applying network analysis for business events, particularly in marketing, we performed community detection to understand how the mobility behavior groups locations and the flow of people traveling between them. It is no surprise that most of the communities group together locations that are geographically close to each other. That means people tend to travel to nearby locations. Of course, there are people who probably need to commute long distances. But most people try to somehow stay close to work, school, or any community important to them. If they must travel constantly to the same place, it makes sense to live as close as possible to that place. Therefore, based on the in and out flows of people traveling across geographic locations, most communities comprise locations in close proximity. In terms of virus spread, this information can be quite relevant. As one location turns out to be a hot spot, all other locations in the same community might eventually be at a higher risk, as the number of people flowing between locations inside communities is greater than between locations in different communities. Figure 5.27 shows the communities identified by the network analysis algorithm based on the in and out flows of people across the geographic locations.

Figure 5.27 Communities based on the population movements.

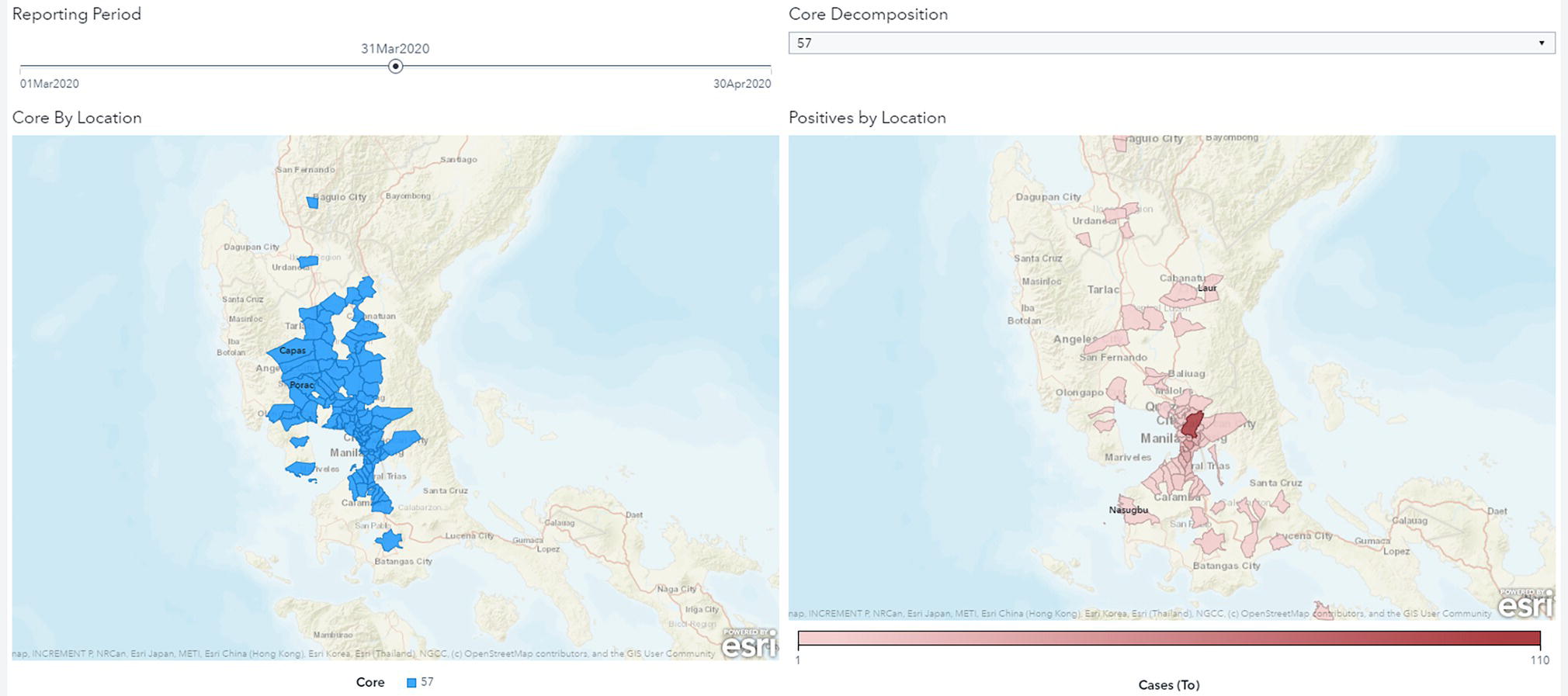

Core decomposition is a way of clustering locations based on similar levels of interconnectivity. Here, interconnectivity means mobility. Core locations do not necessarily show a correlation to geographic proximity; instead, they show a correlation to interconnectivity, or how close locations are to each other in terms of the level of movements between them.

One of the most important outcomes from the core decomposition is its high correlation to the wider spread of the virus. Locations in the most cohesive core correlate to locations where new positive cases arise over time. By identifying cores, social containment policies can be more proactive in identifying groups of locations that should be quarantined together, rather than simply relying on geographic proximity to hot spots.

Locations within the most cohesive core are not necessarily geographically close, but hold between them a high level of interconnectivity, which means they consistently flow people in and out between them. Then they spread the virus wider. This explains the spread of the virus over time throughout locations geographically distant from each other, but close in terms of interconnectivity. Figure 5.28 shows the most cohesive core in the network and how the locations within that core are correlated to the spread of the virus across multiple geographic regions.
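For readers less familiar with core decomposition, the following Python sketch shows the standard peeling procedure on a tiny, made‐up, unweighted graph. It is an illustration only: the node names are hypothetical, and the decomposition in this case study is based on mobility flows rather than on simple unweighted connections.

```python
def core_numbers(adj):
    """Core number of each node of an undirected graph (node -> set of neighbors).

    Repeatedly peel off the node with the smallest remaining degree;
    the core number is the largest minimum degree seen up to that point.
    """
    degree = {v: len(nbrs) for v, nbrs in adj.items()}
    remaining = set(adj)
    core = {}
    k = 0
    while remaining:
        v = min(remaining, key=degree.get)  # least connected remaining node
        k = max(k, degree[v])
        core[v] = k
        remaining.remove(v)
        for w in adj[v]:
            if w in remaining:
                degree[w] -= 1
    return core

# Four tightly interconnected cities (A to D) plus a chain E, F hanging off A
adj = {
    "A": {"B", "C", "D", "E"},
    "B": {"A", "C", "D"},
    "C": {"A", "B", "D"},
    "D": {"A", "B", "C"},
    "E": {"A", "F"},
    "F": {"E"},
}
core = core_numbers(adj)
print(sorted(core.items()))
```

Cities A to D form the most cohesive core (core number 3) regardless of how far apart they might be on a map, while E and F stay in the outer core (core number 1).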

A combination of network metrics, or network centralities, creates important KPIs to describe the network topology, which explain the mobility behavior and then how the virus spreads throughout geographic locations over time.

Considering a specific timeframe, we can see the number of positive cases rising in some locations by the darker shades of red on the map. At the same time, we can see the flow of people between some of those hot spots. We see a large number of people flowing between those areas, spreading the virus across different regions even if they are geographically distant from each other.

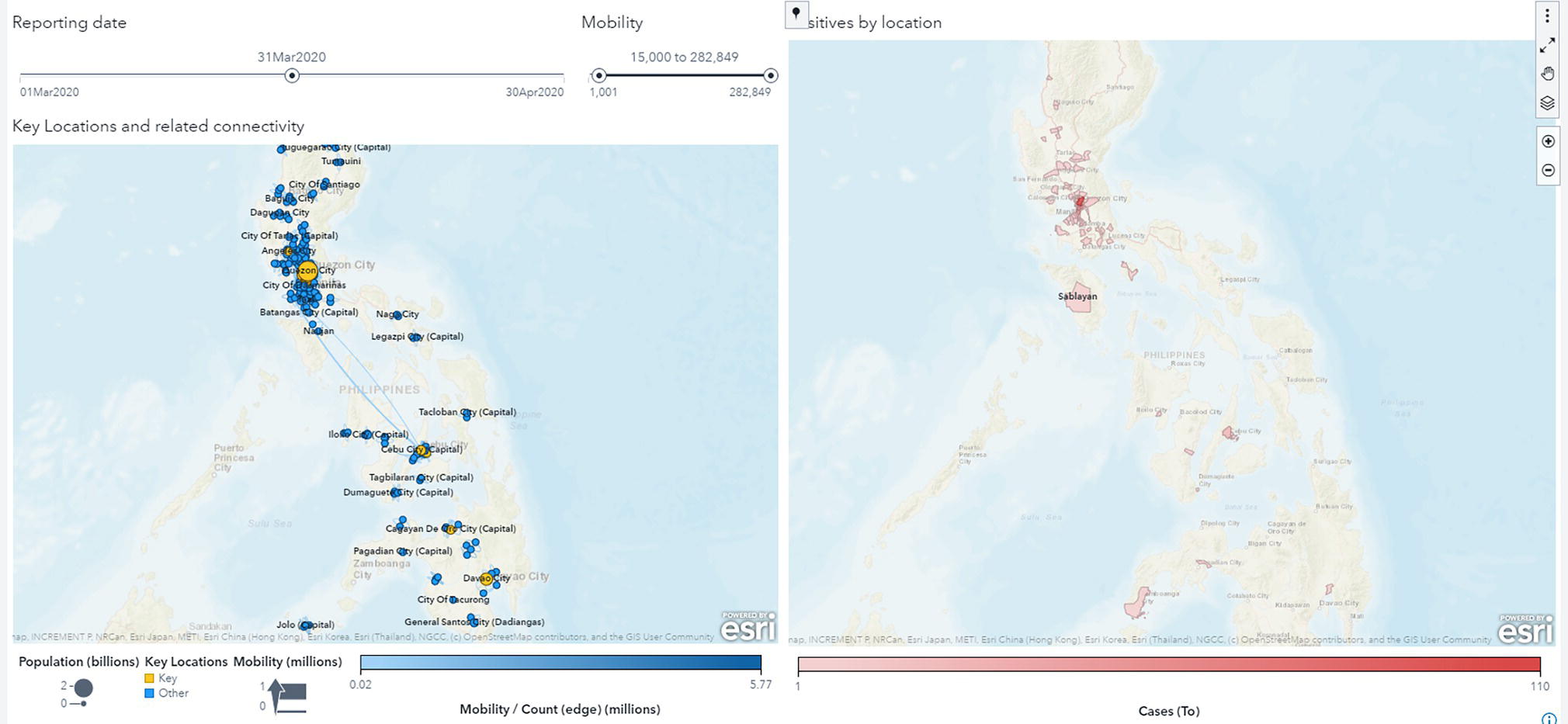

As time goes by, we notice the dark shades of red spreading farther from the initial hot spots, and we also notice the flow of people between those locations. Again, the great volume of people moving from one location to another explains the spread of the virus throughout distant geographic regions. Even as we move farther from the initial hot spots, we still see a substantial flow of people between locations involved in the spread of the virus. The mobility behavior, or the flow of people between locations, explains the spread across the most distant regions in the country. Figure 5.29 shows the key locations based on the centrality measures and the spread of the virus across the country.

Figure 5.28 Key cities highlighted by the network metrics and the hot spots for COVID-19.

Figure 5.29 Key locations based on the network centrality measures.

A series of networks was created based on the population movements between geographic regions and the number of positive cases in these regions over time. For each network metric and each geolocation, we compute the correlation coefficient of the change in the network metric to the change in the number of positive cases. These coefficients provide evidence supporting the hypothesis that the way the network evolves over time in terms of mobility is directly correlated to the way the number of positive cases changes across regions.
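The computation described above can be sketched in a few lines of Python. The weekly values below are made up for a single hypothetical location, and the Pearson coefficient is assumed as the correlation measure, since the text does not state which coefficient was used.

```python
import math

def changes(series):
    """Period-over-period change of a time series."""
    return [b - a for a, b in zip(series, series[1:])]

def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    return cov / (sd_x * sd_y)

# Made-up weekly values for one location
centrality = [0.10, 0.12, 0.15, 0.21, 0.30]   # a network metric over five weeks
positive_cases = [5, 9, 14, 27, 44]           # positive cases in the same weeks

r = pearson(changes(centrality), changes(positive_cases))
print(f"{r:.3f}")
```

A coefficient close to 1 for a location indicates that its network metric and its number of positive cases tend to rise and fall together from week to week.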

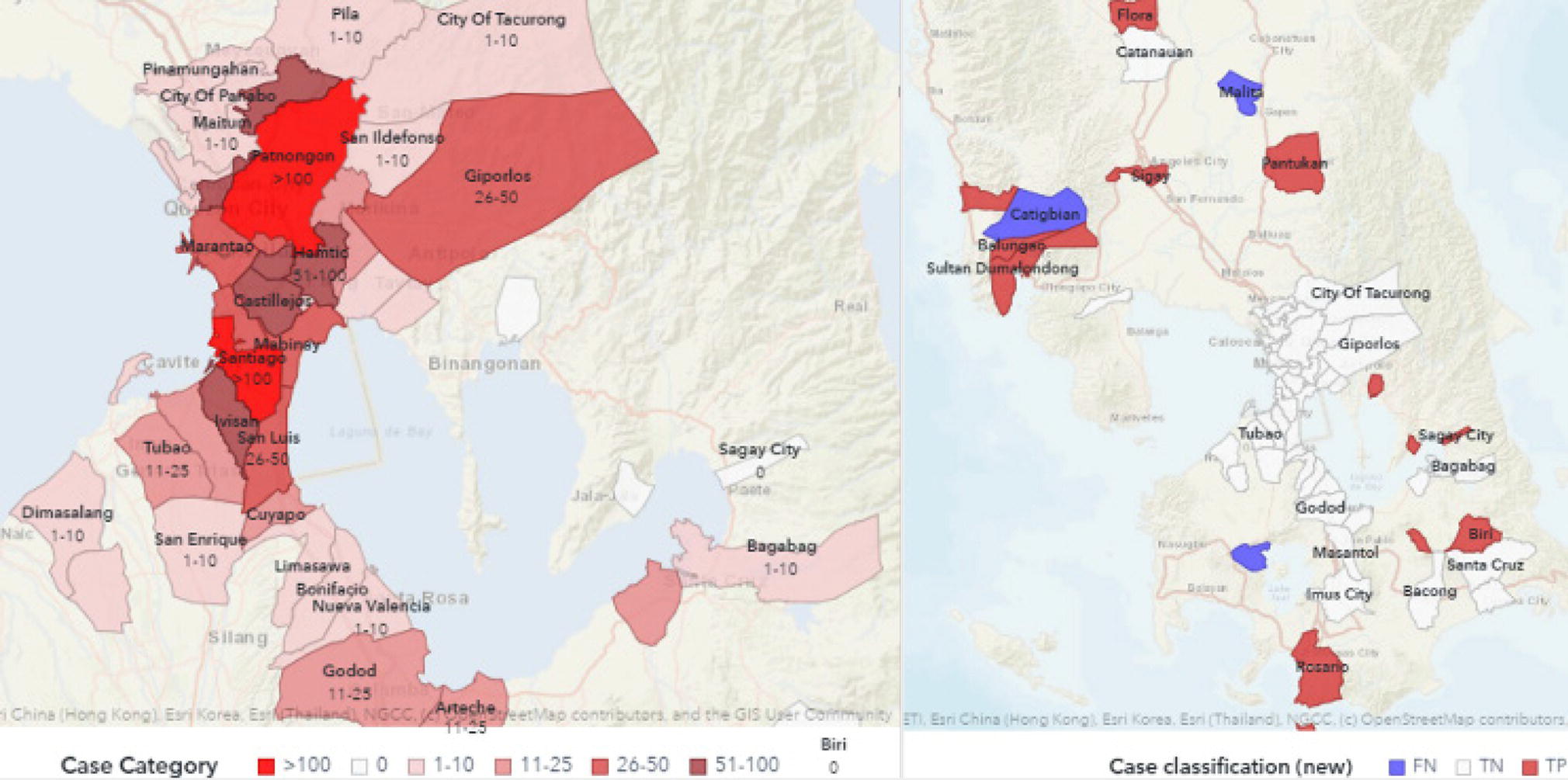

The correlation between the geographic locations and the number of positive cases over time can also be used to create a risk level factor. Each geolocation has a coefficient of correlation between the combined network metrics and the number of positive cases at the destination and origin locations. These coefficients were used to categorize the risk of virus spreading. The risk level is binned into five groups. The first bin has about 1% of the locations; it is considered substantial risk. All locations in this bin have the highest risk for the number of positive cases to increase over time because of their incoming connections. The second bin has about 3–4% of the locations and is considered medium‐high risk. The third bin has about 5% of the locations and is considered medium risk. The fourth bin has about 40% of the locations and is considered medium‐low risk. Finally, the fifth bin has about 50% of the locations and is considered low risk. Figure 5.30 shows all groups on the map. The risk level varies from light shades of green for minimal risk to dark shades of red for high risk.
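The binning described above can be illustrated with a short Python sketch. It assumes that locations are ranked from the highest to the lowest coefficient and that the bins are cut at cumulative shares of 1%, 5%, 10%, 50%, and 100% of the locations, which approximates the proportions given in the text. The coefficients are made up.

```python
from collections import Counter

# Cumulative upper bounds, in percent of the ranked locations, for each risk level
BINS = [(1, "substantial"), (5, "medium-high"), (10, "medium"),
        (50, "medium-low"), (100, "low")]

def risk_levels(coefficients):
    """Assign a risk level to each location based on its rank by coefficient."""
    ranked = sorted(coefficients, key=coefficients.get, reverse=True)
    n = len(ranked)
    levels = {}
    for position, location in enumerate(ranked, start=1):
        for upper_pct, label in BINS:
            if position * 100 <= upper_pct * n:  # integer test avoids rounding issues
                levels[location] = label
                break
    return levels

# Made-up coefficients for 100 hypothetical locations
coefficients = {f"loc{i:03d}": i / 100 for i in range(1, 101)}
levels = risk_levels(coefficients)
counts = Counter(levels.values())
print(counts)
```

With 100 locations, this yields 1 location at substantial risk, 4 at medium‐high, 5 at medium, 40 at medium‐low, and 50 at low risk.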

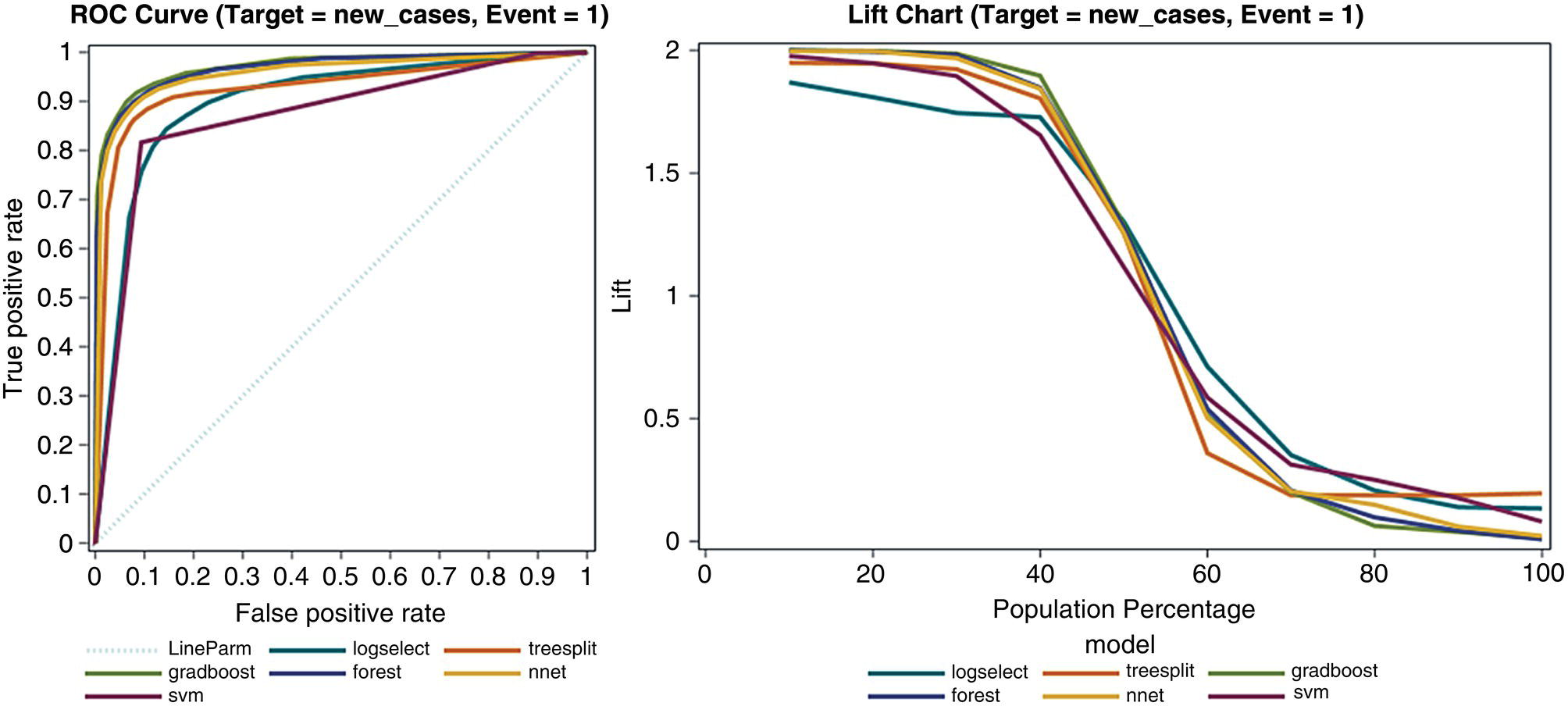

Based on the clear correlation between the computed network metrics and the virus spread over time, we decided to use these network measures as features for supervised machine learning models. In addition to the original network centrality and topology measures, a new set of features was computed to describe the network evolution and the number of positive cases. Most of the derived variables are based on ratios of network metrics for each geographic location over time to determine how this changing topology affects the virus spread.

A set of supervised machine learning models was trained on a weekly basis to classify each geographic location in terms of how the number of positive cases would behave: increasing, decreasing, or remaining stable. Figure 5.31 shows the ROC and Lift curves for all models developed.

Local authorities can use the predictive probability reported by the supervised machine learning models to determine the level of risk associated with each geographic location in the country. This information can help them to better define effective social containment measures. The higher the probability, the more likely the location will face an increase in the number of positive cases. This risk might suggest stricter social containment. Figure 5.32 shows the results of the gradient boosting model in classifying the locations that are predicted to face an increase in the number of positive cases the following week. The model's accuracy on average is about 92–98%, with an overall sensitivity around 90%. The overall accuracy accounts for the true positives and the true negatives relative to all predictions, including the false positives and false negatives. The sensitivity considers only the true positive rate, which can be more meaningful for local authorities when determining which locations should be targeted for social containment measures. The left side of the map shows the predicted locations. The shades of red indicate the posterior probability of having an increase in the number of positive cases. The right side of the map shows how the model performed in the following week, with red showing the true positives (locations predicted and observed to increase cases), purple showing the false negatives (locations not predicted but observed to increase cases), and white showing the true negatives (locations predicted and observed to not increase cases).
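The difference between accuracy and sensitivity is easier to see with numbers. The Python sketch below uses a made‐up confusion matrix for 1000 hypothetical locations; the counts are illustrative only and are not results from this study.

```python
def accuracy(tp, tn, fp, fn):
    """Share of all locations that were classified correctly."""
    return (tp + tn) / (tp + tn + fp + fn)

def sensitivity(tp, fn):
    """Share of locations with an actual increase that the model caught."""
    return tp / (tp + fn)

# Made-up counts: 100 locations actually increased, 900 did not
tp, fn = 90, 10    # increases predicted / missed
tn, fp = 860, 40   # non-increases predicted correctly / flagged by mistake

print(accuracy(tp, tn, fp, fn))  # 0.95
print(sensitivity(tp, fn))       # 0.9
```

When locations with increasing cases are a small share of the total, accuracy is dominated by the true negatives, which is why sensitivity can be the more informative number for deciding where to apply containment measures.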

Figure 5.30 Final links associated to the optimal tour.

Figure 5.31 Supervised machine learning models performance.

Figure 5.32 Outcomes of supervised machine learning models predicting outbreaks.

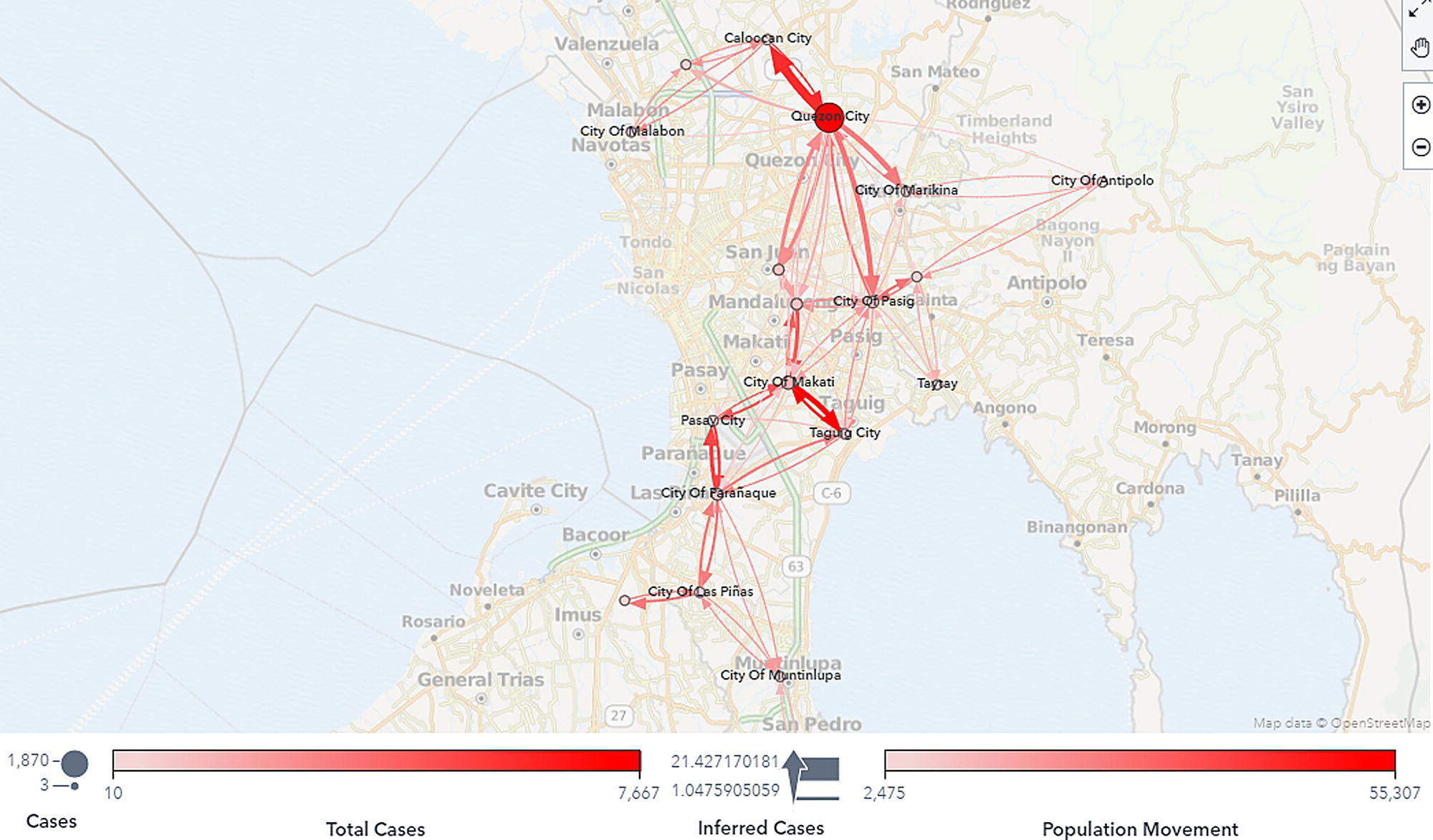

One of the last steps in the mobility behavior analysis is the calculation of inferred cases, or how the cases supposedly travel from one location to another over time. The mobility data collected from the carrier gives us the information about how the population moves around geographic regions over time. The health data collected from the local authorities gives us the information about the number of cases in each location on a specific day. As the population moves between geographic regions within the country, and we assume that these moves affect the spread of the virus, we decided to infer the possible impact of the positive cases from one location on another. This information basically creates a network of cases in a spatiotemporal perspective. Based on the inferred cases traveling from one location to another, we can observe which locations are supposedly flowing cases out, and which locations are supposedly receiving cases from other locations. The number of cases flowing between locations over time is an extrapolation of the number of people flowing in and out between locations and the number of positive cases in both origin and destination locations. Figure 5.33 shows the network of cases, presenting possible flows of positive cases between geographic locations over time.
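The exact extrapolation used to infer cases is not given in the text. As a deliberately simplified assumption, the Python sketch below treats the travelers leaving a location as carrying the same share of positive cases as that location's population, and it ignores the cases at the destination. All names and numbers are made up.

```python
def inferred_cases(flows, cases, population):
    """Inferred cases traveling on each origin -> destination link.

    Assumption: travelers carry the origin's prevalence, cases / population.
    flows maps (origin, destination) to the number of people moving.
    """
    return {
        (origin, dest): people * cases[origin] / population[origin]
        for (origin, dest), people in flows.items()
    }

# Made-up data for two hypothetical locations
flows = {("A", "B"): 2000, ("B", "A"): 500}
cases = {"A": 50, "B": 10}
population = {"A": 100_000, "B": 50_000}

case_flows = inferred_cases(flows, cases, population)
net_out_of_a = case_flows[("A", "B")] - case_flows[("B", "A")]
print(case_flows)
print(round(net_out_of_a, 3))  # 0.9
```

A positive net value suggests a location that is supposedly flowing cases out, while a negative value suggests a location that is supposedly receiving cases.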

A spatiotemporal analysis of mobility behavior can reveal valuable information about the spread of the virus throughout geographic regions over time. This type of analysis enables health authorities to understand the impact of population movements on the spread of the virus and then foresee possible outbreaks in specific locations. The correlation between mobility behavior and virus spread allows local authorities to identify particular locations to be put in social containment together, as well as the level of severity required. Outbreak prediction provides sensitive information on the pattern of the virus spread, supporting better decisions when implementing shelter‐in‐place policies, planning public transportation services, or allocating medical resources to locations more likely to face an increase in positive cases. The analysis of mobility behavior can be applied to any type of infectious disease to evaluate how population movements over time affect its spread across geographic locations.

Figure 5.33 Inferred network of cases.

5.5 Urban Mobility in Metropolitan Cities

This case looks into urban mobility analysis, which has received considerable attention in recent years. Urban mobility analysis reveals valuable insights about frequent paths, displacements, and overall motion behavior, supporting disciplines such as public transportation planning, traffic forecasting, route planning, communication network optimization, and even the understanding of how diseases spread. This study was conducted using mobile data from multiple mobile carriers in Rio de Janeiro, Brazil.

Mobile carriers in Brazil have an unbalanced distribution of prepaid and postpaid cell phones: the majority (around 80%) are prepaid, and prepaid accounts do not allow proper identification of customers’ addresses. The home and workplace addresses of the subscribers are valuable information for identifying frequent paths in urban mobility. For that reason, a presumed domicile and a presumed workplace were created for each subscriber based on the cell most frequently visited during a certain period of time, for example, overnight for the home address and business hours for the work address. Home and work addresses allow the definition of an Origin–Destination (OD) matrix and crucial analyses of the frequent commuting paths.
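The following sketch illustrates one way to derive presumed domiciles and workplaces and then an OD matrix. It is not the procedure used in the study: the time windows (22:00 to 06:00 for overnight, 09:00 to 17:00 on weekdays for business hours), the record layout, and the function names are all assumptions made for illustration.

```python
from collections import Counter, defaultdict
from datetime import datetime

NIGHT_HOURS = set(range(22, 24)) | set(range(0, 6))  # assumed "overnight" window
BUSINESS_HOURS = set(range(9, 17))                   # assumed "business hours" window


def presumed_locations(events):
    """events: iterable of (subscriber, cell, datetime) records (hypothetical layout)."""
    home_counts = defaultdict(Counter)
    work_counts = defaultdict(Counter)
    for subscriber, cell, ts in events:
        if ts.hour in NIGHT_HOURS:
            home_counts[subscriber][cell] += 1
        elif ts.hour in BUSINESS_HOURS and ts.weekday() < 5:
            work_counts[subscriber][cell] += 1
    result = {}
    for s in set(home_counts) | set(work_counts):
        home = home_counts[s].most_common(1)
        work = work_counts[s].most_common(1)
        # The most frequently visited cell in each window becomes the presumed address.
        result[s] = {"home": home[0][0] if home else None,
                     "work": work[0][0] if work else None}
    return result


def od_matrix(locations):
    """Count subscribers for each (presumed home, presumed work) pair."""
    return Counter((v["home"], v["work"]) for v in locations.values()
                   if v["home"] is not None and v["work"] is not None)


# Hypothetical records for one subscriber (2020-03-03 is a Tuesday).
events = [
    ("s1", "cell_1", datetime(2020, 3, 2, 23, 0)),
    ("s1", "cell_1", datetime(2020, 3, 3, 2, 0)),
    ("s1", "cell_2", datetime(2020, 3, 3, 10, 0)),
    ("s1", "cell_2", datetime(2020, 3, 3, 14, 0)),
    ("s1", "cell_3", datetime(2020, 3, 3, 23, 30)),
]
locations = presumed_locations(events)
od = od_matrix(locations)
```

Each entry of the resulting counter is one cell of the OD matrix: the number of subscribers presumed to live in the origin cell and work in the destination cell.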

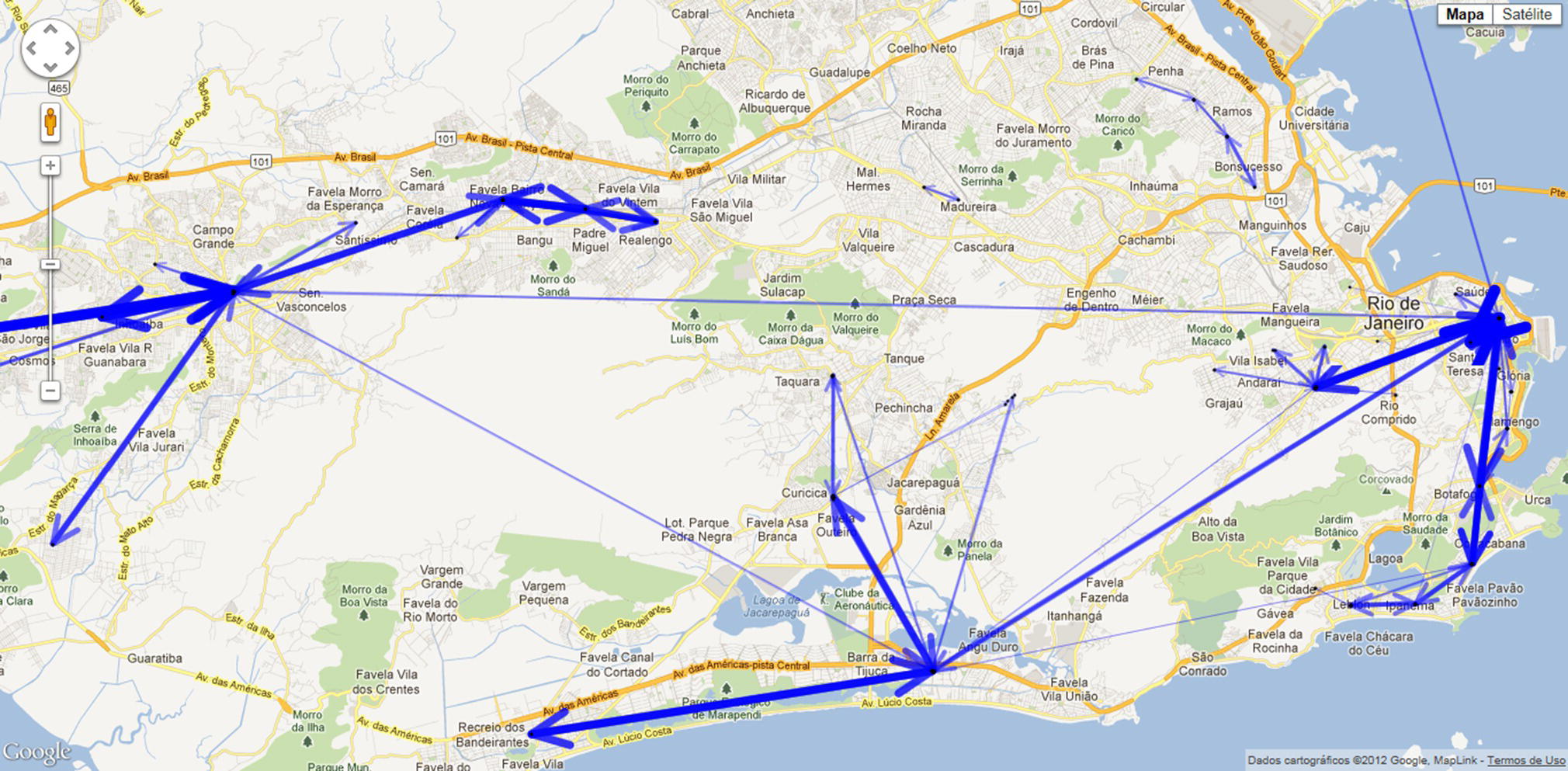

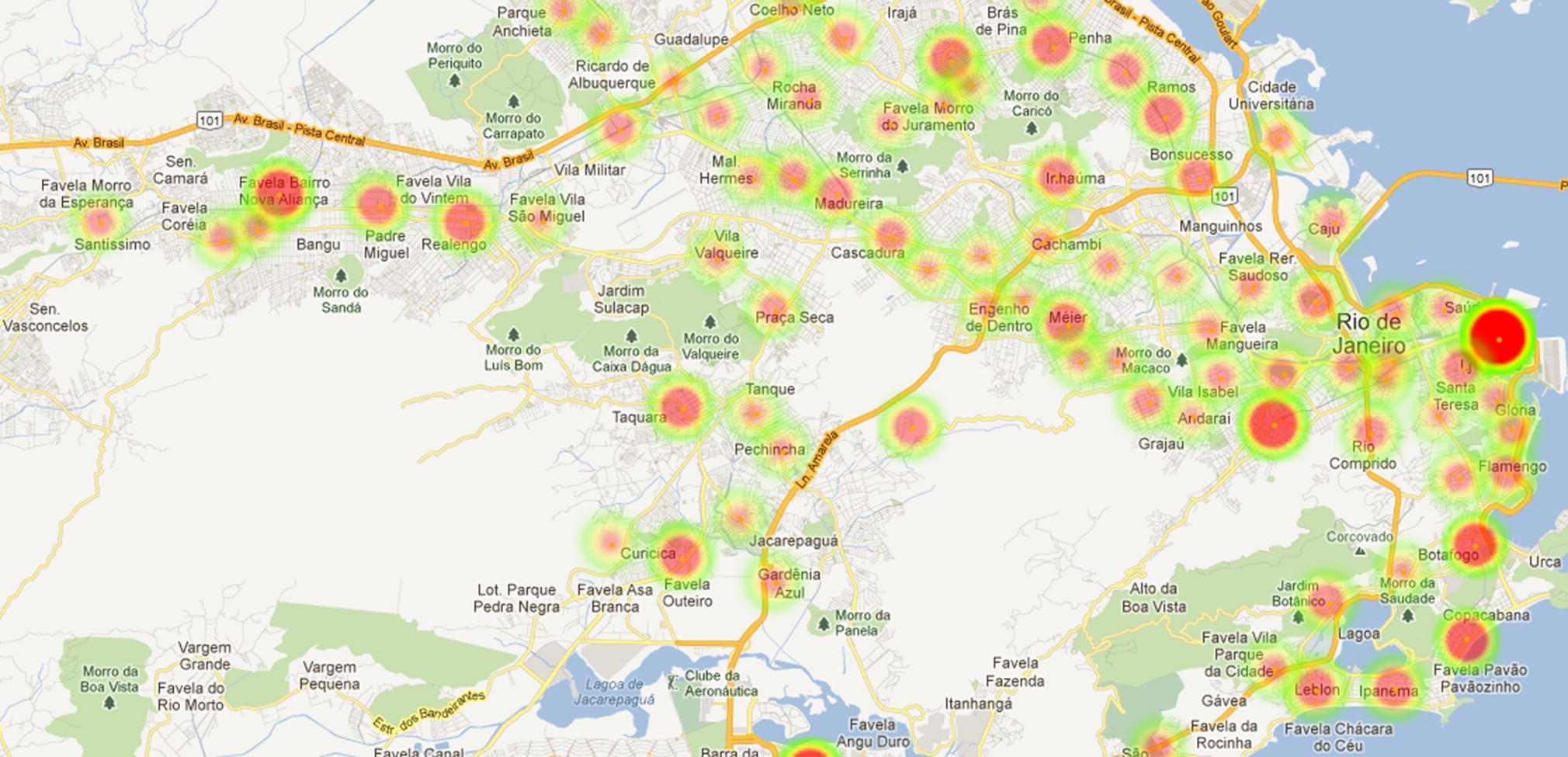

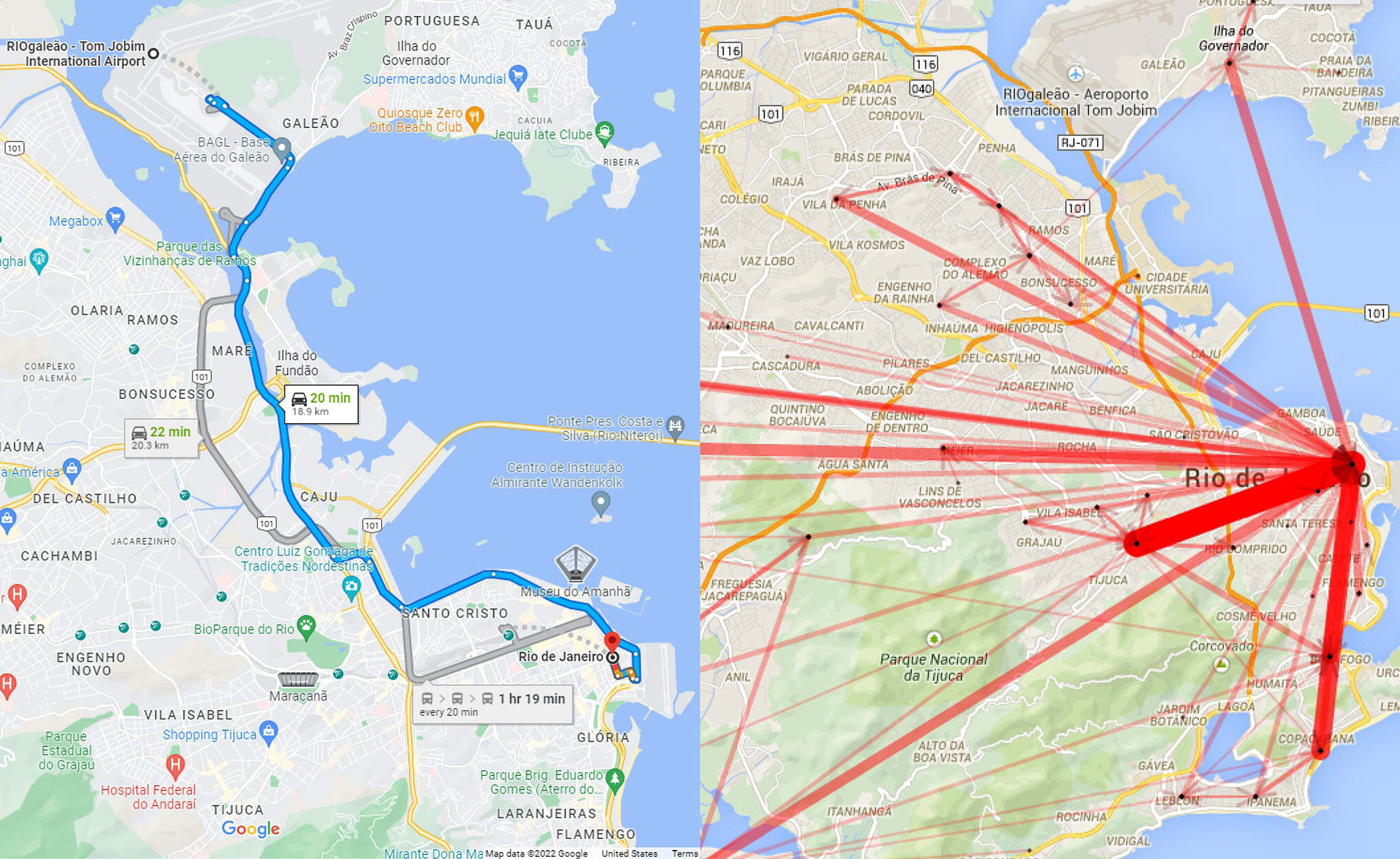

A trajectory matrix creates the foundation for the urban mobility analysis, including the frequent paths, cycles, average distances traveled, and network flows, as well as the development of supervised models to estimate the number of trips between locations. A straightforward advantage of the trajectory matrix is that it reveals the overall movements of subscribers within urban areas. Figure 5.34 shows the most common movements performed in the city of Rio de Janeiro.
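As a minimal sketch of the idea, a trajectory matrix can be built by counting consecutive moves in each subscriber's time-ordered sequence of visited locations. The input layout and the function name below are hypothetical, and the study may have built its matrix differently.

```python
from collections import Counter


def trajectory_matrix(sequences):
    """Count moves between consecutive locations.

    sequences: {subscriber: [location, location, ...]} ordered by time
               (hypothetical layout).
    """
    moves = Counter()
    for visited in sequences.values():
        for origin, destination in zip(visited, visited[1:]):
            if origin != destination:  # staying in the same place is not a movement
                moves[(origin, destination)] += 1
    return moves


# Hypothetical sequences for two subscribers.
sequences = {
    "s1": ["A", "B", "B", "C"],
    "s2": ["A", "B"],
}
matrix = trajectory_matrix(sequences)
most_frequent = matrix.most_common(1)  # the most common movement and its count
```

Sorting the entries by count gives the frequent trajectories, and the same counts can serve as link weights in the network analysis or as the target of a supervised model.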

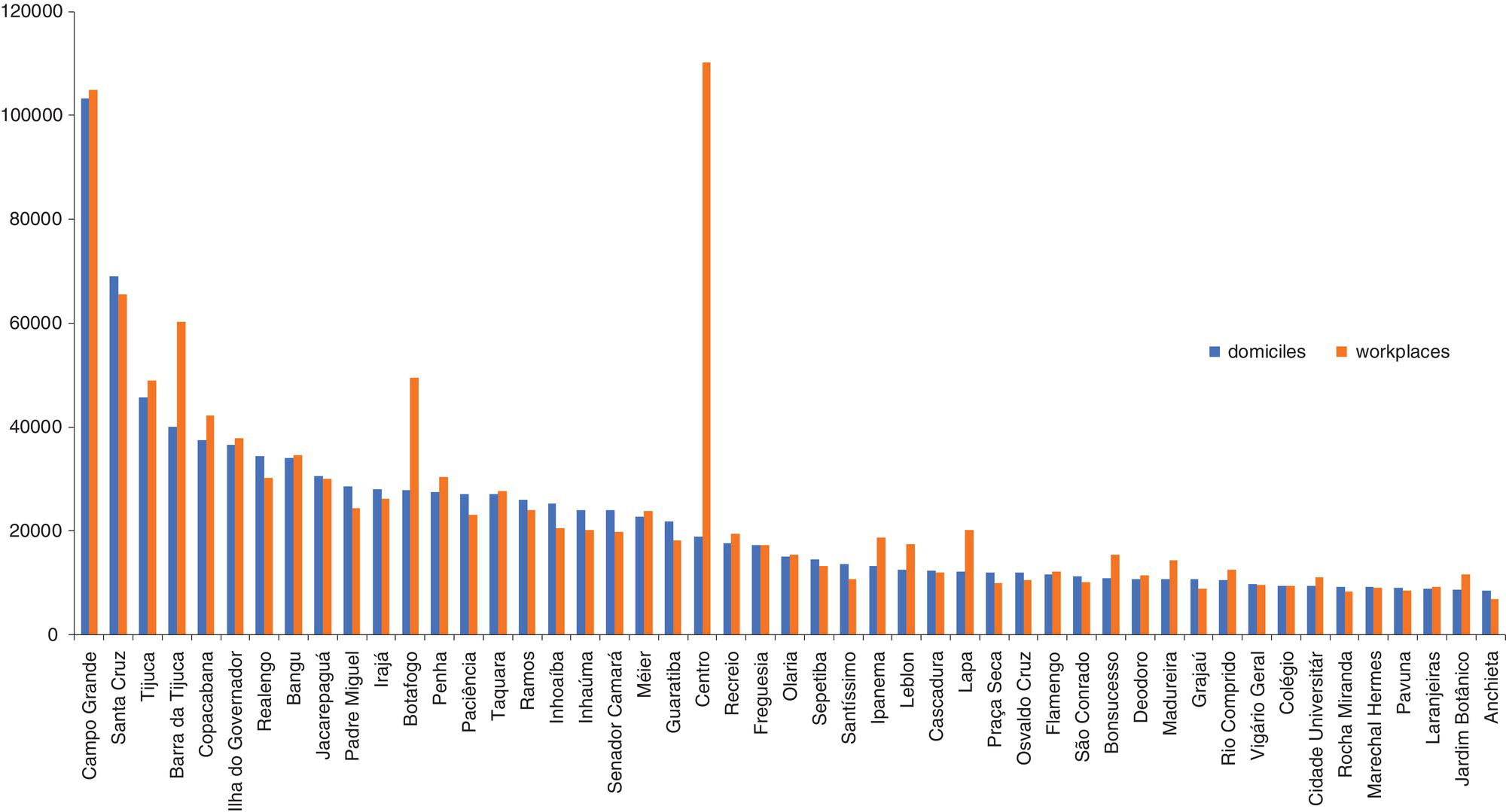

Two distinct types of urban mobility behavior can be analyzed based on the mobile data. The first one is related to the commuting paths based on the presumed domiciles and workplaces, which provides a good understanding of the population behavior on home‐to‐work travel. The second accounts for all trips, based on the trajectory matrix. Commuting planning is a big challenge for large metropolitan areas, particularly when devising strategies to better plan public transportation resources and network routes. Figure 5.35 shows the distribution of the presumed domiciles and workplaces throughout the city. Notice that some locations present a substantial difference between the volume of domiciles and the volume of workplaces, which affects the commuting traffic throughout the city. For example, downtown has the highest volume of workplaces and a low volume of domiciles. Other places show a well‐balanced distribution of both, like the first neighborhood in the chart, which has almost the same number of domiciles and workplaces. Because it is an industrial zone, workers tend to look for homes nearby.

Figure 5.34 Frequent trajectories in the city of Rio de Janeiro.

Figure 5.35 Number of domiciles and workplaces by neighborhoods.

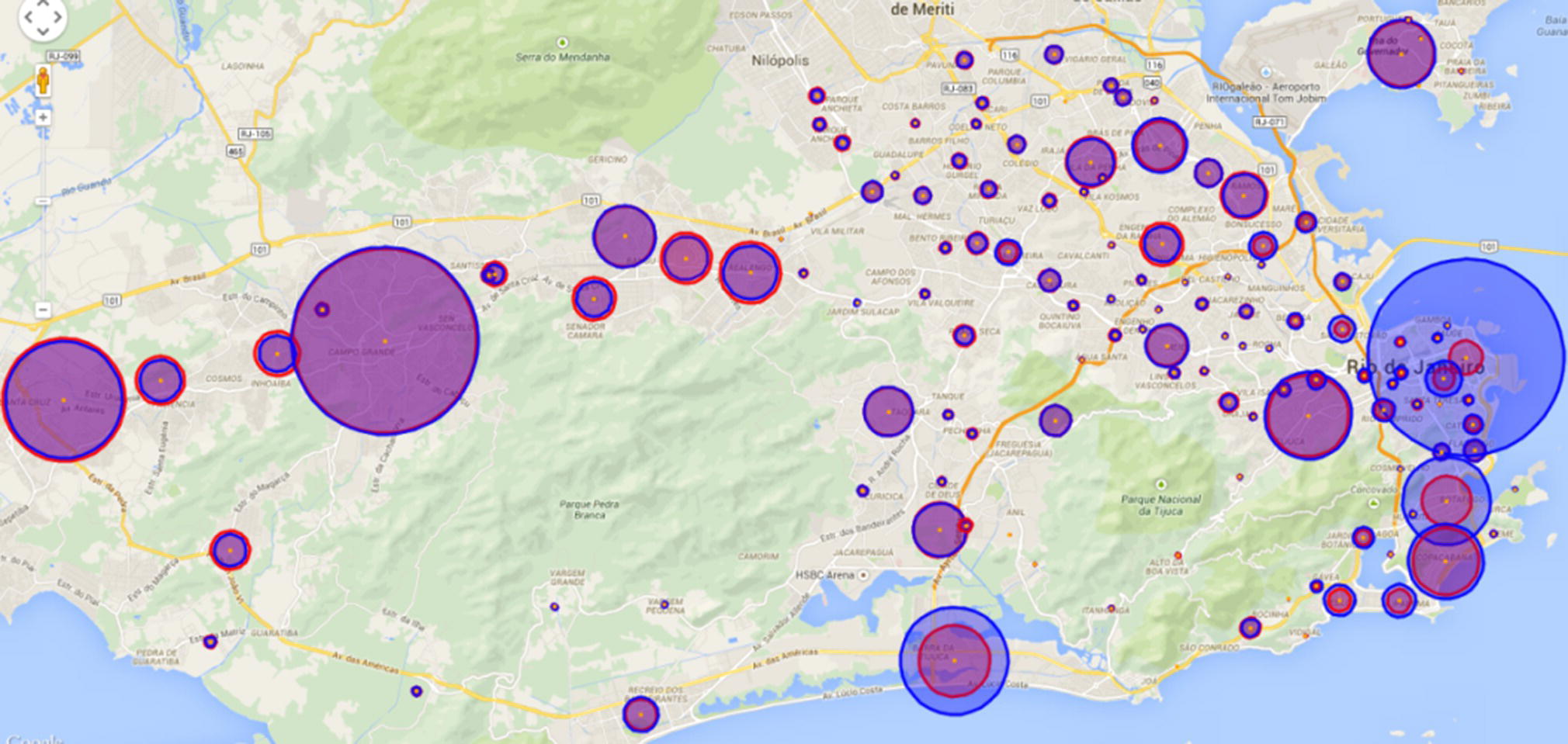

Figure 5.36 Geo map of the presumed domiciles and workplaces.

Figure 5.36 shows graphically the distribution of domiciles and workplaces throughout the city. The diameter of each circle indicates the population living or working at each location, with light (red) circles representing the presumed domiciles and dark (blue) circles representing the presumed workplaces. When the numbers of presumed domiciles and workplaces are similar, the circles overlap, and less commuting traffic is expected. When the sizes of the circles are substantially different, significant commuting traffic is expected in the area.

The network created by the mobile data is defined by nodes and links. Here, the nodes represent the cell towers, and the links represent the subscribers moving from one cell tower to another. The nodes (cell towers) can be aggregated at different geographic levels, such as neighborhoods or towns. The links (subscribers’ movements) can be aggregated in the same way, giving subscribers’ movements between cell towers, between neighborhoods, or between towns.
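The aggregation described above can be sketched as follows. The tower-to-neighborhood mapping, the link weights, and the function names are hypothetical and serve only to show the mechanics.

```python
from collections import Counter


def aggregate_links(tower_links, tower_to_area):
    """Roll tower-level links up to a coarser geographic level.

    tower_links:   {(origin_tower, destination_tower): number of movements}
    tower_to_area: {tower: neighborhood or town}
    """
    area_links = Counter()
    for (origin, destination), weight in tower_links.items():
        area_links[(tower_to_area[origin], tower_to_area[destination])] += weight
    return area_links


def intra_area_share(area_links):
    """Share of movements that start and end in the same area."""
    total = sum(area_links.values())
    inside = sum(w for (a, b), w in area_links.items() if a == b)
    return inside / total


# Hypothetical towers and neighborhoods.
tower_links = {("t1", "t2"): 10, ("t2", "t3"): 5, ("t3", "t1"): 5}
tower_to_area = {"t1": "X", "t2": "X", "t3": "Y"}

area_links = aggregate_links(tower_links, tower_to_area)
share = intra_area_share(area_links)
```

After aggregation, links whose origin and destination fall in the same area become self-loops, which makes it straightforward to separate movements within an area from movements between areas.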

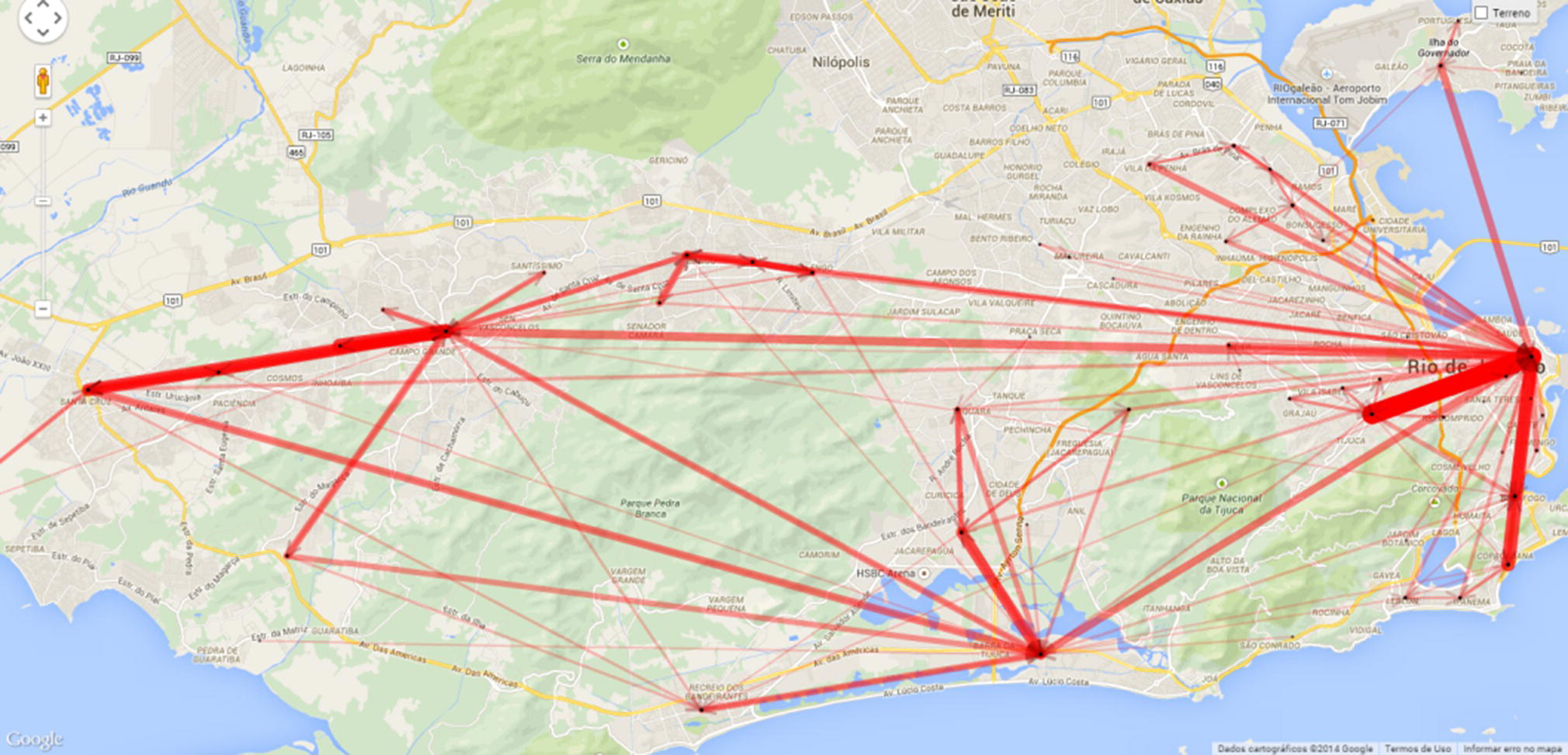

The most frequent commuting paths are identified based on the presumed domiciles and workplaces and are presented in Figure 5.37. The thicker the line, the higher the traffic between the locations. These paths include commuting travel between various places within the western zone (characterized by domiciles and workplaces on the left side of the map) and within the eastern zone (mostly characterized by workplaces on the far right side of the map). The paths indicate that a fair number of people live near their workplaces, because these displacements are concentrated in specific geographic areas (short movements). According to this study, 60% of movements are intra‐neighborhood, and 40% are inter‐neighborhood. Neighborhoods in Rio are sometimes quite large, about the size of a town in the U.S. or a small city in Europe. However, there are also significant commuting paths between different areas, represented by long and high‐volume movements. On the map, we can observe a thicker line in the west between Santa Cruz and Campo Grande, two huge neighborhoods on the left side of the map. There are also several high‐volume commuting paths from Santa Cruz and Campo Grande to Barra da Tijuca, a prominent neighborhood in the south zone, at the bottom of the map, and from those three neighborhoods to Centro (downtown), in the east zone of the city, on the far‐right side of the map. From Centro, the highest commuting traffic goes to Copacabana, the famous beach, and to Tijuca, a popular neighborhood. One interesting commuting path is between downtown and the airport’s neighborhood, Ilha do Governador. Notice the thicker line between downtown and the airport crossing the bay. The lines on the map represent the Euclidean (straight‐line) distances between each pair of locations, not the actual routes.

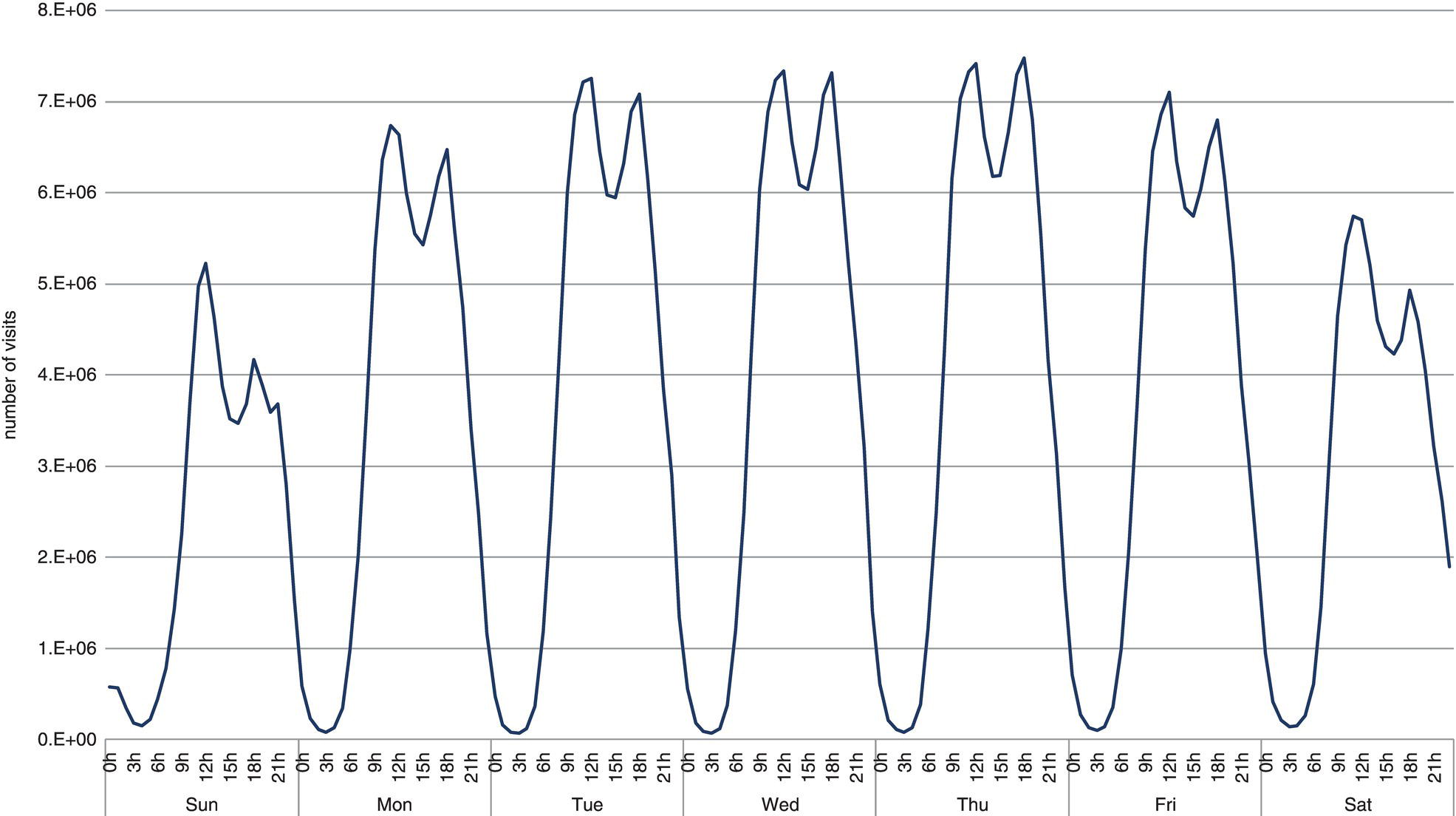

Figure 5.38 shows the number of subscribers’ displacements in the city of Rio de Janeiro during the week. This distribution is based on six months of subscribers’ movements aggregated by day of the week and one‐hour time slots. We can observe that subscribers’ mobility behavior is similar across all days of the week. On each day, whether weekday or weekend, the mobility activity follows proportionally the same pattern, varying in a similar way during the day and presenting two peaks across the 24 time slots. The first peak is around time slot 12, and the second peak is recorded at time slot 18. Both peaks occur daily. The difference in mobility behavior between weekdays and weekends lies in the total number of movements and the height of the peaks. The first peak of mobility activity is always at lunch time, on both weekdays and weekends. The second peak is always in the early evening, a period that includes mostly commuting and leisure. At the beginning of the week (Mondays and Tuesdays) and at the end (Fridays, Saturdays, and Sundays), the first peak is higher than the second one, which means higher traffic at 12 than at 18. On Wednesdays, however, there is no difference, and the traffic is virtually the same at both peaks. On Thursdays, the highest peak is at 18, when the traffic is higher than at 12.

A likely reason for this difference in mobility behavior is the happy hour, which is very popular on Thursdays. Nightlife in Rio is famous among locals and tourists. The city offers a variety of daily events, from early evening until late at night. A cultural habit in the city is the happy hour, when people leave their jobs and go to bars, cafes, pubs, and restaurants to socialize (the formal excuse is to avoid the traffic jam). As in many large cosmopolitan cities around the globe, many people go for a happy hour after work. Even though all nights are busy in Rio, Thursday is noticeably the most popular day for happy hour. Wednesdays are also quite popular, even more than Fridays. The mobility activity distribution during the week reinforces this perception.
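The aggregation behind this kind of chart can be sketched in a few lines. The function names and the sample timestamps are hypothetical, and taking the busiest time slots as the peaks is a simplification that assumes the two peaks are well separated.

```python
from collections import Counter
from datetime import datetime


def movements_by_day_and_hour(timestamps):
    """Count movements per (day of week, one-hour time slot); Monday is day 0."""
    counts = Counter()
    for ts in timestamps:
        counts[(ts.weekday(), ts.hour)] += 1
    return counts


def busiest_slots(counts, weekday, top=2):
    """Return the `top` busiest time slots of a given weekday, in chronological order."""
    hourly = {hour: n for (day, hour), n in counts.items() if day == weekday}
    ranked = sorted(hourly, key=hourly.get, reverse=True)
    return sorted(ranked[:top])


# Hypothetical movement timestamps (2020-03-05 is a Thursday, weekday 3).
timestamps = (
    [datetime(2020, 3, 5, 9, 15)]
    + [datetime(2020, 3, 5, 12, m) for m in (5, 20, 40)]
    + [datetime(2020, 3, 5, 18, m) for m in (0, 10, 30, 50)]
)
counts = movements_by_day_and_hour(timestamps)
thursday_peaks = busiest_slots(counts, weekday=3)
```

Comparing the counts at the two busiest slots for each day of the week reproduces the kind of comparison made above between the traffic at 12 and at 18.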

Figure 5.37 Frequent commuting paths in the city of Rio de Janeiro.

Figure 5.38 Traffic volume by day.

When analyzing urban mobility behavior in large metropolitan areas over time, an important characteristic reported in many studies is that some locations are visited more frequently than others and, as a consequence, some paths are used more frequently than others. The urban mobility distribution is nonlinear and often follows a power law, which represents a functional relation between two variables where one varies as a power of the other. For example, the number of cities having a certain population size varies as a power of the size of the population. This means that few cities have a huge population, while most cities have a small one. Similar behavior is found in the movements. The power law distribution in urban mobility behavior contributes strongly to the migration trends and frequent paths observed in large cities, states, and even countries.
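A power law has the form y = c · x^k, which becomes a straight line with slope k when both variables are plotted on logarithmic scales. The sketch below estimates k by ordinary least squares on the logarithms. This is only a rough diagnostic, chosen here for simplicity, and more rigorous estimators such as maximum likelihood are generally preferred. The function name and the synthetic data are assumptions for illustration.

```python
import math


def fit_power_law(xs, ys):
    """Fit y = c * x**k by least squares on log-log scales; returns (k, c)."""
    log_x = [math.log(x) for x in xs]
    log_y = [math.log(y) for y in ys]
    n = len(log_x)
    mean_x = sum(log_x) / n
    mean_y = sum(log_y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(log_x, log_y))
    variance = sum((a - mean_x) ** 2 for a in log_x)
    k = covariance / variance        # slope of the log-log line
    c = math.exp(mean_y - k * mean_x)  # intercept mapped back to the original scale
    return k, c


# Synthetic data that follow an exact power law with exponent -1.5.
sizes = [1, 2, 4, 8, 16]
frequencies = [1000 * s ** -1.5 for s in sizes]
k, c = fit_power_law(sizes, frequencies)
```

Applied to real mobility data, the x values could be the number of visits and the y values the number of locations receiving that many visits. A roughly straight line on the log-log plot would be consistent with, but would not prove, a power law.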

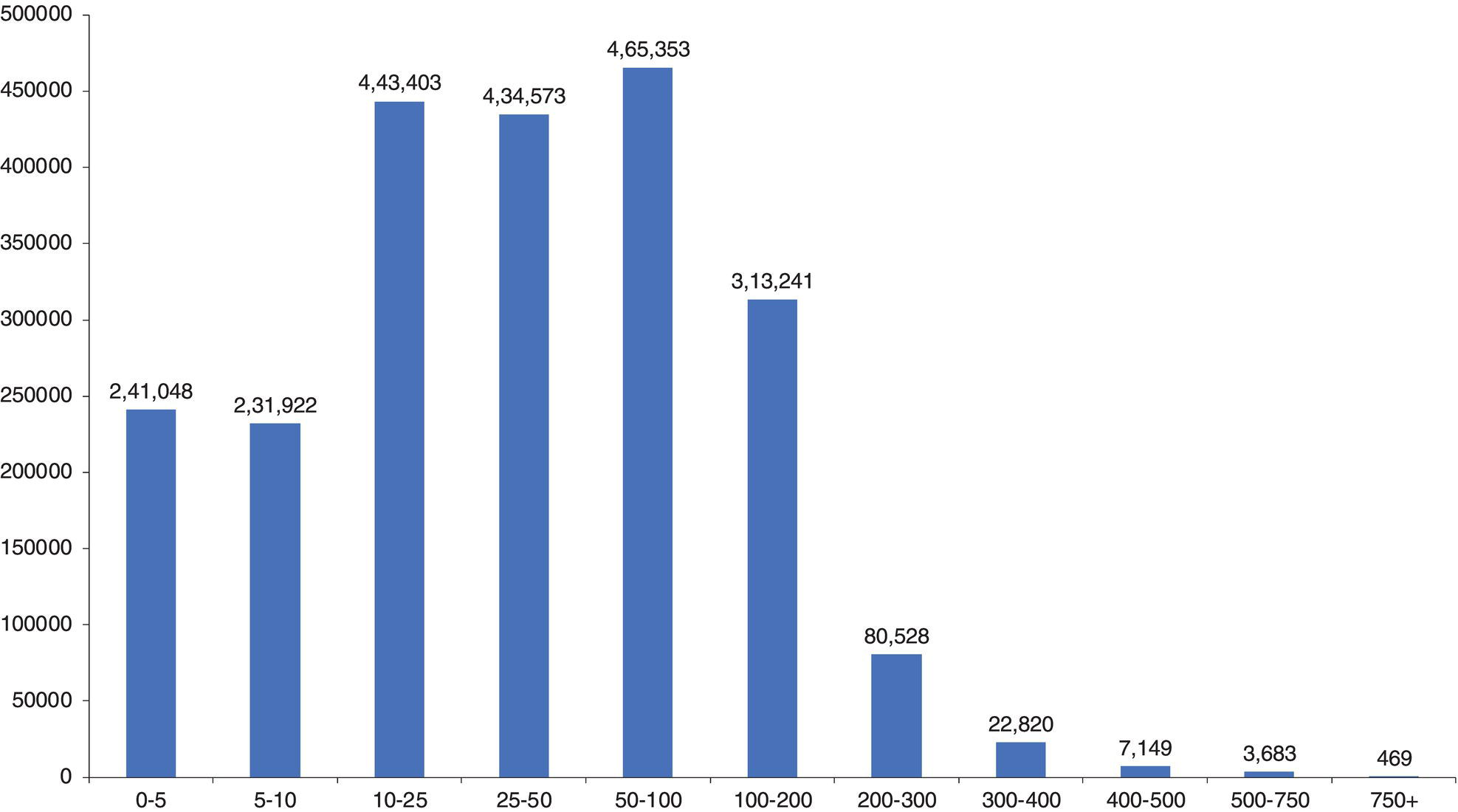

For example, consider the number of subscribers’ visits to the cell towers, which ultimately represents the subscribers’ displacements. We can observe that some locations are visited more than others. Based on the sample data, approximately 8% of the towers receive fewer than 500 visits per week, and another 7% receive between 500 and 1000 visits. Almost 25% of the towers receive 1000 to 5000 visits a week, 11% receive 5000 to 10 000, and 15% receive 10 000 to 25 000. Together, those figures represent 66% of the locations, which receive only 15% of the traffic. On the other hand, 16% of the towers receive over 20% of the traffic, 9% receive almost 30%, 5% receive almost 15%, and 3% receive more than 11%. That means one third of the locations account for over three quarters of the traffic.

The average level of mobility in the city of Rio de Janeiro is substantial. Most users visit a fair number of distinct locations along their frequently used paths. The average number of distinct locations visited on a weekly basis is around 30. However, a crucial metric in urban mobility is the average distance traveled. Rio de Janeiro is the second largest metropolitan area in Brazil, the third in South America, the sixth in the Americas, and the 26th in the world. Because of that, it is reasonable that people need to travel medium to long distances when moving around the city, for both work and leisure. The average distance traveled by the subscribers along their frequent paths is around 60 km daily. As an example, the distance between the most populated neighborhood, Campo Grande, and the neighborhood with the most workplaces, Centro, is 53.3 km. Everyone who lives in Campo Grande and works in Centro needs to travel at least 106 km daily for the round trip. The distribution of the distance traveled by subscribers along their frequent paths is presented in Figure 5.39.
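The daily distance traveled can be approximated from the coordinates of the locations a subscriber visits. The sketch below uses the haversine (great-circle) formula as a stand-in for the straight-line distance between two points. This is an assumption made for illustration, since the formula used in the study is not stated, and the coordinates are hypothetical.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance in kilometers between two points given in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = math.sin(d_phi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def path_length_km(points):
    """Total length of a path given as a time-ordered list of (lat, lon) points."""
    return sum(haversine_km(*a, *b) for a, b in zip(points, points[1:]))


# Hypothetical home and work coordinates; the daily path is home -> work -> home.
home = (0.0, 0.0)
work = (0.0, 0.5)
one_way = haversine_km(*home, *work)
round_trip = path_length_km([home, work, home])
```

The round trip is simply twice the one-way distance, which is the same reasoning behind the Campo Grande to Centro example: 2 × 53.3 km gives a little over 106 km per day. Distances computed this way are lower bounds, because actual routes are longer than the straight line between two points.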

Figure 5.39 Distance traveled by subscribers.

We can observe that only 11% of the users travel on average less than 5 km daily. In a large metropolitan area, people are expected to travel more than this. Indeed, 70% of the users travel between 5 and 100 km daily, 14% between 100 and 200 km, and 5% more than 200 km.

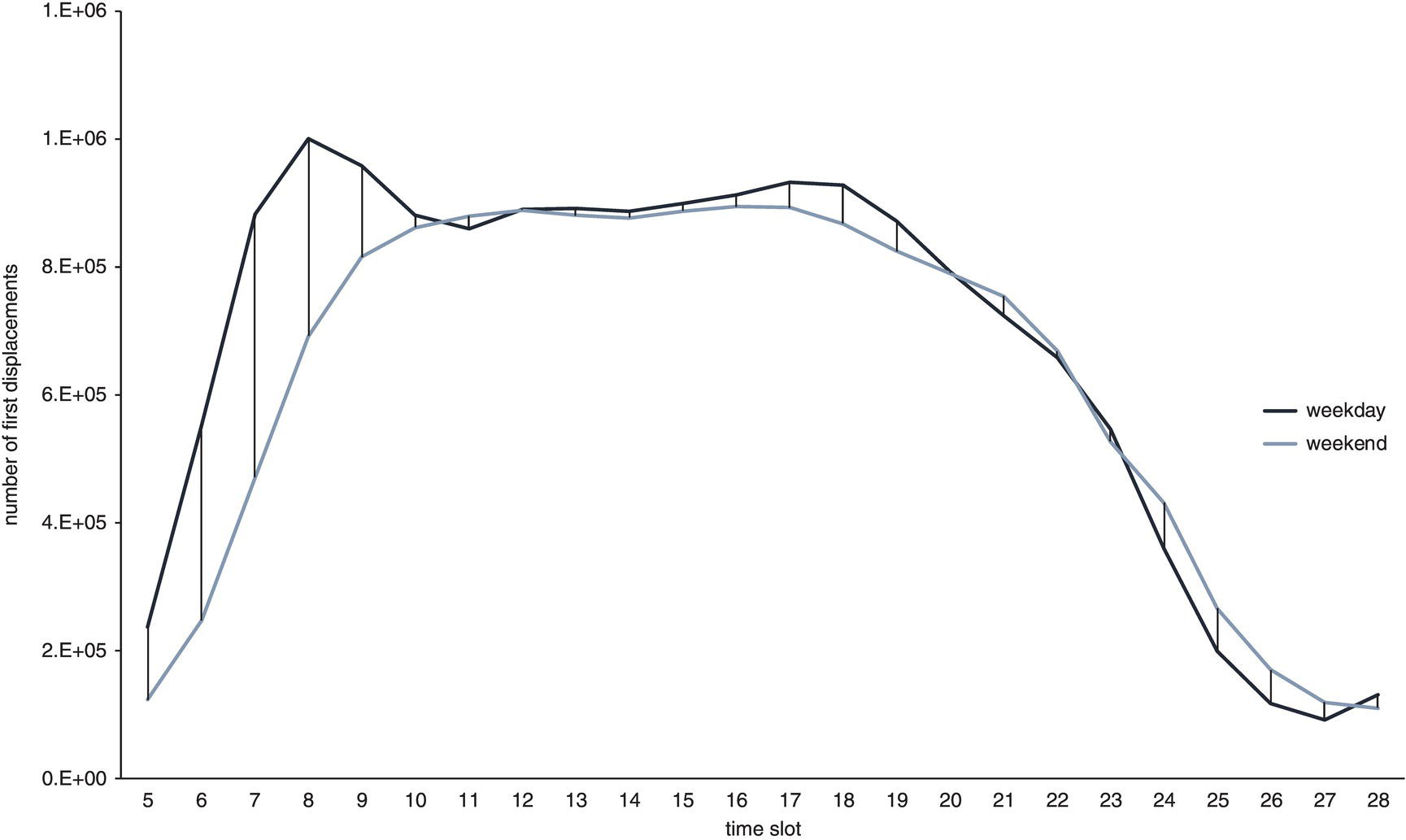

There is an interesting difference in urban mobility patterns between weekdays and weekends. The mobility behavior in both cases is similar when looking at the movements over time, except for the traffic volume and the peaks. The number of movements on weekdays is around 75% higher than on weekends. The second difference is the peak of the movements. During weekdays, people start moving earlier than during weekends. Here, the first movement is calculated from the presumed domicile to the first visited location. Figure 5.40 shows the movements along the day on both weekdays and weekends. The first movement starts around 5 a.m., and the traffic on weekdays is significantly higher than on weekends. There is also a traffic peak at 8 a.m. during weekdays, which means most subscribers start moving quite early. On weekends, there is no such peak. Instead, there is a plateau between 11 a.m. and 5 p.m. This is expected, as people have to work during weekdays, while on weekends they rest or are not in a rush.

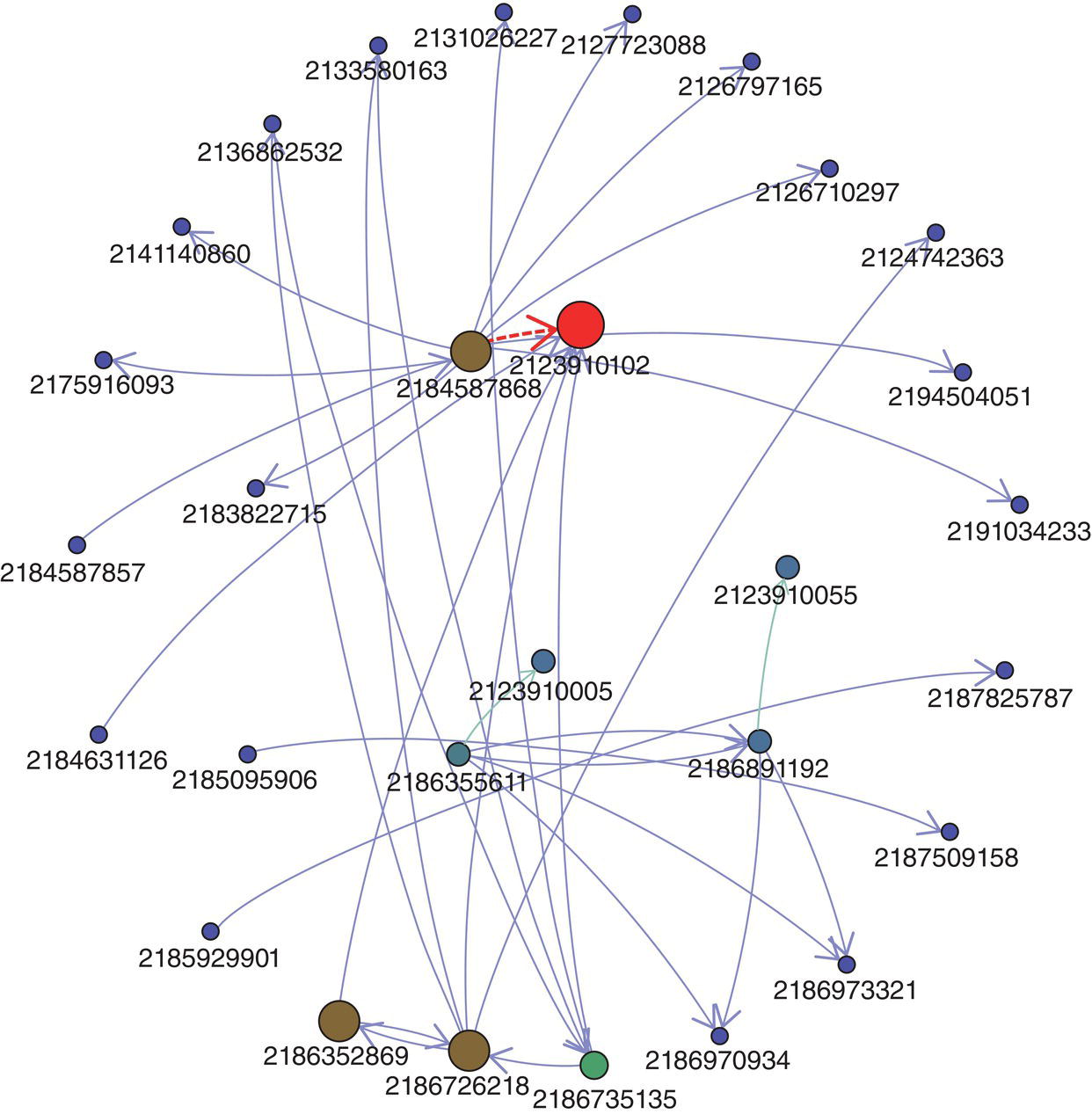

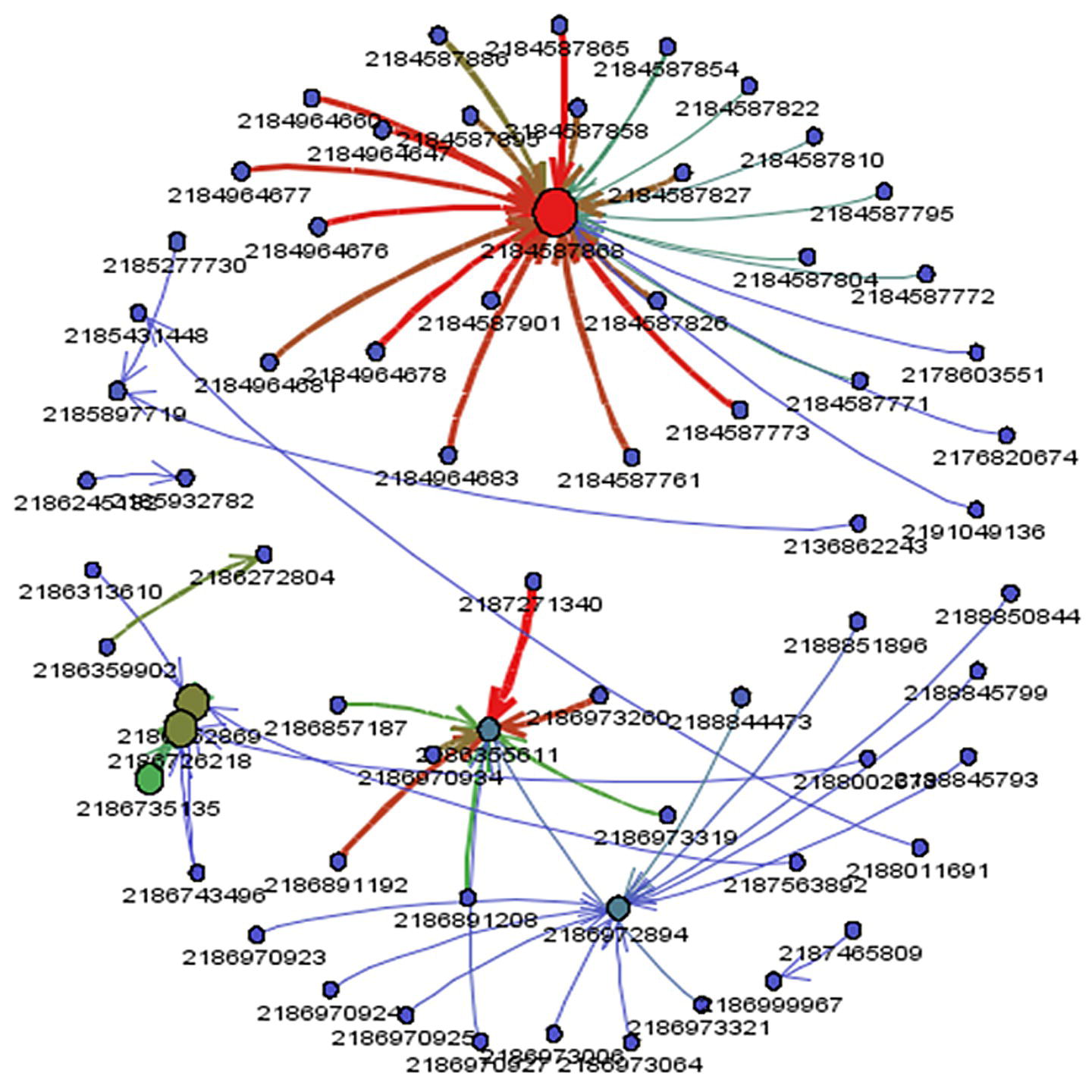

The analysis of urban mobility has multiple applications in business and government agencies. Carriers can better plan the communications network, taking into account the peaks of urban mobility and the travel between locations, and then optimize the network resources to accommodate subscribers’ needs over time. Government agencies can better plan public transportation resources, improve traffic routes, and even understand how infectious diseases spread over metropolitan areas. For example, this study actually started as an analysis of the vectors for the spread of Dengue. Public health departments can use the vector of movements over time to better understand how a virus spreads over particular geographic areas. Dengue is a recurrent infectious disease in Rio de Janeiro. It is assessed over time by tagging the places where the diagnoses were made. However, the average paths of infected people may disclose more relevant information about vector‐borne diseases like Dengue and possibly support more accurate disease control measures. Figure 5.41 shows some focus areas of Dengue in the city of Rio de Janeiro.