COMPUTER NETWORK SECURITY is very complex. New threats from inside and outside networks appear constantly. Just as constantly, the security community develops new products and procedures to defend against threats of the past and unknowns of the future.

As companies merge, people lose their jobs, new equipment comes on line, and business tasks change, people do not always do what we expect. Network security configurations that worked well yesterday might not work quite as well tomorrow. In an ever-changing business climate, whom should you trust? Has your trust been violated? How would you even know? Who is attempting to harm your network this time?

Because of these complex issues, you need to understand the essentials of network security. This chapter will introduce you to the basic elements of network security. Once you have a firm grasp of these fundamentals, you will be well equipped to put effective security measures into practice on your organization's network.

While this textbook focuses on general network security, including firewalls and virtual private networks (VPNs), many of the important basics of network security are introduced in this chapter. In Chapters 1-4, network security fundamentals, concepts, business challenges, and common threats are introduced. Chapters 2, 7-10, and 13 cover the issues and implementations of firewalls. Chapters 3, 11-12, and 14 discuss the use of VPNs and their implementation. Finally, Chapters 13-15 present real-world firewall and VPN implementation, best practices, and additional resources available regarding network security solutions.

This book is a foundation, and many of the topics covered here in a few paragraphs could easily fill multiple volumes. Other resources listed at the end of the book will help you learn more about the topics of greatest interest to you.

Network security is the control of unwanted intrusion into, use of, or damage to communications on your organization's computer network. This includes monitoring for abuses, looking for protocol errors, blocking non-approved transmissions, and responding to problems promptly. Network security is also about supporting essential communication necessary to the organization's mission and goals.

Network security includes elements that prevent unwanted activities while supporting desirable activities. This is hard to do efficiently, cost effectively, and transparently. Efficient network security provides quick and easy access to resources for users. Cost effective network security controls user access to resources and services without excessive expense. Transparent network security supports the mission and goals of the organization through enforcement of the organization's network security policies, without getting in the way of valid users performing valid tasks.

Computer networking technology is changing and improving faster today than ever before. Wireless connectivity is now a realistic option for most companies and individuals. Malicious hackers are becoming more adept at stealing identities and money using every means available.

Today, many companies spend more time, money, and effort protecting their assets than they do on the initial installation of the network. And little wonder. Threats, both internal and external, can cause a catastrophic system failure or compromise. Such security breaches can even result in a company going out of business. Without network security, many businesses and even individuals would not be able to work productively.

Network security must focus on the needs of workers, producing products and services, protecting against compromise, maintaining high performance, and keeping costs to a minimum. This can be an incredibly challenging job, but it is one that many organizations have successfully tackled.

Network security has to start somewhere. It has to start with trust.

Trust is confidence in your expectation that others will act in your best interest. With computers and networks, trust is the confidence that other users will act in accordance with your organization's security rules. You trust that they will not attempt to violate stability, privacy, or integrity of the network and its resources. Trust is the belief that others are trustworthy.

Unfortunately, people sometimes violate our trust. Sometimes they do this by accident, oversight, or ignorance that the expectation even existed. In other situations, they violate our trust deliberately. Because these people can be either internal personnel or external hackers, it's difficult to know whom to trust.

So how can you answer the question, "Who is trustworthy?" You begin by realizing that trust is based on past experiences and behaviors. Trust is usually possible between people who already know each other. It's neither easy nor desirable to trust strangers. However, once you've defined a set of rules and everyone agrees to abide by those rules, you have established a conditional trust. Over time, as people demonstrate that they are willing to abide by the rules and meet expectations of conduct, then you can consider them "trustworthy."

Trust can also come from using a third-party method. If a trustworthy third party knows you and me, and that third party states that you and I are both trustworthy people, then you and I can assume that we can conditionally trust each other. Over time, someone's behavior shows whether the initial conditional trust was merited or not.

A common example of a third-party trust system is the use of digital certificates that a public certificate authority issues over the Internet. As shown in Figure 1-1, a user communicates with a Web e-commerce server. The user does not initially know whether a Web server is what it claims to be or if someone is "spoofing" its identity. Once the user examines the digital certificate issued to the Web server from the same certificate authority that issued the user's digital certificate, the user can then trust that the identity of the Web site is valid. This occurs because both the user and the Web site have a common, trustworthy third party that they both know.
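As a rough illustration of how client software relies on this kind of third-party trust, the sketch below uses Python's standard `ssl` module. It assumes a client that accepts the library's default settings, and it only inspects those settings; it does not open a connection or contact any real site.

```python
import ssl

# Build a client-side TLS context with the library's recommended defaults.
# The context loads the system's trusted certificate authorities.
context = ssl.create_default_context()

# The server must present a certificate that chains to a trusted authority.
requires_valid_cert = context.verify_mode == ssl.CERT_REQUIRED

# The name in the certificate must match the host the client asked for,
# which is what guards against a spoofed identity.
checks_host_name = context.check_hostname

print("certificate required:", requires_valid_cert)
print("host name checked:", checks_host_name)
```

A client built this way refuses a server whose certificate was not issued by an authority the client already trusts, which mirrors the conditional trust described above.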

Ultimately, network security is based on trust. Companies assume that their employees are trustworthy and that all of the computers and network devices are trustworthy. But not all trust is necessarily the same. You can (and probably should) operate with different levels or layers of trust. Those with a higher level of trust can be assigned greater permissions and privileges. If someone or something violates your trust, then you remove the violator's access to the secure environment. For example, companies terminate an untrustworthy employee or replace a defective operating system.

Determining who or what is trustworthy is an ongoing activity of every organization, from global corporations to a family's home network. In both cases, you offer trust to others on a conditional basis. This conditional trust grows over time based on adherence to or violation of desired and prescribed behaviors.

If a program causes problems, it loses your trust and you remove it from the system. If a user violates security, that person loses your trust and might have access privileges revoked. If a worker abides by the rules, your trust grows and privileges increase. If an Internet site does not cause harm, you deem it trustworthy and allow access to that site.

To review, trust is subjective, tentative, and changes over time. You can offer trust based on the reputation of a third party. You withhold trust when others violate the rules. Trust stems from actions in the past and can grow based on future behaviors.

In network security, trust is complex. Extending trust to others without proper background investigation can be devastating. A network is only as secure as its weakest link. You need to vet every aspect of a network, including software, hardware, configuration, communication patterns, content, and users to maintain network security. Otherwise, you will not be able to accomplish the security objectives of your organization's network.

Security objectives are goals an organization strives to achieve through its security efforts. Typically, organizations recognize three primary security objectives:

Confidentiality is the protection against unauthorized access, while providing authorized users access to resources without obstruction. Confidentiality ensures that data is not intentionally or unintentionally disclosed to anyone without a valid need to know. A job description defines the person's "need to know." If a person's tasks do not require access to a specific resource, then that person does not have a "need to know" for that resource.

Integrity is the protection against unauthorized changes, while allowing for authorized changes performed by authorized users. Integrity ensures that data remain consistent, both internally and externally. Consistent data do not change over time and remain in sync with the real world. Integrity also protects against accidents and hacker modification by malicious code.

Availability is the protection against downtime, loss of data, and blocked access, while providing consistent uptime, protecting data, and supporting authorized access to resources. Availability ensures that users can get their work done in a timely manner with access to the proper resources.
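One common way to detect unauthorized changes, and so support the integrity objective, is to store a keyed hash alongside the data and recompute it later. The following is a minimal sketch using Python's standard `hmac` and `hashlib` modules. The key, the record contents, and the function names are made up for illustration.

```python
import hashlib
import hmac

# Hypothetical secret for illustration; a real system would keep this
# in a protected store, not in source code.
SECRET_KEY = b"example-key-for-illustration-only"


def make_tag(data: bytes) -> str:
    """Return an HMAC-SHA256 tag that only key holders can recompute."""
    return hmac.new(SECRET_KEY, data, hashlib.sha256).hexdigest()


def is_unchanged(data: bytes, tag: str) -> bool:
    """Compare the data against its stored tag in constant time."""
    return hmac.compare_digest(make_tag(data), tag)


record = b"account=1001;balance=250.00"
tag = make_tag(record)

print(is_unchanged(record, tag))
print(is_unchanged(b"account=1001;balance=950.00", tag))
```

The first check succeeds because the record is untouched. The second fails because the balance was altered after the tag was created.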

Several other objectives support network security in addition to the three primary goals:

Authentication is the proof or verification of a user's identity before granting access to a secured area. This can occur both on a network and in the physical, real world. While the most common form of authentication is a password, password access is also the least secure method of authentication. Multi-factor authentication, therefore, is the method most network administrators prefer for secure logon.

Authorization is controlling what users are allowed and not allowed to do. Authorization is dictated by the organization's security structure, which may focus on discretionary access control (DAC), mandatory access control (MAC), or role-based access control (RBAC). Authorization restricts access based on need to know and users' job descriptions. Authorization is also known as access control.

Non-repudiation is the security service that prevents a user from being able to deny having performed an action. For example, non-repudiation prevents a sender from denying having sent a message. Auditing and public-key cryptography commonly provide non-repudiation services.

Privacy protects the confidentiality, integrity, and availability of personally identifiable or sensitive data. Private data often includes financial records and medical information. Privacy prevents unauthorized watching and monitoring of users and employees.
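The difference between authentication and authorization can be sketched in a few lines. The example below is an illustration only: it assumes a single password factor and a small role-based (RBAC) permission table, and the roles, permissions, and function names are hypothetical. Multi-factor authentication would add a second check to `authenticate`.

```python
import hashlib
import hmac
import os

# Hypothetical role-to-permission table (a simple RBAC model).
ROLE_PERMISSIONS = {
    "clerk": {"read_orders"},
    "manager": {"read_orders", "approve_refunds"},
}


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted, deliberately slow hash of the password."""
    return hashlib.pbkdf2_hmac("sha256", password.encode("utf-8"), salt, 100_000)


def make_user(password: str, role: str) -> dict:
    """Create a user record that stores a salt and hash, never the password."""
    salt = os.urandom(16)
    return {"salt": salt, "hash": hash_password(password, salt), "role": role}


def authenticate(user: dict, password: str) -> bool:
    """Authentication: verify the claimed identity."""
    candidate = hash_password(password, user["salt"])
    return hmac.compare_digest(candidate, user["hash"])


def authorize(user: dict, permission: str) -> bool:
    """Authorization: check what the user's role is allowed to do."""
    return permission in ROLE_PERMISSIONS.get(user["role"], set())


alice = make_user("correct horse battery staple", "clerk")
print(authenticate(alice, "correct horse battery staple"))
print(authenticate(alice, "wrong guess"))
print(authorize(alice, "read_orders"))
print(authorize(alice, "approve_refunds"))
```

Here the clerk proves her identity with the right password, is allowed to read orders, and is denied the refund approval that her job description does not require.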

Maintaining and protecting these security objectives can be a challenge. As with most difficult tasks, breaking security down into simpler or smaller components will help you to understand and ultimately accomplish this objective. To support security objectives, you need to know clearly what you are trying to protect.

In terms of security, the things you want to protect are known as assets. An asset is anything used to conduct business over a computer network. Any object, computer, program, piece of data, or other logical or physical component employees need to accomplish a task is an asset.

Assets do not have to be expensive, complicated, or large. In fact, many assets are relatively inexpensive, common-place, and variable in size. But no matter the characteristics, an asset needs protection. When assets are unavailable for whatever reason, people can't get their work done.

For most organizations, including SOHO (small office, home office) environments, the assets of most concern include business and personal data. If this information is lost, damaged, or stolen, serious complications result. Businesses can fail. Individuals can lose money. Identities can be stolen. Even lives can be ruined.

What causes these problems? What violates network security? The answer includes accidents, ignorance, oversight, and hackers. Accidents happen, including hardware failures and natural disasters. Poor training equals ignorance. Workers with the best of intentions damage systems if they don't know proper procedures or lack the necessary skills. Overworked and rushed personnel overlook issues that can result in asset compromise or loss. Malicious hackers can launch attacks and exploits against the network, seeking to gain access or just to cause damage.

"Hacking" originally meant tinkering or modifying systems to learn and explore. However, the term has come to refer to malicious and possibly criminal intrusion into and manipulation of computers. In either case, a malicious hacker or criminal hacker is a serious threat. Every network administrator should be concerned about hacking.

Some important aspects of security stem from understanding the techniques, methods, and motivations of hackers. Once you learn to think like a hacker, you may be able to anticipate future attacks. This enables you to devise new defenses before a hacker can successfully breach your organization's network.

So how do hackers think? Hackers think along the lines of manipulation or change. They look into the rules to create new ways of bending, breaking, or changing them. Many successful security breaches have been little more than slight variations or violations of network communication rules.

Hackers look for easy targets or overlooked vulnerabilities. Hackers seek out targets that provide them the most gain, often financial rewards. Hackers turn things over, inside out, and in the wrong direction. Hackers attempt to perform tasks in different orders, with incorrect values, outside the boundaries, and with a purpose to cause a reaction. Hackers learn from and exploit mistakes, especially mistakes of the network security professionals who fail to properly protect an organization's assets.

Why is thinking like a "hacker" critically important? Sun Tzu, a sixth-century BC Chinese general and author, stated in his famous military text The Art of War: "If you know the enemy and know yourself you need not fear the results of a hundred battles." Once you understand how hackers think, the tools they use, and the exploits and attack techniques they employ, you can create effective defenses to protect against them.

You've often heard that "the best defense is a good offense." While this statement may have merit elsewhere, most network security administrators do not have the luxury—or legal right—to attack hackers. Instead, you need to turn this strategic phrase around: The best offense is a good defense. While network security administrators cannot legally or ethically attack hackers, they are fully empowered to defend networks and assets against hacker onslaughts.

Chapter 4 discusses hacking and other threats in greater detail.

Hackers look for any and every opportunity to exploit a target. No aspect of an IT infrastructure is without risk or immune to the scrutiny of a hacker. When thinking like a hacker, analyze every one of the seven domains of a typical IT infrastructure (Figure 1-2) for potential vulnerabilities and weaknesses. Be thorough. A hacker only needs one crack in the protections to begin chipping away at the defenses. You need to find every possible breach point to secure it and harden the network.

The seven domains of a typical IT infrastructure are:

User Domain—this domain refers to actual users whether they are employees, consultants, contractors, or other third-party users. Any user who accesses and uses the organization's IT infrastructure must review and sign an acceptable use policy (AUP) prior to being granted access to the organization's IT resources and infrastructure.

Workstation Domain—this domain refers to the end user's desktop devices such as a desktop computer, laptop, VoIP telephone, or other end-point device. Workstation devices typically require security countermeasures such as antivirus, anti-spyware, and vulnerability software patch management to maintain the integrity of the device.

LAN Domain—this domain refers to the physical and logical local area network (LAN) technologies (e.g., 100Mbps/1000Mbps switched Ethernet, 802.11-family of wireless LAN technologies) used to support workstation connectivity to the organization's network infrastructure.

LAN-to-WAN Domain—this domain refers to the organization's internetworking and interconnectivity point between the LAN and the WAN network infrastructures. Routers, firewalls, demilitarized zones (DMZs), and intrusion detection systems (IDS) and intrusion prevention systems (IPS) are commonly used as security monitoring devices in this domain.

Remote Access Domain—this domain refers to the authorized and authenticated remote access procedures for users to remotely access the organization's IT infrastructure, systems, and data. Remote access solutions typically involve 128-bit SSL-encrypted remote browser access or encrypted VPN tunnels for secure remote communications.

WAN Domain—organizations with remote locations require a wide area network (WAN) to interconnect them. Organizations typically outsource WAN connectivity from service providers for end-to-end connectivity and bandwidth. This domain typically includes routers, circuits, switches, firewalls, and equivalent gear at remote locations sometimes under a managed service offering by the service provider.

Systems/Applications Domain—this domain refers to the hardware, operating system software, database software, client/server applications, and data that is typically housed in the organization's data center and/or computer rooms.

Recognizing that the potential for compromise exists throughout an organization is the first step. The next step is to comprehend the goals of network security.

Network security goals vary from organization to organization. Often they include a few common mandates:

Ensure the confidentiality of resources

Protect the integrity of data

Maintain availability of the IT infrastructure

Ensure the privacy of personally identifiable data

Enforce access control

Monitor the IT environment for violations of policy

Support business tasks and the overall mission of the organization

Whatever your organization's security goals, to accomplish them you need to write down those goals and develop a thorough plan to execute them. Without a written plan, security will be haphazard at best and will likely fail to protect your assets. With a written plan, network security is on the path to success. Once you define your security goals, these goals will become your organization's roadmap for securing the entire IT infrastructure.

Organizations measure the success of network security by how well the stated, written security goals are accomplished or maintained. In essence, this becomes the organization's baseline definition for information systems security. For example, if private information on the network does not leak to outsiders, then your efforts to maintain confidentiality were successful. Or, if employees are able to complete their work on time and on budget, then your efforts to maintain system availability were successful.

If violations take place that compromise your assets or prevent maintaining a security goal, however, then network security was less than successful. But let's face it, security is never perfect. In fact, even with well-designed and executed security, accidents, mistakes, and even intentional harmful exploits will dog your best efforts. The perfect security components do not exist. All of them have weaknesses, limitations, backdoors, work-arounds, programming bugs, finite areas of effect, or some other exploitable element.

Fortunately, though, successful security doesn't rely on the installation of just a single defensive component. Instead, good network security relies on an interweaving of multiple, effective security components. You don't have just one lock on your house. By combining multiple protections, defenses, and detection systems you can rebuff many common, easy hacker exploits.
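The idea of interweaving several components can be sketched as a chain of independent checks, where a request is admitted only if every layer accepts it. This is a toy model, not a real firewall or scanner: the three checks, the request fields, and all names are hypothetical stand-ins for separate security components.

```python
from typing import Callable, Dict, List

Request = Dict[str, object]


def firewall_allows(req: Request) -> bool:
    """Layer 1: accept traffic only on an approved port."""
    return req.get("port") == 443


def user_is_authenticated(req: Request) -> bool:
    """Layer 2: require a known user on the request."""
    return bool(req.get("user"))


def payload_is_clean(req: Request) -> bool:
    """Layer 3: reject content flagged as malicious (simplified to a keyword)."""
    return "malware" not in str(req.get("payload", ""))


LAYERS: List[Callable[[Request], bool]] = [
    firewall_allows,
    user_is_authenticated,
    payload_is_clean,
]


def admit(req: Request) -> bool:
    """Admit a request only if every layer independently accepts it."""
    return all(layer(req) for layer in LAYERS)


def failed_layers(req: Request) -> List[str]:
    """Name the layers that rejected the request, for monitoring."""
    return [layer.__name__ for layer in LAYERS if not layer(req)]


good = {"port": 443, "user": "alice", "payload": "quarterly report"}
bad = {"port": 443, "user": "alice", "payload": "malware dropper"}
print(admit(good), failed_layers(good))
print(admit(bad), failed_layers(bad))
```

The second request passes the first two layers and is stopped by the third, which is the point of layering: a weakness in one component does not have to become a breach.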

Network security success is not about preventing all possible attacks or compromises. Instead, you work to continually improve the state of security, so that in the future the network is better protected than it was in the past. As hackers create new exploits, security professionals learn about them, adapt their methods and systems, and establish new defenses. Successful network security is all about constant vigilance, not creating an end product. Security is an ongoing effort that constantly changes to meet the challenge of new threats.

A clearly written security policy establishes tangible goals. Without solid and defined goals, your security efforts would be chaotic and hard to manage. Written plans and procedures focus security efforts and resources on the most important tasks to support your organization's overall security objectives.

A written security policy is a road map. With this map, you can determine whether your efforts are on track or going in the wrong direction. The plan provides a common reference against which security tasks are compared. It serves as a measuring tool to judge that security efforts are helping rather than hurting maintenance of your organization's security objectives.

With a written security policy, all security professionals strive to accomplish the same end: a successful, secure work environment. By following the written plan, you can track progress so that you install and configure all the necessary components. A written plan validates what you do, defines what you still need to do, and guides you on how to repair the infrastructure when necessary.

Without a written security policy, you cannot trust that your network is secure. Without a written security policy, workers won't have a reliable guide on what to do and judging security success will be impossible. Without a written policy, you have no security.

Things invariably go wrong. Users make mistakes. Malicious code finds its way into your network. Hackers discover vulnerabilities and exploit them. In anticipating problems that threaten security, you must plan for the worst.

This type of planning has many names, including contingency planning, worst-case scenario planning, business continuity planning, disaster recovery planning, and continuation of operations planning. The name is not important. What's crucial is that you do the planning itself.

When problems occur, shift into response gear: respond, contain, and repair. Respond to all failures or security breaches to minimize damage, cost, and downtime. Contain threats to prevent them from spreading or affecting other areas of the infrastructure. Repair damage promptly to return systems to normal status quickly and efficiently. Remember the goals of security are confidentiality, integrity, and availability. Keep these foremost in mind as you plan for the worst.

The key purpose of planning for problems is to be properly prepared to protect your infrastructure. With a little luck, a major catastrophe won't occur. But better to prepare and not need the response plan, than to allow problems to cause your business to fail.

Network security is the responsibility of everyone who uses the network. Within an organization, no one has the luxury of ignoring security rules. This applies to global corporations as well as home networks. Every person is responsible for understanding his or her role in supporting and maintaining network security. The weakest link rule applies here: If only one person fails to fulfill this responsibility, security for all will suffer.

Senior management has the ultimate and final responsibility for security. For good reason—senior management is the most concerned about the protection of the organization's assets. Without the approval and support of senior management, no security effort can succeed. Senior management must ensure the creation of a written security policy that all personnel understand and follow.

Senior management also assigns the responsibility for designing, writing, and executing the security plan to the IT staff. Ideally, the result of these efforts is a secure network infrastructure. The security staff, in turn, must thoroughly manage all assets, system vulnerabilities, imminent threats, and all pertinent defenses. Their task is to design, execute, and maintain security throughout the organization.

In their role as overseers of groups of personnel, managers and supervisors must ensure that employees have all the tools and resources to accomplish their work. Managers must also ensure that workers are properly trained in skills, procedures, policies, boundaries, and restrictions. Employees can mount a legitimate legal case against an organization that requires them to perform work for which they are not properly trained.

Network administrators manage all the organization's computer resources. Resources include file servers, network access, databases, printer pools, and applications. The network administrator's job is to ensure that resources are functional and available for users while enforcing confidentiality and network integrity.

An organization's workers are the network users and operators. They ultimately do the work the business needs to accomplish. Users create products, provide services, perform tasks, input data, respond to queries, and much more. Job descriptions may apply to a single user or a group of users. Each job description defines a user's tasks. Users must perform these tasks within the limitations of network security.

Auditors watch for problems and violations. Auditors investigate the network, looking for anything not in compliance with the written security policy. Auditors watch the activity of systems and users to look for violations, trends toward bottlenecks, and attempts to perform violations. The information uncovered by auditors can help improve the security policy, adjust security configurations, or guide investigators toward apprehending security violators.

All of these roles exist within every organization. Sometimes different individuals perform these roles. In other situations, a single person performs all of these roles. In either case, these roles are essential to the creation, maintenance, and improvement of security.

As you design a network, you need to evaluate every aspect in light of its security consequences. With limited budgets, personnel, and time, you must also minimize risk and maximize protection. Consider how each of the following network security aspects affects security for large corporations, small companies, and even home-based businesses.

A workgroup is a form of networking in which each computer is a peer or equal. Peers are equal in how much power or controlling authority any one system has over the other members of the same workgroup. All workgroup members are able to manage their own local resources and assets, but not those of any other workgroup member.

Workgroups are an excellent network design for very small environments, such as home family networks or very small companies. In most cases, a workgroup comprises fewer than 10 computers and rarely contains more than 20 computers. No single rule dictates the size of a workgroup. Instead, the administrative overhead of larger workgroups encourages network managers to move to a client/server configuration.

Figure 1-3 shows a typical workgroup configuration. In this example, a switch interconnects the four desktop workgroup members as well as an Internet connection device and a wireless access point. Additional clients can connect wirelessly via the access point or wired via a cable connecting to the switch.

Workgroups do not have a central authority that controls or restricts network activity or resource access. Instead, each individual workgroup member makes the rules and restrictions over resources and assets. The security defined for one member does not apply to nor affect any other computer in the workgroup.

Due to system-by-system-based security, a worker or a workgroup member needs to have a user account defined on each of the other workgroup members to access resources on those systems. Each of these accounts is technically a unique user account, even if created by using the same characters for the username and password.

This results in either several unique user accounts with different names and different passwords or several unique user accounts with the same name and same password. In either case, security is poor. In the former case, the user must remember several sets of credentials. This often results in the user writing down the credentials. In the latter case, an intruder need compromise only one set of credentials.
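A little arithmetic shows why this system-by-system approach becomes unmanageable as a workgroup grows. The sketch below assumes one user per computer and assumes every user needs access to every other member's resources. Both are simplifying assumptions, and the function names are illustrative.

```python
def workgroup_accounts(members: int) -> int:
    """Accounts needed on other systems: each user has one on every other member."""
    return members * (members - 1)


def central_accounts(members: int) -> int:
    """With a central authority, each user needs only a single account."""
    return members


for n in (4, 10, 20):
    print(n, workgroup_accounts(n), central_accounts(n))
```

Under these assumptions, a 4-member workgroup needs 12 cross-system accounts, a 10-member workgroup needs 90, and a 20-member workgroup needs 380, which helps explain why larger environments move to centrally managed accounts.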

This lack of central authority is both a strength and weakness of workgroups. This characteristic is a strength in that each user of each computer can make his or her own choices about sharing resources with others. However, this is at the same time a weakness because of the inconsistent levels of access.

Workgroups are easy to create. Often the default network configuration of operating systems is to be a member of a workgroup. A new workgroup is created by just defining a unique name on a computer. Once one computer names the workgroup, it now exists. Other computers become members of the new workgroup just by using the same name. Since workgroups lack a central authority, anyone can join or leave a workgroup at any time. This includes unauthorized systems owned by rogue employees or external parties.

Most workgroups use only basic resource-share protections, fail to use encrypted protocols, and are lax on monitoring intrusions. While imposing some security on workgroups is possible, usually each workgroup member is configured individually. Fortunately, since workgroups are small, this does not represent a significant amount of effort.

SOHO stands for small office, home office. SOHO is a popular term that describes smaller networks commonly found in small businesses, often deployed in someone's home, garage, portable building, or leased office space. A SOHO environment can be a workgroup or a client/server network. Usually a SOHO network implies purposeful design with business and security in mind.

SOHO networks generally are more secure than a typical workgroup, usually because a manager or owner enforces network security. Security settings defined on each workgroup member are more likely to be consistent when the workgroup has a security administrator. Additionally, SOHO networks are more likely to employ security tools such as antivirus software, firewalls, and auditing.

A client/server network is a form of network where you designate some computers as servers and others as clients. Servers host resources shared with the network. Clients access resources and perform tasks. Users work from a client computer to interact with resources hosted by servers. In a client/server network, access is managed centrally from the servers. Thus, consistent security is easily imposed across all network members.

Figure 1-4 shows a possible basic layout of a client/server network. In this example, three servers host the resources, such as printers, Internet connectivity, and file storage shared with the network. Both wired and wireless clients are possible. Switches interconnect all nodes. Client/server networks are more likely to use hardware or appliance firewalls.

Note

This book will explore more complex network layouts, designs, and components in later sections.

Client/server networks also employ single sign-on (SSO). SSO allows for a single but stronger set of credentials per user. With SSO, each user must perform authentication to gain access to the client and the network. Once the user has logged in, access control manages resource use. In other words, client/server authentication with SSO is often more complex than workgroup authentication—but it's more secure. Users only need to log in once, not every time they contact a resource host server.
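The "log in once" behavior can be sketched with a signed token: a login service issues the token after one successful authentication, and resource servers verify the token instead of asking for the password again. This is a simplified illustration and not how any particular SSO product works. The shared key and function names are hypothetical, and real systems add expiry, replay protection, and stronger key management.

```python
import hashlib
import hmac
from typing import Optional

# Hypothetical key shared by the login service and the resource servers.
SSO_KEY = b"example-sso-key-for-illustration-only"


def _sign(username: str) -> str:
    """Compute the signature that binds a token to a username."""
    return hmac.new(SSO_KEY, username.encode("utf-8"), hashlib.sha256).hexdigest()


def issue_token(username: str) -> str:
    """Login service: called once, after the user has authenticated."""
    return f"{username}:{_sign(username)}"


def verify_token(token: str) -> Optional[str]:
    """Resource server: return the username if the token is genuine, else None."""
    username, _, signature = token.rpartition(":")
    if hmac.compare_digest(signature, _sign(username)):
        return username
    return None


token = issue_token("alice")
print(verify_token(token))
print(verify_token("alice:forged-signature"))
```

Each server that trusts the login service can check the same token, so the user authenticates once while access control on each resource still applies.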

Because of their complexity, client/server networks are generally more secure than SOHO and workgroup networks. But complexity alone is not security. Instead, because they are more complex, client/server networks require more thorough design and planning. Security is an important aspect of infrastructure planning and thus becomes integrated into the network's design.

Client/server networks are not necessarily secure, because you can deploy a client/server network without any thought toward security. But most organizations understand that if they overlook network security, they are ensuring their ultimate technological downfall. Security is rarely excluded from the deployment process. And some networks are by nature more secure than others.

LAN stands for local area network. A LAN is a network within a limited geographic area. This means that a LAN is located in a single physical location rather than spreading across multiple locations. Some LANs are quite large, while others are very small. A more distinguishing characteristic of a LAN is that all of its segments or links are owned and controlled by the organization. A LAN does not contain or use any leased or externally owned connections.

WAN stands for wide area network. A WAN is a network not limited by any geographic boundaries. This means that a WAN can span a few city blocks, reach across the globe, and even extend into outer space. A distinguishing characteristic of a WAN is that it uses leased or external connections and links. Most organizations rely on telecommunication service providers (often referred to as telcos) for WAN circuits and links to physical buildings and facilities, including the last-mile connection to the physical demarcation point.

Both LANs and WANs can be secure or insecure. They are more likely to be secure if a written security policy guides their use. With a LAN, the owner of the network has the sole responsibility of ensuring that security is enforced. With a WAN, the leasing entity must select a telco that has a secure WAN infrastructure and incorporate service level agreements (SLAs) that define the level of service and performance the telco is to provide each month. In most cases, WAN data is secure only if the data sent across leased lines is encrypted before transmission. This encryption is the responsibility of the data owner, not the telecommunications service provider, unless the provider offers it as a value-added service.

"Thin client computing," also known as terminal services, is an old computing idea that has made a comeback in the modern era. In the early days of computers, the main computing core, commonly called a mainframe, was controlled through an interface called a terminal. The terminal was nothing more than a video screen (usually monochrome) and a keyboard. The terminal had no local processing or storage capabilities. All activities took place on the mainframe and the results appeared on the screen of the terminal.

With the advent of personal computers (PCs), a computer at a worker's desk offered local processing and storage capabilities. These PCs became the clients of client/server networks. Modern networking environments can offer a wide range of options for end users. Fully capable PCs used as workstations or client systems are the most common. PCs can run thin-client software, which emulates the terminal system of the past. That means they perform all tasks on the server or mainframe system and use the PC only as a display screen with a keyboard and mouse. Even modern thin client terminals can connect into a server or mainframe without using a full PC.

Remote control is the ability to use a local computer system to remotely take over control of another computer over a network connection. In a way, this is the application of the thin client concept on a modern fully capable workstation to simulate working against a mainframe or to virtualize your physical presence.

With a remote control connection, the local monitor, keyboard, and mouse control a remote system. This looks and feels like you are physically present at the keyboard of the remote system, which could be located in another city or even on the other side of the world. Every action you perform locally takes place at that remote computer via the remote control connection. The only limitations are the speed of the intermediary network link and the inability to physically insert or remove media such as a flash drive or to use peripherals such as a printer.

You might consider remote control as a form of software-based thin client or terminal client. In fact, many thin client and terminal client products sell as remote control solutions.

Many modern operating systems include remote control features, such as Remote Desktop found in most versions of Windows. Once enabled, a Remote Desktop Connection remotely controls another Windows system from across the network. Chapter 14 discusses Remote Desktop in more detail.

Remote access is different from remote control. A remote access link enables access to network resources using a WAN link to connect to the geographically distant network. In effect, remote access creates a local network link for a system not physically local to the network. Over a remote access connection, a client system can technically perform all the same tasks as a locally connected client, with the only difference being the speed of the connection. Network administrators can impose restrictions on what resources and services a remote access client can use.

Remote access originally took place over dial-up telephone links using modems. Today, remote access encompasses a variety of connection types including ISDN, DSL, cable modem, satellite, mobile broadband, and more.

In most cases, a remote access connection links from a remote client back to a primary network. A remote access server (RAS) accepts the inbound connection from the remote client. Once the connection goes through, the remote client now interacts with the network as if it were locally connected.

Another variant of remote connections is the virtual private network (VPN). A VPN is a form of network connection created over other network connections. In most cases, a VPN link connects a remote system and a LAN, but only after a normal network connection links to an intermediary network.

In Figure 1-5, a LAN has a normal network connection to the Internet and a remote client has established a normal network connection to the Internet as well. These two connections work independently of each other. The LAN's connection is usually a permanent or dedicated connection supporting both inbound and outbound activities with the Internet. The remote client's connection to the Internet can be dedicated or non-dedicated. In the latter case, the connection precedes the VPN creation. Once both endpoints of the future VPN link have a connection to the intermediary network (in this example, the Internet), the VPN can be established.

In Figure 1-6, a new network connection connects the remote client to the LAN across the intermediary network. This new network connection is the VPN.

A VPN is a mechanism to establish a remote access connection across an intermediary network, often the Internet. VPNs allow for cheap long-distance connections over the Internet, as both endpoints only need a local Internet link. The Internet itself serves as a "free" long-distance carrier.

A VPN uses "tunneling" or encapsulation protocols. Tunneling protocols encase the original network protocol so that it can traverse the intermediary network. In many cases, the tunneling protocol employs encryption so that the original data securely traverses the intermediary network.
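The encapsulation idea can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not a real tunneling protocol: the `Packet` class, the `encapsulate` and `decapsulate` helpers, and all of the addresses are hypothetical, and no encryption is applied. A production VPN would typically encrypt the inner packet before placing it inside the outer one.

```python
import json
from dataclasses import asdict, dataclass


@dataclass(frozen=True)
class Packet:
    src: str      # source address
    dst: str      # destination address
    payload: str  # data carried by the packet


def encapsulate(inner: Packet, tunnel_src: str, tunnel_dst: str) -> Packet:
    """Wrap the entire inner packet as the payload of an outer packet."""
    return Packet(tunnel_src, tunnel_dst, json.dumps(asdict(inner)))


def decapsulate(outer: Packet) -> Packet:
    """Recover the original packet at the far end of the tunnel."""
    return Packet(**json.loads(outer.payload))


# Hypothetical addresses: a remote client reaching a private LAN host
# through a VPN gateway with a public address.
original = Packet("10.8.0.5", "192.168.1.20", "quarterly report")
outer = encapsulate(original, "203.0.113.9", "198.51.100.1")
restored = decapsulate(outer)

print(outer.dst)             # the intermediary network sees only the tunnel endpoints
print(restored == original)  # the inner packet arrives unchanged
```

The intermediary network routes on the outer addresses only. Because this sketch leaves the inner packet readable inside the payload, it also shows why the encryption step matters.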

A boundary network is a subnetwork or "subnet" positioned on the edge of a LAN. A boundary network isolates certain activities from the production environment, such as programming or research and development. External users can also access resources hosted in a boundary network. Such a subnet is known as a demilitarized zone (DMZ) or "extranet."

A DMZ is a boundary network that hosts resource servers for the public Internet. An extranet is a boundary network that hosts resource servers for a limited and controlled group of external users, such as business partners, suppliers, distributors, contractors, and so forth.

A DMZ subnet is a common network design component used to host public information services, such as Web sites. The DMZ concept allows for anyone on the public Internet to access resources without needing to authenticate. But at the same time, the DMZ provides some filtering of obvious malicious traffic and prevents Internet users from accessing the private LAN.

An extranet subnet allows companies that need to exchange data with external partners a safe place for that activity. An extranet is secured so only the intended external users can access it. Often, accessing an extranet requires VPN connections. An extranet configuration keeps public users from the Internet out and keeps the external partners out of the private LAN.

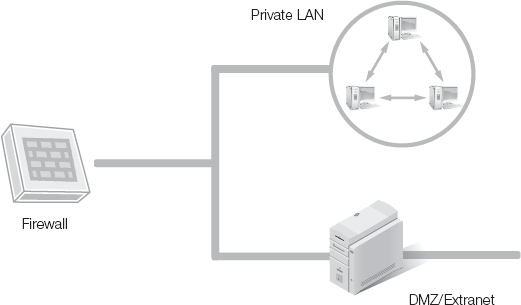

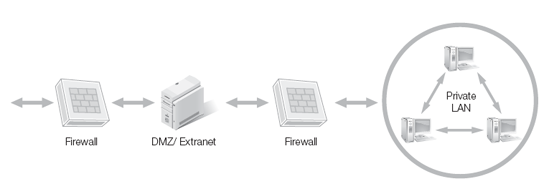

You can deploy both DMZ and extranet subnets in different ways. Two of the most common designs are a screened subnet using a three-homed firewall (Figure 1-7) and N-tier deployment (Figure 1-8). A screened subnet using a three-homed firewall creates a network segment for the private LAN and a network segment for the DMZ or extranet. The three-homed firewall serves as a filtering device as well as a router to control traffic entering these two segments.

The N-tier deployment creates a series of subnets separated by firewalls. The DMZ or extranet subnet serves as a buffer network between the Internet and the private LAN.
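One way to picture the filtering role of a three-homed firewall is as a table of permitted flows between its three segments. The sketch below is illustrative only. The zone names and the `permits` helper are hypothetical, and the exact set of permitted flows is an assumption that a real security policy would define.

```python
# Hypothetical zone-to-zone policy for a three-homed firewall.
# Any flow not listed here is denied.
ALLOWED_FLOWS = {
    ("internet", "dmz"),  # public users reach the public servers
    ("lan", "dmz"),       # assumption: internal staff may reach DMZ servers
    ("lan", "internet"),  # assumption: internal users may browse outward
}


def permits(src_zone: str, dst_zone: str) -> bool:
    """Return True only if the policy explicitly lists this flow."""
    return (src_zone, dst_zone) in ALLOWED_FLOWS


print(permits("internet", "dmz"))  # True
print(permits("internet", "lan"))  # False
print(permits("dmz", "lan"))       # False
```

Denying traffic from the DMZ into the LAN means that a compromised public server does not automatically give an attacker a path to the private network.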

You need to evaluate every network design or layout for its security strengths and weaknesses. While there are no perfect deployments, some provide better security for a given set of parameters than others. For example, a network with only a single connection to the Internet is easier to secure than a network with multiple connections to the Internet.

When you are designing a network, consider the paths that malicious traffic could use to reach the interior of your private LAN. The more potential pathways, the more challenging securing the network will be. However, consider redundancy, as well. If your primary connection to the Internet fails, do you have an alternate connection? Remember, security is not just about preventing malicious events but also about ensuring that users can perform essential business tasks.

Try to avoid network designs that have a single point of failure. Always try to have redundant options to ensure that your mission-critical functions can take place. Keep in mind that bottlenecks are still likely to happen even with redundant pathways. Over time, monitor traffic and use levels on every segment across the network to look for trends towards reduced throughput or productivity. A bottleneck may at first be a slight hindrance to high performance and productivity, but it can later become a form of denial of service (DoS).

Another consideration is traffic control and filtering. Blocking or allowing traffic is an important element in network security. You control what traffic enters or leaves the network. Traffic filtering often takes place at network choke points. A choke point is a form of bottleneck and is a single controlled pathway between two different levels of network trust where a firewall or other filtering device blocks or allows traffic based on a set of rules. Thus, rather than being only a benefit for filtering, a choke point can slow throughput or become a target for DoS attacks.

Another network design issue is the location of authorized users. Often we assume valid users are internal users. With the proliferation of telecommuting and outsourcing, however, valid users can often be external users as well. Realizing this, you must design networks to support inbound connections from valid external users. This can involve traditional remote access connections, but more likely will use VPN links. VPNs provide connections for remote users that function like local connections and encryption to keep the content of those connections confidential.

Wired networks offer a form of security that wireless networks lack. That security is the direct physical connection to a wired or cabled network. A hacker requires physical access to your facility or building. Usually, access control of the building can sufficiently prevent most external parties from accessing your private LAN.

Realize, however, that if you allow remote connection via telephone modem, high-speed broadband, or even basic Internet service, then you lose the advantage of a physical access limitation. Once remote access is allowed, the benefits of physical isolation disappear.

The same is true if you allow wireless connections into the network. Wireless networking grants valid and unknown users the ability to interact with the network. This completely eliminates the need to be physically present in the building to connect to the network. With the right type of antenna, an attacker could be over a mile away from your office building and still be able to affect your wireless network.

To regain some of the security offered by physical isolation, try to incorporate logical isolation into your network design. Isolate all remote access and wireless access points from the main wired network. You can achieve this by using separate subnets and filtering communications using firewalls. While this design does not offer the same level of security as physical isolation, the arrangement provides a significant improvement over having no control over remote or wireless connections.

All remote connections should go through a rigorous gauntlet of verification before you grant access to the internal LAN. Think of a castle design in the Middle Ages that used multiple layers of defense: a moat, a drawbridge, thick riveted walls, an inner battlement, and finally, a strong keep or inner fortress. Your multi-layer defensive design should include multi-factor authentication and communication encryption, such as a VPN. Additional checks can include verification of operating system and patch level, confirmation of physical or logical location origin (such as caller ID, MAC address, or IP address), limitations to time of day, and limitation on protocols above the transport layer. Any intruder would need to circumvent layer after layer, making intrusion more and more difficult.
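The layered checks described above can be sketched as a sequence of independent tests that a remote connection must pass. This is a minimal illustration, not a real access control product: the `RemoteRequest` fields, the thresholds, the approved address range, and the permitted hours are all hypothetical values chosen for the example.

```python
from dataclasses import dataclass
from datetime import time
from ipaddress import ip_address, ip_network
from typing import List

# Hypothetical policy values.
MIN_PATCH_LEVEL = 7
ALLOWED_NETWORKS = [ip_network("198.51.100.0/24")]
OPEN, CLOSE = time(6, 0), time(22, 0)


@dataclass(frozen=True)
class RemoteRequest:
    factors_verified: int  # number of authentication factors that passed
    encrypted: bool        # True if the session runs over an encrypted link
    patch_level: int       # patch level reported by the client
    source_ip: str         # logical origin of the connection
    local_time: time       # time of day of the attempt


def failed_checks(req: RemoteRequest) -> List[str]:
    """Return the name of every layer the request fails to pass."""
    failures = []
    if req.factors_verified < 2:
        failures.append("multi-factor authentication")
    if not req.encrypted:
        failures.append("encrypted channel")
    if req.patch_level < MIN_PATCH_LEVEL:
        failures.append("patch level")
    if not any(ip_address(req.source_ip) in net for net in ALLOWED_NETWORKS):
        failures.append("source address")
    if not (OPEN <= req.local_time <= CLOSE):
        failures.append("time of day")
    return failures


def grant_access(req: RemoteRequest) -> bool:
    """Grant access only when every layer has been passed."""
    return not failed_checks(req)


good = RemoteRequest(2, True, 9, "198.51.100.25", time(9, 30))
late = RemoteRequest(2, True, 9, "198.51.100.25", time(23, 15))
print(grant_access(good))   # True
print(failed_checks(late))  # ['time of day']
```

Because every layer is evaluated separately, defeating one check (for example, stealing a password) still leaves the remaining checks in the intruder's way.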

Note

An attack known as "Van Eck phreaking" allows an attacker to eavesdrop on electronic devices from a distance. This technique is not perfect or simple to perform, but has been demonstrated on LCD and CRT monitors as well as keyboard cables. With minor shielding, you can eliminate most of the risk from such an attack.

When deploying a new network or modifying an existing infrastructure, carefully evaluate impacts on security and security's impact on the infrastructure. When you or your team overlook or sidestep standard security practices, good business stops. Business interruptions can result not only in lost profits, but also in lost opportunities and even in lost jobs. If the compromise is serious enough, the business might not recover.

The threats facing business are numerous and constantly changing. Often these issues arise daily. Malicious code, information leakage, zero day exploits, unauthorized software, unethical employees, and complex network infrastructures are just a few of the concerns that every organization and network manager faces.

Malicious code can make its way into a computer through any communication channel. This includes file transfers, e-mail, portable device syncing, removable media, and Web sites. Precautions against malicious code include network traffic filtering with a firewall, anti-malware scanning, and user behavior modification.

Information leakage stems from malicious employees who purposefully release internal documentation to the public. It can also result from accidents, when a storage device is lost, recycled, donated, stolen, or thrown away. Or, it occurs when users accidentally publish documents to P2P file sharing services or Web sites. Precautions against information leakage include doing thorough background checks on employees, using the principle of least privilege, detailed auditing and monitoring of all user activity, classifying all information and controlling communication pathways, using more stringent controls on use of portable devices, and enforcing zeroization procedures for storage devices.

Zero day exploits are new and previously unknown attacks for which no current specific defenses exist. Think of them as surprise attacks on your defenses. "Zero day" refers to the newness of an exploit, which may be known in the hacker community for days or weeks, but about which vendors and security professionals are just learning.

The zero day label comes from the idea that work to develop a patch begins the moment a vendor learns of a problem. The moment of discovery of the new exploit is called "day zero." No specific defenses against zero day attacks exist, but general security management, use of intrusion detection and intrusion prevention, along with detailed logging and monitoring can assist in discovering and preventing new attacks quickly. Once you know of an attack or exploit, you can begin taking steps to contain damage or minimize the extent of the compromise.

Unauthorized software is any piece of code that a user chooses to run on a client system that was neither approved nor provided by the company. Not all unauthorized software is directly problematic, but you should prevent its use for many reasons. Such software might be a waste of time and productivity that costs the company money, time, and effort. The software could be a license violation. The software could include hidden malicious components, known or unknown to the user, which could compromise the security of the network. Steps you can take to prevent the use of unauthorized software should include limiting installation privileges of normal users and using white lists to block the execution of any program not on the approved list.
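The approved-list idea can be sketched as a lookup performed before a program is allowed to run. The example below identifies programs by a SHA-256 fingerprint of their contents. That choice is an assumption made for illustration, since products differ in how they identify approved software, and the byte strings stand in for real program files.

```python
import hashlib


def fingerprint(program_bytes: bytes) -> str:
    """Identify a program by the SHA-256 hash of its contents."""
    return hashlib.sha256(program_bytes).hexdigest()


# Hypothetical approved list, built from a placeholder "program".
APPROVED_FINGERPRINTS = {fingerprint(b"approved payroll client v1")}


def may_execute(program_bytes: bytes) -> bool:
    """Allow execution only for programs on the approved list."""
    return fingerprint(program_bytes) in APPROVED_FINGERPRINTS


print(may_execute(b"approved payroll client v1"))  # True
print(may_execute(b"game downloaded from a forum"))  # False
```

Anything not explicitly approved is refused, including a modified copy of an approved program, because any change to the contents changes the fingerprint.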

Unethical employees purposefully violate the stated rules and goals of the organization. These employees often believe the rules are not important, do not really apply to them, or are not really enforced. Most believe they will never get caught. When users violate the mission and goals of the organization, consequences could be catastrophic.

Users are the final link in security and are often the weakest link in network security. If a user chooses to violate a security policy and release information to the public or execute malicious code, the results could devastate the organization or land the perpetrator in court. Methods of preventing unethical employees from doing damage include better background screening, detailed auditing and monitoring of all user activity, and regular management oversight and job performance reviews. When you discover a problematic person, you might be able to grant the employee a second chance after re-training, but in many cases, the safest choice for the organization is to terminate employment.

Complex network infrastructures lend themselves to complex vulnerabilities. The larger a network becomes, the more servers, clients, network devices, and segments it includes. The sheer number of "moving parts" almost guarantees that something is bound to be misconfigured, be improperly installed, lack current firmware or patches, have a bottleneck, or be used incorrectly. Any of these conditions could result in a vulnerability that internal or external attackers can exploit. The larger and more complex a network, the more thoroughly the security team needs to watch over the infrastructure and investigate every symptom, trend, or alert. Preventing complexity from becoming a liability involves detailed planning, careful implementation, regular security management, and constant review of the effectiveness of the infrastructure.

Studies have shown that most threats come from internal sources, but too many organizations focus on external sources and discount the internal threats. A better stance is to count all threats—regardless of their source—as worthy of investigation. Once a potential threat is understood, its risk, potential loss, and likelihood can be better understood and evaluated.

One of the most obvious external threats is the Internet. The Internet is a global network linking people and resources with high-speed real-time communications. Unfortunately, this wonderful infrastructure also makes great abuse more possible. Once your company installs an Internet connection, the world is at your door—or rather, at the fingertips of every employee. That world includes both potential customers and potential attackers.

Without a global communication infrastructure, hackers had to be physically present, wired in or near your building, to launch attacks. With the advent of the Internet and wireless technology, any hacker anywhere can initiate attempts to breach your network security. It's helpful, therefore, to think of the Internet as a threat. It is not a threat you should lightly dismiss, but neither should you discard the Internet as a powerful tool. The benefits of Internet access are well worth the effort and expense you should expend to defend against its negative features.

Some of the best defenses against Internet threats include a well-researched, written security policy; thoroughly trained personnel; use of firewalls to filter traffic; intrusion detection and prevention systems; use of encrypted communications (such as VPNs); and thorough auditing and monitoring of all user and node activity.

Again, perfect security solutions don't exist. Some form of attack, compromise, or exploit can get past any single defense. The point of network security is to interweave and interconnect multiple security components to construct a multi-faceted scheme of protection. This is often called multiple layers of defense or "defense in depth."

The goal is to balance the strengths and weaknesses of multiple security components. The ultimate functions of network security are to lock things down in the best way possible, then monitor for all attempts to violate the established defense. Since the perfect lock doesn't exist, improve on the best locks available with auditing and monitoring. Knowing this, your next step is to understand the common network security components and their uses.

When considering the deployment of a network or the modification of an existing network, evaluate each network component to determine its security strengths and weaknesses. This section discusses many common network security components.

A node is any device on the network. This includes client computers, servers, switches, routers, firewalls, and anything with a network interface that has a MAC address. A Media Access Control (MAC) address is the 48-bit physical hardware address of a network interface card (NIC) assigned by the manufacturer. A node is a component that can be communicated with, rather than only through or across. For example, network cables and patch panels are not nodes, but a printer is.
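To make the 48-bit size concrete, the short sketch below converts a MAC address written as six hexadecimal pairs into a single integer. The helper names and the sample address are hypothetical. The `manufacturer_prefix` helper relies on a fact not stated above: the first 24 bits of a manufacturer-assigned address identify the manufacturer.

```python
def parse_mac(mac: str) -> int:
    """Convert a MAC address such as '00:1A:2B:3C:4D:5E' to a 48-bit integer."""
    parts = mac.replace("-", ":").split(":")
    if len(parts) != 6 or any(len(part) != 2 for part in parts):
        raise ValueError(f"not a 48-bit MAC address: {mac!r}")
    return int("".join(parts), 16)


def manufacturer_prefix(mac: str) -> str:
    """Return the first 24 bits of the address as six hex digits."""
    return f"{parse_mac(mac) >> 24:06x}"


example = "00:1A:2B:3C:4D:5E"  # hypothetical address
value = parse_mac(example)

print(value < 2 ** 48)               # True: six pairs of hex digits are 48 bits
print(manufacturer_prefix(example))  # 001a2b
```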

A host is a form of node that has a logical address assigned to it, usually an Internet Protocol (IP) address. This addressing typically implies that the node operates at or above the network layer. Hosts include clients, servers, firewalls, proxies, and even routers. The category excludes switches, bridges, and other physical devices such as repeaters and hubs. In most cases, a host either shares or accesses resources and services from other hosts.

In terms of network security, node security and host security differ. Nodes and hosts can both be harmed by physical attacks and DoS attacks. However, a host can also be harmed by malicious code and authentication attacks, and might even be remotely controlled by hackers. Node protection is mostly physical access control along with basic network filtering against flooding.

Host security can be much more involved because the host itself should be hardened and you will need to perform general network security. Hardening is the process of securing or locking down a host against threats and attacks. This can include removing unnecessary software, installing updates, and imposing secure configuration settings.

Internet Protocol version 4 (IPv4) has been in use as the predominant protocol on the Internet for nearly three decades. It was originally defined in Request for Comments (RFC) 791 in 1981. IPv4 is a connectionless protocol that operates at the network layer of the Open Systems Interconnection reference model (OSI model). IPv4 is the foundation of the TCP/IP protocol suite as we know and use it today.

IPv4 was designed with several assumptions in mind, many of which have been proven inaccurate, grossly overestimated, or simply non-applicable. While IPv4 has served well as the predominant protocol on the Internet, a replacement has been long overdue. Some of the key issues of concern are a dwindling, if not exhausted, address space of only 32 bits, subnetting complexity, and lack of integrated security. Some of these issues have been minimized with the advent of network address translation (NAT), classless inter-domain routing (CIDR), and Internet Protocol Security (IPSec). But in spite of these advancements, IPv4 is being replaced with IPv6.

Note

If an IPv6 address has one or more consecutive 4-digit sections of all zeros, those sections can be dropped and replaced by just a double colon. For example, 2001:0f58:0000:0000:0000:0000:1986:62af can be shortened to 2001:0f58::1986:62af. However, if an address contains two separate runs of all-zero sections, only one of the runs can be replaced by a double colon.
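The shortening rule in the note above can be checked with Python's standard `ipaddress` module. This is a small sketch for experimentation. Note that the library's compressed form also drops leading zeros within each section, so it prints `f58` where the note writes `0f58`; both spellings denote the same address. The second address is a made-up example containing two separate runs of zeros.

```python
import ipaddress

full = "2001:0f58:0000:0000:0000:0000:1986:62af"
addr = ipaddress.IPv6Address(full)

short_form = addr.compressed  # run of zero sections replaced by "::"
long_form = addr.exploded     # every section written out in full

# The shortened spelling from the note parses to the same address.
same_address = ipaddress.IPv6Address("2001:0f58::1986:62af") == addr

# Two separate runs of zeros: only one run is replaced by "::".
two_runs = ipaddress.IPv6Address("2001:0000:0000:0001:0000:0000:0000:0001")
two_runs_short = two_runs.compressed

# Using "::" twice is ambiguous, so the library rejects it.
try:
    ipaddress.IPv6Address("2001::1::1")
    double_use_rejected = False
except ipaddress.AddressValueError:
    double_use_rejected = True

print(short_form)      # 2001:f58::1986:62af
print(two_runs_short)  # 2001:0:0:1::1
```

The double colon can appear only once because a reader must be able to work out how many zero sections it stands for. With two of them, the split between the two gaps would be unknowable.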

IPv6 was defined in 1998 in RFC 2460. The new version was designed specifically as the successor to IPv4 mainly due to the dwindling availability of public addresses. IPv6 uses a 128-bit address, which is significantly larger than IPv4. Additionally, changes to subnetting, address assignment, packet header, and simpler routing processing make IPv6 much preferred over its predecessor. Another significant improvement is native network layer security. Figure 1-9 below compares an IPv4 address to an IPv6 address.
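The difference between a 32-bit and a 128-bit address is easier to appreciate as arithmetic. The sketch below simply counts the addresses each size can represent. The variable names are illustrative, and the totals are theoretical maximums that ignore reserved ranges.

```python
IPV4_BITS = 32
IPV6_BITS = 128

ipv4_addresses = 2 ** IPV4_BITS  # every possible 32-bit value
ipv6_addresses = 2 ** IPV6_BITS  # every possible 128-bit value

# How many complete IPv4 address spaces fit inside the IPv6 space.
ratio = ipv6_addresses // ipv4_addresses

print(f"{ipv4_addresses:,}")  # 4,294,967,296
print(f"{ipv6_addresses:,}")
print(ratio == 2 ** 96)       # True
```

Each additional bit doubles the address space, so the 96 extra bits in IPv6 multiply the number of possible addresses by 2 to the 96th power.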

The security features native to IPv6 were crafted into an add-on package for IPv4 known as IPSec. While IPSec is an optional add-on in IPv4, it was designed into IPv6 from the start. This is a significant change in the inherent security issues surrounding networking and the use of the Internet. When native network layer encryption is in use, most forms of eavesdropping, man-in-the-middle attacks, hijacking, and replay attacks become far more difficult. This does not mean that IPv6 will be free of security problems, but many of the common security flaws experienced with IPv4 can be addressed.

As you use and manage computers and manage networks, use IPSec with IPv4 or switch over to IPv6. Most protocols that operate over IPv4 will operate without issue over IPv6. You will need to replace some applications that embed network-layer addresses into their application-level protocol, such as FTP and NTP. But valid IPv6 replacements already exist.

The complete industry transition from IPv4 to IPv6 will likely take upwards of a decade, mainly because of the need to upgrade, replace, or re-configure the millions of hosts and nodes spread across the world to support IPv6. During the transition, many techniques are available to allow a host to interact with both IPv4 and IPv6 network connections. These include:

Dual IP stacks: a computer system that runs both IPv4 and IPv6 at the same time. Windows Vista and Windows 7 both have dual IP stacks by default.

IPv4 addresses embedded into an IPv6 notation: a method of representing an IPv4 address using the common notation of IPv6. For example, ::ffff:192.168.3.125 is the IPv4-mapped IPv6 address for the IPv4 address 192.168.3.125. The mapped address consists of 80 zero bits, followed by 16 one bits, then the 32-bit IPv4 address (in dotted decimal notation), which adds up to 128 bits total.

Tunneling: encapsulating IPv6 packets inside IPv4 packets, effectively creating a VPN-like tunnel out of IPv4 for IPv6.

IPv4/IPv6 NAT: using a network address translation service to translate between two networks, one running IPv4 and the other IPv6.
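Of the techniques listed above, the IPv4-mapped notation is the easiest to inspect directly. The sketch below uses Python's standard `ipaddress` module to split the example address into its 80 zero bits, 16 one bits, and 32-bit IPv4 address. The variable names are illustrative.

```python
import ipaddress

v4 = ipaddress.IPv4Address("192.168.3.125")
mapped = ipaddress.IPv6Address("::ffff:192.168.3.125")

# Write the address as 128 binary digits and split it into three fields.
bits = f"{int(mapped):0128b}"
zero_prefix, ones, v4_bits = bits[:80], bits[80:96], bits[96:]

# Rebuild the mapped address from the IPv4 address alone:
# 16 one bits placed directly above the 32-bit IPv4 value.
rebuilt = ipaddress.IPv6Address((0xFFFF << 32) | int(v4))

print(mapped.ipv4_mapped)          # 192.168.3.125
print(len(zero_prefix), len(ones), len(v4_bits))  # 80 16 32
print(rebuilt == mapped)           # True
```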

Whatever the hurdles of transition, the benefits of native network layer encryption are immeasurable. Long story short: when you have the option to use IPv6, take it because you will be helping the world IT community upgrade sooner rather than later.

Not all traffic on your network comes from an authorized source, and traffic that does not should not be allowed to enter or leave the network. Not all traffic serves an authorized purpose, and traffic that does not should be blocked from reaching its destination. Not all traffic falls within the boundaries of normal or acceptable network activity, and traffic that does not should be dropped before it causes a compromise.

All of these protections are the job of a firewall. As its name implies, a firewall is a tool designed to stop damage, just as the firewall in an engine compartment protects the passengers in a vehicle from harm in an accident. A firewall is either a hardware device or a software product you deploy to enforce the access control policy on network communications. In other words, a firewall filters network traffic for harmful exploits, incursions, data, messages, or other events.

Firewalls are often positioned on the edge of a network or subnet. Firewalls protect networks against numerous threats from the Internet. Firewalls also protect the Internet from rogue users or applications on private networks. Firewalls protect the throughput or bandwidth of a private network so authorized users can get work done. Without firewalls, most of your network's capabilities would be consumed by worthless or malicious traffic from the Internet. Think of how a dam on a river works; without the dam, the river is prone to flooding and overflow. The dam prevents the flooding and damage.

Without firewalls, the security and stability of a network would depend mostly on the security of the nodes and hosts within the network. Based on the sordid security history of most host operating systems, having no firewall would not be a secure solution. Hardened hosts and nodes are important for network security, but they should not be the only component of reliable network security.

You also install firewalls on client and server computers. These host software firewalls protect a single host from threats from the Internet, and threats from the network itself, as well as threats from other internal network components.

In any case, a firewall is usually configured to control traffic based on a "deny-by-default / allow-by-exception" stance. This means that nothing passes the firewall just because it exists on the network (or is attempting to reach the network). Instead, all traffic that reaches the firewall must meet a set of requirements to continue on its path.

As a network administrator or IT security officer, you get to choose what traffic is allowed to pass through the firewalls, and what traffic is not. Additionally, you can also determine whether the filtering takes place on inbound traffic (known as "ingress filtering"), on outbound traffic (called "egress filtering")—or both.
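The deny-by-default stance, together with separate ingress and egress rules, can be sketched as follows. This is a simplified model for illustration rather than the configuration syntax of any firewall product. The `Rule` fields, the sample rules, and the addresses are all hypothetical.

```python
from dataclasses import dataclass
from ipaddress import ip_address, ip_network
from typing import List


@dataclass(frozen=True)
class Rule:
    direction: str  # "ingress" (inbound) or "egress" (outbound)
    source: str     # permitted source range in CIDR notation
    dest_port: int  # permitted destination port
    protocol: str   # "tcp" or "udp"


# Hypothetical exceptions; everything else is denied.
ALLOW_RULES = [
    Rule("ingress", "0.0.0.0/0", 443, "tcp"),  # anyone may reach the web server
    Rule("egress", "10.0.0.0/8", 53, "udp"),   # internal hosts may query DNS
]


def is_allowed(direction: str, src_ip: str, dest_port: int, protocol: str,
               rules: List[Rule] = ALLOW_RULES) -> bool:
    """Allow traffic only if an explicit rule matches; deny otherwise."""
    for rule in rules:
        if (rule.direction == direction
                and rule.protocol == protocol
                and rule.dest_port == dest_port
                and ip_address(src_ip) in ip_network(rule.source)):
            return True
    return False  # deny by default


print(is_allowed("ingress", "203.0.113.7", 443, "tcp"))  # True
print(is_allowed("ingress", "203.0.113.7", 23, "tcp"))   # False
print(is_allowed("egress", "192.168.1.5", 53, "udp"))    # False
```

The final `return False` is the heart of the design: traffic that matches no rule is dropped, so forgetting to write a rule fails safe rather than open.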

Firewalls are an essential component of both host and network security. Additional firewall specifics and details are covered throughout this book in subsequent chapters.

A virtual private network (VPN) is a mechanism to establish a remote access connection across an intermediary network, often over the Internet. VPNs allow for cheap long-distance connections when established over the Internet, since both endpoints only need a local Internet link. The Internet itself serves as a "free" long-distance carrier.

A VPN uses tunneling or encapsulation protocols. Tunneling protocols encase the original network protocol so that it can traverse the intermediary network. In many cases, the tunneling protocol employs encryption so that the original data traverses the intermediary network securely.
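To make the idea of encapsulation concrete, the following Python sketch wraps an original packet inside an outer header addressed between two tunnel endpoints, then unwraps it at the far side. The header layout (`OUTER_HEADER`), the function names, and the endpoint addresses are invented for illustration and do not correspond to any real tunneling protocol. Encryption is deliberately left out; a real VPN would normally encrypt the inner packet before wrapping it.

```python
import ipaddress
import struct

# Hypothetical outer header: tunnel source, tunnel destination, payload length.
OUTER_HEADER = struct.Struct("!4s4sH")


def encapsulate(inner_packet: bytes, tunnel_src: str, tunnel_dst: str) -> bytes:
    """Wrap the original packet so it can cross the intermediary network."""
    header = OUTER_HEADER.pack(
        ipaddress.IPv4Address(tunnel_src).packed,
        ipaddress.IPv4Address(tunnel_dst).packed,
        len(inner_packet),
    )
    return header + inner_packet


def decapsulate(outer_packet: bytes) -> bytes:
    """Strip the outer header and recover the original packet."""
    _src, _dst, length = OUTER_HEADER.unpack_from(outer_packet)
    start = OUTER_HEADER.size
    return outer_packet[start:start + length]


original = b"original packet using private addressing"
tunneled = encapsulate(original, "203.0.113.10", "198.51.100.20")
print(decapsulate(tunneled) == original)
```

The intermediary network only ever reads the outer header, which is why the original protocol and addressing can differ from what that network normally carries.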

You can use VPNs for remote access, remote control, or highly secured communications within a private network. Additional VPN specifics and details are covered throughout this book in subsequent chapters.

A proxy server is a variation of a firewall. A proxy server filters traffic, but also acts upon that traffic in a few specific ways. First, a proxy server acts as a "middle-man" between the internal client and the external server. Or, in the case of reverse proxy, this relationship can be inverted with an internal server and an external host. Second, a proxy server will hide the identity of the original requester from the server through a process known as network address translation (NAT) (see the following section).

Another feature of a proxy server can be content filtering. This type of filtering focuses either on the address of the server (typically by domain name or IP address) or on keywords appearing in the transmitted content. You can employ this form of filtering to block employee access to Internet resources that are not relevant or beneficial to business tasks or that might have a direct impact on the business network. This could include malicious code, hacker tools, and excessive bandwidth consumption (such as video streaming or P2P file exchange).
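A minimal Python sketch of both filtering styles follows. The domain list, keyword list, and the `is_blocked` function are hypothetical examples rather than the interface of any real proxy product. The function first checks the server's domain name, then scans the transmitted content for blocked keywords.

```python
from urllib.parse import urlsplit

# Hypothetical block lists maintained by the administrator.
BLOCKED_DOMAINS = {"streaming.example", "p2p.example"}
BLOCKED_KEYWORDS = {"hacker tool", "keygen"}


def is_blocked(url: str, body: str = "") -> bool:
    """Return True if the request or its content should be filtered."""
    host = (urlsplit(url).hostname or "").lower()
    # Address-based filtering: match the domain itself or any subdomain.
    if any(host == d or host.endswith("." + d) for d in BLOCKED_DOMAINS):
        return True
    # Keyword-based filtering on the transmitted content.
    text = body.lower()
    return any(keyword in text for keyword in BLOCKED_KEYWORDS)


print(is_blocked("http://video.streaming.example/watch"))
print(is_blocked("https://www.example.com/", "Quarterly sales report"))
```

Keyword matching this simple will produce false positives, so production filters usually combine it with categorized address lists.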

Proxy servers can also provide caching services. Proxy server caching is a data storage mechanism that keeps a local copy of content that is fairly static in nature and that numerous internal clients request repeatedly. Front pages of popular Web sites are commonly cached by a proxy server. When a user then requests a page that is already in the cache, the proxy server provides that page to the user from cache rather than pulling it again from the Internet site. This provides the user with faster performance and reduces the load on the Internet link.

Caching of this type often results in all users experiencing faster Internet performance, even when the pages served to them are pulled from the Internet, because cached pages free up capacity on the Internet link. Obviously, such caching must be tuned to prevent stale pages in the cache. Tuning means setting the time-out value on cached pages so they expire at a reasonable rate. Each expired page is replaced by fresh content from the original source server on the Internet.
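The caching and time-out behavior described above can be sketched as follows. The `ProxyCache` class and its parameters are hypothetical, and the `fetch` argument stands in for whatever the proxy uses to retrieve a page from the origin server. The time-out value (`ttl_seconds`) is the tuning knob: a cached page is served until it expires, after which the next request pulls fresh content.

```python
import time


class ProxyCache:
    """Serve pages from a local copy until their time-out expires."""

    def __init__(self, fetch, ttl_seconds=300.0, clock=time.monotonic):
        self._fetch = fetch        # function that retrieves a page from the origin
        self._ttl = ttl_seconds    # time-out value applied to every cached page
        self._clock = clock        # injectable clock, which makes testing easier
        self._entries = {}         # url -> (expires_at, content)

    def get(self, url):
        now = self._clock()
        entry = self._entries.get(url)
        if entry is not None and entry[0] > now:
            return entry[1]                        # cache hit: no Internet traffic
        content = self._fetch(url)                 # miss or expired: go to the origin
        self._entries[url] = (now + self._ttl, content)
        return content


def fake_origin(url):
    # Stand-in for a real HTTP request to the Internet site.
    return "content of " + url


cache = ProxyCache(fake_origin, ttl_seconds=60)
print(cache.get("http://www.example.com/"))   # fetched from the origin
print(cache.get("http://www.example.com/"))   # served from cache
```

A short time-out keeps pages fresh at the cost of more Internet traffic; a long one saves bandwidth but risks serving stale pages.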

Network address translation (NAT) converts between internal addresses and external public addresses. The network performs this conversion on packets as they enter or leave the network to mask and modify the internal client's configuration. The primary purpose of NAT is to prevent internal IP and network configuration details from being discovered by external entities, such as hackers.

Figure 1-10 shows an example pathway from an internal client to an external server across a proxy or firewall using NAT. In this example, the external server is a Web server operating on the default HTTP port 80. The Web server is using IP address 208.40.235.38 (used by www.itttech.edu). The requesting internal client is using IP address 192.168.12.153. The client randomly selects a source port between 1024 and 65,535 (such as 13571). With these source details, the client generates the initial request packet. This is step 1. This packet is sent over the network towards the external server, where it encounters the NAT service.

Another use of NAT is to reduce the number of public IP addresses you need to lease from an ISP. Without NAT, you'd need a separate public IP address for each individual system that would ever connect to the Internet. With NAT, you can lease a smaller set of public IP addresses to serve a larger number of internal users.

This consolidation is possible for two reasons. First, most network communications are "bursty" rather than constant in nature. This means that computers typically transmit short, fast bursts of data instead of a long, continuous stream of data. Some forms of network traffic, such as file transfer and video streaming, are more continuous in nature. But these tasks are generally less common on business networks than home networks.

Second, NAT does not reserve a specific public address for use by a single internal client. Instead, NAT randomly assigns an available public address to each subsequent internal client request. Once the client's communication session is over, the public address returns to the pool of available addresses for future communications.

Additionally, NAT often employs not just IP to IP address translation, but a more granular option known as port address translation (PAT). With PAT, both the port and the IP address of the client convert into a random external port and public IP address. This allows for multiple simultaneous communications to take place over a single IP address. This process, in turn, allows you to support a greater number of communications from a single client or multiple clients on an even smaller number of leased public IP addresses.
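The following Python sketch shows one way a PAT mapping table could work. The `PortAddressTranslator` class and its method names are hypothetical, and the public address 203.0.113.5 is a documentation address chosen for the example. Each internal address and port pair is mapped to a randomly chosen free port on a single public address, and the reverse table lets replies find their way back to the right client.

```python
import random


class PortAddressTranslator:
    """Map many (private IP, port) pairs onto ports of one public IP."""

    def __init__(self, public_ip, port_range=(1024, 65535), rng=None):
        self.public_ip = public_ip
        self._low, self._high = port_range
        self._rng = rng or random.Random()
        self._out = {}   # (private_ip, private_port) -> public_port
        self._in = {}    # public_port -> (private_ip, private_port)

    def translate_outbound(self, private_ip, private_port):
        key = (private_ip, private_port)
        if key not in self._out:
            if len(self._in) > self._high - self._low:
                raise RuntimeError("no free public ports")
            port = self._rng.randint(self._low, self._high)
            while port in self._in:            # pick again if already in use
                port = self._rng.randint(self._low, self._high)
            self._out[key] = port
            self._in[port] = key
        return self.public_ip, self._out[key]

    def translate_inbound(self, public_port):
        """Return the internal (IP, port) for a reply, or None if unknown."""
        return self._in.get(public_port)

    def release(self, private_ip, private_port):
        """Return the public port to the pool when the session ends."""
        port = self._out.pop((private_ip, private_port), None)
        if port is not None:
            del self._in[port]


pat = PortAddressTranslator("203.0.113.5")
print(pat.translate_outbound("192.168.12.153", 13571))
print(pat.translate_outbound("192.168.12.154", 13571))
```

Both clients appear to the Internet as 203.0.113.5, distinguished only by the translated port. An unsolicited inbound packet has no entry in the table, so `translate_inbound` returns `None` and the packet has nowhere to go.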

NAT enables the use of the RFC 1918 private IP address ranges while still supporting Internet communications. The RFC 1918 addresses are:

Class A—10.0.0.0-10.255.255.255 /8 (1 Class A network)

Class B—172.16.0.0-172.31.255.255 /12 (16 Class B networks)

Class C—192.168.0.0-192.168.255.255 /16 (256 Class C networks)

The addresses in RFC 1918 are for use only in private networks. Internet routers drop any packet using one of these addresses. Without NAT, a network using these private IP addresses would be unable to communicate with the Internet. By using RFC 1918 addresses, networks create another barrier against Internet-based attacks and do not need to pay for leasing of internal addresses.
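A quick way to test whether an address falls in one of the RFC 1918 ranges is shown below, using Python's standard `ipaddress` module. The `is_rfc1918` helper is a name chosen for this example. The sample addresses are the internal client and external Web server used in the Figure 1-10 discussion.

```python
import ipaddress

# The three private ranges listed above, in CIDR notation.
RFC1918_NETWORKS = [
    ipaddress.ip_network("10.0.0.0/8"),
    ipaddress.ip_network("172.16.0.0/12"),
    ipaddress.ip_network("192.168.0.0/16"),
]


def is_rfc1918(address: str) -> bool:
    """Return True if the address belongs to an RFC 1918 private range."""
    ip = ipaddress.ip_address(address)
    return any(ip in network for network in RFC1918_NETWORKS)


print(is_rfc1918("192.168.12.153"))   # internal client address
print(is_rfc1918("208.40.235.38"))    # external Web server address
```

A check like this is a common building block for boundary filters that refuse private source addresses arriving from the Internet side.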

As briefly mentioned in the earlier section on IPSec, NAT is one of the technologies that have allowed the extended use of IPv4 even after the depletion of available public IP addresses. Now that the transition to IPv6 is underway, NAT is serving a new purpose in providing IPv4 to IPv6 translation. Many firewall and proxy devices may include IPv6 translation services. This feature is a worthwhile option that you might want to include on a feature list when researching a firewall purchase or deployment.

Routers, switches, and bridges are common network devices. While not directly or typically labeled as network security devices, you can deploy them to support security rather than hinder it.

A router's primary purpose is to direct traffic towards its stated destination along the best-known current available path (see Figure 1-11). Routing protocols such as RIP, OSPF, IGRP, EIGRP, BGP, and others dynamically manage route selection based on a variety of metrics. A router supports security by guiding traffic down preferred routes rather than routes that might not be as logically or physically secure. If a hacker can trick routers into altering the pathway of transmission, network traffic could traverse a segment where a hacker has positioned a "sniffer." A sniffer, also known as packet analyzer, network analyzer, and protocol analyzer, is a software utility or hardware device that captures network communications for investigation and analysis.

While this is an unlikely scenario, it's not an impossible one. Ensuring that routers are using authentication to exchange routing data and are protected against unauthorized physical access will prevent this and other infrastructure level attacks. Figure 1-11 depicts an example of a routed network deployment.

Switches provide network segmentation through hardware. Across a switch, temporary dedicated electronic communication pathways connect the endpoints of a session (such as a client and server). This switched pathway prevents collisions. Additionally, switches allow a communication to use the full potential throughput of the network connection instead of losing 40 percent or more of it to collisions (as occurs with hubs). See Figure 1-12.

You can see this basic function of a switch as a security benefit once you examine how this process takes place. A switch operates at Layer 2, the Data Link layer, of the OSI model, where the MAC address is defined and used. Switches manage traffic through the use of the source and destination MAC address in an Ethernet frame. The Ethernet frame is a logical data set construction at the Data Link layer (layer 2) consisting of the payload from the Network layer (layer 3) with the addition of an Ethernet header and footer (Figure 1-13).

Switches employ four main procedures, labeled as "learn," "forward," "drop," and "flood." The learn procedure is the collection of MAC addresses from the source location in a frame header. The source MAC address goes into a mapping table along with the number of the port that received the frame. Forwarding occurs once a frame's destination MAC address appears in the mapping table. This table then guides the switch to transmit the frame out the port that originally discovered the MAC address in question.

If the frame arrives on the same port that the mapping table indicates is the destination port, then the frame is dropped. The switch does not need to transmit the frame back onto the network segment from which it originated. Finally, if the destination MAC is not in the mapping table, the switch reverts to flooding (that is, a transmission of the frame out every port) to ensure that the frame has the best chance of reaching the destination.
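The four procedures can be sketched as a small Python class. The `LearningSwitch` class, its method name, and the shortened MAC addresses are hypothetical and chosen for readability. One assumption goes slightly beyond the description above: when flooding, this sketch skips the port the frame arrived on, which is how switches commonly behave since the sender's segment already has the frame.

```python
class LearningSwitch:
    """Toy model of the learn, forward, drop, and flood procedures."""

    def __init__(self, ports):
        self.ports = set(ports)
        self.mac_table = {}   # mapping table: MAC address -> port number

    def handle_frame(self, in_port, src_mac, dst_mac):
        # Learn: record which port the source MAC address was seen on.
        self.mac_table[src_mac] = in_port

        out_port = self.mac_table.get(dst_mac)
        if out_port is None:
            # Flood: destination unknown, so send out the other ports.
            return ("flood", sorted(self.ports - {in_port}))
        if out_port == in_port:
            # Drop: destination is on the segment the frame came from.
            return ("drop", [])
        # Forward: send only out the port where the destination was learned.
        return ("forward", [out_port])


switch = LearningSwitch(ports=[1, 2, 3])
print(switch.handle_frame(1, "aa:aa", "bb:bb"))   # bb:bb not yet learned
print(switch.handle_frame(2, "bb:bb", "aa:aa"))   # aa:aa was learned on port 1
print(switch.handle_frame(1, "cc:cc", "aa:aa"))   # both hosts are behind port 1
```

Watching how `mac_table` changes over time is the basis of the monitoring discussed next: a single port suddenly claiming many MAC addresses, or an address jumping between ports, is worth investigating.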

By monitoring the activity of the switch, specifically watching the construction and modification of the mapping table and the variation of MAC addresses seen in frame headers, you can detect errors and malicious traffic. Intelligent or multi-layer switches themselves or external IDS/IPS services can perform this security monitoring function.

When the switch procedures fail, the network suffers. This could mean a reduction in throughput, a blockage causing a denial of service, or a redirection of traffic allowing a hacker to attempt to modify or eavesdrop. Fortunately, such attacks are "noisy" in terms of generating significant abnormal network traffic, and thus you can detect them with basic security sensors. This benefit alone is a significant reason to use switches rather than hubs.

Bridges link between networks. Bridges create a path or route between networks only used when the destination of a communication actually resides across the bridge on the opposing network. An analogy of this would be a city split in two by a river. When a person traveling in that city wants to visit a specific location, if that location is on the same side of the river that they are, then they have no need to cross the bridge to get there.

The same is true of a network bridge. Bridges work in a similar manner to switches in that they use several basic procedures to manage traffic. Bridges use the processes of learn, forward, and drop. Thus, with these processes, only traffic intended for a destination on the opposing network will cross the bridge to that network. All traffic intended for a destination on the same side of the bridge that received it will be dropped, since the traffic is already on the correct network.

Bridges are used to connect networks, network segments, or subnets whenever you desire a simple use-only-if-needed link and the complexity of a router, switch, or firewall is neither specifically required nor desired. See Figure 1-14. Bridges can also link networks with variations in most aspects of the infrastructure barring protocol. This includes different topologies, cable types, transmission speeds, and even wired versus wireless.

You can use bridges to detect the same types of traffic abuses that switches do. In addition, both bridges and switches can impose filtering on MAC addresses. Here you can use a black list or white list concept, where you allow all traffic except for that on the black/block list or you block all traffic except for that on the white/allow list.
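The two list concepts differ only in their default, as this Python sketch shows. The `mac_permitted` function, its `mode` values, and the sample MAC addresses are hypothetical; real switches and bridges expose this feature through their own configuration interfaces.

```python
def _normalize(mac: str) -> str:
    """Compare MAC addresses case-insensitively, with ':' as the separator."""
    return mac.lower().replace("-", ":")


def mac_permitted(mac: str, mode: str, listed) -> bool:
    """Apply a block list (allow unless listed) or allow list (block unless listed)."""
    entries = {_normalize(m) for m in listed}
    if mode == "block":
        return _normalize(mac) not in entries
    if mode == "allow":
        return _normalize(mac) in entries
    raise ValueError("mode must be 'block' or 'allow'")


known_hosts = ["00:1A:2B:3C:4D:5E"]
print(mac_permitted("00-1a-2b-3c-4d-5e", "allow", known_hosts))
print(mac_permitted("66:77:88:99:aa:bb", "allow", known_hosts))
```

The allow-list mode mirrors the deny-by-default stance used for firewalls. Keep in mind that MAC addresses can be changed in software, so this kind of filtering is best treated as one layer among several.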

You can arrange to log activities across a router, switch, and bridge. You should review traffic logs regularly, typically on the same schedule as firewall and IDS/IPS logs. You will note the signs of abuse, intrusion, denial of service, network consumption, unauthorized traffic patterns, and more in these common network devices not typically considered to be security devices.

Domain name system (DNS) is an essential element of both Internet and private network resource access. Users do not keep track of the IP address of servers. So, to access the file server or social networking site, users rely upon DNS to resolve the fully qualified domain names (FQDNs) into the associated IP address.

Most users don't even realize that networks rely upon IP addresses to direct traffic towards a destination rather than the domain name that they typed into the address field of client software. However, without this essential but often transparent service, most of how the Internet works today would fail. In such a case, users would need to maintain a list of IP addresses of sites they wanted to visit or always go to a search engine for a site's IP address.

Note

A few Internet index sites still exist, but they are not as exhaustive (nor current) as most search engines. Think of the difference between the Yellow Pages dropped on your front porch each year versus dialing 411: one is more current and relevant than the other.

DNS is the foundation of most directory services in use today (such as Active Directory and LDAP) (see the following section). Thus, DNS is essential for internal networks, as much as it is for the external Internet.

DNS is vulnerable in several ways. First, DNS is a query-based system that does not authenticate its responses. This allows a false or "spoofed" response to a DNS query to appear valid. Second, anyone can request transfers of the DNS mapping data (called "the zone file"), including external entities if TCP port 53 allows inbound access. Third, DNS communicates in plain text, which allows eavesdropping, interception, and modification.

You can address some of the faults of DNS with local static DNS mapping in the HOSTS file, filtering DNS on network boundaries, and using IPSec for all communications between all hosts. However, these are defenses for DNS. Rather than providing security to the network, DNS itself is an essential service that you need to protect.
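The static mapping defense can be sketched in Python as follows. The `parse_hosts` and `resolve` functions are hypothetical helpers, and the host names and addresses are invented for the example. The `dns_lookup` argument stands in for a real DNS query so that the sketch runs without network access. Names found in the static map are answered locally and never depend on an unauthenticated DNS response.

```python
def parse_hosts(text: str) -> dict:
    """Build a name -> address map from HOSTS-file style text."""
    mapping = {}
    for line in text.splitlines():
        line = line.split("#", 1)[0].strip()   # discard comments and blanks
        if not line:
            continue
        address, *names = line.split()
        for name in names:
            mapping.setdefault(name.lower(), address)   # first entry wins
    return mapping


def resolve(name: str, static_map: dict, dns_lookup):
    """Prefer the static mapping; fall back to DNS only when needed."""
    return static_map.get(name.lower()) or dns_lookup(name)


HOSTS_TEXT = """
# critical internal servers (example entries)
192.168.12.10   fileserver.corp.example fileserver
192.168.12.11   mail.corp.example
"""

static_map = parse_hosts(HOSTS_TEXT)


def fake_dns(name):
    # Stand-in for a real DNS query, which could be spoofed in transit.
    return "198.51.100.99"


print(resolve("FileServer.corp.example", static_map, fake_dns))
print(resolve("www.example.com", static_map, fake_dns))
```

Static entries must be maintained by hand on every host, so this approach is usually reserved for a short list of critical servers.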