Chapter 6 Understanding Common eDirectory Processes

Chapter 1, “The Four Basics of eDirectory Troubleshooting,” defines Novell’s directory services (DS) implementation as a loosely consistent distributed database. Several autonomous background processes ensure the integrity of the data in the DS database and must run smoothly to provide consistent operation. There are also several processes you initiate with administration tools such as ConsoleOne and NDS iMonitor when managing objects, partitions, and replicas.

This chapter looks at the most common of these processes. A thorough understanding of how they work makes it easier to determine the proper course of action for resolving DS problems.

NOTE

The various DS processes discussed in this chapter exist in all versions of NDS, including eDirectory. There are some subtle differences in how the processes function internally (such as the frequency at which a process runs) depending on the version of DS in question. These differences are highlighted in this chapter as applicable.

TIP

This chapter goes into some detail about the DS processes themselves. For step-by-step detail of the operation of a specific process, refer to Novell’s CD-ROMs LogicSource II for NDS and LogicSource for eDirectory. These CD-ROMs were once available for purchase as part of the LogicSource offering, but now are offered as part of the Novell Professional Resource Suite and as part of the Novell Product Toolkits. For more information about Novell Technical Subscriptions, visit http://support.novell.com/subscriptions.

Before we talk about the background processes, we need to discuss DS name resolution, tree-walking, and obituaries, which DS uses to locate information in the tree and to keep track of the state of some of the operations, respectively.

NDS name resolution is the process of DS navigating through the different partitions in the tree—using tree-walking—until it finds the requested object. When DS finds the object, it retrieves the object ID and returns it to the caller. All DS information requests can be broken down into one or more names that identify the objects. In pursuing each name component in a request, DS searches for a partition that contains some part of the name path associated with the request. When a partition is found, the search moves from that partition to the partition that actually contains the object. Until a relevant partition is found, the search proceeds upward toward [Root]; any request can be pursued successfully by beginning at [Root] and working downward.

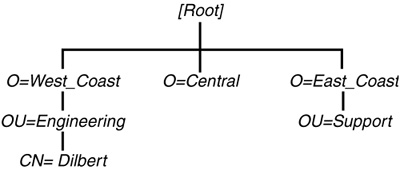

Consider the sample tree shown in Figure 6.1. Let’s assume that your current context is at O=East_Coast. A search for the User object Dilbert down the current tree branch does not find him. By moving upward to [Root], you have two additional tree branches (O=West_Coast and O=Central) from which to pursue the object.

Tree-walking is the process of a NetWare Core Protocol (NCP) client, commonly referred to as the DS agent (DSA), walking through the NDS tree to locate a server hosting the partition that has a particular object. Each DS server (be it a NetWare server or Linux system running eDirectory) has a built-in client agent to facilitate DS name resolution and tree-walking.

NOTE

The name resolution process is initiated by the DS DSAResolveName “verb.” (DS verbs are predefined functions within the DS engine. Refer to Appendix B, “DS Verbs,” for a complete list of DS verbs.)

An application may disable the tree-walking component of the name resolution process by setting the DCV_DISALLOW_REFERRALS flag to TRUE and calling the NWDSSetContext API. This is useful if the application wants to search only the database local to the server it is communicating with. When you set the DCV_DISALLOW_REFERRALS flag to TRUE, the DSA returns a failure (-601 error [object not found]) if the object being sought is not located in the local database.

The tree-walking process relies on Subordinate Reference (SubRef) partitions to connect the tree. If a server can provide no other DS information, the least it can offer is a reference to another server higher in the tree that has a partition with information about objects. When walking the tree, a server is given the object name of interest. Based on the name, the server decides whether it needs to move upward toward [Root] or downward away from [Root] in order to access the next partition in its efforts to locate the object.
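The up-or-down decision described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names `name_components` and `walk_direction` are hypothetical, names are written in the dotted, leaf-first form used in this chapter, and an empty string stands in for [Root]. The real DSA logic is considerably more involved.

```python
from typing import List


def name_components(dn: str) -> List[str]:
    """Split a dotted, leaf-first name such as 'peter.ou_1.acme_inc' into
    lowercase components ordered from [Root] downward."""
    parts = [p for p in dn.strip(".").lower().split(".") if p]
    return list(reversed(parts))


def walk_direction(partition_root: str, target: str) -> str:
    """Hypothetical helper: decide which way to walk from a known partition.

    Returns 'local-or-down' when the target name lies at or below the
    partition root, and 'up' (toward [Root]) otherwise.
    An empty partition_root stands for the [Root] partition.
    """
    root = name_components(partition_root)
    wanted = name_components(target)
    # The target is subordinate to the partition root when the root's
    # components form a prefix of the target's components.
    if wanted[:len(root)] == root:
        return "local-or-down"
    return "up"
```

A server that only knows about a partition rooted at `ou_2.acme_inc` would have to walk up to find `peter.ou_1.acme_inc`, whereas a server holding `acme_inc` can continue locally or downward.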

NOTE

Tree-walking can go up or down a DS tree, depending on the location of the partition that holds the desired object.

NOTE

eDirectory 8.5 introduced a new feature called referral hints to help make tree-walking more efficient. Prior to eDirectory 8.5, NDS may have had to walk through a large portion of the tree, which could span slow WAN links, before locating the server holding a real replica of the desired object. This could consume a lot of time and overhead. With referral hints, the network addresses of servers that “should” have a real copy of the partition are kept on the External Reference (ExRef) partition root object. eDirectory simply walks to the partition root object and uses the referrals listed on that object to contact the servers directly and see whether they have real copies of the partition of interest. If this fails, the old way of walking the tree is then used to try to locate the desired partition.

The act of the workstation locating the server that holds the partition with the desired object constitutes half the name-resolution process. Up to this halfway point, the tree-walking process is solely carried out by the server on behalf of the workstation. The second half of name resolution is complete when the client retrieves the object ID from the server containing the partition. There are three ways in which this second half of the process is accomplished, depending on the DSA’s request setting (NWC_DS_PREFER_ONLY_REFERRALS, NWC_DS_PREFER_REFERRALS, or not set).

If the DSA has the resolve name request flag set to NWC_DS_PREFER_ONLY_REFERRALS (0x00004000) and the requested distinguished name (DN) is not in the local database, the agent returns to the caller a list of referrals of servers that will have real copies of the DN in question. If the DSA has the resolve name request flag set to NWC_DS_PREFER_REFERRALS (0x00002000) instead, the agent returns only one referral (the first entry in the list) instead of the entire list.

NOTE

When the resolve name request flag is set, the behavior of the DSAResolveName process is very similar to the LDAP referral process: The client is given a list of referrals, and it is up to the caller to decide what to do with that information.

When the referral information is returned to the caller, it is up to the caller to make a new request to the new servers. If the caller (such as the Novell Client for Windows on a workstation) did not previously have a connection to the referred server, an authenticated-but-not-licensed connection is created in order to retrieve the (server-centric) object ID from the server.

NOTE

One of the steps in the DSAResolveName process in eDirectory 8.5 and higher checks whether the object has a global unique identifier (GUID). If a GUID value is not found, one will be created. eDirectory 8.7 requires that every object have a GUID. Therefore, DSAResolveName is a process that ensures that the objects have GUIDs because this routine is used often.

Many processes (such as file system trustee assignments on Novell Storage Service [NSS] version 3 volumes on NetWare 6 servers) are starting to use GUIDs instead of the server-centric IDs of the objects. To maintain backward compatibility with previous versions of NDS, however, the server-centric IDs are still being maintained and used.

If the DSA request flag did not specify referrals, a temporary external reference will be created on the local server (the one the workstation sent the initial request to), and its object ID will be returned to the caller. (If the server no longer needs an ExRef, background processes will have it removed after 192 hours [eight days]. See the section “The Backlink Process,” later in this chapter.)

NOTE

If the object ID of the real object is desired, the calling application should set the request flags for one of the two referral options discussed previously.
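The three ways of completing name resolution can be summarized in a small Python sketch. The two flag values are the ones quoted in the text; everything else (the function name `resolve_name_reply`, the tuple-shaped reply, and the `create_temp_exref` callback) is hypothetical and exists only to make the branching visible. The sketch also assumes that an object found in the local database is simply answered with its local ID.

```python
from typing import Callable, List, Optional, Tuple, Union

NWC_DS_PREFER_ONLY_REFERRALS = 0x00004000  # value quoted in the text
NWC_DS_PREFER_REFERRALS = 0x00002000       # value quoted in the text

Reply = Tuple[str, Union[int, List[str]]]


def resolve_name_reply(flags: int,
                       local_id: Optional[int],
                       referrals: List[str],
                       create_temp_exref: Callable[[], int]) -> Reply:
    """Hypothetical model of what the DSA hands back to the caller."""
    if local_id is not None:
        # Assumption: the DN is in the local database, so no referral is needed.
        return ("entry_id", local_id)
    if flags & NWC_DS_PREFER_ONLY_REFERRALS:
        # The caller receives the whole list of servers holding real copies.
        return ("referrals", list(referrals))
    if flags & NWC_DS_PREFER_REFERRALS:
        # The caller receives only the first entry in the list.
        return ("referrals", list(referrals[:1]))
    # No referral flag set: a temporary external reference is created locally
    # and its (server-centric) object ID is returned.
    return ("entry_id", create_temp_exref())
```

In the two referral cases, the caller still has to contact the referred server itself to obtain the real object ID.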

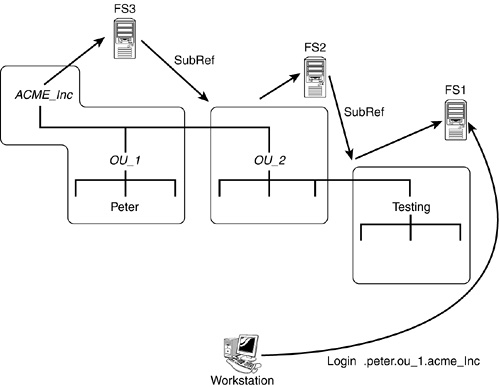

The name resolution and tree-walking processes are best illustrated by the following login example. In this example, three different partitions in the DS tree are located on three separate servers (see Figure 6.2), and the replica placement is as follows:

| | | | |
|---|---|---|---|
| Server FS1 | — | — | Master |
| Server FS2 | — | Master | SubRef |
| Server FS3 | Master | SubRef | — |

Suppose the workstation is initially attached to FS1. The user logs in as .peter.ou_1.acme_inc. Server FS1 does not contain information for this User object, nor does it have information about OU_1 or ACME_Inc. Rather than immediately returning an error message (indicating that the object is not found) to the workstation, however, FS1 passes the query up the tree—using its internal DS client agent—to FS2. (It knows FS2 is closer to [Root] because of the SubRef pointer.)

If FS2 does not contain information for the object (which it does not in this example), it passes the name of the server containing a parent partition of itself—in this case, FS3—back to FS1. Then FS1 queries FS3 for the desired information; FS1 has “walked” up the DS tree structure, toward [Root]. In this instance, FS3 holds the partition that contains the User object. Therefore, FS1 redirects the workstation (transparently to the user) to query server FS3 directly; if the workstation did not previously have a connection to FS3, an authenticated-but-not-licensed connection will be created in order to retrieve the information from the server. (The redirection here is a function of the Novell Client, and the behavior is hard-coded and cannot be changed.)

Tree-walking gives a DS client the ability to log in or authenticate without having to attach to the specific server holding the partition (or replica of the partition) that contains the User object. Tree-walking is also used to locate services, such as servers and print queues, anywhere within a DS tree. You might have noticed the similarities between DS’s tree-walking and LDAP’s chaining process. The main difference between the two is that DS’s tree-walking does not require the requesting server to authenticate to the target server every time it connects, whereas LDAP’s chaining process does.

NOTE

In an NDS/eDirectory environment, after a client is authenticated to a DS tree, that client can locate any (DS-aware) service within the tree without the use of a SAP packet or an SLP packet. NetWare 2 and NetWare 3 services, such as print servers and database servers, must broadcast the services’ availability (over IPX) on a regular basis (the default is 60 seconds) so that clients can locate these services.

This feature is especially beneficial for networks that have many services. The use of DS to locate services significantly reduces the amount of network broadcast or multicast traffic due to SAP or SLP. This reduction in network traffic is also of importance to companies that have WAN links. It is one of the many reasons NDS/eDirectory works well in large networks where other directory services failed to deliver the expected performance.

Some of the most common problems in NDS/eDirectory are caused by obituaries (commonly referred to as obits) not being processed properly. Any number of reasons can cause obituaries to not be processed (resulting in what is known as stuck obits), ranging from a down server or communication link to an invalid backlink list in an object. If you understand the background processes and how certain processes use obituaries, you may find it easier to determine the best course of action for correcting a problem.

NOTE

Many of the most common DS problems are caused by problems with obituaries purging, but they initially appear to be caused by something else.

Obituaries are operational attributes (that is, not attributes that can be controlled by the user) that DS uses to ensure referential integrity between objects during certain operations—such as object and partition move operations, object deletions, and object restorations. DS uses the obit attribute internally, with the syntax type SYN_OCTET_STRING. The attribute has the DS_READ_ONLY_ATTR constraint flag that restricts its access to only the DS servers.

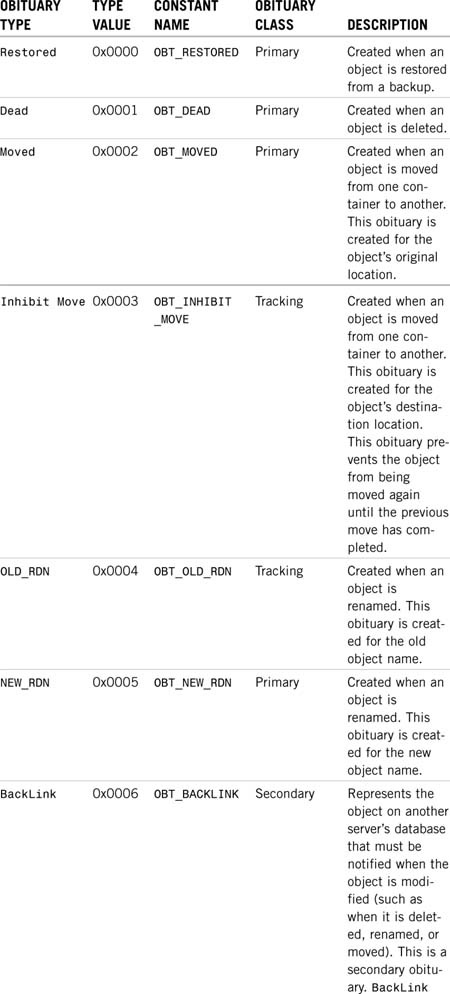

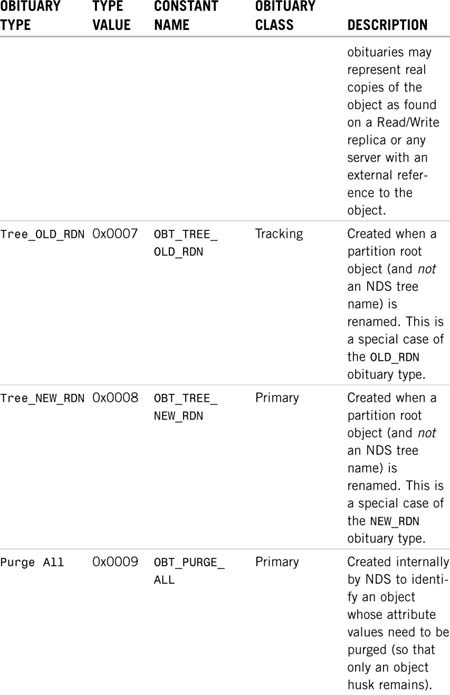

There are three obituary type classes: primary, secondary, and tracking. A primary obituary indicates an action on an object. A secondary obituary indicates the servers that must be contacted and informed of the primary obituary’s action. A tracking obituary is an informational obituary that is associated with certain primary obituaries. A tracking obit does not go through the same process as the primary and secondary obits.

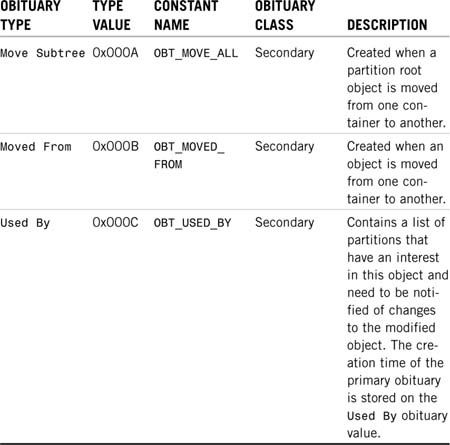

Table 6.1 shows the different obituary types and when DS generates them.

NOTE

Some Novell documentation and TIDs refer to the Used By obit as the Type C obit because of its value.

In addition to the obituary types and classes, obituaries move through four distinct states or stages. These states are always executed in the same order to ensure that the servers process obituaries properly and then purge them from the system. Obituary advancement through the four states occurs during the synchronization process. By observing the synchronization process, you can see the obituaries actually being purged. Listing 6.1 shows where obituaries appear in the synchronization process. Notice that the object User1.West.XYZCorp has two obituary entries: one of Type 2 (Moved) and one of Type 6 (BackLink). The obituary stage is shown in the flags= field.

Listing 6.1 Obituary State Advancement

SYNC: Start sync of partition <[Root]> state:[0] type:[0]

SYNC: Start outbound sync with (#=2, state=0, type=1) [010000C3] <RIGEL.West.XYZCorp>

SYNC: Using version 5 on server <CN=RIGEL>

SENDING TO ------> CN=RIGEL

SYNC: sending updates to server <CN=RIGEL>

SYNC:[010000B8][(22:20:00),2,1] ORION.East.XYZCorp (NCP Server)

SYNC:[010002A4][(19:49:49),2,1] JimH.West.XYZCorp(User)

SYNC:[010000C3][(08:31:47),1,1] RIGEL.West.XYZCorp (NCP Server)

SYNC: [150002E4] obituary for User1.West.XYZCorp

valueTime=36905EB9,1,20 type=2, flags=0, oldCTS=36905E6F,1,1

valueTime=36905EB9,1,21 type=6, flags=0, oldCTS=36905E6F,1,1

SYNC:[150002E4][(00:04:05),1,1] User1.West.XYZCorp (User)

SYNC: [0E0002BC] obituary for User1.East.XYZCorp

valueTime=36905EB9,1,17 type=3, flags=0, oldCTS=36905EB9,1,1

SYNC:[0E0002BC][(23:24:57),1,1] User1.East.XYZCorp (User)

SYNC: Objects: 7, total changes: 74, sent to server <CN=RIGEL>

SYNC: update to server <CN=RIGEL> successfully completed

Merged transitive vector for [010000C3] <RIGEL.West.XYZCorp>

succeeded

SYNC: SkulkPartition for <[Root]> succeeded

SYNC: End sync of partition <[Root]> All processed = YES.

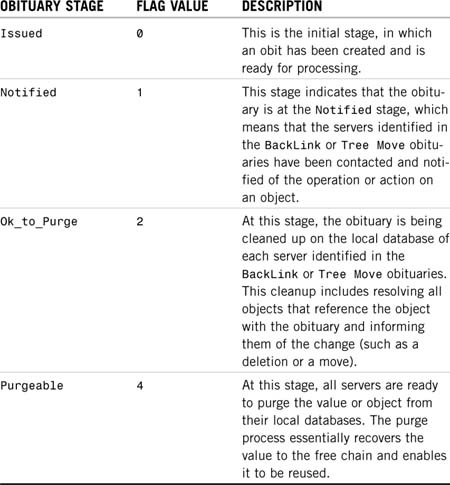

The stages that an obituary passes through before it is deleted are shown in Table 6.2. The four stages are always followed in the order presented. When an obituary is marked Flags=0004 (Purgeable), it is then up to each server to purge it from its local database.

You might notice a couple of odd things about the information in Table 6.2. The first is the flag values: the progression is 0, 1, 2, 4. This field is a bit field, so the software checks specific bits rather than looking for specific integer values.

The second thing that may appear strange is the Issued (flags=0) and Ok_to_Purge (flags=2) states; these states indicate the beginning or end of another stage rather than their own processing procedure. Stage 0 is initially set when an obituary is created; this change is then replicated to all servers. When the replication cycle is complete, DS knows that all servers are at Stage 0, and it can go ahead and start the notification process (Stage 1). A change in the obituary is made, and that information is replicated to the other servers that need to be notified. After all servers have been notified, the obituary is set to Stage 2, meaning that Stage 1 (notification) has completed. When all servers have received a flag indicating that it is okay to purge (flags=2), the servers mark the obituaries as purgeable (flags=4), and that change is replicated to all the servers. At this point, the individual servers carry out the actual purge, but because all servers already know that the obituary is purgeable, no additional notification is needed after the obituaries have actually been purged.
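The bit-field nature of the flags value can be illustrated with a short Python sketch. The numeric values (0, 1, 2, 4) and the stage names come from the discussion above; the constant names and the helper functions `stage_name` and `next_flags` are hypothetical. The sketch tests bits from the highest stage downward, which is an assumption made so that the result is the same whether or not lower bits remain set.

```python
# Stage values as described in the text; the constant names are illustrative.
ISSUED = 0x0
NOTIFIED = 0x1
OK_TO_PURGE = 0x2
PURGEABLE = 0x4

_ORDER = [
    ("Issued", ISSUED),
    ("Notified", NOTIFIED),
    ("Ok_to_Purge", OK_TO_PURGE),
    ("Purgeable", PURGEABLE),
]


def stage_name(flags: int) -> str:
    """Report the furthest stage whose bit is set (bit test, not equality)."""
    if flags & PURGEABLE:
        return "Purgeable"
    if flags & OK_TO_PURGE:
        return "Ok_to_Purge"
    if flags & NOTIFIED:
        return "Notified"
    return "Issued"


def next_flags(flags: int) -> int:
    """Return the flags value of the following stage; Purgeable is final."""
    names = [name for name, _ in _ORDER]
    index = names.index(stage_name(flags))
    return _ORDER[min(index + 1, len(_ORDER) - 1)][1]
```

Starting from `ISSUED`, repeated calls to `next_flags` walk through the four stages in the fixed order the text describes.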

NOTE

The four obituary processing stages actually describe a multiserver transaction processing system. You can think of the processing of obituaries as a synchronized transaction that takes place nearly simultaneously on multiple servers.

Before an obituary (regardless of its class) can move to the next state, the current state must have been synchronized to all replicas of the real object. In order to determine whether all replicas in the replica ring have seen a given obituary state, a time is computed from the Transitive Vector attribute of the partition that contains the obituary. If the modification timestamp (MTS) on the obituary is older than this time, the server responsible for that obituary can advance it to the next state.

NOTE

eDirectory 8.5 and previous versions of NDS use the Purge Vector attribute’s time to indicate when an obituary’s state should be advanced. (Purge Vector is a nonsynchronizing, single-valued attribute of the partition root object. Its value, derived from the Transitive Vector attribute [NetWare 5 and higher] or the Synchronized Up To attribute [NetWare 4] of the partition, represents the oldest state in time that has been seen by each DSA in the replica ring. Purge Vector is updated only if the partition has a successful sync cycle.) eDirectory 8.6 and higher, on the other hand, use the Obituary Time Vector attribute, a value stored in server memory that is recalculated each time the Janitor process runs (every two minutes, by default).
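The idea of deriving a "seen by everyone" time from the Transitive Vector attribute can be sketched as follows. This is a simplified model, not the actual DS algorithm: timestamps are reduced to plain integers, the transitive vector is modeled as a dictionary of per-replica time vectors, and the function names `minimum_vector` and `seen_by_all` are hypothetical.

```python
from typing import Dict

TimeVector = Dict[int, int]  # replica number -> latest timestamp seen from it


def minimum_vector(transitive_vector: Dict[int, TimeVector]) -> TimeVector:
    """For each replica number, take the oldest timestamp that any server in
    the ring reports having seen. A missing entry counts as 0 (nothing seen)."""
    replicas = set()
    for vector in transitive_vector.values():
        replicas.update(vector)
    return {
        replica: min(vector.get(replica, 0)
                     for vector in transitive_vector.values())
        for replica in replicas
    }


def seen_by_all(mts_seconds: int, mts_replica: int,
                min_vector: TimeVector) -> bool:
    """An obituary state may advance once its modification timestamp is older
    than the minimum time recorded for the replica that made the change."""
    return mts_seconds < min_vector.get(mts_replica, 0)
```

With two replicas whose vectors are `{1: 100, 2: 90}` and `{1: 80, 2: 95}`, the minimum vector is `{1: 80, 2: 90}`, so a change stamped 70 by replica 1 has been seen everywhere, while one stamped 85 has not.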

Primary obituaries can be advanced in their states only after all associated secondary obituaries have advanced through all their states. After the primary obituary reaches its last state and that state synchronizes to all servers in the replica ring, all that remains is the object “husk,” which is an object without attributes—an object that can subsequently be purged from the database by the Purge process. Tracking obituaries are removed after the primary obituary is ready to be removed or, in the case of OBT_INHIBIT_MOVE, the tracking obituary is removed after the primary obituary has moved to the flags=1 (Notified) state on the Master replica.

For a secondary obituary of type BackLink, the server that holds the Master replica of the object with the obituary is responsible for advancing the states. For a secondary obituary of type Used By, the server that created it is responsible for advancing the obituary states as long as that replica still exists. If it does not still exist, the server holding the Master of that partition takes over advancing the obituary states for the Used By obituary. For a Move Subtree obituary, the Master replica of the parent partition is responsible for advancing the states.

NOTE

The Obituary process is scheduled on a per-partition basis, after the partition finishes a successful inbound sync. If only one replica (the Master) of a partition exists, the Heartbeat interval still schedules an Outbound Replication process, which in turn kicks off the Obituary process.

The type of the obituary determines the replica responsible for processing the obits (the sender). With the exception of OBT_USED_BY, the Master replica is responsible for starting the background process. The processing of a Used By obit is started by the replica that actually modified the object. If this replica no longer exists, the Master replica then kicks off the background process.

The steps involved in obituary processing are complicated. However, the basic concept can be illustrated by using a somewhat simplified example. eDirectory performs the following operations when an object is deleted:

1. eDirectory adds a primary obituary of type Dead to the “deleted” object and sets the flag to Issued. This takes place on the Master replica.

2. eDirectory creates a secondary obit of type BackLink and sets the stage flag to Issued for every server that has an external reference to this object; the server DNs are listed in the BackLink attribute of the object. eDirectory stores the creation time of the Dead obit as part of this secondary obit.

3. eDirectory creates a secondary obit of type BackLink and sets the stage flag to Issued for every server that holds a real replica of the object (not an external reference). eDirectory stores the creation time of the Dead obit as part of this secondary obit.

4. eDirectory creates a secondary obit of type Used By and sets the stage flag to Issued for every DN listed in the Used By attribute of the deleted object. The Used By attribute contains a list of partitions (not servers) that have an interest in this object and need to be notified of changes to this entry. eDirectory stores the creation time of the Dead obit as part of this secondary obit.

5. eDirectory removes all attributes except the obituaries, which results in an object husk. The flag on the entry’s Object Class attribute is set to Non Present, making the object “invisible” in most of the standard management tools, such as ConsoleOne (but not in DSBrowse or NDS iMonitor).

6. The Outbound Replication process synchronizes the deletion of attributes to all other servers in the replica ring.

7. After the next successful inbound synchronization of this partition, the Obituary process is started.
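Steps 1 through 4 of the list above amount to building a small set of obituary records. The following Python sketch models just that bookkeeping. The `Obit` class, its field names, and the function `obits_for_delete` are hypothetical; only the obituary types, the Issued stage, and the rule about storing the Dead obit's creation time on each secondary obit come from the text.

```python
from dataclasses import dataclass
from typing import List, Optional

ISSUED = 0x0  # initial stage flag


@dataclass
class Obit:
    obit_type: str                  # "Dead", "BackLink", or "Used By"
    target: str                     # object, server, or partition concerned
    flags: int = ISSUED
    dead_cts: Optional[int] = None  # creation time of the Dead obit
                                    # (set on secondary obits only)


def obits_for_delete(deleted_dn: str,
                     dead_cts: int,
                     backlink_servers: List[str],
                     replica_servers: List[str],
                     used_by_partitions: List[str]) -> List[Obit]:
    """Build the obituaries that steps 1-4 attach to a deleted object."""
    # Step 1: the primary obituary of type Dead.
    obits = [Obit("Dead", deleted_dn)]
    # Steps 2 and 3: one BackLink obit per server holding an external
    # reference, then one per server holding a real replica.
    for server in list(backlink_servers) + list(replica_servers):
        obits.append(Obit("BackLink", server, dead_cts=dead_cts))
    # Step 4: one Used By obit per interested partition.
    for partition in used_by_partitions:
        obits.append(Obit("Used By", partition, dead_cts=dead_cts))
    return obits
```

Every record starts in the Issued stage; the Obituary process is what later advances the flags.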

The Obituary process does the following:

Computes a time vector that is equivalent to the minimum Transitive Vector attribute, referred to as the Purge Vector attribute. eDirectory 8.6 and higher compute a second minimum vector, called the Obituary Time Vector attribute, which does not include timestamp values from Subordinate Reference replicas.

Categorizes each obituary in this partition and takes appropriate action:

If the obituary is a Used By obit and this server is the server where the deletion occurred (determined by comparing the replica number in the obituary’s MTS to this server’s replica number), this server is responsible for processing this obituary. Therefore, this server notifies the other servers about this obit and sets the stage flag to Notified. The next time the Obituary process runs, this state flag is advanced to the next stage, until it reaches Purgeable (that is, after all partitions in the Used By attribute have been notified), and then it is purged.

If the obituary is a BackLink obit and this server has the Master replica, this server is responsible for processing this obituary. Therefore, this server notifies the other servers about this obit and sets the stage flag to Notified. The next time the Obituary process runs, this state flag is advanced to the next stage, until it reaches Purgeable (that is, after all servers in the BackLink attribute have been notified), and then it is purged.

If the obituary is a primary obituary (such as a Dead obit, in this example), there are no secondary obituaries outstanding for this primary obit, and the MTS on the obituary is older than the Purge Vector/Obit Time Vector attribute, the obit’s flag value can be set to Purgeable because all servers have seen the change.

When the obit value flag on the primary obit is set to Purgeable, the Purger process, also known as the Flat Cleaner process, removes the object’s record (which is no longer flagged as Present) from the database and completes the deletion action.

Because stuck obits (that is, servers not clearing out obits from their local databases, thus preventing certain operations from being performed) are the source of many NDS/eDirectory problems, a good grasp of how obituaries depend on other DS processes is important to understanding the DS background processes, which are discussed next.

The DS module maintains the database through several background processes running on each server. These processes run automatically and generally do not need to be manually invoked. There are cases in which there is benefit in forcing a process to run, but as a general rule, you should not force them to run unless necessary. As discussed in Chapter 4, “Don’t Panic,” doing something just for the sake of doing it is frequently not a good idea.

The Synchronization process, sometimes referred to as the Skulker process, keeps the information in multiple replicas of the DS database current on all servers. The process is event driven, meaning it is kicked off after an object has been modified. Listing 6.2 shows a sample of the Sync process in the DSTrace screen.

NOTE

The exact format of DSTrace output varies, depending on the version of NDS/eDirectory (thus, the version of the utilities), flag settings, and sometimes the operating system platform. Therefore, the DSTrace, DSRepair, and other listings shown in this and other chapters in this book may not exactly match what you find on your systems, but they serve as a guide to the correct information.

Listing 6.2 A Sample Synchronization Process

SYNC: Start sync of partition <[Root]> state:[0] type:[0]

SYNC: Start outbound sync with (#=2, state=0, type=1)

[010000C3]<RIGEL.West.XYZCorp>

(21:11:57) SYNC: failed to communicate with server

<CN=RIGEL> ERROR: -625

SYNC: SkulkPartition for <[Root]> succeeded

SYNC: End sync of partition <[Root]> All processed = NO.

Listing 6.2 demonstrates a failed synchronization condition. The local server is attempting to contact the server named CN=Rigel.OU=West.O=XYZCorp but is unable to complete the Synchronization process. The error -625 indicates a transport failure—also known as a communications failure. To correct this problem, the easiest way to proceed is to verify that the target server is up and that the communications links between the two servers are working properly.
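When a trace is long, it can help to scan it for error codes rather than read it line by line. The following Python sketch does this for text in the style of Listing 6.2. The function name `scan_trace` is hypothetical, the regular expression assumes the `ERROR: -nnn` form shown in the listing, and the lookup table holds only the two error codes explained in this chapter.

```python
import re
from typing import List, Tuple

# Only the codes explained in this chapter; anything else is "unknown".
KNOWN_ERRORS = {-601: "object not found", -625: "transport failure"}

_ERROR_RE = re.compile(r"ERROR:\s*(-\d+)")


def scan_trace(trace: str) -> Tuple[List[Tuple[int, str]], bool]:
    """Return (errors, incomplete) for a block of DSTrace-style text.

    errors is a list of (code, meaning) pairs in order of appearance;
    incomplete is True when the trace reports 'All processed = NO'.
    """
    errors = []
    for match in _ERROR_RE.finditer(trace):
        code = int(match.group(1))
        errors.append((code, KNOWN_ERRORS.get(code, "unknown")))
    incomplete = "All processed = NO" in trace
    return errors, incomplete


SAMPLE = """SYNC: Start sync of partition <[Root]> state:[0] type:[0]
SYNC: Start outbound sync with (#=2, state=0, type=1)
[010000C3]<RIGEL.West.XYZCorp>
(21:11:57) SYNC: failed to communicate with server
<CN=RIGEL> ERROR: -625
SYNC: SkulkPartition for <[Root]> succeeded
SYNC: End sync of partition <[Root]> All processed = NO.
"""
```

Calling `scan_trace(SAMPLE)` reports the -625 transport failure and flags the cycle as incomplete. Because the exact DSTrace format varies by version and platform, the pattern may need adjusting for your systems.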

A successful synchronization cycle of the [Root] partition between the two servers is shown in Listing 6.3.

Listing 6.3 A Successful Synchronization

SYNC: Start sync of partition <[Root]> state:[0] type:[0]

SYNC: Start outbound sync with (#=2, state=0, type=1)

[010000C3]<RIGEL.West.XYZCorp>

SYNC: Using version 5 on server <CN=RIGEL>

SENDING TO ------> CN=RIGEL

SYNC: sending updates to server <CN=RIGEL>

SYNC:[010000B7][(20:02:16),1,3] XYZCorp (Organization)

SYNC:[010000B8][(22:20:00),2,1] ORION.East.XYZCorp (NCP Server)

SYNC:[0100029A][(20:02:50),2,1] Jim.East.XYZCorp (User)

SYNC:[0100029B][(19:50:43),2,1] Amy.East.XYZCorp (User)

SYNC:[010002A4][(19:49:49),2,1] Kenny.East.XYZCorp (User)

SYNC:[010002A8][(19:58:46),2,1] WINNT.Scripts.East.XYZCorp (Profile)

SYNC:[100002E1][(02:36:26),1,1] WIN98.Scripts.East.XYZCorp (Profile)

SYNC: Objects: 7, total changes: 25, sent to server

<CN=RIGEL>

SYNC: update to server <CN=RIGEL> successfully completed

Merged transitive vector for [010000C3]<RIGEL.West.XYZCorp>

succeeded

SYNC: SkulkPartition for <[Root]> succeeded

SYNC: End sync of partition <[Root]> All processed = YES.

This time the servers are talking to each other, and there are a few updates that need to be sent from one server to the other.

NOTE

Unlike many other DS implementations, NDS/eDirectory sends only the changed attribute values (the deltas) of a given object, even if they are part of a multivalued attribute.

The frequency at which the Sync process runs depends on the object attribute being changed. Each attribute has a flag that determines whether it is “high convergence.” This flag has one of two possible values:

Sync Immediate (DS_SYNC_IMMEDIATE_ATTR): With this flag, the attribute value is scheduled for immediate synchronization (with a 10-second holding time after the first event is detected so that subsequent events within this time window can be processed at the same time). This is required on some attributes, such as the Password Required attribute of a User object, to maintain either proper data integrity or security.

Sync Never (DS_SCHEDULE_SYNC_NEVER): The name of this flag is a little misleading. This flag indicates that a change to the attribute’s value does not trigger synchronization (immediately). The attribute can wait to propagate the change until the next regularly scheduled synchronization cycle (30 minutes for NetWare 4 servers and 60 minutes for NetWare 5 servers and higher, including eDirectory servers) or until some other event triggers synchronization.

NOTE

If the Sync Immediate flag is not specified for an attribute, DS automatically assumes the attribute to be Sync Never.
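The effect of the two flag values on convergence time can be expressed as a small calculation. The Python sketch below uses only the intervals given in the text (a 10-second holding time, and 30- or 60-minute scheduled cycles); the function name `max_wait_seconds` and the platform labels are hypothetical.

```python
HOLD_SECONDS = 10  # holding time for Sync Immediate attributes

# Regularly scheduled synchronization cycle, in minutes, per the text.
SCHEDULED_CYCLE_MINUTES = {
    "netware4": 30,
    "netware5_or_later": 60,  # includes eDirectory servers
}


def max_wait_seconds(sync_immediate: bool, platform: str) -> int:
    """Upper bound on how long a change waits before outbound sync starts.

    For Sync Never attributes this is only a ceiling: any other event that
    triggers synchronization earlier carries the change along with it.
    """
    if sync_immediate:
        return HOLD_SECONDS
    return SCHEDULED_CYCLE_MINUTES[platform] * 60
```

A change to a Sync Immediate attribute waits at most 10 seconds, while a change to a Sync Never attribute on an eDirectory server may wait up to 3,600 seconds if nothing else triggers a cycle first.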

A Per Replica (DS_PER_REPLICA) flag also exists and can be defined for attributes. When an attribute is defined as Per Replica, the information of the attribute is not synchronized with other servers in the replica ring. Most of the DirXML-related attributes are defined with this flag.

TIP

Appendix C, “eDirectory Classes, Objects, and Attributes,” lists all the attributes defined for eDirectory 8.7.3, along with synchronization flag information.

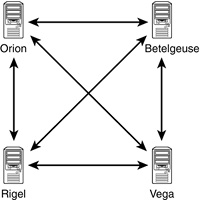

In NetWare 4.x any server that holds a replica of an NDS partition has to communicate with all the other servers that hold a replica of that partition. Figure 6.3 shows the type of communication that has to take place in order for synchronization to be completely successful on all NetWare 4.x servers.

As you can guess, the number of synchronization processes (or vectors, as they are sometimes called) that must complete grows quadratically as replicas are added. The amount of traffic generated can be tremendous. The number of communications vectors is n × (n–1), where n represents the number of replicas in the replica ring. Thus, at 27 replicas, a total of 27 × 26, or 702, communications vectors exist.

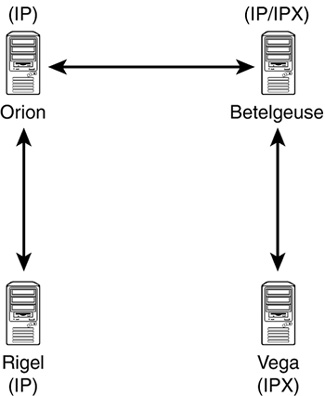

In NetWare 5, Novell introduced the idea of transitive synchronization. Transitive synchronization is a synchronization methodology wherein a server doesn’t have to contact every other server in the replica list. It can rely on other servers to ensure that synchronization is complete, as demonstrated in Figure 6.4.

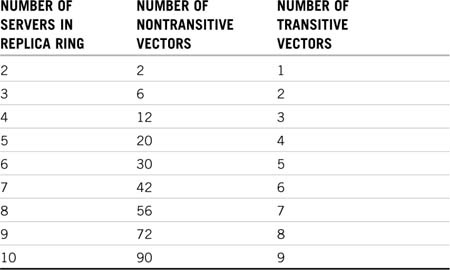

The reduction in traffic in a transitive synchronization environment is very significant, and the time needed to complete the entire synchronization cycle is reduced. Ideally, the vector count would simply equal n–1, so with 27 replicas, only 26 communications vectors would be needed. Table 6.3 shows the difference in vectors between transitive and nontransitive synchronization.

This discussion represents the ideal number of synchronization vectors when using transitive synchronization. As you can see in Table 6.3, the number of communications vectors with transitive synchronization is significantly smaller than the number with nontransitive synchronization, although it is possible that the number of vectors could increase, depending on the network design and availability of services. The actual number of synchronization vectors with transitive synchronization could be larger but will always be smaller than without transitive synchronization.
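The two formulas are easy to compare for any ring size. The Python sketch below simply evaluates n × (n–1) and the ideal n–1 from the discussion above; the function names are hypothetical, and the transitive figure is the best case, which real networks may exceed.

```python
def nontransitive_vectors(replicas: int) -> int:
    """Every replica contacts every other replica: n * (n - 1)."""
    return replicas * (replicas - 1)


def transitive_vectors_ideal(replicas: int) -> int:
    """Best case with transitive synchronization: n - 1."""
    return max(replicas - 1, 0)


def comparison_rows(sizes):
    """Return (n, nontransitive, ideal transitive) tuples for each ring size."""
    return [(n, nontransitive_vectors(n), transitive_vectors_ideal(n))
            for n in sizes]
```

For the 27-replica ring used in the text, the functions give 702 and 26, matching the figures quoted above.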

NOTE

In a way, you can consider transitive synchronization a feature of NDS 7 and higher. Therefore, you do not need to have NetWare servers to take advantage of it because the non-NetWare DS servers will be running eDirectory, which supports transitive synchronization.

Transitive synchronization also addresses mixed transport protocols used on different DS servers. Consider the example presented in Figure 6.4. Without transitive synchronization support, the servers Rigel and Orion will not be able to synchronize with the server Vega because they do not share a common transport protocol. With transitive synchronization, however, there is no problem because the server Betelgeuse acts as a gateway or a mediator.

WARNING

One side effect of replica rings with mixed transport protocols is that the servers Rigel and Orion in this example will attempt to talk directly to Vega (and vice versa). They will report “Unable to communicate with server x” errors. However, this does not indicate a problem with your DS; DS is merely reporting a communication failure that transitive synchronization already compensates for.

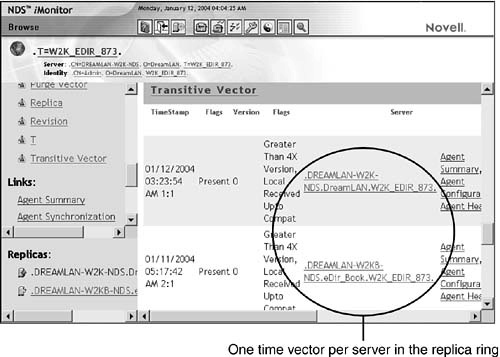

To understand how transitive synchronization works, you must first be familiar with transitive vectors. NDS uses a time vector—also called a time array—to keep track of changes to a given partition. This time vector holds timestamps for all the replicas in the replica ring from a given server’s perspective. (For instance, if there are two replicas for this partition, two timestamps will be found in the time vector, as illustrated in Figure 6.5.) Each server holds a copy of its own time vector as well as copies of time vectors from the other servers in the ring. This group of time vectors is collectively known as the transitive vector. The Transitive Vector attribute is multivalued and associated with the partition root object, so NDS/eDirectory can manage the synchronization process and determine what needs to be sent to other replicas. Each replica has its own transitive vector; there is only one transitive vector for each replica, and it is synchronized between all servers within the replica ring.

To see the transitive vector values in NDS iMonitor, as shown in Figure 6.5, from Agent Summary, you click the Partition Replica link and then click the Partition link, followed by Attributes. Finally, you click the Transitive Vector link.

NOTE

In NDS 6 and earlier, the single-valued attribute Synchronized Up To is used to determine when the latest changes were made. The value of this attribute is unique for each replica and is not synchronized to the other servers in the replica ring.

When you synchronize the transitive vector values, all the replicas can synchronize without needing to have every replica communicate with every other replica. Each time the replica synchronization process begins its scheduled run, it first checks the entries in the transitive vector to determine which other servers hold replicas that need to be synchronized. The check compares the timestamps of the time vectors of the source server that received the update with those of the destination server. If a timestamp is greater for the source server, replica updates are transferred. The source server updates its own time vector within the transitive vector and sends the updated transitive vector to the target server. At the end of the replica update process, the target server updates its own time vector within the transitive vector and sends that updated transitive vector back to the source server. Now the two servers know they are both up-to-date, and the target server will not try to sync with the source server with the same update.
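The comparison step can be illustrated with a small model. This is only a sketch under simplifying assumptions: each time vector is a dictionary that maps a replica number to a plain integer standing in for a real timestamp, and the names `needs_update`, `merged`, and `sync_once` are hypothetical, not eDirectory internals.

```python
from typing import Dict

# Hypothetical encoding: replica number -> timestamp (plain integer).
TimeVector = Dict[int, int]


def needs_update(source_tv: TimeVector, target_tv: TimeVector) -> bool:
    """True if the source has seen any change the target has not."""
    return any(ts > target_tv.get(replica, 0) for replica, ts in source_tv.items())


def merged(a: TimeVector, b: TimeVector) -> TimeVector:
    """Per-replica maximum of two time vectors."""
    return {r: max(a.get(r, 0), b.get(r, 0)) for r in set(a) | set(b)}


def sync_once(vectors: Dict[str, TimeVector], source: str, target: str) -> bool:
    """Send updates from source to target if needed; return True if data was sent."""
    if not needs_update(vectors[source], vectors[target]):
        return False
    # After the update, the target is at least as current as the source.
    vectors[target] = merged(vectors[target], vectors[source])
    return True


if __name__ == "__main__":
    ring = {"Betelgeuse": {1: 200, 2: 100}, "Rigel": {1: 150, 2: 100}}
    print(sync_once(ring, "Betelgeuse", "Rigel"))  # updates are sent
    print(sync_once(ring, "Betelgeuse", "Rigel"))  # nothing left to send
```

The second call sends nothing because the target's vector has already caught up, which mirrors how the servers avoid resending the same update.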

One of the most significant performance-enhancement features in eDirectory is the introduction of multithreaded replica synchronization, starting with eDirectory 8.6. In previous versions of eDirectory and NDS, all inbound and outbound synchronization was performed using a single thread. Partitions were synchronized in a serial manner—changes in one partition could not be synchronized until the previous partition had been completely processed. However, this is not very efficient for trees where there may be many partitions.

Starting with eDirectory 8.6, outbound synchronization is now multithreaded. Partitions stored on one server can be synchronized out in a parallel manner, allowing replicas to be synchronized in a much more efficient manner.

NOTE

Inbound synchronization is still single threaded. An eDirectory server can receive inbound synchronization for only one partition at a time.

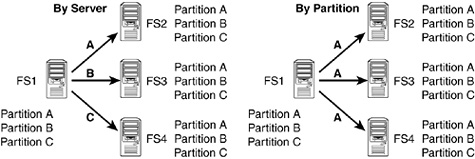

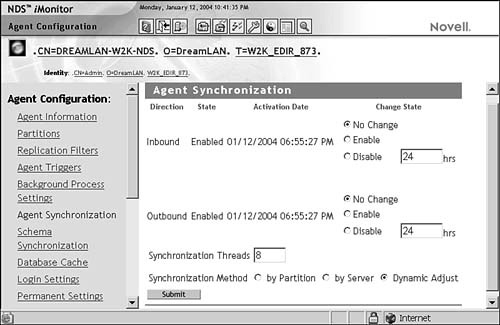

Multithreaded synchronization takes place using one of two synchronization methods (see Figure 6.6):

By partition—This method causes eDirectory to send out one partition to multiple recipient servers at a time.

By server—This method causes eDirectory to send out multiple partitions to multiple unique servers at one time.

When eDirectory starts up, it analyzes all partitions and corresponding replica rings stored on the server. This analysis procedure results in a list of all servers involved in replica synchronization and all partitions stored on those servers. If the number of partitions stored on the local server is equal to or greater than the number of unique servers minus one (the local server), eDirectory will automatically synchronize using the by-partition method. Otherwise, eDirectory uses the by-server method. By default, the synchronization method is dynamically adjusted (that is, selected by eDirectory upon startup). But you can also manually select a preferred method via NDS iMonitor (see Figure 6.7).
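The selection rule can be written as a single comparison. This is a sketch of the rule as stated above, not eDirectory's actual code; the function name and the assumption that `unique_servers` includes the local server are illustrative.

```python
def choose_sync_method(local_partitions: int, unique_servers: int) -> str:
    """Pick the outbound synchronization method at startup.

    unique_servers is assumed to count the local server, so one is
    subtracted before the comparison, as the text describes.
    """
    if local_partitions >= unique_servers - 1:
        return "by-partition"
    return "by-server"


if __name__ == "__main__":
    print(choose_sync_method(local_partitions=4, unique_servers=5))
    print(choose_sync_method(local_partitions=2, unique_servers=6))
```

A server holding 4 partitions that are shared with 5 unique servers would use the by-partition method, whereas one holding 2 partitions shared with 6 servers would use the by-server method.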

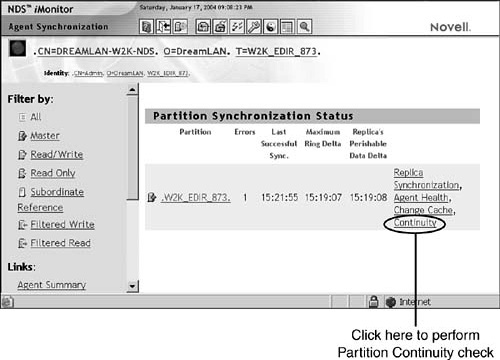

FIGURE 6.7 Use the Agent Synchronization link under Agent Configuration in NDS iMonitor to view and manage multithreaded synchronization.

The number of threads used for synchronization determines how multithreaded synchronization behaves. For example, if only one thread is configured for synchronization, multithreaded synchronization is effectively disabled. By default, eDirectory allows a maximum of eight threads for multithreaded synchronization.

eDirectory automatically determines the number of threads to use in multithreaded synchronization by comparing the number of partitions stored on the local server with the number of unique servers in those partitions’ replica rings. If the number of partitions is less than or equal to two times the number of unique servers, eDirectory sets its thread usage to the number of partitions stored on the local server. Otherwise, the number of threads is set to half the number of unique servers in shared replica rings. This allocatable thread count is used only if it does not exceed the configured maximum thread count; if it does, the number of allocatable threads is set to the configured maximum thread count.
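The thread calculation can be sketched as follows. The function name is hypothetical, and two details are assumptions made for the sketch because the text does not specify them: "half" is taken as integer division, and the result is never allowed to drop below one thread.

```python
def allocatable_threads(partitions: int, unique_servers: int,
                        configured_max: int = 8) -> int:
    """Estimate the synchronization thread count from the rule in the text."""
    if partitions <= 2 * unique_servers:
        wanted = partitions
    else:
        wanted = unique_servers // 2  # assumption: round down
    wanted = max(1, wanted)           # assumption: at least one thread
    # The configured maximum (eight by default) always caps the result.
    return min(wanted, configured_max)


if __name__ == "__main__":
    print(allocatable_threads(3, 4))
    print(allocatable_threads(20, 4))
    print(allocatable_threads(12, 10))
```

With 12 partitions and 10 unique servers, the rule asks for 12 threads, and the default cap reduces that to 8.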

Perhaps the most problematic issue you’re likely to encounter with database synchronization is designing correct methods for protecting against data loss or preventing unnecessary duplication of synchronization work due to communication failures. Prior to eDirectory 8.6, any type of communication failure during the replica synchronization process would cause the entire process to be restarted when communication was reestablished. With large partitions containing millions of objects, this could prove to be a very costly restart, especially if slow WAN links are involved.

eDirectory 8.6 addressed this problem by implementing incremental replication. Incremental replication allows the replica synchronization process to be interrupted and later resumed from the point of failure. To understand how the incremental replication process works, you need to first understand the following related key terms and concepts:

Window vector—The window vector, stored in the SyncWindowVector attribute (of type SYN_OCTET_STRING) on the partition root object of the receiving server, is the point in time to which the source replica is attempting to move the destination replica. For example, if the latest modification timestamp in the source replica is 2/14/2004 2:35 p.m. and the destination replica has a timestamp of 2/14/2004 1:10 p.m., the window vector in use for the synchronization process would be 2/14/2004 2:35 p.m.

Generally speaking, the window vector is equal to the source replica’s transitive vector, unless the destination replica is more than 30 days behind the source replica. In that situation, the window vector is divided into 30-day intervals.

Window pane—A window pane is a discrete unit of work. In the case of replica synchronization, a window pane represents a complete synchronization cycle. This would be the difference between the current transitive vector of the destination server and the transitive vector of the source server. In other words, the window vector represents the final point in the synchronization cycle, and the window pane represents the entire amount of work (the number of objects and attribute values that need to be sent) necessary to meet that window vector.

Distributed consistent ordering of objects—To allow incremental replication, the object synchronization process must be able to stop and then pick up again at the point where it was stopped. For fault tolerance and performance, the synchronization process must also be able to be resumed by any other server in the replica ring. This is possible only if all servers in the replica ring are synchronizing objects in the same order as every other server. Because objects can be added to any replica at any time, all servers in the replica ring must use a consistent index of objects, based on some unique value for all objects, within a partition. eDirectory uses the object creation timestamp because all creation timestamps are unique.

Synchronization point—The synchronization point is a collection of information that can be used to determine how far the synchronization process has progressed. This collection of information consists of the following types of data:

An object producer—The object producer is one of several sources or background processes that evaluate objects as candidates for the synchronization process. Examples of these producers are the Partition Send All, Change Cache, and Obituary processes.

An ordering of objects—The ordering of objects that have been produced by the object producer is based on the creation timestamps of the objects being produced.

A key—The key is the value used to determine the current synchronization location within the ordering of objects. This key is typically the creation timestamp of the objects being synchronized.

The synchronization point is stored as the SyncPanePoint attribute (of type SYN_OCTET_STRING) on the partition root object of the receiving server.

Now that you are familiar with the elements of incremental replication, let’s discuss the incremental replication process. The following is an overview of the incremental replication portion of the replica synchronization process:

1. The Replica Synchronization process begins. The transitive vector has been checked, and a replica synchronization process has been started.

2. The replication process checks for the existence of the SyncPanePoint attribute on the target server. If the SyncPanePoint attribute is found, it indicates that the receiving server was in the middle of a replica synchronization process and was interrupted. When a SyncPanePoint attribute is located, the source server reads in the value of the SyncPanePoint attribute and determines the window vector, object producer, and key for the interrupted synchronization. Using that information, the source server resumes the synchronization process that was interrupted.

If no SyncPanePoint attribute is located, the source server calculates and establishes a new window vector for the synchronization process.

NOTE

If the window vector of the interrupted synchronization is newer than the transitive vector of the source server, the source server reestablishes a window vector equal to the source server’s local transitive vector.

3. The replication process sends updates from the source server to the target server. Updates are sent as data packets across the wire. An individual packet can contain one or more object changes that need to be synchronized. To minimize data loss in the event of communication failure, each packet begins with a new SyncPanePoint attribute. The SyncPanePoint data contains the key, which indicates the present position in the synchronization process. This key provides a pointer for the last packet sent from the source server.

4. The receiving server updates its SyncPanePoint attribute for each data packet received. In the event that communication is interrupted, all data received before the last SyncPanePoint attribute will be preserved. At most, two data packets’ worth of information would be lost.

5. The receiving server removes the SyncPanePoint attribute at the end of a successful sync. When the replica update process is completed, the SyncPanePoint attribute is removed from the receiving server’s partition root object. This allows subsequent synchronization cycles to establish new window vectors.
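The five steps above can be sketched as a small simulation. This is a toy model, not the eDirectory implementation: the `Receiver` class, the `replicate` function, and the `packet_size` and `fail_after_packets` parameters are all hypothetical, and the synchronization point is reduced to the key alone (the real attribute also carries the window vector and object producer).

```python
from typing import Dict, Optional


class Receiver:
    """Toy receiving replica."""

    def __init__(self) -> None:
        self.objects: Dict[int, str] = {}            # creation timestamp -> data
        self.sync_pane_point: Optional[int] = None   # key of last applied packet


def replicate(changes: Dict[int, str], receiver: Receiver,
              packet_size: int = 2,
              fail_after_packets: Optional[int] = None) -> bool:
    """Send changes in creation-timestamp order; return True if the sync completes."""
    keys = sorted(changes)  # consistent ordering shared by all servers
    if receiver.sync_pane_point is not None:
        # Step 2: an interrupted sync is resumed after the recorded key.
        keys = [k for k in keys if k > receiver.sync_pane_point]
    sent = 0
    for i in range(0, len(keys), packet_size):
        if fail_after_packets is not None and sent >= fail_after_packets:
            return False  # simulated communication failure
        packet = keys[i:i + packet_size]
        for key in packet:
            receiver.objects[key] = changes[key]     # step 3: apply updates
        receiver.sync_pane_point = packet[-1]        # step 4: record progress
        sent += 1
    receiver.sync_pane_point = None                  # step 5: clean up
    return True


if __name__ == "__main__":
    changes = {10: "a", 20: "b", 30: "c", 40: "d", 50: "e"}
    target = Receiver()
    print(replicate(changes, target, fail_after_packets=1))  # interrupted
    print(target.sync_pane_point, sorted(target.objects))
    print(replicate(changes, target))                        # resumes and finishes
    print(target.sync_pane_point, sorted(target.objects))
```

Because every sender walks the objects in the same creation-timestamp order, the second call could come from a different source server and still resume from the same key.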

As mentioned previously, incremental replication is available only in eDirectory 8.6 and higher. Safeguards are in place to prevent loss of data where DS servers running pre-eDirectory 8.6 are unable to synchronize replicas with SyncPanePoint attributes. When these servers with the older DS attempt to synchronize with an eDirectory 8.6 or higher server, they encounter error -698 (ERR_REPLICA_IN_SKULK), indicating that the target server is currently in the middle of a replica synchronization process. The purpose of the -698 error is to allow time for another eDirectory 8.6 or higher server to synchronize with the server reporting the -698 error. When another eDirectory server that is capable of incremental replication encounters the SyncPanePoint attribute, the synchronization process will be able to pick up at the point of failure (as indicated by the window vector), and no data will be lost.

TIP

An occasional -698 error is an example of an error message that does not indicate a real problem. However, if the error occurs frequently, it can indicate a communication issue lurking in the background.

To ensure that an eDirectory server capable of incremental replication is not a requirement for future synchronization (because of the presence of the SyncPanePoint attribute after an aborted sync), the SyncPanePoint attribute is automatically purged after a two-hour timeout. After the timeout period has passed, the SyncPanePoint attribute is purged, and any data received during the incomplete synchronization cycle is lost. At that point, any DS server can begin a new replica synchronization cycle with this server because there is no more SyncPanePoint attribute present to cause a -698 error.

NDS versions prior to NDS 8 do not understand or know how to handle auxiliary classes. Consequently, NDS 8 and higher servers only send auxiliary class and auxiliary attribute information to servers running NDS 8 and above. When synchronizing to servers running previous versions, eDirectory must send the auxiliary class information in a manner that is compatible with the previous releases. Because an auxiliary class adds attributes to an object that previous versions of NDS consider illegal, NDS 8 and eDirectory servers make the following modifications to the objects with auxiliary classes before they are sent to servers with previous versions of NDS:

The AuxClass Object Class Backup attribute (of type SYN_CLASS_NAME) is added to the object, and all the information from the object’s Object Class attribute is stored in the attribute. This attribute is stored only on the pre-NDS 8 servers.

The object’s class is changed to Unknown.

The auxClassCompatibility attribute (of type SYN_CLASS_NAME) is added to the object on all replicas and is used to maintain timestamps for the object.

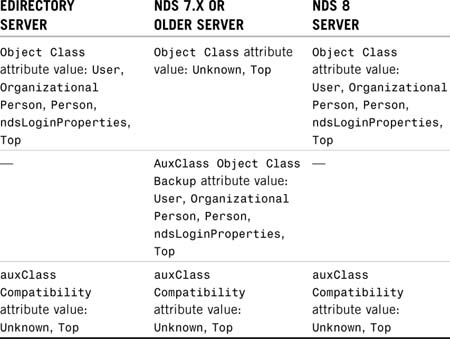

Table 6.4 demonstrates how eDirectory modifies an object’s Object Class, AuxClass Object Class Backup, and auxClassCompatibility attributes as it synchronizes to an NDS 7 or older server when an auxiliary class is present for the object.

TABLE 6.4 Auxiliary Class and Attribute Information, as Seen on Servers Running Different DS Versions

When an NDS 8/eDirectory server receives an Unknown object, it checks whether the object has an auxClassCompatibility attribute. If there is such an attribute, NDS 8/eDirectory replaces the Unknown class with information from the AuxClass Object Class Backup attribute and restores the object to normal. The auxClassCompatibility attribute is maintained on all servers in the replica ring as long as at least one NDS 7.x or older server is in the ring. When all NDS 7.x and older servers are removed from the replica ring, the attribute is removed from the object. This information is often referred to as the “Aux Class Lie.”
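The round trip can be modeled with plain dictionaries. This is a rough sketch, not Novell's code: the functions `to_legacy` and `from_legacy` are hypothetical, an object is represented as a dictionary of attribute names, and the value stored in auxClassCompatibility is a placeholder because the text does not describe its contents.

```python
from copy import deepcopy


def to_legacy(obj: dict) -> dict:
    """Prepare an object with auxiliary classes for a pre-NDS 8 server."""
    legacy = deepcopy(obj)
    # Save the real class list, then disguise the object as Unknown.
    legacy["AuxClass Object Class Backup"] = list(obj["Object Class"])
    legacy["Object Class"] = ["Unknown"]
    legacy["auxClassCompatibility"] = True  # placeholder marker value
    return legacy


def from_legacy(obj: dict) -> dict:
    """Restore an Unknown object on an NDS 8/eDirectory server."""
    restored = deepcopy(obj)
    if (restored.get("Object Class") == ["Unknown"]
            and "auxClassCompatibility" in restored
            and "AuxClass Object Class Backup" in restored):
        # Replace the Unknown class with the saved class list.
        restored["Object Class"] = restored.pop("AuxClass Object Class Backup")
    return restored


if __name__ == "__main__":
    user = {"CN": "jdoe", "Object Class": ["User", "Person", "Top", "myAuxClass"]}
    disguised = to_legacy(user)
    print(disguised["Object Class"])
    print(from_legacy(disguised)["Object Class"])
```

In this model the auxClassCompatibility marker stays on the restored object, reflecting the statement that the attribute is kept while an older server remains in the ring.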

NOTE

Because many existing applications that read NDS/eDirectory class definitions do not necessarily understand auxiliary classes, Novell modified the read class definition APIs to provide backward compatibility. All the new routines do is intercept the client responses and substitute the class information located in the Object Class attribute with the information located in the AuxClass Object Class Backup attribute. As a result, if you look at the object in DSBrowse or NDS iMonitor, the object will still show up with an Unknown class, but NetWare Administrator and ConsoleOne will now show it as a known object. You should be able to administer such objects with NetWare Administrator or ConsoleOne as if they were normal objects. Only applications that have been updated to be compatible with NDS 8 and higher can display auxiliary class definitions with an auxiliary object class flag.

You can modify the NDS schema by adding or deleting attribute definitions and object class definitions. Such changes need to be replicated among all the servers within the same tree that contain replicas. This synchronization is done through the Schema Synchronization process. This process is started within 10 seconds following completion of the schema modification operations; the 10-second delay enables several modifications to be synchronized at the same time.

NOTE

Although the Schema Sync process targets only servers hosting replicas, servers without replicas still receive schema information through the Janitor process (which is discussed later in this chapter).

NOTE

Keep in mind that base schema definitions cannot be modified. When a new attribute is added to a base class object definition, it cannot be removed.

The updates to the schema are propagated from one server to another; this is similar to the Replica Synchronization process. However, the Schema Synchronization process does not use a replica ring to determine which servers to send the schema updates to. Schema updates are sent to servers that contain either replicas of a given partition or Child partitions of the given partition.

Because schema modifications must occur on the server that is hosting the Master replica of [Root], the modifications flow from the [Root] partition down to the extreme branches of the tree.

The actual Schema Synchronization process is made up of several different processes:

Schema process—This process, which runs every four hours by default, is the main process. It schedules the execution of the following subprocesses (in the order listed). (DSTrace displays the message “Begin schema sync…” at the start of the sync and either an “All Processed = Yes” or an “All Processed = No” message at the end. If processing is successful, the next Schema process is scheduled to run again after HeartBeatSchemaInterval, which is four hours by default; otherwise, the next Schema process is scheduled to run after SchemaUpdateInterval [60 seconds] plus 1 second.)

Skulk Schema process—This process determines which servers the local server needs to synchronize to (by maintaining a server-centric schema sync list in server memory) and in what order to synchronize to them. It also ensures that the local server is in a state to successfully synchronize the schema. If the process detects that a schema epoch is in progress, DSTrace reports a -654 error (“partition busy”). A -657 error (“schema sync in progress”) will be reported if a schema reset is detected.

Send Schema Updates process—This process is the workhorse in the Schema Synchronization process. It is responsible for sending the schema changes—all deleted classes and deleted attributes—as well as the present attributes and present classes. eDirectory makes several passes through this process to ensure that all these changes are synchronized correctly. (During this phase, DSTrace reports “Sending <present or deleted> <Attributes or classes>”.)

Schema Purger process—This process is responsible for cleaning up any entry or value records that are no longer needed. (During the cleanup, DSTrace reports “Purger purged <class or attribute>; entries purged <number of values>.”)

DSA Start Update Schema process—This is the process that the receiving server goes through while another server is sending schema to it. When a server receives a request to send schema, it goes through the next two processes. (DSTrace reports “* Start inbound sync from server <senderID> version <protocol number>, epoch <epoch in seconds>:<epoch replica number>.”)

TIP

Although the Schema Synchronization process never sends schema to itself, a check is made to ensure that the sender is never the receiver. In the unlikely event that the sender is the receiver, DSTrace displays “Warning - Rejecting DSAStartUpdateSchema Client <serverID>” and reports the error –699 (“fatal”).

DSA Update Schema process—This process details what the receiving server does with each update it receives. This process is looped through over and over, as long as the sending server continues to send updates. (During this phase, DSTrace reports “DSAUpdateSchema: Processing inbound packet one at a time because of…” or “DSAUpdateSchema: Packet with <number of updates> updates,” depending on the information found inside the data packets.)

DSA End Update Schema process—This process signals the end of the update. The receiving server goes through the DSA End Update Schema process when it receives a DSAEndUpdateSchema request from the sending server. (Upon completion of the cycle, DSTrace reports “* End inbound sync from server <serverID>, Version <version>, Epoch <epoch in seconds>:<epoch replica number>.”)
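The rescheduling rule described for the main Schema process in the list above reduces to a two-way choice. The sketch below uses the default values given in the text; the constant and function names are illustrative rather than actual eDirectory settings.

```python
HEARTBEAT_SCHEMA_INTERVAL = 4 * 60 * 60  # four hours, in seconds (default)
SCHEMA_UPDATE_INTERVAL = 60              # seconds (default)


def next_schema_run_delay(all_processed: bool) -> int:
    """Seconds until the next Schema process run.

    all_processed mirrors the "All Processed = Yes/No" DSTrace message.
    """
    if all_processed:
        return HEARTBEAT_SCHEMA_INTERVAL
    return SCHEMA_UPDATE_INTERVAL + 1


if __name__ == "__main__":
    print(next_schema_run_delay(True))
    print(next_schema_run_delay(False))
```

A successful pass therefore waits 14,400 seconds before the next run, and an unsuccessful one retries after 61 seconds.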

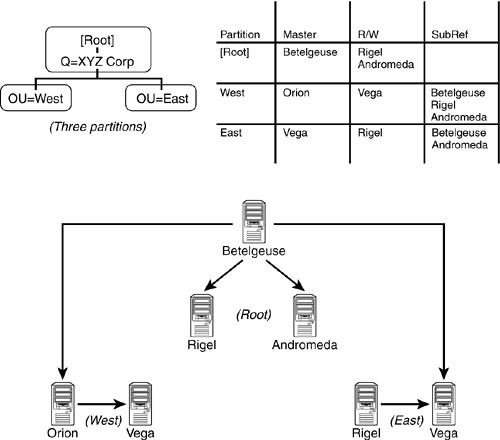

The detailed operation of the Schema Synchronization process is rather involved. The following simple example serves to illustrate how the Schema Synchronization process works from a high-level point of view. Figure 6.8 depicts a small tree with five servers and three partitions.

A schema change is made to Betelgeuse because it holds the Master replica of the [Root] partition. After this server has been updated, this server sends the schema changes out to the other servers that hold copies of [Root]: Rigel and Andromeda. After all servers in the [Root] partition have received the updates, DS sends the updates to the other servers in the tree. It does this by looking at the servers that hold copies of [Root] and reading the replica list information to find out what other replicas are out there; then it builds a schema sync list. Each server’s schema sync list may be different, depending on what replicas it hosts.

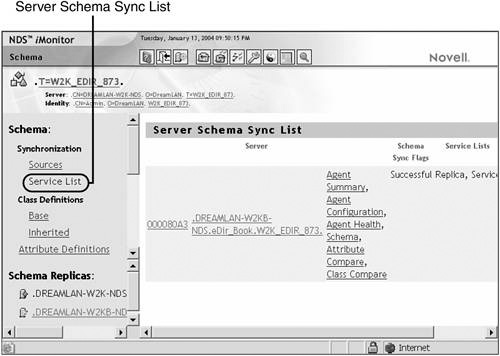

You can look up a server’s schema sync list by using either DSTrace or NDS iMonitor. It is easiest to use NDS iMonitor, as shown in Figure 6.9; it is found under Service List under the Schema link.

To use DSTrace, you first enable the DSTrace filter with the +SCHEMA flag on NetWare or the +SCMA flag on Unix, and then you use the set dstrace=*ssl DSTrace option. The output looks similar to this:

SchemaSyncList:

--->>> [000080a3] <.DREAMLAN-W2KB-NDS.eDir_Book.W2K_EDIR_873.>

Flags: 0001 Lists: 0005 Expiration: 2004/01/12 6:11:21

List(s): [0005] Replica Service

Inbound schema synchronization lock status: Released

resetSuccessfulSync = 0 in GetServersInSchemaSyncList

On Windows, you need to enable DSTrace’s Schema Details from the DSTrace Edit menu and then trigger the Schema Sync process from the Trace tab of the DSA window.

By looking at the replica list on Rigel, for example, DS can determine that there are two child partitions—OU=West.O=XYZCorp and OU=East.O=XYZCorp. The replica list on Rigel also indicates what other servers are in the tree. DS determines that the servers Vega and Orion also need to be updated. During this determination, note that Vega and Rigel are listed twice because of the replication scheme in this tree; even though Rigel receives an update in the first round of schema synchronization, after Vega receives the updates to the schema, Rigel is again checked to see whether its schema is current. If the schema is not current, it is updated.

TIP

Schema updates are normally not something to be concerned about unless the change is being made because of an update in the DS module. In cases where Novell has introduced a schema change in a new version of the DS module, you should first update the module on the server that holds the Master replica of [Root]—because that is where schema modification takes place—and then update the rest of your servers after the schema update has completed.

As discussed earlier in this chapter, schema changes are synchronized from the root of the DS tree down to its branches. Because a tree can have NDS 8 servers near the root, with NetWare 6 or 4.2 servers in the middle, and an eDirectory 8.7 server below them, eDirectory must be able to send schema information about auxiliary classes in a manner that is compatible with legacy versions of NDS. It must do so with sufficient clues that an eDirectory server can re-create an auxiliary class from the information. To accomplish this, when synchronizing schema with a server running NDS 7 or older, eDirectory makes the following changes to the three auxiliary class characteristics to make them compatible with previous versions of NDS:

Auxiliary class flag—NDS 8 introduced this object class flag to indicate which classes are auxiliary classes. Because pre-NDS 8 versions do not recognize this flag, eDirectory servers send auxiliary class definitions as standard class definitions with one additional attribute, the Auxiliary Class Flag attribute, which contains the auxiliary class flag information. When an eDirectory server receives a class definition with this attribute, it removes the attribute from the class definition and re-creates an auxiliary class from the class definition.

Superclasses—Prior to NDS 8, NDS required every class to have a superclass. To make auxiliary classes compatible with these rules, NDS 8 and higher servers send Top as the superclass of any auxiliary class that has declared no superclass. When an eDirectory server receives a class definition with the Auxiliary Class Flag attribute and with Top as its superclass, the server removes Top as its superclass.

Object Class Attribute—In versions of NDS prior to NDS 8, the Object Class attribute is a Read-Only attribute. When NDS 8 or higher servers send the definition of this attribute to servers with previous versions of NDS, the source servers include the Read-Only constraint. When eDirectory servers receive the definition for this attribute from a server with previous versions of NDS, the Read-Only constraint is removed from the definition.
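The first two conversions described above can be sketched in a few lines of Python. This is only an illustration of the round trip, not eDirectory's actual code: the `ClassDef` structure and the `to_legacy` and `from_legacy` function names are hypothetical, and the Auxiliary Class Flag attribute is modeled simply as present or absent rather than as carrying flag data. The Read-Only constraint on Object Class is not modeled.

```python
from dataclasses import dataclass, replace

AUX_FLAG_ATTR = "Auxiliary Class Flag"  # attribute name as given in the text


@dataclass(frozen=True)
class ClassDef:
    """Hypothetical, simplified schema class definition."""
    name: str
    is_auxiliary: bool = False
    superclasses: tuple = ()
    attributes: frozenset = frozenset()


def to_legacy(cls_def):
    """Rewrite an auxiliary class so a pre-NDS 8 server can store it."""
    if not cls_def.is_auxiliary:
        return cls_def
    # Older versions require a superclass, so send Top if none is declared.
    supers = cls_def.superclasses or ("Top",)
    return replace(
        cls_def,
        is_auxiliary=False,                       # sent as a standard class
        superclasses=supers,
        attributes=cls_def.attributes | {AUX_FLAG_ATTR},
    )


def from_legacy(cls_def):
    """Re-create an auxiliary class from a definition carrying the marker."""
    if AUX_FLAG_ATTR not in cls_def.attributes:
        return cls_def
    # Top was only a placeholder, so drop it along with the marker attribute.
    supers = () if cls_def.superclasses == ("Top",) else cls_def.superclasses
    return replace(
        cls_def,
        is_auxiliary=True,
        superclasses=supers,
        attributes=cls_def.attributes - {AUX_FLAG_ATTR},
    )
```

Passing an auxiliary class through `to_legacy` and then `from_legacy` returns the original definition, which is the property the marker attribute exists to guarantee.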

The NDS Janitor process is responsible for a number of different tasks, including the following:

Scheduling the Flat Cleaner process.

Issuing console messages when synthetic time is issued (on NetWare servers only).

Optimizing the local DS database.

Checking whether the partition root object has been renamed.

Updating and verifying the Inherited ACL attributes of partition root objects.

Updating the Status attribute in the DS database for the local server.

Ensuring that the local server is registered with another server to receive schema updates if there is no local replica.

Validating the partition nearest [Root] on the server and the replica depth of that partition.

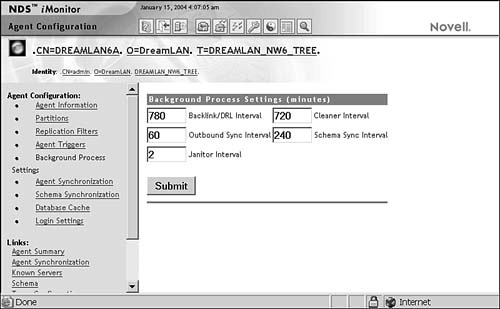

The Janitor process has responsibility for some fairly critical tasks. By default, the Janitor process runs every two minutes, although it doesn’t perform every task in its list each time it runs. (For example, it schedules the Flat Cleaner process only once every 60 minutes.)
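The relationship between the two default intervals can be shown with a small simulation. It is a sketch under stated assumptions: the `janitor_runs` function and the task labels are made up for illustration, and it assumes the Flat Cleaner is scheduled on the first Janitor run, which the text does not specify.

```python
JANITOR_INTERVAL_MIN = 2        # default Janitor interval from the text
FLAT_CLEANER_INTERVAL_MIN = 60  # default Flat Cleaner interval from the text


def janitor_runs(total_minutes):
    """Return (minute, tasks) for each Janitor run in the given window."""
    runs = []
    last_flat_cleaner = None
    for minute in range(0, total_minutes + 1, JANITOR_INTERVAL_MIN):
        tasks = ["routine checks"]
        due = (last_flat_cleaner is None
               or minute - last_flat_cleaner >= FLAT_CLEANER_INTERVAL_MIN)
        if due:
            # Only this run hands work to the Flat Cleaner.
            tasks.append("schedule Flat Cleaner")
            last_flat_cleaner = minute
        runs.append((minute, tasks))
    return runs
```

Over a two-hour window, the Janitor runs 61 times in this model, but only three of those runs schedule the Flat Cleaner.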

DS uses synthetic time to manage situations where the current timestamp on an object is later than the current time. The Janitor process checks the timestamps on the objects in the server; the same check takes place whenever a new timestamp is needed for an object. If an object in the server’s replicas has a timestamp greater than the current server time, the Janitor process notifies the operating system, and a message is generated on NetWare’s system console:

1-02-2004 6:33:58 pm: DS-8.99-12

Synthetic Time is being issued on partition "NW7TEST."

Chapter 2, “eDirectory Basics,” discusses the importance of time synchronization in regard to event timestamping, but it does not discuss the timestamp itself in detail. A timestamp consists of three fields: the time and date when the timestamp was issued (more specifically, the number of seconds since midnight January 1, 1970), the replica number, and an event counter. The event counter is incremented every time a timestamp is issued until one second has advanced or 65,535 (64K minus 1) events have been issued. The following sample timestamp indicates that the server holding Replica 2 issued this timestamp on October 10, 2004, at 04:23:18, and it was for the 34th event within that second:

10/10/2004 04:23:18 2;34
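To make the three fields concrete, the following sketch splits the display form shown above into its parts. The `Timestamp` type and `parse_timestamp` function are hypothetical helpers, not part of any Novell tool, and the sketch assumes the displayed time is UTC and that the date is in month/day/year order.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class Timestamp(NamedTuple):
    seconds: int   # seconds since midnight January 1, 1970
    replica: int   # replica number of the issuing server
    event: int     # event counter within that second


def parse_timestamp(text):
    """Parse 'MM/DD/YYYY HH:MM:SS replica;event' into its three fields."""
    date_part, time_part, tail = text.split()
    replica, event = tail.split(";")
    when = datetime.strptime(f"{date_part} {time_part}", "%m/%d/%Y %H:%M:%S")
    # Assumption: the displayed time is UTC.
    seconds = int(when.replace(tzinfo=timezone.utc).timestamp())
    return Timestamp(seconds, int(replica), int(event))


sample = parse_timestamp("10/10/2004 04:23:18 2;34")
```

Here `sample.replica` is 2 and `sample.event` is 34, matching the description of the sample timestamp.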

DS uses two types of timestamps to keep track of changes in the database:

Creation timestamp—This timestamp is issued when an object is created. A creation timestamp is used to identify an object; therefore, no two sibling objects (that is, objects in the same context) can have the same creation timestamp.

Modification timestamp—This timestamp is issued whenever an attribute is added to, modified, or removed from an object. Every attribute has a modification timestamp that denotes the date and time the attribute was created or last modified (but not when the attribute was removed).

When a timestamp (either a creation or modification timestamp) is issued, the Next Timestamp field (also known as the Partition Timestamp field) in the partition record representing the partition in which this modified object resides is updated. The value placed in the Next Timestamp field is equivalent to the timestamp just issued, but the event counter is incremented by one. This allows DS to identify the minimum value for the next timestamp to be issued.

When a new timestamp is needed, the server obtains the next timestamp based on the partition timestamp of the partition in which the object is being modified. The server also obtains the current time from the operating system. The server then performs one of the following tasks:

If the time obtained from the operating system is higher than the Next Timestamp value (that is, if it is later in time), the server resets the event counter back to 1 and issues a new timestamp, using the time provided by the operating system, its replica number, and the new event counter.

If the time obtained from the operating system is equal to the Next Timestamp value, the server uses the value from the Next Timestamp field.

If the time obtained from the operating system is less than the Next Timestamp value (that is, if the Next Timestamp value is in the future compared to the operating system’s time), the server uses the Next Timestamp value and displays on the operating system console that it is using “synthetic time.”
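The three cases above amount to a short decision procedure, sketched here in Python. This is an illustration rather than the DS implementation: the function names are hypothetical, the Next Timestamp value is reduced to a `(seconds, event)` pair, and the comparison with the operating system time is assumed to be made on the seconds field only.

```python
MAX_EVENT = 65_535  # largest event counter value per the text


def successor(seconds, event):
    """Next Timestamp value: same second, counter plus one, with rollover."""
    if event >= MAX_EVENT:
        return seconds + 1, 1   # counter exhausted, move to the next second
    return seconds, event + 1


def issue_timestamp(os_seconds, next_ts, replica):
    """Return (issued timestamp, new Next Timestamp, synthetic flag)."""
    next_seconds, next_event = next_ts
    if os_seconds > next_seconds:
        # Clock is ahead of the partition: start a fresh second at event 1.
        seconds, event, synthetic = os_seconds, 1, False
    elif os_seconds == next_seconds:
        # Same second: continue from the stored counter.
        seconds, event, synthetic = next_seconds, next_event, False
    else:
        # Clock is behind the partition: issue synthetic time.
        seconds, event, synthetic = next_seconds, next_event, True
    issued = (seconds, replica, event)
    return issued, successor(seconds, event), synthetic
```

For example, `issue_timestamp(100, (500, 65535), replica=2)` issues `(500, 2, 65535)` with the synthetic flag set and advances the Next Timestamp value to `(501, 1)`.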

When synthetic time is used, the partition timestamp is frozen, and the only thing that changes is the event count portion of the timestamp. Because every change that occurs requires a unique timestamp, the event counter is incremented from 1 to 65,535 as the server issues timestamps. When the event counter reaches its maximum allowed value, the counter is reset to 1, the next second is used, and the process repeats until the partition timestamp catches up with the current system time.

The issuing of synthetic time is not always a critical problem. If a server’s time was set back by anywhere from a few hours to a few days, it is not necessary to correct the problem; waiting for the partition timestamp to catch up is a better solution than intervening. Using DSRepair to repair timestamps is a serious step because the fix actually destroys the replicas on all servers except the server with the Master replica and then re-creates them. See Chapter 12, “eDirectory Management Tools,” for information about resolving synthetic time errors.

One of the Janitor process optimization steps is the rehashing of the database information to enable the server to perform lookups more quickly.

If the Janitor process detects that the name of the partition root object has changed, it notifies all servers holding external references to this object of the new name.

Updating the Inherited ACL attribute values starts with the first partition in the partition database. After the Janitor process has located the partition, it validates that the parent object is not an external reference and looks at the ACL to determine whether any of the attribute values have been modified. If they have, it validates whether the attribute is inheritable, and if it is, it recalculates the Inherited ACL attribute values. The Janitor process performs this process for all the partitions on the server.

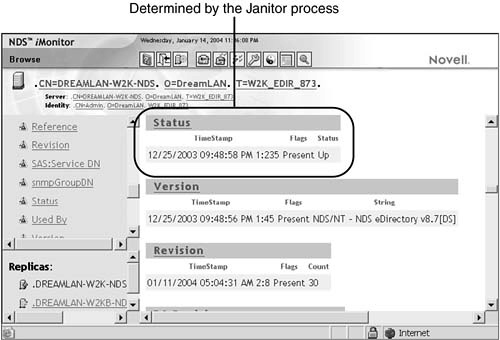

Updating the Status attribute involves validating that the DS attribute Status of the NCP Server object is set to Up. Because the server that performs the validation is up and running, this server always checks for an Up value. If it is set to Down, the Janitor process updates the attribute. Figure 6.10 shows where in NDS iMonitor you can see the result of this operation. To reach this screen, you select Agent Summary, Known Servers; click the server of interest; and select Status.

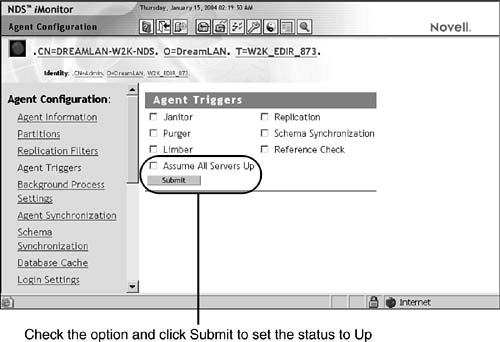

When an NCP Server object’s Status attribute is set to Down, the synchronization process does not attempt to communicate with that server. Sometimes when a server is brought back online, its Status attribute value of Up might not be noticed by the other servers in the replica ring right away. You can manually force the status to Up by using NDS iMonitor, as shown in Figure 6.11: Click the Agent Configuration link and then select the Agent Triggers link.

The Janitor process’s role in ensuring that the server can receive schema updates if it holds no replicas is particularly important. Even if a server has no local replicas, it still receives information for external references (such as those used to grant rights in the file system). In order to handle this properly, the server needs to know about all the different class definitions in case an extended class object receives rights to the file system. Equally important is the need for the schema partition to be maintained in case a new replica is added to the server later. If the server does not have current information about the schema and a replica is added to the server, many objects will change to Unknown objects in the local database, which can cause problems with object administration if those copies of the objects are read by the various management tools.

Finally, the Janitor process is also responsible for updating the Revision attribute of external references when the attribute value on the referenced object is changed.

The Flat Cleaner process is scheduled by the Janitor process and runs every 60 minutes by default. Responsibilities of the Flat Cleaner process include the following:

Purging unused objects and attributes stored in the bindery partition or external reference partition

Purging obituaries that have reached the Purgeable state

Revalidating the Status and Version attributes of servers in all partitions of which the server has the Master replica

Verifying that all objects in the user-defined partitions on the server have valid public keys and Certificate Authority (CA) public keys.

NOTE

Because the Flat Cleaner process performs much of the purging of deleted records, it is also known as the Replica Purger process or simply the Purger process.

As described in Chapter 2, the bindery partition is the partition that is used to store information about the bindery user Supervisor. This partition also stores the SAP information that is received from IPX networks connected to the server. If a service is removed from the network, the SAP table in the server needs to be updated; this is one of the tasks the Flat Cleaner process is responsible for.

Obituaries that have reached the Purgeable state need to be removed from the database, and the Flat Cleaner takes care of this. Essentially, the Flat Cleaner process removes any object or attribute flagged as Non Present.

The Flat Cleaner process is also responsible for validating the Up state of all servers that hold Master replicas. As discussed earlier in this chapter, the Janitor process is responsible for setting the server Status attribute to Up. The Flat Cleaner process is responsible for setting the Status attribute to Down as necessary if it finds that it cannot communicate with the server.

To understand the process better, let’s consider a simple example where there are two servers, Orion and Rigel. Orion holds the Master copy of [Root], the only partition in the tree. If Rigel is offline when Orion’s Flat Cleaner process runs, Orion sets the Status attribute for Rigel to Down. When Rigel is brought back online, it runs its Janitor process, checks the Status attribute, and sees that it is set to Down. Because the server is no longer down, Rigel changes the Status attribute to Up.
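The Orion and Rigel example can be replayed as a small simulation. This is a sketch only: the `Server` class and the `flat_cleaner` and `janitor` functions are hypothetical stand-ins for the real processes, and a single shared dictionary stands in for the replicated Status attribute, so synchronization delays between servers are ignored.

```python
class Server:
    """Hypothetical stand-in for a server in the replica ring."""

    def __init__(self, name, online=True):
        self.name = name
        self.online = online


def flat_cleaner(master, ring, status):
    """Run on the Master replica holder: mark unreachable servers Down."""
    for server in ring:
        if server is not master and not server.online:
            status[server.name] = "Down"


def janitor(server, status):
    """Run locally: a running server sets its own Status back to Up."""
    if server.online and status.get(server.name) != "Up":
        status[server.name] = "Up"


orion, rigel = Server("Orion"), Server("Rigel", online=False)
status = {"Orion": "Up", "Rigel": "Up"}  # simplified Status attribute values

flat_cleaner(orion, [orion, rigel], status)  # Rigel is offline
after_cleaner = dict(status)                 # snapshot: Rigel is Down

rigel.online = True                          # Rigel comes back online
janitor(rigel, status)                       # Rigel resets itself to Up
```

After the Flat Cleaner pass, the snapshot shows Rigel as Down; once Rigel is back online and its own Janitor process runs, the value returns to Up. The division of labor is the point: only the server itself can assert that it is up, while the Master replica holder records that it cannot be reached.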