Chapter 1

Secure Software Concepts

1.1 Introduction

Ask any architect and they are likely to agree with renowned author Thomas Hemerken on his famous quote, “the loftier the building, the deeper the foundation must be laid.” For superstructures to withstand the adversarial onslaught of natural forces, they must stand on a very solid and strong foundation. Hack-resilient software is one that reduces the likelihood of a successful attack and mitigates the extent of damage if an attack occurs. In order for software to be secure and hack resilient, it must factor in secure software concepts. These concepts are foundational and should be considered for incorporation into the design, development, and deployment of secure software.

1.2 Objectives

As a Certified Secure Software Lifecycle Professional (CSSLP), you are expected to:

- Understand the concepts and elements of what constitutes secure software.

- Be familiar with the principles of risk management as they pertain to software development.

- Know how to apply information security concepts to software development.

- Know the various design aspects that need to be taken into consideration to architect hack-resilient software.

- Understand how policies, standards, methodologies, frameworks, and best practices interplay in the development of secure software.

- Be familiar with regulatory, privacy, and compliance requirements for software and the potential repercussions of noncompliance.

- Understand security models and how they can be used to architect hacker-proof software.

- Know what trusted computing is and be familiar with mechanisms and related concepts of trusted computing.

- Understand security issues that need to be considered when purchasing or acquiring software.

This chapter will cover each of these objectives in detail. It is imperative that you fully understand not just what these secure software concepts are but also how to apply them in the software that your organization builds or buys.

1.3 Holistic Security

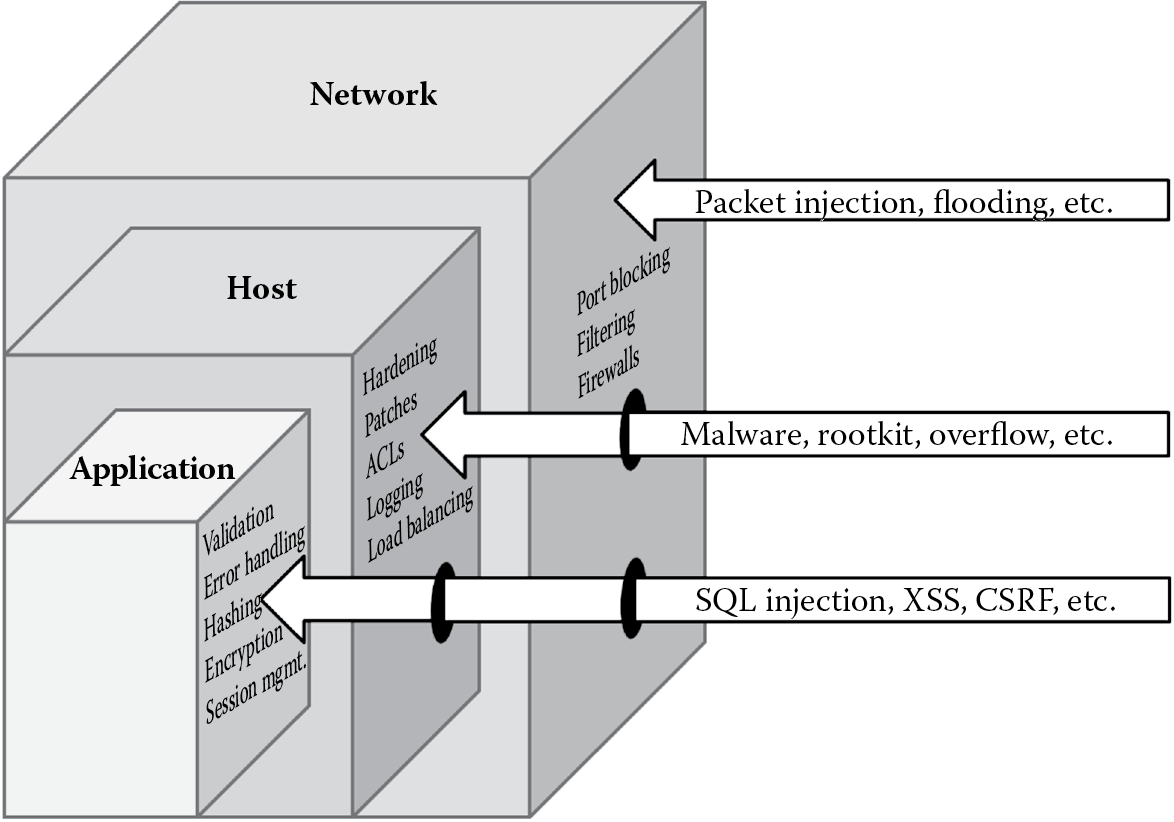

A few years ago, security was about keeping the bad guys out of your network. Network security relied extensively on perimeter defenses such as firewalls, demilitarized zones (DMZs), and bastion hosts to protect the applications and data within the organization’s network. These perimeter defenses are absolutely necessary and critical, but with globalization and the changing way we do business today, where we must allow access to our internal systems and applications, the boundaries that demarcated our internal systems and applications from the external ones are slowly thinning and vanishing. This warrants that the hosts (systems) on which our software runs are even more closely guarded and secured. Opening our networks and securely allowing access now requires that our applications (software) be hardened, in addition to the network or perimeter security controls. The need is for secure applications running on secure hosts (systems) in secure networks.

The need is for holistic security, which is the first and foremost software security concept that one must be familiar with. It is pivotal to recognize that software is only as secure as its weakest link. Today, software is rarely deployed as a stand-alone business application. It is often complex, running on host systems that are interconnected to several other systems on a network. A weakness (vulnerability) in any one of the layers may render all controls (safeguards and countermeasures) futile. The application, host, and network must all be secured adequately and appropriately. For example, a Structured Query Language (SQL) injection vulnerability in the application can allow an attacker to compromise the database server (host) and, from the host, launch exploits that can impact the entire network. Similarly, an open port on the network can lead to the discovery and exploitation of unpatched host systems and vulnerabilities in applications.

Secure software is characterized by the securing of applications, hosts, and networks holistically, so there is no weak link, i.e., no Achilles’ heel (Figure 1.1).
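
To make the SQL injection example concrete, the following is a minimal sketch in Python using the standard `sqlite3` module. The `users` table, the function names, and the payload are hypothetical and chosen only for illustration; the point is the contrast between concatenating input into the query text and binding it as a parameter.

```python
import sqlite3

def find_user_unsafe(conn, username):
    # Vulnerable: user input is concatenated directly into the SQL text.
    query = "SELECT id, username FROM users WHERE username = '" + username + "'"
    return conn.execute(query).fetchall()

def find_user_safe(conn, username):
    # Safer: the value is bound as a parameter and treated as data, not SQL.
    return conn.execute(
        "SELECT id, username FROM users WHERE username = ?", (username,)
    ).fetchall()

# Hypothetical in-memory database used only for this demonstration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
conn.executemany("INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)])

payload = "nobody' OR '1'='1"
unsafe_rows = find_user_unsafe(conn, payload)  # the injected OR clause matches every row
safe_rows = find_user_safe(conn, payload)      # no user has this literal name
```

Parameter binding addresses only the application layer; the host and network layers still need their own controls, which is the essence of holistic security.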

1.4 Implementation Challenges

Despite the recognition of the fact that the security of networks, systems, and software is critical for the operations and sustainability of an organization or business, the computing ecosystem today seems to be plagued with a plethora of insecure networks and systems and more particularly insecure software. In today’s environment where software is rife with vulnerabilities, as is evident in full disclosure lists, bug tracking databases, and hacking incident reports, software security cannot be overlooked, but it is. Some of the primary reasons why there is a prevalence of insecure software may be attributed to the following:

- Iron triangle constraints

- Security as an afterthought

- Security versus usability

1.4.1 Iron Triangle Constraints

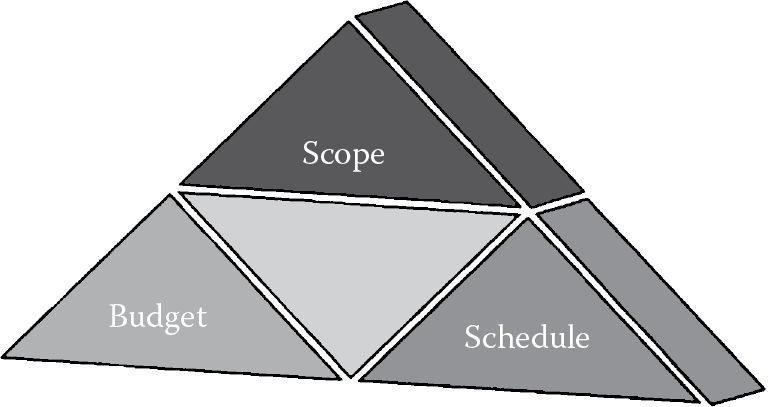

From the time an idea to solve a business problem using a software solution is born to the time that solution is designed, developed, and deployed, there is a need for time (schedule), resources (scope), and cost (budget). Resources (people) with appropriate skills and technical knowledge are not always readily available and are costly. The defender is expected to play vigilante 24/7, guarding against all attacks while being constrained to play by the rules of engagement, whereas the attacker has the upper hand because the attacker needs to be able to exploit just one weakness and can strike anytime without the need to have to play by the rules. Additionally, depending on your business model or type of organization, software development can involve many stakeholders. To say the least, software development in and of itself is a resource-, schedule- (time-), and budget-intensive process. Adding the need to incorporate security into the software is seen as having the need to do “more” with what is already deemed “less” or “insufficient”. Constraints in schedule, scope, and budget (the components of the iron triangle as shown in Figure 1.2) are often the reasons why security requirements are left out of software. If the software development project’s scope, schedule (time), and budget are very rigidly defined (as is often the case), it gives little to no room to incorporate even the basic, let alone additional, security requirements, and unfortunately what is typically overlooked are elements of software security.

1.4.2 Security as an Afterthought

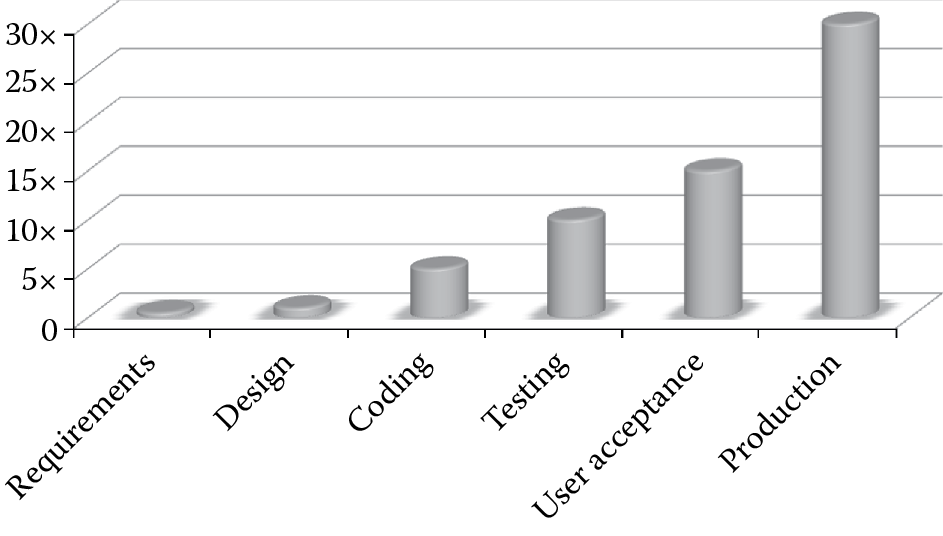

Developers and management tend to think that security does not add any business value because it is not very easy to show a one-to-one return on security investment (ROSI). Iron triangle constraints often lead to add-on security, wherein secure features are bolted on and not built into the software. It is important that secure features are built into the software, instead of being added on at a later stage, because it has been proven that the cost to fix insecure software earlier in the software development life cycle (SDLC) is significantly lower when compared to having the same issue addressed at a later stage, as illustrated in Figure 1.3. Addressing vulnerabilities just before a product is released is very expensive.

1.4.3 Security versus Usability

Another reason why it is a challenge to incorporate secure features in software is that doing so is viewed as making the software very complex, restrictive, and unusable. For example, the human resources organization needs to be able to view payroll data of employees and the software development team has been asked to develop an intranet Web application that the human resources personnel can access. When the software development team consults with the security consultant, the security consultant recommends that such access should be granted to only those who are authenticated and authorized and that all access requests must be logged for review purposes. The security consultant also advises the software team to ensure that the authentication mechanism uses passwords that are at least 15 characters long, require upper- and lowercase characters, and have a mix of alphanumeric and special characters, which need to be reset every 30 days. Once designed and developed, this software is deployed for use by the human resources organization. It is quickly apparent that the human resources personnel are writing their complex passwords on sticky notes and leaving them in insecure locations such as their desk drawers or in some cases even on their system monitors. They are also complaining that the software is not usable because it takes a lot of time for each access request to be processed, because all access requests are not only checked for authorization but also audited (logged). The incorporation of security can come at the cost of performance and usability, and this is especially true if the software design does not factor in the concept known as psychological acceptability. Software security must be balanced with usability and performance. We will be covering “psychological acceptability” in detail along with many other design concepts in Chapter 3.

1.5 Quality and Security

In a world that is driven by the need for and assurance of quality products, it is important to recognize that there is a distinction between quality and security, particularly as they apply to software products. Almost all software products go through a quality assurance (or testing) phase before being released or deployed, wherein the functionality of the software, as required by the business client or customer, is validated and verified. Quality assurance checks are indicative of the fact that the software is reliable (functioning as designed) and that it is functional (meets the requirements as specified by the business owner). Following Total Quality Management (TQM) processes like the Six Sigma (6σ) or certifying software with International Organization for Standardization (ISO) quality standards are important in creating good quality software and achieving a competitive edge in the marketplace, but it is important to realize that such quality validation and certifications do not necessarily mean that the software product is secure. A software product that is secure will add to the quality of that software, but the inverse is not always necessarily true.

It is also important to recognize that the presence of security functionality in software may allow it to support quality certification standards, but it does not necessarily imply that the software is secure. Vendors often tout the presence of security functionality in their products in order to differentiate themselves from their competitors, and while this may be true, it must be understood that the mere presence of security functionality in the vendor’s software does not make it secure. This is because security functionality may not be configured to work in your operating environment, or when it is, it may be implemented incorrectly. For example, software that has the functionality to turn on logging of all critical and administrative transactions may be certified as a quality secure product, but unless the option to log these transactions is turned on within your computing environment, it has added nothing to your security posture. It is therefore extremely important that you verify the claims of the vendors within your computing environment and address any concerns you may come across before purchase. In other words, trust, but always verify. This is vital when evaluating software whether you are purchasing it or building it in-house.

1.6 Security Profile: What Makes a Software Secure?

As mentioned, in order to develop hack-resilient software, it is important to incorporate security concepts in the requirements, design, code, release, and disposal phases of the SDLC.

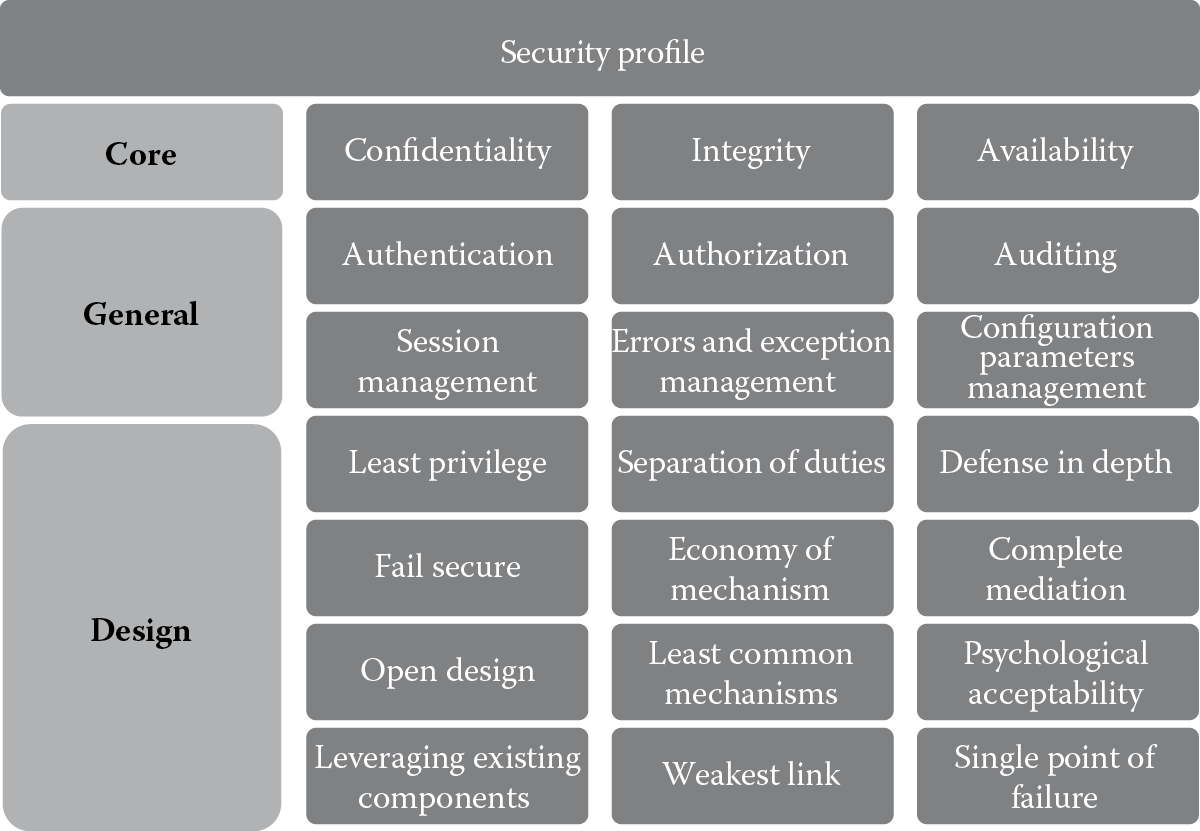

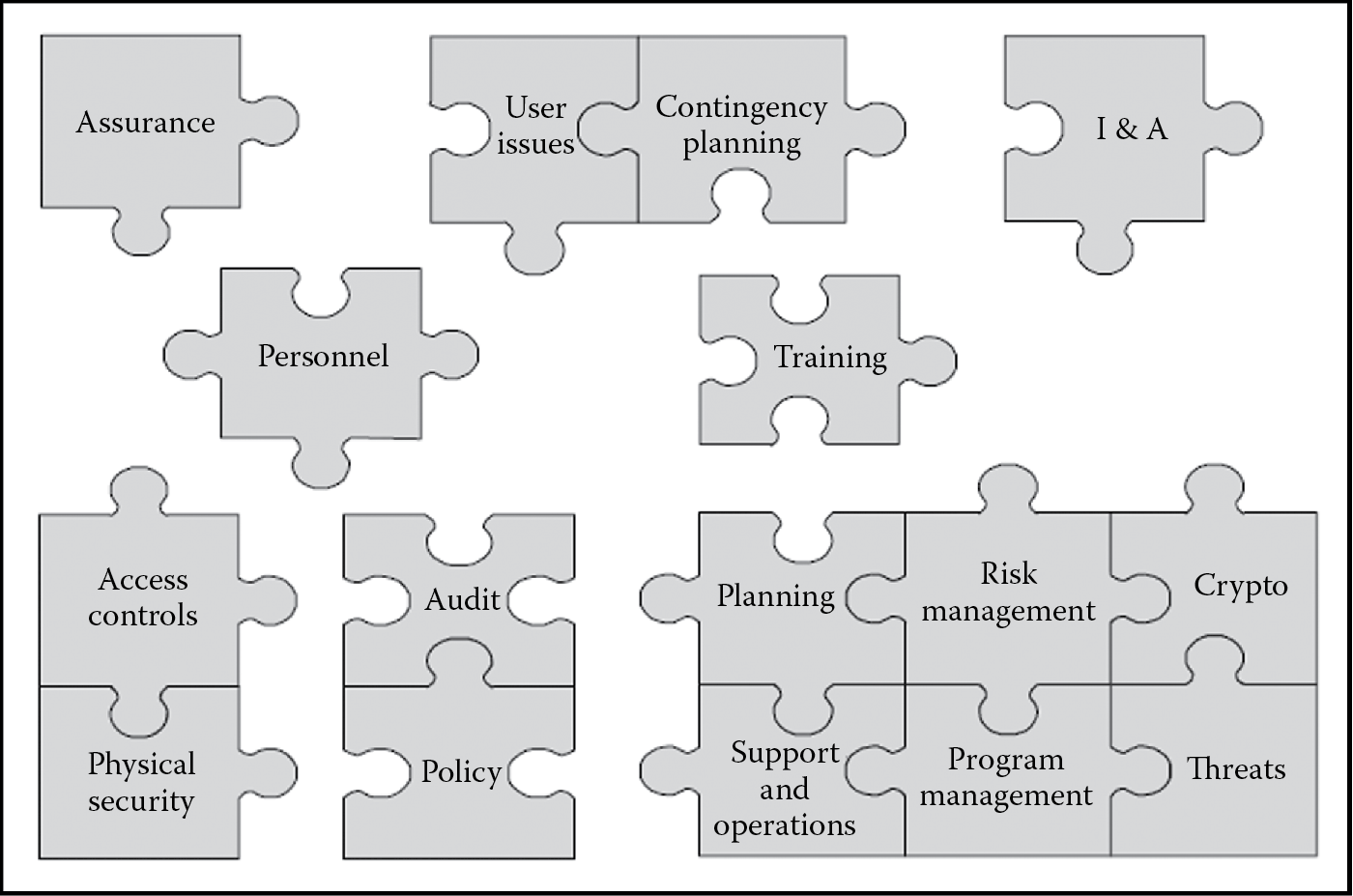

The makeup of your software from a security perspective is the security profile of your software, and it includes the incorporation of these concepts in the SDLC. As Figure 1.4 illustrates, some of these concepts can be classified as core security concepts, whereas others are general or design security concepts. However, these security concepts are essential building blocks for secure software development. In other words, they are the bare necessities that need to be addressed and cannot be ignored.

This section will cover these security concepts at an introductory level. They will be expanded in subsequent sections within the scope of each domain.

1.6.1 Core Security Concepts

1.6.1.1 Confidentiality

Prevalent in the industry today are serious incidents of identity theft and data breaches that can be directly tied to the lack or insufficiency of information disclosure protection mechanisms. When you log into your personal bank account, you expect to see only your information and not anyone else’s. Similarly, you expect your personal information not to be available to anyone who requests it. Confidentiality is the security concept that has to do with protection against unauthorized information disclosure. It has to do with the viewing of data. Not only does confidentiality assure the secrecy of data, but it also can help in maintaining data privacy.

1.6.1.2 Integrity

Software that is reliable, or in other words performs as intended and protects against improper data alteration, is also known as resilient software. Integrity is the measure of software resiliency, and it pertains to the alteration or modification of data and the reliable functioning of software.

When you use an online bill payment system to pay your utility bill, you expect that upon initiating a transfer of payment from your bank to the utility service provider, the amount that you have authorized to transfer is exactly the same amount that is debited from your account and credited into the service provider’s account. Not only do you expect the software that handles this transaction to work as intended, but you also expect that the amount you specified for the transaction is not altered by anyone or anything else. The software must debit from the account you specify (and not any other account) and credit into a valid account that is owned by the service provider (and not by anyone else). If you have authorized a payment of $129.00, the amount debited from your account must be exactly $129.00 and the amount credited in the service provider’s account must not be $12.90 or $1290.00, but $129.00 as well. From the time the data transaction commences until the time the data come to rest or are destroyed, they must not be altered by anyone or any process that is not authorized.

So integrity of software has two aspects to it. First, it must ensure that the data that are transmitted, processed, and stored are as accurate as the originator intended and second, it must ensure that the software performs reliably.
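
One common way to detect unauthorized alteration of transaction data is a keyed message authentication code. The sketch below uses Python's standard `hmac` and `hashlib` modules; the key, the account identifiers, and the message layout are assumptions made for illustration and are not prescribed by the text.

```python
import hashlib
import hmac

# Hypothetical shared key; a real system would obtain it from a protected store.
SECRET_KEY = b"demo-key-not-for-production"

def sign_payment(from_account, to_account, amount_cents):
    # Bind the tag to every field that must not change in transit.
    message = f"{from_account}|{to_account}|{amount_cents}".encode()
    return hmac.new(SECRET_KEY, message, hashlib.sha256).hexdigest()

def verify_payment(from_account, to_account, amount_cents, tag):
    expected = sign_payment(from_account, to_account, amount_cents)
    # compare_digest avoids leaking information through comparison timing.
    return hmac.compare_digest(expected, tag)

# The payer authorizes $129.00, expressed in cents to avoid rounding issues.
tag = sign_payment("ACCT-1", "UTIL-9", 12900)
unaltered_ok = verify_payment("ACCT-1", "UTIL-9", 12900, tag)
altered_ok = verify_payment("ACCT-1", "UTIL-9", 129000, tag)
```

A changed amount or a changed destination account produces a different tag, so the receiver can reject the altered transaction.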

1.6.1.3 Availability

Availability is the security concept that is related to the access of the software or the data or information it handles. Although the overall purpose of a business continuity program (BCP) may be to ensure that downtime is minimized and that the impact of a business disruption is minimal, availability as a concept is not merely a business continuity concept but a software security concept as well. Access must take into account the “who” and “when” aspects of availability. First, the software or the data it processes must be accessible by only those who are authorized (who) and, second, it must be accessible only at the time (when) that it is required. Data must not be available to the wrong people or at the wrong time.

A service level agreement (SLA) is an example of an instrument that can be used to explicitly state and govern availability requirements for business partners and clients. Load balancing and replication are mechanisms that can be used to ensure availability. Software can also be developed with monitoring and alerting functionality that can detect disruptions and notify appropriate personnel to minimize downtime, once again ensuring availability.

1.6.2 General Security Concepts

In this section, we will cover general security concepts that aim at mitigating disclosure, alteration, and destruction threats thereby ensuring the core security concepts of confidentiality, integrity, and availability.

1.6.2.1 Authentication

Software is a conduit to an organization’s internal databases, systems, and network, and so it is critically important that access to internal sensitive information is granted only to those entities that are valid. Authentication is a security concept that answers the question, “Are you who you claim to be?” It not only ensures that the identity of an entity (person or resource) is specified in the format that the software expects, but it also validates or verifies the identity information that has been supplied. In other words, it assures the claim of an entity by verifying identity information.

Authentication succeeds identification in the sense that the person or process must be identified before it can be validated or verified. The identifying information that is supplied is also known as credentials or claims. The most common form of credential is a combination of username (or user ID) and password, but authentication can be achieved using any one, or a combination, of the following three factors:

- Knowledge: The identifying information provided in this mechanism for validation is something that one knows. Examples of this type of authentication include username and password, pass phrases, or a personal identification number (PIN).

- Ownership: The identifying information provided in this mechanism for validation is something that you own or have. Examples of this type of authentication include tokens and smart cards.

- Characteristic: The identifying information provided in this mechanism for validation is something you are. The best known example for this type of authentication is biometrics. The identifying information that is supplied in characteristic-based authentication such as biometric authentication is digitized representations of physical traits or features. Blood vessel patterns of the retina, fingerprints, and iris patterns are some common physical features that are used but there are limitations with biometrics as physical characteristics can change with medically related maladies (Schneier, 2000). Physical actions such as signatures (pressure and slant) that can be digitized can also be used in characteristic-based authentication.

Multifactor authentication, which is the use of more than one factor to authenticate, is considered to be more secure than single-factor authentication where only one of the three factors (knowledge, ownership, or characteristic) is used for validating credentials. Multifactor authentication is recommended for validating access to systems containing sensitive or critical information. The Federal Financial Institutions Examination Council (FFIEC) guidance on authentication in an Internet banking environment highlights that the use of single-factor authentication as the only control mechanism in such an environment is inadequate and that additional compensating controls and layered security, including multifactor authentication, are warranted.
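
The idea of combining factors can be sketched as follows. This is an illustrative outline only: the user record, the password, and the fixed token code are hypothetical, and the stored `expected_token_code` stands in for whatever a real hardware or software token would generate.

```python
import hashlib
import hmac
import os

def hash_password(password, salt):
    # Derive a salted hash so the password itself is never stored.
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)

# Hypothetical user record for the demonstration.
_salt = os.urandom(16)
USER = {
    "salt": _salt,
    "password_hash": hash_password("correct horse battery", _salt),
    "expected_token_code": "492817",  # placeholder for a token-generated code
}

def authenticate(user, password, token_code):
    # Knowledge factor: something the user knows.
    knowledge_ok = hmac.compare_digest(
        hash_password(password, user["salt"]), user["password_hash"]
    )
    # Ownership factor: something the user has.
    ownership_ok = hmac.compare_digest(token_code, user["expected_token_code"])
    # Both factors must pass; either one alone is insufficient.
    return knowledge_ok and ownership_ok
```

A stolen password without the token, or a stolen token without the password, fails authentication in this sketch.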

1.6.2.2 Authorization

Just because an entity’s credentials can be validated does not mean that the entity should be given access to all of the resources that it requests. For example, you may be able to log into the accounting software within your company, but you are still not able to access the human resources payroll data, because you do not have the rights or privileges to access the payroll data. Authorization is the security concept in which access to objects is controlled based on the rights and privileges that are granted to the requestor by the owner of the data or system or according to a policy. Authorization decisions are layered on top of authentication and must never precede authentication, i.e., you do not authorize before you authenticate unless your business requirements require you to give access to anonymous users (those who are not authenticated), in which case the authorization decision may be uniformly restrictive to all anonymous users.

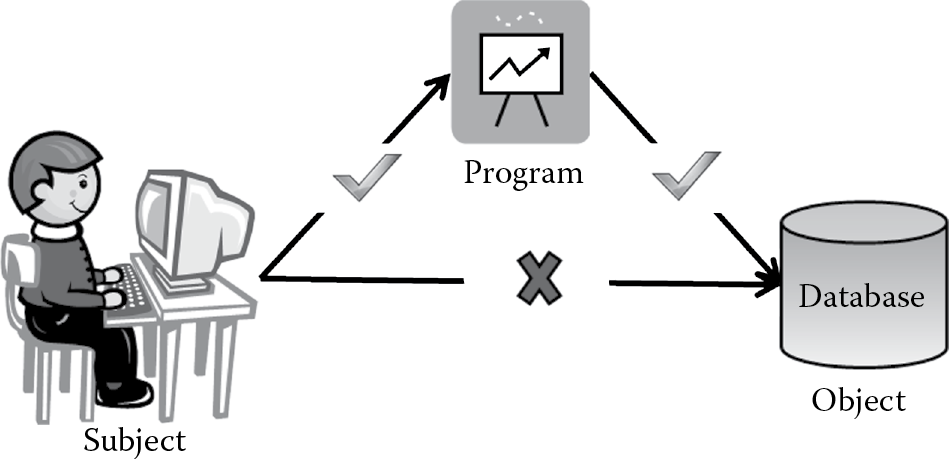

The requestor is referred to as the subject and the requested resource is referred to as the object. The subject may be human or nonhuman such as a process or another object. The subject may also be categorized by privilege level such as an administrative user, manager, or anonymous user. Examples of an object include a table in the database, a file, or a view. A subject’s actions on an object, such as creation, reading, update, or deletion (CRUD), are dependent on the privilege level of the subject. An example of authorization based on the privilege level of the subject is that an administrative user may be able to create, read, update, and delete (CRUD) data, but an anonymous user may be allowed to only read (R) the data, whereas a manager may be allowed to create, read, and update (CRU) data.
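
The privilege levels described above can be expressed as a simple permission table. This is a minimal sketch; the role names mirror the example in the text, while the `PERMISSIONS` mapping and `is_authorized` function are hypothetical names introduced for illustration.

```python
# Actions each privilege level may perform, following the CRUD example above.
PERMISSIONS = {
    "administrator": {"create", "read", "update", "delete"},
    "manager": {"create", "read", "update"},
    "anonymous": {"read"},
}

def is_authorized(role, action):
    # Unknown roles receive no permissions, so the default is to deny.
    return action in PERMISSIONS.get(role, set())
```

For instance, `is_authorized("manager", "delete")` evaluates to `False`, while `is_authorized("anonymous", "read")` evaluates to `True`.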

1.6.2.3 Auditing/Logging

Consider the following scenario. You find out that the price of a product in the online store is different from the one in your brick and mortar store and you are unsure as to how this price discrepancy has come about. Upon preliminary research, it is determined that the screen used to update prices of products for the online store is not tightly controlled and any authenticated user within your company can make changes to the price. Unfortunately, there is no way to tell who made the price changes because no information is logged for review upon the update of pricing information. Auditing is the security concept in which privileged and critical business transactions are logged. This logging can be used to build a history of events, which can be used for troubleshooting and forensic evidence. In the scenario above, if the authenticated credentials of the logged-on user who made the price changes are logged along with a timestamp of the change and the before and after price, the history of the changes can be built to track down the user who made the change. Auditing is a passive detective control mechanism.

At a bare minimum, audit fields that include who (user or process) did what (CRUD), where (file or table), and when (created or modified timestamp) along with a before and after snapshot of the information that was changed must be logged for all administrative (privilege) or critical transactions as defined by the business. Additionally, newer audit logs must always be appended to and never overwrite older logs. This could result in a capacity or space issue based on the retention period of these logs; this needs to be planned for. The retention period of these audit logs must be based on regulatory requirements or organizational policy, and in cases where the organizational policy for retention conflicts with regulatory requirements, the regulatory requirements must be followed and the organizational policy appropriately amended to prevent future conflicts.
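
The minimum audit fields can be sketched as a structured record written to an append-only file. The field names, the JSON-lines format, and the temporary log location are assumptions for illustration; the price values echo the earlier pricing scenario.

```python
import json
import os
import tempfile
from datetime import datetime, timezone

def audit_record(who, what, where, before, after):
    # Capture who did what, where, and when, plus before/after snapshots.
    return {
        "who": who,
        "what": what,
        "where": where,
        "when": datetime.now(timezone.utc).isoformat(),
        "before": before,
        "after": after,
    }

def append_audit(path, record):
    # Mode "a" appends, so newer entries never overwrite older ones.
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")

# Hypothetical log location used only for this demonstration.
log_path = os.path.join(tempfile.mkdtemp(), "audit.log")
append_audit(log_path, audit_record("jdoe", "update", "products.price", 19.99, 1.99))
append_audit(log_path, audit_record("asmith", "update", "products.price", 1.99, 19.99))

with open(log_path, encoding="utf-8") as log:
    entries = [json.loads(line) for line in log]
```

Opening the file in append mode is only a programming convention here; protecting the log from tampering requires additional controls, as discussed later in this section.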

Nonrepudiation addresses the deniability of actions taken by either a user or the software on behalf of the user. Accountability to ensure nonrepudiation can be accomplished by auditing when used in conjunction with identification. In the price change scenario, if the software had logged the price change action and the identity of the user who made that change, that individual can be held accountable for their action, leaving the individual little opportunity to repudiate or deny the action, thereby assuring nonrepudiation.

Auditing is a detective control, and it can be a deterrent control as well. Because one can use the audit logs to determine the history of actions that are taken by a user or the software itself, auditing or logging is a passive detective control. The foreknowledge of being audited could potentially deter a user from taking unauthorized actions, but it does not necessarily prevent them from doing so.

It is understood that auditing is a very important security concept that is often not given the attention it deserves when building software. However, there are certain challenges with auditing as well that warrant attention and addressing. They are:

- Performance impact

- Information overload

- Capacity limitation

- Configuration interfaces protection

- Audit log protection

Auditing can have an impact on the performance of the software. It was noted earlier that there is usually a trade-off decision that is necessary when it comes to security versus usability. If your software is configured to log every administrative and critical business transaction, then each time those operations are performed, the time to log those actions can have a bearing on the performance of the software.

Additionally, the amount of data logged may result in information overload, and without proper correlation and pattern discerning abilities, administrative and critical operations may be overlooked, thereby reducing the security that auditing provides. It is therefore imperative to log only the needed information at the right frequency. A best practice would be to classify log entries as they are recorded using a bucketing scheme so that you can easily sort through large volumes of logs when trying to determine historical actions. An example of a bucketing scheme can be “Informational Only,” “Administrative,” “Business Critical,” “Error,” “Security,” “Miscellaneous,” etc. The frequency for reviewing the logs needs to be defined by the business, and this is usually dependent on the value of the software or the data it transmits, processes, and stores to the business.
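
A bucketing scheme such as the one listed above could be modeled with an enumeration, so that every log entry carries exactly one bucket and reviewers can filter on it. The bucket names come from the text; the `LogBucket` type, the entry layout, and the sample entries are hypothetical.

```python
from enum import Enum

class LogBucket(Enum):
    INFORMATIONAL = "Informational Only"
    ADMINISTRATIVE = "Administrative"
    BUSINESS_CRITICAL = "Business Critical"
    ERROR = "Error"
    SECURITY = "Security"
    MISCELLANEOUS = "Miscellaneous"

def filter_by_bucket(entries, bucket):
    # Return only the entries classified under the requested bucket.
    return [entry for entry in entries if entry["bucket"] is bucket]

# Hypothetical entries, classified at the time they are recorded.
log_entries = [
    {"bucket": LogBucket.INFORMATIONAL, "message": "Nightly report generated"},
    {"bucket": LogBucket.SECURITY, "message": "Account locked after failed logins"},
    {"bucket": LogBucket.ADMINISTRATIVE, "message": "Price table updated"},
    {"bucket": LogBucket.SECURITY, "message": "Audit logging configuration changed"},
]

security_entries = filter_by_bucket(log_entries, LogBucket.SECURITY)
```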

In addition to information overload, logging all information can result in capacity and space issues for the systems that hold the logs. Proper capacity planning and archival requirements need to be predetermined to address this.

Furthermore, the configuration interfaces to turn on or off the audit logs and the types of logs to audit must also be designed, developed, and protected. Failure to protect the audit log configuration interfaces can result in an attack going undetected. For example, if the configuration interfaces to turn auditing on or off are left unprotected, an attacker may be able to turn logging off, perform their attack, and turn it back on once they have completed their malicious activities. In this case, nonrepudiation is not ensured. So it must be understood that the configuration interfaces for auditing can potentially increase the attack surface area and nonrepudiation abilities can be seriously hampered.

Finally, the audit logs themselves are to be deemed an asset to the business and can be susceptible to information disclosure attacks. One must be diligent as to what to log and the format of the log itself. For example, if the business requirement for your software is to log authentication failure attempts, it is recommended that you do not log the value supplied for the password that was used, as the failure may have resulted from an inadvertent and innocuous user error. Should you have the need to log the password value for troubleshooting reasons, it would be advisable to hash the password before recording it so that even if someone gets unauthorized access to the logs, sensitive information is still protected.
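
One way to follow the recommendation not to record supplied password values is to sanitize every event before it is written. The sketch below is an assumption-laden illustration: the field names in `SENSITIVE_FIELDS` and the event layout are hypothetical.

```python
# Hypothetical names of fields whose values must never reach the log.
SENSITIVE_FIELDS = {"password", "pin", "card_number"}

def sanitize_for_log(event):
    # Replace sensitive values with a marker while keeping the field visible,
    # so reviewers can see that a value was supplied without learning it.
    return {
        key: ("[REDACTED]" if key in SENSITIVE_FIELDS else value)
        for key, value in event.items()
    }

event = {"action": "login_failed", "user_id": "jdoe", "password": "hunter2"}
safe_event = sanitize_for_log(event)
```

Applying the sanitizer at a single choke point, just before the write, reduces the chance that an individual call site forgets to remove a sensitive value.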

1.6.2.4 Session Management

Just because someone is authenticated and authorized to access system resources does not mean that security controls can be lax after an authenticated session is established, because a session can be hijacked. Session hijacking attacks happen when an attacker impersonates the identity of a valid user and inserts themselves into the middle of an existing session, routing information from the user to the system and from the system to the user through them. This can lead to information disclosure (confidentiality threat), alteration (integrity threat), or a denial of service (availability threat). When carried out this way, it is also known as a man-in-the-middle (MITM) attack. Session management is a security concept that aims at mitigating session hijacking or MITM attacks. It requires that each session is made unique by the issuance of unique session tokens, and it also requires that user activity is tracked so that someone who is attempting to hijack a valid session is prevented from doing so.
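
Unique session tokens and activity tracking can be sketched with Python's standard `secrets` module. The in-memory store, the 15-minute idle timeout, and the function names are assumptions for illustration; the optional `now` parameter exists only to make the behavior easy to demonstrate.

```python
import secrets
import time

SESSION_IDLE_TIMEOUT = 900  # seconds; a hypothetical 15-minute idle limit
_sessions = {}              # token -> session data (in-memory, demo only)

def create_session(user_id, now=None):
    now = time.time() if now is None else now
    # token_urlsafe draws from a cryptographically secure random source,
    # making tokens unique and impractical to guess.
    token = secrets.token_urlsafe(32)
    _sessions[token] = {"user_id": user_id, "last_seen": now}
    return token

def validate_session(token, now=None):
    now = time.time() if now is None else now
    session = _sessions.get(token)
    if session is None:
        return None  # unknown or forged token
    if now - session["last_seen"] > SESSION_IDLE_TIMEOUT:
        del _sessions[token]  # expire idle sessions
        return None
    session["last_seen"] = now  # track user activity
    return session["user_id"]
```

Unguessable tokens and idle expiry narrow the window for hijacking but do not by themselves protect a token in transit, which also calls for an encrypted channel.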

1.6.2.5 Errors and Exception Management

Errors and exceptions are inevitable when dealing with software. Whereas errors may be a result of user ignorance or software breakdown, exceptions are software issues that are not handled explicitly when the software behaves in an unintended or unreliable manner. An example of user error is that the user mistypes his user ID when trying to log in. Now if the software was expecting the user ID to be supplied in a numeric format and the user typed in alpha characters in that field, the software operations will result in a data type conversion exception. If this exception is not explicitly handled, it would result in informing the user of this exception and in many cases disclose the entire exception stack. This can result in information disclosure potentially revealing the software’s internal architectural details and in some cases even the data value. It is recommended as a secure software best practice to ensure that the errors and exception messages be nonverbose and explicitly specified in the software. An example of a verbose error message would be displaying “User ID did not match” or “Password is incorrect,” instead of using the nonverbose or laconic equivalent such as “Login invalid.” Additionally, upon errors or exceptions, the software is to fail to a more secure state. Organizations are tolerant of user errors, which are inevitable, permitting a predetermined number of user errors before recording it as a security violation. This predetermined number is established as a baseline and is referred to in operations as the clipping level. An example of this is, after three incorrect PIN entries, your account is locked out until an out-of-band process unlocks it or a certain period has elapsed. The software should never fail insecure, which would be characterized by the software allowing access after three incorrect PIN entries.

Errors and exception management is the security concept that ensures that unintended and unreliable behavior of the software is explicitly handled, while maintaining a secure state and protection against confidentiality, integrity, and availability threats.
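
The ideas of laconic messages, explicit exception handling, a clipping level, and failing secure can be sketched together. The `PinAuthenticator` class, its storage, and the constant names are hypothetical; the clipping level of three follows the PIN example above.

```python
CLIPPING_LEVEL = 3                 # failed attempts tolerated before lockout
GENERIC_FAILURE = "Login invalid."  # laconic message, same for every failure

class PinAuthenticator:
    def __init__(self, pins):
        self._pins = pins      # user_id -> numeric PIN (demo storage only)
        self._failures = {}    # user_id -> count of failed attempts
        self._locked = set()   # user_ids locked out

    def login(self, user_id, pin_text):
        if user_id in self._locked:
            return False, GENERIC_FAILURE
        try:
            # int() raises ValueError for non-numeric input; an unknown
            # user_id raises KeyError. Both are handled explicitly.
            ok = int(pin_text) == self._pins[user_id]
        except (ValueError, KeyError):
            ok = False  # fail secure: an exception never grants access
        if ok:
            self._failures.pop(user_id, None)
            return True, "Login successful."
        self._failures[user_id] = self._failures.get(user_id, 0) + 1
        if self._failures[user_id] >= CLIPPING_LEVEL:
            self._locked.add(user_id)  # unlock would be an out-of-band process
        return False, GENERIC_FAILURE
```

Every failure path returns the same message, so the response does not reveal whether the user ID, the PIN format, or the PIN value was at fault, and no stack trace reaches the user.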

1.6.2.6 Configuration Parameters Management

Software is made up of code and parameters that need to be established for it to run. These parameters may include variables that need to be initialized in memory for the software to start, connection strings to databases in the backend, or cryptographic keys for secrecy, to name just a few. These configuration parameters are part of the software makeup that needs to be not only configured but protected as well because they are to be deemed an asset that is valuable to the business. What good is it to lock the doors and windows in an attempt to secure your valuables within the house when you leave the key under the mat on the front porch? Configuration management in the context of software security is the security concept that ensures that the appropriate levels of protection are provided to secure the configurable parameters that are needed for the software to run. Note that we will also be covering configuration management as it pertains to IT services in Chapters 6 and 7.
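
One simple way to avoid leaving the key under the mat is to keep sensitive parameters out of the source code and to avoid echoing them in output. The sketch below reads a setting from the process environment; the setting name `DB_CONNECTION_STRING`, the helper names, and the exception type are hypothetical, and an environment variable is only one of several possible protected stores.

```python
import os

class MissingConfigError(RuntimeError):
    """Raised when a required configuration parameter is absent."""

def load_secret(name, environ=None):
    # Read the value from the environment instead of hard-coding it.
    environ = os.environ if environ is None else environ
    value = environ.get(name)
    if not value:
        # Refuse to start rather than fall back to an insecure default.
        raise MissingConfigError(f"required setting {name} is not set")
    return value

def describe_setting(name):
    # Report that a setting is present without revealing its value.
    return f"{name}=<redacted>"

# Demonstration with an explicit mapping standing in for the environment.
demo_env = {"DB_CONNECTION_STRING": "Server=db01;Database=payroll;User=app"}
connection_string = load_secret("DB_CONNECTION_STRING", demo_env)
summary = describe_setting("DB_CONNECTION_STRING")
```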

1.6.3 Design Security Concepts

In this section we will discuss security concepts that need to be considered when designing and architecting software. These concepts are defined in the following. We will expand on each of these concepts in more concrete detail in Chapter 3.

- Least Privilege: A security principle in which a person or process is given only the minimum level of access rights (privileges) that is necessary for that person or process to complete an assigned operation. This right must be given only for a minimum amount of time that is necessary to complete the operation.

- Separation of Duties (or) Compartmentalization Principle: Also known as separation of privilege, separation of duties is a security principle stating that the successful completion of a single task depends on two or more conditions being met, and that any one of these conditions alone is insufficient to complete the task.

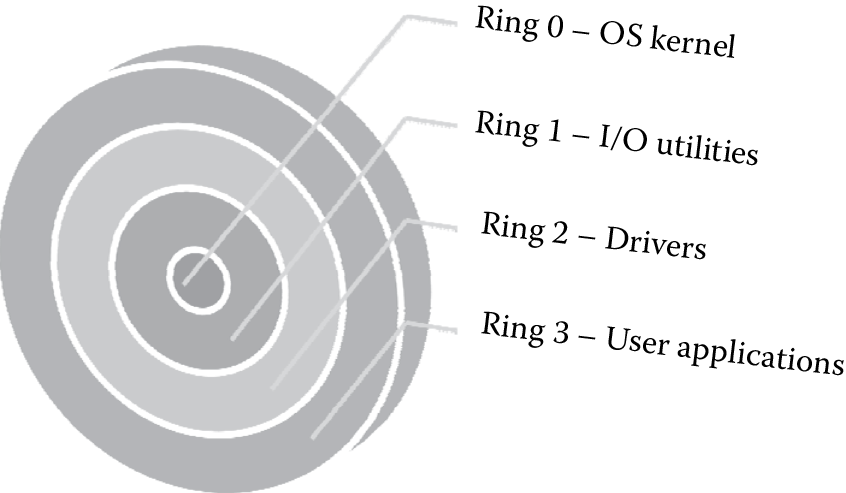

- Defense in Depth (or) Layered Defense: Defense in depth is a security principle where single points of complete compromise are eliminated or mitigated by the incorporation of a series or multiple layers of security safeguards and risk-mitigation countermeasures.

- Fail Secure: A security principle that aims to maintain confidentiality, integrity, and availability by defaulting to a secure state and rapidly recovering software resiliency upon design or implementation failure. In the context of software security, fail secure can be used interchangeably with fail safe.

- Economy of Mechanisms: This, in layman’s terms, is the keep-it-simple principle, because the likelihood of vulnerabilities increases with the complexity of the software architectural design and code. By keeping the software design and implementation details simple, the attackability or attack surface of the software is reduced.

- Complete Mediation: A security principle that ensures that authority is not circumvented in subsequent requests of an object by a subject by checking for authorization (rights and privileges) upon every request for the object. In other words, the access requests by a subject for an object are completely mediated each time, every time.

- Open Design: The open design security principle states that the implementation details of the design should be independent of the design itself, which can remain open, unlike in the case of security by obscurity wherein the security of the software is dependent on the obscuring of the design itself. When software is architected using the open design concept, the review of the design itself will not result in the compromise of the safeguards in the software.

- Least Common Mechanisms: The security principle of least common mechanisms disallows the sharing of mechanisms that are common to more than one user or process if the users and processes are at different levels of privilege. For example, the use of the same function to retrieve the bonus amount of an exempt employee and a nonexempt employee will not be allowed. In this case the calculation of the bonus is the common mechanism.

- Psychological Acceptability: This security principle aims at maximizing the usage and adoption of the security functionality in the software by ensuring that the security functionality is easy to use and at the same time transparent to the user. Ease of use and transparency are essential requirements for this security principle to be effective.

- Leveraging Existing Components: This is a security principle that focuses on ensuring that the attack surface is not increased and no new vulnerabilities are introduced by promoting the reuse of existing software components, code, and functionality.

- Weakest Link: You have heard the saying that a chain is only as strong as its weakest link. This security principle states that the hack resiliency of your software will depend heavily on the protection of its weakest components, be it code, service, or interface. A breakdown in the weakest link will result in a security breach.

- Single Point of Failure: Single point of failure is the security principle that ensures that your software is designed to eliminate any single source of complete compromise. Although this is similar to the weakest link principle, the distinguishing difference between the two is that the weakest link need not necessarily be a single point of failure but could be as a result of various weak sources. Usually, in software security, the weakest link is a superset of several single points of failure.
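
As a concrete illustration of one of the principles listed above, complete mediation, the following Python sketch re-checks authorization on every request instead of caching an earlier decision. The access control list `ACL`, the decorator `mediated`, and the subject and object names are all hypothetical and exist only for this example.

```python
from functools import wraps

# Hypothetical access control list: (subject, object) -> permitted actions.
ACL = {
    ("alice", "payroll.csv"): {"read"},
    ("bob", "payroll.csv"): {"read", "write"},
}


class AccessDenied(Exception):
    """Raised when a subject lacks the right requested for an object."""


def mediated(action):
    """Decorator that checks authorization on every call (no cached decisions)."""
    def decorator(func):
        @wraps(func)
        def wrapper(subject, obj, *args, **kwargs):
            if action not in ACL.get((subject, obj), set()):
                raise AccessDenied("Access denied.")
            return func(subject, obj, *args, **kwargs)
        return wrapper
    return decorator


@mediated("read")
def read_object(subject, obj):
    return f"{subject} read {obj}"


@mediated("write")
def write_object(subject, obj, data):
    return f"{subject} wrote {len(data)} bytes to {obj}"
```

Because the check runs each time, revoking a right from `ACL` takes effect on the very next request, which is the behavior that the principle asks for.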

1.7 Security Concepts in the SDLC

Security concepts span across the entire life cycle and will need to be addressed in each phase. Software security requirements, design, development, and deployment must take into account all of these security concepts. Lack or insufficiency of attention in any one phase may render the efforts taken in other phases completely futile. For example, capturing requirements to handle disclosure protection (confidentiality) in the requirements gathering phase of your SDLC but not designing confidentiality controls in the design phase of your SDLC can potentially result in information disclosure breaches.

Often, these concepts are used in conjunction with other concepts, or they can be used independently, but it is important that none of them is ignored, even if one is deemed not applicable or, in some cases, contradictory to another. For example, the economy of mechanisms concept, applied by implementing a single sign-on mechanism for simplified user authentication, may directly conflict with the complete mediation design concept, and the necessary architectural decisions must be taken to address this conflict without compromising the security of the software. In no situation can these concepts be ignored.

1.8 Risk Management

One of the key aspects of managing security is risk management. It must be recognized that the goal of risk management spans more than the mere protection of information technology (IT) assets, as it is intended to protect the entire organization so that there is minimal to no disruption in the organization’s ability to accomplish its mission. Risk management processes include the preliminary assessment of the need for security controls and the identification, development, testing, implementation, and verification (evaluation) of security controls so that the impact of any disruptive process is at an acceptable or risk-appropriate level. Risk management, in the context of software security, is the balancing act between the protection of IT assets and the cost of implementing software security controls, so that the risk is handled appropriately. The second revision of Special Publication 800-64 by the National Institute of Standards and Technology (NIST), entitled “Security Considerations in the System Development Life Cycle (SDLC),” highlights that a prerequisite to a comprehensive strategy to manage risk to IT assets is to consider security during the SDLC. By addressing risk throughout the SDLC, one can avoid a lot of headaches upon release or deployment of the software.

1.8.1 Terminology and Definitions

Before we delve into the challenges with risk management as it pertains to software and software development, it is imperative that there is a strong fundamental understanding of terms and risk computation formulae used in the context of traditional risk management.

Some of the most common terms and formulae that a CSSLP must be familiar with are covered in this section. Some of the definitions used in this section are from NIST Special Publication 800-30, Risk Management Guide for Information Technology Systems.

1.8.1.1 Asset

Assets are those items that are valuable to the organization, the loss of which can potentially cause disruptions in the organization’s ability to accomplish its mission. These may be tangible or intangible in nature. Tangible assets, as opposed to intangible assets, are those that can be perceived by physical senses. They can be more easily evaluated than intangible assets. Examples of tangible IT assets include networking equipment, servers, software code, and also data that are transmitted and stored by your applications. In the realm of software security, data are the most important tangible asset, second only to people. Examples of intangible assets include intellectual property rights such as copyrights, patents, and trademarks, as well as brand reputation. The loss of brand reputation for an organization may be disastrous, and recovery from such a loss may be nearly impossible. Arguably, company brand reputation is the most valuable intangible asset, and the loss of intangible assets may have more dire consequences than the loss of tangible assets; however, regardless of whether the asset is tangible or intangible, the risk of loss must be assessed and appropriately managed. In threat modeling terminology, an asset is also referred to as an “Object.” We will cover the subject/object matrix in the context of threat modeling in Section 3.3.3.2.

1.8.1.2 Vulnerability

A weakness or flaw that could be accidentally triggered or intentionally exploited by an attacker, resulting in the breach or breakdown of the security policy, is known as a vulnerability. Vulnerabilities can be evident in the process, design, or implementation of the system or software. Examples of process vulnerabilities include improper check-in and check-out procedures for software code, backup of production data to nonproduction systems, and incomplete termination access control mechanisms. The use of obsolete cryptographic algorithms such as the Data Encryption Standard (DES), not designing for handling resource deadlocks, unhandled exceptions, and hard-coding database connection information in clear text (humanly readable form) in line with code are examples of design vulnerabilities. In addition to process and design vulnerabilities, weaknesses in software are made possible because of the way in which software is implemented. Some examples of implementation vulnerabilities are the following: the software accepts any user-supplied data and processes it without first validating it; the software reveals too much information in the event of an error; and the software does not explicitly close open connections to backend databases.

Some well-known and useful vulnerability tracking systems and vulnerability repositories that can be leveraged include the following:

- U.S. Computer Emergency Readiness Team (US-CERT) Vulnerability Notes Database: The CERT vulnerability analysis project aims at reducing security risks due to software vulnerabilities in both developed and deployed software. In software that is being developed, they focus on vulnerability discovery and in software that is already deployed, they focus on vulnerability remediation. Newly discovered vulnerabilities are added to the Vulnerability Notes Database. Existing ones are updated as needed.

- Common Vulnerability Scoring System (CVSS): As the name suggests, the CVSS is a system designed to rate IT vulnerabilities and help organizations prioritize security vulnerabilities.

- Open Source Vulnerability Database: This independent, open source database was created by and for the security community, with the goal of providing accurate, detailed, current, and unbiased technical information on security vulnerabilities.

- Common Vulnerabilities and Exposures (CVE): CVE is a dictionary of publicly known information security vulnerabilities and exposures. It is free for use and international in scope.

- Common Weakness Enumeration (CWE™): This specification provides a common language for describing architectural, design, or coding software security weaknesses. It is international in scope, freely available for public use, and intended to provide a standardized and definitive “formal” list of software weaknesses. Categorizations of software security weaknesses are derived from software security taxonomies.

1.8.1.3 Threat

Vulnerabilities pose threats to assets. A threat is merely the possibility of an unwanted, unintended, or harmful event occurring. When the event occurs upon manifestation of the threat, it results in an incident. These threats can be classified into threats of disclosure, alteration, or destruction. Without proper change control processes in place, a threat of disclosure exists because unauthorized individuals may be able to check out sensitive code without any authorization. The same threat of disclosure is possible when production data with actual and real significance is backed up to a developer or test machine, when sensitive database connection information is hard-coded in line with code in clear text, or if the error and exception messages are not handled properly. Lack of or insufficient input validation can pose the threat of data alteration, resulting in violation of software integrity. Insufficient load testing, stress testing, and code level testing pose the threat of destruction or unavailability.

1.8.1.4 Threat Source/Agent

Anyone or anything that has the potential to make a threat materialize is known as the threat source or threat agent. Threat agents may be human or nonhuman. Examples of nonhuman threat agents in addition to nature that are prevalent in this day and age are malicious software (malware), such as adware, spyware, viruses, and worms. We will cover the different types of threat agents when we discuss threat modeling in Chapter 3.

1.8.1.5 Attack

Threat agents may intentionally cause a threat to materialize, or threats can occur as a result of plain user error or accidental discovery as well. When the threat agent actively and intentionally causes a threat to happen, it is referred to as an “attack” and the threat agents are commonly referred to as “attackers.” In other words, an intentional action attempting to cause harm is the simplest definition of an attack. When an attack happens as a result of an attacker taking advantage of a known vulnerability, it is known as an “exploit.” By exploiting a vulnerability, the attacker (threat agent) causes harm (materializes a threat).

1.8.1.6 Probability

Also known as “likelihood,” probability is the chance that a particular threat can happen. Because the goal of risk management is to reduce the risk to an acceptable level, the measurement of the probability of an unintended, unwanted, or harmful event being triggered is important. Probability is usually expressed as a percentage, but because the likelihood of a threat is mostly quantified using best guesstimates or sometimes mere heuristic techniques, some organizations use qualitative categorizations or buckets, such as high, medium, or low, to express the likelihood of a threat occurring. Regardless of whether the expression is quantitative or qualitative, the chance of harm caused by a threat must be determined or at least understood as the bare minimum.

1.8.1.7 Impact

The outcome of a materialized threat can vary from very minor disruptions, to inconveniences imposed by fines levied for lack of due diligence, to a breakdown in organization leadership as a result of incarceration, to bankruptcy and complete cessation of the organization. The extent to which the organization’s ability to achieve its goals is disrupted is referred to as the impact.

1.8.1.8 Exposure Factor

Exposure factor is defined as the opportunity for a threat to cause loss. Exposure factor plays an important role in the computation of risk. Although the probability of an attack may be high, and the corresponding impact severe, if the software is designed, developed, and deployed with security in mind, the exposure factor for attack may be low, thereby reducing the overall risk of exploitation.

1.8.1.9 Controls

Security controls are mechanisms by which threats to software and systems can be mitigated. These mechanisms may be technical, administrative, or physical in nature. Examples of some software security controls include input validation, clipping levels for failed password attempts, source control, software librarian, and restricted and supervised access control to data centers and filing cabinets that house sensitive information. Security controls can be broadly categorized into countermeasures and safeguards. As the name implies, countermeasures are security controls that are applied after a threat has been materialized, implying the reactive nature of these types of security controls. On the other hand, safeguards are security controls that are more proactive in nature. Security controls do not remove the threat itself but are built into the software or system to reduce the likelihood of a threat being materialized. Vulnerabilities are reduced by security controls.

However, it must be recognized that improper implementation of security controls themselves may pose a threat. For example, say that upon the failure of a login attempt, the software handles this exception and displays the message “Username is valid but the password did not match” to the end user. Although in the interest of user experience, this may be acceptable, an attacker can read that verbose error message and know that the username exists in the system that performs the validation of user accounts. The exception handling countermeasure in this case potentially becomes the vulnerability for disclosure, owing to improper implementation of the countermeasure. A more secure way to handle login failure would have been to use generic and nonverbose exception handling in which case the message displayed to the end user may just be “Login invalid.”
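
The login example above can be sketched in Python so that an unknown username and a wrong password are indistinguishable to the end user. This is a sketch under stated assumptions, not a complete authentication design: the in-memory store, the function names, and the iteration count are hypothetical, and the dummy credentials exist only so that both failure cases follow the same code path.

```python
import hashlib
import hmac
import os

GENERIC_FAILURE = "Login invalid."


def hash_password(password: str, salt: bytes) -> bytes:
    """Derive a salted hash; the iteration count is an illustrative assumption."""
    return hashlib.pbkdf2_hmac("sha256", password.encode(), salt, 100_000)


def make_store(users: dict) -> dict:
    """Build a hypothetical in-memory store: username -> (salt, hash)."""
    store = {}
    for name, password in users.items():
        salt = os.urandom(16)
        store[name] = (salt, hash_password(password, salt))
    return store


# Dummy credentials so that unknown usernames take the same code path.
_DUMMY_SALT = os.urandom(16)
_DUMMY_HASH = hash_password("placeholder", _DUMMY_SALT)


def login(store: dict, username: str, password: str) -> str:
    salt, expected = store.get(username, (_DUMMY_SALT, _DUMMY_HASH))
    matches = hmac.compare_digest(hash_password(password, salt), expected)
    if matches and username in store:
        return "Login successful."
    # Same message whether the username or the password was wrong.
    return GENERIC_FAILURE
```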

1.8.1.10 Total Risk

Total risk is the likelihood of the occurrence of an unwanted, unintended, or harmful event. This is traditionally computed using factors such as the asset value, threat, and vulnerability. This is the overall risk of the system, before any security controls are applied. This may be expressed qualitatively (e.g., high, medium, or low) or quantitatively (using numbers or percentages).

1.8.1.11 Residual Risk

Residual risk is the risk that remains after the implementation of mitigating security controls (countermeasures or safeguards).

1.8.2 Calculation of Risk

Risk is conventionally expressed as the product of the probability of a threat source/agent taking advantage of a vulnerability and the corresponding impact. However, the estimation of both probability and impact is usually subjective, and so quantitative measurement of risk is not always accurate. Anyone who has been involved with risk management will be the first to acknowledge that the calculation of risk is not a black or white exercise, especially in the context of software security.
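
To make the product of probability and impact concrete, the following Python sketch computes a quantitative score and, alternatively, a qualitative rating from high, medium, and low buckets. The numeric weights assigned to the buckets and the thresholds that separate the ratings are assumptions chosen for illustration; an organization would set its own.

```python
# Assumed ordinal weights for the qualitative buckets.
LEVELS = {"low": 1, "medium": 2, "high": 3}


def risk_score(probability: float, impact: float) -> float:
    """Quantitative risk: probability (0.0 to 1.0) times impact (e.g., in dollars)."""
    if not 0.0 <= probability <= 1.0:
        raise ValueError("probability must be between 0.0 and 1.0")
    if impact < 0:
        raise ValueError("impact must not be negative")
    return probability * impact


def qualitative_risk(probability: str, impact: str) -> str:
    """Qualitative risk from bucketed inputs; the thresholds are illustrative."""
    score = LEVELS[probability] * LEVELS[impact]
    if score >= 6:
        return "high"
    if score >= 3:
        return "medium"
    return "low"
```

With these assumed thresholds, a medium probability combined with a high impact rates as high, whereas a low probability combined with a high impact rates as medium.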

However, as a CSSLP, you are expected to be familiar with classical risk management terms such as single loss expectancy (SLE), annual rate of occurrence (ARO), and annual loss expectancy (ALE) and the formulae used to quantitatively compute risk.

- Single Loss Expectancy: SLE is used to estimate potential loss. It is calculated as the product of the value of the asset (usually expressed monetarily) and the exposure factor, which is expressed as a percentage of asset loss when a threat is materialized. See Figure 1.5 for a calculation of SLE.

- Annual Rate of Occurrence: The ARO is an expression of the number of incidents from a particular threat that can be expected in a year. This is often just a guesstimate in the field of software security and thus should be carefully considered. Looking at historical incident data within your industry is a good start for determining what the ARO should be.

- Annual Loss Expectancy: ALE is an indicator of the magnitude of risk in a year. ALE is a product of SLE × ARO (see Figure 1.6).
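
The SLE and ALE formulae described above translate directly into code. The sketch below is a minimal Python illustration; the function names are our own, and the sample figures in the usage note are hypothetical rather than taken from Figures 1.5 and 1.6.

```python
def single_loss_expectancy(asset_value: float, exposure_factor: float) -> float:
    """SLE = asset value x exposure factor (fraction of the asset lost, 0.0 to 1.0)."""
    if not 0.0 <= exposure_factor <= 1.0:
        raise ValueError("exposure factor must be between 0.0 and 1.0")
    return asset_value * exposure_factor


def annual_loss_expectancy(sle: float, aro: float) -> float:
    """ALE = SLE x ARO (expected number of incidents per year)."""
    if aro < 0:
        raise ValueError("annual rate of occurrence must not be negative")
    return sle * aro
```

For example, an asset valued at $200,000 with an exposure factor of 25% gives an SLE of $50,000; if the incident is expected once every two years (an ARO of 0.5), the ALE is $25,000.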

The primary goal of risk management is to identify the total risk and reduce it using controls so that the residual risk is within an acceptable range or threshold, wherein business operations are not disrupted. To reduce total risk to acceptable levels, a complete risk mitigation strategy must be considered instead of merely selecting a single control (safeguard). For example, to address the risk of disclosure of sensitive information such as credit card numbers or personal health information, mitigation strategies that include a layered defense approach using access control, encryption or hashing, and auditing of access requests may have to be considered, instead of merely selecting and implementing the Advanced Encryption Standard (AES). It is also important to understand that although the implementation of controls may be a decision made by the technical team, the acceptance of specific levels of residual risk is a management decision that factors in the recommendations from the technical team. The most effective way to ensure that software developed has taken into account security threats and addressed vulnerabilities, thereby reducing the overall risk of that software, is to incorporate risk management processes into the SDLC itself. From requirements definition to release, software should be developed with insight into the risk of it being compromised, and the necessary risk management decisions and steps must be taken to address it.

1.8.3 Risk Management for Software

As mentioned earlier, risk management as it relates to software and software development has its challenges. Some of the reasons for these challenges are:

- Software risk management is still maturing.

- Determination of software asset values is often subjective.

- Data on the exposure factor, impact, and probability of software security breaches is lacking or limited.

- Technical security risk is only a portion of the overall state of secure software.

Risk management is still maturing in the context of software development, and there are challenges that one faces, because risk management is not yet an exact science when it comes to software development. Not only is this still an emerging field, but it is also difficult to quantify software assets accurately. Asset value is often determined as the value of the systems that the software runs on, instead of the value of the software itself. This is very subjective as well. The value of the data that the software processes is usually just an estimate of potential loss. Additionally, owing to the closed nature of the industry, wherein the exact terms of software security breaches are not necessarily fully disclosed, one is left to speculate on what it would cost should a similar breach occur within one’s own organization. Although historical data such as the chronology of data breaches published by the Privacy Rights Clearinghouse are of some use in learning about the potential impact that can be imposed on an organization, they only date back a few years (since 2005), and there is really no way of determining the exposure factor or the probability of similar security breaches within your organization.

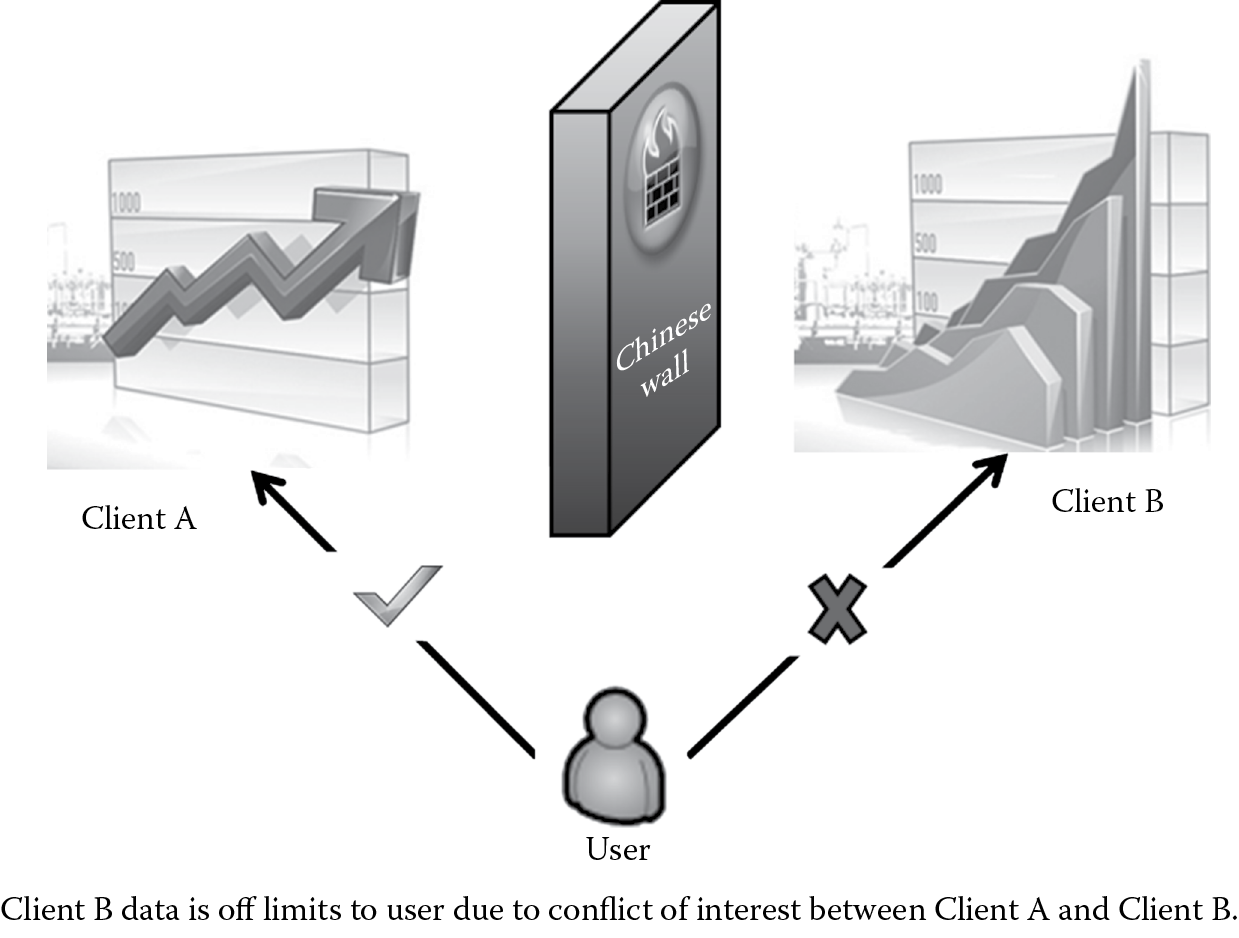

Software security is also more than merely writing secure code, and some of the current-day methodologies of computing risk, which use the number of threats and vulnerabilities found through source and object code scanning, capture only a small portion of the overall risk of that software. Process and people related risks must be factored in as well. For example, the lack of proper change control processes and inadequately trained and educated personnel can lead to insecure installation and operation of software that was deemed to be technically secure and had all of its code vulnerabilities addressed. A plethora of information breaches and data losses has been attributed to privileged third parties and employees who have access to internal systems and software. The risk of disclosure, alteration, and destruction of sensitive data imposed by internal employees and vendors who are allowed to have access within your organization is another very important aspect of software risk management that cannot be ignored.

Unless your organization has a legally valid document that transfers the liability to another party, your organization assumes all of the liability when it comes to software risk management. Your clients and customers will look for someone to be held accountable for a software security breach that affects them, and it will not be the perpetrators that they would go after but you, whom they have entrusted to keep them secure and serviced. The “real” risk belongs to your organization.

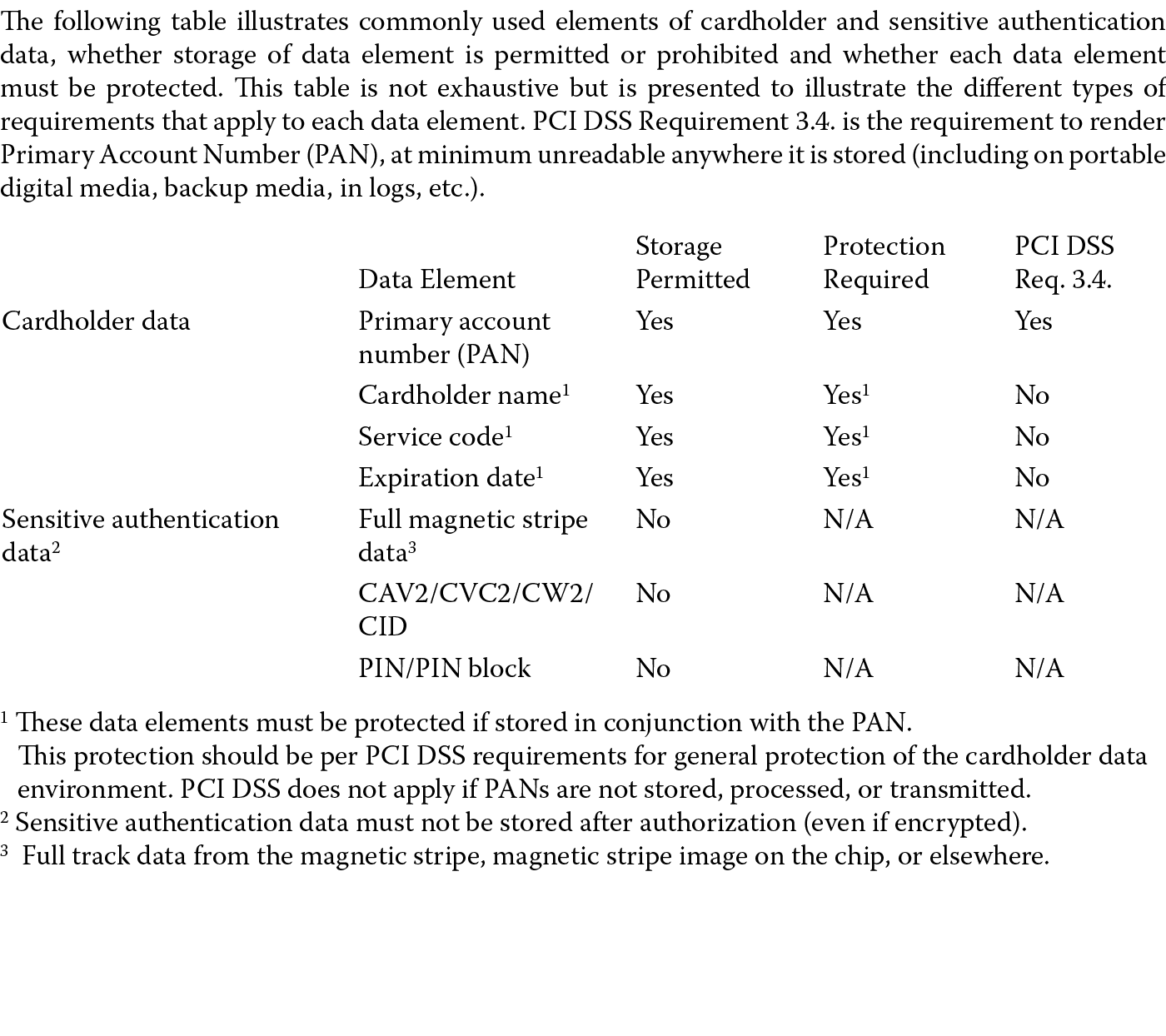

1.8.4 Handling Risk

Suppose your organization operates an e-commerce store selling products on the Internet. Today, it has to comply with data protection regulations such as the Payment Card Industry Data Security Standard (PCI DSS) to protect card holder data. Before the PCI DSS regulatory requirement was in effect, your organization had been transmitting and storing the credit card primary account number (PAN), card holder name, service code, and expiration date of the card, along with sensitive authentication data such as the full magnetic track data, the card verification code, and the PIN, all in clear text (humanly readable form). As depicted in Figure 1.7, PCI DSS version 1.2 disallows the storage of any sensitive authentication data, even if it is encrypted, and the storage of the PAN along with card holder name, service code, and expiration date in clear text. Over open, public networks such as the Internet, wireless networks, Global System for Mobile Communications (GSM), or General Packet Radio Service (GPRS), card holder data and sensitive authentication data cannot be transmitted in clear text.

Note that although the standard does not disallow transmission of these data in the clear over closed, private networks, it is still a best practice to comply with the standard and protect this information to avoid any potential disclosure, even to internal employees or privileged access users.

As a CSSLP, you advise the development team that the risk of disclosure is high and it needs to be addressed as soon as possible. The management team now has to decide on how to handle this risk, and they have five possible ways to address it.

- Ignore the risk: They can choose to not handle the risk and do nothing, leaving the software as is. The risk is left unhandled. This is highly ill advised because the organization can find itself at the end of a class action lawsuit and regulatory oversight for not protecting the data that its customers have entrusted to it.

- Avoid the risk: They can choose to discontinue the e-commerce store, which is not practical from a business perspective because the e-commerce store is the primary source of sales for your organization. In certain situations, discontinuing use of the existing software may be a viable option, especially when the software is being replaced by a newer product. Risk may be avoided, but it must never be ignored.

- Mitigate the risk: The development team chooses to implement security controls (safeguards and countermeasures) to reduce the risk. They plan to use security protocols such as Secure Sockets Layer (SSL)/Transport Layer Security (TLS) or IPSec to safeguard sensitive card holder data over open, public networks. Although the risk of disclosure during transmission is reduced, the residual risk that remains is the risk of disclosure in storage. You advise the development team of this risk. They choose to encrypt the information before storing it. Although it may seem like the risk is mitigated completely, there still remains the risk of someone deciphering the original clear text from the encrypted text if the encryption solution is weakly implemented. Moreover, according to the PCI DSS standard, sensitive authentication data cannot be stored even if it is encrypted and so the risk of noncompliance still remains. So it is important that the decision makers who are responsible for addressing the risk are made aware of the compliance, regulatory, and other aspects of risk and not merely yield to choosing a technical solution to mitigate it.

- Accept the risk: At this juncture, management can choose to accept the residual risk that remains and continue business operations or they can choose to continue to mitigate it by not storing disallowed card holder information. When the cost of implementing security controls outweighs the potential impact of the risk itself, one can accept the risk. However, it is imperative to realize that the risk acceptance process must be a formal process, and it must be well documented, preferably with a contingency plan to address the residual risk in subsequent releases of the software.

- Transfer the risk: One additional method by which management can choose to address the risk is to simply transfer it. This is usually done by buying insurance and works best for the organization when the cost of implementing the security controls exceeds the cost of potential impact of the risk itself. It must be understood, however, that it is the liability that is transferred and not necessarily the risk itself. This is because your customers are still going to hold you accountable for security breaches in your organization and the brand or reputational damage that can be realized may far outweigh the liability protection that your organization receives by way of transference of risk. Another way of transferring risk is to transfer the risk to independent third-party assessors who attest by way of vulnerability assessments and penetration testing that the software is secure for public release. However, when this is done, it must be contractually enforceable.
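
The storage rules discussed under the mitigation option can be sketched in code: sensitive authentication data is dropped before anything is persisted, and the PAN is protected rather than stored in clear text. The Python sketch below is only an illustration of that rule as described in this section; the field names and the function `prepare_for_storage` are hypothetical, and `protect_pan` stands in for whatever approved encryption routine the organization actually uses.

```python
# Hypothetical field names for data that may never be stored, even if encrypted.
SENSITIVE_AUTHENTICATION_FIELDS = {
    "full_track_data",
    "card_verification_code",
    "pin",
}


def prepare_for_storage(record: dict, protect_pan) -> dict:
    """Return a copy of the record that is safe to persist.

    Sensitive authentication data is removed, and the PAN (if present) is
    passed through the caller-supplied protect_pan callable.
    """
    stored = {
        key: value
        for key, value in record.items()
        if key not in SENSITIVE_AUTHENTICATION_FIELDS
    }
    if "pan" in stored:
        stored["pan"] = protect_pan(stored["pan"])
    return stored
```

The original record is left untouched, so the transaction can still be authorized in memory while only the permitted fields reach the database.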

1.8.5 Risk Management Concepts: Summary

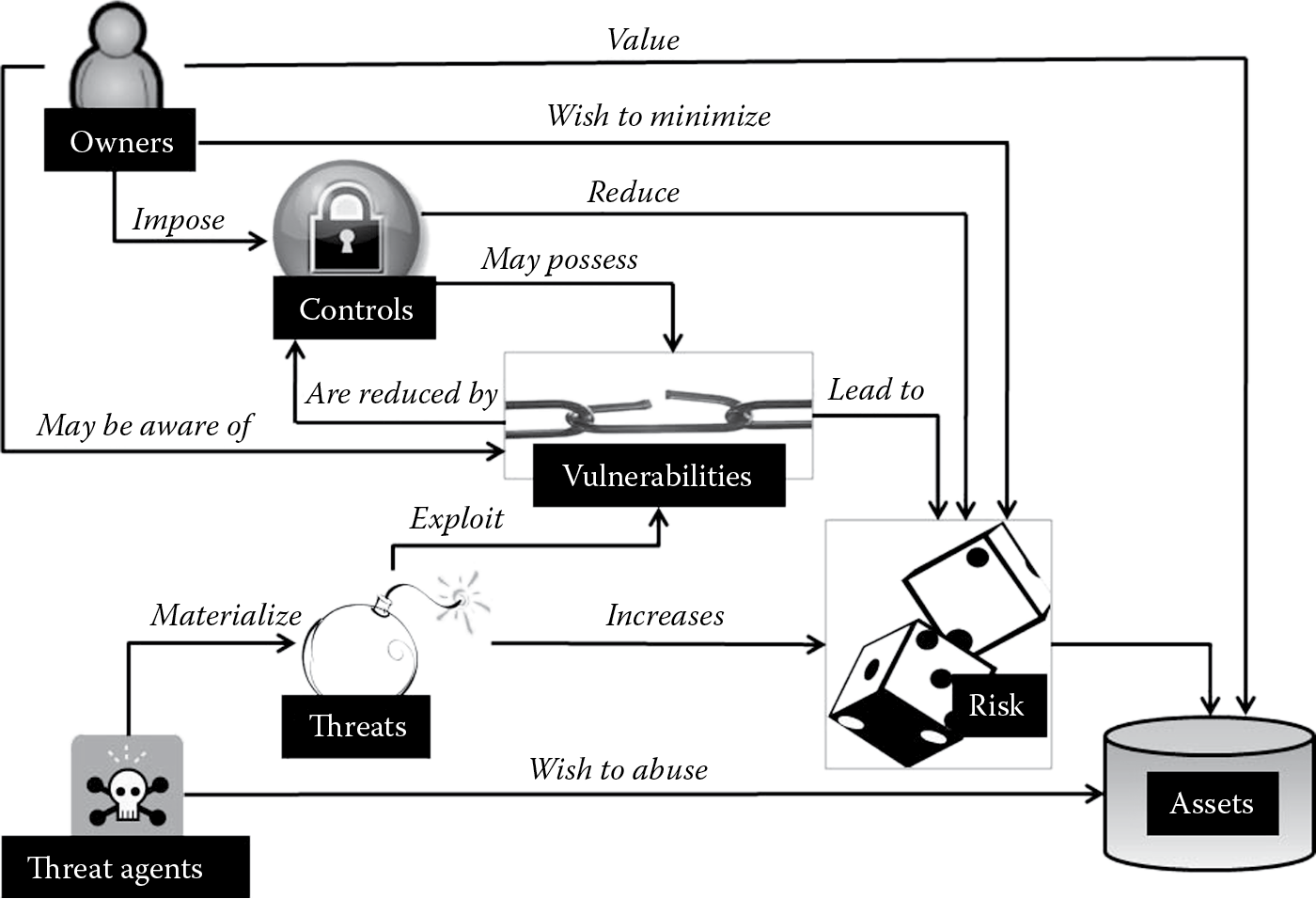

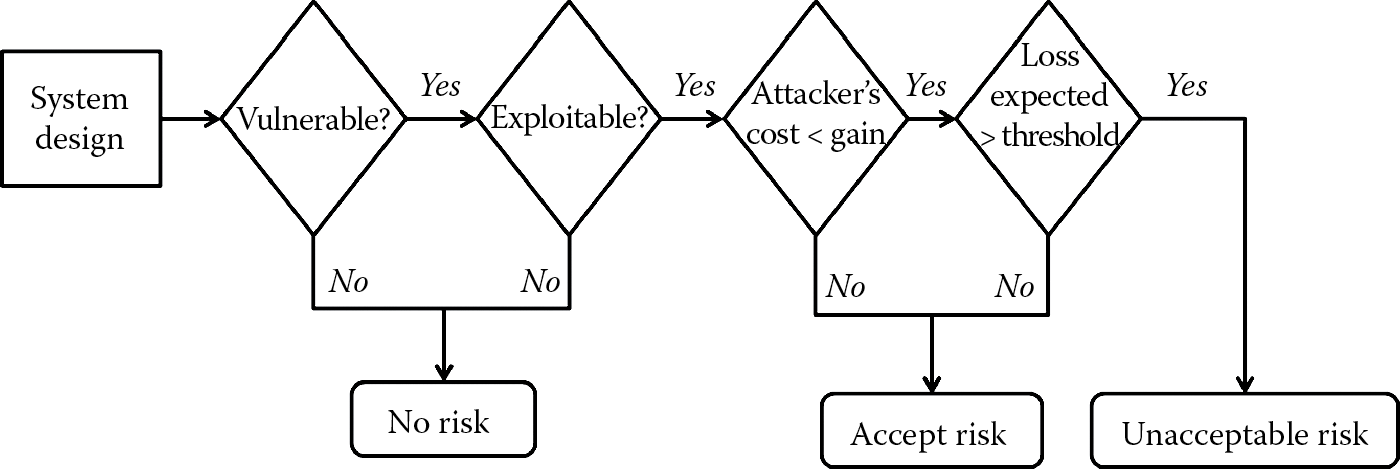

As you may know, a picture is worth a thousand words. The risk management concepts we have discussed so far are illustrated for easier understanding in Figure 1.8.

Owners value assets (software) and wish to minimize risk to assets. Threat agents wish to abuse and/or may damage assets. They may give rise to threats that increase the risk to assets. These threats may exploit vulnerabilities (weaknesses), leading to risk to assets. Owners may or may not be aware of these vulnerabilities. When known, these vulnerabilities may be reduced by the implementation of controls that reduce the risk to assets. It is also worth noting that the controls themselves may pose vulnerabilities leading to risk to assets. For example, the implementation of fingerprint reader authentication in your software as a biometric control to mitigate access control issues may itself pose the threat of denial of service to valid users, if the crossover error rate, which is the point at which the false rejection rate equals the false acceptance rate, for that biometric control is high.

1.9 Security Policies: The “What” and “Why” for Security

Contrary to what one may think it to be, a security policy is more than merely a written document. It is the instrument by which digital assets that require protection can be identified. It specifies at a high level “What” needs to be protected and the possible repercussions of noncompliance.

In addition to defining the assets that the organization deems as valuable, security policies identify the organization’s goals and objectives and communicate management’s goals and objectives for the organization.

Recently, legal and regulatory compliance has emerged as an important driver of information security spending and initiatives. Security policies help in ensuring an organization’s compliance with legal and regulatory requirements, provided that they complement and do not contradict these laws and regulations. With a clear-cut understanding of management’s expectations, the likelihood of personal interpretations and claims of ignorance is curtailed, especially when auditors find gaps between organizational processes and compliance requirements. A security policy protects the organization from surprises by providing a consistent basis for interpreting or resolving issues that arise. The security policy provides the framework and point of reference that can be used to measure an organization’s security posture. The gaps that are identified when being measured against a security policy, a consistent point of reference, can be used to determine effective executive strategy and decisions.

Additionally, security policies ensure nonrepudiation, because those who do not follow the security policy can be personally held accountable for their behavior or actions.

Security policies can also be used to provide guidance to architect secure software by addressing the confidentiality, integrity, and availability aspects of software.

Security policies can also define the functions and scope of the security team, document incident response and enforcement mechanisms, and provide for exception handling, rewards, and discipline.

1.9.1 Scope of the Security Policies

The scope of the information security policy may be organizational or functional. Organizational policy is universally applicable, and all who are part of the organization must comply with it, unlike a functional policy, which is limited to a specific functional unit or a specific issue. An example of organizational policy is the remote access policy that is applicable to all employees and nonemployees who require remote access into the organizational network. An example of a functional security policy is the data confidentiality policy, which specifies the functional units that are allowed to view sensitive or personal information. In some cases, these can even define the rights personnel have within these functional units. For example, not all members of the human resources team are allowed to view the payroll data of executives.

The information security policy may be a single comprehensive document, or it may be composed of many specific information security policy documents.

1.9.2 Prerequisites for Security Policy Development

It cannot be overstressed that security policies provide a framework for a comprehensive and effective information security program.

The success of an information security program, and more specifically of the software security initiatives within that program, is directly related to the enforceability of the security controls that need to be determined and incorporated into the SDLC. A security policy is the instrument that can provide this needed enforceability. Without security policies, one can reasonably argue that there are no teeth in the secure software initiatives that a passionate CSSLP or security professional would like to have in place. Those who are or who have been responsible for incorporating security controls and activities within the SDLC know that a security program often initially faces resistance. You can probably relate to being challenged by those who are resistant and who ask questions such as, “Why must I now take security more seriously as we have never done this before?” or “Can you show me where it mandates that I must do what you are asking me to do?” Security policies give authority to the security professional or security activity.

It is therefore imperative that security policies providing authority to enforce security controls in software are developed and implemented if your organization does not already have them. However, the development of security policies is more than a mere act of jotting a few “Thou shall” or “Thou shall not” rules on paper. Developing security policies that are effective and enforceable requires the support of executive management (top-level support). Without the support of executive management, even if security policies are successfully developed, their implementation will probably fail. Top-level support must include signature authorities from various teams and not just the security team. Including ancillary and related teams (such as legal, privacy, networking, and development) in the development of the security policies has the added benefit of buy-in and ease of adoption from the teams that need to comply with the security policy when it is implemented.

In addition to top-level support and inclusion of various teams in the development of a security policy, successful implementation of the security policy also requires marketing efforts that communicate the goals of management through the policy to end users. End users must be educated to determine security requirements (controls) that the security policy mandates, and those requirements must be factored into the software that is being designed and developed.

1.9.3 Security Policy Development Process

Security policy development is not a one-time activity. It must be an evergreen activity, i.e., security policies must be periodically evaluated so that they remain contextually correct and relevant to current-day threats. An example of a security policy that is not contextually correct is a regulator-imposed or adopted policy that mandates multifactor authentication in your software for all financial transactions when your organization does not yet have the infrastructure, such as token readers or biometric devices, to support multifactor authentication. An example of a security policy that is not relevant is one that requires you to use obsolete and insecure cryptographic technology such as the DES for data protection. DES has been proven to be easily broken with modern technology, although it may have been the de facto standard when the policy was developed. With the standardization of the AES, DES is now deemed to be an obsolete technology. Policies that explicitly mandate DES are no longer relevant, and so they must be reviewed and revised. Contextually incorrect, obsolete, and insecure requirements in policies are often flagged as noncompliance issues during an audit. This problem can be avoided by periodic review and revision of the security policies in effect. Keeping the security policies high level and independent of technology alleviates the need for frequent revisions.
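One way to picture a periodic review is a small check that flags policy requirements naming a technology that is now considered obsolete. The sketch below is illustrative only: the requirement identifiers, the `OBSOLETE_ALGORITHMS` set, and the `review_requirements` function are hypothetical and do not represent a prescribed review procedure.

```python
# Hypothetical list kept in a supporting standard, so that the high-level
# policy itself can stay independent of any particular technology.
OBSOLETE_ALGORITHMS = {"DES"}


def review_requirements(requirements):
    """Return the IDs of requirements that mandate an obsolete algorithm."""
    findings = []
    for requirement_id, algorithm in requirements.items():
        if algorithm.upper() in OBSOLETE_ALGORITHMS:
            findings.append(requirement_id)
    return findings


# Hypothetical data protection requirements taken from two policy revisions.
policy_requirements = {"DP-1": "DES", "DP-2": "AES"}

stale = review_requirements(policy_requirements)
print("Requirements to revise:", stale)
```

Because the list of obsolete algorithms lives outside the policy text, revising it does not require reissuing the policy, which mirrors the advice to keep policies high level and technology independent.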

It is also important to monitor the effectiveness of security policies and address issues that are identified as part of the lessons learned.

1.10 Security Standards

High-level security policies are supported by more detailed security standards. Standards support policies in that the adoption of security policies is made possible by more granular and specific standards. Like security policies, organizational standards are considered to be mandatory elements of a security program and must be followed throughout the enterprise unless a waiver is specifically granted for a particular function.

1.10.1 Types of Security Standards

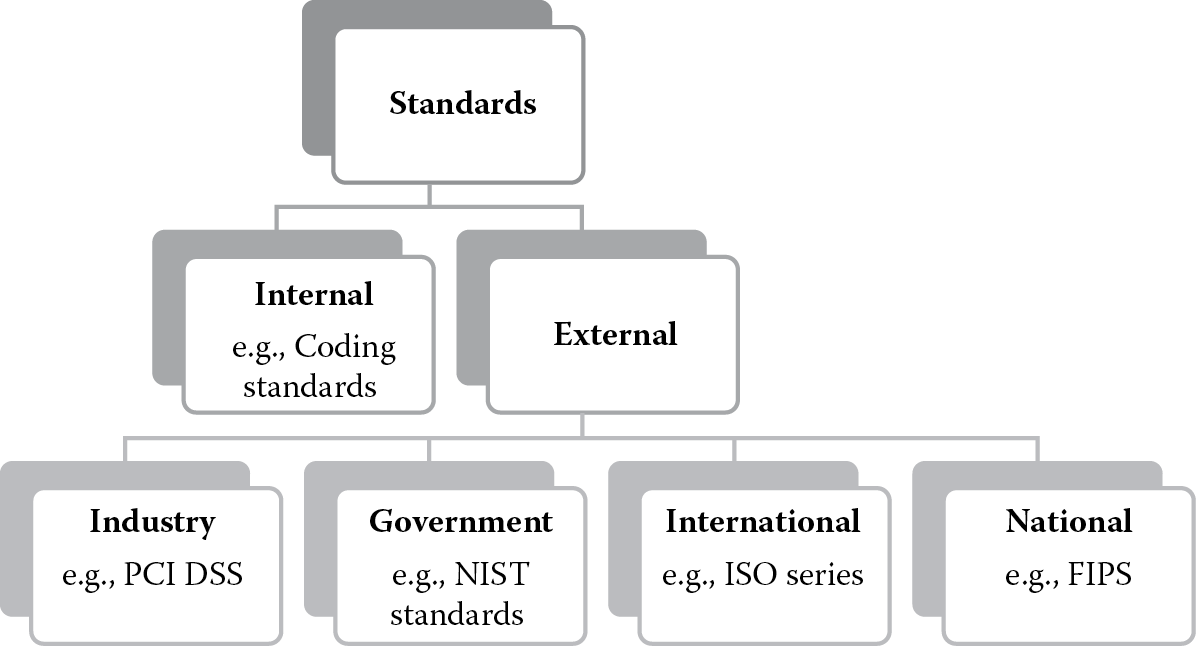

As Figure 1.9 depicts, security standards can be broadly categorized into Internal or External standards.

Internal standards are usually specific. The coding standard is an example of an internal software security standard. External standards can be further classified based on the issuer and recognition. Depending on who has issued the standard, external security standards can be classified into industry standards or government standards. An example of an industry-issued standard is the PCI DSS. Examples of government-issued standards include those generated by the NIST. Not all standards are recognized and enforceable uniformly across all geographic regions. Depending on the extent of recognition, external security standards (Weise, 2009) can be classified into national and international security standards. Although national security standards are often more focused and inclusive of local customs and practices, international standards are usually more comprehensive and generic in nature, spanning various standards with the goal of interoperability. The most prevalent examples of internationally recognized standards are those issued by the ISO, whereas examples of nationally recognized standards in the United States are the Federal Information Processing Standards (FIPS) and those by the American National Standards Institute (ANSI). It is also worth noting that, with globalization affecting the way organizations operate across the global landscape, most organizations lean toward the adoption of international standards over national ones.

It is important to recognize that, unlike standards, which are mandatory, guidelines are not. External standards generally provide guidelines to organizations, but organizations tend to designate them as the organization’s standard, which makes them mandatory.

It must be understood that within the scope of this book, a complete and thorough exposition of each standard related to software security would not be possible. As a CSSLP, it is important that you are familiar not only with the standards covered here but also with other standards that apply to your organization. In the next section, we will cover the following internal and external standards pertinent to security professionals as they apply to software:

- Coding standards

- PCI DSS

- NIST standards

- ISO standards

- Federal Information Processing Standards

1.10.1.1 Coding Standards

One of the most important internal standards that has a tremendous impact on the security of software is the coding standard. The coding standard specifies the requirements that are allowed and that need to be adopted by the development organization or team while writing code (building software). A separate coding standard need not be developed for each programming language or syntax; a single standard can cover several languages. Organizations that do not have a coding standard must plan to have one created and adopted.

The coding standard not only brings with it many security advantages but provides for nonsecurity related benefits as well. Consistency in style, improved code readability, and maintainability are some of the nonsecurity related benefits one gets when they follow a coding standard. Consistency in style can be achieved by ensuring that all development team members follow the prescribed naming conventions, overloaded operations syntax, or instrumentation, etc., explicitly specified in the coding standard. Instrumentation is the inline commenting of code that is used to describe the operations undertaken by a code section. Instrumentation also considerably increases code readability. One of the biggest benefits of following a coding standard is maintainability of code, especially in a situation when there is a high rate of employee turnover. When the developer who has been working on your critical software products leaves the organization, the inheriting team or team member will have a reduced learning time, if the developer who left had followed the prescribed coding standard.

Following the coding standard has security advantages as well. Software designed and developed to the coding standard is less prone to error and exposure to threats, especially if the coding standard incorporates the security aspects of writing secure code. For example, if the coding standard specifies that all exceptions must be explicitly handled with a laconic error message, then the likelihood of information disclosure is considerably reduced. Also, if the coding standard specifies that each try-catch block must include a finally block as well, where instantiated objects are disposed, then following this requirement reduces the chances of dangling pointers and objects left in memory, thereby addressing not only security concerns but performance as well.
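The two coding standard rules described above can be sketched as follows. This is an illustrative example in Python, where `try`/`except`/`finally` plays the role of try-catch-finally. The function name `read_first_line`, the `GENERIC_ERROR` message, and the logger name are assumptions made for the example and are not taken from any particular coding standard.

```python
import logging

logger = logging.getLogger("app")

# Laconic message shown to the caller; it reveals no paths or stack traces.
GENERIC_ERROR = "The request could not be completed."


def read_first_line(path):
    """Read the first line of a file while following both rules."""
    handle = None
    try:
        handle = open(path, "r", encoding="utf-8")
        return handle.readline().rstrip("\n")
    except OSError:
        # Full details go to the internal log only, never to the caller.
        logger.exception("Failed to read %s", path)
        return GENERIC_ERROR
    finally:
        # Runs on success and on failure, so the file handle is always released.
        if handle is not None:
            handle.close()
```

In idiomatic Python, a `with` statement would release the handle in the same way. The explicit `finally` block is used here only to mirror the wording of the rule.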

1.10.1.2 Payment Card Industry Data Security Standards

With the prevalence of e-commerce and Web computing in this day and age, those engaged in business that transmits and processes payment card information have very likely already been inundated with the PCI requirements, more particularly the PCI DSS. Originally developed by American Express, Discover Financial Services, JCB International, MasterCard Worldwide, and Visa, Inc. International, the PCI DSS is a set of comprehensive requirements aimed at increasing payment account data security. It is regarded as a multifaceted security standard because it includes requirements not only for the technological elements of computing such as network architecture and software design but also for security management, policies, procedures, and other critical protective measures.

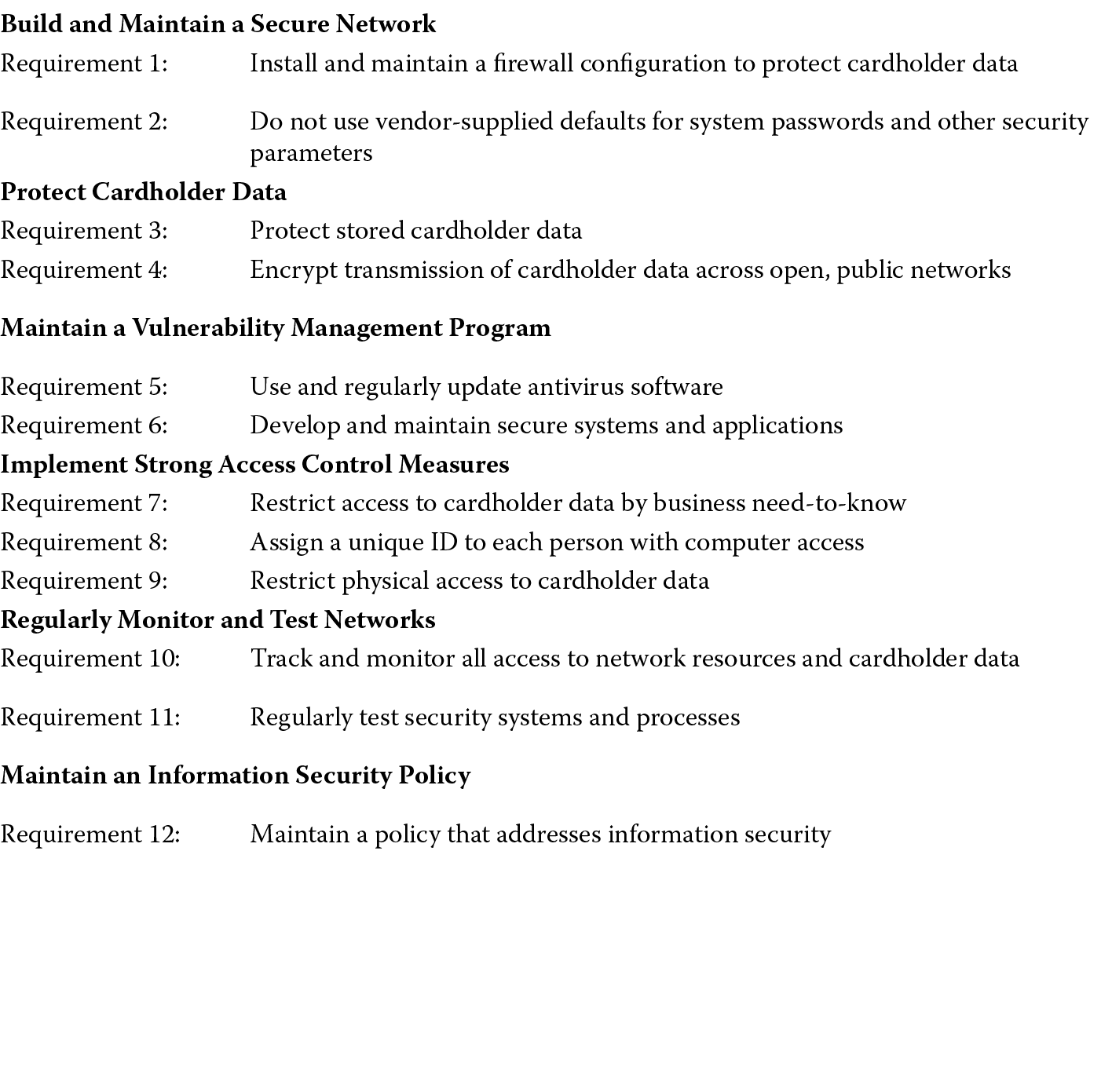

The goal of the PCI DSS is to facilitate organizations’ efforts to proactively protect cardholder payment account data. It comprises 12 foundational requirements that are mapped into six sections or control objectives, as Figure 1.10 illustrates.

If your organization needs to transmit, process, or store the primary account number (PAN), then PCI DSS requirements are applicable. Certain cardholder data elements, such as the sensitive authentication data comprising the full magnetic stripe, the security code, and the PIN block, are disallowed from being stored after authorization even if they are cryptographically protected. Although all of the requirements have a bearing on software security, the requirement that is directly and explicitly related to software security is Requirement 6, which is the requirement to develop and maintain secure systems and applications. Each of the 12 requirements is further broken down into subrequirements, and it is recommended that you become familiar with all of them if your organization is involved in the processing of credit card transactions. It is important to highlight Requirement 6 and its subrequirements (6.1 to 6.6) because they are directly related to software development. Table 1.1 tabulates PCI DSS Requirement 6 subrequirements one level deep.

PCI DSS Requirement 6 and Its Subrequirements

|

No. |

Requirement |

|

6 |

Develop and maintain secure systems and applications. |

|

6.1 |

Ensure that all system components and software have the latest vendor-supplied security patches installed. Install critical security patches within 1 month of release. |

|

6.2 |

Establish a process to identify newly discovered security vulnerabilities (e.g., alert subscriptions) and update configuration standards to address new vulnerability issues. |

|

6.3 |

Develop software applications in accordance with industry best practices (e.g., input validation, secure error handling, secure authentication, secure cryptography, secure communications, logging, etc.), and incorporate information security throughout the software development life cycle. |

|

6.4 |

Follow change control procedures for all changes to system components. |

|

6.5 |

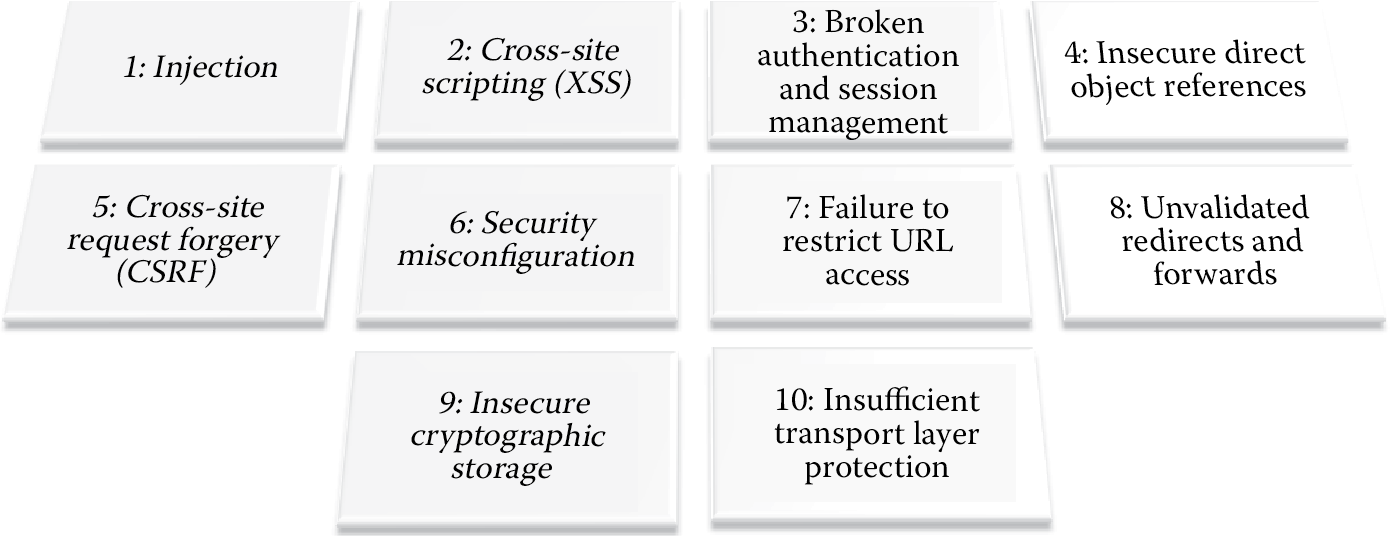

Develop all Web applications based on secure coding guidelines (such as OWASP) to cover common coding vulnerabilities in software development. |

|

6.6 |

For public-facing Web applications, address new threats and vulnerabilities on an ongoing basis and ensure these applications are protected against known attacks by either reviewing these applications annually or upon change, using manual or automated security assessment tools or methods, or by installing a Web application firewall in front of the public-facing Web application. |
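As a small illustration of the storage restriction on sensitive authentication data noted earlier in this section, the sketch below removes those elements from a record before it is persisted after authorization. The field names, the `strip_sensitive_auth_data` function, and the sample record are hypothetical. How the remaining data, including the PAN, is protected at rest is outside the scope of this sketch.

```python
# Hypothetical field names for the sensitive authentication data elements:
# full magnetic stripe contents, security code, and PIN block.
SENSITIVE_AUTH_FIELDS = {"full_track_data", "security_code", "pin_block"}


def strip_sensitive_auth_data(authorization_record):
    """Return a copy of the record without sensitive authentication data."""
    return {
        field: value
        for field, value in authorization_record.items()
        if field not in SENSITIVE_AUTH_FIELDS
    }


# Hypothetical record as it might exist in memory during authorization.
record = {
    "pan": "4111111111111111",  # commonly used test card number
    "expiry": "12/30",
    "security_code": "123",
    "pin_block": "0000000000000000",  # placeholder value, not a real PIN block
}

to_store = strip_sensitive_auth_data(record)
print(sorted(to_store))
```

Filtering at a single point just before persistence makes the rule easier to audit than relying on every caller to omit the fields.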

1.10.1.3 NIST Standards