Chapter 4

Secure Software Implementation/Coding

4.1 Introduction

Although software assurance is more than just writing secure code, writing secure code is an important and critical component to ensuring the resiliency of software security controls. Reports in full disclosure and security mailing lists are evidence that software written today is rife with vulnerabilities that can be exploited. A majority of these weaknesses can be attributed to insecure software design and/or implementation, and it is vitally important that software first and foremost be reliable, and second less prone to attack and more resilient when it is. Successful hackers today are identified as individuals who have a thorough understanding of programming. It is therefore imperative that software developers who write code must also have a thorough understanding of how their code can be exploited, so that they can effectively protect their software and data. Today’s security landscape calls for software developers who additionally have a security mindset. This chapter will cover the basics of programming concepts, delve into topics that discuss common software coding vulnerabilities and defensive coding techniques and processes, cover code analysis and code protection techniques, and finally discuss building environment security considerations that are to be factored into the software.

4.2 Objectives

As a CSSLP, you are expected to

- Have a thorough understanding of the fundamentals of programming.

- Be familiar with the different types of software development methodologies.

- Be familiar with common software attacks and means by which software vulnerabilities can be exploited.

- Be familiar with defensive coding principles and code protection techniques.

- Know how to implement safeguards and countermeasures using defensive coding principles.

- Know the difference between static and dynamic analysis of code.

- Know how to conduct a code/peer review.

- Be familiar with how to build the software with security protection mechanisms in place.

This chapter will cover each of these objectives in detail. It is imperative that you fully understand the objectives and be familiar with how to apply them in the software that your organization builds.

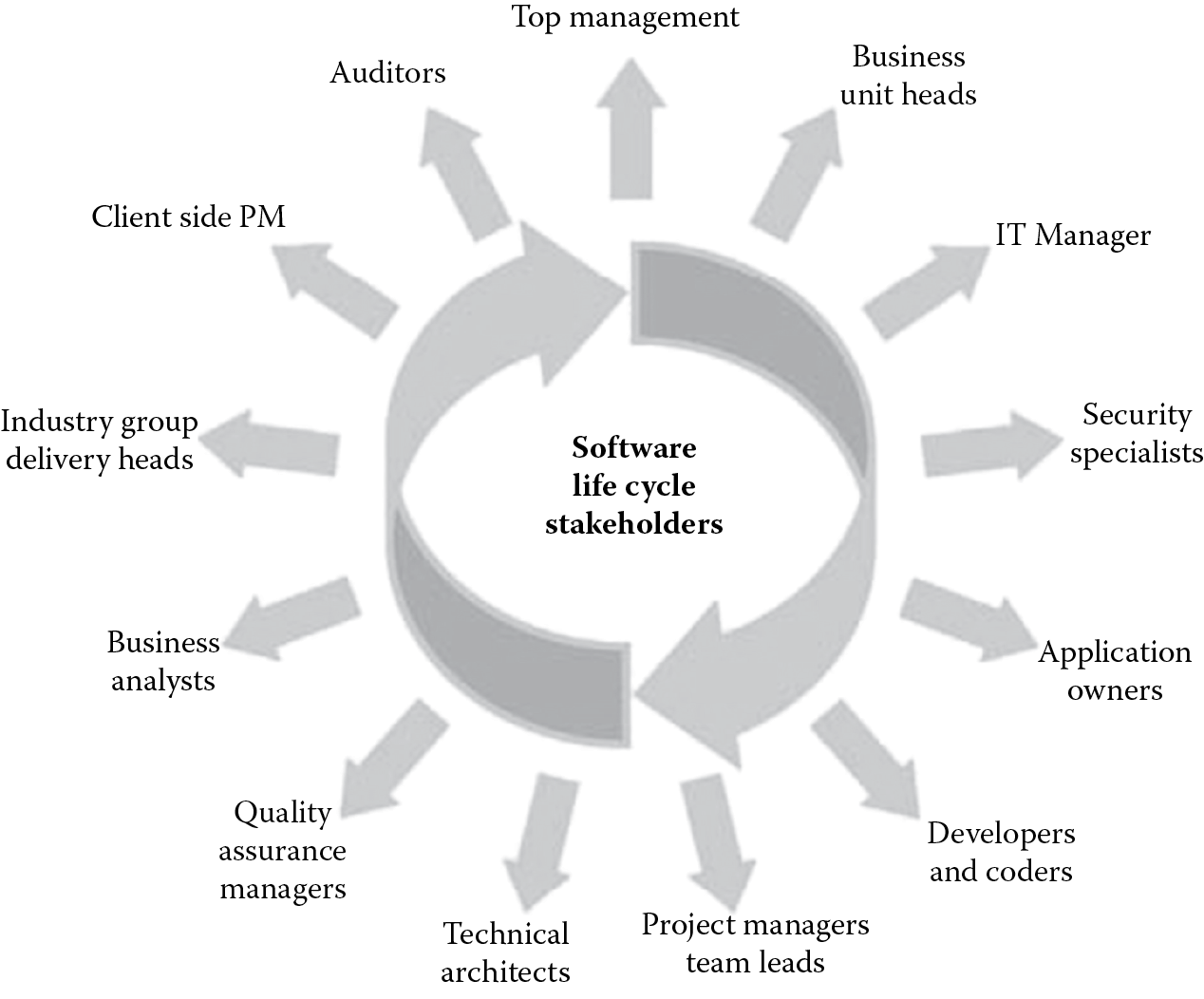

4.3 Who Is to Be Blamed for Insecure Software?

Although it may seem that the responsibility for insecure software lies primarily on the software developers who write the code, opinions vary, and the debate on who is ultimately responsible for a software breach is ongoing. Holding the coder solely responsible would be unreasonable since software is not developed in a silo. Software has many stakeholders, as depicted in Figure 4.1, and eventually all play a crucial role in the development of secure software. Ultimately, it is the organization (or company) that will be blamed for software security issues, and this state cannot be ignored.

4.4 Fundamental Concepts of Programming

Who is a programmer? What is their most important skill? A programmer is essentially someone who uses his/her technical know-how and skills to solve problems that the business has. The most important skills a programmer (used synonymously with a coder) has is problem solving. Programmers use their skills to construct business problem-solving programs (software) to automate manual processes, improving the efficiency of the business. They use programming languages to write programs. In the following section, we will learn about computer architecture, types of programming languages and code, and program utilities, such as assembler, compilers, and interpreters.

4.4.1 Computer Architecture

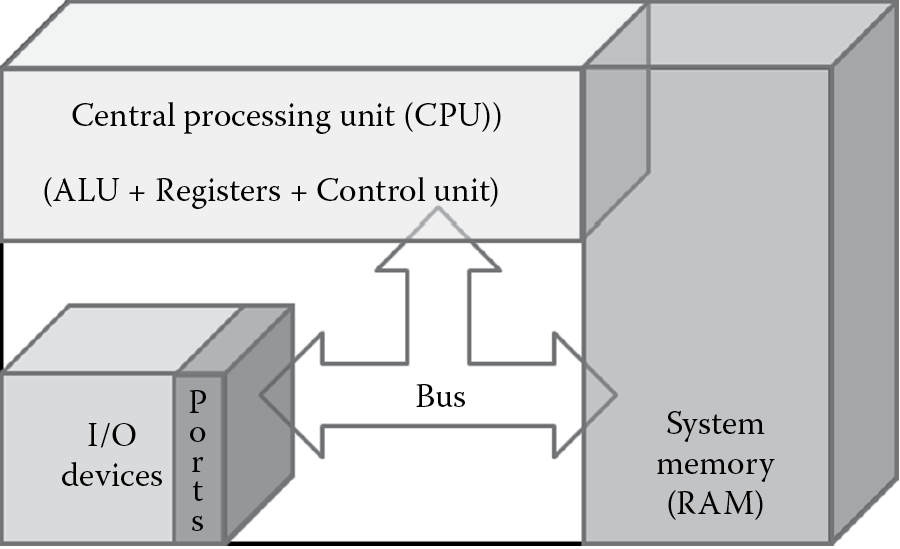

Most modern-day computers are primarily composed of the computer processor, system memory, and input/output (I/O) devices. Figure 4.2 depicts a simplified illustration of modern-day computer architecture.

The computer processor is more commonly known as the central processing unit (CPU). The CPU is made up of the

- Arithmetic logic unit (ALU), which is a specialized circuit used to perform mathematical and logical operations on the data.

- Control unit, which acts as a mediator controlling processing instructions. The control unit itself does not execute any instructions, but instructs and directs other parts of the system, such as the registers, to do so.

- Registers, which are specialized internal memory holding spaces within the processor itself. These are temporary storage areas for instruction or data, and they provide the advantage of speed.

Because CPU registers have only limited memory space, memory is augmented by system memory and secondary storage devices, such as the hard disks, digital video disks (DVDs), compact disks (CDs), and USB keys/fobs. The system memory is also commonly known as random access memory (RAM). The RAM is the main component with which the CPU communicates. I/O devices are used by the computer system to interact with external interfaces. Some common examples of input devices include a keyboard, mouse, etc., and some common examples of output devices include the monitor, printers, etc. The communication between each of these components occurs via a gateway channel that is called the bus.

The CPU, at its most basic level of operation, processes data based on binary codes that are internally defined by the processor chip manufacturer. These instruction codes are made up of several operational codes called opcodes. These opcodes tell the CPU what functions it can perform. For a software program to run, it reads instruction codes and data stored in the computer system memory and performs the intended operation on the data. The first thing that needs to happen is for the instruction and data to be loaded on to the system memory from an input device or a secondary storage device. Once this happens, the CPU does the following four functions for each instruction:

- Fetching: The control unit gets the instruction from system memory.

- The instruction pointer is used by the processor to keep track of which instruction codes have been processed and which ones are to be processed subsequently. The data pointer keeps track of where the data are stored in the computer memory, i.e., it points to the memory address.

- Decoding: The control unit deciphers the instruction and directs the needed data to be moved from system memory onto the ALU.

- Execution: Control moves from the control unit to the ALU, and the ALU performs the mathematical or logical operation on the data.

- Storing: The ALU stores the result of the operation in memory or in a register. The control unit finally directs the memory to release the result to an output device or a secondary storage device.

The fetch–decode–execute–store cycle is also known as the machine cycle. A basic understanding of this process is necessary for a CSSLP because they need to be aware of what happens to the code written by a programmer at the machine level.

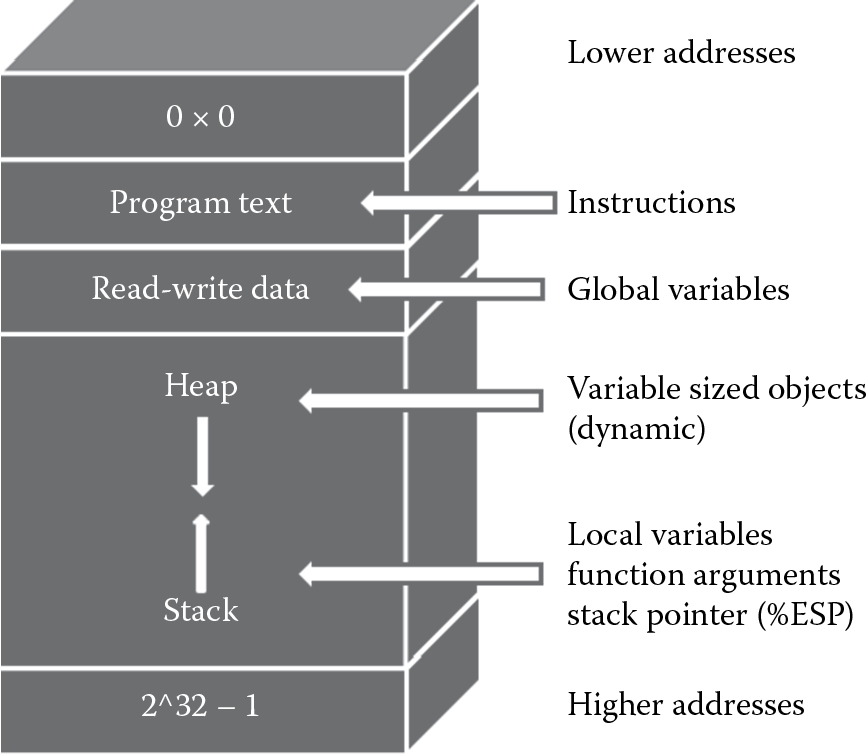

When the software program executes, the program allocates storage space in memory so that the program code and data can be loaded and processed as the programmer has intended it. The CPU registers are used to store the most immediate data; the compilers use the registers to cache frequently used function values and local variables that are defined in the source code of the program. However, since there are only a limited number of registers, most programs, especially the large ones, place their data values on the system memory (RAM) and use these values by referencing their unique addresses. Internal memory layout has the following segments: program text, data, stack, and heap, as depicted in Figure 4.3. Physically the stack and the heap are allocated areas on the RAM. The allocation of storage space in memory (also known as a memory object) is called instantiation. Program code uses the variables defined in the source code to access memory objects.

The series of execution instructions (program code) is contained in the program text segment. The next segment is the read–write data segment, which is the area in memory that contains both initialized and uninitialized global data. Function variables, local data, and some special register values, such as the execution stack pointer (ESP), are placed on the stack part of the RAM. The ESP points to the memory address location of the currently executing program function. Variable sized objects and objects that are too large to be placed on the stack are dynamically allocated on the heap part of the RAM. The heap provides the ability to run more than one process at a time, but for the most part with software, memory attacks on the stack are most prevalent.

The stack is an area of memory used to store function arguments and local variables, and it is allocated when a function in the source code is called to execute. When the function execution begins, space is allocated (pushed) on the stack, and when the function terminates, the allocated space is removed (popped off) the stack. This is known as the PUSH and POP operation. The stack is managed as a LIFO (last in, first out) data structure. This means that when a function is called, memory is first allocated in the higher addresses and used first. The PUSH direction is from higher memory addresses to lower memory addresses, and the POP direction is from lower memory addresses to higher memory addresses. This is important to understand because the ESP moves from higher memory to lower memory addresses, and, without proper management, serious security breaches can be evident.

Software hackers often have a thorough understanding of this machine cycle and how memory management happens, and without appropriate protection mechanisms in place, they can circumvent higher-level security controls by manipulating instruction and data pointers at the lowest level, as is the case with memory buffer overflow attacks and reverse engineering. These will be covered later in this chapter under the section about common software vulnerabilities and countermeasures.

4.4.2 Programming Languages

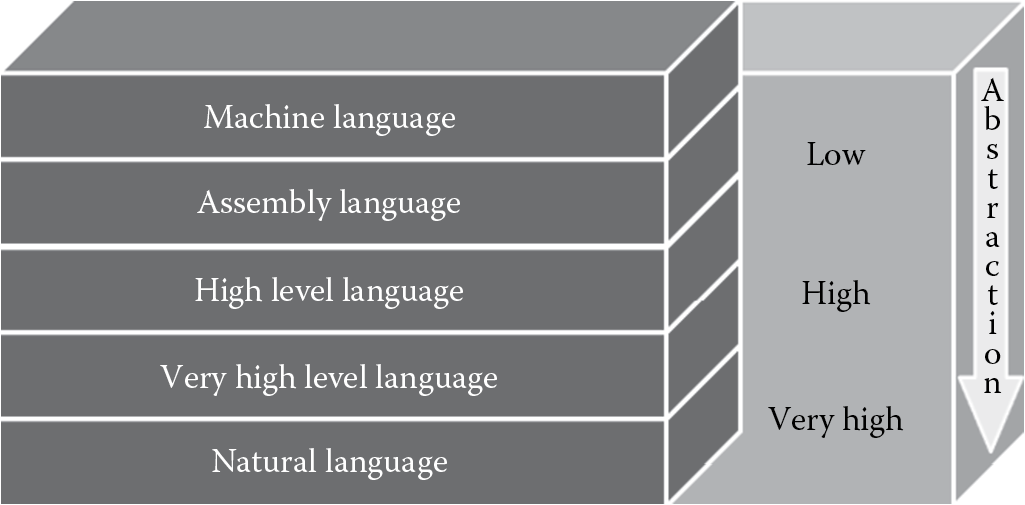

Knowledge of all the processor instruction codes can be extremely onerous on a programmer, if even humanly possible. Even an extremely simple program would require the programmer to write lines of code that manipulate data using opcodes, and in a fast-paced day and age where speed of delivery is critically important for the success of business, software programs, like any other product, cannot take an inordinate amount of time to create. To ease programmer’s effort and shorten the time to delivery of software development, simpler programming languages that abstract the raw processor instruction codes have been developed. There are many programming languages that exist today.

Software developers use a programming language to create programs, and they can choose a low-level programming language. A low-level programming language is closely related to the hardware (CPU) instruction codes. It offers little to no abstraction from the language that the machine understands, which is binary codes (0s and 1s). When there is no abstraction and the programmer writes code in 0s and 1s to manipulate data and processor instructions, which is a rarity, they are coding in machine language. However, the most common low-level programming language today is the assembly language, which offers little abstraction from the machine language using opcodes. Appendix C has a listing of the common opcodes used in assembly language for abstracting processor instruction codes in an Intel 80186 or higher microprocessor (CPU) chip. Machine language and assembly language are both examples of low-level programming languages. An assembler converts assembly code into machine code.

In contrast, high-level programming languages (HLL) isolate program execution instruction details and computer architecture semantics from the program’s functional specification itself. High-level programming languages abstract raw processor instruction codes into a notation that the programmer can easily understand. The specialized notation with which a programmer abstracts low-level instruction codes is called the syntax, and each programming language has its own syntax. This way, the programmer is focused on writing a code that addresses business requirements instead of being concerned with manipulating instruction and data pointers at the microprocessor level. This makes software development certainly simpler and the software program more easily understandable. It is, however, important to recognize that with the evolution of programming languages and integrated development environments (IDEs) and tools that facilitate the creation of software programs, even professionals lacking the internal knowledge of how their software program will execute at the machine level are now capable of developing software. This can be seriously damaging from a security standpoint because software creators may not necessarily understand or be aware of the protection mechanisms and controls that need to be developed and therefore inadvertently leave them out.

Today, the evolution of programming languages has given us goal-oriented programming languages that are also known as very high-level programming languages (VHLL). The level of abstraction in some of the VHLLs has been so increased that the syntax for programming in these VHLLs is like writing in English. Additionally, languages such as the natural language offer even greater abstraction and are based on solving problems using logic based on constraints given to the program instead of using the algorithms written in code by the software programmer. Natural languages are infrequently used in business settings and are also known as logic programming languages or constraint-based programming languages.

Figure 4.4 illustrates the evolution of programming languages from the low-level machine language to the VHLL natural language.

The syntax in which a programmer writes their program code is the source code. Source code needs to be converted into a set of instruction codes that the computer can understand and process. The code that the machine understands is the machine code, which is also known as native code. In some cases, instead of converting the source code into machine code, the source code is simply interpreted and run by a separate program. Depending on how the program is executed on the computer, HLL can be categorized into compiled languages and interpreted languages.

4.4.2.1 Compiled Languages

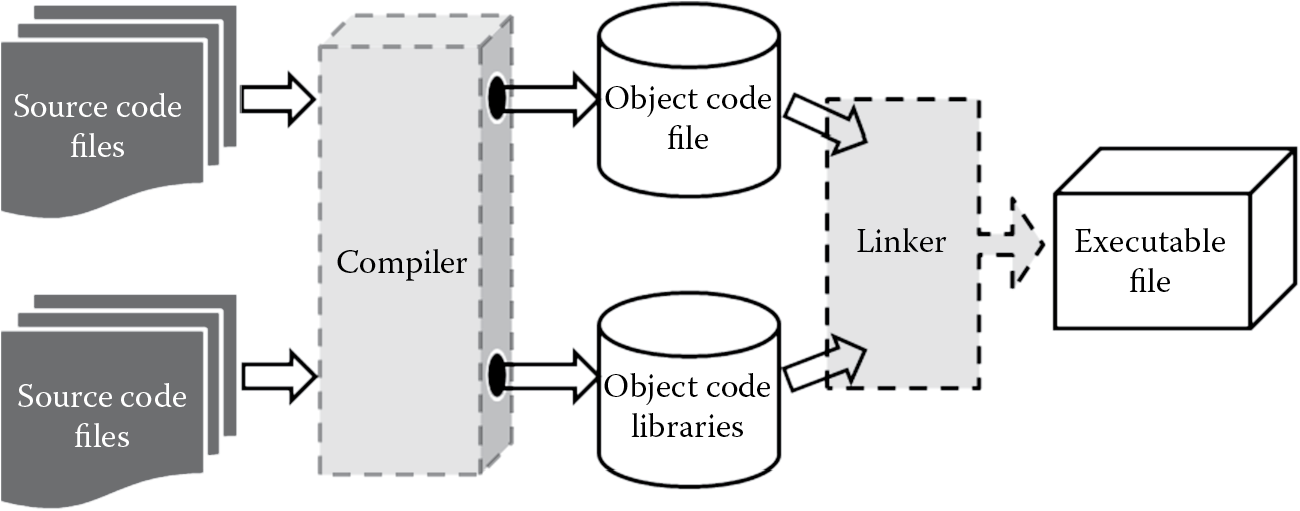

The predominant form of programming languages are compiled languages. Examples include COBOL, FORTRAN, BASIC, Pascal, C, C++, and Visual Basic. The source code that the programmer writes is converted into machine code. The conversion itself is a two-step process, as depicted in Figure 4.5, that includes two subprocesses: compilation and linking.

- Compilation: The process of converting textual source code written by the programmer into raw processor specific instruction codes. The output of the compilation process is called the object code, which is created by the compiler program. In short, compiled source code is the object code. The object code itself cannot be executed by the machine unless it has all the necessary code files and dependencies provided to the machine.

- Linking: The process of combining the necessary functions, variables, and dependencies files and libraries required for the machine to run the program. The output that results from the linking process is the executable program or machine code/file that machine can understand and process. In short, linked object code is the executable. Link editors that combine object codes are known as linkers. Upon the completion of the compilation process, the compiler invokes the linker to perform its function.

There are two types of linking: static linking and dynamic linking. When the linker copies all functions, variables, and libraries needed for the program to run into the executable itself, it is referred to as static linking. Static linking offers the benefit of faster processing speed and ease of portability and distribution because the required dependencies are present within the executable itself. However, based on the size and number of other dependencies files, the final executable can be bloated, and appropriate space considerations needs to be taken. Unlike static linking, in dynamic linking only the names and respective locations of the needed object code files are placed in the final executable, and actual linking does not happen until runtime, when both the executable and the library files are placed in memory. Although this requires less space, dynamically linked executables can face issues that relate to dependencies if they cannot be found at run time. Dynamic linking should be chosen only after careful consideration to security is given, especially if the linked object files are supplied from a remote location and are open source in nature. A hacker can maliciously corrupt a dependent library, and when they are linked at runtime, they can compromise all programs dependent on that library.

4.4.2.2 Interpreted Languages

While programs written in compiled languages can be directly run on the processor, interpreted languages require an intermediary host program to read and execute each statement of instruction line by line. The source code is not compiled or converted into processor-specific instruction codes. Common examples of interpreted languages include REXX, PostScript, Perl, Ruby, and Python. Programs written in interpreted languages are slower in execution speed, but they provide the benefit of quicker changes because there is no need for recompilation and relinking, as is the case with those written in compiled languages.

4.4.2.3 Hybrid Languages

To leverage the benefits provided by compiled languages and interpreted languages, there is also a combination (hybrid) of both compiled and interpreted languages. Here, the source code is compiled into an intermediate stage that resembles object code. The intermediate stage code is then interpreted as required. Java is a common example of a hybrid language. In Java, the intermediate stage code that results upon compilation of source code is known as the byte code. The byte code resembles processor instruction codes, but it cannot be executed as such. It requires an independent host program that runs on the computer to interpret the byte code, and the Java Virtual Machine (JVM) provides this for Java. In .Net programming languages, the source code is compiled into what is known as the common intermediate language (CIL), formerly known as Microsoft Intermediate Language (MSIL). At run time, the common language runtime’s (CLR) just in time compiler converts the CIL code into native code, which is then executed by the machine.

4.5 Software Development Methodologies

Software development is a structured and methodical process that requires the interplay of people expertise, processes, and technologies. The software development life cycle (SDLC) is often broken down into multiple phases that are either sequential or parallel. In this section, we will learn about the prevalent SDLC models that are used to develop software. These include:

- Waterfall model

- Iterative model

- Spiral model

- Agile development methodologies

4.5.1 Waterfall Model

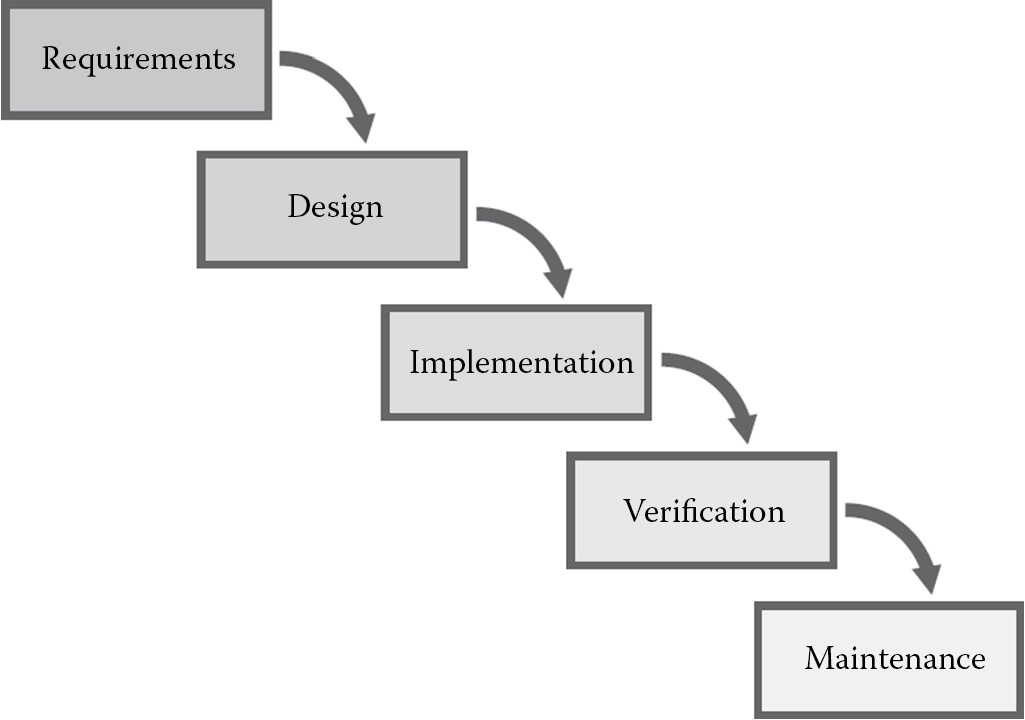

The waterfall model is one of the most traditional software development models still in use today. It is a highly structured, linear, and sequentially phased process characterized by predefined phases, each of which must be completed before one can move on to the next phase. Just as water can flow in only one direction down a waterfall, once a phase in the waterfall model is completed, one cannot go back to that phase. Winston W. Royce’s original waterfall model from 1970 has the following order of phases:

- Requirements specification

- Design

- Construction (also known as implementation or coding)

- Integration

- Testing and debugging (also known as verification)

- Installation

- Maintenance

The waterfall model is useful for large-scale software projects because it brings structure by phases to the software development process. The National Institute of Standards and Technology (NIST) Special Publication 800-64 REV 1d, covering Security Considerations in the Information Systems Development Life Cycle, breaks the linear waterfall SDLC model into five generic phases: initiation, acquisition/development, implementation/assessment, operations/maintenance, and sunset (Figure 4.6). Today, there are several other modified versions of the original waterfall model that include different phases with slight or major variations, but the definitive characteristic of each is the unidirectional sequential phased approach to software development.

From a security standpoint, it is important to ensure that the security requirements are part of the requirements phase. Incorporating any missed security requirements at a later point in time will result in additional costs and delays to the project.

4.5.2 Iterative Model

In the iterative model of software development, the project is broken into smaller versions and developed incrementally, as illustrated in Figure 4.7. This allows the development effort to be aligned with the business requirements, uncovering any important issues early in the project and therefore avoiding disastrous faulty assumptions. It is also commonly referred to as the prototyping model in which each version is a prototype of the final release to manufacturing (RTM) version. Prototypes can be built to clarify requirements and then discarded, or they may evolve into the final RTM version. The primary advantage of this model is that it offers increased user input opportunity to the customer or business, which can prove useful to solidify the requirements as expected before investing a lot of time, effort, and resources. However, it must be recognized that if the planning cycles are too short, nonfunctional requirements, especially security requirements, can be missed. If it is too long, then the project can suffer from analysis paralysis and excessive implementation of the prototype.

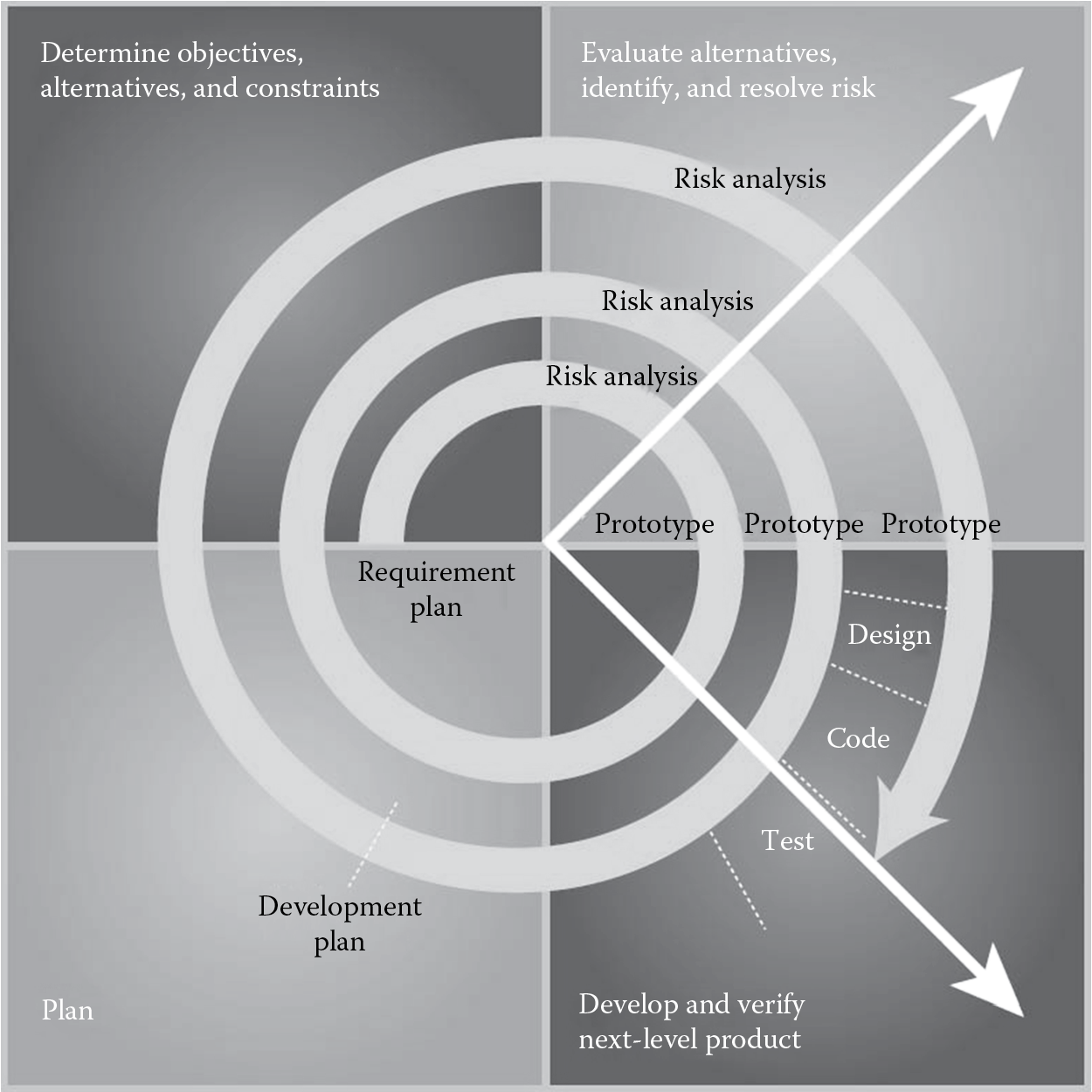

4.5.3 Spiral Model

The spiral model, as shown in Figure 4.8, is a software development model with elements of both the waterfall model and the prototyping model, generally used for larger projects. The key characteristic of this model is that each phase has a risk assessment review activity. The risk of not completing the software development project within the constraints of cost and time is estimated, and the results of the risk assessment activity are used to find out if the project needs to be continued or not. This way, should the success of completing the project be determined as questionable, then the project team has the opportunity to cut the losses before investing more into the project.

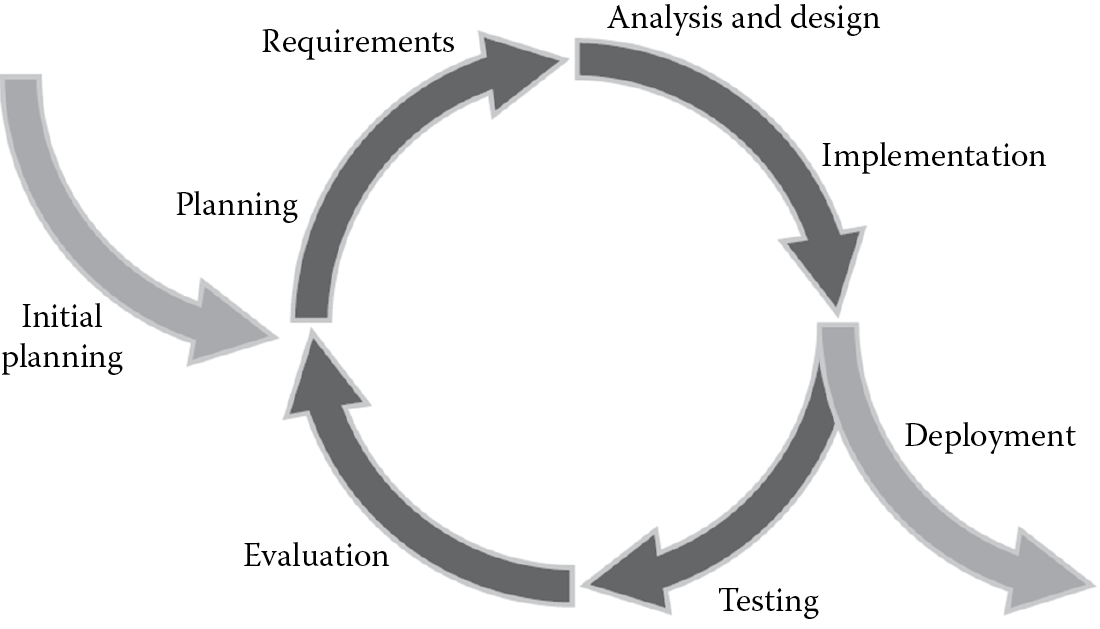

4.5.4 Agile Development Methodologies

Agile development methodologies are gaining a lot of acceptance today, and most organizations are embracing them for their software development projects. Agile development methodologies are built on the foundation of iterative development with the goal of minimizing software development project failure rates by developing the software in multiple repetitions (iterations) and small timeframes (called timeboxes). Each iteration includes the full SDLC. The primary benefit of agile development methodologies is that changes can be made quickly. This approach uses feedback driven by regular tests and releases of the evolving software as its primary control mechanism, instead of planning in the case of the spiral model.

The two main agile development methodologies include:

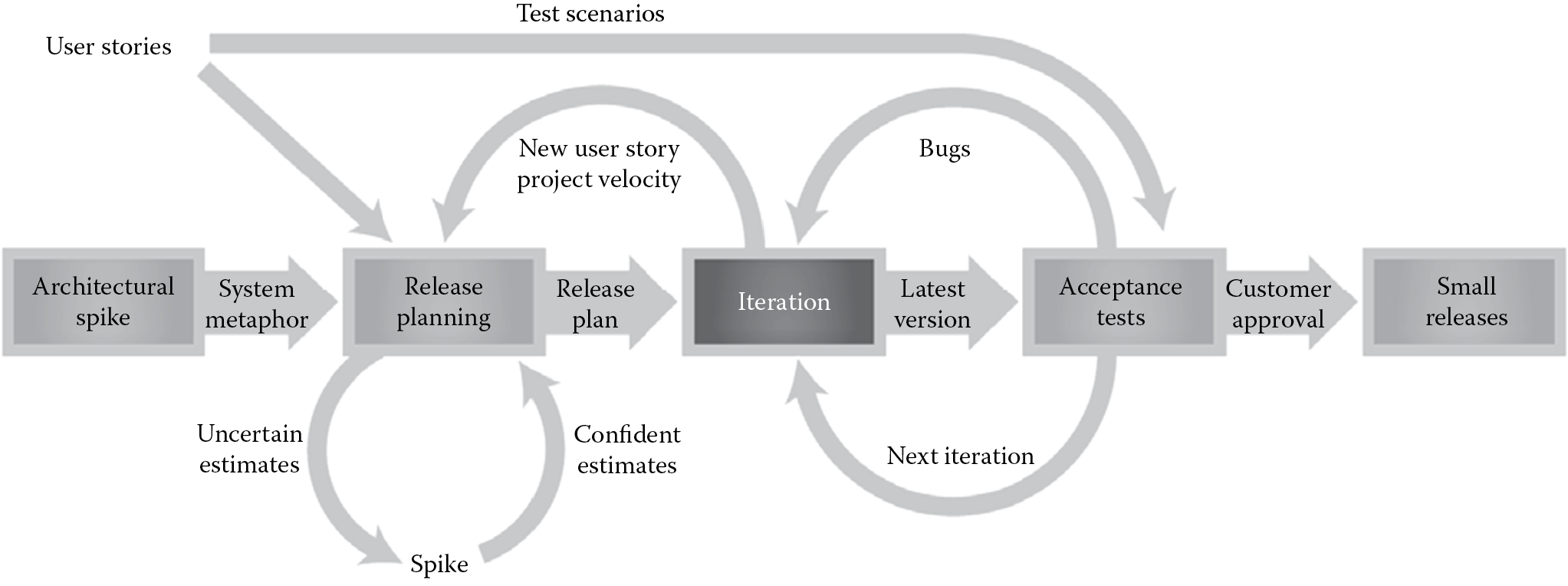

- Extreme Programming (XP) model: The XP model is also referred to as the “people-centric” model of programming and is useful for smaller projects. It is a structured process, as depicted in Figure 4.9, that storyboards and architects user requirements in iterations and validates the requirements using acceptance testing. Upon acceptance and customer approval, the software is released. Success factors for the XP model are: (1) starting with the simplest solutions and (2) communication between team members. Some of the other distinguishing characteristics of XP are adaptability to change, incremental implementation of updates, feedback from both the system and the business user or customer, and respect and courage for all who are part of the project.

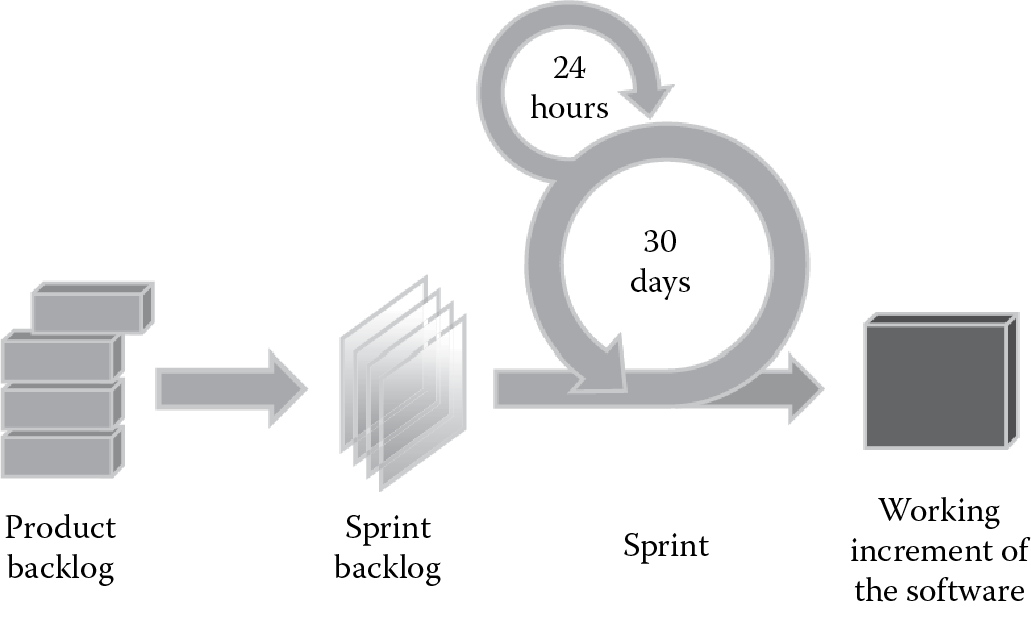

- Scrum: Another recent, very popular, and widely used agile development methodology is the Scrum programming approach. The Scrum approach calls for 30-day release cycles to allow the requirements to be changed on the fly, as necessary. In Scrum methodology, the software is kept in a constant state of readiness for release, as shown in Figure 4.10. The participants in Scrum have predefined roles of two types depending on their level of commitment: pig roles (those who are committed, whose bacon is on the line) and chicken roles (those who are part of the Scrum team participating in the project). Pig roles include the Scrum master who functions like a project manager in regular projects, the product owner who represents the stakeholders and is the voice of the customer, and the team of developers. The team size is usually between five and nine for effective communication. Chicken roles include the users who will use the software being developed, the stakeholders (the customer or vendor), and other managers. A prioritized list of high level requirements is first developed known as a product backlog. The time allowed for development of the product backlog, usually about 30 days, is called a sprint. The list of tasks to be completed during a sprint is called the sprint backlog. A daily progress for a sprint is recorded for review in the artifact known as the burn down chart.

4.5.5 Which Model Should We Choose?

In reality, the most conducive model for enterprise software development is usually a combination of two or more of these models. It is important, however, to realize that no model or combination of models can create inherently secure software. For software to be securely designed, developed, and deployed, a minimum set of security tasks needs to be effectively incorporated into the system development process, and the points of building security into the SDLC model should be identified.

4.6 Common Software Vulnerabilities and Controls

Although secure software is the result of a confluence between people, process, and technology, in this chapter, we will primarily focus on the technology and process aspects of writing secure code. We will learn about the most common vulnerabilities that result from insecure coding, how an attacker can exploit those vulnerabilities, and the anatomy of the attack itself. We will also discuss security controls that must be put in place (in the code) to resist and thwart actions of threat agents.

Nowadays, most of the reported incidents of security breaches seem to have one thing in common: they are attacks that exploited some weakness in the software layer. Analysis of the breaches invariably indicates one of the following to be the root cause of the breach: design flaws, coding (implementation) issues, and improper configuration and operations, with the prevalence of attacks exploiting software coding weaknesses. The Open Web Application Security Project (OWASP) Top 10 List and the Common Weakness Enumeration (CWE/SANS) Top 25 List of the most dangerous programming errors are testaments to the fact that software programming has a lot to do with its security. The 2010 OWASP Top 10 List, in addition to considering the most common application security issues from a weaknesses or vulnerabilities perspective (as did the 2004 and 2007 versions), views application security issues from an organizational risks (technical risk and business impact) perspective, as tabulated in Table 4.1. The 2009 CWE/SANS Top 25 List of the most dangerous programming errors is shown in Table 4.2.

The 2009 CWE/SANS Top 25 List of the most dangerous programming errors falls into the following three categories:

- Insecure interaction between components: includes weaknesses that relate to insecure ways in which data are sent and received between separate components, modules, programs, process, threads, or systems.

- Risky resource management: includes weaknesses that relate to ways in which software does not properly manage the creation, usage, transfer, or destruction of important system resources.

- Porous defenses: includes weaknesses that relate to defensive techniques that are often misused, abused, or just plain ignored.

The categorization of the 2009 CWE/SANS Top 25 List of most dangerous programming errors is shown in Table 4.3.

It is recommended that you visit the respective Web sites for the OWASP Top 10 List and the CWE/SANS Top 25 List, as a CSSLP is expected to be familiar with programming issues that can lead to security breaches and know how to address them. The most common software security vulnerabilities and risks are covered in the following section. Each vulnerability or risk is first described as to what it is and how it occurs and is followed by a discussion of security controls that can be implemented to mitigate it.

4.6.1 Injection Flaws

|

OWASP Top 10 Rank |

1 |

|

CWE Top 25 Rank |

2, 9 |

Considered one of the most prevalent software (or application) security weaknesses, injection flaws occur when the user-supplied data are not validated before being processed by an interpreter. The attacker supplies data that are accepted as they are and interpreted as a command or part of a command, thus allowing the attacker to execute commands using any injection vector. Almost any data accepting source are a potential injection vector if the data are not validated before they are processed. Common examples of injection vectors include QueryStrings, form input, and applets in Web applications. Injection flaws are easily discoverable using code review, and scanners, including fuzzing scans, can be used to detect them. There are several different types of injection attacks.

The most common injection flaws include SQL injection, OS command injection, LDAP injection, and XML injection.

- SQL Injection

This is probably the most well-known form of injection attack, as the databases that store business data are becoming the prime target for attackers. In SQL (Structured Query Language) injection, attackers exploit the way in which database queries are constructed. They supply input that, if not sanitized or validated, becomes part of the query that the database processes as a command. Let us consider an example of a vulnerable code implementation in which the query command text (sSQLQuery) is dynamically built using data supplied from text input fields (txtUserID and txtPassword) from the Web form.

string sSQLQuery = “ SELECT * FROM USERS WHERE user_id = ‘ ” + txtUserID.Text + ” ‘ AND user_password = ‘ ” + txtPassword.Text + ” ‘

If the attacker supplies ‘ OR 1=1 -- as the txtUserID value, then the SQL Query command text that is generated is as follows:

string sSQLQuery = “ SELECT * FROM USERS WHERE user_id = ‘ ” + ‘ OR 1=1 - - + ” ‘ AND user_password = ‘ ” + txtPassword.Text + ” ‘

This results in SQL syntax, as shown below, that the interpreter will evaluate and execute as a valid SQL command. Everything after the -- in T-SQL is ignored.

SELECT * FROM USERS WHERE user_id = ‘ ’ OR 1=1 - -

The attack flow in SQL injection comprises the following steps:

- Exploration by hypothesizing SQL queries to determine if the software is susceptible to SQL injection

- Experimenting to enumerate internal database schema by forcing database errors

- Exploiting the SQL injection vulnerability to bypass checks or modify, add, retrieve, or delete data from the database

Upon determining that the application is susceptible to SQL injection, an attacker will attempt to force the database to respond with messages that potentially disclose internal database structure and values by passing in SQL commands that cause the database to error. Suppressing database error messages considerably thwarts SQL injection attacks, but it has been proven that this control measure is not sufficient to prevent SQL injection completely. Attackers have found a way to go around the use of error messages for constructing their SQL commands, as is evident in the variant of SQL injection known as blind SQL injection. In blind SQL injection, instead of using information from error messages to facilitate SQL injection, the attacker constructs simple Boolean SQL expressions (true/false questions) to probe the target database iteratively. Depending on whether the query was successfully executed, the attacker can determine the syntax and structure of the injection. The attacker can also note the response time to a query with a logically true condition and one with a false condition and use that information to determine if a query executes successfully or not.

- OS Command Injection

This works in the same principle as the other injection attacks where the command string is generated dynamically using input supplied by the user. When the software allows the execution of operation system (OS) level commands using the supplied user input without sanitization or validation, it is said to be susceptible to OS Command injection. This could be seriously devastating to the business if the principle of least privilege is not designed into the environment that is being compromised. The two main types of OS Command injection are as follows:

- The software accepts arguments from the user to execute a single fixed program command. In such cases, the injection is contained only to the command that is allowed to execute, and the attacker can change the input but not the command itself. Here, the programming error is that the programmer assumes that the input supplied by users to be part of the arguments in the command to be executed will be trustworthy as intended and not malicious.

- The software accepts arguments from the user that specify what program command they would like the system to execute. This is a lot more serious than the previous case, because now the attacker can chain multiple commands and do some serious damage to the system by executing their own commands that the system supports. Here, the programming error is that the programmer assumes that the command itself will not be accessible to untrusted users.

An example of an OS Command injection that an attacker supplies as the value of a QueryString parameter to execute the bin/ls command to list all files in the “bin” directory is given below:

http://www.mycompany.com/sensitive/cgi-bin/userData.pl?doc=%20%3B%20/bin/ls%20-l %20 decodes to a space and %3B decodes to a ; and the command that is executed will be /bin/ls -l listing the contents of the program’s working directory.

- LDAP Injection

LDAP is used to store information about users, hosts, and other objects. LDAP injection works on the same principle as SQL injection and OS command injection. Unsanitized and unvalidated input is used to construct or modify syntax, contents, and commands that are executed as an LDAP query. Compromise can lead to the disclosure of sensitive and private information as well as manipulation of content within the LDAP tree (hierarchical) structure. Say you have the ldap query (_sldapQuery) built dynamically using the user-supplied input (userName) without any validation, as shown in the example below.

String _sldapQuery = ’’ (cn=’’ + $userName + ’’) ’ ’;

If the attacker supplies the wildcard ‘*”, information about all users listed in the directory will be disclosed. If the user supplies the value such as ‘’‘’sjohnson) (|password=*))‘’, the execution of the LDAP query will yield the password for the user sjohnson.

- XML Injection

XML injection occurs when the software does not properly filter or quote special characters or reserved words that are used in XML, allowing an attacker to modify the syntax, contents, or commands before execution. The two main types of XML injection are as follows:

- XPATH injection

- XQuery injection

In XPATH injection, the XPath expression used to retrieve data from the XML data store is not validated or sanitized before processing and built dynamically using user-supplied input. The structure of the query can thus be controlled by the user, and an attacker can take advantage of this weakness by injecting malformed XML expressions to perform malicious operations, such as modifying and controlling logic flow, retrieving unauthorized data, and circumventing authentication checks. XQuery injection works the same way as an XPath injection, except that the XQuery (not XPath) expression used to retrieve data from the XML data store is not validated or sanitized before processing and built dynamically using user-supplied input.

Consider the following XML document (accounts.xml) that stores the account information and pin numbers of customers and a snippet of Java code that uses XPath query to retrieve authentication information:

<customers>

<customer>

<user_name>andrew</user_name>

<accountnum>1234987655551379</accountnum>

<pin>2358</pin>

<homepage>/home/astrout</homepage>

</customer>

<customer>

<user_name>dave</user_name>

<accountnum>9865124576149436</accountnum>

<pin>7523</pin>

<homepage>/home/dclarke</homepage>

</customer>

</customers>

The Java code used to retrieve the home directory based on the provided credentials is:

XPath xpath = XPathFactory.newInstance().newXPath();

XPathExpression xPathExp = xpath.compile(“//customers/customer[user_name/text()=’” + login.getUserName() + “’ and pin/text() = ‘” + login.getPIN() + “’]/homepage/text()”);

Document doc = DocumentBuilderFactory.newInstance() .newDocumentBuilder().parse(new File(“accounts.xml”));

String homepage = xPathExp.evaluate(doc);

By passing in the value “andrew” into the getUserName() method and the value “’ or ‘’=’” into the getPIN() method call, the XPath expression becomes

//customers/customer[user_name/text()=’andrew’ or ‘’=’’ and pin/text() = ‘’ or ‘’=’’]/hompage/text()

This will allow the user logging in as andrew to bypass authentication without supplying a valid PIN.

Regardless of whether an injection flaw exploits a database, OS command, a directory protocol and structure, or a document, they are all characterized by one or more of the following traits:

- User-supplied input is interpreted as a command or part of a command that is executed. In other words, data are misunderstood by the interpreter as code.

- Input from the user is not sanitized or validated before processing.

- The query that is constructed is generated using user-supplied input dynamically.

The consequences of injection flaws are varied and serious. The most common ones include:

- Disclosed, altered, or destroyed data

- Compromise of the operating system

- Discovery of the internal structure (or schema) of the database or data store

- Enumeration of user accounts from a directory store

- Circumvention of nested firewalls

- Execution of extended procedures and privileged commands

- Bypass of authentication

4.6.1.1 Injection Flaws Controls

Commonly used mitigation and prevention strategies and controls for injection flaws are as follows:

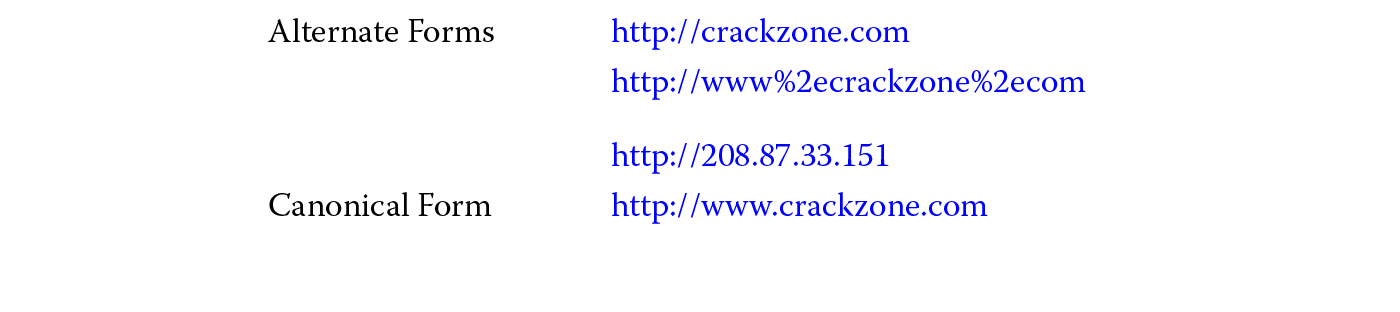

- Consider all input to be untrusted and validate all user input. Sanitize and filter input using a whitelist of allowable characters and their noncanonical forms. Although using a blacklist of disallowed characters can be useful in detecting potential attacks or determining malformed inputs, sole reliance on blacklists can prove to be insufficient, as the attacker can try variations and alternate representations of the blacklist form. Validation must be performed on both the client and server side, or at least on the server side, so that attackers cannot simply bypass client-side validation checks and still perform injection attacks. User input must be validated for data type, range, length, format, values, and canonical representations. SQL keywords such as union, select, insert, update, delete, and drop must be filtered in addition to characters such as single-quote (‘) or SQL comments (--) based on the context. Input validation should be one of the first lines of defense in an in-depth strategy for preventing or mitigating injection attacks, as it significantly reduces the attack surface.

- Encode output using the appropriate character set, escape special characters, and quote input, besides disallowing meta-characters. In some cases, when the input needs to be collected from various sources and is required to support free-form text, then the input cannot be constrained for business reasons, this may be the only effective solution to preventing injection attacks. Additionally, it provides protection even when some input sources are not covered with input validation checks.

- Use structured mechanisms to separate data from code.

- Avoid dynamic query (SQL, LDAP, XPATH Expression or XQuery) construction.

- Use a safe application programming interface (API) that avoids the use of the interpreter entirely or that provides escape syntax for the interpreter to escape special characters. A well-known example is the ESAPI published by OWASP.

- Just using parameterized queries (stored procedures or prepared statements) does not guarantee that the software is no longer susceptible to injection attacks. When using parameterized queries, make sure that the design of the parameterized queries truly accepts the user-supplied input as parameters and not the query itself as a parameter that will be executed without any further validation.

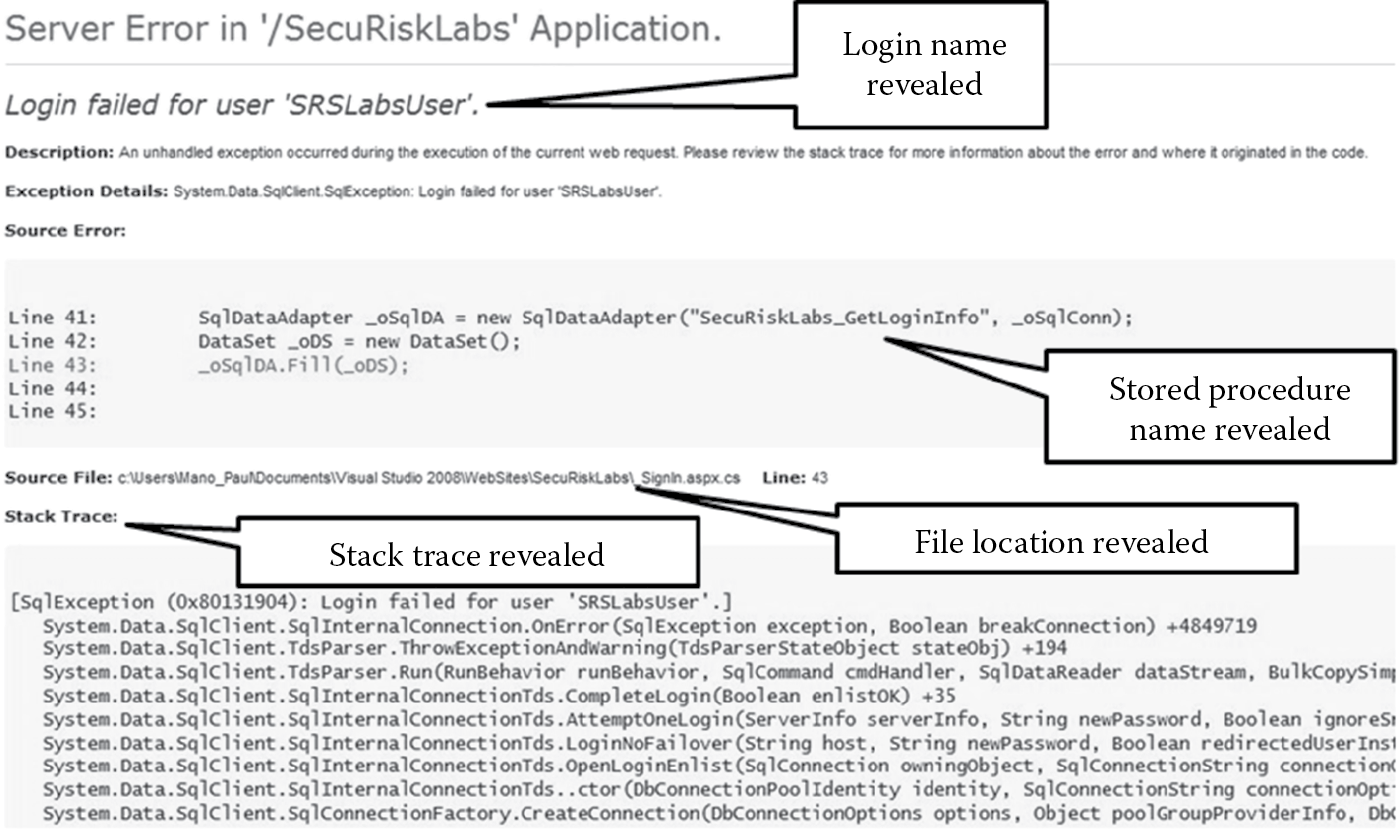

- Display generic error messages that yield minimal to no additional information.

- Implement failsafe by redirecting all errors to a generic error page and logging it for later review.

- Remove any unused, unnecessary functions or procedures from the database server. Remove all extended procedures that will allow a user to run system commands.

- Implement least privilege by using views, restricting tables, queries, and procedures to only the authorized set of users and/or accounts. The database users should be authorized to have only the minimum rights necessary to use their account. Using datareader, datawriter accounts as opposed to a database owner (dbo) account when accessing the database from the software is a recommended option.

- Audit and log the executed queries along with their response times to detect injection attacks, especially blind injection attacks.

- To mitigate OS command injection, run the code in a sandbox environment that enforces strict boundaries between the processes being executed and the operating system. Some examples include the Linux AppArmor and the Unix chroot jail. Managed code is also known to provide some degree of sandboxing protection.

- Use runtime policy enforcement to create the list of allowable commands (whitelist) and reject any command that does not match the whitelist.

- When having to implement defenses against LDAP injection attacks, the best method to handle user input properly is to filter or quote LDAP syntax from user-controlled input. This is dependent on whether the user input is used to create the distinguish name (DN) or used as part of the search filter text. When the input is used to create the DN, the backslash () escape method can be used, and when the input is used as part of the search filter, the ASCII equivalent of the character being escaped needs to be used. Table 4.4 lists the characters that need to be escaped and their respective escape method. It is important to ensure that the escaping method takes into consideration the alternate representations of the canonical form of user input.

In the event that the code cannot be fixed, using an application layer firewall to detect injection attacks can be a compensating control.

LDAP Mitigation Character Escaping

|

User input used |

Character(s) |

Escape sequence substitute |

|

To create DN |

&, !, |, =, <, >, +,−,’’, ‘ , ; , and comma (,) |

|

|

As part of search filter |

( |

28 |

|

) |

29 |

|

|

5c |

||

|

/ |

2f |

|

|

* |

2a |

|

|

NUL |

�0 |

4.6.2 Cross-Site Scripting (XSS)

|

OWASP Top 10 Rank |

2 |

|

CWE Top 25 Rank |

1 |

Injection flaws and cross-site scripting (XSS) can arguably be considered as the two most frequently exploitable weaknesses prevalent in software today. Some experts refer to these two flaws as a “1-2 punch,” as shown by the OWASP and CWE ranking.

XSS is the most prevalent Web application security attack today. A Web application is said to be susceptible to XSS vulnerability when the user-supplied input is sent back to the browser client without being properly validated and its content escaped. An attacker will provide a script (hence the scripting part) instead of a legitimate value, and that script, if not escaped before being sent to the client, gets executed. Any input source can be the attack vector, and the threat agents include anyone who has access to supplying input. Code review and testing can be used to detect XSS vulnerabilities in software.

The three main types of XSS are:

- Nonpersistent or reflected XSS

As the name indicates, nonpersistent or reflected XSS are attacks in which the user-supplied input script that is injected (also referred to as payload) is not stored, but merely included in the response from the Web server, either in the results of a search or as an error message. There are two primary ways in which the attacker can inject their malicious script. One is that they provide the input script directly into your Web application. The other way is that they can send a link with the script embedded and hidden in it. When a user clicks the link, the injected script takes advantage of the vulnerable Web server, which reflects the script back to the user’s browser, where it is executed.

- Persistent or stored XSS

Persistent or stored XSS is characterized by the fact that the injected script is permanently stored on the target servers, in a database, a message forum, a visitor log, or an input field. Each time the victims visit the page that has the injected code stored in it or served to it from the Web server, the payload script executes in the user’s browser. The infamous Samy Worm and the Flash worm are well-known examples of a persistent or stored XSS attack.

- DOM-based XSS

DOM-based XSS is an XSS attack in which the payload is executed in the victim’s browser as a result of DOM environment modifications on the client side. The HTTP response (or the Web page) itself is not modified, but weaknesses in the client side allow the code contained in the Web page client to be modified so that the payload can be executed. This is strikingly different from the nonpersistent (or reflected) and the persistent (or stored) XSS versions because, in these cases, the attack payload is placed in the response page due to weaknesses on the server side.

The consequences of a successful XSS attack are varied and serious. Attackers can execute script in the victim’s browser and:

- Steal authentication information using the Web application.

- Hijack and compromise users’ sessions and accounts.

- Tamper or poison state management and authentication cookies.

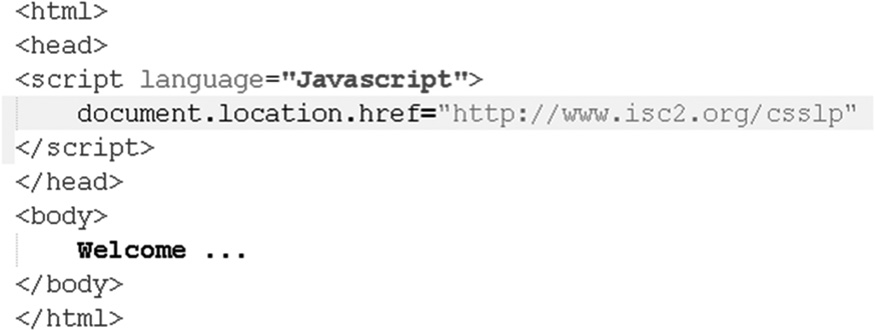

- Cause denial of service (DoS) by defacing the Web sites and redirecting users.

- Insert hostile content.

- Change user settings.

- Phish and steal sensitive information using embedded links.

- Impersonate a genuine user.

- Hijack the user’s browser using malware.

4.6.2.1 XSS Controls

Controls against XSS attacks include the following defensive strategies and implementations:

- Handle the output to the client by either using escaping sequences or encoding. This can be considered as the best way to protect against XSS attacks in conjunction with input validation. Escaping all untrusted data based on the HTML context (body, attribute, JavaScript, CSS, or URL) is the preferred option. Additionally, setting the appropriate character encoding and encoding user-supplied input renders the payload that the attacker injects as script into text-based output that the browser will merely read and not execute.

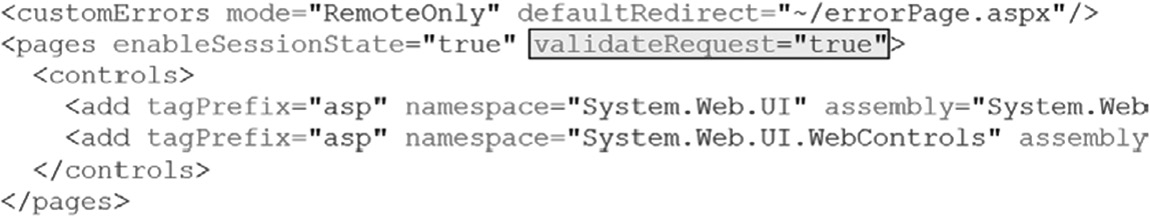

- Validating user-supplied input with a whitelist also provides additional protection against XSS. All headers, cookies, URL querystring values, form fields, and hidden fields must be validated. This validation should decode any encoded input and then validate the length, characters, format, and any business rules on the data before accepting the input. Each of the requests made to the server should be validated as well. In .Net, when the validateRequest flag is configured at the application, Web, or page level, as depicted in Figure 4.11, any unencoded script tag sent to the server is flagged as a potentially dangerous request to the server and is not processed.

- Disallow the upload of .htm or .html extensions.

- Use the innerText properties of HTML controls instead of the innetHtml property when storing the input supplied, so that when this information is reflected back on the browser client, the data renders the output to be processed by the browser as literal and as nonexecutable content instead of executable scripts.

- Use secure libraries and encoding frameworks that provide protection against XSS issues. The Microsoft Anti-Cross-Site Scripting, OWASP ESAPI Encoding module, Apache Wicket, and SAP Output Encoding framework are well-known examples.

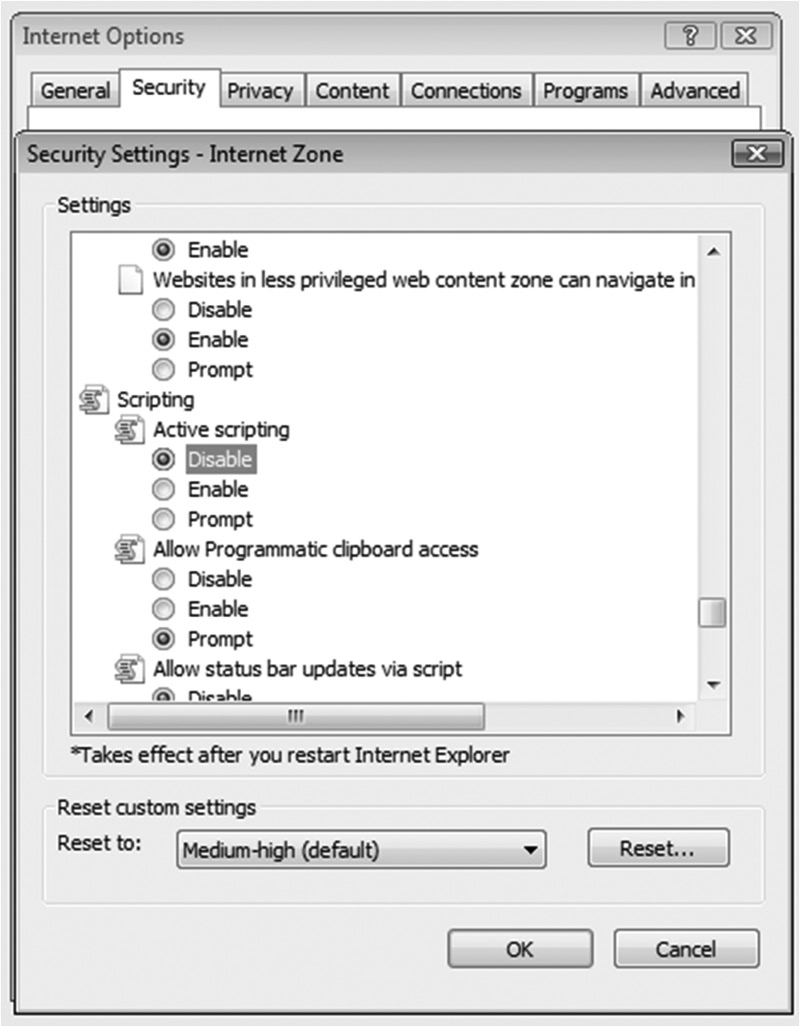

- The client can be secured by disabling the active scripting option in the browser so that scripts are not automatically executed on the browser. Figure 4.12 shows the configuration options for active scripting in the Internet Explorer browser. It is also advisable to install add-on plugins that will prevent the execution of scripts on the browser unless permissions are explicitly granted to run them. NoScript is a popular add-on for the Mozilla Firefox browser.

- Use the HTTPOnly flag on the session or any custom cookie so that the cookie cannot be accessed by any client-side code or script (if the browser supports it), which mitigates XSS attacks. However, if the browser does not support HTTPOnly cookies, then even if you have set the HTTPOnly flag in the Set-Cookie HTTP response header, this flag is ignored, and the cookie may still be susceptible to malicious script modifications and theft. Additionally, with the prevalence in Web 2.0 technologies, primarily Asynchronous JavaScript and XML (AJAX), the XMLHTTPRequest offers read access to HTTP headers, including the Set-Cookie HTTP response header.

- An application layer firewall can be useful against XSS attacks, but one must recognize that although this may not be preventive in nature, it is useful when the code cannot be fixed (as in the case of a third-party component).

4.6.3 Buffer Overflow

|

OWASP Top 10 Rank |

N/A |

|

CWE Top 25 Rank |

3, 12, 14, 17, 18 |

Historically, one of the most dangerous and serious attacks against software has been buffer overflow attacks. To understand what constitutes a buffer overflow, it is first important that you understand how program execution and memory management work. This was covered earlier in Section 4.4.1.

A buffer overflow is the condition that occurs when data being copied into the buffer (contiguous allocated storage space in memory) are more than what the buffer can handle. This means that the length of the data being copied is equal to (in languages that need a byte for the NULL terminator) or is greater than the byte count of the buffer. The two types of buffer overflows are:

- Stack overflow

A stack overflow occurs when the memory buffer has been overflowed in the stack space. When the software program runs, the executing instructions are placed on the program text segment of the RAM, global variables are placed on the read–write data section of the RAM, and the data (local variables, function arguments) and ESP register value that is necessary for the function to complete is pushed on to the stack (unless the datum is a variable sized object, in which case it is placed in the heap). As the program runs in memory, it calls each function sequentially and pushes that function’s data on the stack from higher address space to lower address space, creating a chain of functions to be executed in the order the programmer intended. Upon completion of a function, that function and its associated data are popped off the stack, and the program continues to execute the next function in the chain.

But how does the program know which function it should execute and which function it should go to once the current function has completed its operation? The ESP register (introduced earlier) tells the program which function it should execute. Another special register within the CPU is the execution instruction counter (EIP), which is used to maintain the sequence order of functions and indicates the address of the next instruction to be executed. This is the return address (RET) of the function. The return address is also placed on the stack when a function is called, and the protection of the return address from being improperly overwritten is critical from a security standpoint. If a malicious user manages to overwrite the return address to point to an address space in memory, where an exploit code (also known as payload) has been injected, then upon the completion of a function, the overwritten (tainted) return address will be loaded into the EIP register, and program execution will be overflowed, potentially executing the malicious payload.

The use of unsafe functions such as strcpy() and strcat() can result in stack overflows, since they do not intrinsically perform length checks before copying data into the memory buffer.

- Heap overflow

As opposed to a stack overflow, in which data flows from one buffer space into another, causing the return address instruction pointer to be overwritten, a heap overflow does not necessarily overflow, but corrupts the heap memory space (buffer), overwriting variables and function pointers on the heap. The corrupted heap memory may or may not be usable or exploitable. A heap overflow is not really an overflow, but rather a corruption of heap memory, and variable sized objects or objects too large to be pushed on the stack are dynamically allocated on the heap. Allocation of heap memory usually requires special function operators, such as malloc() (ANSI C), HeapAlloc() (Windows), and new() (C++), and deallocation of heap memory uses other special function operators, such as free(), HeapFree(), and delete(). Since no intrinsic controls on allocated memory boundaries exist, it is possible to overwrite adjacent memory chunks if there is no validation of size coded by the programmer. Exploitation of the heap space requires many more requirements to be met than is the case with stack overflow. Nonetheless, heap corruption can cause serious side effects, including DoS and exploit code execution, and protection mechanisms must not be ignored.

Any one of the following reasons can be attributed to causing buffer overflows:

- Copying of data into the buffer without checking the size of input.

- Accessing the buffer with incorrect length values.

- Improper validation of array (simplest expression of a buffer) index: When proper out-of-bounds array index checks are not conducted, reference indices in array buffers that do not exist will throw an out-of-bounds exception and can potentially cause overflows.

- Integer overflows or wraparounds: When checks are not performed to ensure that numeric inputs are within the expected range (maximum and minimum values), then overflow of integers can occur, resulting in faulty calculations, infinite loops, and arbitrary code execution.

- Incorrect calculation of buffer size before its allocation: Overflows can result if the software program does not accurately calculate the size of the data that will be input into the buffer space that it is going to allocate. Without this size check, the buffer size allocated may be insufficient to handle the data being copied into it.

4.6.3.1 Buffer Overflow Controls

Regardless of what causes a buffer overflow or whether a buffer overflow is on the stack or on the heap memory buffer, the one thing common in software susceptible to overflow attacks is that the program does not perform appropriate size checks of the input data. Input size validation is the number one implementation (programming) defense against buffer overflow attacks. Double-checking buffer size to ensure that the buffer is sufficiently large enough to handle the input data copied into it, checking buffer boundaries to make sure that the functions in a loop do not attempt to write past the allocated space, and performing integer type (size, precision, signed/unsigned) checks to make sure that they are within the expected range and values are other defensive implementations of controls in code.

Some programs are written to truncate the input string to a specified length before reading them into a buffer, but when this is done, careful attention must be given to ensure that the integrity of the data is not compromised.

In addition to implementation controls, there are other controls, such as requirements, architectural, build/compile, and operations controls, that can be put in place to defend against buffer overflow attacks:

- Choose a programming language that performs its own memory management and is type safe. Type safe languages are those that prevent undesirable type errors that result from operations (usually casting or conversion) on values that are not of the appropriate data type. Type safety (covered in more detail later in this chapter) is closely related to memory safety, as type unsafe languages will not prevent an arbitrary integer to be used as a pointer in memory. Ada, Perl, Java, and .Net programming languages are examples of languages that perform memory management and/or type safe. It is important, however, to recognize that the intrinsic overflow protection provided by some of these languages can be overwritten by the programmer. Also, although the language itself may be safe, the interfaces that they provide to native code can be vulnerable to various attacks. When invoking native functions from these languages, proper testing must be conducted to ensure that overflow attacks are not possible.

- Use a proven and tested library or framework that includes safer string manipulation functions, such as the Safe C String (SafeStr) library or the Safe Integer handling packages such as SafeInt (C++_ or IntegerLib (C or C++).

- Replace banned API functions that are susceptible to overflow issues with safer alternatives that perform size checks before performing their operations. It is recommended that you familiarize yourself with the banned API functions and their safer alternatives for the languages you use within your organization. When using functions that take in the number of bytes to copy as a parameter, such as the strncpy() or strncat(), one must be aware that if the destination buffer size is equal to the source buffer size, you may run into a condition where the string is not terminated because there is no place in the destination buffer to hold the NULL terminator.

- Design the software to use unsigned integers whenever possible, and when signed integers are used, make sure that checks are coded to validate both the maximum and minimum values of the range.

- Leverage compiler security if possible. Certain compilers and extensions provide overflow mitigation and protection by incorporating mechanisms to detect buffer overflows into the compiled (build) code. The Microsoft Visual Studio/GS flag, Fedora/Red Hat FORTIFY_SOURCE GCC flag, and StackGuard (covered later in this chapter in more detail) are some examples of this.

- Leverage operating system features such as Address Space Layout Randomization (ASLR), which forces the attacker to have to guess the memory address since its layout is randomized upon each execution of the program. Another OS feature to leverage is data execution protection (DEP) or execution space protection (ESP), which perform additional checks on memory to prevent malicious code from running on a system. However, this protection can fall short when the malicious code has the ability to modify itself to seem like innocuous code. ASLR and DEP/ESP are covered in more detail later in this chapter under the memory management topic.

- Use of memory checking tools and other tools that surround all dynamically allocated memory chunks with invalid pages so that memory cannot be overflowed into that space is a means of defense against heap corruption. MemCheck, Memwatch, Memtest86, Valgrind, and ElectricFence are examples of such tools.

4.6.4 Broken Authentication and Session Management

|

OWASP Top 10 Rank |

3 |

|

CWE Top 25 Rank |

6, 11, 19, 21, 22 |

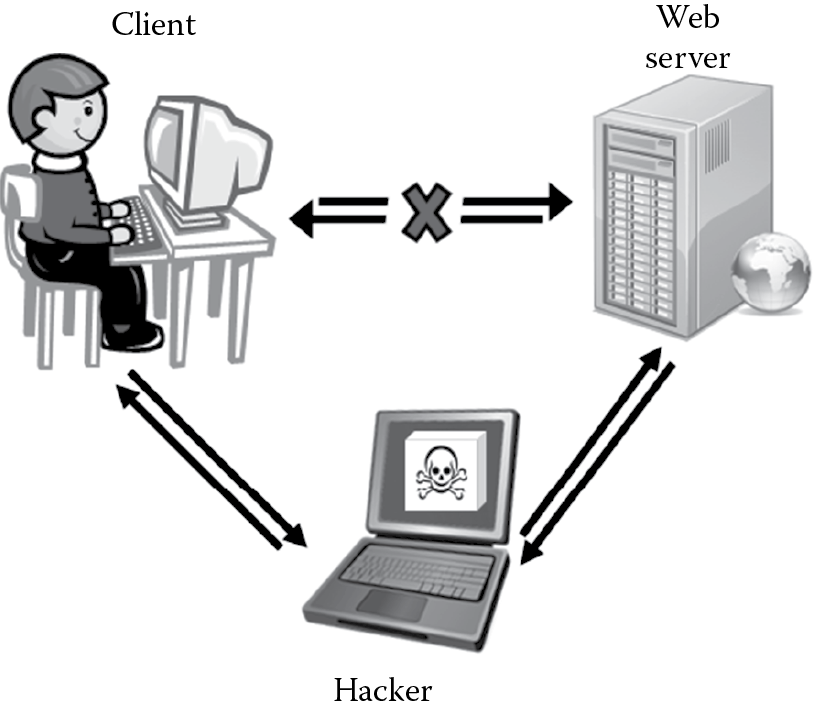

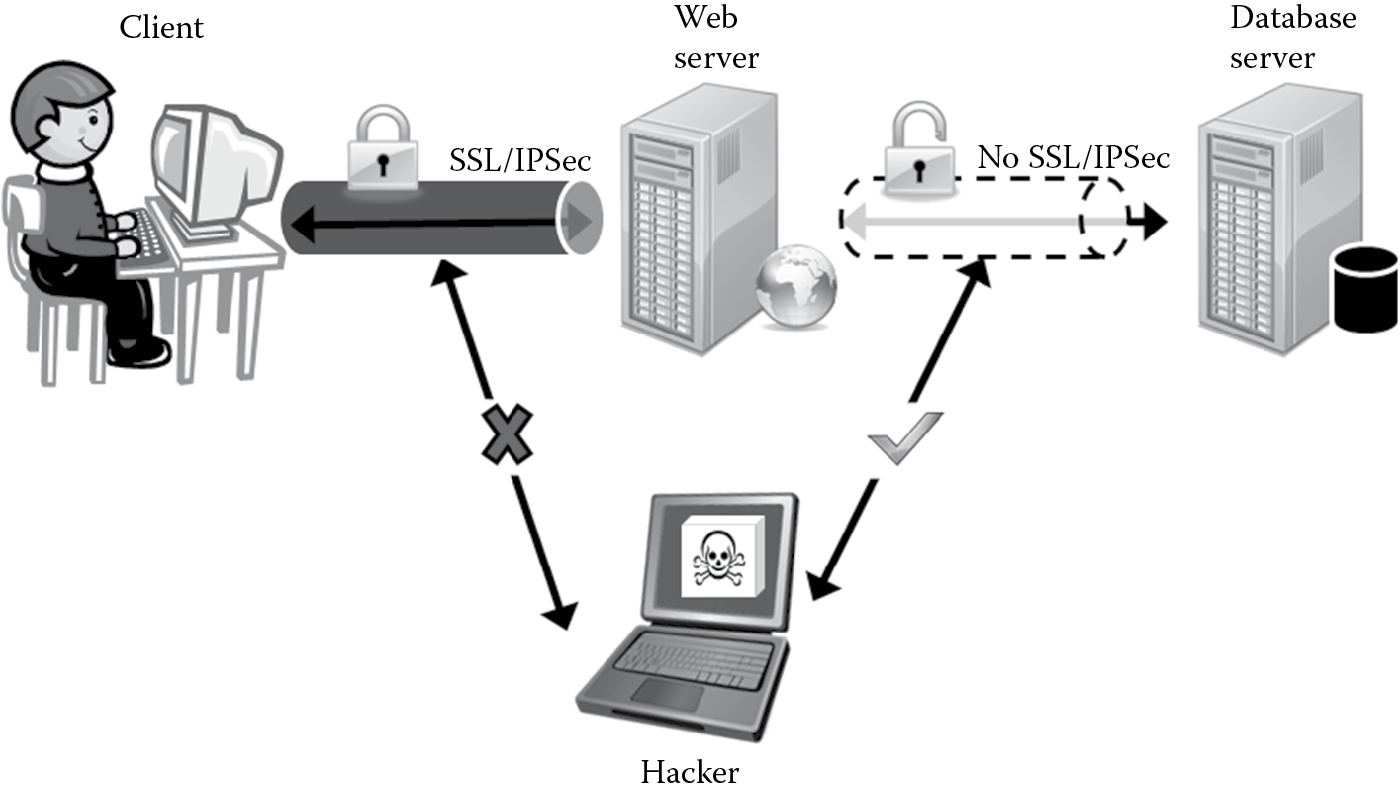

Weaknesses in authentication mechanisms and session management are not uncommon in software. Areas susceptible to these flaws are usually found in secondary functions that deal with logout, password management, time outs, remember me, secret questions, and account updates. Vulnerabilities in these areas can lead to the discovery and control of sessions. Once the attacker has control of a session (hijack) they can interject themselves in the middle, impersonating valid and legitimate users to both parties engaged in that session transaction. The man-in-the-middle (MITM) attack, as depicted in Figure 4.13, is a classic result of broken authentication and session management.

In addition to session hijacking, impersonation, and MITM attacks, these vulnerabilities can also allow an attacker to circumvent any authentication and authorization decisions that are in place. In cases when the account being hijacked is that of a privileged user, it can potentially lead to granting access to restricted resources and subsequently total system compromise.

Some of the common software programming failures that end up resulting in broken authentication and broken session management include, but are not limited to, the following:

- Allowing more than one set of authentication or session management controls that allow access to critical resources via multiple communication channels or paths

- Transmitting authentication credentials and session IDs over the network in cleartext

- Storing authentication credentials without hashing or encrypting them

- Hard coding credentials or cryptographic keys in cleartext inline in code or in configuration files

- Not using a random or pseudo-random mechanism to generate system-generated passwords or session IDs

- Implementing weak account management functions that deal with account creation, changing passwords, or password recovery

- Exposing session IDs in the URL by rewriting the URL

- Insufficient or improper session timeouts and account logout implementation

- Not implementing transport protection or data encryption

4.6.4.1 Broken Authentication and Session Management Controls

Mitigation and prevention of authentication and session management flaws require careful planning and design. Some of the most important design considerations include:

- Built-in and proven authentication and session management mechanisms: These support the principle of leveraging existing components as well. When developers implement their custom authentication and session management mechanisms, the likelihood of programming errors are increased.

- A single and centralized authentication mechanism that supports multifactor authentication and role-based access control: Segmenting the software to provide functionality based on the privilege level (anonymous, guest, normal, and administrator) is a preferred option. This not only eases administration and rights configuration, but it also reduces the attack surface considerably.

- A unique, nonguessable, and random session identifier to manage state and session along with performing session integrity checks: For the credentials, do not use claims that can be easily spoofed and replayed. Some examples of these include IP address, MAC address, DNS or reverse-DNS lookups, and referrer headers. Tamper-proof, hardware-based tokens can also provide a high degree of protection.

- When storing authentication credentials for outbound authentication, encrypt or hash the credentials before storing them in a configuration file or data store, which should also be protected from unauthorized users.

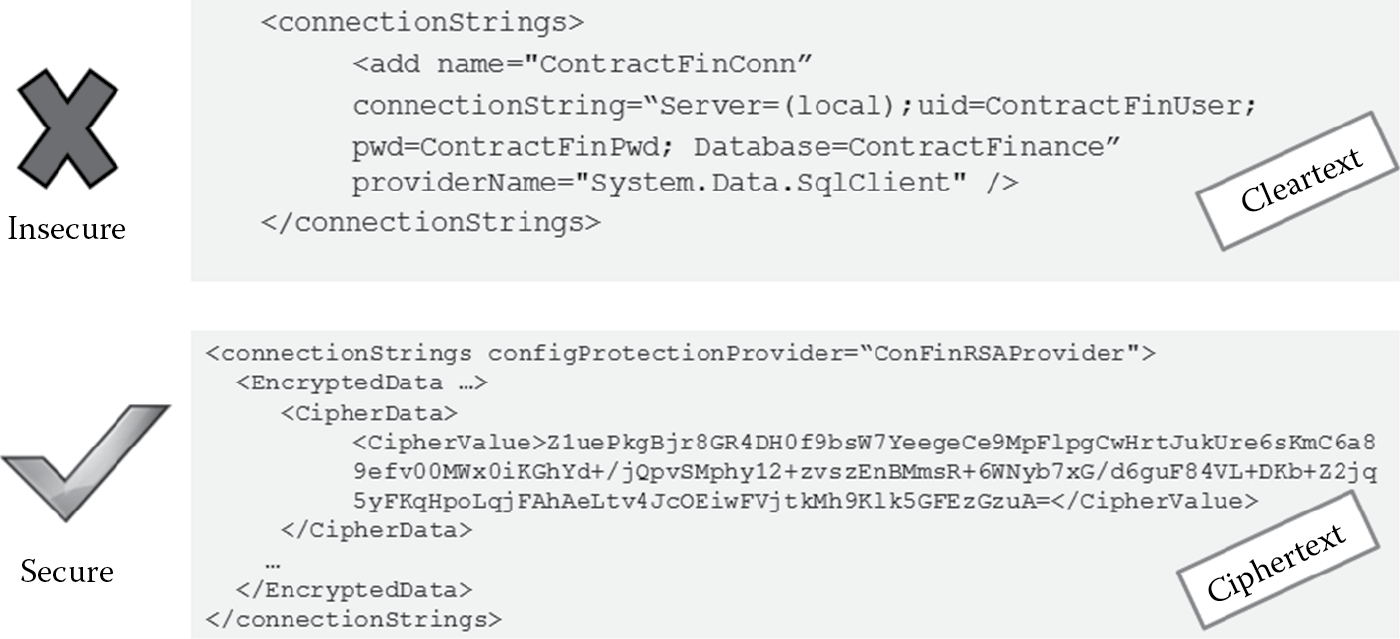

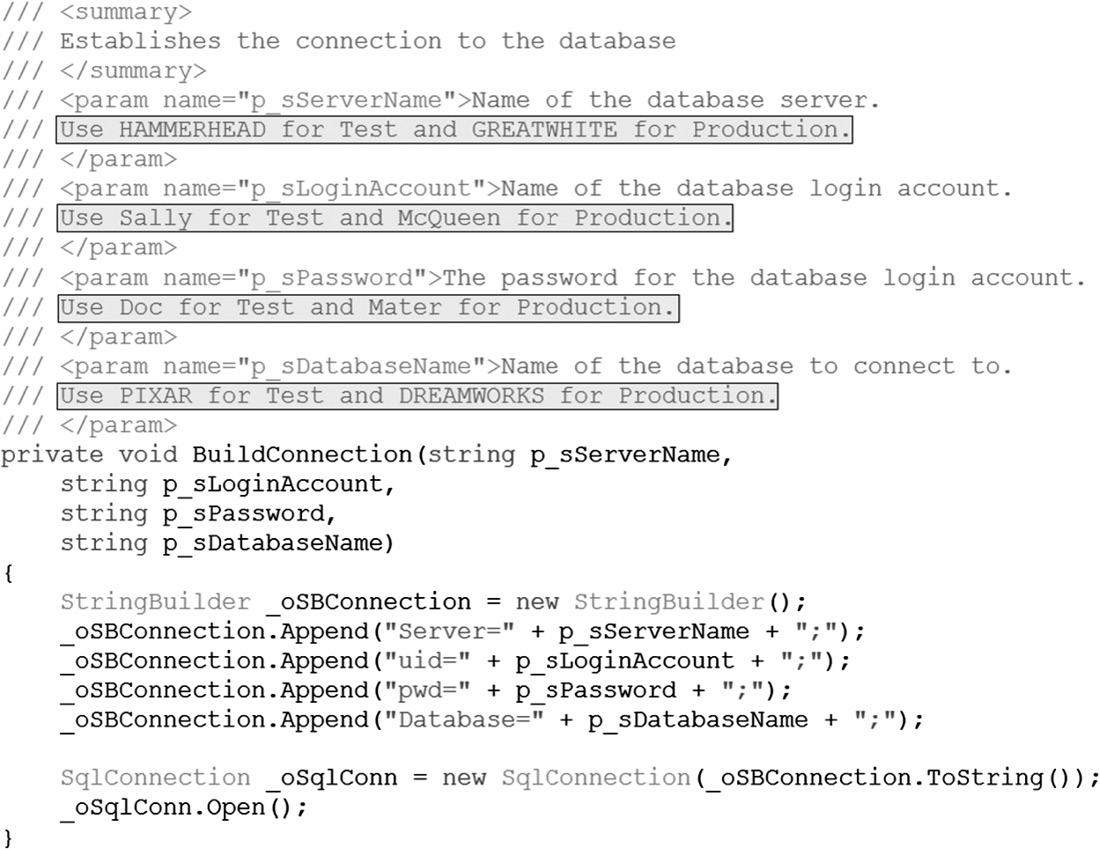

- Do not hard code database connection strings, passwords, or cryptographic keys in cleartext in the code or configuration files. Figure 4.14 illustrates an example of insecure and secure ways of storing database connecting strings in a configuration file.

- Identify and verify users at both the source as well as at the end of the communication channel to ensure that no malicious users have interjected themselves in between. Always authenticate users only from an encrypted source (Web page).

- Do not expose session ID in URLs or accept preset or timed-out session identifiers from the URL or HTTP request. Accepting session IDs from the URL can lead to what are known as session fixation and session replay attacks.

- Ensure that XSS protection mechanism are in place and working effectively, as XSS attacks can be used to steal authentication credentials and session IDs.

- Require the user to reauthenticate upon account update with such input as password changes, and, if feasible, generate a new session ID upon successful authentication or change in privilege level.

- Do not implement custom cookies in code to manage state. Use secure implementation of cookies by encrypting them to prevent tampering and cookie replay.

- Do not store, cache, or maintain state information on the client without appropriate integrity checking or encryption. If you are required to cache for user experience reasons, ensure that the cache is encrypted and valid only for an explicit period, after which it will expire. This is referred to as cache windowing.

- Ensure that all pages have a logout link. Do not assume that the closing of the browser window will abandon all sessions and client cookies. When the user closes the browser window, explicitly prompt the user to log off before closing the browser window. When you plan to implement user confirmation mechanisms, keep the design principle of psychological acceptability in mind: security mechanisms should not make the resource more difficult to access than if the security mechanisms were not present.

- Explicitly set a timeout, and design the software to log out of an inactive session automatically. The length of the timeout setting must be inversely proportional to the value of the data being protected. For example, if the software is marshalling and processing highly sensitive information, then the length of the timeout setting must be shorter.

- Implement the maximum number of authentication attempts allowed, and when that number has passed, deny by default and deactivate (lock) the account for a specific period or until the user follows an out-of-band process to reactivate (unlock) the account. Implementing throttle (clipping) levels prevents not only brute force attacks, but also DoS.

- Encrypt all client/server communications.

- Implement transport layer protection either at the transport layer (SSL/TLS) or at the network layer (IPSec), and encrypt data even if they are being sent over a protected network channel.

4.6.5 Insecure Direct Object References

|

OWASP Top 10 Rank |

4 |

|

CWE Top 25 Rank |

5 |

An insecure direct object reference flaw is one wherein an unauthorized user or process can invoke the internal functionality of the software by manipulating parameters and other object values that directly reference this functionality. Let us take a look at an example. A Web application is architected to pass the name of the logged-in user in cleartext as the value of the key “userName” and indicate whether the logged-in user is an administrator or not by passing the value to the key “isAdmin,” in the querystring of the URL, as shown in Figure 4.15.

Upon load, this page reads the value of the userName key from the querystring and renders information about the user whose name was passed and displays it on the screen. It also exposes administrative menu options if the isAdmin value is 1. In our example, information about Reuben will be displayed on the screen. We also see that Reuben is not an administrator, as indicated by the value of the isAdmin key. Without proper authentication and authorization checks, an attacker can change the value of the userName key from “reuben” to “jessica” and view information about Jessica. Additionally, by manipulating the isAdmin key value from 0 to 1, a nonadministrator can get access to administrative functionality when the Web application is susceptible to an insecure direct object reference flaw.

Such flaws can be seriously detrimental to the business. Data disclosure, privilege escalation, authentication and authorization checks bypass, and restricted resource access are some of the most common impacts when this flaw is exploited. This can be exploited to conduct other types of attacks as well, including injection and scripting attacks.

4.6.5.1 Insecure Direct Object References Controls

The most effective control against insecure direct object reference attacks is to avoid exposing internal functionality of the software using a direct object reference that can be easily manipulated. The following are some defensive strategies that can be taken to accomplish this objective:

- Use indirect object reference by using an index of the value or a reference map so that direct parameter manipulation is rendered futile unless the attacker is also aware of how the parameter maps to the internal functionality.

- Do not expose internal objects directly via URLs or form parameters to the end user.

- Either mask or cryptographically protect (encrypt/hash) exposed parameters, especially querystring key value pairs.

- Validate the input (change in the object/parameter value) to ensure that the change is allowed as per the whitelist.

- Perform multiaccess control and authorization checks each and every time a parameter is changed, according to the principle of complete mediation. If a direct object reference must be used, first ensure that the user is authorized.

- Use RBAC to enforce roles at appropriate boundaries and reduce the attack surface by mapping roles with the data and functionality. This will protect against attackers who are trying to attack users with a different role (vertical authorization), but not against users who are at the same role (horizontal authorization).

- Ensure that both context- and content-based RBAC is in place.

Manual code reviews and parameter manipulation testing can be used to detect and address insecure direct object reference flaws. Automated tools often fall short of detecting insecure direct object reference because they are not aware of what object requires protection and what the safe or unsafe values are.

4.6.6 Cross-Site Request Forgery (CSRF)

|

OWASP Top 10 Rank |

5 |

|

CWE Top 25 Rank |

4 |

Although the cross-site request forgery (CSRF) attack is unique in the sense that it requires a user to be already authenticated to a site and possess the authentication token, its impact can be devastating and is rightfully classified within the top five application security attacks in both the OWASP Top 10 and the CWE/SANS Top 25. The most popular Web sites, such as ING Direct, NYTimes.com, and YouTube have been proven to be susceptible to this.

In CSRF, an attacker masquerades (forges) a malicious HTTP request as a legitimate one and tricks the victim into submitting that request. Because most browsers automatically include HTTP requests, that is, the credentials associated with the site (e.g., user session cookies, basic authentication information, source IP addresses, windows domain credentials), if the user is already authenticated, the attack will succeed. These forged requests can be submitted using email links, zero-byte image tags (images whose height and width are both 0 pixel each so that the image is invisible to the human eye), tags stored in an iFrames (stored CSRF), URLs susceptible to clickjacking (where the URL is hijacked, and clicking on an URL that seems innocuous and legitimate actually results in clicking on the malicious URL that is hidden beneath), and XSS redirects. Forms that invoke state changing function are the prime targets for CSRF. CSRF is also known by a number of other names, including XSRF, Session riding attack, sea surf attack, hostile linking, and automation attack.

The attack flow in a CSRF attack is as follows:

- User authenticates into a legitimate Web site and receives the authentication token associated with that site.

- User is tricked into clicking a link that has a forged malicious HTTP request to be performed against the site to which the user is already authenticated.

- Since the browser sends the malicious HTTP request, the authentication credentials, this request surfs or rides on top of the authenticated token and performs the action as if it were a legitimate action requested by the user (now the victim).

Although a preauthenticated token is necessary for this attack to succeed, the hostile actions and damage that can be caused from CSRF attacks can be extremely perilous, limited only to what the victim is already authorized to do. Authentication bypass, identity compromise, and phishing are just a few examples of impact from successful CSRF attacks. If the user is a privileged user, then total system compromise is a possibility. When CSRF is combined with XSS, the impact can be extensive. XSS worms that propagate and impact several Web sites within a short period usually have a CSRF attack fueling them. CSRF potency is further augmented by the fact that the forced hostile actions appear as legitimate actions (since they come with an authenticated token) and thereby may go totally undetected. The OWASP CSRF Tester tool can be used to generate test cases to demonstrate the dangers of CSRF flaws.

4.6.6.1 CSRF Controls

The best defense against CSRF is to implement the software so that it is not dependent on the authenticated credentials automatically submitted by the browser. Controls can be broadly classified into user controls and developer controls.

The following are some defensive strategies that can be employed by users to prevent and mitigate CSRF attacks:

- Do not save username/password in the browser.

- Do not check the “remember me” option in Web sites.

- Do not use the same browser to surf the Internet and access sensitive Web sites at the same time, if you are accessing both from the same machine.

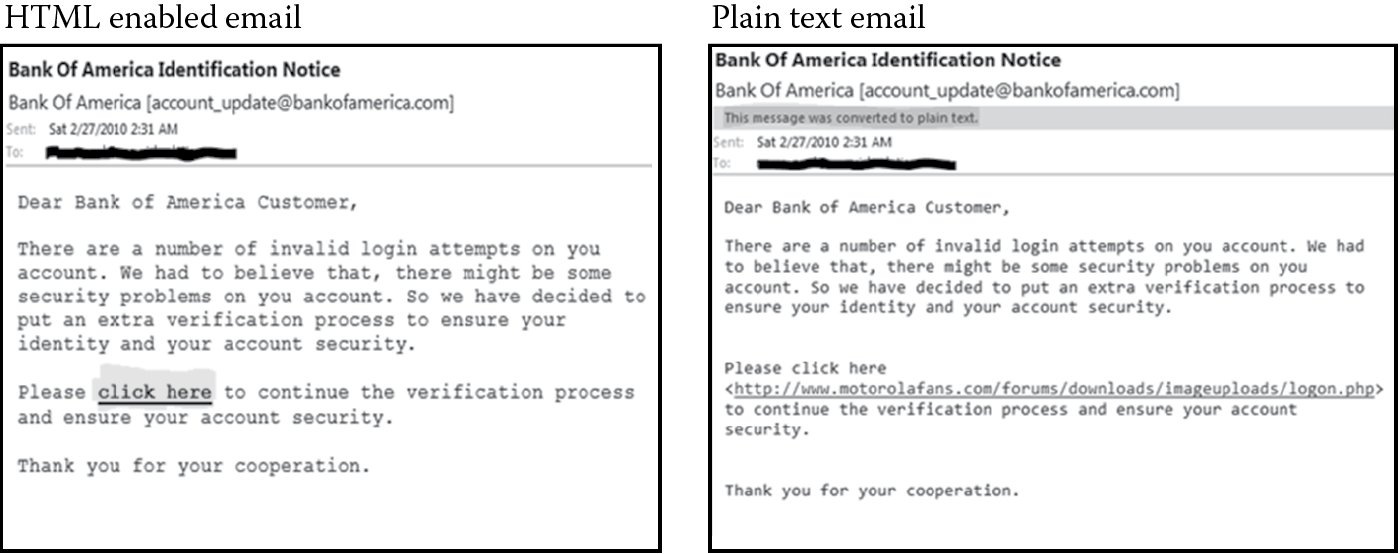

- Read standard emails in plain text. Viewing emails in plain text format shows the user the actual link that the user is being tricked to click on by rendering the embedded malicious HTML links into the actual textual link. Figure 4.16 depicts how a phishing email is shown to a potential victim when the email client is configured to read email in HTML format and in plain text format.

- Explicitly log off after using a Web application.

- Use client-side browser extensions that mitigate CSRF attacks. An example of this is the CSRF Protector, which is a client-side add-on extension for the Mozilla Firefox browser.

The following are some defensive strategies that can be used by developers to prevent and mitigate CSRF attacks:

- The most effective developer defensive control against CSRF is to implement the software to use a unique session-specific token (called a nonce) that is generated in a random, nonpredictable, nonguessable, and/or sequential manner. Such tokens need to be unique by function, page, or overall session.

- CAPTCHAs (Completely Automated Public Turing Test to Tell Computers and Humans Apart) can be used to establish specific token identifiers per session. CAPTCHAs do not provide a foolproof defense, but they increase the work factor of an attacker and prevent automated execution of scripts that can exploit CSRF vulnerabilities.

- The uniqueness of session tokens is to be validated on the server side and not be solely dependent on client-based validation.

- Use POST methods instead of GET requests for sensitive data transactions and privileged and state change transactions, along with randomized session identifier generation and usage.

- Use a double-submitted cookie. When a user visits a site, the site first generates a cryptographically strong pseudorandom value and sets it as a cookie on the user’s machine. Any subsequent request from the site should include this pseudorandom value as a form value and also as a cookie value, and when the POST request is validated on the server side, it should consider the request valid if and only if the form value and the cookie value are the same. Since an attacker can modify form values but not cookie values as per the same-origin policy, an attacker will not be able to submit a form successfully unless she is able to guess the pseudorandom value.

- Check the URL referrer tag for the origin of request before processing the request. However, when this method is implemented, it is important to ensure that legitimate actions are not impacted. If the users or proxies have disabled sending the referrer information for privacy reasons, legitimate functionality can be denied. Also, it is possible to spoof referrer information using XSS, so this defense must be in conjunction with other developer controls as part of a defense in depth strategy.

- For sensitive transactions, reauthenticate each and every time (as per the principle of complete mediation).

- Use transaction signing to assure that the request is genuine.