13

Leveraging Serverless Containers with Dapr

In this chapter, at the end of our journey of discovery of Dapr, we will learn how to deploy our containerized application to Azure Container Apps: a fully managed serverless container service in Microsoft Azure.

With the newly acquired experience of developing microservices applications with Dapr and operating them on Kubernetes, we will be able to appreciate how Azure Container Apps simplifies the developer’s job by giving us all the benefits of a container orchestrator without the burden of operating a Kubernetes cluster.

This chapter will help us understand the business and technical advantages offered by a serverless container service.

In this chapter, we will cover the following topics:

- Learning about the Azure Container Apps architecture

- Setting up Azure Container Apps

- Deploying Dapr with Azure Container Apps

- Autoscaling Azure Container Apps with Kubernetes Event-Driven Autoscaling (KEDA)

As developers, we want to focus our attention and effort on the definition and implementation of an application. However, it is equally important to comprehend how to operate our solution on the infrastructure and services at our disposal.

Our first objective is to understand the architecture of Azure Container Apps and find out how it can benefit the Biscotti Brutti Ma Buoni (Italian for “ugly but good cookies”) fictional solution we have built so far.

Technical requirements

The code for the examples in this chapter can be found in this book’s GitHub repository at https://github.com/PacktPublishing/Practical-Microservices-with-Dapr-and-.NET-Second-Edition/tree/main/chapter13.

In this chapter, the working area for scripts and code is expected to be <repository path>\chapter13. In my local environment, it is C:\Repos\dapr-samples\chapter13.

Please refer to the Setting up Dapr section in Chapter 1, Introducing Dapr, for a complete guide on the tools needed to develop with Dapr and work with the examples in this book.

In this chapter, we will also reuse Locust: to refresh that knowledge, please refer to Chapter 12, Load Testing and Scaling Dapr.

Learning about the Azure Container Apps architecture

Azure Container Apps is a managed serverless container service on the Microsoft Azure cloud.

It is managed in the sense that you, the user, can configure the service to fit your needs but you are not involved in the management of the underlying resources; it is serverless as you do not have to care about the characteristics and scaling of the VMs used by the underlying hosting platform.

With Azure Container Apps, your application runs on an Azure Kubernetes Service (AKS) cluster managed on your behalf by the Azure platform: you do not have to configure and maintain the resource yourself.

Here are the three main components of Azure Container Apps:

- KEDA is already configured, ready to be used to scale your workload based on HTTP requests, CPU, and memory usage, or any of the other scale triggers supported by KEDA

- Dapr is integrated into Azure Container Apps, so it can be leveraged by any container workload already adopting it

- Envoy is an open source proxy designed for cloud-native applications and is integrated into Azure Container Apps to provide ingress and traffic-splitting functionalities

Cloud-native

As described at https://www.cncf.io/, the Cloud Native Computing Foundation (CNCF) serves as the vendor-neutral home for many of the fastest-growing open source projects. KEDA, Envoy, and Dapr are all projects under the CNCF umbrella.

Azure Container Apps provides the following functionalities:

- Autoscales based on any KEDA scale trigger

- Provides HTTPS ingress to expose your workload to external or internal clients

- Supports multiple versions of your workload and splits traffic across them

- Observes application logs via Azure Application Insights and Azure Log Analytics

As we can see from the following diagram, the environment is the highest-level concept in Azure Container Apps: it isolates a solution made of multiple container apps from other workloads, from a networking, logging, and management perspective:

Figure 13.1 – Azure Container Apps architecture

As depicted in Figure 13.1, an environment can contain multiple container apps: we can already guess each of the microservices in our solution will become a distinct container app.

These are the core concepts in container apps:

- A revision is an immutable snapshot of our container app: each container app has at least one, created at first deployment, and you can create new revisions to deploy a new version of your container app

- A Pod is a familiar concept from the Kubernetes architecture: in the context of Azure Container Apps, it does not surface as a keyword in the CLI or portal but, as AKS is supporting our workload under the covers, we know that a container image of a specific revision is being executed in this context

- A container image can originate from any container registry: over the course of this book, we used Azure Container Registry but, in this chapter, we will leverage the readily available images in Docker Hub

- As Dapr is already configured in the Azure Container Apps service, the sidecar can be enabled in any container app

Before we dive into the configuration details of Azure Container Apps, let us explore why we should take this option into consideration for our solution.

Understanding the importance of Azure Container Apps

Let us recap the progress made so far with our fictional solution. We managed to create a fully functional solution with Dapr, and we successfully deployed it to AKS. Why should we care about Azure Container Apps, the newest service for containers on the Azure cloud?

Please bear with me as I expand the fictional scenario at the core of the sample in this book. Driven by the success of the Biscotti Brutti Ma Buoni e-commerce solution we have built so far, the capabilities of made-to-order cookie customization gained the attention of other businesses: the company behind Biscotti Brutti Ma Buoni decides to market the in-house developed solution to other businesses, selling it as Software as a Service (SaaS) in tandem with the equipment to run a shop. Given this business objective, how can we accomplish it?

Starting with Chapter 9, Deploying to Kubernetes, we gained experience with AKS; over Chapter 10, Exposing Dapr Applications, we learned a few alternative approaches for exposing our applications in AKS; and in Chapter 11, Tracing Dapr Applications, we explored how to configure a monitoring stack in AKS and integrate it with Dapr. Finally, in Chapter 12, Load Testing and Scaling Dapr, we explored how to scale our application to respond to increased client load with a simple Horizontal Pod AutoScaler (HPA).

Kubernetes is a powerful container orchestrator, and AKS can significantly simplify its management. Nevertheless we, as developers and architects, must deal with many tools (kubectl, Helm, YAML, Prometheus, Grafana, and more) and concepts such as Pod, service, Deployment, replica, and ingress controller that help us get the work done but do not add any value to our solution.

The Biscotti Brutti Ma Buoni company adopted AKS and integrated it with the IT infrastructure to support our solution and many other business applications as well. The operations team can maintain the internal and externally facing applications at the business continuity (BC) level required by the company.

Offering a solution as SaaS to external customers is a completely different story; the level of service you must guarantee to your customers might require a different effort in development and operations, probably higher than what you can accept for your own internal usage.

Given the experience with AKS, the solution team has the following open questions:

- Do we extend the existing AKS cluster to SaaS customers?

- How challenging would it be to support additional AKS clusters, devoted to SaaS customers?

- How do we manage the multi-tenancy of our solution on Kubernetes?

- How can we combine internal DevOps practices with what is required for SaaS customers?

After a thorough conversation involving all the company stakeholders, the company decides to keep the existing AKS infrastructure separate from the new SaaS initiative: the operational model achieved so far is fit for the company’s internal operations.

The idea of adding AKS clusters to isolate SaaS customers does not attract the favor of the IT and operations team. Furthermore, even if this goal can be achieved, how can they adapt the solution to support multiple tenants? There are approaches to support multi-tenancy in Kubernetes, but it is likely this requirement would increase the operational complexity.

Answering these questions triggers new questions. The consensus is that it would be best to keep the solution on the existing evolution path, hopefully finding a way to enable multi-tenancy without adding complexity to the toolchain. Also, no stakeholder finds any value in managing Kubernetes if not strictly necessary: Biscotti Brutti Ma Buoni is a purely cloud-native solution, and the company would like to venture into the SaaS market without the risk of turning into a managed service provider (MSP).

With Azure Container Apps, the Biscotti Brutti Ma Buoni solution can be deployed as is, with no changes to the code or containers. An isolated environment can be provisioned for each SaaS customer with no additional complexity. The rich scaling mechanisms enable a company to pay for the increase in resources only when the solution needs it. In a nutshell, we get all the benefits of running containers without any concerns about managing Kubernetes.

Container options in Azure

In this book, we explored two options to support a container workload: AKS and Azure Container Apps. I highly recommend reading this article to compare Azure Container Apps with the other Azure container options: https://docs.microsoft.com/en-us/azure/container-apps/compare-options.

To recap, the reason the team behind Biscotti Brutti Ma Buoni decided to adopt Azure Container Apps can be condensed into a single sentence: to achieve the flexibility of microservices running in a Kubernetes orchestrator without any of the complexities.

You can see an overview of the Azure Container Apps architecture in the following diagram:

Figure 13.2 – An overall architecture of Azure Container Apps

Hopefully, Figure 13.2 will not spoil too much the discovery of Azure Container Apps over the next sections. At a very high level, we will find a way to expose some of the solution APIs to external clients; all the involved microservices will leverage the Dapr feature in Azure Container Apps. We will define Dapr components in the Azure Container Apps environment and define which of the Dapr applications can use which ones. Ultimately, we will define scale rules for each Azure container app in the environment.

In the next section, we will start learning how to provision and configure Azure Container Apps.

Setting up Azure Container Apps

We are going to use the Azure CLI and the Azure portal to manage the deployment of our solution to Azure Container Apps. Let’s start with enabling the CLI.

After we log in to Azure, our first objective is to make sure the extension for the CLI and the Azure resource provider are both registered. We can achieve this with the following commands:

C:\Repos\practical-dapr\chapter13> az extension add --name containerapp --upgrade
… omitted …
C:\Repos\practical-dapr\chapter13> az provider register --namespace Microsoft.App
… omitted …

Our next steps are set out here:

- Create a resource group

- Create a Log Analytics workspace

- Create an Application Insights resource

- Create an Azure Container Apps environment

Instructions for the first three steps are provided in the prepare.ps1 file, using the CLI.

At this stage in our journey, you have probably accumulated a certain level of experience with Azure: therefore, I leave to you the choice of whether to follow the Azure documentation or the provided script to provision the Log Analytics and Application Insights resources via the portal or the CLI.

Let’s focus now on the most important step—creating our first Azure Container Apps environment, as you can see in the following command snippet from the deploy-aca.ps1 file:

PS C:\Repos\practical-dapr\chapter13> az containerapp env create `
  --name $CONTAINERAPPS_ENVIRONMENT `
  --resource-group $RESOURCE_GROUP `
  --dapr-instrumentation-key $APPLICATIONINSIGHTS_KEY `
  --logs-workspace-id $LOG_ANALYTICS_WORKSPACE_CLIENT_ID `
  --logs-workspace-key $LOG_ANALYTICS_WORKSPACE_CLIENT_SECRET `
  --location $LOCATION
Command group 'containerapp' is in preview and under development. Reference and support levels: https://aka.ms/CLI_refstatus
Running ..
Container Apps environment created. To deploy a container app, use: az containerapp create --help
… omitted …

In the previous command snippet, we created an environment and connected it to the Log Analytics workspace. This way, all output and error messages from the container apps will flow there. We also specified which Application Insights instance to use in the Dapr observability building block.

The environment in Azure Container Apps plays a role similar to a cluster in AKS. We are now ready to deploy and configure our microservices as Dapr applications in it.

Deploying Dapr with Azure Container Apps

In this section, we will learn how to configure Dapr components in Azure Container Apps and how to deploy Dapr applications. These are our next steps:

- Configuring Dapr components in Azure Container Apps

- Exposing Azure Container Apps to external clients

- Observing Azure Container Apps

We start by configuring the components needed by the Dapr applications.

Configuring Dapr components in Azure Container Apps

In the components folder, I have prepared all the components used by the Dapr applications: reservation-service, reservationactor-service, customization-service, and order-service.

Let’s observe the component in the components\component-pubsub.yaml file, used by all microservices to communicate via the publish and subscribe (pub/sub) building block of Dapr:

name: commonpubsub
type: pubsub.azure.servicebus
version: v1
metadata:
- name: connectionString
  secretRef: pubsub-servicebus-connectionstring
  value: ""
scopes:
- order-service
- reservation-service
- reservationactor-service
- customization-service
secrets:
- name: pubsub-servicebus-connectionstring
  value: SECRET

If you compare the .yaml file with the format that we used in Chapter 6, Publish and Subscribe, you will notice the terminology used is similar for name and type, but the .yaml file is structured differently. As you can read in the documentation at https://docs.microsoft.com/en-us/azure/container-apps/dapr-overview?tabs=bicep1%2Cyaml#configure-dapr-components, this is a change specific to Container Apps.

Once you have replaced all the secrets in the components prepared for you in the components folder, we can create components via the CLI.

In the deploy-aca.ps1 file, you can find the commands to create Dapr components, as shown here:

PS C:\Repos\practical-dapr\chapter13> az containerapp env dapr-component set `
  --name $CONTAINERAPPS_ENVIRONMENT --resource-group $RESOURCE_GROUP `
  --dapr-component-name commonpubsub `
  --yaml ($COMPONENT_PATH + "component-pubsub.yaml")
… omitted …

Once you execute all the commands for the five components, you will have configured the state stores for each Dapr application and the common pub/sub.

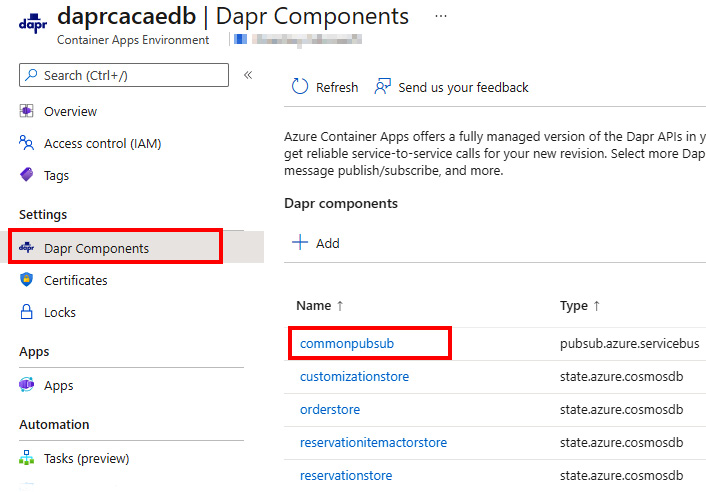

We can see the installed components via the CLI or the portal, which offers a great experience with Azure Container Apps:

Figure 13.3 – Dapr components in an Azure Container Apps environment

In Figure 13.3, we can see that by selecting the Dapr Components setting in the environment, we get the list of the five components. Let’s select the commonpubsub component to see its configuration:

Figure 13.4 – Settings of a Dapr component in an Azure Container Apps environment

In Figure 13.4, we can see the settings of the commonpubsub component that we specified via the CLI in the previous steps: they should look very familiar to you as they reflect the elements of a pub/sub Dapr component.

Our next goal is to deploy the container apps to our environment. From the deploy-aca.ps1 file, let’s examine one of the commands:

az containerapp create `
  --name t1-reservationactor `
  --resource-group $RESOURCE_GROUP `
  --environment $CONTAINERAPPS_ENVIRONMENT `
  --image ($REGISTRY_NAME + "/sample.microservice.reservationactor:2.0") `
  --target-port 80 `
  --ingress 'internal' `
  --min-replicas 1 `
  --max-replicas 1 `
  --enable-dapr `
  --dapr-app-port 80 `
  --dapr-app-id reservationactor-service

Many elements are noteworthy in the previous az containerapp create command. With the enable-dapr parameter, we request to onboard Dapr in this application; with dapr-app-port, we control the internal port Dapr can use to reach our microservice; and with dapr-app-id, we name our microservice in Dapr. With ingress ‘internal’, we indicate our microservice can be invoked by other microservices in the same environment.

Finally, the image parameter gives us the chance to specify the full container image to be used. It can be from an Azure container registry or any other registry such as Docker Hub. As introduced in Chapter 9, Deploying to Kubernetes, you can find ready-to-use images in Docker Hub. For instance, the image aligned with this edition of the book for the reservationactor-service microservice would be davidebedin/sample.microservice.reservationactor:2.0.

By being able to use the very same container image we deployed to a fully managed AKS, we are proving one of the distinctive traits of Azure Container Apps. We are using the same deployment assets, with no changes required to code, to deploy our solution to an environment with capabilities aligned with Kubernetes but with none of its complexities.

Once we have deployed reservationactor-service, please proceed to deploy reservation-service, customization-service, and order-service, as shown in the following screenshot. The instructions are available in the deploy-aca.ps1 file:

Figure 13.5 – Apps listed in an Azure Container Apps environment

As shown in Figure 13.5, if the deployment of the four container apps succeeds, we should be able to see these in the Apps section of the environment resource using the Azure portal.

How can we now test our deployed application? Let’s explore a new option to expose a container app to external clients.

Exposing Azure container apps to external clients

If we examine the configuration for all of our microservices as Dapr applications, each one has ingress as internal: they can communicate with each other, but they are not reachable from external clients. Is something missing here?

In Chapter 10, Exposing Dapr Applications, we learned how to use reverse proxies such as NGINX and an API manager such as Azure API Management to expose our solution to an external audience.

We could apply either of these two approaches to deploy an abstraction layer between our Dapr applications and the externally reachable API. Instead, I took the chance to propose a different approach for a nimble reverse proxy built as a Dapr application with Yet Another Reverse Proxy (YARP) (documentation at https://microsoft.github.io/reverse-proxy/): an open source project for an easy-to-use, yet flexible reverse proxy built with .NET.

Following the very same approach we used to create projects for the first Dapr applications in Chapter 4, Service-to-Service Invocation, I prepared a fully functional project in the sample.proxy folder, with a corresponding Dockerfile in case you want to build it, or a ready-to-use davidebedin/sample.proxy:0.1.6 image available on Docker Hub.

A YARP reverse proxy can be configured via code or the appsettings.json file of the .NET project. You can find the rules at sample.proxy\appsettings.json. As an example of the configuration, let’s observe the following snippet:

{
  … omitted …
  "AllowedHosts": "*",
  "ReverseProxy": {
    "Routes": {
      … omitted …
      "balance" : {
        "ClusterId": "daprsidecar",
        "Match": {
          "Path": "/balance/{**remainder}",
          "Methods" : [ "GET" ]
        },
        "Transforms": [
          { "PathPattern": "invoke/reservation-service/method/balance/{**remainder}" }
        ]
      }
    },
    "Clusters": {
      "daprsidecar": {
        "Destinations": {
          "dapr": {
            "Address": "http://localhost:{{DAPR_HTTP_PORT}}/v1.0/"
          }
        }
      }
    }
  }
}

The preceding configuration shows a simple rule to accept a route with the path as /balance/{**remainder}, in which {**remainder} identifies anything passed afterward. The requested path gets transformed to adhere to the Dapr methods for the sidecar, resulting in an invoke/reservation-service/method/balance/{**remainder} path. The route, then transformed, is sent to a cluster backend, in this case with the Dapr sidecar as a destination.
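To make the rewriting concrete, the route above can be modeled in a few lines of Python. This is only an illustrative sketch, not how YARP is implemented: the function name build_sidecar_url is hypothetical, the port 3500 used in the example is an assumption (the real value comes from DAPR_HTTP_PORT), and only the /balance/... case with a non-empty prefix match is handled.

```python
from typing import Optional

# Values taken from the appsettings.json snippet; everything else is illustrative.
ROUTE_PREFIX = "/balance/"
ALLOWED_METHODS = {"GET"}
TARGET_PATTERN = "invoke/reservation-service/method/balance/{remainder}"


def build_sidecar_url(path: str, method: str, dapr_http_port: int) -> Optional[str]:
    """Return the Dapr sidecar URL for a matching request, or None if no match."""
    if method not in ALLOWED_METHODS or not path.startswith(ROUTE_PREFIX):
        return None
    # {**remainder} captures everything after the matched prefix.
    remainder = path[len(ROUTE_PREFIX):]
    address = f"http://localhost:{dapr_http_port}/v1.0/"
    return address + TARGET_PATTERN.format(remainder=remainder)


if __name__ == "__main__":
    # Port 3500 is assumed here purely for the example.
    print(build_sidecar_url("/balance/bussola1", "GET", 3500))
```

Under these assumptions, a GET to /balance/bussola1 ends up as a service invocation call to reservation-service on the local sidecar, while any other method or path is simply not matched by this route.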

It is very simple to deploy a YARP-based reverse proxy such as this sample.proxy project as a Dapr application in Azure Container Apps. In the deploy-aca.ps1 file, we find the following command:

az containerapp create `
  --name t1-proxy `
  --resource-group $RESOURCE_GROUP `
  --environment $CONTAINERAPPS_ENVIRONMENT `
  --image ($REGISTRY_NAME + "/sample.proxy:0.1.6") `
  --target-port 80 `
  --ingress 'external' `
  --min-replicas 1 `
  --max-replicas 1 `
  --enable-dapr `
  --dapr-app-port 80 `
  --dapr-app-id proxy-service

What has changed in this Dapr-enabled container app, compared to the previous ones, is that the ingress parameter is now set to external.

We can also verify this configuration via the Azure portal, as shown in the following screenshot, which shows the Ingress settings for the container app:

Figure 13.6 – Ingress configuration of a container app

Azure Container Apps will provide an externally reachable address that we can get in the portal or via the CLI, as shown in the following command:

PS C:\Repos\practical-dapr\chapter13> (az containerapp show --resource-group $RESOURCE_GROUP --name t1-proxy --query "properties.configuration.ingress.fqdn" -o tsv)
t1-proxy.OMITTED.azurecontainerapps.io

A layer of indirection between clients and our APIs is always important as it gives us ample options for evolution, especially in a microservices-oriented architecture. A proper, fully-featured API manager such as Azure API Management might be a better choice in some scenarios. In our case, with the objective to simplify the deployment of our solution and offer it as a service to external clients, a simple and effective reverse proxy such as YARP can be a good match.

At this stage, all five Azure container apps have been deployed. Let’s check this with the Azure CLI, with the following command taken from the deploy-aca.ps1 file:

PS C:\Repos\practical-dapr\chapter13> az containerapp list `
  --resource-group $RESOURCE_GROUP `
  --query "[].{Name:name, Provisioned:properties.provisioningState}" `
  -o table
Name                 Provisioned
-------------------  -------------
t1-customization     Succeeded
t1-order             Succeeded
t1-proxy             Succeeded
t1-reservationactor  Succeeded
t1-reservation       Succeeded

Our next step is to test our solution deployed to Azure Container Apps and observe its behavior.

Observing Azure container apps

Azure Container Apps offers several tools and approaches to monitor the health and behavior of our applications, as listed here:

- Log streaming

- Application Insights

- Logs in Log Analytics

- Container console

- Azure Monitor

Let’s examine the first two, log streaming and Application Insights:

- Log streaming: This is the equivalent of the kubectl logs command we learned to use in Chapter 9, Deploying to Kubernetes. This time, we can read the log output coming from all the instances of a container in a specific Azure container app, in the Azure portal or via the Azure CLI. With log streaming, you get a stream of real-time logs from the container app stdout and stderr output. You can learn more at https://docs.microsoft.com/en-us/azure/container-apps/observability?tabs=powershell#log-streaming.

- Application Insights: This is an observability option for Dapr that we only mentioned in the previous chapters. With Azure Container Apps in conjunction with Dapr, it is the primary end-to-end (E2E) observability option.

Before we learn how to leverage these two options, let’s run a test of the overall solution, which, in turn, will generate log messages and metrics we can observe. In the test.http file, we have our API calls submitting an order and retrieving the resulting quantity balance for a product involved in the overall process, from order to customization:

@baseUrl = x.y.z.k

###
GET https://{{baseUrl}}/balance/bussola1

###
# NON problematic order
# @name simpleOrderRequest
POST https://{{baseUrl}}/order HTTP/1.1
content-type: application/json

{
  "CustomerCode": "Davide",
  "Date": "{{$datetime 'YYYY-MM-DD'}}",
  "Items": [
    … omitted …
  ],
  "SpecialRequests" : []
}
… continued …

In the snippet from test.http, you have to replace the value of the @baseUrl variable with the externally reachable Fully Qualified Domain Name (FQDN) of the Azure container app named t1-proxy, which acts as the external endpoint for the solution: we obtained it via the CLI in the previous section; otherwise, you can read it from the Azure portal.

If we succeeded in the configuration and deployment of our solution in Azure Container Apps, we should receive a positive result with the balance of a stock-keeping unit (SKU) (a product), and our order should have been accepted. If so, it means the microservices of our solution successfully managed to interact via Dapr.

It is time to first observe the t1-proxy container app. In the Azure portal, let’s select the Log Stream option from the Monitoring section. As depicted in Figure 13.7, we have the choice to observe the container for the application or the Dapr sidecar container:

Figure 13.7 – Log stream of the t1-proxy container app

As we can observe in the preceding screenshot, we see the logs confirming our requests reached the YARP-based reverse proxy and have been processed by interacting with the local Dapr sidecar.

As both getting a balance and posting an order end up interacting with the reservationactor-service microservice running in the t1-reservationactor container app, we can observe interactions reaching this microservice via the Dapr service-to-service and actor building blocks by looking at the output in its log stream, as follows:

Figure 13.8 – Log stream of the t1-reservationactor container app

As shown in Figure 13.8, with Azure Container Apps, we can have the same visibility on the output from a microservice that we have locally in our development environment, all with the simplicity of the Azure portal or CLI.

Another observability option in Azure Container Apps is Application Insights. While provisioning the container app environment with az containerapp env create … --dapr-instrumentation-key, we specified an Application Insights instance to be configured for the Dapr observability building block.

If we inspect the Application map section in the Azure Application Insights resource, we should see a representation of interactions between our Dapr microservices, like the one in Figure 13.9:

Figure 13.9 – Application Insights application map

In Chapter 11, Tracing Dapr Applications, we explored the several options Dapr provides us for observing the interactions between microservices: in the deployment of our solution to AKS, we explored Zipkin, while Application Insights was an option we did not explore. In Azure Container Apps, Application Insights is the primary observability option for Dapr microservices.

In the next section, we will explore a powerful feature of Azure Container Apps to scale workload based on load.

Autoscaling Azure Container Apps with KEDA

In this section, we will learn how to leverage the autoscale features of Azure Container Apps. We will cover the following main topics:

- Learning about KEDA autoscalers

- Applying KEDA to Azure container apps

- Testing KEDA with container apps

Our first objective is to learn about KEDA.

Learning about KEDA autoscalers

The Azure Container Apps service provides a powerful abstraction on a complex topic such as scaling microservices by abstracting the Kubernetes default scaling concepts and by adopting KEDA. You can learn all about it in the documentation at https://docs.microsoft.com/en-us/azure/container-apps/scale-app.

KEDA is an open source project started by Red Hat and Microsoft, now a CNCF incubating project, that extends the capabilities of the Kubernetes autoscaler to allow developers to scale workload on event-based metrics coming from an incredibly diverse set of scalers. As an example, with KEDA, you can scale your application component based on the number of pending messages in an Azure Service Bus subscription. You can find more details about KEDA at https://keda.sh/.

In Chapter 12, Load Testing and Scaling Dapr, we experimented with the Locust open source testing framework to generate, relying on Azure resources, an increasing volume of requests on our applications. The testing framework simulated a pattern of requests against the APIs to measure the response of the infrastructure and applications under load and to verify how the scaling behavior in an AKS deployment could help to achieve optimal performance.

In Chapter 12, we also specified scaling rules leveraging the default metrics available in any Kubernetes autoscaler: usage of CPU and memory. Those metrics are an effective scaling indicator for memory- and CPU-intensive workloads.

It is worth reminding you that, by leveraging Dapr, most data operations occur outside of the microservices in our solution. The Dapr sidecar performs input/output (I/O) operations toward Dapr components, relying on Platform as a Service (PaaS) services such as Azure Cosmos DB for managing the state of each microservice, and asynchronous communications between microservices are supported by Azure Service Bus.

Scaling up and down the instances of a microservice based on the number of pending messages in a queue is a far more effective approach to anticipating the stress conditions than measuring the impact of too many messages reaching too few instances. KEDA is a powerful approach to anticipating, rather than reacting to, stress conditions common to event-driven architecture (EDA).

Azure Container Apps leverages many open source technologies, including KEDA, which enables us to configure scaling rules on external resources; for example, we can scale a container app based on the pending messages in an Azure Service Bus subscription.

As we have learned the basics of KEDA autoscalers, it is time to apply these configurations to each of our container apps.

Applying KEDA to Azure container apps

Our first objective is to configure scale rules for our container apps. Secondly, we will launch a load test with Locust to verify whether the container apps scale according to plan.

In the scale folder, I prepared .yaml files with scale rules for each of the five container apps. We will configure these via the Azure CLI with the code prepared in scale-aca.ps1.

For instance, let’s see the content of the scale\scale-reservationservice.yaml file, which is going to be applied to the reservation-service microservice configuration:

properties:
  template:
    scale:
      minReplicas: 1
      maxReplicas: 5
      rules:
      - name: servicebus
        custom:
          type: azure-servicebus
          metadata:
            topicName: onorder_submitted
            subscriptionName: reservation-service
            messageCount: 10
          auth:
          - secretRef: pubsub-secret
            triggerParameter: connection

In the previous snippet, we configured the container app to scale between 1 and 5 replicas, following a custom rule using the KEDA scaler of type azure-servicebus. This instructs the KEDA component in Azure Container Apps to add an instance if there are more than 10 pending messages, as specified with messageCount in the subscriptionName of the topicName. You can also notice a secret named pubsub-secret is referenced.
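As a rough mental model of this rule, the sketch below estimates how many replicas the autoscaler would aim for given a number of pending messages. It is a simplification written for illustration: it assumes the autoscaler targets messageCount pending messages per replica and ignores polling intervals, cooldown, and stabilization behavior. The function name desired_replicas is hypothetical; the default values mirror the .yaml file above.

```python
import math


def desired_replicas(pending_messages: int,
                     message_count: int = 10,
                     min_replicas: int = 1,
                     max_replicas: int = 5) -> int:
    """Estimate the replica count for a queue-length-based scale rule.

    Simplified model: one replica per `message_count` pending messages,
    clamped between `min_replicas` and `max_replicas`.
    """
    wanted = math.ceil(pending_messages / message_count)
    return max(min_replicas, min(max_replicas, wanted))


if __name__ == "__main__":
    for pending in (0, 10, 11, 35, 500):
        print(pending, "pending ->", desired_replicas(pending), "replica(s)")
```

With these assumptions, the container app stays at one replica up to 10 pending messages, grows as the backlog grows, and never exceeds the maxReplicas value of 5, no matter how long the subscription backlog becomes.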

In Chapter 6, Publish and Subscribe, we learned how Dapr can leverage Azure Service Bus for messaging between microservices. While exploring our sample solution, Dapr automatically created several topics and subscriptions in Azure Service Bus. KEDA will observe these subscription metrics to understand if the microservice is processing messages as fast as possible or is in need of extra help by an additional instance.

In addition to KEDA, Azure Container Apps provides CPU, memory, and HTTP scale rules. Let’s examine the following scale\scale-proxy.yaml file prepared for the proxy-service microservice:

properties:
  template:
    scale:
      minReplicas: 1
      maxReplicas: 4
      rules:
      - name: httpscale
        http:
          metadata:
            concurrentRequests: '10'
      - name: cpuscalerule
        custom:
          type: cpu
          metadata:
            type: averagevalue
            value: '50'

In the previous snippet, we ask to scale between 1 and 4 instances if there are more than 10 concurrent HTTP requests or if the CPU exceeds 50% on average.
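When several rules are configured, it helps to picture each rule proposing its own replica count, with the largest proposal winning. The sketch below illustrates that idea under stated assumptions: it borrows the proportional formula used by the Kubernetes Horizontal Pod Autoscaler for the CPU rule, treats the HTTP rule as one replica per 10 concurrent requests, and uses hypothetical function names. It is an illustration of the reasoning, not the actual Azure Container Apps implementation.

```python
import math


def http_rule(concurrent_requests: int, per_replica: int = 10) -> int:
    """Replicas proposed by the HTTP rule: one per `per_replica` requests."""
    return math.ceil(concurrent_requests / per_replica)


def cpu_rule(current_replicas: int, avg_cpu_percent: float,
             target_percent: float = 50.0) -> int:
    """Replicas proposed by the CPU rule, proportional to current load."""
    return math.ceil(current_replicas * avg_cpu_percent / target_percent)


def combined_replicas(current_replicas: int, concurrent_requests: int,
                      avg_cpu_percent: float,
                      min_replicas: int = 1, max_replicas: int = 4) -> int:
    """Take the most demanding proposal, clamped to the configured bounds."""
    wanted = max(http_rule(concurrent_requests),
                 cpu_rule(current_replicas, avg_cpu_percent))
    return max(min_replicas, min(max_replicas, wanted))


# 25 concurrent requests dominate a lightly loaded CPU.
print(combined_replicas(current_replicas=1, concurrent_requests=25, avg_cpu_percent=20))
# A hot CPU dominates when HTTP traffic is low.
print(combined_replicas(current_replicas=2, concurrent_requests=5, avg_cpu_percent=90))
```

Either rule alone is enough to trigger a scale-out, which matches the "or" in the description of the proxy-service rules.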

How can we apply these new scale rules to the configurations of our deployed container apps? We will use the Azure CLI to create a new revision for each container app. As an example, let’s examine a snippet from scale-aca.ps1:

az containerapp secret set `
  --name t1-reservation `
  --resource-group $RESOURCE_GROUP `
  --secrets pubsub-secret=$SB_CONNECTION_STRING
… omitted …
az containerapp revision copy `
  --name t1-reservation `
  --resource-group $RESOURCE_GROUP `
  --yaml .\scale\scale-reservationservice.yaml

In the previous code snippet, we first create a secret to hold the connection string to the Azure Service Bus resource, and then we create a new revision for the t1-reservation container app, specifying the scale\scale-reservationservice.yaml file for all the configurations.
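Since the same revision copy command is repeated for each container app, the pattern can be sketched as a small loop. The following Python sketch only builds and prints the commands; it does not run them. It lists just the two scale files named in this chapter, the pairing of t1-proxy with scale-proxy.yaml is an assumption, and the resource group name and function names are hypothetical placeholders.

```python
# Container apps and the scale files described in this chapter.
# The t1-proxy pairing is an assumption; the other apps are omitted.
SCALE_FILES = {
    "t1-reservation": r".\scale\scale-reservationservice.yaml",
    "t1-proxy": r".\scale\scale-proxy.yaml",
}


def revision_copy_command(app_name: str, resource_group: str, yaml_path: str) -> list:
    """Build the argument list for 'az containerapp revision copy'."""
    return [
        "az", "containerapp", "revision", "copy",
        "--name", app_name,
        "--resource-group", resource_group,
        "--yaml", yaml_path,
    ]


def build_commands(resource_group: str) -> list:
    """Build one revision copy command per container app."""
    return [
        revision_copy_command(app_name, resource_group, yaml_path)
        for app_name, yaml_path in SCALE_FILES.items()
    ]


# Dry run: print the commands for review instead of executing them.
for command in build_commands("my-resource-group"):  # hypothetical name
    print(" ".join(command))
```

Printing the commands first is a cautious way to review what would be applied before creating new revisions.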

Once the command has been executed, a new revision is created.

Revision management

In the default single revision mode, only one revision is active at a time: the new one takes the place of the old revision.

Azure Container Apps can support more complex scenarios. In multiple revision mode, for example, you can split traffic between the existing revision and the new one, both active. The traffic splitting of Azure Container Apps can be instructed to direct a small portion of traffic to the revision carrying the updated application, while most requests continue to be served by the previous revision.

As is common with canary deployment practices, if the newer revision behaves properly, the traffic can be increasingly shifted toward it. Finally, the previous revision can be disabled.
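The canary approach described above can be illustrated with a small simulation. The following sketch routes simulated requests between two revisions according to percentage weights and then shifts the weights step by step toward the newer revision. The revision labels, the starting 90/10 split, and the 30-point step are all hypothetical; this shows the idea of weighted traffic splitting, not how Azure Container Apps implements it.

```python
import random
from collections import Counter


def route(weights: dict, rng: random.Random) -> str:
    """Pick a revision with probability proportional to its traffic weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def shift_traffic(weights: dict, old: str, new: str, step: int) -> dict:
    """Move up to `step` percentage points of traffic from `old` to `new`."""
    moved = min(step, weights[old])
    return {**weights, old: weights[old] - moved, new: weights[new] + moved}


rng = random.Random(42)  # fixed seed so repeated runs are comparable
weights = {"previous": 90, "new": 10}  # hypothetical revision labels

# Keep shifting traffic until the previous revision receives none.
while weights["previous"] > 0:
    hits = Counter(route(weights, rng) for _ in range(1000))
    print(f"weights={weights} observed={dict(hits)}")
    weights = shift_traffic(weights, "previous", "new", step=30)

print(f"final weights={weights}")
```

In a real rollout, each shift would be gated on the health of the newer revision rather than applied unconditionally as it is in this loop.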

You can find more information about revision management in Container Apps in the documentation at https://docs.microsoft.com/en-us/azure/container-apps/revisions.

Let’s examine a scale rule on the Azure portal, as shown in the following screenshot.

Figure 13.10 – KEDA scale rule applied to a container app

As shown in Figure 13.10, the Scale setting for the container app named t1-reservation, which supports the reservation-service Dapr application, shows the scale rule we configured with the KEDA scaler.

If we successfully executed all the commands in scale-aca.ps1, we should have new revisions for all five of our container apps, some with scale rules reacting to pending messages in an Azure Service Bus subscription, and others with scale rules reacting to average CPU or memory usage.

Our next step is to run a load test on our solution deployed to Azure Container Apps to test if the scale rules have any impact on the number of instances.

Testing KEDA with container apps

We will reuse the knowledge gathered in Chapter 12, Load Testing and Scaling Dapr, to run a load test with the Locust load testing framework.

If you forgot the syntax, I suggest you go back to the Chapter 12 instructions to refresh your knowledge of Locust and the distributed testing infrastructure leveraging Azure resources.

In the scope of this chapter, the load test can be the very same one available in Chapter 12 or the test provided in the loadtest\locustfile.py file. The main change we need to apply to the Locust test, whether we are launching it via the command line or accessing the web UI deployed to Azure, is to replace the hostname with the URL of the ingress to the externally exposed t1-proxy container app, which can also be gathered with the CLI command, as previously described in this chapter. The rest of the test is the same.

The load test is designed to request balances and submit orders, randomly picking products (also known as SKUs) from a previously defined set. If you are reusing the state stores from Chapter 12 in this chapter, you probably already have a range of products available. Conversely, if you reset the data or provisioned new state stores, the loader\generatedata.py file contains a Python script to provision the SKUs/products so that the load test can find them.

With the following command, you can regenerate the state for all the SKUs you need in the tests, as the script leverages a route supported by proxy-service to refill the balance of SKUs in the catalog:

PS C:\Repos\practical-dapr\chapter13> python .\loader\generatedata.py https://<BASE_URL>
SKU:cookie000, Balance: {"sku":"cookie000","balanceQuantity":63}
SKU:cookie001, Balance: {"sku":"cookie001","balanceQuantity":32}
… omitted …

After you have made sure there are plenty of products in the configured state stores, we can proceed with the load test.

Please consider that there are many factors influencing the outcome of a load test, especially the capacity of the state store’s backend (Azure Cosmos DB) and the message bus (the Azure Service Bus resource). Luckily for us, these cloud services can reach the performance objectives if we properly configure the service-level tier.

Given this preparation and context, let’s launch a Locust load test and see if the Azure Container Apps instances scale as expected.

One way to get a comprehensive view of the instance count for each of the five container apps is to compose a dashboard in the Azure portal displaying the Replica Count metric, accessible from the Metrics sidebar menu in the Monitoring section of an Azure container app page. For more information on how to compose dashboards in the Azure portal, please see the documentation at https://docs.microsoft.com/en-us/azure/azure-portal/azure-portal-dashboards. For a deep dive into Azure Container Apps monitoring, please check https://docs.microsoft.com/en-us/azure/container-apps/observability?tabs=bash#azure-monitor-metrics. The following screenshot shows such a dashboard:

Figure 13.11 – Azure Container Apps instances scaling up

In Figure 13.11, we can see that t1-reservation, t1-customization, and t1-reservationactor container apps are scaling up.

The first two container apps, corresponding to reservation-service and customization-service Dapr microservices, have KEDA scale rules triggered by the increasing number of pending messages in their respective Azure Service Bus subscriptions.

The last one, corresponding to the reservationactor-service Dapr application, is scaling based on the overall memory usage. As this microservice is leveraging the actor building block of Dapr, memory could be a good indicator of growth in demand: the more actors kept alive, the more memory needed.

In this section, we learned how to create new revisions of Azure container apps with the intent of setting up scale rules, using default and KEDA scalers.

Summary

In this chapter, we learned the core concepts of Azure Container Apps, the newest serverless container option in Azure.

We effortlessly managed to deploy the Dapr microservices to Azure Container Apps with no changes to the code or containers. By leveraging a simplified control plane via the Azure portal and the Azure CLI, we configured a complex solution with minimal effort.

By experimenting with revisions, we briefly touched on a powerful feature of Azure Container Apps that can simplify the adoption of blue/green or canary deployment practices.

By testing the scaling features in Azure Container Apps, we learned how easy it is to leverage KEDA, as it is an integral part of the Azure service.

The objective of the fictional company behind the Biscotti Brutti Ma Buoni solution, stated at the beginning of this chapter, was to find a suitable platform to offer the solution we built with Dapr in this book to external businesses with a SaaS approach.

While the fictional company had experience with Kubernetes, a serverless platform such as Azure Container Apps will greatly simplify the management of their solution, providing a scalable managed environment with built-in support for Dapr.

With this chapter, we complete our journey in learning Dapr through the development, configuration, and deployment of a microservices-oriented architecture.

There are many topics to be explored in Dapr at https://docs.dapr.io/. As we learned, Dapr is a rapidly growing open source project with a rich roadmap (see https://docs.dapr.io/contributing/roadmap/), and lots of interesting new features are planned for the next versions of Dapr.

I hope you found this book interesting, that you learned the basics of Dapr, and that it stimulated you to learn more.