C H A P T E R 14

![]()

External Tables

Sometimes DBAs and developers don’t grasp the utility of external tables. The Oracle external-table feature enables you to perform two distinct operations:

- Directly select information from operating-system flat files via SQL, which allows you to do tasks such as loading operating-system comma-separated-value (CSV) files into the database.

- Create platform-independent dump files that can be used to transfer data. You can also create these files as compressed and encrypt them for efficient and secure data transportation.

One common use of an external table is that it allows you to use SQL*Plus to select data from an operating-system flat file. When using an external table in this mode, you must specify what type of data is in the file and how it’s organized. You can select from an external table but aren’t permitted to modify the data (no inserts, updates, or deletes).

You can also use an Oracle’s external-table feature that lets you select data from the database and write that information to a binary dump file. The definition of the external table defines what tables and columns will be used to unload data. Using an external table in this mode provides a method for extracting large amounts of data to a platform-independent file that you can later load into a different database.

All that is required to enable external tables is to first create a database-directory object that specifies the location of the operating-system file. Then, you use the CREATE TABLE...ORGANIZATION EXTERNAL statement to make the database aware of operating-system files that can be used as sources or targets of data.

This chapter starts by comparing using SQL*Loader—Oracle’s traditional data-loading utility—to external tables for the loading of data into the database. Several examples illustrate the flexibility and power of using external tables as a loading and data-transformation tool. The chapter finishes with an external-table example of how to unload data into a dump file.

SQL*Loader vs. External Tables

One general use of an external table lets you use SQL to load data from an operating-system file into a regular database table. This facilitates the loading of large amounts of data from flat files into the database. In older versions of Oracle, this type of loading was performed via SQL*Loader or through custom Pro*C programs.

Almost anything you can do with SQL*Loader, you can achieve with external tables. External tables are more flexible and intuitive to use than SQL*Loader. Additionally, you can obtain very good performance when loading data with external tables by using direct path and parallel features.

A quick comparison of using SQL*Loader and external tables highlights the differences. Listed next are the SQL*Loader steps that you use to load and transform of data:

- Create a parameter file that SQL*Loader uses to interpret the format of the data in the operating-system file.

- Create a regular database table into which SQL*Loader will insert records. The data will be staged here until it can be further processed.

- Run the SQL*Loader

sqlldrutility to load data from the operating-system file into the database table (created in step 2). When loading data, SQL*Loader has some features that allow you to transform data. This step is sometimes frustrating because it can take several trial-and-error runs to correctly map the parameter file to the table and corresponding columns. - Create another table that will contain the completely transformed data.

- First run SQL statements to load the data from the staging table (created in step 2); then transform and insert the data into the production table (created in step 4).

Compare the previous SQL*Loader list to the following steps to load and transform data using external tables:

- Execute a

CREATE TABLE...ORGANIZATION EXTERNALscript that maps the structure of the operating-system file to table columns. After this script is run, you can directly use SQL to query the contents of the operating-system file. - Create a regular table to hold the completely transformed data.

- Run SQL statements to load and fully transform the data from the external table into the table created in step 2.

For many shops, SQL*Loader underpins large data-loading operations. It continues to be a good tool to use for that task. However, you may want to investigate using external tables. External tables have the following advantages:

- Loading data with external tables is more straightforward and requires fewer steps.

- The interface for creating and loading from external tables is SQL*Plus. Many DBAs/developers find using SQL*Plus more intuitive and powerful than SQL*Loader’s parameter-file interface.

- You can view data in an external table before it’s loaded into a database table.

- You can load, transform, and aggregate the data without an intermediate staging table. For large amounts of data, this can be a huge space savings.

The next several sections contain examples of using external tables to read from operating-system files.

Loading CSV Files into the Database

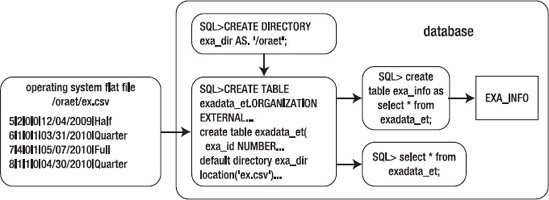

You can load small or very large CSV flat files into the database using external tables and SQL. Figure 14–1 shows the architectural components involved with using an external table to view and load data from an operating-system file. A directory object is required that specifies the location of the operating-system file. The CREATE TABLE...ORGANIZATION EXTERNAL statement creates a database object that SQL*Plus can use to directly select from the operating-system file.

Figure 14–1. Architectural components of an external table used to read a flat file

Here are the steps for using an external table to access an operating-system flat file:

- Create a database-directory object that points to the location of the CSV file.

- Grant read and write privileges on the directory object to the user creating the external table. I usually use a DBA privileged account, so I don’t need to perform this step.

- Run the

CREATE TABLE...ORGANIZATION EXTERNALstatement. - Use SQL*Plus to access the contents of the CSV file.

In this example, the flat file is named ex.csv and is located in the /oraet directory. It contains the following data:

5|2|0|0|12/04/2009|Half

6|1|0|1|03/31/2010|Quarter

7|4|0|1|05/07/2010|Full

8|1|1|0|04/30/2010|Quarter

![]() Note Some of the CSV file examples used in this chapter are actually separated by characters other than a comma, such as a pipe

Note Some of the CSV file examples used in this chapter are actually separated by characters other than a comma, such as a pipe | character. The character used depends on the data and the user who supplies the CSV file. CSV files are also often commonly referred to as flat files.

Creating a Directory Object and Granting Access

First, create a directory object that points to the location of the flat file on disk:

SQL> create directory exa_dir as '/oraet';

This example uses a database account that has the DBA role granted to it; therefore, you don’t need to grant READ and WRITE to the directory object to the user (your account) that is accessing the directory object. If you’re not using a DBA account to read from the directory object, then grant these privileges to the account using this object:

SQL> grant read, write on directory exa_dir to reg_user;

Creating an External Table

Next, fashion the script that creates the external table that will reference the flat file. The CREATE TABLE...ORGANIZATION EXTERNAL statement provides the database with the following information:

- How to interpret data in the flat file, and a mapping of data in file-to-column definitions in the database

- A

DEFAULT DIRECTORYclause that identifies the directory object, which in turn specifies the directory of the flat file on disk - The

LOCATIONclause, which identifies the name of the flat file

The next statement creates a database object that looks like a table yet is able to retrieve data directly from the flat file:

create table exadata_et(

exa_id NUMBER

,machine_count NUMBER

,hide_flag NUMBER

,oracle NUMBER

,ship_date DATE

,rack_type VARCHAR2(32)

)

organization external (

type oracle_loader

default directory exa_dir

access parameters

(

records delimited by newline

fields terminated by '|'

missing field values are null

(exa_id

,machine_count

,hide_flag

,oracle

,ship_date char date_format date mask "mm/dd/yyyy"

,rack_type)

)

location ('ex.csv')

)

reject limit unlimited;

An external table named EXADATA_ET is created when you execute this script. Now, use SQL*Plus to view the contents of the flat file:

SQL> select * from exadata_et;

EXA_ID MACHINE_COUNT HIDE_FLAG ORACLE SHIP_DATE RACK_TYPE

---------- ------------- ---------- ---------- --------- ---------

5 2 0 0 04-DEC-09 Half

6 1 0 1 31-MAR-10 Quarter

7 4 0 1 07-MAY-10 Full

8 1 1 0 30-APR-10 Quarter

Viewing External-Table Metadata

At this point, you can also view metadata regarding the external table. Query the DBA_EXTERNAL_TABLES view for details:

select

owner

,table_name

,default_directory_name

,access_parameters

from dba_external_tables;

Here’s a partial listing of the output:

OWNER TABLE_NAME DEFAULT_DIRECTO ACCESS_PARAMETERS

--------- ----------------- ------------------ ---------------------------

BARTS EXADATA_ET EXA_DIR records delimited by newline

Additionally, you can select from the DBA_EXTERNAL_LOCATIONS table for information regarding any flat files referenced in an external table:

select

owner

,table_name

,location

from dba_external_locations;

Here’s some sample output:

OWNER TABLE_NAME LOCATION

--------------- -------------------- ---------------

BARTS EXADATA_ET ex.csv

Loading a Regular Table from the External Table

Now you can load data contained in the external table into a regular database table. When you do this, you can take advantage of Oracle’s direct-path loading and parallel features. This example creates a regular database table that will be loaded with data from the external table:

create table exa_info(

exa_id NUMBER

,machine_count NUMBER

,hide_flag NUMBER

,oracle NUMBER

,ship_date DATE

,rack_type VARCHAR2(32)

) nologging parallel 2;

You can direct-path load this regular table (via the APPEND hint) from the contents of the external table as follows:

SQL> insert /*+ APPEND */ into exa_info select * from exadata_et;

You can verify that the table was direct-path loaded by attempting to select from it before you commit the data:

SQL> select * from exa_info;

Here’s the expected error:

ORA-12838: cannot read/modify an object after modifying it in parallel

After you commit the data, you can select from the table:

SQL> commit;

SQL> select * from exa_info;

The other way to direct-path load a table is to use the CREATE TABLE AS SELECT (CTAS) statement. A CTAS statement automatically attempts to do a direct-path load. In this example, the EXA_INFO table is created and loaded in one statement:

SQL> create table exa_info nologging parallel 2 as select * from exadata_et;

By using direct-path loading and parallelism, you can achieve loading performance similar to that from using SQL*Loader. The advantage of using SQL to create a table from an external table is that you can now perform complex data transformations using standard SQL*Plus features when building your regular database table (EXA_INFO, in this example).

Any CTAS statements automatically process with the degree of parallelism that has been defined for the underlying table. However, when you use INSERT AS SELECT statements, you need to enable parallelism for the session:

SQL> alter session enable parallel dml;

As a last step, you should generate statistics for any table that has been loaded with a large amount of data. Here’s an example:

exec dbms_stats.gather_table_stats(-

ownname=>'BARTS',-

tabname=>'EXA_INFO',-

estimate_percent => 20, -

cascade=>true);

Performing Advanced Transformations

Oracle provides sophisticated techniques for transforming data. This section details how to use a pipelined function to transform data in an external table. Listed next are the steps for doing this:

- Create an external table.

- Create a record type that maps to the columns in the external table.

- Create a table based on the record type created in step 2

- Create a piplelined function that is used to inspect each row as it’s loaded and transform data based on business requirements.

- Use an

INSERTstatement that selects from the external table and uses the pipelined function to transform data as it’s loaded.

This example uses the same external table and CSV file created in the previous section on loading data from CSV files. Recall that the external table name is EXADATA_ET and the CSV file name is ex.csv. After you create the external table, next create a record type that maps to the column names in the external table:

create or replace type rec_exa_type is object

(

exa_id number

,machine_count number

,hide_flag number

,oracle number

,ship_date date

,rack_type varchar2(32)

);

/

Next, create a table based on the previous record type:

create or replace type table_exa_type is table of rec_exa_type;

/

Oracle PL/SQL allows you to use functions as a row source for SQL operations. This feature is known as pipelining. It lets you use complex transformation logic combined with the power of SQL*Plus. For this example, you create a pipelined function to transform selected column data as it’s loaded. Specifically, this function adds 30 days to the SHIP_DATE when the ORACLE column has a 0 value:

create or replace function exa_trans

return table_exa_type pipelined is

begin

for r1 in

(select rec_exa_type(

exa_id, machine_count, hide_flag

,oracle, ship_date, rack_type

) exa_rec

from exadata_et) loop

if (r1.exa_rec.oracle = 0) then

r1.exa_rec.ship_date := r1.exa_rec.ship_date + 30;

end if;

pipe row (r1.exa_rec);

end loop;

return;

end;

/

Now you can use this function to load data into a regular database table. For reference, here’s the CREATE TABLE statement that instantiates the table to be loaded:

create table exa_info(

exa_id NUMBER

,machine_count NUMBER

,hide_flag NUMBER

,oracle NUMBER

,ship_date DATE

,rack_type VARCHAR2(32)

) nologging parallel 2;

Next, use the pipelined function to transform data selected from the external table and insert it into the regular database table in one step:

SQL> insert into exa_info select * from table(exa_trans);

Here’s the data that was loaded into the EXA_INFO table for this example:

SQL> select * from exa_info;

EXA_ID MACHINE_COUNT HIDE_FLAG ORACLE SHIP_DATE RACK_TYPE

---------- ------------- ---------- ---------- --------- ------------

5 2 0 0 03-JAN-10 Half

6 1 0 1 31-MAR-10 Quarter

7 4 0 1 07-MAY-10 Full

8 1 1 0 30-MAY-10 Quarter

Although the example in this section is simple, you can use the technique to apply any degree of sophisticated transformational logic. This technique allows you to embed the transformation requirements in a pipelined PL/SQL function that modifies data as each row is loaded.

Viewing Text Files from SQL

External tables allow you to use SQL SELECT statements to retrieve information from operating-system flat files. For example, say you want to report on the contents of the alert log file. First, create a directory object that points to the location of the alert log:

SQL> select value from v$parameter where name ='background_dump_dest';

Here’s the output for this example:

/oracle/app/oracle/diag/rdbms/o11r2/O11R2/trace

Next, create a directory object that points at the location of the background dump destination:

SQL> create directory t_loc as '/oracle/app/oracle/diag/rdbms/o11r2/O11R2/trace';

Now, create an external table that maps to the database alert log operating-system file. In this example, the database name is O11R2, and thus the alert log file name is alert_O11R2.log:

create table alert_log_file(

alert_text varchar2(4000))

organization external

( type oracle_loader

default directory t_loc

access parameters (

records delimited by newline

nobadfile

nologfile

nodiscardfile

fields terminated by '#$~=ui$X'

missing field values are null

(alert_text)

)

location ('alert_O11R2.log')

)

reject limit unlimited;

You can query the table via SQL queries. For example:

SQL> select * from alert_log_file where alert_text like 'ORA-%';

This allows you to use SQL to view and report on the contents of the alert log. You may find this a convenient way to provide SQL access to otherwise inaccessible operating-system files.

The ACCESS PARAMETERS clause of an external table’s ORACLE_LOADER access driver may look familiar if you’ve previously worked with SQL*Loader. Table 14–1 describes some of the more commonly used access parameters. For a full list of access parameters, see Oracle’s Database Utilities guide (available on OTN).

Table 14–1. Selected Access Parameters for the ORACLE_LOADER Driver

| Access Parameter | Description |

DELIMITED BY |

Indicates which character delimits the fields |

TERMINATED BY |

Indicates how a field is terminated |

FIXED |

Specifies the size of records having a fixed length |

BADFILE |

Name of the file that stores records that can’t be loaded due to an error |

NOBADFILE |

Specifies that a file shouldn’t be created to hold records that can’t be loaded due to errors |

LOGFILE |

Name of the file in which general messages are recorded when creating an external table |

NOLOGFILE |

Specifies that no log file should be created |

DISCARDFILE |

Names the file to which records are written that fail the LOAD WHEN clause |

NODISCARDFILE |

Specifies that no discard file should be created |

SKIP |

Skips the specified number of records in the file before loading |

PREPROCESSOR |

Specifies the user-named program that runs and modifies the contents of the file before Oracle loads the data |

MISSING FIELD VALUES ARE NULL |

Loads fields that have no data as NULL values |

Unloading and Loading Data Using an External Table

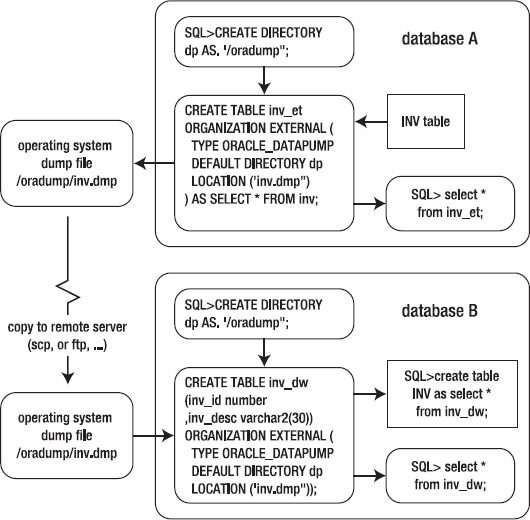

Another use for external tables is to select data from a regular database table and create a binary dump file. This is referred to as unloading data. The advantage of this technique is that the dump file is platform independent and can be used to move large amounts of data between servers of different platforms.

You can also encrypt and/or compress data when creating the dump file. Doing so provides you with an efficient and secure way of transporting databases between database servers.

Figure 14–2 illustrates the components involved with using an external table to unload and load data. On the source database (referred to as Database A), you create a dump file using an external table that selects data from a table named INV. After it’s created, you copy the dump file to a destination server (referenced as Database B) and subsequently load it into the database using an external table.

Figure 14–2. Using external tables to unload and load data

A small example illustrates the technique of using an external table to unload data. Here are the steps required:

- Create a directory object that specifies where you want the dump file placed on disk. If you’re not using a DBA account, then grant read and write access to the directory object to the database user that needs access.

- Use the

CREATE TABLE...ORGANIZATION EXTERNAL...AS SELECTstatement to unload data from the database into the dump file.

First, create a directory object. The next bit of code creates a directory object named DP that points at the /oradump directory:

S-QL> create directory dp as '/oradump';

If you’re not using a user with DBA privileges, then explicitly grant access to the directory object to the required user:

SQL> grant read, write on directory dp to larry;

To create a dump file, use the ORACLE_DATAPUMP access driver of the CREATE TABLE...ORGANIZATION EXTERNAL statement. This example unloads the INV table’s contents into the inv.dmp file:

CREATE TABLE inv_et

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

LOCATION ('inv.dmp')

)

AS SELECT * FROM inv;

The previous command does two things:

- Creates an external table named INV_ET based on the INV table

- Creates a platform-independent dump file named

inv.dmp

Now you can copy the inv.dmp file to a separate database server and base an external table on this dump file. The remote server (to which you copy the dump file) can be a different platform than the server where you created the file. For example, you can create a dump file on a Windows box, copy to a Solaris server, and select from the dump file via an external table. In this example, the external table is named INV_DW:

CREATE TABLE inv_dw

(inv_id number

,inv_desc varchar2(30))

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

LOCATION ('inv.dmp')

);

After it’s created, you can access the external table data from SQL*Plus:

SQL> select * from inv_dw;

You can also create and load data into regular tables using the dump file:

SQL> create table inv as select * from inv_dw;

This provides a simple and efficient mechanism for transporting data from one platform to another.

Using Parallelism to Reduce Elapsed Time

To maximize the unload performance when you create a dump file via an external table, use the PARALLEL clause. This example creates two dump files in parallel:

CREATE TABLE inv_et

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

LOCATION ('inv1.dmp','inv2.dmp')

)

PARALLEL 2

AS SELECT * FROM inv;

To access the data in the dump files, create a different external table that references the two dump files:

CREATE TABLE inv_dw

(inv_id number

,inv_desc varchar2(30))

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

LOCATION ('inv1.dmp','inv2.dmp')

);

You can now use this external table to select data from the dump files:

SQL> select * from inv_dw;

Compressing a Dump File

You can create a compressed dump file via an external table. For example, use the COMPRESS option of the ACCESS PARAMETERS clause:

CREATE TABLE inv_et

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

ACCESS PARAMETERS (COMPRESSION ENABLED)

LOCATION ('inv1.dmp')

)

AS SELECT * FROM inv;

You should see quite good compression ratios when using this option. In my testing, the output dump file was 10 to 20 times smaller when compressed. Your mileage may vary, depending on the type data being compressed.

Encrypting a Dump File

You can also create an encrypted dump file using an external table. This example uses the ENCRYPTION option of the ACCESS PARAMETERS clause:

CREATE TABLE inv_et

ORGANIZATION EXTERNAL (

TYPE ORACLE_DATAPUMP

DEFAULT DIRECTORY dp

ACCESS PARAMETERS

(ENCRYPTION ENABLED)

LOCATION ('inv1.dmp')

)

AS SELECT * FROM inv;

For this example to work, you need to have a security wallet in place and open for your database.

![]() Note Using encryption requires an additional license from Oracle. Contact Oracle for details on using the Advanced Security Option.

Note Using encryption requires an additional license from Oracle. Contact Oracle for details on using the Advanced Security Option.

You enable compression and encryption via the ACCESS PARAMETERS clause. Table 14–2 contains a listing of all access parameters available with the ORACLE_DATAPUMP access driver.

Table 14–2. Parameters of the ORACLE_DATAPUMP Access Driver

| Access Parameter | Description |

COMPRESSION |

Compresses the dump file. DISABLED is the default value. |

ENCRYPTION |

Encrypts the dump file. DISABLED is the default value. |

NOLOGFILE |

Suppresses the generation of a log file. |

LOGFILE=[directory_object:]logfile_name |

Allows you to name a log file. |

VERSION |

Specifies the minimum version of Oracle that can read the dump file. |

Preprocessing an External Table

Oracle added (in 10.2.0.5 and higher) the ability to preprocess the file on which an external table is based. For example, you can instruct the CREATE TABLE...ORGANIZATION EXTERNAL statement to uncompress a compressed operating-system file before it’s processed.

Here’s a simple example to illustrate this concept. First, create a directory object that contains the location of the compressed file:

SQL> create or replace directory data_dir as '/orahome/oracle/dk/et';

You also need to create a directory object that contains the location of the script that will perform the preprocessing on the data file. In this example, the directory is /bin:

SQL> create or replace directory exe_dir as '/bin';

This example compressed the operating-system flat file with the gzip utility. The compressed file is named exa.csv.gz. You instruct the CREATE TABLE...ORGANIZATION EXTERNAL statement to preprocess the compressed file by specifying the PREPROCESSOR clause. Because the CSV file was compressed by the gzip utility, it can be uncompressed by the corresponding uncompress utility, gunzip. Look carefully for the PREPROCESSOR clause in the following listing; it’s nested under ACCESS PARAMETERS:

create table exadata_et(

machine_count NUMBER

,hide_flag NUMBER

,oracle NUMBER

,ship_date DATE

,rack_type VARCHAR2(32)

)

organization external (

type oracle_loader

default directory data_dir

access parameters

(

records delimited by newline

preprocessor exe_dir: 'gunzip'

fields terminated by '|'

missing field values are null

(exa_id

,machine_count

,hide_flag

,oracle

,ship_date char date_format date mask "mm/dd/yyyy"

,rack_type)

)

location ('ex.csv.gz')

)

reject limit unlimited;

The advantage of preprocessing the dump file is that it saves you the step of having to first uncompress the file.

![]() Note Oracle doesn’t let you use the

Note Oracle doesn’t let you use the PREPROCESSOR clause in databases that have the Database Vault feature installed.

Summary

I used to use SQL*Loader for all types of data-loading tasks. In the past few years, I’ve become an external-table convert. Almost anything you can do with SQL*Loader, you can also do with an external table. The external-table approach is advantageous because there are fewer moving parts and the interface is SQL*Plus. Most DBAs and developers find SQL*Plus easier to use than a SQL*Loader control file.

You can easily use an external table to enable SQL*Plus access to operating-system flat files. You simply have to define the structure of the flat file in your CREATE TABLE...ORGANIZATION EXTERNAL statement. After the external table is created, you can select directly from the flat file as if it were a database table. You can select from an external table, but you can’t insert, update, or delete.

When you create an external table, if required, you can then create regular database tables by using CREATE TABLE AS SELECT from the external table. Doing so provides a fast and effective way to load data stored in external operating-system files.

The external-table feature also allows you to select data from a table and write it to a binary dump file. The external table CREATE TABLE...ORGANIZATION EXTERNAL statement defines which tables and columns are used to unload the data. A dump file created in this manner is platform independent, meaning you can copy it to a server using a different operating system and seamlessly load the data. Additionally, the dump file can be encrypted and compressed for secure and efficient transportation. You can also use parallel features to reduce the amount of time it takes to create the dump file.

The next chapter deals with materialized views. These database objects provide you with a flexible, maintainable, and scalable mechanism for aggregating and replicating data.