An Oracle database consists of three types of mandatory files: data files, control files, and online redo logs. Chapter 4 focused on tablespaces and data files. This chapter looks at managing control files and online redo logs and implementing archivelogs. The first part of the chapter discusses typical control file maintenance tasks, such as adding, moving, and removing control files. The middle part of the chapter examines DBA activities related to online redo log files, such as renaming, adding, dropping, and relocating these critical files. Finally, the architectural aspects of enabling and implementing archiving are covered.

Managing Control Files

Database name

Names and locations of data files

Names and locations of online redo log files

Current online redo log sequence number

Checkpoint information

Names and locations of RMAN backup files

If any of the control files listed in the CONTROL_FILES initialization parameter are not available, then you cannot mount your database.

Note

Keep in mind that when you issue the STARTUP command (with no options), the previously described three phases are automatically performed in this order: nomount, mount, open. When you issue a SHUTDOWN command , the phases are reversed: close the database, unmount the control file, stop the instance.

The control file is created when the database is created. As you saw in Chapter 2, you should create at least two control files when you create your database (to avoid a single point of failure). Previously, you should have multiple control files stored on separate storage devices controlled by separate controllers, but because of storage devices it might be difficult to know if it is a separate device, so it is important to have fault-tolerant devices with mirroring. The control file is a very important part of the database and needs to be available or very quickly restored if needed.

Control files can also be on ASM disk groups. This allows for one control file in the +ORADATA disk group and another file in +FRA disk group. Managing the control files and details inside remain the same as on the file system except that the control files are just using ASM disk groups.

If one of your control files becomes unavailable, shut down your database, and resolve the issue before restarting (see Chapter 19 for using RMAN to restore a control file). Fixing the problem may mean resolving a storage-device failure or modifying the CONTROL_FILES initialization parameter to remove the control file entry for the control file that is not available.

Displaying the Contents of a Control File

You can inspect the contents of the control file when troubleshooting or when trying to gain a better understanding of Oracle internals.

Viewing Control File Names and Locations

Adding a Control File

Adding a control file means copying an existing control file and making your database aware of the copy by modifying your CONTROL_FILES parameter. This task must be done while your database is shut down. This procedure only works when you have a good existing control file that can be copied. Adding a control file isn’t the same thing as creating or restoring a control file.

Tip

See Chapter 4 for an example of re-creating a control file for the purpose of renaming and moving data files. See Chapter 19 for an example of re-creating a control file for the purpose of renaming a database.

If your database uses only one control file, and that control file becomes damaged, you need to either restore a control file from a backup (if available) and perform a recovery or re-create the control file. If you are using two or more control files, and one becomes damaged, you can use the remaining good control file(s) to quickly get your database into an operating state.

- 1.

Alter the initialization file CONTROL_FILES parameter to include the new location and name of the control file.

- 2.

Shut down your database.

- 3.

Use an OS command to copy an existing control file to the new location and name.

- 4.

Restart your database.

Depending on whether you use a spfile or an init.ora file, the previous steps vary slightly. The next two sections detail these different scenarios.

Spfile Scenario

- 1.Determine the CONTROL_FILES parameter’s current value:SQL> show parameter control_filesThe output shows that this database is using only one control file:NAME TYPE VALUE------------------------------- ----------- ------------------------------control_files string /u01/dbfile/o18c/control01.ctl

- 2.Alter your CONTROL_FILES parameter to include the new control file that you want to add, but limit the scope of the operation to the spfile (you cannot modify this parameter in memory). Make sure you also include any control files listed in step 1:SQL> alter system set control_files='/u01/dbfile/o18c/control01.ctl','/u01/dbfile/o18c/control02.ctl' scope=spfile;

- 3.Shut down your database:SQL> shutdown immediate;

- 4.Copy an existing control file to the new location and name. In this example, a new control file named control02.ctl is created via the OS cp command:$ cp /u01/dbfile/o18c/control01.ctl /u01/dbfile/o18c/control02.ctl

- 5.Start up your database:SQL> startup;

Init.ora Scenario

- 1.Shut down your database:SQL> shutdown immediate;

- 2.Edit your init.ora file with an OS utility (such as vi), and add the new control file location and name to the CONTROL_FILES parameter. This example opens the init.ora file, using vi, and adds control02.ctl to the CONTROL_FILES parameter:$ vi $ORACLE_HOME/dbs/inito18c.oraListed next is the CONTROL_FILES parameter after control02.ctl is added:control_files='/u01/dbfile/o18c/control01.ctl','/u01/dbfile/o18c/control02.ctl'

- 3.From the OS, copy the existing control file to the location and name of the control file being added:$ cp /u01/dbfile/o18c/control01.ctl /u01/dbfile/o18c/control02.ctl

- 4.Start up your database:SQL> startup;

Moving a Control File

You may occasionally need to move a control file from one location to another. For example, if new storage is added to the database server, you may want to move an existing control file to the newly available location.

- 1.Determine the CONTROL_FILES parameter’s current value:SQL> show parameter control_filesThe output shows that this database is using only one control file:NAME TYPE VALUE------------------------------- ----------- ------------------------------control_files string /u01/dbfile/o18c/control01.ctl

- 2.Alter your CONTROL_FILES parameter to reflect that you are moving a control file. In this example, the control file is currently in this location:/u01/dbfile/o18c/control01.ctlYou are moving the control file to this location:/u02/dbfile/o18c/control01.ctlAlter the spfile to reflect the new location for the control file. You have to specify SCOPE=SPFILE because the CONTROL_FILES parameter cannot be modified in memory:SQL> alter system setcontrol_files='/u02/dbfile/o18c/control01.ctl' scope=spfile;

- 3.Shut down your database:SQL> shutdown immediate;

- 4.At the OS prompt, move the control file to the new location. This example uses the OS mv command:$ mv /u01/dbfile/o18c/control01.ctl /u02/dbfile/o18c/control01.ctl

- 5.Start up your database:SQL> startup;

Removing a Control File

- 1.Identify which control file has experienced media failure by inspecting the alert.log for information:ORA-00210: cannot open the specified control fileORA-00202: control file: '/u01/dbfile/o18c/control02.ctl'

- 2.Remove the unavailable control file name from the CONTROL_FILES parameter. If you are using an init.ora file, modify the file directly with an OS editor (such as vi). If you are using a spfile, modify the CONTROL_FILES parameter with the ALTER SYSTEM statement. In this spfile example the control02.ctl control file is removed from the CONTROL_FILES parameter:SQL> alter system set control_files='/u01/dbfile/o18c/control01.ctl'scope=spfile;

This database now has only one control file associated with it. You should never run a production database with just one control file. See the section “Adding a Control File,” earlier in this chapter, for details on how to add more control files to your database.

- 3.Stop and start your database:SQL> shutdown immediate;SQL> startup;

Note

If SHUTDOWN IMMEDIATE does not work, use SHUTDOWN ABORT to shut down your database. There is nothing wrong with using SHUTDOWN ABORT to quickly close a database when SHUTDOWN IMMEDIATE hangs; however, remember that the database is rolling back changes and might not be hanging. Depending on the transactions that are in a rollback state, the startup might take some time or hinder performance.

Control files can be in an ASM diskgroup. This will allow for you to move the back-end disk and storage around without having to move datafiles or control files. If the ASM layer is used, the storage devices and disks become transparent to the database files. This does not protect from a possible recovery from corruption of a file or if a file is removed, but it does prevent having to move files because of location and disk being used. The files will be of type CONTROLFILE in the ASM views to know the location of the files.

Online Redo Logs

Provide a mechanism for recording changes to the database so that in the event of a media failure, you have a method of recovering transactions.

Ensure that in the event of total instance failure, committed transactions can be recovered (crash recovery) even if committed data changes have not yet been written to the data files.

Allow administrators to inspect historical database transactions through the Oracle LogMiner utility.

They are read by Oracle tools such as GoldenGate or Streams to replicate data.

You are required to have at least two online redo log groups in your database. Each online redo log group must contain at least one online redo log member. The member is the physical file that exists on disk. You can create multiple members in each redo log group, which is known as multiplexing your online redo log group.

Tip

I highly recommend that you multiplex your online redo log groups and, if possible, have each member on a separate physical device governed by a separate controller.

A COMMIT is issued.

A log switch occurs.

Three seconds go by.

The redo log buffer is one-third full.

Since this is a database process, the container database (CDB) will manage the redo logs. PDBs do not have their own redo logs, which also means that planning for space and sizing of the redo logs is at the CDB level and includes all of the PDB transactions. This architecture will be discussed more in Chapter 22, but the transaction sizing is based on all of the PDBs for a CDB.

The online redo log group that the log writer is actively writing to is the current online redo log group. The log writer writes simultaneously to all members of a redo log group. The log writer needs to successfully write to only one member in order for the database to continue operating. The database ceases operating if the log writer cannot write successfully to at least one member of the current group.

When the current online redo log group fills up, a log switch occurs, and the log writer starts writing to the next online redo log group. A log sequence number is assigned to each redo log when a switch occurs to be used for archiving. The log writer writes to the online redo log groups in a round-robin fashion. Because you have a finite number of online redo log groups, eventually the contents of each online redo log group are overwritten. If you want to save a history of the transaction information, you must place your database in archivelog mode (see the section “Implementing Archivelog Mode” later in this chapter).

When your database is in archivelog mode, after every log switch the archiver background process copies the contents of the online redo log file to an archived redo log file. In the event of a failure, the archived redo log files allow you to restore the complete history of transactions that have occurred since your last database backup.

Online redo log configuration

The online redo log files are not intended to be backed up. These files contain only the most recent redo transaction information generated by the database. When you enable archiving, the archived redo log files are the mechanism for protecting your database transaction history.

Multiplex the groups.

Consider setting the ARCHIVE_LAG_TARGET initialization parameter to ensure that the online redo logs are switched at regular intervals.

If possible, never allow two members of the same group to share the same physical disk.

Ensure that OS file permissions are set appropriately (restrictive, that only the owner of the Oracle binaries has permissions to write and read).

Use physical storage devices that are redundant (i.e., RAID [redundant array of inexpensive disks]).

Appropriately size the log files, so that they switch and are archived at regular intervals.

Note

The only tool provided by Oracle that can protect you and preserve all committed transactions in the event that you lose all members of the current online redo log group is Oracle Data Guard, implemented in maximum protection mode. See MOS note 239100.1 for more details regarding Oracle Data Guard protection modes.

Flash is another option for redo logs. Since the logs are written out to archivelogs and require fast writes, flash drives are a way to improve performance of redo logs. If flash is not available, the options are to place redo logs on physical disks and based on the previous list to minimize failures. Solid state disks might not provide faster writes, which does not make them the ideal choice for redo logs.

The online redo log files are never backed up by an RMAN backup or by a user-managed hot backup. If you did back up the online redo log files, it would be meaningless to restore them. The online redo log files contain the latest redo generated by the database. You would not want to overwrite them from a backup with old redo information. For a database in archivelog mode, the online redo log files contain the most recently generated transactions that are required to perform a complete recovery. The redo log files should also be excluded from other system backup (non-database) along with other data files.

Displaying Online Redo Log Information

Useful Views Related to Online Redo Logs

View | Description |

|---|---|

V$LOG | Displays the online redo log group information stored in the control file |

V$LOGFILE | Displays online redo log file member information |

Status for Online Redo Log Groups in the V$LOG View

Status | Meaning |

|---|---|

CURRENT | The log group is currently being written to by the log writer. |

ACTIVE | The log group is required for crash recovery and may or may not have been archived. |

CLEARING | The log group is being cleared out by an ALTER DATABASE CLEAR LOGFILE command. |

CLEARING_CURRENT | The current log group is being cleared of a closed thread. |

INACTIVE | The log group is not required for crash recovery and may or may not have been archived. |

UNUSED | The log group has never been written to; it was recently created. |

Status for Online Redo Log File Members in the V$LOGFILE View

Status | Meaning |

|---|---|

INVALID | The log file member is inaccessible or has been recently created. |

DELETED | The log file member is no longer in use. |

STALE | The log file member’s contents are not complete. |

NULL | The log file member is being used by the database. |

It is important to differentiate between the STATUS column in V$LOG and the STATUS column in V$LOGFILE. The STATUS column in V$LOG reflects the status of the log group. The STATUS column in V$LOGFILE reports the status of the physical online redo log file member. Refer to these tables when diagnosing issues with your online redo logs.

Determining the Optimal Size of Online Redo Log Groups

From the previous output, you can see that a great deal of log switch activity occurred from approximately 4:00 am to 7:00 am This could be due to a nightly batch job or users in different time zones updating data. For this database the size of the online redo logs should be increased. You should try to size the online redo logs to accommodate peak transaction loads on the database.

The V$LOG_HISTORY system change number (SCN). As stated, a general rule of thumb is that you should size your online redo log files so that they switch approximately two to six times per hour. You do not want them switching too often because there is overhead with the log switch; however, leaving transaction information in the redo log without archiving will create issues with recovery. If a disaster causes a media failure in your current online redo log, you can lose those transactions that haven’t been archived. If a disaster causes a media failure in your current online redo log, you can lose those transactions that haven’t been archived.

Oracle initiates a checkpoint as part of a log switch. During a checkpoint, the database-writer background process writes modified (also called dirty) blocks to disk, which is resource intensive. Checkpoint messages in the alert log will also be a way of looking at how fast logs are switching or if there are waits associated with archiving.

Tip

Use the ARCHIVE_LAG_TARGET initialization parameter to set a maximum amount of time (in seconds) between log switches. A typical setting for this parameter is 1,800 seconds (30 minutes). A value of 0 (default) disables this feature. This parameter is commonly used in Oracle Data Guard environments to force log switches after the specified amount of time elapses.

This column reports the redo log file size (in megabytes) that is considered optimal, based on the initialization parameter setting of FAST_START_MTTR_TARGET. Oracle recommends that you configure all online redo logs to be at least the value of OPTIMAL_LOGFILE_SIZE. However, when sizing your online redo logs, you must take into consideration information about your environment (such as the frequency of the switches) .

Determining the Optimal Number of Redo Log Groups

Redo protected until the modified (dirty) buffer is written to disk

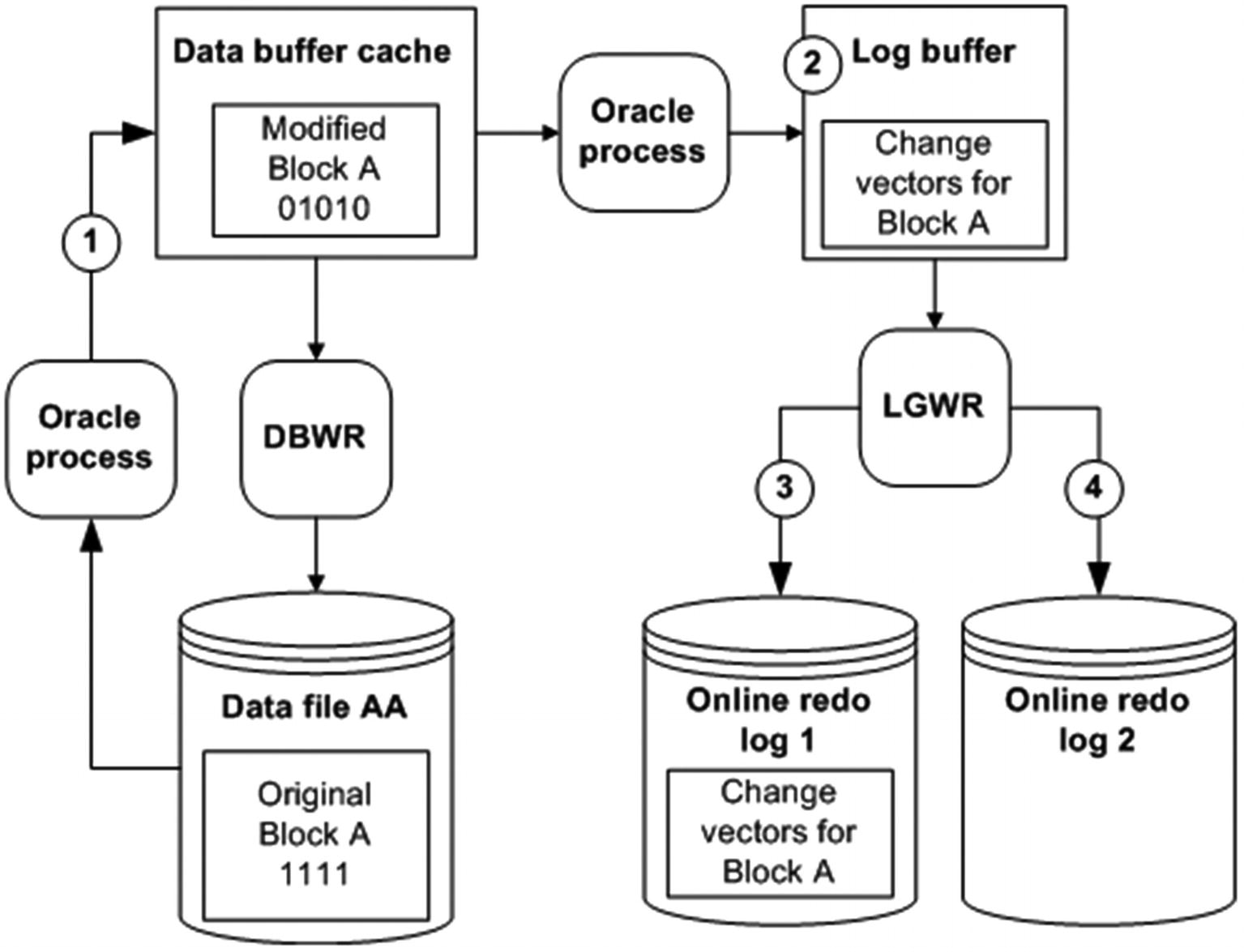

At time 1, Block A is read from Data File AA into the buffer cache and modified. At time 2 the redo-change vector information (how the block changed) is written to the log buffer. At time 3 the log-writer process writes the Block A change-vector information to online redo log 1. At time 4 a log switch occurs, and online redo log 2 becomes the current online redo log.

Add more redo log groups.

Lower the value of FAST_START_MTTR_TARGET . Doing so causes the database-writer process to write older modified blocks to disk in a shorter time frame.

Tune the database-writer process (modify DB_WRITER_PROCESSES).

If you notice that the Checkpoint not complete message is occurring often (say, several times a day), I recommend that you add one or more log groups to resolve the issue. Adding an extra redo log gives the database writer more time to write modified blocks in the database buffer cache to the data files before the associated redo with a block is overwritten. There is little downside to adding more redo log groups. The main concern is that you could bump up against the MAXLOGFILES value that was used when you created the database. If you need to add more groups and have exceeded the value of MAXLOGFILES, then you must re-create your control file and specify a high value for this parameter.

If adding more redo log groups doesn’t resolve the issue, you should carefully consider lowering the value of FAST_START_MTTR_TARGET . When you lower this value, you can potentially see more I/O because the database-writer process is more actively writing modified blocks to data files. Ideally, it would be nice to verify the impact of modifying FAST_START_MTTR_TARGET in a test environment before making the change in production. You can modify this parameter while your instance is up; this means you can quickly modify it back to its original setting if there are unforeseen side effects.

Finally, consider increasing the value of the DB_WRITER_PROCESSES parameter . Carefully analyze the impact of modifying this parameter in a test environment before you apply it to production. This value requires that you stop and start your database; therefore, if there are adverse effects, downtime is required to change this value back to the original setting.

Adding Online Redo Log Groups

In this scenario I highly recommend that the log group you add be the same size and have the same number of members as the existing online redo logs. If the newly added group doesn’t have the same physical characteristics as the existing groups, it’s harder to accurately determine performance issues. If a larger size is preferred, the new group can be added at the larger size, then the other groups can be dropped and re-created with the larger size value in order to keep the size of the redo logs the same (an example of this is in the next section).

For example, if you have two log groups sized at 50MB, and you add a new log group sized at 500MB, this is very likely to produce the Checkpoint not complete issue described in the previous section. This is because flushing all modified blocks from the SGA that are protected by the redo in a 500MB log file can potentially take much longer than flushing modified blocks from the SGA that are protected by a 50MB log file.

Resizing and Dropping Online Redo Log Groups

You may need to change the size of your online redo logs (see the section “Determining the Optimal Size of Online Redo Log Groups” earlier in this chapter). You cannot directly modify the size of an existing online redo log (as you can a data file). To resize an online redo log, you have to first add online redo log groups that are the size you want, and then drop the online redo logs that are the old size.

Note

You can specify the size of the log file in bytes, kilobytes, megabytes, or gigabytes.

Additionally, you cannot drop an online redo log group if doing so leaves your database with only one log group. If you attempt to do this, Oracle throws an ORA-01567 error and informs you that dropping the log group is not permitted because it would leave you with fewer than two log groups for your database (as mentioned earlier, Oracle requires at least two redo log groups in order to function).

Tip

These steps of adding and removing redo logs is another exercise to perform before turning over a new database or a regularly scheduled testing period for practice and testing scripts to perform in production databases.

When you are absolutely sure the file is not in use, you can remove it. The danger in removing a file is that if it happens to be an in-use online redo log, and the only member of a group, you can cause serious damage to your database. Ensure that you have a good backup of your database and that the file you are removing is not used by any databases on the server.

Adding Online Redo Log Files to a Group

Make certain you follow standards with regard to the location and names of any newly added redo log files.

Removing Online Redo Log Files from a Group

Moving or Renaming Redo Log Files

Add the new log files in the new location and drop the old log files.

Physically rename the files from the OS.

If you cannot afford any downtime, consider adding new log files in the new location and then dropping the old log files. See the section “Adding Online Redo Log Groups,” earlier in this chapter, for details on how to add a log group. See also the section “Resizing and Dropping Online Redo Log Groups,” earlier in this chapter, for details on how to drop a log group.

Alternatively, you can physically move the files from the OS. You can do this with the database open or closed. If your database is open, ensure that the files you move are not part of the current online redo log group (because those are actively written to by the log-writer background process). It is dangerous to try to do this task while your database is open because on an active system, the online redo logs may be switching at a rapid rate, which creates the possibility of attempting to move a file while it is being switched to be the current online redo log. Therefore, I recommend that you only try to do this while your database is closed.

- 1.Shut down your database:SQL> shutdown immediate;

- 2.From the OS prompt, move the files. This example uses the mv command to accomplish this task:$ mv /u02/oraredo/o18c/redo02b.rdo /u01/oraredo/o18c/redo02b.rdo

- 3.Start up your database in mount mode:SQL> startup mount;

- 4.Update the control file with the new file locations and names:SQL> alter database rename file '/u02/oraredo/o18c/redo02b.rdo'to '/u01/oraredo/o18c/redo02b.rdo';

- 5.Open your database:SQL> alter database open;

You can verify that your online redo logs are in the new locations by querying the V$LOGFILE view. I recommend as well that you switch your online redo logs several times and then verify from the OS that the files have recent timestamps. Also check the alert.log file for any pertinent errors.

Controlling the Generation of Redo

Direct path INSERT statements

Direct path SQL*Loader

CREATE TABLE ... AS SELECT (NOLOGGING only affects the initial create, not subsequent regular DML, statements against the table)

ALTER TABLE ... MOVE

ALTER TABLE ... ADD/MERGE/SPLIT/MOVE/MODIFY PARTITION

CREATE INDEX

ALTER INDEX ... REBUILD

CREATE MATERIALIZED VIEW

ALTER MATERIALIZED VIEW ... MOVE

CREATE MATERIALIZED VIEW LOG

ALTER MATERIALIZED VIEW LOG ... MOVE

Be aware that if redo isn’t logged for a table or index, and you have a media failure before the object is backed up, then you cannot recover the data; you receive an ORA-01578 error, indicating that there is logical corruption of the data.

Note

You can also override the tablespace level of logging at the object level. For example, even if a tablespace is specified as NOLOGGING, you can create a table with the LOGGING clause.

Implementing Archivelog Mode

Recall from the discussion earlier in this chapter that archive redo logs are created only if your database is in archivelog mode. If you want to preserve your database transaction history to facilitate point-in-time and other types of recovery, you need to enable that mode.

In normal operation, changes to your data generate entries in the database redo log files. As each online redo log group fills up, a log switch is initiated. When a log switch occurs, the log-writer process stops writing to the most recently filled online redo log group and starts writing to a new online redo log group. The online redo log groups are written to in a round-robin fashion—meaning the contents of any given online redo log group will eventually be overwritten. Archivelog mode preserves redo data for the long term by employing an archiver background process to copy the contents of a filled online redo log to what is termed an archive redo log file . The trail of archive redo log files is crucial to your ability to recover the database with all changes intact, right up to the precise point of failure.

Making Architectural Decisions

Where to place the archive redo logs and whether to use the fast recovery area to store them

How to name the archive redo logs

How much space to allocate to the archive redo log location

How often to back up the archive redo logs

When it’s okay to permanently remove archive redo logs from disk

How to remove archive redo logs (e.g., have RMAN remove the logs, based on a retention policy)

Whether multiple archive redo log locations should be enabled

When to schedule the small amount of downtime that is required (if a production database)

As a general rule of thumb, you should have enough space in your primary archive redo location to hold at least a day’s worth of archive redo logs. This lets you back them up on a daily basis and then remove them from disk after they have been backed up.

If you decide to use a fast recovery area (FRA) for your archive redo log location, you must ensure that it contains sufficient space to hold the number of archive redo logs generated between backups. Keep in mind that the FRA typically contains other types of files, such as RMAN backup files, flashback logs, and so on. If you use an FRA, be aware that the generation of other types of files can potentially impact the space required by the archive redo log files. There are parameters that can be set to manage the FRA and provide a way to resize the space for recovery in order for the database to continue instead of having to increase space on the file system.

The parameters DB_RECOVERY_FILE_DEST and DB_RECOVERY_FILE_DEST_SIZE set the file location for the FRA and the size of the space to be used by the database. These can also prevent one database filling up the space for other databases that might be on the same server. The ASM diskgroup FRA can be created to manage the space using ASM. DB_RECOVERY_FILE_DEST = +FRA, will allow the area to use the FRA diskgroup. Again, there are advantages of managing space behind the scene in a scenario that fills up space. Using these parameters along with ASM removes specific file systems and allows for more options to quickly address issues with archive logs and using the recovery areas. FRA is recommended for this since the parameters are dynamic and can will allow for changes to occur to prevent the database hanging. This should be included in the planning and architecting of the archive mode of the database.

You need a strategy for automating the backup and removal of archive redo log files. For user-managed backups, this can be implemented with a shell script that periodically copies the archive redo logs to a backup location and then removes them from the primary location. As you will see in later chapters, RMAN automates the backup and removal of archive redo log files.

If your business requirements are such that you must have a certain degree of high availability and redundancy, then you should consider writing your archive redo logs to more than one location. Some shops set up jobs to copy the archive redo logs periodically to a different location on disk or even to a different server.

Setting the Archive Redo File Location

Set the LOG_ARCHIVE_DEST_N database initialization parameter.

Implement FRA.

These two approaches are discussed in detail in the following sections.

Tip

If you do not specifically set the archive redo log location via an initialization parameter or by enabling the FRA, then the archive redo logs are written to a default location. For Linux/Unix, the default location is ORACLE_HOME/dbs. For Windows, the default location is ORACLE_HOMEdatabase. For active production database systems, the default archive redo log location is rarely appropriate.

Setting the Archive Location to a User-Defined Disk Location (non-FRA)

In the prior line of code, my standard for naming archive redo log files includes the ORACLE_SID (in this example, o18c to start the string); the mandatory parameters %t, %s, and %r; and the string .arc, to end. I like to embed the name of the ORACLE_SID in the string to avoid confusion when multiple databases are housed on one server. I like to use the extension .arc to differentiate the files from other types of database files.

Tip

If you do not specify a value for LOG_ARCHIVE_FORMAT , Oracle uses a default, such as %t_%s_%r.dbf. One aspect of the default format that I do not like is that it ends with the extension .dbf, which is widely used for data files. This can cause confusion about whether a particular file can be safely removed because it is an old archive redo log file or should not be touched because it is a live data file. Most DBAs are reluctant to issue commands such as rm *.dbf for fear of accidentally removing live data files.

You can dynamically change the LOG_ARCHIVE_DEST_n parameters while your database is open. However, you have to stop and start your database for the LOG_ARCHIVE_FORMAT parameter to take effect.

Recovering from Setting a Bad spfile Parameter

In this situation, if you are using a spfile, you cannot start your instance. You have a couple of options here. If you are using RMAN and are backing up the spfile, then restore the spfile from a backup.

If you are not using RMAN, you can also try to edit the spfile directly with an OS editor (such as vi), but Oracle doesn’t recommend or support this.

In SQLPlus create the init.ora file from the spfile.

SQL> create pfile=inito18c.ora from spfile;

Then you can start up the database using the fixed spfile.

Valid Variables for the Log Archive Format String

Format String | Meaning |

|---|---|

%s | Log sequence number |

%S | Log sequence number padded to the left with zeros |

%t | Thread number |

%T | Thread number padded to the left with zeros |

%a | Activation ID |

%d | Database ID |

%r | Resetlogs ID required to ensure uniqueness across multiple incarnations of the database |

You can enable up to 31 different locations for the archive redo log file destination. For most production systems, one archive redo log destination location is usually sufficient. If you need a higher degree of protection, you can enable multiple destinations. Keep in mind that when you use multiple destinations, the archiver must be able to write to at least one location successfully. If you enable multiple mandatory locations and set LOG_ARCHIVE_MIN_SUCCEED_DEST to be higher than 1, then your database may hang if the archiver cannot write to all mandatory locations.

Using the FRA for Archive Log Files

DB_RECOVERY_FILE_DEST_SIZE specifies the maximum space to be used for all files that are stored in the FRA for a database.

DB_RECOVERY_FILE_DEST specifies the base directory for the FRA.

If you are using an init.ora file, modify it with an OS utility (such as vi) with the appropriate entries.

After you enable FRA, by default, Oracle writes archive redo logs to subdirectories in the FRA.

Note

If you’ve set the LOG_ARCHIVE_DEST_N parameter to be a location on disk, archive redo logs are not written to the FRA.

Each day that archive redo logs are generated results in a new directory’s being created in the FRA, using the directory name format YYYY_MM_DD. Archive redo logs written to the FRA use the OMF format naming convention (regardless of whether you’ve set the LOG_ARCHIVE_FORMAT parameter).

Enabling Archivelog Mode

Disabling Archivelog Mode

Usually, you don’t disable archivelog mode for a production database. However, you may be doing a big data load and want to reduce any overhead associated with the archiving process, and so you want to turn off archivelog mode before the load begins and then re-enable it after the load. If you do this, be sure you make a backup as soon as possible after re-enabling archiving.

Reacting to a Lack of Disk Space in Your Archive Log Destination

As a production-support DBA, you never want to let your database get into that state. Sometimes, unpredictable events happen, and you have to deal with unforeseen issues.

Note

DBAs who support production databases have a mindset completely different from that of architect DBAs. Getting new ideas or learning about new technologies is a perfect time to work together and communicate what might work or not work in your environment. Set up time outside of troubleshooting with production DBAs and architects to plan and set strategies for the environment.

Move files to a different location.

Compress old files in the archive redo log location.

Permanently remove old files.

Switch the archive redo log destination to a different location (this can be changed dynamically, while the database is up and running).

If using FRA, increase the space allocation for DB_RECOVERY_FILE_DEST_SIZE.

If using FRA, change the destination to different location in the parameter DB_RECOVERY_FILE_DEST.

RMAN backup and delete the archive log files.

Remove expired files from the directory using RMAN.

Moving files is usually the quickest and safest way to resolve the archiver error along with increasing the allocation or directory with the DB_RECOVERY_FILE_DEST parameters. You can use an OS utility such as mv to move old archive redo logs to a different location. If they are needed for a subsequent restore and recovery, you can let the recovery process know about the new location. Be careful not to move an archive redo log that is currently being written to. If an archived redo log file appears in V$ARCHIVED_LOG, that means it has been completely archived.

You can use an OS utility such as gzip to compress archive redo log files in the current archive destination. If you do this, you have to remember to uncompress any files that may be later needed for a restore and recovery. Be careful not to compress an archive redo log that is currently being written to.

Another option is to use an OS utility such as rm to remove archive redo logs from the disk permanently. This approach is dangerous because you may need those archive redo logs for a subsequent recovery. If you do remove archive redo log files, and you don’t have a backup of them, you should make a full backup of your database as soon as possible. Using RMAN to back up and delete the files is a much safer approach and assures that recovery is possible until another backup is performed. Again, this approach is risky and should only be done as a last resort; if you delete archive redo logs that haven’t been backed up, then you chance not being able to perform a complete recovery.

After you’ve resolved the issue with the primary location, you can switch back the original location.

Note

When a log switch occurs, the archiver determines where to write the archive redo logs, based on the current FRA setting or a LOG_ARCHIVE_DEST_N parameter. It doesn’t matter to the archiver if the destination has recently changed.

When the archive redo log file destination is full, you have to scramble to resolve it. This is why a good deal of thought should precede enabling archiving for 24-7 production databases.

For most databases, writing the archive redo logs to one location is sufficient. However, if you have any type of disaster recovery or high-availability requirement, then you should write to multiple locations. Sometimes, DBAs set up a job to back up the archive redo logs every hour and copy them to an alternate location or even to an alternate server.

Backing Up Archive Redo Log Files

Periodically copying archive redo logs to an alternate location and then removing them from the primary destination

Copying the archive redo logs to tape and then deleting them from disk

Using two archive redo log locations

Using Data Guard for a robust disaster recovery solution

Keep in mind that you need all archive redo logs generated since the begin time of the last good backup to ensure that you can completely recover your database. Only after you are sure you have a good backup of your database should you consider removing archive redo logs that were generated prior to the backup.

If you are using RMAN as a backup and recovery strategy, then you should use RMAN to back up the archive redo logs. Additionally, you should specify an RMAN retention policy for these files and have RMAN remove the archive redo logs only after the retention policy requirements are met (e.g., back up files at least once before removing from disk) (see Chapter 18 for details on using RMAN) .

Summary

This chapter described how to configure and manage control files and online redo log files and enable archiving. Control files and online redo logs are critical database files; a normally operating database cannot function without them.

Control files are small binary files that contain information about the structure of the database. Any control files specified in the parameter file must be available in order for you to mount the database. If a control file becomes unavailable, then your database will cease operating with the next log switch or needed write to the control file until you resolve the issue. I highly recommend that you configure your database with at least three control files. If one control file becomes unavailable, you can replace it with a copy of a good existing control file. It is critical that you know how to configure, add, and remove these files.

Online redo logs are crucial files that record the database’s transaction history. If you have multiple instances connected to one database, then each instance generates its own redo thread. Each database must be created with two or more online redo log groups. You can operate a database with each group’s having just one online redo log member. However, I highly recommend that you create your online redo log groups with two members in each group. If an online redo log has at least one member that can be written to, your database will continue to function. If all members of an online redo log group are unavailable, then your database will cease to operate. As a DBA you must be extremely proficient in creating, adding, moving, and dropping these critical database files. Using storage options such as flash will allow for faster writes to the redo logs. Solid state disks might not provide faster writes, which does not make them the ideal choice for redo logs.

Archiving is the mechanism for ensuring you have all the transactions required to recover the database. Once enabled, the archiver needs to successfully copy the online redo log after a log switch occurs. If the archiver cannot write to the primary archive destination, then your database will hang. Therefore, you need to map out carefully the amount of disk space required and how often to back up and subsequently remove these files.

Using Fast Recovery Area (FRA) provides additional ways to manage the archive logs and allows for some flexibility in planning for growth and sizing of the disk needed for the archive logs.

The chapters up to this point in the book have covered tasks such as installing the Oracle software; creating databases; and managing tablespaces, data files, control files, online redo log files, and archiving. The next several chapters concentrate on how to configure a database for application use and include topics such as creating users and database objects.