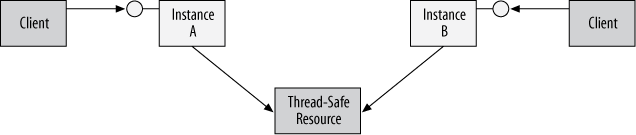

Synchronizing access to the service instance using `ConcurrencyMode.Single` or an explicit synchronization lock only manages concurrent access to the service instance state itself. It does not provide safe access to the underlying resources the service may be using. These resources must also be thread-safe. For example, consider the application shown in Figure 8-2.

Even though each service instance is thread-safe, the two instances may try to access the same resource (such as a static variable, a helper static class, or a file) concurrently, so the resource itself must synchronize access. This is true regardless of the service instancing mode. Even a per-call service could run into the situation shown in Figure 8-2.
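To make the point concrete outside of WCF, here is a minimal sketch in plain C#. The class names are hypothetical: `ServiceInstance` stands in for a synchronized service instance (its private lock plays the role that `ConcurrencyMode.Single` plays for a real service), and `SharedCounter` stands in for a static resource that both instances use. Each instance's lock protects only that instance's state, so the resource has to carry its own lock.

```csharp
// Hypothetical static resource shared by every service instance (a stand-in
// for a static variable, helper static class, or file).
static class SharedCounter
{
    static readonly object resourceLock = new object();
    static int count;

    public static void Increment()
    {
        // The resource guards itself: the callers' instance locks do not help,
        // because each instance holds a *different* lock.
        lock (resourceLock)
        {
            count++;
        }
    }

    public static int Count
    {
        get { lock (resourceLock) { return count; } }
    }
}

// Hypothetical stand-in for a synchronized service instance: its private lock
// protects only its own state.
class ServiceInstance
{
    readonly object instanceLock = new object();
    int callsHandled;

    public void DoWork()
    {
        lock (instanceLock)
        {
            callsHandled++;            // instance state: safe under instanceLock
            SharedCounter.Increment(); // shared resource: relies on its own lock
        }
    }

    public int CallsHandled
    {
        get { lock (instanceLock) { return callsHandled; } }
    }
}
```

If you remove the `lock` inside `Increment`, two instances running on different threads can lose updates to the shared count, even though each instance is still perfectly synchronized on its own.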

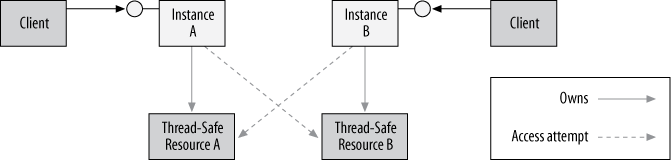

The naive solution to providing thread-safe access to resources is to give each resource its own lock, possibly encapsulated in the resource itself, and have the resource acquire that lock when it is accessed and release it when the service is done with the resource. The problem with this approach is that it is deadlock-prone. Consider the situation depicted in Figure 8-3.

In the figure, Instance A of the service accesses the thread-safe Resource A. Resource A has its own synchronization lock, and Instance A acquires that lock. Similarly, Instance B accesses Resource B and acquires its lock. A deadlock then occurs when Instance A tries to access Resource B while Instance B tries to access Resource A, since each instance will be waiting for the other to release its lock.
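The following sketch reproduces the interleaving of Figure 8-3 with two plain threads standing in for the two service instances. It is illustrative only, and `Resource`, `DeadlockDemo`, and `TryUseBoth` are hypothetical names. A real resource would block forever on the second lock; to keep the demo from hanging, it uses `Monitor.TryEnter` with a timeout instead, and a `Barrier` forces the unlucky timing that in production would occur only occasionally.

```csharp
using System;
using System.Threading;

// Hypothetical resource that encapsulates its own lock (the naive design).
class Resource
{
    public readonly object SyncRoot = new object();
}

static class DeadlockDemo
{
    // Models one service instance: grab 'first', then try to grab 'second'.
    // A real resource would block forever on 'second'; TryEnter with a timeout
    // lets the demo report the deadlock instead of hanging.
    public static bool TryUseBoth(Resource first, Resource second,
                                  Barrier sync, TimeSpan timeout)
    {
        lock (first.SyncRoot)
        {
            // Phase 1: wait until the other instance also holds its first lock,
            // forcing the interleaving shown in Figure 8-3.
            sync.SignalAndWait();

            bool acquired = Monitor.TryEnter(second.SyncRoot, timeout);
            if (acquired)
            {
                Monitor.Exit(second.SyncRoot);
            }

            // Phase 2: keep holding 'first' until both attempts have finished,
            // so neither instance can succeed just because the other timed out.
            sync.SignalAndWait();
            return acquired;
        }
    }
}
```

Running one thread with `(resourceA, resourceB)` and another with `(resourceB, resourceA)` makes both calls return `false`: each instance holds the lock the other one needs.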

The concurrency and instancing modes of the service are almost irrelevant to avoiding this deadlock. The only case that avoids it is if the service is configured both with `InstanceContextMode.Single` and `ConcurrencyMode.Single`, because a synchronized singleton by definition can only have one client at a time, and there will be no other instance to deadlock with over access to resources. All other combinations are still susceptible to this kind of deadlock. For example, a per-session synchronized service may have two separate thread-safe instances associated with two different clients, yet the two instances can deadlock when accessing the resources.

There are a few possible ways to avoid the deadlock. If all instances of the service meticulously access all resources in the same order (e.g., always trying to acquire the lock of Resource A first, and then the lock of Resource B), there will be no deadlock. The problem with this approach is that it is difficult to enforce, and over time, during code maintenance, someone may deviate from this strict guideline (even inadvertently, by calling methods on helper classes) and trigger the deadlock.
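One way to turn the same-order rule from a matter of discipline into something mechanical is to give every resource a unique rank and route all multi-resource access through a single helper that always locks in rank order. This is a sketch under that assumption, not part of WCF; `RankedResource`, `OrderedLocking`, and `UseBoth` are hypothetical names. It only helps as long as nobody bypasses the helper, which is exactly the maintenance risk described above.

```csharp
using System;

// Hypothetical resource carrying a rank that defines the global lock order.
// Ranks must be unique across all resources for the ordering to be total.
class RankedResource
{
    public readonly object SyncRoot = new object();
    public int Rank { get; }

    public RankedResource(int rank)
    {
        Rank = rank;
    }
}

static class OrderedLocking
{
    // Always locks the lower-ranked resource first, regardless of the order in
    // which the caller passes them, so two instances can never hold the two
    // locks in opposite orders.
    public static void UseBoth(RankedResource x, RankedResource y, Action work)
    {
        RankedResource first = x.Rank <= y.Rank ? x : y;
        RankedResource second = ReferenceEquals(first, x) ? y : x;

        lock (first.SyncRoot)
        lock (second.SyncRoot)
        {
            work();
        }
    }
}
```

Even if one instance calls `UseBoth(resourceA, resourceB, ...)` while another calls `UseBoth(resourceB, resourceA, ...)`, both acquire Resource A's lock first, so the Figure 8-3 deadlock cannot form.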

Another solution is to have all resources use the same shared lock. In order to minimize the chances of a deadlock, you'll also want to minimize the number of locks in the system and have the service itself use the same lock. To that end, you can configure the service with `ConcurrencyMode.Multiple` (even with a per-call service) to avoid using the WCF-provided lock. The first service instance to acquire the shared lock will lock out all other instances and own all underlying resources. A simple technique for using such a shared lock is locking on the service type, as shown in Example 8-3.

Example 8-3. Using the service type as a shared lock

```csharp
using System.ServiceModel;

[ServiceBehavior(InstanceContextMode = InstanceContextMode.PerCall,
                 ConcurrencyMode = ConcurrencyMode.Multiple)]
class MyService : IMyContract
{
   public void MyMethod()
   {
      lock(typeof(MyService))
      {
         // ...
         MyResource.DoWork();
         // ...
      }
   }
}
static class MyResource
{
   public static void DoWork()
   {
      lock(typeof(MyService))
      {
         // ...
      }
   }
}
```

The resources themselves must also lock on the service type (or on some other shared type agreed upon in advance). There are two problems with using a shared lock. First, it introduces coupling between the resources and the service, because the resource developer has to know the type of the service or the type used for synchronization. While you could get around that by providing the type as a resource construction parameter, that will likely not be an option with third-party resources. Second, while your service instance is executing, all other instances (and their respective clients) are blocked. Therefore, in the interest of throughput and responsiveness, you should avoid lengthy operations when using a shared lock.
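The construction-parameter workaround can be sketched as follows. `LogResource` is a hypothetical resource, and its constructor takes the lock as a plain `object`, so the service can pass `typeof(MyService)` as in Example 8-3 or any other object agreed upon in advance. Because C# locks are reentrant, a service that already holds the shared lock can call into the resource without blocking itself.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical resource that does not reference the service type. Whoever
// constructs it supplies the object to lock on.
class LogResource
{
    readonly object syncRoot;
    readonly List<string> entries = new List<string>();

    public LogResource(object syncRoot)
    {
        this.syncRoot = syncRoot ?? throw new ArgumentNullException(nameof(syncRoot));
    }

    public void Write(string message)
    {
        // Same lock as the service: if the calling thread already holds it,
        // this re-entrant acquisition succeeds immediately.
        lock (syncRoot)
        {
            entries.Add(message);
        }
    }

    public int Count
    {
        get { lock (syncRoot) { return entries.Count; } }
    }
}
```

A service would create it with something like `new LogResource(typeof(MyService))`. This removes the compile-time dependency on the service type, but, as noted above, a third-party resource is unlikely to offer such a constructor.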

If you think the situation in Example 8-3, where the two instances are of the same service, is problematic, imagine what happens if the two instances are of different services. The observation to make here is that services should never share resources. Regardless of concurrency management, resources are local implementation details and therefore should not be shared across services. Most importantly, sharing resources across the service boundary is also deadlock-prone. Services that share resources have no easy way to share locks across technologies and organizations, yet they still need to somehow coordinate the locking order. This necessitates a high degree of coupling between the services, violating the best practices and tenets of service-orientation.