Home is a place you grow up wanting to leave and grow old wanting to get back to.

—John Ed Pearce

The first four chapters of this book showed you how to bring in video streams of all sorts and analyze them first on a computer and then on the small limited device that is the Raspberry Pi.

This last chapter closes the gap while opening up the possibilities by linking the video part to the voice part.

With the advent of voice platforms such as Apple Siri, Google Assistant, Amazon Alexa, and Microsoft Cortana, voice-assisted services are popping up everywhere. Humans are language creatures; we have to express ourselves. Drawing is also an option to interact with devices, as was done in the movie Minority Report, but to be honest, you need pretty big and expensive screens to do something like that. Voice works everywhere, and we don’t need expensive tooling to use it. Using voice is intuitive and empowering.

I personally have many examples of how voice assistants could be used in everyday life. Start by entering a coffee shop and asking for a “double-shot cappuccino with cinnamon on top, on the front terrace, please”, and then it is automatically made and delivered to you while you’re sitting in the sun outside.

Here’s another simple example: I wish it were possible to go to an ATM and say, “Please withdraw $100 from my main account, in five notes of $20.” The voice assistant recognizes your voice so you do not need to scroll through endless confirmation screens; you just get your bills. (I know, with all the virtual currencies nowadays, you don’t really even need to go to the ATM anymore, do you?)

Those are some day-to-day examples, but you can also look to science-fiction movies to see voice-controlled spaceships as well as coffee machines. This is big.

So, why don’t we use one of the existing software assistants for voice recognition, like Siri? Well, all of the big players basically control all of your user data and can access whatever data is going through their pipelines at any time. This can be annoying as an end user, and it is just as annoying when you are creating and engineering your own solutions. As much as possible, you want to be able to control where your data flows or, indeed, doesn’t flow.

In this chapter, we thus introduce Rhasspy (https://github.com/synesthesiam/rhasspy), a voice platform that can do the following:

Function completely disconnected from the Internet

Work well with Home Assistant, Hass.io, and Node-RED

We will install and learn how to interact with the MQTT protocol and Mosquitto, a broker that can be used to send recognized messages from Rhasspy.

We will set up the Rhasspy interface to listen to intents and view those messages in the MQTT queue.

We will integrate those messages with the content of the previous chapters and run real-time video analysis, updating the object detection parameters and using the voice commands received from Rhasspy.

Rhasspy Message Flow

Basically, Rhasspy continuously looks for “wake-up words.” It wakes up when it’s called by one of these words and records the next sentence and turns it into words. Then it analyzes the sentence against what it can recognize. Finally, if the sentence matches against known sentences, it returns a result with probabilities.

From an application point of view, the main thing you need to interact with when building a voice application is the messaging queue, which in most IoT cases is an MQTT server. MQTT was developed to focus on telemetry measures and thus had to be lightweight and as close to real time as possible. Mosquitto is an open source implementation of the MQTT protocol; it is lightweight and secure and was designed specifically to service IoT applications and small devices.

To do voice recognition, you start by creating Rhasspy commands, or intents, with a set of sentences. Each sentence is of course a set of words and can contain one or more variables. Variables are words that can be replaced by others and are assigned corresponding values once the voice detection has been performed by the voice engine.

What is the weather tomorrow?

What is the weather <when:=datetime>?

Here, when is of type datetime and could be anything like today, tomorrow, this morning, or the first Tuesday of next month.

“What is the weather” is fixed within the context of that command and will never change. But the <when:=datetime> location is a variable, so we give the engine hints on its type for better recognition.

Once an intent is recognized, Rhasspy posts a JSON message in a Mosquitto topic associated with the intent.

The example we will build in this chapter is to ask the application to highlight certain objects detected in the video stream.

Show me only cats!

The JSON message posted for this command contains the following:

Some message headers, notably the recognized input.

Automatic speech recognition (ASR) tokens, or the confidence of each word of the sentence.

The overall ASR confidence.

The intent that was recognized and its confidence score.

The value for each of the “slots,” the variable defined within the sentence, and their associated confidence scores.

Alternative intents are also provided, although I found them to not be very useful in most contexts; looking for the second-best choice and trying to recover the flow is usually not worth it.

JSON Message

Rhasspy message flow
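The parts of the intent message listed above can be pictured as a small Java holder class. This is only a sketch: the class name IntentResult, its field names, and the assumption that confidence scores range from 0.0 to 1.0 are all hypothetical and are not taken from the Rhasspy message format itself.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical holder for the parts of an intent message (names are assumptions). */
class IntentResult {
    final String input;             // the recognized sentence
    final double asrConfidence;     // overall ASR confidence, assumed to be in 0.0..1.0
    final String intentName;        // the intent that was recognized
    final double intentConfidence;  // confidence score of that intent
    final Map<String, String> slots = new LinkedHashMap<>(); // slot name -> value

    IntentResult(String input, double asrConfidence,
                 String intentName, double intentConfidence) {
        this.input = input;
        this.asrConfidence = asrConfidence;
        this.intentName = intentName;
        this.intentConfidence = intentConfidence;
    }

    /** Adds one slot and returns this object so calls can be chained. */
    IntentResult withSlot(String name, String value) {
        slots.put(name, value);
        return this;
    }

    /** Accepts the result only when both scores reach the given threshold. */
    boolean isReliable(double threshold) {
        return asrConfidence >= threshold && intentConfidence >= threshold;
    }

    /** Returns the slot value, or the fallback when the slot is absent. */
    String slotOrDefault(String name, String fallback) {
        return slots.getOrDefault(name, fallback);
    }

    public static void main(String[] args) {
        IntentResult result =
            new IntentResult("show me only cats", 0.92, "highlight", 0.88)
                .withSlot("only", "cat");
        if (result.isReliable(0.8)) {
            System.out.println(result.intentName + " -> "
                + result.slotOrDefault("only", "everything"));
        }
    }
}
```

Rejecting a low-confidence result outright, as isReliable does here, is one simple alternative to digging through the second-best intents.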

Since the main point of interaction for the system is the system queue, let’s see first how we can install the queue broker and then interact with it.

MQTT Message Queues

To be able to use the message queue and receive messages from Rhasspy, we will install the message broker Mosquitto, one of the most widely used MQTT brokers. MQTT is a lightweight and energy-efficient protocol, with different levels of quality of service that can be set at the message level. Mosquitto is a very lightweight implementation of MQTT.

Installing Mosquitto

On the command line, you already have two commands available to you: one to publish messages on given topics and one to subscribe to messages on given topics.

Comparison of Other MQTT Brokers

For your reference, you can find a comparison of a few other MQTT brokers here: https://github.com/mqtt/mqtt.github.io/wiki/server-support.

RabbitMQ is a strong open source contender, but while its clustering support is definitely robust, the setup is not easy.

MQTT Messages on the Command Line

It’s good practice to start the queue on the Raspberry Pi and read messages from the computer, or vice versa.

The main problem is simply knowing the IP address or the hostname of the target machine, whether that is the computer or the Raspberry Pi. Sending messages is done in the same way in both cases, using the mosquitto_pub and mosquitto_sub commands.

As an extra step, you could even run your Mosquitto MQTT broker in the cloud, for example, creating and integrating a queue from a service like https://www.cloudmqtt.com/.

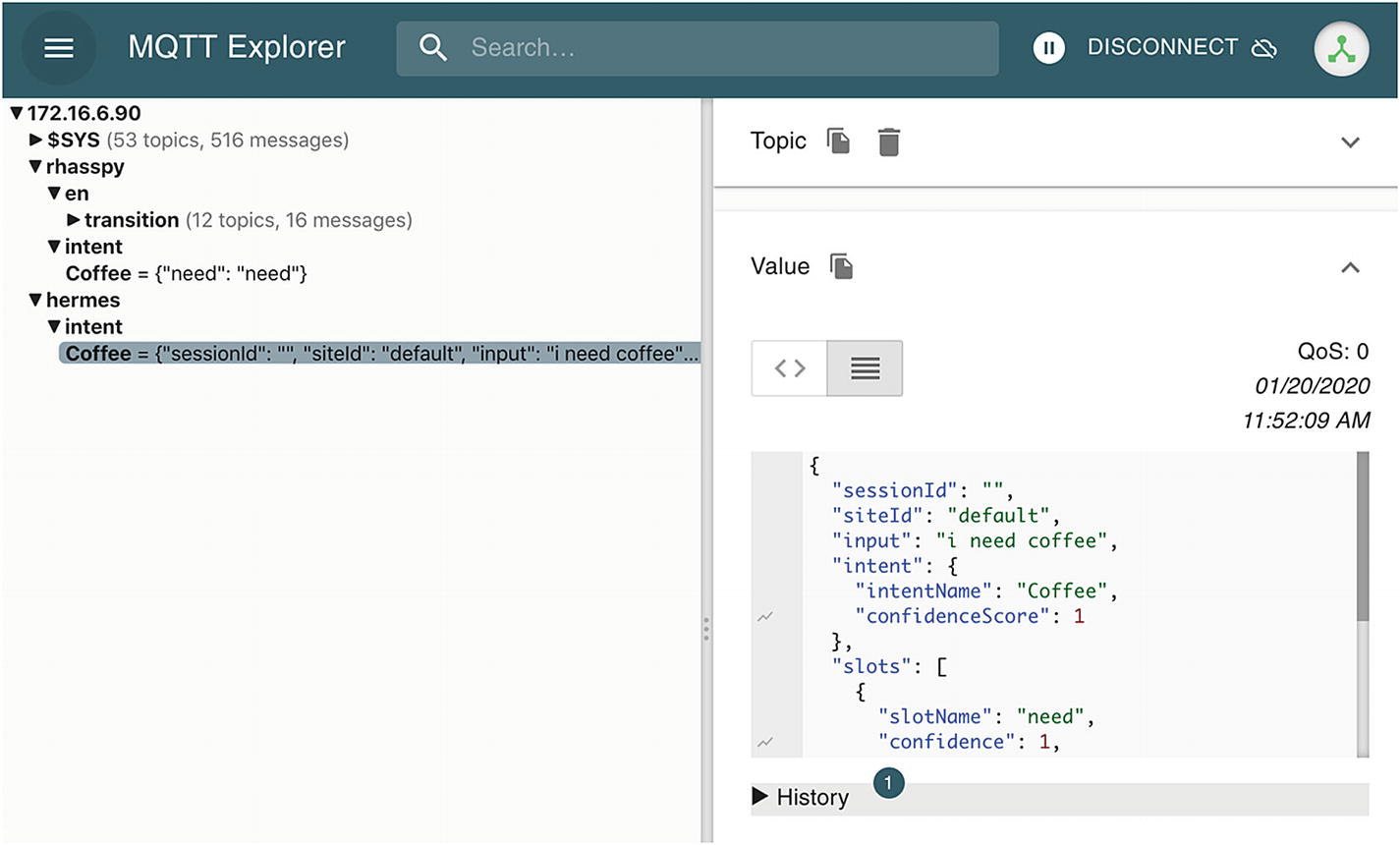

MQTT Explorer, I am a MQTT message

It should also be available through your favorite package manager.

Let’s get back to sending the full equivalent of an intent message. You can use the command line to send a JSON file directly to the target intent queue, which as we have just seen is as follows: hermes/intent/hellonico:highlight.

where onlycats.json is the JSON file with the content we have just seen in Listing 5-1.

Sending Rhasspy-like intent messages from the command line

We have seen how to interact, publish, and subscribe to messages with MQTT from the command line. Let’s do the same using Java now.

MQTT Messaging in Java

In this section, we will send and receive messages to and from our Java/Visual Studio Code setup. This will help you understand the message flow more easily.

Dependencies Setup

Either using the project from previous chapters or creating a new one based on the project template, we are going to add some Java libraries to interact with the MQTT messaging queue and to parse JSON content.

Java Dependencies

origami and origami-filters for real-time video processing

org.json for JSON content parsing

mqttv3 for interacting with the MQTT broker and handling messages

Sending a Basic MQTT Message

Since I’m writing part of this book on a plane flying over Russia, we will send a quick “hello” message to our fellow Russian spies using the MQTT protocol.

1. Create an MQTT client object, connecting to the host where the broker is running.

2. Use that object to connect to the broker using the connect method.

3. Create an MQTT message with a payload made of a string converted to bytes.

4. Publish the message.

5. Finally, cleanly disconnect.

Hello, Russia

MQTT Explorer in Russia

Note that you should of course be listening to the hello topic first.

Simulating a Rhasspy Message

Intent message handling from Java

JSON files showing in the MQTT Explorer window

JSON Fun

Before being able to handle Rhasspy messages from the MQTT topic, we need to be able to do a bit of JSON parsing.

Obviously, there are many ways to do parsing in Java; we can parse manually (hint, don’t do this) or use different libraries. Here we are going to use the org.json library because it’s pretty fast at handling incoming MQTT messages.

1. Create a JSONObject using the string version of the JSON message.

2. Use one of the following functions to navigate the JSON document: get(), getJSONObject(), or getJSONArray().

3. Get the wanted value with getInt, getBoolean, getString, etc.

Most of the time, Java is seen as complicated because somewhere in the layers a complicated piece of middleware was introduced. I actually find the language pretty succinct these days and fast to work with given all the autocompletion and refactoring tooling.

Anyway, here we are just pulling out one value of the message, but you could also unmarshal the whole JSON message into a Java object...and also create an open source library for us. Let me know when it is ready to use!

Parsing JSON Content

Listening to MQTT Basic Messages

1. Subscribe to a topic or parent topic.

2. Add a listener that implements the IMqttMessageListener interface.

From Russia with Love

Messages with Java Lambdas

From Russia with love
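The publish-and-subscribe flow, including the idea of subscribing to a parent topic, can be tried without a running broker. The sketch below is an in-memory stand-in written with the Java standard library only: TinyBroker is a hypothetical class, it is neither Mosquitto nor the mqttv3 client, and a BiConsumer lambda plays the role that an IMqttMessageListener plays with the real client. The matching rule follows the usual MQTT wildcards (+ for one level, a trailing # for everything below) in simplified form.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.function.BiConsumer;

/** In-memory stand-in for a broker, for illustration only (hypothetical class). */
class TinyBroker {
    private final Map<String, List<BiConsumer<String, byte[]>>> listeners =
        new LinkedHashMap<>();

    /** Simplified MQTT-style match: '+' is one level, a trailing '#' is the rest. */
    static boolean matches(String filter, String topic) {
        String[] f = filter.split("/", -1);
        String[] t = topic.split("/", -1);
        for (int i = 0; i < f.length; i++) {
            if (f[i].equals("#")) {
                return i == f.length - 1;      // '#' is only valid as the last level
            }
            if (i >= t.length) {
                return false;                  // topic is shorter than the filter
            }
            if (!f[i].equals("+") && !f[i].equals(t[i])) {
                return false;                  // literal level does not match
            }
        }
        return f.length == t.length;           // no wildcard tail, so depths must agree
    }

    /** Registers a listener for every topic matching the filter. */
    void subscribe(String filter, BiConsumer<String, byte[]> listener) {
        listeners.computeIfAbsent(filter, k -> new ArrayList<>()).add(listener);
    }

    /** Delivers the payload to every listener whose filter matches the topic. */
    void publish(String topic, byte[] payload) {
        listeners.forEach((filter, list) -> {
            if (matches(filter, topic)) {
                list.forEach(l -> l.accept(topic, payload));
            }
        });
    }

    public static void main(String[] args) {
        TinyBroker broker = new TinyBroker();
        // Subscribe to the parent topic of all intents with a lambda.
        broker.subscribe("hermes/intent/#", (topic, payload) ->
            System.out.println(topic + " -> "
                + new String(payload, StandardCharsets.UTF_8)));
        // Not delivered: the hello topic is outside the subscribed parent.
        broker.publish("hello", "Hello, Russia".getBytes(StandardCharsets.UTF_8));
        // Delivered: this topic sits under hermes/intent.
        broker.publish("hermes/intent/coffee", "{}".getBytes(StandardCharsets.UTF_8));
    }
}
```

With a real broker the same two ideas apply: the payload travels as bytes, and one subscription on a parent topic receives messages for every topic underneath it.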

Obviously, the fun is in having a distributed system and handling messages coming from different places. Here, try to run the broker on the Raspberry Pi. After setting up the IP address of your Raspberry Pi in the sender listing, send a message from your computer to the Raspberry Pi in Java.

Or send a message in Java from the Raspberry Pi to a broker located remotely or, again, in the cloud.

Listening to MQTT JSON Messages

Cats Coming

Now that you know everything about keys and brokers, it is time to get and install Rhasspy.

Voice and Rhasspy Setup

The Rhasspy voice platform needs a set of services running to be able to deploy applications with intents. In this section, you will see how to install the platform first and then how to create applications with intents.

The easiest way to install Rhasspy is actually via Docker (https://www.docker.com/), which is a container engine on which you can run images. The Rhasspy maintainer has created a ready-to-use Docker image for Rhasspy, so we will take advantage of that.

Preparing the Speaker

You do need a working microphone setup before executing any of the voice commands.

Standard USB microphones should be plug-and-play, and I have been using conference room microphones with pretty good results.

ReSpeaker lights

Installing Docker

Installing Rhasspy with Docker

The whole Rhasspy suite can be started by running the prepared container, and this is done by executing the following command on the Raspberry Pi, provided you have installed Docker properly.

docker: This is the command-line executable that communicates with the Docker daemon and sends commands to start and stop the container.

-d: This tells Docker to run the image as a daemon, not waiting for the execution of the image to finish and starting the container as a background process.

-p 12101:12101: This does port mapping between Docker and the host; without this, open ports within the container cannot be viewed from the outside world. Here we tell Docker to map port 12101 of the container to port 12101 of the Raspberry Pi.

--restart unless-stopped: This speaks for itself; even if the container dies because of an internal exception or error, the Docker daemon will automatically restart it, unless you stopped it yourself.

-v "$HOME/.config/rhasspy/profiles:/profiles": Here we want to “mount” a folder of the Raspberry Pi and make it available to the Docker container, and vice versa. This is kind of a shared-network folder. It will be used to store files downloaded by Rhasspy for us.

--device /dev/snd:/dev/snd: This is pretty neat; it maps the raw data coming to the sound system of the Raspberry Pi and makes it available to the Docker container.

synesthesiam/rhasspy-server:latest: This is the name of the image used to start the container. Think of this image as a verbatim copy of the file system used to start the container.

The next two are parameters that the Rhasspy image understands:

--user-profiles /profiles: This is where to store the profiles, and this is the path of the folder we are sharing with the Raspberry Pi.

--profile en: This is the language to use.

The Rhasspy server and its console are ready, so let’s go back to the main computer and access the Raspberry Pi via the browser-based IDE.

Starting the Rhasspy Console

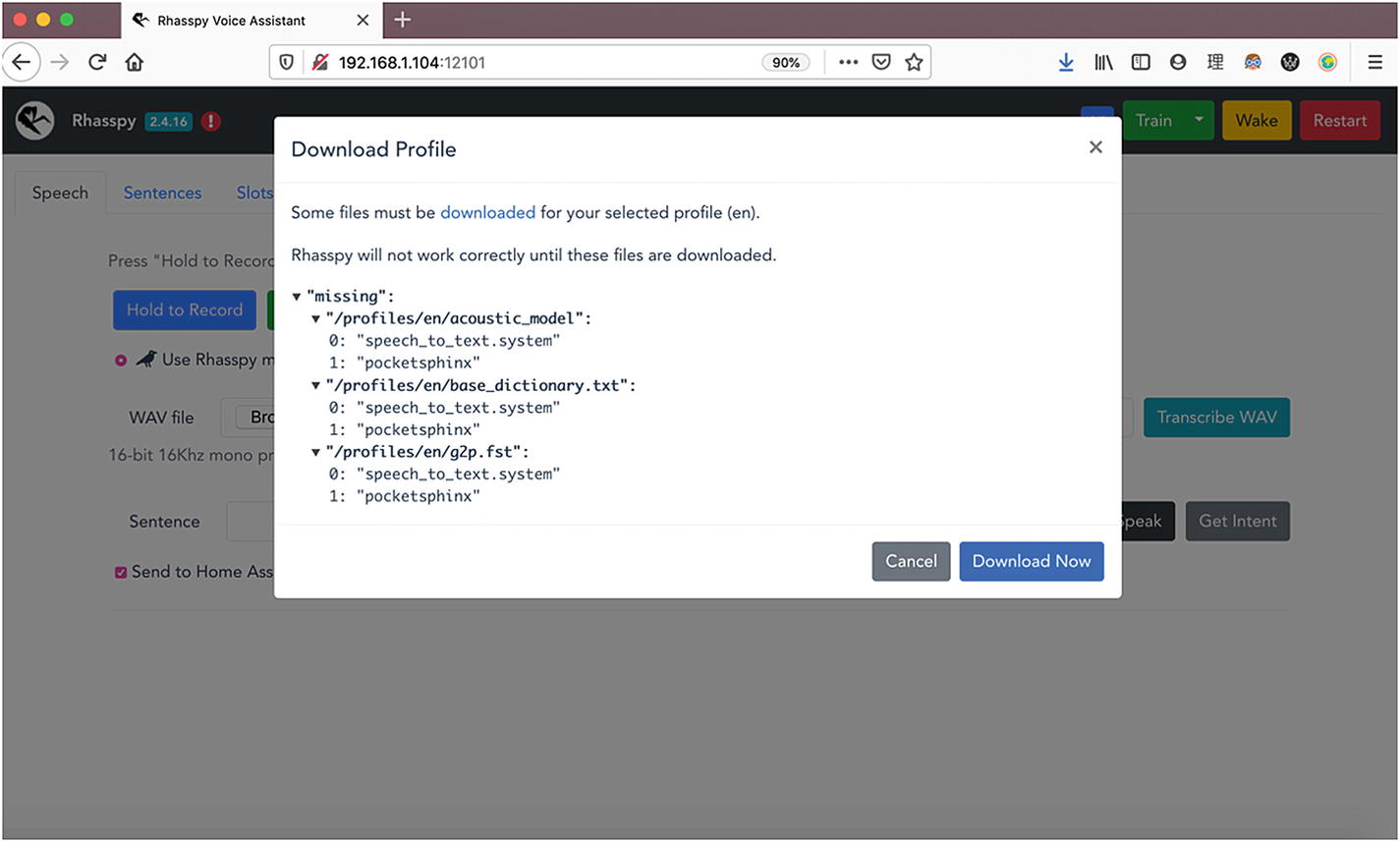

To access the console, go to http://192.168.1.104:12101/, where 192.168.1.104 is the IP address of the Raspberry Pi.

The Rhasspy console

The console makes it quite obvious for you on first start that it should go and fetch some remote files, so provided your Raspberry Pi is connected to the Internet, just click the Download Now button.

Download complete

Refreshed Rhasspy console

Red markers

The setup problems are nicely explained

The console is ready!

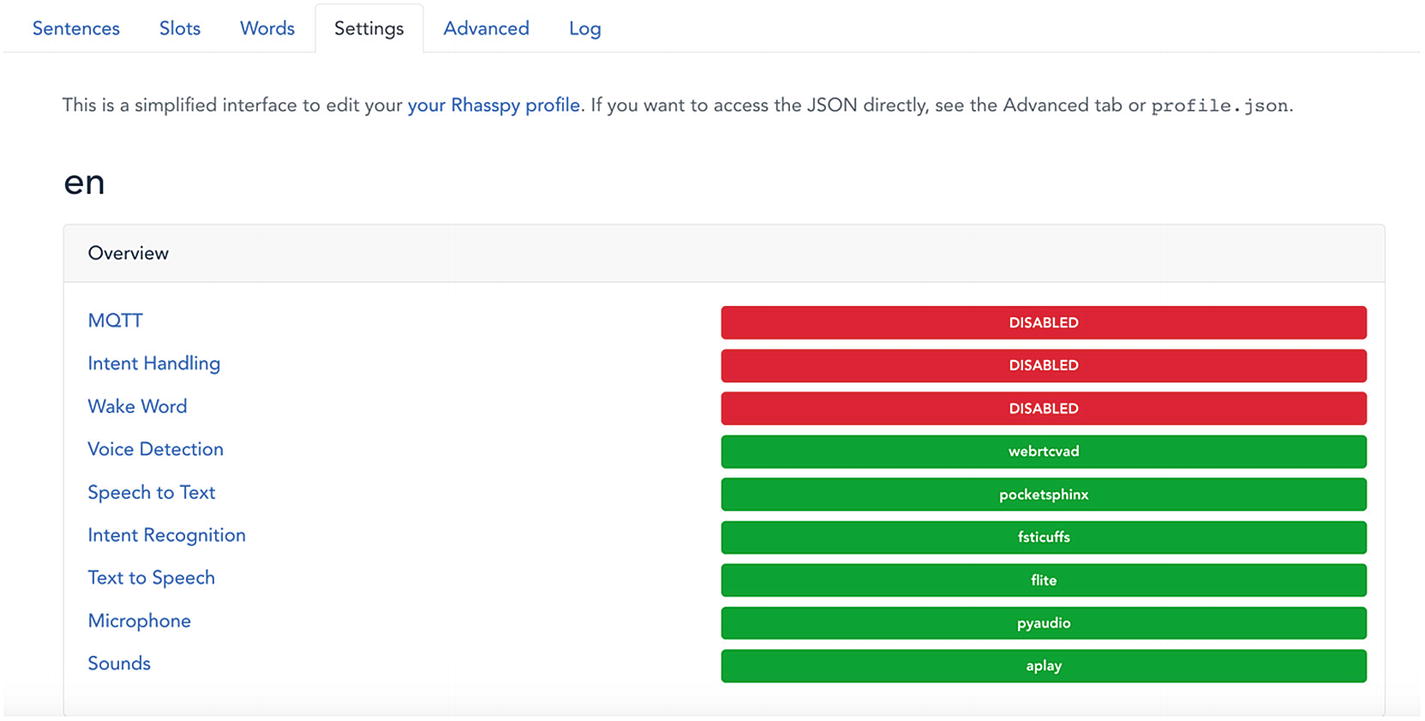

The Rhasspy Console

More configuration options

Speech: This is where you can try your assistant.

Sentences: This is where you define what your vocal assistant can detect and recognize.

Slots: Sentences have variables in them. Each possible value for a variable can be defined in a line in the Sentences section, or you can create lists of possible values in the Slots section.

Words: This is the section where you define how to pronounce words. This is especially useful for names, as the English engine is sometimes not so well prepared for French people’s pronunciations!

Settings: This is an all-encompassing screen where you can configure the way engines work and what to do with recognized intents.

Advanced: This is the same as Settings except the configuration is exposed as a text file that can be edited directly in the browser.

Log: This is where engine logs can be viewed in real time.

A few notes…

The browser IDE (to be correct, the processes inside the Docker container) will ask you to restart Rhasspy each time you change a configuration setting.

It will also ask you to train Rhasspy each time you make changes to either the Sentences or Words section.

There is no specific reason to not do this, unless you’re in the middle of surgery and need to ask your assistant for immediate help.

We know how to navigate the console now, so let’s make our vocal assistant recognize something for us.

First Voice Command

To create a voice command that generates an intent, we need to make some edits in the Sentences section. As just explained, the Sentences section is where we define the sentences our engine can recognize.

First Command, Full Sentence

For once, the grammar used to define intents is quite simple and is inserted via an .ini file (which comes from the good old Windows 95 days).

I do really need coffee

First Rhasspy training

Speech Section and Trying Your Intent

Speech section

The Speech section is where you can either speak or write a sentence and see whether and what intent is recognized.

I…need…coffee

The voice command “Coffee” is recognized, and an associated generated JSON document is attached to it, with various inputs on confidence and raw values.

Since we’re focusing on voice commands here, you can also just tap and hold the “Hold to record” button, say “I need coffee,” and see the spoken sentence being turned into text first, then displayed in the Sentence field, and then analyzed as was just done.

This is probably the time to plug in the ReSpeaker or your USB microphone.

Upload that ineedcoffee.wav file and click the Get Intent button.

Fine-Tuned Intents

It is of course possible to just pop in optional words, variables, and placeholders in the commands defined in the Sentences section. Let’s improve our game here and add details to our intent so as to create better voice commands.

Optional Words

It is of course possible to make words optional in the sentence to make the commands more natural to users.

Adding Alternatives

I don’t need coffee (although, to be honest, that’s not true)

You probably already noticed one problem with this generated intent. Yes, it’s not very useful as is, because the same intent is generated whether we want coffee or not.

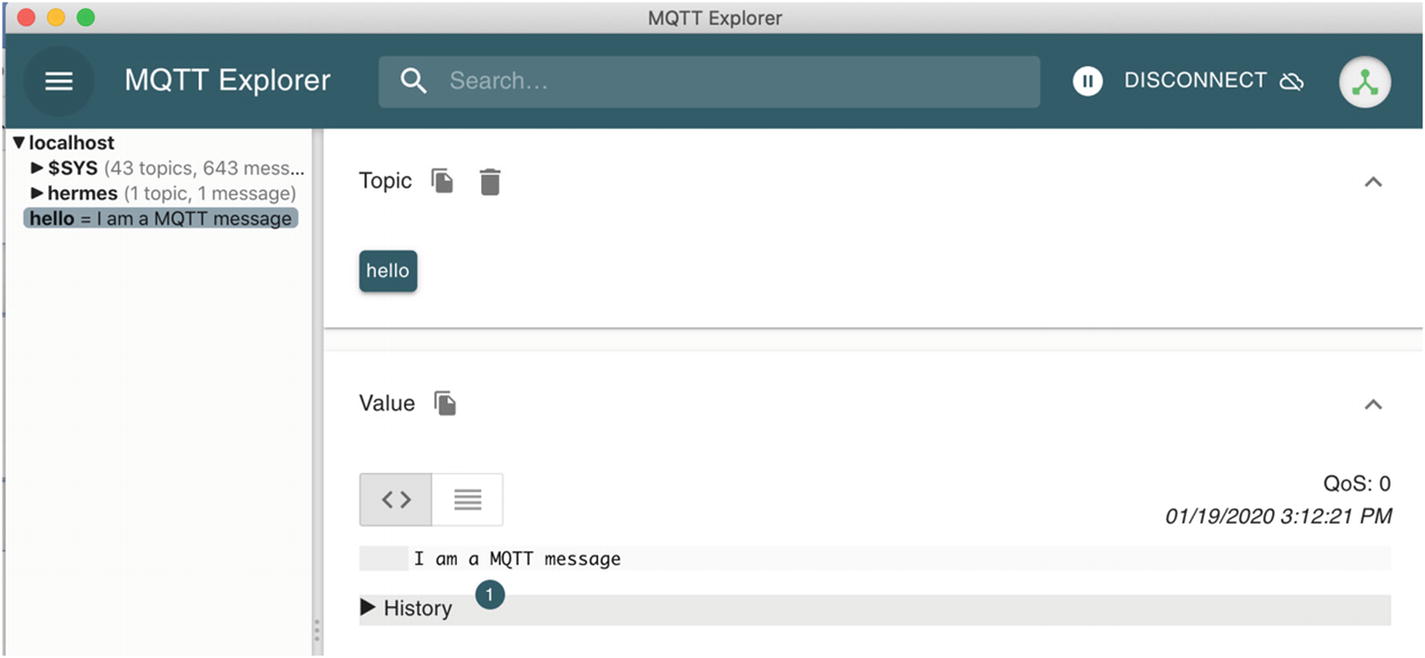

Now, our Coffee intent will add a slot named “need” to the JSON document, and its value will be either “need” or “don’t need.”

Maybe I don’t need coffee

You should notice several things here. First, the summary of the intent is now showing more details, the intent is marked in red, and each slot is nicely shown in a list with its associated value (here, need = don’t need).

Second, the full JSON document of the intent contains an entities section, and each entity has an entity name and associated value.

As a side note, the JSON document also contains a slots section, with a simple list of all the slots of the intent.

What’s next?

Making Intents with Slots More Readable

You probably noticed that if we add one too many slots, then the whole sentence becomes messy quite quickly.

We can move the slot definition right below the intent name and assign possible values with =.

Nothing has changed, and the intent behaves the same as before, but it is now vastly more readable.

That’s great, but what’s next?

Defining Reusable Slots

Yes, defining slots inline also brings extra complexity to our sentence definitions, so it is possible to move them out and define them on the Slots tab.

Slots of coffee needs

myneed: This is the placeholder in the sentence, meaning its definition can be seen as being inlined at training time.

$need: The dollar sign specifies that this is coming from the slots JSON file.

{need}: This is the name of the slot within that intent, meaning you can reuse the same list in different intents, each time with a different slot name.

Great. Now we have highly configurable intents, with a complex list of options on hand.

Let’s move to the section where the external system can make use of the generated JSON file when an intent is recognized.

Settings: Get That Intent in the Queue

The generated JSON file looks like it could be useful, but we want to have it external to Rhasspy and in the MQTT queue so that we can do more processing.

Rhasspy Settings section

MQTT: This sends the JSON to the MQTT queue.

Wake word: The background process is always listening for possible “wake-up” keywords; this is your “Hey, Siri” or “Hey, Google” spy here.

MQTT settings

Make sure to fill in the hostname or the IP address of your Raspberry Pi here. Restart Rhasspy when asked, and that’s it. Now let’s try to get some coffee on MQTT.

One more coffee?

What do you see? We see a message being sent to the hermes/intent/coffee topic, which is something similar to what you saw in the previous sections of this chapter.

Yes, you can feel it too—this is going to work.

You can also see that there is a simple MQTT message sent to Rhasspy/intent/coffee, with just the slot’s name and value in the message body. If you don’t need to play with the confidence and other details, this is the best alternative. Working with this message is left as an exercise for you.

This is easy.

You can get some instant gratification by trying a few messages specifying whether you want or don’t want coffee; just make sure to not drink too much of it.

Settings: Wake-Up Word

It’s nice to go back to speech every now and then, but it would be nice to just leave the Raspberry Pi by itself, and when saying a given word, or set of words, get Rhasspy to listen for intents.

This is done by using wake-up words, which are defined in the Settings section.

Snowboy configuration

Now, when you say “Snowboy,” Rhasspy will wake up, as if you were holding the Record button.

“Snowboy”

“I need coffee”

We’re getting there, aren’t we?

Creating the Highlight Intent

Now, we have to create a small intent that we will use to tell our main object detection application which object we want to focus on.

Name: highlight

Command: show me only <something>, where <something> is either cat or person

Name of the slot: only

You should definitely try this on your own before reading ahead…

“Snowboy”

“Show me only cats”

Show me only cats, Snowboy

Voilà, we’re mostly done with the Rhasspy and voice command section, so we can now go back to detecting objects, cats, and people in real time.

Voice and Real-Time Object Detection

This is the part of the chapter where we bridge it all, meaning the video analyses from previous chapters and the voice recognition using Rhasspy.

First, let’s get ready with a simple origami project setup, with messages being sent by Rhasspy.

Simple Setup: Origami + Voice

1. Start video streaming on the main camera in full screen, in a new thread.

2. The video streaming uses the origami core filter annotate, with which you can add some text to the picture.

3. Connect to MQTT on the main thread.

4. On a new intent message, we update the annotate text.

The intent used here is the highlight intent that was defined earlier, with a slot to recognize the names taken from the object categories of the COCO dataset.
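The threading arrangement in the steps above, with one thread producing frames and another receiving messages, comes down to sharing one piece of text safely between the two. The following is a minimal sketch using only the Java standard library; AnnotateLoop and its methods are hypothetical names, the "frame" is just a string, and no origami or MQTT classes are involved.

```java
import java.util.concurrent.atomic.AtomicReference;

/** Hypothetical sketch: a video loop reading text that a message callback updates. */
class AnnotateLoop {
    // Shared between the video thread (reader) and the message callback (writer).
    private final AtomicReference<String> text =
        new AtomicReference<>("waiting for a command");

    /** Called from the messaging side when a new intent arrives. */
    void onIntent(String only) {
        text.set("only: " + only);
    }

    /** Stand-in for annotating one frame; real code would draw on the image. */
    String annotate(int frameNumber) {
        return "frame " + frameNumber + " [" + text.get() + "]";
    }

    public static void main(String[] args) throws InterruptedException {
        AnnotateLoop loop = new AnnotateLoop();
        // The "video" runs in its own thread, as in step 1.
        Thread video = new Thread(() -> {
            for (int i = 0; i < 5; i++) {
                System.out.println(loop.annotate(i));
                try {
                    Thread.sleep(100);
                } catch (InterruptedException e) {
                    return;
                }
            }
        });
        video.start();
        // The main thread plays the part of the message listener, as in steps 3 and 4.
        Thread.sleep(250);
        loop.onIntent("cat");
        video.join();
    }
}
```

An AtomicReference is used here so that the video thread always sees the latest text without any explicit locking; a volatile field would serve equally well for a single value.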

Origami + Rhasspy

Webcam streaming

Now let’s connect this setup with object recognition and with the real-time video streaming part.

Origami Real-Time Video Analysis Setup

From the previous chapter, you will remember that we were using an origami filter, called Yolo, to perform object detection. Just like the annotate filter, the Yolo filter is included in the origami-filters library, and in a very Java way, you just have to extend the base filter to highlight the detected objects.

Available in the origami-filters library, the Yolo filter allows you to download and retrieve networks on demand using a network specification. So, for example, networks.yolo:yolov3-tiny:1.0.0 will download yolov3-tiny and cache it for further usage, depending on which device you’re on. This is a feature of the origami framework.

networks.yolo:yolov3-tiny:1.0.0

networks.yolo:yolov3:1.0.0

networks.yolo:yolov2:1.0.0

networks.yolo:yolov2-tiny:1.0.0

Each one is either faster or more precise than the one before it.

Creating the Yolo Filter

In Listing 5-10, we prepare the groundwork for showing either the total number of objects detected, using annotateWithTotal, or only a selected kind of object, via the only switch. Note that the entry point is the function annotateAll, where it is decided whether to display all the boxes or a subset of them.

MyYolo Filter
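The decision described above, drawing every box or only a subset, can be isolated from the video code. The sketch below is not the origami Yolo filter; it is a standard-library illustration of the selection logic alone, and the names SelectionSketch, Detection, setOnly, select, and total are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

/** Hypothetical sketch of the "all boxes or only one label" decision. */
class SelectionSketch {
    /** Hypothetical detection result: a label and a confidence score. */
    static class Detection {
        final String label;
        final double confidence;

        Detection(String label, double confidence) {
            this.label = label;
            this.confidence = confidence;
        }
    }

    // null means "show everything"; volatile because another thread may set it.
    private volatile String only = null;

    /** Restricts the display to one label, or pass null to show all again. */
    void setOnly(String label) {
        this.only = label;
    }

    /** Returns the detections to draw: all of them, or just the selected label. */
    List<Detection> select(List<Detection> all) {
        String wanted = only;   // read once so a single frame sees a single value
        if (wanted == null) {
            return all;
        }
        List<Detection> kept = new ArrayList<>();
        for (Detection d : all) {
            if (d.label.equals(wanted)) {
                kept.add(d);
            }
        }
        return kept;
    }

    /** Text for a total-count annotation. */
    String total(List<Detection> all) {
        return select(all).size() + " of " + all.size() + " objects shown";
    }

    public static void main(String[] args) {
        SelectionSketch sketch = new SelectionSketch();
        List<Detection> frame = Arrays.asList(
            new Detection("cat", 0.9),
            new Detection("person", 0.8),
            new Detection("cat", 0.7));
        System.out.println(sketch.total(frame));
        sketch.setOnly("cat");
        System.out.println(sketch.total(frame));
    }
}
```

Keeping the selection in a single field that is read once per frame means a voice command arriving mid-frame takes effect cleanly on the next frame.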

With the filter now ready, we can start doing analysis again.

Running the Video Analysis Alone

Running Yolo on a Video, Updating Parameters in Real Time

You can probably tell where we are heading now, linking the message received from Rhasspy to update the selection of the Yolo analysis.

Integrating with Voice

In this final section, we will highlight a subset of objects based on the input received from the Rhasspy intent.

1. Start video streaming on the main camera in full screen, in a new thread.

2. The video streaming uses a MyYolo filter loading the Yolo network.

3. Connect to MQTT on the main thread.

4. On a new message, we update the annotate text.

5. When a message arrives in the highlight queue, we do the following: parse the JSON object, retrieve the only value, and update the selection of the MyYolo filter.

Real-Time Detection, Integrating with the Rhasspy Queue

In the different sample videos or directly in the video stream of the webcam of the Raspberry Pi, you can directly update the objects to be detected.

Mei at school

Mei at night

The Rhasspy message flow

How to interact from Java with MQTT for IoT messaging

How to set up Rhasspy and intents

How to connect messages sent by Rhasspy to our Java code

How to update the real-time video analysis running on the Raspberry Pi by interpreting voice sent by Rhasspy with custom intents

This short book has come to an end, so now it’s your turn to change the world. We’ve only scratched the surface of what is possible, but I really hope this gives you some ideas to try to implement.